Continued reduction of the minimum feature size and advances in fabrication processes were the main drivers of DRAM density enhancement. As soon as multi-megabit DRAMs appeared on the horizon, they opened completely new application areas. Megabit DRAMs became highly desirable for portable equipment, which required low-voltage operation so that it could run on batteries for longer periods. A 5 V supply was once standard, but it was not suitable for long-term battery-based operation. In the low-voltage range, the 1.5 V DRAM reported by M. Aoki and others [1] was one of the first of its kind. It also reduced power consumption, because the bit line swing was reduced to as little as the sum of the threshold voltages of the NMOS and PMOS transistors in the sense amplifier. A 2 Kbit test DRAM worked successfully at the reduced voltage; the calculated operating current fell from 15 mA at 5 V to less than a third of that value, and the DRAM was expected to operate for 500 hours on eight 2-ampere-hour dry batteries. At this stage, reducing the supply voltage created two major problems: (1) a decrease in signal-to-noise (S/N) ratio, and (2) degradation in operating speed.

Soon after, a major breakthrough came with another landmark 1.5 V DRAM, at the 64 Mbit level and with an increased S/N ratio, reported by Nakagome and others [2]. It used a 0.3 μm triple-well CMOS process with no back-gate bias and three circuit modifications that made it operable at low voltage with improved performance. First, it used an I/O sense amplifier circuit with a complementary sensing scheme having a PMOS-driven current sense amplifier and NMOS read-out gates. Second, because low-voltage operation at 1.5 V needed a boost ratio (word line swing to bit line swing) of more than 1.75, a new feedback charge pump circuit was used. Third, an accurate and highly regulated half-Vcc voltage level was used to improve the S/N ratio. Together, these features resulted in a RAS access time of 50 ns and a power dissipation of only 44 mW, with an active current of 29 mA and a standby current of 1 mA at room temperature.

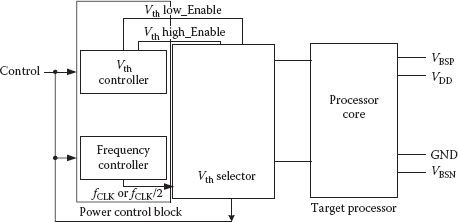

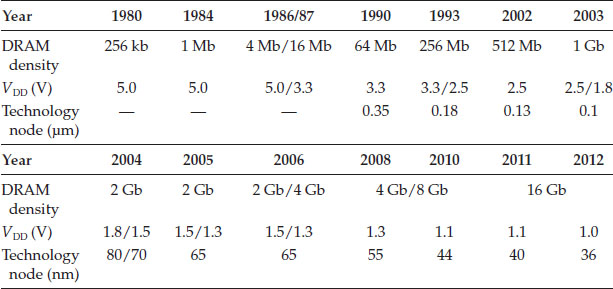

In stand-alone commercial DRAMs, the standard supply voltage VDD had been reduced to 1.8 V by 2004, and the trend is toward further reduction [3]. Table 7.1 shows the trend in supply voltage with DRAM density over the years. For eDRAMs the voltage had to be lowered even more, both for compatibility with the surrounding peripherals and logic and to allow operation from a single NiCd cell, whose minimum supply voltage is only 0.9 V [4]. At the same time, advances in technology forced the DRAM cell area, and with it the storage capacitor size, to decrease continuously through innovative cells and a shrinking technology node. The resulting increase in DRAM density was accompanied by a decrease in gate oxide thickness (tox), which brought a host of challenges. Table 7.2 shows the trend of decreasing tox with DRAM density. Increased DRAM density also meant more cells on a single bit line, which increased the bit line capacitance CBL and reduced the charge transfer ratio (CS/(CS + CBL) ≈ CS/CBL); unless measures were taken, this would result in unreliable performance in the presence of soft errors and other noise. Another cause of unreliable operation and variable response was the variation in physical dimensions, and the resulting variation in the characteristics, of the DRAM cell transistors and capacitors and of the transistors used elsewhere on the chip. Among other parameters, the threshold voltage Vth varied across the same chip, and this affected performance in different ways depending on how the transistors were used. However, a more serious problem was the increase in power dissipation due to the increase in leakage currents, in both the active and inactive conditions of a cell, module, or block. This chapter discusses how the challenges that arose in designing low-voltage/low-power DRAMs were faced and the problems resolved, especially with regard to power dissipation.

TABLE 7.1

Predominantly Used Supply Voltage and Adopted Technology Node and (Expected) Progress with DRAM Density

Source: Y. Nakagome, et al., “Review and Future Prospects of Low-Voltage RAM Circuits,” IBM J. Res. and Dev., Vol. 47, No. 5/6, pp. 525–552, 2003; International Technology Roadmap for Semiconductors, http://public.itrs.net/Files/2001.ITRS/Home.hlm, 2001 Edition; and ITRS 2009.

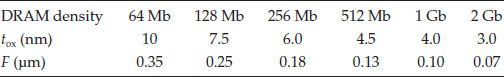

TABLE 7.2

Change in Gate Oxide Thickness with Increase in DRAM Density

A closely related issue is reducing the data retention power in DRAMs. Because of inherent leakage and the destructive nature of the READ operation, the stored data must be refreshed continuously, and this refresh also consumes power. The data retention power must be reduced for battery-backup operation, and continuous efforts have been made in this direction. Before the techniques, circuit solutions, and technological advancements for reducing leakage current and improving data retention time are discussed, the different kinds of leakage in DRAM cells and peripherals are reviewed.

A DRAM chip broadly comprises (1) the memory cell array, (2) row and column decoders, and (3) other peripheral circuits. Each component has changed from its basic form over the years. However, irrespective of these modifications, several leakage mechanisms cause the stored signal to change and power to be dissipated. Some of the significant ones are as follows [5]:

1. Reverse-biased junction leakage current from the storage node

2. Sub-threshold leakage current of the access transistors

3. Capacitor dielectric leakage current

4. Gate-induced drain leakage current (GIDL) at the storage node

5. Gate dielectric leakage current

6. Leakage current between the adjacent cells

While the total leakage current is the sum of all these components, each has a different weight, and the weights have varied considerably with changes in technology, fabrication process, minimum feature size, and supply voltage. Moreover, all the peripheral circuits, such as row and column decoders, sense amplifiers, voltage up/down converters, and refresh circuits, have similar types of leakage, and power is needed to recover the leaked charge as well as for the normal functioning of the DRAM cell and peripherals.

7.2.1 Junction Leakage and Sub-Threshold Currents

Leakage current flows through reverse-biased p-n junctions wherever they are located on the chip. For sub-micron feature sizes, this leakage is in the range of a few pA/μm² at room temperature, and its total contribution may not be very high. However, junction leakage increases exponentially with junction temperature because of thermally generated carriers, so keeping the operating temperature low is highly desirable. Another important source of leakage is the sub-threshold current of the transistors on the chip. Drain-to-source current flows in a MOSFET when the gate-to-source voltage VGS is below the threshold voltage Vth, and even when VGS = 0. The closer the threshold voltage is to zero volts, the larger the leakage current and the larger the static power consumption. Standard practice for keeping sub-threshold leakage small is to keep Vth at or above 0.5–0.6 V, or even higher (~0.75–1.0 V), although a high Vth becomes a problem as the supply voltage VDD is reduced. Transistors fabricated in technologies that give sharper turn-off characteristics (ID versus VGS) are preferable for reducing sub-threshold current.

The junction leakage current from the storage node is a dominant component among all the possible leakages when boron concentration of p-well is increased and the working temperature rises. However, this increased leakage could not be accounted for with normal reasons, that is, diffusion current and generation–recombination current, as both of these are inversely proportional to the boron concentration of the p-well [6]. The anomalous situation is well characterized by thermionic field emission (TFE) current, which has an exponential relationship to the activation energy at the deep level. Thermionic emission from a deep level is enhanced by the tunneling effect due to the strong field in the depletion region [5]. As this increased electric field is due to the enhancements of substrate doping, junction leakage current can be reduced by lowering the substrate doping to an optimum level, keeping in mind that it will affect the sub-threshold characteristics of the transistor.

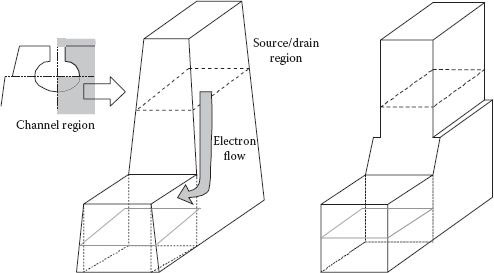

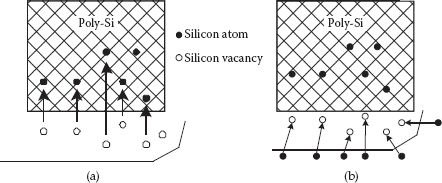

Gate tunnel leakage current is the sum of the leakage currents through the gate dielectrics of all transistors on the chip. This kind of leakage becomes a problem at a gate oxide thickness (tox) of around 3 nm and, below that, increases by about one order of magnitude for every 0.2 nm reduction in tox [7]. Because DRAM cells needed a high operating voltage (owing to their larger Vth) for stable memory operation, tox in standard DRAMs has not been reduced as fast as in static RAMs. A thinner tox could be used for the peripheral circuits, which can operate at low voltage, though normally transistors with the same gate oxide thickness were preferred and used across the whole chip. For eDRAMs, however, a dual-VDD and dual-tox device approach has also been adopted to obtain higher speeds, and even stand-alone DRAMs are expected to use the dual-tox approach [8]. Different solutions have been proposed to reduce the leakage, such as shutting off the supply path by inserting a power switch [9]; however, these apply to standby mode and are discussed later.
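The tox scaling trend quoted above can be turned into a rough estimate. The following Python sketch is illustrative only: it assumes the one-order-per-0.2 nm trend behaves as a simple exponential below a 3 nm reference thickness, and the function name and default values are hypothetical rather than taken from [7].

```python
def relative_gate_leakage(tox_nm, tox_ref_nm=3.0, nm_per_decade=0.2):
    """Gate tunnel leakage relative to its value at tox_ref_nm.

    Rule-of-thumb assumption: one decade of increase for every
    nm_per_decade of oxide thinning below the reference thickness.
    """
    decades = (tox_ref_nm - tox_nm) / nm_per_decade
    return 10.0 ** decades


if __name__ == "__main__":
    for tox in (3.0, 2.8, 2.6, 2.0):
        factor = relative_gate_leakage(tox)
        print(f"tox = {tox:.1f} nm -> leakage x{factor:.3g} relative to 3 nm")
```

Under this assumption, thinning the oxide by 1 nm would raise gate leakage by roughly five orders of magnitude, which suggests why a thicker oxide in the cell array and a thinner one in the periphery (the dual-tox approach) is attractive.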

7.3 Power Dissipation in DRAMs

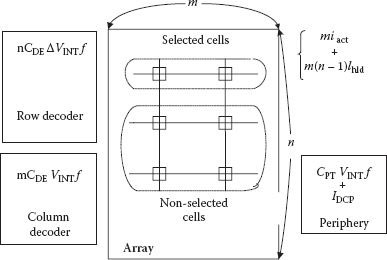

Irrespective of the nature of the leakage currents and their weights, the power supplied to the DRAM must cover both normal chip operation and the dissipation due to leakage. To discuss the different components of power dissipation, Figure 7.1 shows a simplified chip architecture with three major blocks: the cell array, the row/column decoders, and the peripherals. The core cell array comprises n rows of m cells each. In this simplified form, one word line with m cells is activated and the remaining m(n – 1) cells remain unselected. For a CMOS DRAM, the total power consumption is P = VDD IDD, where the total chip current IDD is the sum of the following components [9]:

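$$m\, i_{act} \tag{7.1}$$

$$m(n-1)\, i_{hld} \tag{7.2}$$

$$(n+m)\, C_{DE}\, V_{int}\, f \tag{7.3}$$

$$C_{PT}\, V_{int}\, f \tag{7.4}$$

$$I_{DCP} \tag{7.5}$$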

In Equations (7.1) to (7.5), iact and ihld are the effective current of the active (selected) cells and the effective data retention current of the inactive (nonselected) cells, respectively. CDE is the output node capacitance of each decoder, CPT is the total capacitance of the peripheral circuits, Vint is the internal supply voltage, IDCP is the total static or quasi-static current of the periphery, and f is the operating frequency, equal to 1/tRC, where tRC is the cycle time. The operating frequency (or tRC) has considerable impact on the relative weights of the different components of the power requirement. The levels of Vint and the transistor threshold voltage Vth also affect the ratio among the components. For example, the decoder charging current of Equation (7.3) may become negligible when a CMOS NAND decoder is used, because only one row decoder and one column decoder are operative, so (n + m) is replaced by 2 [10]. In Equation (7.5), IDCP is mainly due to the on-chip voltage converters of different types. With efficient design its contribution can be made small, and it remains constant even as f increases. Ordinarily this component could be neglected in DRAMs below the gigabit range; for low-voltage, low-power DRAMs, however, special efforts are needed to control it. On-chip voltage converters are discussed in Section 7.7. For megabit-density DRAMs, the data retention current given by Equation (7.2) was small at high frequencies in comparison with the other components, but this is no longer so for gigabit DRAMs. Considerable effort is now being made to contain the data retention current; the issue is discussed in later parts of the chapter.
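The five current components can be tallied numerically. The sketch below is only an illustration, assuming the components take the forms m·iact, m(n − 1)·ihld, (n + m)·CDE·Vint·f, CPT·Vint·f, and IDCP suggested by the symbol definitions above. The class, function names, and every numeric value in `EXAMPLE` are hypothetical placeholders, not data from [9] or [10].

```python
from dataclasses import dataclass


@dataclass
class ChipParams:
    """Hypothetical parameter set; field names mirror the symbols in the text."""
    m: int          # cells on the selected word line
    n: int          # number of word lines (rows)
    i_act: float    # effective current per active cell (A)
    i_hld: float    # effective retention current per unselected cell (A)
    c_de: float     # output node capacitance of each decoder (F)
    c_pt: float     # total capacitance of the peripheral circuits (F)
    v_int: float    # internal supply voltage (V)
    i_dcp: float    # static or quasi-static peripheral current (A)
    t_rc: float     # cycle time (s); operating frequency f = 1 / t_rc


def idd_components(p, cmos_nand_decoder=False):
    """Return the five assumed IDD components in amperes."""
    f = 1.0 / p.t_rc
    # With a CMOS NAND decoder only one row and one column decoder switch,
    # so (n + m) is replaced by 2.
    n_decoders = 2 if cmos_nand_decoder else p.n + p.m
    return {
        "active_cells": p.m * p.i_act,
        "retention": p.m * (p.n - 1) * p.i_hld,
        "decoder_charging": n_decoders * p.c_de * p.v_int * f,
        "peripheral_charging": p.c_pt * p.v_int * f,
        "static_periphery": p.i_dcp,
    }


def total_power(p, v_dd, cmos_nand_decoder=False):
    """P = VDD * IDD, with IDD taken as the sum of the five components."""
    return v_dd * sum(idd_components(p, cmos_nand_decoder).values())


# Placeholder values chosen only to exercise the functions.
EXAMPLE = ChipParams(m=1024, n=1024, i_act=1e-6, i_hld=1e-12,
                     c_de=100e-15, c_pt=100e-12, v_int=1.5,
                     i_dcp=1e-3, t_rc=100e-9)

if __name__ == "__main__":
    for name, amps in idd_components(EXAMPLE).items():
        print(f"{name:>20}: {amps * 1e3:.4f} mA")
    print(f"P = {total_power(EXAMPLE, v_dd=1.5) * 1e3:.3f} mW")
```

Calling `idd_components(EXAMPLE, cmos_nand_decoder=True)` shows how the decoder charging term shrinks when (n + m) is replaced by 2.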

FIGURE 7.1

Important current components for power dissipation in a DRAM chip. (Redrawn from “Trends in Low-Power RAM Circuit Technologies”, K. Itoh, K. Sasaki and Y. Nakagome, Proc. IEEE, Vol. 83, No. 4, pp. 524–543, 1995.)

A sense amplifier is a necessity in a DRAM for the refresh process, in which each bit line is charged and discharged with a relatively large swing ΔVBL, drawing a charging current of CBL·ΔVBL·f, where CBL is the bit line capacitance. At a higher operating frequency f, the frequency-dependent components of the power requirement become large; neglecting the smaller components, they can be combined and approximated as

$$I_{DD} \approx \left( m\, C_{BL}\, \Delta V_{BL} + C_{PT}\, V_{int} \right) f + I_{DCP} \tag{7.6}$$

The expressions for the DRAM power requirement in Equations (7.1) to (7.6) show obvious ways of reducing it. Reducing the charging capacitances mCBL and CPT and lowering the external and internal voltages VDD, Vint, and ΔVBL all need careful consideration; the bit line dissipation charge (mCBL ΔVBL) needs special attention, as it dominates the total active power.

Sufficient signal-to-noise (S/N) ratio must be maintained for reliable DRAM operation as the cell signal is small in magnitude and resides on the floating bit line, which itself is susceptible to noise. Charge transfer ratio between a cell and a bit line is equal to CS/(CS + CBL), where CS is the storage cell capacitance. Hence generated signal vS for the half-VDD precharging scheme is expressed as

$$v_S = \frac{C_S}{C_S + C_{BL}} \cdot \frac{\Delta V_{BL}}{2} = \frac{Q_S}{2\,(C_S + C_{BL})} \tag{7.7}$$

where QS = CS·ΔVBL is the cell signal charge. Hence a reduction in CBL is advantageous in two ways: it reduces IDD while increasing the signal vS. Reducing ΔVBL, by contrast, reduces IDD but degrades the signal charge [4].
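The trade-off between CBL and ΔVBL can be checked with a few lines of arithmetic. This Python sketch assumes that, for half-VDD precharging, the cell signal is the charge transfer ratio CS/(CS + CBL) times half the bit line swing, and that the bit line charging current is m·CBL·ΔVBL·f. The function names and all component values are hypothetical and serve only to show the direction of each change.

```python
def bitline_charging_current(m, c_bl, dv_bl, f):
    """Bit line (dis)charging current m * CBL * dVBL * f, in amperes."""
    return m * c_bl * dv_bl * f


def cell_signal(c_s, c_bl, dv_bl):
    """Assumed half-VDD-precharge signal: CS / (CS + CBL) * dVBL / 2, in volts."""
    return c_s / (c_s + c_bl) * dv_bl / 2.0


def signal_charge(c_s, dv_bl):
    """Cell signal charge QS = CS * dVBL, in coulombs."""
    return c_s * dv_bl


if __name__ == "__main__":
    m, f, c_s = 1024, 10e6, 30e-15  # placeholder array width, frequency, CS
    cases = {
        "baseline":  (300e-15, 1.5),
        "half CBL":  (150e-15, 1.5),
        "half dVBL": (300e-15, 0.75),
    }
    for label, (c_bl, dv_bl) in cases.items():
        i_bl = bitline_charging_current(m, c_bl, dv_bl, f)
        v_s = cell_signal(c_s, c_bl, dv_bl)
        q_s = signal_charge(c_s, dv_bl)
        print(f"{label:>9}: I_BL = {i_bl * 1e3:.2f} mA, "
              f"vS = {v_s * 1e3:.1f} mV, QS = {q_s * 1e15:.1f} fC")
```

With these placeholder numbers, halving CBL lowers the current and raises vS, whereas halving ΔVBL lowers the current but also halves QS and vS.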

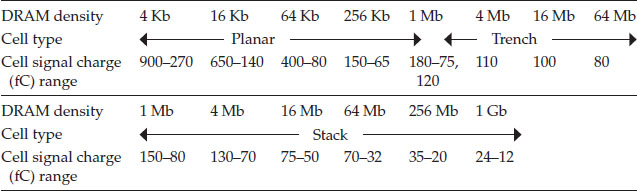

The magnitude of the signal charge has fallen considerably with increasing DRAM capacity, mainly because of the reduction in minimum feature size (F), the reduction in supply voltage, and the type of cell, as shown in Table 7.3. For reliable DRAM operation, QS must be kept above a critical value so that the bit line signal remains distinct from memory array noise and soft errors. The value of QS is also affected by higher Vth, Vth variation, and Vth mismatch among devices. Vth has to be increased as memory capacity grows, even at lower VDD, because the maximum refresh time, tREFmax, of the DRAM must increase with memory capacity, as mentioned in Section 7.6.1.1. Variations in Vth disturb the half-VDD sensing in the DRAM, and the Vth mismatch between the cross-coupled MOSFETs in the large number of sense amplifiers also increases with increased memory capacity and decreased F, which further degrades the sensing signal. The calculated maximum Vth mismatch for NMOS transistors in DRAM sense amplifiers is shown in Table 7.4, which also shows the improvement obtained with redundancy [8]. The mismatch doubles as F shrinks from 0.35 μm to 0.07 μm. Though not a very serious problem for DRAMs at around the 0.1 μm technology node, it becomes serious at smaller values of F because of high process sensitivity. One of the main problems created by transistor mismatch (along with bit lines deviating from their design characteristics) is offset noise. An offset-compensating bit line sensing scheme was proposed in [11], but it could not be applied to commercial DRAMs because the compensating circuit was too large to fit in the cell pitch. Another offset-cancellation sense amplifier, which could shrink to fit in the cell pitch, was also proposed [12]. However, it uses extra chip area and spends extra time on offset cancellation before word line activation, which can significantly reduce sensing speed. The large current drawn by the differential amplifiers is an additional problem. A direct current sensing technique improves sensing performance by removing the timing constraints on the column-select line signals, but low-voltage operation then requires multistage amplification because of the small value of ΔVBL [13]. An offset-compensated pre-sensing scheme was employed along with the direct sensing scheme, which effectively reduces the total time for the read operation [14]. However, it requires additional charge pumping to make up for possible leakage of the boost voltage source used for equalization, and it needs at least a 3% chip size overhead.

TABLE 7.3

Cell Signal Charge Range Variation with DRAM Density and Cell Type

TABLE 7.4

Maximum Vth Mismatch without and with Redundancy

The column-redundancy technique seems a better option for overcoming the Vth mismatch/offset noise problem, as it can replace a certain percentage of sense amplifiers that have excessive variation in Vth (δVth). For example, if the ratio of spare columns to normal columns is 1/256 (0.4% of area), the memory capacity limitation is extended by at least three generations [8]; Table 7.4 shows the advantage of using redundancy for reducing Vth mismatch. An efficient test method to detect and replace defective sense amplifiers, or those with excessive δVth, is also needed. On-chip error-checking and correcting (ECC) schemes are almost essential and are taken up separately.

7.5 Power Dissipation for Data Retention

While the DRAM is in data retention mode, the refresh operation retains the data. In normal course, m cells of a word line are read simultaneously and restored. The process is done for the n word lines. Obviously n also becomes the number of refresh cycles and current given by Equation (7.6) flows during every cycle. The frequency f at which the current flows is (n/tREF), where tREF is the refresh time of cells in the retention mode and reduces with increasing junction temperature. So, from Equation (7.6), data retention current is given as [4]

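$$I_{DD} \approx \left( m\, C_{BL}\, \Delta V_{BL} + C_{PT}\, V_{int} \right) \frac{n}{t_{REF}} + I_{DCP} \tag{7.8}$$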

When the DRAM is in the active mode, the cell leakage current and junction temperature are at their maximum. The cells must then be refreshed at a very high rate, and the refresh time takes the value tREF max, which is much smaller than the tREF available in retention mode. Thus IDCP is relatively small in the active mode, but during refresh-only periods it becomes larger than the AC current component because (n/tREF) is small, so it too needs to be minimized.
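The shift in balance between the AC and static terms can be illustrated numerically. The Python sketch below assumes the retention current has the form (m·CBL·ΔVBL + CPT·Vint)·(n/tREF) + IDCP, that is, the active-current approximation with f replaced by n/tREF. The function name and all parameter values are hypothetical.

```python
def retention_current(m, n, c_bl, dv_bl, c_pt, v_int, t_ref, i_dcp):
    """Split the assumed retention current into AC and static parts (amperes)."""
    refresh_rate = n / t_ref                       # refresh cycles per second
    charge_per_cycle = m * c_bl * dv_bl + c_pt * v_int
    ac = charge_per_cycle * refresh_rate
    return {"ac": ac, "static": i_dcp, "total": ac + i_dcp}


if __name__ == "__main__":
    # Placeholder array and circuit values, for illustration only.
    common = dict(m=4096, n=4096, c_bl=200e-15, dv_bl=1.5,
                  c_pt=100e-12, v_int=1.5, i_dcp=50e-6)
    for t_ref in (64e-3, 1.0):
        r = retention_current(t_ref=t_ref, **common)
        print(f"tREF = {t_ref * 1e3:7.1f} ms: AC = {r['ac'] * 1e6:6.2f} uA, "
              f"static = {r['static'] * 1e6:6.2f} uA")
```

With these placeholder values the AC term exceeds the static term at the short refresh time but falls well below it once tREF is extended, which is why IDCP must also be minimized for retention mode.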

The need to reduce power consumption on a DRAM chip cannot be overemphasized. Continuous attempts have been made to decrease it in the face of increasing memory density and capacity. At each generation low-power circuits have been developed, and combined with technology developments they have produced a downward trend in power consumption [4]. Continued reduction in power dissipation became possible mainly through the following:

1. Partial activation of multi-divided bit line and shared input/output

2. Use of CMOS technology in place of NMOS technology, including half-VDD precharging of the bit line

3. Reduction in the supply voltage (VDD) and use of on-chip voltage converters

7.6.1 Bit Line Capacitance and Its Reduction

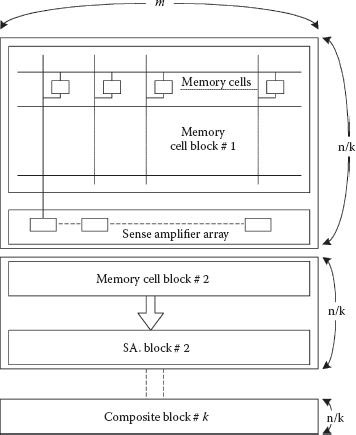

Figure 7.2 shows basic architecture of a (m × n) DRAM array comprised of m columns and n rows with k subarrays. The rows have been divided such that each subsection has (n/k) rows, thereby dividing the bit-line capacitance to a manageable value. All the m cells connected to a word line are refreshed simultaneously, and the process is repeated sequentially for the remaining (n – 1) rows one at a time, without selecting a bit line. For proper functioning (i.e., to avoid conflict with refreshing process), normal READ/WRITE operation is done during the rest period. However, a successful refresh operation needs to be performed for each row within the maximum allowable refresh time, tREF max, which depends on the maximum leakage current of the memory cell; the maximum refresh cycle time is (tREF max/n).

FIGURE 7.2

Basic architecture of an m × n DRAM core subdivided into k subarrays. (Redrawn from “Trends in Megabit DRAM Circuit Design”, K. Itoh, IEEE J. Solid-State Circuits, Vol. 25, No. 3, pp. 778–788, 1990.)

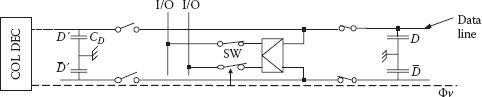

As the memory capacity (m × n) increases, the rows are divided further; that is, k is increased to limit (n/k), or in other words to keep the bit line capacitance CBL within safe bounds. This results in a sharp rise in the number of sense amplifiers on the chip, m × k; a 1 Gbit chip may require as many as a million amplifiers. The number of sense amplifiers grew because the initial scheme used one sense amplifier at each column decoder division of a sub-block, as shown in Figure 7.2. In another approach, the shared sense amplifier scheme, which was in practice at the 256 Kbit–4 Mbit DRAM capacity level, two sub-data lines share one sense amplifier [15,16]. In this scheme the cell signal doubles, as the bit line capacitance is half that of the conventional scheme. Chip size was reduced because of the smaller number of sense amplifiers and associated circuitry, and hence the power consumed in the sense amplifiers was also reduced. However, the power dissipated in charging and discharging the bit line capacitance remained the same as in the conventional case, because nothing changed in the way the bit lines were (dis)charged. This line of action exhausted its usefulness at the 16 Mbit DRAM level with the combined use of shared sense amplifiers, shared I/O, and shared column decoders; one such arrangement is shown in Figure 7.3. The advantage of shared I/O is that it further halves the multi-divided bit line [17]. Only one sense amplifier along the bit line is activated, achieving partial activation, which lowers the power dissipation. Further reduction in power is achieved by increasing the value of n, as discussed later.
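The growth in sense amplifier count follows directly from the m × k relation. The Python sketch below is a rough illustration for the conventional (unshared) scheme; the function name and the choice of 1024 cells per divided bit line are assumptions made only to show how a 1 Gbit array could reach about a million amplifiers.

```python
def sense_amplifier_count(capacity_bits, m, cells_per_bitline):
    """Number of sense amplifiers m * k for an m x n array.

    Assumes one sense amplifier per divided bit line, with the n rows split
    into k = n / cells_per_bitline subarrays (values must divide evenly).
    """
    n = capacity_bits // m           # number of rows
    k = n // cells_per_bitline       # number of subarrays along each column
    return m * k


if __name__ == "__main__":
    one_gbit = 2 ** 30
    count = sense_amplifier_count(one_gbit, m=2 ** 15, cells_per_bitline=1024)
    print(f"1 Gbit array, 1024 cells per bit line: {count:,} sense amplifiers")
```

Because m × k equals the capacity divided by the number of cells per divided bit line, the count does not depend on how the array is split between rows and columns; only shortening or lengthening the divided bit line changes it.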

FIGURE 7.3

Memory array organization for showing shared column decoder. (Modified from “An Experimental 1 Mb DRAM with On-Chip Voltage Limiter”, K. Itoh et al., ISSCC, pp. 282–283, 1984.)

In addition to the division of bit line, a multi-divided word line structure is also available. In hierarchical word line structure, a word line is divided into a few sub-word lines; hence partial activation of word lines becomes possible. Though the architecture has some speed performance drawback, it has great potential for power reduction [18]. The architecture can easily reduce chip power dissipation to half.

Use of partial activation of multi-divided data line reduces its effective charging capacitance, but word line length m is not reduced without increasing tREFmax of the cell. To know the reason for it, the refresh-busy rate γ, expressed in Equation (7.9), needs a bit of consideration [16]:

$$\gamma = \frac{n\, t_{RC\,min}}{t_{REF\,max}} = \frac{M\, t_{RC\,min}}{m\, t_{REF\,max}} \tag{7.9}$$

Here M and tRCmin are the memory capacity and the minimum cycle time, respectively, and it is better to have smaller γ for having larger active time. Hence, for a given DRAM capacity M and fixed tRC min, it is necessary to maintain the product of m and tREF max, which will keep γ constant. For increased capacity of DRAM, m ∗ tREF max shall have to be increased proportionally. Normally m and tREF max are increased in the same proportion, to have a compromise between cell array power consumption and the cell leakage current. Value of tREF max has been doubled at each generation though it is difficult to maintain in practice because of its dependence on the cell leakage current [19]. Hence one of the solutions is to use a new refreshing scheme that employs multi-divided word line [18]. With divided word line, given that m is reduced, maximum power consumption is reduced, which can allow increased tREF max. More recent methods of improving refresh time shall be discussed in Section 7.8.
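The scaling argument for γ can be verified with a short calculation. This Python sketch assumes the refresh-busy rate is the time spent refreshing all n = M/m rows, n·tRCmin, divided by tREF max. The function name and the capacities, word line lengths, and timings used are hypothetical.

```python
def refresh_busy_rate(capacity_bits, m, t_rc_min, t_ref_max):
    """Fraction of time spent on refresh: (M / m) * tRCmin / tREFmax."""
    n = capacity_bits / m            # rows to refresh within tREFmax
    return n * t_rc_min / t_ref_max


if __name__ == "__main__":
    t_rc_min = 100e-9
    # One generation step: capacity x4, with m and tREFmax each doubled.
    gen_a = refresh_busy_rate(2 ** 24, m=2 ** 12, t_rc_min=t_rc_min, t_ref_max=32e-3)
    gen_b = refresh_busy_rate(2 ** 26, m=2 ** 13, t_rc_min=t_rc_min, t_ref_max=64e-3)
    # Same capacity step with m and tREFmax left unchanged.
    gen_c = refresh_busy_rate(2 ** 26, m=2 ** 12, t_rc_min=t_rc_min, t_ref_max=32e-3)
    print(f"gamma A = {gen_a:.4f}, B = {gen_b:.4f}, C = {gen_c:.4f}")
```

Doubling both m and tREF max keeps γ unchanged when the capacity quadruples, whereas leaving them fixed makes γ four times larger, which is the reason the product m·tREF max has to grow in proportion to M.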

CMOS technology has conclusively been shown to reduce power dissipation. In DRAMs several factors combine to produce this result, such as half-VCC (array voltage) bit line precharging and the use of CMOS peripheral circuits. For example, the generated cell signal vS of Equation (7.7) was derived assuming a bit line precharged to half-VDD (supply voltage), which has now become standard practice. Charging and discharging the bit lines needs less power, because the precharge level is established not by charging the bit lines to VDD but by charge sharing between the two halves of the bit line. In addition, peak currents are nearly halved during sensing and precharging. This permits narrower supply lines, which decreases the parasitic capacitance of the wiring; that not only makes the DRAM faster but also reduces the generated noise.

For the NMOS case, all row decoders except the one selected were discharged from the precharge voltage, whereas for the CMOS case only one selected decoder is discharged and all the rest of the decoders remain at the precharge level. This results in a sharp decrease in power dissipation for CMOS decoders and could reach 4% of that in NMOS in normal course [10]. Peak current is also reduced to nearly half that of NMOS case, with consequential advantage.

Clock generators are essential components on DRAM chips. CMOS clock generators have been shown to consume about half the power (of NMOS clock generators) including the dissipation for (dis) charging the load capacitance. CMOS clock generators have smaller node capacitances and occupy nearly half the chip area compared to usual NMOS circuits, on account of simpler circuitry. It all results in lower power consumption [10].

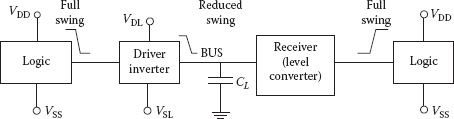

7.6.3 On-Chip Voltage Reduction/Conversion

For NMOS DRAMs the normal supply voltage was +12 V in the early 1970s; it soon changed to 5 V for CMOS DRAMs for better reliability and to minimize hot-electron injection through the gate oxide. Standardizing the supply voltage was essential for IC manufacturers as well as users. Around the 64 Mbit DRAM density level it became obvious to manufacturers that continuing with a single 5 V supply VDD was not feasible, on the twin counts of excessive power dissipation and the scaling down of the transistors on the chip. Although it was not practical to reduce the supply voltage with every generation of reduced minimum feature size, it was predicted very early that beyond 1 Mbit it would be very difficult to reduce power dissipation without further reducing the supply voltage. From the early 1990s, with 1.5 V DRAMs designed for mobile device applications, a sharp reduction in the voltage was foreseen. At the same time, at the low operating voltage of the bit line (which was reduced further by VCC/2 precharging), it becomes necessary to raise the word line voltage even above VCC to overcome the threshold offset voltage. A back-bias voltage (changing with the DRAM generations) was also needed on the chip. On-chip up- and down-conversion of the standard supply voltage therefore becomes essential for reducing power consumption and for reliable operation. A large number of circuits and techniques are now available for this purpose and are discussed in Section 7.7.

At 64 Kbit DRAM density level even when supply voltage was reduced to 5 V, disturbance due to noise was not prohibitive. Architecture was simple NMOS, full VDD precharge and open bit line with enough spacing between bit lines. With DRAM density moving into Mbit range spacing between bit lines decreased, giving rise to large coupling capacitance. Introduction of CMOS technology led to lower power consumption DRAMs and then to low voltage battery-operated systems, which reduced the amount of cell signal, which was already reduced with ½ VCC precharge scheme. With the generation of larger noises and reduction of cell signal, signal-to-noise ratio started to decrease rapidly. At 4–16 Mbit stage, it became clear that unless measures were taken, differentiation of noise from signal would become difficult. The second half of the 1980s saw a lot of activity in the study of different noises on the DRAM chip and efforts in improving SNR; either cell signal was increased or noises were decreased.

For signal improvement, the charge transfer ratio (~Cs/CBL) was maintained by increasing the storage capacitance Cs through three-dimensional capacitors such as trench or stacked capacitors, and later through more advanced versions and techniques such as hemispherical grains and high-permittivity dielectrics. However, a limit of 25–30 fF was considered practically safe, and attempts were made to reduce the bit line capacitance CBL. Half-VCC precharging was also an important scheme, as it enabled doubled storage capacitance for a fixed electric field across the capacitor insulator [19]. Multidivision of the data lines into blocks, as discussed in Section 7.6.1, was found essential in this regard. Reducing the bit line wire width reduced CBL; however, the inter-bit line capacitance increased with DRAM density as the spacing between bit lines decreased.

With storage capacitance Cs remaining almost constant, and CBL also nearly same within practically reducible limits, only choice was to reduce noises of all types, which continued to increase with rise in DRAM density.

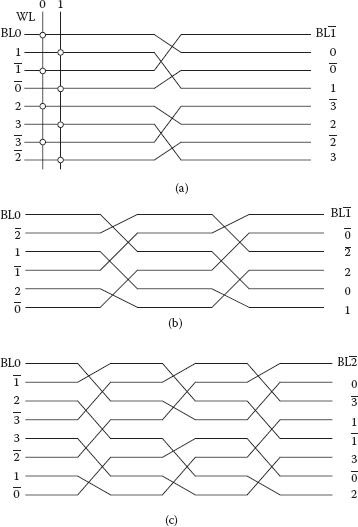

Around the 16 Mbit DRAM density level, coupling (interference) noise became a major problem in realizing high-speed, high-density DRAMs. There were mainly two types of noise: noise due to inter-bit line (BL) coupling capacitance, and BL-word line (WL) feedback noise in the access transistor. Inter-BL coupling noise originated from signals in adjacent BL pairs, whereas intrapair coupling noise arose between the true and complementary BLs. Two schemes addressed this: a twisted bit line (TBL) scheme, in which the BLs are divided into four sections with each BL pair twisted twice, and a modified twisted bit line (MTBL) scheme, in which, in addition to twisting as in TBL, BLs are not placed adjacent to their pair BL. These schemes eliminated inter-BL coupling noise and were claimed to have eliminated intra-BL coupling noise as well [20,21]. The TBL and MTBL schemes were applicable to both the open and folded BL approaches, with the BLs divided equally into four sections and each BL pair twisted at two of the four section boundary points.

The TBL scheme has been used in some DRAM chips [21,22], but it could not suppress intra-BL coupling noise and required additional chip area for dummy cells and BL twisting. In addition, the signal loss in a 1 Gbit DRAM is estimated to be >30% of total signal. To overcome these limitations multiple TBL has been proposed [23]. It eliminated both the inter-and intra-BL coupling noise but imposed extreme constraints on chip design and required special process and design technologies, and it could not be applied to the folded-bit line arrangement, commonly used in DRAMs. In a different technique, a data-line shielded stacked capacitor (STC) cell was employed in which position of the data line was changed with respect to the storage and plate layer, compared to earlier STC cells; the data-line is shielded by either the storage or the plate layer [24]. Interference noise was reduced below 7% at 2.8 μm data-line pitch, without transposing the pair of data lines. Extra chip area was not needed.

The capacitor-over-bit-line (COB) cell structure suppresses the inter- and intra-BL interference, but its fabrication process was considered difficult. Storage node contact formation needed small, deep contact etching, and there was a large topology difference between the cell array and the peripherals, which was expected to be more pronounced in the gigabit range [25]. Another modified twisted bit line (MTBL) structure was proposed to overcome the problems of the earlier TBL schemes and the COB structure. Figure 7.4(a) shows one of the proposed structures [26,27], in which the BLs are twisted in a different way so that the inter-BL interference is converted into common-mode noise and the intra-BL noise is suppressed by a shielding effect. The twisting can be applied to any BL precharging scheme and, compared with the conventional folded bit line, it reduced inter- and intra-BL noise by 50%. The scheme does not need any special layout or sense amplifier and can be applied to the open BL structure with the same benefits. Further noise reduction, by approximately 66% and 75%, respectively, is possible by twisting sets of six or eight BLs, as shown in Figure 7.4(b) and (c).

FIGURE 7.4

MTBL configuration having (a) four BLs with single twisting, (b) six BLs with double twisting, and (c) eight BLs with triple twisting. (“Multiple Twisted Data Line Techniques for Multigigabit DRAMs”, D.-S. Min and D.W. Langer, IEEE J. Solid State Circ., Vol. 34, pp. 856–865, 1999.)

In high-density DRAMs, word line coupling noise also becomes troublesome, mainly because the WL voltage is higher than the supply voltage and because of metal WL strapping. Even for DRAMs with sub-WL driver schemes, with scaled-down threshold voltage and supply voltage, WL coupling can become more problematic than BL coupling noise. Similar to MTBL, a multiple twisted word-line (MTWL) scheme has been used effectively. Four WLs are twisted at the center of the WLs in the same way as for the BLs shown in Figure 7.4, which reduces the effective coupling capacitance of adjacent WLs. While twisting, two WLs are separated by two WL pitches, considerably reducing capacitive coupling [27]. A 256 Mbit DRAM was fabricated with transistor threshold Vth = 1.0 V and sheet resistances of 25 and 0.07 Ω/sq. for polycide and metal WLs, respectively, and the noise-to-signal ratio (NSR) was simulated and measured for (1) the conventional WL, (2) the proposed MTWL, and (3) combined MTWL and MTBL. The NSR increases rapidly as the WL pitch is scaled down. For a WL pitch of 0.37 μm at the 1 Gbit DRAM level, the NSR is 23% for the conventional WL scheme, which, when combined with BL coupling noise, becomes unacceptably high for a functional DRAM. With the MTWL scheme, a 35% reduction in NSR is achieved in comparison to the conventional WL. For the 256 Mbit DRAM, the combination of the MTWL and MTBL schemes achieved a 64% reduction in NSR compared to the conventional WL and TBL implementation.

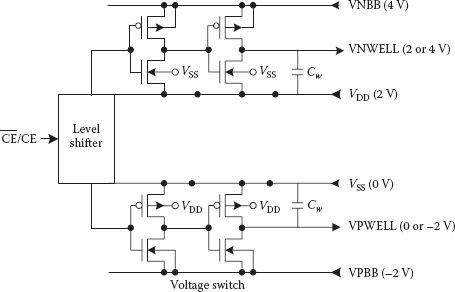

7.7 On-Chip Voltage Converter Circuits

Operation of DRAMs at low voltage became an extremely important requirement for reducing power consumption. It was also essential for maintaining the reliability of the DRAMs. With continued reduction in oxide film thickness (tox), excess electrical stress had to be avoided and the generation of hot electrons had to be minimized. With each successive technology generation, the internal chip voltages differed and their ratio to the supply voltage continued to change. At the same time, the supply voltage could not be restandardized too frequently. Only on-chip voltage converters could reconcile these conflicting requirements, and hence they became essential. For example, the supply voltage was converted on chip to nearly 2.5 V for the 64 Mbit DRAM having tox = 10 nm, and was further reduced to 2–2.5 V and 1.5–1.8 V for 256 Mbit and 1 Gbit level DRAMs [28]. Another extremely important reason for further reduction in operating voltage was the demand for DRAMs in battery-operated hand-held mobile and digital devices. On the other hand, voltage boosting was needed for the word line, and other on-chip voltage levels were needed for half-VCC operation of the bit line, the back-bias voltage, and so on.

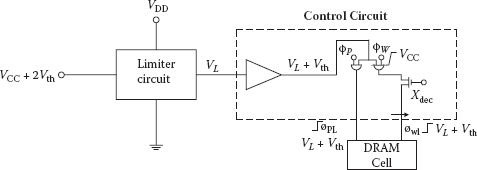

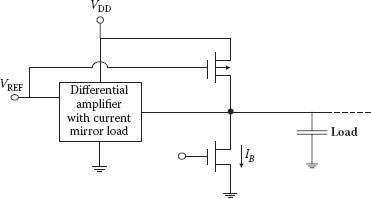

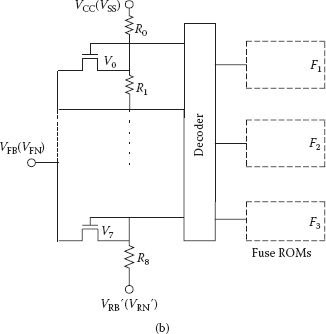

Probably the first on-chip supply voltage converter for DRAMs was given by Mano and others in 1983 for protecting MOS transistors from hot carrier injection [29]. A 5 V supply was converted to 3 V for the cell array circuitry, while the rest of the peripheral and interface circuitry worked on 5 V. Soon afterward, a voltage converter was given by Itoh and others, as shown in Figure 7.5, in which a limiter block converted VDD to VL (nominal value 3.7 V) and the word line voltage was (VL + Vth). The bit line was precharged to VL (not VCC/2), and the precharge clock also received (VL + Vth). A charge pump was used to generate VCC + 2Vth for the voltage limiter. For an on-chip voltage down converter (VDC), it is extremely important to provide a regulated and accurate voltage when the large DRAM array current changes from zero to peak and vice versa. The VDC should also be robust against changes in external power supply and temperature. At the same time, it is important to provide on-chip burn-in capability. Figure 7.6 shows an early VDC, which basically consisted of a current mirror differential amplifier and a common source output transistor [30] and converts a 5 V VDD to a stable 3.3 V. The gate width of the output transistor has to be very large, and the gate voltage has to respond quickly when the output goes low, so the VDC needs a larger amplifier current. A bias current is needed to minimize output voltage deviation when the load current becomes nearly zero. An important requirement of on-chip voltage converter circuits is that the reference voltage VREF must be accurate and stable against variations in supply voltage and operating temperature, and against variations in transistor parameters caused by the limitations of fabrication processes. A band-gap VREF generator is considered to be a good choice, and in earlier reports bipolar transistors were deployed for it [31]; later, CMOS VREF generators making use of threshold voltage differences were proposed [32].

FIGURE 7.5

A voltage limiter arrangement for a DRAM cell. (Modified from “An Experimental 1 Mb DRAM with On-Chip Voltage Limiter”, K. Itoh et al., ISSCC, pp. 282–283, 1984.)

FIGURE 7.6

A typical voltage down converter. (Redrawn from “Trends in Low-Power RAM Circuit Technologies”, K. Itoh, K. Sasaki and Y. Nakagome, Proc. IEEE, Vol. 83, pp. 524–543, 1995.)

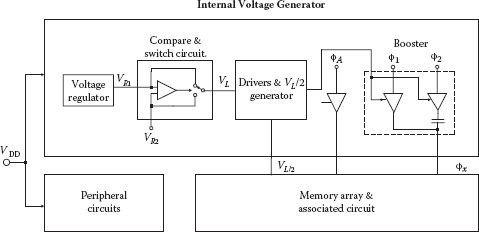

FIGURE 7.7

An internal voltage generating scheme. (Redrawn from “Dual-Operating-Voltage Scheme for a Single 5V 16 Mbit DRAM”, M. Horiguchi et al., IEEE J. Solid State Circ., Vol. 23, pp. 612–617, 1988.)

At the 16 Mbit DRAM level, a dual-operating-voltage scheme for a single 5 V supply was suggested in which the memory array operating voltage was chosen to be 3.3 V, since up to that density level internal voltage converters were providing satisfactory performance. Moreover, a voltage lower than 3.3 V at that stage would have required a larger storage capacitance for obtaining a reliable cell signal, and a voltage higher than 3.3 V would have produced an excessive electric field across the memory cell capacitor [33]. Voltage converters used a large standby current, and the dual-operating-voltage scheme was shown to be the best choice in terms of speed and reliability of the devices used; it also reduced power consumption and kept the cell signal charge at a suitable level. The proposed internal voltage generator is shown in block form in Figure 7.7 along with the different voltage levels. The dual-operating-voltage scheme has an inherent problem of racing due to mismatch between the memory array and the peripherals. A compare-and-switch circuit is designed to overcome this problem by raising the memory array operating voltage when the supply voltage is considerably higher than 5 V. To achieve sufficient drivability and voltage accuracy, a driver using a simple differential amplifier with a PMOS load was found to be a suitable choice.

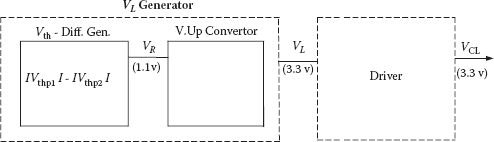

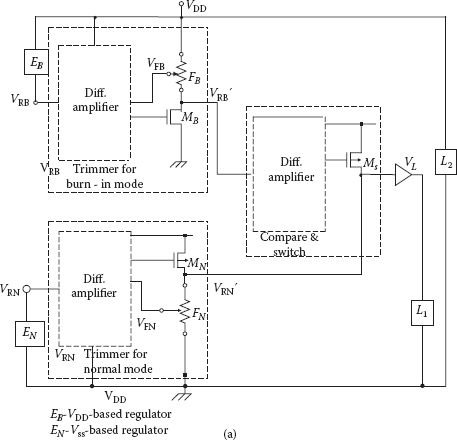

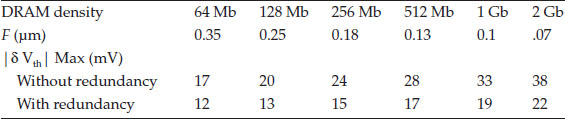

Because of the importance of on-chip voltage limiters, several designs, including the dual-operating-voltage scheme just discussed, were reported. However, all of them had some common deficiencies, such as imprecise voltage regulation and sensitivity to threshold voltage variation, both due to variations in fabrication processes. In addition, the effects of voltage bounce and feedback stability were not addressed [31,33–36]. These internal voltage generators either depended on the threshold voltage of MOSFETs (with its inherent variations) [34,35], which had a large dependence on the external supply voltage and operating temperature, or depended on a band-gap reference [31,36], which used bipolar transistors on a CMOS chip, with consequent limitations including fabrication complexity. To overcome these deficiencies and some process limitations, a CMOS DRAM voltage limiter comprising a precise internal voltage generator and a stabilized driver, shown in block form in Figure 7.8, was given by Horiguchi and others [32]. In this scheme, an internal voltage generator comprising a PMOS-Vth difference generator with a voltage-up converter and fuse trimming was preferred. The generated voltage (|Vthp1| – |Vthp2|) remains stable: it is affected neither by fluctuations in the bulk bias voltage (VBB) that occur when the large bit line capacitance is charged and discharged, nor by noise on the main chip supply voltage.

FIGURE 7.8

A voltage limiter in block schematic form. (Redrawn from “A Tunable CMOS-DRAM Voltage Limiter with Stabilized Feedback Amplifier”, M. Horiguchi et al., IEEE J. Solid State Circ., Vol. 25, no. 5, pp. 1129–1135, 1990.)

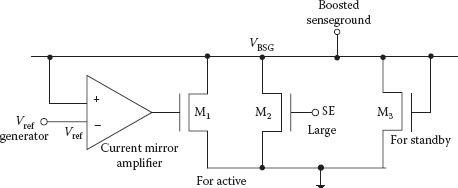

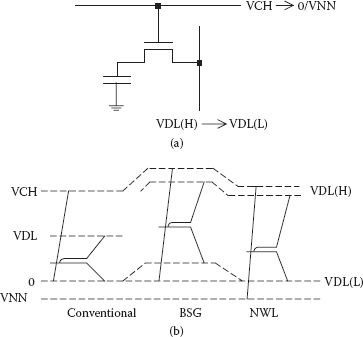

Boosted sense ground (BSG) and negative word line (NWL) schemes are used to extend the data retention time of DRAMs. In the BSG scheme, the low level of the bit line is slightly boosted to suppress the sub-threshold current of the unselected memory cell word line transistors in the active memory array. In the BSG scheme suggested by Akasura and others [37], the reference voltage VREF was set at 0.5 V, as shown in Figure 7.9. The large NMOS M2 turns on at the beginning of sensing, which suppresses the unwanted rise in the boosted sense ground voltage (VBSG) due to the sensing current in the bit line. In the standby mode, the current mirror amplifier is inactive and NMOS M3 clamps the level of VBSG. The ground line that supplies charge to the BSG line is totally separate from the global ground lines, so other circuits are not affected. The BSG scheme reduces junction leakage current, which helps to increase the data retention time. However, in the BSG scheme the bit line swing becomes less than VCC, which makes it less suitable for low-voltage operation. The negative-voltage word line is another technique for refresh time improvement [28], through the suppression of sub-threshold leakage current. It is well known that the junction leakage current under the storage node and the sub-threshold leakage current affect the refresh characteristics of DRAMs; a small back-bias voltage VBB is therefore used to reduce the electric field between the storage node and the p-well under the memory cell. However, a small VBB level reduces the threshold voltage Vth of the access transistor, resulting in increased sub-threshold leakage current. The negative-voltage word line (NWL) technique suppresses this increase in sub-threshold current. In a conventional scheme the WL is controlled with VBB = −2 V; however, a 2.5- to 3-fold improvement in the refresh time was made possible by using both VBB and a low WL voltage level of −0.5 V. 
Since low values of Vth can be used, another advantage of the NWL technique is that it allows the high level of the word lines to be lower than the conventional level. This suggests that even at 1.2 V the full cell voltage level can be obtained using a boosted voltage Vpp′ (= 2VCC), which can easily be generated by a usual VPP generator. Figures 7.10(a) and (b) illustrate some voltage levels in the conventional, BSG, and NWL schemes.

FIGURE 7.9

Circuit diagram for boosted sense-ground scheme; SE enables the beginning of sensing. (Adapted from “A 34 ns 256 Mb DRAM with Boosted Sense-Ground Scheme,” M. Akasura et al., Proc. ISSCC, pp. 140–141, 1994.)

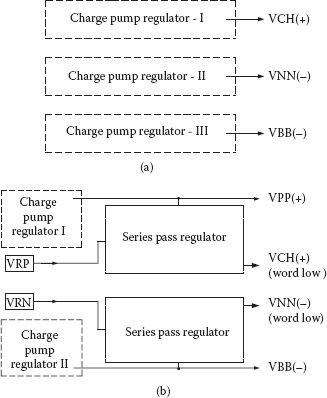

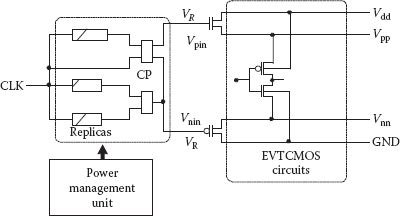

The NWL scheme requires highly regulated and accurate levels of the word line high voltage and the back-bias voltage VBB; otherwise, noise and/or variation in the chip power supply affect the access transistor threshold voltage, causing serious signal loss due to increased sub-threshold current. Hence, a precise on-chip voltage generator is a necessity, especially for gigabit-level DRAMs. Even a 0.1 V error in the gate-source voltage of the cell access transistor can increase the sub-threshold leakage current by an order of magnitude. In the conventional approach, charge pump regulators, shown in block form in Figure 7.11(a), provide the high and low WL voltages in a DRAM. The output generally has a ripple of nearly 0.2 V, which is reduced by combining a series pass regulator with the charge pump regulator, as shown in Figure 7.11(b). The series pass regulators need accurate WL offset voltages, which are made available by combining a band-gap reference with a differential amplifier and a current mirror offset voltage generator, where accurate offset voltages are produced using the mirror current, as illustrated in ref. [39]. The band-gap reference voltage generator can be selected from among many available circuits; however, a MOSFET-based one is preferred over a BiCMOS one for high-density DRAMs.

It is well established that on-chip supply voltage conversion has become essential, and a number of schemes are available. However, these conventional methods used as much as half to two-thirds of the total chip power at the 1 Gbit/4 Gbit DRAM level. As an alternative, two internal circuits were connected in series between the supply rails [38]. Both internal circuits were identical DRAMs with the same core and peripherals, except for the input/output buffers, and both operated with the same clock. As a result, the AC current waveform in both circuits was the same and, more importantly, the voltage across each DRAM was fixed at VDD/2 without any conversion process. The technique was successfully tested using two 4 Mbit DRAMs.

FIGURE 7.10

(a) Basic cell, and (b) voltage levels, in conventional, BSG, and NWL schemes. (Adapted from “A Precise On-Chip Voltage Generator for a Giga-Scale DRAM with a Negative Word-Line Scheme”, H. Tanaka et al., Symp. VLSI Circ. Dig. Tech. Papers, pp. 94–95, 1998.)

The substrate requires sufficient negative bias when the chip is active. The power dissipation of the bias generator should be low, and it should provide an adequate voltage level at high efficiency. As the working voltage of the DRAM chip decreases, the back-bias voltage level also has to go down; its range is decided as follows. The back-bias voltage (VBB) level depends on the threshold voltage of the access transistor in the DRAM, whose upper and lower limits are decided by the boosted word line voltage and by the suppression of the sub-threshold leakage current, respectively. For the upper limit, the word line voltage needs to be (VCC + 1.2 Vth), where Vth is the increased threshold voltage of the access transistor with its source at the VCC level [40]; the effective back-bias voltage becomes (VCC + |VBB|). For a practically available word line voltage of ~1.7 VCC, the upper limit of Vth is 0.88 V at VCC = 1.5 V. As mentioned, the sub-threshold leakage current decides the minimum required value of the threshold voltage. For the accepted memory cell capacitance of 30 fF (~25 fF has also been considered safe in a large number of reports) and a data hold time of 100 ms, Vtho (with the source connected to ground) should be larger than 0.6 V to keep the sub-threshold leakage current below 11 fA per cell. This requires a VBB lower than −1.0 V in a 1.5 V DRAM [40].
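The upper limit on Vth quoted above follows from simple arithmetic on the word line requirement. The following is a minimal sketch, assuming only the two figures given in the text (a required word line level of VCC + 1.2 Vth and an available boosted level of about 1.7 VCC); the function name is illustrative and does not come from ref. [40].

```python
def vth_upper_limit(vcc, boost_ratio=1.7, margin=1.2):
    """Largest access-transistor Vth (in volts) for which the available
    word line level boost_ratio * vcc still meets vcc + margin * Vth."""
    # boost_ratio * vcc >= vcc + margin * Vth
    #   =>  Vth <= (boost_ratio - 1) * vcc / margin
    return (boost_ratio - 1.0) * vcc / margin


limit = vth_upper_limit(1.5)
print(f"Vth upper limit at VCC = 1.5 V: {limit:.3f} V")
```

At VCC = 1.5 V the bound evaluates to 0.875 V, which rounds to the 0.88 V quoted in the text.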

FIGURE 7.11

Schematic of a voltage regulator for negative word line scheme. (a) Charge pump regulator (conventional), and (b) hybrid regulator. (Adapted from “A Precise On-Chip Voltage Generator for a Giga-Scale DRAM with a Negative Word-Line Scheme”, H. Tanaka et al., Symp. VLSI Circ. Dig. Tech. Papers, pp. 94–95, 1998.)

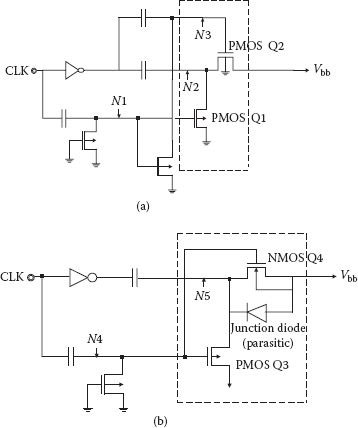

Few circuits are available for on-chip back-bias generation. Figures 7.12(a) and (b) show a conventional pumping circuit (CPC) and hybrid pumping circuit (HPC), respectively [40]. In CPC, which uses two PMOSs, the VBB could not be pumped lower than |Vthp| – VCC, whereas in HPC it could reach (–VCC). Working of the HPC can be understood by following the clock when it is low and then high. When CLK is low, node voltage N5 is clamped to ground level. When CLK changes to high, node N4 rises to |Vthp| and by capacitive coupling node N5 voltage level and VBB become −VCC. In HPC no threshold voltage is lost while generating VBB. An important precaution for the HPC is that the NMOS used in the pumping circuit needs to be fabricated in a triple-well structure to avoid the minority carrier injection, which could destroy stored data.
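The difference between the two pumps can be made concrete with the limits stated above. This is a small sketch under stated assumptions: the value of |Vthp| is hypothetical (the text does not give one), and the −1.0 V requirement is the figure quoted earlier for a 1.5 V DRAM.

```python
def vbb_floor(vcc, vthp_abs, scheme):
    """Most negative VBB (in volts) each pump can reach, per the limits in the text."""
    if scheme == "CPC":
        # Conventional pump loses one PMOS threshold: |Vthp| - VCC
        return vthp_abs - vcc
    if scheme == "HPC":
        # Hybrid pump loses no threshold voltage: -VCC
        return -vcc
    raise ValueError(f"unknown scheme: {scheme}")


VCC = 1.5            # supply voltage discussed in the text
VTHP_ABS = 0.7       # hypothetical |Vthp|, chosen only for illustration
VBB_REQUIRED = -1.0  # VBB must be lower than this in a 1.5 V DRAM

for scheme in ("CPC", "HPC"):
    floor = vbb_floor(VCC, VTHP_ABS, scheme)
    print(f"{scheme}: VBB floor = {floor:+.2f} V, "
          f"meets requirement: {floor < VBB_REQUIRED}")
```

With these assumed numbers the conventional pump stops short of the required level, while the hybrid pump has margin to spare; a different |Vthp| would shift the CPC result accordingly.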

FIGURE 7.12

Back-bias generator: (a) conventional circuit, and (b) hybrid pumping circuit (HPC). (“An Efficient Back-Bias Generator with Hybrid Pumping Circuit for 1.5V DRAMs”, Y. Tsukikawa et al., Symp. VLSI Circ. Dig. Tech. Papers, pp. 85–86, 1993.)

7.7.2 Voltage Limiting Schemes

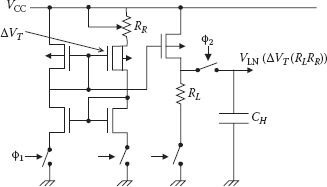

On-chip voltage limiters are extremely important for reducing DRAM power dissipation and enhancing device reliability. The utility of a voltage limiter is considerably improved when it also generates a precise voltage during burn-in and the stress conditions are applied automatically. A DRAM voltage limiter with a burn-in test mode was given by Horiguchi and others, as shown in Figure 7.13(a) [41]. It is based on a simple arrangement where the DRAM core (circuit L1) operates on the internally generated voltage VL, and the peripherals (circuit L2) and the voltage limiter operate on the external supply voltage VDD. In a burn-in test VL and VDD are raised, and a number of schemes are available; however, these have focused mainly on voltage stability under normal operation [41]. The dual-regulator dual-trimmer scheme shown in Figure 7.13(a) is a practical scheme that not only provides a precise high voltage for the burn-in test but also maintains a constant limited voltage under normal conditions. Here the compare and switch outputs exceed the two regulated and trimmed voltages VRN and VRS. The regulator EB keeps (VDD – VRB) constant, independent of temperature, which is an important condition for proper circuit operation. An accurate burn-in voltage is obtained by simply raising VDD. In addition, the two sets of trimmers TB and TN reduce any deviations in the generated voltage due to process variations, especially the change in threshold voltage Vth. The circuit realization of the VRB and VRN regulators used a biasing circuit that employed the PMOS threshold voltage difference scheme, although any other suitable circuit can also be used. Each trimmer block comprises a differential amplifier, an output transistor, and a variable negative feedback circuit (FB or FN), some details of which are shown in Figure 7.13(b). Deviations in the burn-in voltage as well as in the normal operating voltage were reduced to ±0.13 V while using only six fuse ROMs. 
Main limitation of the reference generator is the use of 100–1000 kΩ resistors and large amount of standby current because of the presence of quite a few differential amplifiers and DC current paths in the voltage divider, making it unsuitable for low power battery-operated DRAMs. A dynamic reference voltage generator that consumes considerably less current has been proposed, as shown in Figure 7.14 [42]. Threshold voltage difference ΔVth and resistance RR determine the reference current IR, which is mirrored to the output node and flows through RL, and output voltage depends only on ΔVth and resistance ratio. Hence, accurate output voltage is available even when ΔVth may vary due to fabrication process limitations, as polysilicon fuses accurately trim the resistance RR. In the experimental verification, pulse widths of φ1 and φ2 were taken as 200 ns and 100 ns, respectively, and the generator current could be reduced to less than 1 μA, making it suitable for battery-operated DRAMs [42].
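The statement that the output depends only on ΔVth and a resistance ratio can be written out in two steps. This is a sketch that assumes an ideal current mirror with unity gain, which the text does not state explicitly:

```latex
I_R = \frac{\Delta V_{th}}{R_R}, \qquad
V_{out} = I_R \, R_L = \Delta V_{th} \, \frac{R_L}{R_R}
```

Because only the ratio R_L/R_R appears, trimming RR with the polysilicon fuses can compensate for a process-induced shift in ΔVth, and the absolute resistance values cancel to first order.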

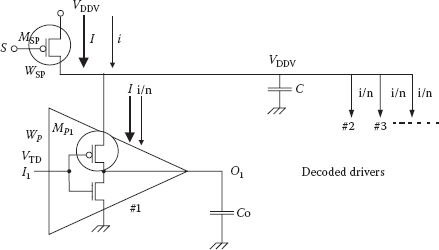

FIGURE 7.13

Voltage limiter with symmetrical dual trimmers using decoding scheme having (a) trimmers and compare-switch circuit, and (b) details of negative feedback circuit. (Redrawn from “Dual-Regulator Dual-Decoding-Trimmer DRAM Voltage Limiter for Burn-in Test”, M. Horiguchi et al., IEEE J. Solid State Circ., Vol. 26, pp. 1544–1549, 1991.)

FIGURE 7.14

A dynamic reference voltage generator. (Modified from H. Tanaka et al., “Sub-1-μA Dynamic Reference Voltage Generator for Battery-Operated DRAM”, Symp. VLSI Circ. Dig. Tech. Papers, pp. 87–88, 1993.)

Improvement of DRAM cell retention time is a critical factor in realizing high-density DRAMs, since it needs to be doubled with every generation and the chance of failure of weak cells rises. At the same time, the main source of trouble is that leakage currents continue to grow as the minimum feature size shrinks. Several approaches have been followed to extend the refresh time. Reduction in leakage currents is very important in this direction and is discussed in Section 7.7. Another kind of scheme relied on the knowledge that the data retention capability of a cell depends on operating temperature and voltage, and on the random parameter variations inherent in fabrication processes. Quite a few schemes tried to set optimum internal refresh periods by using temperature detectors [43,44,45] and internal voltage converters [45], or by measuring the voltage degradation of weak cells [46]; however, DRAM designs had to continue to improve their speed, which increased power dissipation as well. At the same time, the JEDEC standards added an extended temperature range of 85 to 95°C to the earlier range of 0 to 85°C, and such parts now operate in servers [47,48]. In the extended temperature range the DRAM data retention time became half of its value in the standard temperature range, which was 64 ms. Corresponding to the 64 ms refresh time, the refresh interval (tREFI), the interval at which a refresh command must be sent to each DRAM (with an internal counter advancing to the next part of the chip, as per the JEDEC standard), was 7.8 μs (64 ms divided by the number of rows) for a 256 Mbit DDR2 DRAM. With conventional methods, increasing DRAM density requires doubling of the refresh time (tREF) with every generation. 
The problem was circumvented in some cases by doing simultaneous refreshing of a number of rows with single command [49], but it requires larger charging current and hence delay in terms of refresh cycle time (tRFC), the amount of time that each refresh takes. For the current in-use DDR3 4 Gbit DRAM, it requires tRFC of 300 ns, and it may go to 350 ns for 8 Gbit [50].
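The refresh timing figures above can be checked with a short calculation. This sketch assumes 8192 refresh commands per retention window, a standard JEDEC figure that the text leaves implicit as "the number of rows"; the function names are illustrative.

```python
REFRESH_COMMANDS_PER_WINDOW = 8192  # assumption: JEDEC refresh commands per retention window


def t_refi_us(retention_ms, commands=REFRESH_COMMANDS_PER_WINDOW):
    """Average interval between refresh commands, in microseconds."""
    return retention_ms * 1000.0 / commands


def refresh_overhead(t_rfc_ns, retention_ms):
    """Fraction of time a rank is unavailable because it is refreshing."""
    return t_rfc_ns / (t_refi_us(retention_ms) * 1000.0)


for label, retention_ms in (("standard range, 64 ms", 64.0),
                            ("extended range, 32 ms", 32.0)):
    print(f"{label}: tREFI = {t_refi_us(retention_ms):.2f} us, "
          f"overhead at tRFC = 300 ns: {refresh_overhead(300.0, retention_ms):.1%}")
```

Halving the retention time halves tREFI and therefore doubles the fraction of time lost to refresh for a given tRFC, which is why a long tRFC becomes costly in the extended temperature range.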

Error control codes have also been used to minimize errors along with conventional techniques for refresh time extension. The combination of longer refresh time along with shorter refresh time for a few rows has also been used [51,52]. The major drawback of all such approaches was that multiple refreshing was done on a row basis and with cumbersome measurement methods. Probably, the main reason was the failure to identify the weak cell efficiently and develop a systematic approach. A multiple refresh scheme in terms of an algorithm, which depended on error correction, was also proposed for optimal selection of multiple refreshing periods [53]. Boosted sense ground (BSG) schemes discussed in Section 7.7 have been effectively used to double the refresh period [37].

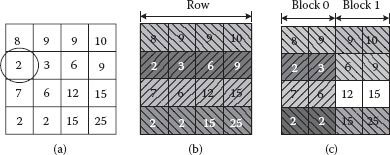

Instead of a row-based refreshing method, a novel method of refreshing on a block basis was given, in which a large number of multiple refresh periods match the required refresh periods of the blocks as closely as possible [54]. Consider a 16-cell memory as shown in Figure 7.15(a), where the data retention time of each cell is written in its square. In conventional refreshing, tREF must not exceed the minimum retention time of all the cells, that is, 2. If the array of Figure 7.15(a) is broken into 4-cell blocks as shown in Figure 7.15(b), then each block can use its own refresh period: 8 for row 1, 2 for rows 2 and 4, and 6 for row 3. If the array is further divided into blocks of two cells as shown in Figure 7.15(c), the blocks may be refreshed with tREF of 2, 4, 6, 7, 8, 9, 12, and 15. A DRAM architecture for block-based multiperiod refresh is also provided, covering generation of the refresh signal, bit selection, and multiple period refreshing. A polynomial-time algorithm is provided that computes the set of optimal refresh periods for the selected blocks. As the selected blocks comprise cells having closely valued tREF, these refresh periods are obtained during post-fabrication testing of the memory array. However, the method needs nearly 6% chip area overhead and was not tested at higher operating temperatures.
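The benefit of finer blocks can be illustrated with a toy calculation. The sketch below is not the polynomial-time algorithm of ref. [54]; it simply assigns each block the minimum retention time of its cells. The 4x4 retention values are hypothetical and are not the data of Figure 7.15, although they were chosen so that the row minima match the 8, 2, 6, 2 quoted in the text.

```python
# Hypothetical retention times (arbitrary units) for a 16-cell array.
RETENTION = [
    [8, 9, 12, 15],
    [2, 4, 7, 6],
    [6, 7, 9, 12],
    [4, 2, 8, 15],
]


def block_periods(grid, block_width):
    """Refresh period of each block = minimum retention time inside it.
    A block is block_width consecutive cells within one row."""
    periods = []
    for row in grid:
        for start in range(0, len(row), block_width):
            periods.append(min(row[start:start + block_width]))
    return periods


def refresh_rate(grid, block_width):
    """Cell refreshes per unit time when every block is refreshed
    once per its own period."""
    return sum(block_width / p for p in block_periods(grid, block_width))


cells = sum(len(row) for row in RETENTION)
conventional = cells / min(min(row) for row in RETENTION)
print("conventional refreshes per unit time:", conventional)
for width in (4, 2):
    print(f"{width}-cell blocks: periods {block_periods(RETENTION, width)}, "
          f"rate {refresh_rate(RETENTION, width):.2f}")
```

Smaller blocks lower the total refresh activity because fewer strong cells are tied to the period of a weak neighbor, at the cost of the extra control hardware that the area overhead mentioned above reflects.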

FIGURE 7.15

Memory with 16 cells: (a) data retention times, (b) necessary refresh period using blocks with 4-cell, and (c) 2-cells. (Redrawn from “Block-Based Multiperiod Dynamic Memory Design for Low Data-Retention Power”, J. Kim and M. Papaefthymiou, IEEE Trans. on VLSI Systems, pp. 1006–1018, 2003.)

The JEDEC DDRx standards allow some flexibility in the spacing of refresh operations. If a small number of refresh operations are delayed, data are not lost provided that all cells are eventually refreshed satisfactorily. The maximum refresh deferral count is eight for the DDR3 standard [55]. A memory controller receives read and write requests from the CPU, places them in the input queue, and then moves them to an available space. The memory controller also has to execute refresh operations. The selection among the various operations, including refresh, is done by the memory scheduler. A significant report has investigated optimum priority scheduling of read/write and refresh operations [55]. Scheduling of refresh commands has not received much attention. Usually the refresh scheduling algorithm requested a refresh operation as soon as the tREF period expired, since the tRFC penalty was not significant, and this required only simple hardware control logic. In a much better scheme, named defer until empty (DUE), refresh operations are not selected over read/write operations until the refresh deferral count reaches seven. However, even designs of this kind are not good enough at containing refresh penalties. For example, take the case of workloads with low memory-level parallelism (MLP). There are many time slots when the memory controller bank queues are empty, but the refresh scheduler still issues a refresh whenever the tREF counter expires; combined with a long tRFC, this results in large penalties. Even with high DRAM bus utilization, refresh penalties accrue due to scheduler inefficiencies [55]. The elastic refresh algorithm has been proposed, which improves on the other schemes, including the DUE approach, by adding an extra waiting period during which the rank must remain idle before the refresh command is issued. A detailed study evaluated the elastic refresh scheme and compared it with DUE, the best-known algorithm.
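The two scheduling rules can be contrasted with a small decision sketch. It is a simplification under stated assumptions, not the implementation evaluated in ref. [55]: the forcing threshold of seven comes from the text, while the idle-wait length (`base_wait`) and its linear shrink with the backlog are hypothetical choices made only for illustration.

```python
DUE_FORCE_AT = 7  # per the text: DUE stops deferring once seven refreshes are pending


def due_should_refresh(pending, queue_empty):
    """Defer until empty: refresh only when no demand requests are waiting,
    or when the backlog of deferred refreshes forces it."""
    if pending == 0:
        return False
    return queue_empty or pending >= DUE_FORCE_AT


def elastic_should_refresh(pending, queue_empty, idle_cycles, base_wait=64):
    """Elastic-style rule (simplified): additionally require the rank to have
    been idle for a waiting period before a refresh is issued."""
    if pending == 0:
        return False
    if pending >= DUE_FORCE_AT:
        return True
    # Assumption: the required idle time shrinks linearly as the backlog grows.
    required_idle = base_wait * (DUE_FORCE_AT - pending) // DUE_FORCE_AT
    return queue_empty and idle_cycles >= required_idle


for pending in (1, 6, 7):
    print(pending,
          due_should_refresh(pending, queue_empty=True),
          elastic_should_refresh(pending, queue_empty=True, idle_cycles=10))
```

The extra idle wait means a refresh is less likely to start just before a new request arrives, which is where a long tRFC hurts most for workloads that leave the queues empty only briefly.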

The temperature and supply voltage dependence of tREF and the identification of weaker cells are helpful in devising a mechanism for extending the refresh period. An important study has shown another factor on which tREF depends. For analysis purposes, three chips were fabricated in 54 nm technology with different types of cell structures, such as recessed and buried word lines, and the variation of tREF was studied for different data patterns, such as all cells high, and all cells low with only one cell high. It was observed that tREF is determined not only by the cell leakage but also by the bit line sense amplifier (BL SA) offset. As data patterns determine the interference between bit line and word line, they also affect tREF. The dependence of tREF on data patterns is found by studying the relation between cell leakage characteristics and the BL SA offset variation [56]. The chip having the smallest variation in tREF shows the best offset variation and the worst leakage, whereas the chip having the largest variation in tREF shows the best cell leakage characteristics. The study concluded that improving the BL SA offset caused by data patterns is what matters most, and that doing so will reduce tREF variation.

7.9 Sub-Threshold Current Reduction

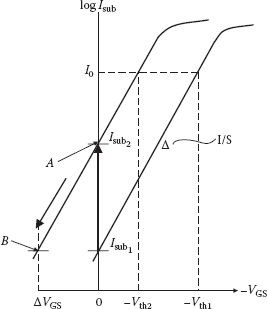

Sub-threshold current in an NMOS is given as

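$$I_{sub} = I_s \exp\!\left[\frac{q\,(V_{GS} - V_{tho} + \gamma V_{BS} + \lambda V_{DS})}{n k T}\right]\left[1 - \exp\!\left(-\frac{q V_{DS}}{k T}\right)\right] \qquad (7.9)$$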

where Vtho is the threshold voltage when the source and bulk substrate are at the same potential, and γ is the body effect coefficient. VGS, VBS, and VDS are the respective device terminal voltages, λ is the drain-induced barrier lowering (DIBL) factor, k is the Boltzmann constant, T is the absolute temperature, and q is the electron charge. Here, Is is the drain current coefficient, and n is the sub-threshold swing parameter, which is related to the slope factor S, a quality metric of the sub-threshold region that indicates how much reduction in VGS produces an order-of-magnitude reduction in the sub-threshold current. It is given as

$$S = \frac{kT}{q}\,\ln 10\,\left(1 + \frac{C_D}{C_i}\right) = n\,\frac{kT}{q}\,\ln 10 \qquad (7.10)$$

where CD is the depletion layer capacitance, and Ci is the insulator capacitance of the MOSFET.
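A short numerical sketch shows what the slope factor means in practice. It uses the standard relation S = n (kT/q) ln 10 with n = 1 + CD/Ci; the capacitance ratio passed in the example is a hypothetical value chosen to give a swing near 100 mV/decade, and the function names are illustrative.

```python
import math

K_BOLTZMANN = 1.380649e-23    # Boltzmann constant, J/K
Q_ELECTRON = 1.602176634e-19  # electron charge, C


def thermal_voltage(temp_k):
    """kT/q in volts."""
    return K_BOLTZMANN * temp_k / Q_ELECTRON


def swing_mv_per_decade(cd_over_ci, temp_k=300.0):
    """Slope factor S = n (kT/q) ln 10 with n = 1 + CD/Ci, in mV/decade."""
    n = 1.0 + cd_over_ci
    return n * thermal_voltage(temp_k) * math.log(10.0) * 1000.0


def leakage_ratio(delta_vgs, s_mv_per_decade):
    """Factor by which Isub changes when VGS changes by delta_vgs volts."""
    return 10.0 ** (delta_vgs * 1000.0 / s_mv_per_decade)


print(f"ideal swing (CD/Ci = 0) at 300 K: {swing_mv_per_decade(0.0):.1f} mV/decade")
print(f"swing for a hypothetical CD/Ci = 0.68: {swing_mv_per_decade(0.68):.1f} mV/decade")
print(f"Isub factor for a 0.1 V drop in VGS at S = 100 mV/decade: "
      f"{leakage_ratio(-0.1, 100.0):.2f}")
```

With a swing of about 100 mV/decade, every 0.1 V shift in VGS (or, equivalently, in Vth) changes the sub-threshold current tenfold, which is why small threshold reductions in low-voltage DRAMs have such a large effect on leakage.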

In low-voltage DRAMs, if threshold voltage Vth is also scaled down (though not in proportion to the supply voltage), it will result in increased Isub. The most effective way of overcoming this problem of increased Isub is to keep Vth high as before in the DRAM cells and peripheral circuits, both in the active mode and in the standby mode. Depending upon the DRAM topology and its circuit operation, the importance of reducing Isub in different modules varies and shall be discussed accordingly. During fabrication, value of Vth is increased mostly by increasing the doping level of the MOSFET substrate, but application of reverse bias(es) is the most effective way even after fabrication and it can easily be applied to only the selected low Vth MOSFET circuits. Idea of affecting Isub through device terminal voltage reverse biasing is obvious from Equation (7.9) and almost all suggested techniques fall in this broad category. A fine description of the classification of reverse biasing techniques and the respective leakage reduction efficiencies is available in ref. [8].

As mentioned earlier, Isub has to be reduced in the DRAM core, and in the peripheral circuits in the active and sleep modes. Different techniques are available, some of which are specific for active or for sleep mode and some are applicable in general. For better understanding, Section 7.10 describes only those techniques which are better suited for peripheral circuits.

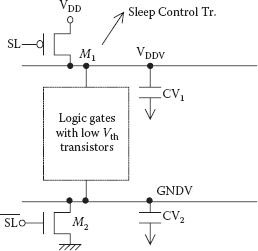

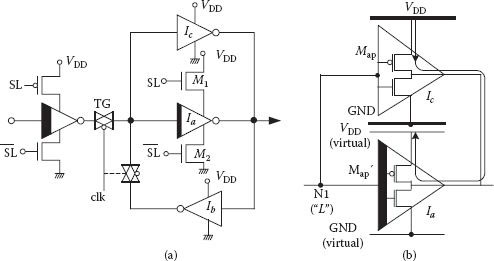

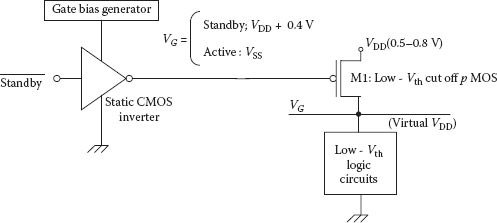

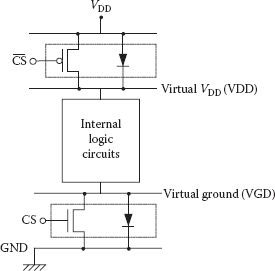

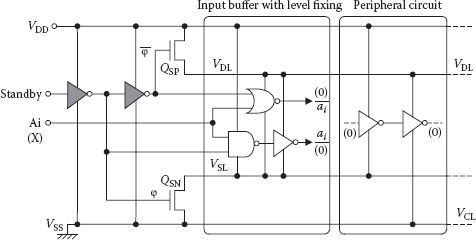

7.10 Multithreshold Voltage CMOS Schemes

The multithreshold voltage CMOS (MTCMOS) scheme proposed by Mutoh and others not only reduces standby current but also achieves high-speed performance at low supply voltage using low-threshold MOSFETs [57]. Figure 7.16 shows the basic MTCMOS scheme, in which all logic gates use low-Vth (0.2–0.3 V) MOSFETs and their terminals are connected not directly to the supply rails but to virtual supply rails. High-threshold (0.5–0.6 V) MOSFETs M1 and M2 link the actual and virtual power lines, and they act as sleep mode control transistors through the select signal SL and its complement. The sleep control transistors are relatively wide, with low on-resistance; hence, when they are turned on, the virtual supply lines function as real ones. In sleep mode, however, the larger Isub of the low-Vth logic is almost completely suppressed by M1 and M2, which have a large Vth. The performance of the MTCMOS circuit depends on the size of the control transistors and the capacitances of the virtual power supply lines. Voltage deviations in the supply lines due to the switching of the logic gates are suppressed by having larger supply line capacitances. It is claimed that MTCMOS operates almost as fast as low-Vth logic, and at a supply voltage of 1.0 V its delay time is nearly 70% less than that of a conventional logic gate with normally high Vth.

FIGURE 7.16

Basics of an MTCMOS scheme. (“1-V Power Supply High-Speed Digital Circuit Technology with Multithreshold-Voltage CMOS”, S. Mutoh et al., IEEE J. Solid-State Circuits, Vol. 30, pp. 847–854, 1995.)

FIGURE 7.17

(a) MTCMOS latch circuit, and (b) problematic leakage current path.

Since latches and flip-flops are memory elements and should retain their data even in sleep mode, they need special attention in MTCMOS. An MTCMOS latch circuit is shown in Figure 7.17(a); it has conventional inverters Ib and Ic, which have high Vth and are connected directly to the actual supply rails. Data is retained by the latch even in sleep mode because the latch path consisting of inverters Ib and Ic continues to receive power. Inverter Ia and the transmission gate TG (both with low-Vth MOSFETs) form the forward path and provide high-speed operation. In this path, control transistors M1 and M2 (high Vth) are also included for maintaining proper operation, which is better understood through Figure 7.17(b). Since node N1 is in the low state during sleep mode, the real and virtual rails would be connected through the on PMOS transistors Map and Map′, thereby increasing the current in sleep mode. Therefore, inclusion of the control transistors M1 and M2 becomes essential.

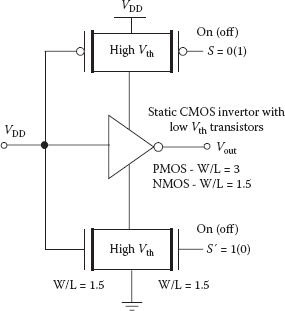

The MTCMOS circuit technique is an excellent way of making logic work at low voltage; however, the use of high-Vth MOSFETs in the critical path for holding data creates bottlenecks. Special circuits called balloon circuits have therefore been used, which preserve the state of nodes even during sleep mode without placing high-Vth MOSFETs in the critical paths [58]. The balloon circuit, shown in Figure 7.18(a), is connected directly to the real supply, but it does not operate in the active period; hence, there is no requirement on its speed. Balloon circuits are therefore realized with normal high-Vth, minimum-size MOSFETs, giving low standby power and little chip area penalty. Signals B1 and B2, shown in Figure 7.18(b), control the transmission gates (TGs), and their states determine the active-mode and sleep-mode periods. In the active mode, node A does not float because the leakage current of the low-Vth TG flows, and the balloon is not in the critical path, which avoids the bottleneck of the earlier MTCMOS technique.

FIGURE 7.18

(a) Typical balloon circuit and (b) sleep operation of the balloon circuit. (Modified from “A 1-V high-speed MTCMOS circuit scheme for power-down applications”, S. Shigematsu et al., Symp. VLSI Circuit, Dig. Tech. Papers, pp. 125–126, 1995.)

Use of balloon circuits improves the operation of MTCMOS circuits; however, it costs chip area and needs a timing control scheme for switching between operating modes. Another data-holding circuit has been proposed that uses an intermittent power supply (IPS) scheme with low-Vth transistors, without any increase in leakage currents [59]. In this scheme, the virtual power lines are cut off from the main supply and connected only intermittently during sleep mode; hence, no extra state-holding circuit is required. In an experimental latch fabricated in 0.35 μm CMOS technology, a 30% saving in chip area, a 10% reduction in delay, and a 10% reduction in active power consumption were obtained in comparison with the conventional MTCMOS case.

For battery-based applications, energy consumption is as critical as performance (speed). Hence, while developing a dual-Vth MOSFET process at the 0.18 μm level, the energy-delay product (EDP) was extracted from measured data. Investigations with VDD and Vth variations led to the following inferences: (1) for minimum EDP, the optimum (Vth/VDD) ~ (120 mV/300 mV); (2) the optimum Vth is a logarithmic function of the activity factor of the application; and (3) a dual-Vth process gives good results in cases with long sleep-mode durations [60].

In general, leakage-sensitive circuits such as dynamic NOR gates, which require a level keeper to compensate for loss of charge, are not preferred in DRAMs [61]. However, the row decoders of modern CMOS DRAMs do use dynamic NAND gates. With technology at 0.1 μm and beyond, the use of dynamic circuits was bound to increase, even though dynamic circuits are significantly worse than static circuits in terms of Isub. Consequently, low-Vth devices, as normally used in static MTCMOS circuits, cannot be used for reducing delay in critical paths. In spite of this limitation, dynamic circuits became a necessity in high-speed submicron process technologies, as they were faster than their static counterparts by at least 25% [62]. A study of a 0.25 μm technology optimized for a VDD of 1.8 V observed that in dynamic circuits with Vth > 300 mV, Isub can be maintained at an acceptable level of nearly 1 nA/μm (at 30°C), but for Vth < 300 mV, Isub is substantially higher than 1 nA/μm and is independent of channel length. Moreover, Isub rises steeply with working temperature, creating a functionality problem in dynamic circuits. As a solution, it was proposed that a dynamic substrate bias be used to raise the threshold voltage in standby mode, which would reduce Isub by several orders of magnitude. Performance is not degraded, since the source-to-well reverse bias is not applied in active mode and Vth therefore remains low [62].

A high Vth significantly reduces the Isub of a MOSFET; however, it also raises the equivalent on-resistance, which degrades propagation delay. Empirically, Vth is kept around 20% of the supply voltage VDD to balance Isub against propagation delay [63], but for low-voltage DRAMs a Vth of 20% of VDD becomes too small. The problem has been minimized by using a variety of circuits with dual-threshold MOSFETs. In one such scheme, low-Vth devices are used in the critical path(s) to achieve high performance, with the lower limit of Vth set by the noise margin, whereas high-Vth devices are used in noncritical paths, where the value of Vth may range from the low Vth up to 0.5 VDD. However, a major concern is the selection of the paths that are to use higher-Vth devices, as the assignment may convert some noncritical paths into critical ones; the selection also depends heavily on the value of the higher Vth. If the higher Vth is only slightly more than the low Vth, a large number of MOSFETs can be assigned this value without turning their paths into critical ones, but the improvement in Isub will be small. Conversely, with too large a Vth, only a few paths can use such devices, though with improved Isub. An optimum value of the high Vth is therefore needed, and a levelization back-tracing algorithm is one such attempt, which selects and assigns an optimal high Vth [64]. The algorithm begins by initializing the circuit with a single low value of Vth; a high Vth is then assigned to some devices lying in noncritical paths (within given performance constraints). This assignment is done by “back-tracing of the slack of each node level by level.” Here slack means the possible slowdown of a gate that does not affect the overall performance of the circuit. The value of Vth is increased until the slack becomes zero. Use of the algorithm on certain ISCAS benchmark circuits has shown reductions in active and standby leakage power of up to 80% [64].
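The idea of spending slack on high-Vth devices can be sketched with a small example. The code below is not the levelization back-tracing algorithm of [64]; it is a simplified greedy illustration that visits gates from the outputs back toward the inputs and keeps a gate at high Vth only if the overall circuit delay does not grow. The gate names, the unit delays, and the 1.3x slowdown assumed for a high-Vth gate are all hypothetical.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def circuit_delay(fanin, delay):
    """Longest-path delay through a gate DAG.

    fanin: dict mapping every gate to the list of gates driving it.
    delay: dict mapping every gate to its propagation delay.
    """
    arrival = {}
    for gate in TopologicalSorter(fanin).static_order():
        latest_input = max((arrival[p] for p in fanin[gate]), default=0.0)
        arrival[gate] = latest_input + delay[gate]
    return max(arrival.values())


def assign_high_vth(fanin, delay_low, slowdown=1.3):
    """Greedily move gates to high Vth while the critical delay is unchanged."""
    target = circuit_delay(fanin, delay_low)  # all-low-Vth performance
    delay = dict(delay_low)
    high_vth = set()
    order = list(TopologicalSorter(fanin).static_order())
    for gate in reversed(order):  # outputs first, then back toward inputs
        trial = dict(delay)
        trial[gate] = delay_low[gate] * slowdown  # assumed high-Vth penalty
        if circuit_delay(fanin, trial) <= target + 1e-12:
            delay = trial
            high_vth.add(gate)
    return high_vth, target


# Hypothetical four-gate circuit: g1 -> g3 -> g4 is critical, g2 -> g4 is not.
fanin = {"g1": [], "g2": [], "g3": ["g1"], "g4": ["g3", "g2"]}
delay_low = {"g1": 1.0, "g2": 1.0, "g3": 1.0, "g4": 1.0}
high_vth, target = assign_high_vth(fanin, delay_low)
print(sorted(high_vth), target)
```

In this toy circuit only g2 has enough slack to absorb the slowdown, so it is the only gate moved to high Vth. A larger assumed slowdown (a higher high-Vth value) would leave fewer candidate gates, which mirrors the trade-off described above.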

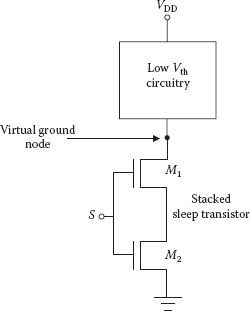

7.10.1 Stacking Effect and Leakage Reduction

Insertion of an extra transistor between the supply line and the pull-up transistor of a driver circuit was shown to create a reverse bias between the gate and source of the driver transistor when both transistors were off [58]. The result was a substantial decrease in Isub, and this phenomenon is referred to as the stacking effect. For a more general case, a transistor stack of arbitrary height was studied, taking into account the body effect as well as the drain-induced barrier lowering (DIBL) factor, which also becomes significant for submicron devices [65]. It was observed that the leakage power also depended on the primary gate-input combination. In another model, a stack effect factor, defined as the ratio of the leakage current in one off device to that in a stack of two off devices, was found to depend on the process parameters, the sub-threshold swing S, and the power supply voltage VDD [66]. The stack effect factor was shown to increase with downward technology scaling, which was basically due to the expected increase in the DIBL factor λd and possible reduction in supply voltage. Stacking of devices does reduce Isub, but the drive current of a forced stack is lowered, resulting in increased propagation delay. This suggests that stack forcing should be used in noncritical paths, as in the dual-Vth scheme. There is, of course, a delay-leakage trade-off: paths that are faster than locally required can be slowed down, on the condition that the slowdown does not affect overall performance. The forced stack technique, which effectively reduces the Isub of noncritical paths, can be used with or without the dual-Vth process. Common gates such as NAND, NOR, or more complex ones already have stacked devices in their original form. During standby, if a number of the stacked devices are off, Isub is reduced. However, it is not practical to keep all stacked devices off throughout the sleep mode. If stacking is forced in both the n- and p-networks of a gate, leakage is reduced irrespective of the input logic levels [66].
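The stack effect factor defined above can be estimated numerically with a simple model. The sketch below assumes a normalized sub-threshold current of the form 10^((Vgs + λd·Vds)/S)·(1 − exp(−Vds/vT)), ignores the body effect, and uses illustrative values for the swing S and the DIBL factor λd; it is not the model of [65] or [66]. The intermediate node voltage of a two-transistor off stack is found by bisection, at the point where the upper and lower device currents are equal.

```python
import math


def off_current(vgs, vds, dibl, swing, v_thermal=0.0259):
    """Normalized sub-threshold current of an NMOS device (body effect ignored)."""
    return 10 ** ((vgs + dibl * vds) / swing) * (1.0 - math.exp(-vds / v_thermal))


def stack_effect_factor(vdd, dibl, swing=0.1):
    """Leakage of one off device divided by leakage of a stack of two off devices."""
    single = off_current(0.0, vdd, dibl, swing)
    lo, hi = 0.0, vdd
    for _ in range(60):  # bisection on the intermediate node voltage vx
        vx = 0.5 * (lo + hi)
        upper = off_current(-vx, vdd - vx, dibl, swing)  # gate at 0 V, source at vx
        lower = off_current(0.0, vx, dibl, swing)
        if upper > lower:
            lo = vx  # node still charging up: the balance point is higher
        else:
            hi = vx
    stacked = off_current(0.0, 0.5 * (lo + hi), dibl, swing)
    return single / stacked


for dibl in (0.05, 0.10):
    print(f"lambda_d = {dibl:.2f}: factor = {stack_effect_factor(1.0, dibl):.1f}")
```

In this simplified model the intermediate node settles at a small positive voltage, which gives the upper device a negative gate-to-source voltage and reduces the drain-to-source voltage of both devices. The computed factor grows with the assumed DIBL factor, consistent with the scaling trend described in the text.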

Analysis of the scaling of the stack effect and the improvement in gate leakage also showed the possibility of performance degradation and the need for sleep-mode input vectors to take full advantage of stacking. An enhanced MTCMOS scheme is also available, as shown in Figure 7.19, with stack transistors M1 and M2 placed between the low-Vth logic and ground for leakage control in standby mode [67]. An important characteristic of this scheme is that it eliminates the need for an input vector set to minimize leakage current; it works on a single sleep-mode signal S that turns off both transistors in sleep mode. It has been shown that the optimum stack height is only two, but the sizes of the two MOSFETs must be optimized to minimize performance degradation and leakage power consumption [67]. Since this optimization took into account only sub-threshold leakage in standby mode and not gate leakage, a better approach also accounts for the increasing gate leakage, which is expected to be a dominant component of total leakage [68,69]. It is also important to consider the leakage power drawn in active mode during such optimization.

FIGURE 7.19

Enhanced MTCMOS scheme with stacked sleep devices for leakage reduction. (Modified from “Analysis and Optimization of Enhanced MTCMOS Scheme”, R.M. Rao, J.F. Burns and R.B. Brown, Proc. 17th International Conf. VLSI Design (VLSID’04), 2004.)

For the forced stacking scheme, it was shown in ref. [70] that to ensure same performance as that of a conventional MTCMOS scheme with one MOSFET of width W inserted between logic and ground, sizing of the MOSFETs of Figure 7.19 (W1 and W2) must be done according to the following relation and constraint:

(7.11) |

Though these device sizes optimize sub-threshold leakage, the gate leakage current always increases if identical performance is imposed as a constraint, as mentioned above. For optimizing total leakage, which includes gate leakage as well as Isub, the upper device M2 must be smaller than M1; unfortunately, this contradicts the condition for Isub optimization. For a circuit whose active duration is shorter than its sleep duration, the input occurrence probability is also small, and optimizing for total leakage saving gives more benefit than optimizing for sub-threshold leakage only. Hence, it is always better to optimize for total leakage [55].

The sleepy stack leakage reduction technique combines the previously discussed sleep transistor technique with the forced stack technique to achieve up to two orders of magnitude of leakage power reduction in comparison with the forced stack. Unlike the sleep transistor approach, it also retains the original state, though these advantages come at a small cost in chip area and delay.

Figure 7.20 shows a sleep transistor inverter, which isolates the existing logic network, whereas in the forced stack inverter each existing transistor is broken into two to take advantage of stacking. In the sleepy stack structure, each existing transistor is divided into two, each with half the width of the original transistor [71]. During active mode, as shown in the figure, all sleep transistors are turned on, so that the structure switches faster than the forced stack structure. In addition, high-Vth devices may be used for the sleep transistor and the transistor in parallel with it. In sleep mode both sleep transistors are turned off, while the sleepy stack structure retains its original logic state. Leakage current is reduced by the high-Vth sleep transistor and its parallel transistor, and the stacked, turned-off transistors produce a stack effect that reduces the leakage current further. The combined effect achieves extremely low leakage power consumption while retaining the original logic state.

FIGURE 7.20

Sleepy stack inverter showing W/L of each transistor in active mode assertion, and sleep mode assertion. (Redrawn from “Sleepy Stack Leakage Reduction”, J.C. Park and V.J. Mooney, IEEE Trans. VLSI Systems, Vol. 14, pp. 1250–1263, 2006.)

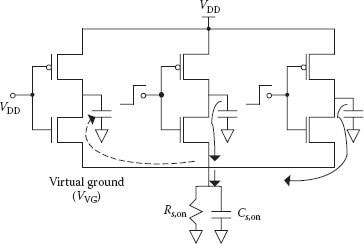

7.10.3 Importance of Transistor Sizing

Circuit designers would like to size the NMOS sleep transistor large enough to achieve good performance. The virtual ground should be as close to the actual ground as possible, which forces the drain-to-source voltage of the high-Vth sleep transistor to a small value so that it is biased in the linear region and can be represented by a linear resistor. However, if the sleep transistor is sized too large, chip area is wasted and the energy overhead of switching between sleep and active modes increases; if it is sized too small, its higher resistance slows the circuit during high-to-low transitions. Hence, deciding the optimum size of the sleep transistor needs further analysis. At first, let us consider the effect of its on-resistance Rs,on, with the assumption that the parasitic capacitance Cs,on shown in Figure 7.21 is negligible. The voltage drop developed across Rs,on during any charge flow from the low-Vth logic affects the working of the MTCMOS scheme. It reduces the drive of the logic transistors from VDD to VDD – VVG, where VVG is the virtual ground potential, and because the source potential of the pull-down logic NMOS transistors is raised, their threshold voltage increases through the body effect; both effects increase the high-to-low transition time tpHL. With continued downscaling of the supply voltage and an almost constant Vth, the effective Rs,on of the sleep transistor is bound to increase, and to keep it at the same level (if it cannot be reduced), a larger device has to be used. In addition, overdriving the sleep transistor gate can be used to increase its drive.
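The linear-resistor view of the sleep transistor leads to a simple first-order sizing estimate. The sketch below assumes the deep-linear-region approximation Rs,on ≈ 1/(kn·(W/L)·(VDD − Vth,high)), where kn is the process transconductance parameter (mobility times oxide capacitance); the numerical values of kn, the peak discharge current, and the allowed virtual ground rise are hypothetical and chosen only for illustration.

```python
def sleep_transistor_w_over_l(i_peak, v_bounce_max, vdd, vth_high, k_n):
    """Minimum W/L of an NMOS sleep transistor for a given virtual-ground rise.

    i_peak:       peak current drawn by the low-Vth logic block (A), assumed known
    v_bounce_max: largest tolerable virtual-ground potential VVG (V)
    vdd:          gate drive of the sleep transistor when on (V)
    vth_high:     threshold voltage of the high-Vth sleep transistor (V)
    k_n:          process transconductance mu_n * C_ox (A/V^2), assumed value
    """
    r_on_max = v_bounce_max / i_peak  # largest acceptable on-resistance
    return 1.0 / (k_n * (vdd - vth_high) * r_on_max)


# Hypothetical block: 1 mA peak current, 50 mV allowed rise, Vth,high = 0.55 V.
for vdd in (1.0, 0.8):
    ratio = sleep_transistor_w_over_l(1e-3, 0.05, vdd, 0.55, 200e-6)
    print(f"VDD = {vdd:.1f} V: W/L >= {ratio:.0f}")
```

With the threshold held constant, lowering VDD shrinks the gate overdrive and the required W/L rises sharply, which is the trend noted above. Overdriving the sleep transistor gate corresponds to using a larger gate voltage than VDD in the same expression.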

FIGURE 7.21

MTCMOS block illustrating equivalent resistance, capacitance, and reverse conduction effects. (“Dual-Threshold Voltage Techniques for Low-Power Digital Circuits”, J.T. Kao and A.P. Chandrakasan, IEEE J. Solid-State Cir., Vol. 35, pp. 1009–1018, July 2000.)