INTRODUCTION TO STREAMING AND MOBILE MULTIMEDIA

We are standing on the verge, and for some it will be a precipice, of a revolution as profound as that which gave birth to the modern industry.

—Gary Hamel and C. K. Prahalad, management consultants

3.1 WHAT IS MOBILE MULTIMEDIA?

Mobile multimedia involves the creation of content designed for mobile networks and its transmission and delivery using standardized protocols. The content can be of any type, including graphics, pictures, live video or audio, multimedia messages (MMS), games, video calls, rich calls involving multimedia transfer, voice calls using voice over Internet protocol (VoIP), media portals, and streaming video and audio. The mobile networks are characterized by constrained environments due to:

• limited bandwidth available for transmission, which depends on the network type and mobility conditions,

• constraints on the battery power and consequently processor capability of mobile devices such as handsets,

• constraints on memory and screen size available on handsets, and

• the coexistence of multiple technologies such as GSM, GPRS, 3G-GSM, or CDMA and evolved 3G technologies such as 1×EV-DO.

Multimedia content for the mobile environment therefore needs to be defined in such a manner as to be seamlessly usable across the world of mobile devices. This is done by defining profiles of multimedia files, protocols, and applications, which are agreed on as “standards” for implementing multimedia handling in mobile networks.

In this chapter we look at the file formats, protocols, and standards that have been specially designed for the multimedia world of mobile devices. The mobile world is an entirely new world, with standards that tailor the content and its delivery technologies to the capabilities of mobile devices and networks, uniformly across networks and operators.

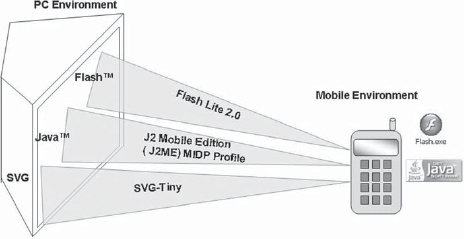

Consequently, the standards prescribe the use of a limited set of encoders and encoding formats, subsets of graphics applications (such as Scalable Vector Graphics Tiny, or SVG Tiny), and scaled-down animation and application platforms such as Macromedia Flash Lite or Java MIDP (Mobile Information Device Profile) (Fig. 3-1).

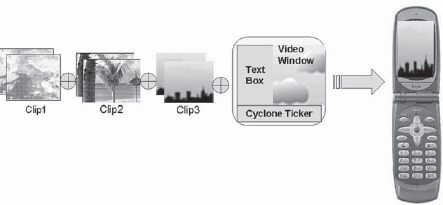

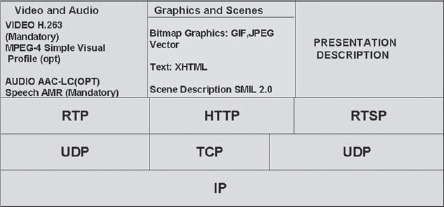

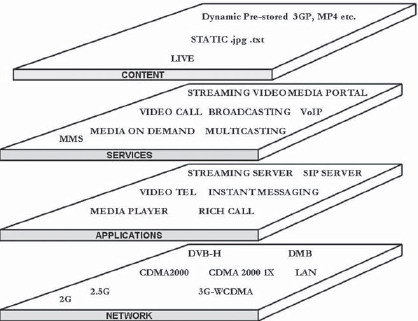

FIGURE 3-1 Elements of Mobile Multimedia

While the standardized protocols and formats covered in this chapter restrict the recommended use to a few specific file types and protocols, networks in practice may also use other file formats, encoders, or players, such as Windows Media and RealMedia, which are proprietary but so widely used as to be considered standards in their own right.

We first look at the elements of streaming, which is one of the important techniques used in the handling of mobile multimedia.

Streaming of content such as audio and video became a popular technology alongside the growth of the Internet in the 1990s. The reason is not hard to find: the alternative was to download the video and audio files and start playback only after the download had completed. Because of the file sizes involved (which can be 20 Mbytes for 3 min of play even with MPEG-4 compression), the wait for the download was generally unacceptable.

In the streaming mode, video and audio are delivered to users' mobiles or other devices at the same rate (on average) at which they are played out. For example, over a 128-kbps connection, video at 64–100 kbps can be streamed continuously, effectively giving the user live, on-tap access to multimedia content.
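The difference between the two delivery modes is easy to quantify. The sketch below uses the figures from the text (a 20-Mbyte clip and a 128-kbps connection). It assumes decimal units (1 Mbyte = 10^6 bytes) and ignores protocol overhead; both are simplifications.

```python
def download_wait_seconds(file_mbytes: float, link_kbps: float) -> float:
    """Seconds needed to download a whole file before playback can start.

    Assumes decimal units (1 Mbyte = 10**6 bytes, 1 kbps = 1000 bit/s) and no protocol overhead.
    """
    bits = file_mbytes * 1_000_000 * 8
    return bits / (link_kbps * 1000)


def can_stream(stream_kbps: float, link_kbps: float) -> bool:
    """A stream plays continuously only if its average rate does not exceed the link rate."""
    return stream_kbps <= link_kbps


wait = download_wait_seconds(20, 128)
print(f"Download wait: {wait:.0f} s (about {wait / 60:.1f} min) before a 3-min clip can play")
print("100-kbps stream over a 128-kbps link:", can_stream(100, 128))
print("256-kbps stream over a 128-kbps link:", can_stream(256, 128))
```

Under these assumptions, the user would wait roughly 20 minutes for a download. A stream encoded below the link rate can start almost at once.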

Streaming is made possible by high-compression codecs, together with technology that “streams” content by converting a storage format into a packetized format suitable for delivery over the Internet or other IP networks.

In principle, there are two approaches to streaming. The first is to deliver video, audio, and Web pages using HTTP itself (i.e., without any special protocol). This is referred to as progressive streaming (or progressive download), and it is possible only if the delivery channel can sustain HTTP data delivery at the required bit rates. The more realistic approach is real-time streaming, which requires special protocols (the Real-time Transport Protocol (RTP), multicasting protocols, etc.) as well as special servers (e.g., Apple QuickTime Streaming Server, RealNetworks RealServer, Windows Media server).

3.2.1 Streaming Network Architecture

Streaming involves the following steps:

• capture and encoding of content,

• conversion to streaming format,

• stream serving,

• stream transport over IP networks,

• playing on a media player.

Complete streaming and delivery solutions have been developed by RealNetworks (RealMedia), Microsoft (Windows Media), and Apple (QuickTime), and all of them are widely used. Formats such as QuickTime also support MPEG-4 coding.

3.2.2 Capture and Encoding Process

Video capture involves accepting a video and audio stream in a format compatible with the video capture card of the PC or server. This format can be AVI or, for professional applications, SDI (serial digital interface). Where the capture card contains hardware encoders, it may accept the content as Y/C (S-Video), RGB, or even analog composite video.

After capture, the files are stored on disk in .avi format prior to compression. After compression, they are stored in the appropriate compressed format, such as .mpg or .mp4, depending on the encoder used.

3.2.3 File Conversion to Streaming Formats

To be delivered via streaming in real time, files need timing control information that the server can use to manage the delivery rate. For this purpose the files are converted to a streaming format. This format adds the timing control information, as well as metadata that helps in the orderly delivery of streaming data for a variety of applications. QuickTime, for example, uses a feature called hint tracks to provide control information that points to the video and audio data to be streamed.
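QuickTime hint tracks store, for each media sample, instructions that tell the server how to turn that sample into packets. The sketch below is a hypothetical, much-simplified stand-in for that idea; it is not the hint-track format itself. It splits timestamped frames into packets no larger than an assumed 1,400-byte payload and attaches a send time to each packet, which is the kind of timing information a server uses to pace delivery. The `Frame` and `Packet` types and the payload limit are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Frame:
    timestamp_ms: int   # presentation time of the frame
    size_bytes: int     # encoded frame size


@dataclass
class Packet:
    send_time_ms: int   # when the server should transmit this packet
    frame_index: int    # which frame the fragment belongs to
    offset: int         # byte offset of this fragment within the frame
    length: int         # fragment length in bytes


def build_schedule(frames: List[Frame], max_payload: int = 1400) -> List[Packet]:
    """Fragment each frame into packets and schedule them at the frame's timestamp."""
    schedule: List[Packet] = []
    for index, frame in enumerate(frames):
        offset = 0
        while offset < frame.size_bytes:
            length = min(max_payload, frame.size_bytes - offset)
            schedule.append(Packet(frame.timestamp_ms, index, offset, length))
            offset += length
    return schedule


# Roughly 15 fps (one frame about every 66.7 ms), with a large I frame first.
frames = [Frame(0, 3000), Frame(66, 600), Frame(133, 650)]
for packet in build_schedule(frames):
    print(packet)
```

A real server would also spread the packets of a large frame over time rather than sending them in a burst. The sketch keeps only the essential idea of precomputed, time-stamped packetization.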

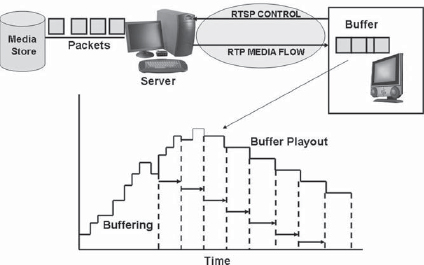

Stream serving is a specialized application that operates in client-server mode to deliver a continuous series of packets over the IP network to the client. It uses real-time multimedia protocols developed by the Internet Engineering Task Force (IETF): RTP, the RTP Control Protocol (RTCP), and the Real-Time Streaming Protocol (RTSP).

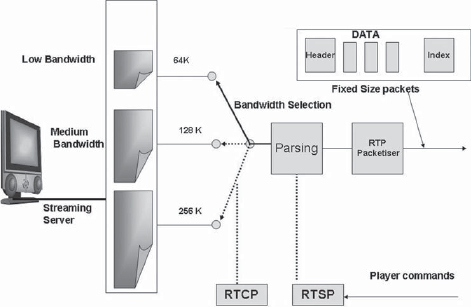

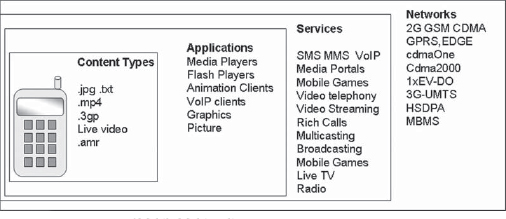

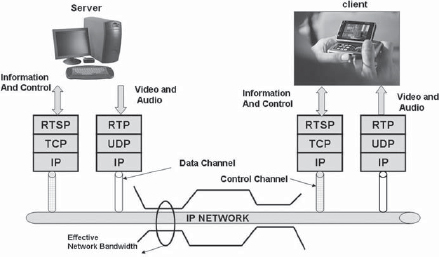

The streaming process involves two separate channels that are set up for the streaming session. The data channel carries the video and audio data, whereas the control channel carries feedback from the streaming client (i.e., the media player) to the server. The video and audio data, which form the bulk of the transfer, are carried by RTP with UDP and IP as the underlying layers. Hence the data is delivered as a series of datagrams that require no acknowledgments. This forms the data channel of the streaming process (Fig. 3-2).

Over the RTCP channel, the client reports information such as the number of packets received and the quality of the incoming channel. From this information the server learns the network congestion and error conditions and the rate at which the client is actually receiving packets. It can then act to deliver packets at the correct rate.
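In RTP/RTCP (RFC 3550), this feedback travels in receiver reports, which include an 8-bit "fraction lost" field computed over each reporting interval. The sketch below follows the calculation given in RFC 3550, Appendix A.3. The function name and the final clamp to 255 are our own additions for illustration.

```python
def fraction_lost(expected_interval: int, received_interval: int) -> int:
    """RTCP 'fraction lost' for one reporting interval, as an 8-bit fixed-point value.

    expected_interval: packets expected, from the growth of the highest sequence number seen.
    received_interval: packets actually received in the same interval.
    """
    lost_interval = expected_interval - received_interval
    if expected_interval == 0 or lost_interval <= 0:
        return 0  # no loss; duplicates can even make received exceed expected
    return min(255, (lost_interval << 8) // expected_interval)  # keep within 8 bits


f = fraction_lost(expected_interval=200, received_interval=190)
print(f"fraction lost field = {f} (about {f / 256:.1%} loss)")
```

The server can divide the field by 256 to recover the loss ratio and combine it with the report's jitter and delay information when deciding how to adapt.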

FIGURE 3-2 Streaming Protocol Stack

For example, based on the feedback from the client, the server can select one of the available streaming bit rates (e.g., 64, 128, 256 kbps) or choose to lower the frame rate to ensure that the sustained data rate of the transfer does not exceed the capability of the IP channel.

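Session control (setting up, starting, pausing, and tearing down the stream) uses RTSP, which typically operates over the TCP and IP layers of the network. The RTCP reports themselves normally travel over UDP, alongside the RTP data.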
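How the server picks among its available rates (e.g., 64, 128, 256 kbps) is left to the implementation. The sketch below shows one possible policy. It assumes the server already has a throughput estimate and a loss ratio for the client, derived from RTCP reports. The 80% headroom, the 5% loss threshold, and the halving rule are arbitrary illustrative values, not standardized ones.

```python
from typing import Sequence


def select_bitrate(available_kbps: Sequence[int], throughput_kbps: float,
                   loss_fraction: float, headroom: float = 0.8,
                   loss_threshold: float = 0.05) -> int:
    """Choose the highest encoded rate that fits the client's estimated throughput.

    headroom and loss_threshold are illustrative tuning values, not standardized ones.
    """
    budget = throughput_kbps * headroom
    if loss_fraction > loss_threshold:
        budget *= 0.5  # heavy loss: back off more aggressively
    fitting = [rate for rate in sorted(available_kbps) if rate <= budget]
    # If nothing fits, fall back to the lowest available rate.
    return fitting[-1] if fitting else min(available_kbps)


rates = [64, 128, 256]
print(select_bitrate(rates, throughput_kbps=400, loss_fraction=0.0))
print(select_bitrate(rates, throughput_kbps=300, loss_fraction=0.01))
print(select_bitrate(rates, throughput_kbps=300, loss_fraction=0.10))
```

Keeping some headroom below the measured throughput leaves room for rate fluctuations on the wireless link. Reducing the frame rate within the chosen stream would be a finer-grained alternative to switching streams.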

RTSP is thus the overall framework under which streaming content is delivered to a client over the IP network. It supports VCR-like control of playback, such as play, forward, reverse, and pause. Together with the client's media player, these functions give the user full control over playback.
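RTSP requests are plain text, much like HTTP requests. Each one carries a `CSeq` sequence number, and after SETUP it also carries the `Session` identifier returned by the server. The sketch below formats a typical DESCRIBE, SETUP, PLAY, PAUSE sequence following RFC 2326. The URL, track name, ports, and session ID are hypothetical. RTSP has no separate fast-forward or reverse method: RFC 2326 handles these through PLAY with a `Scale` header.

```python
from typing import Dict, Optional


class RtspRequestBuilder:
    """Formats RTSP/1.0 requests (RFC 2326) with an incrementing CSeq header."""

    def __init__(self, url: str) -> None:
        self.url = url
        self.cseq = 0
        self.session_id: Optional[str] = None  # taken from the server's SETUP reply

    def request(self, method: str, url: Optional[str] = None,
                headers: Optional[Dict[str, str]] = None) -> str:
        self.cseq += 1
        lines = [f"{method} {url or self.url} RTSP/1.0", f"CSeq: {self.cseq}"]
        if self.session_id is not None:
            lines.append(f"Session: {self.session_id}")
        for name, value in (headers or {}).items():
            lines.append(f"{name}: {value}")
        return "\r\n".join(lines) + "\r\n\r\n"


rtsp = RtspRequestBuilder("rtsp://media.example.com/clip.3gp")  # hypothetical URL
print(rtsp.request("DESCRIBE", headers={"Accept": "application/sdp"}))
print(rtsp.request("SETUP", url=rtsp.url + "/trackID=1",        # hypothetical track URL from the SDP
                   headers={"Transport": "RTP/AVP;unicast;client_port=5000-5001"}))
rtsp.session_id = "12345678"                                    # would be parsed from the SETUP reply
print(rtsp.request("PLAY", headers={"Range": "npt=0-"}))
print(rtsp.request("PAUSE"))
```

The client ports named in the `Transport` header are where the RTP data (5000) and RTCP reports (5001) would arrive.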

3.2.5 Stream Serving and Bandwidth Management

The streaming server and the media client that sets up a connection to it for streaming operate in a handshake environment (Fig. 3-3).

The media stream is sent as blocks of data carried in RTP packets. Each RTP packet contains a header and data. The header carries a stream identifier, a time stamp, and the sequence number of the packet; this information is essential for reassembling the packets in the correct sequence at the receiving end. The server sends RTP packets at the negotiated delivery rate over the UDP/IP connection, while the RTSP framework supports client interaction through functions such as play and pause. Once a session is set up, it is used to create a connection at the highest bit rate that the IP network (which may involve wireless links, GSM/GPRS, 3G, or CDMA networks) will support. The media player processes the stream packets (video and audio) continuously as they arrive. The client needs some buffering to absorb variations in the transmission bit rate, but it need not store the file locally.
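The header fields mentioned above correspond to the 12-byte fixed RTP header defined in RFC 3550. There, the SSRC (synchronization source) identifies the stream, and the sequence number and timestamp let the receiver reorder packets and schedule playout. The sketch below packs and parses that header and then reorders packets by sequence number. It deliberately ignores padding, header extensions, CSRC lists, and 16-bit sequence-number wraparound. Payload type 96 and the 90-kHz clock are common choices for video, used here as assumptions.

```python
import struct
from typing import Dict, List, Tuple

RTP_VERSION = 2


def pack_rtp_header(payload_type: int, seq: int, timestamp: int, ssrc: int,
                    marker: bool = False) -> bytes:
    """Build the 12-byte fixed RTP header (no padding, extension, or CSRCs)."""
    byte0 = RTP_VERSION << 6                           # V=2, P=0, X=0, CC=0
    byte1 = (int(marker) << 7) | (payload_type & 0x7F)
    return struct.pack("!BBHII", byte0, byte1, seq & 0xFFFF,
                       timestamp & 0xFFFFFFFF, ssrc & 0xFFFFFFFF)


def unpack_rtp_header(data: bytes) -> Dict[str, int]:
    byte0, byte1, seq, timestamp, ssrc = struct.unpack("!BBHII", data[:12])
    return {"version": byte0 >> 6, "marker": byte1 >> 7, "payload_type": byte1 & 0x7F,
            "seq": seq, "timestamp": timestamp, "ssrc": ssrc}


def reorder(packets: List[bytes]) -> List[Tuple[int, bytes]]:
    """Return (sequence number, payload) pairs in sequence order (no wraparound handling)."""
    return sorted((unpack_rtp_header(p)["seq"], p[12:]) for p in packets)


# Three packets of one stream (90-kHz clock, 3000 ticks = 1/30 s) arriving out of order.
ssrc = 0x1234ABCD
sent = [pack_rtp_header(96, seq, 3000 * seq, ssrc) + f"frame{seq}".encode() for seq in (10, 11, 12)]
arrived = [sent[2], sent[0], sent[1]]
print([seq for seq, _ in reorder(arrived)])
```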

In this environment, if the data rate drops or the error rate rises because of link conditions, the client needs to signal the server to carry out intelligent stream switching or other measures, such as reducing the frame rate. This process constitutes a one-to-one connection and handshake and is termed a “unicast” connection. Each client (e.g., a mobile or a media player) has its own streams (i.e., a separate data channel and a separate control channel) set up to run the streaming process. This type of connection may not be ideal when a large number of users access the same content, as the number of streams and the volume of data to be supplied grow in proportion to the number of users.

The other option is multicast transmission, in which all users receive the same content. The server sends a single stream, and the routers in the network that receive it replicate the data onto the other links of the network. Thus, instead of hundreds or thousands of unicast sessions, each link carries only one stream of multicast content. The approach has many advantages, but the individual clients have no mechanism to ask the server to change the bit rate, etc., in the event of transmission disturbances.
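The scaling difference comes down to simple arithmetic. The sketch below assumes that every receiver watches the same 128-kbps stream and ignores protocol overhead. It computes the load on a single link that serves a given number of downstream receivers.

```python
def link_load_kbps(receivers: int, stream_kbps: float, multicast: bool) -> float:
    """Load on one link that carries a stream to `receivers` downstream users.

    Unicast sends one copy per receiver; multicast sends one copy in total.
    Protocol overhead is ignored.
    """
    if receivers <= 0:
        return 0.0
    return float(stream_kbps) if multicast else float(receivers * stream_kbps)


for users in (1, 100, 1000):
    print(users, "users:",
          link_load_kbps(users, 128, multicast=False), "kbps unicast vs",
          link_load_kbps(users, 128, multicast=True), "kbps multicast")
```

With 1,000 receivers, the unicast link carries 128 Mbit/s, while the multicast link still carries a single 128-kbps stream.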

FIGURE 3-4 MPEG-4 Layered Video Coding and Streaming

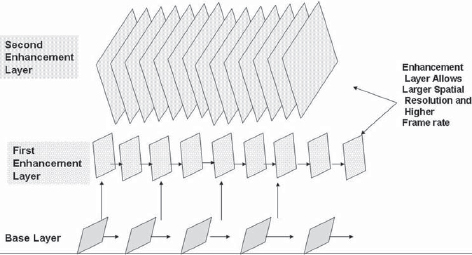

MPEG-4 offers another mechanism for providing higher bit rates to clients on higher-bandwidth networks. The MPEG-4 streaming server transmits a basic low-resolution stream (the base layer) as well as a number of additional streams (helper streams, or enhancement layers). A client with spare bandwidth can receive the additional helper streams and use them to assemble a higher-quality picture (Fig. 3-4).
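Because each helper stream refines the picture built from the layers below it, layers are cumulative: a client can use enhancement layer n only if it also receives the base layer and layers 1 to n−1. The sketch below shows how a client might decide how many helper streams to take. The layer rates are hypothetical examples.

```python
from typing import List


def layers_to_receive(base_kbps: float, enhancement_kbps: List[float],
                      available_kbps: float) -> int:
    """Number of enhancement layers that fit on top of the base layer.

    Returns -1 if even the base layer does not fit. Layers are added strictly in
    order, because each one depends on all the layers beneath it.
    """
    if available_kbps < base_kbps:
        return -1
    total, count = base_kbps, 0
    for rate in enhancement_kbps:
        if total + rate > available_kbps:
            break
        total += rate
        count += 1
    return count


# Hypothetical layering: 64-kbps base layer plus helper streams of 64 and 128 kbps.
for bandwidth in (50, 100, 150, 300):
    print(bandwidth, "kbps ->", layers_to_receive(64, [64, 128], bandwidth), "helper stream(s)")
```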

3.3 STREAMING PLAYERS AND SERVERS

There are a number of encoders and streaming servers, some of them based on proprietary technologies.

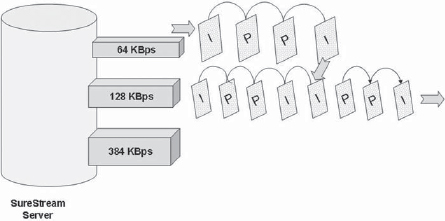

RealNetworks’ streaming setup consists of the RealVideo codec and the SureStream streaming technology. RealVideo is based on the principles of MPEG-4 coding. It uses frame rate upsampling, which allows the frame rates required for the selected delivery rate to be generated by motion vectors and frame interpolation. This means that a single media file can be created for different encoding rates. When serving streams, SureStream sets up the connection after negotiation with the player, and the lowest rate (duress) is streamed under the most congested conditions. SureStream uses dynamic stream switching to move to a lower (or higher) bit rate depending on the transmission conditions and feedback from the client. For example, it can switch from a 64-kbps stream to a 128-kbps stream or vice versa (Fig. 3-5).
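Figure 3-5 notes that stream switching takes place at I frames. P and B frames are predicted from earlier frames of the same encoding, so a decoder can join a stream encoded at a different rate only at an independently decodable I frame. The sketch below defers each requested switch to the next I frame. The frame sequence and the rate-change request are hypothetical, and the sketch does not describe SureStream's internal logic.

```python
from typing import Dict, List, Optional, Tuple


def rates_per_frame(frame_types: List[str], requests: List[Tuple[int, int]],
                    start_kbps: int) -> List[int]:
    """Bit rate used for each frame when rate changes may only take effect at I frames.

    frame_types: 'I', 'P' or 'B' for each frame in transmission order.
    requests: (frame_index, new_rate_kbps) pairs, e.g. triggered by client feedback.
    """
    requested: Dict[int, int] = dict(requests)
    current: int = start_kbps
    pending: Optional[int] = None
    rates: List[int] = []
    for index, frame_type in enumerate(frame_types):
        if index in requested:
            pending = requested[index]          # remember the request ...
        if pending is not None and frame_type == "I":
            current, pending = pending, None    # ... and apply it at the next I frame
        rates.append(current)
    return rates


# Feedback at frame 1 asks to drop from 128 to 64 kbps; the switch waits for the I frame at index 4.
print(rates_per_frame(list("IPPPIPPP"), [(1, 64)], start_kbps=128))
```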

FIGURE 3-5 Stream Switching Takes Place at the I Frames

The RealMedia format uses both RTP and RealNetworks' proprietary Real Data Transport (RDT) protocol for data transfer. The RealMedia family of protocols is oriented toward unicast streaming.

3.3.1 Microsoft Windows Media Format

Windows Media is the Microsoft family of coders and decoders as well as streaming server and players. It has the following components:

• Windows Media Encoder Series

• Windows Media Services 9 (server)

• Windows Media Audio and Video Codecs 9

The encoders can take video files stored in various formats, such as .avi, and generate files in the .wmv or .asf format. Two types of codecs are used: Windows Media based and MPEG-4 based. Windows Media Player is available as part of the Windows operating system. Windows Media servers handle streaming and stream files in the .wmv (Windows Media Video) or .asf (Advanced Streaming Format) format (Fig. 3-6).

FIGURE 3-6 Buffered Playout in Streaming

Release 9 of Windows Media provides advanced features such as Fast Streaming and dynamic content programming. Fast Streaming provides near-instant playback, i.e., the user does not wait for buffering before playback starts. It also offers “always on” features suited to broadband connections, so that there are no interruptions during playback. Windows Media streaming is proprietary and is not based on the standard combination of the Real-time Transport Protocol (RTP), Real-Time Streaming Protocol (RTSP), and Session Description Protocol (SDP). Multicasting is supported through IGMPv3, and Windows Media also supports IPv6 (Fig. 3-7).
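Figure 3-6 refers to buffered playout. The player delays playback by a small amount so that packets held up by network jitter still arrive before they are due. The sketch below counts how many packets miss their playout deadline for a given startup delay. The arrival times are made up for illustration, and each packet is treated as one frame.

```python
from typing import List


def late_packets(arrival_ms: List[float], frame_interval_ms: float,
                 playout_delay_ms: float) -> int:
    """Count packets that arrive after their scheduled playout time.

    Packet i is due at first_arrival + playout_delay + i * frame_interval.
    """
    if not arrival_ms:
        return 0
    start = arrival_ms[0] + playout_delay_ms
    return sum(1 for i, t in enumerate(arrival_ms) if t > start + i * frame_interval_ms)


# Packets sent every 100 ms but arriving with jitter (hypothetical values).
arrivals = [0, 120, 180, 390, 410, 500]
for delay in (0, 50, 100):
    print(f"startup delay {delay} ms -> {late_packets(arrivals, 100, delay)} late packet(s)")
```

A longer startup delay absorbs more jitter but makes the user wait longer, which is the trade-off that buffered playout has to balance.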

3.3.2 Apple QuickTime

Apple’s QuickTime is a complete set of tools and players for handling multimedia and streaming. QuickTime components include a browser plug-in or QuickTime multimedia player and the QuickTime streaming server. In addition to handling video, audio, graphics, and music (MP3), QuickTime can also handle virtual reality scenes. Its latest release (release 7, or QuickTime 7) uses RTP and RTSP as the underlying stack and MPEG-4 as the core compression standard.

3.4 RICH MEDIA—SYNCHRONIZED MULTIMEDIA INTEGRATION LANGUAGE

Many applications require more than the display of a few images or graphics or audio files; these files need to be synchronized and presented as integrated media. An example is a voice-over associated with an image or speech associated with a presentation. This type of synchronization enables the delivery of rich media and can effectively represent a playlist running at the originating end much like a TV station. Synchronized multimedia integration language (SMIL; pronounced “smile”) is one technique to accomplish this objective. SMIL is supported by RealMedia as well as Apple’s QuickTime architectures.

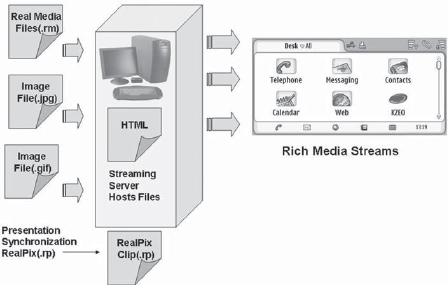

It is also possible to add media synchronization to HTML by using XML to allow the description of parameters for synchronization of streaming video, images, and text (Fig. 3-8).

In the absence of a synchronization language, the images and clips are delivered as separate units. When users open them in a different sequence, they do not present the integrated picture the sender intended.

SMIL is a World Wide Web Consortium (W3C) standard for writing interactive multimedia presentations that combine multimedia objects and hyperlinks, with full control of the screen display.

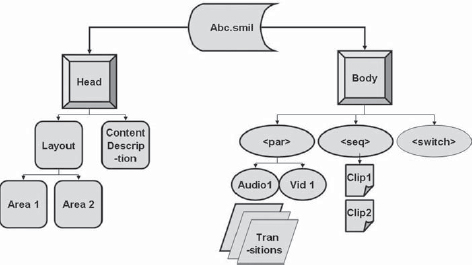

FIGURE 3-8 Rich Media Presentation Using SMIL

SMIL presentations can be played out by SMIL-compatible players. The content can either be streamed (packet-switched streaming, or PSS) or downloaded, stored, and then played.

SMIL is an XML-based language similar to HTML and can be created using a text editor (SMIL files have the .smil extension). Its parameters define the location and sequence of displays and prescribe the content layout, i.e., the windows for text, video, and graphics.

As an example, SMIL has elements for sequencing clips, <seq>; playing clips in parallel, <par>; switching between alternate choices (e.g., languages, bandwidth), <switch>; and locating media clips on the screen, <region>. Detailed SMIL authoring guidelines and tools are widely available.
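To show how these elements fit together, the sketch below generates a small SMIL document with Python's standard `xml.etree.ElementTree`. It defines a screen layout with a video region and a text region. It then plays one clip, chooses between a high-rate and a low-rate version of a second clip with `<switch>`, and finally plays a video and a text caption in parallel. The file names, region sizes, bit-rate threshold, and the SMIL 2.0 namespace choice are illustrative assumptions. This is not the file behind Fig. 3-9.

```python
import xml.etree.ElementTree as ET


def build_smil() -> str:
    smil = ET.Element("smil", xmlns="http://www.w3.org/2001/SMIL20/Language")

    # Layout: a QCIF-sized video window with a text window underneath (illustrative sizes).
    head = ET.SubElement(smil, "head")
    layout = ET.SubElement(head, "layout")
    ET.SubElement(layout, "root-layout", width="176", height="208")
    ET.SubElement(layout, "region", id="video", top="0", left="0", width="176", height="144")
    ET.SubElement(layout, "region", id="text", top="144", left="0", width="176", height="64")

    body = ET.SubElement(smil, "body")
    seq = ET.SubElement(body, "seq")                    # children play one after another
    ET.SubElement(seq, "video", src="clip1.3gp", region="video")

    switch = ET.SubElement(seq, "switch")               # player picks the first acceptable child
    ET.SubElement(switch, "video", src="clip2_high.3gp", region="video", systemBitrate="128000")
    ET.SubElement(switch, "video", src="clip2_low.3gp", region="video")

    par = ET.SubElement(seq, "par")                     # children play at the same time
    ET.SubElement(par, "video", src="weather.3gp", region="video")
    ET.SubElement(par, "text", src="forecast.txt", region="text")
    return ET.tostring(smil, encoding="unicode")


print(build_smil())
```

Because the `<switch>` children are evaluated in order, the higher-rate alternative is listed first, and the unconditioned low-rate clip acts as the fallback.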

A typical case of SMIL may be the streaming of two video clips, one after another, followed by a weather bulletin containing video, a text window, and a text ticker (Fig. 3-9). The following is the SMIL file using RealMedia files.

FIGURE 3-9 SMIL-Based Content Streaming

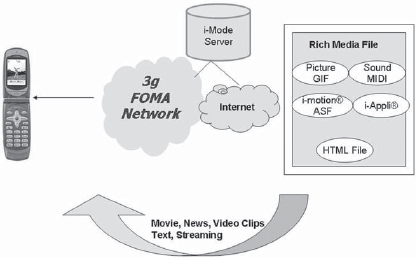

In Japan, NTT DoCoMo was one of the earliest implementers of the 3G technology and its i-mode and FOMA (Freedom of Multimedia Access) present interesting implementations of practical technologies for delivering rich calls and messaging using video, audio, text, and graphics.

At the basic level, the i-mode service provides simultaneous voice communication and packet data transmission or Internet access. The i-motion service provides for the transmission (or streaming) of a page composed for a mobile screen, which can contain HTML, graphics files (.gif or .jpg), i-motion files (streaming video in ASF format), or sound files (including MIDI synthesized audio).

NTT DoCoMo provides the services shown in Fig. 3-10 on the FOMA mobile network.

To play back content in the 3GPP or 3GPP2 SMIL format, mobile phones must support SMIL. An example of a phone with SMIL support on the FOMA network in Japan is the Fujitsu F900i. FOMA phones in Japan have used NetFront, a mobile browser and content viewer module from Access, Japan (www.access.co.jp). Among other features, NetFront supports SMIL and SVG (scalable vector graphics).

FIGURE 3-10 NTT DoCoMo i-motion Rich Media Delivery (Courtesy of NTT DoCoMo)

The mobile world had its origin in speech telephony. Being able to send and receive calls while on the move was in itself a significant achievement, a feat made possible by analog wireless technologies such as AMPS or NMT. These networks were designed for voice, as data applications were limited at the time. Data transfer (such as remote login to a computer) could be done using an analog modem, just as in analog fixed-line networks. Second-generation systems such as GSM were better, as they provided a low-speed data channel at 9.6 or 14.4 kbps. The growth of the Internet and the IP technologies that followed in its wake changed the entire scene, as applications began migrating to IP. By the turn of the century the migration was virtually complete. IP applications such as Web browsing, file downloads, and video and audio streaming came to dominate fixed-line networks, and even voice is carried using VoIP in a large number of networks. The migration of fixed networks to IP was unmistakable.

At the same time another revolution was taking place in the mobile world. The number of mobile subscribers was growing at phenomenal rates of 30–40% a year or more in large parts of the world. The introduction of GSM, with roaming and interoperability among networks, gave an impetus that took mobile phone penetration to nearly 100% in Europe and in some other countries, including Japan. Asia has followed, with China and India growing by 5 million subscribers a month. We live in a world in which the mobile is an individual's basic tool. Data-handling capacities kept pace, and networks evolved to 2.5G and 3G to bring the full range of data capabilities to mobile users.

The real breakthrough in the use of multimedia was demonstrated in Japan (among other countries) with the launch of the i-mode service. It included virtually all the elements of multimedia that a mobile network could provide, with well-defined applications. Web access, downloads of songs (MP3) or video clips, and Web applications such as online ticket booking and online payment became commonplace. These developments brought mobile multimedia to the forefront across the globe.

3.5.1 Mobile Multimedia and the Wireless World

The wireless world is a far more challenging environment for multimedia delivery than fixed-line networks. In the pre-3G world, achievable data rates were generally limited to 64 kbps, and then only if the networks were not overloaded. Even with 3G, average data rates above 128 kbps can be provided to only a few users in a cell. Data transfer is charged by the megabyte, and wireless bandwidth is an expensive resource: capacity used for data is capacity that could otherwise carry more voice traffic on the same network. Transmission requirements also differ, as wireless networks are prone to severe variations in transmission quality. The user terminal restricts the use of battery power and hence the way the physical layer can be configured. Finally, a wide range of technologies (GSM, GPRS, EDGE, CDMA, CDMA2000, 3G, etc.) and user terminals are deployed.

It was therefore recognized early on that the world of mobile multimedia, while deriving its technologies and services from the IP world, needed to be standardized in terms of:

• technologies used for multimedia,

• file formats used for multimedia, and

• transport protocols to be used on mobile networks.

This standardization was required both for the successful adoption of multimedia in mobile networks and for interoperability.

3.5.2 Elements of Mobile Multimedia

Mobile multimedia has a number of elements. These elements comprise:

• multimedia files,

• call setup and release procedures to deliver multimedia,

• multimedia transfer protocols, and

• multimedia players or receive-end clients.

As an example, an application may be a Windows Media file streamed to a mobile phone using the 3GPP PSS protocol over a 3G network; playing it out would then require a Windows Media player. Another example is a Macromedia Flash file delivered as .swf content, which needs a Flash Lite client to run.

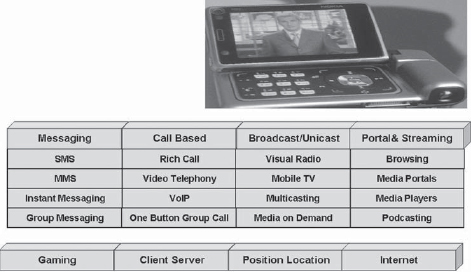

A number of applications have developed over time, such as SMS, MMS, ring tone downloads, and MIDI, that form an inseparable part of mobile networks. Other applications, such as video calling and video conferencing, result from camera phones making video conversation feasible, with the help of supporting networks (e.g., 3G-UMTS) and call setup procedures such as 3G-324M.

Mobile TV is another such application, which uses the 3GPP packet-switched streaming protocol together with high-compression file formats defined by 3GPP to enable a continuous stream to be delivered, decoded, and displayed as TV on a mobile device.

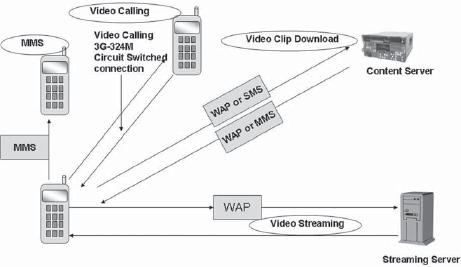

Examples of some of the applications are given in Fig. 3-11.

MMS is an extension of the SMS protocol and is defined as a new standard. MMS messages can contain multiple content types, such as formatted text, pictures (.jpg or .gif formats), audio, and video, and the content can be formatted for presentation using SMIL. The standards set no limit on message size. However, network operators may limit the size (e.g., to 128 Kbytes or more) and the video duration (e.g., to 15–30 sec).
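A size limit translates directly into a maximum clip duration. The sketch below computes it under stated assumptions: 1 Kbyte = 1000 bytes, and container and header overhead ignored. The bit rates in the example (52-kbps video plus 12.2-kbps AMR audio) are illustrative.

```python
def max_clip_seconds(limit_kbytes: float, video_kbps: float, audio_kbps: float = 0.0) -> float:
    """Longest clip that fits an MMS size limit, ignoring container and header overhead.

    Assumes 1 Kbyte = 1000 bytes and 1 kbps = 1000 bit/s.
    """
    return limit_kbytes * 1000 * 8 / ((video_kbps + audio_kbps) * 1000)


print(f"{max_clip_seconds(128, 64):.1f} s at 64 kbps total")
print(f"{max_clip_seconds(128, 52, 12.2):.1f} s at 52-kbps video + 12.2-kbps AMR audio")
```

At a combined rate of about 64 kbps, a 128-Kbyte limit allows roughly 16 s of video, which is consistent with the duration limits mentioned above.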

FIGURE 3-11 Mobile Multimedia Applications

FIGURE 3-12 Common Mobile Multimedia Services

The video clip download service is commonly used on mobile networks. The user requests a clip via SMS or over a WAP connection, and the content is received either by MMS or by WAP download. The phone is expected to have appropriate players, such as Real or Windows Media, to play back the downloaded clip (Fig. 3-12).

Video streaming can be used to receive live content (such as a TV program or video from a traffic or security camera). It is essentially an on-demand service. Video streaming services have been standardized through the PSS protocols, and streams are delivered on a unicast basis from the server to the user.

Video calling instead of plain voice call can be used if both the parties have a camera phone. Video calling standards have been formalized under 3G-324M standards, which essentially use the network for a circuit-switched connection ensuring a constant guaranteed bit rate. The video calling service can be extended to include video conferencing.

3.5.3 Standardization of Multimedia for Mobile Networks

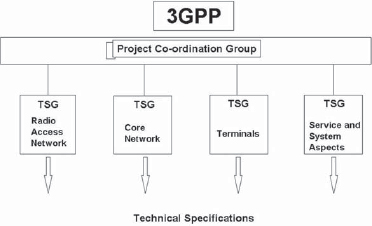

Mobile service operators, equipment manufacturers, and mobile device vendors, as well as the standards bodies, became closely involved in efforts to standardize file formats, protocols, call setup procedures, and applications for mobile networks. It was evident to all that without harmonized standardization, deploying mobile multimedia would be a difficult proposition. The standardization was carried out under the Third Generation Partnership Projects, which bring together a number of standards bodies that set standards for third generation cellular mobile services.

In fact, two fora are involved in these efforts. The first, the 3GPP, has its origin in the GSM-based and GSM-evolved networks (GSM, GPRS, EDGE, and 3G-WCDMA). The second, the 3GPP2, grew out of the harmonization efforts of CDMA-based networks (CDMA2000, CDMA 1X, CDMA 3X, etc.). The 3GPP, constituted in 1998, had as its objective to provide globally applicable technical specifications for a third generation system as it evolved from 2G and 2.5G technologies. The latest releases from the 3GPP and the 3GPP2 now present a harmonized and coordinated picture of file formats, protocols, and call setup procedures, as well as user equipment capabilities.

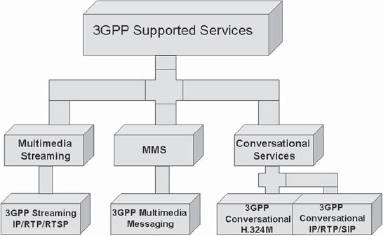

The 3GPP releases include standards for encoding and decoding audio, video, graphics, and data, as well as call control procedures and the capabilities of cell phones and other user devices (Fig. 3-13).

3.6 INFORMATION TRANSMISSION OVER 3G NETWORKS

3G networks provide high bandwidth that can support the transmission of many types of multimedia information, including streaming or live video, video conferencing, high-quality audio, Web browsing, and file downloads. The 3G partnership has been concerned with exactly how such calls, which enable the exchange of video or audio or permit streaming, should be set up. There is also the question of how complex the files can be in terms of encoding profiles, as this is directly related to the processing power available in the phones and to the latency that encoding can introduce.

FIGURE 3-13 3GPP Standardization Areas

These issues have been addressed in successive releases of the 3GPP specifications, from release 99 to release 6. For global application, it was necessary to standardize the procedures for setting up a call and for transferring control to applications that reside in the mobile phone, such as media players. These procedures have been standardized in the various 3GPP releases.

3.7 FILE FORMATS FOR MOBILE MULTIMEDIA

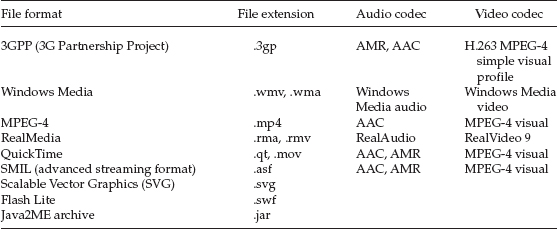

The file formats supported in the mobile multimedia environment have been specified by the 3GPP and 3GPP2. In the initial releases, the files generated by the encoders are based on the MPEG-4 and H.263 coding standards. Files used in GSM, 2.5G, and 3G WCDMA networks have the extension .3gp and are based on MPEG-4 video and AAC and AMR audio. Files used in CDMA and the evolved CDMA2000, CDMA 1X, and 3X networks have the extension .3g2. They are based on the same codecs (MPEG-4 for video; AAC and AMR for audio), with additional support for Qualcomm Code Excited Linear Prediction (QCELP) audio.
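Both .3gp and .3g2 files are built from the box structure of the ISO base media file format, the same family as MP4. Such a file usually begins with an `ftyp` box whose "major brand" identifies the variant, for example `3gp4` to `3gp6` for 3GPP and `3g2a` for 3GPP2. The sketch below reads that box from the start of a file. It handles only the common 32-bit box size and uses a synthetic header rather than a real file.

```python
import struct
from typing import List, Tuple


def read_ftyp(data: bytes) -> Tuple[str, List[str]]:
    """Return (major_brand, compatible_brands) from a leading 'ftyp' box."""
    size, box_type = struct.unpack(">I4s", data[:8])
    if box_type != b"ftyp":
        raise ValueError("file does not start with an ftyp box")
    if size < 16 or size > len(data):
        raise ValueError("truncated or unsupported ftyp box")  # size 0/1 (to-EOF/64-bit) not handled
    major = data[8:12].decode("ascii")                # bytes 12..15 hold the minor version
    compatible = [data[i:i + 4].decode("ascii") for i in range(16, size, 4)]
    return major, compatible


def family(major_brand: str) -> str:
    if major_brand.startswith("3g2"):
        return "3GPP2 (.3g2)"
    if major_brand.startswith("3gp"):
        return "3GPP (.3gp)"
    return "other ISO base media file"


# Synthetic 24-byte ftyp box; with a real file, use: data = open("clip.3gp", "rb").read(64)
header = struct.pack(">I4s4sI4s4s", 24, b"ftyp", b"3gp4", 0, b"isom", b"3gp4")
major, compatible = read_ftyp(header)
print(major, compatible, family(major))
```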

Many 2.5G and 3G mobile phones support additional file formats that can be downloaded for better functionality. Examples include Windows Media (.wmv and .wma) and Real files, as well as documents such as Microsoft PowerPoint (.ppt) and Portable Document Format (.pdf) files, but these are not mandated by the 3GPP. The available e-mail clients can send these files as attachments.

File formats for 3GPP are based on MPEG-4, which forms the underlying technology for most multimedia applications. MPEG-4 is an object-based encoding standard with a layered structure that separates the coding layers from the network abstraction layers, which makes it well suited to delivery over a variety of media. MPEG-4 also has a large number of profiles. These allow it to serve very low bit rate applications while retaining the flexibility to go up to higher bit rates through enhancement layers, or to broadcast-quality transmission right up to high definition. MPEG-4 is also well suited to handling computer-generated and animation objects, such as synchronized graphics and text, face and body animation, and many other applications. MPEG-4 Part 10 is also standardized as ITU-T H.264 and fits into the MPEG-4 framework.

3.7.1 3GPP-Specified File Formats and Encoder Specifications

As MPEG-4 has many profiles and levels, the 3GPP has standardized the following as the baseline specifications for use over 3G networks (3G-324M encoders/decoders).

Video coding: The recommended support is MPEG-4 Simple Visual Profile Level 1 (ISO/IEC 14496-2). The frame rate can go up to 15 fps, and the horizontal and vertical pixel resolution should be 176 × 144 (QCIF).

Audio coding: AMR coding and decoding are mandatory; G.723.1 is recommended.
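The following calculation shows how much compression these limits imply. The sketch assumes 8-bit YUV 4:2:0 sampling (12 bits per pixel on average) and measures the result against a 64-kbps circuit-switched bearer, ignoring the share taken by audio and multiplexing. Under those assumptions, it computes the uncompressed rate of QCIF video at 15 fps.

```python
def raw_bitrate_bps(width: int, height: int, fps: float, bits_per_pixel: float = 12) -> float:
    """Uncompressed video bit rate; 12 bits/pixel corresponds to 8-bit YUV 4:2:0 sampling."""
    return width * height * bits_per_pixel * fps


raw = raw_bitrate_bps(176, 144, 15)
print(f"Raw QCIF at 15 fps: {raw / 1e6:.2f} Mbit/s")
print(f"Compression needed to fit a 64-kbps bearer: about {raw / 64_000:.0f}:1")
```

Even at this small resolution, the encoder must compress the video by roughly 70:1. This is one reason the 3GPP constrains profiles and levels to keep encoding and decoding within a handset's processing budget.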

This standardization was considered necessary to limit the complexity of the encoders and decoders used in mobile devices. MPEG-4 Simple Visual Profile Level 1 includes support for the H.263 baseline codec. It offers adequate error resilience for use on wireless networks while keeping complexity low, and it meets the needs of low-delay multimedia communications. The specification recommends that encoders enable all the error resilience tools of the simple visual profile.

Release 6 of the 3GPP recommends support for the H.264 (MPEG-4 Part 10/AVC) codec with the full baseline profile as an option. Today, H.264 support is quite common in many networks.

Conversational calls using 3G-324M essentially use H.263 coding. The 3GPP recommends the features and parameters that such codecs should support, and these extensions are covered in the Mobile Extension Annex of H.263 (Fig. 3-14).

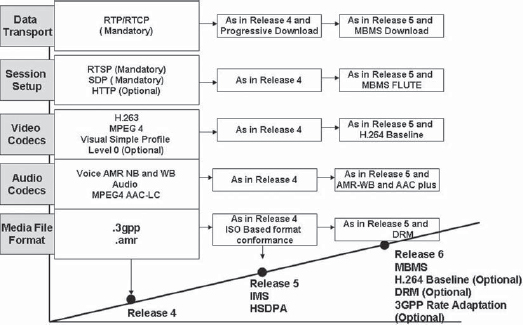

The first release of the industry-coordinated specifications for the mobile networks was in 1999. Since then there have been progressive developments, which have been reflected in further releases and upgrades.

3GPP release 1999: Release 99 resulted in the adoption of universal terrestrial radio access (UTRA), the radio standard for WCDMA, with provisions for both the FDD and the TDD (3.84 Mcps) modes. Release 99 also standardized a new codec, narrowband AMR (Fig. 3-15).

3GPP release 4, March 2001: Release 4 took the first steps toward an IP-based infrastructure, with the 3GPP embracing an all-IP core network based on IPv6. Its main features were:

• New messaging systems: Release 4 provided for enhanced messaging systems including rich text formatting and still image and MMS.

• Circuit-switched network architecture: Release 99 provided for bearer-independent network architecture.

• IP streaming: The release defined a protocol stack for streaming real-time video over the network.

• GERAN–GPRS/EDGE interface: Release 4 provided for the EDGE/GPRS interface.

FIGURE 3-15 3GPP-Supported Services

3GPP release 5, March 2002: Reflecting the rapid pace of standardization in 3G systems, the 3GPP release in 2002 unveiled the IMS (IP multimedia subsystem) as the core of the 3G mobile networks. The entire network is based on IP, which is packet based, and bearer services are derived from the IP-based network. IMS provides for conversational calls based on the session initiation protocol (SIP). Release 5 also introduced HSDPA (high-speed downlink packet access) for the first time, along with a wideband adaptive multirate (AMR-WB) codec and end-to-end QoS. HSDPA is a major step toward services such as unicast mobile TV on 3G networks.

The framework provided by the IP multimedia subsystem of release 5 sets the stage for end-to-end IP-based multimedia services, breaking away from the circuit-switched architecture of previous generations. It also eases the integration of instant messaging and real-time conversational services. The messaging enhancements include enhanced messaging and multimedia messaging.

It is important to note that the IMS is access independent. Hence it can support IP-to-IP sessions over packet data GPRS/EDGE or 3G, packet data CDMA, IEEE 802.11 wireless LANs and 802.15 wireless personal area networks, as well as wireline IP networks.

The IMS consists of session control, connection control, and an application services framework.

Release 5 also introduced security interfaces, including access security, access domain security, and the lawful interception interface (Fig. 3-16).

3GPP release 6: A major feature of release 6 was the introduction of the Multimedia Broadcast and Multicast Services. The following were the major new features of release 6 of 3GPP:

• Wideband codec: Release 6 introduced an enhancement of the AMR wideband codec (AMR-WB+) for better sound quality and coding.

• Packet streaming services (3GPP-PSS protocols).

• Wireless LAN to UMTS interworking, whereby a mobile subscriber can access IP services via a wireless LAN.

• Digital rights management.

• Push services for pushing of content to mobile devices.

FIGURE 3-16 3GPP Releases for Mobile Multimedia

3GPP2 networks: At the same time there has been a continued harmonization effort with the networks that have evolved to 3G from the CDMA-based networks, a subject that has been addressed by the 3GPP2. Both groups have been attempting to harmonize the radio access and terminal design as well as the core network design. In 1999, in joint meetings known as the “Hooks and Extensions,” the interoperability between the radio access technologies and the dual-mode terminals was finalized.

After release 5 of 3GPP in 2002, there was a joint focus on common definitions for channels and traffic models as well as common physical requirements for terminals. There was also harmonization in the use of services across the two architectures, HSDPA of 3GPP and 1×EV-DV of 3GPP2. All the IP network and radio interface harmonization work progressed through the following years. The use of IPv6 in 3GPP and IPv4 in 3GPP2 is also being harmonized for interworking.

FIGURE 3-17 3GPP-PSS Protocol Stack (Release 4, 2001)

Streaming application in 3G networks: Streaming, an important application, has been standardized for 3G networks as 3GPP packet-switched streaming (3GPP-PSS). The 3GPP-PSS defines the complete protocol stack for call establishment and the transfer of data using the IP layer. It also describes the audio and video file formats and the formats for graphics, scene description, and presentation of information. Complete protocol stacks such as 3GPP-PSS lend uniformity to call setup and multimedia data transfers across various networks, even though these may be based on different air interfaces (Fig. 3-17).
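To make the call-establishment side of the stack concrete: a PSS client normally obtains a session description in SDP format (typically via RTSP) before the media flows over RTP/UDP. The sketch below is a minimal illustration, not a conforming PSS client; it parses the "m=" media lines and "a=rtpmap" attributes of such a description to list the codecs offered. The sample SDP text, its address, and the helper names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class MediaDescription:
    """One 'm=' section of an SDP session description."""
    media: str                      # e.g. "audio" or "video"
    port: int
    payload_types: List[str]
    codecs: Dict[str, str] = field(default_factory=dict)  # payload type -> encoding


def parse_sdp_media(sdp: str) -> List[MediaDescription]:
    """Collect media sections and their rtpmap codec mappings from SDP text."""
    sections: List[MediaDescription] = []
    for raw in sdp.splitlines():
        line = raw.strip()
        if line.startswith("m="):
            # m=<media> <port> <proto> <fmt list>
            media, port, _proto, *fmts = line[2:].split()
            sections.append(MediaDescription(media, int(port), fmts))
        elif line.startswith("a=rtpmap:") and sections:
            # a=rtpmap:<payload type> <encoding name>/<clock rate>[/<channels>]
            pt, encoding = line[len("a=rtpmap:"):].split(maxsplit=1)
            sections[-1].codecs[pt] = encoding
    return sections


# Hypothetical session description for a clip with H.263 video and AMR audio.
EXAMPLE_SDP = """v=0
o=- 0 0 IN IP4 192.0.2.1
s=Example 3GPP clip
t=0 0
m=video 0 RTP/AVP 96
a=rtpmap:96 H263-2000/90000
m=audio 0 RTP/AVP 97
a=rtpmap:97 AMR/8000/1
"""

if __name__ == "__main__":
    for section in parse_sdp_media(EXAMPLE_SDP):
        print(section.media, section.codecs)
```

Session-level attributes that appear before the first "m=" line are ignored here; a fuller parser would keep them separately.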

3.8 FILE FORMATS FOR 3GPP AND 3GPP2

File formats have been agreed upon for use on 3G mobile networks so that mobile phones can support the encoder and decoder types, given their CPU and power limitations, and at the same time have uniformity in applications.

For video coding, two codec types are most common: the H.263 codec, usually used for video conferencing applications (and also for rich media calls using 3G-324M circuit-switched calling on mobile networks), and the MPEG-4 codec, for which the visual simple profile is used. Support for other codecs such as H.264 has been made optional in release 6, and many implementations now support H.264. For audio coding, AAC codec support is required; this codec provides high-efficiency coding for high-fidelity applications at output bit rates of 16–32 kbps. For voice applications, support for AMR is required; it provides high-efficiency coding at 4.75 to 12.2 kbps. The CDMA-based networks under 3GPP2 have standardized on the QCELP codec, called Qualcomm PureVoice, or its equivalent implementations.
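The AMR bit-rate range quoted above maps directly onto bandwidth and storage budgets, because AMR codes speech in 20 ms frames. The sketch below, offered under stated assumptions, picks the highest AMR mode that fits a given bandwidth (a hypothetical selection policy; real systems adapt the mode through signaling between the endpoints) and estimates the size of a clip stored in the AMR file storage format described in RFC 4867. The helper names are hypothetical.

```python
import math
from typing import List

# AMR (narrowband) codec modes in kbps, from 4.75 to 12.2 kbps.
AMR_MODES_KBPS: List[float] = [4.75, 5.15, 5.90, 6.70, 7.40, 7.95, 10.2, 12.2]
FRAME_MS = 20                 # AMR codes speech in 20 ms frames (50 frames per second)
AMR_FILE_MAGIC = b"#!AMR\n"   # header of the single-channel AMR storage format


def speech_bits_per_frame(mode_kbps: float) -> int:
    """Speech bits in one frame: kbps x ms = bits."""
    return round(mode_kbps * FRAME_MS)


def pick_amr_mode(available_kbps: float) -> float:
    """Hypothetical policy: highest AMR mode that fits the available bandwidth."""
    fitting = [mode for mode in AMR_MODES_KBPS if mode <= available_kbps]
    if not fitting:
        raise ValueError("bandwidth is below the lowest AMR mode (4.75 kbps)")
    return max(fitting)


def amr_file_bytes(mode_kbps: float, seconds: float) -> int:
    """Approximate size of a speech clip in AMR storage format: the file header plus,
    per frame, a 1-byte frame header and the speech bits padded to whole bytes."""
    frame_bytes = 1 + math.ceil(speech_bits_per_frame(mode_kbps) / 8)
    frames = math.ceil(seconds * 1000 / FRAME_MS)
    return len(AMR_FILE_MAGIC) + frames * frame_bytes


if __name__ == "__main__":
    print(pick_amr_mode(9.0))        # 7.95
    print(amr_file_bytes(12.2, 60))  # 96006 bytes for one minute at 12.2 kbps
```

At 12.2 kbps each stored frame occupies 32 bytes (1 header byte plus 244 speech bits padded to 31 bytes), so one minute of speech needs roughly 96 Kbytes.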

3GPP files (.3gp) are based on the ISO base media file format, which is the primary standard for MPEG-4-based files. The ISO/IEC formats have been standardized by the ISO Moving Picture Experts Group (MPEG). The 3GPP file format is a simpler version of the ISO file format (ISO/IEC 14496-1 media format), supporting only video in H.263 or MPEG-4 (visual simple profile) and audio in AMR or AAC-LC formats.
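A player can recognize a 3GPP or 3GPP2 file from the "ftyp" (file type) box at the start of the file, whose major and compatible brands name the specifications the file conforms to. The sketch below assumes that brands beginning with "3gp" (for example "3gp4") denote 3GPP and those beginning with "3g2" (for example "3g2a") denote 3GPP2; the function names and the synthetic sample are hypothetical.

```python
import struct
from typing import List, Tuple


def read_ftyp(data: bytes) -> Tuple[str, int, List[str]]:
    """Parse a leading 'ftyp' box into (major brand, minor version, compatible brands).
    The rare 64-bit box size form is not handled and is rejected as invalid."""
    if len(data) < 16:
        raise ValueError("too short for an ftyp box")
    size, box_type = struct.unpack(">I4s", data[:8])  # 32-bit big-endian size + type
    if box_type != b"ftyp" or size < 16 or size > len(data):
        raise ValueError("file does not start with a valid ftyp box")
    major = data[8:12].decode("ascii")
    minor = struct.unpack(">I", data[12:16])[0]
    compatible = [data[i:i + 4].decode("ascii") for i in range(16, size, 4)]
    return major, minor, compatible


def classify(brands: List[str]) -> str:
    """Assumed convention: '3gp*' brands mean 3GPP, '3g2*' brands mean 3GPP2.
    The first matching brand wins, so pass the major brand first."""
    for brand in brands:
        if brand.startswith("3g2"):
            return "3GPP2"
        if brand.startswith("3gp"):
            return "3GPP"
    return "other ISO base media / MP4"


# Synthetic 24-byte ftyp box: major brand '3gp4', compatible brands '3gp4' and 'isom'.
SAMPLE = struct.pack(">I4s4sI4s4s", 24, b"ftyp", b"3gp4", 0, b"3gp4", b"isom")

if __name__ == "__main__":
    major, minor, compatible = read_ftyp(SAMPLE)
    print(major, compatible, classify([major] + compatible))
```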

The MPEG-4 format (.mp4) (ISO 14496-14) allows multiplexing of multiple audio and video streams in one file (which can be delivered over any type of network using the network abstraction layer). It also permits variable frame rates, subtitles, and still images.

The MPEG-4 file structure has two parts—wrapper and media. The wrapper or container file supports:

• MPEG-4 and H.263 video;

• AAC and AMR audio; and

• timed text tracks.

The media part consists of a hierarchy of atoms containing metadata and media data, and each track consists of a single independent media data stream. For streaming, each media stream should have its own hint track.
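The atom hierarchy described above is what the ISO base media file format calls boxes: each box starts with a 32-bit big-endian size and a four-character type, container boxes such as "moov" (metadata) and "trak" (one per track) nest further boxes, and "mdat" holds the media data. The sketch below walks that hierarchy in a byte string. The list of container types is partial and the sample file is synthetic, so treat it as an illustration rather than a complete parser.

```python
import struct
from typing import Iterator, Optional, Tuple

# Boxes whose payload is itself a sequence of boxes (a partial list, for illustration).
CONTAINER_TYPES = {b"moov", b"trak", b"mdia", b"minf", b"stbl", b"udta"}


def iter_boxes(data: bytes, start: int = 0, end: Optional[int] = None,
               depth: int = 0) -> Iterator[Tuple[int, str, int]]:
    """Yield (depth, box type, box size), descending into known container boxes."""
    end = len(data) if end is None else end
    pos = start
    while pos + 8 <= end:
        size, box_type = struct.unpack(">I4s", data[pos:pos + 8])
        header = 8
        if size == 1:      # a 64-bit 'largesize' follows the type field
            size = struct.unpack(">Q", data[pos + 8:pos + 16])[0]
            header = 16
        elif size == 0:    # the box runs to the end of the enclosing data
            size = end - pos
        if size < header or pos + size > end:
            raise ValueError(f"malformed box at offset {pos}")
        yield depth, box_type.decode("ascii", "replace"), size
        if box_type in CONTAINER_TYPES:
            yield from iter_boxes(data, pos + header, pos + size, depth + 1)
        pos += size


def box(box_type: bytes, payload: bytes = b"") -> bytes:
    """Build a box with a 32-bit size field (helper for the synthetic sample)."""
    return struct.pack(">I4s", 8 + len(payload), box_type) + payload


# Synthetic file: ftyp, a moov holding two tracks, and an mdat with dummy media bytes.
SAMPLE = (box(b"ftyp", b"3gp4" + struct.pack(">I", 0) + b"3gp4")
          + box(b"moov", box(b"trak", box(b"mdia")) + box(b"trak", box(b"mdia")))
          + box(b"mdat", bytes(16)))

if __name__ == "__main__":
    for depth, box_type, size in iter_boxes(SAMPLE):
        print("  " * depth + f"{box_type} ({size} bytes)")
```

Hint tracks, where present, also appear as "trak" boxes; telling them apart from media tracks would require reading the handler type inside "mdia", which this sketch does not do.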

3GPP files may conform to one of the following profiles:

• 3GPP Streaming Server profile: This profile ensures interoperability when the streaming server selects among the alternative encodings available for delivery to other devices.

• 3GPP Basic profile: This profile is used for PSS and the messaging application MMS. The use of the Basic profile guarantees that the server will work with the other devices.

3.9 CREATING AND DELIVERING 3GPP AND 3GPP2 CONTENT

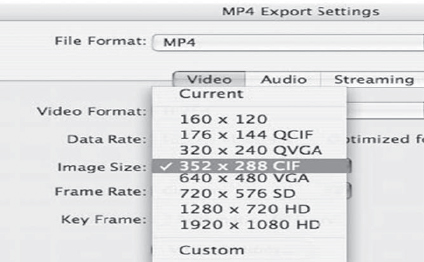

Content in the 3GPP and 3GPP2 formats can be prepared and delivered using a number of available industry products. As an example, Apple QuickTime provides a platform for the creation, delivery, and playback of 3GPP and 3GPP2 multimedia content. It provides native support for mobile standards as well as a full suite of tools for ingest, editing, encoding, and stream serving.

Apple’s QuickTime Pro 7, which can be installed on Windows computers or PowerMac, allows the user to ingest video and audio files, to compress using H.264 or 3GPP2, and to prepare multimedia files using Dolby 5.1 or AAC audio. The output files can be saved as 3GPP for delivery over mobile networks as well.

Apple’s QuickTime streaming server can stream MPEG-4, H.264, or 3GPP files over IP networks using the open-standard RTP/RTSP protocols. The QuickTime family also has other tools, such as XServer, with which playlists can be loaded with 3GPP, MPEG-4, or MP3 files so that the server can be used as an Internet or mobile network TV station (Fig. 3-18).

FIGURE 3-18 Screenshot of QuickTime Video File Creation in MPEG-4 (Courtesy of Apple Computers)
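The RTSP side of such a streaming session is plain text. A client typically sends DESCRIBE to retrieve the SDP description, then SETUP and PLAY, and finally TEARDOWN, while the media itself travels over RTP. The sketch below only formats a request and parses a canned response in the RTSP/1.0 style of RFC 2326; it is not specific to the QuickTime streaming server, and the URL and response are hypothetical.

```python
from typing import Dict, Optional, Tuple


def build_rtsp_request(method: str, url: str, cseq: int,
                       extra_headers: Optional[Dict[str, str]] = None) -> str:
    """Format an RTSP/1.0 request: request line, CSeq, optional headers, blank line."""
    lines = [f"{method} {url} RTSP/1.0", f"CSeq: {cseq}"]
    for name, value in (extra_headers or {}).items():
        lines.append(f"{name}: {value}")
    return "\r\n".join(lines) + "\r\n\r\n"


def parse_rtsp_response(response: str) -> Tuple[int, Dict[str, str], str]:
    """Split an RTSP response into (status code, lower-cased headers, body)."""
    head, _, body = response.partition("\r\n\r\n")
    status_line, *header_lines = head.split("\r\n")
    _version, code, _reason = status_line.split(" ", 2)
    headers: Dict[str, str] = {}
    for line in header_lines:
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()
    return int(code), headers, body


# Hypothetical server URL and canned response; no network I/O is performed here.
URL = "rtsp://media.example.com/clip.3gp"
SAMPLE_RESPONSE = ("RTSP/1.0 200 OK\r\nCSeq: 1\r\n"
                   "Content-Type: application/sdp\r\nContent-Length: 5\r\n\r\nv=0\r\n")

if __name__ == "__main__":
    print(build_rtsp_request("DESCRIBE", URL, 1, {"Accept": "application/sdp"}), end="")
    status, headers, sdp = parse_rtsp_response(SAMPLE_RESPONSE)
    print(status, headers["content-type"])
```

A real client would send each request over a TCP connection (port 554 by default) and increment CSeq for every request in the session.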

3GPP recommendations support rich media applications. SMIL, which can be used in end-to-end packet streaming networks with 3G technology (3GPP-PSS), has been defined in 3GPP TS 26.234. These specifications are based on SMIL 2.0. The elements shown in Table 3-1 are included in the 3GPP-PSS SMIL.

3.11 MESSAGING APPLICATIONS IN 3GPP

In the 3G domain, with a multiplicity of networks and devices, it has also been necessary to precisely define the conformance for the MMS. This has been done in the MMS conformance document, which specifically addresses the coding and presentation of multimedia messages. For interoperability the set of requirements has been defined at the following levels:

• message content,

• attributes of presentation language and presentation elements,

• media content format, and

• lower level capabilities.

TABLE 3-1 3GPP-PSS SMIL Modules

| Serial No. | 3GPP-PSS SMIL module |
|---|---|
| 1 | SMIL 2.0: Content control modules (BasicContentControl, PrefetchControl, and SkipContentControl) |
| 2 | SMIL 2.0: Layout module (BasicLayout) |
| 3 | SMIL 2.0: Linking modules (BasicLinking, LinkingAttributes) |
| 4 | SMIL 2.0: Structure module |
| 5 | SMIL 2.0: Meta information module |
| 6 | SMIL 2.0: Transition effects module |
| 7 | SMIL 2.0: Media object modules (BasicMedia, MediaClipping, MediaParameter, MediaAccessibility, and MediaDescription) |
| 8 | SMIL 2.0: Timing and synchronization modules (BasicInlineTiming, MinMaxTiming, BasicTimeContainers, RepeatTiming, and EventTiming) |

FIGURE 3-19 SMIL Document Structure

MMS SMIL has been defined in the conformance document.

A SMIL document consists of two parts: the head and the body. The head contains the author-defined content control, metadata, and layout information. The information in the head does not convey the sequence or timing in which the various elements are presented. The body contains the information related to the temporal and linking behavior of the document (Fig. 3-19).
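The head/body split can be illustrated by generating a small document. The sketch below builds a SMIL-style presentation (an image with a caption and accompanying audio, similar in spirit to an MMS slide) in which the layout lives in the head and all timing lives in the body. The region names, file names, and 176 × 208 layout are hypothetical, the SMIL namespace declaration is omitted for brevity, and the output is not validated against the MMS SMIL conformance profile.

```python
import xml.etree.ElementTree as ET


def build_smil(image_src: str, text_src: str, audio_src: str, dur_s: int) -> str:
    """Minimal SMIL-style document: spatial layout in <head>, timing in <body>."""
    smil = ET.Element("smil")

    # Head: author-defined layout (where media appear); it carries no timing.
    head = ET.SubElement(smil, "head")
    layout = ET.SubElement(head, "layout")
    ET.SubElement(layout, "root-layout", width="176", height="208")
    ET.SubElement(layout, "region", id="Image", top="0", left="0", width="176", height="144")
    ET.SubElement(layout, "region", id="Text", top="144", left="0", width="176", height="64")

    # Body: temporal behavior; children of <par> play in parallel for dur_s seconds.
    body = ET.SubElement(smil, "body")
    par = ET.SubElement(body, "par", dur=f"{dur_s}s")
    ET.SubElement(par, "img", src=image_src, region="Image")
    ET.SubElement(par, "text", src=text_src, region="Text")
    ET.SubElement(par, "audio", src=audio_src)
    return ET.tostring(smil, encoding="unicode")


if __name__ == "__main__":
    print(build_smil("photo.jpg", "caption.txt", "voice.amr", 5))
```

Replacing `<par>` with `<seq>` would play the same media one after another instead of together, without touching the head.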

3.12 EXAMPLES OF MOBILE NETWORKS USING 3GPP CONTENT

In Japan, in 2001, NTT DoCoMo launched its 3G service, called FOMA, which was based on the use of 3GPP content. FOMA permits circuit-switched data transmission at speeds up to 64 kbps and packet data transmission up to 384 kbps, and it supports up to three simultaneous activities (a circuit-switched voice call, i-mode, and terminal functions such as the scheduler, calculator, and address book). A strong feature of the service is the use of single-key functions. Video and audio files can also be downloaded and played.

One of the services offered is “Visual Net,” which enables up to eight people to join a call simultaneously and lets the mobile window show a single user or up to four users at once. M-Stage V-Live, as the name suggests, was launched as a streaming service featuring one-to-many video streaming. i-motion is a mail service for sending multimedia content, including video clips, to other FOMA-compatible mobile phones.

Mobile handsets for 3G services can play 3GPP content without any special players. However, most handsets provide additional players to play or handle other downloaded content. For example, the Fujitsu F902is phone can play Windows Media 9, 3GPP, and i-motion content using technology supplied by PacketVideo. Most handsets are also equipped with Bluetooth, infrared, and contactless IC card technology, which permits them to be used as mobile wallets for a wide range of applications.

3.13 MULTIMEDIA FORMATS FOR “BROADCAST MODE” MOBILE TV NETWORKS

The 3G file formats followed an evolutionary path from 2.5G networks such as GPRS and EDGE to 3G and were based on the need to support low-bit-rate connectivity at the lower end. Broadcast-based multimedia networks such as ISDB-T and DVB-H (discussed later in the book) were not constrained to the codecs needed for very low bit rates, e.g., for conversational speech. Hence the use of H.264 for video and AAC+ for audio is quite common in these networks. Nevertheless, the phones used to receive such broadcasts are also used for conversational calls, so support for 3GPP formats remains universal.

3.14 GRAPHICS AND ANIMATIONS IN THE MOBILE ENVIRONMENT

Graphics and animation form a very important part of any mobile application. The information can be of any type: weather or news, games or cartoons, music with animated graphics, animated movies, online shopping options, and much else. The quality of presentation improves manyfold when the information is presented as graphics or animated video. The technologies for graphics and animations have developed along with the Internet and are well established, with hundreds of millions of users. Web sites have been using Flash graphics and animations or Java applets for animated images and device-independent applications.

3.14.1 Graphics

There are two methods for depicting graphics. The first is called “raster graphics,” in which images are represented as bitmaps. Bitmap images are stored with the details of all the pixels that describe the image. For example, a 640 × 480-pixel image is fully defined by 640 × 480 = 307,200 pixels. If each pixel is represented by 3 bytes, the image needs about 921 Kbytes. A graphic represented by pixels therefore needs all of these 307 Kpixels. While it is possible to use compression (such as JPEG) to reduce the file size, the graphics still require considerable memory for storage and bandwidth for transport. Moreover, they cannot be scaled up easily, as the same 307 Kpixels that may be adequate for a VGA screen would be totally inadequate for a screen scaled 50 times.
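The arithmetic above generalizes to any uncompressed bitmap: size equals width × height × bytes per pixel, and scaling both dimensions by a factor k multiplies the storage by k². The sketch below reproduces the VGA figure; the 176 × 208 handset screen at 2 bytes (16 bits) per pixel is an illustrative assumption, and the function names are hypothetical.

```python
def raster_bytes(width: int, height: int, bytes_per_pixel: int = 3) -> int:
    """Uncompressed bitmap size: every pixel is stored explicitly."""
    return width * height * bytes_per_pixel


def scaled_raster_bytes(width: int, height: int, scale: float,
                        bytes_per_pixel: int = 3) -> int:
    """Bitmap size after scaling both dimensions by `scale` (storage grows as scale**2)."""
    return raster_bytes(round(width * scale), round(height * scale), bytes_per_pixel)


if __name__ == "__main__":
    print(raster_bytes(640, 480))                     # 921600 bytes for 24-bit VGA
    print(raster_bytes(176, 208, bytes_per_pixel=2))  # 73216 bytes for a 16-bit handset screen
    print(scaled_raster_bytes(640, 480, 2))           # 3686400: doubling each side quadruples it
```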

The alternative method of representation is vector graphics, in which all shapes are represented by mathematical equations. The information in the graphics is conveyed as equations, which are computed (executed) prior to display. For example, a circle may be represented by its center point, radius, and fill color; the circle can then be scaled to any size by varying the radius, without loss of quality. Vector graphics also need much smaller files, as only the executable instructions need to be conveyed instead of thousands (or millions) of pixels. Vector graphics used on Web sites are therefore fast to load, as no full bitmap of the picture needs to be downloaded. The representation also produces sharp, crisp images, as no resolution is lost to image compression.
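The circle example can be made concrete. In the sketch below, a circle is stored as its center, radius, and fill color and serialized as an SVG `<circle>` element; scaling it tenfold changes only three numbers, whereas a bitmap of the same circle grows with the square of its size. The class and helper names are hypothetical, and the comparison ignores compression on both sides.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Circle:
    """A vector shape: a few numbers instead of a grid of pixels."""
    cx: float
    cy: float
    r: float
    fill: str

    def scaled(self, k: float) -> "Circle":
        """Exact scaling: multiply the coordinates and the radius by k."""
        return Circle(self.cx * k, self.cy * k, self.r * k, self.fill)

    def to_svg(self) -> str:
        """Serialize as an SVG <circle> element."""
        return f'<circle cx="{self.cx:g}" cy="{self.cy:g}" r="{self.r:g}" fill="{self.fill}"/>'


def bounding_box_raster_bytes(c: Circle, bytes_per_pixel: int = 3) -> int:
    """Bytes needed to store the same circle as a bitmap of its bounding square."""
    side = math.ceil(2 * c.r)
    return side * side * bytes_per_pixel


if __name__ == "__main__":
    small = Circle(50, 50, 40, "red")
    big = small.scaled(10)
    print(big.to_svg(), len(big.to_svg()), "bytes as text")
    print(bounding_box_raster_bytes(big), "bytes as a 24-bit bitmap")
```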

There are many programs that can be used to prepare vector graphics, including Macromedia Flash, Adobe Photoshop CS, and CorelDRAW. When graphics or animations (movies) are produced with vector graphics-based programs, they can be played back using a corresponding player. For example, Flash source files have the extension .fla, while the resulting graphics or animation has the extension .swf (Shockwave Flash). The receiving device (e.g., a mobile phone) needs a corresponding player that can play .swf files.

3.14.2 Scalable Vector Graphics for Mobiles (SVG-T)

The leading mobile network operators and handset manufacturers were keen to provide standards-based SVG on mobile phones so that applications could work across various networks and handsets. At the same time, any standard to be adopted needed to address the limited resources of a handset. The mobile SVG profile, also called SVG-T (SVG-Tiny), was adopted by the 3GPP and is now recommended for use in mobile phones conforming to the standards (http://www.w3c.org/TR/SVGMobile). Major network operators and mobile phone manufacturers have adopted SVG for depicting graphics and animation in mobile multimedia applications. The first formal adaptation of the SVG 1.1 profile for mobiles (SVG-T) was published by the W3C in 2003, after which the 3GPP adopted it as the 2D graphics standard for applications such as MMS. In 2005, the SVG 1.2 version was adopted.

SVG-T is a rich XML-based language and, by the very nature of scalable vector graphics, automatically resizes to fit any size of mobile display. It can provide time-based (rather than frame-based) animation for accurate presentation and supports various commonly used video and audio formats and graphics files (JPEG, PNG, etc.). Among its powerful features are the “mouse style pointer” and “pointer click,” which give the user control to steer the application through rich graphics.
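Both properties can be seen in a short SVG Tiny document and in the arithmetic behind time-based animation. In the sketch below, the `viewBox` lets the player scale the drawing to the display, and the `<animate>` element is specified by duration rather than by frame count, so the circle's position after a given time is the same whatever frame rate a handset achieves. The document content, the 176 × 208 coordinate space, and the helper functions are illustrative assumptions, not taken from the SVG Tiny specification.

```python
def svg_tiny_doc(dur_s: float = 2.0) -> str:
    """A small SVG Tiny 1.2 document: the viewBox lets a player scale it to any screen,
    and <animate> moves a circle from cx=20 to cx=156 over dur_s seconds."""
    return (
        '<svg xmlns="http://www.w3.org/2000/svg" version="1.2" baseProfile="tiny" '
        'viewBox="0 0 176 208">'
        '<circle cx="20" cy="104" r="16" fill="blue">'
        f'<animate attributeName="cx" from="20" to="156" dur="{dur_s:g}s" fill="freeze"/>'
        "</circle></svg>"
    )


def time_based_value(t: float, start: float, end: float, dur: float) -> float:
    """Time-based animation: the value depends only on elapsed time t (seconds)."""
    frac = min(max(t / dur, 0.0), 1.0)
    return start + (end - start) * frac


def frame_based_value(frames_rendered: int, start: float, end: float,
                      total_frames: int) -> float:
    """Frame-based alternative: advances one fixed step per rendered frame."""
    frac = min(frames_rendered / total_frames, 1.0)
    return start + (end - start) * frac


if __name__ == "__main__":
    print(svg_tiny_doc())
    # After 1 s, a 10 fps handset and a 25 fps handset show the same position...
    print(time_based_value(10 / 10, 20, 156, 2.0), time_based_value(25 / 25, 20, 156, 2.0))
    # ...whereas content authored as 50 frames at 25 fps lags on the 10 fps handset.
    print(frame_based_value(10, 20, 156, 50))
```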

3.14.3 Animation and Application Software

A considerable amount of software work is done using either Java or Macromedia Flash. These tools help generate applications that run uniformly in different environments and are appealing owing to their animation and their support for developing “lively” applications. It is therefore no surprise that they find extensive use in developing applications for mobile phones.

The mobile world is characterized by tiny screens with low pixel counts and by limited resources, such as memory, for running such programs. Hence it is common to use profiles that are better suited to mobile phones than to desktops, both for developing such applications and for executing them on mobile platforms (Fig. 3-20).

FIGURE 3-20 Software Environment for Mobile Phones

3.14.4 Macromedia Flash Lite

The Flash Lite version of Macromedia Flash takes into account the mobile environment: small screen sizes such as 176 × 208 or 320 × 240, lower color depth, and lower transmission bandwidths. Applications are correspondingly made lighter in terms of memory usage and processor requirements.

Macromedia’s Flash Lite software has been available on mobile devices since 2003. It made its debut as Pocket PC Flash, and Flash Lite 1.1 was released in the same year. Flash Lite is used to develop animation, games, mobile wallpaper, ring tones, and other attractive content. Japan has been a major user of Macromedia Flash Lite software, and nearly 50% of Japanese phones (on the NTT DoCoMo, Vodafone KK, and KDDI networks) have Flash Lite players. Flash Lite 2.0 is based on Flash 7/8 and Flash Lite ActionScript 2.0. It features text enhancements, XML, device video, and mobile shared objects, among other capabilities.

3.14.5 Java Micro Edition (J2ME)

Java is a competing set of software tools providing a rich software development environment. The J2ME Mobile Information Device Profile (MIDP) was conceived as a basic platform (tool kit) for mobile devices that operate in an environment in which reliable connections cannot be guaranteed at all times. The Connected Limited Device Configuration (CLDC), which is the basis of MIDP, is designed to provide Java support for mobile devices. The latest release of MIDP is MIDP 2.0, which provides advanced support for customer applications, such as an enhanced security model and HTTPS support. It also provides for over-the-air deployment of applications and enhanced graphics support.

3.14.6 Browsers for Mobile Phones

The availability of J2ME or Macromedia Flash Lite 2.0 in mobile phones means that Web sites and other applications can use Java or Flash, and the phones should be able to receive richer content. Browsers that run on J2ME or support Flash Lite have been developed for mobile phones. An example is the Opera Mini browser, which runs on Java-enabled phones. The Nokia mobile browser for Symbian 9.3 OS (e.g., in the Nokia N95) supports Flash Lite 2.0.

3.15 APPLICATION STANDARDS AND OPEN MOBILE ALLIANCE

The 3GPP and 3GPP2 fora focus on core network capabilities, including the switching network, the terminal, and the radio. Their specifications provide for the types of files that can be handled as part of applications such as circuit-switched calls, MMS, and packet-switched data.

The area of applications standardization on mobile devices has been the role of the Open Mobile Alliance (OMA). OMA as an open standards body determines how the applications on mobiles should be configured so that they can work interchangeably. OMA is thus concerned with the application stacks on mobile devices for which the underlying layers are provided by the 3G networks.

OMA was created in 2002 with the participation of over 200 companies, broadly from four important groups: mobile operators, application and content providers, manufacturers, and IT companies. Some of the earlier groups working on interoperability also merged into OMA, for example the Mobile Gaming Interoperability Forum, Location Interoperability Forum, Mobile Wireless Internet Forum, MMS Interoperability Group, and Wireless Village.


The mission of the OMA is to standardize applications that are based on open standards, protocols, and interfaces and are independent of the underlying networks (GSM, UMTS, CDMA, EDGE, etc.).

3.16 SUMMARY OF FILE FORMATS USED IN MOBILE MULTIMEDIA

Digital multimedia involves the use of a number of file formats for representing audio, video, and pictures. Mobile multimedia is a special environment using file formats standardized by the 3GPP fora as well as by industry alliances. Table 3-2 presents a summary of file formats and profiles that are typically used in the world of mobile multimedia.