Building the AI-Powered Organization

by Tim Fountaine, Brian McCarthy, and Tamim Saleh

Artificial intelligence is reshaping business, though not at the blistering pace many assume. True, AI is now guiding decisions on everything from crop harvests to bank loans, and once pie-in-the-sky prospects such as totally automated customer service are on the horizon. The technologies that enable AI, like development platforms and vast processing power and data storage, are advancing rapidly and becoming increasingly affordable. The time seems ripe for companies to capitalize on AI. Indeed, we estimate that AI will add $13 trillion to the global economy over the next decade.

Yet, despite the promise of AI, many organizations’ efforts with it are falling short. We’ve surveyed thousands of executives about how their companies use and organize for AI and advanced analytics, and our data shows that only 8% of firms engage in core practices that support widespread adoption. Most firms have run only ad hoc pilots or are applying AI in just a single business process.

Why the slow progress? At the highest level, it’s a reflection of a failure to rewire the organization. In our surveys and our work with hundreds of clients, we’ve seen that AI initiatives face formidable cultural and organizational barriers. But we’ve also seen that leaders who at the outset take steps to break down those barriers can effectively capture AI’s opportunities.

Making the Shift

One of the biggest mistakes leaders make is to view AI as a plug-and-play technology with immediate returns. Deciding to get a few projects up and running, they begin investing millions in data infrastructure, AI software tools, data expertise, and model development. Some of the pilots manage to eke out small gains in pockets of organizations. But then months or years pass without bringing the big wins executives expected. Firms struggle to move from the pilots to companywide programs—and from a focus on discrete business problems, such as improved customer segmentation, to big business challenges, like optimizing the entire customer journey.

Leaders also often think too narrowly about AI requirements. While cutting-edge technology and talent are certainly needed, it’s equally important to align a company’s culture, structure, and ways of working to support broad AI adoption. But at most businesses that aren’t born digital, traditional mindsets and ways of working run counter to those needed for AI.

To scale up AI, companies must make three shifts:

From siloed work to interdisciplinary collaboration

AI has the biggest impact when it’s developed by cross-functional teams with a mix of skills and perspectives. Having business and operational people work side by side with analytics experts will ensure that initiatives address broad organizational priorities, not just isolated business issues. Diverse teams can also think through the operational changes new applications may require—they’re likelier to recognize, say, that the introduction of an algorithm that predicts maintenance needs should be accompanied by an overhaul of maintenance workflows. And when development teams involve end users in the design of applications, the chances of adoption increase dramatically.

From experience-based, leader-driven decision making to data-driven decision making at the front line

When AI is adopted broadly, employees up and down the hierarchy will augment their own judgment and intuition with algorithms’ recommendations to arrive at better answers than either humans or machines could reach on their own. But for this approach to work, people at all levels have to trust the algorithms’ suggestions and feel empowered to make decisions—and that means abandoning the traditional top-down approach. If employees have to consult a higher-up before taking action, that will inhibit the use of AI.

Decision processes shifted dramatically at one organization when it replaced a complex manual method for scheduling events with a new AI system. Historically, the firm’s event planners had used colored tags, pins, and stickers to track conflicts, participants’ preferences, and other considerations. They’d often relied on gut instinct and on input from senior managers, who also were operating on their instincts, to make decisions. The new system rapidly analyzed the vast range of scheduling permutations, using first one algorithm to distill hundreds of millions of options into millions of scenarios, and then another algorithm to boil down those millions into just hundreds, ranking the optimal schedules for each participant. Experienced human planners then applied their expertise to make final decisions supported by the data, without the need to get input from their leaders. The planners adopted the tool readily, trusting its output because they’d helped set its parameters and constraints and knew that they themselves would make the final call.
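
The two-pass funnel described above, in which a cheap first pass prunes the options and a second pass ranks the survivors for a human to choose from, can be illustrated with a toy sketch. This is not the firm's system, whose algorithms and inputs are not described here. The events, slots, conflict rule, and preference scores are all hypothetical, and the scale is tiny (64 options rather than hundreds of millions).

```python
from itertools import product

# Hypothetical toy data, invented for illustration only.
SLOTS = range(4)                      # four available time slots
EVENTS = ["A", "B", "C"]              # three events to schedule
CONFLICTS = {("A", "B")}              # A and B may not share a slot
PREFERRED = {"A": 0, "B": 1, "C": 1}  # each event's preferred slot


def is_feasible(schedule):
    """First pass: a cheap hard-constraint check that discards impossible options."""
    return all(schedule[x] != schedule[y] for x, y in CONFLICTS)


def score(schedule):
    """Second pass: a soft score, here the number of preferences satisfied."""
    return sum(schedule[e] == PREFERRED[e] for e in EVENTS)


def shortlist(top_n=3):
    # Enumerate every assignment of events to slots (4 ** 3 = 64 options).
    options = (dict(zip(EVENTS, slots))
               for slots in product(SLOTS, repeat=len(EVENTS)))
    feasible = [s for s in options if is_feasible(s)]    # prune
    ranked = sorted(feasible, key=score, reverse=True)   # rank
    return feasible, ranked[:top_n]


feasible, best = shortlist()
print(len(feasible), "feasible options; shortlist for the planner:")
for candidate in best:
    print(candidate, "score:", score(candidate))
```

The sketch returns a shortlist rather than a single answer, mirroring the point that experienced planners, not the tool, made the final call.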

From rigid and risk-averse to agile, experimental, and adaptable

Organizations must shed the mindset that an idea needs to be fully baked or a business tool must have every bell and whistle before it’s deployed. On the first iteration, AI applications rarely have all their desired functionality. A test-and-learn mentality will reframe mistakes as a source of discoveries, reducing the fear of failure. Getting early user feedback and incorporating it into the next version will allow firms to correct minor issues before they become costly problems. Development will speed up, enabling small AI teams to create minimum viable products in a matter of weeks rather than months.

Such fundamental shifts don’t come easily. They require leaders to prepare, motivate, and equip the workforce to make a change. But leaders must first be prepared themselves. We’ve seen failure after failure caused by the lack of a foundational understanding of AI among senior executives. (Further on, we’ll discuss how analytics academies can help leaders acquire that understanding.)

Setting Up for Success

To get employees on board and smooth the way for successful AI launches, leaders should devote early attention to several tasks:

Explaining why

A compelling story helps organizations understand the urgency of change initiatives and how all will benefit from them. This is particularly critical with AI projects, because fear that AI will take away jobs increases employees’ resistance to it.

Leaders have to provide a vision that rallies everyone around a common goal. Workers must understand why AI is important to the business and how they’ll fit into a new, AI-oriented culture. In particular, they need reassurance that AI will enhance rather than diminish or even eliminate their roles. (Our research shows that the majority of workers will need to adapt to using AI rather than be replaced by AI.)

When a large retail conglomerate wanted to get its employees behind its AI strategy, management presented it as an existential imperative. Leaders described the threat that digital retailers posed and how AI could help fend it off by improving the firm’s operational efficiency and responsiveness. By issuing a call to arms in a fight for survival, management underscored the critical role that employees had to play.

In sharing their vision, the company’s leaders put a spotlight on workers who had piloted a new AI tool that helped them optimize stores’ product assortments and increase revenue. That inspired other workers to imagine how AI could augment and elevate their performance.

Anticipating unique barriers to change

Some obstacles, such as workers’ fear of becoming obsolete, are common across organizations. But a company’s culture may also have distinctive characteristics that contribute to resistance. For example, if a company has relationship managers who pride themselves on being attuned to customer needs, they may reject the notion that a machine could have better ideas about what customers want and ignore an AI tool’s tailored product recommendations. And managers in large organizations who believe their status is based on the number of people they oversee might object to the decentralized decision making or reduction in reports that AI could allow.

In other cases, siloed processes can inhibit the broad adoption of AI. Organizations that assign budgets by function or business unit may struggle to assemble interdisciplinary agile teams, for example.

Some solutions can be found by reviewing how past change initiatives overcame barriers. Others may involve aligning AI initiatives with the very cultural values that seem like obstacles. At one financial institution with a strong emphasis on relationship banking, for example, leaders highlighted AI’s ability to enhance ties with customers. The bank created a booklet for relationship managers that showed how combining their expertise and skills with AI’s tailored product recommendations could improve customers’ experiences and increase revenue and profit. The AI adoption program also included a contest for sales conversions driven by using the new tool; the winners’ achievements were showcased in the CEO’s monthly newsletter to employees.

A relatively new class of expert, analytics translators, can play a role in identifying roadblocks. These people connect the data engineers and scientists from the technical realm with the people from the business realm: marketing, supply chain, manufacturing, risk personnel, and so on. Translators help ensure that the AI applications developed address business needs and that adoption goes smoothly. Early in the implementation process, they may survey end users, observe their habits, and study workflows to diagnose and fix problems.

Understanding the barriers to change can not only inform leaders about how to communicate with the workforce but also help them determine where to invest, what AI initiatives are most feasible, what training should be offered, what incentives may be necessary, and more.

Budgeting as much for integration and adoption as for technology (if not more)

In one of our surveys, nearly 90% of the companies that had engaged in successful scaling practices had spent more than half of their analytics budgets on activities that drove adoption, such as workflow redesign, communication, and training. Only 23% of the remaining companies had committed similar resources.

Consider one telecom provider that was launching a new AI-driven customer-retention program in its call center. The company invested simultaneously in AI model development and in helping the center’s employees transition to the new approach. Instead of just reacting to calls canceling service, they would proactively reach out to customers at risk of defection, giving them AI-generated recommendations on new offers they’d be likely to accept. The employees got training and on-the-job coaching in the sales skills needed to close the business. Coaches and managers listened in on their calls, gave them individualized feedback, and continually updated the training materials and call scripts. Thanks to those coordinated efforts, the new program reduced customer attrition by 10%.

Balancing feasibility, time investment, and value

Pursuing initiatives that are unduly difficult to implement or require more than a year to launch can sabotage both current and future AI projects.

Organizations needn’t focus solely on quick wins; they should develop a portfolio of initiatives with different time horizons. Automated processes that don’t need human intervention, such as AI-assisted fraud detection, can deliver a return in months, while projects that require human involvement, such as AI-supported customer service, are likely to pay off over a longer period. Prioritization should be based on a long-term (typically three-year) view and take into consideration how several initiatives with different time lines could be combined to maximize value. For example, to achieve a view of customers detailed enough to allow AI to do microsegmentation, a company might need to set up a number of sales and marketing initiatives. Some, such as targeted offers, might deliver value in a few months, while it might take 12 to 18 months for the entire suite of capabilities to achieve full impact.

An Asian Pacific retailer determined that an AI initiative to optimize floor space and inventory placement wouldn’t yield its complete value unless the company refurbished all its stores, reallocating the space for each category of goods. After much debate, the firm’s executives decided the project was important enough to future profitability to proceed—but not without splitting it in two. Part one produced an AI tool that gave store managers recommendations for a few incremental items that would sell well in their outlets. The tool provided only a small fraction of the total return anticipated, but the managers could get the new items into stores immediately, demonstrating the project’s benefits and building enthusiasm for the multiyear journey ahead.

Organizing for Scale

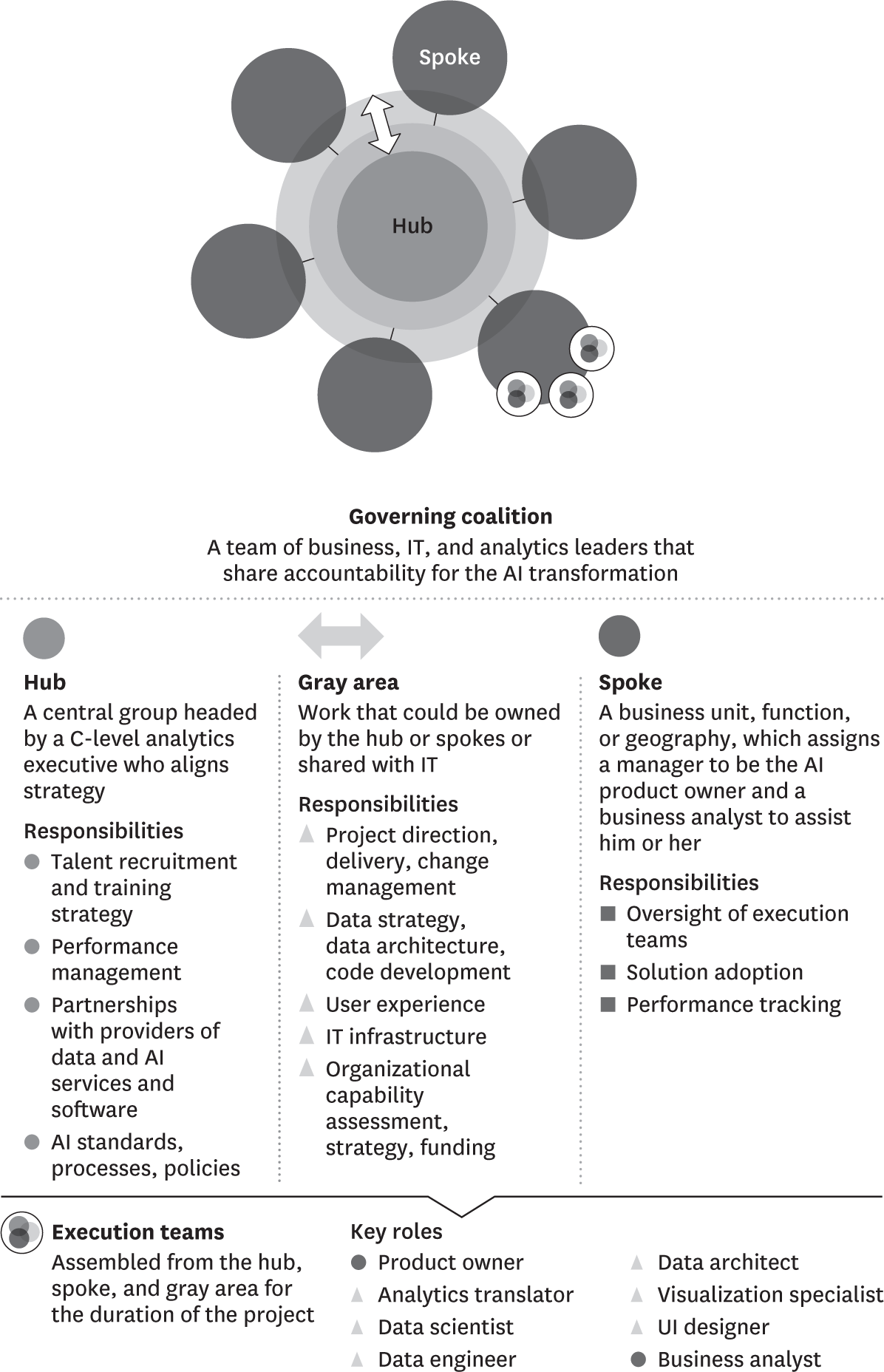

There’s a lot of debate about where AI and analytics capabilities should reside within organizations. Often leaders simply ask, “What organizational model works best?” and then, after hearing what succeeded at other companies, do one of three things: consolidate the majority of AI and analytics capabilities within a central “hub”; decentralize them and embed them mostly in the business units (“the spokes”); or distribute them across both, using a hybrid (“hub-and-spoke”) model. We’ve found that none of these models is always better than the others at getting AI up to scale; the right choice depends on a firm’s individual situation.

Consider two large financial institutions we’ve worked with. One consolidated its AI and analytics teams in a central hub, with all analytics staff reporting to the chief data and analytics officer and being deployed to business units as needed. The second decentralized nearly all its analytics talent, having teams reside in and report to the business units. Both firms developed AI on a scale at the top of their industry; the second organization grew from 30 to 200 profitable AI initiatives in just two years. And both selected their model after taking into account their organizations’ structure, capabilities, strategy, and unique characteristics.

The hub

A handful of responsibilities are always best handled by a hub and led by the chief analytics or chief data officer. These include data governance, AI recruiting and training strategy, and work with third-party providers of data and AI services and software. Hubs should nurture AI talent, create communities where AI experts can share best practices, and lay out processes for AI development across the organization. Our research shows that companies that have implemented AI on a large scale are three times as likely as their peers to have a hub and 2.5 times as likely to have a clear methodology for creating models, interpreting insights, and deploying new AI capabilities.

Hubs should also be responsible for systems and standards related to AI. These should be driven by the needs of a firm’s initiatives, which means they should be developed gradually, rather than set up in one fell swoop, before business cases have been determined. We’ve seen many organizations squander significant time and money, spending hundreds of millions of dollars up front on companywide data-cleaning and data-integration projects, only to abort those efforts midway, realizing little or no benefit.

In contrast, when a European bank found that conflicting data-management strategies were hindering its development of new AI tools, it took a slower approach, making a plan to unify its data architecture and management over the next four years as it built various business cases for its AI transformation. This multiphase program, which also includes an organizational redesign and a revised talent strategy, is expected to have an annual impact of more than $900 million.

The spokes

Another handful of responsibilities should almost always be owned by the spokes, because they’re closest to those who will be using the AI systems. Among them are tasks related to adoption, including end-user training, workflow redesign, incentive programs, performance management, and impact tracking.

To encourage customers to embrace the AI-enabled services offered with its smart, connected equipment, one manufacturer’s sales and service organization created a “SWAT team” that supported customers using the product and developed a pricing plan to boost adoption. Such work is clearly the bailiwick of a spoke and can’t be delegated to an analytics hub.

The gray area

Exhibit, “Organizing AI for scale”: AI-enabled companies divide key roles between a hub and spokes. A few tasks are always owned by the hub, and the spokes always own execution. The rest of the work falls into a gray area, and a firm’s individual characteristics determine where it should be done.

Much of the work in successful AI transformations falls into a gray area in terms of responsibility. Key tasks (setting the direction for AI projects, analyzing the problems they’ll solve, building the algorithms, designing the tools, testing them with end users, managing the change, and creating the supporting IT infrastructure) can be owned by either the hub or the spoke, shared by both, or shared with IT. (See the exhibit “Organizing AI for scale.”) Deciding where responsibility should lie within an organization is not an exact science, but it should be influenced by three factors:

The maturity of AI capabilities. When a company is early in its AI journey, it often makes sense for analytics executives, data scientists, data engineers, user interface designers, visualization specialists who graphically interpret analytics findings, and the like to sit within a hub and be deployed as needed to the spokes. Working together, these players can establish the company’s core AI assets and capabilities, such as common analytics tools, data processes, and delivery methodologies. But as time passes and processes become standardized, these experts can reside within the spokes just as (or more) effectively.

Business model complexity. The greater the number of business functions, lines of business, or geographies AI tools will support, the greater the need to build guilds of AI experts (of, say, data scientists or designers). Companies with complex businesses often consolidate these guilds in the hub and then assign them out as needed to business units, functions, or geographies.

The pace and level of technical innovation required. When they need to innovate rapidly, some companies put more gray-area strategy and capability building in the hub so they can monitor industry and technology changes better and quickly deploy AI resources to head off competitive challenges.

Let’s return to the two financial institutions we discussed earlier. Both faced competitive pressures that required rapid innovation. However, their analytics maturity and business complexity differed.

The institution that placed its analytics teams within its hub had a much more complex business model and relatively low AI maturity. Its existing AI expertise was primarily in risk management. By concentrating its data scientists, engineers, and many other gray-area experts within the hub, the company ensured that all business units and functions could rapidly access essential know-how when needed.

The second financial institution had a much simpler business model that involved specializing in fewer financial services. This bank also had substantial AI experience and expertise. So it was able to decentralize its AI talent, embedding many of its gray-area analytics, strategy, and technology experts within the business-unit spokes.

As these examples suggest, some art is involved in deciding where responsibilities should live. Every organization has distinctive capabilities and competitive pressures, and the three key factors must be considered in totality, rather than individually. For example, an organization might have high business complexity and need very rapid innovation (suggesting it should shift more responsibilities to the hub) but also have very mature AI capabilities (suggesting it should move them to the spokes). Its leaders would have to weigh the relative importance of all three factors to determine where, on balance, talent would most effectively be deployed. Talent levels (an element of AI maturity) often have an outsize influence on the decision. Does the organization have enough data experts that, if it moved them permanently to the spokes, it could still fill the needs of all business units, functions, and geographies? If not, it would probably be better to house them in the hub and share them throughout the organization.

Oversight and execution

While the distribution of AI and analytics responsibilities varies from one organization to the next, those that scale up AI have two things in common:

A governing coalition of business, IT, and analytics leaders. Fully integrating AI is a long journey. Creating a joint task force to oversee it will ensure that the three functions collaborate and share accountability, regardless of how roles and responsibilities are divided. This group, which is often convened by the chief analytics officer, can also be instrumental in building momentum for AI initiatives, especially early on.

Assignment-based execution teams. Organizations that scale up AI are twice as likely as their peers to set up interdisciplinary teams within the spokes. Such teams bring a diversity of perspectives together and solicit input from frontline staff as they build, deploy, and monitor new AI capabilities. The teams are usually assembled at the outset of each initiative and draw skills from both the hub and the spokes. Each generally includes the manager in charge of the new AI tool’s success (the “product owner”), translators, data architects, engineers and scientists, designers, visualization specialists, and business analysts. These teams address implementation issues early and extract value faster.

For example, at the Asian Pacific retailer that was using AI to optimize store space and inventory placement, an interdisciplinary execution team helped break down walls between merchandisers (who determined how items would be displayed in stores) and buyers (who chose the range of products). Previously, each group had worked independently, with the buyers altering the AI recommendations as they saw fit. That led to a mismatch between inventory purchased and space available. By inviting both groups to collaborate on the further development of the AI tool, the team created a more effective model that provided a range of weighted options to the buyers, who could then choose the best ones with input from the merchandisers. At the end of the process, gross margins on each product category that had applied the tool increased by 4% to 7%.

Educating Everyone

To ensure the adoption of AI, companies need to educate everyone, from the top leaders down. To this end, some are launching internal AI academies, which typically incorporate classroom work (online or in person), workshops, on-the-job training, and even site visits to experienced industry peers. Most academies initially hire external faculty to write the curricula and deliver training, but they also usually put in place processes to build in-house capabilities.

Every academy is different, but most offer four broad types of instruction:

Leadership

Most academies strive to give senior executives and business-unit leaders a high-level understanding of how AI works and ways to identify and prioritize AI opportunities. They also provide discussions of the impact on workers’ roles, barriers to adoption, and talent development, and offer guidance on instilling the underlying cultural changes required.

Analytics

Here the focus is on constantly sharpening the hard and soft skills of data scientists, engineers, architects, and other employees who are responsible for data analytics, data governance, and building the AI solutions.

Translator

Analytics translators often come from the business staff and need fundamental technical training—for instance, in how to apply analytical approaches to business problems and develop AI use cases. Their instruction may include online tutorials, hands-on experience shadowing veteran translators, and a final “exam” in which they must successfully implement an AI initiative.

End user

Frontline workers may need only a general introduction to new AI tools, followed by on-the-job training and coaching in how to use them. Strategic decision makers, such as marketers and finance staff, may require higher-level training sessions that incorporate real business scenarios in which new tools improve decisions about, say, product launches.

Reinforcing the Change

Most AI transformations take 18 to 36 months to complete, with some taking as long as five years. To prevent them from losing momentum, leaders need to do four things:

Walk the talk

Role modeling is essential. For starters, leaders can demonstrate their commitment to AI by attending academy training.

But they also must actively encourage new ways of working. AI requires experimentation, and often early iterations don’t work out as planned. When that happens, leaders should highlight what was learned from the pilots. That will help encourage appropriate risk taking.

The most effective role models we’ve seen are humble. They ask questions and reinforce the value of diverse perspectives. They regularly meet with staff to discuss the data, asking questions such as “How often are we right?” and “What data do we have to support today’s decision?”

The CEO of one specialty retailer we know is a good example. At every meeting she goes to, she invites attendees to share their experience and opinions—and offers hers last. She also makes time to meet with business and analytics employees every few weeks to see what they’ve done—whether it’s launching a new pilot or scaling up an existing one.

Make businesses accountable

It’s not uncommon to see analytics staff made the owners of AI products. However, because analytics are simply a means of solving business problems, it’s the business units that must lead projects and be responsible for their success. Ownership ought to be assigned to someone from the relevant business, who should map out roles and guide a project from start to finish. Sometimes organizations assign different owners at different points in the development life cycle (for instance, for proof of value, deployment, and scaling). That’s a mistake too, because it can result in loose ends or missed opportunities.

A scorecard that captures project performance metrics for all stakeholders is an excellent way to align the goals of analytics and business teams. One airline company, for instance, used a shared scorecard to measure rate of adoption, speed to full capability, and business outcomes for an AI solution that optimized pricing and booking.

Track and facilitate adoption

Comparing the results of decisions made with and without AI can encourage employees to use it. For example, at one commodity company, traders learned that their non-AI-supported forecasts were typically right only half the time—no better than guessing. That discovery made them more open to AI tools for improved forecasting.
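
A comparison of this kind comes down to simple arithmetic: score each set of forecasts against what actually happened and compare the hit rates. The sketch below assumes directional ("up" or "down") forecasts. Every data point in it is invented for illustration, including the model's calls, and none of it is a result from the commodity company.

```python
def hit_rate(forecasts, outcomes):
    """Share of forecasts that matched the observed outcome."""
    hits = sum(f == o for f, o in zip(forecasts, outcomes))
    return hits / len(outcomes)


# Hypothetical data: eight periods of observed price direction,
# with made-up unaided and AI-supported forecasts for the same periods.
outcomes     = ["up", "down", "up",   "up", "down", "down", "up", "down"]
trader_calls = ["up", "up",   "down", "up", "down", "up",   "up", "up"]
model_calls  = ["up", "down", "up",   "up", "up",   "down", "up", "down"]

print(f"Unaided forecasts:      {hit_rate(trader_calls, outcomes):.1%}")
print(f"AI-supported forecasts: {hit_rate(model_calls, outcomes):.1%}")
```

Putting both hit rates next to a coin-flip baseline of 50% gives employees a concrete reference point for the kind of discovery the traders made.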

Teams that monitor implementation can correct course as needed. At one North American retailer, an AI project owner saw store managers struggling to incorporate a pilot’s output into their tracking of store performance results. The AI’s user interface was difficult to navigate, and the AI insights generated weren’t integrated into the dashboards the managers relied on every day to make decisions. To fix the issue, the AI team simplified the interface and reconfigured the output so that the new data stream appeared in the dashboard.

Provide incentives for change

Acknowledgment inspires employees for the long haul. The CEO of the specialty retailer starts meetings by shining a spotlight on an employee (such as a product manager, a data scientist, or a frontline worker) who has helped make the company’s AI program a success. At the large retail conglomerate, the CEO created new roles for top performers who participated in the AI transformation. For instance, he promoted the category manager who helped test the optimization solution during its pilot to lead its rollout across stores—visibly demonstrating the career impact that embracing AI could have.

Finally, firms have to check that employees’ incentives are truly aligned with AI use. This was not the case at a brick-and-mortar retailer that had developed an AI model to optimize discount pricing so that it could clear out old stock. The model revealed that sometimes it was more profitable to dispose of old stock than to sell it at a discount, but the store personnel had incentives to sell everything, even at steep discounts. Because the AI recommendations contradicted their standard, rewarded practice, employees became suspicious of the tool and ignored it. Since their sales incentives were also closely tied to contracts and couldn’t easily be changed, the organization ultimately updated the AI model to recognize the trade-off between profits and the incentives, which helped drive user adoption and lifted the bottom line.

The actions that promote scale in AI create a virtuous circle. The move from functional to interdisciplinary teams initially brings together the diverse skills and perspectives and the user input needed to build effective tools. In time, workers across the organization absorb new collaborative practices. As they work more closely with colleagues in other functions and geographies, employees begin to think bigger—they move from trying to solve discrete problems to completely reimagining business and operating models. The speed of innovation picks up as the rest of the organization begins to adopt the test-and-learn approaches that successfully propelled the pilots.

As AI tools spread throughout the organization, those closest to the action become increasingly able to make decisions once made by those above them, flattening organizational hierarchies. That encourages further collaboration and even bigger thinking.

The ways AI can be used to augment decision making keep expanding. New applications will create fundamental and sometimes difficult changes in workflows, roles, and culture, which leaders will need to shepherd their organizations through carefully. Companies that excel at implementing AI throughout the organization will find themselves at a great advantage in a world where humans and machines working together outperform either humans or machines working on their own.

Originally published in July–August 2019. Reprint R1904C