The mere mention of multithreading can strike fear in the hearts of some programmers. For others, it fires them up for a good challenge. No matter how you react to the subject, multithreading is an area riddled with minefields. Unless you show due diligence, a threading bug can jump up and bite you—and bite you in a place where you cannot seem to find it easily. Threading bugs can be among the hardest to find and they are hard enough to find on a single-processor machine,; add more processors and cores, and the bugs can become even harder to find. In fact, some threading bugs don't even rear their ugly head until you run your application on a multiprocessor machine, because that's the only way to get true concurrent multithreading. For this reason, I always advise anyone developing a multithreaded application to test, and test often, on a multiprocessor machine. Otherwise, you run the risk of sending your product out the door with lurking threading bugs.

I remember it as if it were a meal ago: At a former employer of mine, we were soon to ship our gold master to the manufacturer and have hundreds of thousands of disks made, and then someone finally happened to test the application on a multiprocessor machine in the lab. Back in those days, multiprocessor desktops were few and far between. Needless to say, a great lesson was learned across the entire team, and a nasty bug was sniped before it got out the door.

Even though threading environments have presented many challenges and hurdles over the years, and will continue to do so, the CLR and the .NET base class library mitigate many of these risks and provide a clean model to build upon. It's still true that the greatest challenge of creating high-quality threaded code is that of synchronization. The .NET Framework makes it easier than ever to create new threads or utilize a system-managed pool of threads, and it provides intuitive objects that help you synchronize those threads with each other. However, it's still your duty to make sure you use those objects properly.

Managed threads are virtual threads in the sense that they don't necessarily map one-to-one to OS threads. Managed threads do actually run concurrently, but it would be erroneous to assume that the OS thread currently running a particular managed thread's code will only run managed code for that thread only. In fact, an OS thread could run managed code for multiple managed threads in multiple application domains in an implementation of the CLR. The bottom line is, don't make any assumptions about the correlation between OS threads and managed threads. If you burrow down to the OS thread using the P/Invoke layer to make direct Win32 calls, be sure that you only use platform thread information for debugging purposes and base no program logic on it at all. Otherwise, you'll end up with something that may break as soon as you run it on another CLR implementation.

It would be erroneous to conclude that multithreaded programming is just about creating extra threads to do something that can take a long time to do. Sure, that's part of the puzzle. And when you create a desktop application, you definitely want to use threading techniques to ensure that the UI stays responsive during a long computational operation, because we all know what impatient users tend to do when desktop applications become unresponsive: They kill them! But it's important to realize that there is much more to the threading puzzle than creating an extra thread to run some random code. That task is actually quite easy in the C# environment, so let's take a look and see how easy it really is.

As I said, creating a thread is very simple. Take a look at the following example to see what I mean:

using System;

using System.Threading;

public class EntryPoint

{

private static void ThreadFunc() {

Console.WriteLine( "Hello from new thread {0}!",

Thread.CurrentThread.GetHashCode() );

}

static void Main() {

// Create the new thread.

Thread newThread =

new Thread( new ThreadStart(EntryPoint.ThreadFunc) );

Console.WriteLine( "Main Thread is {0}",

Thread.CurrentThread.ManagedThreadId );

Console.WriteLine( "Starting new thread..." );

// Start the new thread.

newThread.Start();

// Wait for new thread to finish.

newThread.Join();

Console.WriteLine( "New thread has finished" );

}

}All you have to do is create a new System.Thread object and pass an instance of the ThreadStart delegate as the parameter to the constructor. The ThreadStart delegate references a method that takes no parameters and returns no parameters. In the previous example, I chose to use the static ThreadFunc method as the start of execution for the new thread. I could have just as easily chosen to use any other method visible to the code creating the thread, as long as it neither accepted nor returned parameters. Notice that the code also outputs the managed thread identifier in two different ways to demonstrate how you can identify threads in the managed world. In the unmanaged C++ world, you would use the thread ID obtained via the Win32 API. In the managed world of .NET 1.1, you instead use the value returned by GetHashCode. As long as this thread is alive, it is guaranteed never to collide with any other thread in any application domain of this process. The thread hash code is not globally unique on the entire system. Starting with .NET 2.0, you can get the managed thread id by accessing the Thread.ManagedThreadId property. Also, you can see how you can get a reference to the current thread by accessing the static property Thread.CurrentThread. Finally, notice the call to the Join method on the newThread object. In native Win32 code, you normally wait for a thread to finish by waiting on its handle. When the thread finishes running, the operating system signals its handle and the wait completes. The Thread.Join method encapsulates this functionality. In this case, the code waits forever for the thread to finish. Thread.Join also provides a few overloads that allow you to specify a timeout period on the wait.

Note

There is some confusion in the MSDN documentation regarding whether one should call Thread.GetHashCode or access the Thread.ManagedThreadId property. If you read the MSDN documentation carefully, the summary page for System.Thread indicates that GetHashCode is what you should use to obtain the unique managed thread identifier during the duration the thread is alive. But if you look at the documentation for Thread.GetHashCode, it states that you should use the ManagedThreadId property instead. By debugging into the example application above using windbg in the Debugging Tools for Windows package in concert with the sos.dll debugger extension, I was able to determine that the results of GetHashCode and the ManagedThreadId property accessor both harvest the value from the same location within an internal structure of the Thread object instance. Arguably, ManagedThreadId makes the code easier to read because it's more obvious what it is. Additionally, ManagedThreadId was introduced in .NET 2.0 and if you are targeting .NET 1.1, then you must use GetHashCode. I expect this confusion in the MSDN documentation to go away at some point soon, as it is a documentation bug. You should always rely upon ManagedThreadId even though GetHashCode returns the same value for the purposes of backwards compatibility. Throughout the rest of this chapter, I will be using ManagedThreadId rather than GetHashCode.

When you create a separate thread, it is subject to the rules of the thread scheduler on the system, just like any other thread. However, sometimes you need to create threads that carry a little more or a little less weight when the scheduler algorithm is deciding which thread to execute next. You can control the priority of a managed thread via the Thread.Priority property. You can adjust this value as necessary during execution of the thread. It's actually a rare occurrence that you'll need to adjust this value. All threads start out with the priority of Normal from the ThreadPriority enumeration.

In the managed environment, the System.Thread class nicely encapsulates all of the operations that you may perform on a thread. If you have some sort of state data that you must transmit to the new thread so that it has that data available when it starts execution, you can simply create a helper object and initialize the ThreadStart delegate to point to an instance method on that object. Yet again, you solve another problem by introducing another level of indirection in the form of a class. Suppose you have a system where you fill multiple queues with tasks, and then at some point you want to create a new thread to process the items in a specific queue that you pass into it. The following code demonstrates one way you can achieve such a goal:

using System;

using System.Threading;

using System.Collections;

public class QueueProcessor

{public QueueProcessor( Queue theQueue ) {

this.theQueue = theQueue;

theThread = new Thread( new ThreadStart(this.ThreadFunc) );

}

private Queue theQueue;

private Thread theThread;

public Thread TheThread {

get {

return theThread;

}

}

public void BeginProcessData() {

theThread.Start();

}

public void EndProcessData() {

theThread.Join();

}

private void ThreadFunc() {

// ... drain theQueue here.

}

}

public class EntryPoint

{

static void Main() {

Queue queue1 = new Queue();

Queue queue2 = new Queue();

// ... operations to fill the queues with data.

// Process each queue in a separate thread.

QueueProcessor proc1 = new QueueProcessor( queue1 );

proc1.BeginProcessData();

QueueProcessor proc2 = new QueueProcessor( queue2 );

proc2.BeginProcessData();

// ... do some other work in the meantime.

// Wait for the work to finish.

proc1.EndProcessData();

proc2.EndProcessData();

}

}There are some potential synchronization problems here if anyone were to access the queues after the new threads begin their work. But I'll save synchronization issues until later in the chapter. The class adding the extra level of indirection is the QueueProcessor class. It cleanly encapsulates the worker thread and exposes a lightweight interface to get the work done. In this example, the main thread waits for the work to finish by calling EndProcessData. That method merely calls Join on the encapsulated thread. However, had you required some sort of status regarding the completion of the work, the EndProcessData method could have returned it to you.

For those of you familiar with and accustomed to starting native threads in Win32, you know that you can provide a thread function that accepts a single parameter through which you can pass the start-up state for the thread. In System.Threading.Thread, there is an overloaded version of the constructor that allows you to provide it with a delegate of type ParameterizedThreadStart which is a delegate that accepts a single object reference and returns void. Note however, that because the parameter passed in is an object instance, you must cast it to the expected type in order to use it and that may expose you to run-time cast exceptions if you are not careful. Using the constructor that accepts a ParameterizedThreadStart delegate, the example above would then become the following:

using System;

using System.Threading;

using System.Collections;

public class EntryPoint

{

static void Main() {

Queue queue1 = new Queue();

Queue queue2 = new Queue();

// ... operations to fill the queues with data.

// Process each queue in a separate threda.

Thread proc1 = new Thread( EntryPoint.ThreadFunc );

proc1.Start( queue1 );

Thread proc2 = new Thread( EntryPoint.ThreadFunc );

proc2.Start( queue2 );

// ... do some other work in the meantime.

// Wait for the work to finish.

proc1.Join();

proc2.Join();

}

static private void ThreadFunc( object obj ) {

// We must cast the incoming object into a Queue.

Queue theQueue = (Queue) obj;

// ... drain the queue

}

}In a later section titled "Asynchronous Method Calls," where I discuss asynchronous I/O and thread pools, you'll see that the BeginProcessData/EndProcessData is a common pattern of asynchronous processing used throughout the .NET Framework. The BeginMethod/EndMethod pattern of asynchronous programming in the .NET Framework is similar to the IOU pattern described by Allan Vermeulen in his article, "An Asynchronous Design Pattern" (Dr. Dobb's Journal, June 1996). In that pattern, a function is called to start the asynchronous operation and in return, the caller is given an "I owe you" (IOU) object. Later, the caller can use that object to retrieve the result of the asynchronous operation. The beauty of this pattern is that it completely decouples the caller wanting to get the asynchronous work done from the mechanism used to actually do the work. This pattern is used extensively in the .NET Framework, and I suggest that you employ it for asynchronous method calls, as it will give your clients a familiar look and feel.

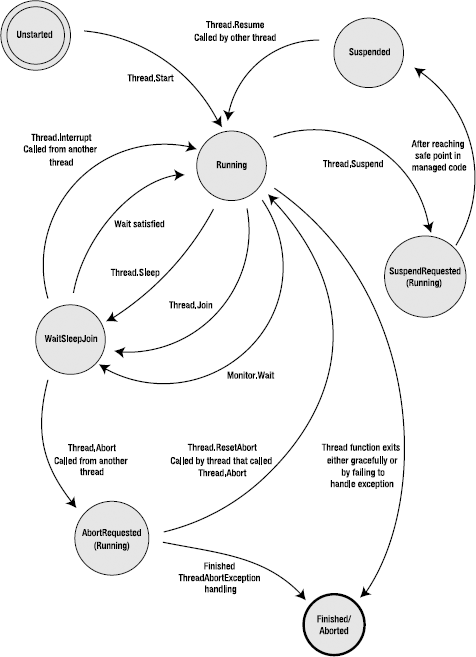

The states of a managed thread are well defined by the runtime. Although the state transitions may seem confusing at times, they aren't much more confusing than the state transitions of an OS thread. There are other considerations to address in the managed world, so the allowable states and state transitions are naturally more complex. Figure 12-1 shows a state diagram for managed threads.

The states in the state diagram are based upon the states defined by the CLR for managed threads, as defined in the ThreadState enumeration. Every managed thread starts life in the Unstarted state. As soon as you call Start on the new thread, it enters the Running state. OS threads that enter the managed runtime start immediately in the Running state, thus bypassing the Unstarted state. Notice that there is no way to get back to the Unstarted state. The dominant state in the state diagram is the Running state. This is the state of the thread when it is executing code normally, including any exception handling and execution of any finally blocks. If the main thread method, passed in via an instance of the ThreadStart delegate during thread creation, finishes normally, then the thread enters the Finished state, as shown in Figure 12-1. Once in this state, the thread is completely dead and will never wake up again. If all of the foreground threads in your process enter the Finished state, the process will exit normally.

The three states mentioned previously cover the basics of managed thread state transition, assuming you have a thread that simply executes some code and exits. Once you start to add synchronization constructs in the execution path or wish to control the state of the thread, whether from another thread or the current thread, things become more complicated.

For example, suppose you're writing code for a new thread and you want to put it to sleep for a while. You would call Thread.Sleep and provide it a timeout, such as how many milliseconds to sleep. This is similar to how you put an OS thread to sleep. When you call Sleep, the thread enters the WaitSleepJoin state, where its execution is suspended for the duration of the timeout. Once the sleep expires, the thread reenters the running state.

Synchronization operations can also put the thread into the WaitSleepJoin state. As may be obvious by the name of the state, calling Thread.Join on another thread in order to wait for it to finish puts the calling thread into the WaitSleepJoin state. Calling Monitor.Wait also enters the WaitSleepJoin state. Now you know the three factors that went into naming the state in the first place. You can use other synchronization methods with a thread, and I'll cover those later in the chapter in the "Synchronizing Work Between Threads" section. As before, once the thread's wait requirements have been met, it reenters the Running state and continues execution normally.

It's important to note that any time the thread is sitting in the WaitSleepJoin state, it can be forcefully pushed back into the Running state when another thread calls Thread.Interrupt on the waiting thread. Win32 programmers will recognize that this behavior is similar to alertable wait states in the operating system. Beware that when a thread calls Thread.Interrupt on another thread, the interrupted thread receives a thrown ThreadInterruptedException. So, even though the interrupted thread reenters the Running state, it won't stay there for long unless an appropriate exception-handling frame is in place. Otherwise, the thread will soon enter the Finished state once the exception boils its way up to the top of the thread's stack unhandled.

Another way that the thread state can transition out of the WaitSleepJoin state is when another thread calls Thread.Abort on the current thread. Technically, a thread could call Abort on itself. However, I consider that a rare execution flow and have not shown it in Figure 12-1. Once Thread.Abort is called, the thread enters the AbortRequested state. This state is actually a form of a running state, because the thread is thrown a ThreadAbortException and must handle the exception. However, as I explain later on, the managed thread treats this exception in a special way, such that the next state will be the final Aborted state unless the thread that called Thread.Abort manages to call Thread.ResetAbort before that happens. Incidentally, there's nothing to stop the thread that is aborting from calling ResetAbort. However, you must refrain from doing such a thing because it could create some ill behavior. For example, if a foreground thread can never be aborted because it keeps resetting the abort, the process will never exit.

Note

Beginning in .NET 2.0, the host has the ability to forcefully kill threads during application domain shutdown by using what's called a rude thread abort. In such a situation, it is impossible for the thread to keep itself alive by using Thread.ResetAbort.

Finally, a running thread enters the SuspendRequested state after calling Thread.Suspend on itself, or after another thread calls Suspend on it. Very shortly after that, the thread automatically enters the Suspended state. Once a thread enters the SuspendRequested state, there is no way to keep it from eventually entering the Suspended state. Later on, in the section titled "Halting Threads and Waking Sleeping Threads," I discuss why this intermediate state is needed when a thread is suspended. But for now, it's important to realize that the SuspendRequested state is a form of a running state in the sense that it is still executing managed code.

That wraps up the big picture regarding managed-thread state transitions. Be sure to refer to Figure 12-1 throughout the rest of the chapter when reading about topics that affect the state of the thread.

When you call Thread.Abort, the thread in question eventually receives a ThreadAbortException. So, naturally, in order to handle this situation gracefully, you must process the ThreadAbortException if there is anything specific you must do when the thread is being aborted. There is also an overload of Abort that accepts an arbitrary object reference, which is then encapsulated in the subsequent ThreadAbortException. This allows the code that is aborting the thread to pass some sort of context information to the ThreadAbortException handler, such as a reason why Abort was called in the first place.

The CLR doesn't deliver a ThreadAbortException unless the thread is running within the managed context. If your thread has called out to a native function via the P/Invoke layer, and that function takes a long time to complete, then a thread abort on that thread is pended until execution returns to managed space.

Note

In .NET 2.0 and later, if a finally block is executing, delivery of a ThreadAbortException is pended until execution leaves the finally block. In .NET 1.x, the abort exception is delivered anyway.

Calling Abort on a thread doesn't forcefully terminate the thread, so if you need to wait until the thread is truly finished executing, you must call Join on that thread to wait until all of the code in the ThreadAbortException exception handler is finished. During such a wait, it is wise to wait with a timeout so that you don't get stuck waiting forever for a thread to finish cleaning up after itself. Even though the code in the exception handler should follow other exception-handler coding guidelines, it's still possible for the handler to take a long time or, gasp, forever to complete its work. Let's take a look at a ThreadAbortException handler and see how this works:

using System; using System.Threading; public class EntryPoint

{

private static void ThreadFunc() {

ulong counter = 0;

while( true ) {

try {

Console.WriteLine( "{0}", counter++ );

}

catch( ThreadAbortException ) {

// Attempt to swallow the exception and continue.

Console.WriteLine("Abort! " );

}

}

}

static void Main() {

Thread newThread =

new Thread( new ThreadStart(EntryPoint.ThreadFunc) );

newThread.Start();

Thread.Sleep( 2000 );

// Abort the thread.

newThread.Abort();

// Wait for thread to finish.

newThread.Join();

}

}From a cursory glance at the code, it would appear that the call to Join on the newThread instance will block forever. However, that's not what happens. It would appear that because the ThreadAbortException is handled within the loop of the thread function, the exception will be swallowed and the loop will continue no matter how many times the main thread attempts to abort the thread. As it turns out, the ThreadAbortException thrown via the Thread.Abort method is special. When your thread finishes processing the abort exception, the runtime implicitly rethrows it at the end of your exception handler. It's the same as if you had rethrown the exception yourself. Therefore, any outer exception handlers or finally blocks will still execute normally. In the example, the call to Join won't be waiting forever as initially expected.

There is a way to keep the system from rethrowing the ThreadAbortException, by calling the Thread.ResetAbort static method. However, the general recommendation is that you only call ResetAbort from the thread that called Abort. This would require some sort of tricky intrathread communication technique if you wanted to cause this to happen from within the abort handler of the thread being aborted. If you find yourself trying to implement such a technique to abort a thread abort, then maybe it's time to reassess the design of the system in the first place. In other words, bad design alert!

Even though the runtime provides a much cleaner mechanism for aborting threads such that you can inform interested parties when the thread is aborting, you still have to implement a ThreadAbortException handler properly.

Note

The fact that ThreadAbortException instances can be thrown asynchronously into a random managed thread makes it tricky to create robust exception-safe code. Be sure to read the "Constrained Execution Regions" section in Chapter 7.

Similar to native threads, there are mechanisms in place for putting a thread to sleep for a defined period of time or actually halting execution until it is explicitly released again. If a thread just wants to suspend itself for a prescribed period of time, it may call the static method Thread.Sleep to enter the WaitSleepJoin state. The only parameter to the Sleep method is the number of milliseconds the thread should sleep. When called, this method causes the thread to relinquish the rest of its time slice with the processor and go to sleep. After the time has expired, the thread may be considered for scheduling again. Naturally, the time duration you pass to Sleep is reasonably accurate, but not exact. That's because, at the end of the duration, the thread is not immediately given time on the processor. There could be other, higher-priority threads in the queue before it. Therefore, using Sleep to synchronize execution between two threads is strongly discouraged.

Note

If you find yourself solving synchronization problems by introducing calls to Sleep within your code, you're not solving the problems at all. You're merely covering them up even more.

There is even a special value, Timeout.Infinite, that you can pass to Sleep to make the thread go to sleep forever. You can wake a sleeping thread by interrupting it via the Thread.Interrupt instance method. Interrupt is similar to Abort in that it wakes up the target thread and throws a ThreadInterruptedException. Therefore, if your thread function is not equipped to handle the exception, the exception will percolate all the way up the call stack until the runtime ends the thread's execution because of an unhandled exception. If you really do want to implement an alertable sleep state using this mechanism, then to be safe, you should make your call to Sleep within a try block and catch the ThreadInterruptException. Unlike the ThreadAbortException, the ThreadInterruptException is not automatically rethrown by the runtime at the end of the exception handler. Note, however, that you should never have to implement an alertable sleep mechanism because the Monitor type, introduced shortly, provides a more efficient way to achieve the same goal.

Note

Another special parameter value for Thread.Sleep is 0. If you pass 0, Thread.Sleep will cause the thread to relinquish the rest of its time slice. The thread will then be allowed to run again once the system thread scheduler comes back around to it. If you are running on the .NET 4.0 platform or later, you should use the new method Thread.Yield instead.

Another way to put a thread to sleep for an indefinite time is via the Thread.Suspend instance method. Calling Suspend will suspend execution of the thread until it is explicitly resumed. You can resume the thread by calling the Resume instance method or Interrupt. However, with Interrupt, the target thread needs to have a proper exception handler around the Suspend call; otherwise, the thread could exit. Technically, calling Abort on the thread will resume the thread, but only to send it a ThreadAbortException ultimately causing the thread to exit. Keep in mind that any thread with sufficient privileges can call Suspend on a thread—even the current thread can call Suspend. If the current thread calls Suspend, it blocks at that point, waiting for the next Resume call.

It's important to note that when you call Suspend on a thread, the thread is not suspended immediately in its tracks. Instead, the thread is allowed to execute to what's called a safe point. Once it reaches the safe point, the thread is suspended. A safe point is a place in the managed code where it is safe to allow garbage collection. For instance, if the CLR determines it is time to perform a garbage collection, it must suspend all threads temporarily while it performs the collection. However, as you can imagine, if a thread is in the middle of a multi-instruction operation that accesses an object on the heap, and then the GC comes along and moves that object to a different place in system memory, only bad things will happen. For that reason, when the GC suspends threads for collection, it must wait until they all have reached a safe point where it is OK to move things around on the heap. For this reason, the call to Suspend allows the thread to reach a safe point before actually suspending it. I also want to stress that you should never use Suspend and Resume to orchestrate thread synchronization. Of course, the fact that the system allows the thread to continue running until it reaches a safe point is a good enough reason not to rely on this mechanism, but it's also a bad design practice.

In this chapter's previous examples, I've used the Join method to wait for a specific thread to exit. In fact, that is exactly what it is used for. In an unmanaged Win32 application, you may have been accustomed to waiting for the thread handle to become signaled to indicate the completion of the thread. The Join method is the same mechanism indeed. The name of the method is suggestive of the fact that you're joining the current thread's execution path to that of the thread you're calling Join on, and you cannot proceed until your joined thread arrives.

Naturally, you'll want to avoid calling Join on the current thread. The effect is similar to calling Suspend from the current thread. The thread is blocked until it is interrupted. Even when a thread is blocked from calling Join, it can be awoken via a call to Interrupt or Abort as described in the previous section.

Sometimes, you'll want to call Join to wait for another thread to complete, but you won't want to get stuck waiting forever. Join offers overloads that allow you to designate the amount of time you're willing to wait. Those overloads return a Boolean value that returns true to indicate that the thread actually terminated, or false to indicate that the timeout expired.

When you create a thread in the .NET managed environment, it exists as a foreground thread by default. This means that the managed execution environment, and thus the process, will remain alive as long as the thread is alive. Consider the following code:

using System;

using System.Threading;

public class EntryPoint

{

private static void ThreadFunc1() {Thread.Sleep( 5000 );

Console.WriteLine( "Exiting extra thread" );

}

static void Main() {

Thread thread1 =

new Thread( new ThreadStart(EntryPoint.ThreadFunc1) );

thread1.Start();

Console.WriteLine( "Exiting main thread" );

}

}If you run this code, you'll see that Main exits before the extra thread finishes, as shown in the following output.

Exiting main thread Exiting extra thread

C++ developers will find that very different from the behavior they're used to, where the process normally terminates once the main routine in the application exits.

At times, you might want the process to terminate when the main thread finishes, even when there are extra threads in the background. You can accomplish this in the runtime by turning the extra thread into a background thread by setting the Thread.IsBackground property to true. You'll want to consider doing this for threads that do stuff such as listen on a port for network connections, or some other background task such as that. Keep in mind, though, that you always want to make sure that your threads get a proper chance to clean up if they need to before they are shut down. When a background thread is shut down as the process exits, it doesn't receive an exception of any type as it does when someone calls Interrupt or Abort. So, if the thread has persistent data in some sort of half-baked state, shutting down the process will definitely not be good for that persistent data. Therefore, when creating background threads, make sure they are coded so that they can be terminated rudely at any point without any adverse effects. You can also implement some sort of mechanism to notify the thread that the process is to shut down soon. Creating such a mechanism will prove messy, because the main thread will need to wait a reasonable amount of time after firing the notification for the extra thread to do its cleanup work. At that point, it almost becomes reasonable to turn the thread back into a foreground thread.

You can create thread-local storage in the managed environment. Depending on your application, it may be necessary for you to have a static field of a class that is unique for each thread that the class is used in. Doing so is trivially easy in the majority of the cases in C#. If you have a static field that must be thread-relative, simply adorn it with the ThreadStaticAttribute attribute. Once you do that, the field will be initialized for each thread that accesses it. Under the covers, each thread is given its own thread-relative location to save the value or reference. However, when using references to objects, be careful with your assumptions about object creation. The following code shows a pitfall to avoid:

using System; using System.Threading;

public class TLSClass

{

public TLSClass() {

Console.WriteLine( "Creating TLSClass" );

}

}

public class TLSFieldClass

{

[ThreadStatic]

public static TLSClass tlsdata = new TLSClass();

}

public class EntryPoint

{

private static void ThreadFunc() {

Console.WriteLine( "Thread {0} starting...",

Thread.CurrentThread.ManagedThreadId );

Console.WriteLine( "tlsdata for this thread is "{0}"",

TLSFieldClass.tlsdata );

Console.WriteLine( "Thread {0} exiting",

Thread.CurrentThread.ManagedThreadId );

}

static void Main() {

Thread thread1 =

new Thread( new ThreadStart(EntryPoint.ThreadFunc) );

Thread thread2 =

new Thread( new ThreadStart(EntryPoint.ThreadFunc) );

thread1.Start();

thread2.Start();

}

}This code creates two threads that access a thread-relative static member of TLSFieldClass. To illustrate the trap, I've made that thread-specific slot of type TLSClass, and the code attempts to initialize that slot with an initializer in the class definition that simply calls new on the default constructor of the class. Now, look how surprising the output is:

Thread 3 starting...

Thread 4 starting... Creating TLSClass tlsdata for this thread is "TLSClass" Thread 3 exiting

tlsdata for this thread is ""

Thread 4 exiting

Warning

Always remember that ordering of execution in multithreaded programs is never guaranteed unless you employ specific synchronization mechanisms. This output was generated on a single-processor system. If you run the same application on a multiprocessor system, you'll likely see that the output executes in a completely different order. Nevertheless, the purpose of the example does not change.

The important thing to take note of is that the constructor for TLSClass was only called once. The constructor was called for the first thread, but not for the second thread. For the second thread, the field is initialized to null. tlsdata is static, therefore its initialization is actually done at the time the static constructor for the TLSFieldClass is called. However, static constructors can only be called once per class per application domain. For this reason, you want to avoid assigning thread-relative slots at the point of declaration. That way, they will always be assigned to their default values. For reference types, that means null, and for value types, it means the equivalent of setting all of the bits in the value's underlying storage to 0. Then, upon first access to the thread-specific slot, you can test the value for null and create an instance as appropriate. The cleanest way to achieve this is always to access the thread-local slot via a static property.

As an added note, don't think that you can outsmart the compiler by adding a level of indirection, such as assigning the thread-relative slot based on the return value of a static method. You'll find that your static method will only get called once. If the CLR were to "fix" this problem for you, it would undoubtedly be less efficient because it would have to test whether the field is being accessed for the first time and call the initialization code if that is the case. If you think about it, you'll find that task is a lot harder than it sounds, because it will be impossible to do the right thing 100% of the time.

There is another way to use thread-local storage that doesn't involve decorating a static variable with an attribute. You can allocate thread-specific storage dynamically by using either of the Thread.AllocateDataSlot or Thread.AllocateNamedDataSlot methods. You'll want to use these methods if you won't know how many thread-specific slots you'll need to allocate until runtime. Otherwise, it's generally much easier to use the static field method. When you call AllocateDataSlot, a new slot is allocated in all threads to hold a reference to an instance of type System.Object. The method returns a handle of sorts in the form of a LocalDataStoreSlot object instance. You can access this location using the GetData and SetData methods on the thread. Let's look at a modification of the previous example:

using System;

using System.Threading;

public class TLSClass

{

static TLSClass() {

tlsSlot = Thread.AllocateDataSlot();

}

private TLSClass() {Console.WriteLine( "Creating TLSClass" );

}

public static TLSClass TlsSlot {

get {

Object obj = Thread.GetData( tlsSlot );

if( obj == null ) {

obj = new TLSClass();

Thread.SetData( tlsSlot, obj );

}

return (TLSClass) obj;

}

}

private static LocalDataStoreSlot tlsSlot = null;

}

public class EntryPoint

{

private static void ThreadFunc() {

Console.WriteLine( "Thread {0} starting...",

Thread.CurrentThread.ManagedThreadId );

Console.WriteLine( "tlsdata for this thread is "{0}"",

TLSClass.TlsSlot );

Console.WriteLine( "Thread {0} exiting",

Thread.CurrentThread.ManagedThreadId );

}

static void Main() {

Thread thread1 =

new Thread( new ThreadStart(EntryPoint.ThreadFunc) );

Thread thread2 =

new Thread( new ThreadStart(EntryPoint.ThreadFunc) );

thread1.Start();

thread2.Start();

}

}As you can see, using dynamic slots is a little more involved than using the static field method. However, it does provide some extra flexibility. Notice that the slot is allocated in the type initializer, which is the static constructor you see in the example. That way, the slot is allocated for all threads at the point where the runtime initializes the type for use. Notice that I'm testing the slot for null in the property accessor of the TLSClass. When you allocate the slot using AllocateDataSlot, the slot is initialized to null for each thread.

You may find it convenient to access your thread-specific storage via a string name rather than with a reference to a LocalDataStoreSlot instance. You can do that if you create your TLS slot using Thread.AllocateNamedDataSlot. However, you must be careful to use a reasonably unique name so that use of that same name elsewhere in the code won't cause adverse effects. You may consider naming your slot using a string representation of a GUID, so that you can reasonably assume that nobody will attempt to create one with the same name. When you need to access the slot, you can call GetNamedDataSlot, which will simply translate your string into a LocalDataStoreSlot instance. I urge you to read the MSDN documentation regarding named thread-local storage slots to get more details.

Most of this will be familiar to those developers who have used thread-local storage in Win32. There is one improvement, though: Because managed TLS slots are implemented in a different way, the limitation on the number of Win32 TLS slots doesn't apply.

It is possible for unmanaged threads to enter the managed environment from the outside. For example, managed objects can be exposed to native code via the COM interop layer. When the native thread calls through to the object, it enters the managed environment. When this happens, the CLR makes note of that fact, and if it is the first time the unmanaged thread has called into the CLR, it sets up the necessary bookkeeping structures allowing it to run as a managed thread within the managed runtime. As I mentioned before, threads that enter the managed environment this way initially start their managed thread existence in the Running state, as shown in Figure 12-1. Once this bookkeeping is set up, then each time the same unmanaged thread enters the runtime, it is associated with the same managed thread.

Just as managed objects can be exposed to the native world as COM objects, COM objects can be exposed to the managed world as managed objects. When a managed thread calls out to a COM object in this way, the runtime relinquishes control over the thread's state until it reenters the managed environment.

Suppose a COM object, written in native C++, calls the WaitForSingleObject Win32 API function to wait for a particular synchronization object to become signaled. Then, if a managed thread calls Thread.Abort or Thread.Interrupt to wake up the thread, the wakeup will be pended until the thread reenters the managed environment. In other words, it will have no effect while the thread is executing unmanaged code. Therefore, you want to be reasonably cognizant of what sorts of synchronization mechanisms are being used by native COM objects that your managed code is calling out to.

Finally, if you've ever done an extensive amount of COM development in the past, then you're familiar with the notion of a COM apartment and the proxies and stubs that go along with them.[43] When managed code calls out into COM objects, it is important that the managed code be set up to call the unmanaged COM object through either a single-threaded apartment (STA) or a multithreaded apartment (MTA). You can set this property on a new managed thread by calling the Thread.SetApartmentState method. Once the thread starts, the apartment state gets locked in. In other words, you cannot change it afterwards. When you call out to COM objects from managed code, it's best to know the type of apartment the COM objects will run in. That way, you can judiciously choose which type of COM apartment you want your thread to run in. Choosing the wrong type may introduce inefficiencies by forcing calls to go through proxies and stubs. In even worse cases, COM objects may not be callable from other apartment types.

Using Thread.SetApartmentState, you can control the COM apartment property for new managed threads that you create. But what about the main thread of an application? The fact is that once the main thread of a managed application is running, it's already too late to set the apartment state. That's because the managed runtime initializes the main thread to the MTA state as the managed application is initialized. If you need to change the apartment state of the main thread to STA, the only way to do so is by decorating the Main method with the STAThreadAttribute attribute. Incidentally, you could also decorate it with the MTAThreadAttribute attribute, but that would be redundant because that's the CLR's default choice. The following code shows an example of what I'm talking about:

public class EntryPoint

{

[STAThread]

static void Main() {

}

}If you've ever worked with Windows Forms applications, especially those generated by the wizards of Visual Studio, you probably have already seen this attribute and wondered what it was all about. By decorating the main UI thread of GUI applications with this attribute, you can integrate native ActiveX controls more easily in the GUI, because those normally run in an STA.

The apartment state of a managed thread only pertains to COM interop situations. Note that the apartment state of a managed thread has no effect on the execution of managed code. And more importantly, when managed objects are consumed by native applications via the COM interop layer, the apartment state doesn't control what apartment the object appears to live in from the perspective of the native application. From the native side of the fence, all managed objects appear as COM objects that live in the MTA and integrate the Free Threaded Marshaller (FTM). Also, all threads created in the CLR's thread pool always live in the MTA for the process.

Synchronization is arguably the most difficult part of creating multithreaded applications. You can create extra threads to do work all day long without having to worry about synchronization, as long as those threads do not concurrently access data that other threads access. Nobody needs to know when they finish or what the results of their operations are. Obviously, it's a rare case that you'll create such a thread. In most cases, you need to communicate with the running thread, wait for it to reach a defined state in the code, or possibly work on the same object or value instances that other threads are working on.

In all of those cases, and more, you must rely upon synchronization techniques to synchronize the threads to avoid race conditions and deadlocks. With race conditions, two threads may need to access the same piece of memory and only one can safely do so at a time. In these cases, you must use a synchronization mechanism that will only allow one thread at a time to access the data and lock out the other thread, making it wait until the first one is done. Multithreaded environments are stochastic in nature, and you never know when the scheduler will take away control from the thread. The classic example is where one thread gets halfway through changing a block of memory, loses control, and then the other thread is given control and starts reading the memory, assuming that it is in a valid state. An example of a deadlock is when two threads are waiting for each other to release a resource. Both threads end up waiting for each other, and because neither one of them can run until the wait is satisfied, they will end up waiting forever.

In all synchronization tasks, you should use the most lightweight sync mechanism that you can get away with and no heavier. For example, if you're trying to share a data block between two threads in the same process and you must gate access between the two, use something such as a Monitor (discussed shortly) lock rather than a Mutex. Why? Because a Mutex is meant to gate access to a shared resource between processes, and therefore, is a heavyweight OS object that slows down the process when acquiring and releasing the lock. If no interprocess locking is necessary, use the Monitor instead. Even more lightweight than the Monitor is a set of methods in the Interlocked class. These are ideal when you know that the likelihood of actually having to wait a good while when acquiring a lock is low.

Note

Any type of wait on a kernel object—such as waiting on a Mutex, Semaphore, EventWaitHanldle, or any other wait that boils down to waiting on a Win32 kernel object—requires a transition to kernel mode. Transitions to kernel mode are expensive, and you should avoid them if at all possible. For example, if the threads you are synchronizing live in the same process, kernel synchronization objects are probably too heavy. The lightest synchronization technique involves crafty use of the Threading.Interlocked class. Its methods are all implemented completely in user mode, thus allowing you to avoid the user-to-kernel mode transition. However, using the Threading.Interlocked class can be tricky, so moving up to a slightly higher level locking mechanism such as Monitor (or any other mechanism that does not require transitions to and from kernel mode) is often desired when synchronizing threads within the same process.

When using synchronization objects in a multithreaded environment, you want to hold the lock for as little time as possible. For example, if you acquire a synchronization lock to read a shared structure instance, and code within the method that acquires the lock uses that instance of the structure for some purpose, it's best to make a local copy of the structure on the stack and then release the lock immediately, unless it is logically impossible or degrading to performance. That way, you don't tie up other threads in the system that need to access the guarded variable.

When you need to synchronize thread execution, never rely upon methods such as Thread.Suspend or Thread.Resume to control thread synchronization. If you recall from a previous section in this chapter, calling Thread.Suspend doesn't actually suspend the thread immediately. Instead, it must get to a safe point within the managed code before it can suspend execution. And never use Thread.Sleep to synchronize threads. Thread.Sleep is appropriate when you're doing some sort of polling loop on an entity, such as device hardware that has just been reset and has no way of notifying anyone that it is back online. In that case, you don't want to check the state in a loop repeatedly. Instead, it's much nicer to sleep a little bit between polling, to allow the scheduler to let other threads run. I've said this in a previous section, but I'll say it again because it's so important: If you ever find yourself solving a synchronization bug by introducing a call to Thread.Sleep at some seemingly random point in the code, you're not solving the problem at all. Rather, you're hiding it even deeper. Just don't do it!

Those of you who come from the unmanaged world of programming against the Win32 API probably already know about the Interlocked... family of functions. Thankfully, those functions have been exposed to managed C# developers via static methods on the Interlocked class in the System.Threading namespace. Sometimes, when running multiple threads, it's necessary to maintain a simple variable—typically, a value, but possibly an object—between the multiple threads. For example, suppose you have some reason to track the number of running threads in a static integer somewhere. When a thread begins, it increments that value, and when it finishes, it decrements that value. Obviously, you must synchronize access to that value somehow, because the scheduler could take away control from one thread and give it to another when the first one is in the process of updating the value. Even worse, the same code could be executing concurrently on a multiprocessor machine. For this task, you can use Interlocked.Increment and Interlocked.Decrement. These methods are guaranteed to modify the value atomically across all processors in the system. Take a look at the following example:

using System; using System.Threading;

public class EntryPoint

{

static private volatile int numberThreads = 0;

static private Random rnd = new Random();

private static void RndThreadFunc() {

// Manage thread count and wait for a

// random amount of time between 1 and 12

// seconds.

Interlocked.Increment( ref numberThreads );

try {

int time = rnd.Next( 1000, 12000 );

Thread.Sleep( time );

}

finally {

Interlocked.Decrement( ref numberThreads );

}

}

private static void RptThreadFunc() {

while( true ) {

int threadCount = 0;

threadCount =

Interlocked.CompareExchange( ref numberThreads,

0, 0 );

Console.WriteLine( "{0} thread(s) alive",

threadCount );

Thread.Sleep( 1000 );

}

}

static void Main() {

// Start the reporting threads.

Thread reporter =

new Thread( new ThreadStart(

EntryPoint.RptThreadFunc) );

reporter.IsBackground = true;

reporter.Start();

// Start the threads that wait random time.

Thread[] rndthreads = new Thread[ 50 ];

for( uint i = 0; i < 50; ++i ) {

rndthreads[i] =

new Thread( new ThreadStart(

EntryPoint.RndThreadFunc) );

rndthreads[i].Start();

}

}

}This little program creates 50 foreground threads that do nothing but wait a random period of time between 1 and 12 seconds. It also creates a background thread that reports how many threads are currently alive. If you look at the RndThreadFunc method, which is the thread function that the 50 threads use, you can see it increment and decrement the integer value using the Interlocked methods. Notice that I use a finally block to ensure that the value gets decremented no matter how the thread exits. You could use the disposable trick with the using keyword by wrapping the increment and decrement in a separate class that implements IDisposable. That would get rid of the ugly finally block. But, in this case, it wouldn't help you at all, because you'd also have to create a reference type to contain the integer count variable, as you cannot store a ref to the integer as a field in the helper class.

Note

Jon Skeet makes an excellent point regarding the previous sample code that underscores the importance of knowing how types behave in concurrent situations. The static instance of Random in the sample code above is not thread aware. Thus, if two threads call methods on it at just the right time, the internal state of the Random instance could theoretically become inconsistent thus sending it into the land of undefined behavior. Jon suggests wrapping Random within another type that provides the necessary synchronization. However, for the purposes of this example code above, doing so would clutter the real message.

You've already seen Interlocked.Increment and Interlocked.Decrement in action. But what about Interlocked.CompareExchange, which the reporter thread uses? Remember, because multiple threads are attempting to write to the threadCount variable, the reporter thread must read the value in a synchronized way as well. That's where Interlocked.CompareExchange comes in. Interlocked.CompareExchange, as its name implies, allows you to exchange the value of a variable if the current value is equal to the comparand with that of another in an atomic fashion, and it returns the value that was stored previously in that location. I'm not so interested in replacing the value with zero, which is why I only do so if it is already zero, but I'm more interested in the side effect that the Interlocked.ComapreExchange method returns to me the value that was in the slot.

The last method to cover in the Interlocked class is CompareExchange. As you have already seen, this little method is handy indeed. It's similar to Interlocked.Exchange, in that it allows you to exchange the value of a location or slot in an atomic fashion. However, it only does the exchange if the original value compares equal to a provided comparand and all of these operations are collectively performed atomically. In any event, the method always returns the original value. One extremely handy use of the CompareExchange method is to create a lightweight spin lock. A spin lock gets its name from the fact that if it cannot acquire the lock, it will spin in a tight loop until it can. Typically, when implementing a spin lock, you put your thread to sleep for a very brief slice of time with each failed attempt to acquire the lock. That way, the thread scheduler can give processor time to another thread while you wait. If you don't want the thread to sleep but only to release its time slice, you can pass a value of 0 to Thread.Sleep. Let's look at an example:

using System;

using System.IO;

using System.Threading;

public class MySpinLock

{

public MySpinLock( int spinWait ) {

this.spinWait = spinWait;

}

public void Enter() {

while( Interlocked.CompareExchange(ref theLock,

1,

0) == 1 ) {

// The lock is taken, spin.

Thread.Sleep( spinWait );

}

}

public void Exit() {

// Reset the lock.

Interlocked.Exchange( ref theLock,

0 );

}

private volatile int theLock = 0;

private int spinWait;

}

public class MySpinLockManager : IDisposable

{

public MySpinLockManager( MySpinLock spinLock ) {

this.spinLock = spinLock;

spinLock.Enter();

}

public void Dispose() {

spinLock.Exit();

}

private MySpinLock spinLock;

}

public class EntryPoint

{

static private Random rnd = new Random();

private static MySpinLock logLock = new MySpinLock( 10 );private static StreamWriter fsLog =

new StreamWriter( File.Open("log.txt",

FileMode.Append,

FileAccess.Write,

FileShare.None) );

private static void RndThreadFunc() {

using( new MySpinLockManager(logLock) ) {

fsLog.WriteLine( "Thread Starting" );

fsLog.Flush();

}

int time = rnd.Next( 10, 200 );

Thread.Sleep( time );

using( new MySpinLockManager(logLock) ) {

fsLog.WriteLine( "Thread Exiting" );

fsLog.Flush();

}

}

static void Main() {

// Start the threads that wait random time.

Thread[] rndthreads = new Thread[ 50 ];

for( uint i = 0; i < 50; ++i ) {

rndthreads[i] =

new Thread( new ThreadStart(

EntryPoint.RndThreadFunc) );

rndthreads[i].Start();

}

}

}This example is similar to the previous one. It creates 50 threads that wait a random amount of time. However, instead of managing a thread count, it outputs a line to a log file. This writing is happening from multiple threads, and instance methods of StreamWriter are not thread-safe, therefore you must do the writing in a safe manner within the context of a lock. That is where the MySpinLock class comes in.

Internally, it manages a lock variable in the form of an integer, and it uses Interlocked.CompareExchange to gate access to the lock. The call to Interlocked.CompareExchange in MySpinLock.Enter is saying

If the lock value is equal to 0, replace the value with 1 to indicate that the lock is taken; otherwise, do nothing.

If the value of the slot already contains 1, it's taken, and you must sleep and spin.

Both of those items occur in an atomic fashion via the Interlocked class, so there is no possible way that more than one thread at a time can acquire the lock. When the MySpinLock.Exit method is called, all it needs to do is reset the lock. However, that must be done atomically as well—hence, the call to Interlocked.Exchange.

Note

Because the internal lock is represented by an int (which is an Int32), one could simply set the value to zero in MySpinLock.Exit. However, as mentioned in the previous sidebar, you must be careful if the lock were a 64-bit value and you are running on a 32-bit platform. Therefore, for the sake of example, I err on the side of caution. What if a maintenance engineer came along and changed the underlying storage from an int to an IntPtr (which is a pointer sized type, thus storage size is dependent on the platform) and didn't change the place where theLock is reset as well?

In this example, I decided to illustrate the use of the disposable/using idiom to implement deterministic destruction, where you introduce another class—in this case, MySpinLockManager—to implement the RAII idiom. This saves you from having to remember to write finally blocks all over the place. Of course, you still have to remember to use the using keyword, but if you follow the idiom more closely than this example, you would implement a finalizer that could assert in the debug build if it ran and the object had not been disposed.[44]

Keep in mind that spin locks implemented in this way are not reentrant. In other words, the lock cannot be acquired more than once like a critical section or a mutex can, for example. This doesn't mean that you cannot use spin locks with recursive programming techniques. It just means that you must release the lock before recursing, or else suffer a deadlock.

Note

If you require a reentrant wait mechanism, you can use wait objects that are more structured, such as the Monitor class, which I cover in the next section, or kernel-based wait objects.

Incidentally, if you'd like to see some fireworks, so to speak, try commenting out the use of the spin lock in the RndThreadFunc method and run the result several times. You'll most likely notice the output in the log file gets a little ugly. The ugliness should increase if you attempt the same test on a multiprocessor machine.

The .NET 4.0 BCL introduced a new type, System.Threading.SpinLock. You should certainly use SpinLock rather than the MySpinLock class that I used for the sake of the example in the previous section. SpinLock should be used when you have a reasonable expectation that the thread acquiring it will rarely have to wait. If the threads using SpinLock have to wait often, efficiency will suffer due to the excessive spinning these threads will perform. Therefore, when a thread holds a SpinLock, it should hold it for as little time as possible and avoid blocking on another lock while it holds the SpinLock at all costs. Also, just like MySpinLock in the previous section, SpinLock cannot be acquired reentrantly. That is, if a thread already owns the lock, attempting to acquire the lock again will throw an exception if you passed true for the enableThreadOwnerTracking parameter of the SpinLock constructor or it will introduce a deadlock.

Note

Thread owner tracking in SpinLock is really intended for use in debugging.

There is an old adage in software development that states that early optimization is the root of all evil. Although this statement is rather harsh sounding and does have notable exceptions, it is a good rule of thumb to follow. Therefore, you should probably start out using a higher level or heavier, more flexible locking mechanism that trades efficiency for flexibility. Then, if you determine during testing and profiling that a fast, lighter weight locking mechanism should be used, then investigate using SpinLock.

Warning

SpinLock is a value type. Therefore, be very careful to avoid any unintended copying or boxing. Doing so may introduce unforeseen surprises. If you must pass a SpinLock as a parameter to a method, for example, be sure to pass it by ref to avoid the extra copy.

To demonstrate how to use SpinLock, I have modified the previous example removing MySpinLock and replacing it with SpinLock as shown below:

using System;

using System.IO;

using System.Threading;

public class EntryPoint

{

static private Random rnd = new Random();

private static SpinLock logLock = new SpinLock( false );

private static StreamWriter fsLog =

new StreamWriter( File.Open("log.txt",

FileMode.Append,

FileAccess.Write,

FileShare.None) );

private static void RndThreadFunc() {

bool lockTaken = false;

logLock.Enter( ref lockTaken );

if( lockTaken ) {

try {

fsLog.WriteLine( "Thread Starting" );

fsLog.Flush();

}

finally {

logLock.Exit();

}}

int time = rnd.Next( 10, 200 );

Thread.Sleep( time );

lockTaken = false;

logLock.Enter( ref lockTaken );

if( lockTaken ) {

try {

fsLog.WriteLine( "Thread Exiting" );

fsLog.Flush();

}

finally {

logLock.Exit();

}

}

}

static void Main() {

// Start the threads that wait random time.

Thread[] rndthreads = new Thread[ 50 ];

for( uint i = 0; i < 50; ++i ) {

rndthreads[i] =

new Thread( new ThreadStart(

EntryPoint.RndThreadFunc) );

rndthreads[i].Start();

}

}

}There are some very important things I want to point out here. First, notice that the call to SpinLock.Enter takes a ref to a bool. This bool is what indicates whether the lock was taken or not. Therefore, you much check it after the call to Enter. But most importantly, you must initialize the bool to false before calling Enter. The SpinLock does not implement IDisposable, therefore, you cannot use it with a using block, therefore you can see I am using a try/finally construct instead to guarantee proper clean-up. Had the BCL team implemented IDisposable on SpinLock, it would have been a disaster waiting to happen. That's because any time you cast a value type into an instance of an interface it implements, the value type is boxed. Boxing is highly undesirable for SpinLock instances and should be avoided.

In the previous section, I showed you how to implement a spin lock using the methods of the Interlocked class. A spin lock is not always the most efficient synchronization mechanism, especially if you use it in an environment where a wait is almost guaranteed. The thread scheduler keeps having to wake up the thread and allow it to recheck the lock variable. As I mentioned before, a spin lock is ideal when you need a lightweight, non-reentrant synchronization mechanism and the odds are low that a thread will have to wait in the first place. When you know the likelihood of waiting is high, you should use a synchronization mechanism that allows the scheduler to avoid waking the thread until the lock is available. .NET provides the System.Threading.Monitor class to allow synchronization between threads within the same process. You can use this class to guard access to certain variables or to gate access to code that should only be run on one thread at a time.

Note

The Monitor pattern provides a way to ensure synchronization such that only one method, or a block of protected code, executes at one time. A Mutex is typically used for the same task. However, Monitor is much lighter and faster. Monitor is appropriate when you must guard access to code within a single process. Mutex is appropriate when you must guard access to a resource from multiple processes.

One potential source of confusion regarding the Monitor class is that you cannot instantiate an instance of this class. The Monitor class, much like the Interlocked class, is merely a containing namespace for a collection of static methods that do the work. If you're used to using critical sections in Win32, you know that at some point you must allocate and initialize a CRITICAL_SECTION structure. Then, to enter and exit the lock, you call the Win32 EnterCriticalSection and LeaveCriticalSection functions. You can achieve exactly the same task using the Monitor class in the managed environment. To enter and exit the critical section, you call Monitor.Enter and Monitor.Exit. Whereas you pass a CRITICAL_SECTION object to the Win32 critical section functions, in contrast, you pass an object reference to the Monitor methods.

Internally, the CLR manages a sync block for every object instance in the process. Basically, it's a flag of sorts, similar to the integer used in the examples of the previous section describing the Interlocked class. When you obtain the lock on an object, this flag is set. When the lock is released, this flag is reset. The Monitor class is the gateway to accessing this flag. The versatility of this scheme is that every object instance in the CLR potentially contains one of these locks. I say potentially because the CLR allocates them in a lazy fashion, because not every object instance's lock will be utilized. To implement a critical section, all you have to do is create an instance of System.Object. Let's look at an example using the Monitor class by borrowing from the example in the previous section:

using System;

using System.Threading;

public class EntryPoint

{

static private readonly object theLock = new Object();

static private int numberThreads = 0;

static private Random rnd = new Random();

private static void RndThreadFunc() {

// Manage thread count and wait for a

// random amount of time between 1 and 12

// seconds.

Monitor.Enter( theLock );

try {

++numberThreads;

}

finally {

Monitor.Exit( theLock );

}

int time = rnd.Next( 1000, 12000 );

Thread.Sleep( time );

Monitor.Enter( theLock );try {

--numberThreads;

}

finally {

Monitor.Exit( theLock );

}

}

private static void RptThreadFunc() {

while( true ) {

int threadCount = 0;

Monitor.Enter( theLock );

try {

threadCount = numberThreads;

}

finally {

Monitor.Exit( theLock );

}

Console.WriteLine( "{0} thread(s) alive",

threadCount );

Thread.Sleep( 1000 );

}

}

static void Main() {

// Start the reporting threads.

Thread reporter =

new Thread( new ThreadStart(

EntryPoint.RptThreadFunc) );

reporter.IsBackground = true;

reporter.Start();

// Start the threads that wait random time.

Thread[] rndthreads = new Thread[ 50 ];

for( uint i = 0; i < 50; ++i ) {

rndthreads[i] =

new Thread( new ThreadStart(

EntryPoint.RndThreadFunc) );

rndthreads[i].Start();

}

}

}Notice that I perform all access to the numberThreads variable within a critical section in the form of an object lock. Before each access, the accessor must obtain the lock on the theLock object instance. The type of theLock field is of type object simply because its actual type is inconsequential. The only thing that matters is that it is a reference type—that is, an instance of object rather than a value type. You only need the object instance to utilize its internal sync block, therefore you can just instantiate an object of type System.Object.

Tip

As a safeguard, you may want to mark the internal lock object readonly as I have done above. This may prevent you or another developer from inadvertently reassigning theLock with another instance thus wreaking havoc in the system.

One thing you've probably also noticed is that the code is uglier than the version that used the Interlocked methods. Whenever you call Monitor.Enter, you want to guarantee that the matching Monitor.Exit executes no matter what. I mitigated this problem in the examples using the MySpinLock class by wrapping the usage of the Interlocked class methods within a class named MySpinLockManager. Can you imagine the chaos that could ensue if a Monitor.Exit call was skipped because of an exception? Therefore, you always want to utilize a try/finally block in these situations. The creators of the C# language recognized that developers were going through a lot of effort to ensure that these finally blocks were in place when all they were doing was calling Monitor.Exit. So, they made our lives easier by introducing the lock keyword. Consider the same example again, this time using the lock keyword:

using System;

using System.Threading;

public class EntryPoint

{

static private readonly object theLock = new Object();

static private int numberThreads = 0;

static private Random rnd = new Random();

private static void RndThreadFunc() {

// Manage thread count and wait for a

// random amount of time between 1 and 12

// seconds.

lock( theLock ) {

++numberThreads;

}

int time = rnd.Next( 1000, 12000 );

Thread.Sleep( time );

lock( theLock ) {

-numberThreads;

}

}

private static void RptThreadFunc() {

while( true ) {

int threadCount = 0;

lock( theLock ) {

threadCount = numberThreads;

}

Console.WriteLine( "{0} thread(s) alive",

threadCount );Thread.Sleep( 1000 );

}

}

static void Main() {

// Start the reporting threads.

Thread reporter =

new Thread( new ThreadStart(

EntryPoint.RptThreadFunc) );

reporter.IsBackground = true;

reporter.Start();

// Start the threads that wait random time.

Thread[] rndthreads = new Thread[ 50 ];

for( uint i = 0; i < 50; ++i ) {

rndthreads[i] =

new Thread( new ThreadStart(

EntryPoint.RndThreadFunc) );

rndthreads[i].Start();

}

}

}Notice that the code is much cleaner now, and in fact, there are no more explicit calls to any Monitor methods at all. Under the hood, however, the compiler is expanding the lock keyword into the familiar try/finally block with calls to Monitor.Enter and Monitor.Exit. You can verify this by examining the generated IL code using ILDASM.

In many cases, synchronization implemented internally within a class is as simple as implementing a critical section in this manner. But when only one lock object is needed across all methods within the class, you can simplify the model even more by eliminating the extra dummy instance of System.Object by using the this keyword when acquiring the lock through the Monitor class. You'll probably come across this usage pattern often in C# code. Although it saves you from having to instantiate an object of type System.Object—which is pretty lightweight, I might add—it does come with its own perils. For example, an external consumer of your object could actually attempt to utilize the sync block within your object by passing your instance to Monitor.Enter before even calling one of your methods that will try to acquire the same lock. Technically, that's just fine, because the same thread can call Monitor.Enter multiple times. In other words, Monitor locks are reentrant, unlike the spin locks of the previous section. However, when a lock is released, it must be released by calling Monitor.Exit a matching number of times. So, now you have to rely upon the consumers of your object to either use the lock keyword or a try/finally block to ensure that their call to Monitor.Enter is matched appropriately with Monitor.Exit. Any time you can avoid such uncertainty, do so. Therefore, I recommend against locking via the this keyword, and I suggest instead using a private instance of System.Object as your lock. You could achieve the same effect if there were some way to declare the sync block flag of an object private, but alas, that is not possible.

When you're using the Monitor methods to implement locking, internally Monitor uses the sync block of object instances to manage the lock. Because every object instance can potentially have a sync block, you can use any reference to an object, even an object reference to a boxed value. Even though you can, you should never pass a value type instance to Monitor.Enter, as demonstrated in the following code example:

using System;

using System.Threading;

public class EntryPoint

{

static private int counter = 0;

// NEVER DO THIS !!!

static private int theLock = 0;

static private void ThreadFunc() {

for( int i = 0; i < 50; ++i ) {

Monitor.Enter( theLock );

try {

Console.WriteLine( ++counter );

}

finally {

Monitor.Exit( theLock );

}

}

}

static void Main() {

Thread thread1 =

new Thread( new ThreadStart(EntryPoint.ThreadFunc) );

Thread thread2 =

new Thread( new ThreadStart(EntryPoint.ThreadFunc) );

thread1.Start();

thread2.Start();

}

}If you attempt to execute this code, you will immediately be presented with a SynchronizationLockException, complaining that an object synchronization method was called from an unsynchronized block of code. Why does this happen? In order to find the answer, you need to remember that implicit boxing occurs when you pass a value type to a method that accepts a reference type. And remember, passing the same value type to the same method multiple times will result in a different boxing reference type each time. Therefore, the reference object used within the body of Monitor.Exit is different from the one used inside of the body of Monitor.Enter. This is another example of how implicit boxing in the C# language can cause you grief. You may have noticed that I used the old try/finally approach in this example. That's because the designers of the C# language created the lock statement such that it doesn't accept value types. So, if you just stick to using the lock statement for handling critical sections, you'll never have to worry about inadvertently passing a boxed value type to the Monitor methods.

I cannot overstate the utility of the Monitor methods to implement critical sections. However, the Monitor methods have capabilities beyond that of implementing simple critical sections. You can also use them to implement handshaking between threads, as well as for implementing queued access to a shared resource.

When a thread has entered a locked region successfully, it can give up the lock and enter a waiting queue by calling one of the Monitor.Wait overloads where the first parameter to Monitor.Wait is the object reference whose sync block represents the lock being used and the second parameter is a timeout value. Monitor.Wait returns a Boolean that indicates whether the wait succeeded or if the timeout was reached. If the wait succeeded, the result is true; otherwise, it is false. When a thread that calls Monitor.Wait completes the wait successfully, it leaves the wait state as the owner of the lock again.

Note

You may want to consult the MSDN documentation for the Monitor class to become familiar with the various overloads available for Monitor.Wait.

If threads can give up the lock and enter into a wait state, there must be some mechanism to tell the Monitor that it can give the lock back to one of the waiting threads as soon as possible. That mechanism is the Monitor.Pulse method. Only the thread that currently holds the lock is allowed to call Monitor.Pulse. When it's called, the thread first in line in the waiting queue is moved to a ready queue. Once the thread that owns the lock releases the lock, either by calling Monitor.Exit or by calling Monitor.Wait, the first thread in the ready queue is allowed to run. The threads in the ready queue include those that are pulsed and those that have been blocked after a call to Monitor.Enter. Additionally, the thread that owns the lock can move all waiting threads into the ready queue by calling Monitor.PulseAll.

There are many fancy synchronization tasks that you can accomplish using the Monitor.Pulse and Monitor.Wait methods. For example, consider the following example that implements a handshaking mechanism between two threads. The goal is to have both threads increment a counter in an alternating manner:

using System;

using System.Threading;

public class EntryPoint

{

static private int counter = 0;

static private object theLock = new Object();

static private void ThreadFunc1() {

lock( theLock ) {

for( int i = 0; i < 50; ++i ) {

Monitor.Wait( theLock, Timeout.Infinite );

Console.WriteLine( "{0} from Thread {1}",

++counter,

Thread.CurrentThread.ManagedThreadId );

Monitor.Pulse( theLock );

}

}

}

static private void ThreadFunc2() {

lock( theLock ) {

for( int i = 0; i < 50; ++i ) {Monitor.Pulse( theLock );

Monitor.Wait( theLock, Timeout.Infinite );

Console.WriteLine( "{0} from Thread {1}",

++counter,

Thread.CurrentThread.ManagedThreadId );

}

}

}

static void Main() {

Thread thread1 =

new Thread( new ThreadStart(EntryPoint.ThreadFunc1) );

Thread thread2 =