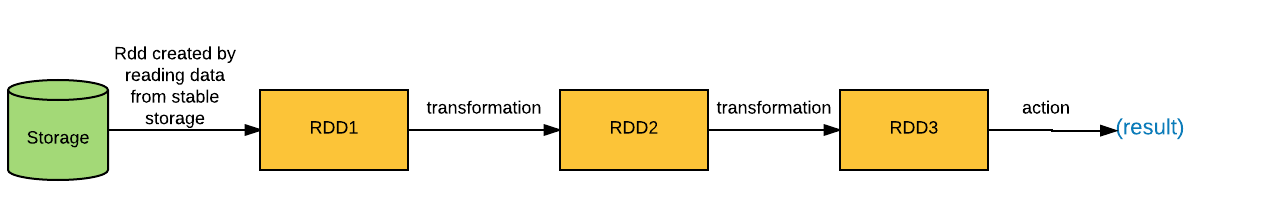

Another important thing to understand about RDDs is lazy evaluation. Spark builds a DAG (directed acyclic graph), also called the lineage graph, of all the operations you perform on an RDD. Execution of the graph starts only when an action is performed on the RDD. Let's consider an example of DAG operations on an RDD:

Here, an RDD is first computed by reading data from stable storage. Two transformations are then applied to it, and finally an action is performed to get the result.

Looking at the previous diagram, one would infer that RDD1 is created as soon as the Spark job reaches the step that reads it from the database, that each transformation is performed as soon as it is encountered, and that the action then runs to calculate the result. However, this is not true.

In reality, a Spark job keeps adding steps to the DAG until it reaches a step that asks it to perform an action on an RDD. Only then does it start executing the DAG from the first vertex.
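
The behavior described above can be sketched with a small, self-contained toy model. This is plain Python, not Spark's API: the `LazyDataset` class, the `read_from_storage` function, and the `log` list are all hypothetical names introduced only for illustration. The sketch assumes a single in-memory source and two transformations, mirroring the shape of the example.

```python
class LazyDataset:
    """Toy stand-in for an RDD (hypothetical, not Spark's API).

    Transformations only record a step; an action executes the recorded steps.
    """

    def __init__(self, source, steps=()):
        self._source = source  # zero-argument callable that loads the data
        self._steps = steps    # recorded transformations, i.e. the lineage

    def map(self, fn):
        # Transformation: extend the lineage, compute nothing yet.
        return LazyDataset(self._source, self._steps + (("map", fn),))

    def filter(self, pred):
        # Transformation: extend the lineage, compute nothing yet.
        return LazyDataset(self._source, self._steps + (("filter", pred),))

    def collect(self):
        # Action: execute the lineage starting from the first vertex.
        data = list(self._source())
        for kind, fn in self._steps:
            if kind == "map":
                data = [fn(x) for x in data]
            else:
                data = [x for x in data if fn(x)]
        return data


log = []  # records every read from the (simulated) stable storage


def read_from_storage():
    log.append("read")
    return [1, 2, 3, 4, 5]


rdd1 = LazyDataset(read_from_storage)   # step recorded, nothing read
rdd2 = rdd1.map(lambda x: x * 10)       # first transformation, still nothing read
rdd3 = rdd2.filter(lambda x: x > 20)    # second transformation, still nothing read
reads_before_action = len(log)

result = rdd3.collect()                 # the action triggers the whole chain
reads_after_action = len(log)
```

In this sketch the storage is not touched while `rdd1`, `rdd2`, and `rdd3` are being defined; the read happens only inside `collect()`, which plays the role of the action.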

The following are the benefits of this approach:

- Fault tolerance: The lineage graph of the operations on an RDD makes it fault tolerant. Since Spark knows the steps needed to create an RDD, it can recompute the RDD, or only its lost partitions, when a step fails, instead of repeating the whole process. For example, with the DAG, if a partition of an RDD is lost during processing, it can be recalculated from RDD2 (provided the corresponding RDD2 data is still available, for example because RDD2 was persisted), instead of reading from the database again and reapplying both transformations. This can save considerable time and resources when failures occur.

- Optimizing resource usage: Because Spark knows in advance all the steps needed to calculate the end result, it can use this information to plan execution and use cluster resources efficiently, for example by pipelining consecutive transformations rather than materializing each intermediate RDD.
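
The fault-tolerance point can be illustrated with another toy model. Again, this is a simplified sketch in plain Python rather than Spark itself: `ToyRDD`, `load`, `storage`, and `source_reads` are hypothetical names, and the model assumes that every computed partition is kept in memory, which loosely corresponds to persisting each RDD in Spark.

```python
class ToyRDD:
    """Hypothetical, simplified model of a partitioned RDD with lineage."""

    def __init__(self, num_partitions, compute, parent=None):
        self.num_partitions = num_partitions
        self._compute = compute  # builds one partition from the parent's rows (or the source)
        self.parent = parent     # lineage: the RDD this one is derived from
        self.cache = {}          # partition index -> materialized rows

    def partition(self, i):
        # Recompute partition i from the lineage only if it is missing.
        if i not in self.cache:
            parent_rows = self.parent.partition(i) if self.parent else None
            self.cache[i] = self._compute(i, parent_rows)
        return self.cache[i]

    def collect(self):
        return [row for i in range(self.num_partitions) for row in self.partition(i)]


storage = {0: [1, 2], 1: [3, 4], 2: [5, 6]}  # simulated database, one entry per partition
source_reads = []                            # which partitions were read from storage


def load(i, _parent_rows):
    source_reads.append(i)
    return list(storage[i])


rdd1 = ToyRDD(3, load)
rdd2 = ToyRDD(3, lambda i, rows: [x * 10 for x in rows], parent=rdd1)
rdd3 = ToyRDD(3, lambda i, rows: [x + 1 for x in rows], parent=rdd2)

first = rdd3.collect()
reads_after_first = len(source_reads)

# Lose one partition of the final RDD: it is rebuilt from rdd2, with no storage read.
del rdd3.cache[1]
second = rdd3.collect()
reads_after_recovery = len(source_reads)

# Lose the same partition at every level: only that partition is reloaded and recomputed.
del rdd3.cache[1], rdd2.cache[1], rdd1.cache[1]
third = rdd3.collect()
```

In the first failure, the lost partition is rebuilt from the surviving `rdd2` partition and the storage is not read again. In the second, the lineage is followed all the way back to the source, but only for the one lost partition; the other partitions are left untouched.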