Jobs is the default tab of Spark UI. It shows the status of all the jobs executed within a SparkContext. By default, it can be accessed at http://localhost:4040/jobs/.

It consists of three sections:

- Active Jobs: This section is for the jobs that are currently running

- Completed Jobs: This section is for the jobs that successfully completed

- Failed Jobs: This section is for the jobs that failed

It is shown in the following screenshot:

The Jobs tab section of Spark UI is rendered using the org.apache.spark.ui.jobs.JobsTab class that uses org.apache.spark.ui.jobs.JobProgressListener to get the statistics of the job.

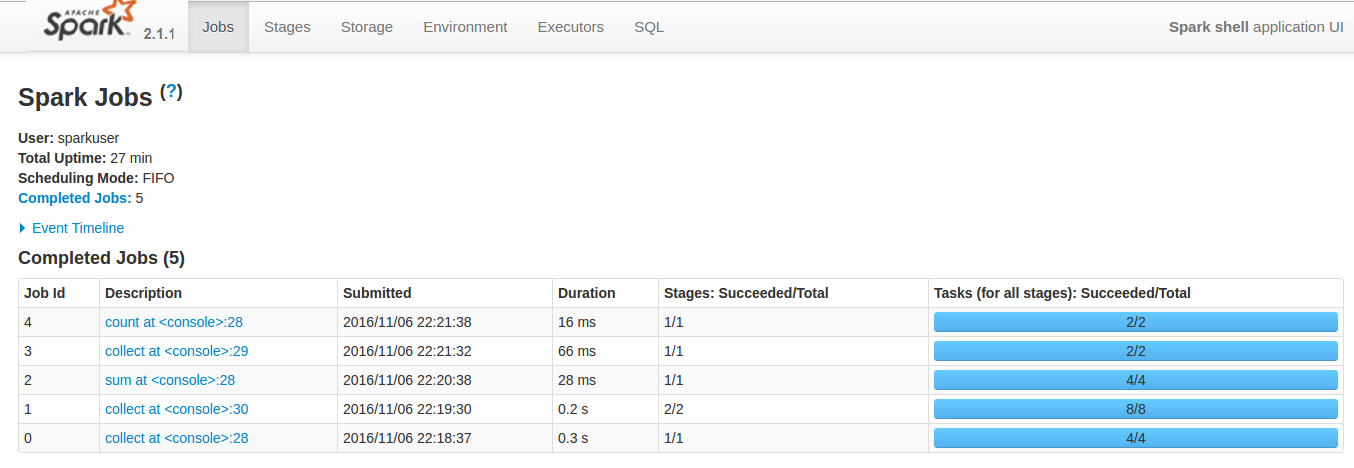
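The split into Active, Completed, and Failed Jobs can be illustrated with a small sketch. It assumes job records shaped like the entries returned by Spark's monitoring REST API (`/api/v1/applications/[app-id]/jobs`), where each job carries a `status` such as `RUNNING`, `SUCCEEDED`, or `FAILED`. The sample records and the helper name `group_jobs_by_section` are hypothetical and serve only as an illustration; this is not how Spark UI itself is implemented.

```python
# Hypothetical sample data, shaped like job entries from Spark's REST API.
# The job names and IDs are made up for illustration.
SAMPLE_JOBS = [
    {"jobId": 0, "name": "count", "status": "SUCCEEDED"},
    {"jobId": 1, "name": "collect", "status": "FAILED"},
    {"jobId": 2, "name": "saveAsTextFile", "status": "RUNNING"},
    {"jobId": 3, "name": "reduce", "status": "SUCCEEDED"},
]

# Assumed mapping from a job's status to the Jobs tab section it appears in.
SECTION_BY_STATUS = {
    "RUNNING": "Active Jobs",
    "SUCCEEDED": "Completed Jobs",
    "FAILED": "Failed Jobs",
}


def group_jobs_by_section(jobs):
    """Return a dict mapping each section name to the list of job IDs in it."""
    sections = {name: [] for name in SECTION_BY_STATUS.values()}
    for job in jobs:
        section = SECTION_BY_STATUS.get(job["status"])
        if section is not None:  # skip statuses with no section, e.g. UNKNOWN
            sections[section].append(job["jobId"])
    return sections


if __name__ == "__main__":
    for section, job_ids in group_jobs_by_section(SAMPLE_JOBS).items():
        print(f"{section}: {job_ids}")
```

With a running application, the same grouping could be applied to the JSON list fetched from the REST endpoint instead of the sample records.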

After executing all the jobs mentioned in the Spark REPL (also known as CLI) section, Spark UI will look as follows:

If you expand the Event Timeline section, you can see the time at which SparkContext started (that is, when the driver was initiated) and when each job was executed, along with its status:

By clicking on any of the jobs, you can see its details, that is, the Event Timeline of the job and the DAG of the transformations and stages executed during the job, as follows: