Starting with this chapter, we will look in depth at specific technologies for using Apache Spark for machine learning. While this chapter focuses on notebooks for Spark, Chapter 6, Churn Prediction on Spark, focuses on machine learning libraries, including MLlib, and Chapter 7, Recommendations on Spark, focuses on SPSS with Spark.

Specifically, in this chapter, we will review machine learning methods and analytical processes for a risk scoring project, and implement them with R notebooks on Apache Spark in the DataScientistWorkbench environment. We will also discuss how Apache Spark notebooks help keep the whole workflow well organized and easy to manage. The following topics will be covered in this chapter:

- Spark for risk scoring

- Methods for risk scoring

- Data and feature preparation

- Model estimation

- Model evaluation

- Results explanation

- Deployment of risk scoring

In this section, we will start with a real business case and then describe how to prepare an Apache Spark environment for using R notebooks on this real-life risk scoring project.

XST Corp provides loans and other financial assistance to millions of individuals who need cash either to continue their business or to take care of urgent personal needs. The company accepts applications online and makes instant decisions on most of the applications it receives. To make these decisions, it uses data collected from the online applications, historical data stored in its data warehouse, and additional data provided by third parties.

The online applications provide identity data and some financial data about the applicants. The company's own compiled data contains information related to location, the economy, and other factors. The third-party data is rich in information on past credit, current employment, and more.

The company works in a fast-changing industry with many competitors, so it is constantly looking for better risk scoring models that will let it outperform those competitors. Specifically, the model should predict defaults more accurately than competitors' models and should be easy to deploy, allowing the company to approve more applicants while keeping default risk low among the approved applicants.

With these three sets of data, the company has more than two thousand features (variables) available for machine learning. Feature selection is therefore a big task, and so is data preparation, because the data quality is not as good as it should be and there are many missing values.
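With thousands of candidate features and many missing values, one common first screening step is to drop features whose missing-value rate is too high. The sketch below illustrates that idea in plain Python rather than R on Spark; the feature names, the sample records, and the 50% threshold are hypothetical and chosen only for illustration, not taken from XST Corp's data.

```python
from typing import Dict, List, Optional

# Each applicant record maps a feature name to a value; None (or an absent key) means missing.
Record = Dict[str, Optional[float]]


def missing_rate(records: List[Record], feature: str) -> float:
    """Return the fraction of records in which `feature` is absent or None."""
    if not records:
        return 0.0
    missing = sum(1 for r in records if r.get(feature) is None)
    return missing / len(records)


def screen_features(records: List[Record], features: List[str],
                    max_missing: float = 0.5) -> List[str]:
    """Keep only features whose missing-value rate is at most `max_missing`.

    The 0.5 default is an illustrative assumption, not a recommended value.
    """
    return [f for f in features if missing_rate(records, f) <= max_missing]


if __name__ == "__main__":
    # Hypothetical applicant records with hypothetical feature names.
    applicants: List[Record] = [
        {"income": 52000.0, "months_employed": 14.0, "prior_defaults": None},
        {"income": None, "months_employed": 30.0, "prior_defaults": None},
        {"income": 41000.0, "months_employed": None, "prior_defaults": 1.0},
        {"income": 67000.0, "months_employed": 8.0},  # prior_defaults absent
    ]
    candidates = ["income", "months_employed", "prior_defaults"]
    for name in candidates:
        print(f"{name}: {missing_rate(applicants, name):.2f} missing")
    print("kept:", screen_features(applicants, candidates))
```

On Spark, the same per-column missing counts would be computed in a distributed way; screening by missingness is typically only a first pass before model-based feature selection.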

The company has clear ideas about evaluating its models, both by following industry standards and by meeting its own goal of approving more applicants with low risk. It is also clear about how to deploy its models. However, all these tasks need to be completed in the minimum amount of time, or even automated if possible, to support instant decision-making. At the same time, new data comes in frequently, so the models need to be refined very often just to accommodate the new data. For these reasons, a notebook approach is ideal, as notebooks facilitate replication and iterative computing, with options for quick modification.
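The company's own goal, approving more applicants while keeping risk low among them, can be made concrete by checking, for a given score cutoff, what share of applicants would be approved and what share of those approved actually defaulted. The following plain-Python sketch assumes the model outputs a predicted default probability for each applicant and that applicants below a cutoff are approved; the function name, the cutoffs, and the sample scores are hypothetical illustrations, not the company's actual evaluation criteria.

```python
from typing import List, Tuple


def approval_tradeoff(scores: List[float], defaults: List[int],
                      cutoff: float) -> Tuple[float, float]:
    """Approve applicants whose predicted default probability is below `cutoff`.

    Returns (approval_rate, default_rate_among_approved), where defaults[i]
    is 1 if applicant i actually defaulted and 0 otherwise.
    """
    if len(scores) != len(defaults):
        raise ValueError("scores and defaults must have the same length")
    if not scores:
        return 0.0, 0.0
    approved = [d for s, d in zip(scores, defaults) if s < cutoff]
    approval_rate = len(approved) / len(scores)
    bad_rate = sum(approved) / len(approved) if approved else 0.0
    return approval_rate, bad_rate


if __name__ == "__main__":
    # Hypothetical hold-out data: predicted default probabilities and observed outcomes.
    predicted = [0.05, 0.10, 0.20, 0.35, 0.60, 0.80]
    observed = [0, 0, 1, 0, 1, 1]
    for cut in (0.3, 0.4, 0.7):
        approval, bad = approval_tradeoff(predicted, observed, cut)
        print(f"cutoff={cut:.1f}  approval rate={approval:.2f}  "
              f"default rate among approved={bad:.2f}")
```

Under this framing, a better model lets the company raise the cutoff, and so approve more applicants, without the default rate among approved applicants exceeding what it can accept; this comparison complements, rather than replaces, industry-standard evaluation.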

For the machine learning part of this project, we have a target variable, loan default, and applicant data from the online applications, along with credit data, consumer data, public record data, and social media data from the three data sources mentioned earlier.
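Before modeling, the applicant data and the other sources have to be combined into one table with the loan-default target attached. The sketch below illustrates this in plain Python; it assumes every source is keyed by a shared applicant ID and that column names do not collide across sources, and all identifiers and field names are hypothetical. In this project, the equivalent step would presumably run in an R notebook on Spark.

```python
from typing import Any, Dict, List

Row = Dict[str, Any]


def assemble_training_rows(applications: Dict[str, Row],
                           warehouse: Dict[str, Row],
                           third_party: Dict[str, Row],
                           defaults: Dict[str, int]) -> List[Row]:
    """Left-join warehouse and third-party data onto application data by applicant ID.

    Applicants without an observed default outcome are skipped, because they
    cannot be used to train a supervised model. Assumes column names are
    unique across the three sources (later sources would otherwise overwrite).
    """
    rows: List[Row] = []
    for app_id, app in applications.items():
        if app_id not in defaults:
            continue
        row: Row = {"applicant_id": app_id}
        row.update(app)
        row.update(warehouse.get(app_id, {}))    # no warehouse match: columns stay absent
        row.update(third_party.get(app_id, {}))  # no third-party match: columns stay absent
        row["default"] = defaults[app_id]
        rows.append(row)
    return rows


if __name__ == "__main__":
    # Hypothetical records keyed by a hypothetical applicant ID.
    applications = {"A1": {"requested_amount": 1500},
                    "A2": {"requested_amount": 800},
                    "A3": {"requested_amount": 2000}}
    warehouse = {"A1": {"region_unemployment": 0.051},
                 "A3": {"region_unemployment": 0.072}}
    third_party = {"A1": {"credit_inquiries": 3}, "A2": {"credit_inquiries": 0}}
    defaults = {"A1": 0, "A2": 1}  # A3 has no observed outcome yet
    for r in assemble_training_rows(applications, warehouse, third_party, defaults):
        print(r)
```

Leaving unmatched columns absent, rather than filling in values at join time, keeps missingness visible so that it can be handled explicitly during data preparation.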

As mentioned in the previous section, we need to organize the machine learning for this project so that it can be replicated and possibly automated. To do this, we will use notebooks to organize all the code and then run it on Apache Spark. Notebooks facilitate replication and also provide a good foundation for future automation.

Most R users are familiar with R Markdown, which makes it easy to create R notebooks: dynamic documents, analyses, presentations, and reports generated from R.

Note

Readers unfamiliar with Markdown may visit the following links for a quick introduction and an example of an R notebook:

http://rmarkdown.rstudio.com/ and http://ramnathv.github.io/rNotebook/

To prepare notebooks on Apache Spark, one option is to use Zeppelin, a widely used open source product. For building a notebook on Spark with Zeppelin, the following two links explain everything very clearly:

However, this approach takes a lot of coding and system configuration work, and for an R notebook you will also need to set up an R interpreter.

You can also use R in Jupyter notebooks; clear instructions are available at the following website:

http://blog.revolutionanalytics.com/2015/09/using-r-with-jupyter-notebooks.html

There have been many successful efforts to organize R programming with Jupyter notebooks; one example is at http://nbviewer.ipython.org/github/carljv/Will_it_Python/blob/master/MLFH/CH2/ch2.ipynb.

Like Zeppelin, Jupyter requires a lot of coding and system configuration work. To avoid most of that work, you can use the Databricks environment described in the previous chapters, where R notebooks can easily be run on Apache Spark and data clusters.

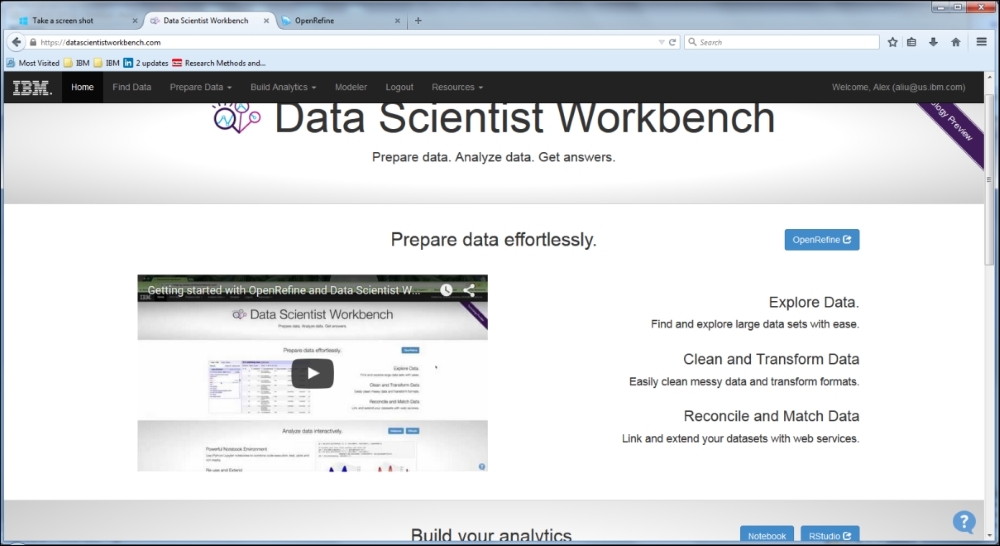

Besides Databricks, another option is to utilize the IBM Data Scientist Workbench at https://datascientistworkbench.com/.

The DataScientistWorkbench has Apache Spark installed and also includes an integrated data-cleaning system, OpenRefine, which makes our data preparation work easier and more organized.

For this project, we will use the DataScientistWorkbench for data cleaning, R notebook creation, and Apache Spark implementation. For this setup, some of the Apache Spark techniques described in the previous chapters may apply.