The Practice of Applied Network Security Monitoring

Chris Sanders

Abstract

The first chapter is devoted to defining network security monitoring and its relevance in the modern security landscape. It begins by discussing the four domains of security and then describes how network security monitoring fits into them. Key security terms are defined en route to comparing and contrasting traditional intrusion detection and modern network security monitoring. The NSM Cycle and its components (collection, detection, and analysis) are introduced. Next, the role of the analyst is introduced, along with critical analyst skills and potential specializations, followed by techniques for promoting analyst success. Finally, the Security Onion distribution is introduced, along with step-by-step instructions on the installation, initial configuration, and testing of Security Onion.

Keywords

Network Security Monitoring; Collection; Detection; Analysis; Intrusion Detection System; Analyst; Skills; Alert; Threat; Vulnerability; Exploit; Asset; Adversary; Security Onion

Chapter Contents

Vulnerability-Centric vs. Threat-Centric Defense

The NSM Cycle: Collection, Detection, and Analysis

The current state of security for Internet-connected systems makes me think of the Wild West. The West represented a lot of things to Americans at that time. As a mostly untapped resource, the West was seen as a vast undiscovered land of opportunity. As more and more people journeyed west, small communities were established and individuals and families alike were able to prosper. With this prosperity and success, inevitably there came crime. Towns were dispersed and law was exclusively localized, allowing rogue groups of desperados to roam from town to town, robbing and pillaging local resources. The lack of coordination and communication between “the law” in these towns meant that the desperados rarely got caught unless they happened to be gunned down by a local sheriff.

Fast-forward to the modern era and the picture isn’t painted too differently. The Internet represents a similar land of untapped opportunity where someone is only a domain name and a little elbow grease away from achieving the American dream. Just like the West, however, the Internet is not without its own group of desperados. Whereas the West had bank robbers and hijackers, we now contend with botnet masters and click jackers. We are also currently suffering from similar problems with localized law enforcement. The threat we face is global, yet every country, and in some cases each individual state, operates within its own disparate set of laws.

In the West, the crux of the issue was that the criminals were organized and law enforcement wasn’t. Although the computer security field has improved drastically over the past ten years, defenders on a global scale are still playing catch-up to the groups who are able to operate global criminal networks. Unfortunately, this isn’t something that is fixable overnight, if ever.

This reality puts the focus on the individuals in the trenches to do whatever is possible to defend computer networks and the data contained within them from these criminals. It is my belief that the most effective way to do this is through the practice of Network Security Monitoring (NSM).

NSM is the collection, detection, and analysis of network security data. Information security has traditionally been divided into many different focus areas, but I tend to lean most towards the way the United States Department of Defense (US DoD) categorizes the domains of Computer Network Defense (CND) per DoD 8500.2.¹ These are:

Protect. The protect domain focuses on securing systems to prevent exploitation and intrusion from occurring. Some of the functions that typically occur within this domain include vulnerability assessment, risk assessment, anti-malware management, user awareness training, and other general information assurance tasks.

Detect. This domain centers on detecting compromises that are actively occurring or have previously occurred. This includes network security monitoring and attack sense and warning.

Respond. The third domain focuses on the response after a compromise has occurred. This includes incident containment, network and host-based forensics, malware analysis, and incident reporting.

Sustain. The final CND domain deals with the management of the people, processes, and technology associated with CND. This includes contracting, staffing and training, technology development and implementation, and support systems management.

As you may have guessed, this book deals primarily in the Detect domain, but if done correctly, the benefits of proper NSM will extend to all domains of CND.

Key NSM Terms

Before diving in, there are several terms that must be defined due to their extensive use throughout this book. With NSM and network security being a relatively new science, it’s hard to find common, discrete definitions for a lot of these terms. The sources I’ve chosen most closely align with US DoD documentation, CISSP certification literature, and other NSM texts. Most definitions have been paraphrased; where appropriate, they are directly quoted and cited.

Asset

An asset is anything within your organization that has value. At an easily quantifiable level, this may include computers, servers, and networking equipment. Beyond this, assets will also include data, people, processes, intellectual property, and reputation.

When I refer to an “asset” I will generally be referring to something within the scope of your trusted network. This may also include networks that are separate from yours, but still considered trusted (think of government allies, subsidiary organizations, or supply chain partners). I will use the terms asset, good guy, target, victim, and friendly interchangeably.

Threat

A threat is a party with the capabilities and intentions to exploit a vulnerability in an asset. A threat is relative, as a threat to a civilian might be different from a threat to a large corporation. Furthermore, a threat to an emerging nation might be different from a threat to a global superpower.

Threats can primarily be classified in two categories: structured and unstructured.

A structured threat utilizes formal tactics and procedures and has clearly defined objectives. This often includes organized criminals, hacktivist groups, government intelligence agencies, and militaries. These are typically groups of individuals, although it’s not unheard of for a single individual to represent a structured threat. A structured threat almost always pursues targets of choice, chosen for a specific reason or goal.

An unstructured threat lacks the motivation, skill, strategy, or experience of a structured threat. Individuals or small loosely organized groups most often represent this type of threat. Unstructured threats typically pursue targets of opportunity, which are selected because they appear easily vulnerable.

Regardless of the scope or nature of the threat, they all have something in common: they want to steal something from you. This can be stolen money, intellectual property, reputation, or simply time.

I will use the terms threat, bad guy, adversary, attacker, and hostile interchangeably.

Vulnerability

A vulnerability is a software, hardware, or procedural weakness that may provide an attacker the ability to gain unauthorized access to a network asset.

This might take the form of improperly written code that allows for exploitation via a buffer overflow attack, an active network port in a public area that presents the opportunity for physical network access, or even an improperly devised authentication system that allows an attacker to guess a victim’s username. Keep in mind that a human can also be considered a vulnerability.

Exploit

An exploit is the method by which a vulnerability is attacked. In the case of software exploitation, this may take the form of a piece of exploit code that contains a payload that allows the attacker to perform some type of action on the system remotely, such as spawning a command shell. In a web application, a vulnerability in the way the application processes input and output may allow an attacker to exploit the application with SQL injection. In another scenario, an attacker breaking into an office building by tailgating off of another user’s access card swipe would be considered an exploit.
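To make the distinction between a vulnerability and an exploit concrete, the following is a minimal sketch of the SQL injection case using Python's standard sqlite3 module. The table, the function names, and the payload are all hypothetical and exist only for illustration. The vulnerability is the query built by string concatenation; the exploit is the crafted input that takes advantage of it.

```python
import sqlite3

# Hypothetical in-memory database used only for this illustration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [("alice", "alpha"), ("bob", "bravo")],
)


def lookup_vulnerable(name):
    # The vulnerability: user input is concatenated into the SQL text,
    # so the input can change the structure of the query itself.
    query = "SELECT secret FROM users WHERE name = '" + name + "'"
    return [row[0] for row in conn.execute(query)]


def lookup_parameterized(name):
    # The value is bound as a parameter and is never interpreted as SQL.
    return [row[0] for row in conn.execute(
        "SELECT secret FROM users WHERE name = ?", (name,))]


# The exploit: input that rewrites the WHERE clause so it matches every row.
payload = "nobody' OR '1'='1"
leaked = lookup_vulnerable(payload)    # returns every user's secret
safe = lookup_parameterized(payload)   # returns nothing; no such user exists
```

The same input is harmless against the parameterized query, which shows that the exploit only works because the weakness is present.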

Risk

The study of risk management is extensive, and as such there are several different definitions for risk. In relation to NSM, I think the most appropriate definition of risk is the measurement of the possibility that a threat will exploit a vulnerability. Although most managers desire some quantifiable metric, quantifying risk is often a fruitless endeavor because of the intrinsic difficulty in placing a value on network and data assets.

I will frequently discuss things that may increase or decrease the level of risk to an asset, but I won’t be speaking in depth on calculations for quantifying risk beyond what is necessary for defining a collection strategy.

Anomaly

An anomaly is an observable occurrence in a system or network that is considered out of the ordinary. Anomalies cause detection tools such as intrusion detection systems or log review applications to generate alerts. An anomaly may include a system crash, malformed packets, unusual contact with an unknown host, or a large amount of data being transferred over a short period of time.
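As one illustration of the last example, a large transfer over a short period, the sketch below flags hosts whose byte count in the current time window is far above their own history. The function name, the sample data, and the choice of "mean plus three standard deviations" as the cutoff are assumptions made for this example, not a rule taken from any particular detection tool.

```python
from statistics import mean, stdev


def find_transfer_anomalies(history, current, k=3.0):
    """Return hosts whose current byte count exceeds mean + k * stdev of their history."""
    anomalies = []
    for host, sent in current.items():
        past = history.get(host, [])
        if len(past) < 2:
            continue  # not enough history to say what is ordinary for this host
        threshold = mean(past) + k * stdev(past)
        if sent > threshold:
            anomalies.append(host)
    return sorted(anomalies)


# Hypothetical bytes sent per host in earlier time windows.
history = {
    "10.0.0.5": [1200, 1500, 1100, 1400, 1300],
    "10.0.0.9": [900, 950, 1000, 980, 940],
}
# Hypothetical bytes sent per host in the current window.
current = {"10.0.0.5": 1350, "10.0.0.9": 250000}

flagged = find_transfer_anomalies(history, current)
```

Here only 10.0.0.9 is flagged. Whether that host is actually compromised is a separate question that can only be answered by investigating the anomaly.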

Incident

When an event is investigated, it may be reclassified as part of an incident. An incident is a violation or imminent threat of violation of computer security policies, acceptable use policies, or standard security practices.² More simply stated, an incident means that something bad has happened, or is currently happening on your network. This might include the root-level compromise of a computer, a simple malware installation, a denial of service attack, or the successful execution of malicious code from a phishing e-mail. Keep in mind that all incidents include one or more events, but most events will not directly represent an incident.

Intrusion Detection

Prior to the coining of the term NSM, the detect domain was typically described simply as Intrusion Detection. Although NSM has been around for nearly ten years, these terms are often used interchangeably. These are not synonyms, but rather, intrusion detection is a component of modern NSM.

The detect domain built solely around the old paradigm of intrusion detection often had a few distinct characteristics:

Vulnerability-Centric Defense. The most common model of a computer network attack is an attacker breaking into a network by exploiting a software vulnerability. Since this model is so simple and clear cut, it’s what most early intrusion detection programs were built around. The intrusion detection system (IDS) is deployed with the goal of detecting the exploitation of these vulnerabilities.

Detection in Favor of Collection. The majority of effort placed on this domain lies within detection. While data collection was occurring, it was often unfocused and collection strategies weren’t tied to detection goals. A lack of focus on collection often fostered the mindset “too much data is always better than not enough” and “capture everything and sort it out later”.

Mostly Signature-Based. The exploitation of a software vulnerability is often a fairly static action that can be developed into an IDS signature rather easily. As such, traditional intrusion detection relied on having knowledge of all known vulnerabilities and developing signatures for their detection.

Attempts to Fully Automate Analysis. The simplistic vulnerability-centric intrusion detection model lends itself to the belief that most IDS generated alerts can be trusted with reasonably high confidence. As such, this paradigm often relies on little involvement by human analysts, and attempts to automate post-detection analysis as much as possible.
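The signature-based characteristic described above can be sketched in a few lines. This is a deliberately simplified stand-in, not the rule format or matching engine of any real IDS; the Signature class, the signature IDs, and the byte patterns are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Signature:
    sid: int            # hypothetical signature identifier
    description: str
    pattern: bytes      # static byte sequence that indicates the exploit


# Hypothetical signatures, each tied to one known exploitation technique.
SIGNATURES = [
    Signature(1000001, "directory traversal attempt", b"../../"),
    Signature(1000002, "long NOP sled", b"\x90" * 16),
]


def match_signatures(payload, signatures=SIGNATURES):
    """Return the IDs of all signatures whose pattern appears in the payload."""
    return [sig.sid for sig in signatures if sig.pattern in payload]


known_attack = match_signatures(b"GET /../../etc/passwd HTTP/1.1")
benign = match_signatures(b"GET /index.html HTTP/1.1")
# The same attack with URL-encoded slashes no longer contains the pattern.
encoded_variant = match_signatures(b"GET /..%2f..%2fetc/passwd HTTP/1.1")
```

The known attack matches and the benign request does not, but the encoded variant also goes unmatched. A static signature detects only the form of the exploit that its author anticipated.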

While moderately successful in its time, the current state of security has led us to a point where traditional intrusion detection isn’t effective. The primary reason for this is the failure of the vulnerability-centric defense.

Bejtlich provides one of the better explanations for this.² Consider a scenario where several houses in a neighborhood experience break-ins. When this happens, the police could respond by putting up barbed wire fences around the other houses in the neighborhood. They could also install large steel doors on all of the houses or put bars on all of the windows. This would be considered a vulnerability-centric approach. It’s not surprising that you don’t often hear of law enforcement doing such things. That’s because the criminals will simply find other vulnerabilities in the houses to exploit if they are determined and are targeting that specific neighborhood.

Network Security Monitoring

NSM has advanced in large part thanks to the military, which has traditionally been one of the biggest proponents of this defensive mindset. That’s no real surprise given the military’s extensive use of information technology, the critical importance of their operations, and the high confidentiality of the data they generate.

United States Information Operations (IO) doctrine³ mentions that a commander’s IO capabilities should be used to accomplish the following:

• Destroy: To damage a system or entity so badly that it cannot perform any function or be restored to a usable condition without being entirely rebuilt.

• Disrupt: To break or interrupt the flow of information.

• Degrade: To reduce the effectiveness or efficiency of adversary command, control, or communication systems, and information collection efforts or means. IO can also degrade the morale of a unit, reduce the target’s worth or value, or reduce the quality of adversary decisions and actions.

• Deny: To prevent the adversary from accessing and using critical information, systems, and services.

• Deceive: To cause a person to believe that which is not true. Seeking to mislead adversary decision makers by manipulating their perception of reality.

• Exploit: To gain access to adversary command and control systems to collect information or to plant false or misleading information.

• Influence: To cause others to behave in a manner favorable to friendly forces.

• Protect: To take action to guard against espionage or capture of sensitive equipment and information.

• Detect: To discover or discern the existence, presence, or fact of an intrusion into information systems.

• Restore: To bring information and information systems back to their original state.

• Respond: To react quickly to an adversary’s or others’ IO attack or intrusion.

Many of these goals are interconnected. The majority of NSM is dedicated to Detect in an effort to better Respond. On occasion, this may include elements of other areas. In this book, we will touch on deception and degradation to some extent when we talk about honeypots.

The detect portion of this IO doctrine also lines up with the US DoD definition of Attack Sense and Warning (AS&W).⁴ AS&W is the detection, correlation, identification and characterization of intentional unauthorized activity, including computer intrusion or attack, across a large spectrum coupled with the notification to command and decision-makers so that an appropriate response can be developed. AS&W also includes attack/intrusion related intelligence collection tasking and dissemination; limited immediate response recommendations; and limited potential impact assessments.

NSM is considered the new paradigm for the detect domain and has its own set of characteristics that are drastically different from those of traditional intrusion detection:

Prevention Eventually Fails. One of the hardest realities for an individual with the defender’s mindset to accept is that they will eventually lose. No matter how strong your defenses are or what proactive steps have been taken, eventually a motivated attacker will find a way to get in.

Beyond information security, the reality is that the defender will always be playing catch-up. When the defender builds a stronger bunker, the attacker builds a bigger bomb. When the defender starts using a bulletproof vest, the attacker starts using armor-piercing bullets. It should be no surprise that when a defender deploys enterprise-grade firewalls or ensures that their servers are fully patched, the attacker will use social engineering attacks to gain a foothold on the network or use a zero-day exploit to gain root access to a patched server.

Once someone accepts that they will eventually be compromised, they can shift their mindset to one that doesn’t solely rely on prevention, but rather, puts an additional focus on detection and response. In doing this, when the big compromise happens, your organization is positioned to respond effectively and stop the bleeding.

Focus on Collection. The previous mindset where all data sources that are available were collected and thrown into a central repository has resulted in deployments that are incredibly cost ineffective to manage. Not only that, but they don’t provide any real value because the right types of data aren’t available and the detection tools can’t scale with the amount of data they are forced to contend with.

If an ounce of prevention is worth a pound of cure, then I wouldn’t hesitate to say that an ounce of collection is worth a pound of detection. In order to perform any type of detection or analysis, you must have data to parse. If you can perform the same level of detection with less data, then you are saving CPU cycles and being more efficient. Furthermore, if you can provide the human analyst with only the data they need, they can make sound decisions much faster, which can make the difference between a small compromise and a full-on data breach.

Cyclical Process. Old paradigm intrusion detection is a linear process. You receive an alert, you validate the alert, you respond as necessary, and then you are done. This linear process is both naive and irresponsible. Placing every network security incident in a vacuum does not serve the purpose of defending the network. Although some compromises do take place in a matter of seconds, skilled attackers are often slow and methodical, sometimes taking months to manifest the goals of their attack.

In order to move away from this vacuum approach, it is necessary that the process of detecting and responding to intrusion be cyclical. That means that collection should feed detection, detection should feed analysis, and analysis should feed back into collection. This allows the defender to build intelligence over time that may be used to better serve the defense of the network.

Threat-Centric Defense. All of the characteristics I’ve discussed thus far have led to the concept of threat-centric defense. Whereas vulnerability-centric defense focuses on the “how”, threat-centric defense focuses on the “who” and “why”. Specifically, you must ask yourself who would be interested in attacking your network, and why would they stand to gain from such an action?

Threat-centric defense is much harder to perform than its predecessor. This is because it requires two things: extensive visibility into your network, and the ability to collect and analyze intelligence related to the intent and capability of attackers. The former of these is incredibly easy to accomplish for just about any organization with a proper time investment. The latter is much harder when you are operating in any industry other than the federal government, but it is certainly not impossible.

Consider the scenario we discussed previously of robberies in a neighborhood. Instead of a vulnerability-centric approach that may involve additional prevention mechanisms such as barbed wire fences and steel doors, in a threat-centric approach, the police closely examine the houses that were broken into. They look for similarities, or indicators that are common amongst the break-ins, to include a determination of the attacker’s perceived goals. With this intelligence, the police can build a profile of the criminals. Combining this intelligence into something resembling a threat profile, law enforcement can then check prior arrest records to see if they can locate criminals who have used similar tactics in the past. This type of analysis combined with other forms of attribution can ultimately lead to the arrest of the criminal, preventing further break-ins. This approach is the essence of threat-centric defense and NSM.
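The step of looking for indicators that are common amongst the break-ins can be sketched as a set intersection. The incident names and indicator strings below are invented for the neighborhood analogy; in practice the indicators would be things like IP addresses, domain names, or file hashes.

```python
def common_indicators(incidents):
    """Return the indicators that were observed in every incident."""
    indicator_sets = [set(indicators) for indicators in incidents.values()]
    if not indicator_sets:
        return set()
    return set.intersection(*indicator_sets)


# Hypothetical observations from three break-ins.
incidents = {
    "house_a": {"entry: rear window", "time: weekday afternoon", "taken: jewelry"},
    "house_b": {"entry: rear window", "time: weekday afternoon", "taken: electronics"},
    "house_c": {"entry: rear window", "time: weekday afternoon", "taken: jewelry"},
}

# The shared indicators form the start of a threat profile.
profile = common_indicators(incidents)
```

In this example the profile contains the entry method and the time of day, since both appear in every break-in, while the items taken vary and so drop out.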

Vulnerability-Centric vs. Threat-Centric Defense

Consider a hockey match where your goal is defended by either a brick wall or a goalie. Initially, the brick wall might seem like the best option. Someone who thinks vulnerability-centric prefers a brick wall. The brick wall seems solid at first because it protects most of the goal and the attacker can only get in if they break through it. Over time, however, shots do break through the brick wall. Eventually, entire bricks might get knocked out. Sure, you can replace the bricks, but while you are replacing one brick, another might get knocked loose.

Someone who thinks threat-centric prefers to have a goalie backing them up. Sure, it’s very important that the goalie stops all of the shots. However, when the occasional shot does beat the goalie, the goalie will notice that the shot was low and on the stick side. The next time the goalie encounters the same shooter, you better believe that they will be keeping an eye on the low stick side and will be a lot less likely to allow that to happen again.

The key difference is that the brick wall never changes its tactics and never learns. The goalie, on the other hand, learns the habits of a particular shooter. The goalie learns, the goalie adapts, and the goalie thrives.

Although vulnerability-centric defense and threat-centric defense both seek to defend the network, they attempt it in different ways. Table 1.1 outlines the differences I’ve just discussed.

Table 1.1

Vulnerability-Centric vs. Threat-Centric Defense

| Vulnerability Centric | Threat Centric |
| --- | --- |
| Relies on prevention | Knows that prevention eventually fails |
| Focus on detection | Focus on collection |
| Assumes universal view of all threats | Knows that threats use different tools, tactics, and procedures |
| Analyzes every attack in a vacuum | Combines intelligence from every attack |
| Heavy reliance on signature-based detection | Utilizes all-source data |
| Minimal ability to detect unknown threats | Stronger ability to detect adversarial activities beyond known signatures |
| Linear process | Cyclical process |

The NSM Cycle: Collection, Detection, and Analysis

The NSM Cycle consists of three distinct phases: Collection, Detection, and Analysis. This book is organized into three sections, one for each of these phases (Figure 1.1).

Collection

The NSM cycle begins with its most important step, collection. Collection occurs with a combination of hardware and software that are used to generate, organize, and store data for NSM detection and analysis. Collection is the most important part of this cycle because the steps taken here shape an organization’s ability to perform effective detection and analysis.

There are several types of NSM data and several ways it can be collected. The most common categories of NSM data include Full Content Data, Session Data, Statistical Data, Packet String Data, and Alert Data. Depending on organizational needs, network architecture, and available resources, these data types may be used primarily for detection, exclusively for analysis, or for both.
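To give a feel for how these data types differ in size and detail, the sketch below collapses individual packets into session-style records. It assumes that session data means a summary of the communication between two endpoints, keyed on source, destination, ports, and protocol. The tuple layout and the function name are hypothetical, and the sketch treats each direction of a conversation as its own record for simplicity.

```python
from collections import defaultdict


def summarize_sessions(packets):
    """Collapse (src, sport, dst, dport, proto, length) tuples into per-flow totals."""
    sessions = defaultdict(lambda: {"packets": 0, "bytes": 0})
    for src, sport, dst, dport, proto, length in packets:
        key = (src, sport, dst, dport, proto)
        sessions[key]["packets"] += 1
        sessions[key]["bytes"] += length
    return dict(sessions)


# Hypothetical packet metadata; full content data would also keep every payload byte.
packets = [
    ("10.0.0.5", 49152, "192.0.2.10", 80, "tcp", 60),
    ("10.0.0.5", 49152, "192.0.2.10", 80, "tcp", 1500),
    ("10.0.0.8", 53124, "192.0.2.53", 53, "udp", 72),
]

sessions = summarize_sessions(packets)
```

Three packets become two small records. The payloads are gone, but who talked to whom, and how much, is retained at a fraction of the storage cost.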

Initially, collection can be one of the more labor-intensive parts of the NSM cycle due to the amount of human resources required. Effective collection requires a concerted effort from organizational leadership, the information security team, and network and systems administration groups.

Detection

Detection is the process by which collected data is examined and alerts are generated based upon observed events and data that are unexpected. This is typically done through some form of signature, anomaly, or statistically based detection. This results in the generation of alert data.

Detection is most often a function of software, with some of the more popular software packages being the Snort IDS and Bro IDS from a network intrusion detection system (NIDS) perspective, and OSSEC, AIDE or McAfee HIPS from a host intrusion detection system (HIDS) perspective. Some Security Information and Event Management (SIEM) applications will utilize both network and host-based data to do detection based upon correlated events.

Although the bulk of detection is done by software, some detection does occur by manual analysis of data sources. This is especially the case with retrospective analysis.

Analysis

Analysis is the final stage of the NSM cycle, and it occurs when a human interprets and investigates alert data. This will often involve gathering additional investigative data from other data sources, researching open source intelligence (OSINT) related to the type of alert generated by the detection mechanism, and performing OSINT research related to any potentially hostile hosts.

There are multitudes of ways that analysis can be performed, but this may include tasks such as:

Analysis is the most time-consuming portion of the NSM cycle. At this point an event may be formally escalated to the classification of an incident, wherein incident response measures can begin.

The loop on the NSM Cycle is closed by taking the lessons learned from the detection and analysis phases for any given anomaly and further shaping the collection strategy of the organization.
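The feedback loop can be sketched as three small functions whose output feeds the next pass. This is a toy model under stated assumptions: the collection strategy is reduced to a set of watched destination ports, detection to a list of known hostile hosts, and the analyst to a function that widens collection based on what an investigation turns up. All names and addresses are hypothetical.

```python
def collect(events, watched_ports):
    """Collection: keep only the events the current strategy says to store."""
    return [e for e in events if e["dport"] in watched_ports]


def detect(collected, hostile_hosts):
    """Detection: generate alert data for collected events involving a hostile host."""
    return [e for e in collected if e["dst"] in hostile_hosts]


def analyze(alerts, investigative_data):
    """Analysis (stand-in for a human): find other ports the alerting hosts were contacted on."""
    alerted_hosts = {a["dst"] for a in alerts}
    return {e["dport"] for e in investigative_data if e["dst"] in alerted_hosts}


def run_cycle(events, watched_ports, hostile_hosts):
    """One pass of the cycle; returns the alerts and the updated collection strategy."""
    collected = collect(events, watched_ports)
    alerts = detect(collected, hostile_hosts)
    lessons = analyze(alerts, events)
    return alerts, watched_ports | lessons


events = [
    {"src": "10.0.0.5", "dst": "203.0.113.7", "dport": 80},
    {"src": "10.0.0.5", "dst": "203.0.113.7", "dport": 8443},
    {"src": "10.0.0.8", "dst": "198.51.100.2", "dport": 443},
]
hostile_hosts = {"203.0.113.7"}

first_alerts, strategy = run_cycle(events, {80}, hostile_hosts)
second_alerts, strategy = run_cycle(events, strategy, hostile_hosts)
```

The first pass produces a single alert because only port 80 is collected. Analysis of that alert adds port 8443 to the strategy, so the second pass catches activity the first one never saw.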

Challenges to NSM

As with any paradigm shift, the introduction of NSM and threat-centric security has been met with a fair share of challenges. The primary issue is that NSM is an immature science in itself, and it exists within another immature science that is information technology as a whole. While some effort has been put forth to standardize various nomenclature and protocols, there is still a wide disparity in what is written and what is actually implemented. This is evident in the operating systems we use, the applications that run on them, and the protocols they talk to each other with.

Focusing on information security specifically, a conversation about the same topic with three different people may use three different sets of nomenclature. This is incredibly limiting from a training perspective. One of the reasons the medical field is so successful in training new physicians is that regardless of what medical school someone graduates from, they all (in theory) have the same baseline level of knowledge prior to entering residency. Further, based upon standardization of residency program requirements and medical board testing, all resident physicians are expected to maintain a similar level of competency in order to practice medicine as an attending physician. This is all based upon acceptance of common theory, practice, and requirements. The fact that NSM lacks this regulation means that we have a group of practitioners that often speak on different wavelengths. Furthermore, although these practitioners are saying the same thing, they are often speaking different languages. Again, medicine has a few thousand years of a jump start on NSM, so it’s something that we have made and will continue to make great strides in, but for now, it’s a problem that won’t likely be going away soon.

Another issue plaguing NSM is the amount of skill required to practice effectively. Simply put, there aren’t enough people with the experience and knowledge required to meet demand. In a struggling economy where a large number of people are having difficulty finding employment, it is staggering to see the large number of jobs available for someone with NSM or other similar skills. Although NSM can certainly be an entry-level security job, it requires experience to be done at a senior level in order to guide junior staff members. These mid- to senior-level staffers are quite hard to retain, as they often end up in higher paying consulting roles or migrate to some sort of management position.

A final issue worth mentioning as a large challenge to the advancement of NSM is the cost required to establish and maintain an NSM program. Although this high cost of entry is usually associated with the hardware required to collect and parse the amount of data generated from NSM functions, the bulk of the cost is commonly a result of the workforce required to do the analysis portion of NSM, and to support the NSM infrastructure used by the analysts. This is compounded for larger organizations that require 24 × 7 × 365 NSM. Unfortunately, another cost point is added for organizations that require the use of commercial SIEM software. Although these packages aren’t always necessary, when they are deemed an organizational “must” they can often be accompanied by six- to seven-figure price tags.

Defining the Analyst

The biggest defining characteristic of an NSM program is the human analyst. The analyst is the individual who interprets alert data, analyzes and investigates that data along with related data, and determines whether the event is a false positive or requires further investigation. Depending on the size and structure of the organization, an analyst may also take part in the incident response process or perform other tasks such as host-based forensics or malware analysis.

The human analyst is the crux of the organization. It is the analyst who is poring through packet captures looking for a single bit that’s out of place. This same analyst is expected to be up to date on all of the latest tools, tactics, and procedures that the adversary may use. The simple fact is that the security of your network depends on the human analysts’ ability to do their job effectively.

Critical Skills

There are several important skills that an analyst should have. I generally define baseline knowledge that is good for all analysts to possess, and then define areas of specialization that will set an analyst apart. In an ideal world, an analyst would have two or three areas of specialization, but in practice, when I’ve managed teams, I’ve asked analysts to have at least one.

Baseline Skills

Specializations

There are several specializations that an analyst might possess. Some of these include:

Offensive Tactics. This specialty generally focuses on penetration testing and security assessments. Analysts specializing in this area will attempt to gain access to attack the network in the same way an adversary would. These types of exercises are crucial for identifying weaknesses in the way other analysts perform their duties. In addition, analysts who are knowledgeable in offensive tactics are typically better equipped to recognize certain attacker activity when performing NSM analysis. Specific knowledge and skills useful to the offensive tactics specialty include network reconnaissance, software and service exploitation, backdoors, malware usage, and data exfiltration techniques.

Defensive Tactics. The defensive tactician is the master of detection and analysis. This specialty usually involves the analyst conceptualizing new development tools and analytic methods. This analyst will also be counted on to keep abreast of new tools and research related to network defense, and to evaluate those tools for use within the organization’s NSM program. Specific knowledge and skills useful to the defensive tactics specialty include a more detailed knowledge of network communication, extensive knowledge of IDS operation and mechanics, IDS signatures, and statistical detection.

Programming. Being able to write code is a useful ability in almost any facet of information technology, especially in information security and NSM. An analyst who is proficient in programming will be able to develop custom detection and analysis solutions for an NSM team. Additionally, this person will often be very good at parsing large data sets. Generally, someone who chooses to specialize in programming for the purposes of NSM should have a very strong understanding of the Linux Bash environment. Once they have done this, they should become well versed in an interpreted language such as Python or Perl, a web language such as PHP or Java, and eventually, a compiled language such as C or C++.

Systems Administration. Although systems administration itself is a more general skill, it is possible to specialize in systems administration as it relates to NSM. Analysts with this specialty are heavily involved with collection processes such as configuring IDS and moving data around so that it may be properly ingested by various detection software packages. An analyst may also perform sensor hardening and the development of friendly host intelligence collection. An in-depth knowledge of both Windows and Linux platforms is the basis for the specialization, along with an adept understanding of data and log collection.

Malware Analysis. Performing NSM will frequently result in the collection of known and suspected malware samples. It should be expected that any analyst could do basic malware sandboxing in order to extract indicators, but if an organization ever detects the use of targeted malware, it is immensely valuable to have someone with the ability to perform a higher level of malware analysis. This includes knowledge of both dynamic and static analysis.

Host-Based Forensics. An individual specializing in host-based forensics gains intelligence from an asset that has been compromised by doing a forensic analysis of the host. This intelligence can then be used to refine the collection processes within the organization. This knowledge can also be used to evaluate and implement new host-based detection mechanisms, and to generate new indicators of compromise based upon the analysis of host-based artifacts. Useful skills in this specialty include hard drive and file system forensics, memory forensics, and incident timeline creation.

Classifying Analysts

Generally, I’ve seen most organizations classify analysts as either junior or senior level based upon their years of experience. I prefer a more discrete method of classifying analysts based upon three levels of ability. This is useful for hiring and scheduling, as well as providing analysts achievable goals to advance their careers. This type of model doesn’t necessarily fit within every organization, but it provides a good starting point.

Level One (L1) Analyst

The entry-level analyst is considered to be at L1. This analyst possesses a reasonable grasp on several of the baseline skills listed previously, but will likely not have settled into any particular specialization. A typical L1 will spend the majority of their time reviewing IDS alerts and performing analysis based upon their findings. The biggest factor that can contribute to the success of an L1 is getting more experience under their belt. The more protocols, packets, and events that are seen, the better an analyst is equipped to handle the next event that comes down the wire. This can be related to the career advancement of a surgeon, who becomes better with every surgery they perform. In most organizations, the majority of analysts fall within the L1 classification.

Level Two (L2) Analyst

The L2 analyst is one who has a solid grasp of the majority of the baseline skills. Usually, this analyst has selected at least one specialization and has begun to spend time outside of normal event review and investigation trying to enhance their skills in that area. The L2 serves as a mentor to the L1, and will begin to identify ‘best practices’ within the scope of an organization’s NSM program. The L2 will become increasingly involved with helping to shape the detection processes within the team by creating signatures based upon other network events or OSINT research. The L2 analyst also develops the ability to look through various data sources manually to attempt to find potential events instead of solely relying upon automated detection tools.

Level Three (L3) Analyst

The level three (L3) analyst is the most senior analyst within an organization. These analysts are adept at all of the baseline skills and at least one specialty. They are the thought leaders within the organization and rather than spending their time reviewing events, they are primarily tasked with mentoring other analysts, developing and providing training, and providing guidance on complex investigations. The L3 analyst is also primarily responsible for helping to develop and enhance the organization’s collection and detection capabilities, which may include conceptualizing or developing new tools, as well as evaluating existing tools.

Measuring Success

Most organizations measure the success or failure of an NSM program incorrectly. If a compromise occurs, senior management views this as a critical failure of their security team as a whole. Under a vulnerability-centric model where prevention is relied upon fully, this might be an appropriate thought pattern. However, once an organization accepts that prevention eventually fails, they should also expect compromises to occur. Once this mindset becomes prevalent, you should not measure the effectiveness of an NSM program by whether a compromise occurs, but rather, by how effectively it is detected, analyzed, and escalated. In the scope of an intrusion, NSM is ultimately responsible for everything that occurs from detection to escalation, with the goal of getting the appropriate information to incident responders as quickly as possible once it is determined that an incident has occurred. Of course, in all but the larger organizations the NSM team may also be the incident response team, but the functions are still logically separate. Ultimately, instead of asking “why did this happen?”, the questions leadership should be asking your NSM team after a compromise are, “how quickly were we able to detect it, how quickly were we able to escalate it to response, and how can we adjust our NSM posture to be better prepared next time?”
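The two "how quickly" questions above reduce to simple interval arithmetic once an incident's key timestamps are recorded. The following is a minimal sketch, assuming three hypothetical timestamps per incident (when the compromise began, when it was detected, and when it was escalated to response); the function name, field names, and sample values are illustrative only and are not drawn from any particular tool.

```python
from datetime import datetime


def response_intervals(compromised_at, detected_at, escalated_at):
    """Return the detection and escalation intervals for one incident.

    All three arguments are datetime objects and must be in
    chronological order.
    """
    if not compromised_at <= detected_at <= escalated_at:
        raise ValueError("timestamps must be in chronological order")
    return {
        "time_to_detect": detected_at - compromised_at,
        "time_to_escalate": escalated_at - detected_at,
    }


# Hypothetical incident used purely for illustration.
intervals = response_intervals(
    compromised_at=datetime(2013, 6, 1, 9, 0),
    detected_at=datetime(2013, 6, 1, 13, 30),
    escalated_at=datetime(2013, 6, 1, 14, 15),
)
for name, value in intervals.items():
    print(f"{name}: {value}")
```

Tracking these intervals across incidents gives leadership a trend to review rather than a single pass or fail verdict.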

Most readers of this book will be analysts rather than managers, but I’ve included this section so that its contents may be shared with management, and in the hopes that readers may one day be in a position to impact some of these changes.

The most important part of an NSM program, and the people who will ultimately be responsible for answering these questions, are the human analysts. I’ve had the privilege to work with and observe several security teams from organizations of all sizes, and I’ve seen several good programs and several bad programs. There are a lot of ways to create a great NSM team, but all of the organizations that I’ve witnessed failing at providing effective security through NSM have one thing in common: they fail to recognize that the human analyst is the most important facet of the mission.

Rather than investing in and empowering the analysts, these organizations invest in expensive software packages or unnecessary automation. Two years down the road when a large compromise happens, the stakeholders who made these decisions are left wondering why their SIEM solution and its seven-figure price tag didn’t catch a compromise that started six months prior.

Worse yet, these organizations will scrimp on staffing until they only utilize entry-level staff without the required experience or background to perform the task at hand. Although some entry-level staffers are expected, a lack of experienced technical leadership means that your junior level analysts won’t have an opportunity to grow their expertise. These are often the same organizations that refuse to provide adequate training budgets, whether this is financial budgeting or time budgeting.

There are several common traits amongst successful NSM teams:

Create a Culture of Learning

NSM thrives on ingenuity and innovation, which are the products of motivation and education. It is one thing to occasionally encourage education with periodic training opportunities, but it is a completely different animal to create an entire work culture based on learning. This means not only allowing for learning, but facilitating, encouraging, and rewarding it.

This type of culture requires overcoming a lot of the resistance associated with a typical workplace. In a traditional workplace, it might be frowned on to walk into an office and see several employees reading books or working on personal technical projects that don’t relate to reviewing events or packets. It might even be unfathomable for the majority of the staff to abscond from their desks to discuss the finer points of time travel in front of a whiteboard. The truth of the matter is that these things should be welcomed, as they increase morale and overall happiness, and at the end of the day your analysts go home with an excitement that makes them want to come back with fresh ideas and renewed motivation the next day.

Although some members of the old guard will never be able to accept such a work environment, it’s proven to be very successful. Google is an example of an organization that has created a successful culture of learning, and a large portion of their success is directly related to that.

This mantra of a culture of learning can be summed up very simply. In every action an analyst takes, they should either be teaching or learning. No exceptions.

Emphasize Teamwork

It’s a bit cliché, but the team dynamic ensures mutual success over individual success. This means that team building is a must. Ensuring team cohesiveness starts with hiring the right people. An individual’s capacity to perform is important, but their ability to mesh with existing team members is of equal importance. I’ve seen multiple instances where one bad apple has soured the whole bunch.

At some point, something bad is going to happen that requires an extensive time commitment from all parties involved. Analysts who trust each other and genuinely enjoy spending time together are going to be much more effective at ensuring the incident is handled properly. As an added bonus, a cohesive team will help promote a learning culture.

Provide Formalized Opportunities for Professional Growth

One of the biggest fears of managers is that their staff will become highly trained and certified and then leave the organization for greener pastures. Although this does happen, it shouldn’t steer an organization away from providing opportunities.

In interviewing NSM analysts who have left various organizations, it’s rarely ever something as simple as a higher salary that has caused them to jump ship. Rather, they almost always cite that they weren’t provided enough opportunity for growth within their organization. Generally, people don’t like change. Changing jobs, especially when it involves relocating, is a big step and something people would generally like to avoid if at all possible. This means that you are likely to keep your staff if you can provide opportunities for professional certifications, advancements in position, or migrations to management roles. Simply having a clearly defined path for this type of advancement can often make the difference. This is one of the reasons why having something like the L1/L2/L3 classification system can benefit an organization.

Encourage Superstars

Information security is notorious for having a culture of people with incredibly large egos. Although there is something to be said for being humble, you can’t change the personal traits that are built into someone and you have to do your best to work with it. If your organization has an employee with a big ego, then turn him into a superstar. People who have an excessive amount of confidence typically desire to succeed in a big way, so if you can make this happen then they will thrive. This is done by challenging them, providing learning opportunities, and instilling responsibility in them. A superstar is rare, so some will flounder when it’s crunch time. If this happens, then the reality check often serves to lessen a big ego. If the person continually succeeds, then you’ve found your superstar.

Once you have a superstar, people will want to imitate their success. Their greatness pushes others to be more than they thought they were capable of, and everybody benefits. As long as your superstar isn’t negatively impacting others by being rude, abrasive, or otherwise overbearing, then he is an asset. The difference between Allen Iverson and Kobe Bryant is that Allen Iverson played great, whereas Kobe Bryant made everyone around him great. That’s why Iverson’s 76ers didn’t win any championships, and Bryant’s Lakers won five, during their respective tenures. Make your superstar into a Kobe Bryant.

Reward Success

Positive reinforcement can be a monumental difference maker in morale. If an analyst finds something that nobody else found, everybody should know about it. Furthermore, if an analyst stays late for five hours to follow up on an incident, you should let them know you appreciate their efforts. The mechanism for reward doesn’t particularly matter as long as it is something desirable.

Learn from Failure

Analytical work can get mundane quickly. This is especially the case in a smaller environment where there simply aren’t as many events or significant attacks occurring. In these conditions, it becomes very easy for analysts to miss something. When that happens, instead of punishing the entire group, take it as another learning opportunity.

Exercise Servant Leadership

The most successful organizations I’ve had the privilege to work with are those that practice the concept of servant leadership. Servant leadership is something that has been around for quite a while, and as a University of Kentucky basketball fan, I was introduced to it by UK coach John Calipari.

The premise of servant leadership is that rather than establishing leadership based upon a title or some given authority, servant leaders achieve results by giving priority to the needs of their colleagues. This humble mindset is one in which you look to help others achieve their mission so that the organization will prosper. This has the potential to breed an organization that isn’t anchored by one strong leader, but rather, a group of leaders with different strengths and weaknesses working in harmony to achieve a common mission. Although it sounds like a lofty goal, with the right mindset and buy in from all parties involved, this type of environment can become a reality.

Security Onion

Moving forward, this book will go beyond theory and contain several practical demonstrations and examples. To level the playing field, I’ve chosen to do all of these demonstrations using Security Onion, a Linux distribution designed for intrusion detection and NSM. Security Onion is the product of Doug Burks and a handful of contributors, and is one of my absolute favorite teaching and learning tools. With its simple setup process, you can have a full NSM collection, detection, and analysis suite deployed in less than 15 minutes. The value of Security Onion goes well beyond that of an educational tool, as I’ve seen several smaller organizations leverage it for production use as well. As a matter of fact, I use it for my home office and personal networks.

Initial Installation

If you plan on following along with the exercises in this book, then I recommend downloading and installing Security Onion (SO). It already has several of the tools I will discuss preinstalled, including Snort, Bro, Argus, and more. If you have an old physical machine lying around and a couple of extra network cards, then you can actually place it on your home network to examine real traffic. However, for the purposes of this book, installing Security Onion into a virtual machine is perfectly acceptable. VMware Player or VirtualBox work great for this.

Once you have virtualization software set up, you will want to download the Security Onion ISO file. The latest version of this file can be found linked from http://securityonion.blogspot.com/. This page also contains a great number of helpful resources for installing and configuring various aspects of Security Onion. Once you’ve completed the download, follow these steps in order to get Security Onion up and running:

1. Create a new virtual machine within the virtualization platform you are using. It is recommended that you provide at least 1 GB of RAM for each monitored network interface, and a minimum of 2 GB total. You should also ensure your network interfaces are connected to the virtual machine at this time.

2. Mount the downloaded ISO as a virtual CD/DVD drive in your virtualization software.

3. When you start the VM, allow it to boot fully into the live operating system. Once this process completes, select the “Install SecurityOnion” icon on the desktop to begin installing the operating system to the virtual disk.

4. Follow the prompts presented to you by the Xubuntu installer. During this installation, you will be asked for a number of items, including how you would like to configure disk partitioning, the time zone you are located in, Internet connectivity, the name of the system, and a username and password for your user account (shown in Figure 1.2). These options can be configured to your liking, but it is important that you do not choose the option to encrypt your home folder, and that you do not enable automatic updates. Both of these options are disabled by default. Once you have completed the Xubuntu installation, you will be prompted to reboot the system.

Figure 1.2 Configuring User Information During Security Onion Installation

This completes the operating system installation process.
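The memory guidance in step 1 is a small piece of arithmetic: 1 GB per monitored interface, with a floor of 2 GB. A minimal sketch of that rule follows; the function name is hypothetical, and the result is only the minimum suggested by the text, not a tuned value for busy networks.

```python
def recommended_ram_gb(monitored_interfaces: int) -> int:
    """Return a minimum RAM allocation in GB for a Security Onion VM.

    Rule from the text: at least 1 GB per monitored interface,
    and never less than 2 GB in total.
    """
    if monitored_interfaces < 0:
        raise ValueError("interface count cannot be negative")
    per_interface_gb = 1  # 1 GB for each monitored interface
    minimum_total_gb = 2  # floor recommended for any installation
    return max(minimum_total_gb, monitored_interfaces * per_interface_gb)


for count in (1, 2, 4):
    print(f"{count} monitored interface(s): {recommended_ram_gb(count)} GB")
```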

Updating Security Onion

Once you’ve completed the operating system installation and the machine reboots, the next step is ensuring Security Onion is up to date. Even if you’ve just downloaded the ISO, it is likely that there are updates to SO packages. An update can be initiated by issuing the following command from a command prompt:

sudo apt-get update && sudo apt-get dist-upgrade

This process may take a while depending on the number of updates released since the last ISO was generated. Once this is finished, you should then have a fully up to date installation of Security Onion.
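If you would rather script the update than type it, the same two commands can be driven from Python. This is only a sketch: the `run_update` name and the injectable `runner` parameter are assumptions made for illustration, and the stop-on-first-failure loop is meant to mirror what `&&` does in the shell.

```python
import subprocess

# The two commands from the text, in the order they must run.
UPDATE_STEPS = [
    ["sudo", "apt-get", "update"],
    ["sudo", "apt-get", "dist-upgrade"],
]


def run_update(runner=subprocess.run):
    """Run each update step in order, stopping at the first failure.

    Returns True only if every step exits with status 0, which is the
    same condition the shell's && operator enforces.
    """
    for step in UPDATE_STEPS:
        result = runner(step)
        if result.returncode != 0:
            return False
    return True
```

Calling `run_update()` on the Security Onion host runs both commands interactively; passing a different `runner` makes it possible to exercise the logic without touching the system.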

Running NSM Services Setup

In order to get NSM services up and running on Security Onion you must complete its automated setup process. Once you have logged into SO, follow these steps:

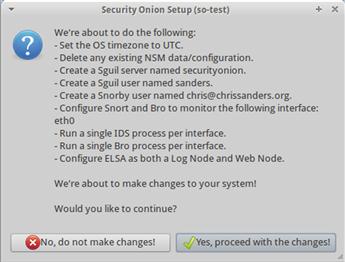

1. Initiate the setup process by clicking the “Setup” icon on the desktop.

2. After entering your password again, you will be prompted to configure /etc/network/interfaces. Select “Yes.” If you have multiple interfaces, you will be prompted to choose one interface as the management interface, which is the interface you will use to access the system. If you only have a single interface, that interface will be used for management. Proceed through this process by choosing the static IP address option and configuring the interface’s IP address, subnet mask, default gateway, DNS server address, and local domain name. You will be asked to confirm this information, and the system will be rebooted.

3. Initiate the setup process again by clicking the “Setup” icon on the desktop.

4. Skip the network configuration process since that has already been completed.

5. Choose “Quick Setup.” (You can select advanced setup, but the quick setup will suffice for our purposes here. Feel free to explore more advanced setup options on your own.)

6. If you have multiple interfaces, you will be prompted to select a monitoring interface. Select the appropriate interface(s).

7. Enter a username and password for use by various NSM services.

8. When prompted to enable ELSA, choose “Yes.”

9. Finally, you will be prompted to confirm the configuration of the sensor (Figure 1.3). Selecting “Yes, proceed with the changes!” will instruct SO to apply the changes.

Figure 1.3 Confirming the Setup Changes
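The static addressing chosen in step 2 ends up as a stanza in /etc/network/interfaces. As a rough sketch of what such a stanza looks like, the following renders one in the standard ifupdown layout and checks that the gateway sits inside the interface's subnet. The function name and every address below are hypothetical, and the file that the Security Onion setup script actually writes may contain additional lines.

```python
import ipaddress


def static_stanza(iface, address, netmask, gateway, dns_server, domain):
    """Render an ifupdown stanza for a statically addressed interface."""
    # Validate the inputs before producing any configuration text.
    network = ipaddress.ip_network(f"{address}/{netmask}", strict=False)
    if ipaddress.ip_address(gateway) not in network:
        raise ValueError("gateway is outside the interface's subnet")
    ipaddress.ip_address(dns_server)  # raises ValueError if malformed

    lines = [
        f"auto {iface}",
        f"iface {iface} inet static",
        f"    address {address}",
        f"    netmask {netmask}",
        f"    gateway {gateway}",
        f"    dns-nameservers {dns_server}",
        f"    dns-search {domain}",
    ]
    return "\n".join(lines) + "\n"


# Hypothetical values for a small lab network.
print(static_stanza("eth0", "192.168.1.50", "255.255.255.0",
                    "192.168.1.1", "192.168.1.1", "example.local"))
```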

Once you’ve completed this setup, Security Onion will provide you with the location of several important log and configuration files. If you encounter any issues with setup or notice that a service hasn’t started correctly, you can examine the setup log at /var/log/nsm/sosetup.log. The remainder of this book will assume you completed this setup with the quick setup configuration unless otherwise specified.
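When reviewing the setup log, a quick keyword scan can save some scrolling. The sketch below is an assumption-heavy starting point: the log's exact format is not described here, so the keywords "fail" and "error" are guesses at what a problem line might contain, and the helper name is hypothetical.

```python
from pathlib import Path

SETUP_LOG = Path("/var/log/nsm/sosetup.log")  # path given in the text
KEYWORDS = ("fail", "error")  # assumed markers, not a documented format


def suspicious_lines(lines, keywords=KEYWORDS):
    """Return (line_number, text) pairs for lines containing a keyword."""
    hits = []
    for number, line in enumerate(lines, start=1):
        lowered = line.lower()
        if any(keyword in lowered for keyword in keywords):
            hits.append((number, line.rstrip("\n")))
    return hits


try:
    with SETUP_LOG.open(errors="replace") as handle:
        found = suspicious_lines(handle)
except OSError as exc:
    # Expected when run anywhere other than a Security Onion host.
    print(f"could not read {SETUP_LOG}: {exc}")
else:
    for number, text in found:
        print(f"{number}: {text}")
```

An empty result does not prove that setup succeeded; it only means none of the assumed keywords appeared.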

Testing Security Onion

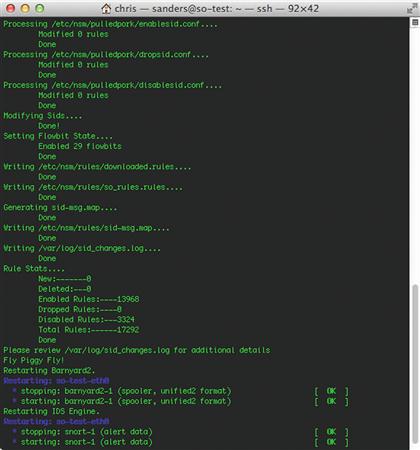

The fastest way to ensure that NSM services on Security Onion are running is to force Snort to generate an alert from one of its rules. Prior to doing this, I like to update the rule set used by Snort. You can do this by issuing the command sudo rule-update. This will use the PulledPork utility to download the latest set of rules from Emerging Threats, generate a new sid-map (used to map rule names to their unique identifiers), and restart Snort so that the new rules are applied. The partial output of this command is shown in Figure 1.4.

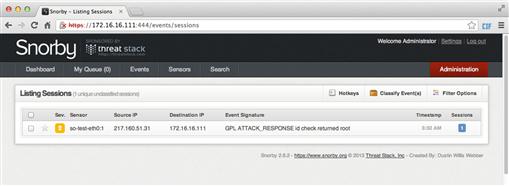
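To make the idea of a sid-map concrete, here is a minimal sketch of reading one into a lookup table. It assumes the simpler, older layout in which each line reads `sid || message || references`; PulledPork can also emit a newer layout with extra leading fields, which this sketch does not handle. The sample entries are hypothetical and are not copied from a real rule set.

```python
def parse_sid_map(lines):
    """Build a {sid: message} dictionary from 'sid || msg || ...' lines."""
    mapping = {}
    for line in lines:
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        fields = [field.strip() for field in line.split("||")]
        if len(fields) < 2 or not fields[0].isdigit():
            continue  # not in the assumed layout; ignore it
        mapping[int(fields[0])] = fields[1]
    return mapping


# Hypothetical entries used purely for illustration.
sample = [
    "# illustrative entries only",
    "1000001 || EXAMPLE first hypothetical rule || url,example.com/one",
    "1000002 || EXAMPLE second hypothetical rule",
]
sid_map = parse_sid_map(sample)
print(sid_map[1000001])
```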

To test the functionality of the NSM services, launch Snorby by selecting the Snorby icon on the desktop. You will be prompted to login with the e-mail address and password you provided during the setup process. Next, click the “Events” tab at the top of the screen. At this point, it’s likely this window will be empty.

In order to generate a Snort alert, open another tab within the browser window and browse to http://www.testmyids.com.

Now, if you switch back over to the tab with Snorby opened and refresh the Events page, you should see an alert listed with the event signature “GPL ATTACK_RESPONSE id check returned root” (Figure 1.5). If you see this alert, then congratulations! You’ve successfully set up your first NSM environment with Security Onion! Feel free to examine the alert by clicking on it and viewing the output in Snorby. We will return to examine Snorby more closely in later chapters.
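The signature name hints at why this test works: the rule looks for what the Unix id command prints when run as root, and the test page returns text of that shape. The sketch below illustrates the idea with a plain byte-string match. It assumes the match reduces to the string uid=0(root); the real Snort rule carries additional conditions (such as traffic direction) that are not modeled here, and the function name is hypothetical.

```python
# Byte pattern assumed to be at the heart of the rule's content match.
ROOT_ID_MARKER = b"uid=0(root)"


def looks_like_root_id_output(payload: bytes) -> bool:
    """Return True if the payload contains root's `id` output marker."""
    return ROOT_ID_MARKER in payload


# Text of the shape the test page is expected to return.
print(looks_like_root_id_output(b"uid=0(root) gid=0(root) groups=0(root)\n"))

# Output of `id` for an unprivileged, hypothetical user does not match.
print(looks_like_root_id_output(b"uid=1000(analyst) gid=1000(analyst)\n"))
```

Because the match is on content alone, any response containing that string can raise the alert, which is what makes a harmless web page a convenient test trigger.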

This alert should appear pretty quickly, but if you don’t see it after a few minutes, then something isn’t working correctly. You should reference the Security Onion website for troubleshooting steps, and if you are still running into trouble you should try the Security Onion mailing list or their IRC channel #securityonion on Freenode.

These processes are up to date as of Security Onion 12.04, which was the newest version available during the writing of this book. If you find that this process has changed since the book’s writing, then you should reference the SO wiki for up to date procedures: https://code.google.com/p/security-onion/w/list. We will come back to Security Onion many times throughout the course of this book, but if you’d like to learn more about it in the meantime, the SO wiki is the best resource.

Conclusion

This chapter introduced NSM and threat-centric security, along with several other related concepts. We also looked at Security Onion and detailed the process of installing and configuring an NSM environment in only a few minutes. If you are new to NSM, then it is incredibly important that you understand the concepts presented in this chapter, as they provide the foundation for the rest of Applied NSM. The remainder of this book is broken into the three parts of the NSM Cycle: collection, detection, and analysis.

1US Department of Defense Instruction 8500.2, Information Assurance (IA) Implementation (6 February 2003) - http://www.dtic.mil/whs/directives/corres/pdf/850002p.pdf.

2Bejtlich, Richard, TaoSecurity Blog, “Real Security is Threat Centric” (Nov 2009). http://taosecurity.blogspot.com/2009/11/real-security-is-threat-centric.html

3United States Department of Defense Joint Publication 3-13, “Information Operations” (13 February 2006). http://www.carlisle.army.mil/DIME/documents/jp3_13.pdf

4United States Department of Defense Directive O-8530.1, “Computer Network Defense (CND)” (8 January 2001). http://www.doncio.navy.mil/uploads/0623IYM47223.pdf