DB2 with BLU Acceleration and SAP integration

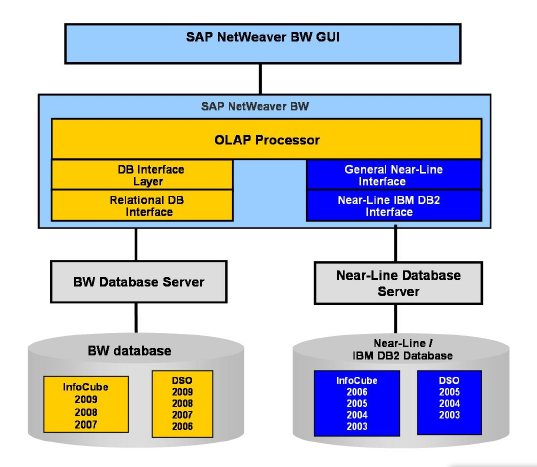

This chapter contains information about the integration of DB2 for Linux, UNIX, and Windows (DB2) with BLU Acceleration into SAP Business Warehouse (SAP BW) and into the SAP BW near-line storage solution (NLS). We list the SAP releases in which BLU Acceleration is supported and the SAP and DB2 software prerequisites.

We discuss ABAP Dictionary integration of BLU Acceleration and how to monitor the DB2 columnar data engine in the SAP DBA Cockpit, as well as the usage of BLU Acceleration in SAP BW in detail. We explain which SAP BW objects BLU Acceleration can be used with, how to create new SAP BW objects that use column-organized tables, and how to convert existing SAP BW objects from row-organized to column-organized tables.

We provide information about installing SAP BW systems on DB2 10.5 and about the handling of BLU Acceleration in migrations of SAP BW systems to DB2 10.5. Results from IBM internal lab tests give a description of performance and compression benefits that you can achieve with BLU Acceleration in SAP BW systems. We also provide preferred practices and suggestions for DB2 parameter settings when BLU Acceleration is used in SAP BW.

We explain is the usage of BLU Acceleration in SAP’s near-line storage solution on DB2 in detail, showing for which SAP BW objects in the near-line storage archive BLU Acceleration can be used, and how to create new data archiving processes (DAPs) that use column-organized tables in the near-line storage database.

Finally, we demonstrate installing an NLS database on DB2 10.5 and the preferred practices for DB2 parameter settings when using BLU Acceleration in SAP BW NLS systems.

The following topics are covered:

8.1 Introduction to SAP Business Warehouse (BW)

This section briefly describes the elements of the SAP BW information model. The information in this section is an excerpt from the SAP Help Portal for SAP BW (Technology → SAP NetWeaver Platform → 7.3 → Modeling → Enterprise Data Warehouse Layer)1.

SAP BW is part of the SAP NetWeaver platform. Key capabilities of SAP BW are the buildup and management of enterprise, and departmental data warehouses for business planning, enterprise reporting and analysis. SAP BW can extract master data and transactional data from various data sources and store it in the data warehouse. This data flow is defined by the SAP BW information model (Figure 8-1).

Furthermore, SAP BW is the basis technology for several other SAP products such as SAP Strategic Enterprise Management (SAP SEM), SAP Business Planning and Consolidation (SAP BPC).

Figure 8-1 SAP BW information model

8.1.1 Persistent Staging Area (PSA)

Usually, data extracted from source systems is first stored directly in the Persistent Staging Area (PSA) as it was received from the source system. PSA is the inbound storage area in SAP BW for data from the source systems. PSA is implemented with one transparent database table per data source.

8.1.2 InfoObjects

InfoObjects are the smallest information units in SAP BW. They can be divided into three groups:

•Sets of characteristics, modeled as master data references, form entities. Such a reference remains unchanged, even if the corresponding value (attribute) is changed. Additional time characteristics are used to model the state of an entity over the course of time.

•Numerical attributes of entities are modeled as key figures. They are used to describe their state at a certain point of time.

•Units and other technical characteristics are used for processing and transformation purposes.

InfoObjects for characteristics consist of several database tables. They contain information about time-independent and time-dependent attributes, hierarchies, and texts in the languages needed. The central table for characteristics is the surrogate identifiers (SIDs) table that associates the characteristics with an integer called SID. That is used as foreign key to the characteristic in the dimension tables of InfoCubes.

8.1.3 DataStore Objects (DSOs)

DSOs store consolidated and cleansed transactional or master data on an atomic level. SAP BW offers three types of DSOs:

•Standard

•Write-optimized

•Transactional

Write-optimized and transactional DSOs consist of one database table that is called the DSO active table.

Standard DSOs consist of three database tables:

•The activation queue table

•The active table

•The change log table

The data that is loaded into standard DSOs is first stored in the activation queue table. The data in the activation queue table is not visible in the data warehouse unless it has been processed by a complex procedure that is called DSO data activation. Data activation merges the data into the active table and writes before and after images of the data into the change log table. The change log table can be used to roll back activated data if the data is not correct or other issues occur.

8.1.4 InfoCubes

InfoCubes are used for multidimensional data modeling according to the star schema. There are several types of InfoCubes. Two of them are introduced in this section:

Standard InfoCubes

SAP BW uses the enhanced star schema as shown in Figure 8-2 on page 250, which is partly normalized. An InfoCube consists of two fact tables, F and E. They contain recently loaded and already compressed data. To provide a single point of access, a UNION ALL view is defined on these two tables. The fact tables contain the key figures and foreign keys to up to 16 dimension tables. The dimension tables contain an integer number DIMID as primary key and the SIDs to the characteristics that belong to the dimension.

The F fact table is used for staging new data into the InfoCube. Each record contains the ID of the package with which the record was loaded. To accelerate the load process, data from one package is loaded to the F fact table in blocks. Although a certain combination of characteristics will be unique in each block, there can be duplicates in different blocks and packages. By the assignment of loaded data to packages, incorrect or mistakenly loaded data can be deleted easily as long as it resides in the F fact table.

The process of bringing the data from the F fact table to the E fact table is called InfoCube compression. In the course of this, the relation to the package is removed and multiple occurrences of characteristic combinations are condensed. Thus the F fact table contains only unique combinations of characteristics. Compressed packages are deleted from the F fact table after compression is completed.

Figure 8-2 SAP BW extended star schema

InfoCube query processing

The SAP BW OLAP processor maps the query to the underlying InfoProvider. It processes reporting queries and translates them into a series of SQL statements that are sent to the database. Depending on the query, the OLAP processor might run additional complex processing to combine the results of the SQL queries into the final result that is sent back to the user.

A typical BW InfoCube query involves joins between the fact, dimension, and master data tables, as shown in Example 8-1.

Example 8-1 Sample SAP BW Query

-- Projected columns

SELECT "X1"."S__0INDUSTRY" AS "S____1272"

, "DU"."SID_0BASE_UOM" AS "S____1277"

, "DU"."SID_0STAT_CURR" AS "S____1278"

, "X1"."S__0COUNTRY" AS "S____1270"

, SUM ( "F"."CRMEM_CST" ) AS "Z____1279"

, SUM ( "F"."CRMEM_QTY" ) AS "Z____1280"

, SUM ( "F"."CRMEM_VAL" ) AS "Z____1281"

, COUNT( * ) AS "Z____031"

-- F fact table

FROM "/BI0/F0SD_C01" "F“

-- Dimension tables

JOIN "/BI0/D0SD_C01U" "DU" ON "F“ ."KEY_0SD_C01U" = "DU"."DIMID“

JOIN "/BI0/D0SD_C01T" "DT" ON "F“ ."KEY_0SD_C01T" = "DT"."DIMID“

JOIN "/BI0/D0SD_C01P" "DP" ON "F“ ."KEY_0SD_C01P" = "DP"."DIMID“

JOIN "/BI0/D0SD_C013" "D3" ON "F“ ."KEY_0SD_C013" = "D3"."DIMID“

JOIN "/BI0/D0SD_C011" "D1" ON "F“ ."KEY_0SD_C011" = "D1"."DIMID"

-- Master data attribute table

JOIN "/BI0/XCUSTOMER" "X1" ON "D1"."SID_0SOLD_TO" = "X1"."SID“

-- Predicates

WHERE ( ( ( ( "DT"."SID_0CALMONTH" IN ( 201001, 201101, 201201,

201301, 201401 ) ) )

AND ( ( "DP"."SID_0CHNGID" = 0 ) )

AND ( ( "D3"."SID_0DISTR_CHAN" IN ( 9, 7, 5, 3 ) ) )

AND ( ( "D3"."SID_0DIVISION" IN ( 3, 5, 7, 9 ) ) )

AND ( ( "DP"."SID_0RECORDTP" = 0 ) )

AND ( ( "DP"."SID_0REQUID" <= 536 ) )

AND ( ( "X1"."S__0INDUSTRY" IN ( 2, 4, 6, 9 ) ) )

) )

AND "X1"."OBJVERS" = 'A‘

-- Aggregation

GROUP BY "X1"."S__0INDUSTRY"

, "DU"."SID_0BASE_UOM"

, "DU"."SID_0STAT_CURR"

, "X1"."S__0COUNTRY"

;

In this case, the fact table, the F fact table /BI0/F0SD_C01, is joined to several dimension tables, /BI0/D0SD_C01x, and the table of dimension 1, /BI0/D0SD_C011, is joined to a master data attribute table, /BI0/XCUSTOMER, to extract information about customers in certain industries.

SAP BW query processing might involve SQL queries that generate intermediate temporary results that are stored in database tables. Consecutive SQL queries join these tables to the InfoCube tables. The following examples make use of such tables:

•Evaluation of master data hierarchies

•Storage of a large number of master data SIDs, considered as too large for an IN-list in the SQL query

•Pre-materialization of joins between a dimension table and master data tables when the SAP BW query involves a large number of tables to be joined

Flat InfoCubes

Flat InfoCubes are similar to standard InfoCubes. However, they use a simplified schema as shown in Figure 8-3. The E fact table and all dimension tables other than the package dimension table are eliminated from the original schema. The SIDs of the characteristics are directly stored in the fact table. For databases other than SAP HANA, flat InfoCubes are only available in SAP BW 7.40 Support Package 8 and higher.

Figure 8-3 Flat InfoCube

From the storage point of view, one aspect is that this denormalization increases the redundancy of data in the fact table significantly; the other aspect is that two columns per dimension are dropped which were required for the foreign key relation. Furthermore, the compression algorithm of DB2 with BLU Acceleration can reach high compression rates on redundant data. Thus, in most cases, the impact of the denormalization on the total storage consumption is low.

The number of columns of the fact table is considerably increased by the denormalization. Using BLU Acceleration this does not affect the query performance, because only those columns are read, which are actually required.

You can run InfoCube compression on flat InfoCubes. Compressed and uncompressed data are stored in the same fact table (the F fact table).

Flat InfoCubes have the following advantages:

•Reporting queries might run faster because less tables have to be joined.

•Data propagation into flat InfoCubes might run faster because the dimension tables do not need to be maintained. This is especially true in cases where some dimension tables are large.

•Administration and modelling simplified because line items do not have to be handled separately and the logical assignment of characteristics to dimensions can be changed without any effort.

In SAP BW, the following restrictions apply to flat InfoCubes:

•Flat InfoCubes cannot be stored in the SAP Business Warehouse Accelerator (BWA).

•Aggregates cannot be created for flat InfoCubes.

Aggregates and SAP Business Warehouse Accelerator

To increase speed of reporting, SAP BW allows the creation of aggregates for non flat InfoCubes. Aggregates contain pre-calculated aggregated data from the InfoCubes. They are comparable to materialized query tables but are completely managed by SAP BW. The SAP BW OLAP processor decides when to route a query to an SAP BW aggregate. The creation and maintenance of aggregates is a complex and time-consuming task that takes a large portion of the extract, transform, and load (ETL) processing time.

SAP offers the SAP Business Warehouse Accelerator (SAP BW Accelerator) for speeding up reporting performance without the need to create aggregates. SAP BW Accelerator runs on a separate hardware and does not support flat InfoCubes. InfoCubes and master data can be replicated into SAP BW Accelerator. Starting with SAP BW 7.30, InfoCubes that reside only in SAP BW Accelerator can be created. When InfoCubes and master data are replicated, the SAP BW Accelerator data must be updated when new data is loaded into SAP BW. This creates ETL processing overhead.

8.1.5 BLU Acceleration benefits for SAP BW

Creating column-organized database tables for SAP BW objects provides the following benefits:

•Reporting queries run faster on column-organized tables. Performance improvements vary and depend on the particular query, but results from customer proof of concepts show that a factor greater than ten can be achieved in many cases.

•These performance improvements can be achieved with little or no database tuning effort. Table statistics are collected automatically. Table reorganization, the creation of indexes for specific queries, statistical views, or database hints are usually not needed.

•Because of the much faster query performance, aggregates might not be required any more, or at least the number of aggregates can be reduced significantly. Even the SAP BW Accelerator might become obsolete. This greatly improves the ETL processing time because aggregate rollup and updating the SAP BW Accelerator data is no longer required.

•Except for primary key and unique constraints, column-organized tables have no indexes. Thus, during ETL processing, hardly any time needs to be spent on index maintenance and index logging. This significantly reduces the SAP ETL processing time and the active log space consumption, especially for ETL operations such as InfoCube data propagation and compression. Also data deletion might run much faster, especially when MDC rollout is not applicable on a certain object.

•The compression ratio of column-organized tables is much higher than the ratio achieved with adaptive compression on row-organized tables. In addition, space requirements for indexes are drastically reduced.

•Modelling of InfoCubes is simplified by the use of flat InfoCubes because the logical assignment of characteristics to dimensions can be changed without any effort.

8.2 Prerequisites and restrictions for using BLU Acceleration in SAP BW

This section discusses the following topics:

•Important SAP documentation and SAP Notes that you should read

•Prerequisites and restrictions for using DB2 BLU Acceleration in SAP BW

•Required SAP Support Packages and DBSL kernel patches

•Required SAP Kernel parameter settings

8.2.1 Important SAP documentation and SAP notes

Before you use BLU Acceleration in SAP BW and SAP NLS on DB2, see the available SAP documentation:

•Database administration guide:

Select <Your SAP NetWeaver Main Release> → Operations → Database-Specific Guides.

The name of the guide is: SAP Business Warehouse on IBM DB2 for Linux, UNIX, and Windows: Administration Tasks.

•How-to guide:

Select <Your SAP NetWeaver Main Release> → Operations → Database-Specific Guides

The name of the guide is: Enabling SAP Business Warehouse Systems to Use IBM DB2 for Linux, UNIX, and Windows as Near-Line Storage (NLS).

•Database upgrade guide:

Database Upgrade Guide Upgrading to Version 10.5 of IBM DB2 for Linux, UNIX, and Windows2:

Database Upgrades → DB2 UDB → Upgrade to Version 10.5 of IBM DB2 for LUW

SAP Notes3 with general information about the enablement of DB2 10.5 with BLU Acceleration are listed in Table 8-1.

Table 8-1 SAP Notes regarding the enablement of BLU Acceleration in SAP Applications

|

SAP Note

|

Description

|

|

1555903

|

Gives an overview on the supported DB2 features.

|

|

1851853

|

Describes the SAP software prerequisites for using DB2 10.5 in general for SAP applications. The note also provides an overview of the new DB2 10.5 features that are relevant for SAP, including BLU Acceleration.

|

|

1851832

|

Contains a section about DB2 parameter settings for BLU Acceleration.

|

|

1819734

|

Describes prerequisites and restrictions for using BLU Acceleration in the SAP environment (includes corrections).

|

The SAP Notes in Table 8-2 contain corrections and advises that you must install to enable BLU Acceleration for the supported SAP BW based applications. You can install these SAP Notes using SAP transaction SNOTE.

Table 8-2 SAP Notes regarding BLU Acceleration of SAP BW based Applications

|

SAP Note

|

Description

|

|

1889656

|

Contains mandatory fixes you must apply before you use BLU Acceleration in SAP BW. With the Support Packages installed, which are listed in 8.2.2, “Prerequisites and restrictions for using DB2 BLU Acceleration” on page 258, this is only necessary for SAP BW 7.0 Support Package 32.

|

|

1825340

|

Describes prerequisites and the procedure for enabling BLU Acceleration in SAP BW.

|

|

2034090

|

Contains recommendations and best practices for SAP BW objects with BLU Acceleration created on DB2 10.5 FP3aSAP or earlier.

|

|

1911087

|

Contains an advise regarding a DBSL patch.

|

|

1834310

|

Describes how to enable BLU Acceleration for SAP’s near-line storage solution on DB2 (includes corrections).

|

|

1957946

|

Describes potential issues with column-organized InfoCubes. This note is only relevant if you use DB2 10.5 FP3aSAP. If you often make structural changes to InfoCubes, for example, by adding key figures or by adding or changing dimensions, you should upgrade to DB2 Cancun Release 10.5.0.4.

|

The list of SAP Notes listed in Table 8-3 contain further improvements and code fixes. They should be installed as well to achieve the best functionality.

Table 8-3 SAP Notes with further improvements and fixes

|

SAP Note

|

Description

|

|

1964464

|

Contains specific fixes for the conversion report from row-organized to column-organized tables. This is needed if you want to convert existing InfoCubes to BLU on DB2 10.5 FP3aSAP or earlier.

|

|

1996587 2022487

|

Contain code fixes for the InfoCube compression of the accelerated InfoCubes with BLU Acceleration.

|

|

1979563

|

Contains a fix for eliminating mistakenly created warning messages in the SAP work process trace files.

|

|

2019648

|

Handles issues that might occur when you transport a BLU InfoCube to a system that does not use automatic storage or does not use reclaimable storage tablespaces.

|

|

2020190

|

Contains corrections for BLU InfoCubes with dimensions that contain more than 15 characteristics

|

Starting with DB2 Cancun Release 10.5.0.4, several additional features have been added, which can be enabled by applying the SAP Notes in Table 8-4.

Table 8-4 SAP Notes for DB2 Cancun Release 10.5.0.4 specific features

|

SAP Note

|

Description

|

|

1997314

|

Required to use BLU Acceleration for DataStore Objects, InfoObjects, and PSA tables. This is only supported as of DB2 Cancun Release 10.5.0.4.

|

|

2038632

|

Required for using flat InfoCubes in SAP BW 7.40 Support Package 8 or higher. This is only supported as of DB2 Cancun Release 10.5.0.4.

|

|

1947559 1969500

|

These notes are required for SAP system migrations. They enable the generation of BW object tables directly as BLU tables without the need to run conversions to BLU in the migration target system later. This is only supported when your DB2 target system is set up on DB2 Cancun Release 10.5.0.4 or higher.

|

8.2.2 Prerequisites and restrictions for using DB2 BLU Acceleration

BLU Acceleration is currently only supported in SAP BW, SAP Strategic Enterprise Management (SAP SEM), and SAP NLS on DB2. BLU Acceleration is not supported in other SAP applications, including applications that are based on SAP BW, such as SAP Supply Chain Management (SAP SCM). The latest list of supported products can be found in SAP Note 1819734.

The following additional restrictions for your DB2 database apply:

•You need at least DB2 10.5 Fix Pack 3aSAP. The preferred version is DB2 Cancun Release 10.5.0.4 or later, which provides additional significant optimizations and performance improvements for SAP and enables the BLU implementation for DSOs, InfoObjects, and PSA tables.

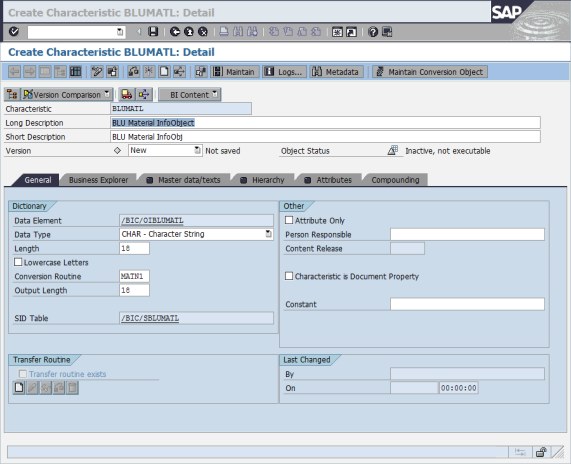

•BLU DSOs, InfoObjects, and PSA tables are only supported as of DB2 Cancun Release 10.5.0.4 (see SAP Note 1997314). All InfoObject types except for key figures (for which no database tables are created) are supported.

•Flat InfoCubes are only supported as of DB2 Cancun Release 10.5.0.4 and SAP BW 7.40 Support Package 8.

•Your database server runs on platform that supports BLU Acceleration. At the time of writing these are AIX or Linux on the X86_64 platform.

•Your database uses Unicode.

•You do not use DB2 pureScale and do not use the DB2 Database Partitioning Feature (DPF).

•If you use DB2 10.5 Fix Pack 3aSAP you do not use HADR. This restriction does not exist any more in DB2 Cancun Release 10.5.0.4 release.

•Your database is set up with DB2 automatic storage.

•You provide reclaimable storage tablespaces for the BLU tables.

8.2.3 Required Support Packages and SAP DBSL Patches

SAP BW 7.0 and later are supported. The lowest preferred Support Packages for each SAP BW release are shown in Table 8-5. With DB2 Cancun Release 10.5.0.4 and these Support Packages and additional SAP notes that have to be installed on top, BLU Acceleration for InfoCubes, DataStore objects, InfoObjects, PSA tables, and BW temporary tables is available. Furthermore, it is possible to directly create column-organized tables in SAP BW system migrations to DB2 10.5.

Table 8-5 Supported SAP BW releases and Support Packages

|

SAP NetWeaver release

|

SAP BASIS Support Package

|

SAP BW Support

Package |

|

7.0

|

30

|

32

|

|

7.01

|

15

|

15

|

|

7.02

|

15

|

15

|

|

7.11

|

13

|

13

|

|

7.30

|

11

|

11

|

|

7.31/7.03

|

11

|

11

|

|

7.40

|

6

|

6

|

These support packages contain ABAP code that is specific to DB2 in the SAP Basis and SAP BW components that enable the use of BLU Acceleration and extensions to the DBA Cockpit for monitoring and administering SAP DB2 databases. They are prerequisites for using BLU Acceleration in SAP BW. For more details, see SAP Notes 1819734 and 1825340.

We suggest that you also install the latest available SAP kernel patches and SAP Database Shared Library (DBSL). The minimum required DBSL patch level for using DB2 Cancun Release 10.5.0.4 with BLU Acceleration in SAP BW and SAP’s near-line storage solution on DB2 can be found in SAP Note 2056603. These patch levels are higher than the levels listed in the SAP database upgrade guide: Upgrading to Version 10.5 of IBM DB2 for Linux, UNIX, and Windows.

8.2.4 Required SAP kernel parameter settings

As of DB2 Cancun Release 10.5.0.4 and SAP BW support InfoObjects, the following SAP kernel parameters are required:

•dbs/db6/dbsl_ur_to_cc=*

This parameter sets the default DB2 isolation level that is used in the SAP system to Currently Committed. This applies to all SQL statements that otherwise run with isolation level UR (uncommitted read) by default. This parameter is needed to avoid potential data inconsistencies during the update of existing master data and insertion of new master data in the SAP BW system.

•dbs/db6/deny_cde_field_extension=1

This parameter prevents operations in the SAP BW system that extend the length of a VARCHAR column in a column-organized table. Extending the length of a VARCHAR column in a column-organized table is not supported in DB2 10.5 and causes SQL error SQL1667N. (The operation failed because the operation is not supported with the type of the specified table. Specified table: <tabschema>.<tabname>. Table type: “ORGANIZE BY COLUMN”. Operation: “ALTER TABLE”. SQLSTATE=42858). When the SAP profile parameter is set, the SAP ABAP Dictionary triggers a table conversion if extending the length of a VARCHAR column in a column-organized table is requested.

8.3 BLU Acceleration support in the ABAP Dictionary

In the ABAP Dictionary, storage parameters are used to identify column-organized tables. The property, including the column-organized table, can be retrieved from the database and displayed in the “Storage Parameter” window of the SAP data dictionary.

In the example in Figure 8-4, the /BIC/FICBLU01 table is a column-organized table. Use the following steps to look up the storage parameters of the transaction table SE11:

1. Enter the table name in the Database table field and select Display.

Figure 8-4 ABAP Dictionary initial window

2. In the “Dictionary: Display” table window, select Utilities → Database Object → Database Utility from the menu (Figure 8-5).

Figure 8-5 ABAP Dictionary: retrieval of table properties

3. In the “ABAP Dictionary: Utility for Database Tables” window, select Storage Parameters, as shown in Figure 8-6.

Figure 8-6 ABAP Dictionary: retrieval of table database storage parameters

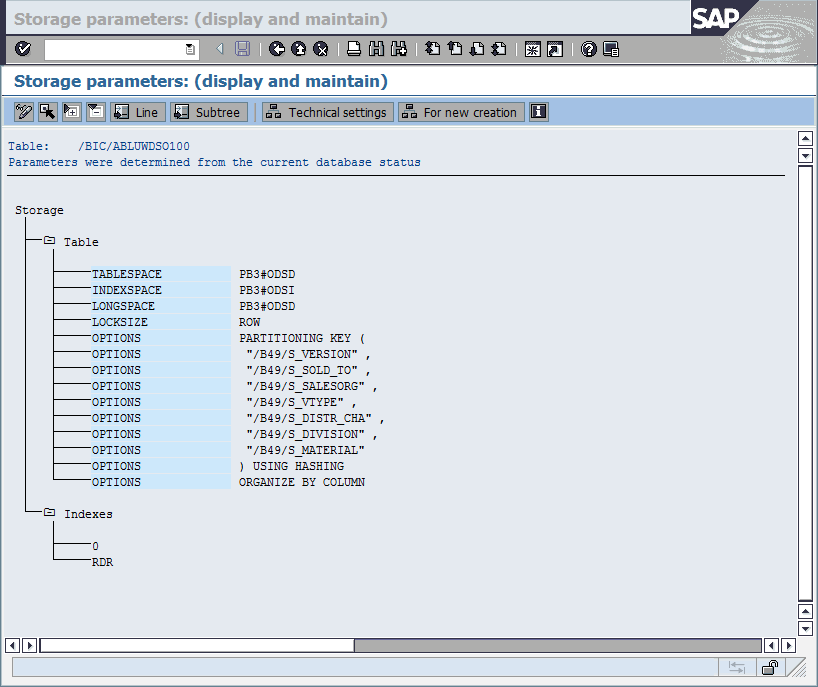

The “Storage parameters: (display and maintain)” window (Figure 8-7) shows the table properties that are retrieved from the DB2 system catalog. The last entry with the OPTIONS label is ORGANIZE BY COLUMN, which shows that the table is organized column-oriented.

Figure 8-7 Table storage parameters of a column-organized SAP BW F fact table

|

Notes:

•Although BLU Acceleration does not require DPF for parallel query processing, DB2 accepts the specification of a distribution key. In SAP BW, distribution keys are always created for InfoCube fact, DSO, and PSA tables.

SAP uses the term PARTITIONING KEY for distribution keys in the ABAP Dictionary.

•SAP NetWeaver 7.40 introduces an option in the ABAP Dictionary to specify whether a table should be created in Column Store or in Row Store. This option does not trigger creation of column-organized tables in DB2.

|

8.4 BLU Acceleration support in the DBA Cockpit

The DBA Cockpit has been enhanced with the DB2 monitoring elements for column-organized tables.

The DBA Cockpit extensions for BLU Acceleration are available in the SAP NetWeaver releases and Support Packages listed in the second column of Table 8-6. With these Support Packages, time spent analysis, the BLU Acceleration buffer pool, and I/O metrics are only available in the Web Dynpro user interface. When you upgrade to the Support Packages listed in column three, these metrics are included in the SAP GUI user interface.

Table 8-6 Availability of DBA Cockpit enhancements for BLU Acceleration in the SAP Web Dynpro and SAP GUI user interfaces

|

SAP NetWeaver release

|

SAP BASIS Support Package for BLU Acceleration metrics in Web Dynpro

|

SAP BASIS Support Package for BLU Acceleration metrics in SAP GUI

|

|

7.02

|

14

|

15

|

|

7.30

|

10

|

11

|

|

7.31

|

9

|

11

|

|

7.40

|

4

|

6

|

We suggest that you also implement SAP Note 1456402. This SAP Note contains a patch collection for the DBA Cockpit that is updated regularly. You can install the most recent DBA Cockpit patches by reinstalling the patch collection SAP Note. When you reinstall the SAP Note, only the delta between your current state and the most recently available patches is installed.

When you monitor your SAP systems using SAP Solution Manager, you need SAP Solution Manager 7.1 Support Package 11 to get the BLU Acceleration metrics.

For SAP BW 7.0 and SAP BW 7.01, a compatibility patch for DB2 10.5 is available in the Support Packages that are required for using BLU Acceleration in SAP BW. This patch handles only changes in the DB2 monitoring interfaces that are used in the DBA Cockpit. It does not provide any extra metrics for BLU Acceleration in the DBA Cockpit of SAP NetWeaver 7.0 and 7.01.

With the DBA Cockpit enhancements for BLU Acceleration, you can obtain the following information:

•Individual table information, including whether the table is column-organized

•Database information, including the databases that contain column-organized tables

•Columnar data processing information as part of time spent analysis

•Buffer pool hit ratio, buffer pool read and write activity, and prefetcher activity for the whole database, buffer pools, table spaces, and applications

In the following sections, we provide examples of BLU Acceleration monitoring using the DBA Cockpit. The windows in the figures are from an SAP BW 7.40 system running on Support Package stack 5.

8.4.1 Checking whether individual tables in SAP database are column-organized

In the DBACOCKPIT transaction, choose Space → Single Table Analysis in the navigation frame (Figure 8-8). Enter the table name in the Name field of the Space: Table and Indexes Details window and press Enter. You find the table organization in the Table Organization field on the System Catalog tab.

Figure 8-8 DBA Cockpit: properties of a single table

8.4.2 Checking if SAP database contains column-organized tables

In the DBACOCKPIT transaction, choose Configuration → Parameter Check in the navigation frame (Figure 8-9). On the Check Environment tab in the Parameter Check window, find the System Characteristics panel. If the CDE attribute has the value YES, the database contains column-organized tables.

Figure 8-9 DBA Cockpit: DB2 configuration parameter check window

8.4.3 Monitoring columnar data processing time in the SAP database

Time spent analysis was originally only available in the Web Dynpro user interface. With the service packs listed Table 8-6 on page 265 it is available in SAP GUI as well. The configuration of the Web Dynpro user interface is described in SAP Note 1245200.

Choose Performance → Time Spent Analysis in the navigation frame of the DBA Cockpit. A window similar to the one shown in Figure 8-10 opens. Enter the time frame for which you want to retrieve the information. The window is updated and shows how much time DB2 spent in various activities, including the columnar processing time in the selected time frame.

Figure 8-10 DBA Cockpit: Analysis of time spent in DB2

8.4.4 Monitoring columnar processing-related prefetcher and buffer pool activity in the SAP database

You can monitor buffer pool and prefetcher activity at database, workload, table space, and application level.

Choose Performance → (Snapshots → )4 Database in the DBA Cockpit. Select the time frame for which you want to display the information. On the “Buffer Pool” tab in the “History Details” area (Figure 8-11), you see the logical and physical reads, the physical writes, synchronous reads and writes, and temporary logical and physical reads on column-organized tables in the “Columnar” section. In the “Buffer Quality” section, you see the buffer pool hit ratio for column-organized tables.

Figure 8-11 DBA Cockpit: Buffer pool metrics

As shown in Figure 8-12, when you switch to the “Asynchronous I/O” tab, you find separate counters for column-organized tables in these sections:

•Prefetcher I/O

•Total No. of Skipped Prefetch Pages

•Skipped Prefetch Pages Loaded by Agents in UOW sections.

For example, the “Prefetcher I/O” section shows “Columnar Prefetch Requests”, which is the number of column-organized prefetch requests successfully added to the prefetch queue. This corresponds to the pool_queued_async_col_reqs DB2 monitoring element.

Figure 8-12 DBA Cockpit: Asynchronous I/O metrics

To retrieve the buffer pool and prefetcher information for individual table spaces, buffer pools, and for the current unit of work of an application, choose Performance → (Snapshots → ) Tablespaces, Bufferpools, or Applications in the DBA Cockpit navigation frame.

8.5 BLU Acceleration support in SAP BW

You can do the following operations in SAP BW:

•In the SAP Data Warehousing Workbench, you can create InfoCubes, DSOs, InfoObjects, and PSA tables as column-organized tables in the database. The following list indicates the supported BW object types:

– Basic cumulative InfoCubes

– Basic non-cumulative InfoCubes

– Multi-Cubes with underlying column-organized basic InfoCubes

– Multi-Provider which consolidate several InfoProvider

– In SAP BW 7.30 and higher, semantically partitioned InfoCubes

– As of DB2 Cancun Release 10.5.0.4 BLU Acceleration is also supported for the following BW objects:

• Standard DSOs

• Write-optimized DSOs

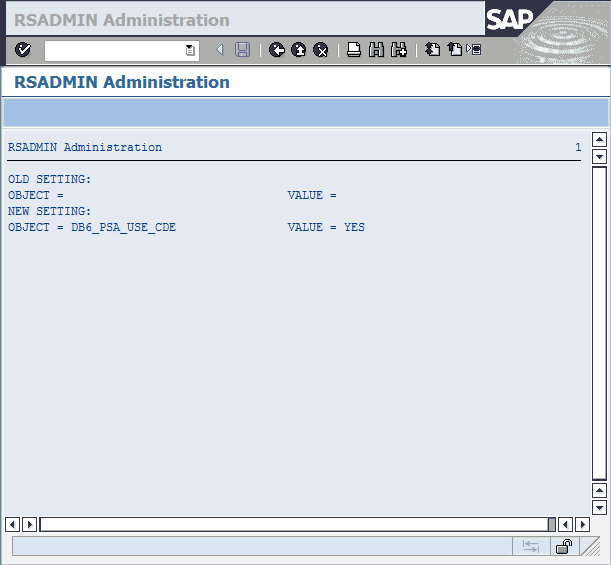

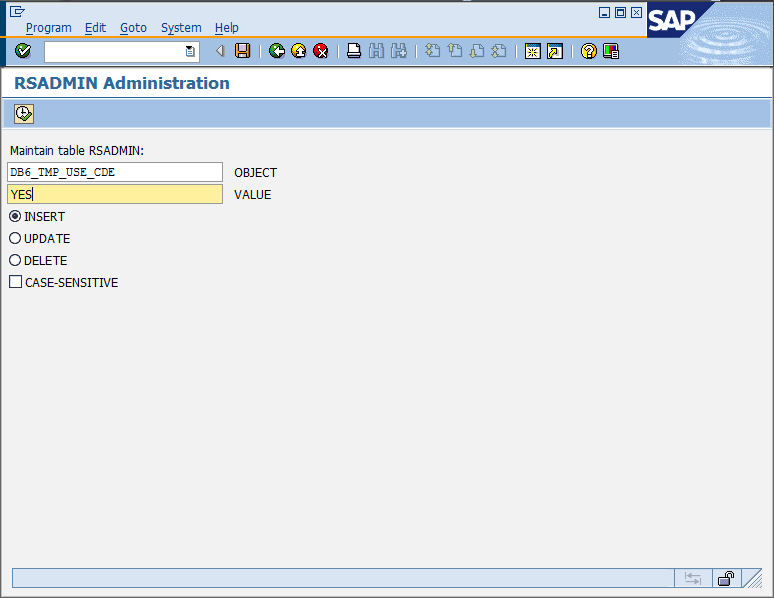

• InfoObjects (characteristics, time characteristics, units), through a new RSADMIN parameter

• PSA tables, through a new RSADMIN parameter

You cannot create column-organized real-time InfoCubes and transactional DSOs.

•As of SAP BW 7.40 Support Package 8 and DB2 Cancun Release 10.5.0.4, you can work with flat InfoCubes. Flat InfoCubes have a simplified star schema with only one fact table and no dimension tables except for the package dimension table. You can create flat InfoCubes and you can convert existing standard InfoCubes to flat InfoCubes (see 8.5.2, “Flat InfoCubes in SAP BW 7.40” on page 297).

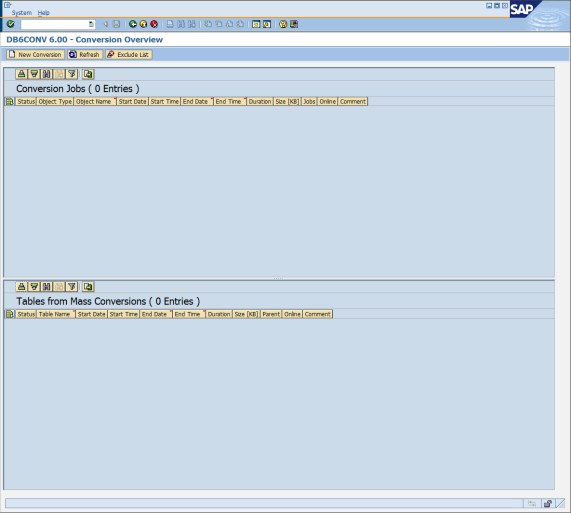

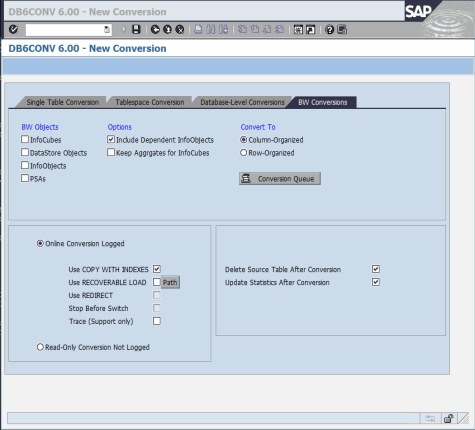

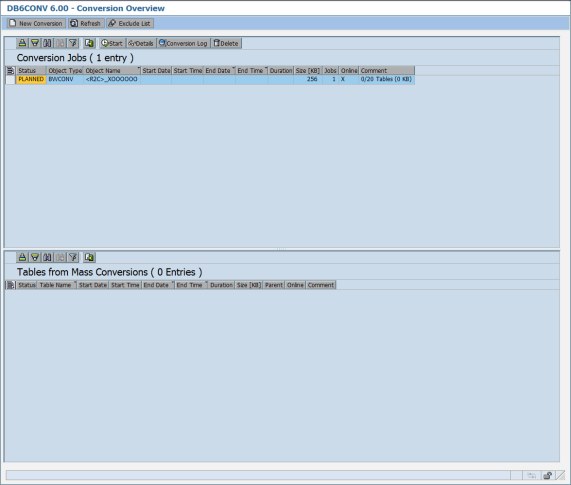

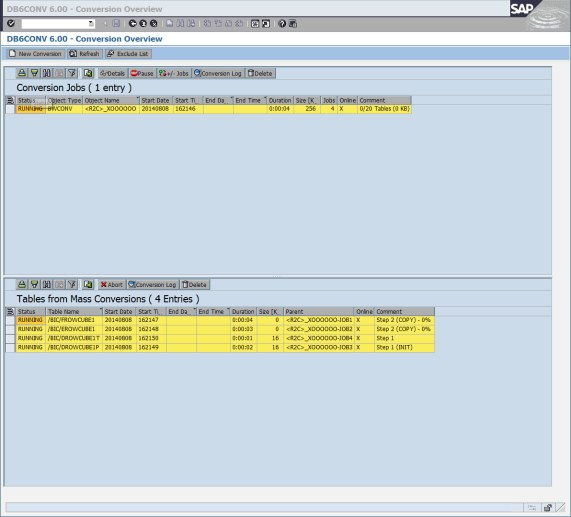

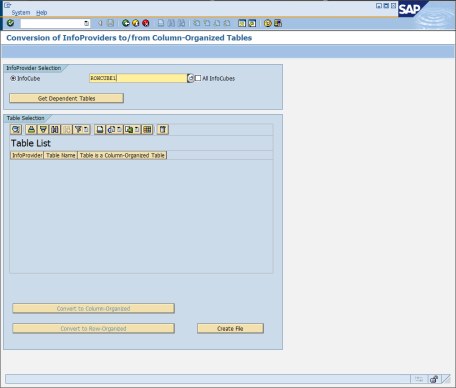

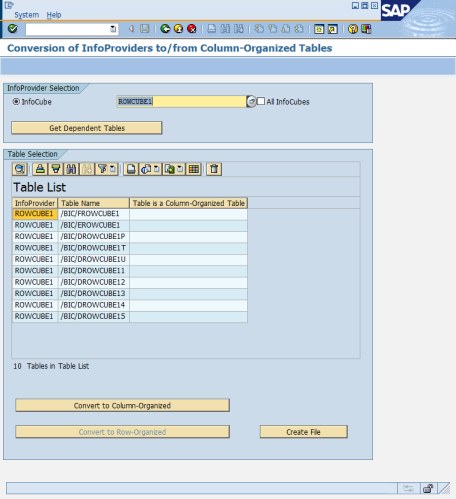

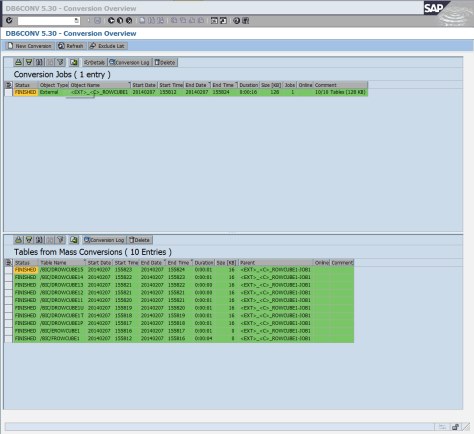

•DB6CONV is enhanced with the option to convert BW objects (InfoCubes, DSOs, InfoObjects, and PSA tables) from row-organized to column-organized tables. You can convert selected BW objects or all BW objects in your system (see 8.6, “Conversion of SAP BW objects to column-organized tables” on page 350). Thus, the SAP_CDE_CONVERSION report is discontinued with DB2 Cancun Release 10.5.0.4.

•You can set an SAP BW RSADMIN configuration parameter that causes new InfoObject SID and attribute SID tables to be created as column-organized tables.

•You can set an SAP BW RSADMIN configuration parameter that causes new PSA tables to be created as column-organized tables.

•You can set an SAP BW RSADMIN configuration parameter that causes new SAP BW temporary tables to be created as column-organized tables.

In this section, we explain in detail how to perform these operations.

8.5.1 Column-organized standard InfoCubes in SAP BW

This section shows how to create a new standard InfoCube that uses column-organized tables in the Data Warehousing Workbench of SAP BW. We illustrate the procedure with an example in an SAP BW 7.40 system in which the BI DataMart Benchmark InfoCubes have been installed (InfoArea BI Benchmark). The example works in exactly the same way in the other SAP BW releases in which BLU Acceleration is supported.

Follow these steps to create a new InfoCube that uses column-organized tables in the Data Warehousing Workbench of SAP BW:

1. Call transaction RSA1 to get to the Data Warehousing Workbench and create the InfoArea BLU Acceleration Example InfoArea (Figure 8-13).

Figure 8-13 SAP Data Warehousing Workbench main window

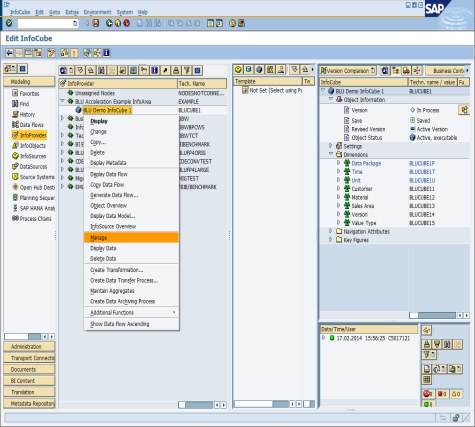

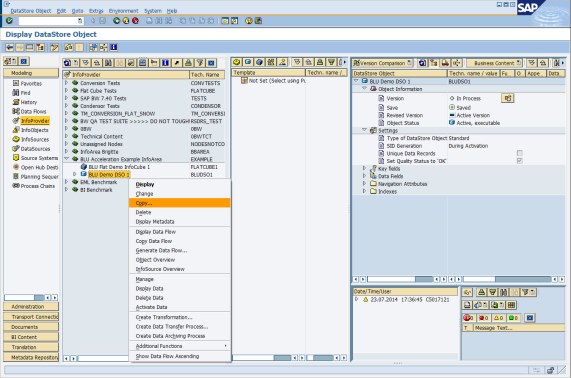

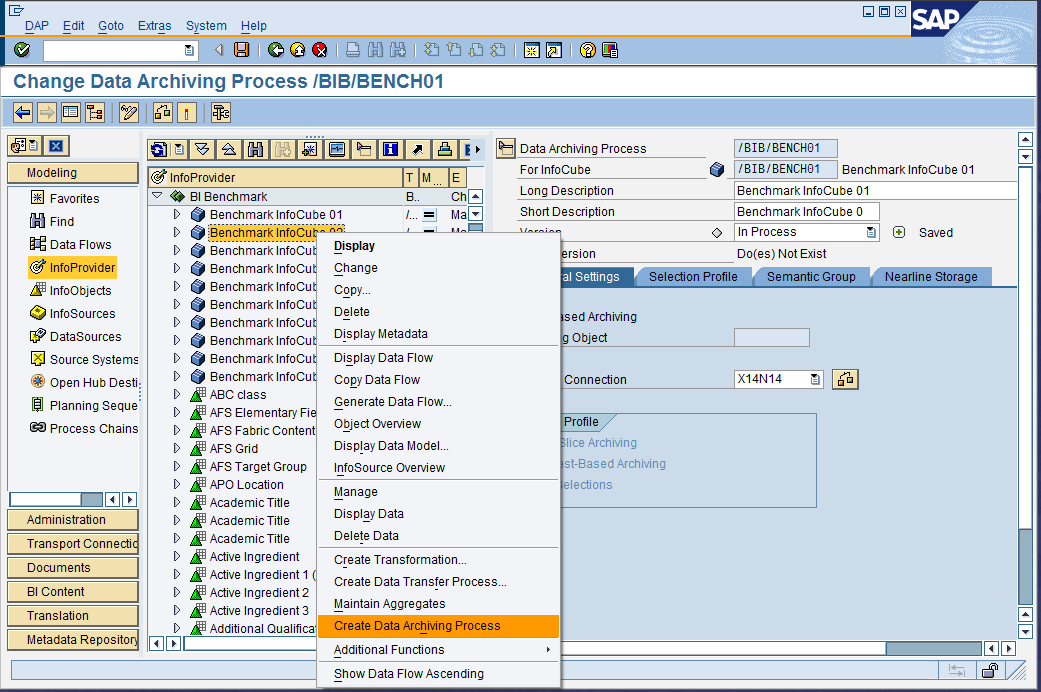

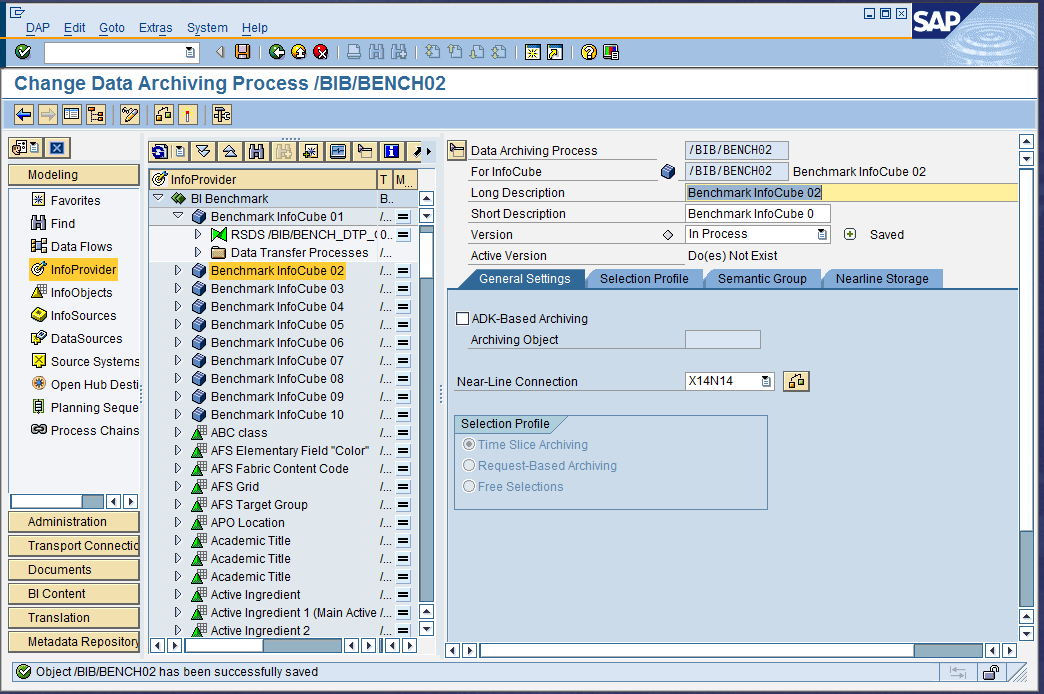

2. In BI Benchmark, select Benchmark InfoCube 01 (technical name is /BIB/BENCH01), and choose Copy from the context menu (Figure 8-14).

Figure 8-14 SAP Data Warehousing Workbench: Copying an InfoCube

3. In the Edit InfoCube window, enter BLUCUBE1 as the technical name, BLU Demo InfoCube 1 as the description, and select EXAMPLE (BLU Acceleration Example InfoArea) as the InfoArea. Click the Create icon (Figure 8-15).

Figure 8-15 SAP Data Warehousing Workbench: Editing InfoCube window

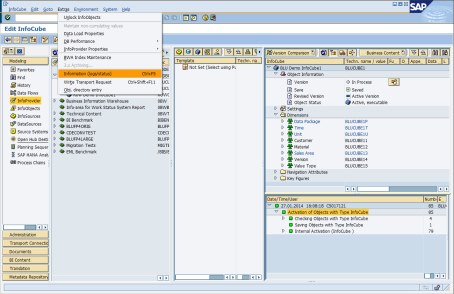

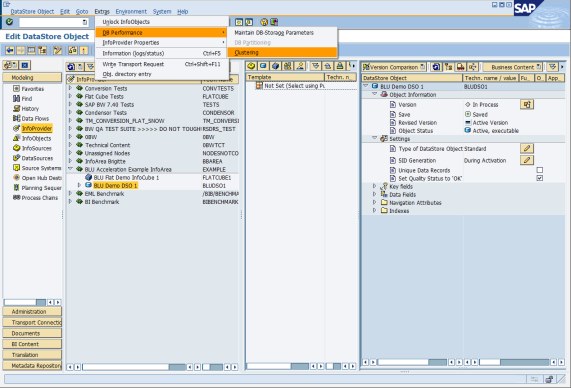

4. The Edit InfoCube window in the Data Warehousing Workbench opens. From the menu, choose Extras → DB Performance → Clustering (Figure 8-16).

Figure 8-16 SAP Data Warehousing Workbench: Specifying DB2-specific properties

5. In the Selection of Clustering dialog window, select Column-Organized and then click the check mark icon to continue (Figure 8-17).

Figure 8-17 SAP Data Warehousing Workbench: Select column-organized tables

6. Save and activate the InfoCube.

7. Inspect the tables:

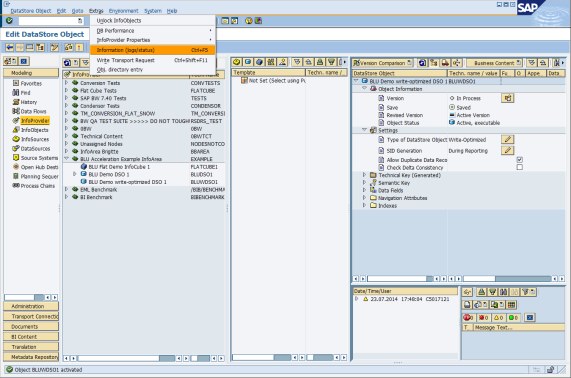

In transaction RSA1, you can inspect the tables of an InfoCube by choosing Extras → Information (logs/status) from the menu (Figure 8-18).

Figure 8-18 SAP Data Warehousing Workbench: Accessing protocol and status information

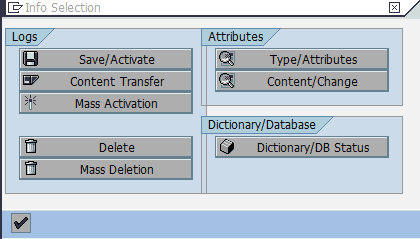

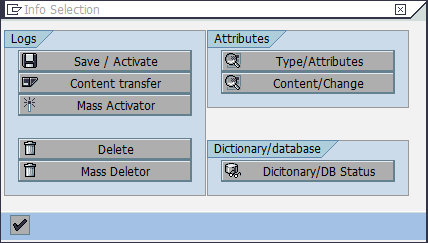

In the Info Selection dialog window, click Dictionary/DB Status (Figure 8-19).

Figure 8-19 Accessing ABAP Dictionary for the tables of an InfoCube

The “Status Information” window displays the tables of the InfoCube. To look up the storage parameters for the table, double-click a table to get to the ABAP Dictionary information for the table (Figure 8-20).

Figure 8-20 ABAP Dictionary information for a InfoCube table

When you work with BLU Acceleration InfoCubes, consider the following information:

•When you copy an existing InfoCube, the target InfoCube inherits the clustering and table organization settings of the source InfoCube. You can change the settings of the target InfoCube in the “Selection of Clustering” window in the Data Warehousing Workbench before you activate the target InfoCube.

•You might no longer need aggregates when you work with BLU Acceleration InfoCubes because the query performance on the basic InfoCubes is fast enough. However, you can create aggregates for BLU Acceleration InfoCubes just as for row-organized InfoCubes if needed. The tables for any aggregates of BLU Acceleration InfoCubes are also created as column-organized tables.

•When you install InfoCubes from SAP BI Content, they are created with the default clustering settings for DB2, which is index clustering. If you want to create BLU Acceleration InfoCubes, you must change the clustering settings in the Data Warehousing Workbench after you install the InfoCubes, and then reactivate the InfoCubes.

•When you transport an InfoCube from your development system into the production system, the behavior is as follows:

– If the InfoCube already exists in the production system and contains data, the current clustering settings and table organization are preserved.

– If the InfoCube does not yet exist in the production system or does not contain any data, the clustering and table organization settings from the development system are used. If the tables exist, they are dropped and re-created.

•When you create a semantically partitioned InfoCube, the clustering settings that you select for the semantically partitioned InfoCube are propagated to the partitions of the InfoCube. Therefore, when you choose to create column-organized tables, the tables of all partitions of the semantically partitioned InfoCube are created automatically as column-organized.

Table layout of column-organized InfoCubes

This section shows the differences in the layout of row-organized and column-organized InfoCube tables.

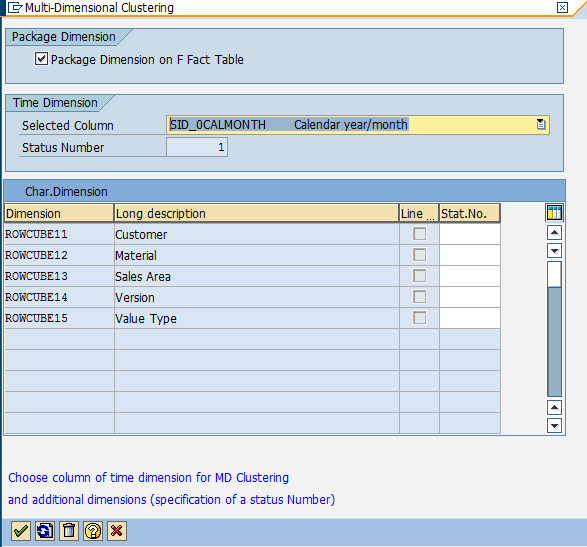

For comparison, we also create ROWCUBE1, a row-organized InfoCube. Here we use multidimensional clustering (MDC) on the package dimension key column of the InfoCube F fact table and on the time characteristic calendar month on the InfoCube F and E fact tables:

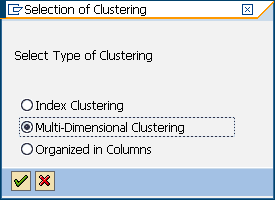

1. You select these clustering settings for the row-organized InfoCube in SAP BW by choosing Multi-Dimensional Clustering in the “Selection of Clustering” dialog window (Figure 8-21).

Figure 8-21 SAP Data Warehousing Workbench: Creating a sample row-organized InfoCube

2. Then make selections in the “Multi-Dimensional Clustering” dialog window (Figure 8-22).

Figure 8-22 Specification of multi-dimensional clustering for the sample row-organized InfoCube

3. Save and activate the InfoCube. After that, the tables listed in Figure 8-23 exist for ROWCUBE1 InfoCube.

Figure 8-23 Tables of the sample row-organized InfoCube

Comparison of the F fact tables

You can use the DB2 db2look tool to extract the DDL for the two fact tables:

db2look -d D01 -a -e -t "/BIC/FBLUCUBE1"

Example 8-2 shows the DDL of the table.

Example 8-2 DDL of column-organized F fact table

CREATE TABLE "SAPD01 "."/BIC/FBLUCUBE1" (

"KEY_BLUCUBE1P" INTEGER NOT NULL WITH DEFAULT 0 ,

"KEY_BLUCUBE1T" INTEGER NOT NULL WITH DEFAULT 0 ,

"KEY_BLUCUBE1U" INTEGER NOT NULL WITH DEFAULT 0 ,

"KEY_BLUCUBE11" INTEGER NOT NULL WITH DEFAULT 0 ,

"KEY_BLUCUBE12" INTEGER NOT NULL WITH DEFAULT 0 ,

"KEY_BLUCUBE13" INTEGER NOT NULL WITH DEFAULT 0 ,

"KEY_BLUCUBE14" INTEGER NOT NULL WITH DEFAULT 0 ,

"KEY_BLUCUBE15" INTEGER NOT NULL WITH DEFAULT 0 ,

"/B49/S_CRMEM_CST" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_CRMEM_QTY" DECIMAL(17,3) NOT NULL WITH DEFAULT 0 ,

"/B49/S_CRMEM_VAL" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_INCORDCST" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_INCORDQTY" DECIMAL(17,3) NOT NULL WITH DEFAULT 0 ,

"/B49/S_INCORDVAL" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_INVCD_CST" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_INVCD_QTY" DECIMAL(17,3) NOT NULL WITH DEFAULT 0 ,

"/B49/S_INVCD_VAL" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_OPORDQTYB" DECIMAL(17,3) NOT NULL WITH DEFAULT 0 ,

"/B49/S_OPORDVALS" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_ORD_ITEMS" DECIMAL(17,3) NOT NULL WITH DEFAULT 0 ,

"/B49/S_RTNSCST" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_RTNSQTY" DECIMAL(17,3) NOT NULL WITH DEFAULT 0 ,

"/B49/S_RTNSVAL" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_RTNS_ITEM" DECIMAL(17,3) NOT NULL WITH DEFAULT 0 )

DISTRIBUTE BY HASH("KEY_BLUCUBE11",

"KEY_BLUCUBE12",

"KEY_BLUCUBE13",

"KEY_BLUCUBE14",

"KEY_BLUCUBE15",

"KEY_BLUCUBE1T",

"KEY_BLUCUBE1U")

IN "D01#FACTD" INDEX IN "D01#FACTI"

ORGANIZE BY COLUMN;

ORGANIZE BY COLUMN shows that the table is a column-organized table. The table has no indexes.

Together with each column-organized table, DB2 automatically creates a corresponding synopsis table. The synopsis table is automatically maintained when data is inserted or loaded into the column-organized table and is used for data skipping during SQL query execution. Space consumption and the cost for updating the synopsis tables when the data in the column-organized table is changed are much lower than that for secondary indexes. Synopsis tables reside in the SYSIBM schema use the following naming convention:

SYN<timestamp>_<column-organized table name>.

You can retrieve the synopsis table for /BIC/FBLUCUBE1 with the SQL statement as shown in Example 8-3.

Example 8-3 Determine name of synopsis table for column-organized F fact table

db2 => SELECT TABNAMEFROM SYSCAT.TABLES WHERE TABNAME LIKE ‘SYN%_/BIC/FBLUCUBE1’

TABNAME

---------------------------------------

SYN140205190245349822_/BIC/FBLUCUBE1

You can retrieve the layout of the synopsis table with the DB2 DESCRIBE command, as shown in Example 8-4.

Example 8-4 Structure of synopsis table of column-organized F fact table

db2 => DESCRIBE TABLE SYSIBM.SYN140205190245349822_/BIC/FBLUCUBE1

Data type Column

Column name schema Data type name Length Scale Nulls

------------------------------- --------- ------------------- ---------- ----- ------

KEY_BLUCUBE1PMIN SYSIBM INTEGER 4 0 No

KEY_BLUCUBE1PMAX SYSIBM INTEGER 4 0 No

KEY_BLUCUBE1TMIN SYSIBM INTEGER 4 0 No

KEY_BLUCUBE1TMAX SYSIBM INTEGER 4 0 No

KEY_BLUCUBE1UMIN SYSIBM INTEGER 4 0 No

KEY_BLUCUBE1UMAX SYSIBM INTEGER 4 0 No

KEY_BLUCUBE11MIN SYSIBM INTEGER 4 0 No

KEY_BLUCUBE11MAX SYSIBM INTEGER 4 0 No

KEY_BLUCUBE12MIN SYSIBM INTEGER 4 0 No

KEY_BLUCUBE12MAX SYSIBM INTEGER 4 0 No

KEY_BLUCUBE13MIN SYSIBM INTEGER 4 0 No

KEY_BLUCUBE13MAX SYSIBM INTEGER 4 0 No

KEY_BLUCUBE14MIN SYSIBM INTEGER 4 0 No

KEY_BLUCUBE14MAX SYSIBM INTEGER 4 0 No

KEY_BLUCUBE15MIN SYSIBM INTEGER 4 0 No

KEY_BLUCUBE15MAX SYSIBM INTEGER 4 0 No

/B49/S_CRMEM_CSTMIN SYSIBM DECIMAL 17 2 No

/B49/S_CRMEM_CSTMAX SYSIBM DECIMAL 17 2 No

/B49/S_CRMEM_QTYMIN SYSIBM DECIMAL 17 3 No

/B49/S_CRMEM_QTYMAX SYSIBM DECIMAL 17 3 No

/B49/S_CRMEM_VALMIN SYSIBM DECIMAL 17 2 No

/B49/S_CRMEM_VALMAX SYSIBM DECIMAL 17 2 No

/B49/S_INCORDCSTMIN SYSIBM DECIMAL 17 2 No

/B49/S_INCORDCSTMAX SYSIBM DECIMAL 17 2 No

/B49/S_INCORDQTYMIN SYSIBM DECIMAL 17 3 No

/B49/S_INCORDQTYMAX SYSIBM DECIMAL 17 3 No

/B49/S_INCORDVALMIN SYSIBM DECIMAL 17 2 No

/B49/S_INCORDVALMAX SYSIBM DECIMAL 17 2 No

/B49/S_INVCD_CSTMIN SYSIBM DECIMAL 17 2 No

/B49/S_INVCD_CSTMAX SYSIBM DECIMAL 17 2 No

/B49/S_INVCD_QTYMIN SYSIBM DECIMAL 17 3 No

/B49/S_INVCD_QTYMAX SYSIBM DECIMAL 17 3 No

/B49/S_INVCD_VALMIN SYSIBM DECIMAL 17 2 No

/B49/S_INVCD_VALMAX SYSIBM DECIMAL 17 2 No

/B49/S_OPORDQTYBMIN SYSIBM DECIMAL 17 3 No

/B49/S_OPORDQTYBMAX SYSIBM DECIMAL 17 3 No

/B49/S_OPORDVALSMIN SYSIBM DECIMAL 17 2 No

/B49/S_OPORDVALSMAX SYSIBM DECIMAL 17 2 No

/B49/S_ORD_ITEMSMIN SYSIBM DECIMAL 17 3 No

/B49/S_ORD_ITEMSMAX SYSIBM DECIMAL 17 3 No

/B49/S_RTNSCSTMIN SYSIBM DECIMAL 17 2 No

/B49/S_RTNSCSTMAX SYSIBM DECIMAL 17 2 No

/B49/S_RTNSQTYMIN SYSIBM DECIMAL 17 3 No

/B49/S_RTNSQTYMAX SYSIBM DECIMAL 17 3 No

/B49/S_RTNSVALMIN SYSIBM DECIMAL 17 2 No

/B49/S_RTNSVALMAX SYSIBM DECIMAL 17 2 No

/B49/S_RTNS_ITEMMIN SYSIBM DECIMAL 17 3 No

/B49/S_RTNS_ITEMMAX SYSIBM DECIMAL 17 3 No

TSNMIN SYSIBM BIGINT 8 0 No

TSNMAX SYSIBM BIGINT 8 0 No

Space consumption and performance of synopsis tables can be monitored in the DBA Cockpit. Choose, for example, Tables and Indexes → Top Space Consumers, in the DBA Cockpit navigation frame, and set the table schema filter to SYSIBM and the table name filter to SYN*. Space consumption information about synopsis tables is displayed in the table on the right (Figure 8-24).

Figure 8-24 DBA Cockpit: Space consumption information for synopsis tables

Now run db2look for the row-organized table:

db2look -d D01 -a -e -t "/BIC/FROWCUBE1"

Example 8-5 shows the DDL for the table.

Example 8-5 DDL of row-organized F fact table

CREATE TABLE "SAPD01 "."/BIC/FROWCUBE1" (

"KEY_ROWCUBE1P" INTEGER NOT NULL WITH DEFAULT 0 ,

"KEY_ROWCUBE1T" INTEGER NOT NULL WITH DEFAULT 0 ,

"KEY_ROWCUBE1U" INTEGER NOT NULL WITH DEFAULT 0 ,

"KEY_ROWCUBE11" INTEGER NOT NULL WITH DEFAULT 0 ,

"KEY_ROWCUBE12" INTEGER NOT NULL WITH DEFAULT 0 ,

"KEY_ROWCUBE13" INTEGER NOT NULL WITH DEFAULT 0 ,

"KEY_ROWCUBE14" INTEGER NOT NULL WITH DEFAULT 0 ,

"KEY_ROWCUBE15" INTEGER NOT NULL WITH DEFAULT 0 ,

"SID_0CALMONTH" INTEGER NOT NULL WITH DEFAULT 0 ,

"/B49/S_CRMEM_CST" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_CRMEM_QTY" DECIMAL(17,3) NOT NULL WITH DEFAULT 0 ,

"/B49/S_CRMEM_VAL" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_INCORDCST" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_INCORDQTY" DECIMAL(17,3) NOT NULL WITH DEFAULT 0 ,

"/B49/S_INCORDVAL" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_INVCD_CST" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_INVCD_QTY" DECIMAL(17,3) NOT NULL WITH DEFAULT 0 ,

"/B49/S_INVCD_VAL" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_OPORDQTYB" DECIMAL(17,3) NOT NULL WITH DEFAULT 0 ,

"/B49/S_OPORDVALS" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_ORD_ITEMS" DECIMAL(17,3) NOT NULL WITH DEFAULT 0 ,

"/B49/S_RTNSCST" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_RTNSQTY" DECIMAL(17,3) NOT NULL WITH DEFAULT 0 ,

"/B49/S_RTNSVAL" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_RTNS_ITEM" DECIMAL(17,3) NOT NULL WITH DEFAULT 0 )

COMPRESS YES ADAPTIVE

DISTRIBUTE BY HASH("KEY_ROWCUBE11",

"KEY_ROWCUBE12",

"KEY_ROWCUBE13",

"KEY_ROWCUBE14",

"KEY_ROWCUBE15",

"KEY_ROWCUBE1T",

"KEY_ROWCUBE1U")

IN "D01#FACTD" INDEX IN "D01#FACTI"

ORGANIZE BY ROW USING (

( "KEY_ROWCUBE1P" ) ,

( "SID_0CALMONTH" ) )

;

ALTER TABLE "SAPD01 "."/BIC/FROWCUBE1" LOCKSIZE BLOCKINSERT;

CREATE INDEX "SAPD01 "."/BIC/FROWCUBE1~020" ON "SAPD01 "."/BIC/FROWCUBE1"

("KEY_ROWCUBE1T" ASC)

PCTFREE 0 COMPRESS YES

INCLUDE NULL KEYS ALLOW REVERSE SCANS;

CREATE INDEX "SAPD01 "."/BIC/FROWCUBE1~040" ON "SAPD01 "."/BIC/FROWCUBE1"

("KEY_ROWCUBE11" ASC)

PCTFREE 0 COMPRESS YES

INCLUDE NULL KEYS ALLOW REVERSE SCANS;

CREATE INDEX "SAPD01 "."/BIC/FROWCUBE1~050" ON "SAPD01 "."/BIC/FROWCUBE1"

("KEY_ROWCUBE12" ASC)

PCTFREE 0 COMPRESS YES

INCLUDE NULL KEYS ALLOW REVERSE SCANS;

CREATE INDEX "SAPD01 "."/BIC/FROWCUBE1~060" ON "SAPD01 "."/BIC/FROWCUBE1"

("KEY_ROWCUBE13" ASC)

PCTFREE 0 COMPRESS YES

INCLUDE NULL KEYS ALLOW REVERSE SCANS;

CREATE INDEX "SAPD01 "."/BIC/FROWCUBE1~070" ON "SAPD01 "."/BIC/FROWCUBE1"

("KEY_ROWCUBE14" ASC)

PCTFREE 0 COMPRESS YES

INCLUDE NULL KEYS ALLOW REVERSE SCANS;

CREATE INDEX "SAPD01 "."/BIC/FROWCUBE1~080" ON "SAPD01 "."/BIC/FROWCUBE1"

("KEY_ROWCUBE15" ASC)

PCTFREE 0 COMPRESS YES

INCLUDE NULL KEYS ALLOW REVERSE SCANS;

The table has two MDC dimensions and six single-column indexes that must be maintained during INSERT/UPDATE/DELETE and LOAD operations.

Comparison of the E fact tables

You can use the DB2 db2look tool to extract the DDL for the two fact tables.

db2look -d D01 -a -e -t "/BIC/EBLUCUBE1"

Example 8-6 shows the DDL for the table.

Example 8-6 DDL of column-organized E fact table

CREATE TABLE "SAPD01 "."/BIC/EBLUCUBE1" (

"KEY_BLUCUBE1P" INTEGER NOT NULL WITH DEFAULT 0 ,

"KEY_BLUCUBE1T" INTEGER NOT NULL WITH DEFAULT 0 ,

"KEY_BLUCUBE1U" INTEGER NOT NULL WITH DEFAULT 0 ,

"KEY_BLUCUBE11" INTEGER NOT NULL WITH DEFAULT 0 ,

"KEY_BLUCUBE12" INTEGER NOT NULL WITH DEFAULT 0 ,

"KEY_BLUCUBE13" INTEGER NOT NULL WITH DEFAULT 0 ,

"KEY_BLUCUBE14" INTEGER NOT NULL WITH DEFAULT 0 ,

"KEY_BLUCUBE15" INTEGER NOT NULL WITH DEFAULT 0 ,

"/B49/S_CRMEM_CST" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_CRMEM_QTY" DECIMAL(17,3) NOT NULL WITH DEFAULT 0 ,

"/B49/S_CRMEM_VAL" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_INCORDCST" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_INCORDQTY" DECIMAL(17,3) NOT NULL WITH DEFAULT 0 ,

"/B49/S_INCORDVAL" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_INVCD_CST" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_INVCD_QTY" DECIMAL(17,3) NOT NULL WITH DEFAULT 0 ,

"/B49/S_INVCD_VAL" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_OPORDQTYB" DECIMAL(17,3) NOT NULL WITH DEFAULT 0 ,

"/B49/S_OPORDVALS" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_ORD_ITEMS" DECIMAL(17,3) NOT NULL WITH DEFAULT 0 ,

"/B49/S_RTNSCST" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_RTNSQTY" DECIMAL(17,3) NOT NULL WITH DEFAULT 0 ,

"/B49/S_RTNSVAL" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_RTNS_ITEM" DECIMAL(17,3) NOT NULL WITH DEFAULT 0 )

DISTRIBUTE BY HASH("KEY_BLUCUBE11",

"KEY_BLUCUBE12",

"KEY_BLUCUBE13",

"KEY_BLUCUBE14",

"KEY_BLUCUBE15",

"KEY_BLUCUBE1T",

"KEY_BLUCUBE1U")

IN "D01#FACTD" INDEX IN "D01#FACTI"

ORGANIZE BY COLUMN;

ALTER TABLE "SAPD01 "."/BIC/EBLUCUBE1"

ADD CONSTRAINT "/BIC/EBLUCUBE1~P" UNIQUE

("KEY_BLUCUBE1T",

"KEY_BLUCUBE11",

"KEY_BLUCUBE12",

"KEY_BLUCUBE13",

"KEY_BLUCUBE14",

"KEY_BLUCUBE15",

"KEY_BLUCUBE1U",

"KEY_BLUCUBE1P");

The table has one UNIQUE constraint. In addition, a synopsis table is created in schema SYSIBM.

Use the DB2 db2look tool to extract the DDL of the second row-organized fact table:

db2look -d D01 -a -e -t "/BIC/EROWCUBE1"

Example 8-7 shows the DDL for the table.

Example 8-7 DDL for the row-organized fact table

CREATE TABLE "SAPD01 "."/BIC/EROWCUBE1" (

"KEY_ROWCUBE1P" INTEGER NOT NULL WITH DEFAULT 0 ,

"KEY_ROWCUBE1T" INTEGER NOT NULL WITH DEFAULT 0 ,

"KEY_ROWCUBE1U" INTEGER NOT NULL WITH DEFAULT 0 ,

"KEY_ROWCUBE11" INTEGER NOT NULL WITH DEFAULT 0 ,

"KEY_ROWCUBE12" INTEGER NOT NULL WITH DEFAULT 0 ,

"KEY_ROWCUBE13" INTEGER NOT NULL WITH DEFAULT 0 ,

"KEY_ROWCUBE14" INTEGER NOT NULL WITH DEFAULT 0 ,

"KEY_ROWCUBE15" INTEGER NOT NULL WITH DEFAULT 0 ,

"SID_0CALMONTH" INTEGER NOT NULL WITH DEFAULT 0 ,

"/B49/S_CRMEM_CST" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_CRMEM_QTY" DECIMAL(17,3) NOT NULL WITH DEFAULT 0 ,

"/B49/S_CRMEM_VAL" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_INCORDCST" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_INCORDQTY" DECIMAL(17,3) NOT NULL WITH DEFAULT 0 ,

"/B49/S_INCORDVAL" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_INVCD_CST" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_INVCD_QTY" DECIMAL(17,3) NOT NULL WITH DEFAULT 0 ,

"/B49/S_INVCD_VAL" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_OPORDQTYB" DECIMAL(17,3) NOT NULL WITH DEFAULT 0 ,

"/B49/S_OPORDVALS" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_ORD_ITEMS" DECIMAL(17,3) NOT NULL WITH DEFAULT 0 ,

"/B49/S_RTNSCST" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_RTNSQTY" DECIMAL(17,3) NOT NULL WITH DEFAULT 0 ,

"/B49/S_RTNSVAL" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_RTNS_ITEM" DECIMAL(17,3) NOT NULL WITH DEFAULT 0 )

COMPRESS YES ADAPTIVE

DISTRIBUTE BY HASH("KEY_ROWCUBE11",

"KEY_ROWCUBE12",

"KEY_ROWCUBE13",

"KEY_ROWCUBE14",

"KEY_ROWCUBE15",

"KEY_ROWCUBE1T",

"KEY_ROWCUBE1U")

IN "D01#FACTD" INDEX IN "D01#FACTI"

ORGANIZE BY ROW USING (

( "SID_0CALMONTH" ) )

;

ALTER TABLE "SAPD01 "."/BIC/EROWCUBE1" LOCKSIZE BLOCKINSERT;

CREATE INDEX "SAPD01 "."/BIC/EROWCUBE1~020" ON "SAPD01 "."/BIC/EROWCUBE1"

("KEY_ROWCUBE1T" ASC)

PCTFREE 0 COMPRESS YES

INCLUDE NULL KEYS ALLOW REVERSE SCANS;

CREATE INDEX "SAPD01 "."/BIC/EROWCUBE1~040" ON "SAPD01 "."/BIC/EROWCUBE1"

("KEY_ROWCUBE11" ASC)

PCTFREE 0 COMPRESS YES

INCLUDE NULL KEYS ALLOW REVERSE SCANS;

CREATE INDEX "SAPD01 "."/BIC/EROWCUBE1~050" ON "SAPD01 "."/BIC/EROWCUBE1"

("KEY_ROWCUBE12" ASC)

PCTFREE 0 COMPRESS YES

INCLUDE NULL KEYS ALLOW REVERSE SCANS;

CREATE INDEX "SAPD01 "."/BIC/EROWCUBE1~060" ON "SAPD01 "."/BIC/EROWCUBE1"

("KEY_ROWCUBE13" ASC)

PCTFREE 0 COMPRESS YES

INCLUDE NULL KEYS ALLOW REVERSE SCANS;

CREATE INDEX "SAPD01 "."/BIC/EROWCUBE1~070" ON "SAPD01 "."/BIC/EROWCUBE1"

("KEY_ROWCUBE14" ASC)

PCTFREE 0 COMPRESS YES

INCLUDE NULL KEYS ALLOW REVERSE SCANS;

CREATE INDEX "SAPD01 "."/BIC/EROWCUBE1~080" ON "SAPD01 "."/BIC/EROWCUBE1"

("KEY_ROWCUBE15" ASC)

PCTFREE 0 COMPRESS YES

INCLUDE NULL KEYS ALLOW REVERSE SCANS;

CREATE UNIQUE INDEX "SAPD01 "."/BIC/EROWCUBE1~P" ON "SAPD01 "."/BIC/EROWCUBE1"

("KEY_ROWCUBE1T" ASC,

"KEY_ROWCUBE11" ASC,

"KEY_ROWCUBE12" ASC,

"KEY_ROWCUBE13" ASC,

"KEY_ROWCUBE14" ASC,

"KEY_ROWCUBE15" ASC,

"KEY_ROWCUBE1U" ASC,

"KEY_ROWCUBE1P" ASC)

PCTFREE 0 COMPRESS YES

INCLUDE NULL KEYS ALLOW REVERSE SCANS;

The table has one MDC dimension, six single-column indexes, and one unique multi-column index. The multi-column index corresponds to the unique constraint of the column-organized table.

Comparison of dimension tables

As an example, we use the tables created for dimension 3 of InfoCubes /BIC/DBLUCUBE13 and /BIC/DROWCUBE13.

We use the DB2 db2look tool to extract the DDL for the column-organized dimension table:

db2look -d D01 -a -e -t "/BIC/DBLUCUBE13"

Example 8-8 shows the DDL for the table.

Example 8-8 DDL of column-organized dimension table

CREATE TABLE "SAPD01 "."/BIC/DBLUCUBE13" (

"DIMID" INTEGER NOT NULL WITH DEFAULT 0 ,

"/B49/S_DISTR_CHA" INTEGER NOT NULL WITH DEFAULT 0 ,

"/B49/S_DIVISION" INTEGER NOT NULL WITH DEFAULT 0 ,

"/B49/S_SALESORG" INTEGER NOT NULL WITH DEFAULT 0 )

IN "D01#DIMD" INDEX IN "D01#DIMI"

ORGANIZE BY COLUMN;

ALTER TABLE "SAPD01 "."/BIC/DBLUCUBE13"

ADD CONSTRAINT "/BIC/DBLUCUBE13~0" PRIMARY KEY

("DIMID");

ALTER TABLE "SAPD01 "."/BIC/DBLUCUBE13"

ADD CONSTRAINT "/BIC/DBLUCUBE13~99" UNIQUE

("/B49/S_DISTR_CHA",

"/B49/S_DIVISION",

"/B49/S_SALESORG",

"DIMID");

The table has one primary key constraint and a unique constraint /BIC/DBLUCUBE13~99. This constraint is not created on row-organized dimension tables. It is used during ETL processing when new data is inserted into the InfoCube.

We use the DB2 db2look tool to extract the DDL for the row-organized dimension table.

db2look -d D01 -a -e -t "/BIC/DBLUCUBE13"

Example 8-9 shows the DDL for the table.

Example 8-9 DDL of row-organized dimension table

CREATE TABLE "SAPD01 "."/BIC/DROWCUBE13" (

"DIMID" INTEGER NOT NULL WITH DEFAULT 0 ,

"/B49/S_DISTR_CHA" INTEGER NOT NULL WITH DEFAULT 0 ,

"/B49/S_DIVISION" INTEGER NOT NULL WITH DEFAULT 0 ,

"/B49/S_SALESORG" INTEGER NOT NULL WITH DEFAULT 0 )

COMPRESS YES ADAPTIVE

IN "D01#DIMD" INDEX IN "D01#DIMI"

ORGANIZE BY ROW;

-- DDL Statements for Indexes on Table "SAPD01 "."/BIC/DROWCUBE13"

CREATE UNIQUE INDEX "SAPD01 "."/BIC/DROWCUBE13~0" ON "SAPD01 "."/BIC/DROWCUBE13"

("DIMID" ASC)

PCTFREE 0 COMPRESS YES

INCLUDE NULL KEYS ALLOW REVERSE SCANS;

-- DDL Statements for Primary Key on Table "SAPD01 "."/BIC/DROWCUBE13"

ALTER TABLE "SAPD01 "."/BIC/DROWCUBE13"

ADD CONSTRAINT "/BIC/DROWCUBE13~0" PRIMARY KEY

("DIMID");

CREATE INDEX "SAPD01 "."/BIC/DROWCUBE13~01" ON "SAPD01 "."/BIC/DROWCUBE13"

("/B49/S_DISTR_CHA" ASC,

"/B49/S_DIVISION" ASC,

"/B49/S_SALESORG" ASC)

PCTFREE 0 COMPRESS YES

INCLUDE NULL KEYS ALLOW REVERSE SCANS;

CREATE INDEX "SAPD01 "."/BIC/DROWCUBE13~02" ON "SAPD01 "."/BIC/DROWCUBE13"

("/B49/S_DIVISION" ASC)

PCTFREE 0 COMPRESS YES

INCLUDE NULL KEYS ALLOW REVERSE SCANS;

CREATE INDEX "SAPD01 "."/BIC/DROWCUBE13~03" ON "SAPD01 "."/BIC/DROWCUBE13"

("/B49/S_SALESORG" ASC)

PCTFREE 0 COMPRESS YES

INCLUDE NULL KEYS ALLOW REVERSE SCANS;

The row-organized table has the same primary key constraint as the column-organized table and three additional indexes. There is no correspondence to the unique constraint /BIC/DBLUCUBE13~99.

Due to the structure and access patterns of dimensions tables, data skipping brings no benefit. Thus, as of DB2 Cancun Release 10.5.0.4, synopsis tables are no longer created for InfoCube dimension tables. This is achieved with the DB2 registry setting DB2_CDE_WITHOUT_SYNOPSIS=%:/%/D% which is part of DB2_WORKLOAD=SAP.

If you have created column-organized InfoCubes with DB2 10.5 FP3aSAP or lower, you can remove the dimension synopsis tables with the following steps:

1. Identify the dimension tables for which synopsis tables exist with the following SQL statement:

SELECT

VARCHAR(TABNAME,40) AS SYNOPSIS,

SUBSTR(TABNAME,23,20) AS DIMENSION

FROM

SYSCAT.TABLES

WHERE

TABSCHEMA='SYSIBM' AND

TABNAME LIKE 'SYN%/%/D%'

For example, the output might contain entries similar to Example 8-10 for the dimension tables of BLUCUBE1.

Example 8-10 Example output

SYNOPSIS DIMENSION

---------------------------------------- --------------------

SYN140723110501110392_/BIC/DBLUCUBE13 /BIC/DBLUCUBE13

SYN140723110501383201_/BIC/DBLUCUBE14 /BIC/DBLUCUBE14

SYN140723110500543747_/BIC/DBLUCUBE11 /BIC/DBLUCUBE11

SYN140723110500936031_/BIC/DBLUCUBE12 /BIC/DBLUCUBE12

SYN140723110501571646_/BIC/DBLUCUBE15 /BIC/DBLUCUBE15

SYN140723110501770345_/BIC/DBLUCUBE1P /BIC/DBLUCUBE1P

SYN140723110501952166_/BIC/DBLUCUBE1T /BIC/DBLUCUBE1T

SYN140723110502188692_/BIC/DBLUCUBE1U /BIC/DBLUCUBE1U

2. Run ADMIN_MOVE_TABLE for each dimension table, where <schema> is the table schema and <table> is the table name, as follows:

CALL SYSPROC.ADMIN_MOVE_TABLE(‘<schema>’,’<table>’,’’,’’,’’,’’,’’,’’,’’,’COPY_USE_LOAD’,’MOVE’)

With this step, the dimension tables are copied to new column-organized tables without synopsis tables.

InfoCube index, statistics, and space management

When you load data into InfoCubes, you can drop fact table indexes before the data load and re-create them after. For row-organized InfoCubes with many indexes defined on the fact tables, re-creating the fact table indexes might take a long time if the fact table is large.

Because BLU Acceleration needs only primary key and unique constraints but not secondary indexes, the drop and re-create index operations in SAP BW do not perform any work. Therefore, they are fast. If have dropping and re-creating fact table indexes included in your process chains, modifications are necessary. These operations return immediately.

The SAP BW administration window for InfoCubes also contains the options to drop and to re-create InfoCube indexes. You get there when you call up the context menu of an InfoCube and select Manage, as shown in Figure 8-25.

Figure 8-25 Call-up of the InfoCube administration window in the SAP BW Datawarehousing Workbench

Figure 8-26 shows the “Performance” tab page of the InfoCube administration window that has the following options:

•Delete DB Indexes (Now)

•Repair DB Indexes (Now)

•Create DB Index (Batch)

•Delete DB Index (Batch)

These options perform no work on BLU Acceleration InfoCubes.

Figure 8-26 InfoCube index and statistics management panel

As for row-organized tables, DB2 collects statistics on BLU Acceleration tables automatically. You might still want to include statistics collection into your data load process chains to make sure that InfoCube statistics are up-to-date immediately after new data was loaded.

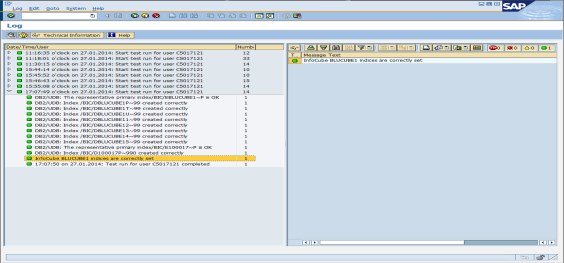

SAP BW transaction RSRV provides an option to check and repair the indexes of an InfoCube. You can run this check also for BLU Acceleration InfoCubes. Figure 8-27 shows “Database indexes of InfoCube and its Aggregates” is run for the BLU Acceleration InfoCube BLUCUBE1.

Figure 8-27 SAP BW Transaction RSRV: InfoCube index check and repair function

The result is shown in Figure 8-28. Only the unique constraint that is defined on the E fact table and the unique constraints that are defined on the dimension tables are listed.

Figure 8-28 Result of index check for sample BLU Acceleration InfoCube

Figure 8-29 shows the output for the row-organized InfoCube ROWCUBE1 in comparison. For this InfoCube, many more indexes are listed.

Figure 8-29 Comparison of index check output of BLU Acceleration InfoCube with row-organized InfoCube

8.5.2 Flat InfoCubes in SAP BW 7.40

This section provides information about flat InfoCubes, which become available for DB2 as of SAP BW 7.40 Support Package 8. We show how to create flat InfoCubes and how to convert existing standard InfoCubes to flat InfoCubes. We illustrate the procedure with an example in an SAP BW 7.40 system in which the BI DataMart Benchmark InfoCubes have been installed (InfoArea BI Benchmark).

Note the following important information about flat InfoCubes:

•Flat InfoCubes are only supported with DB2 for LUW Cancun Release 10.5.0.4 and higher. The tables of flat InfoCubes are always created as column-organized tables. For optimal performance of reporting on flat InfoCubes the InfoObjects that are referenced by the InfoCubes should also be implemented with column-organized tables (see 8.5.4, “Column-Organized InfoObjects in SAP BW” on page 332).

•Both cumulative and non-cumulative InfoCubes can be created as flat InfoCubes. However, DB2 does not support the creation of real-time InfoCubes as flat InfoCubes.

•Flat InfoCubes cannot be created directly in the Data Warehousing Workbench. You must create a standard InfoCube first and then convert it to flat with report RSDU_REPART_UI.

•You can also convert existing standard InfoCubes that contain data to flat InfoCubes with report RSDU_REPART_UI.

•When you install InfoCubes from the SAP BI Content, they are created as non-flat InfoCubes. If you want these InfoCubes to be flat you need to convert them with report RSDU_REPART_UI.

•If you want to create a flat semantically partitioned InfoCube, you must first create a non-flat InfoCube and then convert the InfoCubes of which the semantically partitioned InfoCube consists to flat InfoCubes with report RSDU_REPART_UI.

•When you create a flat InfoCube and transport it to another SAP BW system, the following is created in the target system:

– If the InfoCube already exists in the target system, the target system settings are preserved, that is:

• If the InfoCube is flat in the target system it remains flat.

• If the InfoCube is non-flat in the target system it remains non-flat.

– If the InfoCube does not yet exist in the target system it is created as non-flat InfoCube and has to be converted to flat with report RSDU_REPART_UI.

Follow these steps to create a new flat InfoCube:

1. Create a new InfoCube in the Data Warehousing Workbench. New InfoCubes are always created as standard non-flat InfoCubes with two fact tables and up to 16 dimension tables.

2. Run report RSDU_REPART_UI to schedule and run a batch job that converts the standard InfoCube into a flat InfoCube.

In the following example, the creation of a flat InfoCube is shown that illustrates these steps in more detail:

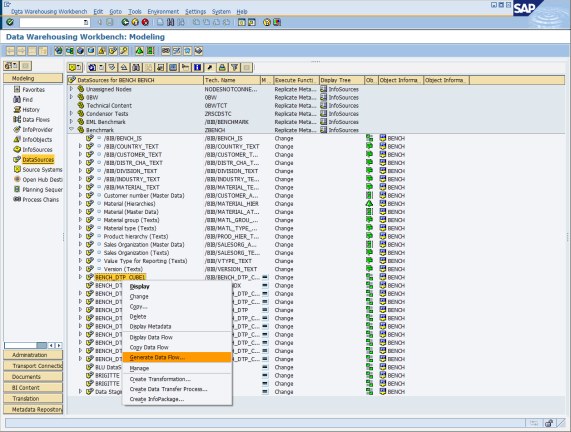

1. Call transaction RSA1 to get to the Data Warehousing Workbench. In the InfoArea BI Benchmark, select Benchmark InfoCube 01 (technical name is /BIB/BENCH01), and choose Copy from the context menu (Figure 8-30).

Figure 8-30 Create a copy of InfoCube /BIB/BENCH01

2. In the Edit InfoCube window, enter FLATCUBE1 as the technical name, BLU Flat Demo InfoCube 1 as the description, and select EXAMPLE (BLU Acceleration Example InfoArea) as the InfoArea. Click the Create icon (Figure 8-31).

Figure 8-31 Create InfoCube window for InfoCube FLATCUBE1

3. The “Edit InfoCube” window in the Data Warehousing Workbench opens. Save and activate the InfoCube (Figure 8-32 on page 300). You do not need to choose between index clustering, multi-dimensional clustering (MDC) or column-organized tables in the Clustering dialog because it is not important how the InfoCube tables are created. When you convert the InfoCube to a flat InfoCube new column-organized tables are created for the InfoCube.

Figure 8-32 Edit InfoCube window for InfoCube FLATCUBE1

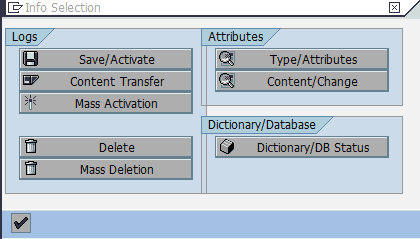

4. First the InfoCube is created as an enhanced star schema InfoCube with two fact and 16 dimension tables. You can check this by displaying the list of database tables that were created for the InfoCube: Choose Extras → Information (logs/status) from the menu. The “Info Selection” pop-up window is shown. Choose Dictionary/DB Status (Figure 8-33).

Figure 8-33 Info Selection window for InfoCube FLATCUBE1

5. You see the list of tables (Figure 8-34).

Figure 8-34 List of database tables of InfoCube FLATCUBE1 before conversion to a flat InfoCube

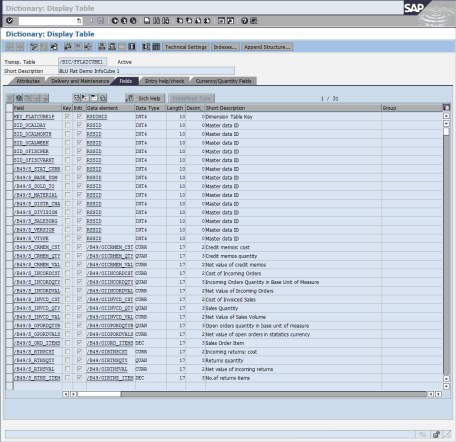

6. Double-click the F fact table /BIC/FFLATCUBE1. In the Dictionary: Display Table window, you see that the table has 8 dimension key columns and 16 key figures (Figure 8-35).

Figure 8-35 Display of F fact table of InfoCube FLATCUBE1 before conversion to a flat InfoCube

7. You have to convert the InfoCube to a flat InfoCube using report RSDU_REPART_UI: Make sure that you switch from Edit mode to Display mode in the Data Warehousing Workbench or that you close the Data Warehousing Workbench so that the InfoCube is not locked in the SAP BW system. Call transaction SE38 and run report RSDU_REPART_UI (Figure 8-36).

Figure 8-36 Report for converting standard InfoCubes to flat InfoCubes

8. Enter the name of the InfoCube (FLATCUBE1) in the InfoCube entry field and select Non-flat to flat conversion. For the conversion, a batch job is created that you can schedule. Choose Initialize (Figure 8-37 on page 304).

Figure 8-37 Initialize flat InfoCube conversion

9. Several pop-up windows with questions are shown. These questions are important when you convert InfoCubes that contain data to flat InfoCubes. In the current example, where the InfoCube is new and thus empty, you can answer these questions with Yes:

– Confirm that you have taken a database backup (Figure 8-38).

Figure 8-38 Inquiry about database backup

This is important when you convert existing InfoCubes with data to flat InfoCubes. During the conversion, new database tables are created, the data is copied to the new tables and the old tables are dropped. When you have a database backup, you can always go back to the state before the conversion was started.

– Confirm that you have compressed all requests that contain historical movements (Inquiry about compression of requests with historical movements). See Figure 8-39.

Figure 8-39 Inquiry about compression of requests with historical movements

This is important when you convert existing InfoCubes with data to flat InfoCubes. When you compress historical movements of non-flat InfoCubes, you set the “No Marker Update” flag in the compression window. For flat InfoCubes, this is handled differently: you define directly in the Data Transfer Process (DTP) whether you load requests that contain historical movements. However, this only has an effect for requests that are newly loaded after the conversion of the InfoCube to a flat InfoCube. For requests that already reside in the F fact table, it is no longer possible to detect and correctly compress requests that contain historical movements after the InfoCube conversion to a flat InfoCube.

|

Note: When you convert existing InfoCubes with data, the report checks whether any uncompressed requests with package dimension ID 1 or 2 exist. If this is the case you must compress these requests before the conversion. The package dimension IDs 1 and 2 are reserved for marker records of non-cumulative InfoCubes and for historical movement data in flat InfoCubes.

|

10. The information message shown in Figure 8-40 is displayed.

Figure 8-40 Message about flat InfoCube conversion job creation

When you confirm the message, the “Start Time” window in Figure 8-41 is shown.

Figure 8-41 Schedule flat InfoCube conversion job

Choose Immediate as start time for the conversion batch job and choose Enter. The following information message is shown (Figure 8-42).

Figure 8-42 Message about start of flat InfoCube conversion job

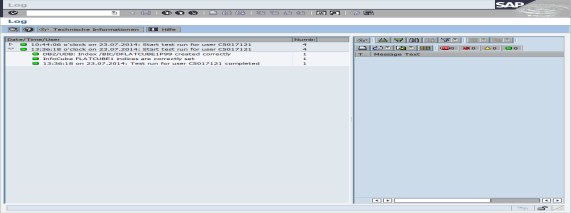

11. On the window of report RSDU_REPART_UI, choose Monitor to track the progress of the conversion until it is finished successfully (Figure 8-43).

Figure 8-43 Successful conversion of InfoCube FLATCUBE1 to a flat InfoCube

12. Go back to the Data Warehousing Workbench and display the InfoCube FLATCUBE1 (Figure 8-44).

Figure 8-44 Edit InfoCube window for flat InfoCube FLATCUBE1

13. Choose Extras → Information (logs/status) from the menu. From the Info Selection pop-up window, select Dictionary/DB status. Now the list of InfoCube tables only contains two tables: the F fact table and the package dimension table (Figure 8-45).

Figure 8-45 Overview of database tables of flat InfoCube FLATCUBE1

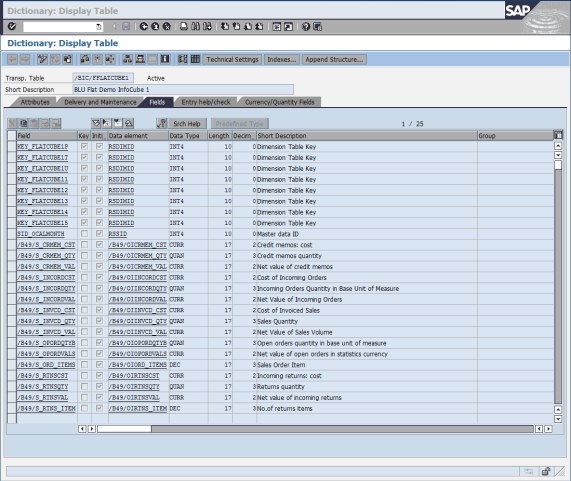

14. Double-click the F fact table /BIC/FFLATCUBE1 to get to the SAP Data Dictionary.

The table fields contain the package dimension key column, 14 columns for the SIDs of all the characteristics, and the 16 key figure columns (Figure 8-46).

Figure 8-46 Display table /BIC/FFLATCUBE1

15. Choose Utilities → Database Object → Database Utility from the menu and select Storage Parameters. In the storage parameters window, you see that the table is column-organized (Figure 8-47).

Figure 8-47 Storage parameters of fact table of flat InfoCube FLATCUBE1

16. Repeat steps 14 and 15 for the package dimension table. You see that the package dimension table is also column-organized.

Table layout of flat InfoCubes

You can use the DB2 db2look tool to extract the DDL for the fact table:

db2look -d D01 -a -e -t "/BIC/FFLATCUBE1"

Example 8-11 shows the DDL of the table.

Example 8-11 DDL describing the fact table of a flat InfoCube

CREATE TABLE "SAPPB3 "."/BIC/FFLATCUBE1" (

"KEY_FLATCUBE1P" INTEGER NOT NULL WITH DEFAULT 0 ,

"/B49/S_BASE_UOM" INTEGER NOT NULL WITH DEFAULT 0 ,

"/B49/S_DISTR_CHA" INTEGER NOT NULL WITH DEFAULT 0 ,

"/B49/S_DIVISION" INTEGER NOT NULL WITH DEFAULT 0 ,

"/B49/S_MATERIAL" INTEGER NOT NULL WITH DEFAULT 0 ,

"/B49/S_SALESORG" INTEGER NOT NULL WITH DEFAULT 0 ,

"/B49/S_SOLD_TO" INTEGER NOT NULL WITH DEFAULT 0 ,

"/B49/S_STAT_CURR" INTEGER NOT NULL WITH DEFAULT 0 ,

"/B49/S_VERSION" INTEGER NOT NULL WITH DEFAULT 0 ,

"/B49/S_VTYPE" INTEGER NOT NULL WITH DEFAULT 0 ,

"SID_0CALDAY" INTEGER NOT NULL WITH DEFAULT 0 ,

"SID_0CALMONTH" INTEGER NOT NULL WITH DEFAULT 0 ,

"SID_0CALWEEK" INTEGER NOT NULL WITH DEFAULT 0 ,

"SID_0FISCPER" INTEGER NOT NULL WITH DEFAULT 0 ,

"SID_0FISCVARNT" INTEGER NOT NULL WITH DEFAULT 0 ,

"/B49/S_CRMEM_CST" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_CRMEM_QTY" DECIMAL(17,3) NOT NULL WITH DEFAULT 0 ,

"/B49/S_CRMEM_VAL" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_INCORDCST" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_INCORDQTY" DECIMAL(17,3) NOT NULL WITH DEFAULT 0 ,

"/B49/S_INCORDVAL" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_INVCD_CST" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_INVCD_QTY" DECIMAL(17,3) NOT NULL WITH DEFAULT 0 ,

"/B49/S_INVCD_VAL" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_OPORDQTYB" DECIMAL(17,3) NOT NULL WITH DEFAULT 0 ,

"/B49/S_OPORDVALS" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_ORD_ITEMS" DECIMAL(17,3) NOT NULL WITH DEFAULT 0 ,

"/B49/S_RTNSCST" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_RTNSQTY" DECIMAL(17,3) NOT NULL WITH DEFAULT 0 ,

"/B49/S_RTNSVAL" DECIMAL(17,2) NOT NULL WITH DEFAULT 0 ,

"/B49/S_RTNS_ITEM" DECIMAL(17,3) NOT NULL WITH DEFAULT 0 )

DISTRIBUTE BY HASH("/B49/S_SOLD_TO",

"/B49/S_MATERIAL",

"/B49/S_DISTR_CHA",

"/B49/S_VERSION",

"/B49/S_VTYPE",

"SID_0CALDAY",

"/B49/S_BASE_UOM")

IN "PB3#FACTD" INDEX IN "PB3#FACTI"

ORGANIZE BY COLUMN;

Use the following command to get the DDL of the package dimension table.

db2look -d D01 -a -e -t "/BIC/FFLATCUBE1"

Example 8-12 shows the result.

Example 8-12 DDL describing the package dimension table of a flat InfoCube

CREATE TABLE "SAPPB3 "."/BIC/DFLATCUBE1P" (

"DIMID" INTEGER NOT NULL WITH DEFAULT 0 ,

"SID_0CHNGID" INTEGER NOT NULL WITH DEFAULT 0 ,

"SID_0RECORDTP" INTEGER NOT NULL WITH DEFAULT 0 ,

"SID_0REQUID" INTEGER NOT NULL WITH DEFAULT 0 )

IN "PB3#DIMD" INDEX IN "PB3#DIMI"

ORGANIZE BY COLUMN;

-- DDL Statements for Primary Key on Table "SAPPB3 "."/BIC/DFLATCUBE1P"

ALTER TABLE "SAPPB3 "."/BIC/DFLATCUBE1P"

ADD CONSTRAINT "/BIC/DFLATCUBE1P~0" PRIMARY KEY

("DIMID");

-- DDL Statements for Unique Constraints on Table "SAPPB3 "."/BIC/DFLATCUBE1P"

ALTER TABLE "SAPPB3 "."/BIC/DFLATCUBE1P"

ADD CONSTRAINT "/BIC/DFLATCUBE1P99" UNIQUE

("SID_0CHNGID",

"SID_0RECORDTP",

"SID0REQUID");

Flat InfoCube index, statistics, and space management

The fact table of flat InfoCubes has no primary key or unique constraint and no secondary indexes. Therefore overhead for index management does not occur.

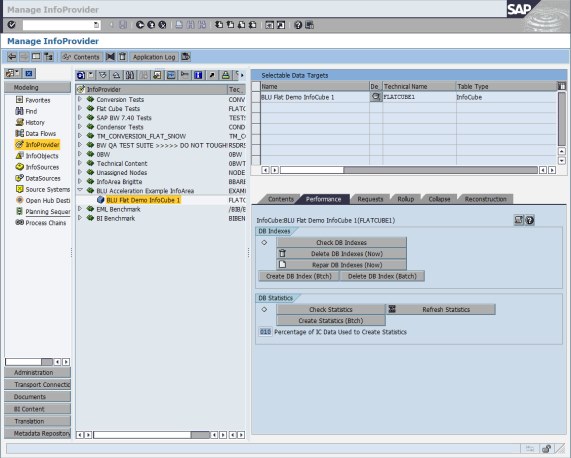

The “Performance” tab of the SAP BW administration window for flat InfoCubes (Figure 8-48) does not contain any options for aggregates because aggregates cannot be defined on flat InfoCubes.

Figure 8-48 Manage flat InfoCube FLATCUBE1 - Performance tab

The “DB indexes” options perform no work on flat InfoCubes.

SAP BW transaction RSRV provides an option to check and repair the indexes of an InfoCube. You can run this check also for flat InfoCubes. Figure 8-49 shows “Database indexes of InfoCube and its Aggregates” is run for the BLU Acceleration InfoCube FLATCUBE1.

Figure 8-49 Check database indexes of flat InfoCube FLATCUBE1

The result is shown in Figure 8-50. Only the unique constraint that is defined on the package dimension table is listed.

Figure 8-50 Result of database index check for flat InfoCube FLATCUBE1

8.5.3 Column-Organized DataStore Objects (DSOs) in SAP BW

In this section, we show how to create standard and write-optimized DSOs such that the active table is created as column-organized table. We illustrate the procedure with an example in an SAP BW 7.40 system in which the BI DataMart Benchmark InfoCubes have been installed (InfoArea BI Benchmark). The example works in exactly the same way in the other SAP BW releases in which BLU Acceleration for DSOs is supported.

The creation of DSOs with a column-organized active table is supported as of DB2 for LUW Cancun Release 10.5.0.4 and in SAP BW as of SAP BW 7.0. For detailed information about the required SAP Support Packages and SAP Notes see 8.2, “Prerequisites and restrictions for using BLU Acceleration in SAP BW”.

Creating DSOs with a column-organized active table can have the following advantages: