Whenever an organization performs a risk assessment, it tries to consider multiple variables based on the user, asset, criticality, and location, as well as many technical criteria such as hardening, exploits, vulnerabilities, risk surface, exposure, and maintenance. Building a complete risk assessment model manually is a daunting task if you consider all the possible vectors and methodologies needed to actually quantify the risk.

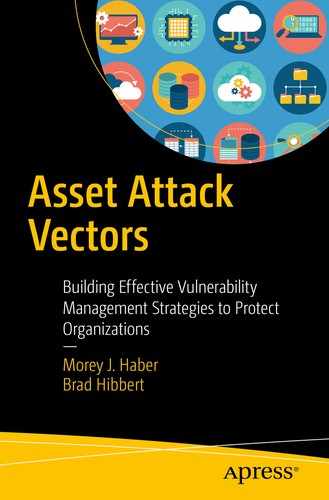

In general, risk assessments start with a simple model (as shown in Figure 3-1), in which each vector is documented and assigned a risk outcome. When dealing with multiple risk vectors, the results can be averaged, summed, weighted, or fed into other models to produce a final risk score. To make this process efficient and reliable, automation and the minimization of human interaction are of primary concern. Any time human judgment is applied to a risk vector, the potential for deviations in the results increases because of subjective opinions and human error. Risk assessment models therefore benefit most when reliable, automated data is readily available for interpretation, rather than relying on user discretion and manual assignment.

Figure 3-1 Typical risk matrix
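To make the aggregation step concrete, the following is a minimal Python sketch of combining per-vector risk outcomes into a single score by summing, averaging, or weighting them. The vector names, the assumed 1 (low) to 5 (high) rating scale, and the weights are hypothetical illustrations, not values prescribed by any standard.

```python
from typing import Dict, Optional

# Hypothetical risk vectors, each rated on an assumed 1 (low) to 5 (high) scale.
RATINGS: Dict[str, float] = {
    "user": 2,
    "asset_criticality": 5,
    "location": 3,
    "exposure": 4,
}

# Hypothetical weights expressing how much each vector matters to this organization.
WEIGHTS: Dict[str, float] = {
    "user": 1.0,
    "asset_criticality": 3.0,
    "location": 0.5,
    "exposure": 2.0,
}


def aggregate_risk(ratings: Dict[str, float], method: str = "weighted",
                   weights: Optional[Dict[str, float]] = None) -> float:
    """Combine per-vector ratings into one score using the chosen method."""
    if not ratings:
        raise ValueError("at least one risk vector is required")
    if method == "sum":
        return float(sum(ratings.values()))
    if method == "average":
        return sum(ratings.values()) / len(ratings)
    if method == "weighted":
        if weights is None:
            raise ValueError("weighted aggregation needs weights")
        total_weight = sum(weights[name] for name in ratings)
        return sum(ratings[name] * weights[name] for name in ratings) / total_weight
    raise ValueError(f"unknown method: {method}")


if __name__ == "__main__":
    for method in ("sum", "average", "weighted"):
        print(method, round(aggregate_risk(RATINGS, method, WEIGHTS), 2))
```

Run as-is, this prints 14.0, 3.5, and 4.08. The weighted result sits above the plain average because the hypothetical weights emphasize asset criticality and exposure; the point is that once the ratings come from automated data, the same rule is applied identically every time.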

When documenting risks for cyber security, the industry has several well-known standards, discussed previously, ranging from CVE to CVSS. In their latest revisions, these standards focus on the technical and environmental aspects of cyber security and on more reliable overall scoring. Models such as CVSS have been designed to capture two distinct characteristics of a vulnerability. First, they provide a mechanism to communicate the inherent risk associated with the weakness in a “base” score. Second, the model enables an organization to adjust the base score using environmental and temporal modifiers. The environmental modifier adjusts the risk for a specific organization by examining the vulnerability in terms of its frequency and the criticality of the assets on which it is found. The temporal modifier adjusts for the likelihood of the vulnerability actually being leveraged, which may change over time. Together, these two modifiers allow an organization to account for specific environmental factors, exploits, criticality, and threat intelligence as part of its risk assessment. Unfortunately, while these modifiers can help determine the “real risk” associated with vulnerabilities, so that risk is prioritized more appropriately and remediation activities are mobilized accordingly, many organizations do not actively and consistently use environmental and temporal scoring because of its complexity and the time it requires. It is, after all, a manual process to assign them to assets and vulnerabilities. In addition, limiting the risk analysis to only these elements does not take into consideration several other factors including, but not limited to:

The existence and availability of the vulnerabilities in noncommercial exploit toolkits

The successful leveraging of the vulnerabilities to breach companies in the wild

The association of vulnerabilities to specific control objectives within regulatory mandates

The likelihood and detection of a breach based on the behavior of the users, mitigating controls, and detection capabilities of the organization
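The temporal modifier described earlier does not have to be assigned by hand once exploit and remediation data are available. The sketch below applies the CVSS v3.1 temporal formula: the base score multiplied by the Exploit Code Maturity, Remediation Level, and Report Confidence weights, then rounded up to one decimal place. The multiplier values and the integer-based Roundup routine are transcribed from the CVSS v3.1 specification; check them against the FIRST document before production use. The base score of 8.1 in the usage example is an arbitrary illustration, not the score of any particular CVE.

```python
import math

# CVSS v3.1 temporal metric weights ("X" means Not Defined).
EXPLOIT_CODE_MATURITY = {"X": 1.0, "H": 1.0, "F": 0.97, "P": 0.94, "U": 0.91}
REMEDIATION_LEVEL = {"X": 1.0, "U": 1.0, "W": 0.97, "T": 0.96, "O": 0.95}
REPORT_CONFIDENCE = {"X": 1.0, "C": 1.0, "R": 0.96, "U": 0.92}


def roundup(value: float) -> float:
    """Round up to one decimal place, per the CVSS v3.1 Roundup definition.

    Works on an integer representation to avoid floating-point artifacts.
    """
    int_input = round(value * 100000)
    if int_input % 10000 == 0:
        return int_input / 100000.0
    return (math.floor(int_input / 10000) + 1) / 10.0


def temporal_score(base: float, e: str = "X", rl: str = "X", rc: str = "X") -> float:
    """Adjust a base score for exploit maturity, fix availability, and confidence."""
    return roundup(base * EXPLOIT_CODE_MATURITY[e]
                   * REMEDIATION_LEVEL[rl] * REPORT_CONFIDENCE[rc])


if __name__ == "__main__":
    # Same hypothetical base score, two different threat pictures.
    print(temporal_score(8.1, e="H", rl="O", rc="C"))  # weaponized exploit, official fix
    print(temporal_score(8.1, e="U", rl="O", rc="C"))  # unproven exploit, official fix
```

The two calls print 7.7 and 7.1. The base score never changes; only the threat context does. Note that none of the additional factors listed above (exploit kits, breaches in the wild, regulatory mapping, user behavior) has a slot in this formula, which is why they must be brought in from other sources.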

For example, consider an application with a vulnerability. The vulnerability has a CVSS score and a security patch from the manufacturer, and the risk score is communicated consistently. So far, this works as expected. However, factors such as user behavior (how the application is actually used), the vertical markets being targeted through the vulnerability, and zero-day exploits all represent threat intelligence data that must be considered as part of your risk assessment in order to truly understand the threat.

As an example, consider the recent WannaCry outbreak based on EternalBlue and DoublePulsar. It represented the highest and most extreme risk from a vulnerability and exploit perspective. That was true from day one and is still true today. However, without Threat Intelligence, there is no gauge to tell whether the actual threat to the organization today is the same as when the worm was propagating through corporate networks in 2017. The risk is the same (the CVSS vulnerability score), but the actual threat is lower because of the kill switch that was discovered and activated on the Internet to stop the wormable aspects of the ransomware. Traditional vulnerability assessment solutions do not take this into consideration and still provide the same score, even though the threat is actually much lower. A similar mismatch, in the opposite direction, applies to Meltdown and Spectre, the processor vulnerabilities affecting Intel CPUs whose mitigations include microcode updates. As of the writing of this book, their CVSS score is 5.6 (on a scale of 0 to 10), but the actual perceived threat is significantly higher. This raises the question: is it hype, reality, or truly something to watch in the future? A RowHammer attack might actually be perceived as a bigger threat due to the nature of its exploitation.

Threat Intelligence is more than just a data feed of user behavior, real-time threats in the wild, active exploits, and temporal data. It gains the most value when it is merged with relevant information from your organization and your business vertical to provide a profile of the risk and threat, much like the Impact versus Likelihood axes of the sample risk matrix. Threat Intelligence helps define the Likelihood in the matrix based on activity in the wild and within other organizations, while traditional technical measurements define the Impact.
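A minimal sketch of that division of labor, assuming a 3 x 3 matrix: impact is derived from the CVSS base score using a simplified three-band version of the CVSS v3 qualitative severity ratings, while likelihood is derived from hypothetical threat-intelligence flags (a public exploit, active exploitation in the wild, targeting of your vertical). The flag names, the likelihood rule, and the cell ratings are illustrative assumptions, not an industry standard.

```python
from dataclasses import dataclass

# Hypothetical 3 x 3 matrix keyed by (likelihood, impact), each 1 (low) to 3 (high).
MATRIX = {
    (1, 1): "Low", (1, 2): "Low", (1, 3): "Medium",
    (2, 1): "Low", (2, 2): "Medium", (2, 3): "High",
    (3, 1): "Medium", (3, 2): "High", (3, 3): "Critical",
}


@dataclass
class ThreatSignals:
    """Hypothetical threat-intelligence flags for one vulnerability."""
    public_exploit: bool = False
    exploited_in_wild: bool = False
    targets_our_vertical: bool = False


def impact_level(cvss_base: float) -> int:
    """Collapse the CVSS v3 severity bands into three impact levels."""
    if cvss_base >= 7.0:  # High and Critical
        return 3
    if cvss_base >= 4.0:  # Medium
        return 2
    return 1              # Low and None


def likelihood_level(signals: ThreatSignals) -> int:
    """Start at 1 and add one level per active signal, capped at 3."""
    count = sum([signals.public_exploit, signals.exploited_in_wild,
                 signals.targets_our_vertical])
    return min(3, 1 + count)


def rate(cvss_base: float, signals: ThreatSignals) -> str:
    """Look up the matrix cell: technical impact versus intelligence-driven likelihood."""
    return MATRIX[(likelihood_level(signals), impact_level(cvss_base))]


if __name__ == "__main__":
    # Same hypothetical CVSS score under two different threat pictures.
    print(rate(8.1, ThreatSignals(True, True, True)))       # active outbreak
    print(rate(8.1, ThreatSignals(public_exploit=True)))    # exploit public, no campaign
```

This prints Critical and then High: the CVSS input is identical, and only the intelligence feeding the Likelihood axis has changed, which is the same distinction the WannaCry example draws between risk and threat.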

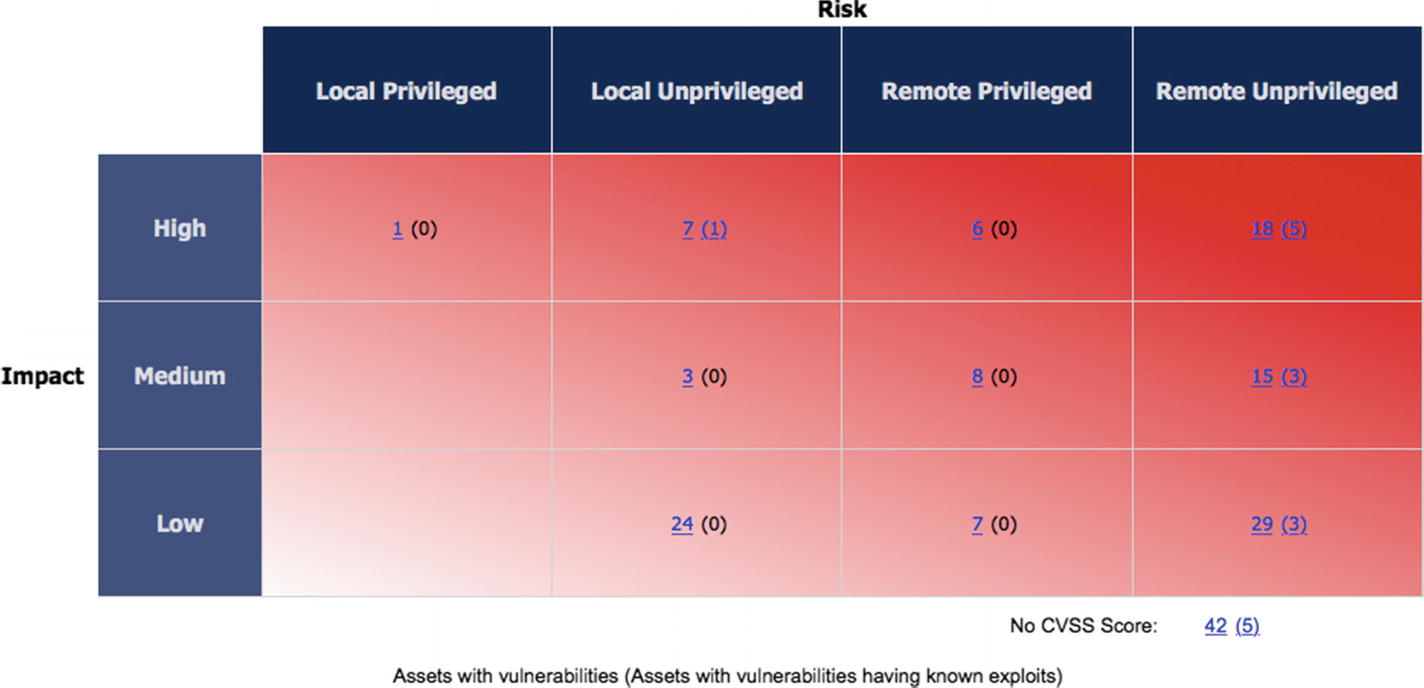

Threat intelligence, combined with well-established methods for assessing application, user, and environmental risk, provides the foundation for enhancing vulnerability data. If you can calculate the risk of vulnerable applications, application usage (user behavior), and threat intelligence (exploits), all aspects of the risk can be reported in an automated and coherent fashion. This provides a perspective over time, based on real-world problems and the threats your users and assets face as they use information technology to perform their daily business tasks. Figure 3-2 illustrates this mapping for a sample environment. It maps impact to risk and asset vulnerabilities to known exploits (shown by the numbers in parentheses). The data is processed using current temporal parameters to determine the highest-risk (and highest-threat) information for assets in your organization, providing a view that encompasses the requirements for threat intelligence.

Figure 3-2 Risk Matrix for vulnerability data and exploits
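A mapping in the spirit of Figure 3-2 can be approximated with a short script. This sketch assumes each finding has already been assigned an impact level and a likelihood level (for example, impact from CVSS and likelihood from threat intelligence); it tallies findings per matrix cell, sums their known exploits to mirror the numbers in parentheses, and ranks the highest-risk findings. The asset names, vulnerability IDs, and counts are hypothetical placeholders, not data taken from the figure.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple, Tuple

Cell = Tuple[int, int]  # (likelihood, impact), each 1 (low) to 3 (high)


class Finding(NamedTuple):
    """One vulnerability on one asset; all values here are hypothetical."""
    asset: str
    vuln_id: str
    impact: int
    likelihood: int
    known_exploits: int


def build_matrix(findings: List[Finding]) -> Dict[Cell, Tuple[int, int]]:
    """Map each matrix cell to (number of findings, total known exploits)."""
    cells: Dict[Cell, List[int]] = defaultdict(lambda: [0, 0])
    for f in findings:
        cell = cells[(f.likelihood, f.impact)]
        cell[0] += 1
        cell[1] += f.known_exploits
    return {key: (count, exploits) for key, (count, exploits) in cells.items()}


def render(matrix: Dict[Cell, Tuple[int, int]]) -> str:
    """Draw likelihood rows (high at the top) against impact columns."""
    lines = []
    for likelihood in (3, 2, 1):
        row = "".join(
            "{} ({})".format(*matrix.get((likelihood, impact), (0, 0))).rjust(8)
            for impact in (1, 2, 3)
        )
        lines.append(f"L{likelihood}" + row)
    lines.append("  " + "".join(f"I{impact}".rjust(8) for impact in (1, 2, 3)))
    return "\n".join(lines)


def highest_risk(findings: List[Finding], n: int = 3) -> List[Finding]:
    """Rank by likelihood x impact, breaking ties on known exploits."""
    return sorted(findings,
                  key=lambda f: (f.likelihood * f.impact, f.known_exploits),
                  reverse=True)[:n]


if __name__ == "__main__":
    findings = [
        Finding("web-01", "VULN-A", impact=3, likelihood=3, known_exploits=4),
        Finding("db-01", "VULN-A", impact=3, likelihood=3, known_exploits=4),
        Finding("web-01", "VULN-B", impact=2, likelihood=1, known_exploits=0),
        Finding("hr-laptop", "VULN-C", impact=3, likelihood=2, known_exploits=1),
    ]
    print(render(build_matrix(findings)))
    for f in highest_risk(findings):
        print(f.asset, f.vuln_id)
```

Re-running the script as scan results and exploit counts change is what turns the matrix into the "perspective over time" described above, rather than a one-off snapshot.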

Vendors often bolt on or integrate with industry-standard models and implement proprietary, and sometimes patented, threat analytics technologies that can run standalone or be integrated into broader risk reporting and threat analysis solutions. When making security investments, it is important to understand how these solutions will fit into your overall security program. When examining vulnerability and risk information, plan ahead and know where and how this data may be used; a SWOT (strengths, weaknesses, opportunities, threats) analysis can help. Also consider how this information may be shared across solutions and potentially across organizations. While the focus of this book is not threat analytics or threat intelligence, it is important to acknowledge the many advances in common frameworks, standards, and community projects that may be applicable to your environment and your vulnerability management program:

CybOX – Cyber Observable eXpression

CIF – Collective Intelligence Framework

IODEF – Incident Object Description Exchange Format

MILE – Managed Incident Lightweight Exchange

OpenIOC – Open Indicators of Compromise framework

OTX – Open Threat Exchange

STIX – Structured Threat Information Expression

TAXII – Trusted Automated eXchange of Indicator Information

VERIS – Vocabulary for Event Recording and Incident Sharing
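As an illustration of how these formats let vulnerability data travel between tools and organizations, the sketch below builds a minimal STIX 2.1 Vulnerability object using only the Python standard library. The required properties (type, spec_version, id, created, modified, name) and the "cve" external reference follow the STIX 2.1 specification; the description text is a placeholder. In practice, a dedicated library such as the OASIS stix2 package would typically create the objects, and a TAXII server would handle transport.

```python
import json
import uuid
from datetime import datetime, timezone


def stix_timestamp(moment: datetime) -> str:
    """Format a UTC datetime as a STIX timestamp with millisecond precision."""
    return moment.strftime("%Y-%m-%dT%H:%M:%S.") + f"{moment.microsecond // 1000:03d}Z"


def vulnerability_object(cve_id: str, description: str) -> dict:
    """Build a minimal STIX 2.1 Vulnerability SDO for a CVE identifier."""
    now = stix_timestamp(datetime.now(timezone.utc))
    return {
        "type": "vulnerability",
        "spec_version": "2.1",
        "id": f"vulnerability--{uuid.uuid4()}",
        "created": now,
        "modified": now,
        "name": cve_id,
        "description": description,
        "external_references": [
            {"source_name": "cve", "external_id": cve_id},
        ],
    }


if __name__ == "__main__":
    sdo = vulnerability_object("CVE-2017-0144", "SMBv1 remote code execution (EternalBlue)")
    print(json.dumps(sdo, indent=2))
```

Because the object is plain JSON keyed to a CVE identifier, any STIX-aware tool on the receiving end can correlate it with its own scan results without knowing anything about the tool that produced it.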

It is clear that to achieve meaningful threat analytics, organizations need a core set of security tools to provide the foundational elements for analyzing risk. Vulnerability visibility provides a wealth of information to drive better threat analytics. Likewise, threat analytics provides a wealth of information to drive a better understanding of risk and an improved remediation response. When planning your vulnerability program, examine your entire security stack to understand where and how to integrate these feeds and how to extract as much value and knowledge as possible from your investment. The data should never be an island within the organization. Additionally, organizations should consider augmenting their internal data and threat sources with external sources and service providers to fill in gaps and help target “real” risk where possible. That is intelligence.
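A minimal sketch of augmenting internal data with an external source: internal scan findings are joined against two hypothetical external feeds (vulnerabilities observed exploited in the wild, and vulnerabilities packaged in exploit kits) and then re-ranked so that threat context outranks raw CVSS. The field names, the placeholder IDs, and the ranking policy are assumptions for illustration; a real program would load the feeds from its chosen providers.

```python
from typing import Dict, Iterable, List, Set


def enrich(findings: Iterable[Dict], exploited_in_wild: Set[str],
           in_exploit_kits: Set[str]) -> List[Dict]:
    """Tag internal findings with external threat context and re-rank them."""
    enriched = []
    for finding in findings:
        record = dict(finding)  # copy so the internal data stays untouched
        record["exploited_in_wild"] = record["vuln_id"] in exploited_in_wild
        record["in_exploit_kit"] = record["vuln_id"] in in_exploit_kits
        enriched.append(record)
    # Threat context first, then CVSS as the tie-breaker.
    return sorted(
        enriched,
        key=lambda r: (r["exploited_in_wild"], r["in_exploit_kit"], r["cvss"]),
        reverse=True,
    )


if __name__ == "__main__":
    internal = [  # hypothetical internal scan results
        {"asset": "web-01", "vuln_id": "VULN-1", "cvss": 9.8},
        {"asset": "hr-laptop", "vuln_id": "VULN-2", "cvss": 6.5},
        {"asset": "db-01", "vuln_id": "VULN-3", "cvss": 7.2},
    ]
    for row in enrich(internal, {"VULN-2"}, {"VULN-2", "VULN-3"}):
        print(row["asset"], row["vuln_id"], row["cvss"], row["exploited_in_wild"])
```

With this policy, a medium-severity finding that is actively exploited outranks a critical one with no observed activity. Whether that ordering is right for a given organization is a judgment each program has to make, but the join itself is what keeps the data from becoming an island.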