Devices and Components Commonly Found in the System/Application Domain

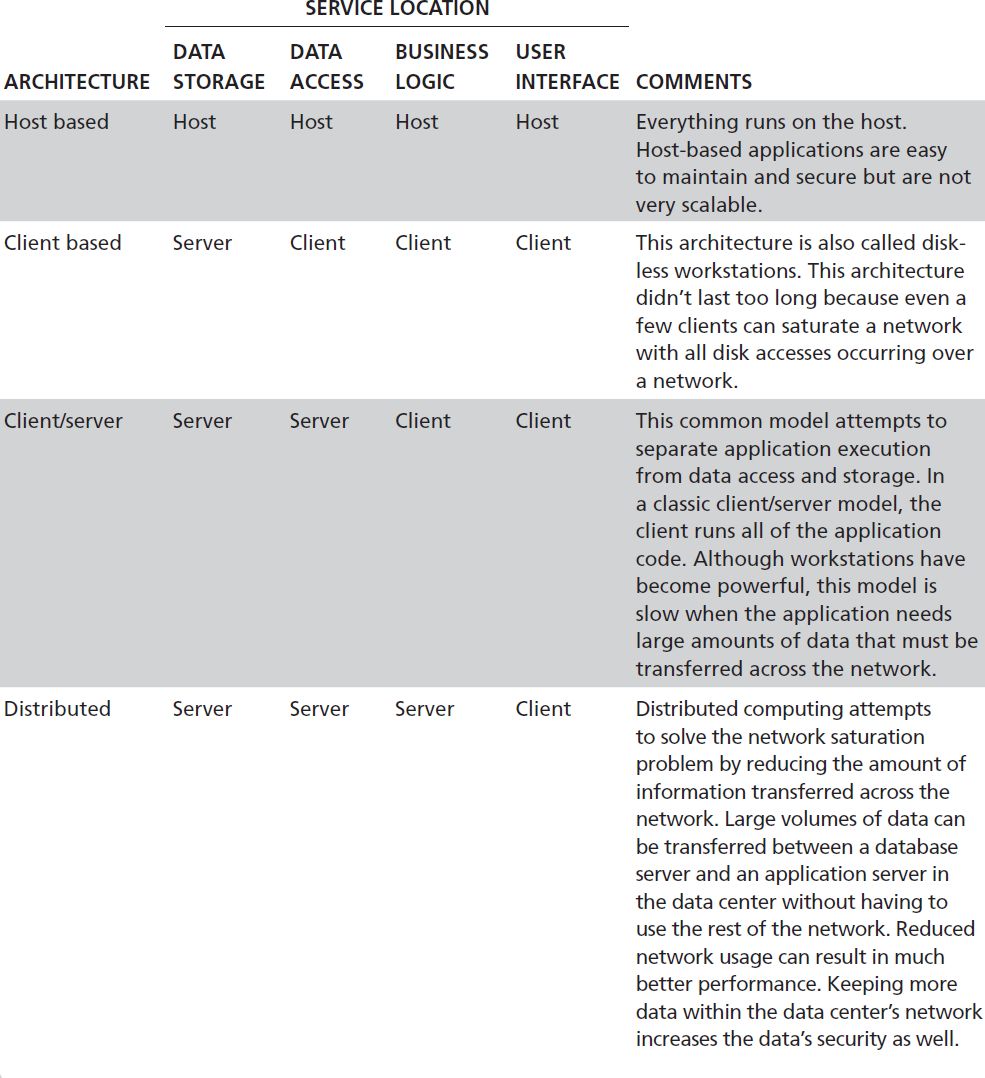

The System/Application Domain contains the application components that your organization runs and the computer systems on which the applications reside. This domain also contains computers, devices, and software components that support the domain’s application software. The rest of this section lists the devices and components you’ll commonly find in the System/Application Domain and some of the controls to ensure compliance. Figure 14-2 shows the devices and components commonly found in the System/Application Domain.

FIGURE 14-2 Devices and components commonly found in the System/Application Domain.

Computer Room/Data Center

The components in the System/Application Domain commonly reside in the same room. The room in which central server computers and hardware reside is called a data center, or just a computer room. The data center can be a facility the organization owns or a service provided by a third party. Many companies today are migrating applications to the cloud, which reduces the size of, or eliminates the need for, an on-premises data center. Because the software and data in this domain are central to your organization’s operation, the hardware must stay operational. A well-equipped data center generally has at least the following characteristics:

- Physical access control—Secure data centers have doors with locks that only a limited number of people can open. Electronic locks or combination locks are common because they make it easy to give a group of people access to the room. Physical access control reduces the likelihood that an attacker could physically damage data center hardware or launch an attack using removable media. Inserting a universal serial bus (USB) drive that is infected with malware is one type of attack. Limiting physical access to critical hardware can mitigate that type of attack.

- Controlled environment—Heating, ventilating, and air conditioning (HVAC) services control the temperature and humidity of a secure data center. Data centers routinely have dozens or even hundreds of computers and devices, all running at the same time. Keeping the temperature and humidity at proper levels allows the hardware to operate without overheating. Data centers also need dependable electrical power: enough reliable power to run all the computers and devices currently located in the data center, with capacity left over for growth.

- Fire-suppression equipment—A data center fire has the potential to wipe out large amounts of data and hardware. Extinguishing a fire helps protect the hardware assets and the data they contain. Unfortunately, water can damage computing hardware as much as fire, so sprinklers aren’t appropriate in data centers. A common solution is the deployment of a roomwide fire-suppression gas to displace the oxygen in the entire room.

- Easy access to hardware and wiring—Data center components tend to change frequently. Data center personnel must upgrade old hardware, add new hardware, reconfigure existing hardware, and fix broken hardware. Each of these tasks generally involves moving hardware components from one place to another and attaching necessary wires and cables. Data center computers generally don’t have cases like desktop computers do. Often they look like bare components on rails. This design allows them to be used in rack systems. A rack system is an open cabinet with tracks into which multiple computers can be mounted. You can slide computers in and out like drawers. Using rack systems makes it easy to manage hardware. Because there tends to be a lot of wiring in a data center, many use a raised floor design. Using raised floors with removable access panels makes it easy to access wires and increases the overall airflow throughout the data center.

- High-speed internal LAN—Many computers in the data center are high-performance server computers. To optimize communication between servers, high-bandwidth networks, such as fiber-optic networks, are common within the data center.

When designing a data center, make sure it can support all the components you need today and in the foreseeable future. Data centers that are flexible and scalable allow your organization to change and grow to reflect business demands.

Redundant Computer Room/Data Center

A disaster recovery plan (DRP) contains the steps to restore your IT infrastructure to a point where your organization can continue operations. If a disaster occurs that causes damage and interrupts your business functions, it is important to return to productive activities as soon as possible. If your organization can’t carry out its main business functions, it cannot fulfill its purpose. A solid DRP carefully identifies each component of your IT infrastructure that is critical to your primary business functions. Then, the plan states the steps you can take to replace damaged or destroyed components.

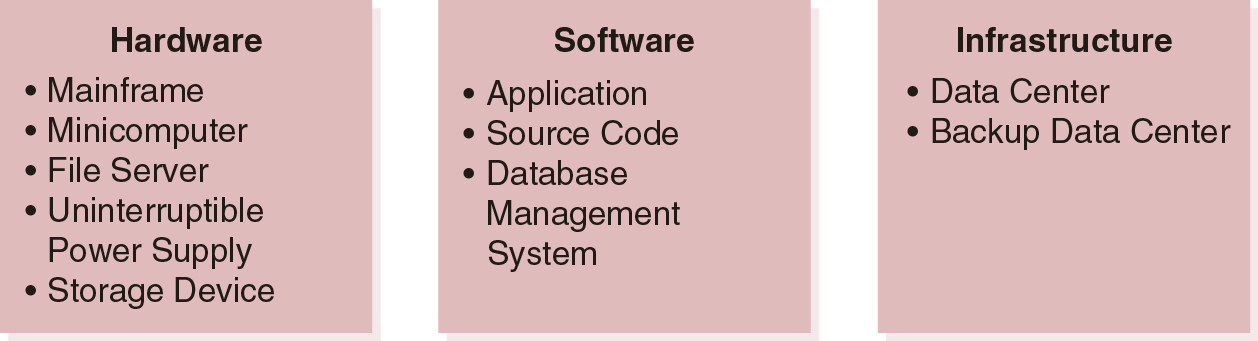

Several options are available for serious disasters that damage or destroy major IT infrastructure components. These are a few of the most common options, starting with the most expensive option with the shortest cutover time:

- Hot site—This is a complete copy of your environment at a remote site. Hot sites are kept as current as possible with replicated data so switching from your original environment to the alternate environment can occur with a minimum of downtime.

- Warm site—This is a complete copy of your environment at a remote site. Warm sites are updated with current data only periodically, normally daily or even weekly. When a disaster occurs, there will be a short delay while a switchover team prepares the warm site with the latest data updates.

- Cold site—This is a site that may have hardware in place, but the hardware is not likely to be set up or configured. Cold sites take more time to bring into operation because of the extensive configuration work required for hardware and software.

- Service level agreement (SLA)—This is a contract with a vendor that guarantees replacement hardware or software within a specific amount of time.

- Cooperative agreement—A cooperative agreement is between two or more organizations to help each other in case a disaster hits one of the parties. The organization that is not affected by the disaster agrees to allow the other organization to use part of its own IT infrastructure capacity to conduct minimal business operations. There is usually a specified time limit that allows the organization that suffered damage time to rebuild its IT infrastructure.

Figure 14-3 shows disaster recovery options in terms of switchover time and cost.

FIGURE 14-3 Disaster recovery options.

Regardless of which option best suits your organization, the purpose of a disaster recovery plan is to repair or replace damaged IT infrastructure components as quickly as possible to allow the business to continue operation.

Uninterruptible Power Supplies and Diesel Generators to Maintain Operations

There is a lot of confusion between a DRP and a business continuity plan (BCP). The two plans work closely with each other and depend on each other for success. You can summarize the difference between the two plans as follows:

- A DRP for IT ensures the IT infrastructure is operational and ready to support primary business functions. A DRP for IT focuses mainly on the IT department.

- A BCP is an organizational plan. It doesn’t focus only on IT. The BCP ensures the organization can survive any disruption and continue operating. If the disruption is major, the BCP will rely on the DRP to provide an IT infrastructure the organization can use.

- A DRP is a component of a comprehensive BCP.

To summarize, a comprehensive BCP will take effect any time there is a disruption of business functions. An example is a water main break that interrupts water flow to your main office. A DRP takes effect when an event causes a major disruption. A major disruption is one where you must intervene and take some action to restore a functional IT infrastructure. A fire that damages your data center is an example of a disaster.
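The distinction between the two plans can be expressed as a small decision rule. This is a hypothetical sketch: the `Disruption` type and its two yes/no fields are simplifications introduced here for illustration, not part of any planning standard.

```python
from dataclasses import dataclass


@dataclass
class Disruption:
    description: str
    disrupts_business: bool      # does the event interrupt business functions?
    needs_it_restoration: bool   # must someone act to restore a functional IT infrastructure?


def plans_in_effect(event: Disruption) -> list[str]:
    """Return which plans take effect for an event, following the BCP/DRP split."""
    plans = []
    if event.disrupts_business:
        plans.append("BCP")
        # The DRP is a component of the BCP, so it only activates inside it.
        if event.needs_it_restoration:
            plans.append("DRP")
    return plans


water_main = Disruption("Water main break at the main office", True, False)
fire = Disruption("Fire damages the data center", True, True)
print(plans_in_effect(water_main))  # ['BCP']
print(plans_in_effect(fire))        # ['BCP', 'DRP']
```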

One type of interruption addressed by a BCP is a power outage. If a data center loses power, computers cannot operate, and the environment can no longer support the hardware. With no power, there is no HVAC, lighting, or anything else that relies on electricity. It is important to plan for power outages and put corrective controls in place to address a loss of power. Two main methods address a power outage. The first method addresses short-term outages, whereas the second method addresses longer-term outages:

- Uninterruptible power supply (UPS)—A UPS provides continuous usable power to one or more devices. UPS units for data centers are typically much larger than workstation UPS units and can support several devices for longer periods of time. A UPS protects data center devices from power fluctuations and from outages lasting several minutes or, with large, expensive units, even several hours.

- Power generator—Generators, which commonly use diesel fuel to create electricity, can deliver power to critical data center components for long periods of time. When a power outage lasts longer than a UPS can power devices, generators can produce electricity as long as they have fuel. Generators are generally not long-term solutions. They provide power until either regular power is restored or you can move to an alternative data center that has reliable power. If you must locate a data center in a place that does not have reliable power, however, generators can become the primary power source.

Mission-critical data centers require multiple levels of protection to ensure continuous operation. UPS devices and generators are integral parts of a BCP that keep an organization in operation.
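The following Python sketch shows how the two layers combine: the UPS has to bridge the gap until the generator is delivering power, and the generator then has to last for the rest of the outage. The runtime formula is a first-order estimate that divides usable battery energy by the load. All figures in the usage lines are hypothetical, and real UPS runtime should be taken from the vendor’s runtime charts.

```python
def ups_runtime_minutes(battery_wh: float, load_w: float, efficiency: float = 0.9) -> float:
    """First-order UPS runtime estimate: usable battery energy divided by the load.

    Real runtime curves are nonlinear (battery age, temperature, discharge rate),
    so vendor runtime charts should take precedence over this estimate.
    """
    if load_w <= 0:
        raise ValueError("load must be positive")
    return battery_wh * efficiency / load_w * 60


def outage_covered(outage_min: float, ups_min: float,
                   generator_start_min: float, generator_fuel_min: float) -> bool:
    """Return True if the UPS and generator together power the load for the whole outage."""
    if outage_min <= ups_min:
        return True   # short outage: the UPS alone bridges it
    if generator_start_min > ups_min:
        return False  # the UPS runs dry before the generator is delivering power
    # The generator carries the load from start-up until its fuel runs out.
    return generator_start_min + generator_fuel_min >= outage_min


# Hypothetical figures: a 10 kWh battery bank, a 5 kW load, and a generator that
# needs 1 minute to start and holds 8 hours (480 minutes) of fuel.
ups_min = ups_runtime_minutes(battery_wh=10_000, load_w=5_000)
print(round(ups_min))                         # 108
print(outage_covered(30, ups_min, 1, 480))    # True: the UPS alone is enough
print(outage_covered(300, ups_min, 1, 480))   # True: the generator takes over
print(outage_covered(600, ups_min, 1, 480))   # False: fuel runs out first
```

The fuel parameter models only what is in the tank. Refueling during a long outage is what lets a generator keep producing electricity as long as it has fuel, which this sketch deliberately ignores.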

Mainframe Computers

Several types of computers make their homes in data centers. The largest type of computer is the mainframe computer. The term mainframe dates back to the early days of computers and originally referred to the large cabinets that housed the processing units and memory modules of early computers. The term came to be used to describe large and extremely powerful computers that can run many applications supporting thousands of users simultaneously. Mainframe computers also have the characteristic of being extremely reliable. Most mainframe computers run without interruption and can even be serviced and upgraded while still operating.

Because the hardware, software, environmental requirements, and maintenance for mainframe computers are all expensive, only the largest organizations typically can justify their use. Mainframe environmental and power requirements created the need for early dedicated data centers. Today’s mainframe computers are powerful hosts for multiple operating systems that run as virtual machines. A virtual machine is a software program that looks and runs like a physical computer. A large mainframe computer can run many virtual machines and provide the services of many physical computers.

Minicomputers

Many organizations realize they need more computing power than basic workstations or PC-based hardware but aren’t ready to commit to a mainframe computer. The first minicomputers started appearing in data centers in the 1960s as an alternative to mainframe computers. Minicomputers are more powerful than workstations but less powerful than mainframe computers. They fit somewhere in the middle and address the needs of medium-sized businesses.

Before the 1980s, minicomputers and mainframe computers were the only types of computers that could handle multiple users and multiple applications at the same time. Smaller computers could handle only a single user and one application at a time. The 1980s saw the growth of more capable hardware and operating systems for low-cost computers. These small, inexpensive computers are called microcomputers and still dominate the personal computer and workstation markets.

Minicomputers still exist to address the needs of medium-sized businesses, but they aren’t as common as they were in the past. Some of today’s minicomputers are distinct hardware platforms, and some are actually high-end microcomputers running operating system versions that cater to high performance and reliability. Either way, minicomputer performance and cost fill a need between workstations and mainframe computers.

Server Computers

Some computers in a data center aren’t multipurpose computers but fill specific roles. Computer roles most commonly focus on satisfying client needs for specific services. Computers that perform specific functions for clients are generally called server computers, or just servers. Common servers you’ll find in today’s environments may include the following:

- File servers
- Web servers
- Authentication servers
- Database servers
- Application servers
- Mail servers
- Media servers

These are only a few of the types of servers in many data centers. Server computers help organizations by allowing a computer to focus all of its resources on a single task, providing a specific service to clients. A collection of separate server computers, each providing a different service, can increase the performance of the entire environment by removing interservice conflicts and competition for a single computer’s resources. Isolating services on separate server computers can also limit the effects of attacks. An attack that compromises a server computer running a single service will have less impact than a compromise of a single computer running many applications and services.

Data Storage Devices

Data centers are convenient places to locate shared storage devices. The central location, managed environment, and higher general level of security make the data center an ideal environment for protected shared storage. Many networks offer managed storage devices that are shared among network users. Shared devices can be attached to file servers or be separate from server computers. Shared storage devices can be disk drives, tape libraries, optical jukeboxes, solid state storage, or any other mechanism used to store data.

One common method to provide shared storage capability to network users is using a storage area network (SAN). A SAN is a collection of storage devices that is attached to a network in such a way that the devices appear to be local storage devices. In effect, the storage devices form their own network that the operating system accesses just like local drives. The SAN devices protect the data by limiting how clients can access the storage devices. SANs can make it easy to keep shared data available and secure.

Another storage method is network-attached storage (NAS). Although both are network-based storage solutions, a SAN typically uses Fibre Channel connectivity, whereas NAS typically connects to the network through a standard Ethernet connection. A SAN stores data at the block level, whereas NAS accesses data as files.
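The difference between block-level and file-level access is easiest to see in how each is addressed. In this toy Python sketch, which is an in-memory illustration and not a real SAN or NAS client, the `BlockDevice` class stands in for SAN storage that the host reads and writes by block number, leaving it to the host’s own file system to decide which blocks belong to which file. The `FileShare` class stands in for NAS, where clients simply ask for a file by path. The class names and the 512-byte block size are assumptions.

```python
BLOCK_SIZE = 512  # bytes per block; an assumption, real devices use various sizes


class BlockDevice:
    """Toy stand-in for SAN storage: the host addresses raw blocks by number."""

    def __init__(self, num_blocks: int):
        self.num_blocks = num_blocks
        self._data = bytearray(num_blocks * BLOCK_SIZE)

    def _offset(self, lba: int) -> int:
        if not 0 <= lba < self.num_blocks:
            raise IndexError("block number out of range")
        return lba * BLOCK_SIZE

    def write_block(self, lba: int, payload: bytes) -> None:
        if len(payload) > BLOCK_SIZE:
            raise ValueError("payload larger than one block")
        start = self._offset(lba)
        # The device stores bytes without knowing which file, if any, they belong to.
        self._data[start:start + BLOCK_SIZE] = payload.ljust(BLOCK_SIZE, b"\x00")

    def read_block(self, lba: int) -> bytes:
        start = self._offset(lba)
        return bytes(self._data[start:start + BLOCK_SIZE])


class FileShare:
    """Toy stand-in for NAS storage: clients ask for files by path."""

    def __init__(self):
        self._files: dict[str, bytes] = {}

    def write_file(self, path: str, content: bytes) -> None:
        self._files[path] = content

    def read_file(self, path: str) -> bytes:
        return self._files[path]


san = BlockDevice(num_blocks=1024)
san.write_block(42, b"payroll records")
print(san.read_block(42)[:15])            # b'payroll records'

nas = FileShare()
nas.write_file("/hr/payroll.csv", b"payroll records")
print(nas.read_file("/hr/payroll.csv"))   # b'payroll records'
```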

Applications

Computer applications have matured along with computer hardware capability. Early computing systems placed all data and software capabilities on a central host computer. Clients used simple terminals to connect directly to the host computer to run applications. Application design has changed through several generations to its current level of maturity, the distributed application model. Each architectural change depended on advances in networking support and changed the way applications use networks and resources. Application architectures differ in the location of critical resources. Critical application resources are as follows:

- Data storage—The interface to physical storage devices, such as disk drives
- Data access—Software to access stored data, such as database management systems or document management systems
- Business logic—Application software that accesses and processes data
- User interface—Application software that interacts with end users
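The four critical resources can be sketched as separate layers, each of which talks only to the layer beneath it. In a distributed application, each layer could run on a different computer and communicate over the network. In this single-process Python sketch, they are plain classes so the example stays runnable. All class and function names are hypothetical, and a dictionary stands in for a physical storage device.

```python
# Data storage: the interface to physical storage (a dict stands in for a disk).
class DataStorage:
    def __init__(self):
        self._records: dict[str, dict] = {}

    def put(self, key: str, value: dict) -> None:
        self._records[key] = value

    def get(self, key: str):
        return self._records.get(key)


# Data access: software that reads and writes stored data (the role a DBMS plays).
class CustomerRepository:
    def __init__(self, storage: DataStorage):
        self._storage = storage

    def save(self, customer_id: str, name: str, balance: float) -> None:
        self._storage.put(customer_id, {"name": name, "balance": balance})

    def load(self, customer_id: str):
        return self._storage.get(customer_id)


# Business logic: processes data according to business rules.
class BillingService:
    def __init__(self, repo: CustomerRepository):
        self._repo = repo

    def apply_charge(self, customer_id: str, amount: float) -> float:
        if amount <= 0:
            raise ValueError("charge must be positive")
        customer = self._repo.load(customer_id)
        if customer is None:
            raise KeyError(customer_id)
        new_balance = customer["balance"] + amount
        self._repo.save(customer_id, customer["name"], new_balance)
        return new_balance


# User interface: interacts with the end user.
def render_balance(service: BillingService, customer_id: str, amount: float) -> str:
    balance = service.apply_charge(customer_id, amount)
    return f"New balance for {customer_id}: {balance:.2f}"


storage = DataStorage()
repo = CustomerRepository(storage)
repo.save("C001", "Ada", 10.0)
service = BillingService(repo)
print(render_balance(service, "C001", 5.5))  # New balance for C001: 15.50
```

Because each layer depends only on the one below it, a layer can be moved to another server, or secured separately, without rewriting the others.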

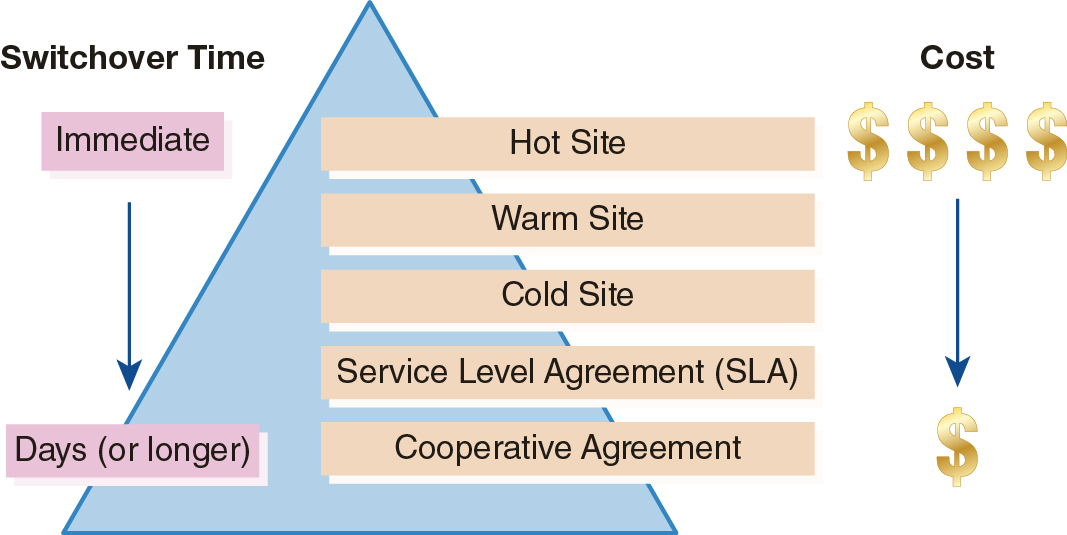

Table 14-1 lists major application architectures and their impact on network resources.

TABLE 14-1 Application architectures.

Although not all applications are fully distributed, the trend for new development efforts is to deploy distributed applications. More and more applications are specifically written to run on application server computers. This move toward distributed applications has an impact on security and compliance. Although many organizations use application servers in a secure data center to run application components, others may just run applications on a generic network computer. Each application must ensure it protects the security of the data it handles.

Source Code

Application software is a collection of computer programs that fulfills some purpose. Programs that computers can run are the result of a process that starts with programmers creating text files for programs, called source code. Source code files are then compiled into programs that computers can run.

The process of changing how an application program runs starts with changing the source code files that correspond to the program you want to change. The programmer then follows the prescribed procedure to convert the source code into a program the computer can run. This process works for attackers as well. Although it is possible to modify a compiled program directly, it is far more difficult than modifying the source code. An important step in securing applications is therefore to remove source code from production systems. Without source code, it is very difficult to modify an application.
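The change-and-recompile workflow described above can be seen in miniature with Python’s built-in `compile()` function. Python compiles source to bytecode for its own virtual machine rather than to native machine code, so this is an analogy for the compile step, not a model of any particular build toolchain. The `discount` function is a hypothetical example.

```python
# Version 1 of a tiny "application", kept as source text.
source_v1 = "def discount(price):\n    return price * 0.9\n"

# Compile the source text into a code object the interpreter can run.
program_v1 = compile(source_v1, "<discount_v1>", "exec")
namespace = {}
exec(program_v1, namespace)
v1_result = namespace["discount"](100)
print(v1_result)  # 90.0

# Changing behavior means editing the source, then compiling again.
source_v2 = source_v1.replace("0.9", "0.5")
program_v2 = compile(source_v2, "<discount_v2>", "exec")
exec(program_v2, namespace)
v2_result = namespace["discount"](100)
print(v2_result)  # 50.0

# The compiled form is bytecode: raw bytes, not readable text.
print(type(program_v1.co_code))  # <class 'bytes'>
```

Editing the one-line source is trivial. Achieving the same change by patching the compiled bytes is much harder, which is why removing source code from production systems raises the bar for an attacker.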

Databases and Privacy Data

Very few applications run as standalone programs. Nearly every application accesses data of some sort. Enterprise applications may access databases that are hundreds of gigabytes or even terabytes in size. Databases that store this much data are valuable targets for attackers and should be the focus of your security efforts in the System/Application Domain. Data are crucial assets in many of today’s organizations. An organization’s ability to keep its data secure is critical to its public image and is mandatory to maintain compliance with many requirements.

Because the database is where many organizations store sensitive data, it is the last barrier an attacker must compromise. In a secure environment, an attacker must compromise several layers of security controls to get to the actual database. Even though the hope is that an attacker never gets that far, you should implement additional controls to ensure you protect the data in your database from local attacks. The database should be the center of your security control efforts. You should take every opportunity to restrict access to the sensitive data in your database, including using controls provided by your database management system.
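As a small illustration of using controls that the database management system itself provides, the Python sketch below uses SQLite through the standard `sqlite3` module. It creates a view that exposes only non-sensitive columns and then switches the connection to read-only with SQLite’s `query_only` pragma. SQLite has no user accounts, so it cannot show per-user permissions. Server database systems such as Microsoft SQL Server or Oracle typically enforce this through accounts and permissions, for example by granting an application account access to a view but not to the underlying table. The table, columns, and data are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, ssn TEXT)")
conn.execute("INSERT INTO customers (name, ssn) VALUES (?, ?)",
             ("Ada Lovelace", "000-00-0000"))  # placeholder value, not real data
# A view that exposes only the non-sensitive columns.
conn.execute("CREATE VIEW customers_public AS SELECT id, name FROM customers")
conn.commit()

# From here on, SQLite rejects any statement that would modify the database.
conn.execute("PRAGMA query_only = ON")

print(conn.execute("SELECT * FROM customers_public").fetchall())  # [(1, 'Ada Lovelace')]

write_blocked = False
try:
    conn.execute("DELETE FROM customers")
except sqlite3.OperationalError as exc:
    write_blocked = True
    print("write rejected:", exc)
```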

You’ll learn about specific database controls in the “Access Rights and Access Controls in the System/Application Domain” section later in this chapter. Just because your database resides in a secure data center, you shouldn’t assume it is safe. Use the security controls available to you at every level possible. Your job is to make an attacker’s job as difficult as possible.

Secure Coding

As of December 8, 2021, the U.S. Department of Commerce’s National Institute of Standards and Technology’s (NIST’s) National Vulnerability Database had recorded 18,376 vulnerabilities for the year, surpassing the 2020 record of 18,351 and setting a new record for the fifth straight year. That averages more than 50 new cybersecurity vulnerabilities every day in 2021. With that in mind, the principle of secure coding helps software developers anticipate attacks and code countermeasures that make applications less susceptible to them.
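The “more than 50 per day” average can be checked with simple date arithmetic. Whether the 2021 count is divided by the days elapsed through December 8 or by a full 365-day year, the result exceeds 50. The only input taken from the text is the count of 18,376.

```python
from datetime import date

recorded_2021 = 18_376  # NVD count as of December 8, 2021 (from the text)
# Days from January 1 through December 8, counting both ends.
days_elapsed = (date(2021, 12, 8) - date(2021, 1, 1)).days + 1

per_day_to_date = recorded_2021 / days_elapsed
per_day_full_year = recorded_2021 / 365

print(days_elapsed)                 # 342
print(round(per_day_to_date, 1))    # 53.7
print(round(per_day_full_year, 1))  # 50.3
```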

In simple terms, secure coding is the practice of writing source code that complies with the best security principles for a given function and interface. Secure coding considers how software will be written and how it will detect and defend against cyberattacks.

The principle of secure coding assumes every input is suspect and a potential attack vector for a would-be hacker. For example, one strategy is to “validate input” to make sure that it comes from a trusted source and matches what the application expects. Another strategy is to check for buffer overflow vulnerabilities. A good developer thinks like a hacker and eliminates potential paths to unauthorized access.

To illustrate, let’s consider a simple SQL injection vulnerability and type of attack. A SQL injection attack consists of inserting SQL code as part of the input data. For example, assume your application has a search function for a customer name that can be up to 30 characters long. The application takes the input fields and builds a SQL query against a database such as Microsoft SQL Server or Oracle. Now let’s type the input as expected: We type “Dwayne Johnson the Rock!” into the name field, and his customer information pops up. All good. But what would happen if we typed a well-formed SQL statement, such as “DELETE FROM Customers,” instead of a name? If the application pastes the input directly into its SQL query, the database could run that statement and delete every row in the customer table.

For discussion purposes, we will not show how to craft a well-formed SQL injection statement for an input field, as this is not a book that teaches hacking methods. SQL injection is one of the most prevalent vulnerabilities. A successful SQL injection exploit can read sensitive data from the database, modify database data, and delete database data. The essential point is that secure coding should prevent such vulnerabilities. This includes writing software that validates inputs against the expected input types:

- SQL statements should not appear in text input fields.
- Field types and lengths should match expected inputs.
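The sketch below pairs those two rules with the most widely recommended defense, the parameterized query, in which the database driver passes user input as data and never as SQL text. It uses Python’s built-in `sqlite3` module so that it runs anywhere. Drivers for Microsoft SQL Server and Oracle offer equivalent parameter binding. The table layout, function names, and allowed character set are assumptions for illustration. Note that a string such as “DELETE FROM Customers” passes the character check, yet it is still harmless, because the query only ever compares it as a name.

```python
import re
import sqlite3

MAX_NAME_LENGTH = 30  # the customer name field allows up to 30 characters
# Allowlist of characters accepted in a customer name (an assumption for this sketch).
NAME_PATTERN = re.compile(r"[A-Za-z .'!-]{1,%d}" % MAX_NAME_LENGTH)


def validate_name(raw: str) -> str:
    """Reject input that does not match the expected type and length."""
    name = raw.strip()
    if not NAME_PATTERN.fullmatch(name):
        raise ValueError("invalid customer name")
    return name


def find_customer(conn: sqlite3.Connection, raw_name: str) -> list:
    name = validate_name(raw_name)
    # Parameterized query: the "?" placeholder makes the driver treat `name`
    # strictly as a value, so it can never change the structure of the SQL.
    return conn.execute(
        "SELECT id, name FROM customers WHERE name = ?", (name,)
    ).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("INSERT INTO customers (name) VALUES (?)", ("Dwayne Johnson the Rock!",))

print(find_customer(conn, "Dwayne Johnson the Rock!"))  # [(1, 'Dwayne Johnson the Rock!')]
print(find_customer(conn, "DELETE FROM Customers"))     # [] and the table is untouched
```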

Secure code helps prevent many cyberattacks because it removes the vulnerabilities that attack vectors rely on. If software has a security vulnerability, it can be exploited. As the security community becomes more aware of common vulnerabilities, it publishes best practices and improved secure coding techniques. A good developer continually researches vulnerabilities and the latest secure coding techniques.

Secure coding also involves strong practices in the following areas:

- Input validation
- File integrity checking, such as checksums
- Encryption
- Authentication
- Password management
- Session management
- Error handling
- Audit logs
- System configuration
- Database security
- File management
- Memory management
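Two items on that list, file integrity checking and password management, can be sketched with Python’s standard `hashlib` and `hmac` modules. A file’s SHA-256 checksum is recorded when the file is known to be good and compared again later. Passwords are stored as salted PBKDF2 hashes rather than as plain text. The function names are hypothetical, and the 600,000-iteration default is an illustrative value. Check current guidance before choosing an iteration count.

```python
import hashlib
import hmac
import os
import tempfile


def file_sha256(path: str, chunk_size: int = 65536) -> str:
    """Return the SHA-256 checksum of a file, read in chunks to bound memory use."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_file(path: str, expected_hex: str) -> bool:
    """File integrity check: compare against a checksum recorded earlier."""
    return hmac.compare_digest(file_sha256(path), expected_hex)


def hash_password(password: str, iterations: int = 600_000) -> tuple[bytes, bytes]:
    """Store a random salt and a slow, salted hash instead of the password itself."""
    salt = os.urandom(16)
    key = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, iterations)
    return salt, key


def check_password(password: str, salt: bytes, key: bytes, iterations: int = 600_000) -> bool:
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, iterations)
    return hmac.compare_digest(candidate, key)  # constant-time comparison


with tempfile.TemporaryDirectory() as workdir:
    path = os.path.join(workdir, "release.bin")
    with open(path, "wb") as f:
        f.write(b"release build v1")
    recorded = file_sha256(path)           # checksum recorded at release time
    intact = verify_file(path, recorded)   # True
    with open(path, "ab") as f:
        f.write(b" tampered")
    tampered_detected = not verify_file(path, recorded)  # True

salt, key = hash_password("correct horse battery staple")
login_ok = check_password("correct horse battery staple", salt, key)  # True
```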

Does that seem like a lot to keep in mind when coding an application? It is. Fortunately, today we have scanners that automate source code analysis, looking for common vulnerabilities and poor coding habits. In addition to reducing vulnerabilities, these tools help the developer write cleaner, more optimized code.
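To give a feel for what such scanners do, the Python sketch below uses the standard `ast` module to parse source code and flag database `execute` calls whose query is assembled from strings at run time. That pattern is the one that makes SQL injection possible. This is a single hypothetical rule for illustration. Production scanners apply hundreds of rules and track how data flows through a program.

```python
import ast


def find_sql_string_building(source: str, filename: str = "<source>") -> list[tuple[int, str]]:
    """Flag calls like cursor.execute(...) whose query text is built at run time."""
    findings = []
    for node in ast.walk(ast.parse(source, filename)):
        if not (isinstance(node, ast.Call) and isinstance(node.func, ast.Attribute)):
            continue
        if node.func.attr not in {"execute", "executemany"} or not node.args:
            continue
        query = node.args[0]
        if isinstance(query, ast.JoinedStr):
            findings.append((node.lineno, "f-string used to build SQL"))
        elif isinstance(query, ast.BinOp) and isinstance(query.op, (ast.Add, ast.Mod)):
            findings.append((node.lineno, "string concatenation/formatting used to build SQL"))
        elif (isinstance(query, ast.Call) and isinstance(query.func, ast.Attribute)
              and query.func.attr == "format"):
            findings.append((node.lineno, "str.format used to build SQL"))
    return sorted(findings)


sample = '''
cur.execute("SELECT * FROM customers WHERE name = ?", (name,))
cur.execute(f"SELECT * FROM customers WHERE name = '{name}'")
cur.execute("SELECT * FROM customers WHERE name = '" + name + "'")
'''

for line, message in find_sql_string_building(sample):
    print(line, message)
# 3 f-string used to build SQL
# 4 string concatenation/formatting used to build SQL
```

The parameterized query on line 2 is not flagged, because its SQL text is a fixed string and the user’s input travels separately as a parameter.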