
Resources, References, and Tools

There is a plethora of big data products available from large and small vendors. Some of these products cater to niche areas like social media analytics and NoSQL databases, while some have a Hadoop ecosystem with a combination of infrastructure, visualization, and analytical capabilities. This chapter gives a broad overview of many of the products you will need to implement the architecture and patterns described in this book.

Problem

List the main big data product areas and the associated vendors of those products.

Solution

Table 11-1 lists tools you can use as a solution, as well as the vendors providing those tools.

Table 11-1. Big Data Tools and Vendors

| Tools | Vendors |
|---|---|
| Hadoop Distributions | Cloudera |
| In-Memory Hadoop | Intel |
| Hadoop Alternatives | HPCC Systems from LexisNexis |
| Hadoop SQL Interfaces | Apache Hive |
| Ingestion Tools | Flume |
| Map Reduce Alternatives | Spark |
| Cloud Options | AWS EMR |
| NoSQL Databases | IBM Netezza |
| In-Memory Database | SAP HANA |
| Visualization | Tableau |
| Search | Solr |
| Analytics | SAS |
| Integration Tools | Talend |
| Operational Intelligence Tools | Splunk |
| Graph Databases | Neo4j |
| Document Store Database | MongoDB |
| Datasets | InfoChimps |
| Social Media Integrator | Clarabridge |
| Archive Infrastructure | EMC Isilon |
| Data Discovery | IBM Vivisimo |
| Table-Style Database | Cassandra |

Problem

Apache Hadoop does not come integrated with all the components required for an enterprise-scale big data system. Do I have any better options to save the time and effort to configure multiple frameworks?

Solution

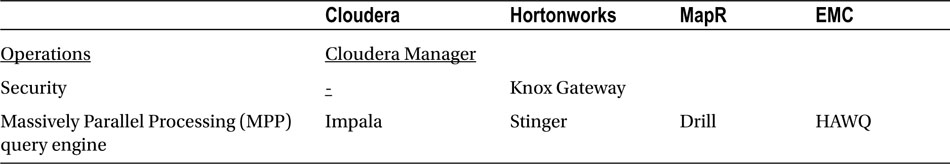

Because the Hadoop ecosystem is made up of multiple entities (Hive, Pig, HDFS, Ambari, and others), each maturing independently and releasing new versions on its own schedule, version incompatibilities, security gaps, and performance-tuning issues are likely. Vendors like Cloudera, MapR, and Hortonworks package these entities into a single distribution and manage the incompatibility, performance, and security issues within it. This helps greatly, because support for the open source entities themselves is available only through forums.

These vendors are coming up with their own SQL interfaces and monitoring dashboards and contributing back to the Apache Hadoop community, thereby enriching the open source community. Here are some examples:

- MapR stands out for its unique file system. Unlike the HDFS file system in Apache Hadoop, MapR allows you to mount the cluster as an NFS volume. “HDFS” is replaced by “Direct Access NFS.” Further details can be found at http://www.mapr.com/Download-document/4-MapR-M3-Datasheet .

- Hortonworks stands out for its offering on Windows operating systems. Hortonworks is the only vendor providing Hadoop on Windows OS.

- Intel provides Hadoop storage that is in-memory.

A typical packaged distribution covers all of the open source Hadoop entities.

Intel provides optimization for solid state disks and cache acceleration. See www.intel.com/bigdata for information on Intel’s big data resources in general.

Over and above the core open source Hadoop entities, vendors provide additional services such as those shown in Table 11-2.

Table 11-2. Vendor-Specific Hadoop Services

Problem

Is Apache Hadoop the only option for implementing big data, MapReduce, and a distributed file system?

Solution

The nearest open source alternative to Hadoop is the HPCC system. Refer to http://hpccsystems.com/Why-HPCC/How-it-works .

Unlike Hadoop, HPCC provides massively parallel processing and a shared nothing architecture not based on any type of key-value NoSQL databases. See http://hpccsystems.com/Why-HPCC/case-studies/lexisnexis for a case study of HPCC implementation in LexisNexis document management.

Problem

How can I improve the performance of my Hive queries?

Solution

Apache Hive is the most widely used open source SQL interface. Apache Hive can run over HDFS or over the NoSQL HBase columnar database. Apache Hive was developed to make a SQL developer’s life easier. Instead of forcing developers to learn a new language or new CLI commands to run MapReduce code, Apache Hive provides a SQL-like language that triggers MapReduce jobs.
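To make the idea concrete, the following Python sketch mimics, in a single process, the map, shuffle, and reduce phases that a simple aggregate query such as `SELECT dept, COUNT(*) FROM employees GROUP BY dept` roughly corresponds to. The table name, column names, and sample rows are hypothetical, and the code is not Hive's actual execution plan; it only illustrates the kind of work the SQL-like statement hides from the developer.

```python
from collections import defaultdict

# Hypothetical rows standing in for an "employees" table stored in HDFS.
ROWS = [
    {"name": "asha", "dept": "sales"},
    {"name": "ben", "dept": "ops"},
    {"name": "carl", "dept": "sales"},
]


def map_phase(rows):
    """Emit one (key, 1) pair per row, keyed by the GROUP BY column."""
    for row in rows:
        yield row["dept"], 1


def shuffle_phase(pairs):
    """Group all emitted values by key, as the framework does between phases."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Sum the values for each key, which yields COUNT(*) per group."""
    return {key: sum(values) for key, values in grouped.items()}


def count_by_dept(rows):
    """Chain the three phases, as a single MapReduce job would."""
    return reduce_phase(shuffle_phase(map_phase(rows)))


if __name__ == "__main__":
    print(count_by_dept(ROWS))
```

With Hive, the developer writes only the one-line query; the equivalent of these three functions is generated and distributed across the cluster on the developer's behalf.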

Cloudera Impala, EMC HAWQ, and Hortonworks Stinger are some of the products available that can overcome the performance issues encountered while using Apache Hive. Hortonworks Stinger is relatively new to the market. New aggregate functions, optimized query, and optimized Hive runtime are some of the features added in these products.

There are many resources you can consult to find comparisons of EMC HAWQ with Cloudera Impala and other such products. One that you may find useful is at https://www.informationweek.com/software/information-management/cloudera-impala-brings-sql-querying-to-h/240153861 . You can find more information at http://www.cloudera.com/content/cloudera/en/products/cdh/impala.html and http://hortonworks.com/blog/100x-faster-hive .

Problem

What are the essential tools/frameworks required in your big data ingestion layer?

Solution

There are many product options to facilitate batch-processing-based ingestion. Here are some major frameworks available:

- Apache Sqoop: A tool used for transferring bulk data from RDBMS to Apache Hadoop and vice-versa. It offers two-way replication, with both snapshots and incremental updates.

- Chukwa: Chukwa is a Hadoop subproject that is designed for efficient log processing. It provides a scalable distributed system for monitoring and analyzing log-based data. It supports appending to existing files and can be configured to monitor and process logs that are generated incrementally across many machines.

- Apache Kafka: A distributed publish-subscribe messaging system. It is designed to provide high-throughput persistent messaging that’s scalable and allows for parallel data loads into Hadoop. Its features include the use of compression to optimize I/O performance and mirroring to improve availability and scalability and to optimize performance in multiple-cluster scenarios. It can be used as the framework between the router and Hadoop in the multi-destination pattern implementation.

- Flume: A distributed system for collecting log data from many sources, aggregating it, and writing it to HDFS. It is based on streaming data flows. Flume provides extensibility for online analytic applications. However, Flume requires a fair amount of configuration, which can become complex for very large systems.

- Storm: Supports event-stream processing and can respond to individual events within a reasonable time frame. Storm is a general-purpose, event-processing system that uses a cluster of services for scalability and reliability. In Storm terminology, you create a topology that runs continuously over a stream of incoming data. The data sources for the topology are called spouts, and each processing node is called a bolt. Bolts can perform sophisticated computations on the data, including output to data stores and other services. It is common for organizations to run a combination of Hadoop and Storm services to gain the best features of both platforms.

- InfoSphere Streams: Performs complex analytics of heterogeneous data types. InfoSphere Streams can support all data types. It can perform real-time and look-ahead analysis of regularly generated data, using digital filtering, pattern/correlation analysis, and decomposition, as well as geospatial analysis.

- Apache S4: A real-time data ingestion tool used for processing continuous streams of data. Client programs that send and receive events can be written in any programming language. S4 is designed as a highly distributed system. Throughput can be increased linearly by adding nodes into a cluster.
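The spout-and-bolt vocabulary used for Storm in the list above can be illustrated with a small, single-process Python sketch. It does not use Storm's API; the names `sentence_spout`, `split_bolt`, and `count_bolt` are hypothetical, and a real topology would run continuously across a cluster rather than over a fixed list of lines.

```python
from collections import Counter


def sentence_spout(lines):
    """Spout: the data source that feeds tuples into the topology."""
    for line in lines:
        yield line


def split_bolt(sentences):
    """Bolt: split each incoming sentence into lowercase words."""
    for sentence in sentences:
        for word in sentence.lower().split():
            yield word


def count_bolt(words):
    """Bolt: keep a running count per word and emit each update."""
    counts = Counter()
    for word in words:
        counts[word] += 1
        yield word, counts[word]


def run_topology(lines):
    """Wire spout -> split bolt -> count bolt and return the final counts."""
    final = {}
    for word, count in count_bolt(split_bolt(sentence_spout(lines))):
        final[word] = count
    return final


if __name__ == "__main__":
    print(run_topology(["error on node A", "error on node B"]))
```

Because each stage is a generator, every event flows through the whole chain as soon as it arrives, which loosely mirrors how a topology responds to individual events instead of waiting for a batch to complete.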

Problem

For multinode parallel processing, is MapReduce the only algorithm option?

Solution

Spark and Nokia DISCO are some of the alternatives to MapReduce. A fair comparison can be found at http://www.bytemining.com/2011/08/Hadoop-fatigue-alternatives-to-Hadoop/ .

Because most vendor products and enhancements are focused on MapReduce jobs, it makes sense to stick to MapReduce unless there is a pressing need for a different massively parallel processing option.

Problem

Buying inexpensive hardware for large big data implementations can still be a very large capital expense. Are there any pay-as-you-go cloud options?

Solution

Amazon EMR is a public-cloud-based web service that provides large-scale data computing power. Unlike a typical Hadoop cluster, which stores data in HDFS, Amazon EMR uses Amazon S3 for storage. As a result, its data ingestion, analysis, and import/export concerns differ from those of a typical HDFS-based Hadoop system.

Amazon EMR data ingestion, analysis, and import/export concerns are discussed at http://media.amazonwebservices.com/AWS_Amazon_EMR_Best_Practices.pdf .

Table-Style Database Management Services

Problem

My existing database and analytics experts have RDBMS and SQL skills. How can I quickly make them big data users?

Solution

Cassandra, HP Vertica, DataStax, Oracle Exadata, and Teradata Aster are some table-style database-management services that run over Hadoop and provide the abstraction needed to reduce latency and improve performance.

Compared to NoSQL DBMSs, table-style DBMSs bring vendor lock-in, hardware dependencies, and complicated clustering. Table-style DBMSs also hog memory and network, and hence have consistency issues across clusters. Data integrity and consistency suffer as performance improves.

Some table-style DBMSs come packaged as a combination of hardware, platform, and software. Hence, the eventual cost and ROI need to be thoroughly investigated before opting for one.

You can find information concerning the total cost of ownership (TCO) for moving to Oracle Exadata at the following web site: http://www.zdnet.com/reproducing-youtube-on-oracle-exadata-1339318266/ .

Problem

Is there a “one solution fits all” NoSQL database?

Solution

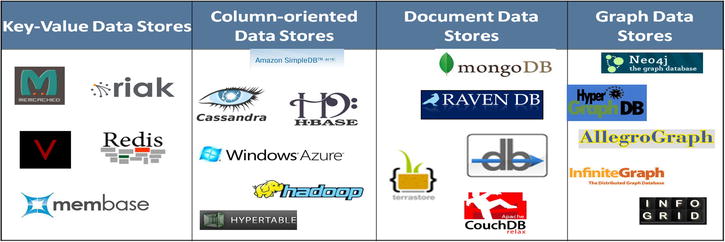

NoSQL databases (Figure 11-1) are very use-case-centric, unlike RDBMSs, which are generic and cater to multiple system needs. Maintenance of data integrity and consistency can become a concern in the long run.

Figure 11-1. NoSQL databases

Also, there are multiple key-value pair, graph, and document databases that have very different implementations. Moving from one to another might raise issues.
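As a rough illustration of why moving between NoSQL families is not mechanical, the sketch below represents the same hypothetical customer record in key-value, document, and graph form using plain Python structures. No particular database's API is implied; the field names and the `FOLLOWS` relationship are invented for the example.

```python
import json

# Hypothetical record: a customer who follows another customer.
customer = {"id": "c1", "name": "Asha", "city": "Pune", "follows": ["c2"]}


def to_key_value(record):
    """Key-value style: one opaque value per key; the store cannot look inside it."""
    return {f"customer:{record['id']}": json.dumps(record, sort_keys=True)}


def to_document(record):
    """Document style: nested fields stay visible and can be queried individually."""
    return {
        "_id": record["id"],
        "profile": {"name": record["name"], "city": record["city"]},
        "follows": list(record["follows"]),
    }


def to_graph(record):
    """Graph style: the entity becomes a node and each relationship becomes an edge."""
    node = {
        "id": record["id"],
        "labels": ["Customer"],
        "properties": {"name": record["name"], "city": record["city"]},
    }
    edges = [(record["id"], "FOLLOWS", target) for target in record["follows"]]
    return node, edges


if __name__ == "__main__":
    print(to_key_value(customer))
    print(to_document(customer))
    print(to_graph(customer))
```

The three shapes answer different questions cheaply: lookup by key, filtering on nested fields, and traversing relationships, respectively. A migration therefore usually means remodeling the data, not just copying it.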

In-Memory Big Data Management Systems

Problem

Real-time big data analysis requires in-memory processing capabilities. Which are the leading products?

Solution

SAP HANA and Oracle Exalytics are some of the leading products for in-memory processing. Oracle and SAP HANA provide their own set of adapters to connect to various other products, as well. Though different as far as hardware and platform, both products provide comparable features. The only concern is vendor lock-in and the inability to integrate with any other NoSQL database. Implementing these vendor products might require significant changes to a customer’s existing high-availability and disaster-recovery processes.

Problem

Are there any large data sets available in the public domain that I can use for my big data pilot projects?

Solution

Data.gov is an official US government web site. You can visit http://catalog.data.gov/dataset to see about 100,000 datasets belonging to different categories.

Data discovery and search capabilities have gained importance because of the new non-enterprise and social-media data that has started feeding into an organization’s decision-making systems. Discovering insights from unstructured data (videos, call center audio, blogs, Twitter feeds, Facebook posts) has been a challenge for existing decision-making systems and has given birth to new products like IBM Vivisimo, Oracle Endeca, and others.

You can find more information at the following web sites: https://wikis.oracle.com/display/endecainformationdiscovery/Home;jsessionid=7EF303D9FADB3215001F27A4F4DACFE5 and http://www.ndm.net/datawarehouse/IBM/infosphere-dataexplorer-vivisimo .

Problem

There are so many cool visualization tools coming up every day. How do I select the appropriate tool for my enterprise?

Solution

High-volume, real-time analytics has brought in newer products in the market like the following ones:

- Tableau

- QlikView

- Tibco Spotfire

- MicroStrategy

- SAS VA

From a market presence perspective, QlikView has been around for a while. Comparatively, Tableau is a new entrant into the market. SAS has also jumped into the fray with its SAS Visual Analytics offering. MicroStrategy and Tibco Spotfire also have a substantial market presence.

Though these are in-memory visualization tools that are highly integrated with the Hadoop ecosystem, issues such as the following exist with them:

- Data is expected in a certain format for better performance.

- Integration with Apache Hive does not always perform well.

- Apache Hive has to be modified to provide data in an aggregated manner to get high performance.
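The last point, supplying data to the visualization tool in aggregated form, can be sketched in Python as follows. The row layout and the `aggregate_sales` helper are hypothetical; in practice the aggregation would typically be done on the Hive side (for example, in a summary table) rather than in client code.

```python
from collections import defaultdict

# Hypothetical detail rows (month, region, amount), as a raw table might return them.
DETAIL_ROWS = [
    ("2013-01", "north", 120.0),
    ("2013-01", "north", 80.0),
    ("2013-01", "south", 50.0),
    ("2013-02", "north", 200.0),
]


def aggregate_sales(rows):
    """Collapse detail rows into one total per (month, region)."""
    totals = defaultdict(float)
    for month, region, amount in rows:
        totals[(month, region)] += amount
    # Sorted output keeps the summary stable for the charting layer.
    return sorted(
        (month, region, total) for (month, region), total in totals.items()
    )


if __name__ == "__main__":
    for row in aggregate_sales(DETAIL_ROWS):
        print(row)
```

The visualization tool then loads a few summary rows per chart instead of scanning every detail row, which is the main reason pre-aggregation helps performance.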

Here are some of the available analytics tools:

- Revolution R Analytics

- SAS

Though Revolution R Analytics is a new entrant into the market, it has made an immense impact. SAS, as the established player, has an advantage over Revolution Analytics. However, it might prove too costly in the long run.

Problem

I already invested in business intelligence (BI) tools like Talend, Informatica, and others. Can I use them for big data integration?

Solution

Talend, Informatica, Pentaho, and IBM DataStage are some of the tools that can act as ETL tools, as well as scheduling tools.

Some tools are still not mature and do not have all the adapters and accelerators needed to connect to all the Hadoop ecosystem entities. There might be restrictions on which versions of the entities these might work with.

Not all the tools are compatible with different distributions of Hadoop (like Cloudera, Hortonworks, MapR, Intel, and IBM BigInsights).

You can read about the Talend features at http://www.talend.com/products/big-data/matrix .

You can find additional information about Informatica PowerExchange at http://www.informatica.com/us/products/enterprise-data-integration/powerexchange/ .

Summary

Since the big data industry is still evolving, more products might emerge as leaders in the field. As I write this, Oracle has launched its Big Data X4-2 appliance, Pivotal has launched a Platform as a Service (PaaS) called “Pivotal One,” and AWS has upgraded its Elastic MapReduce offering to Hadoop 2.2 with the YARN framework. More updates and upgrades with better performance will follow. Architects should keep themselves abreast of the latest developments so that they can recommend the right products to their customers.