CHAPTER 2

Cloud Concepts, Architecture, and Design

This chapter covers the following topics in Domain 1:

• Cloud computing players and terms

• Cloud computing essential characteristics

• Cloud service categories

• Cloud deployment models

• Cloud shared considerations

• Impact of related technologies

• Security concepts relevant to cloud computing

• Certifications

• Cloud cost-benefit analysis

• Cloud architecture models

The term “cloud” is heard almost everywhere in advertising and popular culture. From television commercials proclaiming “take it to the cloud” to the ubiquitous presence of products such as Apple’s iCloud, Microsoft’s OneDrive, and the plethora of Google apps, the term is one that even the most novice of technology consumers know, even if they have scant knowledge or understanding of what a cloud is or does.

For security professionals working in a cloud environment, much of the knowledge and best practice from traditional data center models still applies, but a thorough understanding of cloud computing concepts and the different types of clouds and cloud services is essential to successfully implementing and overseeing security policy and compliance.

The National Institute of Standards and Technology (NIST) of the United States has published Special Publication (SP) 800-145, “The NIST Definition of Cloud Computing,” which gives its official definition of cloud computing:

Cloud computing is a model for enabling ubiquitous, convenient, on-demand network access to a shared pool of configurable computing resources (e.g., networks, servers, storage, applications, and services) that can be rapidly provisioned and released with minimal management effort or service provider interaction. This cloud model is composed of five essential characteristics, three service models, and four deployment models.

Rather than the classic data center model with server hardware, network appliances, cabling, power units, and environmental controls, cloud computing is predicated on the concept of purchasing “services” that provide various levels of automation and support based on the needs of the customer at any point in time. This is in contrast to the classic data center model, in which a customer must purchase and configure systems for maximum capacity at all times, regardless of actual need, to accommodate business cycles and changing demands.

Cloud Computing Concepts

Before we dive into more thorough discussions of cloud concepts and capabilities, it is important to lay a strong foundation of cloud computing definitions first via a general overview of the technologies involved. This will form the basis for the rest of this chapter as well as the book as a whole.

Cloud Computing Definitions

The following list presents some introductory definitions for this chapter, based on ISO/IEC 17788, “Cloud Computing—Overview and Vocabulary.” Many more definitions will be given later to build on this definitions primer (see also the glossary in this book).

• Cloud application An application that does not reside or run on a user’s device but rather is accessible via a network.

• Cloud application portability The ability to migrate a cloud application from one cloud provider to another.

• Cloud computing Network-accessible platform that delivers services from a large and scalable pool of systems, rather than dedicated physical hardware and more static configurations.

• Cloud data portability The ability to move data between cloud providers.

• Cloud deployment model How cloud computing is delivered through a set of particular configurations and features of virtual resources. The cloud deployment models are public, private, hybrid, and community.

• Cloud service Capabilities offered via a cloud provider and accessible via a client.

• Cloud service category A group of cloud services that have a common set of features or qualities.

• Community cloud A cloud services model where the tenants are limited to those that have a relationship with one another and shared requirements, and where the cloud is maintained or controlled by at least one member of the community.

• Data portability The ability to move data from one system to another without having to re-enter it.

• Hybrid cloud A cloud services model that combines two other types of cloud deployment models.

• Infrastructure as a Service (IaaS) A cloud service category where infrastructure-level services (such as processing, storage, and networking) are provided by a cloud service provider.

• Measured service Cloud services are delivered and billed for in a metered way.

• Multitenancy Having multiple customers and applications running within the same environment, but in a way that they are isolated from each other and oftentimes not visible to each other, yet share the same resources.

• On-demand self-service A cloud customer can provision services in an automatic manner, when needed, with minimal involvement from the cloud provider.

• Platform as a Service (PaaS) A cloud service category where platform services, such as Azure or AWS, are provided to the cloud customer, and the cloud provider is responsible for the system up to the level of the actual application.

• Private cloud Cloud services model where the cloud is owned and controlled by a single entity for their own purposes.

• Public cloud Cloud services model where the cloud is maintained and controlled by the cloud provider, but the services are available to any potential cloud customers.

• Resource pooling The aggregation of resources allocated to cloud customers by the cloud provider.

• Reversibility The ability of a cloud customer to remove all data and applications from a cloud provider and completely remove all data from their environment, along with the ability to move into a new environment with minimal impact to operations.

• Software as a Service (SaaS) A cloud service category in which a full application is provided to the cloud customer, and the cloud provider maintains responsibility for the entire infrastructure, platform, and application.

• Tenant One or more cloud customers sharing access to a pool of resources.

Cloud Computing Roles

These definitions represent the basic and most important roles within a cloud system and the relationships between them, based on ISO/IEC 17788:

• Cloud auditor An auditor that is specifically responsible for conducting audits of cloud systems and cloud applications

• Cloud service broker A partner that serves as an intermediary between a cloud service customer and cloud service provider

• Cloud service customer One that holds a business relationship for services with a cloud service provider

• Cloud service partner One that holds a relationship with either a cloud service provider or a cloud service customer to assist with cloud services and their delivery. ISO/IEC 17788 names the cloud auditor and cloud service broker as examples of cloud service partners.

• Cloud service provider One that offers cloud services to cloud service customers

• Cloud service user One that interacts with and consumes services offered to a cloud service customer by a cloud service provider

Key Cloud Computing Characteristics

Cloud computing has five essential characteristics. In order for an implementation to be considered a cloud in a true sense, each of these five characteristics must be present and operational:

• On-demand self-service

• Broad network access

• Resource pooling

• Rapid elasticity

• Measured service

Each of these characteristics is discussed in more detail in the following sections.

On-Demand Self-Service

Cloud services can be requested, provisioned, and put into use by the customer through automated means without the need to interact with a person. This is typically offered by the cloud provider through a web portal but can also be provided in some cases through web API calls or other programmatic means. As services are expanded or contracted, billing is adjusted through automatic means.

In the sense of billing, this does not just apply to large companies or firms that have contractual agreements with cloud providers for services and open lines of credit or financing agreements. Even small businesses and individuals can take advantage of the same services through such simple arrangements as having a credit card on file and an awareness of the cloud provider’s terms and charges, and many systems will tell the user at the time of request what the additional and immediate costs will be.

Self-service is an integral component of the “pay-as-you-go” nature of cloud computing and the convergence of computing resources as a utility service.
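The request, quote, and billing flow described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `SelfServicePortal` class, its method names, and the hourly rates are all hypothetical and do not correspond to any real provider's API or pricing.

```python
from dataclasses import dataclass, field

# Hypothetical hourly prices; real providers publish their own rate cards.
HOURLY_RATES = {"small": 0.02, "medium": 0.08, "large": 0.32}


@dataclass
class SelfServicePortal:
    """Toy stand-in for a provider's portal or API (not a real SDK)."""
    instances: dict = field(default_factory=dict)
    _next_id: int = 1

    def quote(self, size: str, count: int = 1) -> float:
        """Return the additional hourly cost before the customer commits."""
        return HOURLY_RATES[size] * count

    def provision(self, size: str) -> str:
        """Create an instance immediately, with no human in the loop."""
        instance_id = f"vm-{self._next_id}"
        self._next_id += 1
        self.instances[instance_id] = size
        return instance_id

    def release(self, instance_id: str) -> None:
        """Remove an instance; it stops contributing to the bill."""
        del self.instances[instance_id]

    def hourly_bill(self) -> float:
        """Current hourly charge, derived from what is provisioned right now."""
        return sum(HOURLY_RATES[size] for size in self.instances.values())
```

A customer could call `quote("medium", 2)` to see the added cost up front, then `provision` and `release` instances as needed; `hourly_bill()` always reflects only what is currently provisioned.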

Broad Network Access

All cloud services and components are accessible over the network and, in most cases, through many different vectors. This ability for heterogeneous access through a variety of clients is a hallmark of cloud computing, where services are provided while staying agnostic to the access methods of the consumers. In the case of cloud computing, services can be accessed typically from either web browsers or thick or thin clients, regardless of whether the consumer is using a mobile device, laptop, or desktop, and either from a corporate network or from a personal device on an open network.

The cloud revolution in computing has occurred concurrently with the mobile computing revolution, making it a top priority to remain agnostic about the means of access. Because many companies have begun allowing bring-your-own-device (BYOD) access to their corporate IT systems, it is imperative that any environments they operate within be able to support a wide variety of platforms and software clients.

Resource Pooling

One of the most important concepts in cloud computing is resource pooling, or multitenancy. In a cloud environment, regardless of the type of cloud offering, you will always have a mix of applications and systems that coexist within the same set of physical and virtual resources. As cloud customers add to and expand their usage within the cloud, the new resources are dynamically allocated within the cloud, and the customer has no control over (and, really, no need to know) where the actual services are deployed. This aspect of cloud can apply to any type of service deployed within the environment, including processing, memory, network utilization and devices, as well as storage. At the time of provisioning, services can and will be automatically deployed throughout the cloud infrastructure, and mechanisms are in place for locality and other requirements based on the particular needs of the customer and any regulatory or legal requirements that they be physically housed in a particular country or data center. However, these will have been configured within the provisioning system via contract requirements before they are actually requested by the customer, and then they are provisioned within those rules by the system without the customer needing to specify them at that time.

Many corporations have computing needs that are cyclical in nature. With resource pooling and a large sample of different systems that are utilized within the same cloud infrastructure, companies can have the resources they need on their own cycles without having to build out systems to handle the maximum projected load, which means these resources won’t sit unused and idle at other nonpeak times. Significant cost savings can be realized for all customers of the cloud through resource pooling and the economies of scale that it affords.
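A small worked example may make the economies of scale concrete. The sketch below compares the capacity needed when each tenant builds for its own peak with the capacity a shared pool needs when the peaks fall at different times. The tenant names and monthly demand figures are invented purely for illustration.

```python
# Hypothetical monthly demand (in server units) for three tenants whose
# busy periods fall in different months. Figures are illustrative only.
demand = {
    "university_registration": [2, 2, 2, 2, 2, 2, 2, 20, 4, 2, 2, 2],
    "healthcare_enrollment":   [3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 18, 12],
    "tax_filing":              [4, 6, 10, 22, 4, 4, 4, 4, 4, 4, 4, 4],
}


def dedicated_capacity(profiles):
    """Each tenant builds for its own peak: sum of the individual maxima."""
    return sum(max(series) for series in profiles.values())


def pooled_capacity(profiles):
    """A shared pool only needs to cover the peak of the combined demand."""
    combined = [sum(month) for month in zip(*profiles.values())]
    return max(combined)


print(dedicated_capacity(demand))  # 60
print(pooled_capacity(demand))     # 27
```

With these assumed profiles, three separate data centers would need 60 units in total, while a shared pool covers the same workloads with 27, because the peaks do not coincide.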

Rapid Elasticity

With cloud computing being decoupled from hardware and with the programmatic provisioning capabilities, services can be rapidly expanded at any time when additional resources are needed. This capability can be provided through the web portal or initiated on behalf of the customer, either in anticipation of a projected increase in demand for services or during such an increase; the decision to change scale is balanced against the funding and capabilities of the customer. If the applications and systems are built to support it, elasticity can be automated: based on predetermined metrics, the cloud provider can programmatically scale the system by adding resources and bill the customer accordingly.

In a classic data center model, a customer needs to have enough computing resources configured and ready at all times to handle any potential and projected load on their systems. Along with what was previously mentioned under “Resource Pooling,” many companies that have cyclical and defined periods of heavy load can run leaner systems during off-peak times and then scale up, either manually or automatically, as the need arises. A prime example of this would be applications that handle healthcare enrollment or university class registrations. In both cases, the systems have very heavy peak use periods and largely sit idle the remainder of the year.
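Automatic scaling on predetermined metrics can be pictured as a simple threshold policy. The following is a minimal sketch, not any provider's actual algorithm: the function name, the CPU thresholds, and the instance limits are all assumptions chosen for illustration. The `maximum` parameter stands in for the customer's funding limit.

```python
def desired_instances(current: int, cpu_utilization: float,
                      scale_out_at: float = 0.75, scale_in_at: float = 0.30,
                      minimum: int = 1, maximum: int = 10) -> int:
    """Return the instance count a simple threshold policy would target."""
    if cpu_utilization > scale_out_at:
        target = current + 1      # demand is high: add one instance
    elif cpu_utilization < scale_in_at:
        target = current - 1      # demand is low: release one instance
    else:
        target = current          # within the comfortable band: no change
    # Never drop below the floor or exceed the customer's spending cap.
    return max(minimum, min(maximum, target))
```

For example, `desired_instances(2, 0.9)` returns 3, while `desired_instances(10, 0.95)` stays at 10 because the assumed cap has been reached.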

Measured Service

Depending on the type of service and cloud implementation, resources are metered and logged for billing and utilization reporting. This metering can be done in a variety of ways and using different aspects of the system, or even multiple methods. This can include storage, network, memory, processing, the number of nodes or virtual machines, and the number of users. Within the terms of the contract and agreements, these metrics can be used for a variety of uses, such as monitoring and reporting, placing limitations on resource utilization, and setting thresholds for automatic elasticity. These metrics also will be used to some degree in determining the provider’s adherence to the requirements set forth in the service level agreement (SLA).

Many large companies routinely bill individual systems internally based on their usage of data centers and resources. This is especially true of companies that contract IT services to other companies or government agencies. In a classic data center model with physical hardware, this is much more difficult to achieve in a meaningful way. With the metering and reporting metrics that cloud providers are able to offer, this becomes much simpler for companies and offers a significantly greater degree of flexibility, with granularity of systems and expansion.
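Internal billing from metered usage amounts to multiplying each metered quantity by a unit price and totaling per system. The sketch below assumes a hypothetical record format of (system, metric, quantity) and invented unit prices; real providers define their own metrics and rates.

```python
from collections import defaultdict

# Hypothetical unit prices for a few of the metered dimensions named above.
UNIT_PRICES = {"vm_hours": 0.05, "storage_gb_month": 0.02, "network_gb": 0.01}


def chargeback(usage_records):
    """Total metered cost per internal system from (system, metric, quantity) records."""
    totals = defaultdict(float)
    for system, metric, quantity in usage_records:
        totals[system] += UNIT_PRICES[metric] * quantity
    # Round to cents for reporting.
    return {system: round(cost, 2) for system, cost in totals.items()}


# Illustrative usage records for two internal systems.
records = [
    ("payroll", "vm_hours", 720),
    ("payroll", "storage_gb_month", 500),
    ("intranet", "vm_hours", 200),
    ("intranet", "network_gb", 1000),
]

print(chargeback(records))  # {'payroll': 46.0, 'intranet': 20.0}
```

The same per-system totals could also feed utilization limits or elasticity thresholds, as described above.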

Building-Block Technologies

Regardless of the service category or deployment model used for a cloud implementation, the core components and building blocks are the same. Any cloud implementation at a fundamental level is composed of processor or CPU, memory/RAM, networking, and storage solutions. Depending on the cloud service category, the cloud customer will have varying degrees of control over or responsibility for those building blocks. The next section introduces the three main cloud service categories and goes into detail about what the cloud customer has access to or responsibility for.

Cloud Reference Architecture

A few major components fit together to form the full picture of a cloud architecture and implementation. These components include the activities, roles, and capabilities that go into managing and operating a cloud environment, as well as the actual cloud service categories and cloud deployment models that serve as the basis for delivery of cloud hosting and services. This includes numerous features and components common to all cloud environments, regardless of the service category or deployment model.

Cloud Computing Activities

Different sets of cloud computing activities are performed by the cloud service customer, the cloud service provider, and the cloud service partner, as outlined in ISO/IEC 17789:2014, “Information technology—Cloud computing—Reference architecture.” To keep things simple, we will limit our discussion here to a high-level overview and sampling of the activities, all of which we discuss in greater detail throughout the book.

Cloud Service Customer

The following roles are performed by the cloud service customer:

• Cloud service user Uses the cloud services

• Cloud service administrator Tests cloud services, monitors services, administers security of services, provides usage reports on cloud services, and addresses problem reports

• Cloud service business manager Oversees business and billing administration, purchases the cloud services, and requests audit reports as necessary

• Cloud service integrator Connects and integrates existing systems and services to the cloud

Cloud Service Provider

The following roles are performed by the cloud service provider:

• Cloud service operations manager Prepares systems for the cloud, administers services, monitors services, provides audit data when requested or required, and manages inventory and assets

• Cloud service deployment manager Gathers metrics on cloud services, manages deployment steps and processes, and defines the environment and processes

• Cloud service manager Delivers, provisions, and manages the cloud services

• Cloud service business manager Oversees business plans and customer relationships as well as processes financial transactions

• Customer support and care representative Provides customer service and responds to customer requests

• Inter-cloud provider Responsible for peering with other cloud services and providers as well as overseeing and managing federations and federated services

• Cloud service security and risk manager Manages security and risks and oversees security compliance

• Network provider Responsible for network connectivity, network services delivery, and management of network services

Cloud Service Partner

The following roles are performed by the cloud service partner:

• Cloud service developer Develops cloud components and services and performs the testing and validation of services

• Cloud auditor Performs audits as well as prepares and authors audit reports

• Cloud service broker Obtains new customers, analyzes the marketplace, and secures contracts and agreements

Cloud Service Capabilities

In a discussion of cloud service delivery, three main cloud service capabilities form the basis for the cloud service categories:

• Infrastructure service capability The cloud customer can provision and have substantial configuration control over processing, storage, and network resources.

• Platform service capability The cloud customer can deploy code and applications using programming languages and libraries that are maintained and controlled by the cloud provider.

• Software service capability The cloud customer uses a fully established application provided by the cloud provider, with minimal user configuration options allowed.

Cloud Service Categories

Although many different terms are used for the specific types of cloud service models and offerings, only three main models are universally accepted:

• Infrastructure as a Service (IaaS)

• Platform as a Service (PaaS)

• Software as a Service (SaaS)

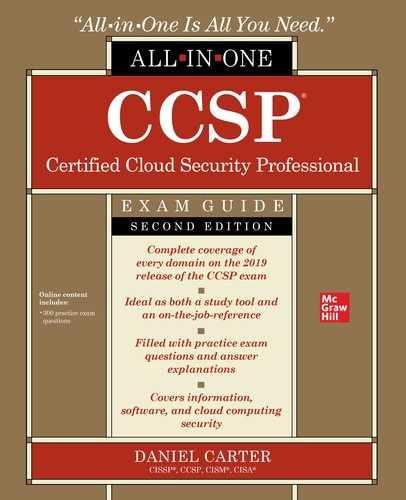

A brief overview of these models is given in Figure 2-1, with more information on each model provided in the following sections.

Figure 2-1 An overview of cloud service categories

Infrastructure as a Service (IaaS)

IaaS is the most basic cloud service and the one where the most customization and control is available for the customer. The following is from the NIST SP 800-145 definition for IaaS:

The capability provided to the consumer is to provision processing, storage, networks, and other fundamental computing resources where the consumer is able to deploy and run arbitrary software, which can include operating systems and applications. The consumer does not manage or control the underlying cloud infrastructure but has control over operating systems, storage, and deployed applications; and possibly limited control of selected networking components (e.g., host firewalls).

Key Features and Benefits of IaaS The following are the key features and benefits of IaaS. Some key features overlap with other cloud service models, but others are unique to IaaS.

• Scalability Within an IaaS framework, the system can be rapidly provisioned and expanded as needed, either for predictable events or in response to unexpected demand.

• Cost of ownership of physical hardware Within IaaS, the customer does not need to procure any hardware either for the initial launch and implementation or for future expansion.

• High availability The cloud infrastructure, by definition, meets high availability and redundancy requirements, which would result in additional costs for a customer to meet within their own data center.

• Physical security requirements Because you’re in a cloud environment and don’t have your own data centers, the cloud provider assumes the cost and oversight of the physical security of its data centers.

• Location and access independence The cloud-based infrastructure has no dependence on the physical location of the customer or users of the system, as well as no dependence on specific network locations, applications, or clients to access the system. The only dependency is on the security requirements of the cloud itself and the application settings used.

• Metered usage The customer only pays for the resources they are using and only during the durations of use. There is no need to have large data centers with idle resources for large chunks of time just to cover heavy-load periods.

• Potential for “green” data centers Many customers and companies are interested in having more environmentally friendly data centers that are highly efficient in terms of both power consumption and cooling. Within cloud environments, many providers have implemented “green” data centers whose cost effectiveness depends on economies of scale that most customers could not achieve on their own. Although this is not a requirement for a cloud provider, many major providers do market this as a feature, which is of interest to many customers. Even without specific concerns or priorities for environmental reasons, any organization can appreciate the power and cooling demands of a data center and the financial savings realized within a large cloud environment.

Platform as a Service (PaaS)

PaaS allows a customer to fully focus on their core business functions from the software and application levels, either in development or production environments, without having to worry about the resources at the typical data center operations level. The following is from the NIST SP 800-145 definition of PaaS:

The capability provided to the consumer is to deploy onto the cloud infrastructure consumer-created or acquired applications created using programming languages, libraries, services, and tools supported by the provider. The consumer does not manage or control the underlying cloud infrastructure, including network, servers, operating systems, or storage, but has control over the deployed applications and possibly configuration settings for the application-hosting environment.

Key Features and Benefits of PaaS The following are the key features of the PaaS cloud service model. Although there is some overlap with IaaS and SaaS, each model has its own unique set of features and details.

• Auto-scaling As resources are needed (or not needed), the system can automatically adjust the sizing of the environment to meet demand without interaction from the customer. This is especially important for those systems whose load is cyclical in nature, and it allows an organization to only configure and use what is actually needed so as to minimize idle resources.

• Multiple host environments With the cloud provider responsible for the actual platform, the customer has a wide choice of operating systems and environments. This feature allows software and application developers to test or migrate their systems between different environments to determine the most suitable and efficient platform for their applications to be hosted under without having to spend time configuring and building new systems on physical servers. Because the customer only pays for the resources they are using in the cloud, different platforms can be built and tested without a long-term or expensive commitment by the customer. This also allows a customer evaluating different applications to be more open to underlying operating system requirements.

• Choice of environments Most organizations have a set of standards for what their operations teams will support and offer as far as operating systems and platforms are concerned. This limits the options for application environments and operating system platforms that a customer can consider, both for homegrown and commercial products. The choice of environments not only extends to actual operating systems, but it also allows enormous flexibility as to specific versions and flavors of operating systems, contingent on what the cloud provider offers and supports.

• Flexibility In a traditional data center setting, application developers are constrained by the offerings of the data center and are locked into proprietary systems that make relocation or expansion difficult and expensive. With those layers abstracted in a PaaS model, the developers have enormous flexibility to move between providers and platforms with ease. With many software applications and environments now open source or built by commercial companies to run on a variety of platforms, PaaS offers development teams enormous ease in testing and moving between platforms or even cloud providers.

• Ease of upgrades With the underlying operating systems and platforms being offered by the cloud provider, upgrades and changes are simpler and more efficient than in a traditional data center model, where system administrators need to perform actual upgrades on physical servers, which also means downtime and loss of productivity during upgrades.

• Cost effective As with other cloud categories, PaaS offers significant cost savings for development teams because only systems that are currently in use incur costs. Additional resources can be added or scaled back as needed during development cycles in a quick and efficient manner.

• Ease of access With cloud services being accessible from the Internet and regardless of access clients, development teams can easily collaborate across national and international boundaries without needing to obtain accounts or access into proprietary corporate data centers. The location and access methods of development teams become irrelevant from a technological perspective, but the Cloud Security Professional needs to be cognizant of any potential contractual or regulatory requirements. For example, with many government contracts, there may be requirements that development teams or the hosting of systems and data be constrained within certain geographic or political borders.

• Licensing In a PaaS environment, the cloud provider is responsible for handling proper licensing of operating systems and platforms, which would normally be incumbent on an organization to ensure compliance. Within a PaaS cloud model, those costs are assumed as part of the metered costs for services and incumbent on the cloud provider to track and coordinate with the vendors.

Software as a Service (SaaS)

SaaS is a fully functioning software application for a customer to use in a turnkey operation, where all the underlying responsibilities and operations for maintaining systems, patches, and operations are abstracted from the customer and are the responsibility of the cloud services provider. The following is from the NIST SP 800-145 definition of SaaS:

The capability provided to the consumer is to use the provider’s applications running on a cloud infrastructure. The applications are accessible from various client devices through either a thin client interface, such as a web browser (e.g., web-based email), or a program interface. The consumer does not manage or control the underlying cloud infrastructure, including network, servers, operating systems, storage, or even individual application capabilities, with the possible exception of limited user-specific application configuration settings.

Key Features and Benefits of SaaS The following are the key features and benefits of the SaaS cloud service model. Some are similar to those of IaaS and PaaS, but due to the nature of SaaS being a fully built software platform, certain aspects are unique to SaaS.

• Support costs and efforts In the SaaS service category, the cloud services are solely the responsibility of the cloud provider. Because the customer only licenses access to the software platform and capabilities, the entire underlying system—from network to storage and operating systems, as well as the software and application platforms themselves—is entirely removed from the responsibility of the consumer. Only the availability of the software application is important to the customer, and any responsibility for upgrades, patching, high availability, and operations solely resides with the cloud provider. This enables the customer to focus on productivity and business operations instead of IT operations.

• Reduced overall costs The customer in a SaaS environment is only licensing use of the software. The customer does not need to have systems administrators or security staff on hand, nor do they need to purchase hardware and software, plan for redundancy and disaster recovery, perform security audits on infrastructure, or deal with utility and environmental costs. Apart from licensing access for appropriate resources, features, and user counts from the cloud provider, the only cost concern for the customer is training in the use of the application platform and the device or computer access that their employees or users need to use the system.

• Licensing Similar to PaaS, within a SaaS model the licensing costs are the responsibility of the cloud provider. Whereas PaaS shifts the licensing of the operating system and platforms to the cloud provider, SaaS takes it a step further with the software and everything included, leaving the customer to just “lease” licenses as they consume resources within the provided application. This removes the bookkeeping and individual costs of licenses from the customer’s perspective and instead rolls everything into the single cost of utilization of the actual software platform. This model also allows the cloud provider, based on the scale of their implementations, to negotiate far more beneficial bulk licensing savings than a single company or user would ever be able to do on their own, and thus drive down the total costs to their customers as well.

• Ease of use and administration With a SaaS implementation being a fully featured software installation and product, the cost and efforts of administration are substantially lowered as compared to a PaaS or IaaS model. The customer only bears responsibility for configuring user access and access controls within the system, as well as minimal configurations. The configurations typically allowed within a SaaS system are usually very restricted and may only allow slight tweaks to the user experience, such as default settings or possibly some degree of branding; otherwise, all overhead and operations are held by the cloud provider exclusively.

• Standardization Because SaaS is a fully featured software application, all users will by definition be running the exact same version of the software at all times. A major challenge that many development and implementation teams face relates to patching and versioning, as well as configuration baselines and requirements. Within a SaaS model, because this is all handled by the cloud provider, it is achieved automatically.
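The three NIST definitions quoted in this section differ mainly in which layers the consumer controls. The sketch below encodes that split as a small lookup table. It is a simplification: the layer names are assumptions chosen for illustration, and it ignores the "possibly limited" exceptions in the definitions (selected networking components in IaaS, hosting-environment settings in PaaS, and user-specific settings in SaaS).

```python
# Simplified stack of layers; the names are illustrative, not from a standard.
LAYERS = ["network", "servers", "storage", "operating_system", "application"]

# Layers the consumer controls in each category, per the quoted definitions.
CONSUMER_CONTROLS = {
    "IaaS": {"operating_system", "storage", "application"},
    "PaaS": {"application"},
    "SaaS": set(),
}


def responsible_party(category: str, layer: str) -> str:
    """Return who controls a given layer under a given service category."""
    return "consumer" if layer in CONSUMER_CONTROLS[category] else "provider"


for category in CONSUMER_CONTROLS:
    row = {layer: responsible_party(category, layer) for layer in LAYERS}
    print(category, row)
```

Reading the output row by row shows consumer control shrinking from IaaS to SaaS, which matches the progression of the three definitions.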

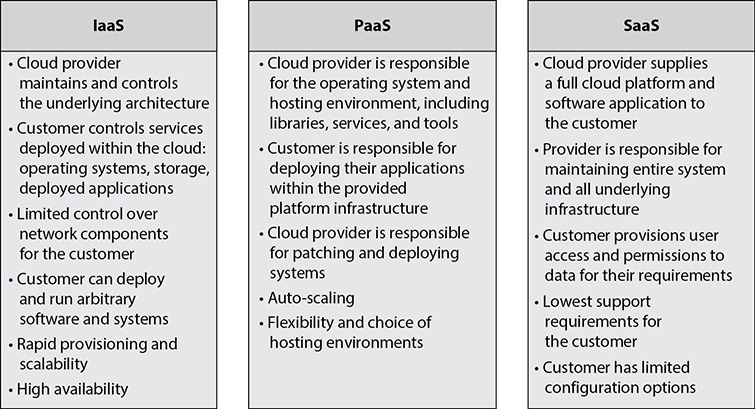

Cloud Deployment Models

As shown in Figure 2-2, four main types of cloud deployment and hosting models are in common use, each of which can host any of the three main cloud service models.

Figure 2-2 Cloud deployment models

Public Cloud

A public cloud is just what it sounds like. It is a model that provides cloud services to the general public or any company or organization at large without restriction beyond finances and planning. The following is the NIST SP 800-145 definition:

The cloud infrastructure is provisioned for open use by the general public. It may be owned, managed, and operated by a business, academic, or government organization, or some combination of them. It exists on the premises of the cloud provider.

Key Benefits and Features of the Public Cloud Model The following are key and unique benefits and features of the public cloud model:

• Setup Setup is very easy and inexpensive for the customer. All aspects of infrastructure, including hardware, network, licensing, bandwidth, and operational costs, are controlled and assumed by the provider.

• Scalability Even though scalability is a common feature of all cloud implementations, most public clouds are offered from very large corporations that have very broad and extensive resources and infrastructures. This allows even large implementations the freedom to scale as needed and as budgets allow, without worry of hitting capacity or interfering with other hosted implementations on the same cloud.

• Right-sizing resources Customers only pay for what they use and need at any given point in time. Their sole investment is scoped to their exact needs and can be completely fluid and agile over time based on either expected demand or unplanned demand at any given point in time.
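The right-sizing benefit can be illustrated with a little arithmetic. The following Python sketch compares paying for peak capacity around the clock against paying only for the capacity consumed each hour. The hourly rate and the demand profile are hypothetical values chosen for illustration and are not taken from any provider.

```python
from typing import Sequence

# Hypothetical figure for illustration only: assumed cost per server-hour.
HOURLY_RATE = 0.10


def fixed_capacity_cost(peak_servers: int, hours: int, rate: float = HOURLY_RATE) -> float:
    """Cost when enough servers for peak demand run for every hour."""
    return peak_servers * hours * rate


def pay_per_use_cost(servers_per_hour: Sequence[int], rate: float = HOURLY_RATE) -> float:
    """Cost when only the servers needed in each hour are billed."""
    return sum(servers_per_hour) * rate


# Assumed daily demand: 2 servers for 20 hours and 10 servers for a 4-hour peak.
daily_demand = [2] * 20 + [10] * 4

fixed = fixed_capacity_cost(peak_servers=max(daily_demand), hours=len(daily_demand))
metered = pay_per_use_cost(daily_demand)
print(f"fixed capacity: {fixed:.2f}  pay per use: {metered:.2f}")
```

Under these assumed numbers the metered cost is a third of the fixed cost; the gap narrows as demand becomes flatter.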

Private Cloud

A private cloud differs from a public cloud in that it is run by and restricted to the organization that it serves. A private cloud model may also be opened up to other entities, expanding outward for developers, employees, contractors, and subcontractors, as well as potential collaborators and other firms that may offer complementary services or subcomponents. The following is the NIST SP 800-145 definition:

The cloud infrastructure is provisioned for exclusive use by a single organization comprising multiple consumers (e.g., business units). It may be owned, managed, and operated by the organization, a third party, or some combination of them, and it may exist on or off premises.

Key Benefits and Features of the Private Cloud Model The following are key benefits and features of the private cloud model and how it differs from a public cloud:

• Ownership retention Because the organization that utilizes the cloud also owns and operates it and controls who has access to it, that organization retains full control over it. This includes control of the underlying hardware and software infrastructures, as well as control throughout the cloud in regard to data policies, access policies, encryption methods, versioning, change control, and governance as a whole. For any organization that has strict policies or regulatory controls and requirements, this model would facilitate easier compliance and verification for auditing purposes versus the more limited controls and views offered via a public cloud. In cases where contracts or regulations stipulate locality and limitations as to where data and systems may reside and operate, a private cloud ensures compliance with requirements beyond just the contractual controls that a public cloud might offer, which also would require extensive reporting and auditing to validate compliance.

• Control over systems With a private cloud, the operations and system parameters of the cloud are solely at the discretion of the controlling organization. Whereas in a public cloud model an organization would be limited to the specific offerings for software and operating system versions, as well as patch and upgrade cycles, a private cloud allows the organization to determine what versions and timelines are offered without the need for contractual negotiations or potentially increased costs if specific versions need to be retained and supported beyond the time horizon that a public cloud is willing to offer.

• Proprietary data and software control Whereas a public cloud requires extensive software and contractual requirements to ensure the segregation and security of hosted systems, a private cloud offers absolute assurance that no other hosted environments can somehow gain access or insight into another hosted environment.

Community Cloud

A community cloud is a collaboration between similar organizations that combine resources to offer a private cloud. It is comparable to a private cloud with the exception of multiple ownership and/or control versus singular ownership of a private cloud. The following is the NIST SP 800-145 definition:

The cloud infrastructure is provisioned for exclusive use by a specific community of consumers from organizations that have shared concerns (e.g., mission, security requirements, policy, and compliance considerations). It may be owned, managed, and operated by one or more of the organizations in the community, a third party, or some combination of them, and may exist on or off premises.

Hybrid Cloud

As the name implies, a hybrid cloud combines the use of both private and public cloud models to fully meet an organization’s needs. The following is the NIST SP 800-145 definition:

The cloud infrastructure is a composition of two or more distinct cloud infrastructures (private, community, or public) that remain unique entities, but are bound together by standardized or proprietary technology that enables data and application portability (e.g., cloud bursting for load balancing between clouds).

Key Benefits and Features of the Hybrid Cloud Model Building upon key features and benefits of the public and private cloud models, these are the key features of the hybrid model:

• Split systems for optimization With a hybrid model, a customer has the opportunity and benefit of splitting out their operations between public and private clouds for optimal scaling and cost effectiveness. If desired by the organization, some parts of systems can be maintained internally while leveraging the expansive offerings of public clouds for other systems. This can be done for cost reasons, security concerns, regulatory requirements, or to leverage toolsets and offerings that a public cloud may provide that their private cloud does not.

• Retain critical systems internally When a company has the option to leverage a public cloud and its services, critical data systems can be maintained internally with private data controls and access controls.

• Disaster recovery An organization can leverage a hybrid cloud as a way to maintain systems within its own private cloud but utilize and have at its disposal the resources and options of a public cloud for disaster recovery and redundancy purposes. This would allow an organization to utilize its own private resources but have the ability to migrate systems to a public cloud when needed, without having to incur the costs of a failover site that sits idle except when an emergency arises. Because public cloud systems are only used in the event of a disaster, no costs would be incurred by the organization until such an event occurs. Also, with the organization building and maintaining its own images on its private cloud, these same images could be loaded into the provisioning system of a public cloud and be ready to use if and when required.

• Scalability Along the same lines as disaster recovery usage, an organization can have at the ready a contract with a public cloud provider to handle periods of burst traffic, either forecasted or in reaction to unexpected demand. In this scenario, an organization can keep its systems internal with its private cloud but have the option to scale out to a public cloud on short notice, only incurring costs should the need arise.
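The burst scenario described in the list above can be sketched as a simple placement rule: fill the private cloud first and send only the excess to the public cloud. The function name and the notion of generic "demand units" are assumptions made for this illustration.

```python
def place_workload(demand: int, private_capacity: int) -> dict:
    """Split demand units between the private cloud and a public overflow.

    The private cloud is filled first; only the excess bursts to the
    public cloud, so public costs are incurred only when needed.
    """
    if demand < 0 or private_capacity < 0:
        raise ValueError("demand and private_capacity must be non-negative")
    private_units = min(demand, private_capacity)
    return {"private": private_units, "public": demand - private_units}


# Normal load stays internal; a spike overflows to the public cloud.
print(place_workload(demand=80, private_capacity=100))
print(place_workload(demand=130, private_capacity=100))
```

A real implementation would also need the portability and interoperability discussed later in this chapter so that the overflow workload can actually run on the public side.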

Cloud Shared Considerations

Several aspects of cloud computing are universal, regardless of the particular service category or deployment model.

Interoperability

Interoperability is the ease with which one can move or reuse components of an application or service. The underlying platform, operating system, location, API structure, or cloud provider should not be an impediment to moving services easily and efficiently to an alternative solution. An organization that has a high degree of interoperability with its systems is not bound to one cloud provider and can easily move to another if the level of service or price is not suitable. This keeps pressure on cloud providers to offer a high level of services and be competitive with pricing or else risk losing customers to other cloud providers at any time. With customers only incurring costs as they use services, it is even easier to change providers with a high degree of interoperability because long-term contracts are not set. Further, an organization also maintains flexibility to move between different cloud hosting models, such as moving from public to private clouds, and vice versa, as its internal needs or requirements change over time. With an interoperability mandate, an organization can seamlessly move between cloud providers, underlying technologies, and hosting environments, or it can split components apart and host them in different environments without impacting the flow of data or services.

Performance, Availability, and Resiliency

The concepts of performance, availability, and resiliency should be considered de facto aspects of any cloud environment due to the nature of cloud infrastructures and models. Given the size and scale of most cloud implementations, performance should always be second nature to a cloud unless it is incorrectly planned or managed. Resiliency and high availability are also hallmarks of a cloud environment. If any of these areas falls short, then customers will not stay long with a cloud provider and will quickly move to other providers. With proper provisioning and scaling by the cloud provider, performance should always be a top concern and focus. In a virtualized environment, it is easy for a cloud provider with proper management to move virtual machines and services around within its environment to maintain performance and even load. This capability is also what allows a cloud provider to maintain high availability and resiliency within its environment. As with many other key aspects of cloud computing, SLAs will determine and test the desired performance, availability, and resiliency of the cloud services.

Portability

Portability is the key feature that allows data to easily and seamlessly move between different cloud providers. An organization that has its data optimized for portability opens up enormous flexibility to move between different providers and hosting models, and it can leverage the data in a variety of ways. From a cost perspective, portability allows an organization to continually shop for cloud hosting services. Although cost can be a dominant driving factor, an organization may change providers for improved customer service, better feature sets and offerings, or SLA compliance issues. Apart from reasons to shop around for a cloud provider, portability also enables an organization to span its data across multiple cloud hosting arrangements. This can be for disaster recovery reasons, locality diversity, or high availability, for example.

Service Level Agreements (SLAs)

Whereas a contract will spell out the general terms and costs for services, the SLA is where the real meat of the business relationship and concrete requirements come into play. The SLA spells out in clear terms the minimum requirements for uptime, availability, processes, customer service and support, security controls and requirements, auditing and reporting, and potentially many other areas that will define the business relationship and the success of it. Failure to meet the SLA requirements will either entitle the customer to financial benefits or form the basis for contract termination if the cloud provider cannot restore acceptable performance.
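An uptime requirement in an SLA translates directly into a downtime budget. The following Python sketch converts an availability percentage into the minutes of downtime permitted over a period and checks an observed figure against it. The 30-day period and the function names are assumptions for illustration; an actual SLA defines its own measurement window and exclusions.

```python
def allowed_downtime_minutes(availability_percent: float, period_days: int = 30) -> float:
    """Minutes of downtime permitted by an availability target over a period."""
    if not 0 <= availability_percent <= 100:
        raise ValueError("availability_percent must be between 0 and 100")
    total_minutes = period_days * 24 * 60
    return total_minutes * (100 - availability_percent) / 100


def sla_met(observed_downtime_minutes: float, availability_percent: float,
            period_days: int = 30) -> bool:
    """True if the observed downtime stays within the SLA's budget."""
    return observed_downtime_minutes <= allowed_downtime_minutes(
        availability_percent, period_days
    )


# A 99.9 percent target over 30 days leaves a budget of roughly 43 minutes.
print(round(allowed_downtime_minutes(99.9), 1))
print(sla_met(observed_downtime_minutes=30, availability_percent=99.9))
print(sla_met(observed_downtime_minutes=60, availability_percent=99.9))
```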

Regulatory Requirements

Regulatory requirements are those imposed on a business and its operations by law, regulation, policy, or standards and guidelines. These requirements are specific to the locality in which the company or application is based or specific to the nature of the data and transactions conducted. These requirements can carry financial, legal, or even criminal penalties for failure to comply, either willfully or accidentally. Sanctions and penalties can apply to the company itself or even in some cases the individuals working for the company and on its behalf, depending on the locality and the nature of the violation. Specific industries often have their own regulations and laws governing them above and beyond general regulations, such as the Health Insurance Portability and Accountability Act (HIPAA) in the U.S. healthcare sector, the Federal Information Security Management Act (FISMA) for U.S. federal agencies and contractors, and the Payment Card Industry Data Security Standard (PCI DSS) for the financial/retail sectors. These are just a few examples of specific regulations that go above and beyond general regulations that apply to all businesses, such as the Sarbanes-Oxley (SOX) Act. The Cloud Security Professional needs to be aware of any and all regulations with which their systems and applications are required to comply; in most cases, failure to understand the requirements or ignorance of the requirements will not shield a company from investigations, penalties, or potential damage to its reputation.

Security

Security is of course always a paramount concern for any system or application. Within a cloud environment, there can be a lot of management and stakeholder unease with using a newer technology, and many will be uncomfortable with the idea of having corporate and sensitive data not under direct control of internal IT staff and hardware, housed in proprietary data centers. Depending on company policy and any regulatory or contractual requirements, different applications and systems will have their own specific security requirements and controls. Within a cloud environment, this becomes of particular interest because many customers are tenants within the same framework, and the cloud provider needs to assure each customer that its controls are being met, and to do so in a way that the provider can support across customers with varying requirements. Another challenge exists with large cloud environments that likely have very strong security controls but will not publicly document what these controls are so as not to expose themselves to attacks. This is often mitigated within contract negotiations through nondisclosure agreements and privacy requirements, although this is still not the same level of understanding and information as an organization would have with its own internal and proprietary data centers.

The main way a cloud provider implements security is by setting baselines and minimum standards, while offering a suite of add-ons or extensions to security that typically come with an additional cost. This allows the cloud provider to support a common baseline and offer additional controls on a per-customer basis to those that require or desire them. On the other hand, for many smaller companies and organizations, which would not typically have extensive financial assets and expertise, moving to a major cloud provider may very well offer significantly enhanced security for their applications at a much lower cost than they could get on their own. In effect, they are realizing the economies of scale, and the demands of larger corporations and systems will benefit their own systems for a cheaper cost.

Privacy

Privacy in the cloud environment requires particular care due to the large number of regulatory and legal requirements that can differ greatly by use and location. Adding complexity is the fact that laws and regulations may differ based on where the data is stored (data at rest) and where the data is exposed and consumed (data in transit). In cloud environments, especially large public cloud systems, data has the inherent ability to be stored and moved between different locations, from within a country, between countries, and even across continents.

Cloud providers will very often have in place mechanisms to keep systems housed in geographic locations based on a customer’s requirements and regulations, but it is incumbent on the Cloud Security Professional to verify and ensure that these mechanisms are functioning properly. Contractual requirements need to be clearly spelled out between the customer and cloud provider, but strict SLAs and the ability to audit compliance are also important. In particular, European countries have strict privacy regulations that a company must always be cognizant of or else face enormous penalties that many other countries do not have; the ability of the cloud provider to properly enforce location and security requirements will not protect a company from sanctions and penalties for compliance failure because the burden resides fully on the owner of the application and the data held within.
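One way to verify that location mechanisms are working is to compare an inventory of deployed resources against the regions a regulation or contract permits. The following Python sketch assumes the inventory has already been exported as a list of records; the record layout, resource names, and region labels are hypothetical and do not correspond to any particular provider.

```python
def find_residency_violations(resources, allowed_regions):
    """Return the names of resources located outside the allowed regions."""
    allowed = set(allowed_regions)
    return [r["name"] for r in resources if r["region"] not in allowed]


# Hypothetical inventory export; real data would come from the provider's
# reporting or audit interfaces.
inventory = [
    {"name": "db-primary", "region": "eu-west"},
    {"name": "db-replica", "region": "eu-central"},
    {"name": "backup-archive", "region": "us-east"},
]

violations = find_residency_violations(inventory, {"eu-west", "eu-central"})
print(violations)
```

Running a check like this on a schedule gives the customer its own evidence of compliance rather than relying solely on the provider's assurances.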

Auditability

Most leading cloud providers supply their customers with a good deal of auditing, including reports and evidence that show user activity, compliance with controls and regulations, systems and processes that run and an explanation of what they do, as well as information, data access, and modification records. Auditability of a cloud environment is an area where the Cloud Security Professional needs to pay particular attention because the customer does not have full control over the environment like they would in a proprietary and traditional data center model. It is up to the cloud provider to expose auditing, logs, and reports to the customer and show diligence and evidence that they are capturing all events within their environment and properly reporting them.

Governance

Governance at its core involves assigning jobs, tasks, roles, and responsibilities and ensuring they are satisfactorily performed. Whether in a traditional data center or a cloud model, governance is mostly the same and undertaken by the same approach, with a bit of added complexity in a cloud environment due to data protection requirements and the role of the cloud provider. Although the cloud environment adds complexity to governance and oversight, it also brings some benefits as well. Most cloud providers offer extensive and regular reporting and metrics, either in real time from their web portals or in the form of regular reporting. These metrics can be tuned to the cloud environment and configured in such a way so as to give an organization greater ease in verifying compliance as opposed to a traditional data center, where reporting and collection mechanisms have to be established and maintained. However, care also needs to be taken with portability and migration between different cloud providers or hosting models to ensure that metrics are equivalent or comparable to be able to maintain a consistent and ongoing governance process.

Maintenance and Versioning

With the different types of cloud service categories, it is important for the contract and SLA to clearly spell out maintenance responsibilities. With a SaaS implementation, the cloud provider is basically responsible for all upgrades, patching, and maintenance, whereas with PaaS and certainly IaaS, some duties belong to the cloud customer while the rest are retained by the cloud provider. Outlining maintenance and testing practices and timelines with the SLA is particularly important for applications that may not always work correctly because of new versions or changes to the underlying system. This requires the cloud provider and cloud customer to work out a balance between the needs of the cloud provider to maintain a uniform environment and the needs of the cloud customer to ensure continuity of operations and system stability. Whenever a system upgrade or maintenance is performed, it is crucial to establish version numbers for platforms and software. With versioning, changes can be tracked and tested, with known versions available to fall back to if necessary due to problems with new versions. There should be an overlap period where a previous version (or versions) is available, which should be spelled out in the SLA.

Reversibility

Reversibility is the ability of a cloud customer to take all their systems and data out of a cloud provider and have assurances from the cloud provider that all the data has been securely and completely removed within an agreed-upon timeline. In most cases this will be done by the cloud customer by first retrieving all their data and processes from the cloud provider, serving notice that all active and available files and systems should be deleted, and then removing all traces from long-term archives or storage at an agreed-upon point in time. Reversibility also encompasses the ability to smoothly transition to a different cloud provider with minimal operational impact.

Impact of Related Technologies

There are many technologies that, while not explicitly part of cloud computing, are widely used within cloud environments. These emerging and related technologies will play increasing roles in cloud computing infrastructure and the common uses of cloud resources by customers.

Artificial Intelligence

Artificial intelligence (AI) allows machines to learn from processing experiences, adjusting to new data inputs and sources, and ultimately performing human-like analyses and adaptations to them. The primary way that AI operates is by consuming very large amounts of data and recognizing and analyzing patterns in the data. There are three main types of artificial intelligence: analytical, human-inspired, and humanized.

Analytical AI is purely cognitive-based. It focuses on the ability of systems to analyze data from past experiences and to extrapolate ways to make better future decisions. It is completely dependent on data and makes decisions only from it, without the incorporation of external considerations or factors.

Human-inspired AI expands on the solely cognitive limitation of analytical AI by incorporating emotional intelligence. This adds the consideration of emotional responses and perspectives to decision-making processes. Both the emotional perspective of potential inputs and the predicted emotional response of the outputs are considered.

Humanized AI is the highest order of intelligence and strives to incorporate all aspects of the human experience. Humanized AI incorporates cognitive learning and responses as well as emotional intelligence, but then expands to also add social intelligence. With the addition of social intelligence, the artificial system becomes both aware of itself and self-conscious as it processes interactions.

Artificial intelligence plays a prominent role with cloud computing through big data systems and data mining. It allows systems to adapt to new trends and what they might mean with data inputs and to make informed and properly impacting decisions based on them, especially as users and human interaction are concerned. Of importance to cloud security is the impact on data inputs and their integrity that will directly influence decisions made by AI systems. Without properly secure and applicable inputs, it is impossible to gain a beneficial advantage through their synthesis.

Machine Learning

Machine learning involves using scientific and statistical data models and algorithms to allow machines to adapt to situations and perform functions that they have not been explicitly programmed to perform. Machine learning can use a variety of different input data to make determinations and perform a variety of tasks. This is often performed by using training or “seed” data to begin system optimization, along with the continual ingesting and analysis of data during runtime.

Prime examples of where machine learning is used very prevalently today are with intrusion detection, e-mail filtering, and virus scanning. With all three examples, systems are provided with a set of seed data and patterns to look for, and then continually adapt based on that knowledge and analyzing ongoing trends to perform their tasks. In all three cases, it would be impossible to program a system to adequately protect against any and all threats, especially considering the continual adaptation and evolution of threats. The best systems work by analyzing known patterns and strategies to deal with new threats that follow similar, but not explicitly identical, patterns and methods.

Within cloud computing, machine learning performs very important tasks to allow systems to learn and adapt to computing needs. This especially impacts the scalability and elasticity of systems. While administrators can pre-program specific thresholds of system utilization or load to add or remove resources, more responsive and adaptive systems that can take a broader view of computing demands and appropriately modify resources and scale properly to meet demand will allow companies to have the most cost-effective approach to the consumption of resources.
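For comparison, the pre-programmed threshold approach mentioned above can be sketched in a few lines of Python. This is the static rule that an adaptive, learning-based system would improve upon, not a machine learning technique itself. The threshold values, instance limits, and function name are assumptions chosen for illustration.

```python
def scale_decision(cpu_percent: float, instances: int,
                   scale_out_at: float = 75.0, scale_in_at: float = 25.0,
                   min_instances: int = 1, max_instances: int = 10) -> int:
    """Return the new instance count under a fixed-threshold rule."""
    if cpu_percent >= scale_out_at and instances < max_instances:
        return instances + 1  # load is high: add one instance
    if cpu_percent <= scale_in_at and instances > min_instances:
        return instances - 1  # load is low: remove one instance
    return instances          # within the band, or already at a limit


print(scale_decision(cpu_percent=90.0, instances=3))
print(scale_decision(cpu_percent=10.0, instances=3))
print(scale_decision(cpu_percent=50.0, instances=3))
```

A rule like this reacts only to the current reading. A system that learns from historical demand could add capacity ahead of a predictable peak instead of after the threshold has been crossed.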

Blockchain

Blockchain is a list of records that are linked together via cryptography. As the name alludes to, each successive record, or block, is linked to the previous one in the chain. When a new block is added, it carries a timestamp, the new transaction data, and the cryptographic hash of the previous block, allowing for the continuation of the chain.

With blockchain, there is not a centralized repository of the chains. They are actually distributed across a number of systems, which can be in the hundreds for small applications, up to enormous networks that contain millions of systems, which is common with cryptocurrencies. This allows for the integrity of the chain and verification that it has not been modified. With the lack of a central repository or authority, there is also not a central means for compromise. An attacker would have to be able to compromise all repositories and modify them in the same manner; otherwise, the lack of integrity would be known to the users. Additionally, with the nature of the chaining of blocks together, and the use of cryptographic hashes to maintain the chain, it is effectively impossible for an attacker to modify any single block of the chain without detection, as it would also cause a downstream change of every other block, thus breaking the integrity of the chain.
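The chaining of blocks through hashes can be shown with a minimal Python sketch using the standard library's SHA-256 implementation. It models a single copy of a chain only; the field names and helper functions are assumptions for illustration, and the distribution across many systems and any consensus mechanism are deliberately left out.

```python
import hashlib
import json
import time

GENESIS_PREV_HASH = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(block: dict) -> str:
    """SHA-256 over the block's contents, serialized deterministically."""
    fields = {k: block[k] for k in ("index", "timestamp", "data", "prev_hash")}
    payload = json.dumps(fields, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def add_block(chain: list, data: str) -> None:
    """Append a block that records the hash of the block before it."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_PREV_HASH
    block = {"index": len(chain), "timestamp": time.time(),
             "data": data, "prev_hash": prev_hash}
    block["hash"] = block_hash(block)
    chain.append(block)


def is_valid(chain: list) -> bool:
    """Check every block's own hash and its link to the previous block."""
    for i, block in enumerate(chain):
        if block["hash"] != block_hash(block):
            return False
        expected_prev = chain[i - 1]["hash"] if i > 0 else GENESIS_PREV_HASH
        if block["prev_hash"] != expected_prev:
            return False
    return True


chain = []
for entry in ("alice pays bob 5", "bob pays carol 2", "carol pays dave 1"):
    add_block(chain, entry)
print(is_valid(chain))  # the chain as built

chain[1]["data"] = "bob pays carol 200"  # tamper with a middle block
print(is_valid(chain))  # the stored hash no longer matches the contents
```

Recomputing the tampered block's hash does not help the attacker, because the next block still records the old hash, so every later block would have to be rewritten as well.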

Much like cloud environments, there are four types of blockchains: public, private, consortium, and hybrid. With a public blockchain, anyone can access, join, become a validator/repository, and use it for transactions. A private blockchain requires permission to join and utilize, but otherwise functions the same as a public blockchain. A consortium blockchain is semi-private in nature, as it requires permission to join, but can be open to a group of different organizations that are working together or homogenous in nature. A hybrid blockchain draws from the characteristics of the other three types. For example, it can be a combination of public and private, where some pieces are public and others are maintained privately.

With the distributed nature of cloud computing, where resources can be anywhere and the protection of data is paramount, blockchain is likely to continue to grow in use and needs to be understood within the realm of cloud security. Many providers are starting to offer blockchain as a service within their cloud environments as well.

Mobile Device Management

Mobile device management (MDM) is an encompassing term for a suite of policies, technologies, and infrastructure that enables an organization to manage and secure mobile devices that are granted access to its data across a homogenous environment. This is typically accomplished by installing software on a mobile device that allows the IT department to enforce security configurations and policies, regardless of whether the device is owned by the organization or is a private device owned by the user.

MDM allows for “bring your own device” (BYOD) for users and the granting of access to corporate data, such as internal networks, applications, and especially e-mail. Through the use of installed software and policies, the organization can control how the data is accessed and ensure that specific security requirements and configurations are in place. It also allows the organization at any time to remove its data from the device or block access to it, particularly important at times when a user is terminated from their relationship with the organization, or if the device is lost or stolen.

MDM can significantly lower costs to an organization by allowing users to use their own devices, on their own plans, and through whatever provider works best for them. Organizations no longer need to have enterprise data plans or purchase and maintain devices for users, nor do they need to force their users onto specific platforms or ecosystems. This is of particular importance with cloud environments and the nature of allowing broad network access to resources, especially when collaborating with users who may not work specifically for an organization but need to be granted access to its resources and data.

Internet of Things

The concept of the Internet of Things (IoT) refers to the extension of Internet connectivity to devices beyond traditional computing platforms. This can include home appliances, thermostats, sensors, lighting fixtures, and more, and is very common within the scope of “smart home” technologies. While the most rapid expansion of IoT has been in regard to smart home uses, the potential exists to provide virtually any type of device or system with Internet connectivity and remote automation.

While IoT provides enormous capabilities for automation and ease of use, it also poses enormous security and privacy concerns. Each device, once enabled for Internet access, is open to compromise and hacking, just the same as a traditional computer would be. Most of the devices do not have the kind of security software and support infrastructure in place for regular patching and security fixes. This opens them to any compromise that is discovered after they are initially programmed. Any devices, including microphones and cameras, can then be hacked and used within their capabilities or as a platform to attack other devices within what might otherwise be a protected environment.

Cloud providers are beginning to offer IoT services within their environments, and many companies that integrate these services into their products highly leverage major cloud platforms to collect and process data from them as well as host the configuration or management of interfaces for consumers.

Containers

A container allows for the rapid deploying of applications throughout cloud environments, especially where they are heterogeneous in nature. A container is a wrapper that contains all of the code, configurations, and libraries needed for an application to operate, packaged inside a single unit. This can then be rapidly deployed throughout host environments without the need for specific server configuration or larger deployments, as only the particular components that are needed for the application to function are deployed, and they are not dependent on underlying operating systems or hardware. With the components all configured within a single wrapper, there is also no longer the need to confirm and validate that potentially large numbers of files have all been successfully deployed, synchronized, and loaded across all systems serving an application, thus increasing integrity and decreasing time and costs.

Many vendors and application providers now offer images where the user needs to only configure to their specific requirements and then deploy into use. This cuts down on deployment and configuration time, as well as provides for easier upgrades and patching. In many instances, only a single configuration or initialization file may need to be copied into an image and deployed into use. Due to the abstraction from underlying systems, containers can be easily deployed between physical servers, virtual servers, cloud environments, or a combination of different systems, while still presenting a uniform experience to users.

Quantum Computing

Quantum computing involves the use of quantum phenomena, such as the interactions between atoms or wave movements, to aid in computation. The most prominent potential impact of quantum computing on cloud computing is how it affects cryptography. Many public-key systems today are based on the difficulty of factoring very large numbers into their prime factors, where the numbers used are so large that it would take an enormous amount of time for conventional computing platforms to break them. However, with the introduction of quantum computing, the potential exists to break these systems efficiently and render them moot, though the practical application does not apply to all cryptographic models. It is a rapidly emerging field that a Cloud Security Professional should stay abreast of because of the heavy reliance cloud computing places on cryptography.
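
To make the dependency on factorization concrete, the following minimal Python sketch recovers a private key from a deliberately tiny RSA-style public key by factoring its modulus with classical trial division. The function names and the toy key values are illustrative assumptions, not part of any real system. Real moduli are thousands of bits long, which puts this approach far out of reach for conventional computers; the concern with quantum computing is that a sufficiently capable machine could perform the factoring step efficiently.

```python
def factor_semiprime(n: int) -> tuple[int, int]:
    """Find p, q with p * q == n by trial division (feasible only for tiny n)."""
    if n % 2 == 0:
        return 2, n // 2
    candidate = 3
    while candidate * candidate <= n:
        if n % candidate == 0:
            return candidate, n // candidate
        candidate += 2
    raise ValueError("n is prime or too small to factor")


def recover_private_exponent(n: int, e: int) -> int:
    """Derive the private exponent d once the modulus n has been factored."""
    p, q = factor_semiprime(n)
    phi = (p - 1) * (q - 1)
    return pow(e, -1, phi)  # modular inverse (Python 3.8+)


# Toy public key (illustrative values only): n = 53 * 61, e = 17.
n, e = 3233, 17
d = recover_private_exponent(n, e)

message = 65
ciphertext = pow(message, e, n)    # encrypt with the public key
recovered = pow(ciphertext, d, n)  # decrypt with the recovered private key
```

Once the two prime factors are known, nothing else stands between an attacker and the private key, which is why the time needed to factor the modulus is the entire security margin of such a system.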

Security Concepts Relevant to Cloud Computing

Most security concepts for any system or data center are the same for cloud computing:

• Cryptography

• Access control

• Data and media sanitization

• Network security

• Virtualization security

• Common threats

However, due to the unique nature of the cloud, specific considerations are needed for each concept.

Cryptography

In any environment, data encryption is incredibly important to prevent unauthorized exposure of data, either internally or externally. If a system is compromised by an attack, having the data encrypted on the system helps prevent its unauthorized exposure or export, even with the system itself being exposed. This is especially important where there are strict regulations for data security and privacy, such as with healthcare, education, tax payment, and financial information.

Encryption

There are many different types and levels of encryption. Within a cloud environment, it is the duty of the Cloud Security Professional to evaluate the needs of the application, the technologies it employs, the types of data it contains, and the regulatory or contractual requirements for its protection and use. Encryption is important for many aspects of a cloud implementation. This includes the storage of data on a system, both when it is being accessed and while it is at rest, as well as the actual transmission of data and transactions between systems or between the system and a consumer. The Cloud Security Professional must ensure that appropriate encryption is selected that will be strong enough to meet regulatory and system requirements, but also efficient and accessible enough for operations to seamlessly work within the application.

Data in Transit

Data in transit is the state of data when it is actually being used by an application and is traversing systems internally or going between the client and the actual application. Whether the data is being transmitted between systems within the cloud or going out to a user’s client, data in transit is when data is most vulnerable to exposure or unauthorized capture. Within a cloud hosting model, protecting the transmission between systems is even more important than with a traditional data center due to multitenancy; the other systems within the same cloud are potential security risks and vulnerable points where data capture could happen successfully.

In order to maintain portability and interoperability, the Cloud Security Professional should make the processes for the encryption of data in transit vendor-neutral in regard to the capabilities or limitations of a specific cloud provider. The Cloud Security Professional should be involved in the planning and design of the system or application from the earliest stages to ensure that everything is built properly from the ground up, and not retrofitted after design or implementation has been completed. Whereas the use of encryption within the operations of the system is crucial during the design phase, the proper management of keys and protocols, along with testing and auditing, is crucial once a system has been implemented and deployed.

The most common method for data-in-transit encryption is to use the well-known TLS technology (the successor to the now-deprecated SSL) under HTTPS. With many modern applications utilizing web services as the framework for communications, this has become the prevailing method, which is the same method used by clients and browsers to communicate with servers over the Internet. This method is now being used within cloud environments for server-to-server internal communication as well. Beyond using HTTPS, other common encryption methods for data in transit are VPNs (virtual private networks) and IPsec. These methods can be used by themselves but are most commonly used in combination to provide the highest level of protection possible.
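
As an illustration of enforcing encryption for data in transit from the client side, the following minimal sketch uses Python's standard ssl module to build a context that verifies server certificates and refuses older protocol versions. The function name is hypothetical, and the choice of TLS 1.2 as the minimum version is an assumption made for the example rather than a requirement stated here; the appropriate minimum depends on the regulatory and system requirements in play.

```python
import ssl


def build_client_context() -> ssl.SSLContext:
    """Return a client-side TLS context with certificate and hostname checks enabled."""
    # create_default_context() turns on certificate and hostname verification.
    context = ssl.create_default_context()
    # Refuse legacy SSL and early TLS versions; TLS 1.2 is an assumed minimum.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = build_client_context()
```

A connection would then be protected by wrapping a socket with context.wrap_socket(sock, server_hostname=host). Because this relies only on standard protocol support rather than a provider-specific feature, the same approach carries over from one cloud provider to another.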

Data at Rest

Data at rest refers to information stored on a system or device (versus data that is actively being transmitted across a network or between systems). The data can be stored in many different forms to fit within this category. Some examples include databases, file sets, spreadsheets, documents, tapes, archives, and even mobile devices.

Data residing on a system is potentially exposed and vulnerable far longer than short transmission and transaction operations would be, so special care is needed to ensure its protection from unauthorized access. With transaction systems and data in transit, usually a small subset of records or even a single record is transmitted at any time, versus the comprehensive record sets maintained in databases and other file systems.

While encrypting data is central to the confidentiality of any system, the availability and performance of data are equally important. The Cloud Security Professional must ensure that encryption methods provide high levels of security and protection and do so in a manner that facilitates high performance and system speed. Any use of encryption will cause higher load and processing times, so proper scaling and evaluation of systems are critical when testing deployments and design criteria.

With portability and vendor lock-in considerations, it is important for a Cloud Security Professional to ensure that encryption systems do not effectively cause a system to be bound to a proprietary cloud offering. If a system or application ends up using a proprietary encryption system from a cloud provider, portability will likely be far more difficult and thus tie that customer to that particular cloud provider. With many cloud implementations spanning multiple cloud providers and infrastructures for disaster recovery and continuity planning, having encryption systems that can maintain consistency and performance is important.

Key Management

With any encryption system, a method is needed to properly issue, maintain, and organize keys. If a customer has their own key management systems and procedures, they can better ensure their own data security, as well as prevent being “locked in” with a cloud provider and the systems they provide, which may be proprietary in nature. Beyond the vendor lock-in that can occur with using a key management system from the cloud provider, the customer's keys are also being managed within a system that contains similar keys for other customers' systems. A customer that maintains control of their own key management systems ensures a higher degree of portability and segregation of systems for security reasons.

Two main key management services (KMSs) are commonly used within cloud computing systems: remote and client-side.

Remote Key Management Service: A remote key management service is maintained and controlled by the customer at their own location. This offers the highest degree of security for the customer because the keys will be contained under their sole control and outside of the boundaries of the cloud provider. This implementation allows the customer to fully configure and implement their own keys and fully control who can access and generate key pairs. The main drawback to a remote KMS is that connectivity will have to be open and always maintained in order for the systems and applications hosted by the cloud provider to function properly. This introduces the potential for network latency or periods of downtime, either accidental or by design, which can negate the high-availability features of a cloud provider because the hosted systems become dependent on the availability of the KMS.
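
The availability drawback can be estimated with simple arithmetic. The sketch below assumes that the hosted application, the remote KMS, and the network link between them fail independently and that all three must be up for the application to function, so the combined availability is the product of the individual figures. The function names and the availability percentages are hypothetical values chosen only for illustration.

```python
HOURS_PER_YEAR = 24 * 365


def combined_availability(*availabilities: float) -> float:
    """Availability of a chain where every component must be up (independent failures assumed)."""
    result = 1.0
    for value in availabilities:
        if not 0.0 <= value <= 1.0:
            raise ValueError("availability must be between 0 and 1")
        result *= value
    return result


def expected_downtime_hours(availability: float) -> float:
    """Expected hours of downtime per year for a given availability."""
    return (1.0 - availability) * HOURS_PER_YEAR


# Hypothetical figures for illustration only.
cloud_app = 0.9999     # application hosted by the cloud provider
remote_kms = 0.995     # customer-hosted key management service
network_link = 0.999   # connectivity between the cloud and the KMS

total = combined_availability(cloud_app, remote_kms, network_link)
downtime_cloud_only = expected_downtime_hours(cloud_app)
downtime_with_kms = expected_downtime_hours(total)
```

With these assumed figures, the expected downtime rises from under one hour per year for the cloud application alone to roughly 53 hours per year once the remote KMS and its network link are in the critical path.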

Client-Side Key Management Service: Most common with SaaS implementations, client-side KMS is provided by the cloud provider but is hosted and controlled by the customer. This allows for seamless integration with the cloud environment, but also allows complete control to still reside with the customer. The customer is fully responsible for all key generation and maintenance activities.

Access Control

Access control combines the two main concepts of authentication and authorization but adds a crucial third concept of accounting as well. With authentication, a person or system verifies who they are, and with authorization they acquire the appropriate minimum system access rights that they should have based on their role to use the system and consume data. Accounting involves maintaining the logs and records of authentication and authorization activities and is absolutely crucial for both operational and regulatory needs.
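
The relationship among the three concepts can be sketched in a few lines of Python. The following is a minimal illustration only, assuming an in-memory user store, a hypothetical role-to-permission table, and salted PBKDF2 password hashes; the class and function names are invented for the example, and a production system would rely on a directory service and a hardened logging pipeline instead.

```python
import hashlib
import hmac
import os
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping (least privilege per role).
ROLE_PERMISSIONS = {"analyst": {"read"}, "admin": {"read", "write"}}


def hash_password(password: str, salt: bytes) -> bytes:
    """Derive a salted password hash with PBKDF2-HMAC-SHA256."""
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)


class AccessController:
    def __init__(self) -> None:
        self.users = {}      # user_id -> (salt, password_hash, role)
        self.audit_log = []  # accounting: one record per decision

    def add_user(self, user_id: str, password: str, role: str) -> None:
        salt = os.urandom(16)
        self.users[user_id] = (salt, hash_password(password, salt), role)

    def _record(self, event: str, user_id: str, success: bool) -> None:
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "event": event,
            "user": user_id,
            "success": success,
        })

    def authenticate(self, user_id: str, password: str) -> bool:
        """Authentication: verify the caller is who they claim to be."""
        record = self.users.get(user_id)
        ok = record is not None and hmac.compare_digest(
            record[1], hash_password(password, record[0])
        )
        self._record("authenticate", user_id, ok)
        return ok

    def authorize(self, user_id: str, action: str) -> bool:
        """Authorization: check the action against the user's role."""
        record = self.users.get(user_id)
        ok = record is not None and action in ROLE_PERMISSIONS.get(record[2], set())
        self._record("authorize:" + action, user_id, ok)
        return ok


controller = AccessController()
controller.add_user("alice", "correct horse", "analyst")
```

Every call to authenticate or authorize appends a record to audit_log whether it succeeds or fails, which is the accounting function: the failures are often the records an auditor or incident responder needs most.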

Access control systems can have a variety of different types of authentication mechanisms that provide increasing levels of security based on the type of data sensitivity. On the low end, this can involve the use of a user ID and password, which everyone is familiar with in regard to typical system access. For higher levels of security, systems can and should use multiple factors of authentication in combination. This will typically be a combination of the classic user ID and password with an additional requirement such as a physical possession. Types of secondary factors typically include biometric tests (fingerprints and retina scans), a physical token device that is plugged into a computer and read by the system, and the use of a mobile device or callback feature, where the user is provided with a code to input in addition to their password for access. There are many other types of potential secondary authentication methods, but the ones just mentioned are the most common. Physical secondary types of authentication can also be layered in combination; for example, the user could have to provide a retina scan as well as a physical token device.
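
One widely used way to deliver a code through a mobile device is the time-based one-time password (TOTP) defined in RFC 6238, which builds on the HMAC-based one-time password (HOTP) of RFC 4226. The text above does not name a specific algorithm, so TOTP is offered here only as one common example. The following minimal sketch uses just the Python standard library; the shared secret shown is the published RFC test value, not something to reuse in practice.

```python
import hashlib
import hmac
import struct
import time


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation offset
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at_time=None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over a time-step counter."""
    now = time.time() if at_time is None else at_time
    return hotp(secret, int(now // step), digits)


def verify_totp(secret: bytes, submitted: str, at_time=None) -> bool:
    """Compare the submitted code with the expected one in constant time."""
    return hmac.compare_digest(totp(secret, at_time), submitted)


# Shared secret from the RFC test vectors (for illustration only).
secret = b"12345678901234567890"
current_code = totp(secret)
```

The server and the user's device hold the same secret and compute the same code independently, so the code proves possession of the device without the secret itself ever crossing the network at login time.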

The four main areas of concern in access management are described in the following sections.

Account Provisioning

Before any system access can be granted and roles determined, accounts must be created on the system that will form the basis of access. At this stage, the most crucial aspect for an organization is the validation of users and verification of their credentials to be allowed to acquire accounts for the system. It is incumbent on an organization and their security policies and practices to determine the appropriate level of proof required to verify a new user and issue credentials. This can be based solely on the policies of the organization, or it can include extensive additional processes based on contractual, legal, or regulatory requirements. A prime example would be government contracts, where specific documentation for verification must be submitted to approving authorities outside of the organization, or where a security clearance must be obtained through separate vetting processes as an additional requirement before account access can be provisioned. The key for any organization is establishing a process that is efficient and consistent across the user base so that the account provisioning process can be audited and trusted. While most of the discussion so far has been based on granting and verifying access, it is equally important for an organization to have a well-defined and efficient procedure for removing accounts from the systems at the appropriate time, whether for security incident response purposes, job role changes, or the termination or resignation of employees.

Directory Services

The backbone of any access management system is the directory server that contains all of the information that applications need to make proper authentication and authorization decisions. Although various solutions for directory services are offered by many different vendors, the core of virtually all of them is the Lightweight Directory Access Protocol (LDAP). LDAP is a highly optimized system of representing data as objects with associated attributes, which can be single- or multi-valued in nature. The data is stored in a hierarchical representation where a DN (distinguished name) acts as the primary key for an object. When users log in to a system, LDAP can provide authentication. Then, depending on the application and its needs, it can provide a variety of information about that user from the attributes associated with their object. This information can be anything, such as the department of an organization they are part of, job titles or codes, flags to determine managers and other special designations, specific system information and roles the user is allowed to access, and essentially any other type of information an organization has determined to be part of their user object. LDAP systems are highly optimized to handle very large numbers of queries of a read-only nature and are able to scale out quickly and efficiently with a model of data replication to servers that can be placed behind load balancers or geographically distributed, depending on the needs and designs of the system implementation.
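
The object-and-attribute model described above can be pictured with a small in-memory stand-in. This minimal Python sketch does not talk to a real directory server: the entries, the example.com naming, and the appRole attribute are all hypothetical, and the DN handling assumes no escaped commas. It is meant only to show how a DN locates an object in the hierarchy and how single- and multi-valued attributes hang off that object.

```python
# Hypothetical directory contents keyed by DN; every attribute maps to a list
# of values so single- and multi-valued attributes are handled the same way.
DIRECTORY = {
    "dc=example,dc=com": {"objectClass": ["domain"]},
    "ou=people,dc=example,dc=com": {"objectClass": ["organizationalUnit"]},
    "uid=jdoe,ou=people,dc=example,dc=com": {
        "objectClass": ["inetOrgPerson"],
        "cn": ["Jane Doe"],
        "departmentNumber": ["finance"],
        "title": ["Senior Analyst"],
        "appRole": ["reports-viewer", "payroll-approver"],  # invented attribute
    },
}


def parent_dn(dn: str) -> str:
    """Return the DN one level up the tree (assumes no escaped commas)."""
    _, _, parent = dn.partition(",")
    return parent


def get_attribute(dn: str, name: str) -> list:
    """Return all values of an attribute, or an empty list if absent."""
    return DIRECTORY.get(dn, {}).get(name, [])


def search_subtree(base_dn: str, attribute: str, value: str) -> list:
    """Return DNs at or below base_dn whose attribute contains value."""
    return [
        dn
        for dn, attrs in DIRECTORY.items()
        if (dn == base_dn or dn.endswith("," + base_dn))
        and value in attrs.get(attribute, [])
    ]


user_dn = "uid=jdoe,ou=people,dc=example,dc=com"
roles = get_attribute(user_dn, "appRole")
```

An application would typically authenticate the user against the directory and then read attributes such as the department or role values to make its authorization decisions. Both operations are reads, which is the workload directory servers are optimized and replicated for.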

Administrative and Privileged Access