Chapter 3

Security Architecture and Engineering

This chapter covers the following topics:

Engineering Processes Using Secure Design Principles: Concepts discussed include the ISO/IEC 15288:2015 and NIST SP 800-160 systems engineering standards, objects and subjects, closed versus open systems, threat modeling, least privilege, defense in depth, secure defaults, fail securely, separation of duties (SoD), keep it simple, Zero Trust, Privacy by design, Trust but verify, and shared responsibility.

Security Model Concepts: Concepts discussed include confidentiality, integrity, and availability; security modes; security model types; security models; system architecture steps; ISO/IEC 42010:2011; computing platforms; security services; and system components.

System Security Evaluation Models: Concepts discussed include TCSEC, ITSEC, Common Criteria, security implementation standards, and controls and countermeasures.

Certification and Accreditation: Concepts discussed include certification, accreditation, and the phases of accreditation.

Control Selection Based on Systems Security Requirements: Concepts discussed include selecting controls for systems based on security requirements.

Security Capabilities of Information Systems: Concepts discussed include memory protection, virtualization, Trusted Platform Module, interfaces, fault tolerance, policy mechanisms, and encryption/decryption.

Security Architecture Maintenance: Concepts discussed include maintaining security architecture.

Vulnerabilities of Security Architectures, Designs, and Solution Elements: Concepts discussed include client-based systems, server-based systems, database systems, cryptographic systems, industrial control systems, cloud-based systems, large-scale parallel data systems, distributed systems, Internet of Things, microservices, containerization, serverless, high-performance computing (HPC) systems, edge computing systems, and virtualized systems.

Vulnerabilities in Web-Based Systems: Concepts discussed include maintenance hooks, time-of-check/time-of-use attacks, web-based attacks, XML, SAML, and OWASP.

Vulnerabilities in Mobile Systems: Concepts discussed include the vulnerabilities encountered when using mobile systems, such as device security, application security, and mobile device concerns.

Vulnerabilities in Embedded Systems: Concepts discussed include the issues that are currently being seen with the advent of machine-to-machine communication and the Internet of Things.

Cryptographic Solutions: Topics discussed include cryptographic concepts, cryptography history, cryptosystem features, cryptographic mathematics, and cryptographic life cycle.

Cryptographic Types: Concepts discussed include running key and concealment ciphers, substitution ciphers, transposition ciphers, symmetric algorithms, asymmetric algorithms, hybrid ciphers, elliptic curves, and quantum cryptography.

Symmetric Algorithms: Algorithms discussed include Data Encryption Standard and Triple Data Encryption Standard, Advanced Encryption Standard, IDEA, Skipjack, Blowfish, Twofish, RC4/RC5/RC6/RC7, and CAST.

Asymmetric Algorithms: Algorithms discussed include Diffie-Hellman, RSA, El Gamal, ECC, Knapsack, and zero-knowledge proof.

Public Key Infrastructure and Digital Certificates: Concepts discussed include CAs and RAs, certificates, certificate life cycle, CRLs, OCSP, PKI steps, and cross-certification.

Key Management Practices: Concepts discussed include the key management practices that organizations should understand, including symmetric key management and asymmetric key management.

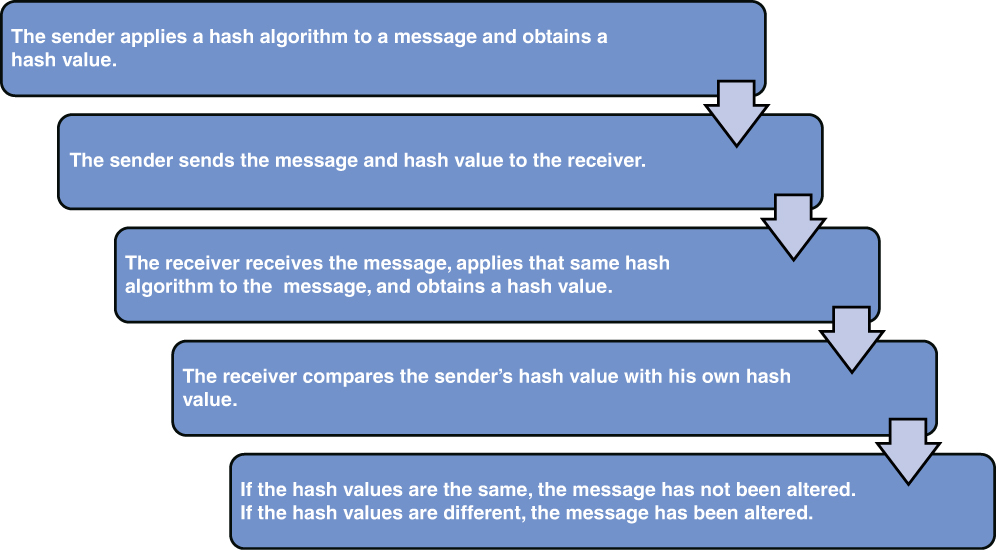

Message Integrity: Concepts discussed include hashing, one-way hash, message authentication code, and salting.

Digital Signatures and Non-repudiation: This section covers the use of digital signatures, including DSS and non-repudiation.

Applied Cryptography: This section covers link encryption, end-to-end encryption, email security, and Internet security.

Cryptanalytic Attacks: Attacks discussed include ciphertext-only attack, known plaintext attack, chosen plaintext attack, chosen ciphertext attack, social engineering, brute force, differential cryptanalysis, linear cryptanalysis, algebraic attack, frequency analysis, birthday attack, dictionary attack, replay attack, analytic attack, statistical attack, factoring attack, reverse engineering, meet-in-the-middle attack, ransomware attack, side-channel attack, implementation attacks, fault injection, timing attack, pass-the-hash attack, and Kerberos exploitation.

Digital Rights Management: This section explains digital rights management, including document, music, movie, video game, and e-book DRM.

Site and Facility Design: Concepts discussed include a layered defense model, CPTED, physical security plan, and facility selection issues.

Site and Facility Security Controls: Controls discussed include doors, locks, biometrics, glass entries, visitor control, wiring closets/intermediate distribution facilities, restricted and work areas (secure data center, restricted work area, server room, media storage facilities, and evidence storage), environmental security and issues, and equipment security.

The Security Architecture and Engineering domain addresses a broad array of topics including security engineering processes, security models, security controls, assessing and mitigating vulnerabilities, cryptography, and site and facility security controls. Out of 100 percent of the exam, this domain carries an average weight of 13 percent, which ties with two other domains for the third highest weight.

Security architecture and engineering are mainly concerned with the design, implementation, monitoring, and securing of information security assets. These assets include computers, equipment, networks, and applications. Within this area, a security professional must understand security models, system vulnerabilities, cryptography, and physical security. But simply understanding security architecture and engineering is not enough. A security professional must also know how to implement security architecture and engineering to ensure that assets are protected. Organizations must understand what they need to secure, why they need to secure it, and how it will be secured.

Foundation Topics

Engineering Processes Using Secure Design Principles

Systems engineering is an approach for the design, realization, technical management, operations, and retirement of a system. In general, a system is a collection of elements that together produce results not obtainable by the individual elements alone. In IT specifically, a system may involve single or multiple computers or devices working together to achieve a particular result. For example, an online ordering system may involve a web server, an e-commerce server, and a database server. However, these systems alone cannot provide adequate security for online transactions. An organization may need to include routers, firewalls, and other security mechanisms to ensure that security is integrated into the total design solution.

Organizations must implement and manage systems engineering processes using secure design principles. Systems engineering is usually modeled based on a life cycle. Chapter 1, “Security and Risk Management,” discusses groups that establish standards, including International Organization for Standardization (ISO)/International Electrotechnical Commission (IEC) and the National Institute of Standards and Technology (NIST). These groups both have established standards for systems engineering: ISO/IEC 15288:2015 and NIST Special Publication (SP) 800-160, which supersedes NIST SP 800-27.

ISO/IEC 15288:2015 establishes four categories of processes:

Agreement processes: This category includes acquisition and supply.

Organizational project-enabling processes: This category includes life cycle model management, infrastructure management, portfolio management, human resource management, quality management, and knowledge management.

Technical management processes: This category includes project planning, project assessment and control, decision management, risk management, configuration management, information management, measurement, and quality assurance.

Technical processes: This category includes business or mission analysis, stakeholder needs and requirements definition, system requirements definition, architecture definition, design definition, system analysis, implementation, integration, verification, transition, validation, operation, maintenance, and disposal.

The systems life cycle stages of this standard include concept, development, production, utilization, support, and retirement. While this standard defines system life cycle processes, it does not by itself address security during systems engineering.

NIST SP 800-160 is based on ISO/IEC 15288:2015 and is discussed in Chapter 1.

To understand engineering using secure design principles, organizations must understand the difference between objects and subjects and closed versus open systems.

Objects and Subjects

Objects are resources that a user or process wants to access, and subjects are the users or processes requesting access. If the resource is requesting access, it is a subject. If a resource is being accessed, it is an object. Many resources can be both objects and subjects.

Let’s look at an example. Suppose Jim, a user, wants to access an application. In this case, Jim is a subject, and the application is an object. If the application Jim uses needs to access a database, then the application is the subject, and the database is the object.

Closed Versus Open Systems

A closed system is a proprietary system that is designed to work with a limited range of other systems. Open systems conform to industry standards and can work with systems that support the same standard. When an organization is integrating these systems, closed systems are harder to integrate, whereas open systems are much easier to integrate.

Threat Modeling

Threat modeling is the process of identifying potential threats and determining possible mitigations and countermeasures, if any, for the threats. The process can provide an organization with an attacker’s profile, likely attack vectors, targeted assets, and an analysis of the controls or defenses that should be implemented for the identified threats.

Least Privilege

The principle of least privilege is important in the design of systems. Least privilege means that users are granted access only to the information and resources that are minimally necessary for them to do their jobs. If the principle of least privilege is strictly enforced across an organization and the appropriate procedures are in place, privilege creep, wherein user privileges accumulate over time, will be prevented so that systems are as secure as possible.

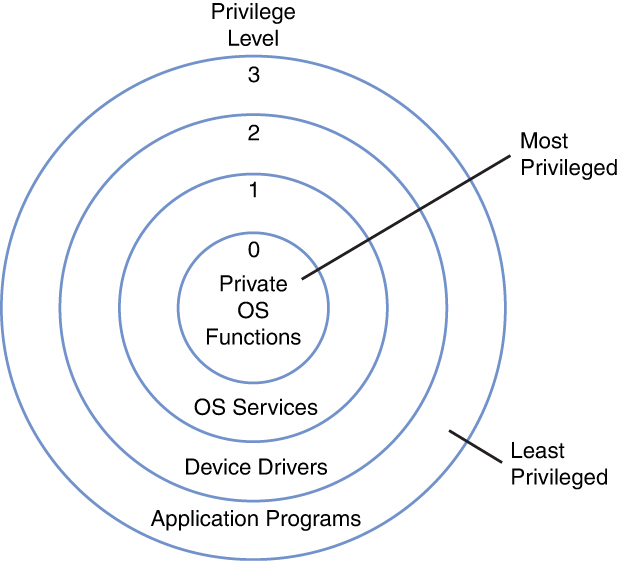

When designing operating system processes, security professionals should ensure that system processes run in user mode when possible. When a process executes in privileged mode, the potential for vulnerabilities greatly increases. If a process needs access to privileged services, it is best to use an application programming interface (API) to ask for supervisory-mode services.

Related to the principle of least privilege, the principle of least functionality is that systems and devices should be configured to provide only essential or minimally required capabilities and specifically prohibit or restrict the use of functions, ports, protocols, and services.

Defense in Depth

Communications security management and techniques are designed to prevent, detect, and correct errors so that the CIA of transactions over networks might be maintained. Most computer attacks result in a violation of one of the security properties: confidentiality, integrity, or availability. A defense-in-depth approach refers to deploying layers of protection. For example, even when you are deploying firewalls, access control lists (ACLs) should still be applied to resources to help prevent access to sensitive data in case the firewall is breached.
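The layering idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Request` type, the `firewall_allows` and `acl_allows` functions, the address prefix, and the resource name are all hypothetical and do not describe any particular product. The point is that the ACL check is evaluated independently, so it still protects the resource if the outer layer fails.

```python
from dataclasses import dataclass

# Hypothetical policy data, for illustration only.
ALLOWED_NETWORKS = ("10.0.",)             # address prefixes the "firewall" admits
RESOURCE_ACL = {"payroll.db": {"alice"}}  # users allowed per resource


@dataclass(frozen=True)
class Request:
    source_ip: str
    user: str
    resource: str


def firewall_allows(req: Request) -> bool:
    """Outer layer: admit traffic only from approved network prefixes."""
    return req.source_ip.startswith(ALLOWED_NETWORKS)


def acl_allows(req: Request) -> bool:
    """Inner layer: admit only users listed on the resource's ACL."""
    return req.user in RESOURCE_ACL.get(req.resource, set())


def access_granted(req: Request, firewall_breached: bool = False) -> bool:
    """Grant access only if every layer that is still working agrees."""
    outer = True if firewall_breached else firewall_allows(req)
    return outer and acl_allows(req)
```

With this sketch, a request from an unlisted user is still refused when `firewall_breached=True`, because the ACL layer does not depend on the firewall.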

Secure Defaults

Secure defaults, also referred to as secure by default, is a term used to describe a condition wherein an application’s or device’s default settings are set to the most secure settings possible. If the application or device is reset, these secure defaults should be maintained. Security professionals should keep in mind that the most secure settings are often not the most user-friendly settings.

If a technology follows secure defaults principles, the technology will have embedded, built-in cybersecurity principles. Depending on the technology, device, or application, best practices in secure defaults should include password prompts, history, length and strength, the closing of unused ports or services, encryption, and remote access disablement.

Fail Securely

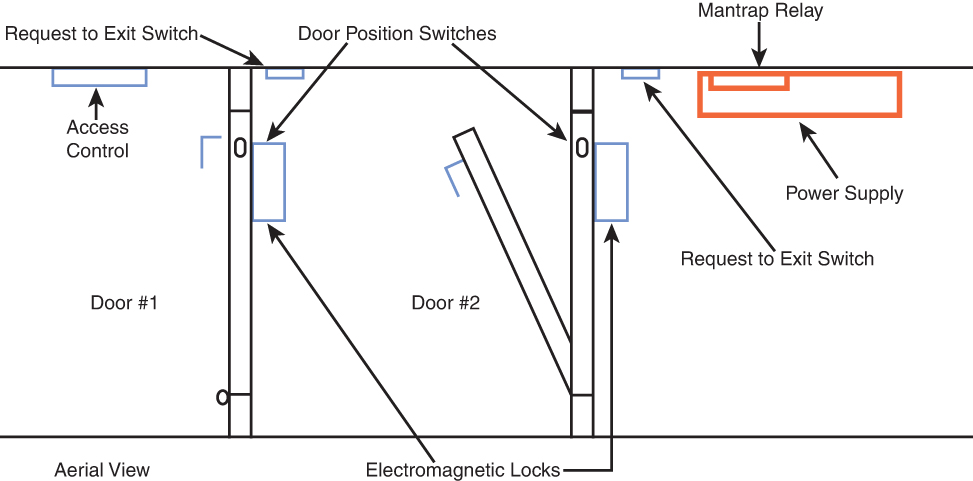

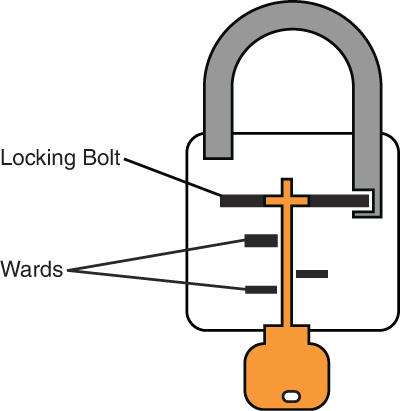

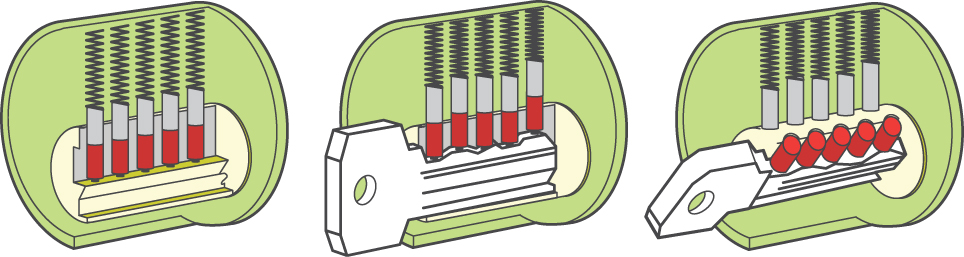

To understand the concept of fail securely, security professionals must understand two related terms: fail safe and fail secure. Most often these terms are used in conjunction with physical controls, particularly door locks.

A product that is fail safe is unlocked when power is removed. Personnel therefore can enter or leave the area. A product that is fail secure is locked when power is removed. In this case, personnel can leave the area, but they must use a key to enter the area. These terms refer to the status of the secure side of the door. Most products provide free egress or exit whether they are fail safe or fail secure.

Fail-safe products should never be used for areas of high security. Simply cutting the power would give a threat actor access to the security area. Security professionals should ensure that public or general areas use fail-safe products, whereas secure areas, such as data centers, use fail-secure products.
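The two behaviors can be modeled as a small Python sketch. The `FailMode` enumeration and both function names are hypothetical, and the sketch assumes the lock is engaged during normal powered operation and that egress stays free in either mode.

```python
from enum import Enum


class FailMode(Enum):
    FAIL_SAFE = "fail safe"      # unlocks when power is removed
    FAIL_SECURE = "fail secure"  # locks when power is removed


def entry_locked(mode: FailMode, powered: bool) -> bool:
    """State of the secure (entry) side of the door; egress is assumed to stay free."""
    if powered:
        return True  # assumption: the lock is engaged during normal operation
    return mode is FailMode.FAIL_SECURE


def recommended_mode(high_security_area: bool) -> FailMode:
    """Secure areas such as data centers use fail secure; public areas use fail safe."""
    return FailMode.FAIL_SECURE if high_security_area else FailMode.FAIL_SAFE
```

Calling `entry_locked(FailMode.FAIL_SAFE, powered=False)` returns `False`, which is exactly why cutting the power defeats a fail-safe lock on a secure area.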

Separation of Duties (SoD)

Separation of duties (SoD) is an internal personnel control that distributes the tasks and associated privileges for a security process among multiple personnel. It is most often associated with an organization’s financial accounting policies whereby controls are put into place for issuing checks. The person inputting the payment information is usually a different person from the one who signs or otherwise authorizes the checks after printing. In addition, organizations often implement policies whereby checks above a certain amount require two signatures.

SoD, as it relates to security, has two primary objectives: (1) to prevent conflict of interest, fraud, abuse, or errors and (2) to detect control failures, including breaches, data theft, and circumvention of security controls.

SoD restricts the power or influence held by any one person. It also ensures that personnel do not have conflicting responsibilities and are not responsible for reporting on themselves or their superiors. For example, in an IT department, the person who creates the user accounts can only do so with the proper request made by a department head or asset owner, and the person who assigns the appropriate permissions can only do so with the proper request made by the data or asset owner.

Keep It Simple

Keep it simple, also referred to as keep it simple, stupid (KISS), is a design principle that states a design and/or system should be as simple as possible and avoid unneeded complexity. Simplicity tends to promote the greatest levels of user acceptance and interaction.

Security professionals should ensure that organizations focus on implementing simple controls to provide security for confidentiality, integrity, and availability. To offset the need for controls to be simple, organizations should deploy a layered security model.

Zero Trust

Zero trust is a security model based on the principle of maintaining strict access controls and not trusting anyone or anything by default. Zero trust requires strict identity verification for every person and device trying to access resources on a private network, regardless of whether they are sitting within or outside of the network perimeter.

In a zero trust environment, each access request is fully identified, authenticated, authorized, and encrypted before access is granted. A zero trust environment is built upon five fundamental elements:

The person or device is always assumed to be hostile.

External and internal threats exist at all times.

Location is not sufficient for deciding trust in a person or device.

Every device, user, and communication is identified, authenticated, and authorized.

Policies must be dynamic and calculated from as many sources of data as possible.

To fully implement zero trust, organizations must deploy multifactor authentication (MFA), the principle of least privilege, and endpoint validation.
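As a rough sketch of how these elements combine into a single access decision, the following Python example evaluates every request against the same checks. The `AccessRequest` fields, the `GRANTS` set, and the `authorize` function are hypothetical names chosen for illustration; a real deployment would draw these signals from identity, device management, and policy services.

```python
from dataclasses import dataclass

# Hypothetical least-privilege grants: (user, resource) pairs that are allowed.
GRANTS = {("alice", "hr-portal")}


@dataclass(frozen=True)
class AccessRequest:
    user: str
    resource: str
    mfa_passed: bool        # multifactor authentication succeeded
    device_validated: bool  # endpoint passed its validation check
    encrypted: bool         # the channel is encrypted
    inside_perimeter: bool  # recorded, but deliberately never used as a trust signal


def authorize(req: AccessRequest) -> bool:
    """Evaluate every request the same way, wherever it originates."""
    return (
        req.mfa_passed
        and req.device_validated
        and req.encrypted
        and (req.user, req.resource) in GRANTS
    )
```

Note that `inside_perimeter` never appears in `authorize`: a request from inside the network that lacks MFA is refused, while a fully verified request from outside is allowed.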

Privacy by Design

Privacy by design is a term that implies data protection through technology design. Behind this is the thought that data protection in data processing procedures is best adhered to when it is already integrated in the technology at creation. It is a concept in the General Data Protection Regulation (GDPR).

In new systems, security professionals should implement privacy by design by emphasizing privacy and security throughout the design process. Privacy should be integrated into a system from day one. Security professionals should implement privacy by design in an existing system by deconstructing and analyzing the system. This is more difficult and time-consuming. A privacy audit should be performed on the existing system to examine how privacy has been embedded into the system, identify weak points, and create new user-friendly solutions.

Trust but Verify

Trust but verify is a principle under which communicating entities trust each other but confirm, by verifying the established relationship, that the trust is warranted. When implementing trust but verify, security professionals should ensure their organizations

Use multiple asset inventory tools for verification and validation.

Use vulnerability management scanning tools to verify patching and hardening settings.

Use account reviews to verify that the principle of least privilege is enforced.

Use penetration tests and security posture testing in production environments.

Document supporting artifacts and evidence for audits and reviews.

Using a trust but verify approach ensures that checks and balances are implemented and that the appropriate controls are deployed to meet the expectations of the organization.

Shared Responsibility

Shared responsibility is a principle that requires that each user be accountable for different aspects of security and that all work together to ensure full coverage. All personnel within an organization must be aware of their responsibilities regarding security. During annual security and awareness training, personnel should be given scenarios wherein they see when and how to report security issues they may encounter.

All organizations should implement a security issues reporting portal so that it becomes part of organizational culture. This portal should include a feature that allows personnel to anonymously report security issues or violations that they observe to the appropriate management. Personnel can choose to remain anonymous or to disclose their identity.

Security Model Concepts

Security measures must have a defined goal to ensure that they are successful. All measures are designed to provide one of a core set of protections. In the following sections, the three fundamental principles of security are discussed. Also, an approach to delivering these goals is covered. In addition, these sections cover the security modes, security model types, security models, and system architecture. Finally, they cover ISO/IEC 42010:2011, computing platforms, security services, and system components.

Confidentiality, Integrity, and Availability

The essential security principles of confidentiality, integrity, and availability are referred to as the CIA triad. Confidentiality is provided if the data cannot be read by unauthorized parties, either through access controls and encryption for data at rest on a hard drive or through encryption for data in transit. With respect to information security, confidentiality is the opposite of disclosure.

Integrity is provided if you can be assured that the data has not changed in any way. This is typically provided with a hashing algorithm or a checksum of some kind. Both methods create a number that is sent along with the data. When the data gets to the destination, this number can be used to determine whether even a single bit has changed in the data by calculating the hash value from the data that was received. This approach helps to protect data against undetected corruption.
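The check described above can be sketched with Python's standard `hashlib` module. SHA-256 is used here only as one example of a hashing algorithm, and the function names and sample message are hypothetical.

```python
import hashlib


def digest(data: bytes) -> str:
    """Return the SHA-256 digest of the data as a hex string."""
    return hashlib.sha256(data).hexdigest()


def is_intact(received: bytes, expected_digest: str) -> bool:
    """Recompute the digest at the destination and compare it with the one sent."""
    return digest(received) == expected_digest


message = b"transfer 100 to account 42"
sent_digest = digest(message)

# Flip the lowest bit of the first byte to simulate a one-bit change in transit.
corrupted = bytes([message[0] ^ 0x01]) + message[1:]

print(is_intact(message, sent_digest))    # True
print(is_intact(corrupted, sent_digest))  # False
```

A plain hash like this detects accidental corruption. It does not stop an attacker who can replace both the data and the accompanying hash value, which is where a keyed message authentication code comes in.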

Some additional integrity goals are to

Prevent unauthorized users from making modifications.

Maintain internal and external consistency.

Prevent authorized users from making improper modifications.

Availability describes what percentage of the time the resource or the data is available. This is usually measured as a percentage of “up” time, with 99.9 percent up time representing more availability than 99 percent up time. Making sure that the data is accessible when and where it is needed is a prime goal of security.
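To make the difference between the two percentages concrete, the following short calculation converts an uptime percentage into allowable downtime per year. It assumes a 365-day year, and the function name is illustrative.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years


def downtime_hours_per_year(availability_percent: float) -> float:
    """Hours per year a resource may be unavailable at the given uptime percentage."""
    return HOURS_PER_YEAR * (1 - availability_percent / 100)


print(f"{downtime_hours_per_year(99):.2f}")    # 87.60
print(f"{downtime_hours_per_year(99.9):.2f}")  # 8.76
```

Under this assumption, 99 percent up time permits roughly 87.6 hours (more than three and a half days) of downtime per year, whereas 99.9 percent permits under 9 hours.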

Confinement

Confinement is a term used to describe isolating processes or machines/subsystems in a larger system. When a process is confined, the process is only allowed to read from and write to certain memory locations and resources. Confinement is usually carried out using the operating system, through a confinement service, or using a hypervisor.

Bounds

On a system, processes run at an assigned authority level, which defines what the process can do. Two common authority levels are user and kernel. The bounds of a process set limits on the memory addresses and resources the process can access. The bounds logically segment memory areas for each process to use. Highly secure systems will physically bound the processes, meaning that the processes run in memory areas that are physically separated from each other. Logically bounded memory is cheaper than but not as secure as physically bounded memory.

Isolation

A process runs in isolation when it is confined using bounds. Process isolation ensures that any actions taken by the process will only affect the memory and resources used by the isolated process. Isolation also prevents other processes, applications, or resources from accessing the memory or resources of the isolated process.

Security Modes

A mandatory access control (MAC) system operates in different security modes at various times, based on variables such as sensitivity of data, the clearance level of the user, and the actions users are authorized to take. The following sections provide descriptions of these modes.

Dedicated Security Mode

A system is operating in dedicated security mode if it employs a single classification level. In this system, all users can access all data, but they must sign a nondisclosure agreement (NDA) and be formally approved for access on a need-to-know basis.

System High Security Mode

In a system operating in system high security mode, all users have the same security clearance (as in the dedicated security mode), but they do not all possess a need-to-know clearance for all the information in the system. Consequently, although users might have clearance to access an object, they still might be restricted if they do not have need-to-know clearance pertaining to the object.

Compartmented Security Mode

In the compartmented security mode system, all users must possess the highest security clearance (as in both dedicated and system high security), but they must also have valid need-to-know clearance, a signed NDA, and formal approval for all information to which they have access. The objective is to ensure that the minimum number of people possible have access to information at each level or compartment.

Multilevel Security Mode

When a system allows two or more classification levels of information to be processed at the same time, it is said to be operating in multilevel security mode. Users must have a signed NDA for all the information in the system and will have access to subsets based on their clearance level, need-to-know, and formal access approval. These systems involve the highest risk because information is processed at more than one level of security, even when not all system users have appropriate clearances or a need to know for all information processed by the system. This is also sometimes called controlled security mode. Table 3-1 compares the four security modes and their requirements.

Table 3-1 Security Modes Summary

| Mode | Signed NDA | Proper Clearance | Formal Approval | Valid Need to Know |
| --- | --- | --- | --- | --- |
| Dedicated | All information | All information | All information | All information |
| System high | All information | All information | All information | Some information |
| Compartmented | All information | All information | Some information | Some information |
| Multilevel | All information | Some information | Some information | Some information |

Assurance and Trust

Whereas a trust level describes the protections that can be expected from a system, assurance refers to the level of confidence that the protections will operate as planned. Typically, higher levels of assurance are achieved by dedicating more scrutiny to security in the design process. The section “System Security Evaluation Models,” later in this chapter, discusses various methods of rating systems for trust levels and assurance.

Security Model Types

A security model describes the theory of security that is designed into a system from the outset. Formal models have been developed to approach the design of the security operations of a system. In the real world, the use of formal models is often skipped because it delays the design process somewhat (although the cost might be a lesser system). This section discusses some basic model types along with some formal models derived from the various approaches available.

A security model maps the desires of the security policy makers to the rules that a computer system must follow. Different model types exhibit various approaches to achieving this goal. The specific models that are contained in the section “Security Models” incorporate various combinations of these model types.

State Machine Models

The state of a system is its posture at any specific point in time. Activities that occur in the process of the system operating alter the state of the system. After the security professional examines every possible state the system could be in and ensures that the system maintains the proper security relationship between objects and subjects in each state, the system is said to be secure. The Bell-LaPadula model discussed in the later section “Security Models” is an example of a state machine model.

Multilevel Lattice Models

The lattice-based access control model or multilevel lattice model was developed mainly to deal with confidentiality issues and focuses mainly on information flow. Each security subject is assigned a security label that defines the upper and lower bounds of the subject’s access to the system. Controls are then applied to all objects by organizing them into levels or lattices. Objects are containers of information in some format. These pairs of elements (object and subject) are assigned a least upper bound of values and a greatest lower bound of values that define what can be done by that subject with that object.

A subject’s label (remember a subject can be a person, but it can also be a process) defines what level someone can access and what actions can be performed at that level. With the lattice-based access control model, a security label is also called a security class. This model associates every resource and every user of a resource with one of an ordered set of classes. The lattice-based model aims at protecting against illegal information flow among the entities.
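The bounds mentioned above can be illustrated with a small Python sketch. It assumes one common way of representing a security class, namely an ordered level plus a set of compartments; that representation, the level names, and the function names are assumptions for illustration and are not taken from this chapter.

```python
from dataclasses import dataclass

# Hypothetical ordered levels; a higher number means more sensitive.
LEVELS = {"Unclassified": 0, "Confidential": 1, "Secret": 2, "Top Secret": 3}


@dataclass(frozen=True)
class SecurityClass:
    level: int
    compartments: frozenset = frozenset()

    def dominates(self, other: "SecurityClass") -> bool:
        """True if this class is at least as high as `other` in the lattice."""
        return self.level >= other.level and self.compartments >= other.compartments


def least_upper_bound(a: SecurityClass, b: SecurityClass) -> SecurityClass:
    """Lowest class that dominates both a and b."""
    return SecurityClass(max(a.level, b.level), a.compartments | b.compartments)


def greatest_lower_bound(a: SecurityClass, b: SecurityClass) -> SecurityClass:
    """Highest class that both a and b dominate."""
    return SecurityClass(min(a.level, b.level), a.compartments & b.compartments)
```

In this sketch, information may flow from one class to another only if the destination dominates the source. Two classes with different compartments may be incomparable, which is what makes the structure a lattice rather than a simple ranking.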

Matrix-Based Models

A matrix-based model organizes tables of subjects and objects indicating what actions individual subjects can take upon individual objects. This concept is found in other model types as well, such as the lattice model discussed in the previous section. Access control to objects is often implemented as a control matrix, a straightforward approach that defines the access rights of subjects to objects. The two most common implementations of this concept are ACLs and capabilities. In the table structure, a row indicates the access one subject has to an array of objects, so a row can be seen as a capability list for a specific subject. The matrix consists of the following parts:

A list of objects

A list of subjects

A function that returns an object’s type

The matrix itself, with the objects making the columns and the subjects making the rows
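A minimal Python sketch of such a matrix follows. The subjects, objects, and rights are hypothetical, and the object-type function from the list above is omitted for brevity. A row lookup yields a subject's capability list, and a column lookup yields an object's ACL.

```python
# Hypothetical subjects, objects, and rights, for illustration only.
# Outer keys are subjects (rows); inner keys are objects (columns).
MATRIX = {
    "alice": {"payroll.db": {"read", "write"}, "report.pdf": {"read"}},
    "bob": {"report.pdf": {"read", "write"}},
}


def allowed(subject: str, obj: str, right: str) -> bool:
    """Look up a single cell of the matrix."""
    return right in MATRIX.get(subject, {}).get(obj, set())


def capability_list(subject: str) -> dict:
    """One row of the matrix: everything a single subject can do."""
    return MATRIX.get(subject, {})


def acl(obj: str) -> dict:
    """One column of the matrix: every subject with rights to a single object."""
    return {subject: row[obj] for subject, row in MATRIX.items() if obj in row}
```

For example, `acl("report.pdf")` collects the entries for both `alice` and `bob`, while `capability_list("bob")` returns only the single object that `bob` can reach.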

Noninterference Models

In multilevel security models, the concept of noninterference prescribes that actions taking place at a higher security level must not affect or influence those that occur at a lower security level. Because this model is less concerned with the flow of information and more concerned with a subject’s knowledge of the state of the system at a point in time, it concentrates on preventing the actions that take place at one level from altering the state presented to another level.

One of the attack types that this conceptual model is meant to prevent is inference, which occurs when someone has access to information at one level that allows them to infer information about another level.

Information Flow Models

Any model discussed in the next section that attempts to prevent information from flowing from one entity to another in a way that violates or negates the security policy is called an information flow model. In the information flow model, what relates two versions of the same object is called the flow. A flow is a type of dependency that relates two versions of the same object, and thus the transformation of one state of that object into another, at successive points in time. In a multilevel security (MLS) system, a one-way information flow device called a pump prevents the flow of information from a higher level of security classification or sensitivity to a lower level.

For example, the Bell-LaPadula model (discussed in the section “Security Models”) concerns itself with the flow of information in the following three cases:

When a subject alters an object

When a subject accesses an object

When a subject observes an object

The prevention of illegal information flow among the entities is the aim of an information flow model.

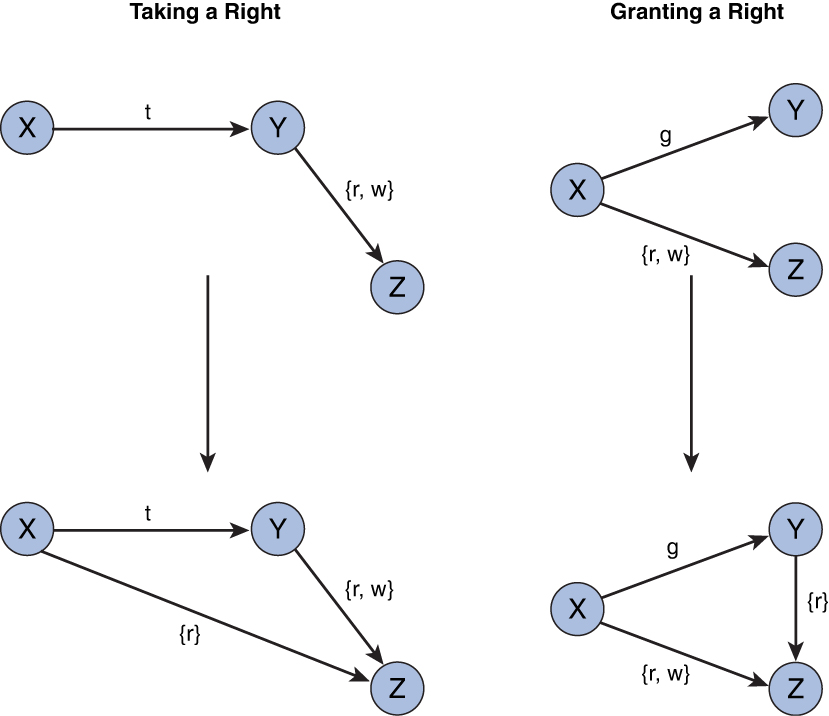

Take-Grant Model

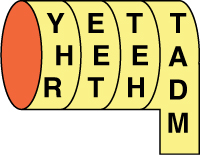

A system in the Take-Grant model is represented as a directed graph, called a protection graph. The subjects and objects of the computer system are the vertices, and the access rights of subjects to objects are represented by arcs. Although the Take-Grant model uses standard access rights like read and write, the Take-Grant model includes two additional access rights:

Take (t) is the right to take any access rights from the subject.

Grant (g) is the right to assign its access rights to any subject.

Figure 3-1 shows a graph of the Take-Grant model’s Take and Grant access rights.

Figure 3-1 Take-Grant Model’s Example of Take and Grant
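The two rights can also be expressed as operations on a protection graph. The Python sketch below follows the rules as they are commonly stated for this model: a subject holding t over another vertex may copy rights that vertex holds, and a subject holding g over another vertex may pass along rights it already holds. The class and method names, and the vertices in the usage note, are hypothetical.

```python
from collections import defaultdict


class ProtectionGraph:
    """Directed graph: rights[(source, target)] is the set of rights on that arc."""

    def __init__(self):
        self.rights = defaultdict(set)

    def add(self, source, target, *new_rights):
        self.rights[(source, target)].update(new_rights)

    def has(self, source, target, right):
        return right in self.rights.get((source, target), set())

    def take(self, taker, holder, target, right):
        """If taker has 't' over holder, taker may copy holder's right over target."""
        if self.has(taker, holder, "t") and self.has(holder, target, right):
            self.add(taker, target, right)
            return True
        return False

    def grant(self, granter, receiver, target, right):
        """If granter has 'g' over receiver, granter may give its own right over target."""
        if self.has(granter, receiver, "g") and self.has(granter, target, right):
            self.add(receiver, target, right)
            return True
        return False
```

For instance, if `alice` holds t over `bob` and `bob` holds read over a file, then `take("alice", "bob", "file", "read")` adds a read arc from `alice` to the file. Each successful operation adds an arc, which is how the model is used to reason about how rights can spread through a system.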

Security Models

A number of formal models incorporating the concepts discussed in the previous section have been developed and used to guide the security design of systems. The following sections discuss some of the more widely used or important security models, including the following:

Bell-LaPadula model

Biba model

Clark-Wilson integrity model

Lipner model

Brewer-Nash (Chinese Wall) model

Graham-Denning model

Harrison-Ruzzo-Ullman model

Goguen-Meseguer model

Sutherland model

Bell-LaPadula Model

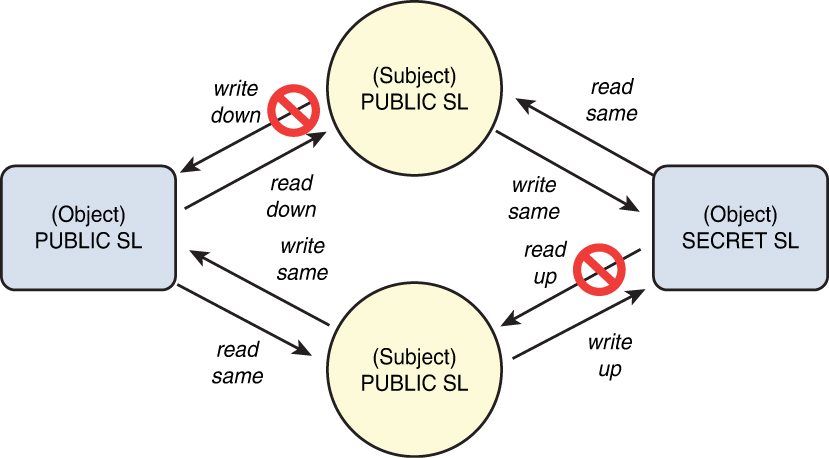

The Bell-LaPadula model was the first mathematical model of a multilevel system that used both the concepts of a state machine and those of controlling information flow. It formalizes the U.S. DoD multilevel security policy. It is a state machine model capturing confidentiality aspects of access control. Any movement of information from a higher level to a lower level in the system must be performed by a trusted subject.

Bell-LaPadula, known as “no read up and no write down,” incorporates three basic rules with respect to the flow of information in a system:

The simple security rule: A subject cannot read data located at a higher security level than that possessed by the subject (also called no read up).

The star (*)-property rule: A subject cannot write to a lower level than that possessed by the subject (also called no write down or the confinement rule).

The strong star property rule: A subject can perform both read and write functions only at the same level granted to the subject.

The *-property rule is depicted in Figure 3-2.

Figure 3-2 The *-Property Rule
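The three Bell-LaPadula rules reduce to comparisons between the level of the subject and the level of the object. The following Python sketch assumes a simple linear ordering of four levels; the level names and function names are illustrative and are not part of the model's definition.

```python
# Assumed linear ordering of security levels, lowest to highest.
BLP_LEVELS = {"unclassified": 0, "confidential": 1, "secret": 2, "top_secret": 3}


def blp_can_read(subject_level, object_level):
    """Simple security rule: no read up."""
    return BLP_LEVELS[subject_level] >= BLP_LEVELS[object_level]


def blp_can_write(subject_level, object_level):
    """Star (*)-property rule: no write down."""
    return BLP_LEVELS[subject_level] <= BLP_LEVELS[object_level]


def blp_can_read_and_write(subject_level, object_level):
    """Strong star property rule: read and write only at the same level."""
    return BLP_LEVELS[subject_level] == BLP_LEVELS[object_level]
```

Under these assumptions, a subject cleared for secret can read a confidential object but cannot write to it, because writing down could leak secret information into a lower level.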

The main concern of the Bell-LaPadula security model and its use of these rules is confidentiality. Although its basic model is a MAC system, another property rule called the discretionary security property (ds-property) makes a mixture of mandatory and discretionary controls possible. This property allows a subject to pass along permissions at its own discretion. In the discretionary portion of the model, access permissions are defined through an access control matrix using a process called authorization, and security policies prevent information flowing downward from a high security level to a low security level.

The Bell-LaPadula security model does have limitations. Among those are

It contains no provision or policy for changing data access control. Therefore, it works well only with access systems that are static in nature.

It does not address what are called covert channels. A low-level subject can sometimes detect the existence of a high-level object when it is denied access. Sometimes it is not enough to hide the content of an object; its existence might also have to be hidden.

Its main contribution at the expense of other concepts is confidentiality.

This security policy model was the basis for the Orange Book, discussed in the later section “TCSEC.”

Biba Model

The Biba model came after the Bell-LaPadula model and shares many characteristics with that model. These two models are the most well known of the models discussed here. The Biba model is also a state machine model that uses a series of lattices or security levels, but it concerns itself with the integrity of information rather than the confidentiality of that information. It does this by relying on a data classification system to prevent unauthorized modification of data. Subjects are assigned classes according to their trustworthiness; objects are assigned integrity labels according to the harm that would be done if the data were modified improperly.

Like the Bell-LaPadula model, the Biba model applies a series of properties or axioms to guide the protection of integrity. Its effect is that data must not flow from a receptacle of given integrity to a receptacle of higher integrity:

Integrity axiom: Subjects cannot write to a higher integrity level than that to which they have access (no write up).

Simple integrity axiom: Subjects cannot read from a lower integrity level than that to which they have access (no read down).

Invocation property: Subjects cannot invoke (request service of) subjects at a higher integrity level.
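The Biba axioms are the mirror image of the Bell-LaPadula rules, which a short Python sketch makes visible. The three integrity levels and the function names below are assumptions chosen for illustration.

```python
# Assumed linear ordering of integrity levels, lowest to highest.
BIBA_LEVELS = {"low": 0, "medium": 1, "high": 2}


def biba_can_write(subject_level, object_level):
    """Integrity axiom: no write up."""
    return BIBA_LEVELS[subject_level] >= BIBA_LEVELS[object_level]


def biba_can_read(subject_level, object_level):
    """Simple integrity axiom: no read down."""
    return BIBA_LEVELS[subject_level] <= BIBA_LEVELS[object_level]


def biba_can_invoke(caller_level, callee_level):
    """Invocation property: no requesting service from higher integrity."""
    return BIBA_LEVELS[caller_level] >= BIBA_LEVELS[callee_level]
```

Taken together, the checks keep data from moving upward in integrity: a medium-integrity subject may read high-integrity data and write to low-integrity objects, but not the reverse.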

Clark-Wilson Integrity Model

Developed after the Biba model, the Clark-Wilson integrity model also concerns itself with data integrity. The model describes a series of elements that are used to control the integrity of data as listed here:

User: An active agent

Transformation procedure (TP): An abstract operation, such as read, write, and modify, implemented through programming

Constrained data item (CDI): An item that can be manipulated only through a TP

Unconstrained data item (UDI): An item that can be manipulated by a user via read and write operations

Integrity verification procedure (IVP): A check of the consistency of data with the real world

This model enforces these elements by allowing data to be altered only through programs and not directly by users. Rather than employing a lattice structure, it uses a three-part relationship of subject/program/object known as a triple. It also sets as its goal the concepts of separation of duties and well-formed transactions:

Separation of duties: This concept ensures that certain operations require additional verification or that all personnel do their part.

Well-formed transaction: This concept ensures that all values are checked before and after the transaction by carrying out particular operations to complete the change of data from one state to another.

The Clark-Wilson model asserts that integrity-monitoring and integrity-preserving rules are needed to ensure that integrity is attained and preserved. Integrity-monitoring rules are called certification rules, and integrity-preserving rules are called enforcement rules.
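The Clark-Wilson elements can be sketched with a small banking example in Python. Everything named here is hypothetical: the account balances stand in for CDIs, `transfer` is the only TP, `ivp` is a simple consistency check, and `ALLOWED_TRIPLES` lists the permitted user/TP/CDI combinations.

```python
# Hypothetical CDIs: balances that may be changed only through a TP.
cdis = {"checking": 100, "savings": 50}

# Permitted (user, TP, CDI) triples.
ALLOWED_TRIPLES = {
    ("teller", "transfer", "checking"),
    ("teller", "transfer", "savings"),
}


def ivp(state, expected_total):
    """IVP: no balance is negative and the balances sum to the expected total."""
    return all(v >= 0 for v in state.values()) and sum(state.values()) == expected_total


def transfer(state, src, dst, amount):
    """TP: move funds between two CDIs and return the new state."""
    new_state = dict(state)
    new_state[src] -= amount
    new_state[dst] += amount
    return new_state


def run_tp(user, state, src, dst, amount):
    """Enforce the triple, then check values before and after the change."""
    for cdi in (src, dst):
        if (user, "transfer", cdi) not in ALLOWED_TRIPLES:
            raise PermissionError(f"{user} may not run transfer on {cdi}")
    total = sum(state.values())
    if not ivp(state, total):
        raise ValueError("CDIs are not in a valid state before the transaction")
    candidate = transfer(state, src, dst, amount)
    if not ivp(candidate, total):
        raise ValueError("transaction would leave CDIs in an invalid state")
    return candidate
```

In this sketch the triple check plays the role of an enforcement rule, while the before-and-after `ivp` calls play the role of certification that the transaction is well formed.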

Lipner Model

The Lipner model is an implementation that combines elements of the Bell-LaPadula model and the Biba model. The first way of implementing integrity with the Lipner model uses Bell-LaPadula and assigns subjects to one of two sensitivity levels (system manager and anyone else) and to one of four job categories. Objects are assigned specific levels and categories. Categories become the most significant integrity (such as access control) mechanism. The second implementation uses both Bell-LaPadula and Biba. This method prevents unauthorized users from modifying data and prevents authorized users from making improper data modifications. Both implementations also share characteristics with the Clark-Wilson model in that they separate objects into data and programs.

Brewer-Nash (Chinese Wall) Model

The Brewer-Nash (Chinese Wall) model introduced the concept of allowing access controls to change dynamically based on a user’s previous actions. One of its goals is to do this while protecting against conflicts of interest. This model is also based on an information flow model. Implementation involves grouping data sets into discrete classes, each class representing a different conflict of interest. Isolating data sets within a class provides the capability to keep one department’s data separate from another in an integrated database.
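The way access decisions change with a user's history can be sketched in a few lines of Python. The data set names, the conflict-of-interest classes, and the `ChineseWall` class are all hypothetical and serve only to illustrate the idea.

```python
# Hypothetical mapping of data sets to conflict-of-interest classes.
CONFLICT_CLASSES = {"bank_a": "banking", "bank_b": "banking", "oil_x": "energy"}


class ChineseWall:
    def __init__(self):
        # user -> {conflict class: the one data set already accessed in it}
        self.history = {}

    def request(self, user, dataset):
        """Allow access unless the user has already accessed a different
        data set in the same conflict-of-interest class."""
        conflict_class = CONFLICT_CLASSES[dataset]
        accessed = self.history.setdefault(user, {})
        prior = accessed.get(conflict_class)
        if prior is not None and prior != dataset:
            return False
        accessed[conflict_class] = dataset
        return True
```

A consultant who first opens `bank_a` can still open `oil_x`, but a later request for `bank_b` is refused because it sits in the same class as data the consultant has already seen.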

Graham-Denning Model

The Graham-Denning model addresses an issue ignored by the Bell-LaPadula (with the exception of the ds-property) and Biba models. It deals with the delegation and transfer of rights. It focuses on issues such as

Securely creating and deleting objects and subjects

Securely providing or transferring access rights

Harrison-Ruzzo-Ullman Model

The Harrison-Ruzzo-Ullman model also deals with access rights. It restricts the set of operations that can be performed on an object to a finite set to ensure integrity. It is used by software engineers to prevent unforeseen vulnerabilities from being introduced by overly complex operations.

Goguen-Meseguer Model

Although not as well known as Biba and other integrity models, the Goguen-Meseguer model is the foundation of the noninterference model. With this model, the list of objects that a subject can access is predetermined. Subjects can then perform these predetermined actions only against the predetermined objects. Subjects are unable to interfere with each other’s activities.

Sutherland Model

The Sutherland model focuses on preventing interference in support of integrity. Based on the state machine and information flow models, this model defines a set of system states, initial states, and state transitions. Using these predetermined secure states, the Sutherland model maintains integrity and prohibits interference.

System Architecture Steps

Various models and frameworks discussed in this chapter might differ in the exact steps toward developing a system architecture but do follow a basic pattern. The main steps include

Design phase: In this phase system requirements are gathered and the manner in which the requirements will be met is mapped out using modeling techniques that usually graphically depict the components that satisfy each requirement and the interrelationships of these components. At this phase many of the frameworks and security models discussed later in this chapter are used to help meet the architectural goals.

Development phase: In this phase hardware and software components are assigned to individual teams for development. At this phase the work done in the first phase can help to ensure these independent teams are working toward components that will fit together to satisfy requirements.

Maintenance phase: In this phase the system and security architecture are evaluated to ensure that the system operates properly and that security of the systems is maintained. The system and security should be periodically reviewed and tested.

Retirement phase: In this phase the system is retired from use in the live environment. Security professionals must ensure that the organization follows proper disposal procedures and ensure that data cannot be obtained from disposed assets.

ISO/IEC 42010:2011

ISO/IEC 42010:2011 uses specific terminology when discussing architectural frameworks. The following is a review of some of the most important terms:

Architecture: The organization of the system, including its components and their interrelationships, along with the principles that guide its design and evolution

Architectural description (AD): The set of documents that convey the architecture in a formal manner

Stakeholder: Individuals, teams, and departments, including groups outside the organization with interests or concerns to consider

View: The representation of the system from the perspective of a stakeholder or a set of stakeholders

Viewpoint: A template used to develop individual views that establish the audience, techniques, and assumptions made

Computing Platforms

A computing platform is composed of the hardware and software components that allow software to run. This typically includes the physical components, the operating systems, and the programming languages used. From a physical and logical perspective, a number of possible frameworks or platforms are in use. The following sections discuss some of the most common.

Mainframe/Thin Clients

When a mainframe/thin client platform is used, a client/server architecture exists. The server holds the application and performs all the processing. The client software runs on the user machines and simply sends requests for operations and displays the results. When a true thin client is used, very little exists on the user machine other than the software that connects to the server and renders the result.

Distributed Systems

The distributed platform also uses a client/server architecture, but the division of labor between the server portion and the client portion of the solution might not be quite as one-sided as you would find in a mainframe/thin client scenario. In many cases multiple locations or systems in the network might be part of the solution. Also, sensitive data may be more likely to be located on the user’s machine, and therefore the users play a bigger role in protecting it with best practices.

Another characteristic of a distributed environment is multiple processing locations that can provide alternatives for computing in the event a site becomes unavailable.

Data is stored at multiple, geographically separate locations. Users can access the data stored at any location with the users’ distance from those resources transparent to the user.

Distributed systems can introduce security weaknesses into the network that must be considered. The following are some examples:

Desktop systems can contain sensitive information that might be at risk of being exposed.

Users might generally lack security awareness.

Modems present a vulnerability to dial-in attacks.

Lack of proper backup might exist.

Middleware

In a distributed environment, middleware is software that ties the client and server software together. It is neither a part of the operating system nor a part of the server software. It is the code that lies between the operating system and applications on each side of a distributed computing system in a network. It might be generic enough to operate between several types of client/server systems of a particular type.

Embedded Systems

An embedded system is a combination of hardware and software built into a larger system to perform some specific function on behalf of that system. The embedded part of the solution might address specific hardware communications and might require drivers to talk between the larger system and some specific hardware.

Mobile Computing

Mobile code is a set of instructions passed across the network and executed on a remote system. Examples of mobile code are Java and ActiveX code downloaded into a web browser from the World Wide Web. Any introduction of code from one system to another is a security concern but is required in some situations. An active content module that attempts to monopolize and exploit system resources is called a hostile applet. The main objective of the Java Security Model (JSM) is to protect the user from hostile, network mobile code. It does this by placing the code in a sandbox, which restricts its operations.

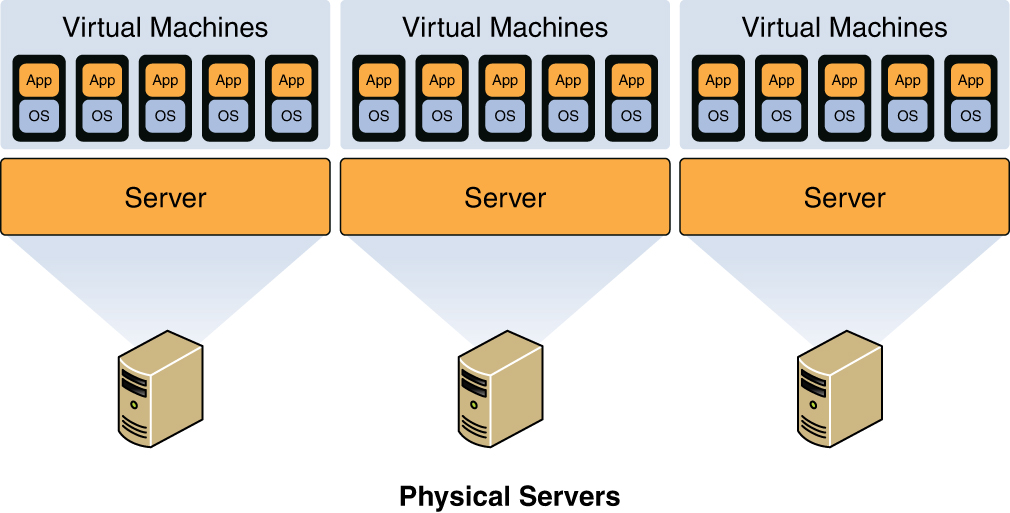

Virtual Computing

Virtual environments are increasingly being used as the computing platform for solutions. Most of the same security issues that must be mitigated in the physical environment must also be addressed in the virtual network.

In a virtual environment, instances of an operating system are called virtual machines (VMs). A host system can contain many VMs. Software called a hypervisor manages the distribution of resources (CPU, memory, and disk) to the VMs. Figure 3-3 shows the relationship between the host machine, its physical resources, the resident VMs, and the virtual resources assigned to them.

Figure 3-3 Virtualization

Security Services

The process of creating system architecture also includes design of the security that will be provided. These services can be classified into several categories depending on the protections they are designed to provide. The following sections briefly examine and compare types of security services.

Boundary Control Services

These services are responsible for placing various components in security zones and maintaining boundary control among them. Generally, this task is accomplished by designating components and services as trusted or not trusted. As an example, memory space insulated from other running processes in a multiprocessing system is part of a protection boundary.

Access Control Services

In Chapter 5, you will learn about various methods of access control and how they can be deployed. An appropriate method should be deployed to control access to sensitive material and to give users the minimum required access they need to do their jobs.

Integrity Services

As you might recall, integrity implies that data has not been changed. When integrity services are present, they ensure that data moving through the operating system or application can be verified to not have been damaged or corrupted in the transfer.

Cryptography Services

If the system is capable of scrambling or encrypting information in transit, it is said to provide cryptography services. In some cases this service is not natively provided by a system and if desired must be provided in some other fashion, but if the capability is present, it is valuable, especially in instances where systems are distributed and talk across the network.

Auditing and Monitoring Services

If the system has a method of tracking the activities of the users and of the operations of the system processes, it is said to provide auditing and monitoring services. Although our focus here is on security, the value of this service goes beyond security because it also allows for monitoring what the system itself is actually doing.

System Components

When discussing the way security is provided in an architecture, having a basic grasp of the components in computing equipment is helpful. The following sections discuss those components and some of the functions they provide.

CPU

The central processing unit (CPU), also called the processor, is the hardware in the system that executes all the instructions in the code. The CPU is the heart of a computer or IT system. It has its own set of instructions for its internal operation, and those instructions define its architecture. The software that runs on the system must be compatible with this architecture, which really means the CPU and the software can communicate.

When more than one processor is present and available, the system becomes capable of multiprocessing. This capability allows the computer to execute multiple instructions in parallel. It can be done with separate physical processors or with a single processor with multiple cores. When multiple cores are used, each core operates as a separate CPU.

CPUs have their own memory, and the CPU is able to access this memory faster than any other memory location. It also typically has cache memory where the most recently executed instructions are kept in case they are needed again. When a CPU gets an instruction from memory, the process is called fetching.

An arithmetic logic unit (ALU) in the CPU performs the actual execution of the instructions. The control unit acts as the system manager while instructions from applications and operating systems are executed. CPU registers contain the instruction set information and data to be executed and include general registers, special registers, and a program counter register.

CPUs can work in user mode or privileged mode, which is also referred to as kernel or supervisor mode. When applications are communicating with the CPU, it is in user mode. If an instruction that is sent to the CPU is marked to be performed in privileged mode, it must be a trusted operating system process and is given functionality not available in user mode.

The CPU is connected to an address bus. Memory and I/O devices recognize this address bus. These devices can then communicate with the CPU, read requested data, and send it to the data bus.

When microcomputers were first developed, the instruction fetch time was much longer than the instruction execution time because of the relatively slow speed of memory access. This situation led to the design of the Complex Instruction Set Computer (CISC) CPU. In this arrangement, the number of instructions that had to be fetched was reduced (while each instruction was made more complex) to help mitigate the relatively slow memory access.

After memory access was improved to the point where not much difference existed in memory access times and processor execution times, the Reduced Instruction Set Computer (RISC) architecture was introduced. The objective of the RISC architecture was to reduce the number of cycles required to execute an instruction, which was accomplished by making the instructions less complex.

Multitasking and Multiprocessing

Multitasking is the process of carrying out more than one task at a time. Multitasking can be done in two different ways. When the computer has a single processor with one core, it is not really doing multiple tasks at once. It is dividing its CPU cycles between tasks at such a high rate of speed that it appears to be doing multiple tasks at once. However, when a computer has more than one processor or has a processor with multiple cores, then it is capable of actually performing two tasks at the same time. It can do this in two different ways:

Symmetric mode: In this mode the processors or cores are handed work on a round-robin basis, thread by thread.

Asymmetric mode: In this mode a processor is dedicated to a specific process or application; when work needs to be done for that process, it is always done by the same processor. Figure 3-4 shows the relationship between these two modes.

Figure 3-4 Types of Multiprocessing
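The difference between the two modes comes down to how work is assigned to processors. The following Python sketch contrasts them; the function names, thread names, and core numbers are assumptions for illustration, and real schedulers weigh many more factors.

```python
from itertools import cycle


def symmetric_schedule(threads, cores):
    """Hand threads to the cores in round-robin order."""
    return {thread: core for thread, core in zip(threads, cycle(cores))}


def asymmetric_schedule(threads, affinity, default_core):
    """Send every thread of a pinned process to that process's dedicated core.

    threads is a list of (thread, process) pairs, and affinity maps a
    process name to its dedicated core.
    """
    return {thread: affinity.get(process, default_core) for thread, process in threads}
```

With two cores, `symmetric_schedule(["t1", "t2", "t3"], [0, 1])` alternates between the cores, while `asymmetric_schedule` keeps all threads of a pinned process on one core regardless of load.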

Preemptive multitasking means that task switches can be initiated directly out of interrupt handlers. With cooperative (nonpreemptive) multitasking, a task switch is only performed when a task calls the kernel and allows the kernel a chance to perform a task switch.
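Cooperative multitasking can be illustrated with Python generators, where each `yield` stands in for a task calling the kernel and allowing a switch. This is only a sketch: the `task` and `run_cooperative` names are assumptions, and a real kernel schedules processes rather than generators.

```python
from collections import deque


def task(name, steps, log):
    """A task that does one unit of work, then voluntarily yields control."""
    for i in range(steps):
        log.append(f"{name}:{i}")
        yield  # the only point at which a task switch can happen


def run_cooperative(tasks):
    """Run tasks in turn; a switch occurs only when a task yields."""
    ready = deque(tasks)
    while ready:
        current = ready.popleft()
        try:
            next(current)          # run until the task yields
            ready.append(current)  # requeue it behind the other tasks
        except StopIteration:
            pass                   # the task finished; drop it


log = []
run_cooperative([task("A", 2, log), task("B", 2, log)])
# log is now ["A:0", "B:0", "A:1", "B:1"]
```

A task that never reaches a `yield` would monopolize the scheduler, which is the weakness that preemptive multitasking addresses by switching tasks from interrupt handlers.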

Multithreading

Multithreading allows multiple tasks to be performed within a single process. A thread is a self-contained sequence of instructions that can execute in parallel with other threads that are part of the same process. Multithreading is often used in applications to reduce overhead and increase efficiency. An example of multithreading is having multiple Microsoft Excel spreadsheets open at the same time. In this situation, the computer does not run multiple instances of Microsoft Excel. Each spreadsheet is treated as a single thread within the single Microsoft Excel process with the software managing which thread is being accessed.
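The following Python sketch shows several threads working inside one process and sharing its memory. The function names and the document contents are hypothetical. Note that the standard CPython build interleaves threads rather than running Python code on several cores at once, so the example illustrates shared state within a process rather than a speedup.

```python
import threading


def count_words(name, text, results, lock):
    """Worker run by each thread; all threads write to the same dictionary."""
    count = len(text.split())
    with lock:  # protect the shared dictionary from concurrent updates
        results[name] = count


def count_all(documents):
    """Start one thread per document inside the current process."""
    results = {}
    lock = threading.Lock()
    threads = [
        threading.Thread(target=count_words, args=(name, text, results, lock))
        for name, text in documents.items()
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()  # wait for every thread to finish
    return results
```

Because the threads belong to one process, they can all update `results` directly; separate processes would need an explicit mechanism to exchange the same data.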

Single-State Versus Multistate Systems

Single-state systems manage information at different levels using policy mechanisms approved by security administrators. These systems handle one security level at a time. Multistate systems manage multiple security levels at the same time using the protection mechanisms described in the next section. Multistate systems are uncommon because they are so expensive to implement.

Process States

Process states are the different modes in which a process may run. A process can operate in one of several states:

Ready: The process is ready to start processing when needed.

Waiting: The process is ready for execution but is waiting for access to an object.

Running: The process is being executed until the process is finished, the time expires, or the process is blocked or aborted.

Supervisory: The process is performing an action that requires higher privileges.

Stopped: The process is finished or terminated.
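The states above can be modeled as a small state machine. In the Python sketch below, the set of allowed transitions is an assumption inferred from the descriptions in the list (for example, a running process returns to ready when its time expires); actual operating systems differ in the details.

```python
from enum import Enum


class State(Enum):
    READY = "ready"
    WAITING = "waiting"
    RUNNING = "running"
    SUPERVISORY = "supervisory"
    STOPPED = "stopped"


# Assumed transitions, inferred from the state descriptions.
TRANSITIONS = {
    State.READY: {State.RUNNING},
    State.RUNNING: {State.READY, State.WAITING, State.SUPERVISORY, State.STOPPED},
    State.WAITING: {State.READY},
    State.SUPERVISORY: {State.RUNNING, State.STOPPED},
    State.STOPPED: set(),
}


def move(current, target):
    """Return the new state, or raise if the transition is not allowed."""
    if target not in TRANSITIONS[current]:
        raise ValueError(f"cannot move from {current.value} to {target.value}")
    return target
```

Encoding the transitions as data makes illegal moves, such as restarting a stopped process, fail loudly instead of silently corrupting the model.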

Memory and Storage

A computing system needs somewhere to store information, on both a long-term and a short-term basis. There are two types of storage locations: memory, for temporary storage needs, and long-term storage media. Information can be accessed much faster from memory than from long-term storage. For that reason, the most recently used instructions or information is typically kept in cache memory for a short period of time, which ensures the second and subsequent accesses will be faster than returning to long-term storage.

Computers can have both random-access memory (RAM) and read-only memory (ROM). RAM is volatile, meaning the information must continually be refreshed and will be lost if the system shuts down. Table 3-2 contains some types of RAM used in laptops and desktops.

Table 3-2 Memory Types

| Desktop Memory | Description |
| --- | --- |
| SDRAM (synchronous dynamic random-access memory) | Synchronizes itself with the CPU’s bus. |
| DDR SDRAM (double data rate synchronous dynamic random-access memory) | Supports data transfers on both edges of each clock cycle (the rising and falling edges), effectively doubling the memory chip’s data throughput. |
| DDR2 SDRAM (double data rate two synchronous dynamic random-access memory) | Transfers 64 bits of data twice every clock cycle and is not compatible with current DDR SDRAM memory slots. |
| DDR3 SDRAM (double data rate three synchronous dynamic random-access memory) | Offers reduced power consumption, a doubled pre-fetch buffer, and more bandwidth because of its increased clock rate. Allows for DIMMs of up to 16 GB in capacity. |
| DDR4 SDRAM (double data rate four synchronous dynamic random-access memory) | Includes higher module density and lower voltage requirements. Theoretically allows for DIMMs of up to 512 GB in capacity. |

| Laptop Memory | Description |
| --- | --- |
| SODIMM (small outline DIMM) | Differs from desktop RAM in physical size and pin configuration. A full-size DIMM has 100, 168, 184, 240, or 288 pins and is usually 4.5 to 5 inches in length. In contrast, a SODIMM has 72, 100, 144, 200, 204, or 260 pins and is smaller, at 2.5 to 3 inches in length. |

ROM, on the other hand, is not volatile and also cannot be overwritten without executing a series of operations that depend on the type of ROM. It usually contains low-level instructions of some sort that make the device on which it is installed operational. Some examples of ROM are

Flash memory: A type of electrically programmable ROM

Programmable logic device (PLD): An integrated circuit with connections or internal logic gates that can be changed through a programming process

Field-programmable gate array (FPGA): A type of PLD that is programmed by blowing fuse connections on the chip or using an antifuse that makes a connection when a high voltage is applied to the junction

Firmware: A type of ROM where a program or low-level instructions are installed

Memory directly addressable by the CPU, which is for the storage of instructions and data that are associated with the program being executed, is called primary memory. Regardless of the type of memory in which the information is located, in most cases the CPU must get involved in fetching the information on behalf of other components. If a component has the ability to access memory directly without the help of the CPU, it is called direct memory access (DMA).

Some additional terms you should be familiar with in regard to memory include the following:

Associative memory: The type of memory that searches for a specific data value in memory rather than using a specific memory address.

Implied addressing: The type of memory addressing that refers to registers usually contained inside the CPU.

Absolute addressing: The type of memory addressing that addresses the entire primary memory space. The CPU uses the physical memory addresses that are called absolute addresses.

Cache: A relatively small amount (when compared to primary memory) of very high speed RAM that holds the instructions and data from primary memory and that has a high probability of being accessed during the currently executing portion of a program.

Indirect addressing: The type of memory addressing where the address location that is specified in the program instruction contains the address of the final desired location.

Logical address: The address at which a memory cell or storage element appears to reside from the perspective of an executing application program.

Relative address: The address that specifies its location by indicating its distance from another address.

Virtual memory: A location on the hard drive used temporarily for storage when memory space is low.

Memory leak: A failure that occurs when a computer program incorrectly manages memory allocations, which can exhaust available system memory as an application runs.

Secondary memory: Magnetic, optical, or flash-based media or other storage devices that contain data that must first be read by the operating system and stored into memory. This memory is less expensive than primary memory.

Volatile memory: Memory that is emptied when the device shuts down or when an application cleans up.

Nonvolatile memory: Long-term persistent storage that remains even when the device shuts down.
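Several of the addressing terms in this list are easier to compare side by side. The Python sketch below treats a list as a tiny primary memory; the memory contents and function names are assumptions chosen for illustration.

```python
# A 16-cell toy memory. Cell 3 holds the address of cell 9.
memory = [0] * 16
memory[3] = 9
memory[9] = 42
memory[12] = 7


def absolute(mem, address):
    """Absolute addressing: the operand is at the given physical address."""
    return mem[address]


def indirect(mem, address):
    """Indirect addressing: the given cell holds the address of the operand."""
    return mem[mem[address]]


def relative(mem, base, offset):
    """Relative addressing: the operand lies at a distance from another address."""
    return mem[base + offset]


def associative(mem, value):
    """Associative lookup: search by content and return the matching addresses."""
    return [address for address, cell in enumerate(mem) if cell == value]
```

Here `absolute(memory, 9)` and `indirect(memory, 3)` both reach the value stored in cell 9, while `associative(memory, 42)` finds that cell by its content rather than by its address.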

Random Versus Sequential Access

Random access devices read data immediately from any point on the drive. Sequential access devices read data as it is stored on the drive in the order in which it is stored. RAM, magnetic hard drives, and USB flash drives are random access devices, while magnetic tapes are sequential access devices.
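The practical difference shows up in how much data must be passed over to reach a given item. The following Python sketch counts the blocks touched in each case; the block contents and function names are hypothetical, and real devices add seek and transfer costs that are not modeled here.

```python
def random_read(blocks, index):
    """Random access: jump straight to the block; one block is touched."""
    return blocks[index], 1


def sequential_read(blocks, index):
    """Sequential access: pass over every earlier block to reach the target."""
    touched = 0
    for position, block in enumerate(blocks):
        touched += 1
        if position == index:
            return block, touched
    raise IndexError(index)
```

Reading the fourth of five blocks touches one block with random access and four with sequential access, which is why tape suits backups and archives better than frequently queried data.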

Input/Output Devices

Input/output (I/O) devices are used to send information to and receive information from the system. Examples are the keyboard, mouse, displays, and printers. The operating system controls the interaction between the I/O devices and the system. In cases where the I/O device requires the CPU to perform some action, it may signal the CPU with a message called an interrupt. Not all devices require an interrupt to communicate with the CPU.

Input/Output Structures

Some computer activities are general I/O operations that require manual configuration of devices. The I/O structures used by those activities utilize memory-mapped I/O, interrupt requests (IRQs), and direct memory access (DMA).

With memory-mapped I/O, the CPU manages access to a series of mapped memory addresses or locations. Using these memory-mapped locations, the user actually obtains input from the corresponding device. The input is copied to those memory locations when the device signals that it is ready. When the user writes to the memory-mapped locations, the output to the device is copied from the memory location to the device when the CPU indicates that the output is ready. When memory-mapped I/O is used, a single device or piece of hardware should map to a specific memory address. That address should be used by no other device or hardware. The operating system manages access to mapped-memory locations.

An IRQ assigns specific signal lines to a device through an interrupt controller. IRQs are mapped to specific CPU-addressed memory locations. When a device wants to communicate, it sends a signal to the CPU through its assigned IRQ. Older devices must have exclusive use of an IRQ, while newer plug-and-play (PnP) devices can share an IRQ. Older computers had IRQs 0–15, while newer computers have IRQs 0–23. If an IRQ conflict occurs, none of the devices sharing the IRQ will be available. The operating system manages access to IRQs.

DMA uses a channel with two signal lines, one of which is the DMA request (DRQ) line and the other of which is the DMA acknowledgment (DACK) line. This I/O structure type allows devices to work directly with memory without waiting on the CPU. The CPU simply authorizes the access and then lets the device communicate with memory directly. A DACK signal is used to release the memory location back to the CPU. DMA is much faster than the other two methods. The operating system manages DMA assignments.

Firmware

Firmware is software that is stored on an EPROM or EEPROM chip within a device. While updates to firmware may become necessary, they are infrequent. Firmware can exist as the basic input/output system (BIOS) on a computer or device firmware.

BIOS/UEFI

A computer’s BIOS contains the basic instructions that a computer needs to boot and load the operating system from a drive. The process of updating the BIOS with the latest software is referred to as flashing the BIOS. Security professionals should ensure that any BIOS updates are obtained from the BIOS vendor and have not been tampered with in any way.

The traditional BIOS has been replaced with the Unified Extensible Firmware Interface (UEFI). UEFI maintains support for legacy BIOS devices but is considered a more advanced interface than traditional BIOS. BIOS uses the master boot record (MBR) to save information about the hard drive data, while UEFI uses the GUID partition table (GPT). MBR disks are limited to a maximum of 4 primary partitions, each no larger than 2 terabytes (TB). GPT allows up to 128 partitions, with the total disk limit being 9.4 zettabytes (ZB) or 9.4 billion terabytes. UEFI is also faster and more secure than traditional BIOS. UEFI Secure Boot requires boot loaders to have a digital signature.
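The 2 TB and 9.4 ZB figures follow from the width of the sector addresses each scheme stores. The short Python calculation below assumes 512-byte sectors, 32-bit sector addresses for MBR, and 64-bit sector addresses for GPT; the function name is illustrative.

```python
SECTOR_BYTES = 512  # assumed sector size


def max_addressable_bytes(address_bits, sector_bytes=SECTOR_BYTES):
    """Largest capacity reachable with sector addresses of the given width."""
    return (2 ** address_bits) * sector_bytes


mbr_limit = max_addressable_bytes(32)  # 2**41 bytes, or 2 TiB
gpt_limit = max_addressable_bytes(64)  # 2**73 bytes

mbr_tebibytes = mbr_limit / 2 ** 40    # 2.0
gpt_zettabytes = gpt_limit / 10 ** 21  # roughly 9.44
```

Drives with larger sectors raise both ceilings proportionally, so the figures quoted for MBR and GPT should be read as the limits for the common 512-byte case.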

UEFI is an open standard interface layer between the firmware and the operating system that requires firmware updates to be digitally signed. Security professionals should understand the following points regarding UEFI:

It was designed as a replacement for traditional PC BIOS.

Additional functionality includes support for Secure Boot, network authentication, and universal graphics drivers.

It protects against BIOS malware attacks including rootkits.

Secure Boot requires that all boot loader components (e.g., OS kernel, drivers) attest to their identity (digital signature) and the attestation is compared to the trusted list.

When a computer is manufactured, a list of keys that identify trusted hardware, firmware, and operating system loader code (and in some instances, known malware) is embedded in the UEFI.

It ensures the integrity and security of the firmware.

It prevents malicious files from being loaded.

Secure Boot can be disabled for backward compatibility.
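The trusted-list comparison described in the points above can be illustrated with a deliberately simplified Python sketch. Real Secure Boot verifies digital signatures against keys held in firmware; this sketch substitutes SHA-256 digests for signatures, and the function names and the allowed and revoked sets are hypothetical.

```python
import hashlib


def digest(image: bytes) -> str:
    """Return the SHA-256 digest of a boot component as hex text."""
    return hashlib.sha256(image).hexdigest()


def boot_component_allowed(image: bytes, allowed: set, revoked: set) -> bool:
    """Simplified stand-in for the Secure Boot trusted-list check.

    A component loads only if its digest is on the allowed list and
    is not on the revoked (known-bad) list. The revoked list wins.
    """
    d = digest(image)
    if d in revoked:
        return False
    return d in allowed


if __name__ == "__main__":
    loader = b"example boot loader image"  # hypothetical image contents
    allowed = {digest(loader)}
    print(boot_component_allowed(loader, allowed, set()))
    print(boot_component_allowed(b"tampered image", allowed, set()))
```

Checking the revoked list first mirrors the idea that a known-bad entry should block a component even if it would otherwise be trusted.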

Device Firmware

Hardware devices, such as routers and printers, require some processing power to complete their tasks. The instructions that drive this processing are contained in firmware chips located within the devices. As with computers, this firmware is often installed on EEPROM to allow it to be updated. Again, security professionals should ensure that updates are obtained only from the device vendor and that the updates have not been modified in any manner, including by a third party.

Operating Systems

The operating system is the software that enables a human to interact with the hardware that comprises the computer. Without the operating system, the computer would be useless. Operating systems perform a number of noteworthy and interesting functions as part of the interfacing between the human and the hardware. In this section, we look at some of these activities.

A thread is an individual unit of an application for a specific process. A process is a set of threads that are part of the same larger application. An application’s instructions are not considered processes until they have been loaded into memory where all instructions must first be copied to be processed by the CPU. A process can be in a running state, ready state, or blocked state. When a process is blocked, it is simply waiting for data to be transmitted to it, usually through user data entry. A group of processes that share access to the same resources is called a protection domain.
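The three process states can be modeled as a small state machine. The following Python sketch is illustrative only: the set of permitted transitions is the common textbook model and is an assumption, not something specified in the text, and all names are hypothetical.

```python
from enum import Enum


class State(Enum):
    READY = "ready"
    RUNNING = "running"
    BLOCKED = "blocked"


# Assumed transitions: a ready process is dispatched to run; a running
# process is either preempted (back to ready) or blocks waiting for data;
# a blocked process becomes ready once its data arrives.
ALLOWED_TRANSITIONS = {
    State.READY: {State.RUNNING},
    State.RUNNING: {State.READY, State.BLOCKED},
    State.BLOCKED: {State.READY},
}


def transition(current: State, new: State) -> State:
    """Return the new state, or raise if the move is not permitted."""
    if new not in ALLOWED_TRANSITIONS[current]:
        raise ValueError(f"cannot move from {current.value} to {new.value}")
    return new


if __name__ == "__main__":
    state = State.READY
    state = transition(state, State.RUNNING)
    state = transition(state, State.BLOCKED)  # waiting on user input
    state = transition(state, State.READY)    # input arrived
    print(state.value)
```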

CPUs can be categorized according to the way in which they handle processes. A superscalar computer architecture is characterized by a processor that enables concurrent execution of multiple instructions in the same pipeline stage. A processor in which a single instruction specifies more than one concurrent operation is called a Very Long Instruction Word (VLIW) processor. A pipelined processor overlaps the steps of different instructions, whereas a scalar processor executes one instruction at a time.

From a security perspective, processes are placed in a ring structure according to the concept of least privilege, meaning they are only allowed to access resources and components required to perform the task. A common visualization of this structure is shown in Figure 3-5.

Figure 3-5 Ring Structure

When a computer system processes I/O instructions, it is operating in supervisor mode. A fail soft state is the termination of selected, noncritical processing when a hardware or software failure occurs and is detected. A system is in a fail safe state if it automatically leaves system processes and components in a secure state when a failure occurs or is detected.

Memory Management

Because all information goes to memory before it can be processed, secure management of memory is critical. Memory space insulated from other running processes in a multiprocessing system is part of a protection domain.

System Security Evaluation Models

In an attempt to bring order to the handling of unexpected security threats, several evaluation models have been created to assess and rate the security of system products. An assurance level examination reviews the security-related components of a system and assigns a level of confidence that the system can provide a particular level of security. In the following sections, we discuss organizations that have created such evaluation systems.

TCSEC

The Trusted Computer System Evaluation Criteria (TCSEC) was developed by the National Computer Security Center (NCSC) for the U.S. DoD to evaluate products. NCSC has issued a series of books focusing on both computer systems and the networks in which they operate. They address confidentiality, but not integrity. In 2005, TCSEC was replaced by the Common Criteria, discussed later in the chapter. However, security professionals still need to understand TCSEC because of its effect on security practices today and because some of its terminology is still in use.

With TCSEC, functionality and assurance are evaluated together and form a basis for assessing the effectiveness of security controls built into automatic data-processing system products. For example, the concept of least privilege is derived from TCSEC. In the following sections, we discuss those books and the ratings they derive.

Rainbow Series

The original TCSEC publication was the Orange Book, but as time went by, other books were also created that focused on additional aspects of the security of computer systems. Collectively, this set of more than 20 books is now referred to as the Rainbow Series, alluding to the fact that each book is a different color. For example, the Green Book focuses solely on password management. Next, we cover the most important books: the Red Book, Orange Book, and Green Book.

Red Book

The Red Book, formally the Trusted Network Interpretation (TNI), extends the evaluation classes of the TCSEC (DOD 5200.28-STD) to trusted network systems and components. So where the Orange Book focuses on security for a single system, the Red Book addresses network security.

Orange Book

The Orange Book is a collection of criteria based on the Bell-LaPadula model that is used to grade or rate the security offered by a computer system product. Covert channel analysis, trusted facility management, and trusted recoveries are concepts discussed in this book.

The goals of this system can be divided into two categories, operational assurance requirements and life cycle assurance requirements, the details of which are defined next.

The operational assurance requirements specified in the Orange Book are as follows:

System architecture

System integrity

Covert channel analysis

Trusted facility management

Trusted recovery

The life cycle assurance requirements specified in the Orange Book are as follows:

Security testing

Design specification and verification

Configuration management

Trusted distribution

TCSEC uses a classification system that assigns an alphabetic letter and a number to describe systems’ security effectiveness. The assigned letter refers to a security assurance level or division as A, B, C, D, and the number refers to gradients within that security assurance level or class. Each division and class incorporates all the required elements of the ones below it.

In order of least secure to most secure, the four divisions and their constituent classes and requirements are as follows:

D—Minimal Protection

Reserved for systems that have been evaluated but that fail to meet the requirements for a higher division.

C—Discretionary Protection

C1—Discretionary Security Protection

– Requires identification and authentication.

– Requires separation of users and data.

– Uses discretionary access control (DAC) capable of enforcing access limitations on an individual or group basis.

– Requires system documentation and user manuals.

C2—Controlled Access Protection

– Uses a more finely grained DAC.

– Provides individual accountability through login procedures.

– Requires protected audit trails.

– Invokes object reuse theory.

– Requires resource isolation.

B—Mandatory Protection

B1—Labeled Security Protection

– Uses an informal statement of the security policy.

– Requires data sensitivity or classification labels.

– Uses MAC over selected subjects and objects.

– Capable of label exportation.

– Requires removal or mitigation of discovered flaws.

– Uses design specifications and verification.

B2—Structured Protection

– Requires a clearly defined and formally documented security policy.

– Uses DAC and MAC enforcement extended to all subjects and objects.

– Analyzes and prevents covert storage channels for occurrence and bandwidth.

– Structures elements into protection-critical and non-protection-critical categories.

– Enables more comprehensive testing and review through design and implementation.

– Strengthens authentication mechanisms.

– Provides trusted facility management with administrator and operator segregation.

– Imposes strict configuration management controls.

B3—Security Domains

– Satisfies reference monitor requirements.

– Excludes code not essential to security policy enforcement.

– Minimizes complexity through significant systems engineering.

– Defines the security administrator role.

– Requires an audit of security-relevant events.

– Automatically detects and responds to imminent intrusions, including personnel notification.

– Requires trusted system recovery procedures.

– Analyzes and prevents covert timing channels for occurrence and bandwidth.

– An example of such a system is the XTS-300, a precursor to the XTS-400.

A—Verified Protection

A1—Verified Design

– Provides higher assurance than B3, but is functionally identical to B3.

– Uses formal design and verification techniques, including a formal top-level specification.

– Requires that formal techniques are used to prove the equivalence between the Trusted Computing Base (TCB) specifications and the security policy model.

– Provides formal management and distribution procedures.

– An example of such a system is Honeywell’s Secure Communications Processor (SCOMP), a precursor to the XTS-400.
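Because each division and class incorporates the requirements of the ones below it, the ratings form a simple ordered scale. The following Python sketch shows one way to compare a product's rating with a required minimum; the list and function names are hypothetical and are not part of TCSEC itself.

```python
# TCSEC ratings from least secure to most secure, as listed above.
TCSEC_ORDER = ["D", "C1", "C2", "B1", "B2", "B3", "A1"]


def meets_requirement(rating: str, required: str) -> bool:
    """Return True if `rating` is at or above `required` on the scale.

    Raises ValueError (from list.index) for an unknown rating.
    """
    return TCSEC_ORDER.index(rating) >= TCSEC_ORDER.index(required)


if __name__ == "__main__":
    print(meets_requirement("B2", "C2"))  # B2 includes everything C2 requires
    print(meets_requirement("C1", "B1"))  # C1 lacks the B1 requirements
```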

Green Book

The Green Book provides guidance on password creation and management. It includes system security officer (SSO) responsibilities, user responsibilities, authentication mechanisms, and password protection. The following major features are advocated in this guideline:

Users should be able to change their own passwords.

Passwords should be machine-generated rather than user-created.

Certain audit reports (e.g., date and time of last login) should be provided by the system directly to the user.
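As an illustration of machine-generated passwords, the following Python sketch uses the standard library `secrets` module, which draws from a cryptographically strong random source. The default length and the character set are assumptions chosen for the example and are not taken from the Green Book.

```python
import secrets
import string

# Assumed character set: ASCII letters and digits.
ALPHABET = string.ascii_letters + string.digits


def generate_password(length: int = 16) -> str:
    """Return a randomly generated password of the given length."""
    if length < 1:
        raise ValueError("length must be at least 1")
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


if __name__ == "__main__":
    print(generate_password())
```

The `secrets` module is used instead of `random` because `random` is not intended for security-sensitive values.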

ITSEC

TCSEC addresses confidentiality only and bundles functionality and assurance. In contrast to TCSEC, the Information Technology Security Evaluation Criteria (ITSEC) addresses integrity and availability as well as confidentiality. Another difference is that the ITSEC was mainly a set of guidelines used in Europe, whereas the TCSEC was relied on more in the United States.

ITSEC has a rating system in many ways similar to that of TCSEC. ITSEC has 10 classes, F1 to F10, to evaluate the functional requirements and 7 classes, E0 to E6, to evaluate the assurance requirements.

Security functional requirements include the following:

F00: Identification and authentication

F01: Audit

F02: Resource utilization

F03: Trusted paths/channels

F04: User data protection

F05: Security management

F06: Product access

F07: Communications

F08: Privacy

F09: Protection of the product’s security functions

F10: Cryptographic support

Security assurance requirements include the following:

E00: Guidance documents and manuals

E01: Configuration management

E02: Vulnerability assessment

E03: Delivery and operation

E04: Life cycle support

E05: Assurance maintenance

E06: Development

E07: Testing

The TCSEC and ITSEC systems can be mapped to one another, but the ITSEC provides a number of ratings that have no corresponding concept in the TCSEC ratings. Table 3-3 shows a mapping of the two systems.

Table 3-3 Mapping of ITSEC and TCSEC

ITSEC | TCSEC |

E0 | D |

F1+E1 | C1 |

F2+E2 | C2 |

F3+E3 | B1 |

F4+E4 | B2 |

F5+E5 | B3 |

F5+E6 | A1 |

F6 | Systems that provide high integrity |

F7 | Systems that provide high availability |

F8 | Systems that provide high data integrity during communication |

F9 | Systems that provide high confidentiality (using cryptography) |

F10 | Networks with high demands on confidentiality and integrity |

The ITSEC has been largely replaced by Common Criteria, discussed in the next section.

Common Criteria

In 1990 the ISO identified the need for a standardized rating system that could be used globally. The Common Criteria (CC) for Information Technology Security Evaluation was the result of a cooperative effort to establish this system. This system uses Evaluation Assurance Levels (EALs) to rate systems, with each EAL representing a successively higher level of security testing and design in a system. The resulting rating represents the potential the system has to provide security. It assumes that the customer will properly configure all available security solutions, so it is required that the vendor always provide proper documentation to allow the customer to fully achieve the rating. ISO/IEC 15408-1:2009 is the ISO version of the CC.

The CC represents requirements for IT security of a product or system in two categories: functionality and assurance. This means that the rating should describe what the system does (functionality), and the degree of certainty the raters have that the functionality can be provided (assurance).

The CC has seven assurance levels, which range from EAL1 (lowest), where functional testing takes place, through EAL7 (highest), where thorough testing is performed and the system design is formally verified.

The assurance designators used in the CC are as follows:

EAL1: Functionally tested

EAL2: Structurally tested

EAL3: Methodically tested and checked

EAL4: Methodically designed, tested, and reviewed

EAL5: Semi-formally designed and tested

EAL6: Semi-formally verified design and tested