Chapter 7

Security Operations

This chapter covers the following topics:

Investigations: Concepts discussed include forensic and digital investigations and procedures, reporting and documentation, investigative techniques, evidence collection and handling, and digital forensics tools, tactics, and procedures.

Logging and Monitoring Activities: Concepts discussed include audit and review, intrusion detection and prevention, security information and event management, continuous monitoring, egress monitoring, log management, threat intelligence, and user and entity behavior analytics (UEBA).

Configuration and Change Management: Concepts discussed include configuration management and change management, resource provisioning, baselining, and automation.

Security Operations Concepts: Concepts discussed include need to know/least privilege; account, group, and role management; separation of duties and responsibilities; privileged account management; job rotation and mandatory vacation; two-person control; sensitive information procedures; record retention; information life cycle; and service-level agreements.

Resource Protection: Concepts discussed include protecting tangible and intangible assets and managing media, hardware, and software assets.

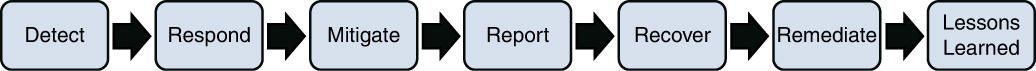

Incident Management: Concepts discussed include event versus incident, incident response team and incident investigations, rules of engagement, authorization, scope, incident response procedures, incident response management, and the steps in the incident response process.

Detective and Preventive Measures: Concepts discussed include IDS/IPS, firewalls, whitelisting/blacklisting, third-party security services, sandboxing, honeypots/honeynets, anti-malware/antivirus, clipping levels, deviations from standards, unusual or unexplained events, unscheduled reboots, unauthorized disclosure, trusted recovery, trusted paths, input/output controls, system hardening, vulnerability management systems, and machine learning and artificial intelligence (AI) based tools.

Patch and Vulnerability Management: Concepts discussed include the enterprise patch management process.

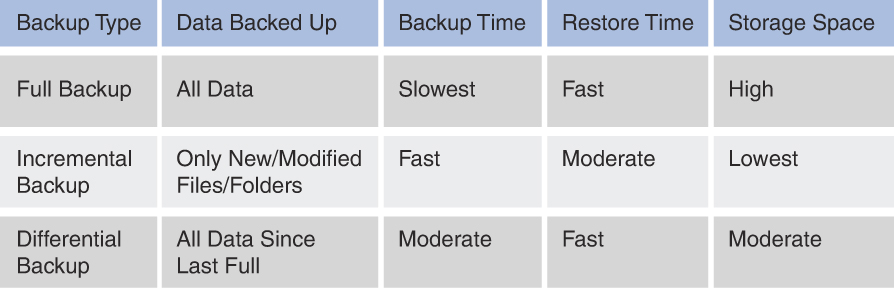

Recovery Strategies: Concepts discussed include creating recovery strategies; backup storage strategies; recovery and multiple site strategies; redundant systems, facilities, and power; fault-tolerance technologies; insurance; data backup; fire detection and suppression; high availability; quality of service; and system resilience.

Disaster Recovery: Concepts discussed include response, personnel, communications, assessment, restoration, training and awareness, and lessons learned.

Testing Disaster Recovery Plans: Concepts discussed include read-through test, checklist test, table-top exercise, structured walk-through test, simulation test, parallel test, full-interruption test, functional drill, and evacuation drill.

Business Continuity Planning and Exercises: Concepts discussed include business continuity planning and exercises.

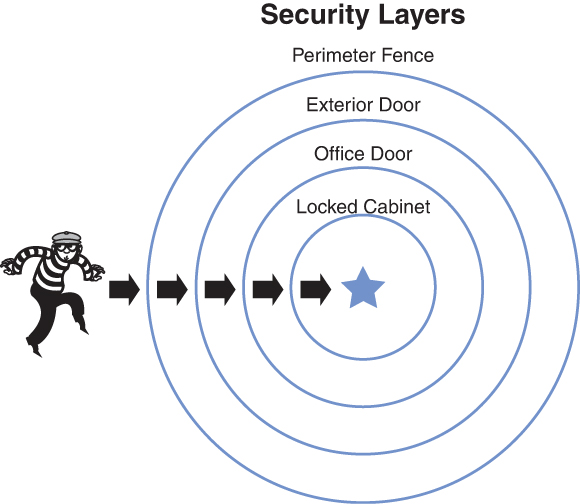

Physical Security: Concepts discussed include perimeter security controls and internal security controls.

Personnel Safety and Security: Concepts discussed include duress, travel, monitoring, emergency management, and security training and awareness.

Security operations involves ensuring that all operations within an organization are carried out in a secure manner. It is concerned with investigating, managing, and preventing events or incidents. It also covers logging activities as they occur, provisioning and protecting resources as needed, managing events and incidents, recovering from events and disasters, and providing physical security. Ultimately, security operations involves the day-to-day operation of an organization. Security professionals should receive the appropriate training in these areas or employ experts in them to ensure that the organization’s assets are properly protected.

The Security Operations domain within CISSP addresses a broad array of topics, including investigations, logging, monitoring, provisioning, security operations concepts, resource protection, incident management, detective and preventive measures, patch and vulnerability management, change management, disaster recovery, business continuity, physical security, and personnel safety. The Security Operations domain carries an average weight of 13 percent of the CISSP certification exam, which is the third highest weight of all the eight domains and is tied with two other domains. So, pay close attention to the many details in this chapter!

Foundation Topics

Investigations

Investigations must be carried out in the appropriate manner to ensure that any evidence collected can be used in court. Without proper investigations and evidence collection, attackers will not be held responsible for their actions. In the following sections, we discuss forensic and digital investigations and evidence.

Forensic and Digital Investigations

Computer investigations require different procedures than regular investigations because the timeframe for the investigator is compressed, and a security or other technical expert might be required to assist in the investigation. Also, computer information is intangible and often requires extra care to ensure that the data is retained in its original format. Finally, the evidence in a computer crime is much more difficult to gather.

After a decision has been made to investigate a computer crime, you, as a security professional, should follow standardized procedures, including the following:

Identify what type of system is to be seized.

Identify the search and seizure team members.

Determine the risk that the suspect will destroy evidence.

After law enforcement has been informed of a computer crime, the organization’s investigator’s constraints are increased. You might need to turn the investigation over to law enforcement to ensure that evidence is preserved properly.

In the investigation of a computer crime, evidentiary rules must be addressed. Computer evidence should prove a fact that is material to the case and must be reliable. The chain of custody must be maintained, as described later in the chapter. Computer evidence is less likely to be admitted in court as evidence if the process for producing the evidence has not been properly documented.

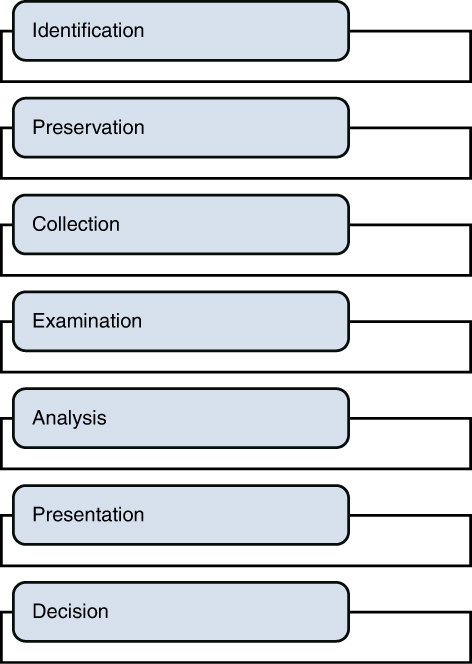

Any forensic investigation involves the following steps:

Identification

Preservation

Collection

Examination

Analysis

Presentation

Decision

The forensic investigation process is shown in Figure 7-1.

Figure 7-1 Forensic Investigation Process

The following sections cover these forensic investigation steps in detail as well as explain forensic procedures, reporting and documentation, IOCE/SWGDE and NIST, the crime scene, MOM, the chain of custody, interviewing, and investigative techniques.

Identify Evidence

The first step in any forensic investigation is to identify and secure the crime scene and identify the evidence. The evidence is identified by reviewing audit logs, monitoring systems, analyzing user complaints, and analyzing detection mechanisms. Initially, the investigators might be unsure of which evidence is important. Preserving evidence that you might not need, which is the next step in the process, is always better than wishing you had evidence that you did not retain. Therefore, a logical first step in any investigation is identifying the evidence and securing the crime scene.

In digital investigations, the attacked system is considered the crime scene. In some cases, the system from which the attack originated can also be considered part of the crime scene. However, fully capturing the attacker’s systems may not always be possible. For this reason, you should ensure that you capture any data, such as IP addresses, usernames, and other identifiers, that can point to a specific system.

Preserve and Collect Evidence

The next steps in forensic investigations include preserving and collecting evidence. This process involves making system images, implementing chain of custody (which is discussed in detail in its own section later), documenting the evidence, and recording timestamps.

Before collecting any evidence, consider the order of volatility. This order ensures that investigators collect evidence from the components that are most volatile first.

The order of volatility, from most volatile to least volatile, is as follows:

Memory contents

Swap files

Network processes

System processes

File system information

Raw disk blocks

To make system images, you need to use a tool that creates a bit-level copy of the system. In most cases, you must isolate the system and remove it from production or the live environment to create this bit-level copy. You should ensure that two copies of the image are retained. One copy of the image will be stored to ensure that an undamaged, accurate copy is available as evidence. The other copy will be used during the examination and analysis steps. Message digests should be used to ensure data integrity.

Although the system image is usually the most important piece of evidence, it is not the only piece of evidence you need. You might also need to capture data that is stored in cache, process tables, memory, and the registry. When documenting a computer attack, you should use a bound notebook to keep notes.

Remember that you might need to include experts in digital investigations to ensure that evidence is properly preserved and collected. Investigators usually assemble a field kit to help in the investigation process. This kit might include tags and labels, disassembly tools, and tamper-evident packaging. Commercial field kits are available, or you could assemble your own based on organizational needs.

Examine and Analyze Evidence

After evidence has been preserved and collected, the investigator then needs to examine and analyze the evidence. While examining evidence, any characteristics, such as timestamps and identification properties, should be determined and documented. After the evidence has been fully analyzed using scientific methods, the full incident should be reconstructed and documented.

Present Findings

After an examination and analysis of the evidence, it must be presented as evidence in court. In most cases when presenting evidence in court, presenting the findings in a format the audience can understand is best. Although an expert should be used to testify as to the findings, it is important that the expert be able to articulate to a nontechnical audience the details of the evidence.

Decide

At the end of the court proceeding, a decision will be made as to the guilt or innocence of the accused party. At that time, evidence may no longer need to be retained, provided there is no possibility of an appeal. However, documenting any lessons learned from the incident is important. Any individuals involved in any part of the investigation should be a part of this lessons-learned session.

Forensic Procedures

Collecting digital evidence is trickier than collecting physical evidence and must be completed by trained forensic technicians and investigators. These individuals must stay abreast of the latest tools and technologies that can be used to investigate a computer crime.

Technicians and investigators must follow established forensic procedures to ensure that any evidence collected will be admissible in a court of law. It is the trained individual’s responsibility to ensure that the procedures they use follow established standards. Organizations, such as the National Institute of Standards and Technology (NIST) and the International Organization for Standardization and the International Electrotechnical Commission (ISO/IEC), establish standards that help to guide organizations in the proper establishment of these and other procedures. Always consult with these standards prior to performing any investigation to determine whether suggested procedures have changed or if new tools are available.

Reporting and Documentation

After any investigation is over, security professionals should provide reports and documentation to management regarding the incident. This report should be presented to management as quickly as possible so that management can determine whether controls need to be implemented to prevent the incident. This submission to management will often happen prior to the presentation of any legal findings in a court of law. Organizations should establish procedures for ensuring that individuals to whom the reports are submitted have the appropriate clearance. It may also be necessary to redact certain parts of the report to ensure that any criminal cases are not negatively affected.

Although internal reporting is important, security professionals should also have guidelines for when to report incidents to law enforcement. The earlier that law enforcement is involved, the more likely that evidence will be admissible in a court of law. However, most local law enforcement personnel do not have the knowledge or skills to carry out a full digital investigation. If the organization does not have properly trained personnel, a forensic investigator will need to be called in to perform the investigation. Legal professionals should also be brought in to help.

Proper documentation must be maintained throughout the investigation and include logs, chain of custody forms, and documented procedures and guidelines.

IOCE/SWGDE and NIST

The International Organization on Computer Evidence (IOCE) and Scientific Working Group on Digital Evidence (SWGDE) are two groups that study digital forensics and help to establish standards for digital investigations. Both groups release guidelines on many formats of digital information, including computer data, mobile device data, automobile computer systems data, and so on. Any investigators should ensure that they comply with the principles from these groups.

Although the IOCE is no longer a functioning evidence body, it did establish some principles that are still applicable today. The main principles as documented by IOCE are as follows:

The general rules of evidence should be applied to all digital evidence.

Upon the seizure of digital evidence, any actions taken to preserve the evidence should not change that evidence in any way.

When a person needs to access original digital evidence, that person should be suitably trained and authorized for the purpose.

All activity relating to the seizure, access, storage, or transfer of digital evidence must be fully documented, preserved, and available for review.

An individual is responsible for all actions taken with respect to digital evidence while the digital evidence is in that individual’s possession.

Any agency that seizes, accesses, stores, or transfers digital evidence is responsible for compliance with IOCE principles.

NIST SP 800-86, “Guide to Integrating Forensic Techniques into Incident Response,” provides guidelines on the data collection, examination, analysis, and reporting related to digital forensics. It explains the use of forensic investigators, IT staff, and incident handlers as part of any forensic investigation. It discusses how cost, response time, and data sensitivity should affect any forensic investigation.

Crime Scene

A crime scene is the environment in which potential evidence exists. After the crime scene has been identified, steps should be taken to ensure that the environment is protected, including both the physical and virtual environment. To secure the physical crime scene, an investigator might need to isolate the systems involved by removing them from a network. However, the systems should not be powered down until the investigator is sure that all digital evidence has been captured. Remember: Live computer data is dynamic and is possibly stored in several volatile locations.

In response to a possible crime, it is important to ensure that the crime scene environment is protected using the following steps:

Identify the crime scene.

Protect the entire crime scene.

Identify any pieces of evidence or potential sources of evidence that are part of the crime scene.

Collect all evidence at the crime scene.

Minimize contamination by properly securing and preserving all evidence.

Remember that there can be more than one crime scene, especially in digital crimes. If an attacker breaches an organization’s network, all assets that were compromised are part of the crime scene, and any assets that the attacker used are also part of the crime scene.

Access to the crime scene should be tightly controlled and limited only to individuals who are vital to the investigation. As part of the documentation process, make sure to note anyone who has access to the crime scene. After a crime scene is contaminated, no way exists to restore it to the original condition.

MOM

Documenting motive, opportunity, and means (MOM) is the most basic strategy for determining suspects. Motive is all about why the crime was committed and who committed the crime. Opportunity is all about where and when the crime occurred. Means is all about how the crime was carried out by the suspect. Any suspect who is considered must possess all three of these qualities. For example, a suspect might have a motive for a crime (being dismissed from the organization) and an opportunity for committing the crime (user accounts were not disabled properly) but might not possess the means to carry out the crime.

Understanding MOM can help any investigator narrow down the list of suspects.

Chain of Custody

At the beginning of any investigation, you should ask the questions who, what, when, where, and how. These questions can help you get all the data needed for the chain of custody. The chain of custody shows who controlled the evidence, who secured the evidence, and who obtained the evidence. A proper chain of custody must be preserved to successfully prosecute a suspect. To preserve a proper chain of custody, you must collect the evidence following predefined procedures in accordance with all laws and regulations.

Chain of custody forms should be used to track who has access to the evidence, when that access occurs, and other valuable details based on the organization’s or investigation’s needs. This chain of custody form should be kept with the evidence at all times. For example, if a forensic investigator plans to analyze the contents of a digital log, that investigator should complete the appropriate information on the chain of custody form to indicate when a copy of the digital log was obtained, the type of analysis being performed, and other details.

The primary purpose of the chain of custody is to ensure that evidence is admissible in court. Law enforcement officers emphasize chain of custody in any investigations that they conduct. Involving law enforcement early in the process during an investigation can help to ensure that the proper chain of custody is followed.

Interviewing

An investigation often involves interviewing suspects and witnesses. One person should be in charge of all interviews. Because evidence needs to be obtained, ensuring that the interviewer understands what information needs to be obtained and all the questions to cover is important. Reading rights to a suspect is necessary only if law enforcement is performing the interview. Recording the interview might be a good idea to provide corroboration later when the interview is used as evidence.

If an employee is suspected of a computer crime, a representative of the human resources department should be involved in any interrogation of the suspect. The employee should be interviewed only by an individual who is senior to that employee.

Investigative Techniques

A computer crime involves the use of investigative techniques, which include interviewing (discussed above), surveillance, forensics, and undercover operations.

Surveillance includes both physical surveillance and computer surveillance. Physical surveillance uses security cameras, wiretaps, and visual tracking to monitor movement. Computer surveillance monitors elements of computer use and online behavior. It may also include sting operations, like setting up a honeypot or honeynet.

After interviews are completed and surveillance gathers enough evidence, investigators will want to perform advanced forensic analysis. Organizations can do this by continually monitoring activity, but if law enforcement is involved, a warrant will need to be obtained that will allow forensic analysis of identified computers and devices. Investigators should follow the electronic trail wherever it leads, looking for digital fingerprints in emails, files, and web-browsing histories.

In some cases, crimes may require investigators to go undercover, adopting fake online personae to trap criminals. In this case, investigators should log all interactions as evidence and may even arrange a face-to-face meeting to arrest the perpetrator.

Evidence Collection and Handling

For evidence to be admissible, it must be relevant, legally permissible, reliable, properly identified, and properly preserved. Relevant means that it must prove a material fact related to the crime in that it shows a crime has been committed, can provide information describing the crime, can provide information regarding the perpetrator’s motives, or can verify what occurred. Legally permissible means that evidence is deemed by the judge to be useful in helping the jury or judge reach a decision and cannot be objected to on the basis that it is irrelevant, immaterial, or violates the rules against hearsay and other objections. Reliability means that it has not been tampered with or modified. Properly identified means that the evidence is labeled appropriately and entered into the evidence log. Preservation means that the evidence is not damaged, modified, or corrupted in anyway.

All evidence must be tagged. When creating evidence tags, be sure to document the mode and means of transportation, a complete description of evidence including quality, who received the evidence, and who had access to the evidence.

Any investigator must ensure that evidence adheres to the five rules of evidence (see the following section). In addition, the investigator must understand each type of evidence that can be obtained and how each type can be used in court. Investigators must follow surveillance, search, and seizure guidelines. Finally, investigators must understand the differences among media, software, network, and hardware/embedded device analysis.

Five Rules of Evidence

When gathering evidence, an investigator must ensure that the evidence meets the five rules that govern it:

Be authentic.

Be accurate.

Be complete.

Be convincing.

Be admissible.

Because digital evidence is more volatile than other evidence, it still must meet these five rules.

Types of Evidence

An investigator must be aware of the types of evidence used in court to ensure that all evidence is admissible. Sometimes the type of evidence determines its admissibility.

The types of evidence that you should understand are as follows:

Best evidence

Secondary evidence

Direct evidence

Conclusive evidence

Circumstantial evidence

Corroborative evidence

Opinion evidence

Hearsay evidence

Best Evidence

The best evidence rule states that when evidence, such as a document or recording, is presented, only the original will be accepted unless a legitimate reason exists for why the original cannot be used. In most cases, digital evidence is not considered best evidence because investigators must capture copies of the original data and state.

However, courts can apply the best evidence rule to digital evidence on a case-by-case basis, depending on the evidence and the situation. In this situation, the copy must be proved by an expert witness who can testify as to the contents and confirm that it is an accurate copy of the original.

Secondary Evidence

Secondary evidence has been reproduced from an original or substituted for an original item. Copies of original documents and oral testimony are considered secondary evidence.

Direct Evidence

Direct evidence proves or disproves a fact through oral testimony based on information gathered through the witness’s senses. A witness can testify on what he saw, smelled, heard, tasted, or felt. This is considered direct evidence. Only the witness can give direct evidence. No one else can report on what the witness told them because that is considered hearsay evidence.

Conclusive Evidence

Conclusive evidence does not require any other corroboration and cannot be contradicted by any other evidence.

Circumstantial Evidence

Circumstantial evidence provides inference of information from other intermediate relevant facts. This evidence helps a jury come to a conclusion by using a fact to imply that another fact is true or untrue. An example is implying that a former employee committed an act against an organization due to his dislike of the organization after his dismissal. Circumstantial evidence is often dismissed or never presented, although it is impossible to control the behavior of jurors in this regard once they start deliberation.

Corroborative Evidence

Corroborative evidence supports another piece of evidence. For example, if a suspect produces a receipt to prove she was at a particular restaurant at a certain time and then a waiter testifies that he waited on the suspect at that time, then the waiter provides corroborating evidence through his testimony.

Opinion Evidence

Opinion evidence is based on what the witness thinks, feels, or infers regarding the facts. A witness in opinion evidence is not normally an expert because if an expert witness is used, that expert is able to testify on a fact based on knowledge in a certain area. For example, a psychiatrist can testify as to conclusions on a suspect’s state of mind. Expert testimony is not considered opinion evidence because of the expert’s knowledge and experience.

Hearsay Evidence

Hearsay evidence is evidence that is secondhand, where the witness does not have direct knowledge of the fact asserted but knows it only from being told by someone. In some cases, computer-based evidence is considered hearsay, especially if an expert cannot testify as to the accuracy and integrity of the evidence.

Surveillance, Search, and Seizure

Surveillance, search, and seizure are important facets of any investigation. Surveillance is the act of monitoring behavior, activities, or other changing information, usually of people. Search is the act of pursuing items or information. Seizure is the act of taking custody of physical or digital components.

Investigators use two types of surveillance: physical surveillance and computer surveillance. Physical surveillance occurs when a person’s actions are reported or captured using cameras, direct observance, or closed-circuit TV (CCTV). Computer surveillance occurs when a person’s actions are reported or captured using digital information, such as audit logs.

A search warrant is required in most cases to actively search a private site for evidence. For a search warrant to be issued, probable cause that a crime has been committed must be proven to a judge. The judge must also be given corroboration regarding the existence of evidence. The only time a search warrant does not need to be issued is during exigent circumstances, which are emergency circumstances that are necessary to prevent physical harm, the destruction of evidence, the suspect’s escape, or some other consequence improperly frustrating legitimate law enforcement efforts. Exigent circumstances will have to be proven when the evidence is presented in court.

Seizure of evidence can occur only if the evidence is specifically listed as part of the search warrant unless the evidence is in plain view. Evidence specifically listed in the search warrant can be seized, and the search can occur only in areas specifically listed in the warrant.

Search and seizure rules do not apply to private organizations and individuals. Most organizations warn their employees that any files stored on organizational resources are considered property of the organization. This is usually part of any no-expectation-of-privacy policy.

A discussion of evidence would be incomplete without discussing jurisdiction. Because computer crimes can involve assets that cross jurisdictional boundaries, investigators must understand that the civil and criminal laws of countries can differ greatly. It is always best to consult local law enforcement personnel for any criminal or civil investigation and follow any advice they give for investigations that cross jurisdictions.

Media Analysis

Investigators can perform many types of media analysis, depending on the media type. A media recovery specialist may be employed to provide a certified forensic image, which is an expensive process. An artifact in a digital forensics investigation includes things like registry keys, files, timestamps, and event logs. These are the traces security professionals follow in digital forensic work. They will vary depending on the device type, operating system, and other factors.

The following types of media analysis can be used:

Disk imaging: Creates an exact image of the contents of the hard drive.

Slack space analysis: Analyzes the slack (marked as empty or reusable) space on the drive to see whether any old (marked for deletion) data can be retrieved.

Content analysis: Analyzes the contents of the drive and gives a report detailing the types of data by percentage.

Steganography analysis: Analyzes the files on a drive to see whether the files have been altered or to discover the encryption used on the file.

Software Analysis

Software analysis is a little more difficult to perform than media analysis because it often requires the input of an expert on software code, including source code, compiled code, or machine code. It often involves decompiling or reverse engineering. This type of analysis is often used during malware analysis and copyright disputes.

Software analysis techniques include the following:

Content analysis: Analyzes the content of software, particularly malware, to determine for which purpose the software was created.

Reverse engineering: Retrieves the source code of a program to study how the program performs certain operations.

Author identification: Attempts to determine the software’s author.

Context analysis: Analyzes the environment the software was found in to discover clues to determining risk.

Network Analysis

Network analysis involves the use of networking tools to preserve logs and activity for evidence.

Network analysis techniques include the following:

Communications analysis: Analyzes communication over a network by capturing all or part of the communication and searching for particular types of activity.

Log analysis: Analyzes network traffic logs.

Path tracing: Traces the path of a particular traffic packet or traffic type to discover the route used by the attacker.

Hardware/Embedded Device Analysis

Hardware/embedded device analysis involves using the tools and firmware provided with devices to determine the actions that were performed on and by the device. The techniques used to analyze the hardware/embedded device vary based on the device. In most cases, the device vendor can provide advice on the best technique to use depending on what information you need. Log analysis, operating system analysis, and memory inspections are some of the general techniques used.

This type of analysis is used when mobile devices are analyzed. For performing this type of analysis, NIST makes the following recommendations:

Any analysis should not change the data contained on the device or media.

Only competent investigators should access the original data and must explain all actions they took.

Audit trails or other records must be created and preserved during all steps of the investigation.

The lead investigator is responsible for ensuring that all these procedures are followed.

All activities regarding digital evidence, including its seizure, access to it, its storage, or its transfer, must be documented, preserved, and available for review.

Digital Forensic Tools, Tactics, and Procedures

For evidence collection, investigators will need a digital toolkit. The following should be included as part of any digital toolkit:

Forensic laptops and power supplies

Tool sets

Digital camera

Case folder

Blank forms

Evidence collection and packaging supplies

Software

Air card for Internet access

Cables for data transfer (network, crossover, USB, and so on)

Blank hard drives and other media

Hardware write blockers

The digital toolkit should contain forensic tools that will enable an investigator to obtain data that can be used as evidence. The tools used by investigators are classified according to the type of information they obtain, as shown in the following list:

Disk and data capture tools

File viewers

File analysis tools

Registry analysis tools

Internet analysis tools

Email analysis tools

Mobile device analysis tools

macOS analysis tools

Network forensic tools

Database forensic tools

Many of the tools available today can provide services in multiple areas listed here. Investigators should obtain training in the proper usage of these tools.

Tools that can be included in a digital forensic toolkit include the following:

Digital Forensics Framework (DFF)

Open Computer Forensics Architecture (OCFA)

Computer Aided INvestigative Environment (CAINE)

X-Ways Forensics

SANS Investigative Forensics Toolkit (SIFT)

EnCase Forensic

Registry Recon

The Sleuth Kit (TSK)

LibForensics

Volatility

WindowsSCOPE

The Coroner’s Toolkit (TCT)

Oxygen Forensic Suite

Bulk_Extractor

Xplico

RedLine

Computer Online Forensic Evidence Extractor (COFEE)

PlainSight

XRY

Helix3

UFED

Investigators must also be familiar with the proper digital forensic tactics and procedures that are commonly used. For this reason, investigators should be properly trained to ensure that the tools, tactics, and procedures are followed so that evidence collected will be admissible in court. Keep in mind that you should not be tested on the functionality of the individual tools or the digital forensics tactics and procedures on the CISSP exam; however, you should understand that these tools, tactics, and procedures provide digital forensic investigation automation and investigatory standards compliance. A CISSP candidate’s job role is not defined as performing individual forensic investigation tasks; however, the CISSP professional should be familiar with the tools, tactics, and procedures available to ensure that an organization’s investigator obtains the appropriate tools to perform digital investigations and follows appropriate tactics and procedures.

Logging and Monitoring Activities

As part of operations security, administrators must ensure that user activities are logged and monitored regularly. These activities include audit and review, intrusion detection and prevention, security information and event management, continuous monitoring, egress monitoring, log management, threat intelligence, and user and entity behavior analytics (UEBA).

Audit and Review

Accountability is impossible without a record of activities and review of those activities. Capturing and monitoring audit logs provide the digital proof when someone who is performing certain activities needs to be identified. This goes for both the good guys and the bad guys. In many cases it is required to determine who misconfigured something rather than who stole something. Audit trails based on access and identification codes establish individual accountability. The questions to address when reviewing audit logs include the following:

Are users accessing information or performing tasks that are unnecessary for their jobs?

Are repetitive mistakes (such as deletions) being made?

Do too many users have special rights and privileges?

The level and amount of auditing should reflect the security policy of the company. Audits can be either self-audits or performed by a third party. Self-audits always introduce the danger of subjectivity to the process. Logs can be generated on a wide variety of devices including intrusion detection systems (IDSs), servers, routers, and switches. In fact, a host-based IDS makes use of the operating system logs of the host machine.

When assessing controls over audit trails or logs, address the following questions:

Does the audit trail provide a trace of user actions?

Is access to online logs strictly controlled?

Is there separation of duties between security personnel who administer the access control function and those who administer the audit trail?

Keep and store logs in accordance with the retention policy defined in the organization’s security policy. They must be secured to prevent modification, deletion, and destruction. When auditing is functioning in a monitoring role, it supports the detection security function in the technical category. When formal review of the audit logs takes place, it is a form of detective administrative control. Reviewing audit data should be a function separate from the day-to-day administration of the system.

Log Types

Logging is the process of recording event information to a log file or database. It captures system events, changes, messages, and other information that shows the activities that occur on a system or device. The different types of logs that security professionals use include security logs, systems logs, application logs, firewall logs, proxy logs, and change logs.

Security logs record access to resources, including access to files, folders, and printers. They can record when a user accesses, modifies, or deletes a file or folder. Although most systems will record when key files are accessed, it is often necessary for an administrator to enable auditing on other resources, such as data folders or network printers. When auditing is running on a device, it will affect the performance of that device. For this reason, security professionals should configure auditing only when necessary based on the organization’s security policies.

System logs record system events, such as system and service startup and shutdown. They can help a security professional to determine the actions taken by a malicious user.

Applications logs record actions that occur within a specific application. Security professionals should work with application developers or vendors to determine which type of information should be logged.

Firewall logs record network traffic information, including incoming and outgoing traffic. This usually includes important data, such as IP addresses and port numbers that can be used to determine the origin of an attack.

Proxy logs record details on the Internet traffic that passes through the proxy server, including the sites being visited by users, how much time is being spent on those sites, and if attempts are being made to access prohibited sites.

Change logs report changes made to a specific device or application as part of the change management process.

Audit Types

When auditing is enabled, administrators can select individual events to monitor to ensure user accountability. Audit types include access review audits, user privilege audits, and privileged group audits.

Access review audits ensure that object access and user account management practices adhere to the organization’s security policy. User privilege audits monitor right and permission usage for all users. Privileged group audits monitor when high-level groups and administrator accounts are used.

Intrusion Detection and Prevention

IDSs alert organizations when unauthorized access or actions occur, while intrusion prevention systems (IPSs) monitor the same kind of activity but actually work to prevent the actions from being successful. IDS and IPS devices can be used during investigations to provide information regarding traffic patterns that occur just before an attack succeeds. Security professionals must constantly tune IDS and IPS devices to ensure that the correct activity is being detected or prevented. As changes occur in the way that attacks are carried out, these systems must be adjusted.

Security Information and Event Management (SIEM)

SIEM can collect log and system information to comply with regulatory requirements, provide internal accountability, provide risk management, and perform monitoring and trending. SIEM stores raw information from various systems and devices and aggregates that information into a single database. Security professionals must work together to ensure that the appropriate actions will be monitored and to ensure that the correct examinations of the records occur. Because SIEM systems are centralized repositories of security information, organizations should take particular care to provide adequate security for these systems to ensure that attackers cannot access or alter the records contained in them.

Continuous Monitoring

Any logging and monitoring activities should be part of an organizational continuous monitoring program. The continuous monitoring program must be designed to meet the needs of the organization and implemented correctly to ensure that the organization’s critical infrastructure is guarded. Organizations may want to look into Continuous Monitoring as a Service (CMaaS) solutions deployed by cloud service providers.

Egress Monitoring

Egress monitoring occurs when an organization monitors the outbound flow of information from one network to another. The most popular form of egress monitoring is carried out using firewalls that monitor and control outbound traffic.

Data leakage occurs when sensitive data is disclosed to unauthorized personnel either intentionally or inadvertently. Data loss prevention (DLP) software attempts to prevent data leakage. It does this by maintaining awareness of actions that can and cannot be taken with respect to a document. For example, it might allow printing of a document but only at the company office. It might also disallow sending the document through email. DLP software uses ingress and egress filters to identify sensitive data that is leaving the organization and can prevent such leakage.

Another scenario might be the release of product plans that should be available only to the Sales group. A security professional could set a policy like the following for that document:

It cannot be emailed to anyone other than Sales group members.

It cannot be printed.

It cannot be copied.

A DLP can be implemented in two locations:

Network DLP: Installed at network egress points near the perimeter, network DLP analyzes network traffic.

Endpoint DLP: Endpoint DLP runs on end-user workstations or servers in the organization.

You can use both precise and imprecise methods to determine what is sensitive:

Precise methods: These methods involve content registration and trigger almost zero false-positive incidents.

Imprecise methods: These methods can include keywords, lexicons, regular expressions, extended regular expressions, metadata tags, Bayesian analysis, and statistical analysis.

The value of a DLP system lies in the level of precision with which it can locate and prevent the leakage of sensitive data.

Log Management

Log management is the process of storing and handling log events generated by applications, devices, and infrastructure components. It includes collecting, aggregating, parsing, storing, analyzing, searching, archiving, and disposing of logs. The goal of log management is to use the events entered in the logs for troubleshooting.

Log files contain a record of events and are often divided into categories. Through log management, an administrator can gather the event data in one place and examine it together, thereby allowing the administrator to analyze the data and identify issues and patterns. Log management provides improved monitoring and troubleshooting, operations, resource usage, and security.

Log management includes five key functions:

Log collection

Log aggregation

Log search and analysis

Log monitoring and alerting

Log visualization and reporting

Organizations should adopt a log management policy. This policy should include guidelines on what to log, where to store logs, how long to store logs, how often logs should be reviewed, and whether logs should be encrypted or archived for audit purposes.

Threat Intelligence

Threat intelligence is threat information that allows organizations to implement controls to protect against the threats. A threat intelligence feed (TI feed), also referred to as a threat feed, is an ongoing stream of data related to identified potential threats to an organization’s security, usually provided by threat intelligence sources. Threat intelligence sources include open-source intelligence (OSINT), social media intelligence, human intelligence, and technical intelligence, including intelligence from the dark web. Threat hunting is a cyber defense activity that proactively and iteratively searches networks to detect and isolate advanced threats that evade existing security solutions. The actual process of threat hunting is beyond the scope of the CISSP exam with its management focus. Threat hunting requires that an organization employs an experienced cybersecurity analyst or firm to use manual or machine-based techniques to proactively identify threats that currently deployed automated detection methods did not catch.

User and Entity Behavior Analytics (UEBA)

User and entity behavior analytics (UEBA), also known as user behavior analytics (UBA), is the process of gathering data regarding daily user network events so that normal conduct by users is understood. Organizations can use UEBA to detect compromised credentials, lateral movement, and other malicious behavior. UEBA are often added on as a layer to existing SIEM deployments. The key to UEBA is detecting behavior anomalies that could be indicative of a threat.

Configuration and Change Management

Configuration management specifically focuses on bringing order out of the chaos that can occur when multiple engineers and technicians have administrative access to the computers and devices that make the network function.

Configuration management documents the setup for computers and devices, including operating system and firmware versions. Change management provides the mechanism whereby the configuration can be changed through formal approval. Some standards consider configuration management a subset of change management, whereas others say change management is a subset of configuration management. It is just as important to recognize that there is a relationship between the two. Configuration management documents the configuration items (CIs) that can then be changed via the change management process.

The following are the functions of configuration management:

Report the status of change processing.

Document the functional and physical characteristics of each CI.

Perform information capture and version control.

Control changes to the CIs, and issue versions of CIs from the software library.

Examples of these types of changes are

Operating system configuration

Software configuration

Hardware configuration

From a CISSP perspective, the biggest contribution of configuration management control is ensuring that changes to the system do not unintentionally diminish security. It is the main reason why all changes must be documented, and all network diagrams, both logical and physical, must be updated regularly and consistently to accurately reflect the state of each CI currently and not as it was a few months or years ago. Verifying that all configuration management policies are being followed should be an ongoing process.

In many cases, it is beneficial to form a configuration control board. The tasks of the configuration control board can include

Ensuring that changes made are approved, tested, documented, and implemented correctly.

Meeting periodically to discuss configuration status accounting reports.

Maintaining responsibility for ensuring that changes made do not jeopardize the soundness of the verification system.

In summary, the components of configuration management are

Configuration control

Configuration status accounting

Configuration audit

All networks and devices evolve, grow, and change over time. Companies and their processes also evolve and change, which is a good thing. But organizations should manage change in a structured way so as to maintain a common sense of purpose about the changes. By following recommended steps in a formal process, change can be prevented from becoming a small element that controls the larger process. The following are guidelines to include as a part of any change control policy:

All changes should be formally requested. Change logs should be maintained.

Each request should be analyzed to ensure that it supports all goals and policies. This analysis includes baselining and security impact analysis.

Prior to formal approval, all costs and effects of the methods of implementation should be reviewed. Using the collected data, changes should be approved or denied.

After they’re approved, the change steps should be developed.

During implementation, incremental testing should occur, and it should rely on a predetermined fallback strategy if necessary. Versioning should be used to effectively track and control changes to a collection of entities.

Complete documentation should be produced and submitted with a formal report to management.

One of the key benefits of following this method is the ability to make use of the documentation in future planning. Lessons learned can be applied and even the process itself can be improved through analysis.

Resource Provisioning

Resource provisioning is a process in security operations which ensures that an organization deploys only the assets it currently needs. Resource provisioning must follow the organization’s resource life cycle. To properly manage the resource life cycle, an organization must maintain an accurate asset inventory and use appropriate configuration management processes. Resources that are involved in provisioning include physical assets, virtual assets, cloud assets, and applications.

Asset Inventory and Management

An asset is any item of value to an organization, including physical devices and digital information. Recognizing when assets are stolen or improperly deployed is impossible if no item count or inventory system exists or if the inventory is not kept updated. All equipment should be inventoried, and all relevant information about each device should be maintained and kept up to date. Each asset should be fully documented, including serial numbers, model numbers, firmware version, operating system version, responsible personnel, and so on. The organization should maintain this information both electronically and in hard copy. Maintaining this inventory will aid in determining when new assets should be deployed or when currently deployed assets should be decommissioned.

Security devices, such as firewalls, network address translation (NAT) devices, and IDSs and IPSs, should receive the most attention because they relate to physical and logical security. Beyond this, devices that can easily be stolen, such as laptops, tablets, and smartphones, should be locked away. If that is not practical, then consider locking these types of devices to stationary objects (for example, using cable locks with laptops).

When the technology is available, tracking of small devices can help mitigate the loss of both devices and their data. Many smartphones now include tracking software that allows you to locate a device after it has been stolen or lost by using either cell tower tracking or GPS. Deploy the device tracking technology when available.

Another useful feature available on many smartphones and other portable devices is a remote wiping feature. This allows the user to send a signal to a stolen device, instructing it to wipe out all the data contained on the device. Similarly, these devices typically also come with the ability to be remotely locked when misplaced or stolen.

Strict control of the use of portable media devices can help prevent sensitive information from leaving the network or office premises. These devices include CDs, DVDs, flash drives, and external hard drives. Although written rules should be in effect about the use of these devices, using security policies to prevent the copying of data to these media types is also possible. Allowing the copying of data to these drive types as long as the data is encrypted is also possible. If these functions are provided by the network operating system, you should deploy them.

It should not be possible for unauthorized persons to access and tamper with any devices. Tampering includes defacing, damaging, or changing the configuration of a device. Organizations should use integrity verification programs to look for evidence of data tampering, errors, and omissions.

Encrypting sensitive data stored on devices can help prevent the exposure of data in the event of a theft or in the event of inappropriate access of the device.

Physical Assets

Physical assets include servers, desktop computers, laptops, mobile devices, and network devices that are deployed in the enterprise. Physical assets should be deployed and decommissioned based on organizational need. For example, suppose an organization deploys a wireless access point (WAP) for use by a third-party auditor. Proper resource provisioning should ensure that the WAP is decommissioned after the third-party auditor no longer needs access to the network. Without proper inventory and configuration management, the WAP may remain deployed and can be used at some point to carry out a wireless network attack.

Virtual Assets

Virtual assets include software-defined networks, virtual storage-area networks (VSANs), guest operating systems deployed on virtual machines (VMs), and virtual routers. As with physical assets, the deployment and decommissioning of virtual assets should be tightly controlled as part of configuration management because virtual assets, just like physical assets, can be compromised. For example, a Windows 10 VM deployed on a Windows Server system should be retained only until it is no longer needed. As long as the VM is being used, it is important to ensure that the appropriate updates, patches, and security controls are deployed on it as part of configuration management. When users no longer access the VM, it should be removed.

Virtual storage occurs when physical storage (including hard drives, DVDs, and other storage media) from multiple network devices is compiled into a single logical space and appears as a single drive to the regular user. Block virtualization separates the logical storage from the physical storage. File virtualization eliminates the dependency between data accessed at the file level and the physical storage location of the files. Host-based virtual storage requires software running on the host. Storage device–based virtual storage runs on a storage controller and allows other storage controllers to be attached. Network-based virtual storage uses network-based devices, such as iSCSI or Fibre Channel, to create a storage solution.

Cloud Assets

Cloud assets include cloud services, virtual machines, storage networks, and other cloud services contracted through a cloud service provider. Cloud assets are usually billed based on usage and should be carefully provisioned and monitored to prevent the organization from paying for portions of service that it does not need. Configuration management should ensure that the appropriate monitoring policies are in place to make certain that only resources that are needed are deployed.

Applications

Applications include commercial applications that are locally installed, web services, and any cloud-deployed application services, such as Software as a Service (SaaS). The appropriate number of licenses should be maintained for all commercial applications. An organization should periodically review its licensing needs. For cloud deployments of software services, configuration management should be used to ensure that only personnel who have valid needs for the software are given access to it.

Baselining

Baselining is the process of documenting the attributes of a CI at a point in time, which serves as a basis for defining change. Configuration baselines should be documented for all CIs. If a change is approved and completed to a CI, then the baseline of the CI needs to be adjusted based on the changes made.

Baseline configurations should be maintained over time. This requires creating new baselines as organizational information systems change.

Automation

Automation software reduces cost, complexity, and errors in configuration management by maintaining a CI database. With automation tools, CI baselines can be saved. Then if a change has unanticipated consequences, administrators can simply roll the CI back to the saved baseline.

Automation tools allow administrators to make changes and deployments faster and remove the potential for administrator error. They also allow administrators to track the state of resources, preventing duplicated effort by attempting to install something that is already deployed to a CI. Finally, configuration management tools can audit an organization’s CIs so that administrators can easily pinpoint CIs with certain issues or needs.

Security Operations Concepts

Throughout this book, you’ve seen references made to policies and principles that can guide all security operations. In the following sections, we review some concepts more completely that have already been touched on and introduce some new issues concerned with maintaining security operations.

Need to Know/Least Privilege

With regard to allowing access to resources and assigning rights to perform operations, always apply the concept of least privilege (also called need to know). In the context of resource access, that means that the default level of access should be no access. Give users access only to resources required to do their job, and that access should require manual implementation after the requirement is verified by a supervisor.

Discretionary access control (DAC) and role-based access control (RBAC) are examples of systems based on a user’s need to know. To ensure least privilege requires that the user’s job be identified and each user be granted the lowest clearance required for their tasks. Another example is the implementation of views in a database. Need to know requires that the operator have the minimum knowledge of the system necessary to perform a task.

Managing Accounts, Groups, and Roles

Devices, computers, and applications implement user and group accounts and roles to allow or deny access. User accounts are created for each user needing access. Group accounts are used to configure permissions on resources. User accounts are added to the appropriate group accounts to inherit the permissions granted to that group. User accounts can also be assigned to roles. Roles are most often used by applications.

Security professionals should understand the following accounts:

Root or built-in administrator accounts: These are the most powerful accounts on the system. Root accounts are used in Linux-based systems, whereas administrator accounts are used in Windows-based systems. It is best to disable such an account after you have created another account with the same privileges, because most of these account names are well known and can be used by attackers. If you decide to keep these accounts, most vendors suggest that you change the account name and give it a complex password. Root or administrator accounts should be used only when performing administrative duties, and use of these accounts should always be audited.

Service accounts: These accounts are used to run system services and applications. Therefore, security professionals can limit the service account’s access to the system. Always research the default user accounts that are used. Make sure that you change the passwords for these accounts on a regular basis. Use of these accounts should always be audited.

Regular administrator accounts: These administrator accounts are created and assigned only to a single individual. Any user who has an administrative account should also have a regular/standard user account to use for normal day-to-day operations. Administrative accounts should be used only when performing administrative-level duties, and use of these accounts should always be audited.

Power user accounts: These accounts have more privileges and permissions than normal user accounts. These accounts should be reviewed on a regular basis to ensure that only users who need the higher-level permissions have these accounts. Most modern operating systems limit the abilities of the power users or even remove this account type entirely.

Regular/standard user accounts: These are the accounts users use while performing their normal everyday job duties. These accounts must strictly follow the principle of least privilege.

Separation of Duties and Responsibilities

The concept of separation of duties prescribes that sensitive operations be divided among multiple users so that no one user has the rights and access to carry out the operation alone. Separation of duties and responsibilities is valuable in deterring fraud by ensuring that no single individual can compromise a system. It is considered a preventive administrative control. An example would be one person initiating a request for a payment and another authorizing that payment to be made.

Privilege Account Management

Security professionals should ensure that organizations establish the proper account, group, and role life cycle management procedures to ensure they are properly created, managed, and removed. The provisioning life cycle is covered in more detail in Chapter 5, “Identity and Access Management (IAM).”

Inevitably, some users, especially supervisors or those in the IT support department, will require special rights and privileges that other users do not possess. For example, one requirement might be that a set of users who work the help desk might need to be able to reset passwords or perhaps make changes to user accounts. These types of rights carry with them a responsibility to exercise the rights responsibly and ethically.

Although in a perfect world we would like to assume that we can expect ethical and secure behavior from all users, in the real world we know this is not always true. Therefore, one of the things to monitor is the use of these privileges and privileged accounts. Although security professionals should be concerned with the amount of monitoring performed and the amount of data produced by the monitoring of privilege usage, recording the exercise of special privileges or the use of privileged accounts should not be sacrificed, even if it means regularly saving the data as a log file and clearing the event gathering system.

Job Rotation and Mandatory Vacation

From a security perspective, job rotation refers to the training of multiple users to perform the duties of a position to help prevent fraud by any individual employee. The idea is that by making more than one person familiar with the legitimate functions of the position, the higher the likelihood that unusual activities by any one person will be noticed. This approach is often used in conjunction with mandatory vacations, in which all users are required to take time off, allowing another to fill their position while gone, which enhances the opportunity to discover unusual activity. Beyond the security aspects of job rotation, additional benefits include

Trained backup in case of emergencies

Protection against fraud

Cross training of employees

Rotation of duties, separation of duties, and mandatory vacations are all administrative controls.

Two-Person Control

A two-person control, also referred to as a two-man rule, occurs when certain access and actions require the presence of two authorized people at all times. Common examples are the requirement for two people to sign checks over a certain dollar amount or for two people to be present to perform a certain activity, such as opening a safe.

Sensitive Information Procedures

Access control and its use in preventing unauthorized access to sensitive data are important for organizational security. It follows that the secure handling of sensitive information is critical. Although we tend to think in terms of the company’s information, it is also critical that the company protect the private information of its customers and employees. A leak of users’ and customers’ personal information causes, at a minimum, embarrassment for the company and possibly fines and lawsuits.

Regardless of whether the aim is to protect company data or personal data, the key is to apply the access control principles to both sets of data. When you are examining access control procedures and policies, the following questions need to be answered:

Are data or privileges available to the user that are not required for the job?

How many users have access to sensitive data, and why?

Record Retention

Proper access control is not possible without auditing. It allows us to track activities and discover problems before they are fully realized. Because this analysis can sometimes lead to a mountain of data to analyze, you should monitor only the most sensitive of activities, and retain and review all records. Moreover, in many cases companies are required by law or regulation to maintain records of certain data.

Most auditing systems allow for the configuration of data retention options. In some cases, the default operation is to start writing over the older records in the log when the maximum log size is full. Regularly clearing and saving the log can prevent this from happening and avoid the loss of important events. In cases of extremely sensitive data, having a server shut off access when a security log is full and cannot record any more events is even advisable.

Information Life Cycle

In security operations, security professionals must understand the life cycle of information, which includes creation/reception, distribution, usage, maintenance, and disposal of information. After information is gathered, it must be classified to ensure that only authorized personnel can access the information.

Service-Level Agreements

Service-level agreements (SLAs) are agreements about the ability of the support system to respond to problems within a certain timeframe while providing an agreed-upon level of service. SLAs can be internal between departments or external to a service provider. When parties agree on the speed and accuracy with which various problems related to the provided service are addressed, some predictability is introduced to the response to problems, which ultimately supports the maintenance of access to resources.

An SLA should contain a description of the services to be provided and the service levels and metrics that the customer can expect. It also includes the duties and responsibilities of each party of the SLA. It lists the service specifics, exclusions, service levels, escalation procedures, and cost. It should include a clause regarding payment to the customers resulting from a breach of the SLA. Although SLAs can be transferable, they are not transferable by law. Metrics that should be measured include service availability, service levels, defect rates, technical quality, and security. SLAs should be periodically reviewed to ensure that the business needs, technical environment, or workloads have not changed. In addition, metrics, measurement tools, and processes should be reviewed to see if they have improved.

Resource Protection

Enterprise resources include both assets you can see and touch (tangible), such as computers and printers, and assets you cannot see and touch (intangible), such as trade secrets and processes. Although typically you would think of resource protection as preventing the corruption of digital resources and as the prevention of damage to physical resources, this concept also includes maintaining the availability of those resources. In the following sections, we discuss both aspects of resource protection.

Protecting Tangible and Intangible Assets

In some cases, among the most valuable assets of a company are intangible assets such as secret recipes, formulas, and trade secrets. In other cases the value of the company is derived from its physical assets such as facilities, equipment, and the talents of its people. All are considered resources and should be included in a comprehensive resource protection plan. Next, we explore some specific concerns with these various types of resources.

Facilities

Usually, the largest tangible asset an organization has is the building in which it operates and the surrounding land. Physical security is covered later in this chapter, but it bears emphasizing that vulnerability testing (discussed more fully in Chapter 6) ought to include the security controls of the facility itself. Some examples of vulnerability testing as it relates to facilities include

Do doors close automatically, and does an alarm sound if they are held open too long?

Are the protection mechanisms of sensitive areas, such as server rooms and wiring closets, sufficient and operational?

Does the fire suppression system work?

Are sensitive documents shredded as opposed to being thrown in the dumpster?

Beyond the access issues, the main systems that are needed to ensure operations are not disrupted include fire detection/suppression, HVAC (including temperature and humidity controls), water and sewage systems, power/backup power, communications equipment, and intrusion detection.

Hardware

Another of the more tangible assets that must be protected is all the hardware that makes the network operate. This hardware includes not only the computers and printers with which the users directly come in contact but also the infrastructure devices that they never see such as routers, switches, and firewall appliances. Maintaining access to these critical devices from an availability standpoint is covered later in the sections “Redundancy and Fault Tolerance” and “Backup and Recovery Systems.”

From a management standpoint, these devices are typically managed remotely. Special care must be taken to safeguard access to these management features as well as protect the data and commands passing across the network to these devices. Some specific guidelines include

Change all default administrator passwords on the devices.

Limit the number of users who have remote access to these devices.

Rather than Telnet (which sends commands in cleartext), use an encrypted command-line tool such as Secure Shell (SSH).

Manage critical systems locally.

Limit physical access to these devices.

Software

Software assets include any propriety application, scripts, or batch files that have been developed in house that are critical to the operation of the organization. Secure coding and development practices can help to prevent weaknesses in these systems. Security professionals also must pay attention to preventing theft of these assets.

Moreover, closely monitoring the use of commercial applications and systems in the enterprise can prevent unintentional breach of licensing agreements. One of the benefits of giving users only the applications they require to do their job is that it limits the number of users that have an application, helping to prevent exhaustion of licenses for software.

Information Assets

Information assets are the last asset type that needs to be discussed, but by no means are they the least important. The primary purpose of operations security is to safeguard information assets that are resident in the system. These assets include recipes, processes, trade secrets, product plans, and any other type of information that enables the enterprise to maintain competitiveness within its industry. The principles of data classification and access control apply most critically to these assets. In some cases the dollar value of these assets might be difficult to determine, although it might be clear to all involved that the asset is critical. For example, the secret formula for Coca-Cola has been closely guarded for many years due to its value to the company.

Asset Management

In the process of managing these assets, several issues must be addressed. Certainly, access to the asset must be closely controlled to prevent its deletion, theft, or corruption (in the case of digital assets) and from physical damage (in the case of physical assets). Moreover, the asset must remain available when needed. This section covers methods of ensuring availability, authorization, and integrity.

Redundancy and Fault Tolerance

One way to provide uninterrupted access to information assets is through redundancy and fault tolerance. Redundancy refers to providing multiple instances of either a physical or logical component such that a second component is available if the first fails. Fault tolerance is a broader concept that includes redundancy but refers to any process that allows a system to continue making information assets available in the case of a failure.

In some cases, redundancy is applied at the physical layer, such as network redundancy provided by a dual backbone in a local network environment or by using multiple network cards in a critical server. In other cases, redundancy is applied logically such as when a router knows multiple paths to a destination in case one fails.

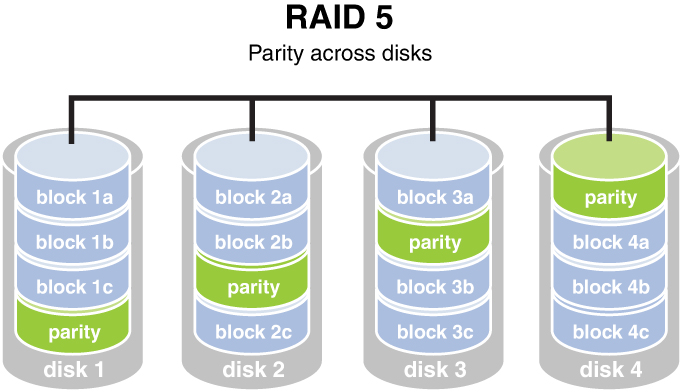

Fault tolerance countermeasures are designed to combat threats to design reliability. Although fault tolerance can include redundancy, it also refers to systems such as Redundant Array of Independent Disks (RAID) in which data is written across multiple disks in such a way that a disk can fail and the data can be quickly made available from the remaining functioning disks in the array without resorting to backup media. Be familiar with a number of RAID types because not all provide fault tolerance. Regardless of the technique employed for fault tolerance to operate, a system must be capable of detecting and correcting the fault.

Backup and Recovery Systems

Although comprehensive coverage of backup and recovery systems is found throughout this chapter, it is important to emphasize here the role of operations in carrying out those activities. After the backup schedule has been designed, there will be daily tasks associated with carrying out the plan. One of the most important parts of this system is an ongoing testing process to ensure that all backups are usable in case a recovery is required. The time to discover that a backup did not succeed is during testing and not during a live recovery.

Identity and Access Management

From an operations perspective, it is important to realize that managing these things is an ongoing process that might require creating accounts, deleting accounts, creating and populating groups, and managing the permissions associated with all of these concepts. An essential task is ensuring that the rights to perform these actions are tightly controlled and that a formal process is established for removing permissions when they are no longer required and disabling accounts that are no longer needed.

Another area to focus on is the control of the use of privileged accounts or accounts that have rights and permissions that exceed those of a regular user account. Although this control obviously applies to built-in administrator, root, or supervisor accounts (which in some operating systems are called root accounts) that have vast permissions, it also applies to any account that confers special privileges to the user.

Moreover, as a security professional, you should maintain the same tight control over the numerous built-in groups that exist in Windows to grant special rights to the group members. When using these groups, make note of any privileges held by the default groups that are not required for your purposes. You might want to remove some of the privileges from the default groups to support the concept of least privilege. You learn more about identity and access management in Chapter 5.

Media Management

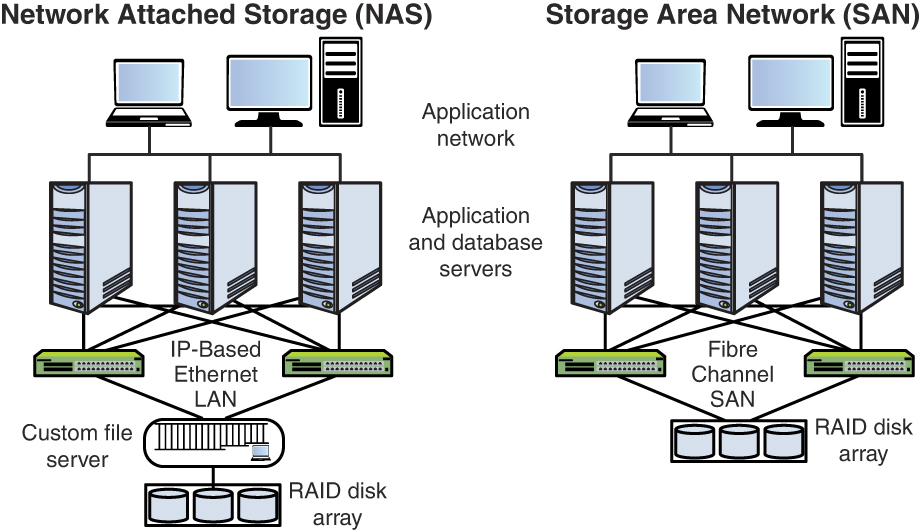

Media management is an important part of operations security because media is where data is stored. Media management includes RAID, SAN, NAS, and HSM.

RAID

Redundant Array of Independent Disks (RAID) refers to a system whereby multiple hard drives are used to provide either a performance boost or fault tolerance for the data. When we speak of fault tolerance in RAID, we mean maintaining access to the data even in a drive failure without restoring the data from backup media. The following are the types of RAID with which you should be familiar.

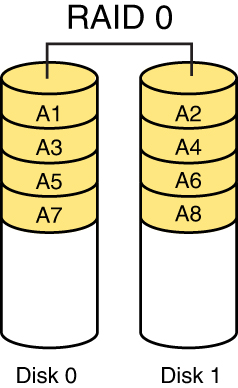

RAID 0, also called disk striping, writes the data across multiple drives or disks. Although it improves performance, it does not provide fault tolerance. Figure 7-2 depicts RAID 0.

Figure 7-2 RAID 0

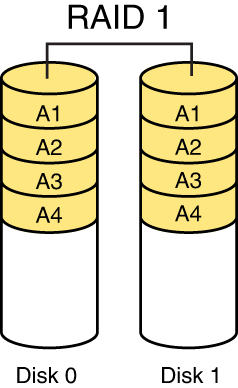

RAID 1, also called disk mirroring, uses two drives or disks and writes a copy of the data to both disks, providing fault tolerance in the case of a single drive failure. Figure 7-3 depicts RAID 1.

Figure 7-3 RAID 1