Erasure coding is implemented by creating a Ceph pool of type erasure. This pool is based on an erasure code profile that defines its erasure-coding characteristics. We will first create an erasure code profile, and then we will create an erasure-coded pool based on this profile.

- The command used in this recipe creates an erasure code profile named EC-profile with k=3 and m=2, which are the numbers of data chunks and coding chunks, respectively. Every object stored in the erasure-coded pool is therefore divided into 3 (k) data chunks, and 2 (m) coding chunks are computed from them, making a total of 5 (k + m) chunks. Finally, these 5 chunks are spread across different failure domains. Because this profile sets the failure domain to osd, each chunk lands on a different OSD.
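To make the k/m split concrete, here is a minimal Python sketch of a k=3, m=2 code. It is a toy Reed-Solomon-style code over the prime field GF(257), chosen only because its arithmetic is short. This is an assumption for illustration and not what Ceph's default jerasure plugin computes internally (jerasure works over GF(2^8)). The helper names `split_into_data_chunks`, `lagrange_eval`, and `encode` are hypothetical.

```python
# Toy erasure code for k=3 data chunks and m=2 coding chunks.
# Assumption: Reed-Solomon-style coding over the prime field GF(257), for
# illustration only; Ceph's jerasure plugin uses different arithmetic.
P = 257  # prime modulus; every byte value 0..255 is a valid field element
K, M = 3, 2


def split_into_data_chunks(data: bytes, k: int = K) -> list[list[int]]:
    """Zero-pad data to a multiple of k bytes and cut it into k equal chunks."""
    chunk_len = -(-len(data) // k)  # ceiling division
    padded = data.ljust(chunk_len * k, b"\0")
    return [list(padded[i * chunk_len:(i + 1) * chunk_len]) for i in range(k)]


def lagrange_eval(xs: list[int], ys: list[int], x: int) -> int:
    """Evaluate at x the polynomial of degree < len(xs) through (xs[i], ys[i]), mod P."""
    total = 0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        num, den = 1, 1
        for j, xj in enumerate(xs):
            if j != i:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        # pow(den, P - 2, P) is the modular inverse of den (Fermat's little theorem)
        total = (total + yi * num * pow(den, P - 2, P)) % P
    return total


def encode(data: bytes, k: int = K, m: int = M) -> list[list[int]]:
    """Return k data chunks followed by m coding chunks: k + m chunks in total."""
    data_chunks = split_into_data_chunks(data, k)
    xs = list(range(1, k + 1))  # data chunk i is the polynomial's value at x = i + 1
    coding_chunks = []
    for c in range(m):
        x = k + 1 + c  # coding chunk c is the polynomial's value at x = k + 1 + c
        coding_chunks.append([
            lagrange_eval(xs, [chunk[pos] for chunk in data_chunks], x)
            for pos in range(len(data_chunks[0]))
        ])
    return data_chunks + coding_chunks


chunks = encode(b"Hello Ceph")
print(len(chunks))       # 5 chunks: 3 data + 2 coding
print(bytes(chunks[0]))  # b'Hell' (the first data chunk)
```

In this toy, coding chunks can hold the value 256, so chunks are kept as integer lists rather than `bytes`. A real implementation over GF(2^8) keeps every chunk byte-sized.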

- Create the erasure code profile:

# ceph osd erasure-code-profile set EC-profile ruleset-failure-domain=osd k=3 m=2

On Luminous and later releases, the ruleset-failure-domain parameter is named crush-failure-domain.

- List the erasure code profiles:

# ceph osd erasure-code-profile ls

- Get the contents of your erasure code profile:

# ceph osd erasure-code-profile get EC-profile
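If you want to read the profile from a script, the key=value lines printed by `ceph osd erasure-code-profile get` can be turned into a dictionary. The sketch below assumes that output format. `SAMPLE` is an illustrative stand-in for real output (your release may print additional keys), and `parse_profile` is a hypothetical helper.

```python
# Illustrative stand-in for the output of `ceph osd erasure-code-profile get EC-profile`.
# Assumption: one key=value pair per line; real output may contain more keys.
SAMPLE = """\
k=3
m=2
plugin=jerasure
ruleset-failure-domain=osd
technique=reed_sol_van
"""


def parse_profile(text: str) -> dict[str, str]:
    """Turn key=value lines into a dictionary, skipping blank or malformed lines."""
    profile = {}
    for line in text.splitlines():
        line = line.strip()
        if "=" not in line:
            continue
        key, value = line.split("=", 1)  # split only on the first '='
        profile[key.strip()] = value.strip()
    return profile


profile = parse_profile(SAMPLE)
k, m = int(profile["k"]), int(profile["m"])
print(f"{k} data + {m} coding = {k + m} chunks per object; up to {m} chunks may be lost")
```

Against a live cluster, you could pass it the `stdout` of `subprocess.run(["ceph", "osd", "erasure-code-profile", "get", "EC-profile"], capture_output=True, text=True)`.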


- Create a Ceph pool of the erasure type, which is based on the erasure code profile that we created in Step 1:

# ceph osd pool create EC-pool 16 16 erasure EC-profile

- Check the status of your newly created pool. You should find that the size of the pool is 5 (k + m), that is, the erasure size is 5. Hence, data will be written to five different OSDs:

# ceph osd dump | grep -i EC-pool
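The arithmetic behind "five different OSDs" can be sketched as follows. Each OSD receives one chunk of roughly object_size / k bytes, so raw usage is (k + m) / k times the logical size. This is a simplification: Ceph pads chunks to its stripe alignment, so real chunk sizes differ slightly. The function name `ec_footprint` and the 4 MiB example object are hypothetical.

```python
def ec_footprint(object_size: int, k: int = 3, m: int = 2) -> dict:
    """Approximate per-OSD and total raw bytes for one object in a k+m erasure-coded pool.

    Simplification: ignores Ceph's stripe-unit padding and alignment.
    """
    chunk_bytes = -(-object_size // k)  # ceiling division: size of each of the k data chunks
    return {
        "osds": k + m,                       # one chunk per OSD (pool size = k + m)
        "chunk_bytes": chunk_bytes,          # bytes stored on each OSD
        "raw_bytes": chunk_bytes * (k + m),  # total bytes across all OSDs
        "overhead": (k + m) / k,             # raw size divided by logical size
        "tolerated_failures": m,             # chunks that can be lost without losing data
    }


fp = ec_footprint(4 * 1024 * 1024)  # a 4 MiB object (example size)
print(fp["osds"], fp["chunk_bytes"], round(fp["overhead"], 2))  # 5 1398102 1.67
```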

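- Let's now add some data to this newly created Ceph pool. To do this, we will create a dummy file, hello.txt, and store it in EC-pool as an object named object1:

# echo "Hello Ceph" > hello.txt
# rados -p EC-pool put object1 hello.txt
# rados -p EC-pool ls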

- To verify that the erasure-coded pool is working correctly, we will check the OSD map for EC-pool and object1:

# ceph osd map EC-pool object1

If you observe the output of the OSD map check, you will notice that object1 is stored in placement group 47.c, which belongs to EC-pool. You will also notice that this placement group is stored on five OSDs: osd.5, osd.3, osd.2, osd.8, and osd.0. If you go back to Step 1, you will recall that we created the erasure code profile with k=3 and m=2. This is why object1 is stored on five (k + m) OSDs.

At this stage, we have finished setting up an erasure-coded pool in a Ceph cluster. Now, we will deliberately break OSDs to see how the erasure-coded pool behaves when OSDs are unavailable.

- We will now try to bring down

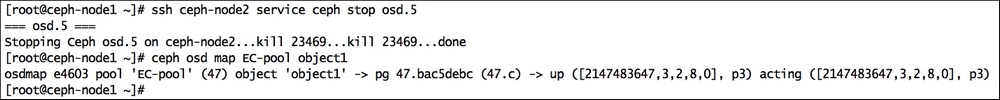

OSD.5andOSD.3, one by one.Bring down

osd.5and check the OSD map forEC-poolandobject1. You should notice thatosd.5is replaced by a random number,2147483647, which means thatosd.5is no longer available for this pool:# ssh ceph-node2 service ceph stop osd.5 # ceph osd map EC-pool object1

- Similarly, break one more OSD, osd.3, and check the OSD map for EC-pool and object1. Like osd.5, osd.3 is now replaced by the placeholder 2147483647, which means that osd.3 is also no longer available for EC-pool:

# ssh ceph-node2 service ceph stop osd.3
# ceph osd map EC-pool object1
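The effect of the two failures can be checked programmatically by scanning an acting set for the 2147483647 placeholder. This sketch makes several assumptions: position i in the acting set holds shard i, shards 0 to k-1 are data chunks and the rest are coding chunks, and the acting set printed for object1 was [5, 3, 2, 8, 0] (the order in which the OSDs are listed above). It only tests the "at least k chunks" decoding threshold and ignores the pool's min_size. `NONE_OSD`, `label_shards`, and `can_decode` are hypothetical names.

```python
NONE_OSD = 0x7FFFFFFF  # 2147483647 == 2**31 - 1, the "no OSD" placeholder in the OSD map


def label_shards(acting: list[int], k: int = 3) -> list[tuple]:
    """Return (shard, role, osd) per position; osd is None where the slot is empty."""
    labels = []
    for shard, osd in enumerate(acting):
        role = "data" if shard < k else "coding"  # assumption: shards 0..k-1 hold data
        labels.append((shard, role, None if osd == NONE_OSD else osd))
    return labels


def can_decode(acting: list[int], k: int = 3) -> bool:
    """True when at least k of the k + m shards still map to an OSD."""
    return sum(osd != NONE_OSD for osd in acting) >= k


degraded = [NONE_OSD, NONE_OSD, 2, 8, 0]  # assumed acting set after stopping osd.5 and osd.3
for shard, role, osd in label_shards(degraded):
    print(shard, role, f"osd.{osd}" if osd is not None else "MISSING")
print(can_decode(degraded))                 # True: 3 chunks remain and k = 3
print(can_decode([NONE_OSD] * 3 + [8, 0]))  # False: only 2 chunks would remain
```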

- Now, EC-pool is running on three OSDs, which is the minimum required by this erasure code profile (k = 3). As discussed earlier, EC-pool needs any three of the five chunks to read the data. We have exactly three chunks left, on osd.2, osd.8, and osd.0, so we can still access the data. Whether the pool keeps serving I/O with only k chunks also depends on its min_size setting, which you can check with ceph osd pool get EC-pool min_size. Let's verify that the data can be read:

# rados -p EC-pool ls
# rados -p EC-pool get object1 /tmp/object1
# cat /tmp/object1
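Reading object1 with only three chunks left works because any k chunks determine the whole object. The following self-contained sketch illustrates this with a toy Reed-Solomon-style code over GF(257), which is an assumption for illustration and not Ceph's jerasure arithmetic. It encodes a sample payload into five shards, drops shards 0 and 1 (assumed to be the ones held by osd.5 and osd.3), and rebuilds the payload from the remaining three. All helper names are hypothetical.

```python
P = 257  # toy field GF(257); assumption, not Ceph's GF(2^8) arithmetic


def lagrange_eval(xs, ys, x):
    """Evaluate at x the polynomial of degree < len(xs) through (xs[i], ys[i]), mod P."""
    total = 0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        num, den = 1, 1
        for j, xj in enumerate(xs):
            if j != i:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        total = (total + yi * num * pow(den, P - 2, P)) % P  # modular inverse via Fermat
    return total


def encode(data: bytes, k: int = 3, m: int = 2) -> list[list[int]]:
    """Split data into k zero-padded chunks and append m coding chunks; shard s sits at x = s + 1."""
    n = -(-len(data) // k)  # chunk length (ceiling division)
    padded = data.ljust(n * k, b"\0")
    shards = [list(padded[i * n:(i + 1) * n]) for i in range(k)]
    xs = list(range(1, k + 1))
    for x in range(k + 1, k + m + 1):
        shards.append([lagrange_eval(xs, [s[pos] for s in shards[:k]], x) for pos in range(n)])
    return shards


def decode(surviving: dict[int, list[int]], k: int = 3, length: int = -1) -> bytes:
    """Rebuild the original bytes from any k surviving shards, keyed by shard index."""
    if len(surviving) < k:
        raise ValueError(f"need {k} shards, got {len(surviving)}")
    ids = sorted(surviving)[:k]
    xs = [s + 1 for s in ids]
    n = len(surviving[ids[0]])
    # Interpolate each byte position and read the data chunks back at x = 1..k.
    data = bytes(
        lagrange_eval(xs, [surviving[s][pos] for s in ids], d + 1)
        for d in range(k)
        for pos in range(n)
    )
    return data if length < 0 else data[:length]


payload = b"Hello Ceph"
shards = encode(payload)
# Assumption: osd.5 and osd.3 held shards 0 and 1, so shards 2, 3 and 4 survive.
surviving = {s: shards[s] for s in (2, 3, 4)}
print(decode(surviving, length=len(payload)))  # b'Hello Ceph'
```

Any three of the five shards work equally well here, which is the property that lets the pool lose any two chunks.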

Erasure coding benefits greatly from Ceph's robust architecture. When Ceph detects that a failure domain is unavailable, it starts its normal recovery process. During recovery, an erasure-coded pool rebuilds itself by decoding the lost chunks from the surviving ones and writing them to other OSDs, after which all chunks are available again automatically.

- In the previous two steps, we intentionally broke osd.5 and osd.3. After a while (once the stopped OSDs are marked out, which is governed by the mon_osd_down_out_interval setting), Ceph will start recovery and regenerate the missing chunks on other OSDs. Once recovery is complete, check the OSD map for EC-pool and object1 again. You should see new OSD IDs, such as osd.7 and osd.4, in place of the failed ones. In this way, an erasure-coded pool returns to a healthy state without administrative intervention.
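Recovery differs from a degraded read in that the regenerated chunks are written back to the cluster. A minimal sketch of that idea, using a toy GF(257) Reed-Solomon-style code (an assumption, not Ceph's implementation), recomputes the missing shards from three survivors and assigns them to replacement OSDs. The replacement IDs osd.7 and osd.4 come from the text; which shard each one receives, the assumed acting-set order, and the helper names are hypothetical.

```python
P = 257  # toy field GF(257); assumption, not Ceph's GF(2^8) arithmetic
NONE_OSD = 0x7FFFFFFF  # 2147483647, the "no OSD" placeholder


def lagrange_eval(xs, ys, x):
    """Evaluate at x the polynomial of degree < len(xs) through (xs[i], ys[i]), mod P."""
    total = 0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        num, den = 1, 1
        for j, xj in enumerate(xs):
            if j != i:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        total = (total + yi * num * pow(den, P - 2, P)) % P
    return total


def encode(data: bytes, k: int = 3, m: int = 2) -> list[list[int]]:
    """Split data into k zero-padded chunks and append m coding chunks; shard s sits at x = s + 1."""
    n = -(-len(data) // k)
    padded = data.ljust(n * k, b"\0")
    shards = [list(padded[i * n:(i + 1) * n]) for i in range(k)]
    xs = list(range(1, k + 1))
    for x in range(k + 1, k + m + 1):
        shards.append([lagrange_eval(xs, [s[pos] for s in shards[:k]], x) for pos in range(n)])
    return shards


def rebuild(surviving: dict[int, list[int]], missing: list[int], k: int = 3) -> dict[int, list[int]]:
    """Recompute each missing shard (data or coding) from k surviving shards."""
    ids = sorted(surviving)[:k]
    xs = [s + 1 for s in ids]
    n = len(surviving[ids[0]])
    return {
        s: [lagrange_eval(xs, [surviving[i][pos] for i in ids], s + 1) for pos in range(n)]
        for s in missing
    }


shards = encode(b"Hello Ceph")
acting = [NONE_OSD, NONE_OSD, 2, 8, 0]  # assumed acting set after the two failures
replacements = iter([7, 4])             # new OSD IDs observed after recovery
survivors = {s: shards[s] for s, osd in enumerate(acting) if osd != NONE_OSD}
lost = [s for s, osd in enumerate(acting) if osd == NONE_OSD]
regenerated = rebuild(survivors, lost)
for s in lost:
    acting[s] = next(replacements)  # stands in for writing the rebuilt shard to a new OSD
print(acting)                                          # [7, 4, 2, 8, 0]
print(all(regenerated[s] == shards[s] for s in lost))  # True
```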