CHAPTER 6

Developing the Architecture

In this chapter, we address planning for architecture development projects. Topics include an overview of the project plan and a discussion of important project implementation issues, such as the statement of work (SOW) for an enterprise architecture (EA) project, EA implementation steps as identified in the selected EA development methodology, and the EA life cycle, tools, and repositories. Corporate culture influences an enterprise’s approach to each of these topics.

Overview of the Project Plan

Planning for the last few steps of the Six-Step Process, illustrated in Figure 6-1, focuses on planning the architecture development project or program and how the work will be done. The scope of the work, identified in the initial steps of the Six-Step Process, must be determined in order to develop the SOW for a project. The work described in the SOW may take one or more fiscal years or budget funding cycles to accomplish, so it is important to plan the architecture project and, as necessary, to prioritize the development of views and data into multiple releases.

Figure 6-1 How the work will be done

Some of the decisions made in planning the details of the architecture project may cause the initial decisions about the views (project deliverables) to be revisited. The methodologies, techniques, and tools selected for the management and technical approaches used in a project may impact or constrain the data and views to be developed. For example, a decision to use a service-oriented architecture (SOA) approach for system/software development means that steps 3 and 4 of the Six-Step Process will need to be reviewed to ensure that the data and views that focus on services have been included. The selected tools may limit the views that can be developed and constrain the options and tailoring that can be used.

Some tools have built-in forms of tailoring for specific views. If such a tool is selected, those views must be developed in the format the tool prescribes. Other tools may have limitations on the views and tailoring they can support. If such a tool is selected, compromises may have to be made regarding the original set of views or tailoring selected in step 4.

The Project Plan

In this section, we focus on the elements of the architecture project plan.

Rather than use a specific outline for the project plan, sometimes also called a project or program management plan, we review the key topics that any project plan needs to cover:

• What is to be done? SOW, work breakdown structure (WBS), deliverables, reviews

• Who is involved? Stakeholders and project members with roles identified

• How will the work be done? Management and technical processes, methods, techniques, tools, computer resources, and facilities

• When will the work be done? Project schedule and milestones

Each of these key topics is discussed in the following sections.

What Is to Be Done

A key element of the project plan is a discussion of the work that is to be accomplished. Typically, a project will start with the development of a SOW or the receipt of a SOW from a customer who may be internal or external to the development group. The SOW spells out the work to be done in terms of deliverables (both management and technical), high-level schedule, and required reviews. Figure 6-2 provides a list of elements for an EA project SOW. A discussion of the contents of each of the suggested SOW sections is provided later in the chapter.

Figure 6-2 Suggested EA project SOW elements

The usual response to a SOW is to develop a WBS, which outlines the tasks necessary to manage and create the deliverables identified in the SOW and identifies dependencies among these tasks. These tasks include both technical and management tasks and may be guided by the specific methodology or process that will be used to perform the work. The WBS is an input to the detailed project work plans and schedules. Many types of system and software development projects have generic WBSs that can be used as starting points for specific projects in these areas. Typically, generic WBSs can be found in textbooks or are part of corporate or enterprise management standards. Unfortunately, EA is a discipline that lacks generic WBSs. Some corporations or EA development departments may have such WBSs for in-house use, but they have not been generally accepted as standard approaches. A generic EA project WBS is something an EA development group may want to develop for itself after experience with several EA projects or several iterations of releases for an evolving EA.
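As a minimal sketch of how a WBS records dependencies among tasks, the Python fragment below stores each task with the set of tasks it depends on and derives one valid execution order using the standard library's `graphlib` module (Python 3.9+). The task names and numbering are hypothetical illustrations, not a generic EA WBS.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical WBS fragment: each task maps to the set of tasks it depends on.
# The names and numbers are illustrative only; a real WBS is derived from the SOW
# and the selected development methodology.
wbs = {
    "1.1 Review existing documents": set(),
    "1.2 Interview SMEs": set(),
    "2.1 Draft operational views": {"1.1 Review existing documents", "1.2 Interview SMEs"},
    "2.2 Draft systems views": {"2.1 Draft operational views"},
    "3.1 Review integrated views": {"2.1 Draft operational views", "2.2 Draft systems views"},
    "4.1 Prepare status report": set(),
}


def execution_order(tasks):
    """Return one ordering of WBS tasks in which every task follows its dependencies."""
    try:
        return list(TopologicalSorter(tasks).static_order())
    except CycleError as err:
        # err.args[1] lists the tasks that form the circular dependency.
        raise ValueError(f"Circular WBS dependency: {err.args[1]}") from err


if __name__ == "__main__":
    for task in execution_order(wbs):
        print(task)
```

Circular dependencies are reported as an error rather than silently ordered, since a WBS with a cycle cannot be scheduled as written.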

The deliverables listed in the SOW and addressed by tasks in the WBS will include the views and related data identified in steps 3 and 4 of the Six-Step Process, as well as any necessary management reports, technical briefings, and management processes such as configuration management. Since the DoDAF (and many other architecture frameworks) provides only high-level guidance, the description of the EA views needs to include any required options and tailoring as determined in step 4 of the Six-Step Process. The list of views also needs to include any selected Fit-for-Purpose views, with explicit descriptions of the desired format and integration requirements of these views.

Technical deliverables may also include the results of analyses: both analyses needed to develop the architecture and other analyses for which the developed architecture data is only one of several inputs. Many types of analysis that support decision-makers draw on both architecture and financial data. Examples of such analyses include return on investment (ROI), business case analysis, and activity-based costing. Other types of technical analyses include performance or sensitivity analysis based on simulations.

The reviews of the technical deliverables also need careful identification and description. Unlike systems and software development, EA development has no standard reviews for views and data, so each development organization will have to work out its own reasonable set of reviews. However, there are some known pitfalls that should be avoided. A common mistake is to hold a review of “final” operational views and data before the systems or services views and data have been developed. The DoDAF views need to form an integrated set, and developing the systems or services views will uncover data that causes changes in the operational views and data. Placing the operational views under formal configuration management (CM) too early (that is, accepting a set of operational views and corresponding data as “final” through a review process before the system or services views are developed) may significantly slow down the development process, since developing the systems or services views will predictably result in a fair number of needed changes. Reviewing draft views and data by viewpoint may be appropriate, but premature CM of single viewpoints should be avoided.

Who Is Responsible

The project plan needs to identify all the participants in the development process together with their roles and responsibilities. The organizational structure of the development team needs to be spelled out and the skills needed by the various team roles identified. As EA development is a collaborative process involving both members of the enterprise and the development team, the business or mission personnel and IT personnel of the enterprise in question have roles to play as well as the members of the EA development team. The “owners” of the EA need to provide overall enterprise vision and direction. While the EA development team can help facilitate the development of an enterprise strategy, ultimately, it is the executives and business or mission leaders who must buy into and own the vision and strategy. Otherwise the EA effort is wasted. Executives, business leaders, and subject matter experts (SMEs) must also participate in the review and validation of the architecture. This review and validation can be part of the development process and also part of the governance process discussed in Chapter 9.

An enterprise engaged in developing and using an EA should have an organization that is charged with the development, maintenance, and evolution of the EA. In government, this organization is usually called the EA program office and is usually headed by a chief architect. Whatever the name, best practice says that this organization needs to report directly to the enterprise’s chief information officer (CIO) or equivalent. If the EA program office is organizationally distant from the CIO’s office, this is an indication that the EA program does not have an appropriate level of buy-in or support from the enterprise’s senior management. Note that the EA program office should report to the CIO rather than the chief technology officer (CTO), since the EA needs the active participation of the mission or business management and cannot be treated as simply an IT matter if the EA program is to be successful.

Best practice also indicates that an EA executive steering committee (EAESC) should be established to review and formally accept each architecture release. The EAESC includes representatives of senior management and business unit executives. A technical review committee and a business review committee are usually established to assist the EAESC with reviewing IT and business aspects of the architecture, respectively. The EAESC may also approve the appointment of the chief architect.

The EA program office needs adequate staff and resources in order to be credible, even if most of the development or update work is outsourced to an outside contractor. The EA program office may have dedicated staff or may depend on staff matrixed in from other organizations. Integrated product teams (IPTs) composed of SMEs and development team members can be used to help jumpstart as-is portions of architectures, to brainstorm to-be portions of architectures, and for short-term architecture validation activities. However, IPTs are not effective for long-term activities because the SMEs have other jobs and usually cannot devote large amounts of their time to architecture tasks.

The roles and skills needed by the EA program office include management, technical and business expertise, architecture development specialists, reviewers and validators, tool support, and liaison with external enterprise organizations.

The chief architect’s job requires a number of skills, as illustrated in Figure 6-3. The chief architect is usually the manager of the EA project, so he or she needs managerial skills and the ability to handle both in-house development and contract issues. The chief architect needs a good understanding of the enterprise missions and business as well as sufficient understanding of technical issues to know what is appropriate and feasible from a technical point of view with respect to the enterprise’s domain. The chief architect needs a good understanding of architecture frameworks, views, methodologies, and tools, as well as the uses to which EA data will be put within the enterprise. The chief architect needs strong communications skills to interface with executive management, business management, and IT management. Because all these skills and knowledge are rarely found in a single individual, a chief architect will typically have deputies to provide expertise in areas where the chief architect is not as strong.

Figure 6-3 Chief architect skills

The development specialists have expertise in the various modeling techniques and views needed for the viewpoints involved in the architecture. In addition, the development specialists may include architect’s assistants who manage the input of architecture data into complex architecture development tools. The architect’s assistants enable the other development specialists to focus on modeling issues and view content and free them from having to deal with the details of the tools.

Architecture reviewers, who check the views and data for correctness, consistency, completeness, adherence to enterprise standards, and other quality issues, may include members of the development team, business and technical managers, and SMEs. Architecture validators determine whether the views and data appropriately reflect the current enterprise status (for as-is views) or the desired enterprise state (for to-be views). Validators need to include managers and SMEs. Select validators carefully, because SME groups may include multiple tribes (see Chapter 2) and may have conflicting opinions and priorities. Representatives from all relevant corporate tribes need to be included. If conflicting opinions arise, the architects should use the views and data of the architecture to illustrate the underlying issues to the appropriate level of enterprise management as soon as possible, so that the issues can be resolved and any risks appropriately managed and mitigated.

The development team will need access to IT specialists for installation, customization, and maintenance of the architecture development and analysis tools and their integration, as well as for general computing and communications support. Complex architecture development tools frequently have customization features that enable the tool to be adjusted to support specific view tailoring. This customization needs to be set up initially for the tailoring selected in step 4 of the Six-Step Process and specified in any enterprise EA standards. The customization will need adjustment as the EA project progresses and the tailoring is refined. In addition, the various tools used in the EA development project, including development, repository, analysis, management, and presentation tools, frequently need additional custom software to support their integration. These IT specialists may be dedicated members of the development team for large EA projects, or they may be available from some centralized support organization within the enterprise.

The EA project team needs the support of additional personnel to handle tasks associated with the CM process. These personnel act as the secretariat for the configuration control board (CCB) and handle such tasks as setting up CCB meetings and agendas and publishing reports on CCB activities. These personnel may be directly associated with the EA project team or may be organized in other ways, depending on corporate practices. The EA project team will also need the support of quality assurance (QA) personnel. Again, these personnel may be directly associated with the EA project team or with a separate QA organization. Best practice directs that the head of QA report to someone other than the chief architect.

Finally, the EA project team will need to maintain liaisons with other organizations or groups within the enterprise whose decisions may impact the architecture. While the architecture provides information to groups such as strategic planning and investment management, decisions or changes made by these groups directly impact the architecture. Although one of the chief architect’s roles is to be the primary liaison with executive and management groups within the enterprise, the chief architect may want to appoint team members as special liaisons to critical external groups.

How Work Will Be Done: Managerial and Technical Approaches

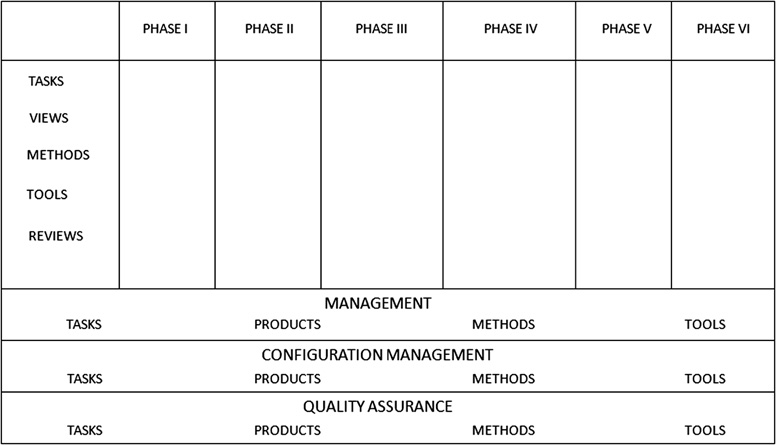

In laying out how the work will be done in a systems or software project, you would typically use a standard life cycle to identify the phases involved in the scope of the project and lay out your WBS tasks against the technical phases and the cross-phase categories. Ideally, you would develop a filled-in version of a chart similar to the one in Figure 6-4 to help you understand the order in which tasks will be accomplished and views and data will be developed, which techniques and tools will be used, and what reviews will be accomplished.

Figure 6-4 Basic life cycle chart

The challenge with an EA project is that there are no EA life cycle standards available. There are no accepted standard definitions for the phases and no standards for where to start or for reviews (including what to review, when to review, and entry and exit criteria). As a result, an EA project organization needs to make its own decisions about how the work will be done, with its selected methodology as its only basic guidance. Frameworks vary widely in the amount of methodology or process guidance they provide. While the TOGAF provides a life cycle methodology (see Chapter 22), the DoDAF provides only minimal process guidance beyond the Six-Step Process. The DoDAF leaves the development organization free to select its own process or methodology and life cycle.

At a minimum, the EA project plan should outline both the management approach and the technical approach. The management approach includes tasks that need to be executed continuously across all of the technical tasks. These include estimating, scheduling, tracking, reporting, configuration management, and quality assurance. The project plan must discuss specific methods, techniques, and tools that will be used for each of these management tasks. For example, the project plan needs to include a description of the CM processes and tools, or at least provide a pointer to a configuration management plan. Similarly, the QA processes and tools can be described or the project plan can point to a QA plan. Additional management tasks include risk management and data strategy.

Risk management is an important, but frequently avoided, part of project management. Risk management is the practice of planning for potential future adverse events or impacts on the project and its products. For architecture development projects, this practice involves the identification of potential issues with the architecture that may significantly impact the improvements to be implemented based on the architecture. Risk management is a field in itself and will not be further addressed in this book.

Because data is so important in architecture, architecture project management also needs to develop a set of data-related strategies to complement and support the view-development process. These data-related strategies include data management (usually for the architecture data repository), data validation, and data dissemination. Again, the details of these data management issues form a field of their own and are not addressed in this book. (See DAMA-DMBOK: Data Management Body of Knowledge, 2nd Edition, by DAMA International, Technics Publications, 2017.) Some of these topics will be covered on a broad level later in this chapter and in Chapter 7.

The technical approach includes a discussion of the process or methodology and tools that will be used to develop, manage, and maintain the architecture views and data. The process or methodology will include the order in which to develop views and data and the specific modeling techniques and tools used to do so. An enterprise may develop its own EA development methodology or use a “name-brand” process such as that provided by TOGAF. The development methodology or process needs to include or be coordinated with the following:

• Architecture validation strategy

• Architecture evolution strategy

• Architecture maintenance strategy

• Architecture dissemination strategy

• Enterprise transition planning

These topics are discussed in more detail in the following chapters. The technical process description should also include the process for architecture view and data reviews and the nominal schedule for these reviews with respect to other development tasks and events.

When Will the Work Be Done

An important part of planning is the development of project schedules, and a key part of developing schedules is level of effort (LOE) estimating for the various tasks that need to be done. Schedules for architecture development are complicated by the fact that EA data is used to support decision-making in major enterprise processes, and decisions made during these processes may in turn impact the EA. Estimating LOE is based primarily on experience. EA is still a new field, and experience is limited for most enterprises.

Scheduling Milestones

Typical internal milestones include task completion dates, view draft delivery dates, and review dates. Scheduling must also deal with dependencies among EA development tasks. For as-is portions of architectures, for example, at least some portion of the document review and SME interviews must be completed before view development begins. These are typical scheduling problems, and the approaches for dealing with them do not differ from those employed in most system or software projects.
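Task dependencies like these are commonly handled with the forward pass of the critical path method. The sketch below assumes hypothetical task durations in working days and computes each task's earliest start and finish, on the assumption that a task can begin only when all of its predecessors are complete.

```python
from graphlib import TopologicalSorter

# Hypothetical EA tasks: name -> (duration in working days, set of predecessor tasks).
tasks = {
    "Document review": (10, set()),
    "SME interviews": (15, set()),
    "Operational view drafts": (20, {"Document review", "SME interviews"}),
    "Systems view drafts": (25, {"Operational view drafts"}),
    "Draft view review": (5, {"Systems view drafts"}),
}


def earliest_schedule(tasks):
    """Forward pass of the critical path method.

    Returns {task: (earliest_start, earliest_finish)} in days from project start,
    assuming each task starts as soon as all of its predecessors have finished.
    """
    graph = {name: deps for name, (_, deps) in tasks.items()}
    schedule = {}
    for name in TopologicalSorter(graph).static_order():
        duration, deps = tasks[name]
        start = max((schedule[dep][1] for dep in deps), default=0)
        schedule[name] = (start, start + duration)
    return schedule


def project_length(schedule):
    """Earliest finish of the last task, i.e., the minimum project duration."""
    return max(finish for _, finish in schedule.values())


if __name__ == "__main__":
    plan = earliest_schedule(tasks)
    for name, (start, finish) in plan.items():
        print(f"{name}: day {start} to day {finish}")
    print("Project length:", project_length(plan), "days")
```

With these figures, view development cannot start until day 15, when the longer of the two preparatory tasks (the SME interviews) finishes.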

One of the complicating issues of scheduling EA development or evolution activities is that there are many external process milestones that may constrain the EA development schedule. Examples of these external processes include the following:

• Enterprise budgeting process

• Investment review process

• System development life cycle process

• System of systems integration testing process

Each of these external processes may require types of EA data to support decision-making at key milestones. Budgeting and investment review processes usually have yearly cycles and require EA data to support decisions about which systems to select for initial funding, continuing funding, or retirement. Systems under development may require sufficient EA data to provide compliance criteria and guidance at key life cycle milestones. Planning for System of Systems (SoS) integration testing requires EA data such as internal and external interfaces for each system involved. Enterprise executives may decide that the EA development or evolution schedule needs to be adjusted so that the required data is available to meet key milestone dates for any of these processes. For system development life cycle and SoS integration testing processes, these milestone dates are usually those associated with critical systems.
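One way to make these external constraints visible during planning is to compare planned EA release dates against the milestones that consume EA data. The sketch below is a hypothetical illustration: the release contents, milestone names, and dates are invented for the example. The check simply reports any data that no release delivers by a milestone's date.

```python
from datetime import date

# Hypothetical EA releases: name -> (planned delivery date, EA data the release provides).
releases = {
    "Release 1": (date(2025, 3, 31), {"as-is systems inventory", "as-is interfaces"}),
    "Release 2": (date(2025, 9, 30), {"to-be capabilities", "to-be interfaces"}),
}

# Hypothetical external milestones: name -> (milestone date, EA data needed by then).
milestones = {
    "Budget submission": (date(2025, 6, 1), {"as-is systems inventory", "to-be capabilities"}),
    "SoS integration test planning": (date(2025, 10, 15), {"to-be interfaces"}),
}


def late_data(releases, milestones):
    """Return {milestone: EA data items that no release delivers by the milestone date}."""
    gaps = {}
    for milestone, (due, needed) in milestones.items():
        available = set()
        for delivered, provides in releases.values():
            if delivered <= due:
                available |= provides
        missing = needed - available
        if missing:
            gaps[milestone] = missing
    return gaps


if __name__ == "__main__":
    for milestone, missing in late_data(releases, milestones).items():
        print(f"{milestone}: missing {sorted(missing)}")
```

In this example, the budget submission would lack the to-be capability data, which is the kind of conflict that might lead executives to adjust the EA release schedule.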

In turn, decisions made during the processes described may impact the EA. Budgeting and investment review decisions may impact the planned timing of systems, business process, or capability evolution and architecture transition. Budgeting and investment review decisions, as well as EA decisions driven by system development needs, may also impact the speed of technology and standards introduction. SoS integration testing process results may impact key interfaces. All of these interactions complicate the scheduling of EA development, maintenance, and evolution.

As always, schedules also need to include a management reserve based on risk analysis and planning.
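One common way to size such a reserve, though this chapter does not prescribe it, is to sum the exposure (probability times impact) of each identified risk. The minimal sketch below assumes a hypothetical risk register with schedule impacts in working days.

```python
# Hypothetical risk register: (description, probability of occurring, schedule impact in days).
risks = [
    ("Key SMEs unavailable for interviews", 0.4, 15),
    ("Delays integrating a new modeling tool", 0.3, 20),
    ("Budget cycle forces an early release", 0.2, 10),
]


def schedule_reserve(risks):
    """Return the total risk exposure in days: the sum of probability x impact."""
    for description, probability, _ in risks:
        if not 0.0 <= probability <= 1.0:
            raise ValueError(f"Probability out of range for {description!r}: {probability}")
    return sum(probability * impact for _, probability, impact in risks)


if __name__ == "__main__":
    print(f"Suggested schedule reserve: {schedule_reserve(risks):.1f} days")
```

With these figures, the exposure is 6 + 6 + 2 = 14 days.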

LOE Estimating Issues

LOE estimates for each EA development task, or possibly for each EA view, are necessary to support the development of reliable schedules. Because experience is a large factor in LOE estimating, planners must review organizational experience with EA development with respect to the following:

• The EA development process or methodology being used, including the views being developed

• The level of detail required in the architecture under development, including the types of architectures (as-is or to-be) under development

• The development tools being used

If the process, view set, or development tools are new to the development organization, additional time will be required for training and for the learning curve. New tools will also need additional time to be put in place and integrated.

There are no generic industry guidelines for LOE estimates for EA development. Government projects typically do not keep detailed enough records to provide meaningful guidance. Commercial firms that do keep detailed records usually consider their experience data a competitive advantage and treat it as proprietary. Few reports with detailed data are published. If the development organization has no experience with EA development, or most of the personnel on the development team are not experienced with EA, then the best way to generate some initial LOE estimates is through a pilot effort. Pilot efforts are best focused on a small subset or “sliver” of the full EA that concerns a limited but well-defined mission performed by a small, well-defined, and cooperative set of operators who have a specific architecture issue. A successful pilot project can result in two beneficial outcomes: an example of an early success in the use of EA and estimates of the minimum LOE for the involved architecture tasks.
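Once a pilot has produced per-view effort figures, a first-cut LOE for a larger release can be extrapolated from them. The sketch below is illustrative only: the hourly rates, view counts, and the `learning_allowance` uplift for new processes or tools are hypothetical parameters. Because the pilot yields minimum LOE figures, the result should be read as a lower bound.

```python
# Hypothetical pilot results: view type -> average hours per view observed in the pilot.
pilot_hours_per_view = {"operational": 60.0, "systems": 80.0, "services": 70.0}

# Hypothetical plan for the full EA release: view type -> number of views.
planned_views = {"operational": 12, "systems": 8, "services": 5}


def estimate_loe(pilot_rates, plan, learning_allowance=0.0):
    """Extrapolate total LOE hours from pilot rates.

    learning_allowance is a hypothetical fractional uplift (0.2 means 20%)
    for training and the learning curve when the process, views, or tools are new.
    """
    missing = set(plan) - set(pilot_rates)
    if missing:
        raise ValueError(f"No pilot data for view types: {sorted(missing)}")
    base = sum(pilot_rates[view] * count for view, count in plan.items())
    return base * (1.0 + learning_allowance)


if __name__ == "__main__":
    print(estimate_loe(pilot_hours_per_view, planned_views))
    print(estimate_loe(pilot_hours_per_view, planned_views, learning_allowance=0.2))
```

Refusing to extrapolate to view types the pilot never produced keeps the estimate tied to actual experience.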

Summary of Project Planning

Organizations planning for architecture development projects should follow their standard planning procedures with attention to the following differences:

• There is no standard EA life cycle or standard set of reviews.

• The EA life cycle or development process must interact or interface with other key enterprise processes.

• There is less organizational experience with EA processes and views.

• There are fewer experienced personnel for EA development.

• For U.S. government organizations, there have been steadily increasing and changing federal requirements with respect to EA.

Standard planning should result in the following characteristics:

• A clear assignment of responsibilities

• A clear identification of deliverables

• A clear description of the processes, both managerial and technical, to be used

• A detailed schedule with identification of task dependencies

Details of Project Planning

This section focuses on important details of project planning, including specific details of the SOW for architecture projects and methodology/process, tool, and repository issues.

Statement of Work

All projects, whether internal or contracted, are based on some understanding of the work the project is to undertake. This understanding is usually documented in a written SOW. Architects need to have a good understanding of the elements of a SOW for an EA project since they may be required to develop or to respond to SOWs. The following subsections cover a generic SOW for an EA development project and highlight issues that are specific to EA projects.

Figure 6-2 provides an outline for an EA development project SOW. This outline is similar to the one provided by The Open Group. Each item on the outline is discussed in its own subsection below. Key information included in the SOW is provided by the first few steps of the Six-Step Process discussed in Chapter 5, and, where applicable, outline items are related back to the corresponding steps of the Six-Step Process.

Title

The title for the SOW usually includes the EA name in some form.

Project Background

The SOW should include a discussion of the sponsoring organization(s) and the context necessary for understanding their reasons for needing an EA.

Project Description and Scope

The SOW should include a discussion of the purpose of the EA and the EA scope as identified in steps 1 and 2 of the Six-Step Process. The entire list of stakeholder questions need not be included, but the stakeholders (the users of the architecture) need to be identified, as well as all the scope boundaries from step 2. This section of the SOW should identify the level of architecture that is being developed (such as enterprise, segment, or solution).

Architecture Vision

This section might be better called “Strategic Vision.” The SOW should include the drivers for the architecture from step 1 of the Six-Step Process, especially any strategic visions and plans, strategies, goals, or objectives that the EA is to support. Especially for solution architectures, it is always important to link the architecture back to the high-level business and mission needs that are driving the need for the architecture.

Management Approach

The SOW should spell out the required project management approach in terms of the management reporting that is expected of the executors of the SOW. This section should outline the types of estimating, scheduling, tracking, and reporting that will be expected. Configuration or version management, quality assurance, and risk management tasks and reporting requirements should also be identified.

Change Procedures

During the course of the project, the details of the SOW may change. This is especially true of EA projects, where the specific views, required data, and options or tailoring tend to evolve as the understanding of the architectural domain matures during EA development. Because the SOW is the written basis for the project, it needs to be updated to reflect these and other types of changes. If it is not updated, the parties involved may develop divergent expectations of what the outcomes of the project should be. This type of misunderstanding can be quite serious, especially in a contractual situation or when management personnel turn over during the project.

The Change Procedures section of the SOW should outline the procedures for updating the SOW, including a description of how changes are proposed and the identification of who has the authority to approve proposed changes. There may be a committee or board that needs to approve proposed changes. Note that this section specifically addresses the change procedures for the SOW itself, not any configuration management procedures for the EA views and data.

Responsibilities and Deliverables

The SOW needs to outline clearly what products, artifacts, data, and other outcomes need to be generated by the project and who is responsible for which outcomes. In a contractual situation, the SOW identifies the responsibilities of the issuers of the SOW as well as the responsibilities of the executors of the SOW. The responsibilities of the issuers of the SOW may include providing timely access to documents and SMEs for as-is portions of an EA and access to critical business and technical SME groups for to-be portions of an EA. These responsibilities need to be spelled out in the SOW so that it is clear how delays in gaining access to needed data and experts will affect the outcomes of the project. The responsibilities of the executors of the SOW include both the management and technical deliverables.

The management deliverables will include required status reports and briefings with the contents based on the work identified in the Management Approach section of the SOW. The technical deliverables include the EA views and data that are identified in step 4 of the Six-Step Process and possibly the standard views and data identified in step 6. The options (such as the types of models) and tailoring needed for the EA views and data, identified in step 4 of the Six-Step Process, need to be spelled out in the SOW. These options and tailoring choices can be updated with changes via the procedures in the Change Procedures section of the SOW. The electronic format for these EA deliverables should also be specified. The issuers of the SOW don’t want the EA views only in a paper format, and the data is not useful except in an electronic format. It is important that the issuers and executors of the SOW agree on what electronic format is to be delivered. This format may be driven by a standard or by the tools the issuer of the SOW plans to use. The electronic format may also be driven by a requirement to deliver portions of the architecture to a government or other external repository. As an alternative to including the view options and tailoring in the SOW, the SOW can include a process for agreeing on and documenting the selected options and tailoring as part of the EA development process.

Failure to agree on view options and tailoring or on an acceptable electronic delivery format can cause serious problems, especially in contractual situations. Failure to agree on view options and tailoring can result in the delivery of architecture views and data that don’t address the specific data needs of the stakeholders or that can’t support required analysis. Failure to agree on an electronic delivery format early in the project can result in a large amount of unexpected work for the executors of the SOW at the end of the project, when the developers need to translate their views and data into an electronic format that is radically different from that provided by the tools they used.
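A simple safeguard is to track, for each deliverable listed in the SOW, whether its options or tailoring and its electronic delivery format have actually been agreed. In the hypothetical sketch below, the DoDAF model names are real, but the tailoring and format entries are invented placeholders, and `None` marks an item still awaiting agreement.

```python
# Hypothetical SOW deliverable register. None marks a term not yet agreed
# between the issuers and the executors of the SOW.
deliverables = {
    "OV-1 High-Level Operational Concept Graphic": {"tailoring": "enterprise icon set", "format": "DM2 PES XML"},
    "OV-5b Operational Activity Model": {"tailoring": "BPMN notation", "format": None},
    "SV-1 Systems Interface Description": {"tailoring": None, "format": None},
}


def unagreed_items(deliverables):
    """Return sorted (deliverable, term) pairs that still lack agreement."""
    return sorted(
        (name, term)
        for name, terms in deliverables.items()
        for term, value in terms.items()
        if value is None
    )


if __name__ == "__main__":
    for name, term in unagreed_items(deliverables):
        print(f"{name}: {term} not yet agreed")
```

Running such a check early in the project, rather than at delivery time, surfaces format disagreements while they are still cheap to resolve.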

Other items related to the technical deliverables that need to be specified in the SOW include required reviews and additional analysis tasks. Since there is no standard life cycle for EAs, there are no standard reviews with well-known entry and exit criteria. The issuers of the SOW must identify and describe any reviews of technical views and data that they want. The description of each required review needs to state when, with respect to the architecture development process, the review should take place and what its entry and exit criteria are. A common problem with EA projects is a request for a final review of operational or business views before the technical views are developed. Because the views of an EA are integrated and based on a common set of data, this type of review is unrealistic: development of the technical views will affect the business or mission views. A better approach is to ask for a review that validates draft business or mission views, to the extent possible, before the technical views are developed.

Analysis tasks associated with EA development include types of analysis necessary to develop the architecture as well as types of analysis that use data from a completed portion (as-is or to-be for a specific date) or release of the architecture. Types of analysis that might be included as parts of architecture development are tradeoff analyses and capability gap analysis. Types of analysis that use data from a completed portion of the architecture include ROI analysis, business case analysis, and performance analysis. SOWs can include requirements for any of these types of analysis.

A SOW can also spell out requirements for technical briefings and for additional products, such as notes from interviews with subject matter experts (SMEs).

Acceptance Criteria and Procedures

The SOW needs to specify how the technical EA deliverables will be judged acceptable. Acceptance criteria and procedures are critical in contractual situations. Contractual acceptance of the EA differs from organizational acceptance of the EA as part of the governance process, which is discussed in Chapter 9. Contractual acceptance criteria need to be considered carefully and spelled out explicitly in the SOW.

Acceptance criteria can include such items as compliance or conformance with a standard and the consistency and completeness of views. However, requiring conformance with a standard may not be sufficient to ensure useful views. For example, the DoDAF provides guidance, and as long as the EA data is defined in accordance with the DoDAF metamodel concepts, associations, and attributes, the EA conforms to DoDAF. Conformance with the DoDAF doesn’t mean, however, that the data is sufficient to address stakeholder issues or support key decision-making. Acceptance criteria can therefore also be based on the ability of the EA data to support key decisions.

In addition to acceptance criteria, the SOW should contain acceptance procedures. Who gets to apply the criteria to the deliverables and what is the process by which the criteria are applied? Procedures may involve review of the deliverables by a board.

High-Level Project Schedule

A SOW should include a high-level project schedule containing the major milestones for deliverables from the SOW issuer’s point of view. It will be up to the executors of the SOW to develop detailed project development schedules in response to the high-level schedule in the SOW. The high-level schedule should not require the delivery of final views and data from one viewpoint of the EA prior to the development of views and data from the other viewpoints. The EA views and data form an integrated set, and development of additional viewpoints, views, and data will impact the existing views and data.

Support/Automated Environment and Tools

The SOW should identify any tools or facilities that the issuer of the SOW will provide to the executor, as well as any tool interfaces through which the issuer expects the executor to deliver data or deliverables, such as an interface to the issuer’s CM system or repository. Any restrictions or conditions on the use of tools and facilities should be specified.

Project Implementation Details

This section covers some of the basic elements needed for EA development. Included are discussions of stakeholder management; EA development methodologies; EA life cycle issues; and the relationships among methodologies, tools, and repositories.

Stakeholder Management

In planning the scope of the EA release, the focus is on architecture users. However, in planning the project as a whole, all classes of stakeholders need to be considered. The stakeholders who play various roles in the sponsorship, initiation, development, management, and use of the architecture must be identified. A good way to track all of these groups is to develop a politically oriented form of a stakeholder matrix that identifies each stakeholder by class and lists both their concerns and a priority. The priority given to a stakeholder should be determined by the stakeholder’s criticality to the success of the EA project. For example, sponsors should get a high priority in terms of attention to their concerns. Another key item that assists in managing stakeholders is an EA marketing strategy and dissemination plan, which is discussed further in Chapter 7.
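The politically oriented stakeholder matrix described above can be kept as a simple data structure. The sketch below is illustrative only: the field names, the 1-to-3 priority scale (1 = most critical to EA project success), and the sample stakeholders are assumptions, not part of any standard or of a particular tool.

```python
from dataclasses import dataclass, field


@dataclass
class Stakeholder:
    name: str
    stakeholder_class: str  # e.g., "sponsor", "developer", "manager", "user"
    concerns: list[str] = field(default_factory=list)
    priority: int = 3  # assumed scale: 1 = most critical to EA project success


def by_priority(matrix: list[Stakeholder]) -> list[Stakeholder]:
    """Order stakeholders so those most critical to project success come first."""
    return sorted(matrix, key=lambda s: (s.priority, s.name))


def concerns_by_class(matrix: list[Stakeholder]) -> dict[str, list[str]]:
    """Collect the concerns raised by each stakeholder class."""
    result: dict[str, list[str]] = {}
    for s in matrix:
        result.setdefault(s.stakeholder_class, []).extend(s.concerns)
    return result


# Hypothetical sample entries for illustration.
matrix = [
    Stakeholder("Operations staff", "user", ["usable views"], 2),
    Stakeholder("CIO", "sponsor", ["funding", "visible results"], 1),
    Stakeholder("Contractor team", "developer", ["stable scope"], 3),
]

for s in by_priority(matrix):
    print(s.priority, s.stakeholder_class, s.name, s.concerns)
```

Sorting on (priority, name) keeps the listing stable when several stakeholders share a priority; a spreadsheet serves the same purpose, and the point is only that class, concerns, and priority are tracked together.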

Architecture Development Methodology/Process

What is an EA development methodology or process? It provides an ordered set of technical tasks for developing the views and data and, usually, the models and techniques to choose from for the views, as well as the relationships among the views. The architecture development methodology may include a repository approach and a validation approach. The development methodology that a development group chooses may differ depending on the level of architecture being developed. It may also differ depending on the domain of the architecture. For example, a solution architecture development process for a command and control domain might differ from one for a logistics or data-centered domain. Not only might different views be involved, but the development order of those views could differ. The command and control development process might focus on process, event response, and performance, while the data-centered development process might focus on operational data before considering process, and performance would be much less critical.

EA Life Cycle Issues

As discussed previously, the EA discipline is too new to have existing life cycle standards. Each development organization has had to develop its own life cycle. Right now, various government agencies, contractors, and commercial corporations have developed EA life cycles, and these life cycles tend to be quite different. Many focus on the early part of the EA life cycle and don’t yet handle maintenance in detail. Unfortunately, maintenance of the EA is the most difficult area to deal with, because EA interacts with most of the other enterprise processes and life cycles. Any EA life cycle has to address a large set of challenges:

• Does the EA life cycle handle problem-oriented, incremental architecture development?

• How does the EA life cycle fit with the concept of federated architectures and reference models?

• How does the EA life cycle coordinate with other related enterprise life cycles and processes, such as strategic planning, investment management, and system engineering and system development life cycles?

A promising EA life cycle for large enterprises with a relatively slow business tempo (see Chapter 8) and a reactive approach to change, such as government agencies, is discussed briefly next. The TOGAF ADM, which supplies an architecture development methodology and implies an EA life cycle, is discussed in Chapter 22.

OMB Life Cycle The Office of Management and Budget (OMB) and Government Accountability Office (GAO) started to develop EA life cycles so that the agencies could use them in further development of EA guidance and assessment tools for U.S. government agencies. The GAO life cycle is still very primitive (develop, use, maintain), but the OMB life cycle is starting to evolve into a usable outline for government agencies and larger, established enterprises. However, it is not clear that this style of life cycle will be useful for smaller enterprises with a rapid business tempo and a need for rapid response to a constantly changing marketplace and technology.

Figure 6-5 shows the OMB life cycle, which focuses more on how to use the EA to transition the enterprise than on the details of EA development, which is why OMB calls it an “Information and IT-enabled Performance Improvement Life Cycle.” This is a useful approach because it emphasizes the role of the three levels of architecture (enterprise, segment, and solution) in enterprise evolution and transition and does not treat the EA as an end in itself. However, more detail will be needed in the areas of EA development and maintenance to provide best practice guidance for EA development organizations and to enable comparison of EA development status in standardized terms. This additional guidance will have to address development at each of the three levels of architecture. The life cycle diagram in Figure 6-5 is simplified and doesn’t show the many segment, program, and project activities that can be ongoing within an enterprise simultaneously. However, it provides a good idea of how the different levels of architecture need to be involved to support enterprise transition. The OMB EA life cycle will need to evolve to show additional feedback loops and phasing details.

Figure 6-5 OMB EA life cycle (graphic from the OMB)

The OMB life cycle shows EA as being driven by the enterprise strategy and yielding enterprise results, which, after assessment and analysis, provide additional feedback for another cycle of EA development aimed at further improvements.

The architecture addressed in the “develop and maintain EA” phase is the Enterprise Level architecture and will include the Capability Viewpoint and both as-is and one or more to-be architectures. The to-be architectures will usually include a vision or long-term target architecture as well as multiple intermediate transition stage architectures. The Enterprise Level architecture includes the identification of segments for which Segment Level architectures are needed. Segments could be captured in the Capability Taxonomy (CV-2) or Capability Dependencies (CV-4) if they were tailored to identify which capabilities belonged to which segment. This phase ends with the approval of the Enterprise Level architecture by the EAESC or other appropriate governance group, as discussed in Chapter 9.

In the “prioritize segments” phase, the enterprise prioritizes and schedules the segments identified in the Enterprise Level architecture and develops an enterprise transition plan (ETP). This process involves careful coordination with enterprise strategic planning. The development of the ETP may also result in the development of additional Enterprise Level architectures that reflect changes in the intermediate steps in the transition toward the long-term vision. This phase ends with the approval of the ETP and any additional intermediate Enterprise Level architectures by the EAESC or appropriate governance authority.

The Segment Level architectures are developed in the priority order determined in the previous phase. Part of developing the Segment Level architecture will be identification of the Solution Level architectures needed to describe all the component parts of the segment. The various systems that are associated with each Solution Level architecture can be identified in a tailored, high-level Systems Interface Description (SV-1) in the Segment Level architecture. This phase ends with the approval of the Segment Level architecture by the EAESC or appropriate governance authority.

The “allocate resources” phase allocates resources for all the programs/projects identified for a segment. The process used for this phase will usually involve the investment review board (IRB) (or equivalent) approving budgets for these programs/projects, based on information provided by the enterprise architecture and included in business case analysis and ROI type documents (per program). Note that during this phase, the exact set of projects and Solution Level architectures included in the segment may change, based on IRB decisions. The Segment Level architectures may need to be updated as a result of these decisions.

Once a program or project has been approved, the “create program management plan” phase develops the project management plan (PMP). The PMP for a Solution Level architecture should contain all the information identified earlier in the chapter in “The Project Plan.” The approval authority for the PMP will vary by enterprise, based on culture, but will frequently be the head of the organization who owns the program or project in question.

In the “execute projects” phase, the program/projects develop Solution Level architectures that align with the Segment Level architecture for their segment. These Solution Level architectures will need to be reviewed and approved by the appropriate governance authority, which may vary depending on the enterprise culture. The programs/projects then perform the necessary system and infrastructure development, testing, and installation.

Once the enterprise has been transitioned, the new processes and capabilities will be measured and assessed while the programs and projects continue to maintain the new systems and infrastructure. Further needs for improvement that impact existing segments can be fed back into the “develop segment architecture” phase. In the appropriate Segment Level architecture, these needs can be allocated to the appropriate program/project or a new program/project can be identified. The Segment Level architecture can be updated and the improvements implemented through the rest of the phases of the life cycle. Alternatively, if the identified improvement needs impact the existing segment priorities, these needs can be fed back to the “prioritize segments” phase. The existing priorities can be reexamined and the Enterprise Level architecture, ETP, and segment priorities and schedules can be updated to reflect these additional enterprise needs. New segments may be identified in this process. Then work on existing segments and program/projects can be readjusted and the additional needs implemented via the rest of the life cycle. Figure 6-6 summarizes the OMB life cycle in the format of the generic “Basic Life Cycle Chart” in Figure 6-4. Figure 6-6 shows the basic architectures and products per phase, along with the major reviews.

Figure 6-6 Life cycle chart for OMB EA life cycle
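The phase sequence and the two feedback loops described above can be summarized as a small transition table. This is a sketch under stated assumptions: the phase names follow the quoted phase names in the text, “measure and assess” is a hypothetical label for the post-transition assessment activity, and the many segments and projects that would run concurrently in a real enterprise are ignored.

```python
# Phase names as used in the text; "measure and assess" is an assumed label.
PHASES = [
    "develop and maintain EA",
    "prioritize segments",
    "develop segment architecture",
    "allocate resources",
    "create program management plan",
    "execute projects",
    "measure and assess",
]

# Forward flow: each phase hands off to the next one in the list.
TRANSITIONS: dict[str, set[str]] = {
    phase: {nxt} for phase, nxt in zip(PHASES, PHASES[1:])
}

# Feedback loops: improvement needs return either to segment architecture work
# (existing priorities still hold) or to segment prioritization (they don't).
TRANSITIONS["measure and assess"] = {
    "develop segment architecture",
    "prioritize segments",
}


def next_phase(current: str, affects_segment_priorities: bool = False) -> str:
    """Return the phase that follows `current` in this simplified model."""
    if current == "measure and assess":
        if affects_segment_priorities:
            return "prioritize segments"
        return "develop segment architecture"
    (only_option,) = TRANSITIONS[current]
    return only_option


# Walk one cycle starting from enterprise-level architecture development.
phase = PHASES[0]
for _ in range(len(PHASES)):
    print(phase)
    phase = next_phase(phase)
```

The single flag stands in for the judgment described in the text: whether newly identified improvement needs can be absorbed within an existing segment or require the segment priorities, ETP, and Enterprise Level architecture to be revisited.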

Of the EA life cycle challenges discussed, the OMB EA life cycle addresses at least two. It embeds the concept of Federated Enterprise Architecture through segment development, and it defines how the EA life cycle fits with investment management and the system development life cycle. It shows input from enterprise strategic planning but doesn’t address the full feedback issues involved in changing enterprise strategies. However, it is not clear that the OMB EA life cycle can accommodate incremental architecture development, because it takes a fully top-down approach.

Methodologies, Tools, and Repositories

Automated tools and repositories are necessary for developing architectures because of the amount of data involved and the amount of data correlation and consistency checking that is needed to support an integrated architecture. Tools are necessary to support productivity, especially during maintenance and updates.
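As a small illustration of the kind of cross-view consistency checking a tool automates, the sketch below compares the systems named in a system inventory with the endpoints of the interfaces described in a systems interface view. The data shapes, the function name, and the sample systems are hypothetical; real tools check many more relationships, as defined by their metamodel.

```python
def check_interface_consistency(
    systems: set[str], interfaces: list[tuple[str, str]]
) -> dict[str, list[str]]:
    """Cross-check an interface list against the system inventory.

    undefined_endpoints: systems used in an interface but never defined (an error).
    unconnected_systems: defined systems with no interfaces (worth a review).
    """
    referenced = {end for pair in interfaces for end in pair}
    return {
        "undefined_endpoints": sorted(referenced - systems),
        "unconnected_systems": sorted(systems - referenced),
    }


# Hypothetical architecture data for illustration.
inventory = {"Ordering App", "Logistics DB", "HR Portal"}
interface_view = [("Ordering App", "Logistics DB"), ("Ordering App", "Billing")]

print(check_interface_consistency(inventory, interface_view))
```

Even this single check grows tedious by hand once an architecture holds hundreds of systems and interfaces, which is the volume argument the paragraph makes for automated tools.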

Lessons Learned The EA development community is applying some valuable lessons about automated tool support learned by the software and system development communities from their experiences with computer-aided software engineering (CASE) and computer-aided design (CAD) tools. These lessons have to do with the integration of tools and development processes, with tool and process training, and with repository issues.

The first lesson is that if different methods or techniques are used on different architecture views or at different architecture levels, these methods or techniques need to integrate. That is, the data generated by the method or technique for one view must provide appropriate input for the method or technique used for the next view in the development process, and for any method or technique used to develop a view that shares data with the first view. Failure to have integrated methods and techniques will result in an inconsistent and unintegrated architecture. This need for data integration is what has driven DoD and the wider defense community to develop and standardize an ontology to go with DoDAF and the related defense frameworks. This work is culminating in the standardization of the Unified Architecture Framework (UAF), as discussed in Chapter 4.

The second lesson is that tools and development processes interact and must be integrated. Tools must be selected to support your development process. It may be tempting to use an inexpensive tool or one for which your organization already has a license, but if the tool and your process are a forced fit, experience shows that using the tool will be a serious mistake, causing development problems and delays that far outweigh any savings. Forced fits include trying to use a tool that supports one specific method or technique to support a different method or technique, and trying to use a tool that enforces a specific set of policies in an organization that has a different set of policies. Even a process-appropriate tool will usually have some features that may cause you to alter your process to achieve a smooth fit. Note that this lesson implies that if separate tools are used for different views or levels of architecture, then these tools must integrate. This need to integrate development tools has driven many EA development organizations toward tools that support all DoDAF views. Since the Unified Modeling Language (UML) provides a customization technique (stereotyping) that is used in the definition of the Unified Architecture Framework Profile (UAFP), a current trend is to choose a UML tool that supports stereotyping as the key EA development tool. More tool integration issues are discussed in the upcoming sections.

The third lesson is that training is necessary both for new development tools and for new development processes and methods. The learning curve for both is steep and needs to be accommodated in project schedules, and training is the best way to reduce the schedule impact of new techniques and tools. Best practice includes having process or tool experts readily available, especially during the early days of use. Projects will encounter issues that weren’t addressed in training, and prompt, experienced help can prevent delays or missteps that would otherwise have serious consequences later on. Program or project guides for the specific options and tailoring required for architecture views are also helpful.

The fourth lesson is that any tool environment that includes multiple repositories, either internal to development tools, standalone, or external to the development organization, needs careful management, as many of these repositories may have proprietary data formats or specialized interfaces. Many of these repositories will need two-way exchange of data to support local processes, so it is imperative that the data import and export interfaces support easy and error-free exchange of data. An overall data strategy needs to be in place to define and support the flow of architecture data throughout the entire tool environment. More about repositories is discussed in the upcoming sections.

Additional Processes and Tools In addition to an architecture development process, the architecture group needs processes to support additional analysis, project management, and the automation environment. Further processes may be required to provide direct support to the enterprise decision-making processes that require EA-based input and provide EA-impacting output. All these processes may require additional automated tools.

As discussed, analysis may be required during architecture development to select the best approaches or after architecture development to provide input to decision-making processes. Some of these analyses use only EA data, while others require a combination of architecture data with financial or other types of data. Examples include activity-based costing analysis, business case development, ROI analysis, and performance analysis based on executable architectures or simulations. In many cases, specialized tools will be needed to support this analysis.

An architecture development effort also requires basic management and other cross-phase or “umbrella” processes such as configuration management and quality assurance. Management processes include estimating, scheduling, planning, tracking, and reporting. Most organizations have tools to support all these processes. Some sophisticated organizations may have management tools that integrate with the development tools to support tracking of progress against assigned tasks. Risk management is an additional area that can fit under the general management category, or it can be considered an additional process area.

Configuration management (CM) is a tricky issue with architecture tools that manage integrated sets of architecture data—that is, repositories. Architecture development organizations are going to have to modify their corporate CM policies and processes to deal with this issue of repository data and to mesh with their evolving EA governance structures. Architecture development tools frequently support some form of version control, but additional processes and tools will be necessary to support full CM, which includes configuration identification, change control, configuration status accounting, and configuration auditing. Aligning the policies implicit in development tools’ version control implementation with the organizational policies for full CM may also be a challenge and may cause serious problems with some development tools. Many development tools with repositories have embedded control policies that assume that the tool has the authoritative copy of the architecture data, while the CM processes and tools assume that they have the authoritative copy.

If quality assurance is defined in the currently accepted way as “process police,” then the high-level processes and concepts used for software and systems development should be easily adaptable to EA. However, these processes may place additional requirements on architecture development tools or require additional tools.

Architecture development organizations shouldn’t forget the processes needed to support the architecture development tools themselves and the organization’s automated environment. The more complex architecture development tools often require specialized “care and feeding.” These tools frequently allow for customization to support required tailoring both for views and for the development process. This customization may need frequent updating as the tailoring evolves and tool upgrades are released. All the development organization’s tools, as well as its infrastructure, need to be kept up to date with both technology improvements and security enhancements. In addition, if the organization has developed special code to support the integration of any of the tools, this integration code will need to be updated if the infrastructure changes and as new tool releases arrive. Some of these issues are addressed by the move to UML-based tools that support the emerging standard ontology (UAF) and exchange of standardized data types using XML.

Integration of Tools One of the persistent challenges faced by architecture development organizations is getting their automated tools to integrate properly. This issue can cause development teams many problems. Solutions can be expensive, and a lack of solutions can severely impact project productivity and product quality. Two general types of problems occur: integration of the various architecture development tools with one another and integration of architecture development tools with the other tools in the project’s environment. The architecture development tools may also need to integrate with tools that are external to the architecture development organization’s enterprise. Figure 6-7 illustrates an example architecture tool environment and the types of tool integration involved.

Figure 6-7 Types of tool integration in an example architecture tool environment

Architecture development organizations quickly learn that, regardless of the methodology and modeling techniques they select, no single tool completely meets their needs. Some architecture development tools support only a limited number of the techniques the project wants to use. Other tools support most of the techniques, but specialty tools do a better job on specific types of models. With the advent of DoDAF-related profiles (discussed in Chapter 4), some UML-based tools promise to provide a single tool that can address all the DoDAF views. However, specialty tools, such as data modeling tools and executable state transition modeling tools, may still be needed to develop the types of models critical for a given enterprise. Although UML tools can represent the output of these specialty tools in a standard format, they may not be able to help develop certain views.

As a result, most architecture development organizations are faced with the continuing challenge of integrating multiple architecture development tools or repositories. This is not a new problem; software development projects have long faced similar issues in trying to integrate tools to support the entire software life cycle. However, because architecture views or models are integrated, all the tool interfaces must be two-way, which further complicates matters.

An expensive solution is to build custom interfaces between the tools. This is not always possible if the tools have proprietary databases and data formats that they use to store the architecture data. Another approach is standardization of the architecture data ontology coupled with a standard import/export format. This is the approach that the defense community has been evolving toward and is the basis of the current standardization of the UAF and UAFP.

Yet another approach to the development tool integration problem is based on the notion of a central architecture data repository that provides a standard import/export interface. In this approach, the repository is an independent tool, not an internal part of a tool that directly supports model or view development. View development tools must support the import/export standard in order to integrate with the repository. These tools can take repository data as input and output data to the repository. Basically, the development tools use the repository for sharing data. The repository approach has additional advantages. Tools can be simpler if they don’t need to have an internal repository. The repository can support an ad hoc reporting capability, metadata to support metrics, and version control. A repository can also support sharing of architecture data across an enterprise and the reuse of architecture data and artifacts in related architectures.

This sharing of architecture data across the enterprise is the reason why DoD has been so interested in a repository approach that would enable sharing of architecture data across the DoD. The repository approach has been advocated for a long time, but it has proven difficult to implement. Repository tools are commercially available, but they typically require customization, so they are expensive for small organizations and projects. In addition, there has been limited success both in getting tools to support the repository interface standards and in developing effective standards. Figure 6-8 shows the different approaches to tool integration and how the repository approach makes the number of interfaces much smaller. The independent repository approach is less attractive if a single architecture development tool is used, but there may still be a requirement for an independent repository (not development tool–dependent) for sharing of architecture data, and the development environment may still include multiple repositories that must be dealt with.

Figure 6-8 Two different approaches to tool integration
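The reduction in interfaces from the repository approach can be made concrete with simple arithmetic. Assuming the worst case in which every pair of N tools must exchange data directly, point-to-point integration needs N(N-1)/2 two-way interfaces, while a central repository needs one two-way interface per tool, or N. The sketch below is only a counting aid; it ignores the cost and quality of individual interfaces.

```python
def point_to_point_interfaces(n_tools: int) -> int:
    """Two-way interfaces if every pair of tools exchanges data directly."""
    return n_tools * (n_tools - 1) // 2


def repository_interfaces(n_tools: int) -> int:
    """Two-way interfaces if every tool exchanges data only through one repository."""
    return n_tools


print("tools  point-to-point  repository")
for n in range(2, 9):
    print(f"{n:5}  {point_to_point_interfaces(n):14}  {repository_interfaces(n):10}")
```

With two tools the repository actually adds an interface (2 versus 1), and the approaches break even at three tools; beyond that the advantage grows quickly (28 versus 8 at eight tools). This matches the observation that an independent repository is less attractive when only a single development tool is used.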

Not only do the architecture development tools need to be integrated, but these tools also need to be integrated with analysis tools, management tools, and document and presentation tools, as shown in Figure 6-7. That is, the architecture development tools or repositories need to be able to provide input to analysis tools and, potentially, to management tracking tools and CM tools. In addition, the architecture development tools (or repository) should be able to provide text and graphics to document and presentation tools. Failure to provide usable input for documents and presentation tools is a critical problem, because documents and presentations are one of the ways of making architecture data accessible to stakeholders. Sometimes problems with graphics can be subtle. Some tools provide exportable graphics, but although these graphics are suitable for wall charts for the architects’ use, the graphics are not readable when inserted into documents and presentations. Extracting readable subsets of the larger graphics can be difficult and time consuming. Usually, the interfaces from architecture development tools to nonarchitecture tools are one-way, so integration may be easier than integrating between architecture development tools.

Sometimes, architecture development tools in a development organization will have to integrate with tools from other organizations. For example, architecture development tools may have to integrate with requirements tools when Solution Level architectures are being developed. In other cases, the architecture development tools from organizations building Segment or Solution Level architectures may need to integrate with repositories owned by organizations responsible for the Enterprise Level architecture. In DoD, Segment and Solution Level architecture organizations need to be able to post architecture data to DoD Enterprise Level repositories such as the DoD Architecture Registry System (DARS). In TOGAF, Segment and Solution Level architectures are incorporated into the Enterprise Continuum. The nature of these repository interfaces is dependent on enterprise processes and requirements.

Whatever tool integration challenges a development organization faces, the best way to test the integration, especially if the integration approach depends on COTS product support, is to set up a test configuration in a laboratory environment before using the tools on an actual development project. This is a lesson learned from problems with software development environments. COTS product support for various interfaces and standards often has unforeseen limitations that severely impair the ability of the tool to integrate as expected. In general, best practice is to test the integration capabilities of a tool with the rest of the architecture development environment before purchasing the product, if possible. Tools that won’t integrate are of very limited value.
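One inexpensive laboratory test is a round trip: export a sample model through a tool’s interface, import it back, and check what was lost. The sketch below assumes a JSON exchange format and uses hypothetical export functions standing in for real tool interfaces; a real test would exercise each product’s actual import/export mechanism with the enterprise’s own sample architecture data.

```python
import json
from typing import Callable

Model = dict[str, str]


def round_trip_loss(
    model: Model,
    export_fn: Callable[[Model], str],
    import_fn: Callable[[str], Model],
) -> set[str]:
    """Export `model`, re-import it, and return the attributes that did not survive."""
    restored = import_fn(export_fn(model))
    return {key for key, value in model.items() if restored.get(key) != value}


# Hypothetical tool interfaces: one faithful, one that silently drops
# attributes outside the small set it supports.
SUPPORTED_ATTRIBUTES = {"name", "type"}


def faithful_export(model: Model) -> str:
    return json.dumps(model)


def lossy_export(model: Model) -> str:
    return json.dumps({k: v for k, v in model.items() if k in SUPPORTED_ATTRIBUTES})


sample = {"name": "Ordering App", "type": "system", "owner": "Logistics Division"}
print(round_trip_loss(sample, faithful_export, json.loads))
print(round_trip_loss(sample, lossy_export, json.loads))
```

Because architecture tool interfaces generally must be two-way, silent attribute loss like the “owner” field here is exactly the kind of unforeseen limitation that is far cheaper to discover in the laboratory than in the middle of a project.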

The costs of integrating tools should be controlled as well. Although tools can be made to integrate using custom code or by maintaining customized repositories, the continuing costs of such an approach are frequently more than an organization or enterprise is prepared to pay. Although such a customized environment may be ideal for ease of architecture development and maintenance, less expensive approaches usually need to be investigated. These alternative approaches may require changes in the architecture development methodology or process.

Success Factors for Planning

Several factors affect successful execution of an architecture effort:

• Clear and common objectives/purpose The objectives and benefits for embarking on an architecture development must be clear, both to the sponsors and to the architecture team itself.

• Clear definition of stakeholders and expectations At the outset and during the course of an architecture development, stakeholders must be clearly identified and involved in the communications process. Stakeholders need to be re-identified during the course of development because new factions, or tribes, can emerge, especially as planned enterprise changes become clearer.

• Clear and demonstrated architecting skills Training and competence in the mechanics of modeling and view development as well as familiarity with the vocabulary, ontology, and semantic concepts used in your EA development methodology and framework are essential.

• Clear work plan and risk mitigation strategy A step-by-step WBS, a clear SOW, clearly identified deliverables, and a strategy for identifying and mitigating anticipated risks are essential.

• Clear analysis and recommendations (and follow-up) In architecture projects that include findings and conclusions based on analysis, documenting the findings clearly and communicating the impact of issues are essential. A follow-up plan to track actions based on findings and recommendations enhances the success of the architectural effort.

• Clear communications Architectures tell a story, and developing the architecture means assembling the parts of that story. The effort is akin to plotting a cast of characters, determining events and actions, and sweeping a timeline forward. An architecture effort that tells its story in a way that is clear to its audience tends to garner support quickly.

• Crisp execution Ultimately, the success of architecture development efforts hinges on the crispness of the execution—in terms of quality of effort and timeliness.

Summary

This chapter has focused on aspects of EA development that include project planning and implementation issues. Much of this material concerns planning for the last few steps (4–6) of the Six-Step Process and is directed toward EA project planning. The basic project plan needs to include the usual topics addressing what, who, how, and when. Data strategies for management, validation, and dissemination need to be included, as well as risk-management approaches. Personnel issues are particularly critical. The chief architect role requires a broad combination of skills and may require a small team of people to execute. EA projects also require involvement of, or coordination with, external or customer roles to function successfully. Many of the details of this type of planning depend on corporate culture and on the organization's experience, especially its experience with EA projects.

Projects need to be based on a clear SOW. Because EA is a relatively new discipline, there is limited guidance available on such topics as WBS for EA projects and the EA life cycle, although OMB has a promising EA life cycle for government agencies. Architecture development processes or methodologies may vary widely among organizations, although TOGAF provides a methodology-based approach that has been developed by an industrial consortium. Automated tools will be necessary to support EA development, and lessons learned from software and systems development emphasize the need for development tools to be integrated with one another, with development processes, and with other automated project tools. This need for integration underscores the importance of an ontology and drives the desire for a single EA development tool and repository that covers the maximum number of viewpoints and views.

Planning and executing EA development must be done carefully because of the limited overall experience with EA and the resultant limited guidance.

Questions

1. How does your organization address the tool integration problem? How many architecture development tools do you use on a single project and how are they integrated?

2. How many other tools (management, document production, and analysis) do your architecture development tools need to integrate with on architecture projects? How is this integration achieved?

3. How does your EA life cycle address the three challenges identified in this chapter: problem-oriented, incremental architecture development; federated architectures and reference models; and integration with other enterprise life cycles and processes (including strategic planning, CPIC, and SDLC)?

4. How does your EA life cycle map or compare to the OMB EA life cycle?

5. See if you can find some SOWs for EA development projects. Compare the content of each SOW to the suggested SOW outline in this chapter. How are they similar and how are they different?

6. How does your organization deal with risk management? Do you have a methodology for risk assessment for EA projects?

7. What is your organization’s approach to EA data validation? How are the personnel who perform EA data validation integrated with the rest of the EA project team?

8. Does your organization have a standardized WBS for EA projects? If yes, how do the tasks align with your EA life cycle?

9. Does your organization have a job description for the chief architect’s position? What skills are required and how do they align with the skills identified in this chapter?

10. How is your organization’s EA project team organized? To whom does the chief architect report?

11. What architecture development process does your organization use? In what order are the data and views developed?

References

Bersoff, E., V. Henderson, and S. Siegel. 1980. Software Configuration Management: An Investment in Product Integrity. Upper Saddle River, NJ: Prentice Hall PTR.

CIO Council. 2001. “A Practical Guide to Federal Enterprise Architecture, Version 1.0.” www.gao.gov/assets/590/588407.pdf.

Department of Defense. 2009. Department of Defense Architecture Framework Version 2.0. http://dodcio.defense.gov/Library/DoD-Architecture-Framework.

Object Management Group. 2016. Unified Architecture Framework Profile, Version 1.0 – FTF Beta 1. OMG Document Number dtc/16-08-01. www.omg.org/spec/UAF/1.0/Beta1/About-UAF/.

Office of Management and Budget. 2009. “Improving Agency Performance Using Information and Information Technology (Enterprise Architecture Assessment Framework v3.1).”

The Open Group. 2018. “TOGAF Version 9.2, revised educational edition.” The Netherlands: Van Haren Publishing.