CHAPTER 16

Redundancy and Disaster Recovery

This chapter covers the following subjects:

Redundancy Planning: This section is all about ensuring your network and servers are fault tolerant. By setting up redundant power, data, servers, and even ISPs, you can avoid many disasters that could threaten the security of your organization.

Disaster Recovery Planning and Procedures: A disaster is when something happens to your network that your fault-tolerant methods cannot prevent. To help recover after a disaster, data should be backed up, and a proper disaster recovery plan should be designed, practiced, and implemented if necessary.

The typical definition of “redundant” is superfluous or uncalled for. However, that is not the case in the IT field, where being redundant is a way of life. It is a way of enhancing your servers, network devices, and other equipment. It is a way of developing fault tolerance: the capability to continue functioning even if there is an error.

This chapter discusses how to prevent problems that might occur that could threaten the security of your servers, network equipment, and server room in general. A good network security administrator should have plenty of redundancy and fault-tolerant methods in place that can help combat threats and help avoid disaster.

However, no matter how much redundancy you implement, there is always a chance that a disaster could arise. A disaster could be the loss of data on a server, a fire in a server room, or the catastrophic loss of access to an organization’s building. To prepare for these events, a disaster recovery plan should be designed, but with the thought in mind that redundancy and fault tolerance can defend against most “disasters.” The best admin is the one who avoids disaster and, in the rare case that it does happen, has a plan in place to recover quickly from it. This chapter also covers how to plan for disasters and discusses a plan of action for recovering swiftly.

Foundation Topics

Redundancy Planning

Most networks could do with a little more redundancy. I know...a lot of you are probably wondering why I keep repeating myself! It’s because so many customers of mine in the past, and network admins who have worked for and with me, insist on avoiding the issue. Redundancy works—use it!

This section discusses redundant power in the form of power supplies, UPSs, and backup generators. It also talks about redundant data, servers, ISPs, and sites. All these things, when planned properly, create an environment that can withstand most failures barring total disaster.

The whole concept revolves around single points of failure. A single point of failure is an element, object, or part of a system that, if it fails, causes the whole system to fail. By implementing redundancy, you can bypass just about any single point of failure.

There are two methods of combating single points of failure. The first is to use redundancy. If employed properly, redundancy keeps a system running with no downtime. However, this can be pricey, and we all know there is only so much IT budget to go around. So, the alternative is to make sure you have plenty of spare parts lying around. This is a good method if your network and systems are not time-critical. Installing spare parts often requires you to shut down the server or a portion of a network. If this risk is not acceptable to an organization, you’ll have to find the cheapest redundant solutions available. Research is key, and don’t be fooled by the hype; sometimes the simplest-sounding solutions are the best.

Here’s the scenario (and we apply this to the rest of this “Redundancy Planning” section). Your server room has the following powered equipment:

Nine servers

Two Microsoft domain controllers

One DNS server

Two file servers

One database server

Two web servers (which double as FTP servers)

One mail server

Five 48-port switches

One master switch

Three routers

Two CSU/DSUs

One PBX

Two client workstations (for remote server access without having to work directly at the server); these are within the server room as well.

It appears that there is already some redundancy in place in your server room. For example, there are two domain controllers. One of them has a copy of the Active Directory and acts as a secondary DC in the case that the first one fails. There are also two web servers, one ready to take over for the other if the primary one fails. This type of redundancy is known as failover redundancy. The secondary system is inactive until the first one fails. Also, there are two client workstations used to remotely control the servers; if one fails, another one is available.

Otherwise, the rest of the servers and other pieces of equipment are one-offs—single instances in need of something to prevent failure. There are a lot of them, so we truly need to redundacize. Hey, it’s a word if IT people use it! It’s the detailed approach to preparing for problems that can arise in a system that will make for a good IT contingency plan. Try to envision the various upcoming redundancy methods used with each of the items listed previously in our fictitious server room.
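As a rough illustration of hunting for single points of failure, the following Python sketch records the fictitious server room as a simple inventory and flags every role filled by only one device. The role names and the dictionary layout are assumptions made for this example. Note that a count greater than one does not by itself prove redundancy (two file servers may hold different data), so treat the output as a starting list of candidates only.

```python
# Inventory of the fictitious server room from the scenario.
# Each entry maps a role to the number of devices filling that role.
inventory = {
    "domain controller": 2,
    "DNS server": 1,
    "file server": 2,
    "database server": 1,
    "web/FTP server": 2,
    "mail server": 1,
    "48-port switch": 5,
    "master switch": 1,
    "router": 3,
    "CSU/DSU": 2,
    "PBX": 1,
    "client workstation": 2,
}


def single_instances(items):
    """Return the roles that are filled by exactly one device."""
    return sorted(role for role, count in items.items() if count == 1)


one_offs = single_instances(inventory)
for role in one_offs:
    print(f"Candidate single point of failure: {role}")
```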

But before we get into some hard-core redundancy, let’s quickly discuss the terms fail-open and fail-closed. Fail-open means that if a portion of a system fails, the rest of the system will still be available or “open.” Fail-closed means that if a portion of a system fails, the entire system will become inaccessible or simply shut down. Depending on the level of security your organization requires, you might have a mixture of fail-open and fail-closed systems.

In the previous server room example, we have a DNS server and a database server. Let’s say that the DNS server forwards information to several different zones, and that one of those zones fails for one reason or another. We might decide that it is more beneficial to the network to have the rest of the DNS server continue to operate and service the rest of the zones instead of shutting down completely, so we would want the DNS server to fail-open. However, our database server might have confidential information that we cannot afford to lose, so if one service or component of the database server fails, we might opt to have the database server stop servicing requests altogether, or in other words, to fail-closed. Another example would be a firewall/router. If the firewall portion of the device failed, we would probably want the device to fail-closed. Even though the network connectivity could still function, we probably wouldn’t want it to because there is no firewall protection.

It all depends on the level of security you require, and the risk that can be associated with devices that fail-open. It also depends on whether the server or device has redundancy associated with it. If the DNS server mentioned previously has a secondary redundant DNS server that is always up and running and ready to take requests at a moment’s notice, we might opt to instead configure the first DNS server to fail-closed and let the secondary DNS server take over entirely. This leads to clustering, which we discuss later in this chapter.
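The difference between the two behaviors can be sketched in a few lines of Python. This is only a toy model under the definitions given above: the `Service` class, its method names, and the zone and component names are all hypothetical, and no real DNS or database product is configured this way.

```python
from enum import Enum


class FailureMode(Enum):
    FAIL_OPEN = "fail-open"
    FAIL_CLOSED = "fail-closed"


class Service:
    """A hypothetical service made up of named components."""

    def __init__(self, name, components, mode):
        self.name = name
        self.mode = mode
        # Every component starts out healthy.
        self.healthy = {component: True for component in components}

    def mark_failed(self, component):
        self.healthy[component] = False

    def available_components(self):
        """Return the components that still answer requests."""
        any_failed = not all(self.healthy.values())
        if any_failed and self.mode is FailureMode.FAIL_CLOSED:
            return []  # one failure takes the whole service offline
        return [c for c, ok in self.healthy.items() if ok]


# DNS server: keep serving the remaining zones if one zone fails.
dns = Service("dns", ["zone-a", "zone-b", "zone-c"], FailureMode.FAIL_OPEN)
dns.mark_failed("zone-b")
print(dns.available_components())

# Database server: stop servicing requests if any component fails.
db = Service("database", ["storage", "query-engine"], FailureMode.FAIL_CLOSED)
db.mark_failed("storage")
print(db.available_components())
```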

Redundant Power

Let’s begin with power because that is what all our devices and computers gain “sustenance” from. Power is so important—when planning for redundancy it should be at the top of your list. When considering power implications, think like an engineer; you might even need to enlist the help of a coworker who has an engineering background, or a third party, to help plan your electrical requirements and make them a reality.

We are most interested in the server room. Smart companies store most of their important data, settings, apps, and so on in that room. So power is critical here, whereas it is not as important for client computers and other client resources. If power fails in a server room or in any one component within the server room, it could bring the network down or cut off access to resources. It could also cause damage to a server or other device.

When considering power, think about it from the inside out. For example, start with individual computers, servers, and networking components. How much power does each of these things require? Make a list and tally your results. Later, this plays into the total power needed by the server room. Remember that networking devices such as IP phones, cameras, and some wireless access points are powered over Ethernet cabling, which can place additional power demands on the Ethernet switch (or switches) in the server room. Think about installing redundant power supplies in some of your servers and switches. Next, ponder using UPS devices as a way of defeating short-term power loss failures. Then, move on to how many circuits you need, total power, electrical panel requirements, and also the cleanliness of power coming in from your municipality. Finally, consider backup generators for longer-term power failures.
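The “make a list and tally your results” step can be sketched as a short Python script. The device counts come from the server room scenario, but every wattage figure (and the Power over Ethernet allowance) is a placeholder assumed for illustration; substitute the rated or measured values for your own equipment.

```python
# (device, quantity, estimated watts each)
# All wattages below are placeholder assumptions, not vendor figures.
equipment = [
    ("server", 9, 450),
    ("48-port switch", 5, 150),
    ("master switch", 1, 300),
    ("router", 3, 100),
    ("CSU/DSU", 2, 25),
    ("PBX", 1, 200),
    ("client workstation", 2, 250),
]

# Assumed extra draw at the switches for PoE phones, cameras, and access points.
poe_allowance_watts = 400


def total_watts(items, extra=0):
    """Sum quantity * watts for every item, plus any extra allowance."""
    return sum(quantity * watts for _, quantity, watts in items) + extra


for name, quantity, watts in equipment:
    print(f"{name:20s} {quantity:2d} x {watts:4d} W = {quantity * watts:5d} W")

print("Total with PoE allowance:", total_watts(equipment, poe_allowance_watts), "W")
```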

Using proper power devices is part of a good preventative maintenance/security plan and helps to protect a computer. You need to protect against several things:

Surges: A surge in electrical power means that there is an unexpected increase in the amount of voltage provided. This can be a small increase, or a larger increase known as a spike.

Spikes: A spike is a short transient in voltage that can be due to a short circuit, tripped circuit breaker, power outage, or lightning strike.

Sags: A sag is an unexpected decrease in the amount of voltage provided. Typically, sags are limited in time and in the decrease in voltage. However, when voltage reduces further, a brownout could ensue.

Brownouts: A brownout is when the voltage drops to such an extent that it typically causes the lights to dim and causes computers to shut off.

Blackouts: A blackout is when total loss of power for a prolonged period occurs. Another problem associated with blackouts is the spike that can occur when power is restored. In the New York area, it is common to have an increased amount of tech support calls during July; this is attributed to lightning storms! Often, damage to systems is due to improper protection.

Power supply failure: Power supplies are like hard drives in two ways: One, they will fail. It’s not a matter of if; it’s a matter of when. Two, they can cause intermittent issues when they begin to fail, issues that are hard to troubleshoot. If you suspect a power supply failure, then you should replace the supply. Also consider using a redundant power supply.

Some devices have specific purposes, and others can protect against more than one of these electrical issues. Let’s talk about three of them now: redundant power supplies, uninterruptible power supplies, and backup generators.

Redundant Power Supplies

A proper redundant power supply is an enclosure that contains two (or more) complete power supplies. You make one main power connection from the AC outlet to the power supply, and there is one set of wires that connects to the motherboard and devices. However, if one of the power supplies in the enclosure fails, the other takes over immediately without computer failure. These are common on servers, especially RAID boxes. They are not practical for client computers, but you might see them installed in some powerful workstations. In our scenario, we should install redundant power supplies in as many servers as possible, starting with the file servers and domain controllers. If possible, we should implement redundant power supplies for any of our switches or routers that will accept them, or consider new routers and switches that are scalable for redundant power supplies.

In some cases (pun intended), it is possible to install two completely separate power supplies so that each has a connection to an AC outlet. This depends on your server configuration but is less common due to the amount of redundancy it requires of the devices inside the server. Either look at the specifications for your server’s case or open it up during off-hours to see if redundant power supplies are an option.

Vendors such as HP and manufacturers such as Thermaltake and Enlight offer redundant power supply systems for servers, and vendors such as Cisco offer redundant AC power systems for their networking devices.

This technology is great in the case that a power supply failure occurs, but it does not protect from scenarios in which power to the computer is disrupted.

Uninterruptible Power Supplies

It should go without saying, but surge protectors are not good enough to protect against the power issues that might occur in your server room. A UPS is the proper device to use. An uninterruptible power supply (UPS) takes the functionality of a surge suppressor and combines that with a battery backup. So now, our server is protected not only from surges and spikes, but also from sags, brownouts, and blackouts. Most UPS devices also act as line conditioners that serve to clean up dirty power. Noise and increases/decreases in power make up dirty power. Dirty power can also be caused by too many devices using the same circuit, or because power coming from the electrical panel or from the municipal grid fluctuates, maybe because the panel or the entire grid is under- or overloaded. If a line-conditioning device such as a UPS doesn’t fix the problem, a quick call to your company’s electrician should result in an answer and possibly a long-term fix.

If you happen to be using a separate line-conditioning device in addition to a UPS, it should be tested regularly. Line-conditioning devices are always supplying power to your devices. A UPS backup battery will kick in only if a power loss occurs.

Battery backup is great, but the battery can’t last indefinitely! It is considered emergency power and typically keeps your computer system running for 5 to 30 minutes depending on the model you purchase. UPS devices today have a USB connection so that your computer can communicate with the UPS. When there is a power outage, the UPS sends a signal to the computer telling it to shut down, suspend, or stand-by before the battery discharges completely. Most UPSs come with software that you can install that enables you to configure the computer with these options.

The more devices that connect to the UPS, the less time the battery can last if a power outage occurs; if too many devices are connected, there may be inconsistencies when the battery needs to take over. Thus many UPS manufacturers limit the number of battery backup–protected receptacles. Connecting a laser printer to the UPS is not recommended due to the high current draw of the laser printer. Also, to protect the UPS from being overloaded, never connect a surge protector or power strip to one of the receptacles in the UPS.
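To see why each added device shortens battery time, consider this back-of-the-envelope Python sketch. It assumes a simple linear model (usable watt-hours divided by load), a hypothetical 500 Wh battery, a 90 percent inverter efficiency, and placeholder device wattages. Real batteries deliver less of their capacity at higher discharge rates, so use the manufacturer’s runtime charts for actual planning.

```python
def estimate_runtime_minutes(battery_wh, load_watts, efficiency=0.9):
    """Rough runtime estimate: usable watt-hours / load, in minutes.

    This linear model is a simplifying assumption; real batteries
    deliver less of their capacity at high discharge rates.
    """
    if load_watts <= 0:
        raise ValueError("load_watts must be positive")
    return battery_wh * efficiency / load_watts * 60


# Placeholder values, assumed for illustration only.
battery_wh = 500
loads = {"file server": 350, "switch": 150, "router": 100}

running_load = 0
for device, watts in loads.items():
    running_load += watts
    minutes = estimate_runtime_minutes(battery_wh, running_load)
    print(f"After adding {device}: {running_load} W, about {minutes:.0f} minutes")
```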

The UPS normally has a lead-acid battery that, when discharged, requires 10 hours to 20 hours to recharge. This battery is usually shipped in a disconnected state. Before charging the device for use, you must first make sure that the leads connect. If the battery ever needs to be replaced, a red light usually appears accompanied by a beeping sound. Beeping can also occur if power is no longer supplied to the UPS by the AC outlet.

There are varying levels of UPS devices, which incorporate different technologies. For example, the cheaper standby UPS (known as an SPS) might have a slight delay when switching from AC to battery power, possibly causing errors in the computer operating system. If a UPS is rack mounted, it will usually be a full-blown UPS (perhaps not the best choice of words!); this would be known as an “online” or “continuous” UPS—these cost hundreds or even thousands of dollars. If it is a smaller device that plugs into the AC outlet and lies freely about, it is probably an SPS—these cost between $25 and $100. You should realize that some care should be taken when planning the type of UPS to be used. When data is crucial, you had better plan for a quality UPS!

Just about everything in the server room should be connected to a UPS (you will most likely need several) to protect from power outages. This includes servers, monitors, switches, routers, CSU/DSUs, PBX equipment, security cameras, and workstations; really, everything in the server room!

Backup Generators

What if power to the building does fail completely? Most would consider this a disaster, and over the long term it could possibly be. However, most power outages last 5 minutes or less on average, and most of the time a UPS can pick up the slack for these short outages, but not for the less common, longer outages that might last a few hours or days. Also, a UPS powers only the devices you plug into it. If your organization is to keep functioning, it will need a backup generator to power lights, computers, phones, and security systems during short-term outages or longer ones.

A backup generator is a part of an emergency power system used when there is an outage of regular electric grid power. Some emergency power systems might include special lighting and fuel cells, whereas larger, more commercial backup generators can power portions of a building, or an entire building, as long as fuel is available. For our scenario we should make sure that the backup generator powers the server room at the very least.

Backup generator fuel types include gasoline, diesel, natural gas, propane, and solar. Smaller backup generators often use gasoline, but these are not adequate for most companies. Instead, many organizations use larger natural gas generators. Some of these generators need to be started manually, but the majority of them are known as standby generators. These are systems that turn on automatically within seconds of a power outage. Transfer switches sense any power loss and instruct the generator to start. Standby generators may be required by code for certain types of buildings with standby lighting, or buildings with elevators, fire-suppression systems, and life-support equipment. You should always check company policy and your municipal guidelines before planning and implementing a backup generator system.

Backup generators can be broken into three types:

Portable gas-engine generator: The least expensive type; these run on gasoline or possibly solar power. They are noisy and high maintenance, must be started manually, and usually require extension cords. They pose a carbon monoxide risk and are adequate only for small operations and mobile scenarios. Gas-powered inverters are quieter but often come with a higher price tag per watt generated.

Permanently installed generator: Much more expensive, with a complex installation. These almost always run on either natural gas or propane. They are quieter and can be connected directly to the organization’s electrical panel. Usually, these are standby generators and, as such, require little user interaction.

Battery-inverter generator: These are based on lead-acid batteries, are quiet, and require little user interaction aside from an uncommon restart and change of batteries. They are well matched to environments that require a low amount of wattage or are the victims of short power outages only. Battery-inverter systems can be stored indoors, but because the batteries can release fumes, the area they are stored in should be well ventilated, such as an air-conditioned server room with external exhaust. Uninterruptible power supplies fall into the battery-inverter generator category.

Some of the considerations you should take into account when selecting a backup generator include the following:

Price: As with any organizational purchase, this will have to be budgeted.

How unit is started: Does it start automatically? Most organizations require this.

Uptime: How many hours will the generator stay on before needing to be refueled? This goes hand-in-hand with the next bullet.

Power output: How many watts does the system offer? Before purchasing a backup generator, you should measure the total maximum load your organization might use by running all computers, servers, lights, and other devices simultaneously, and measure this at the main electrical panel. Alternatively, you could measure the total on paper by adding the estimated power requirements of all devices together.

Fuel source: Does it run on natural gas, gasoline, and so on? If it is an automatically starting system, the options will probably be limited to natural gas and propane.
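The uptime and power output considerations above lend themselves to a quick paper calculation. The Python sketch below is one hedged way to do it: the 25 percent headroom, the tank size, the fuel burn rate, and the load figures are all assumptions chosen for illustration, not vendor or code requirements.

```python
def required_generator_watts(device_watts, headroom=0.25):
    """Paper estimate of generator size: summed load plus a safety margin.

    The 25 percent default headroom is an assumption for illustration.
    """
    return sum(device_watts) * (1 + headroom)


def hours_of_uptime(tank_liters, liters_per_hour):
    """Hours the generator can run on one tank at a steady burn rate."""
    if liters_per_hour <= 0:
        raise ValueError("liters_per_hour must be positive")
    return tank_liters / liters_per_hour


# Placeholder loads in watts: server room, lighting, phones and security.
loads = [4000, 1500, 500]

print("Generator should supply at least", required_generator_watts(loads), "W")
print("Estimated uptime:", hours_of_uptime(200, 8), "hours per tank")
```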

Some vendors that offer backup generators include Generac, Gillette, and Kohler. These devices should be monitored periodically; most vendors will try to sell you a service contract, which might be wise depending on the size of your organization. We discuss service contracts and service-level agreements in Chapter 18, “Policies and Procedures.”

Remember that your mission-critical devices, such as servers, should constantly be drawing power from a line-conditioning device. Then, if there is a power outage to the server, a UPS should kick in. (In some cases, the UPS also acts as the line-conditioning device.) Finally, if necessary, a backup generator will come online and feed all your critical devices with power.

Redundant Data

Now that we have power taken care of, we can move on to the heart of the matter—data. Data can fail due to file corruption and malicious intent, among other things. Power failures, hard drive failures, and user error can all lead to data failure. As always, it’s the data that we are most interested in securing, so it stands to reason that the data should be redundant as well. But which data? There is so much of it! Well, generally file servers should have redundant data sets of some sort. If an organization has the budgeting, next on the list would be databases and then web and file servers. However, in some instances these additional servers might be better off with failover systems as opposed to redundant data arrays. And certainly, the majority of client computers’ data does not constitute a reason for RAID. So we concentrate on the file servers in our original scenario in the beginning of the chapter.

The best way to protect file servers’ data is to use some type of redundant array of disks. This is referred to as RAID (an acronym for redundant array of independent disks, or inexpensive disks). RAID technologies are designed to either increase the speed of reading and writing data or to create one of several types of fault-tolerant volumes, or to do both. From a security viewpoint, we are most interested in the availability of data, the fault tolerance (the capability to withstand failure) of our disks. A RAID array can be internal or external to a computer. Historically, RAID arrays were configured as SCSI chains, but nowadays you also find SATA, eSATA, and Fibre Channel. Either way, the idea is that data is being stored on multiple disks that work with each other. The number of disks and the way they work together is dependent on the level of RAID. For the exam, you need to know several levels of RAID including RAID 0, RAID 1, RAID 5, RAID 6, and RAID 10 (also known as RAID 1+0). Table 16-1 describes each of these. Note that RAID 0 is the only one listed that is not fault tolerant, so from a security perspective it is not a viable option. Nevertheless, you should know it for the exam.

RAID Level |

Description |

Fault Tolerant? |

Minimum Number of Disks |

RAID 0 |

Striping Data is striped across multiple disks to increase performance. |

No |

Two |

RAID 1 |

Mirroring Data is copied to two identical disks. If one disk fails, the other continues to operate. See Figure 16-1 for an illustration. This RAID version allows for the least amount of downtime because there is a complete copy of the data ready at a moment’s notice. When each disk is connected to a separate controller, this is known as disk duplexing. |

Yes |

Two (and two only) |

RAID 5 |

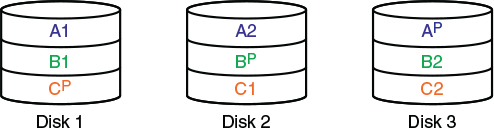

Striping with Parity Data is striped across multiple disks; fault-tolerant parity data is also written to each disk. If one disk fails, the array can reconstruct the data from the parity information. See Figure 16-2 for an illustration. |

Yes |

Three |

RAID 6 |

Striping with Double Parity Data is striped across multiple disks as it is in RAID 5, but there are two stripes of parity information. This usually requires another disk in the array. This system can operate even with two failed drives and is more adequate for time-critical systems. |

Yes |

Four |

RAID 0+1 |

Combines the advantages of RAID 0 and RAID 1. Requires a minimum of four disks. This system contains two RAID 0 striped sets. Those two sets are mirrored. |

Yes |

Four |

RAID 10 (also known |

Combines the advantages of RAID 1 and RAID 0. Normally requires a minimum of four disks. This system contains at least two RAID 1 mirrors that are then striped. |

Yes |

Four |
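The minimum disk counts in Table 16-1 go hand in hand with how much of the raw capacity each level leaves for data. The following Python sketch estimates usable capacity in whole disks, assuming all disks in the array are the same size. The function name and the level labels are hypothetical, and it follows the table in treating RAID 1 as exactly two disks.

```python
def usable_disks(level, disk_count):
    """Return how many disks' worth of space holds data (equal-size disks).

    level is one of "0", "1", "5", "6", "0+1", "10".
    """
    rules = {
        "0": (2, lambda n: n),         # striping only, no redundancy
        "1": (2, lambda n: 1),         # one disk mirrors the other
        "5": (3, lambda n: n - 1),     # one disk's worth of parity
        "6": (4, lambda n: n - 2),     # two disks' worth of parity
        "0+1": (4, lambda n: n // 2),  # half the disks are mirror copies
        "10": (4, lambda n: n // 2),
    }
    if level not in rules:
        raise ValueError(f"unknown RAID level: {level}")
    minimum, formula = rules[level]
    if disk_count < minimum:
        raise ValueError(f"RAID {level} needs at least {minimum} disks")
    if level == "1" and disk_count != 2:
        raise ValueError("RAID 1 is modeled here as exactly two disks")
    if level in ("0+1", "10") and disk_count % 2:
        raise ValueError(f"RAID {level} needs an even number of disks")
    return formula(disk_count)


for level, disks in [("0", 2), ("1", 2), ("5", 4), ("6", 4), ("10", 4)]:
    print(f"RAID {level} with {disks} disks: {usable_disks(level, disks)} usable")
```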

Figure 16-1 shows an illustration of RAID 1; you can see that data is written to both disks and that both disks collectively are known as the M: drive or M: volume. Figure 16-2 displays an illustration of RAID 5. In a RAID 5 array, blocks of data are distributed to the disks (A1 and A2 are a block, B1 and B2 are a block, and so on), and parity information is written for each block of data. This is written to each disk in an alternating fashion (Ap, Bp, and such) so that the parity is also distributed. If one disk fails, the array uses the remaining data and the parity information on the other disks to reconstruct the missing data. It is important to make the distinction between fault tolerance and backup. Fault tolerance means that the hard drives can continue to function (with little or no downtime) even if there is a problem with one of the drives. Backup means that we are taking the data and copying it (and possibly compressing it) to another location for archival in the event of a disaster. An example of a disaster would be if two drives in a RAID 5 array were to fail. If an organization is worried that this disaster could happen, it should consider RAID 6, or RAID 10.
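The RAID 5 parity idea described above can be demonstrated with exclusive OR (XOR), the operation commonly used to compute single parity. The Python sketch below is a toy model only: the block names `a1`, `a2`, and `ap` mirror the labels in the description, the byte strings are made-up sample data, and a real controller works on disk stripes rather than short strings.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equal-length byte blocks together, position by position."""
    return bytes(reduce(lambda x, y: x ^ y, column) for column in zip(*blocks))


# Two data blocks of one stripe (sample data, equal length).
a1 = b"HELLO"
a2 = b"WORLD"

# Parity block for the stripe, stored on a third disk.
ap = xor_blocks([a1, a2])

# Simulate losing the disk that held a1: rebuild it from a2 and the parity.
recovered_a1 = xor_blocks([a2, ap])
print(recovered_a1 == a1)
```

Because XOR is its own inverse, any one missing block in a stripe can be rebuilt from the others. Losing two blocks of the same stripe leaves too little information, which is why two failed drives in a RAID 5 array count as a disaster.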

Windows servers support RAID 0, 1, and 5 (and possibly 6 depending on the version) within the operating system. But most client operating systems cannot support RAID 1, 5, and 6. However, they can support hardware controllers that can create these arrays. Some motherboards have built-in RAID functionality as well.

Hardware is always the better way to go when it comes to RAID. Having a separate interface that controls the RAID configuration and handling is far superior to trying to control it with software within an operating system. The hardware could be an adapter card installed inside the computer, or an external box that connects to the computer or even to the network. When it comes to RAID in a network storage scenario, you are now dealing with network attached storage (NAS). These NAS points can be combined to form a storage area network (SAN), but any type of network attached storage will cost more money to an organization.

You can classify RAID in three different ways; these classifications can help when you plan which type of RAID system to implement.

Failure-resistant disk systems: Protect against data loss due to disk failure. An example of this would be RAID 1 mirroring.

Failure-tolerant disk systems: Protect against data loss due to any single component failure. An example of this would be RAID 1 mirroring with duplexing.

Disaster-tolerant disk systems: Protect data by the creation of two independent zones, each of which provides access to stored data. An example of this would be RAID 0+1.

As mentioned, whatever you implement, the data must be accessible, and in many cases highly available. The properly planned RAID system will have high availability (HA) and will be scalable; for example, a RAID 6 system that allows you to dynamically add hot-swappable disks—and the space to add them! You want the system to be elastic as well. Some RAID systems have better elasticity than others, meaning the ability to adapt to workload changes. You might even consider moving certain data away from internal RAID and on to the cloud for additional elasticity.

Of course, no matter how well you protect the data from failure, users still need to access the data, and to do so might require some redundant networking.

Redundant Networking

Network connections can fail as well. And we all know how users need to have the network up and running—or there will be heck to pay. The security of an organization can be compromised if networking connections fail. Some types of connections you should consider include the following:

Server network adapter connections

Main connections to switches and routers

The Internet connection

So basically, when I speak of redundant networking, I’m referring to any network connection of great importance that could fail. Generally, these connections will be located in the server room.

Redundant network adapters are commonly used to decrease or eliminate server downtime in the case that one network adapter fails. However, you must consider how they will be set up. Optimally, the second network adapter will take over immediately when the first one fails, but how will this be determined? There are applications that can control multiple network adapters, or the switch that they connect to can control where data is directed in the case of a failure. Also, multiple network adapters can be part of an individual collective interface. What you decide will be dictated by company policy, budgeting, and previously installed equipment. As a rule of thumb, you should use like network adapters when implementing redundancy; check the model and the version of the particular model to be exact. When installing multiple network adapters to a server, that computer then becomes known as a multihomed machine. It is important to consider how multiple adapters (and their operating systems) will behave normally and during a failure. Microsoft has some notes about this; I left a link in the “View Recommended Resources” online document that accompanies this book. In some cases, you will install multiple physical network adapters, and in others you might opt for a single card that has multiple ports, such as a multi-Ethernet port Intel network adapter. This is often a cheaper solution than installing multiple cards but provides a single point of failure in the form of one adapter card and one adapter card slot. In our original scenario we had domain controllers, database servers, web servers, and file servers; these would all do well with the addition of redundant network adapters.

Companies should always have at least one backup switch sitting on the shelf. If the company has only one switch, it is a desperate single point of failure. If a company has multiple switches stacked in a star-bus fashion, the whole stack can be a single point of failure unless special backup ports are used (only available on certain switches). These special ports are often fiber-optic-based and are designed either for high-speed connections between switches or for redundancy. This concept should be employed at the master switch in a hierarchical star as well to avoid a complete network collapse. However, the hierarchical star is more secure than a star-bus configuration when it comes to network failure. In a hierarchical star, certain areas of the network still function even if one switch fails. This is a form of redundant topology.

Finally, your ISP is susceptible to failure as well—as I’m sure you are well aware. Most organizations rely on just one Internet connection for their entire network. This is another example of a single point of failure. Consider secondary connections to your ISP, such as redundant fiber-optics, forming what is known as a redundant ISP. Or, if you have a T-1 line, perhaps a BRI connection will do. Or if you have a T-3, perhaps a PRI connection would be best. At the very least, a set of dial-up connections can be used for redundancy. Some companies install completely fault-tolerant, dual Internet connections, the second of which comes online immediately following a failure. If you use a web host for your website and/or e-mail, consider a mirror site or more than one. Basically, in a nutshell, it’s all about not being caught with your pants down. If an organization is without its Internet connection for more than a day (or hours in some cases), you know it will be the network admin and the security admin who will be the first on the chopping block, most likely followed by the ISP.

Note

Network devices will fail. It’s just a matter of time. Chapter 12, “Vulnerability and Risk Assessment,” mentioned the concept of mean time between failures (MTBF), a reliability term for the average operating time between failures of a device. It is usually given in hours, and its inverse, the failure rate, is often quoted as the number of failures per million hours of use. MTBF, along with mean time to repair (MTTR) and mean time to failure (MTTF), should be incorporated into your thought process when considering redundant networking.
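One common way to fold MTBF and MTTR into your planning is the steady-state availability ratio, MTBF / (MTBF + MTTR). The short sketch below applies it to a single switch and then to a redundant pair. The hour figures are hypothetical, and the redundant-pair calculation assumes the two devices fail independently, which is a simplification.

```python
def availability(mtbf_hours, mttr_hours):
    """Steady-state availability: the fraction of time the device is up."""
    return mtbf_hours / (mtbf_hours + mttr_hours)


def parallel_availability(single, count):
    """Availability of `count` redundant devices when any one of them is enough.

    Assumes failures are independent, which real networks only approximate.
    """
    return 1 - (1 - single) ** count


# Hypothetical figures for one switch: 50,000 hours MTBF, 8 hours to repair.
switch = availability(mtbf_hours=50_000, mttr_hours=8)
pair = parallel_availability(switch, 2)
print(f"one switch: {switch:.6f}, redundant pair: {pair:.9f}")
```

The point of the exercise is the comparison, not the exact numbers: a second device shrinks the expected downtime far more than a modest improvement in the MTBF of a single device would.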

Redundant Servers

Let’s take it to the next level and discuss redundant servers. When redundant network adapters and disks are not enough, you might decide to cluster multiple servers together that act as a single entity. This will be more costly and require more administration but can provide a company with low downtime and a secure feeling. Two or more servers that work with each other are a cluster.

The clustering of servers can be broken down into two types:

Failover clusters: Otherwise known as high-availability clusters, these are designed so that a secondary server can take over in the case that the primary one fails, with limited or no downtime. A failover cluster can reduce the chance of a single point of failure on a server, regardless of what failed on that server—hard disk, CPU, memory, and so on. An example of a failover cluster would be the usage of two Microsoft domain controllers. When the first domain controller fails, the secondary domain controller should be ready to go at a moment’s notice. There can be tertiary and quaternary servers and beyond as well. It all depends on how many servers you think might fail concurrently. Another example would be the DNS server we talked about in the beginning of the chapter. If we wanted the DNS server to fail-closed, then we should set up a secondary DNS server as a failover, one that will be ready to go at a moment’s notice.

Load-balancing clusters: Load-balancing clusters are multiple computers connected together for the purpose of sharing resources such as CPU, RAM, and hard disks. In this way, the cluster can share CPU power, along with other resources, and balance the CPU load among all the servers. Microsoft’s Cluster Server is an example of this (although it can also act in failover mode), enabling parallel, high-performance computing. Several third-party vendors offer clustering software for operating systems and virtual operating systems as well. It is a common technique in web and FTP server farms, as well as in IRC servers, DNS servers, and NNTP servers.
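The difference between the two cluster types comes down to how the next server is chosen. The following is a rough sketch only: the `Cluster` class, its method names, and the node names are hypothetical, health is tracked by hand rather than by a real heartbeat, and simple round-robin stands in for whatever balancing method a real product uses.

```python
from itertools import cycle


class Cluster:
    """Toy model of a cluster; node health is set manually for illustration."""

    def __init__(self, nodes):
        self.nodes = list(nodes)        # priority order, primary first
        self.healthy = set(self.nodes)
        self._ring = cycle(self.nodes)  # round-robin position

    def mark_failed(self, node):
        self.healthy.discard(node)

    def failover_target(self):
        """Failover style: always the first healthy node in priority order."""
        for node in self.nodes:
            if node in self.healthy:
                return node
        raise RuntimeError("no healthy nodes left")

    def next_balanced(self):
        """Load-balancing style: rotate through the healthy nodes."""
        if not self.healthy:
            raise RuntimeError("no healthy nodes left")
        while True:
            node = next(self._ring)
            if node in self.healthy:
                return node


cluster = Cluster(["dc1", "dc2", "dc3"])
print(cluster.failover_target())   # primary handles everything
cluster.mark_failed("dc1")
print(cluster.failover_target())   # secondary takes over
print([cluster.next_balanced() for _ in range(4)])  # work spread over survivors
```

In failover mode the secondary servers sit idle until they are needed; in load-balancing mode every healthy server does work all the time, and a failure simply shrinks the pool.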

Data can also be replicated back and forth between servers as it often is with database servers and web servers. This is actually a mixture of redundant data (data replication) and server clustering.

However, it doesn’t matter how many servers you install in a cluster. If they are all local, they could all be affected by certain attacks or, worse yet, disasters. Enter the redundant site concept.

Redundant Sites

Well, we have implemented redundant arrays of disks, redundant network adapters, redundant power, and even redundant servers. What is left? Devising a mirror of the entire network! That’s right, a redundant site. Within the CIA triad, redundant sites fall into the category of availability. In the case of a disaster, a redundant site can act as a safe haven for your data and users. Redundant sites are sort of a gray area between redundancy and a disaster recovery method. If you have one and need to use it, a “disaster” has probably occurred. But, the better the redundant site, the less time the organization loses, and the less it seems like a disaster and more like a failure that you have prepared for. Of course, this all depends on the type of redundant site your organization decides on.

When it comes to the types of redundant sites, I like to refer to the story of Goldilocks and the three bears’ three bowls of porridge. One was too hot, one too cold—and one just right. Most organizations opt for the warm redundant site as opposed to the hot or cold. Let’s discuss these three now.

Hot site: A near duplicate of the original site of the organization that can be up and running within minutes (maybe longer). Computers and phones are installed and ready to go, a simulated version of the server room stands ready, and the vast majority of the data is replicated to the site on a regular basis in the event that the original site is not accessible to users for whatever reason. Hot sites are used by companies that would face financial ruin in the case that a disaster makes their main site inaccessible for a few days or even a few hours. This is the only type of redundant site that can facilitate a full recovery.

Warm site: Has computers, phones, and servers, but they might require some configuration before users can start working on them. The warm site will have backups of data that might need to be restored; they will probably be several days old. This is chosen the most often by organizations because it has a good amount of configuration yet remains less expensive than a hot site.

Cold site: Has tables, chairs, bathrooms, and possibly some technical setup—for example, basic phone, data, and electric lines. Otherwise, a lot of configuration of computers and data restoration is necessary before the site can be properly utilized. This type of site is used only if a company can handle the stress of being nonproductive for a week or more.

Although they are redundant, these types of sites are generally known as backup sites because if they are required, a disaster has probably occurred. A good network security administrator tries to plan for, and rely on, redundancy and fault tolerance as much as possible before having to resort to disaster recovery methods.

Redundant People

Well—not really redundant people (which I suppose would be clones), but rather the redundancy of a person’s role in the organization. A person doesn’t work for a company forever; in fact, the average length of employment for IT management persons is less than five years. This level of attrition is in part made up of persons who move to other departments, leave for another job, take leaves of absence, or retire. This leads to the important concept of succession planning: identifying internal people who understand the IT infrastructure and can take over in the event an important decision-maker departs; for example, IT directors, CIOs, CTOs, and other IT management persons. The concept trickles down to any IT person who works for the organization. That is where the concepts of job rotation and separation of duties become very important. A high attrition rate requires cross-training of employees. In smaller companies, the loss of one smart IT person could be tantamount to a disaster if no one else understands (or has access to) the critical systems. That could truly be a disaster from a personnel standpoint, but much more lethal is a disaster concerning actual data.

Disaster Recovery Planning and Procedures

Regardless of how much you planned out redundancy and fault tolerance, when disaster strikes, it can be devastating. There are three things that you should be concerned with as a network security administrator when it comes to disasters—your data, your server room, and the site in general. You need to have a powerful backup plan for your data and a comprehensive disaster recovery plan as well.

Data Backup

Disaster recovery (or DR for short) is pretty simple in the case of data. If disaster strikes, you had better have a good data backup plan, one that fits your organization’s needs and budget. Your company might have a written policy as to what should be backed up, or you might need to decide what is best. Data can be backed up to various media, other computers, SANs, NAS devices, and to the cloud, but generally, one of the best local media is tape backup.

There are several tape backup types you should be aware of for the exam. Most operating systems and third-party backup utilities support these types. Keep in mind that this list is not the end-all of backup types, but it gives a basic idea of the main types of data backups used in the field. When performing any of these types of backups, the person must select what to back up. It could be a folder or an entire volume. For the sake of simplicity, we call these folders.

Full backup: Backs up all the contents of a folder. The full backup can be stored on one or more tapes. If more than one is used, the restore process would require starting with the oldest tape and moving through the tapes chronologically one by one. Full backups can use a lot of space, causing a backup operator to use a lot of backup tapes, which can be expensive. Full backups can also be time-consuming if there is a lot of data. So, often, incremental and differential backups are used with full backups as part of a backup plan.

Incremental backup: Backs up only the contents of a folder that have changed since the last full backup or the last incremental backup. An incremental backup must be preceded by a full backup. Restoring the contents of a folder or volume would require a person to start with the full backup tape and then move on to each of the incremental backup tapes chronologically, ending with the latest incremental backup tape. Incremental backups started in the time of floppy disks when storage space and backup speed were limited. Some operating systems and backup systems associate an archive bit (or archive flag) with any file that has been modified; this indicates to the backup program that it should be backed up during the next backup phase. If this is the case, the incremental backup resets the bit after backup is complete.

Differential backup: Backs up only the contents of a folder that have changed since the last full backup. A differential backup must be preceded by a full backup. To restore data, a person would start with the full backup tape and then move on to the differential tape. Differential backups do not reset the archive bit when backing up. This means that incremental backups will not see or know that a differential backup has occurred.
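The archive bit is what really separates these three backup types, so a small simulation can help. This is a sketch under a couple of assumptions: the file names are invented, the `run_backup` function is hypothetical rather than part of any backup product, and the full backup is assumed to clear the archive bit, which is the usual behavior.

```python
def run_backup(files, kind):
    """Return the file names a backup of this kind would copy.

    `files` maps a file name to its archive bit (True means the file has
    changed since the bit was last cleared). The dictionary is updated in
    place to mimic what each backup type does to the bit.
    """
    if kind == "full":
        selected = sorted(files)
    elif kind in ("incremental", "differential"):
        selected = sorted(name for name, bit in files.items() if bit)
    else:
        raise ValueError(f"unknown backup kind: {kind}")

    # Full and incremental backups clear the bit; differential leaves it set.
    if kind in ("full", "incremental"):
        for name in selected:
            files[name] = False
    return selected


files = {"budget.xls": True, "plan.doc": True}
print(run_backup(files, "full"))          # everything is copied
files["plan.doc"] = True                  # a user edits one file
print(run_backup(files, "differential"))  # the changed file is copied
print(run_backup(files, "differential"))  # copied again; the bit is still set
print(run_backup(files, "incremental"))   # copied once more, bit now cleared
print(run_backup(files, "incremental"))   # nothing left to copy
```

Run it and you can see why differential tapes keep growing through the week while each incremental tape holds only the changes since the previous backup.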

Table 16-2 shows an example of a basic one-week backup schedule using the full and incremental backup types. A full backup is done on Monday, and incremental backups are done Tuesday through Friday.

Table 16-2 Example Incremental Backup Schedule

| Day | Backup Type | Time |
|---|---|---|
| Monday | Full backup | 6 p.m. |
| Tuesday | Incremental backup | 6 p.m. |
| Wednesday | Incremental backup | 6 p.m. |
| Thursday | Incremental backup | 6 p.m. |
| Friday | Incremental backup | 6 p.m. |

In this schedule, five backup tapes are required, one for each day. Let’s say that the backups are done at 6 p.m. daily. Often an organization might employ a sixth tape, which is a dummy tape. This tape is put in the tape drive every morning by the backup operator and is replaced with the proper daily tape at 5:30 p.m. when everyone has left the building. This prevents data theft during the day. The real tapes are kept locked up until needed. Tapes might be reused when the cycle is complete, or an organization might opt to archive certain tapes each week, for example, the full backup tapes, and use new tapes every Monday. Another option is to run a complete full backup (which might be time-consuming) over the weekend and archive that tape every Monday. As long as no data loss is reported, this is a feasible option.

Let’s say that this backup procedure was used to back up a server. Now, let’s say that the server crashed on Wednesday at 9 p.m., and the hard drive data was lost. A backup operator arriving on the scene Thursday morning would need to review any logs available to find out when the server crashed. Then, after an admin fixes the server, the backup operator would need to restore the data. This would require starting with the Monday full backup tape and continuing on to the Tuesday and Wednesday incremental backup tapes. So three tapes in total would be needed to complete the restore.

Table 16-3 shows another possible backup schedule where a full backup is done on Monday and differential backups are done on Wednesday and Friday.

Table 16-3 Example Differential Backup Schedule

| Day | Backup Type | Time |
|---|---|---|
| Monday | Full backup | 6 p.m. |
| Tuesday | None | |
| Wednesday | Differential backup | 6 p.m. |
| Thursday | None | |
| Friday | Differential backup | 6 p.m. |

Let’s say the backup operator needed to restore data on Monday morning due to a failure over the weekend. The backup operator would need two backup tapes, the previous Monday full backup and the Friday differential backup, because the differential backup would have backed up everything since the last full backup. The Wednesday differential backup would not be necessary for recovery; contrast this with the incremental backup schedule from Table 16-2 where each tape would be needed for restoration. In a differential backup scenario the “clear archive bit” is not selected, so a differential backup will back up things that may have already been backed up by a previous differential backup. In an incremental backup scenario, the “clear archive bit” option is selected, and so items that are backed up by an incremental are not backed up by a subsequent incremental.
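The two restore scenarios can be boiled down to one rule: find the most recent full backup, then take either every incremental after it or only the latest differential. Here is a minimal sketch of that rule. The `tapes_for_restore` function and the tape labels are hypothetical, and a schedule that mixes incrementals and differentials after the same full backup is deliberately left out of scope.

```python
def tapes_for_restore(history):
    """Return the tape labels needed for a restore, in the order to apply them.

    `history` is a chronological list of (label, kind) pairs covering the
    backups that completed before the failure.
    """
    fulls = [i for i, (_, kind) in enumerate(history) if kind == "full"]
    if not fulls:
        raise ValueError("a restore needs at least one full backup")

    start = fulls[-1]
    needed = [history[start][0]]
    later = history[start + 1:]
    kinds = {kind for _, kind in later}

    if kinds == {"incremental"}:
        needed += [label for label, _ in later]   # every incremental, in order
    elif kinds == {"differential"}:
        needed.append(later[-1][0])               # only the newest differential
    elif kinds:
        raise ValueError("mixed schedules are outside the scope of this sketch")
    return needed


# Table 16-2 scenario: the server crashes Wednesday night.
print(tapes_for_restore([("Mon", "full"), ("Tue", "incremental"),
                         ("Wed", "incremental")]))

# Table 16-3 scenario: a failure over the weekend.
print(tapes_for_restore([("Mon", "full"), ("Wed", "differential"),
                         ("Fri", "differential")]))
```

The first call asks for three tapes and the second for two, which matches the restores described for Tables 16-2 and 16-3.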

Some operating systems such as Windows Server manage full and incremental backups for the administrator. Windows Server 2008 R2 and higher create incremental backups that behave as full backups. Any item can be recovered if need be, but the backup only occupies the space needed for an incremental backup.

Now, the schedules we just showed in Tables 16-2 and 16-3 are basic backup methods, also known as backup rotation schemes. Organizations might also do something similar over a two-week period. However, you should also be aware of a couple of other backup schemes used in the field. These might use one or more of the backup types mentioned previously.

10 tape rotation: This method is simple and provides easy access to data that has been backed up. It can be accomplished during a two-week backup period; each tape is used once per day for two weeks. Then the entire set is recycled. Generally, this is similar to the one-week schedule shown previously; however, the second Monday might be a differential backup instead of a full backup. And the second Friday might be a full backup, which is archived. There are several options; you would need to run some backups and see which is best for you given the number of tapes required and time spent running the backups.

Grandfather-father-son: This backup rotation scheme is probably the most common backup method used. When attempting to use this scheme, three sets of backup tapes must be defined—usually they are daily, weekly, and monthly, which correspond to son, father, and grandfather. Backups are rotated on a daily basis; normally the last one of the week will be graduated to father status. Weekly (father) backups are rotated on a weekly basis, with the last one of the month being graduated to grandfather status. Often, monthly (grandfather) backups, or a copy of them, are archived offsite.

Towers of Hanoi: This backup rotation scheme is based on the mathematics of the Towers of Hanoi puzzle. This also uses three backup sets, but they are rotated differently. Without getting into the mathematics behind it, the basic idea is that the first tape is used every second day, the second tape is used every fourth day, and the third tape is used every eighth day. Table 16-4 shows an example of this. Keep in mind that this can go further; a fourth tape can be used every 16th day, and a fifth tape every 32nd day, and so on, although it gets much more complex to remember what tapes to use to back up and which order to go by when restoring. The table shows an example with three tape sets represented as sets A, B, and C.

Table 16-4 Example of Towers of Hanoi Three-Tape Schedule

| Day of the Cycle | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|
| Tape | A | | A | | A | | A | |
| | | B | | | | B | | |
| | | | | C | | | | C |

To avoid the rewriting of data, start on the fourth day of the cycle with tape C. This rotation scheme should be written out and perhaps calculated during the planning stage before it is implemented. Also, due to the complexity of the scheme, a restore sequence should be tested as well.
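Because this scheme is easy to get wrong by hand, it can help to generate the rotation rather than memorize it. The sketch below assumes the usual "ruler" pattern behind the Towers of Hanoi rotation: the tape for a given day is decided by how many times the day number divides evenly by two, and the last tape set picks up every slot the earlier sets do not cover. The `hanoi_tape` function and the A, B, C labels are illustrative only.

```python
def hanoi_tape(day, tape_count=3):
    """Return the zero-based tape set index for a 1-based day of the cycle."""
    if day < 1:
        raise ValueError("days are numbered starting at 1")
    # Position of the lowest set bit = how many times the day divides by two.
    trailing_zeros = (day & -day).bit_length() - 1
    # The last tape set absorbs every remaining slot.
    return min(trailing_zeros, tape_count - 1)


labels = "ABC"
schedule = [labels[hanoi_tape(day)] for day in range(1, 9)]
print(schedule)  # ['A', 'B', 'A', 'C', 'A', 'B', 'A', 'C']
```

With three tape sets this puts tape C on the fourth and eighth days of the cycle, in line with the advice to start tape C on day four. Passing a larger `tape_count` (and a longer label string) extends the rotation to a fourth or fifth set.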

Tapes should be stored in a cool, dry area, away from sunlight, power lines, and other power sources. Most tape backup vendors have specific guidelines as to the temperature and humidity ranges for storage, along with other storage guidelines. Tape backup methods and tape integrity should always be tested by restoring all or part of a backup.
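One simple way to check a test restore is to compare checksums of the original files against the restored copies. The following is a minimal sketch of that idea using SHA-256 from the Python standard library; the `verify_restore` function and the directory layout are assumptions for illustration, and a real backup product will normally have its own verification feature.

```python
import hashlib
from pathlib import Path


def sha256_of(path):
    """Hash a file in chunks so large files do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_restore(original_dir, restored_dir):
    """Return relative paths that are missing or different in the restored copy."""
    original_dir, restored_dir = Path(original_dir), Path(restored_dir)
    problems = []
    for source in sorted(p for p in original_dir.rglob("*") if p.is_file()):
        relative = source.relative_to(original_dir)
        restored = restored_dir / relative
        if not restored.is_file() or sha256_of(source) != sha256_of(restored):
            problems.append(str(relative))
    return problems
```

An empty list means every file in the original folder came back intact. Keep in mind that files changed since the backup was taken will show up as mismatches, so a check like this works best against data that has not been modified in the meantime.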

It’s also possible to archive data to a third party. This could be for backup purposes or for complete file replication. Several companies offer this type of service, and you can usually select to archive data over the Internet or by courier.

So far, we have been discussing how to back up groups of files. However, you can also back up entire systems or architectural instances. For instance, a snapshot backup backs up an entire application, drive, or system. It is also known as an image backup, especially when referring to backing up an entire operating system. Most of the time, this is done when a new system is installed and configured, but it can also be done when major changes are made to a system. Some organizations even back up images of all systems every month, even every week. This requires a lot of resources and a decent IT budget and so it must be planned accordingly.

Many organizations back up to tape. But some organizations are far too large for tape backup, and/or don’t have the personnel or equipment necessary to archive properly. In these “big data” scenarios, data might be stored on the cloud, or archived with a third party such as Iron Mountain. Whatever your data backup method, make sure that there is some kind of archival offsite in the case of a true disaster. Optimally, this will be in a sister site in another city but regardless should be geographically distant from the main site. It is an integral part of disaster recovery planning.

DR Planning

Before we can plan for disasters, we need to define exactly what disasters are possible and list them in order starting with the most probable. Sounds a bit morbid, but it’s necessary to ensure the long-term welfare of your organization.

What could go wrong? Let’s focus in on the server room in the beginning of the chapter as our scenario. As you remember, we have nine servers, networking equipment, a PBX, and a few workstations—a pretty typical server room for a midsized company. Keep in mind that larger organizations will have more equipment, bigger server rooms, and more to consider when it comes to DR planning.

Disasters can be divided into two categories: natural and manmade. Some of the disasters that could render your server room inoperable include the following:

![]() Fire: Fire is probably the number one planned-for disaster. This is partially because most municipalities require some sort of fire suppression system, as well as the fact that most organizations’ policies define the usage of a proper fire suppression system. We discuss fire protection in more depth in Chapter 17, “Social Engineering, User Education, and Facilities Security,” but for now, the three main types of fire extinguishers include A (for ash fires), B (for gas and other flammable liquid fires), and C (for electrical fires). Unfortunately, these and the standard sprinkler system in the rest of the building are not adequate for a server room. If there were a fire, the material from the fire extinguisher or the water from the sprinkler system would damage the equipment, making the disaster even worse! Instead, a server room should be equipped with a proper system of its own such as DuPont FM-200. This uses a large tank that stores a clean agent fire extinguishant that is sprayed from one or more nozzles in the ceiling of the server room. It can put out fires of all types in seconds. A product such as this can be used safely when people are present; however, most systems also employ a very loud alarm that tells all personnel to leave the server room. It is wise to run through several fire suppression alarm tests and fire drills, ensuring that the alarm will sound when necessary and that personnel know what do to when the alarm sounds. For example, escape plans should be posted, and battery-backup exit signs should be installed in various locations throughout the building so that employees know the quickest escape route in the case of a fire. Fire drills (and other safety drills) should be performed periodically so that the organization can analyze the security posture of their safety plan.

![]() Flood: The best way to avoid server room damage in the case of a flood is to locate the server room on the first floor or higher, not in a basement. There’s not much you can do about the location of a building, but if it is in a flood zone, the use of a warm or hot site becomes that much more imperative. A server room could also be flooded by sources inside the building, such as boilers. The room should not be adjacent to, or on the same floor as, a boiler room. It should also be located away from other water sources such as bathrooms and any sprinkler systems. The server room should be thought of three-dimensionally; the floors, walls, and ceiling should be analyzed and protected. Some server rooms are designed to be a room within a room and might have drainage installed as well.

![]() Long-term power loss: Short-term power loss should be countered by the UPS, but long-term power loss requires a backup generator and possibly a redundant site.

![]() Theft and malicious attack: Theft and malicious attack can also cause a disaster if critical data is stolen. Physical security such as door locks/access systems and video cameras should be implemented to avoid this. Servers should be cable-locked to their server racks, and removable hard drives (if any are used) should have key access. Not only does a security administrator have the task of writing policies and procedures that govern the security of server rooms and data centers, but that person will often have the task of enforcing those policies. Enforcement can mean muscle in the form of security guards and dual-class technician/guards, or it can mean having the right to terminate employees as needed, contact and work with the authorities, and so on. Physical security is covered in more depth in Chapter 10, “Physical Security and Authentication Models.” Malicious network attacks also need to be warded off; these are covered in depth in Chapter 7, “Networking Protocols and Threats.”

![]() Loss of building: Temporary loss of the building due to gas leak, malicious attack, inaccessibility due to crime scene investigation, or natural event will require personnel to access a redundant site. Your server room should have as much data archived as possible, and the redundant site should be warm enough to keep business running. A plan should be in place as to how data will be restored at the redundant site and how the network will be made functional.

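
The layered response to power loss described in the list above (UPS first, then a backup generator, then a redundant site) can be sketched as a simple decision. This is only an illustration: the `PowerProtection` class, the `power_loss_response` function, and the runtime figures are hypothetical and would need to come from your own equipment and fuel contracts.

```python
from dataclasses import dataclass


@dataclass
class PowerProtection:
    """Hypothetical capacities for one server room (illustrative values only)."""
    ups_runtime_min: float        # minutes the UPS can carry the load
    generator_runtime_min: float  # minutes of generator fuel on hand (0 if none)


def power_loss_response(outage_min: float, site: PowerProtection) -> str:
    """Pick the first layer of protection that can cover the expected outage."""
    if outage_min <= site.ups_runtime_min:
        return "ride through on UPS"
    if outage_min <= site.ups_runtime_min + site.generator_runtime_min:
        return "start backup generator"
    return "fail over to redundant site"


# Assumed figures: a 20-minute UPS and 48 hours of generator fuel.
room = PowerProtection(ups_runtime_min=20, generator_runtime_min=48 * 60)
for outage in (5, 240, 7 * 24 * 60):
    print(f"{outage:>6} min outage: {power_loss_response(outage, room)}")
```

In practice the expected outage length is rarely known in advance, so a sketch like this is more useful for planning capacity ahead of time than for making decisions during an outage.
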
Disaster recovery plans (DRPs) should include information regarding redundancy, such as sites and backup, but should not include information that deals with the day-to-day operations of an organization, such as updating computers, patch management, monitoring and audits, and so on. It is important to include only what is necessary in a disaster recovery plan. Too much information can make it difficult to use when a disaster does strike.

Although not an exhaustive set, the following written disaster recovery policies, procedures, and information should be part of your disaster recovery plan:

![]() Contact information: Who you should contact if a disaster occurs and how employees will contact the organization.

![]() Impact determination: A procedure to determine a disaster’s full impact on the organization. This includes an evaluation of assets lost and the cost to replace those assets.

![]() Recovery plan: This will be based on the determination of disaster impact and will have many permutations depending on the type of disaster. Although it is impossible to foresee every possible event, the previous list of disasters gives a good starting point. The recovery plan includes an estimated time to complete recovery and a set of steps defining the order of what will be recovered and when. It might also include an after-action report (AAR), which is a formal document designed to determine the effectiveness of a recovery plan if it was implemented.

![]() Business continuity plan: A BCP defines how the business will continue to operate if a disaster occurs; this plan is often carried out by a team of individuals. A BCP is also referred to as a continuity of operations plan (COOP). Over the years, BCPs have become much more important, and depending on the organization, the BCP might actually encompass the entire DRP. It also comprises business impact analysis, the examination of critical versus noncritical functions. These functions are assigned two different values or metrics: recovery time objective (RTO), the acceptable amount of time to restore a function (for example, the time required for a service to be restored after a disaster), and recovery point objective (RPO), the acceptable latency of data, that is, the maximum period of recent data, measured back in time from the disaster, that the organization can tolerate losing. It’s impossible to foresee exactly how long it will take to restore service after a disaster, but with the use of proper archival, hot/warm/cold sites, and redundant systems, a general timeframe can be laid out, and an organization will be able to decide on a maximum timeframe to get data back online. This in effect is IT contingency planning (ITCP).

Some organizations will have a continuity of operations planning group or crisis management group that meets periodically to discuss the BCP. Instead of running full-scale drills, they might run through tabletop exercises, where a talk-through of simulated disasters (in real time) is performed, a sort of role-playing, if you will. This can save time and be less disruptive to employees, but it is more than just a read-through of the BCP. It can help to identify critical systems and mission-essential functions of the organization’s network as well as failover functionality and alternate processing sites. It can also aid in assessing the impact of a potential disaster on privacy, property, finance, the reputation of the company, and most importantly, life itself.

![]() Copies of agreements: Copies of any agreements with vendors of redundant sites, ISPs, building management, and so on should be stored with the DR plan. We discuss agreements in Chapter 18, “Policies and Procedures.”

![]() Disaster recovery drills and exercises: Employees should be drilled on what to do if a disaster occurs. These exercises should be written out step-by-step and should conform to safety standards.

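
To make the recovery order and the RTO and RPO metrics from the list above more concrete, here is a minimal sketch of how a planning group might check a draft recovery plan on paper. Everything in it is an assumption for illustration: the `Function` class, the sample functions, and their hour values are invented, functions are assumed to be restored one at a time, and RPO is treated as the acceptable age of the newest recoverable data, so a backup interval longer than the RPO fails the check.

```python
from dataclasses import dataclass


@dataclass
class Function:
    """One business function from a hypothetical business impact analysis."""
    name: str
    critical: bool
    rto_hours: float              # acceptable time to restore the function
    rpo_hours: float              # acceptable age of the newest recoverable data
    restore_hours: float          # estimated time needed to restore it
    backup_interval_hours: float  # how often its data is backed up


def recovery_order(functions):
    """Critical functions first, then the tightest RTO first."""
    return sorted(functions, key=lambda f: (not f.critical, f.rto_hours))


def review(functions):
    """Walk the recovery order, assuming functions are restored one at a time."""
    elapsed = 0.0
    findings = []
    for f in recovery_order(functions):
        elapsed += f.restore_hours
        meets_rto = elapsed <= f.rto_hours
        # Worst case: the disaster strikes just before the next backup runs.
        meets_rpo = f.backup_interval_hours <= f.rpo_hours
        findings.append((f.name, elapsed, meets_rto, meets_rpo))
    return findings


# Invented sample data; real values come from the business impact analysis.
plan = [
    Function("file server", False, rto_hours=48, rpo_hours=24,
             restore_hours=6, backup_interval_hours=24),
    Function("order database", True, rto_hours=4, rpo_hours=1,
             restore_hours=3, backup_interval_hours=4),
    Function("e-mail", True, rto_hours=8, rpo_hours=12,
             restore_hours=2, backup_interval_hours=12),
]

for name, done_at, meets_rto, meets_rpo in review(plan):
    print(f"{name}: restored at hour {done_at:g}, "
          f"RTO met={meets_rto}, RPO met={meets_rpo}")
```

With these sample numbers, the order database would be restored first and within its RTO, but its four-hour backup interval exceeds its one-hour RPO, which suggests more frequent backups or replication for that function.
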