CHAPTER 4

Server Storage

In this chapter, you will

• Learn about various storage technologies

• Understand why SSDs are superior to HDDs

• Plan storage capacity

• Learn about cloud storage options

• Understand network storage

• Learn how to install storage devices

• Recognize ways to maintain file systems

• Discover a variety of RAID configuration options

Today’s server storage implementations can be configured from a wide array of options based on several considerations, including whether the stored data is directly available to the server or whether it is accessible to the server over a network.

A variety of storage technologies are in use today. Large server rooms and data centers tend to use a collection of rack-mounted storage arrays available over a dedicated storage area network (SAN). For cloud-computing consumers, additional storage can be provisioned in seconds with the click of a mouse. Disks can be grouped together to increase disk read and write performance, to increase fault tolerance when disks fail, or to do both.

You need to understand storage options at both hardware and software levels, because when the data storage is seen by the server operating system, it needs to be configured—initialized, partitioned, and formatted. In this chapter, you will learn how to plan, choose, and configure storage to meet business needs.

Storage Technologies

A wide array of factors will influence your storage decisions, including decisions regarding where the storage will exist. Are you using cloud storage? Are the storage disks located inside the server itself, or are they accessible over an enterprise network?

Similar to server form factors, storage device dimensions and form factors have to be considered so that storage devices can be physically accommodated within servers or storage arrays. Today, 3.5-inch large form factor (LFF) hard drives are common. (This measurement refers to the diameter of the disk platters.) Small form factor (SFF) disks are 2.5 inches. (Note that you don’t need to be concerned with form factors when it comes to external USB drives.)

Regardless of the type of storage in use, two of your main concerns will be the storage capacity and how quickly you can read and write to storage. At the server level, today’s storage (per disk) falls somewhere in the 1 to 8 terabyte (TB) range, but this is constantly evolving. Of course, grouping disks together provides vastly greater storage capacity.

Magnetic Hard Disks

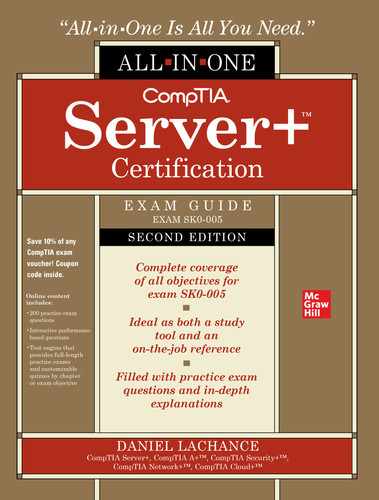

Old-school hard disks use magnetism to store data on metal platters. Hard disk drives (HDDs) are sealed against dust and other contaminants (they are not vacuum-sealed, because the heads float on a thin cushion of gas) and contain multiple platters, each having read and write heads on an actuator arm to read and write data as the platter spins. You can see the actuator arm extended over the hard disk platter in Figure 4-1. Numerous factors influence disk speeds, as listed in Table 4-1.

Figure 4-1 The internal components of a magnetic hard disk

Table 4-1 Storage Speed Factors

The norm for desktop disks is 7200 RPMs; laptop disk speeds are around 5400 RPMs. Fast server hard drives spin at 15,000 RPMs, and you’ll pay more for these drives than for slower 10,000 RPM drives. Keep in mind that an increase in RPMs means a decrease in rotational latency, which translates to faster data access times.
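The link between spindle speed and rotational latency can be checked with simple arithmetic: on average, the platter has to turn half a revolution before the requested sector passes under the head. The following Python sketch computes that figure for the speeds mentioned above. The function name is made up for this illustration, and the result ignores seek time and transfer time, which also affect real-world access times.

```python
def avg_rotational_latency_ms(rpm: int) -> float:
    """Average rotational latency: the time for half a revolution, in ms."""
    seconds_per_revolution = 60.0 / rpm
    return seconds_per_revolution / 2 * 1000


# Spindle speeds mentioned in the text: laptop, desktop, and server drives.
for rpm in (5400, 7200, 10_000, 15_000):
    print(f"{rpm:>6} RPM: {avg_rotational_latency_ms(rpm):.2f} ms")
```

A 15,000 RPM drive waits roughly half as long as a 7200 RPM drive for the platter to rotate into position.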

Solid-State Drives

Solid-state drives (SSDs) have no moving parts, which means less physical wear and tear, less power required (no motors to drive), less heat, and less noise (less ventilation needs, as they run cooler because of reduced power draw). The biggest problem with SSDs, at least at the time of this writing, is that larger capacity SSDs are more expensive than the equivalent capacity magnetic hard disks.

Externally, SSDs aren’t much different from regular hard drives. Magnetic hard drives and SSDs can both be plugged in using the Serial Advanced Technology Attachment (SATA) interface. Configuring and using the disk in the server operating system is the same regardless of drive type. There are also internal SSDs that interface with the server via a PCI Express (PCIe) slot.

Some large external USB drives are simply enclosures that contain an internal magnetic hard disk. CompactFlash and Secure Digital (SD) storage are used for smaller devices such as cameras, audio recorders, and the like. The specific type of device, such as a digital camera, dictates whether you must use CompactFlash or SD storage. Both flash storage types are shown in Figure 4-2.

Figure 4-2 A USB flash drive (at left) and a 32GB SDHC (high capacity) flash memory card

Modern enterprise server environments often use a hybrid of SSDs and slower magnetic hard disks that are configured in different storage tiers. Different vendor solutions use varying methods of defining what gets stored on the faster SSDs; generally, frequently accessed data will reside on SSDs and less frequently accessed data is stored on the slower magnetic hard disks.

Public cloud providers also charge a premium when SSD storage is used. For example, when provisioning virtual machines in the cloud, we can opt for better performance by choosing a higher disk IOPS value. In the cloud, it’s important to deprovision unneeded resources (such as storage) to avoid unnecessary charges.

Hybrid Drives

You can probably guess what a hybrid drive is: it’s a combination of hard disk and solid-state technology in the same package. Often called solid-state hybrid drives (SSHDs), they consist of spinning platters as well as the faster flash memory. These drives cache frequently accessed data on the faster flash memory to improve performance.

Cost-wise, SSHDs fall between traditional hard drives and SSDs. Consider the following sample price comparisons (in US dollars):

• 1TB hard disk drive: $40

• 1TB SSHD: $60

• 1TB SSD: $90

You can see why SSDs are often used only for frequently accessed files or any type of disk-intensive activities related to reading and writing.

Storage Tiers

Not all storage and data are equal: SSDs offer better performance than hard disks, and valuable data should be quickly accessible. Storage administrators can configure storage-tier policies that determine which type of data will be stored on which specific storage media. In the industry, this is generally referred to as hierarchical storage management (HSM).

As an example, EMC (now part of Dell Technologies) offers the Fully Automated Storage Tiering (FAST) feature. Microsoft Windows Server also offers storage tier capabilities; you’ll need both SSD and HDD storage devices to set this up. After you create a storage pool from the physical disks and then create a virtual disk in the pool, you’ll get the option to create storage tiers on the virtual disk.

Because many servers today access data storage over a network, it makes sense to place tiered storage capabilities in front of SAN storage. Different vendor solutions have different naming conventions for storage tiers. Here are some examples:

Windows Server

• Tier 1 (SSD)

• Tier 2 (HDD)

Tier 1 storage (SSD) is used for frequently accessed and important data; Tier 2 storage (HDD) is used for less frequently accessed data.

EMC

• Extreme performance tier (fastest)

• Performance tier (middle of the road)

• Capacity tier (slowest)
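The general idea behind a tiering policy can be sketched in a few lines of Python. This is a simplified illustration only, not how Windows Server or EMC FAST actually decides placement; the access threshold, class name, and file names are assumptions invented for the example.

```python
from dataclasses import dataclass


@dataclass
class DataItem:
    name: str
    reads_per_day: int


def assign_tier(item: DataItem, hot_threshold: int = 100) -> str:
    """Place frequently accessed data on SSD and everything else on HDD."""
    if item.reads_per_day >= hot_threshold:
        return "Tier 1 (SSD)"
    return "Tier 2 (HDD)"


# Hypothetical items: a busy database and a rarely touched archive.
items = [DataItem("orders.db", 5000), DataItem("2015_archive.zip", 2)]
for item in items:
    print(item.name, "->", assign_tier(item))
```

Real products typically track access patterns over time and move data between tiers on a schedule rather than evaluating a single fixed threshold.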

Disk Interfaces

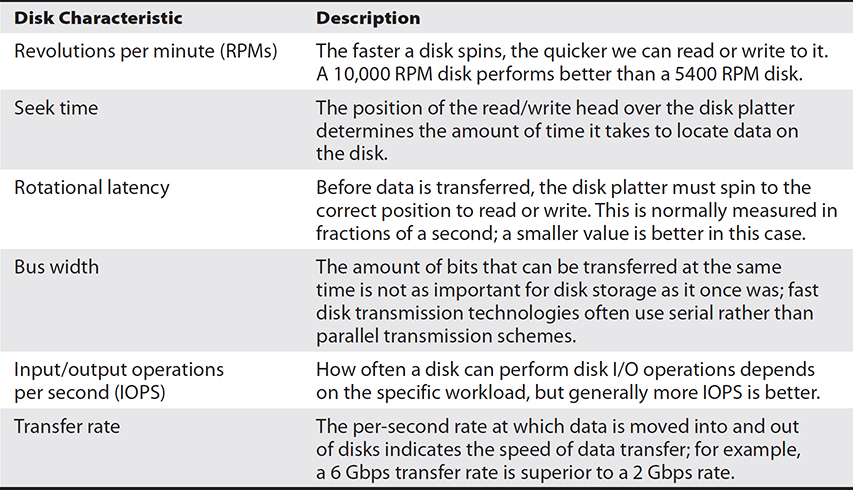

Over the years, some disk interface standards have stood the test of time and have evolved, while others have been replaced by newer technologies. Take, for instance, the old IDE (Integrated Drive Electronics) disk interface standard that was common in the 1980s and 1990s, which is no longer commonly used. Instead, Serial ATA (SATA) (Figure 4-3) and Fibre Channel (FC) are the norm in server environments.

Figure 4-3 SATA disk data cable connectors

Table 4-2 lists common disk interface characteristics.

Table 4-2 Disk Interfaces

Optical Drives

Optical drives have slowly begun to fade away. When is the last time you burned a CD or DVD, or even a Blu-ray disc? Probably not recently. With the availability of cheap and fast external USB devices, consumers have moved away from optical media in favor of USB storage devices because they are easy to work with, they accommodate many writes—and they just work! Writing multiple times to an open CD or DVD, on the other hand, can be tricky, and you need the right software to do it.

Optical storage media is considered a form of Write Once Read Many (WORM) media. This enables data to be written once to the media and then read many times. Newer operating systems, including Windows, support optical media burning without requiring that you install additional software, despite the fact that it isn’t as common nowadays as it once was. There are occasions when a server may require a local optical drive to boot from for recovery purposes or to install an operating system. As long as the server has a USB interface, you’ll be able to plug in an external optical drive if needed. With the advent of server virtualization, Preboot Execution Environment (PXE) network boot, and operating system imaging, installing from optical media has become less common.

Cloud Storage

Public cloud storage has become popular for consumers and enterprises, and no wonder! All that’s needed is an Internet connection and a cloud provider subscription. You can provision (and deprovision) storage instantaneously and pay only for the space you use and potentially for data transfers out of cloud storage. Legal or regulatory restrictions may prevent the use of public cloud storage entirely or in certain geographic regions. Certainly cloud storage is not appropriate for every scenario—it’s just another option.

Your on-premises storage (servers and storage enclosures) can be configured to replicate or back up to the cloud as well, so hybrid solutions are also possible. The great thing about cloud storage is that somebody else deals with the disk devices, their configuration, and their availability on the network. As cloud consumers, we can connect from pretty much any device (such as a smartphone, as shown in Figure 4-4) on any network to access our files.

Figure 4-4 Accessing cloud storage from an Android smartphone

Direct-Attached Storage

Direct-attached storage (DAS) is the traditional server storage model in which storage disks are housed inside the server chassis and are locally available only to that server. So if the server is not accessing storage over some kind of a network, it’s DAS.

Network-Attached Storage

File-sharing protocols such as Microsoft Server Message Block (SMB) and the Network File System (NFS) for UNIX enable user connectivity to shared file systems over a network. Common Internet File System (CIFS) is a specific implementation of SMB. The fact that these higher layer protocols are used is a factor that distinguishes network-attached storage (NAS) from storage area networks (SANs). Another factor is that servers connect to NAS storage over a network consisting of standard network equipment and using standard network protocols such as Internet Protocol (IP). SANs are specialized high-speed networks designed to transmit disk I/O traffic using protocols designed for this use.

NAS can come in the form of a hardware appliance, such as the Dell device shown in Figure 4-5, which is essentially a disk enclosure with wired and wireless network connectivity and web management built into its firmware. In other cases, your NAS storage may be served up from a server configured solely for this purpose.

Figure 4-5 PowerVault NX3200 NAS device, Courtesy of Dell, Inc.

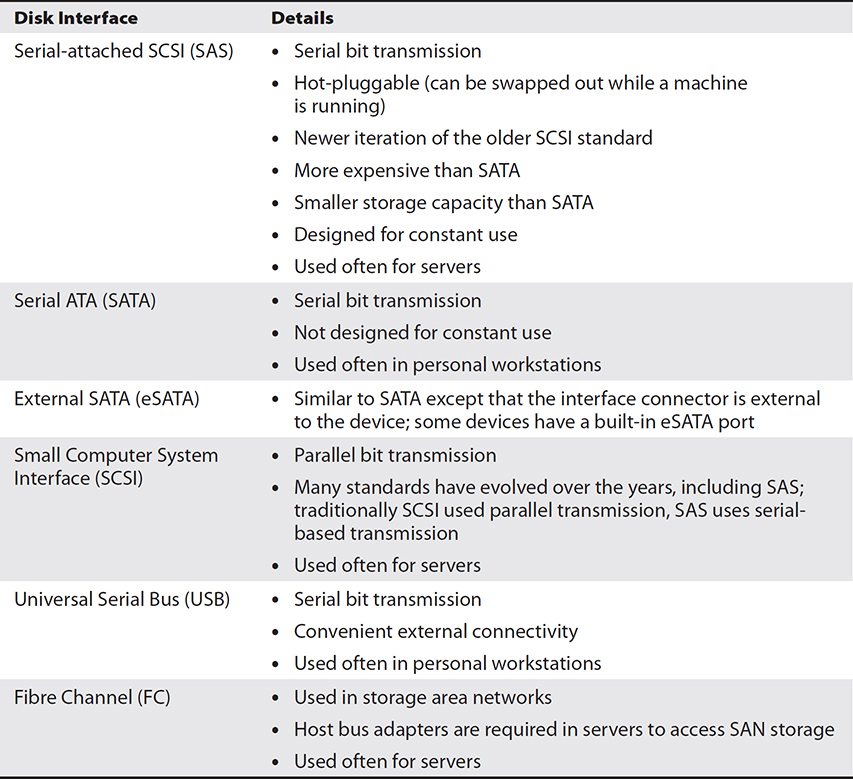

iSCSI

Internet Small Computer System Interface (iSCSI) is a form of SCSI, a decades-old standard used primarily for disk storage. To this day, it continues to evolve and is common in server environments. iSCSI makes storage accessible to hosts over a standard TCP/IP network on a small scale within a company.

The i in iSCSI refers to the fact that standard network equipment, such as standard Ethernet switches and cabling, can be used along with IP. Here’s what happens: SCSI disk I/O commands generated by a host (the iSCSI initiator) are placed inside IP packets. IP packets have the delivery details, such as the identity of the server or appliance serving up the disk space (the iSCSI target). Standard network hardware and software make iSCSI possible; compared to a dedicated Fibre Channel SAN, iSCSI is less expensive but also slower and less reliable.

A separate network segment, or virtual local area network (VLAN), configured within or between network switches should be dedicated for iSCSI use; there’s enough overhead involved already by stuffing disk commands into IP packets that need to be addressed and transmitted. What makes this solution attractive is its price tag, and it gets the job done.

iSCSI Initiators The iSCSI initiator can be implemented as software or hardware. Hardware initiators support enhanced options such as a server booting the operating system over the network; this isn’t possible with software initiators because the OS has to be running before the initiator is available. Before the initiator can contact the target, it needs a network address and port. The address could be a hostname such as storage1.acme.local or an IP address such as 172.16.54.56. The port identifies the service running on the host, and in this case it’s TCP port 3260. After the host connection is established, we then specify the target by its iSCSI qualified name (IQN), such as iqn.2012-06.com.sample:target0, to reach the logical unit numbers (LUNs) it presents.
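To make the pieces an initiator needs more concrete, the following Python sketch bundles a host address, the TCP port, and an IQN, and performs a loose check on the IQN's shape (iqn, then a year and month, then a reversed domain name, then an optional identifier after a colon). It does not talk to any real iSCSI software; the function and class names are hypothetical, and the pattern does not validate that the month is a real calendar month.

```python
import re
from typing import NamedTuple

ISCSI_DEFAULT_PORT = 3260  # TCP port mentioned in the text

# Loose shape check: iqn.<yyyy-mm>.<reversed domain>[:<identifier>]
IQN_PATTERN = re.compile(r"^iqn\.\d{4}-\d{2}\.[a-z0-9.-]+(?::.+)?$")


class TargetAddress(NamedTuple):
    host: str
    port: int
    iqn: str


def build_target(host: str, iqn: str,
                 port: int = ISCSI_DEFAULT_PORT) -> TargetAddress:
    """Check the IQN's general shape and pair it with the portal address."""
    if not IQN_PATTERN.match(iqn):
        raise ValueError(f"not a well-formed IQN: {iqn!r}")
    return TargetAddress(host, port, iqn)


target = build_target("storage1.acme.local", "iqn.2012-06.com.sample:target0")
print(f"{target.host}:{target.port} -> {target.iqn}")
```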

iSCSI Targets The iSCSI target hosts the disk space on an IP network, and that space gets consumed by servers, as depicted in Figure 4-6. Storage administrators carve out different chunks of disk space for servers to consume. These chunks of disk space are called logical unit numbers because traditional storage protocols such as SCSI used a unique numeric value to refer to disk storage.

Figure 4-6 iSCSI initiators connect to iSCSI targets using TCP/IP.

FCoE

Fibre Channel is a high-speed transmission method often used to connect servers to network storage. Despite “fibre” being in the name, it does not require fiber-optic cabling; standard twisted pair copper cables will work just fine. Because the FC standards are designed for speed and reliability, they are more often used in data centers or by service providers.

Fibre Channel over Ethernet (FCoE) stuffs disk commands into Ethernet frames as opposed to using higher level IP packets, which introduce more overhead. But for this to work, you need the correct hardware:

• Converged network adapters (CNAs) in each server, which combine Ethernet and FC functionality in a single card

• FCoE switches, which serve as the connection point between servers and storage arrays

• Copper or fiber-optic cables

Storage Area Networks

A SAN separates storage from individual hosts. Hosts connect to storage over the network and that storage appears, to the host operating system, to be a local storage device. iSCSI SANs use standard networking equipment and TCP/IP to make this happen. SANs can also consist of specialized network equipment and network storage protocols such as FC. You’ll find FC SANs used in larger enterprises, data centers, and service providers, and the cost can range from tens of thousands of dollars into the millions!

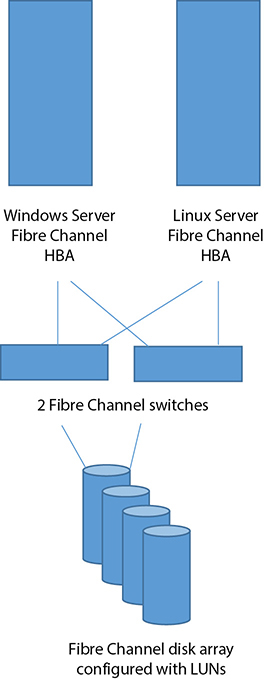

FC speeds currently fall in the range of 1 to 32 Gbps. FC SANs incur less overhead than higher level protocols such as IP used with iSCSI. FC SAN devices need an FC HBA to connect to SAN storage. The HBA has a unique 16-digit hexadecimal identifier, called a World Wide Node Name (WWNN). The HBA in a server can have multiple ports, and each can connect to different FC switches for redundancy. Each port can be configured with a World Wide Port Name (WWPN). For example, an FC switch can have a single WWNN plus a WWPN for each port. Storage arrays are connected to FC switches. Collectively, this topology is referred to as a fabric. Other devices take on this nomenclature, too, as in fabric switches.

Storage administrators configure LUNs and LUN masks to determine which servers can use which configured storage. A LUN uniquely identifies disk space on the storage array. A LUN mask is normally configured at the HBA level, so that, for example, we can prevent Windows Server from seeing specific LUNs used by Linux servers. A simple FC SAN fabric is depicted in Figure 4-7.

Figure 4-7 An FC SAN using redundant switches
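Conceptually, a LUN mask is a lookup from an initiator port to the set of LUNs it is allowed to see. The Python sketch below models only that idea; it is not how any particular HBA or storage array stores its masks, and the WWPNs and LUN numbers are invented for illustration.

```python
# Hypothetical WWPNs (16 hexadecimal digits each) and LUN numbers.
LUN_MASKS = {
    "50:01:43:80:12:34:56:01": {0, 1},  # a Windows Server HBA port
    "50:01:43:80:12:34:56:02": {2, 3},  # a Linux server HBA port
}


def visible_luns(wwpn: str) -> set[int]:
    """Return the LUNs this initiator port is allowed to see."""
    return LUN_MASKS.get(wwpn.lower(), set())


def can_access(wwpn: str, lun: int) -> bool:
    """True only if the LUN has been unmasked for this port."""
    return lun in visible_luns(wwpn)


print(can_access("50:01:43:80:12:34:56:01", 0))  # Windows port, its own LUN
print(can_access("50:01:43:80:12:34:56:01", 2))  # Windows port, a Linux LUN
```

An unknown port sees nothing, which mirrors the usual default of hiding storage until it is explicitly presented.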

Storage Capacity and Future Growth

When it comes to servers, a myriad of questions need answers. What is the server’s purpose? How many users will be connecting at once? How critical is it that the server stay up and running? Planning servers before jumping into the actual configuration is paramount. One part of this is trying to anticipate server storage needs. And this is where cloud computing (specifically, Infrastructure as a Service, or IaaS) can be very useful.

When you work with on-premises storage, you have to order storage devices, wait for them to be shipped, plug them in, configure them, and then the storage can be used. If, over time, you don’t need all of the storage, well, you paid for it all either way. This is one reason why cloud storage makes a lot of sense. By definition, cloud services must support the following:

• A pool of resources shared by multiple tenants

• IT services available on demand from anywhere using any device

• Rapid elasticity (I need more disk space right now!)

• User (not service provider) provisioning and deprovisioning

• Metered services (pay only for storage used)

Base 2 vs. Base 10

Imagine one of your users asking you to explain a kilobyte. Would you say, “It is a term to express 1000 bytes,” or would you say, “It expresses 1024 bytes”? Those 24 little bytes can really add up when you’re calculating server storage needs, especially in a data center or service provider environment!

We humans normally express numbers using base 10. Why? There are a few theories:

• We have 10 digits on our two hands.

• The pope said so in the Middle Ages.

• Our financial systems do it this way.

It really doesn’t matter why. It just is. Computers, however, don’t do base 10; they do base 2, or binary, where everything is collections of 0’s and 1’s. So with base 10, we could say 10³ = 1000 (10 × 10 = 100, 100 × 10 = 1000). With base 2, we would say 2¹⁰ = 1024 (2 × 2 = 4, 4 × 2 = 8, and so on). Therefore, a 1 terabyte (TB) drive counted in base 2 is 2⁴⁰ = 1,099,511,627,776 bytes (that’s 1 trillion and change), not just 1 trillion. The difference here is more than 99 billion bytes!
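The gap between the two conventions grows with each unit, which is easy to confirm with a short calculation. This Python sketch (the function name is chosen for this example) prints the base 10 and base 2 byte counts side by side for kilobytes through terabytes.

```python
UNITS = ["KB", "MB", "GB", "TB"]


def unit_sizes(power: int) -> tuple[int, int]:
    """Return (base 10 bytes, base 2 bytes) for the given unit step."""
    return 1000 ** power, 1024 ** power


for power, unit in enumerate(UNITS, start=1):
    decimal, binary = unit_sizes(power)
    print(f"1 {unit}: {decimal:,} vs {binary:,} bytes "
          f"(difference {binary - decimal:,})")
```

At the kilobyte level the difference is only 24 bytes, but at the terabyte level it is 99,511,627,776 bytes.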

Where Did All the Disk Space Go?

Remember the line from the movie Field of Dreams, “If you build it, they will come”? Well, for disk space our statement of wisdom will be, “If you make it available, it will be consumed.” As storage capacity increases and becomes affordable, we seem to have a need to gobble it all up for storing movies, music collections, company data, operating system images, and so on. Here is a partial list of disk space consumers:

• Operating system files

• Operating system patches and service packs

• Operating system images

• Driver and application updates

• Log files

• Temporary files

• Data files

• Data backups and archives

You can imagine that a customer-transaction database for a large online retailer would consume an increasing amount of disk space over time. Any application server that generates data is a candidate to consider. File servers housing thousands of user home directories are prime candidates for running out of space. Some companies use on-premises or cloud-based policy-driven software that automatically archives data not accessed frequently to slower, cheaper storage media (a different storage tier). Windows Server uses the Volume Shadow Copy Service (VSS), sometimes called the Volume Shadow Service, so that data can be backed up without requiring applications to be taken offline during the backup.

Using Less Disk Space

What can we do to use less storage space? Disk quotas can limit how much disk space is used either in a folder (regardless of who places content there) or even by user (such as on a file server). Some quota tools support soft quotas, where the quota is not enforced, but a log entry is made noting that the quota has been reached. Hard quotas are enforced. Windows Server user quotas can be set using the GUI, as shown in Figure 4-8.

Figure 4-8 Setting user disk quotas in Windows Server
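The difference between soft and hard quotas comes down to what happens once the limit is crossed. The following Python sketch models that decision; it is a conceptual illustration with made-up names, not the logic of any specific quota tool.

```python
def check_quota(used_bytes: int, requested_bytes: int,
                limit_bytes: int, hard: bool) -> tuple[bool, str]:
    """Return (allowed, message) for a write under a soft or hard quota."""
    if used_bytes + requested_bytes <= limit_bytes:
        return True, "within quota"
    if hard:
        # Hard quotas are enforced: the write is refused.
        return False, "hard quota exceeded: write denied"
    # Soft quotas are not enforced: the write proceeds, but it is logged.
    return True, "soft quota exceeded: write allowed, log entry made"


GB = 1024 ** 3
print(check_quota(9 * GB, 2 * GB, 10 * GB, hard=False))
print(check_quota(9 * GB, 2 * GB, 10 * GB, hard=True))
```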

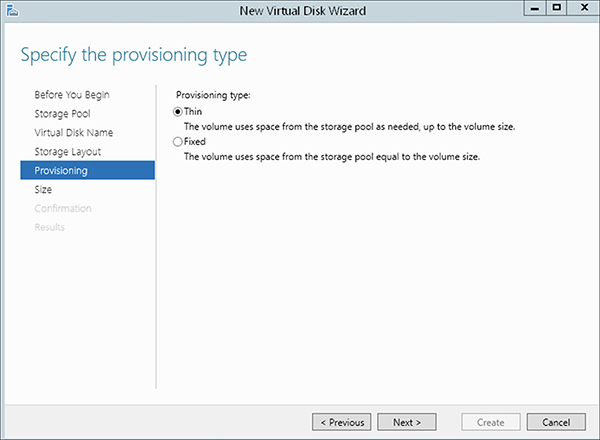

As a server technician, when you create server disk volumes, you have the option of thin provisioning, which is also referred to as overbooking or overcommitted disk space. Here’s how thin provisioning works:

1. A server admin adds 500GB of new storage to a server.

2. The admin creates disk volume 1, which is thinly provisioned during creation and is set to a size of 500GB.

3. The admin creates disk volume 2, which is thinly provisioned during creation and is set to a size of 500GB.

Wait a minute! That’s 1TB of disk space you may use down the road, but there is only 500GB of physical storage! The beauty here lies in the fact that both disk volumes will use disk space as they grow over time, but in the end you are limited to 500GB. With thin provisioning, you don’t have to know ahead of time exactly how much space each volume will end up needing. Figure 4-9 shows thin provisioning being configured within the Windows Server operating system.

Figure 4-9 Thin provisioning option in Windows Server
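The thin provisioning steps described above can be modeled with a toy storage pool. This Python sketch is an illustration under simplifying assumptions (whole-gigabyte writes, a single pool, invented class and volume names); it is not how Windows Server implements thin provisioning internally.

```python
class ThinPool:
    """A toy model of a thinly provisioned storage pool (sizes in GB)."""

    def __init__(self, physical_gb: int) -> None:
        self.physical_gb = physical_gb
        self.volumes: dict[str, dict[str, int]] = {}

    def create_volume(self, name: str, size_gb: int) -> None:
        # Thin provisioning: record the advertised size, reserve nothing yet.
        self.volumes[name] = {"size": size_gb, "used": 0}

    @property
    def provisioned_gb(self) -> int:
        return sum(v["size"] for v in self.volumes.values())

    @property
    def used_gb(self) -> int:
        return sum(v["used"] for v in self.volumes.values())

    def write(self, name: str, gb: int) -> None:
        volume = self.volumes[name]
        if volume["used"] + gb > volume["size"]:
            raise ValueError(f"{name} is full")
        if self.used_gb + gb > self.physical_gb:
            raise RuntimeError("pool has run out of physical storage")
        volume["used"] += gb


pool = ThinPool(physical_gb=500)
pool.create_volume("volume1", 500)
pool.create_volume("volume2", 500)
pool.write("volume1", 300)
pool.write("volume2", 150)
print(f"provisioned: {pool.provisioned_gb} GB, used: {pool.used_gb} GB, "
      f"physical: {pool.physical_gb} GB")
```

Both volumes advertise 500GB, yet writes start failing once their combined usage would exceed the 500GB that physically exists, which is why overcommitted pools need monitoring.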

Compression

Disk compression tools save disk space by reducing redundant occurrences of data. Windows and Linux servers let you work with compression in the GUI or at the command line. For example, on a Windows server, you can compress all files on drive D: with the following command:

compact /c /s:D:\ /i

From a terminal prompt on a Linux host, you could bundle two directories into a single gzip-compressed archive with

tar -czf zipped_budgets.tar.gz /budgets_2020 /budgets_2021

Data Deduplication

Data deduplication can remove redundant data blocks to conserve space. Windows Server includes data deduplication for NTFS volumes, but it’s not installed by default. There are also tools available to measure where your current disk space is being used to aid in planning future storage capacity. One example is Microsoft’s File Server Resource Manager (FSRM), shown in Figure 4-10, which is included with the Windows Server OS.

Figure 4-10 FSRM storage report
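The core idea of block-level deduplication mentioned in this section, storing each unique block once and keeping a list of references to rebuild the original, can be sketched as follows. This Python example assumes fixed-size 4KB blocks and SHA-256 digests purely for illustration; production deduplication engines, including the one in Windows Server, use their own chunking and indexing schemes.

```python
import hashlib


def deduplicate(data: bytes, block_size: int = 4096):
    """Split data into fixed-size blocks and store each unique block once."""
    store = {}   # digest -> block contents (one copy per unique block)
    recipe = []  # ordered digests needed to rebuild the original data
    for offset in range(0, len(data), block_size):
        block = data[offset:offset + block_size]
        digest = hashlib.sha256(block).hexdigest()
        store.setdefault(digest, block)
        recipe.append(digest)
    return store, recipe


def rebuild(store, recipe) -> bytes:
    """Reassemble the original data from the stored unique blocks."""
    return b"".join(store[digest] for digest in recipe)


# Three identical blocks followed by one different block.
data = b"A" * 4096 * 3 + b"B" * 4096
store, recipe = deduplicate(data)
print(len(recipe), "blocks referenced,", len(store), "blocks stored")
```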

Windows Image Files

The standard file type for storing Windows images is the .WIM format. You can save disk space by storing multiple images of the same operating system within a single .WIM file. Suppose, for example, that you have five Windows 10 images used within the company, and each image is approximately 10GB, for a total of 50GB. If you were to store those five images in a single .WIM, the total consumed disk space might be only 25GB. The reason is single-instance storage; multiple copies of files are stored only once, thus saving space.

You can use the Deployment Image Servicing and Management (DISM.exe) tool to work with Windows image files. DISM is built into newer operating systems such as Windows 10 and Windows Server 2019.

The following example creates an image of drive C: and appends it to Z:\win10_images.wim with a name of win10_sales:

Dism /Append-Image /ImageFile:Z:\win10_images.wim /CaptureDir:C:\ /Name:win10_sales

The following example applies the win10_sales image stored in the win10_images.wim file to the C: drive:

Dism /Apply-Image /ImageFile:Z:\win10_images.wim /Name:win10_sales /ApplyDir:C:\

Of course, DISM isn’t the only game in town; other tools such as imagex.exe, Microsoft Deployment Toolkit (MDT), and Microsoft System Center Configuration Manager (SCCM) do a fine job when working with Windows images.

RAID Configuration

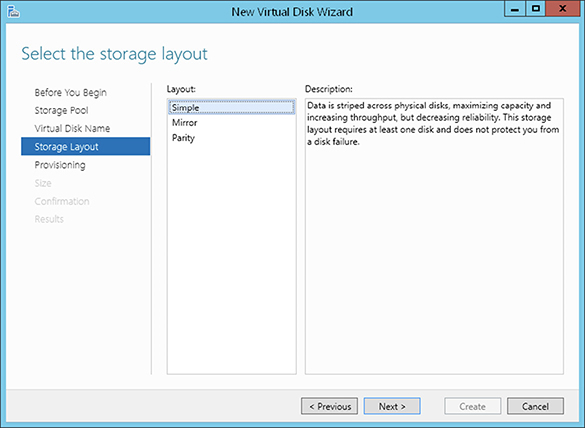

Groups of disks not yet configured to work together are called JBOD—just a bunch of disks. RAID (Redundant Array of Independent Disks) takes a much more structured approach, and for years it has been widely used in enterprise computing ecosystems. RAID enables you to group multiple physical disks together as a logical manageable unit for two reasons: improved disk I/O performance and fault tolerance. The operating system may see what it thinks is one disk, when in reality the RAID array may consist of several disks working together.

Hardware RAID support is normally integrated on server motherboards, but if it isn’t, you can get expansion cards (RAID controllers) to make this possible. Software RAID (see Figure 4-11) is built into server OSs, but, of course, if something goes wrong with the OS, something might go wrong with the RAID disk array. Not only that, but remember that firmware designed for one purpose generally outperforms software designed for many uses, so it’s a good idea to use hardware RAID whenever possible. In Windows, using software RAID requires disks to be treated as dynamic disks; disks start off as basic disks, but you are prompted to convert to dynamic disks when configuring software RAID levels.

Figure 4-11 Configuring software RAID in Windows Server

Hardware RAID array controllers often have their own battery-backed caches, which work well when disk writes to the array aren’t committed immediately and an issue occurs, such as a power outage. Upon restart, cached data is actually committed to disk. In the spirit of redundancy (remove single points of failure!), you can also use redundant RAID controllers. Interestingly, RAID level 1 (disk mirroring), when two disks are connected to two different controllers, is called duplexing. Figure 4-12 shows how hardware RAID storage shows up in Windows Server.

Figure 4-12 An Intel RAID 0 volume in Windows Server

RAID Levels

RAID levels consist of various disk configurations. The following sections summarize the characteristics of a few common RAID levels.

RAID 0

• Uses disk striping

• Requires at least two disks

• Data to be written to disk is broken into blocks (stripes) that are evenly written across the disk array

• Improves disk I/O performance

• Offers no fault tolerance

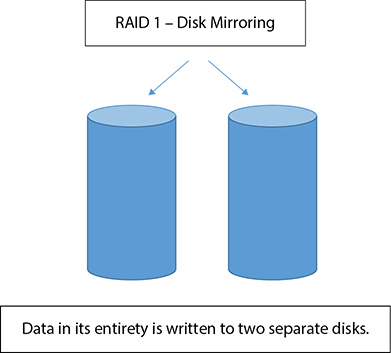

RAID 1

• Uses disk mirroring

• Requires at least two disks

• Data written to a disk partition on one disk is also written to a disk partition on a different disk

• Can use only 50 percent of disk space

• Tolerates a disk failure

• Does not replace backups

RAID 5

• Uses disk striping with distributed parity

• Requires at least three disks

• Data to be written to disk is broken into blocks (stripes) that are evenly written across the disk array

• Stores parity (error recovery) information for each stripe on a separate disk from its related data stripe

• Tolerates a single disk failure

• Can reconstruct in memory and on demand any data from failed disk
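Parity-based reconstruction is commonly implemented with the XOR operation: the parity block is the XOR of the data blocks in a stripe, so any one missing block can be recomputed from the others. The Python sketch below demonstrates this with two data blocks and one parity block. The block contents are arbitrary sample bytes, and a real controller also rotates parity across disks, which is omitted here.

```python
from functools import reduce


def xor_blocks(*blocks: bytes) -> bytes:
    """XOR equal-length blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# One stripe: two data blocks plus the parity computed from them.
disk1 = b"DATA"
disk2 = b"MORE"
parity = xor_blocks(disk1, disk2)

# Simulate losing disk2: rebuild its block from the survivors.
recovered = xor_blocks(disk1, parity)
print(recovered == disk2)
```

The same property holds for wider stripes: XOR-ing all surviving blocks, data and parity alike, yields the block from the failed disk.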

RAID 6

• Uses double-parity RAID

• Requires at least four disks

• Data to be written to disk is broken into blocks (stripes) that are evenly written across the disk array

• Stores two parity (error recovery) stripes on each disk

• Never stores parity and its related data on the same disk

• Tolerates two disk failures

• Can reconstruct in memory and on demand any data from failed disks

RAID 10

• Uses RAID level 1 and then 0

• Uses disk mirroring followed by striping

• Provides fault tolerance and performance

• Requires at least four disks

• Stripes data across mirrored pairs

• Tolerates multiple disk failures as long as they are not in the same mirrored pair

• Is useful for busy (many reads/writes) databases
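The RAID levels summarized above trade capacity for redundancy in different ways, and the usable space can be estimated with simple formulas. This Python sketch assumes identical disks, treats RAID 1 as a single mirror set in which every disk holds the same copy, and requires an even disk count for RAID 10; the function name is invented for this example.

```python
MIN_DISKS = {0: 2, 1: 2, 5: 3, 6: 4, 10: 4}


def usable_capacity_tb(level: int, disks: int, disk_tb: float) -> float:
    """Estimate usable capacity for an array of identical disks."""
    if level not in MIN_DISKS:
        raise ValueError(f"unsupported RAID level: {level}")
    if disks < MIN_DISKS[level]:
        raise ValueError(f"RAID {level} needs at least {MIN_DISKS[level]} disks")
    if level == 0:
        return disks * disk_tb        # striping only, no redundancy
    if level == 1:
        return disk_tb                # every disk holds the same copy
    if level == 5:
        return (disks - 1) * disk_tb  # one disk's worth of parity
    if level == 6:
        return (disks - 2) * disk_tb  # two disks' worth of parity
    if disks % 2:
        raise ValueError("RAID 10 needs an even number of disks")
    return disks / 2 * disk_tb        # half the disks hold mirror copies


for level, disks in ((0, 2), (1, 2), (5, 3), (6, 4), (10, 4)):
    print(f"RAID {level:>2} with {disks} x 4TB disks: "
          f"{usable_capacity_tb(level, disks, 4.0):.0f}TB usable")
```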

Storage Device Installation

Installing storage devices begins with knowing what you have and progresses to knowing what you need. A rack-mounted server with an integrated SAS RAID controller may or may not accept SATA disks; it depends on the specific RAID controller. In the same way, a disk subsystem might accept only 4 disks, or it might accept 128. But after the connectivity is taken care of, disk space must be configured appropriately and file systems created.

MBR and GPT

When servers can see disk space, whether storage is DAS, NAS, or over a SAN, the next step is to make the space usable. This means initializing disks, partitioning them, and formatting those partitions with a particular file system. Disk initialization is required for server operating systems to be able to use the space, and you can choose either master boot record (MBR) or GUID Partition Table (GPT) for this. MBR and GPT are a function of the server’s firmware (BIOS/UEFI) and the operating system, not the disk itself.

MBR is the old 1980s 32-bit standard for disk initialization that supports a maximum of four primary disk partitions, each being no larger than 2TB in size. Another MBR option is to create three primary partitions and one extended partition in which you can then create multiple logical drives, but this is rare these days.

GPT is a newer, 64-bit standard for initializing disk space that supports many very large partitions (the values vary depending on the OS and file system used). Windows Server will allow up to 128 partitions, each being up to 256TB in size, although theoretically the partition size could be up to 9.44 zettabytes (ZB). GPT also stores partitioning information in multiple places on the disk, which makes it much more resilient to corruption compared to MBR. Booting from a GPT-initialized disk requires UEFI (as opposed to BIOS) and, in the case of Windows Server OSs, also requires a 64-bit operating system.
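The 2TB and 9.44ZB figures follow from the width of the sector addresses each scheme uses. Assuming traditional 512-byte sectors (an assumption; larger sector sizes raise both limits), the arithmetic can be checked with this short Python sketch, whose function name is chosen for illustration.

```python
SECTOR_BYTES = 512  # assumes traditional 512-byte sectors


def max_addressable_bytes(address_bits: int,
                          sector_bytes: int = SECTOR_BYTES) -> int:
    """Largest size reachable with sector addresses of the given width."""
    return 2 ** address_bits * sector_bytes


mbr_limit = max_addressable_bytes(32)  # MBR uses 32-bit sector addresses
gpt_limit = max_addressable_bytes(64)  # GPT uses 64-bit sector addresses

print(f"MBR: {mbr_limit / 2**40:.0f} TB (binary terabytes)")
print(f"GPT: {gpt_limit / 10**21:.2f} ZB (decimal zettabytes)")
```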

Windows systems can use the diskpart.exe command line tool or the GUI Disk Management (shown next) and Server Manager tools to work with disks. Server Manager doesn’t even give you the option of initializing a disk using MBR. Those using Linux systems can use the old fdisk command to work with MBR; the gdisk command is used for GPT. Linux also supports using Logical Volume Management (LVM) to group physical disks together upon which logical volumes can be created. A single logical volume could span multiple physical disks.

File Systems

File systems are determined when you format a disk partition. Each server OS supports various file systems—some old, some new—that can meet your requirements. For example, if you want user disk quota and file encryption support on a Windows server, you should be using NTFS.

Windows Server OSs generally support the following file systems:

• File Allocation Table (FAT)

• FAT32

• Extended FAT (exFAT)

• New Technology File System (NTFS)

• Resilient File System (ReFS)

NTFS (shown next), which supersedes FAT and FAT32, is a journaled file system that supports compression, encryption, file system security, larger file and partition sizes, and user disk quotas, to name just a few features. FAT32 and exFAT are used most commonly with removable storage such as flash drives, although you can format flash drives as NTFS.

ReFS is a newer file system made available in the Windows Server OS that is designed to be more resilient to file system corruption. It boasts new features, including the ability to scan for and correct file system corruption while the disk volume is mounted and in use. But it has drawbacks: for example, ReFS doesn’t support Encrypting File System (EFS), it didn’t support data deduplication before Windows Server version 1709, and it can’t be used on an OS boot drive.

UNIX and Linux server OSs generally support the following file systems:

• UNIX File System (UFS)

• Zettabyte File System (ZFS)

• Extended File System (EXT2, EXT3, EXT4)

• ReiserFS

EXT4 and ReiserFS are common in today’s Linux environments, although development support for ReiserFS appears to be waning. Both of these file systems are journaled, which means all file system write transactions are logged before being committed to disk. This makes the file system less susceptible to corruption, which can occur with a power loss, for example.

The Virtual Machine File System (VMFS) is specific to VMware. It was designed to support simultaneous read/write activity by cluster nodes where virtual machine hard disk files and snapshots (checkpoints) are concerned. One benefit of VMFS is that it enables the live migration (movement) of virtual machines between VMware ESXi hosts with zero downtime. The Microsoft Failover Clustering feature similarly supports Cluster Shared Volumes (CSVs), which enable the live migration of Hyper-V virtual machines between clustered Hyper-V hosts with zero downtime.

For resilience to disk failures, some drive enclosures, also called storage rack cages, support hot-swappable disks. This means failed disks can be replaced while everything stays running. If you’re not using all the disks, you can leave extra ones plugged in as hot spares. Cold spares are extra disks kept on hand that you swap in when disks in use fail; doing this requires that the system be shut down. Storage rack cages are normally powered and have fans, along with interface cards that handle data connections and convert between power connectors such as SATA and Molex.

Sample Scenario 1

In this scenario, you’re a storage tech working in a data center with multiple tenants. You’re working on some new virtualization servers that customers will use to run their virtual machines. Each physical server motherboard supports SATA RAID and has four SATA ports, one of which is currently used by a disk containing the server OS. Your boss is after you to get the best possible performance for virtual machines while allowing for the possible failure of one disk. What should you do?

Given this scenario, the best solution is to acquire three fast, high-capacity SATA disks and configure them using hardware RAID in a stripe set with distributed parity (RAID 5). RAID 5 needs at least three disks; RAID 6 needs four, which is not an option here. With RAID 5, you’re spreading the disk I/O workload across three disks while also writing parity information in case one disk fails.
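To see why parity lets a three-disk RAID 5 set survive one failed disk, consider a single stripe. The Python sketch below is a simplified illustration, not a RAID implementation: the block contents and variable names are made up, and a real controller rotates the parity block across all disks rather than keeping it on one.

```python
def xor_blocks(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length blocks byte by byte."""
    return bytes(x ^ y for x, y in zip(a, b))


# One stripe across three disks: two data blocks plus one parity block.
data_disk1 = b"VMDATA01"
data_disk2 = b"VMDATA02"
parity = xor_blocks(data_disk1, data_disk2)

# Simulate losing disk 2, then rebuild its block from the survivor and parity.
rebuilt = xor_blocks(data_disk1, parity)
print(rebuilt == data_disk2)
```

Because XOR is its own inverse, any one missing block in the stripe can be recomputed from the other two, which is why a second simultaneous failure is not recoverable with RAID 5.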

Sample Scenario 2

Your company is using FC SAN with multiple FC switches connected to SSD and hard disk storage arrays. A new blade server has arrived. The blade will run Windows Server Hyper-V by booting from the SAN and will host two virtual machines running customer management software. You must ensure that both virtual machines have redundant connectivity to a log file storage volume and an application database storage volume on the SAN. Log files should be stored as archives on slower disks, while database access must be as fast as possible. How should you go about this?

The first clue here is redundant SAN connectivity—this means you need a dual-port FC HBA for multiple paths to different FC switches. You will also need the correct HBA driver so that the two virtual machines can talk to the SAN directly.

Storage administrators will have to configure three LUNs in this case: one to host the Windows Server OS (boot from SAN), a second LUN for log files, and a third LUN for the database. Storage tiers need to be configured so that the database and related files are available on fast SSDs, while logs are stored on hard disks.

Hands-on Exercises

Exercise 4-1: Configure a Windows Server iSCSI Target

1. Start your Ubuntu Linux virtual machine. At the login prompt, type uone and press ENTER. Type Pa$$w0rd172hfX and press ENTER.

2. Type ip a to view the IPv4 address acquired through DHCP. Write down this IP address; you will need it in a later step. Leave the virtual machine running for the next exercise.

Install the iSCSI target software from PowerShell

3. Make sure your Srv2019-1 virtual machine is running in VMware Workstation and that you are logged on using the Administrator account with a password of Pa$$w0rd172hfX.

4. Click the Windows Start menu and enter powershell. When Windows PowerShell shows up in the search results, select it.

5. Enter install-windowsfeature fs-iscsitarget-server. After a few moments, the software is installed.

6. Enter get-windowsfeature *iscsi*. The asterisks are wildcards that will show features containing the text iSCSI. Notice the X in the box for iSCSI Target Server, which implies that the software has indeed been installed.

Configure iSCSI storage on the target

7. If it is not already running, start Server Manager from the Start menu.

8. In the navigator on the left, click File And Storage Services, and then click Disks. You will create a dedicated storage volume for iSCSI virtual disks.

9. Right-click the second listed disk (take note of the disk number) and choose New Volume. Click Next twice to accept the default settings. If prompted that the selected disk will be brought online and initialized as a GPT disk, click OK.

10. In the Specify The Size Of The Volume window, for the volume size, enter 30GB. Click Next.

11. Choose Don’t Assign To A Drive Letter Or Folder and click Next.

12. Enter a volume label of iSCSI Virtual Disks and click Next.

13. On the summary screen, click Create, and then click Close.

14. In the upper-right, click Tasks, and then click Refresh. Select the disk number from step 9.

15. In the Volumes panel below, right-click the listed volume and choose Manage Drive Letter And Access Paths. From the Drive Letter drop-down list, choose drive I. Click OK.

Create iSCSI virtual disks for consumption over the network

16. Within Server Manager, in the navigator on the left, click iSCSI.

17. Click the Tasks button on the upper-right and choose New iSCSI Virtual Disk.

18. Click the Browse button and navigate to drive I: to store the iSCSI virtual disks. Click Select Folder. Click Next.

19. Enter iscsi_lun1 for the name and click Next.

20. Enter 10GB for the size and click Next.

21. Ensure that New iSCSI Target is selected and click Next. This is where you specify which network hosts are allowed to access this iSCSI storage.

22. For the name, enter LocalServers and click Next.

23. Click Add. From the Type drop-down list, select IP Address.

24. In the Value field to the right, enter the IP address you wrote down in step 2. This is the IP address of the Ubuntu Linux server, which will consume this storage. Click OK.

25. Click Next twice, click Create, and then click Close. Your iSCSI VHDX file is created.

26. Close Server Manager.

Exercise 4-2: Use the Ubuntu Linux iSCSI Initiator to Connect to an iSCSI Target

1. Make sure your Ubuntu-1 virtual machine is running in VMware Workstation. If you’re not already logged in, at the login prompt, type uone and press ENTER. Type Pa$$w0rd172hfX and press ENTER.

2. You will now connect to the iSCSI target on Srv2019-1 (192.168.1.200). Enter the following command and then press ENTER. Because this is the first time in this session you’re using the sudo command, you’ll need to reenter the password for user uone.

sudo iscsiadm --mode discovery --type sendtargets --portal 192.168.1.200

3. In the command output, notice the first returned IQN item after 192.168.1.200:3260,1, which will look something like this:

iqn.1991-05.com.microsoft:srv2019-1-localservers-target

4. To connect to the iSCSI target storage, enter

sudo iscsiadm --mode node --targetname the_IQN_noted_above_in_Step_3 --portal 192.168.1.200 --login

5. Enter

lsblk --scsi | grep iscsi

6. Notice (from step 5) the connected iSCSI disk and its name (for this example, we will assume the name is sdd).

7. Enter sudo fdisk /dev/sdd and press ENTER.

8. Press the n key (for new partition, and make sure it is lowercase) and then press ENTER, and keep pressing ENTER to accept the defaults to use the entire disk for this primary partition.

9. At the Command (m for help): prompt, press the w key (for write, and make sure it is lowercase) to save the changes to disk.

10. To format the new partition (sdd1), enter sudo mkfs /dev/sdd1.

11. Create a mount point folder by entering sudo mkdir /project-x.

12. Mount the new partition into the folder by entering sudo mount /dev/sdd1 /project-x.

13. Verify the mount succeeded by entering mount | grep project. The Ubuntu Linux host is now using the remote disk space housed on the Windows iSCSI target server.

14. Switch to the Windows Server iSCSI target. Start Server Manager, and in the iSCSI view, below the iSCSI targets section, notice the Initiator ID shows the Linux host IP address. The Linux host is now consuming the disk space over the network. Leave this server running.
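If you script these steps, the IQN from step 3 can be pulled out of the discovery output instead of being copied by hand. The Python sketch below assumes each discovery line has the form shown in step 3 (portal, a comma and group tag, a space, then the IQN); the function name and the sample text are hypothetical, and real output may differ.

```python
def parse_sendtargets(output: str):
    """Return (portal, iqn) pairs from sendtargets-style discovery text."""
    targets = []
    for line in output.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[1].startswith("iqn."):
            portal = parts[0].split(",")[0]  # drop the ",1" portal group tag
            targets.append((portal, parts[1]))
    return targets


# Sample line built from the values used in this exercise.
sample = (
    "192.168.1.200:3260,1 "
    "iqn.1991-05.com.microsoft:srv2019-1-localservers-target\n"
)
targets = parse_sendtargets(sample)
print(targets[0][1])
```

The extracted IQN is the value you would pass to the --targetname option in step 4.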

Exercise 4-3: Configure Software RAID Using Windows Server

This exercise demonstrates how two disk volumes can be thinly provisioned to use all available space in the storage pool.

1. On Srv2019-1, start PowerShell from the Start menu.

2. Create a variable to store disks eligible to be added to a storage pool, and then create a storage pool by entering

$disks=(Get-PhysicalDisk -CanPool $True)

Then press ENTER and type the following:

New-StoragePool -FriendlyName SWRaid -StorageSubSystemFriendlyName "Windows Storage*" -PhysicalDisks $disks

3. If it’s not already running, start Server Manager from the Start menu.

4. On the left, click File And Storage Services, and then click Storage Pools.

5. Right-click the SWRaid storage pool and choose New Virtual Disk. If you don’t see the SWRaid storage pool, exit Server Manager and start it again. Note that “virtual disk” in this context has nothing to do with virtualization. Click OK, and then click Next.

6. Enter the name of the new virtual disk, Vdisk1, and click Next twice.

7. On the Select The Storage Layout screen, ensure that Simple is selected to configure disk striping across the three disks. Then click Next.

8. For the Provisioning Type, choose Thin and click Next.

9. Enter 145GB as the size and click Next. Click Create and then click Close.

10. The New Volume wizard will automatically launch. Click Next five times to accept the defaults. On the Confirm Selections screen, take note of the drive letter for the new disk volume. Click Create and then click Close.

11. In the navigator at the left, click Volumes. On the right, notice the new drive letter (for example, E:).

12. Start Windows Explorer and notice the new drive letter for your new striped volume. It appears as a single drive letter in Windows, but the volume is really spread across three physical disks, which results in enhanced disk performance; however, if a single disk fails, the entire striped drive will become unavailable.
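Thin provisioning, chosen in step 8, means a volume's advertised size is a ceiling rather than a reservation. The Python sketch below models that idea only; it is not how Windows Storage Spaces works internally, and the class, the 150GB pool size, and the second volume are hypothetical values chosen for illustration.

```python
class ThinPool:
    """Toy model of a storage pool holding thinly provisioned volumes."""

    def __init__(self, physical_gb):
        self.physical_gb = physical_gb
        self.volumes = {}  # name -> [provisioned_gb, used_gb]

    def create_volume(self, name, provisioned_gb):
        # Thin volumes reserve nothing up front, so the provisioned sizes
        # may add up to more than the pool physically holds.
        self.volumes[name] = [provisioned_gb, 0]

    def used_gb(self):
        return sum(used for _, used in self.volumes.values())

    def write(self, name, gb):
        provisioned, used = self.volumes[name]
        if used + gb > provisioned:
            raise ValueError("volume is full")
        if self.used_gb() + gb > self.physical_gb:
            raise RuntimeError("pool is out of physical space")
        self.volumes[name][1] = used + gb


pool = ThinPool(physical_gb=150)    # hypothetical pool size
pool.create_volume("Vdisk1", 145)
pool.create_volume("Vdisk2", 145)   # 290GB promised against 150GB of real space
pool.write("Vdisk1", 40)
pool.write("Vdisk2", 60)
print(pool.used_gb())
```

The trade-off the model exposes is that over-provisioned pools must be monitored: writes start failing when physical space runs out, even though each volume still appears to have room.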

Exercise 4-4: Configure Software RAID Using Ubuntu Linux

1. Make sure your Ubuntu-1 virtual machine is running in VMware Workstation. If you’re not already logged in, at the login prompt, type uone and press ENTER. Type Pa$$w0rd172hfX and press ENTER.

2. Enter sudo lsblk --scsi to list SCSI disk block devices. You should see sda, sdb, and sdc. These are three separate disks. We will create a disk mirror between sdb and sdc.

3. Enter sudo fdisk /dev/sdb and press ENTER. Press the n key (for new partition; must be lowercase) and then press ENTER four times to create a primary partition that consumes the entire disk.

4. Press the t key (for type, and make sure it is lowercase) to change the partition type, and then enter fd to set the type to Linux raid autodetect.

5. Press the w key (for write, and make sure it is lowercase) to write the changes to disk.

6. Repeat steps 3–5 in this exercise, except in step 3, enter sudo fdisk /dev/sdc instead of /dev/sdb.

7. Enter sudo fdisk -l /dev/sdb /dev/sdc to verify that the Linux raid autodetect partition flag has been set on both disk partitions (look under the Type heading). Notice the partitions are /dev/sdb1 and /dev/sdc1 (look under the Device heading).

8. Create a software RAID 1 (mirroring) configuration:

sudo mdadm --create /dev/md1 --level=1 --raid-devices=2 /dev/sdb1 /dev/sdc1

9. Press Y (for yes) to continue creating the array.

10. Verify your work:

sudo mdadm --detail /dev/md1

11. Make a useable file system on the mirrored array:

sudo mkfs -t ext4 /dev/md1

12. Make a mount directory:

sudo mkdir /cust_trans

13. Mount the file system to a directory so it is ready to use:

sudo mount /dev/md1 /cust_trans

14. Enter the following command to verify the /cust_trans mount point is using the disk mirror device /dev/md1:

mount | grep /dev/md1
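Once the mirror is in use, you may want to check its health from a script. Linux software RAID reports array status in /proc/mdstat, where a member map such as [UU] indicates that both mirror members are up and an underscore marks a missing member. The Python sketch below parses sample text rather than the live file; the function name and the sample block counts are hypothetical, and the exact layout on your system may differ.

```python
import re


def mirror_is_healthy(mdstat_text: str, device: str = "md1") -> bool:
    """Return True if every member of the named array is marked U (up)."""
    lines = mdstat_text.splitlines()
    for i, line in enumerate(lines):
        if line.startswith(device + " :"):
            # The member map (for example [UU]) normally follows the device line.
            for follow in lines[i + 1:i + 3]:
                match = re.search(r"\[([U_]+)\]", follow)
                if match:
                    return "_" not in match.group(1)
    return False


# Illustrative sample text, not captured from a real system.
healthy = (
    "Personalities : [raid1]\n"
    "md1 : active raid1 sdc1[1] sdb1[0]\n"
    "      10476544 blocks super 1.2 [2/2] [UU]\n"
)
degraded = healthy.replace("[2/2] [UU]", "[2/1] [U_]")

print(mirror_is_healthy(healthy), mirror_is_healthy(degraded))
```

On the exercise host, the same function could be fed the contents of /proc/mdstat, and the output of sudo mdadm --detail /dev/md1 from step 10 gives a more detailed view.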

Chapter Review

Server storage is a crucial part of server planning, including potential future disk space requirements. This is especially true for data center administrators, because consumers expect storage to be available when they decide to provision more space.

Storage Device Characteristics

Characteristics such as RPMs, seek time, rotational latency, bus width, and IOPS all contribute to the overall transfer rate of data into and out of hard disks.

Hard disks consist of read and write heads on mechanical arms that move over the spinning platters. Solid-state drives (including flash drives) have no moving parts; everything is electronic. SSDs generally perform faster but are more expensive than hard disks.

Disk Interfaces

Disk interfaces include

• SAS (common in servers)

• SATA

• SCSI

• USB

• Fibre Channel (used in SANs)

Local and Network Storage

DAS storage is available locally to a server without using a network. NAS uses file-sharing protocols such as SMB and NFS to make network storage available. SANs use specific disk block I/O protocols for high-speed access to network storage. SAN-specific equipment includes FC HBAs in each server, FC switches, and storage arrays. LUNs represent disk space available over the network. Techniques such as LUN masking and zoning are used to restrict server access to specific LUNs.

iSCSI enables storage consumption by hosts over a standard TCP/IP network. iSCSI initiators connect to iSCSI targets either on the same or on a different subnet. iSCSI traffic should be kept separate from other TCP/IP traffic by configuring a dedicated iSCSI VLAN.

FCoE incurs less overhead than iSCSI because it packages disk I/O commands directly into Ethernet frames instead of higher level IP packets. CNAs and FCoE switches are required, but fiber-optic cabling is not; copper-based cables will also work.

Storage Capacity Planning

Future storage capacity can be planned using base 2 or base 10 measurements. Base 2 (binary) is more precise and more closely reflects the disk space actually required. Compression, data deduplication, disk quotas, and thin provisioning are options for optimizing disk space use.
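The gap between base 10 and base 2 sizes grows with capacity, which matters when planning. The short Python sketch below converts decimal terabytes (10^12 bytes) into binary tebibytes (2^40 bytes); the function name and the sample sizes are chosen only for illustration.

```python
def decimal_tb_to_tib(tb: float) -> float:
    """Convert base 10 terabytes to base 2 tebibytes."""
    return tb * 10**12 / 2**40


for size_tb in (1, 4, 8):
    print(f"{size_tb} TB is about {decimal_tb_to_tib(size_tb):.2f} TiB")
```

A drive sold as 4TB therefore provides roughly 3.64 binary terabytes, a shortfall of about 9 percent that should be factored into capacity estimates.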

RAID

RAID treats multiple physical disks as a group, but the operating system sees only one logical disk. RAID is used to squeeze out more disk I/O performance (multiple disks reading and writing instead of just one) and to provide fault tolerance (data remains available when a disk fails).

• RAID 0 Disk striping

• RAID 1 Disk mirroring

• RAID 5 Disk striping with distributed parity

• RAID 6 Disk striping with dual distributed parity

• RAID 10 Combines RAID 1 with RAID 0
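The RAID levels above trade usable capacity for fault tolerance in different ways. The Python sketch below estimates how many disks' worth of space remains usable for each level, assuming identical disks; the function name is hypothetical, and real controllers may impose additional limits.

```python
def usable_disks(level: int, disks: int) -> int:
    """Return how many disks' worth of capacity a RAID level leaves usable."""
    minimums = {0: 2, 1: 2, 5: 3, 6: 4, 10: 4}
    if level not in minimums:
        raise ValueError(f"unsupported RAID level: {level}")
    if disks < minimums[level]:
        raise ValueError(f"RAID {level} needs at least {minimums[level]} disks")
    if level == 0:
        return disks          # striping only, no redundancy
    if level == 1:
        return 1              # every disk holds the same data
    if level == 5:
        return disks - 1      # one disk's worth of distributed parity
    if level == 6:
        return disks - 2      # two disks' worth of distributed parity
    if disks % 2:
        raise ValueError("RAID 10 needs an even number of disks")
    return disks // 2         # striped set of mirrored pairs


# Three 4TB disks in RAID 5, as in Sample Scenario 1 (disk size is hypothetical).
print(usable_disks(5, 3) * 4, "TB usable")
```

For example, three disks in RAID 5 yield two disks' worth of usable space, while four disks in RAID 6 or RAID 10 also yield two.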

Disk Initialization and File Systems

MBR and GPT do not refer to a type of hard disk; disks are initialized in an operating system as either MBR or GPT. MBR allows a maximum of four primary partitions, each with a maximum potential size of 2TB. GPT limits are imposed by specific operating systems; Windows Server allows up to 128 GPT partitions, each up to 256TB in size. Booting from GPT requires UEFI.
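The 2TB MBR ceiling follows from simple arithmetic: MBR records partition sizes as 32-bit sector counts, and with the traditional 512-byte sector that caps a partition at 2^32 × 512 bytes. The Python sketch below works through the numbers and wraps the MBR limits from this section in a small helper; the helper name and the 512-byte sector size are assumptions for illustration.

```python
SECTOR_BYTES = 512      # assumed traditional logical sector size
MAX_SECTORS = 2**32     # MBR stores sector counts in 32-bit fields
MBR_LIMIT_BYTES = SECTOR_BYTES * MAX_SECTORS
MBR_MAX_PARTITIONS = 4  # primary partitions


def needs_gpt(disk_bytes: int, partitions: int) -> bool:
    """Return True if the disk size or partition count exceeds MBR limits."""
    return disk_bytes > MBR_LIMIT_BYTES or partitions > MBR_MAX_PARTITIONS


print(MBR_LIMIT_BYTES / 2**40)          # limit expressed in binary terabytes
print(needs_gpt(8 * 10**12, 6))         # an 8TB disk with six partitions
```

Both the 8TB size and the six partitions in that last example exceed what MBR can address, so GPT is the only workable choice.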

After disk initialization, the disk must be partitioned. A single disk could be carved out to include an OS bootable partition, a data partition, a swap file (page file) partition, and so on. When installing specialized operating systems such as the VMware ESXi hypervisor, you can create an optional scratch partition to accommodate diagnostic output. Swap/page/scratch partitions are also useful when the system is low on memory; the oldest used memory pages can be swapped out to disk to free up space in active memory. A general rule of thumb is to ensure this partition is at least 1.5 times the size of the amount of RAM installed in the host.

After disk partitioning, file systems are created when the partition is formatted. Windows servers typically use NTFS, whereas Linux servers vary: EXT2, EXT3, EXT4, and ReiserFS are common. VMFS is a file system specific to VMware hosts that enables concurrent reading and writing to a disk volume by multiple cluster nodes, similar to Microsoft CSVs.

Questions

1. A new 4TB drive was added to a server, but you notice that the server operating system can use only 2TB. What is the most likely cause of the problem?

A. The drive was initialized as GPT.

B. The drive was initialized as MBR.

C. The operating system has not been updated.

D. Compression is enabled.

2. What hard disk dimension is considered the standard?

A. 1.75-inch

B. 3-inch

C. 5.5-inch

D. 3.5-inch

3. Which disk speed factor does not apply to SSDs?

A. IOPS

B. Capacity

C. Rotational latency

D. Transfer rate

4. Which disk interface standard is the most common in servers?

A. SAS

B. IDE

C. SATA

D. EIDE

5. Your server environment requires the ability to replace a failed disk while systems remain running. What type of disk system must you use?

A. Hot-swappable

B. UEFI

C. Drive-swappable

D. RAID

6. Identify the Windows supported file systems. Choose two.

A. eFAT

B. ReFS

C. XFS

D. NTFS

7. How does a SAN differ from NAS?

A. There is no difference.

B. SANs use IP and NAS does not.

C. SANs are local server storage and NAS is not.

D. SANs use specific block I/O protocols and NAS uses standard file-sharing protocols.

8. One of your locations is using iSCSI for server network storage. You want to ensure that regular TCP/IP traffic does not impede iSCSI performance. What should you do?

A. Use a separate VLAN for iSCSI.

B. Configure LUN masking.

C. Configure LUN zoning.

D. Enable iSCSI CHAP authentication.

9. Which statement regarding software iSCSI initiators is true?

A. Targets are accessible only through FC switches.

B. They do not support OS booting from SAN.

C. IPv6 must be configured.

D. iSCSI targets must reside on the same subnet with iSCSI initiators.

10. Your boss has asked you to determine what equipment must be ordered to set up a new FCoE environment. Which FCoE item is required?

A. NAS device

B. CNA

C. UEFI

D. RAID controller

11. You are a Linux server administrator. To plan future disk space requirements, you would like to track user disk space consumption. What should you configure?

A. Hard quotas

B. GPT

C. NTFS

D. Soft quotas

12. As you configure three new server disk volumes, you are unsure as to how large each volume should be. What should you configure?

A. Thin provisioning

B. Thick provisioning

C. RAID 0

D. RAID 1

13. Which RAID level offers the best performance?

A. RAID 0

B. RAID 1

C. RAID 2

D. RAID 3

14. Which type of storage would be considered Tier 2 storage?

A. SCSI

B. IDE

C. SSD

D. HDD

15. Which type of storage combines disk platters with flash memory?

A. SSHD

B. SSD

C. HHDD

D. HDD

16. In an attempt to save disk space, Sean wants to store multiple Windows Server 2012 images in a single .WIM file. Which command should Sean use?

A. Dism /Append-Image /File:Z:\win10_images.wim /CaptureDir:C:\ /Name:win10_sales

B. Dism /Add-Image /ImageFile:Z:\win10_images.wim /CaptureDir:C:\ /Name:win10_sales

C. Dism /Append-Image /ImageFile:Z:\win10_images.wim /CreateFrom:C:\ /Name:win10_sales

D. Dism /Append-Image /ImageFile:Z:\win10_images.wim /CaptureDir:C:\ /Name:win10_sales

17. You are planning the use of a new 8TB HDD. Six disk partitions are required for varying file storage uses. How should you initialize the disk?

A. GPT

B. MBR

C. RDP

D. TCP

18. Which features do NTFS file systems offer beyond FAT32 file systems? Choose two.

A. Encryption

B. Auditing

C. Imaging

D. PXE

19. What benefit does VMFS offer over NTFS?

A. Encryption

B. Simultaneous journaling from multiple nodes

C. Auditing

D. Simultaneous read/write from multiple nodes

20. Your server requires storage that will be supplied over the network. What must the storage administrator configure for your server?

A. NTFS

B. VMFS

C. DAS

D. LUN

Questions and Answers

1. A new 4TB drive was added to a server, but you notice that the server operating system can use only 2TB. What is the most likely cause of the problem?

A. The drive was initialized as GPT.

B. The drive was initialized as MBR.

C. The operating system has not been updated.

D. Compression is enabled.

B. MBR-initialized disks have a maximum partition size of 2TB. A, C, and D are incorrect. GPT partitions are not limited to 2TB. Operating system updates do not determine partition size. Compression of the file system would not cause only 2TB to be available.

2. What hard disk dimension is considered the standard?

A. 1.75-inch

B. 3-inch

C. 5.5-inch

D. 3.5-inch

D. Most hard disk platters have either a 2.5- or 3.5-inch diameter. A, B, and C are incorrect. These are not standard hard disk dimensions.

3. Which disk speed factor does not apply to SSDs?

A. IOPS

B. Capacity

C. Rotational latency

D. Transfer rate

C. Rotational latency is related to the amount of time it takes for hard disk platters to spin to the correct position; SSDs do not have spinning platters. A, B, and D are incorrect. IOPS is the number of disk I/O functions that can be performed within 1 second. Capacity is the size of the drive. The transfer rate is a measurement of how quickly data can move into and out of a storage device.

4. Which disk interface standard is the most common in servers?

A. SAS

B. IDE

C. SATA

D. EIDE

A. SAS disks are designed to work 24/7 at high speeds, making them well suited for servers. B, C, and D are incorrect. IDE and its successor EIDE are deprecated disk interface standards. SATA is commonly used in desktops and servers, but it is more common on the desktop side.

5. Your server environment requires the ability to replace a failed disk while systems remain running. What type of disk system must you use?

A. Hot-swappable

B. UEFI

C. Drive-swappable

D. RAID

A. Hot-swappable components can be replaced while a system is running. B, C, and D are incorrect. UEFI is a firmware standard that succeeds BIOS. Drive-swappable is a fictitious term. RAID organizes multiple physical disks to work together for fault tolerance and/or performance reasons.

6. Identify the Windows supported file systems. Choose two.

A. eFAT

B. ReFS

C. XFS

D. NTFS

B, D. The ReFS file system was introduced with Windows Server 2012 and reduces the number of file system corruption problems. NTFS is the most common Windows Server file system type; it is a journaled file system that supports additional features such as encryption, file security, compression, and disk quotas. A and C are incorrect. eFAT is a fictitious term. XFS is a file system used by the SGI IRIX operating system and some flavors of Linux.

7. How does a SAN differ from NAS?

A. There is no difference.

B. SANs use IP and NAS does not.

C. SANs are local server storage and NAS is not.

D. SANs use specific block I/O protocols and NAS uses standard file-sharing protocols.

D. SANs use block I/O protocols such as Fibre Channel rather than file-sharing protocols. A, B, and C are incorrect. SANs and NAS are not the same. NAS uses standard file-sharing protocols such as SMB and NFS, which run over IP, and iSCSI SANs also use IP. SAN solutions are not considered local storage; instead, they are considered network storage.

8. One of your locations is using iSCSI for server network storage. You want to ensure that regular TCP/IP traffic does not impede iSCSI performance. What should you do?

A. Use a separate VLAN for iSCSI.

B. Configure LUN masking.

C. Configure LUN zoning.

D. Enable iSCSI CHAP authentication.

A. VLANs can be used to isolate one type of network traffic from another for performance reasons. Placing iSCSI traffic on its own VLAN accomplishes this. B, C, and D are incorrect. LUN masking and zoning are used to control host access to network storage. iSCSI CHAP is indeed used for authenticating initiators to targets, but it is not related to improving network performance.

9. Which statement regarding software iSCSI initiators is true?

A. Targets are accessible only through FC switches.

B. They do not support OS booting from SAN.

C. IPv6 must be configured.

D. iSCSI targets must reside on the same subnet with iSCSI initiators.

B. Software iSCSI initiators can be used only when the operating system is already running; therefore, SAN booting using this solution is not possible. A, C, and D are incorrect. iSCSI does not require FC switches; standard Ethernet equipment works just fine. IPv6 is not required for iSCSI; IPv4 works well. Initiators and targets do not have to reside on the same subnet.

10. Your boss has asked you to determine what equipment must be ordered to set up a new FCoE environment. Which FCoE item is required?

A. NAS device

B. CNA

C. UEFI

D. RAID controller

B. CNAs combine FCoE and NIC functionality; each host needing access to network storage requires a CNA. A, C, and D are incorrect. NAS devices provide access to network storage using file-sharing protocols such as NFS and SMB; this is not required with FCoE. UEFI is a firmware standard that succeeds BIOS. RAID controllers can group multiple physical disks together for fault tolerance and/or performance reasons, but this is not FCoE-specific equipment.

11. You are a Linux server administrator. To plan future disk space requirements, you would like to track user disk space consumption. What should you configure?

A. Hard quotas

B. GPT

C. NTFS

D. Soft quotas

D. Soft quotas can log the fact that disk space thresholds have been exceeded. A, B, and C are incorrect. Hard quotas actually prevent additional files from being stored on a server once the quota is reached, so they aren’t as useful as soft quotas for tracking purposes. GPT is a newer disk partitioning scheme that supersedes MBR and allows for a larger number and capacity for partitions. NTFS is a common Windows file system standard that supports features such as file encryption, compression, auditing, and disk quotas.

12. As you configure three new server disk volumes, you are unsure as to how large each volume should be. What should you configure?

A. Thin provisioning

B. Thick provisioning

C. RAID 0

D. RAID 1

A. Thin provisioning enables administrators to set a disk volume to grow to a maximum size if required, but the space is not allocated in its entirety, thereby allowing other thinly provisioned disk volumes to consume that same space as needed. B, C, and D are incorrect. Thick provisioning allocates disk volume space immediately and therefore cannot share that space simultaneously with other disk volumes. RAID 0, disk striping, is used to improve disk I/O performance. RAID 1, disk mirroring, is used for redundancy in case one disk fails.

13. Which RAID level offers the best performance?

A. RAID 0

B. RAID 1

C. RAID 2

D. RAID 3

A. RAID 0, disk striping, groups multiple physical disks together for disk I/O. When writing data to the RAID 0 array, the data is broken into blocks (stripes), each of which is concurrently written to a physical disk in the array, thus resulting in improved performance. B, C, and D are incorrect. RAID 1, disk mirroring, is used for fault tolerance. A disk write to one disk is duplicated on another disk. When one disk fails, the other has an up-to-date copy of the data. RAID 2 stripes bits of data but still writes error recovery information. RAID 3 stripes bytes of data but still uses a dedicated parity disk for error recovery.

14. Which type of storage would be considered Tier 2 storage?

A. SCSI

B. IDE

C. SSD

D. HDD

D. Hard disk drives are considered Tier 2 storage. A, B, and C are incorrect. SCSI and IDE are disk interfaces that are not organized into storage tiers. SSD would be considered Tier 1 storage.

15. Which type of storage combines disk platters with flash memory?

A. SSHD

B. SSD

C. HHDD

D. HDD

A. Solid-state hybrid drives combine flash memory with disk platters. B, C, and D are incorrect. SSDs are flash memory only. HHDD is a fictitious acronym. HDDs are disk platters only.

16. In an attempt to save disk space, Sean wants to store multiple Windows Server 2012 images in a single .WIM file. Which command should Sean use?

A. Dism /Append-Image /File:Z:\win10_images.wim /CaptureDir:C:\ /Name:win10_sales

B. Dism /Add-Image /ImageFile:Z:\win10_images.wim /CaptureDir:C:\ /Name:win10_sales

C. Dism /Append-Image /ImageFile:Z:\win10_images.wim /CreateFrom:C:\ /Name:win10_sales

D. Dism /Append-Image /ImageFile:Z:\win10_images.wim /CaptureDir:C:\ /Name:win10_sales

D. The /Append-Image switch adds images to a single .WIM file. A, B, and C are incorrect. There is no /File, /Add-Image, or /CreateFrom switch for the dism command.

17. You are planning the use of a new 8TB HDD. Six disk partitions are required for varying file storage uses. How should you initialize the disk?

A. GPT

B. MBR

C. RDP

D. TCP

A. GPT allows more than four disk partitions. B, C, and D are incorrect. MBR allows up to a maximum of four partitions. RDP, the Remote Desktop Protocol, is used to connect to a Windows computer over a network; it is not related to storage, nor is TCP. TCP is the Transmission Control Protocol; it is a reliable connection-oriented transport protocol within the TCP/IP suite.

18. Which features do NTFS file systems offer beyond FAT32 file systems? Choose two.

A. Encryption

B. Auditing

C. Imaging

D. PXE

A, B. NTFS file systems support encryption and auditing, which are not supported with FAT32 file systems. C and D are incorrect. Imaging and PXE are not file system features. Imaging is a convenient way of deploying operating systems without performing a manual installation. PXE enables booting over the network instead of from local media and is normally used for imaging operations.

19. What benefit does VMFS offer over NTFS?

A. Encryption

B. Simultaneous journaling from multiple nodes

C. Auditing

D. Simultaneous read/write from multiple nodes

D. VMware’s VMFS is designed to enable multiple cluster nodes to read and write to the same file system at the same time. A, B, and C are incorrect. Encryption and auditing are available with NTFS file systems. Simultaneous journaling is not a valid feature.

20. Your server requires storage that will be supplied over the network. What must the storage administrator configure for your server?

A. NTFS

B. VMFS

C. DAS

D. LUN

D. A logical unit number is a unit of disk space with a unique ID that is prepared by a storage administrator for use by a specific server. A, B, and C are incorrect. NTFS and VMFS are file systems that are not required for network storage. DAS refers to direct-attached, or local server, storage.