4

Organizational Constraints and Complexity Theory: Modeling with Agents

This chapter focuses on the need to use modeling and to define what type of modeling to use for which question.

4.1. A preamble to modeling

To set the context, recall that our industrial, social and economic environment is continuously subject to technological revolutions. In particular:

- – the first revolution was due to “mechanization” a little more than a century ago and provided society with significant gains in quality;

- – the second revolution was related to the “electrification” of enterprises, which made it possible to operate all mechanical machines under better conditions. This led to productivity gains;

- – then came the third revolution with “electronics”, allowing the development of the management and control of automation/automata. It led to performance gains;

- – finally, the “cybernetics” revolution made it possible to overcome distances and distributed component issues. This led to interconnected systems operating in a cooperative or collaborative mode.

This latest “cyber environment” connects all the technologies brought by the earlier revolutions. What is remarkable is that every resource is interconnected: equipment, products, users, suppliers, friends, producers, etc. Here, artificial intelligence becomes an enabling technology: its evolution in the cognitive or neural domains allows us to realize the programs that are being used in cyberspace.

Modeling is about representing real systems in an abstract way. Its implementation therefore requires certain capabilities, such as flexibility, modularity and genericity (universality), in order to ensure the functional integration adapted to each element of the global system. This holds all the more for complex systems.

In the following, we will detail how to conduct the global implementation (or global integration) of concepts and programmed elements using artificial intelligence. Throughout the modeling process, all entities used in the model are called agents. The functioning of these interconnected entities makes possible the emergence of patterns, forms or behaviors. We call this phenomenon collective intelligence.

4.2. Introducing collective intelligence

Our five senses allow us to perceive and understand our environment so that we can adapt to it. However, our environment has itself evolved and changed: in a very short time, on a human scale, humans created various artifacts, such as nuclear power plants, products such as CFCs, industries that produce CO2, industrial food, a very open market economy, etc. We are unable to measure all their interactions and understand the consequences, and our body itself has not experienced them through evolution. We are thus cognitively ill-fitted. Similarly, the world we have created is so complicated that we must call upon experts to manage these artifacts; yet, no expert has been trained to take into account all the components. Immersed in this world, our senses do not have the ability to provide us with adaptive knowledge on our relationships with the environment. Thus, we are both isolated in a new and “invasive” world that cannot be dominated in its entirety and unable to manage and control it as we would a traditional and limited environment that we may have known better and would be able to tame.

These open and complex worlds – all factors of progress in our society – can diverge because of the number of interactions between them and our environment (in relation to the so-called stable norms that characterize our society). Indeed, the resulting economic and ecological problems are linked to their unpredictability (e.g. chaos) and their convergence towards states for which we are unprepared, or even to which we are very vulnerable. This raises questions related to the evolution of our world, the referents who relate to it, our vulnerability, etc.

If we cannot control these worlds and the situations that emerge from them, it is up to us to adapt to them in a more or less forced way, ignoring the notions that inhabit us such as: our capacity for intuition, our emotions and our instinctive drives. From our individual behavior and our ability to adapt will therefore emerge a global behavior and a collective intelligence or collective way of doing things.

This is what this chapter deals with. It is important to be able to acquire technologies, i.e. tools, techniques and methods that enable us to better model our relationships with our environment and these new worlds, to have a better understanding of them and, consequently, to better design or redesign the systems under our control.

By describing the problem in this way, we address the theme of collective intelligence, the stability of complex systems and their re-engineering. We propose the study of complex systems from the perspective of agent communities. Indeed, in a slightly different formulation, complexity is a property of programmable networks – in this case, a network of networked elements or organizations. The nature of these organisms – called “agents” – can be human, animal, economic, social or artificial. However, these communities of agents operate in a very particular way, and their individual behavior will be influenced by that of their own neighbors. As we have seen, a global order, representative of what is called a “collective intelligence”, will emerge. Thus, the following definition [PEN 03] establishes a common basis for understanding:

“Collective intelligence is a hypothesis relating to the ability of a group of agents to achieve a higher level of performance in action. It underlies the existence and use of cognitive processes, learning, representation, decision-making, but also social processes such as sharing, exchange, negotiation, self-organization, or relational (or socialization) processes, such as recognition, competition, involvement”.

This chapter describes some theoretical aspects related to the notion of agents and collective intelligence, and then addresses practical aspects, particularly in terms of competition and cooperation between agents and between competitive worlds. Finally, it describes techniques and methods that we have developed and derives methodological elements for process re-engineering.

4.3. Studying the agent concept

The objective of this section is to clarify basic concepts and define terms appropriate for a consistent understanding of the system under study.

4.3.1. Some definitions of an agent

The words “entities”, “elements”, “organizations”, “actors” or “agents” are frequently used, often interchangeably. There is even a multitude of definitions of the agent concept, depending on the type of application for which the agent is designed; these definitions nevertheless share some similarities. J. Ferber equates the term agent to a physical or virtual entity [FER 95] that:

- – is able to operate in an environment;

- – can communicate directly with other agents;

- – is driven by a set of trends in the form of individual objectives, and a satisfaction or survival function that it seeks to optimize;

- – has its own resources;

- – is able to partially perceive the environment;

- – has only a partial representation of it;

- – has skills and provides services;

- – can possibly reproduce; and finally

- – exhibits behavior that tends to meet its objectives, taking into account the resources and skills at its disposal and according to its perception, its representations and the communications it receives.

This definition introduces exploitable characteristics, such as the notion of limited or local objective and perception in the environment. Indeed, an administrative operator, technician or mobile robot generally has a certain radius of perception and influence to observe and act on its physical environment (a mobile agent in the Internet network cannot observe the entire network; only a part of it). Similarly, the assignment of tasks and products to resources in a decentralized production workshop is done locally. In summary, the interactions between product and resource agents depend largely on their own objectives and the constraints in their local environment.
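The notion of local perception can be illustrated with a minimal sketch. The `Agent` class, its `perception_radius` parameter and the 2D coordinates are assumptions introduced here for illustration only; they do not come from [FER 95].

```python
import math
from dataclasses import dataclass


@dataclass
class Agent:
    """Hypothetical situated agent with a limited radius of perception."""
    name: str
    x: float
    y: float
    perception_radius: float  # assumed parameter: how far the agent can sense

    def perceive(self, others):
        """Return only the agents located within the perception radius."""
        return [
            other for other in others
            if other is not self
            and math.hypot(other.x - self.x, other.y - self.y) <= self.perception_radius
        ]


robot = Agent("robot", 0.0, 0.0, perception_radius=2.0)
near = Agent("machine_A", 1.0, 1.0, perception_radius=1.0)
far = Agent("machine_B", 5.0, 5.0, perception_radius=1.0)

# The robot only sees the part of the workshop that lies within its radius.
visible = [agent.name for agent in robot.perceive([robot, near, far])]
```

In this sketch the robot perceives `machine_A` but not `machine_B`, so any decision it makes can only rely on partial, local information.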

Wooldridge and Jennings [WOO 95, JEN 98] defined the agent as a computer system located in an environment and acting in an autonomous and flexible manner to achieve certain objectives for which it was designed:

- – an agent is said to be situated if it is able to act on its environment from the sensory inputs it receives from the same environment;

- – an agent is considered autonomous if it is able to act without the intervention of third parties (human or agent) and if it controls its own actions and internal state;

- – an agent is said to be flexible if it is able to act in a reactive, proactive and social way:

- - reactive: able to perceive its environment and react over time;

- - proactive: able to take the initiative and be opportunistic (i.e. act at the right time);

- - social: able to interact with other agents when the situation requires it (to complete its own tasks or to cooperate with them).

This notion of situation or situated agent implies that autonomous agents must act in a real world, in perpetual change, partially observable and intrinsically unpredictable. Similarly, they must react in real time to all disruptions encountered, while respecting their objectives and system constraints. Finally, they may have to take into account the actions of other agents [DRO 99].

To summarize, this book adopts the following definition: an agent is a physical or virtual entity situated in an environment with which it interacts in an autonomous and flexible way.

4.3.2. The different categories and models of agents available

This section presents different models of agents in order to understand their characteristics and operating modes, and summarizes some of the work done in the control of distributed systems. We distinguish here two main families of agents: cognitive agents and reactive agents [REA 03a].

4.3.2.1. Cognitive agents

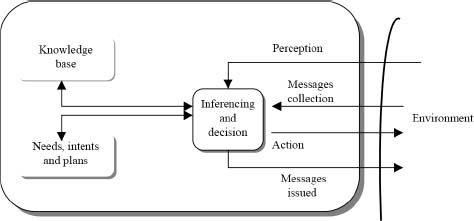

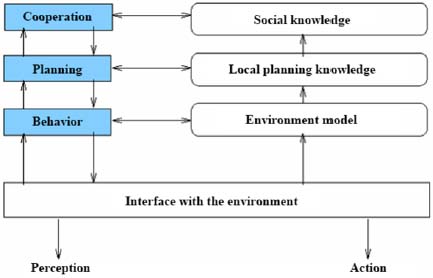

This was the first agent model proposed, and it is also known as a deliberative agent. It is based on symbolic artificial intelligence (AI) and makes it possible to plan the actions of an agent within its environment [FIK 71]. Indeed, cognitive agents alone are capable of carrying out relatively complex operations. In general, they cooperate with each other to achieve a common goal (solving a problem, a complex task, etc.) and have a set of explicit representations (about the environment, other agents and themselves) described in a knowledge base on which they can reason. They react according to their knowledge, goals, information exchange with other agents and perception of the environment (see Figure 4.1). They have communication means and mechanisms to manage interactions with other agents (cooperation, coordination and negotiation).

Figure 4.1. Cognitive agent model

This agent model is a metaphor for the human model and is based on the sociology of organizations [LIU 02]. Its origin lies in the desire to bring together traditional expert systems in the field of AI.

The cognitive agent is also referred to as a BDI (Beliefs, Desires and Intentions) agent [BRA 88]. The model postulates that the agent’s actions must be, as for a human being, dictated by abstract representations of the world that include the representation of its own capacities and goals, as well as those of other agents. This model is based on extensions of logic and is founded on the following mental states:

- – beliefs: the agent’s knowledge of the environment;

- – desires: the agent’s “options”, i.e. the different objectives through which the agent may want to pass;

- – intentions: the states through which the agent has chosen to transit.

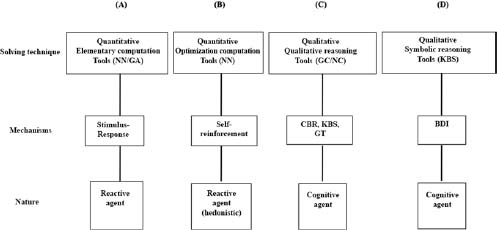

The functioning of a BDI agent therefore involves updating its beliefs based on the information it receives from its environment, then determining the different choices it faces, analyzing them and setting its goal states according to them (intentions) and finally defining its actions taking into account its intentions. The cognitive agent generally deals with qualitative information and uses qualitative or symbolic reasoning. This processing can be established through tools such as genetic/neural classifiers (GC/NC) or expert systems (ES). This agent can use mechanisms such as case-based reasoning (CBR), knowledge-based systems (KBS), game theory (GT) or the logic used in BDI agents (see (C) and (D) in Figure 4.3).
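The cycle described above (update beliefs, determine options, commit to intentions, act) can be sketched as follows. This is a simplified illustration under stated assumptions, not a full BDI logic: the `BDIAgent` class, the rule that a desire remains an option until the beliefs mark it as achieved, and the choice of committing to the first option are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class BDIAgent:
    beliefs: dict = field(default_factory=dict)     # what the agent holds true
    desires: list = field(default_factory=list)     # candidate goals, by priority
    intentions: list = field(default_factory=list)  # goals the agent committed to

    def update_beliefs(self, percept):
        """Revise beliefs with the information received from the environment."""
        self.beliefs.update(percept)

    def generate_options(self):
        """Assumed rule: a desire is an option while it is not believed achieved."""
        return [d for d in self.desires if not self.beliefs.get(d, False)]

    def deliberate(self, options):
        """Assumed rule: commit to the highest-priority option only."""
        self.intentions = options[:1]

    def act(self):
        """Derive actions from the current intentions."""
        return [f"work_towards:{goal}" for goal in self.intentions]

    def step(self, percept):
        """One pass of the cycle: beliefs, options, intentions, actions."""
        self.update_beliefs(percept)
        self.deliberate(self.generate_options())
        return self.act()


agent = BDIAgent(desires=["order_delivered", "stock_replenished"])
first = agent.step({"order_delivered": False})
second = agent.step({"order_delivered": True})
```

Once the agent believes the first goal is achieved, its intention shifts to the next desire, which is the behavior the paragraph above describes in words.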

4.3.2.2. Reactive agents

Among the critics of symbolic reasoning is R.A. Brooks [BRO 91], who, in opposition to the symbolic model, proposed an alternative approach now called “active AI”. In this approach, intelligent behavior should emerge from the interaction between various simpler behaviors. J. Hawkins [HAW 04] seems to be of a similar opinion.

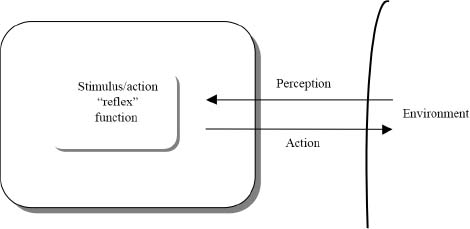

Figure 4.2. Model of a reactive agent

In this same context, we consider that reactive agents do not have explicit knowledge of the environment, other agents or their past, nor of their goals (no planning of their actions). They are agents that react only to their perception of the environment and act according to this perception (see Figure 4.2). This agent model is a metaphor for the “ant” model and is based on life sciences and collective intelligence [BON 94, BON 99].

Hedonic agents belong to the same category as reactive agents, but with more rationality. They learn to modify their behavior by self-reinforcement in order to increase their “pleasure” or “satisfaction”. They are capable of “hedonistic” expectations and slow adaptation based on their historical experience, and this requires a level of rationality higher than that of the purely reactive agent.

Reactive agents generally process quantitative information and use elementary or optimization calculations. They can be constructed by connectionist networks such as neural networks (NN) or by using simple computational algorithms such as genetic algorithms (GA). Their capacities respond to the law of stimulus/action and self-reinforcement learning (see Figure 4.3(A) and (B)).
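The difference between a purely reactive agent and a hedonic agent can be sketched as follows. Both classes, the rule table, the `learning_rate` parameter and the reward values are illustrative assumptions; the update used is a simple running average, chosen here for brevity, not a method taken from the cited works.

```python
class ReactiveAgent:
    """Maps each stimulus directly to an action: no memory, no goals, no planning."""

    def __init__(self, rules, default="wait"):
        self.rules = rules      # stimulus -> action
        self.default = default

    def act(self, stimulus):
        return self.rules.get(stimulus, self.default)


class HedonicAgent:
    """Reactive agent that reinforces the actions that increased its satisfaction."""

    def __init__(self, actions, learning_rate=0.5):
        self.actions = list(actions)
        self.learning_rate = learning_rate  # assumed parameter
        self.satisfaction = {}              # (stimulus, action) -> estimated satisfaction

    def act(self, stimulus):
        # Pick the action with the highest estimated satisfaction (first one on ties).
        return max(self.actions, key=lambda a: self.satisfaction.get((stimulus, a), 0.0))

    def reinforce(self, stimulus, action, reward):
        # Move the estimate a fraction of the way towards the observed reward.
        old = self.satisfaction.get((stimulus, action), 0.0)
        self.satisfaction[(stimulus, action)] = old + self.learning_rate * (reward - old)


ant = ReactiveAgent({"food": "pick_up", "obstacle": "turn"})
learner = HedonicAgent(["turn", "push"])

before = learner.act("obstacle")                    # no experience yet
learner.reinforce("obstacle", "turn", reward=-1.0)  # turning was unpleasant
learner.reinforce("obstacle", "push", reward=1.0)   # pushing was satisfying
after = learner.act("obstacle")
```

The reactive agent always answers the same stimulus with the same action, whereas the hedonic agent slowly shifts its choice according to its accumulated experience.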

4.3.2.3. Summary and comments

Reactive agents are the easiest to implement. While their effectiveness has been verified in many applications, their limitations come from the following points:

- – the agent does not have a mental representation of the environment and must choose the right actions only from local data;

- – the agent’s overall behavior cannot be easily predicted. Therefore, it is not always possible to design the agent behavior for the specified purpose.

Figure 4.3. Structures of agent models

Unlike reactive agents, cognitive agents are much more complex and difficult to implement because each agent relies on its own skills in isolation to solve a problem. This is the main limitation of this architecture.

To address the disadvantages of these two models, researchers combined these two opposing but complementary aspects of agent design, which gave rise to hybrid agents.

4.3.2.4. Hybrid agents

Hybrid agents are designed to combine reactive and cognitive abilities, allowing them to adapt their behavior in real time to the changing environment [FER 92, FIS 99]. In the hybrid model, an agent is composed of several layers arranged in a hierarchy. Most architectures consider that three layers are sufficient.

Thus, at the lowest level of the architecture, there is usually a purely reactive layer, which makes its decisions based on raw data from the sensors. The intermediate layer ignores the raw data and works instead with a vision of environmental knowledge. Finally, the upper layer is responsible for the social aspects of the environment (communication, cooperation, negotiation), i.e. the reasoning taking into account the other agents.

The InteRRap model proposed by J.P. Müller [MÜL 94] (see Figure 4.4) is an example of the hybrid model where each layer has a knowledge base according to its degree of abstraction: an environment model at the reactive layer level, a mental model at the local planning layer level and a social model for the global cooperation and planning layer.

Figure 4.4. The InteRRap hybrid agent model
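The three-layer organization can be sketched as a chain in which a situation is handed to the lowest layer able to deal with it. This is a loose illustration of the layering idea only: the `Layer` and `HybridAgent` classes, the situation dictionary and the thresholds are hypothetical and do not reproduce the actual InteRRap control mechanism.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Layer:
    name: str
    can_handle: Callable[[dict], bool]  # is this layer competent for the situation?
    decide: Callable[[dict], str]       # decision produced by this layer


class HybridAgent:
    def __init__(self, layers):
        self.layers = layers  # ordered from lowest (reactive) to highest (social)

    def decide(self, situation):
        """Return (layer name, decision) from the lowest competent layer."""
        for layer in self.layers:
            if layer.can_handle(situation):
                return layer.name, layer.decide(situation)
        return None, "no_action"


agent = HybridAgent([
    # Reactive layer: works on raw sensor data (assumed distance threshold).
    Layer("reactive",
          lambda s: s.get("obstacle_distance", float("inf")) < 1.0,
          lambda s: "stop"),
    # Local planning layer: works on the agent's own tasks.
    Layer("local_planning",
          lambda s: "task" in s and not s.get("needs_partner", False),
          lambda s: f"plan:{s['task']}"),
    # Cooperative layer: reasoning that involves other agents.
    Layer("cooperative_planning",
          lambda s: s.get("needs_partner", False),
          lambda s: f"negotiate:{s['task']}"),
])

r1 = agent.decide({"obstacle_distance": 0.5, "task": "drill"})
r2 = agent.decide({"task": "drill"})
r3 = agent.decide({"task": "lift", "needs_partner": True})
```

An urgent sensor reading is handled by the reactive layer even when a task is pending, while tasks requiring a partner are escalated to the cooperative layer.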

4.4. Applications using agents

Through the extended definition that has been given, agents are found everywhere in our universe. Here are two examples of use that illustrate their role and architecture.

4.4.1. Modeling the behavior of a living organism

The individual behavior of a so-called “evolved” organism as we encounter it around us can be represented by a fairly general agent, regardless of the environment studied: ant colonies, management in industry, evolution of the economic world, a man lost in the crowd, a tiger in the jungle, etc. Indeed, by observing such organisms around us, we note that three steps always appear in their behaviors:

- – situation analysis and action in a given environment to ensure its survival or to overcome an impasse. The action can be of the “reflex” or “reasoned” type but it is executed autonomously;

- – reproduction or integration of solutions to introduce diversity and multiply forms and varieties (i.e. dissemination and exploitation of an advantage);

- – adaptation to improve and “refine” its configuration and information processing system.

In terms of modeling, an agent will be required to perform the functions described above. To the extent that this approach is not sufficiently responsive, natural selection mechanisms will be added and integrated into the agent. Otherwise, a colonization or domination effect is to be expected, which also results in an induced function. This already leads us to make two remarks on certain information processing techniques and, consequently, on the structure and type of agents we can use:

- – In industry, most of the advanced technologies, which we have developed (artificial intelligence, forecasting, etc.) to anticipate an event or to emulate the activity of a living organism, have often been followed by mixed results. This is normal insofar as the basic mechanisms used in these approaches are sometimes very different from those in our heads. Indeed, we are “programmed” to ensure our survival: when an animal jumps on us, it is important to be able to quickly recognize if it is a tiger or a cat, in other words, if we should hit it or if we have to run away. And if there are precursors (sniffing, detection of “weak signals”) that allow us to anticipate this event, all the better. In terms of information processing, this means that first of all, pattern recognition techniques and notions of globality and parallelism are used. However, the computer programs we have designed work in a much more simplified way on the basis of algorithms, with reduced data and in an analytical and sequential way. Hence, the inevitably limited results obtained.

- – Artificial neural networks, which have often been promoted in the knowledge that they are, in a way, structured in the image of our brain, are first made (at the current stage of our mastery of this technology) to classify quickly in reflex mode, but not to reason or predict. On the contrary, case-based reasoning (CBR) is an excellent technique that we have used many times successfully at IBM because it allowed us to model a way of doing things and the operators’ experience, and then to use these skills in a similar or different way when we later found ourselves confronted with similar situations.

4.4.2. Modeling of an industrial management and control system

All the architectures and concepts proposed to implement new approaches in production system management and control are shown in Figure 4.3. From left to right, we have included models that are first quantitative, then qualitative and cognitive, and whose implementation requires special care.

Jihad Reaidy’s thesis focused on the decentralized management of production systems. Products and resources are represented by agents in constant interaction for a better allocation of tasks on resources. These agents must be able to react at any time, in any geographical or physical context. To this end, the notions of partial perception of the environment, situation, autonomy and reactivity represent many of the criteria and characteristics that must be included at the level of an agent. We used an evolutionary agent model that can be considered as a hybrid agent. This model is applied to “product” and “resource” agents for the dynamic allocation of resources in a production workshop. It implies a relatively simple specific architecture: there is no need to make this agent more complex because it is mainly diffusion and propagation phenomena that come into play. The architecture of this agent model was used in the DAPS tool [MAS 01a]. Other agents have been used in more ambitious projects such as VFDCS or PABADIS, which will be presented later [LIU 02, PAB 00].
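To give an idea of how “product” and “resource” agents can allocate tasks locally, here is a minimal sketch based on a simple bidding scheme. It is not the algorithm used in DAPS: the `ResourceAgent` and `ProductAgent` classes, the bid defined as current load plus processing time, and all numerical values are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class ResourceAgent:
    name: str
    skills: dict             # task type -> processing time (hypothetical units)
    queue_load: float = 0.0  # work already accepted by this resource

    def bid(self, task):
        """Offer an estimated completion time, or None if the task is not supported."""
        if task not in self.skills:
            return None
        return self.queue_load + self.skills[task]

    def accept(self, task):
        self.queue_load += self.skills[task]


@dataclass
class ProductAgent:
    name: str
    task: str

    def allocate(self, neighbors):
        """Ask the resources in the local neighborhood for bids and pick the lowest."""
        bids = [(resource.bid(self.task), resource) for resource in neighbors]
        bids = [(value, resource) for value, resource in bids if value is not None]
        if not bids:
            return None
        _, winner = min(bids, key=lambda pair: pair[0])
        winner.accept(self.task)
        return winner.name


m1 = ResourceAgent("M1", {"drill": 4.0, "mill": 6.0})
m2 = ResourceAgent("M2", {"drill": 5.0})
products = [ProductAgent("P1", "drill"), ProductAgent("P2", "drill"), ProductAgent("P3", "mill")]

# Each product negotiates only with the resources it can reach; no central scheduler.
assignments = {p.name: p.allocate([m1, m2]) for p in products}
```

Because each accepted task increases the load a resource reports, the second drilling job is steered towards the less loaded machine without any global scheduler.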

4.5. Conclusion: information related to the use and usage of modeling

Being able to model complex systems makes it possible to provide “good” solutions to the economic and social challenges related to the so-called commercial wars, the rise of the free trade economy, ecology or protectionism. Before turning to the familiar term “economic war”, let us first consider how modeling relates to the concept of “global free trade”.

4.5.1. Free Trade considerations

There is a lot of talk of “Free Trade”. Today, however, there is no free trade: we are still in an era of international exchanges governed by States, federations of states, countries or groups of countries, etc. Indeed, within these countries, there are always companies or enterprises, and humans: they are the ones who negotiate, trade, share the markets, enrich themselves or become poorer.

Thus, “pure and perfect” competition or competitiveness does not exist: the competitors are never on a strictly equal footing (in terms of size, wealth, skills, business intelligence and production costs). This situation is fundamentally different from the spirit of competition that governs sport, knowledge, beauty and commerce.

When people say that free trade is a question of trading with various partners, claiming that “with many partners, our relationships and situation will be stronger”, it is in fact a way to give an advantage to some categories of people (leaders or groups of partners) in order to protect and develop the global or financial interests of those categories, at the expense of other market players. This causes a bias in terms of the economic interests, objectives or strategies to be considered in the model.

For a government, an economic leader, etc., it is also the ability, on behalf of specific superior interests, to favor or reinforce some beliefs such as political religions: for example, to develop the car industry in some countries to the detriment of agriculture, or to promote finance to the detriment of the steel or textile industry. In short, the major decision-makers (political and/or industrial) can restructure, or reorganize, a complete domain of activities, and thus counterbalance or reduce the profits of some to the benefit of others; they can redistribute the cards of the economy, as in a game, and change their belief/religion.

4.5.2. Harmonization of situations and objectives

All this can only be done with models! For instance, the models used by a government can be different from the models operational in a company.

Indeed, managing free-exchange approaches (which are free in appearance) is necessary at the country level in order not to completely ruin some specific socio-professional categories and to maintain a good employment balance sheet. It is then these models that are implemented to moralize a rather “liberal” strategy by signing economic contracts between countries: these are intended to fight against “competition distortions” and against all kinds of deviances related to dumping, social plans, the fiscal organization, the environment and monetary distortions. To this end, they can focus on “harmonization”, to obtain for example similar wages and the same labor law, the same taxes, the same formalities, the same standards, the same currency, taxes and variable contributions from one country to another, and finally legislation concerning practices, costs and behaviors.

4.5.3. Emergence of the ecology and “patriotism”

But – and this is new – our free-trade religion has just become more complicated: indeed, ecology is now integrated into the economy and adds its constraints, given that they are considered as externalities. Here, we can mention “short market circuits” in logistics; the best balance between local production and mass personalized production; aggregation, i.e. a level of upscale integration, etc. All these strategies developed with/by ecologists can be considered as protectionist decisions.

Thus, there are two directions: free trade and protectionism must themselves be integrated into the models and will have to be treated using game theory or the dynamic pricing of products.

4.5.4. Comments and expectations on modeling expectations

Therefore, according to the above considerations, there are now only three main solutions to three classes of actions:

- – Offshoring (make or buy strategy). Exile or distribution of companies and people to greener climates; improvements and competitiveness of individual companies.

- – Redefinition and adjustment of the role of a state. Is the decline or withdrawal of state control over the economy an institutional and virtuous solution, which ultimately leads to harmonization and comparable competitiveness at macro-economy level?

- – Free trade or generalized protectionism? “Patriotism” also includes “defense of heritage” by certain actors or countries. The objective is to limit the destruction of jobs or purchasing power, to save specific skills, know-how and subsidies, and then to maintain and restore acceptable competitiveness criteria.

In the following chapter, we will detail some examples to explain the utility and uses of models used in large companies such as IBM.