“As our personal devices know more and more about us—where we live, where we work, when we’re at the movies, when we’re listening to music—they can make better decisions about how we might like to interact with them.”

—Laura Klein, Design for Voice Interfaces

Lately, purported advances in artificial intelligence have ignited a buzz about the possibility of texting and talking to our domestic machines. However, a highly successful conversational interface is already over twenty years old. While only recently allowing voice input, Google Search has embodied the core principles of conversation from the beginning (Fig 3.1). And while its capabilities are ever improving, the basic starting point on the web has remained virtually identical. There’s a motley wordmark, a text entry box and two buttons—as it’s been for all time.

Over the years, despite demonstrative efforts by third parties to “redesign Google,” the interface has worked so well that it has needed only a cleanup. With the conversational model in place, adding the alternative for audio input and output fits right in (Fig 3.2).

This is the ideal we should be striving for: interactions that flow like conversations regardless of the medium, allowing users to switch modes seamlessly depending on the context and the information being exchanged. If the right framework is in place, capabilities can be layered in without breaking the model.

Google Search is just this type of proven partner in conversation, whether input arrives in text or speech. Interactions are:

- cooperative,

- goal-oriented,

- quick and clear,

- turn-based,

- and error-tolerant.

It’s fast and efficient, and doesn’t let you forget this—how many thousands or millions of matches came back in how many fractions of a second, as displayed on the results page. It doesn’t matter that nearly no one looks past the first page. It matters that seeing a large quantity in a small amount of time feels fast. And this is critical. The experience of speed is entirely subjective.

Several years ago, I made a spontaneous field study of exactly this. I boarded a San Francisco city bus near my office. A pair of bus drivers were passing the time and talking about web search. One of the drivers was wearing a characteristic Sikh turban. The other was excited to tell his friend about his efforts to learn as much as possible about Sikh culture and religion.

“I use Google. It is the best. I just typed in ‘Sikh’ and all of this information came up instantly.”

“What about Yahoo!? Don’t they also have a web search?”

“Well, they do, but it is much slower than Google. Yahoo! is for yahoos.”

Yahoo! Search was a client at the time. So, I knew for a fact that Yahoo! was licensing the Google search engine and that both sites were equally fast. Because the results appeared under a different header in a more vibrant and colorful layout, the subjective impression was that Yahoo! was slower. Usability testing bore this out.

In addition to being fast and feeling fast, Google Search matches the user’s intent. Most businesses need to pull the user at least a bit off their path to be successful. The milk is in the back of the supermarket for this reason. However, Google is a machine for meeting needs. The better Google Search matches what the user’s looking for, the better it is for Google. And the more searches it makes, the better it gets at matching user intent.

By observing and learning from trillions of searches, Google Search has also become highly error-tolerant. To test how far it would go I typed “lsgn recp” (without quotes) into the search field (Fig 3.3). In less than a second, Google delivered a summary of an actual recipe—the “Absolute Best Ever Lasagna Recipe”—and a series of related queries. Typing the same garbage string of letters into the recipe’s source site, Food.com, gets “Sorry, No Matches.”

Many websites put the work of providing clean input data onto the user, but Google makes us feel like it’s on our side. By taking advantage of the fact that we’re social creatures who make a lot of mistakes, Google has become pretty good at guessing, which has made it very rich indeed.
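The difference between a strict lookup and a forgiving one can be sketched in a few lines. This is only an illustration, not a description of how Google Search works: it uses Python's standard `difflib` module as a stand-in for learned query correction, and the `KNOWN_TERMS` vocabulary, function names, and `cutoff` value are all hypothetical.

```python
from difflib import get_close_matches

# Hypothetical vocabulary. A real search engine learns from query logs instead.
KNOWN_TERMS = ["lasagna", "recipe", "salad", "receipt", "lasso"]


def strict_lookup(query):
    """Return the query words only if every word is an exact known term."""
    words = query.lower().split()
    return words if all(word in KNOWN_TERMS for word in words) else None


def tolerant_lookup(query, cutoff=0.6):
    """Map each word to its closest known term, keeping the word if none is close."""
    corrected = []
    for word in query.lower().split():
        matches = get_close_matches(word, KNOWN_TERMS, n=1, cutoff=cutoff)
        corrected.append(matches[0] if matches else word)
    return corrected


if __name__ == "__main__":
    print(strict_lookup("lsgn recp"))    # the strict system gives up
    print(tolerant_lookup("lsgn recp"))  # the tolerant system makes its best guess
```

The strict version pushes the work of producing clean input back onto the person typing. The tolerant version takes on that work itself, which is the cooperative behavior described above.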

Each interaction with Google Search is also an object lesson in how to be an effective information seeker. Because revising the query feels effortless, and the turn-taking interaction is natural, there’s little perceived downside to running repeated searches. Each turn tells you something about the domain you’re searching. And it’s unlikely you’ll get nothing. So, it feels like Google never breaks. Google is a true partnership between human and machine. Query formation has become a life skill more valuable than knowing your way around town, and far more useful to most students than the Dewey Decimal System.

Following conversational design principles is just one part of creating conversational interactions. It’s also important to employ them in the right way at the right time.

Moment by Moment

Our interactions with digital systems take place in a series of key moments. A moment can be thought of as a stage in a customer’s knowledge of and relationship to a system. And each of these moments should support the success of the overall interaction.

These key moments are:

- Introduction. The aspects of the system that create a strong, positive initial impression, invite interest, and encourage trust.

- Orientation. Establishing or re-establishing the boundaries of the system, how the concepts within it are organized, and the possibilities for action towards a goal.

- Action. The set of tasks that are supported by the system, and the available controls for accomplishing those tasks.

- Guidance. How the system helps ensure successful interactions, including providing instructions and feedback, and a positive ongoing relationship with the customer.

Think of the human analog. Let’s say you’re going to take a skiing lesson; while you would hope that all your interactions with the instructor reflected your needs and inspired confidence, you would also expect different priorities at different points in your relationship. First you would probably want to feel that you are with a friendly, expert instructor who is upfront about their identity and credentials and demonstrates sufficient enthusiasm for skiing. Then you would want to know how the instructor will help you meet your goal and what your options are for proceeding. And in the middle of the lesson, it’s important that the instructor helps you succeed and takes care of you if something goes wrong. Should you return the next day for another lesson, the introductory interactions need only reassure you that you’re dealing with the same person as before.

Many elements of the system will have roles in more than one key moment. A strong, clear introduction can also set expectations that help orient the user. All elements should reinforce one another harmoniously.

Introductions: who are you?

Because you never get a second chance to make a first impression.

—Head & Shoulders dandruff shampoo

A successful introduction establishes identity, commands interest, communicates value, makes an emotional connection, builds trust, and offers a clear next step—and does all of this in a matter of seconds. Think of the openings of your favorite TV shows. Every episode has theme music and titles designed to grab your attention even before the action starts. Trevor Noah introduces himself on The Daily Show even though most people who tune in know who he is and expect to see him. This reinforces the emotional connection of habitual viewers and, equally, welcomes new viewers. Different elements are appropriate to each medium, but the principles are the same.

Various studies offer estimates of how much time a person, product, website, or interface has to make a first impression. The answer is, not much. The precise number of milliseconds doesn’t matter. It’s a sliver of attention, and whatever you have to offer will be judged in light of your customer’s past experience and biases.

Any system needs to communicate several things quickly to make it to the next impression. Beyond establishing credibility and utility, it needs to get hooks into a human mind slippery with competing associations and concerns, conscious and unconscious.

When confronting a new system, the potential user will have these unspoken questions:

- Who are you?

- What can you do for me?

- Why should I care?

- How should I feel about you?

- Why should I trust you?

- What do you want me to do next?

If you haven’t answered these questions explicitly in the design process, the system won’t provide clear, meaningful answers to your user. Many digital systems are quite complex, but if you can’t distill what you offer into a single introductory sentence you’re putting the work of understanding it onto your potential customer.

The first iteration of Google was cheerful and explicit (“Search the web using Google!”). It required a very low commitment and returned something useful. That’s a good introduction. A powerful introduction will inspire the first interaction. That first interaction should provide enough value to seal the deal.

Most systems don’t have the luxury of a one-click interaction. The more complex the product or service, the more powerful the first impression should be. From the customer’s perspective, the first impression may or may not be their first time using an interface (Fig 3.4). It’s also likely that the very first time someone encounters a system won’t be the encounter they remember.

For as long as we’ve had the consumer internet, a website homepage has been the place to start the conversation. Too often, though, it’s functioned more like, well, an inert page of information. If we’re going to inspire more interaction, we need to take a more conversational approach to copy and structure.

But most people won’t make it to the homepage unless they’ve already heard about the product or company elsewhere. And thanks to our friend Google, many people end up on websites they’ve never seen before (or have seen and forgotten). So, a good question to keep in mind at all points is, “What if someone who doesn’t know what we do ends up here?” Where websites are concerned, think of every page as a homepage. With larger systems and services, consider in what context, on what device, and with which system representative the relationship is most likely to begin.

Slack claims to be the fastest growing business application in history. Their success has a lot to do with their ability to offer efficient online communication and collaboration while retaining the humanity of people working together. Their marketing homepage gets this across (Fig 3.5). It’s clean and efficient and leads with concrete details rather than abstract promises.

The team administrator will see this page when setting up a Slack account, but for the team members, an email invitation (Fig 3.6) is going to be their first experience of the application. Given the social context of the person inviting them, there’s no need to overexplain Slack’s proposition. The invitation fulfills all the criteria for a successful introduction. Overexplaining erodes credibility and takes up precious time.

Duolingo, the language learning application, also makes a terrific first impression (Fig 3.7). A critical part of using language well is using as little of it as possible. Anyone who finds Duolingo via a web search for “learning French” will see a link to getting started in French, and a link that indicates there are other language courses available too. This is simple and unambiguous. French. Free. Easy. Daily practice. The economy of the statement sets the expectation that the learning interaction will be simple and user-centered.

Identity. Interest. Value. Trust. These are the essential ingredients in every successful introduction. Aim to solve these in as few words as possible. Look to the classic advertising slogans, designed to burrow into human brains, for inspiration. Traditional advertising was led by the copywriter, so these deceptively simple phrases demonstrate significant craft. The best advertising inspires us to avoid being generic or boring. Each of the slogans below conveys a distinctive, concrete, customer-centered benefit. Despite hailing from the days of print and television, these are conversational because they reinforce a sense of familiarity, a shared culture, and are designed to inspire action.

Melts in your mouth, not in your hands (M&Ms candy)

When you care enough to send the very best (Hallmark)

Leave the driving to us (Greyhound)

The happiest place on earth (Disneyland)

Humans are physical beings, as of this writing, with a rich emotional life, so evoking emotions and physical sensations is more powerful than speaking in abstractions. Of course, in the first moment of an interaction, it’s important to be descriptive as well as persuasive. Don’t forget to be persuasive. It’s not an interaction, or a conversation, unless the person you’re addressing bothers to answer.

How to be forgettable

Simply creating awareness is not enough to make a successful introduction. You need to move people from awareness to action without requiring effort on their part. Plenty of businesses spend a lot of money on branding and marketing campaigns, then fail to make the most of attention when they get it.

Your product or service will take a quick trip down the memory hole if you:

- have a name that’s generic, or hard to spell or pronounce;

- say too little about yourself, or too much about anything;

- look or sound like other products (benefitting from people’s confusion is a dark pattern);

- lack a clear, enticing pitch;

- provide too many options; or

- use terms that are meaningless to your target customer.

These things are more likely to happen when there’s insufficient understanding about what’s meaningful to potential customers. The clearer you are about what customers care about, and why, the easier it is to connect with those existing needs and associations. In the absence of this understanding, it’s easy to say too little, too much, or the wrong thing. As Dale Carnegie said in his seminal interaction design text How to Win Friends and Influence People:

You can make more friends in two months by becoming interested in other people than you can in two years by trying to get other people interested in you.

I’ve been an avid Twitter user from the beginning, so it would make me happy for them to succeed. Given their notorious trouble attracting new users to the platform, it’s mystifying that they don’t make more of their homepage (Fig 3.8). There’s no website name, no pitch to participate, sign-up is subtle, and they lead with a context-free video, which gives the overall impression of a generic news site. The most prominent next step is for existing account holders. This is the interaction design equivalent of saying, “Don’t you know who I am?”

Rather than offering a concise, friendly introduction and using this critical moment to draw potential users in, Twitter simply poses the question, “What’s happening?” And then appears to provide the answer with the content below. Only current Twitter users will recognize the question as the input prompt from the app. In the absence of an explanation, this comes across as mysterious, and even a little shady. A single phrase of welcome and a clear next step for new users would go far.

Once a system has made a good first impression, it’s time to help people get oriented and develop a solid understanding.

Orientation: where am I?

According to legend, in the 5th century BC, the poet Simonides of Ceos attended a banquet at the house of a wealthy nobleman in Thessaly (a region of Greece). When Simonides stepped out for a few minutes to answer a message, the roof of the banquet hall collapsed, crushing everyone inside. Friends of the deceased wanted to give them a respectful burial, but couldn’t tell any of the bodies apart. Simonides realized that he could identify each one by picturing who had been sitting in each place at the table. From this event, he inferred that picturing pieces of information in specific physical locations could be used as an aid to recall. This technique is called the method of loci, or the memory palace. It’s used today by real-world competitive memorizers, and by the fictional cannibal Hannibal Lecter.

Given how long humans ranged the earth before developing writing, the power of spatial memory makes sense. For millennia, survival depended on remembering the location of fresh water, shelter, or a herd of tasty mammoths. Now we have Yelp. And if you spend a lot of time online, like I do, you might find that your memory seems to deteriorate. I think these things are related.

This phenomenon explains why it’s more common to speak about navigating websites than requesting information. A spatial metaphor makes information easier to retain and process. Without a spatial reference, it’s easy to get lost. If you can use language to create a vivid map in the mind of the user, you can instill confidence and provide ease of use. Even better if your map fits into a pre-existing mental picture and requires no learning.

Navigation as orientation

People glean information from a variety of contextual cues. If you see someone standing behind a bar washing glasses, you can reasonably expect to order a drink from them. If you see someone confidently walking down a city street, you might consider asking them for directions.

According to James Kalbach’s Designing Web Navigation, structural navigation—the navigation that reflects how an information space is organized—helps users in these ways:

- Expectation setting: “Will I find what I need here?”

- Orientation: “Where am I in this site?”

- Topic switching: “I want to start over.”

- Reminding: “My session got interrupted. What was I doing?”

- Boundaries: “What is the scope of this site?”

In a website, navigation labels present the major categories of functionality. They should be as straightforward as possible, as in Mint’s labels: phrases like “Budgets,” “Goals,” and “Ways to Save” are clear and direct (Fig 3.9).

Navigation is not the place to try out novel concepts. A cautionary example comes from Hotwired, the first commercial web magazine, launched in 1994 (Fig 3.10). I was alive then, and I remember the site, but I don’t remember what any of those labels referred to (“Renaissance 2.0,” anyone?). The editors quickly changed them, but the trend of so-called “mystery meat” navigation continued throughout the late 90s. Imagine a highway sign saying, “Crude & Nom-Noms” instead of “Gas & Food.”

According to researcher Peter Pirolli, author of Information Foraging Theory, people interacting with information systems are doing a continuous cost-benefit analysis, just like animals on the hunt. Everyone wants to know as quickly as possible whether they’re on a path to success. And that’s exactly what successful navigation does. Surprises and mysteries aren’t fun, unless you’re writing a thriller!

Context and cues

According to Gabriel Radvansky, a psychology professor at the University of Notre Dame, “entering or exiting through a doorway serves as an ‘event boundary’ in the mind, which separates episodes of activity and files them away.” The same thing happens with information spaces.

As household objects become “smarter,” new modes of interaction emerge, and visual cues diverge or disappear, it’s critical to rethink how to orient every customer in the information space. Encouraging discovery and habitual use is challenging enough with the standard menu-and-button GUI, but there, at least, is an established practice of providing suggestions and recommendations. With voice and messaging interfaces that are intended to feel more conversational, there are missed opportunities to offer a helpful aside.

You need to start with a set of concepts that are familiar and meaningful. People switch between multiple systems, so it’s much better to ask how we can orient a service around the user’s intention, rather than ask users to reorient themselves as they move from their smartphone to their smart fridge. This is a hard problem. Amazon hasn’t solved it, even though people who buy the Echo speaker are likely to be familiar with the Amazon.com ecosystem.

According to a 2016 study by Experian, over 80% of people surveyed reported using the Echo to play a song or set a timer, and there was a sharp drop-off in use after that. Only 32% of users reported using the Echo to buy something on Amazon Prime (http://bkaprt.com/cd/03-01/). There’s nothing about interacting with Alexa that cues a user to the full range of possibilities or links one task to the next. Without prompts to interact, customers must be highly motivated to seek out new topics and activities. The Amazon.com recommendation engine is core to the company’s success, so it’s surprising that Alexa has no suggestions to offer. This may change with time and usage data.

Fundamentally, this is a pitfall of retaining a device-centered perspective on design. Rather than asking, “How do we get our customers to make more purchases using the Amazon Echo?” the better question is, “How can we make the presence of an Amazon Echo in the home provide more value to both Amazon and the customer?” and, “Where do we need to introduce cues and prompts to do that?”

If the designer is clear on the value that the system is providing and the context of use, it’s possible to identify the best times in the interaction flow to provide additional orientation cues, but possibly in a different mode. As Laura Klein writes in Design for Voice Interfaces, “When you think about it, some pieces of information are really easy to say, but they are hard to type, and vice versa. The same goes for output.” Maybe when a talking speaker wants to show you the way, the smartphone screen in your hand is the sign.

Choices are work

Navigation represents choices, choices are decisions, and decisions are work. Hick’s Law states roughly that the more choices a person faces, the more time it will take to make a choice. This principle is named after the British psychologist William Edmund Hick, who created a formula that models reaction time. Offering a quick interaction is one of the most important principles of a conversational design. As with Grice’s maxims of Quantity and Manner, the most humane thing to do is the work of determining the smallest number of the most appropriate choices to help the customer reach their goal.
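For readers who want to see the shape of that formula, here is a minimal sketch of the commonly cited form of Hick’s Law, T = b · log2(n + 1), for n equally likely choices. The function name is hypothetical, and the coefficient `b` is an empirical constant that has to be measured for a given person and task. The default of 1.0 is a placeholder, so the results below are relative units, not seconds.

```python
import math


def hick_decision_time(n_choices, b=1.0):
    """Estimate decision time for n equally likely choices: T = b * log2(n + 1).

    b is an empirically fitted constant. The default is a placeholder, not data.
    """
    if n_choices < 0:
        raise ValueError("n_choices must be non-negative")
    return b * math.log2(n_choices + 1)


if __name__ == "__main__":
    for n in (1, 3, 7, 15):
        print(n, hick_decision_time(n))
```

Because the relationship is logarithmic, going from 3 options to 7 adds the same increment as going from 7 to 15. Every added choice still costs something, which is the practical point: trimming a menu pays off, and the gain is largest when the menu is already short.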

Of course, we don’t want to sacrifice accuracy. So, the key is to offer the right choices at the right time. Google Search offers the ability to narrow results after the search is conducted, rather than making the user choose up front (Fig 3.11).

The order in which a system offers choices is as critical as the number of choices it offers. You need to offer the right number of the right choices at each step to help users make easy decisions and avoid mistakes. The ideal is something like the opposite of an automated phone tree in which the customer proceeds through a series of multiple-choice audio menus that seem unpredictable, banal and interminable. Offer the most consequential or meaningful choices first. For example, booking travel starts with flight, hotel, or car, before getting into destination or dates. Ordering food often begins with the style of cuisine. Solve for the smallest possible number of choices that will satisfy customer intent.

The wrong lesson to take from Google Search is that the open search field represents an ideal way to navigate. An open input—or an open question—represents a nearly infinite set of choices. This is only appropriate when the system offers a large set of potential responses relative to the set of possible requests. Menus may not seem conversational, but they’re faster and friendlier than the potentially endless alternative of bad guesses.

Orienting customers in a conversational way means impressing each new mind with the full range of possibilities and then eliminating nearly all of them. This might seem contradictory. In truth, no one with a goal in mind wants to know the full range of possibilities. That’s paralyzing. They just want to know if they can accomplish the thing they have in mind at that moment. Offering the right choices at the right time is the crux.

Seamless, the food delivery service, begins by asking where you live. In this context, it doesn’t matter which restaurant is best overall if it doesn’t deliver to you. Once the system knows where you live, it asks, “What would you like?” And the arguments with your housemates can begin.

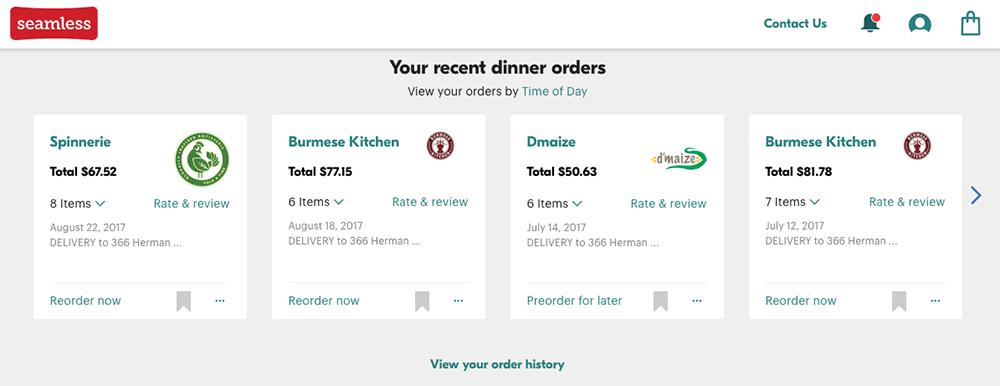

Like a helpful serviceperson, a system that is capable of remembering past behavior can offer appropriate and timely choices based on that information. For returning customers, Seamless offers the option of reordering previous orders (Fig 3.12). This suggestion doesn’t block finding or choosing a new restaurant, but it’s a tremendous convenience for those too tired after a long workday to decide what to have for dinner.

Intelligent digital systems may start making more sophisticated decisions on behalf of their customers, but predicting behavior and preferences is still a tricky business. No one wants to believe they’re that predictable. Systems will need to provide at least the illusion that the human is still in control.

Action: what can I do?

I live on Earth at present, and I don’t know what I am. I know that I am not a category. I am not a thing—a noun. I seem to be a verb, an evolutionary process—an integral function of the universe.

—Buckminster Fuller, I Seem to Be A Verb

Reserve a table. Place your order. Tweet. Comment. Pay rent. Kill time. The most fundamental design decision is what your product or service allows your customers to do. Trivial or momentous, the verbs are the point of the conversation, a part (or the whole) of the goal.

Verbs give your system the opportunity to augment the animal joy of physical motion with playfulness, or the exhilarating sense of command (made all the more exhilarating because you no longer need to get off the sofa to order dinner). An action could be anything you can imagine and make happen. This is the heart of interaction design.

With a trivial physical act—a single click, tap, swipe, or utterance—a user can issue an order, triggering significant events across time and space. The actions a system offers allow users to change something in the world, or in their own mental state or that of another.

The hardest work an interface does is translating human actions into machine actions and vice versa. Humans act based on habit, hope, feelings, fears, and sometimes goals, using a mental model patched together from a lifetime of associations. Machines act based on rules and depend upon precision. To ensure a system delivers on the expectations it sets, we often need to break up a single customer action into its component parts and build it back up again piece by piece.

Actions must be goal-oriented, moving the customer closer to their objective. The actions must be context-aware, reflecting the customer’s state (in a hurry, using a mobile device) as well as the state of the system (signed-in). Each discrete action must provide feedback. The user needs to know whether their action succeeded or failed and what to do next. The implications of the action must be clear and apparent. As mentioned earlier, truth in interaction is matching expectations. This is the path to trust and credibility.

As in conversation, the sequence of actions should feel cooperative. An unexpected order creates unnecessary work for the user. It’s every designer’s duty to do the hard work to make the customer’s interaction easy.

The better the system matches the customer’s mindset and guides action, the more mistake-proof it will be. Of course, things will go wrong. As we know from conversation among humans, it’s impossible to prevent all misunderstandings and it’s easier to recover in an environment of trust. All actions must be reversible or provide very clear warning when they represent commitments. Any interface for action that omits or misrepresents the full consequences to the user is failing to be truthful.

And since correcting course and getting back on track also requires a set of actions, the same principles apply to helping a user get out of a fix. The goal is to help users recover quickly and clearly while retaining confidence that the system is still on their side.

The context of action

To support the success of the user’s action, the system needs to implicitly or explicitly communicate the following in an efficient and context-aware manner:

- Prerequisites to action. What does the user need to do before the action is possible? (The user should only be presented with actions they can actually take.)

- Encouragement to action. How does the system articulate the benefits of taking the action?

- Instructions for action. Is it as simple as clicking Submit, or are we going down a complex path?

- Consequences of action. Set expectations of what will happen once the action is taken.

- Level of commitment. Is it possible to undo this action?
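One way to keep this checklist honest during design is to treat it as a data structure that every action has to fill in. The sketch below is an assumption-laden illustration: the `ActionContext` class, its field names, and the sample subscription wording are all hypothetical and are not taken from any real product.

```python
from dataclasses import dataclass


@dataclass
class ActionContext:
    label: str               # the verb phrase shown on the control
    prerequisites_met: bool  # can the user actually take this action now?
    benefit: str             # encouragement to action
    instructions: str        # what the user needs to do
    consequence: str         # what will happen once the action is taken
    reversible: bool         # level of commitment


def describe_action(ctx):
    """Return the prompt lines for an action, or an empty list if it is unavailable."""
    if not ctx.prerequisites_met:
        # Only present actions the user can actually take.
        return []
    commitment = "You can undo this later." if ctx.reversible else "This cannot be undone."
    return [f"{ctx.label}: {ctx.benefit}", ctx.instructions, ctx.consequence, commitment]


if __name__ == "__main__":
    trial = ActionContext(
        label="Start free trial",
        prerequisites_met=True,
        benefit="Watch the full series tonight.",
        instructions="Select Start to begin.",
        consequence="You will be billed monthly after the trial ends.",
        reversible=True,
    )
    for line in describe_action(trial):
        print(line)
```

If a field is hard to fill in, that is usually a sign the action itself is underspecified, and the customer will feel the same uncertainty.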

As an omnichannel platform for separating people from their money, Amazon offers good examples of ways to support action through clear, conversational language. The interaction for watching a sitcom that requires a premium HBO subscription is a study in doing things right (Fig 3.13). Amazon offers precisely the right amount of information about the benefits and consequences of completing the action.

The danger of ambiguity

Actions have consequences. It’s up to the phrasing of the action to communicate these clearly—the last thing you want to do is make your customers guess at what will happen. Ambiguity decreases trust and increases cognitive overhead—that means creating more anxiety and more work for users.

A trivial example from a European ATM demonstrates how a polite-sounding word, “especially,” can introduce uncertainty into the interaction (Fig 3.14). It’s trivial because I trust the machine to dispense the correct amount. It’s unsettling because why offer a soft preference like “especially”? By pulling the customer out of their flow, this sort of detail introduces anxiety and steals time. Even if this is the result of a mistranslation, it’s a choice with implications.

Fig 3.14: This European ATM offers options, but introduces unnecessary ambiguity into the interaction. The word “especially” is either unnecessary or misleading.

Even in a system with nonverbal interface cues or a graphical interface, the choice of action verbs and phrases is the design choice (Fig 3.15). Even if you’re representing an action with an icon, you must be clear about the command that the icon represents. The phrase defines the action. And no amount of styling can overcome misleading wording. Each action has nuanced implications, as well as conventional usage.

The power of choice

As with choices of paths through the information, systems offer a choice of actions to take. Choices have not only a cognitive dimension but an ethical one too. This is the moment when ease of use, business goals, and honoring the customer’s true goals hang in the balance. Any single choice may be minor, but choices add up. A system working in true interactive cooperation with a user will not lead them down a bad path.

Former Google Design Ethicist Tristan Harris points out that offering a limited set of choices can create the illusion of greater freedom while actually manipulating and hijacking the mind of the customer. The given choices may distract from true needs. As he says in “How Technology Hijacks People’s Minds”:

When people are given a menu of choices, they rarely ask:

- “what’s not on the menu?”

- “why am I being given these options and not others?”

- “do I know the menu provider’s goals?”

- “is this menu empowering for my original need, or are the choices actually a distraction?”

Consider Facebook. Facebook mediates billions of relationships around the world: between friends, family, romantic partners, businesses and their customers. No matter the type of relationship or the type of message, Facebook offers a single primary reaction—“Like” (Love, Haha, Wow, Sad, and Angry are hidden, but appear on hover to offer an extended range of one-dimensional reactions).

Some posts are simply likeable, but what about the messages and connections that deserve more than a snap reaction (Fig 3.16)? As Tristan Harris posits, these options diminish and obscure the full range of human emotion. There’s social pressure to Like, and social acceptance in Liking. These options are so convenient, it’s easy to make the argument that Facebook has hijacked how people think about and negotiate relationships.

Fig 3.16: This is an easy post to Like, but what about topics that don’t reduce to bland approval or emoji outrage?

Action labels define the range of human agency within any particular system. The more people rely on a given system, the more consequential these choices become. This consideration adds an additional dimension to the maxim of Truthfulness. It’s up to your goals and your conscience to what extent you recognize a duty to minimize distraction from the customer’s higher-order life goals. The ultimate challenge for designers is to create a system in balance, one that’s transparent about the business goals it represents, while encouraging the user to take those actions that provide value to both the business and the customer.

The feedback loop

Every complex system starts out as a simple system that works — and the simplest coherent system is a feedback loop.

Habits are cognitive shortcuts that free our overtaxed brains to think about other things even when we’re doing complex tasks, like driving a car. At first, driving a car requires a lot of intense attention. Over time, drivers can steer, accelerate, brake, and check the mirrors without thinking. And—for better or worse—a habitual commuter can get home almost without noticing.

Anyone designing anything for humans needs to consider the point at which a behavior becomes a routine. The traditional dogma of user-centered design is that designers create systems to help people achieve goals by accomplishing tasks. A basic standard for an interactive system is usability—how easy something is to learn and use. Nothing that requires conscious thought will ever be as usable as something that’s used out of habit. Habits are hard to compete with, and habits are often entirely unhinged from goals.

A habit is a thing deep in the brain, a feedback loop that forms through repetition. In The Power of Habit: Why We Do What We Do in Life and Business, Charles Duhigg popularized the work of MIT researchers to understand the neurological basis of habit. There’s a cue—the trigger that sends someone down a habit path—a routine, and a reward. Over time, and through repetition, our brain comes to associate cues with rewards, reinforcing habits.

Game designer Amy Jo Kim expresses the idea of the habit loop as a type of learning loop, and encourages product designers to consider it in their work. In a learning loop, feedback from the system helps the user get better at interacting with the system over time. This growing mastery is its own powerful reward (Fig 3.17).

For example, the core action on Facebook is posting updates. The system gives you feedback in terms of Likes from your friends. Getting more reactions from more people feels like getting better at Facebook, and drives further interaction, whether or not interacting through Facebook has anything to do with any higher-order goal.

When designers talk about “delight,” they often mean some unexpected, pleasurable sensation generated from interacting with a product or service. The aspiration is that this delight will be part of the habit-reinforcing reward. It’s easy to mess this up by underestimating the amount of cognitive effort an unfamiliar system requires, and by overestimating how pleasurable the reward is. The result can be an unnecessarily novel interface that’s hard to learn and leaves the user frustrated rather than delighted. Or an interaction that’s only delightful the first time, then increasingly grating through repetition—like your barista telling a knock-knock joke.

Guidance: the system wants you to succeed

In an ideal world, every exchange would go as smoothly as the perfect dinner party and every offered action would be self-explanatory, but the world is more complicated than that. Everyone has different skills and expertise, and many of the systems we’re designing truly do allow their users to accomplish new things in new ways.

Even a well-designed object or system may need to explain itself to help customers succeed every step of the way. Avoiding verbosity may seem more refined, but the confusion is likely to elicit some light profanity in response. A friend of mine photographed this water dispenser at his office. The dispenser required a little aftermarket guidance to facilitate success (Fig 3.18). While this object was designed to exist in mute simplicity, some kind person gave it the power of language to explain itself.

Opportunities to provide guidance include:

- Sales. Helping the customer understand whether a product or service will solve their problem or help them meet their goal in advance of any commitment.

- Instructions. Any additional information provided in context of the interaction, like stage directions. This general category includes hints and onboarding.

- Contingency messages. Letting the customer know something outside the expected flow has happened and getting them back on track. Includes errors.

- Notifications. Interruptions that occur outside the context of interaction.

- Documentation. Reference materials provided for study outside of interacting.

Fig 3.18: Workplace kitchens are a fruitful location for conducting interface design field studies.

In person-to-person interactions, we talk about offering a high level of service, anticipating needs and providing information at just the right time. Often, the best service is nearly invisible, like a server who manages to keep your drink topped-up without you noticing, or a bank teller who offers a pen along with the paperwork you need to fill out. In interaction design, offering just what’s needed for the task at hand is called progressive disclosure. The Quartz app does a nice job of this (Fig 3.19), offering a summary of the story that links to the complete article, and an emoji blurb that provides the option to see more detail.

Affordances and clearly labeled actions are not always enough, and that’s okay. Often interfaces end up worse off because designers think that a button label or an icon should do all the work. Combining an unambiguous action with some additional guidance is the best way to support both habitual customers and those who are new to the system or use it infrequently.

In designing a high level of service-oriented guidance into interactions, there’s one thing to keep in mind above all: computers are good at storing and recalling information and people are not. As I’ve mentioned earlier, computers should do all the information storage and retrieval in the relationship. If at any point a computer-based system relies on human memory to function, that system has failed its human. In this regard, password-based authentication is the web’s most epic failure. This makes password recovery the most “popular” feature of any secure system. Bank of America makes their customers work hard to recover passwords (Fig 3.20). I suspect the designer didn’t read these instructions out loud.

While you should seek every opportunity to use stored information to benefit customers, also do your best not to creep people out. This is something Facebook has trouble with (Fig 3.21). Facebook tracks interpersonal interactions across billions of relationships, along with all the associated data users add to the system. By referring to themselves as a “we” that has concerns about “you,” Facebook destroys the comforting illusion that it’s just a neutral utility.

Notifications

It used to be that you had to interact with a system through its interface to exchange information with it. Now, thanks to push notifications, a system can get your attention even if you’re not currently using it.

Notifications do serve a purpose. In theory, they allow a system to continue to serve a customer without requiring continuous attention. In practice, they’re easy to abuse. The desire for engagement can cloud judgment about what sorts of interruptions actually benefit the customer. The disruption can snowball, especially if you have relationships with multiple systems, or the same system across multiple devices. The proliferation of notifications can lead to constant interruption, which is the opposite of feeling served and empowered by technology.

In general, notifications should require affirmative consent from the customer. Therefore, you need to establish trust and offer value before prompting your customer to receive notifications. It’s particularly important to approach notifications from a place of politeness, and have a holistic strategy across all messaging types and channels that the system uses to convey information.

The characteristics of helpful notifications:

- They’re well-timed. In the old days, the worst notification was a phone call during dinner. Notifications should arrive at the right time for the customer to respond. Unless the notification is for an early morning alarm, 3 am is unlikely to be that time.

- They’re concise and clear. This goes for all communication, especially for alerts requiring action.

- They’re personalized and relevant to the customer. Unless the notification is truly an emergency, use other forms of communication for general messages.

- They deliver value and enable action. Notifications should only be used to alert the customer to something they need to take action on. Creating a sense of urgency when there’s no possible action just creates anxiety. No one needs more anxiety.

- They generate interest and reward trust. Every notification is a potential enticement to shut off all notifications. Don’t be that.

Google’s Material Design Guidelines are written for Android app designers, but their notification guidelines are broadly applicable (http://bkaprt.com/cd/03-02/). They say that notifications are appropriate for:

- communication from another person through your system, assuming it’s a person your customer is likely to want to hear from;

- action that helps your customer meet a goal or have a better quality of life, such as getting to a meeting on time, or avoiding rushing to make a flight that’s been delayed; and

- a system state change that suggests or requires action.

In summary, notifications are appropriate only when it’s likely the customer will welcome the interruption and have the ability to take action in response, such as acknowledging a calendar event or shutting off an alarm.

Notifications are not appropriate for:

- Advertising. Unless the customer has opted in specifically to promotional messages, avoid interrupting them with a commercial.

- Messages with no customer value. Be very clear about the value of the service you’re providing, or don’t create a notification.

- Situations in which there is no action. If there’s nothing for the customer to act on, there’s no reason to interrupt them.
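For teams that want to hold themselves to these rules in software, the criteria above can be sketched as a simple gate that runs before any notification goes out. This is a minimal illustration in Python, not a real notification API: the `Notification` fields and the `should_notify` function are hypothetical names invented for this example, and a real system would need its own definitions of consent and value.

```python
from dataclasses import dataclass


@dataclass
class Notification:
    # All fields are hypothetical; a real system would define its own.
    has_customer_consent: bool    # customer agreed to receive notifications
    is_promotional: bool          # advertising or marketing content
    opted_in_to_promotions: bool  # customer specifically asked for promotions
    has_customer_value: bool      # the message benefits the customer
    has_available_action: bool    # there is something the customer can do


def should_notify(n: Notification) -> bool:
    """Return True only if the interruption is likely to be welcome."""
    if not n.has_customer_consent:
        return False  # no affirmative consent, no interruption
    if n.is_promotional and not n.opted_in_to_promotions:
        return False  # advertising needs its own opt-in
    if not n.has_customer_value:
        return False  # nothing in it for the customer
    return n.has_available_action  # nothing to act on means no notification
```

The point of the sketch is the order of the questions: consent comes first, and the ability to act comes last, so a message that fails any one check never reaches the customer as an interruption.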

In 2014, Skype demonstrated a high degree of context awareness by introducing the concept of an “active endpoint.” If a Skype user is signed into multiple devices, say a laptop, tablet, and a smartphone, but they’re only sending chat messages from the phone, Skype chat notifications will only go to the phone. All the other devices will remain silent, at least with respect to Skype.
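The active endpoint idea can be sketched in a few lines of Python. This is not Skype’s implementation, which I have no visibility into. The function name `pick_endpoints`, the five-minute window, and the fallback of notifying every device when none is active are all assumptions made for illustration.

```python
from typing import Dict, List

# Assumption: "active" means the customer sent a message in the last 5 minutes.
ACTIVE_WINDOW_SECONDS = 300


def pick_endpoints(last_activity: Dict[str, float], now: float) -> List[str]:
    """Choose which signed-in devices should receive a notification.

    last_activity maps a device name to the time (in seconds) the customer
    last sent a message from that device.
    """
    if not last_activity:
        return []
    # Find the device the customer used most recently.
    device, seen = max(last_activity.items(), key=lambda item: item[1])
    if now - seen <= ACTIVE_WINDOW_SECONDS:
        return [device]  # only the active endpoint is interrupted
    # Assumption: nobody is active anywhere, so fall back to every device.
    return sorted(last_activity)
```

With a laptop, tablet, and phone signed in, and the phone used moments ago, only the phone would be chosen. Once the phone goes quiet for longer than the window, the sketch falls back to notifying all three.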

Onboarding

The onboarding process is how you help a new (or long-lost) customer feel at ease, in control, and productive. The term comes from the common human resources practice of new-hire orientation. Just dropping someone cold into a new organization and leaving them to figure things out on their own is unkind. No one likes to feel ignorant or inept. It’s in the interest of an organization to help new staff members feel competent and supported. The same goes for new customers.

The amount and type of onboarding guidance your system needs depend on three things:

- How different the system is from others your customer may have used already

- The conceptual complexity of the system

- The amount of effort the customer needs to put in before getting something useful out

A well-designed system should draw on the existing mental models of potential customers and require little effort before payoff. However, if your system presents unfamiliar concepts—a new way to accomplish something or a way to accomplish something complex—you may need to design an experience specifically for new users. This onboarding experience should be integrated into the overall experience, and be as lightweight as possible—the opposite of a long, up-front tutorial. For example, Duolingo does a good job of helping users self-sort based on their expertise and desire to just get started (Fig 3.22).

To design effective onboarding, you need to know which customer actions will deliver the greatest value. And if you don’t know what constitutes value, you’d better do some user research before designing the system.

In general, the best onboarding is the least intrusive. Don’t focus on creating a delightful process that’s an experience in and of itself. Focus on getting the user to the value in a way that supports your business goals. Identify barriers to achieving value and what the customer needs to overcome them, whether it’s simple inline information, or hand-holding and encouragement throughout the process.

Accidents Happen

The designer shouldn’t think of a simple dichotomy between errors and correct behavior: rather, the entire interaction should be treated as a cooperative endeavor between person and machine, one in which misconceptions can arise on either side.

—Donald Norman, The Design of Everyday Things

We designers demand creativity of ourselves, but don’t anticipate it in the people we design for. That’s a failure of imagination. Expecting that people will behave “correctly” is the path to fragile interactions.

Humans are not precision systems. Our memories lapse, our fingers slip, and we do things in the wrong order. Screwing up is our birthright. Science fiction has enshrined in us a sense of superiority—some special human quality is often the proffered explanation for escape from an extraplanetary scrape—but it’s really our mistakes that define us.

How a machine responds to human error is the best test I can think of for its ability to mimic human intelligence—or at least to seem more human than cold, calculating machine. Precise calculations and dazzling displays of information retrieval are easy for machines, but supportive redirection? Not so much. Forgiveness may be divine, but it’s certainly not digital.

Humans are improvisational; machines run according to rules. From the perspective of a computer, what is human error? It’s an unanticipated input that leads to dead ends and unnecessary frustration (for the human, not the machine). Too many system responses, even in sophisticated interfaces, are simply variations on “Does not compute.”

Errors in human social interactions provide opportunities for the unexpected, both tragic and delightful. When humans make mistakes with each other, the most humane thing to do is to gently correct, redirect, and try to prevent the error from happening again in the future.

What if the problem is the system’s fault? Sometimes the human does everything right, but the machine makes an error and the system breaks. With some clever branding, this can be made to be charming, like the early Twitter fail whale (Fig 3.23), which turned a server outage into a cultural icon. What isn’t charming is being too glib if the system failure causes a serious setback, especially anything involving the loss of personal data. Imagine your doctor setting your medical records on fire and then just shrugging with an “Oops!” A sincere apology and a quick path to setting things right would be more appropriate.

Matt Jones, interaction designer and author, coined the phrase “be as smart as a puppy” (also affectionately referred to as BASAAP) to say designers should be “making smart things that don’t try to be too smart and fail, and indeed, by design, make endearing failures in their attempts to learn and improve. Like puppies” (http://bkaprt.com/cd/03-03/). This is a useful notion to consider in terms of setting expectations for how much intelligence you can expect from a system that’s learning from its user over time. However, people don’t typically depend upon puppies to help them accomplish important goals. Failure is not endearing to someone trying to book a flight or make a money transfer. Or trying to read a book.

The Amazon Kindle surprised me by letting me know I couldn’t read a book I had purchased sometime previously (Fig 3.24). This message presumes the customer knows how to deregister a device. There’s no path to action, just an acknowledgement of the roadblock.

Fig 3.24: This is a hostage situation. The user is offered no alternative but to acquiesce.

Do the poka-yoke

Poka-yoke is the Japanese term for mistake-proofing in manufacturing. Poka-yoke designs are most common in machines and devices that are dangerous if used incorrectly. For example, no microwave will start unless the door is shut.

The simplest poka-yoke in digital interaction is only allowing user input within boundaries. For example, by offering a menu of options rather than an open text input, you can mistake-proof an online form. It’s an excellent design principle to run through every possible scenario in which a customer might use a system as designed and yet end up harming themselves, like misspeaking or misspelling the names of their medications.
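When a menu isn’t practical and the customer has to type, the same boundary can be enforced after the fact. The sketch below, in Python, accepts only names from a known list and offers near misses for anything else. The medication list, the `check_medication` function, and the 0.8 similarity cutoff are hypothetical choices for illustration. The matching itself relies on `get_close_matches` from Python’s standard `difflib` module.

```python
from difflib import get_close_matches
from typing import List, Optional, Tuple

# Hypothetical list of options the system knows how to handle.
KNOWN_MEDICATIONS = ["acetaminophen", "amoxicillin", "ibuprofen"]


def check_medication(entry: str) -> Tuple[Optional[str], List[str]]:
    """Return (accepted_name, suggestions) for a typed medication name."""
    cleaned = entry.strip().lower()
    if cleaned in KNOWN_MEDICATIONS:
        return cleaned, []
    # No exact match: refuse the input, but offer near misses to pick from.
    return None, get_close_matches(cleaned, KNOWN_MEDICATIONS, n=3, cutoff=0.8)
```

A misspelling is never silently accepted. The customer either lands on a known option or is shown the closest ones and asked to choose, which keeps the mistake from traveling any further into the system.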

From a business relationship perspective, email is treacherous. Prevent your customer from looking like a careless idiot, and you earn their loyalty forever. Gmail offers a fantastic poka-yoke feature (Fig 3.25). If you try to send a message that contains a phrase such as “attached to this message” or “I’ve included” without attaching a file, a dialog box appears to alert you to your error. Now if only they could do something about the toxic waste spill that is reply-all!
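A check like this is simple to sketch. The Python below is an illustration of the idea, not Gmail’s actual logic: the phrase list and the function name `needs_attachment_warning` are assumptions, and a production version would need a much richer set of phrases and languages.

```python
import re

# Assumption: an illustrative phrase list, not the one Gmail actually uses.
ATTACHMENT_HINTS = re.compile(
    r"\b(attached|attachment|i've included|i have included|see enclosed)\b",
    re.IGNORECASE,
)


def needs_attachment_warning(body: str, attachment_count: int) -> bool:
    """Return True when the message mentions an attachment but has none."""
    if attachment_count > 0:
        return False
    # Treat curly apostrophes like straight ones before matching.
    normalized = body.replace("\u2019", "'")
    return ATTACHMENT_HINTS.search(normalized) is not None
```

The check runs at the moment of sending, when the mistake is still free to fix. A false alarm costs one extra click, while a missed attachment costs a follow-up email and a little dignity.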

Interaction design begins in the mind of the customer, which means error prevention starts in the first moments of creating the awareness that any given system exists. Make sure you start with the right concept, one that matches the users’ mental model. You cannot control user expectations and associations, so you must understand them and look beyond your product to see what creates them. Assume everyone is on autopilot all the time, and that they’re drawing unconscious inferences from the barest of cues. Asking for open-ended input will lead to the assumption that any answer is acceptable. A voice that sounds too much like a person will set expectations too high.

Our human brains are great at many things, but we often have as much trouble remembering as predicting. Never require users to retain information in their memories. Always ask, “Are you sure?” before dangerous or consequential actions. And warn people, gently, when they’re getting too close to the rails. Like elephants, machines have excellent memories. Tax the robots, not the apes.

Context Makes the Conversation

As interconnected digital systems endeavor to offer more “natural” ways of interacting through voice and text, the limitations of these systems, combined with the context of their use, can make interacting with them something of a minefield. This leads to the sort of frustration intuitive interfaces are supposed to prevent. Being able to interact with a computer in the same way you text a friend or talk on the phone sets high expectations. Human and machine may be conversing, but they are not cooperating. For example, the H&M shopping assistant on the Kik messaging service creates a much more awkward interaction than simply browsing a website (Fig 3.26).

Natural language processing requires the computing power to analyze and interpret human speech or text in real time. There’s no room for ambiguity.

Before rushing to chat, consider whether it really will make life easier. Some of the drawbacks include:

- Lack of context awareness. The systems can’t pick up on contextual cues that might be available to a human, and probing for information would cross over into the uncanny valley. Siri or Alexa could ask, “What are you doing right now?” to become more context aware, but that would be creepy.

- Takes more time. The web has made self-service fast and satisfying for a wide variety of complex tasks. It’s easy to become habituated to speed. Talking through a task can feel agonizingly slow, much more so than clicking a button.

- Unpredictable. Even the most intelligent system is vastly more limited than a human. It can be impossible to predict what options are available or what input is acceptable.

- Not error-tolerant. Unexpected input can bring interactions to a halt with no path forward.

Fig 3.26: The H&M bot on Kik employs a hybrid chat/menu interface that eats up a lot of valuable screen space. The advantage over browsing images on a mobile website is unclear.

Now, Look Who’s Talking

While it can be helpful to break an individual’s interaction with a system into discrete moments for the sake of conceptual clarity in the design process, those moments are all intertwined. The same conversational principles apply across all moments in an experience, just with different emphases at different times. And all customers will have slightly different needs and preferences; some want to take action before really understanding who they’re dealing with, while others are more careful before diving in. Avoid making assumptions about what users want in favor of understanding real-world contexts.

A set of conversational principles that can be used in developing speaking interfaces is a good starting point, but it’s the personality that unifies the experience and brings a conversation to life. A personality is a set of stable characteristics, manifested in behavior patterns and situational responses. We tend to consider personality traits as constant and meaningful (regardless of fluctuations in mood). If your friend tells you about meeting a new person, you may ask, “What are they like?” and expect an answer in adjectives—energetic, withdrawn, friendly, creepy, talkative, or laid-back. Words that describe their personality help you understand what it’s like to interact with them.

Conversational principles are nothing without a unifying personality—the animating spirit. A distinctive, appropriate, consistent personality brings the principle of conversational design to life. The more sophisticated the interaction you can have with a system, the more personality plays a part. After all, a golden retriever has more personality than a goldfish. Let’s look at how language and behavior can demonstrate the personality of a product or service.