Preface

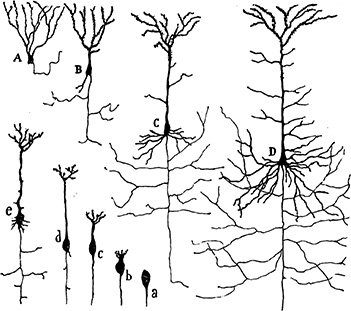

Commonly called brain cells, billions of interconnected neurons make up your nervous system, and they enable you to sense, to think, and to take action. By meticulously staining and examining thin slices of brain tissue, the Spanish physician Santiago Cajal (Figure P.1) was the first1 to identify neurons (Figure P.2), and in the first half of the twentieth century, researchers began to shed light on how these biological cells work. By the 1950s, scientists inspired by our developing understanding of the brain were experimenting with computer-based artificial neurons, linking these together to form artificial neural networks that loosely mimic the operation of their natural namesake.

1. Cajal, S.-R. (1894). Les Nouvelles Idées sur la Structure du Système Nerveux chez l’Homme et chez les Vertébrés. Paris: C. Reinwald & Compagnie.

Figure P.1 Santiago Cajal (1852–1934)

Figure P.2 A hand-drawn diagram from Cajal’s (1894) publication showing the growth of a neuron (a–e) and contrasting neurons from frog (A), lizard (B), rat (C), and human (D) samples

Armed with this brief history of neurons, we can define the term deep learning deceptively straightforwardly: Deep learning involves a network in which artificial neurons (typically thousands, millions, or many more of them) are stacked at least several layers deep. The artificial neurons in the first layer pass information to the second, the second to the third, and so on, until the final layer outputs some values. That said, as we literally illustrate throughout this book, this simple definition does not satisfactorily capture deep learning’s remarkable breadth of functionality or its extraordinary nuance.
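To make this layer-by-layer definition concrete, here is a minimal sketch in plain Python. It is not taken from the book's notebooks: the helper names (`dense_layer`, `init_layer`, `forward`), the sigmoid activation, the layer sizes, and the random weights are all illustrative assumptions. Each artificial neuron computes a weighted sum of the previous layer's outputs plus a bias and passes the result through an activation function. The outputs of one layer then become the inputs of the next, until the final layer emits its values.

```python
import math
import random

def sigmoid(z):
    # Squash any real number into the range (0, 1)
    return 1.0 / (1.0 + math.exp(-z))

def dense_layer(inputs, weights, biases):
    # Hypothetical helper: each neuron takes a weighted sum of ALL inputs,
    # adds its bias, and applies the activation function
    return [sigmoid(sum(w * x for w, x in zip(neuron_w, inputs)) + b)
            for neuron_w, b in zip(weights, biases)]

def init_layer(n_in, n_out, rng):
    # Hypothetical helper: random weights stand in for values a real
    # network would learn from data during training
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def forward(x, layers):
    # Pass information from the first layer to the second, the second
    # to the third, and so on, returning the final layer's outputs
    activations = x
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations

rng = random.Random(42)
sizes = [4, 8, 8, 8, 2]  # input width, three hidden layers, two output neurons
layers = [init_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes[:-1], sizes[1:])]
output = forward([0.5, -1.2, 3.3, 0.0], layers)
print(output)  # two values, each between 0 and 1
```

Deep learning libraries express this same flow with vectorized matrix operations. They also adjust the weights through training rather than leaving them random, which is where much of the nuance mentioned above comes in.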

As we detail in Chapter 1, with the advent of sufficiently inexpensive computing power, sufficiently large datasets, and a handful of landmark theoretical advances, the first wave of the deep learning tsunami to hit the proverbial shore was a standout performance in a leading machine vision competition in 2012. Academics and technologists took note, and in the action-packed years since, deep learning has facilitated countless now-everyday applications. From Tesla’s Autopilot to the voice recognition of Amazon’s Alexa, from real-time translation between languages to its integration in hundreds of Google products, deep learning has improved the accuracy of a great number of computational tasks from 95 percent to 99 percent or better—the tricky few percent that can make an automated service feel as though it works by magic. Although the concrete, interactive code examples throughout this book will dispel this apparent wizardry, deep learning has indeed imbued machines with superhuman capability on complex tasks as diverse as face recognition, text summarization, and elaborate board games.2 Given these prominent advances, it is unsurprising that “deep learning” has become synonymous with “artificial intelligence” in the popular press, the workplace, and the home.

2. See bit.ly/aiindex18 for a review of machine performance relative to humans.

These are exciting times because, as you’ll discover over the course of this book, perhaps only once in a lifetime does a single concept disrupt so widely in such a short period of time. We are delighted that you too have developed an interest in deep learning, and we can’t wait to share our enthusiasm for this unprecedentedly transformative technique with you.

How to Read This Book

This book is split into four parts. Part I, “Introducing Deep Learning,” is well suited to any interested reader. This part serves as a high-level overview that establishes what deep learning is, how it evolved to be ubiquitous, and how it is related to concepts like AI, machine learning, and reinforcement learning. Replete with vivid bespoke illustrations, straightforward analogies, and character-focused narratives, Part I should be illuminating for anyone, including individuals with no software programming experience.

In contrast, Parts II through IV are intended for software developers, data scientists, researchers, analysts, and others who would like to learn how to apply deep learning techniques in their field. In these parts of the book, essential underlying theory is covered in a manner that minimizes mathematical formulas, relying instead on intuitive visuals and hands-on examples in Python. Alongside this theory, working code run-throughs available in accompanying Jupyter notebooks3 facilitate a pragmatic understanding of the principal families of deep learning approaches and applications: machine vision (Chapter 10), natural language processing (Chapter 11), image generation (Chapter 12), and game playing (Chapter 13). For clarity, wherever we refer to code, we will provide it in fixed-width font, like this. For further readability, in code chunks we also include the default Jupyter styling (e.g., numbers in green and strings in red).

3. github.com/the-deep-learners/deep-learning-illustrated

If you find yourself yearning for more detailed explanations of the mathematical and statistical foundations of deep learning than we offer in this book, our two favorite options for further study are:

Michael Nielsen’s e-book Neural Networks and Deep Learning,4 which is short, makes use of fun interactive applets to demonstrate concepts, and uses mathematical notation similar to ours

Ian Goodfellow (introduced in Chapter 3), Yoshua Bengio (Figure 1.10), and Aaron Courville’s book Deep Learning,5 which comprehensively covers the math that underlies neural network techniques

4. Nielsen, M. (2015). Neural Networks and Deep Learning. Determination Press. Available for free at: neuralnetworksanddeeplearning.com

5. Goodfellow, I., et al. (2016). Deep Learning. MIT Press. Available for free at: deeplearningbook.org

Scattered throughout this book, you will find amiable trilobites that would like to provide you with tidbits of unessential reading that they think you may find interesting or helpful. The reading trilobite (as in Figure P.3) is a bookworm who enjoys expanding your knowledge. The trilobite calling for your attention, meanwhile (as in Figure P.4), has noticed a passage of text that may be problematic, and so would like to clarify the situation. In addition to the trilobites inhabiting sidebars, we have made liberal use of footnotes. These are likewise not essential reading but provide quick explanations of new terms and abbreviations, as well as citations of seminal papers and other references for you to follow up with if you’re so inclined.

Figure P.3 The reading trilobite enjoys expanding your knowledge.

Figure P.4 This trilobite calls attention to tricky passages of text. Look out for it!

For much of this book’s content, corresponding video tutorials are also available. Although the book provided us with an opportunity to flesh out theoretical concepts more thoroughly, the videos enable you to become familiar with our Jupyter notebooks from a different perspective, in which the importance of each line of code is described verbally as it is typed out.6 The video tutorial series is spread across three titles, each of which parallels particular chapters of the book:

6. Many of the Jupyter notebooks covered in this book are derived directly from the videos, which were all recorded prior to writing. In some places, we decided to update the code for the book, so while the video version and the book version of a given code notebook align quite closely, they may not always be strictly identical.

Deep Learning with TensorFlow LiveLessons:7 Chapter 1 and Chapters 5 through 10

Deep Learning for Natural Language Processing LiveLessons:8 Chapters 2 and 11

Deep Reinforcement Learning and GANs LiveLessons:9 Chapters 3, 4, 12, and 13

7. Krohn, J. (2017). Deep Learning with TensorFlow LiveLessons: Applications of Deep Neural Networks to Machine Learning Tasks (video course). Boston: Addison-Wesley.

8. Krohn, J. (2017). Deep Learning for Natural Language Processing LiveLessons: Applications of Deep Neural Networks to Machine Learning Tasks (video course). Boston: Addison-Wesley.

9. Krohn, J. (2018). Deep Reinforcement Learning and GANs LiveLessons: Advanced Topics in Deep Learning (video course). Boston: Addison-Wesley.

Register your copy of Deep Learning Illustrated on the InformIT site for convenient access to updates and corrections as they become available. To start the registration process, go to informit.com/register and log in or create an account. Enter the product ISBN (9780135116692) and click Submit. Look on the Registered Products tab for an Access Bonus Content link next to this product, and follow that link to access any available bonus materials. If you would like to be notified of exclusive offers on new editions and updates, please check the box to receive email from us.