Chapter 12

Sound Design

In this chapter we will be exploring how sound plays an essential role in creating an immersive experience. From how sound is created to how we can re-create it in a digital space, there are a lot of exciting things happening within 3D sound experiences. Here is what we will be covering:

HEARING WHAT YOU SEE It is important first to understand how we hear so that we can then look at the best ways to re-create sound realistically in a soundscape.

SPATIAL SOUND Just as in physical spaces, sound has direction and distance. Several technologies can help create a sense of 3D sound.

AUGMENTED AUDIO Just as we can add a layer of visuals to a user’s view, we can also add a layer of digital audio on top of what they hear.

VOICE EXPERIENCES With XR devices becoming more hands free, voice is becoming an intriguing way to interact with a computer.

Hearing what you see

Before you continue reading, I have a task for you. Find a place that you can sit comfortably for about five minutes, and bring a notebook and something to write with. It can be inside or outside—it really can be anywhere (FIGURE 12.1).

FIGURE 12.1 Listening. Woman sits in stillness listening. It is easier to actively listen when you are still and aware of where sounds are coming from.

Photographer: mimagephotography for Shutterstock

Close your eyes, and be still. Bring your awareness to listening. Try to avoid moving your head as you do this. Don’t turn your neck toward a sound; try to keep your neck at a similar orientation.

Listen for what you hear. See if you can identify what sounds you are hearing.

Then go one step further and try to identify where those sounds are coming from. Are they close? Far? Which direction are they coming from? Keeping yourself as the central axis, do you hear them in front of you? Behind you? To the left or right of you? Up high or down low?

When five minutes are up, draw out what you heard by placing a circle in the middle of the page to represent you, and then map out all the sounds that you heard around you in the locations you heard them from. If they felt close, write them closer to you, and in the same way, if they felt far away, then write them farther from you.

Okay, go off, give it a try, and then return so you can use your experience to provide context as we explore what you just experienced.

I realize this exercise could present some challenges for those with any temporary or permanent hearing impairments. However, if you are able to feel any sound vibrations, you could do the same exercise paying attention to those sensations instead. The goal is to pay attention to the experience of sound in your natural physical environment.

As you draw out your diagram of sound, start to pay attention to where you place each sound in relation to yourself. Also consider how you determine the source of the sound. This is called sound localization. It is impressive how well we can understand spatial and distance relationships just from sound. It is tempting to assume that louder sounds are closer and softer sounds are farther away; however, that isn’t always the case. When someone whispers into your ear, even very quietly, it can sound very loud. You can hear thunder from miles away, and yet it can also be quite loud.

Sound localization The ability of a listener to identify the origin of a sound based on distance and direction.

Part of the challenge is that sound isn’t static; it travels. This journey starts with the object that makes the sound but also includes where and how far the sound can travel before it hits a surface or is absorbed by an object. You can go outside to a wide-open space, yell “echo,” and hear nothing back. When you go into a tunnel or under a bridge, however, and yell “echo,” you hear a response. This is because the sound waves bounce off the flat, smooth surfaces and reflect back to you. Hard, flat surfaces reflect sound waves and keep them moving from one place to another, and the sound continues until the wave carrying it through the air loses its energy. Rooms with carpeting and softer materials have less echo than rooms with hard surfaces, because the soft materials absorb, or dampen, the sound, stopping the vibration of the particles. The environment of a room has a direct impact on the sound experience, which is why it is such an essential part of an immersive experience.

How do we hear sound?

Sound is created through the vibration of an object. The vibration causes particles in the surrounding medium to bump into one another, passing the vibration along as sound waves to our ears and, more specifically, to our eardrums. When a sound wave reaches the eardrum, the eardrum vibrates at the same rate. The cochlea, in the inner ear, then converts these vibrations into nerve signals, which travel to the brain along the auditory nerve. Sound requires a medium to travel through, such as air, water, or even metal. You may already understand this process, but as we look to design for sound, there are some key properties that are essential to understand, including loudness and pitch.

Because we can detect a wide range of sound, we need a way to measure the intensity of the sound. This is called loudness, which uses the unit of decibels (dB) to measure how loud or soft a sound is.

Loudness The intensity of a sound, measured in relation to the space that the sound travels.

To help you add some perspective to dB measurements:

A whisper is between 20 and 30 dB.

Normal speech is around 50 dB.

A vacuum cleaner is about 70 dB.

A lawn mower is about 90 dB.

A car horn is about 110 dB.

Note

The decibel scale is logarithmic, not linear: each increase of 10 dB represents a tenfold increase in sound intensity. Our lowest hearing threshold is 0 dB, and the threshold for sound to cause pain is about 120 dB.
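To see what “logarithmic” means in practice, here is a short Python sketch using the standard definition of sound intensity level, L = 10 × log10(I / I0), where I0 = 10⁻¹² W/m² is the reference intensity for 0 dB. The function names are illustrative, not from any audio library.

```python
import math

# Reference intensity at the threshold of hearing (0 dB), in watts per square meter.
I0 = 1e-12

def intensity_to_db(intensity_w_m2):
    """Convert a physical sound intensity (W/m^2) to a level in decibels."""
    return 10 * math.log10(intensity_w_m2 / I0)

def intensity_ratio(db_a, db_b):
    """How many times more intense sound A is than sound B."""
    return 10 ** ((db_a - db_b) / 10)

print(intensity_ratio(110, 50))  # car horn vs. normal speech: 1000000.0
print(intensity_to_db(1.0))      # 1 W/m^2 is about 120 dB, the threshold of pain
```

By this math, the car horn in the list above carries a million times the intensity of normal speech, even though the two numbers differ by only 60.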

Sound changes depending on how fast the object is vibrating: the faster the vibration, the higher the pitch. Pitch depends on frequency, or how many times the object vibrates per second. Humans can hear a wide range of pitches but cannot detect very low or very high sounds. Frequency measures the cycle rate of the physical waveform; pitch is how high or low it sounds when you hear it.

Pitch The perceived highness or lowness of a sound based on the frequency of vibration.

Frequency is measured in hertz (Hz), or cycles per second. Human hearing ranges from roughly 20 to 20,000 Hz. However, our hearing is most sensitive to sounds between 2000 and 5000 Hz. People who experience hearing loss often lose the higher pitches first.

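To re-create sound, we need to record a digital representation of it. Digital audio stores the sound wave as a sequence of samples, measurements of the signal taken many times per second. You can record at different sample rates (44,100 samples per second, or 44.1 kHz, is the standard for CDs) and store the result in different file formats. TABLE 12.1 breaks down the formats.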

Table 12.1 Audio File Formats

| UNCOMPRESSED AUDIO FORMATS | LOSSLESS COMPRESSED AUDIO FORMATS | LOSSY COMPRESSED AUDIO FORMATS |
|---|---|---|
| PCM | FLAC | MP3 |
| WAV | ALAC | AAC |
| AIFF | WMA Lossless | WMA |

How do you choose which format to use? The answer depends on what you’re working with.

Raw audio to be captured and edited: Uncompressed formats allow you to work with the highest quality file, and then you can compress the files to be smaller afterward.

Music and voice audio: Lossless compressed formats preserve the full audio quality while reducing file size, although the files are still larger than lossy ones.

Transferable and sharable audio: Lossy compressed formats discard some audio data to produce much smaller file sizes, which makes them easier to share.
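Why are uncompressed files so large? Their size is simple arithmetic: sample rate × bytes per sample × number of channels × seconds. The Python sketch below, using only the standard library’s `wave` and `struct` modules, computes that size and then writes one second of a 440 Hz tone as an uncompressed 16-bit PCM WAV file in memory. The function names and the choice of a sine tone are illustrative assumptions.

```python
import io
import math
import struct
import wave

def pcm_size_bytes(seconds, sample_rate=44_100, bit_depth=16, channels=2):
    """Size of raw (uncompressed) PCM audio: samples x bytes per sample x channels."""
    return int(seconds * sample_rate) * (bit_depth // 8) * channels

def sine_wav(freq_hz=440.0, seconds=1.0, sample_rate=44_100):
    """Render a mono 16-bit sine tone and wrap it in a WAV container, in memory."""
    n = int(seconds * sample_rate)
    frames = b"".join(
        # Half of full scale, packed as little-endian signed 16-bit integers.
        struct.pack("<h", int(32767 * 0.5 * math.sin(2 * math.pi * freq_hz * i / sample_rate)))
        for i in range(n)
    )
    buf = io.BytesIO()
    with wave.open(buf, "wb") as w:
        w.setnchannels(1)          # mono
        w.setsampwidth(2)          # 2 bytes = 16-bit samples
        w.setframerate(sample_rate)
        w.writeframes(frames)
    return buf.getvalue()

# One minute of CD-quality stereo: 44,100 x 2 bytes x 2 channels x 60 s
print(pcm_size_bytes(60))   # 10584000 bytes, about 10.6 MB
print(len(sine_wav()))      # 44-byte WAV header + 88,200 bytes of samples
```

About 10 MB per minute is why you would typically capture and edit in an uncompressed format, then export to a compressed one for delivery.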

How is sound useful?

Sound is much like a ripple in water; it starts at a central spot and spreads outward, getting smaller and smaller (or quieter and quieter) as it moves away from the center. Even if you hear a sound from far away, you can still detect where it is coming from, or at least its approximate direction. You can tell the difference between footsteps walking behind you and footsteps down another hallway. You can tell the difference between a crowded restaurant and an empty one from the lobby, all because of the sound cues of chatter. The more chatter you hear, the more people must be inside.
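The ripple analogy can be made concrete. In open space, sound intensity falls with the square of the distance (the inverse square law), so the level drops by 20 × log10(d / d_ref) decibels, roughly 6 dB each time the distance doubles. The Python sketch below assumes, for illustration only, that the lawn mower from the earlier list was measured at 90 dB from 1 meter away; real rooms with reflective surfaces will not follow this curve exactly.

```python
import math

def level_at_distance(level_db_at_ref, ref_distance_m, distance_m):
    """Estimate the sound level at a new distance using the inverse square law.

    Intensity falls with the square of distance, so the level drops by
    20 * log10(distance / reference distance) decibels. This assumes open
    space with no reflecting surfaces, unlike a tunnel or a tiled room.
    """
    return level_db_at_ref - 20 * math.log10(distance_m / ref_distance_m)

# Assumption: the ~90 dB lawn mower was measured 1 m away.
for d in (1, 2, 4, 8):
    print(f"{d} m: {level_at_distance(90, 1, d):.1f} dB")
```

Many 3D audio engines apply a distance rolloff along these lines so that virtual sources fade naturally as the user walks away from them.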

These sound cues help the user process information about a space both before and as they enter it. Sound adds an additional layer of information that will help the user further grow their understanding of what is going on around them.

We have discussed how light can be used to understand space and depth; sound can also be used to calculate distance and depth. SONAR (sound navigation and ranging) measures the time it takes for a sound pulse to travel to an object and for its echo to return. Because the speed of sound in the medium is known, that round-trip time reveals the distance. Boats and submarines use this idea to navigate at sea and to measure the depth of the ocean.
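The SONAR calculation is a single line of arithmetic: distance = speed of sound × round-trip time ÷ 2, halved because the pulse travels out and back. This Python sketch uses common approximations for the speed of sound (about 1,500 m/s in seawater and about 343 m/s in air at room temperature); the function name is illustrative.

```python
SPEED_OF_SOUND_SEAWATER = 1500.0  # meters per second, a common approximation
SPEED_OF_SOUND_AIR = 343.0        # meters per second, air at about 20 °C

def echo_distance(round_trip_seconds, speed=SPEED_OF_SOUND_SEAWATER):
    """Distance to a reflecting surface; the pulse travels out and back, so halve it."""
    return speed * round_trip_seconds / 2

print(echo_distance(2.0))                            # 1500.0 meters of water below the boat
print(echo_distance(0.5, speed=SPEED_OF_SOUND_AIR))  # 85.75 meters to the wall that echoed your shout
```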

How do we use sound in XR?

There are many ways that sounds play a role in our understanding of space. Within XR there are three main ways sound is used.

Ambient sound

Feedback sound

Spatial sound

Ambience for reality

In order to really create a sense of “being there,” sound adds another layer of realness. When you see a train approaching, that comes with the expectation of hearing the wheels on the tracks, the chugging sound of the engine, steam blowing, and the whistle, horn, or bell. These sounds add to your perception of the train approaching.

While a train has very specific noises that we associate with it, there can also be less obvious sounds that make an experience feel real. Within an environment, the layering of ambient sounds, or background noise, creates a sense of space and also a mood. If you are trying to give the illusion that the user is outside, then you may want to incorporate birds chirping and wind blowing into the sound track. These ambient sounds, though not too noticeable, will add a sense of realism to the experience. Just as you did with the listening exercise at the beginning of the chapter, it is important to really be aware of all the sounds you hear within a space. Notice the ambient sounds that allow the user to feel truly immersed. Listen for sounds that you can mimic to re-create the scene. Sounds that are noise intensive and have consistent looping, such as fans, wind, or waves, do not work as well in this medium, however, so you want to avoid them. Sounds that have a start and stop to them will be more effective and less intrusive.

When designing for AR and MR, you can rely more on the natural ambient noise that will be in the user’s physical space. For example, if you know that people will be experiencing something outside, then you can allow for the natural ambient noises that will be part of the experience. However, if there are specific sounds that people should hear, then you will want to include those in the experience design. Design for what you know. If you are creating a navigational experience that requires people to be outside to use it, then you can design it knowing that it will be used outside, with all the ambient sounds built in. Any other noises that you want people to hear on top of that will have to be designed in.

Providing feedback

For the user experience, sound can be a great way to provide feedback about how the user is interacting within space. Hearing a sound when you select an interactive element will reinforce that you have successfully activated it. These sounds can be very quiet, such as a click, or louder, such as a chime. Just be sure to use these sounds in a consistent way, so that the user will start to associate the sounds with their actions.

Sound cues can guide interactions. You can also use sound to direct the user to look or move to another location, to make sure they see an object that may not be in their gaze. It can also be used in VR to alert the user when they are close to the edge of their space boundaries.
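To keep feedback consistent, it helps to define each interaction’s sound in one place so the same action always produces the same sound. The Python sketch below is hypothetical: the event names, sound file names, and 0.5-meter warning radius are placeholders, not part of any XR toolkit. It pairs a fixed feedback mapping with a boundary warning whose volume rises as the user nears the edge of their play space.

```python
# Hypothetical event names and sound files; swap in whatever your engine uses.
FEEDBACK_SOUNDS = {
    "select": "click.wav",    # quiet confirmation for a selection
    "confirm": "chime.wav",   # louder cue for completing an action
    "error": "buzz.wav",      # distinct cue for an invalid action
}

def feedback_sound(event):
    """Always return the same sound for the same action, so users learn the mapping."""
    return FEEDBACK_SOUNDS[event]

def boundary_warning_gain(distance_to_edge_m, warn_radius_m=0.5):
    """Warning volume from 0.0 (far from the edge) rising linearly to 1.0 (at or past it)."""
    if distance_to_edge_m >= warn_radius_m:
        return 0.0
    return 1.0 - max(distance_to_edge_m, 0.0) / warn_radius_m

print(feedback_sound("select"))      # click.wav
print(boundary_warning_gain(0.25))   # 0.5: halfway into the warning zone
```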

Creating depth

Because our understanding of sound is 3D, it makes sense to re-create sound with depth as well. Depth also provides information to the user, such as how close or far away an object is. This topic is such an essential part of XR sound design that we are going to dive into how to give your sound depth next.

Spatial sound

To re-create sound in a spatial environment, look at two components.

How the sound is recorded

How the sound is played back through speakers or headphones

The traditional types of audio recordings are mono and stereo. Mono sound is recorded with a single microphone, while stereo is recorded with two microphones spaced apart. Stereo attempts to create a sense of space by having different sounds heard on the left and right sides of a recording; this is the kind of sound that laptops and phones can play back. The concept of 360-degree sound has been experimented with for years, with surround sound systems placing speakers all around a room so that sound can come from every direction. Surround sound is most common in cinemas, and it must be designed around audience members sitting in fixed locations.
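Even a single mono sound can be placed anywhere between the left and right speakers by splitting it into two channels at different volumes. One common way to do this is a constant-power pan law, sketched below in Python. This is one of several pan laws used in mixing; the function name and the -1 to 1 pan range are illustrative choices.

```python
import math

def constant_power_pan(sample, pan):
    """Place a mono sample in the stereo field.

    pan = -1.0 is hard left, 0.0 is center, 1.0 is hard right.
    Cosine and sine gains keep left^2 + right^2 constant, so the
    perceived loudness stays roughly even as a sound moves across.
    """
    angle = (pan + 1) * math.pi / 4   # map [-1, 1] onto [0, pi/2]
    return sample * math.cos(angle), sample * math.sin(angle)

left, right = constant_power_pan(1.0, 0.0)
print(round(left, 3), round(right, 3))  # 0.707 0.707 (centered)
```

A simple linear split (0.5 and 0.5 at center) would make centered sounds seem quieter than sounds panned to one side; the cosine and sine gains avoid that dip.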

Single-point audio capture

To help your stereo recordings sound even more natural, one option is a binaural audio recording format. To record binaurally, you record from two opposite sides and place each microphone inside a cavity that replicates the position and chamber of an ear. This approach re-creates sound as closely as possible to the way we hear it ourselves. Headphones are needed to listen to binaural sound accurately: because the sound was captured in-ear, the idea is to play it back in-ear too. People experiencing sound via VR are almost guaranteed to be wearing headphones or listening through the speakers built into the headset, so binaural recording is a good fit: It captures sound much the way people will experience it.

Binaural A method of recording two-channel sound that mimics the human ears by placing two microphones within a replicated ear chamber positioned in opposite locations to create a 3D sound.

Another prominent type of immersive sound uses ambisonics. This format does not rely on the user wearing headphones. Ambisonic audio uses four channels (W, X, Y, and Z) rather than the standard two. An ambisonic microphone is almost like four microphones in one, so you can think of this as two-channel (stereo) versus four-channel (ambisonic) sound. Ambisonic microphones have four capsules, each pointed in a different direction in a tetrahedral arrangement. Each capsule is recorded to its own channel (a raw format called A-format), and these signals are then converted into the W, X, Y, and Z channels (B-format) that together describe a sphere of sound (FIGURE 12.2). This makes a huge difference in the ability to capture an immersive soundscape.

FIGURE 12.2 Ambisonic Microphone. Able to capture audio from four directions at once, this Sennheiser ambisonic microphone is creating a spatial audio recording from nature.

Ambisonic A method of recording four-channel sound that captures a sphere of sound from a single point to reproduce 360° sound.
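If you are building a scene in code rather than recording it, a mono sound can be placed into the same four-channel format mathematically. W is the omnidirectional part of the signal, while X, Y, and Z capture front/back, left/right, and up/down. The Python sketch below uses the traditional first-order B-format equations (sometimes called FuMa weighting). Other conventions, such as AmbiX, order and scale the channels differently, so check which one your audio engine expects.

```python
import math

def encode_first_order(sample, azimuth_deg, elevation_deg):
    """Encode a mono sample arriving from one direction into W, X, Y, Z.

    Traditional B-format convention: X points forward, Y to the left,
    Z up; azimuth is measured counterclockwise (toward the left) from
    the front. W carries the omnidirectional signal, scaled by 1/sqrt(2).
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample / math.sqrt(2)
    x = sample * math.cos(az) * math.cos(el)
    y = sample * math.sin(az) * math.cos(el)
    z = sample * math.sin(el)
    return w, x, y, z

# A bird call directly to the listener's left, at ear height
print([round(c, 3) for c in encode_first_order(1.0, 90, 0)])  # [0.707, 0.0, 1.0, 0.0]
```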

The ambisonics format is not new either. It was developed in the 1970s under the British National Research Development Corporation, most notably by engineer Michael Gerzon. Even though this technology has been around for decades, it struggled to find a commercial use, which limited its success until now. Thanks to its use in the VR industry, this audio format is seeing a revival.

The amazing part of these spatial audio techniques is that you can record the sound in the exact location of the original situation that you want to re-create in your 3D experience. You can hear an orchestra as the conductor does by recording from the exact spot where the conductor stands. This idea of presence, or the sense of feeling like you are there, can be reinforced with this kind of audio. While the visuals are an important component in creating this illusion, they are only one part of it. Because we engage all of our senses in any place we are, audio that is as immersive as the visuals can provide a realistic experience. The more real it is, the more we are able to connect with an experience emotionally, mentally, and physically.

The one downside of single-point capture, such as binaural or ambisonic recording, which listens in all directions from one location, is that it relies on a sweet spot. When the audio is captured this way, the microphones remain still. However, humans are often in motion. Even when sitting, we still move our heads, either toward a sound or an image or simply to stay comfortable throughout the experience. When the user moves away from that specific location, they move out of the sweet spot. Some adjustments can be made during the audio edit, but for the best immersion, some headphones and HMDs have built-in head trackers that adjust the audio to the user’s motions. The position of a sound in relation to the user’s ears changes the sense of direction, just as it would if they were physically in the space. This has a direct effect on the user’s perception of the reverb, frequency, pitch, and intensity of the sound.
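For first-order ambisonic audio, compensating for a head turn is a simple rotation of the X and Y channels around the vertical axis; W (omnidirectional) and Z (height) do not change. The Python sketch below handles yaw only, uses the same axis convention as the traditional B-format (X forward, Y left, Z up), and is an illustration rather than any headset’s actual tracking code. Tilting or rolling the head would require a full 3D rotation.

```python
import math

def rotate_yaw(w, x, y, z, head_yaw_deg):
    """Counter-rotate a first-order sound field when the listener turns their head.

    If the head turns left by head_yaw_deg (counterclockwise, seen from above),
    every source should appear that much further to the right, so the field is
    rotated by -head_yaw_deg around the vertical (Z) axis.
    """
    a = math.radians(-head_yaw_deg)
    x_new = x * math.cos(a) - y * math.sin(a)
    y_new = x * math.sin(a) + y * math.cos(a)
    return w, x_new, y_new, z

# A source on the listener's left (Y = 1). After a 90-degree turn to the left,
# it should sit directly in front (X is about 1, Y is about 0).
print([round(c, 3) for c in rotate_yaw(0.707, 0.0, 1.0, 0.0, 90)])
```

A head-tracked player would apply this to every block of audio using the latest yaw reading, then decode the rotated field to the headphones.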

Paradise case study

Many XR experiences are built for an individual user, where each person has their own headphones on or is inside their own virtual space. However, technology now allows these experiences to become social and collaborative, and demand for this feature is growing. In a social situation, the sound may not track with each user. Instead, it could be designed to stay static within a space, allowing the sound to change as each user moves through the space (as in real life).

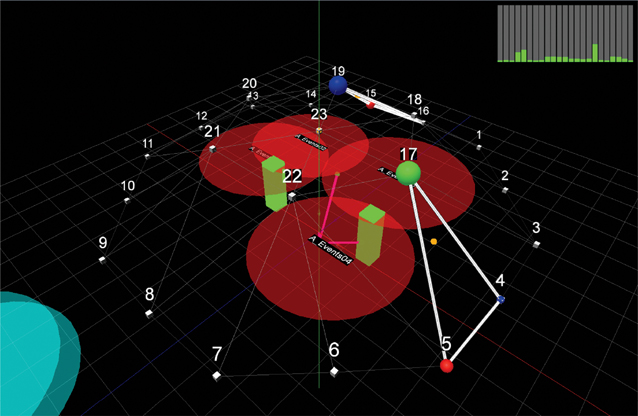

Paradise is an interactive sound installation and gestural instrument for 16 to more than 24 loudspeakers (FIGURE 12.3). For this collaborative project, Douglas Quin and Lorne Covington joined their backgrounds in interaction design and sound design to create a fully immersive sound experience that they optimized for four to eight people. The installation allows users to “compose a collage of virtual acoustic spaces drawn from the ‘natural’ world.”1 As users move through the space and change their arm positioning, sensors activate different soundscapes from wilderness and nature to create a musical improvisation. This composition is unique each time as it relies on how each user moves and interacts within the space. Motions can change the density of sounds, the volume of them, the motion or placement of the sound in the space, and the overall mix of the sounds together.

1 www.veniceperformanceart.org/the-art-week/fragile-body-material-body-2016/artists/douglas-quin-lorne-covington

FIGURE 12.3 Paradise Experience. Visitors react to the interactive soundscape environment of Paradise. Venice International Performance Art Week, 2016.

Photograph used by permission of Douglas Quin

This experience was reimagined for both interior and exterior spaces. Changing the location “creates a different spatial image,” Quin explained when I spoke with him and Covington about the challenges of the project. As they re-created the experience, they had to adjust for each location. The exterior exhibit required fewer ambient sounds, as they were provided naturally. The interior exhibit required more planning based on how sound would reflect and reverberate within the architecture of the space (FIGURES 12.4 and 12.5).

FIGURE 12.4 Virtual Design Environment. In preparation for the Paradise installation a virtual plan was created using an accurate floor plan (including elevations) to prototype a virtual reality (VR) environment offsite in advance.

Image used by permission of Lorne Covington

FIGURE 12.5 Behind the Scenes. This behind-the-scenes screen capture shows the installation environment for Paradise. Numbers indicate loudspeakers. The green rectangular blocks are visitors. The large red circles are unseen zones of sounds that slowly rotate. Sounds are activated when a visitor breaks the edge of a circle. The triangles with colored balls are sound sources for any given sound (with volume indicated by the size of each ball).

Image used by permission of Lorne Covington

Covington compared designing spatial interactive audio to having a good dance partner who responds to you. In the real world you don’t know what will happen next, so you have to be prepared for whatever your partner does and respond accordingly.

Both Covington and Quin described their interactive experience as a complex reactive system. As Quin said, “The sound has to react along with the world. Instead of thinking of the audio as a soundtrack, consider the audio more as the action and reaction.” If someone does this, then do A; if someone does that, then do B; and so on.

This allows for a “shared delight in surprises.” Delight was shared by both the participants and Quin and Covington, who enjoy watching all the ways people interact with the experience. It’s the participants’ agency that creates a unique composition of sounds in each space.
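The behind-the-scenes view in Figure 12.5 hints at how such an action-and-reaction system might be modeled: slowly rotating circular zones that trigger a sound when a visitor crosses their edge. The Python sketch below is a hypothetical reconstruction for illustration only. It is not the Paradise installation’s software, and the class, field names, and units are assumptions.

```python
import math
from dataclasses import dataclass

@dataclass
class RotatingZone:
    """A circular sound zone whose center orbits a pivot point (hypothetical model)."""
    pivot: tuple          # (x, y) in meters
    orbit_radius: float   # distance of the zone center from the pivot
    radius: float         # size of the zone itself
    deg_per_sec: float    # slow rotation speed
    sound: str            # placeholder name of the sound to trigger

    def center(self, t):
        a = math.radians(self.deg_per_sec * t)
        return (self.pivot[0] + self.orbit_radius * math.cos(a),
                self.pivot[1] + self.orbit_radius * math.sin(a))

    def contains(self, pos, t):
        cx, cy = self.center(t)
        return math.hypot(pos[0] - cx, pos[1] - cy) <= self.radius

def triggered(zone, pos_before, pos_now, t_before, t_now):
    """Action and reaction: fire only when a visitor crosses into the zone."""
    return not zone.contains(pos_before, t_before) and zone.contains(pos_now, t_now)

zone = RotatingZone(pivot=(0.0, 0.0), orbit_radius=3.0, radius=1.0, deg_per_sec=2.0, sound="birdsong")
if triggered(zone, (0.0, 0.0), (3.0, 0.3), 0.0, 1.0):
    print(f"play {zone.sound}")
```

Checking for the crossing, rather than simply being inside, means a visitor standing still does not retrigger the sound, while a zone drifting onto a visitor still can.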

Augmented audio

Imagine going for a bike ride while listening to your favorite playlist, receiving voice-driven instructions, and still being able to hear car engines, sirens, and horns honking all around you. This is the power of augmented audio. When you put a pair of headphones on, you block or diminish all other sounds. There are times when this is great: In fact, you may choose noise-canceling headphones to block out the sounds around you completely. When you need to concentrate or focus on one thing, cutting out all distractions is a great tactic. But at other times, such as walking or riding a bike through busy streets, you should not fully cut off outside sounds, for your own safety. And at times, you may simply want to maintain awareness of the physical environment around you.

Augmented audio The layering of digital sound on top of, but not blocking out, the ambient sounds of an environment.

Augmented audio, also referred to as open-ear audio, allows you to hear all the ambient sounds around you while adding another layer of audio on top of them. This allows you to receive navigational directions, take a phone call, listen to an audiobook, or listen to your favorite music, all while staying connected to the world around you.

Smartglasses, also known as audio glasses, come in different shapes from a number of different manufacturers. Many of these come in a sunglasses option, as they are most likely to be used outside. However, many come with customizable lens options to personalize your experience.

Bose was the first to the market and started off demonstrating the technology using 3D printed prototypes at South by Southwest (SXSW) Conference and Festival in 2018. I remember walking by the Bose house in Austin, Texas, where they had taken over part of a local restaurant to showcase their AR glasses. I was intrigued. I wanted to know how Bose, known for their high-quality speakers and headphones, was entering the world of AR. Well, I quickly found out how important audio is to an immersive experience while wearing their AR glasses on a walking tour of Austin (FIGURE 12.6). The experience started by connecting the sunglasses to my phone through Bluetooth. Because the glasses took advantage of the processing power of a smartphone, Bose could keep them lightweight and cool. Instead of reinventing what was already available in a phone, they utilized it. As I walked, I was guided by voice to walk a specific route. As I approached certain landmarks, I was provided information about each area. Using the location of my phone, the experience was customized and timed so that I could learn at my own pace. The best part was I could still talk to those around me as I walked, and we could exchange observations. This instantly proved beneficial: One person in the group spotted a famous actor stepping out of their vehicle for a film premiere at the festival and was able to tell everyone else as we continued listening to our guided tour. While celebrity spotting might not happen every day, it did prove the value in allowing unexpected sounds that are happening around a user to still be heard while they are listening to audio of the designed experience.

FIGURE 12.6 AR Sound. 3D printed prototypes of the original Bose AR glasses at SXSW 2018.

To be clear, these glasses and similar pairs from other developers don’t show the user any visuals; they only provide audio. Importantly, they allow for voice interactions without needing to take out a phone, so the user can interact hands-free and ears-free. They are essentially replacements for headphones or earbuds that still let the user hear everything around them.

How does it work?

Using what is called open-ear technology, a speaker is built into each arm of the audio glasses. What helps make them augmented, while also staying private, is the position and direction of the speakers. One speaker is placed on each arm of the glasses near the temple so that the sound is close, but still allows other sounds to enter the ear cavity (FIGURE 12.7). The speakers point backward from the face, so they are angled right toward the ears. This angle reduces how much of the sound can be heard by others around the wearer. Even in a 3D printed prototype there was not much sound escaping from the glasses, and very little could be heard even by those standing on either side.

FIGURE 12.7 Speaker Closeup. The speakers on the Bose AR glasses prototypes are near the ear.

In addition to the speakers themselves, the glasses have a built-in head-motion sensor that sends multi-axis motion data to your smartphone. This allows the app to know both the wearer’s location and the direction they are facing. This information can help customize directions, for example by knowing the wearer’s right from left, and can make sure they see key parts of an experience along the way.
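Knowing which way the wearer is facing turns a compass bearing into a spoken instruction. The Python sketch below assumes the app already has the wearer’s heading (from the head-motion sensor) and the compass bearing to the next landmark (from the phone’s location); the function, its 20-degree tolerance, and the phrases it returns are hypothetical, not any manufacturer’s API.

```python
def turn_instruction(heading_deg, bearing_to_target_deg, tolerance_deg=20):
    """Compare where the wearer is facing with the direction of the next landmark.

    Both angles are compass degrees, measured clockwise (0 = north, 90 = east).
    """
    # Signed difference folded into [-180, 180): positive means the target is to the right.
    diff = (bearing_to_target_deg - heading_deg + 180) % 360 - 180
    if abs(diff) <= tolerance_deg:
        return "straight ahead"
    if abs(diff) >= 135:
        return "behind you"
    return "on your right" if diff > 0 else "on your left"

# Facing north while the landmark lies due east
print("The landmark is", turn_instruction(0, 90))  # The landmark is on your right
```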

The exact functionality changes among the brands of glasses, but the concept remains the same. With micro-directional speakers, the goal is to allow the wearer to hear, while minimizing that sound for everyone else around them. Many frames also have the option to automatically adjust the volume based on the environment. This is a feature that is built into car audio as well, and often is correlated with the speed of the car. The concept is to automatically adjust your volume relative to the space you are in. This further enhances the hands-free concept, minimizing the need to fiddle and adjust as you are out on an adventure. This feature could be greatly appreciated by those wearing these glasses on a bike.
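One simple way to approximate automatic volume adjustment is to map the measured ambient noise level to a playback volume. The Python sketch below is a hypothetical illustration: the 40 dB and 85 dB thresholds, the 0.0 to 1.0 volume scale, and the linear ramp are assumptions, not how any particular pair of glasses or car stereo works.

```python
def auto_volume(ambient_db, base_volume=0.4, quiet_db=40.0, loud_db=85.0, max_volume=1.0):
    """Hypothetical noise-compensating volume: louder surroundings mean louder playback.

    Below quiet_db the base volume is used; between quiet_db and loud_db the
    volume rises linearly; above loud_db it stays capped at max_volume.
    """
    if ambient_db <= quiet_db:
        return base_volume
    if ambient_db >= loud_db:
        return max_volume
    fraction = (ambient_db - quiet_db) / (loud_db - quiet_db)
    return base_volume + fraction * (max_volume - base_volume)

print(auto_volume(30))   # quiet park: stays at the base volume, 0.4
print(auto_volume(90))   # busy street: capped at 1.0
```

In practice you would also smooth the ambient reading over a few seconds so that a single passing horn does not make the volume jump.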

More than a speaker

Listening is only half of the conversation. To allow for user feedback, these glasses also include a microphone. This allows the glasses to connect with the user’s voice assistant (more on this in the next section). Once again, this function helps maintain the hands-free functionality. It also allows the glasses to be used for phone calls and voice memos for those who want to communicate on the go. Many models have the option to turn the microphone feature on and off for privacy when needed. This is an important consideration. If you do purchase a pair, make sure that you can control when the device is listening and when it is not.

To further customize the experience, one arm of the glasses is equipped with a multi-function button that you can tap, touch, or swipe. Other than microphone control, this is the only other button you will find on the glasses. This allows you to change your volume, change tracks, make a selection, and navigate within an experience, all without having to access your phone directly.

Tip

If you need prescription lenses, many of these smartglasses offer them built in or an option to have your optometrist switch the lenses for you.

How can you get your own?

Although Bose has recently announced it would stop manufacturing its audio sunglasses line, the glasses are still available for purchase as of this writing. Bose was the first to market but decided to stop manufacturing the glasses because they didn’t make as much profit as the company had hoped. When asked about this, a Bose spokesperson said:

Bose AR didn’t become what we envisioned. It’s not the first time our technology couldn’t be commercialized the way we planned, but components of it will be used to help Bose owners in a different way. We’re good with that. Because our research is for them, not us.2

2 Roettgers, J. (2020, June 16). Another company is giving up on AR. This time, it’s Bose. Protocol. www.protocol.com/bose-gives-up-on-augmented-reality

Just as with all technology, each advancement is a step up toward another great advancement, so new products are sure to be on the horizon that would never have been created if it weren’t for these audio glasses.

Since Bose’s first launch, others have stepped up production of their own version of Bluetooth shades. Leading the way is Amazon with their Echo Frames, which bring their well-known Alexa assistant into a pair of sunglasses. Everything many have learned to love about having a voice-powered home assistant is now available on the go. Other options to check out include audio glasses from GELETE, Lucyd, Scishion, AOHOGOD, Inventiv, and OhO.

If you are looking to use your glasses for more than just audio communication, some of the shades on the market also include cameras, allowing for some action-packed capture. Leading the way in this market is Snap, the company behind Snapchat, with its Spectacles Bluetooth Video Sunglasses.

Audio glasses might remain as stand-alone audio devices. Augmented audio may also be incorporated into full visual and auditory glasses. But in either case, the focus on exceptional sound quality will pave the way.

Voice experiences

Voice is now an interface. Voice interfaces are found in cars, mobile devices, smartwatches, and speakers. They have become popular because of how they can be customized to the user’s environment, the time of day, and the uniqueness of each situation. Alexa, Siri, and Cortana have become household names, thanks to their help as virtual assistants.

We are accustomed to using our voice to communicate with other people, not computers. So, it makes sense that companies like Amazon, Apple, and Microsoft try to humanize their voice devices by giving them names. It is important to make these interfaces feel conversational to match the expectations that humans have for any kind of voice interaction. As stated in Amazon’s developer resources for Alexa, “Talk with them, not at them.”3 This concept is also supported by Stanford researchers Clifford Nass and Scott Brave, authors of the book Wired for Speech. Their work affirms that users relate to voice interfaces in the same way that they relate to other people. This makes sense, because until recently, speaking aloud was something we did almost entirely with other people.

3 Cutsinger, P. (2018, June 7). How building for voice differs from building for the screen: Talk with your customers, not at them. Alexa Blogs, Amazon.

As with every technology we have discussed, you guessed it, there are more abbreviations. The two main ones to know here are:

VUI for voice user interface

NLP for natural language processing

Alexa is one example of a voice interface that allows a user to interact conversationally. The challenge, of course, is that when we speak to a person, we rely on context to help them make sense of what we are saying. Natural language processing, or understanding the context of speech, is the task that an NLP software engine performs for virtual-assistant devices. The process starts with a script provided by a VUI designer. Just as you’d begin learning a foreign language by learning important key words, a device like Alexa must do something similar. The script lists the words and phrases users are likely to say, which helps train the assistant for a particular experience, or skill, as it is called in VUI design.

Voice user interface The use of human speech recognition in order to communicate with a computer interface.

Natural language processing The use of artificial intelligence to interpret human language so that it can be understood by a computer.

Many XR experiences link to a smartphone or promote hands-free use as an added benefit, and that opens up other ways for users to interact within an experience. If you are tapping into smartphone technology, then you first need to understand how to design for it. Unlike graphical user interfaces (GUIs), VUIs do not rely on visuals. The first thing to understand is that voice interactions should not be viewed as a replacement for a visual interface.

Not a replacement

It is important not to get into a mindset that a voice interaction can serve as a replacement for a visual interaction. For example, adding a voice component is not an exact replacement for providing a keyboard, and the design approach must be different. If you show a keyboard to a user, they will likely understand what action to complete thanks to their past experiences with keyboards. A keyboard, in and of itself, communicates to the user that they need to enter each letter, number, or symbol. If they can do this with both hands, as on a computer, it may be an easy enough task. But if they have to enter a long search term or password using an interface where they must move a cursor to each letter individually, the task may be greeted with intense resentment. One way to overcome this is to provide a voice input option instead. It is often much easier to say a word than to type it all out. However, inputting data this way is very different from using a traditional QWERTY keyboard or even an alphabet button selection, and it is not as familiar.

When a user sees the letters of the alphabet or a standard QWERTY keyboard, they connect it with their past experiences, so they can easily navigate to the letter they want to choose (FIGURE 12.8). But when you are relying on voice, the user will draw on their past conversations with other people to figure out how to interact.

FIGURE 12.8 Virtual Keyboard. Concept of communicating with a friend via a screen hologram with a full QWERTY keyboard.

Photographer: Peshkova for Shutterstock

There needs to be something in the UI that tells the user they can use their voice to interact. This is often shown through a microphone icon. However, it can also come in the form of speech: one way to let someone know that they can speak is to start the conversation with a question such as “How can I help you?” Depending on the device and where it will be used, choose the cue that best communicates to the user that they can use their voice, and use it to start the conversation.

What do you do first in a conversation? Before you speak, you might make sure the person you are speaking to is listening. But if you are speaking to a computer, you don’t have body cues or eye contact to rely on. So, this active listening state needs to be designed.

Though most of the conversation experience will not use visuals, this is one area where a visual provides great benefit. Using a visual to let the user know that the device is listening can help substitute for the eye contact they are used to when talking with another person. This can be a visual change, such as a light turning on or a colorful animation, so they know that what they are saying is being heard.

Tip

Use visual cues to give feedback to the user. A great place for this is signaling that a device is ready and listening.

Companies like Apple have created their own custom circular animations that they use across all their devices; when a user sees Apple’s colorful circle of purples, blues, and white, they connect it with a voice interaction. Seeing this animation communicates that the device is ready and listening for a voice command. All of this happens instead of a keyboard appearing. So, it isn’t a replacement, but rather a totally different way of communicating, and therefore in need of a totally different interface and design.

Context

When people communicate, we use our knowledge of the context to create a shared understanding. Once the user knows the device is listening, they may know to start talking, but how do they know what to say, or even what is okay to say, without any prompts? A voice interface has no visual to show what the options are. It is best practice to have some options voiced, such as “You can ask...” followed by a few options. You may be familiar with the options offered by an automated phone menu: “Press 1 to talk to HR, press 2 to talk to the front desk.” Those are often very frustrating, because they are one-sided and not conversational. The aim here is to start by understanding what the user wants to accomplish.

This is where everything we have been talking about is coming together. Once you have identified the why in your project, planned out roughly how it might look, done user research, and created a user flow, you can start to predict some options that a user may be looking for. You can start off by letting the user know what their options are based on this research. This can be laid out in a set of questions or by asking an open-ended question.

When you record an interview with someone, it is best practice to ask open-ended questions or compound questions, because you want the person to answer with context. If you ask two questions in one, a compound question, people naturally clarify which part they are answering as they respond. Perhaps you ask “What is your favorite way to brew coffee, and what do you put in it?” Instead of answering “French press and with cream,” they will likely specify which of the questions they are answering within the answer itself. So, a likely response would be, “My favorite way to brew coffee is using a French press, and I like just a little cream in it.” Traditional media interviews don’t include the questions, so it’s important to get context in the answer. We’re discussing question-and-answer methods here because such exchanges point out an important way that humans communicate: we like to make sure that our answers have the correct context, especially when more than one question is asked.

The need for context matters for voice interfaces because humans may not provide the needed context in their voice commands. Having the computer ask questions that are open-ended or offer multiple options will prompt the user to provide more context in their answer, which helps the device understand what the user is asking. Using machine learning and natural language processing, the assistant is trained to recognize specific voice commands. These commands must be written out as scripts.

Scripts

Think about all the different ways within just the English language someone can say no:

nope, nah, no way, not now, no thanks, not this time...

And these are just a few. You also need to consider what questions the user may ask and the answers they may give. This requires anticipating what the user will say and then linking each response to the next step. With a traditional computer screen, the user has a limited number of options based on the buttons and links you provide. With the input of a click or a tap, the computer knows to load the connected screen based on that action. With voice, the interaction relies only on spoken language.
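To make the idea of linking responses to the next step concrete, here is a minimal sketch in Python. It is not the API of Alexa or any other assistant; the intent names (`NoIntent`, `YesIntent`), the step names, and the phrase lists are hypothetical, standing in for what a script would collect during user testing. Every scripted way of saying “no” is routed to the same next step, and anything the script does not recognize leads to asking again.

```python
from typing import Optional

# Hypothetical script: each intent lists phrasings collected during user testing.
# This is an illustrative sketch, not the API of Alexa or any other assistant.
SCRIPT = {
    "NoIntent": ["no", "nope", "nah", "no way", "not now", "no thanks", "not this time"],
    "YesIntent": ["yes", "yeah", "yep", "sure", "of course"],
}

# Hypothetical next step in the conversation for each recognized intent.
NEXT_STEP = {
    "NoIntent": "end_conversation",
    "YesIntent": "continue_setup",
}


def normalize(utterance: str) -> str:
    """Lowercase, drop punctuation, and collapse spaces so 'No thanks!' matches 'no thanks'."""
    kept = "".join(ch for ch in utterance.lower() if ch.isalnum() or ch.isspace())
    return " ".join(kept.split())


def match_intent(utterance: str) -> Optional[str]:
    """Return the intent whose scripted phrasings include this utterance, or None."""
    text = normalize(utterance)
    for intent, phrases in SCRIPT.items():
        if text in phrases:
            return intent
    return None


def next_step(utterance: str) -> str:
    """Link a response to the next step; unrecognized responses lead to asking again."""
    intent = match_intent(utterance)
    return NEXT_STEP[intent] if intent else "ask_again"


print(next_step("Nah."))         # end_conversation
print(next_step("Sure"))         # continue_setup
print(next_step("Maybe later"))  # ask_again
```

Exact matching like this only works for phrasings someone has already written into the script, which is why the script has to be built from what real users actually say.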

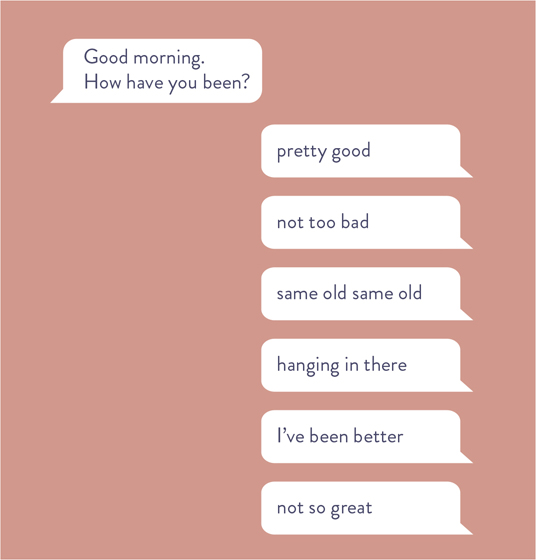

As part of the voice interface design, an important step of the process is to create a script. This script should embrace the dynamic qualities of conversation (FIGURE 12.9). A successful script should go through multiple rounds of user testing to identify the questions users respond to and all the different ways they respond. Because the user isn’t given a set number of options to choose from, the script helps translate the human response into something the computer can act on.

FIGURE 12.9 Script Sample. Sample answers collected to show possible answers to the question “How have you been?” All likely answers need to be collected to help a voice assistant understand how to respond based on each possible answer.

While it is easy to tell a computer what to do if someone says “yes” or “no,” users are unlikely to stick to these words. What happens when a user, speaking as they always do to other people, says “I’m going to call it a day” or “I’m going to hit the sack”? A computer will not understand these idioms unless it is taught them. Without the cultural context, it could interpret the second phrase as physically hitting a bag instead of going to sleep. How do you anticipate users’ responses to build into a script? You ask them, and you listen to them.
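Teaching a computer an idiom can be sketched as adding the phrase to the script and mapping it to what the speaker really means. The Python below is a hypothetical illustration, not any assistant’s real matching engine: the `GoToSleepIntent` name, the “turning off the lights” reply, and the fallback wording are assumptions. It looks for a taught idiom anywhere in the sentence, matching whole words only, and when nothing matches it voices a few options instead of guessing.

```python
from typing import Optional

# Hypothetical idioms gathered by asking users and listening to them,
# each mapped to the intent the speaker actually means.
IDIOMS = {
    "call it a day": "GoToSleepIntent",
    "hit the sack": "GoToSleepIntent",
    "hit the hay": "GoToSleepIntent",
}

# Hypothetical fallback that voices a few options, in the spirit of "You can ask...".
FALLBACK = "Sorry, I didn't catch that. You can say things like 'turn off the lights' or 'set an alarm.'"


def normalize(utterance: str) -> str:
    """Lowercase, drop punctuation, and collapse spaces."""
    kept = "".join(ch for ch in utterance.lower() if ch.isalnum() or ch.isspace())
    return " ".join(kept.split())


def find_intent(utterance: str) -> Optional[str]:
    """Look for a taught idiom anywhere in the utterance, matching whole words only."""
    padded = f" {normalize(utterance)} "
    for phrase, intent in IDIOMS.items():
        # Padding with spaces keeps "hit the sack" from matching "hit the sackcloth".
        if f" {phrase} " in padded:
            return intent
    return None


def respond(utterance: str) -> str:
    """Reply to a recognized idiom, or voice the options when nothing matches."""
    if find_intent(utterance) == "GoToSleepIntent":
        return "Good night. Turning off the lights."
    return FALLBACK


print(respond("I'm going to call it a day."))  # Good night. Turning off the lights.
print(respond("I'm going to hit the gym."))    # prints the fallback prompt
```

The fallback matters as much as the matches: an untaught phrase like “hit the gym” leads to a helpful prompt rather than a wrong action.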

Use a script and thorough user testing to collect anticipated responses so that the experience covers what users actually say. Multiple rounds of quality assurance testing must be completed throughout the whole process. Think of how color, image choice, and copy set the tone for a design in print or on the web. In the same way, sound quality, mood, and the content of the voice’s responses will set the tone for the voice experience. As you can imagine, this is no small task. Doing it well requires a team of people dedicated to designing these VUI skills. However, components of a script can often be reused across different skills.
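One way to turn rounds of user testing into script improvements is to tally the answers the script did not recognize, so the most common gaps get added first. This Python sketch is a hypothetical illustration: the `KNOWN_NO` set and the recorded `transcript` are made-up sample data, not results from any real study.

```python
from collections import Counter

# Phrasings the script already recognizes as "no" (hypothetical, from earlier testing).
KNOWN_NO = {"no", "nope", "nah", "no way", "not now", "no thanks", "not this time"}

# Hypothetical answers recorded when testers were asked a yes-or-no question.
transcript = [
    "nope", "not really", "no thanks", "I'll pass",
    "not really", "nah", "I'll pass", "not really",
]


def unmatched_counts(responses, known):
    """Tally responses the script does not yet recognize, most frequent first."""
    cleaned = (r.lower().strip() for r in responses)
    misses = Counter(r for r in cleaned if r not in known)
    return misses.most_common()


print(unmatched_counts(transcript, KNOWN_NO))
# [('not really', 3), ("i'll pass", 2)]
```

After each round, the frequent misses become candidates for the script, and the next round of testing checks whether the updated script now handles them.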

With voice and choice of words comes personality. Many people have such a strong connection with their voice assistants that they refer to them by name and speak very highly of them, almost as if they were members of the family. The conversations we have with a device start to build a relationship with it and help establish a level of trust. They can make an interaction feel personal. Think about how nice it is when someone knows and uses your name. What if they could also learn your favorite settings and words? What if they could mirror these preferences as they guide you, provide clear instructions, and help reduce your anxiety by saying the right words? This is what voice experiences can do. They are rooted in our experiences of conversations with friends and colleagues, so it is no surprise that we start to trust them as we would a friend.

Power of sound

Sound design should not be an afterthought; it makes or breaks the experience. Once you start to notice how sound plays a role in your physical world, you can start to design ways for sound to create more immersion in your XR experiences. Using audio can help an experience feel more real, enhance your physical space, or even help you interact with a computer interface, hands-free.