Chapter 8

Human Factors

Despite all the technology used in an XR experience, it is the user who drives the interaction and should control its pace. Though the experience is powered by a computer, it needs to be created for the human. In this chapter, we explore how to design your experiences for humanity. Here is what we will be covering:

DESIGNING THE WHOLE EXPERIENCE Experience design looks at many factors that should all work together to communicate a cohesive message.

THEORIES OF PERCEPTION Understanding how our brain perceives visual information is an essential skill to have, especially as it relates to spatial design.

CREATING HIERARCHY IN 3D Creating a ranking system for your content is not a new design process, but when you add z-space, things get taken, literally, to a new dimension.

Designing the whole experience

Experiences designed for users and what they are interacting with need to be designed with a human-centered approach. Taking human factors and psychology into account, a human-centered approach focuses on how to improve a product or an environment to enable the user to be most successful in accomplishing their task with or within it. It relies on an understanding of psychology and aims to analyze and enhance the overall experience of the interaction. Through the lens of these factors, you need to look at the experience as a whole to best measure success.

If you are looking to analyze human factors for XR, head to the grocery store. This is experience design with many flaws and great ideas—both of which are equally inspiring. The goal of every customer is to find, and likely purchase, something they need or want. As you walk through each space and each aisle, pay attention to how the space is arranged for the kind of product it displays. The produce section is wide open with less of a grid structure, in contrast to the narrower aisles lined with boxes, cans, and containers of every size and shape. The overall space is designed to follow a flow of the user, with similar foods categorized together. The aisles are created to accommodate the width of two carts, so shoppers can navigate up and down. Being unable to move your cart where you need to or struggling to find what you are looking for will influence your overall impression of the store—and might affect your willingness to be a repeat customer. This is especially true if there is a better option close by.

On the East Coast, Wegmans is a well-known grocery store. It is often a customer favorite because of its attention to the customer experience. They have a coffee bar when you walk in, and your cart has a cup holder so you can enjoy your drink as you shop. They have a full in-store restaurant if you need more than a cup of coffee to power your shopping spree. They pride themselves on having a large variety of food in stock and top that off with really fresh, quality food. When you leave, you are likely to be tired, because the stores are huge; they take some time to navigate through, and you are likely to see a lot of people during your visit. This is all part of the experience. They don’t just think about the purchase of food, but rather they consider the full experience. When you pull into the parking lot, the area is clean and well maintained with beautiful landscaping. When you enter the store, you are greeted with an updated and timely display, and they seem to always know what you need—or didn’t even realize you needed until you saw it. Before you even have a cart, they have made a strong visual impression. Everyone who works there seems happy and is smiling, which makes a lasting impression on the customer. When you check out, they ask you every time if you found everything you were looking for.

Of course, even at Wegmans, some things can be improved. For one, the checkout process always feels like the longest and most time-wasting aspect of the whole experience. How many times do we really need to move things in and out of the cart? Also, finding one particular thing in a huge store can cause frustration. Or the aspect that most people dislike: They often rearrange the store, and an item that used to be in a known spot is no longer there. The list could go on and on about all the small details of the store that negatively impact the overall shopping experience.

Analyzing a user experience like this can help keep your design human centered. How could the parts of an experience that work well serve as launch points in the experiences you are creating? Conversely, you can view any pain points or sources of user frustration as inspiration to find a design solution. Deep dive into what causes these frustrations, as that can directly impact how you design immersively. You can take inspiration in the way that grocery stores alter the structure of the store based on the shape and requirements of the food and, even more importantly, how people interact with it. Piling up apples on a shelf isn’t as efficient as placing them in baskets and open top containers for the customer to make their selections. Design for the content.

Packaging design

Beyond the interior design of the grocery store, each product within the store has been designed. The exterior packaging signals a lot about what you will get in the interior (FIGURE 8.1). Perhaps the most exciting part—packaging is all 3D. The boxes and bags that hold the food and other essentials we need can provide a vast array of inspiration. Looking at the different shapes, colors, type treatments, size, placement of the design, and even the product itself can help you as you expand your 3D design skills. Think of how a package of cereal is displayed versus a bag of cookies; the visual appeal changes depending on which side of the package you look at. The panels that will be displayed facing the customer each serve as a poster, trying to engage the customer in all it has to offer.

FIGURE 8.1 RealEats Packaging Design. RealEats is a prepared meal delivery company with a mission to make it simple for people to eat what they call “real food.” The wraps are engineered to work with the client’s previous packaging (the clear bags you see on the inside of the paper wrap). The design solution also had to work in a custom vending machine.

Photo provided by: 29 Design Studio; Founder and CEO: Maureen Ballatori; Lead creative: Courtney Carroway

Each product must have the required information, such as the nutrition facts, ingredients, barcodes, expiration dates, and association marks, in addition to the other elements of the package laid out by the designer.

As you turn the box around, how does the design flow across each plane? Can you see the product inside? How can you tell the difference between various flavors of the same kind of snack? What is the shape of a single package, and how does that work if they have to be stacked on top of or next to one another? How will they look as a single product, and how will they look as a group? All of these details are communicated through the design and create shelf impact. Looking at the bigger picture, if you know where your product will be on the shelf, then you also need to consider what will be near it—the competition.

Picking out a product is a full sensory experience, engaging your sight, touch, sound—and sometimes smell and taste. If you pick up a box and it feels sturdy, you will trust it more than a thin box that doesn’t hold its form. If you can smell or taste the product, that will have a huge impact on your decision to buy it or not. That is why grocery stores often offer tasting stations in various locations. Each of your sensory impressions will impact which items end up in your cart and are brought into your home. You may also notice some interesting trends: Organic and natural products typically have cleaner and fresher designs, while food packaging targeted toward kids uses brighter colors. This all comes back to knowing who your audience is and what they respond to. It comes back to centering all decisions around who you are designing for.

Back in the grocery store, each product a shopper selects tells a story. The goal is to tell the user about what is inside the package. It might be a story about how the product was made, the mission of the company, or how much they care about the Earth—but each package holds a narrative. Part of this story should communicate what the product is and what it does. It might even explain who the product is for, especially if it has a specific audience. An example would be a woman’s protein bar for post-workout snacking. The story extends the experience and adds a human connection.

The packaging of each element, down to the stickers on each piece of produce, has been thought through. In the full picture, those details may seem small, but they play an important role in the overall experience. If that small sticker has a produce code on it, that will speed up your checkout time. This is designing the whole experience.

Although you might not have plans to design a grocery store anytime soon, you can take valuable inspiration from it. In fact, everything we just discussed about product design needs to be considered for 3D objects in immersive spaces.

Immersive design is the new product design

In recent years, the role of the product designer has gained prominence and become the norm for many companies, especially any “design-first” companies such as Spotify, Apple, Amazon, Google, or Airbnb. As defined by Toptal:

Product designers are in charge of the entire product creation process. They are ultimately responsible for discovering and defining a problem, and then empathically designing a solution. The skills that a product designer must have range from technical to human-centered design. Product designers take part in user research, prototyping, visualization, testing, analyzing, and communicating.1

1 Toptal. (2021). Product designer job description template. www.toptal.com/designers/product-design/job-description

In essence, they are problem-solvers who look at the whole experience to create a system, rather than focusing on just the one thing they may be designing. They are involved in all phases of the design process, including user research, user needs, copywriting, wireframing, prototyping, visual design and aesthetics, interaction design, and usability testing. In addition to the packaging design we see in the grocery store, product designers often design interactive experiences. In fact, if you combine the skills of the product designer and a packaging designer and top that off with some motion-design skills—well, that is the whole package, all rolled into immersive design.

This sounds complicated, but so are people. When you design from a human-centered approach, there are many factors to consider. But the good news is that you already know a lot about humanity, as you live it every day, even in seemingly mundane tasks like grocery shopping.

Holistic empathy

An experience is something that is felt on many different levels—emotional, physical, mental, social, and maybe even spiritual. This holistic approach looks at how each component connects to the larger whole. The holistic approach to design places the user at the center and takes into account every aspect of their experience—the environment, the time, the device they are using, their motivations—and their abilities, triggers, or emotional connections. All of these work together simultaneously. In order to truly design for the user, you need to design with empathy. It is important to be open to viewing the diverse perspectives each user has when interacting with an object or product.

As an example, consider the use of hand dryers versus paper towels in a public restroom. Someone who is focused on environmental impact may wonder why paper towels are even available because they can be seen as so wasteful. However, to a mother helping her child with Sensory Processing Disorder, the perspective may be different. She would be grateful for the paper towels, knowing that the loud, unpredictable noise of the hand dryer could cause heightened anxiety to her child who needs to use the facility. These are just two perspectives, but you can see how one product can be viewed in different ways when you consider multiple points of view. Understanding this and embracing it is an essential part of designing empathically.

To design holistically, you need to view things from every angle, literally. When what you are designing can be viewed in 360 degrees, you need to look at it from every vantage point to picture how someone else will see it. You cannot just assume that it looks okay; you need to see it yourself just the way others will.

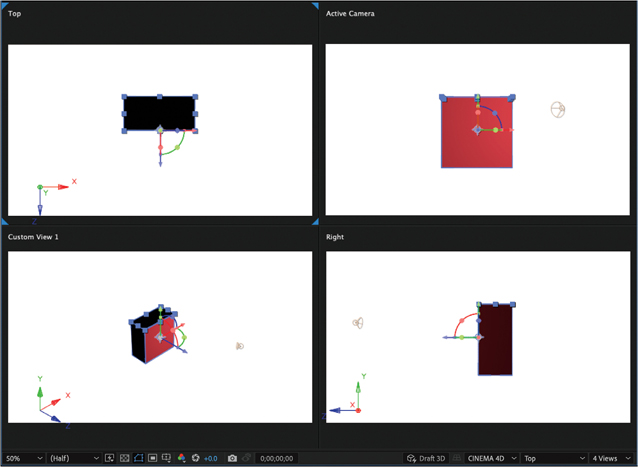

In many 3D design programs there is an option to change your view of the scene. If you haven’t already taken advantage of these settings, you should. The first place you can start is with a 4-up view (FIGURE 8.2). Here your screen can show up to four different views at the same time. You can see views from the top, left, right, and front all at once, with separate images for each displayed across the screen. In 2D, you often need to zoom in and out as you work, but in 3D, you need to look at the whole. Changing your views is easy and fast to do on a computer screen, and doing so reveals more of the relationships among all the various elements and the environment. This will be even more critical as we look at hierarchy later in this chapter. It isn’t enough to just view your designs in perspective; you should constantly be checking them from all angles to see exactly how your users will see things.

FIGURE 8.2 4-up View. A view inside Adobe After Effects showing a cube from the top, front, three-quarter profile, and right views—all at one time. The active camera view shows the front camera view and pose that will be rendered of the 3D object, while the other views help understand spatial relationships, especially in z-space.

Charles and Ray Eames wrote:

The details are not the details; they make the product.2

2 Ostroff, D. (2014, September 8). The details are not the details. Eames Office. www.eamesoffice.com/blog/the-details-are-not-the-details

Each detail combines with another detail, eventually making it whole. Having the ability to see the whole image in 3D is a challenge when you are working on a 2D screen. The details can often get lost or be hard to visualize. Sony has found a solution to this with the release of their Spatial Reality Display. The display can work alongside your traditional monitor as a way to explore your 3D model spatially. After a few seconds your eyes can adjust to the depth abilities of the screen. Beyond just showing a true depth image, the monitor also uses eye-tracking sensors to optimize the image for both eyes. It is with the release of products like this Spatial Reality Display that designers will be able to design at the level of detail that makes the whole experience better.

Tell a story

Humans are storytellers by nature. When a user has a set goal or destination, they will follow a sequence of events or actions over time in order to reach it. This journey becomes the narrative. To create a successful experience, the user will need to have a connection to it, and storytelling is a great catalyst for connection (FIGURE 8.3). Consider a basic story structure: You have a character, a scene, a problem, and actions that happen over time. When you add a plot or the goal of the main character, then you have a story. You have everything you need. For example, you have a user who wants to find a restaurant that fits their dietary needs, on the street they are on, when they are visiting from out of town. Enter AR maps to help them narrow their search by proximity and with their food preference. Then, an augmented map can direct them to the exact location. They eat and live happily ever after.

FIGURE 8.3 Man vs. Man. XR storytelling controlled by a third-person controller, created using Unity, to narrate the state of technology and politics today. “Within our virtual age, users can explore, trigger, and be the content they consume. But sex and money dominate our next screens: what we see (default settings), the stories we design (funding support), and worlds we build; fortunately, XR affords a spectrum of reality.”

Designer and Creator: Francesca Ortega

While not every story has a happy ending, the overall idea is there; you are creating a sequence of actions for a user, and that creates a narrative. There are three main ways to establish a narrative in XR: narrative-first experience, real-time experience, and capture-first experience.

NARRATIVE-FIRST EXPERIENCE relies on the user walking through all the actions to achieve their goal, as described in the food search example. Narrative-first examples in AR often rely on a specific location or orientation and often will rely on GPS. In VR, all games are narrative first. The goal may change per game, but each action is intended to bring the user closer to achieving it.

Another great use case of a narrative-first experience is a way to experience something from the past or something in the future. VR especially has the ability to bring people back to experience previous moments or places: a powerful way to connect with a place, an event, or a memory. History, such as the attack on Pearl Harbor, can literally be re-experienced in a virtual environment through the use of photogrammetry or 3D modeling. This same approach can also be used to visualize the future. For instance, you could see what a future building renovation will look like by standing in the exact place and augmenting your view with the final model in place. This could help address any potential changes needed before the construction starts, making it much more cost efficient. Places hold memory and vision. Combining a strong narrative with an XR experience can be a powerful and impactful experience.

REAL-TIME EXPERIENCES rely on the direct interaction with an object or content. As the name suggests, they happen in real time, which makes them the most effective at mimicking interactions related to our physical world. These are great for social VR and AR experiences, where people from multiple devices can join the same virtual environment and interact with the same digital content. So, if you throw a digital ball and knock over blocks, the user on the other side of the room can see it happen, from their perspective, in real time, just as if the game were happening in reality. FIGURE 8.4 shows a retro game board example. This is also commonly used in AR shopping. You can “see it in your space” before you purchase. Not only can a buyer preview a product with one of these AR apps, but they also can see the full product from every angle to determine whether it meets their needs.

FIGURE 8.4 Tilt Five. This augmented reality entertainment system uses proprietary miniaturized projectors and a retro, reflective game board to create 3D tabletop holographic games and experiences in real time.

Designer: Jeri Ellsworth, CEO; Photo provided by: Tilt Five

CAPTURE-FIRST EXPERIENCES are the third option. These rely on the user capturing an image or a movie. Because these stories are meant to be shared, these are a perfect way to bring others into your world. Capture-first experiences are prevalent on many social media platforms in the form of augmented stickers and fun animations that anchor to a physical object, such as a face, through motion tracking.

A story creates compassion and connects humans together. When you look at the full picture of an experience, you can start to break down the abstraction between the user and the content—both digital and physical. Once you start to blur these boundaries, the way you see it, or your perception, will change.

Theories of perception

How humans see, process, and perceive visual information is a fascinating process that is still not fully understood. However, a lot is known about our visual pathway and how we collect information from what our eyes see. Although many designers work intuitively from their foundation in good design principles and are able to achieve a solid visual feel, scientific research can help us understand what makes something effective.

Visual pathway

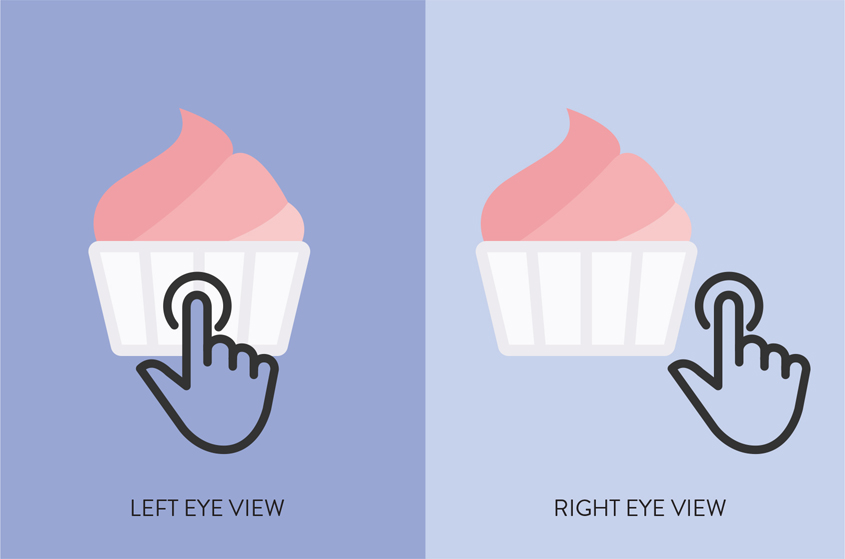

While the full process of how we see is quite complex, it is important to understand the basic concepts. Let’s focus on the visual pathway, which includes all the anatomical structures that work together to convert light into electrical signals that get sent to different parts of the brain to be interpreted. Some go to the right cerebral hemisphere, and some go to the left (refer back to Figure 4.6). The visual pathway starts at the retina, in the eye, and connects all the way to the back of our head in the primary visual cortex, or the V1. The primary visual cortex processes stimuli that give clues about size, orientation, motion, direction of motion, and binocular disparity (FIGURE 8.5). As you are designing, you should not be trying to delight the eyes, but rather the visual cortex. This explains how “good design” isn’t just visually appealing, but rather communicates well with the parts of our brain that are processing the visual information. So, while you can train yourself to see something and understand that it is more visually appealing, there are specific ways to design for the way we process what we see.

FIGURE 8.5 Binocular Disparity. This shows the difference in view from each eye, demonstrating how horizontal separation can alter the accuracy of an interaction.

Binocular disparity The difference between the images seen by the left and right eyes, which arises as a result of their horizontal separation.
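To put a rough number on binocular disparity, the sketch below uses standard viewing geometry: when both eyes fixate a point straight ahead, their lines of sight meet at a vergence angle, and the disparity between two points is the difference between their vergence angles. The 63 mm eye separation and the function names are illustrative assumptions, not values taken from this chapter.

```python
import math

# Assumed average distance between the eyes, in meters (illustrative value).
DEFAULT_EYE_SEPARATION_M = 0.063


def vergence_angle_deg(distance_m, eye_separation_m=DEFAULT_EYE_SEPARATION_M):
    """Angle between the two lines of sight when both eyes fixate a point
    straight ahead at distance_m."""
    return math.degrees(2 * math.atan(eye_separation_m / (2 * distance_m)))


def binocular_disparity_deg(near_m, far_m, eye_separation_m=DEFAULT_EYE_SEPARATION_M):
    """Angular disparity between a nearer and a farther point: the difference
    between their vergence angles."""
    return (vergence_angle_deg(near_m, eye_separation_m)
            - vergence_angle_deg(far_m, eye_separation_m))


if __name__ == "__main__":
    # The same half-meter gap produces far more disparity up close than far away.
    for near, far in [(0.5, 1.0), (5.0, 5.5)]:
        print(near, far, round(binocular_disparity_deg(near, far), 3))
```

Under these assumptions, depth differences between nearby objects produce a much stronger disparity cue than the same differences farther away, which is one reason precise interactions tend to be easier to judge at arm's length.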

Which of your eyes is dominant? If you don’t know, here’s a quick way to find out: With both eyes open, hold out your hands, touching your thumbs and index fingers together to create a triangle frame. Place an object that is far off in the distance within your triangle frame. Close one eye and then the other. For one eye the object will move or be completely out of the frame. For the other eye, the image will stay in place; this is your dominant eye. This favoritism is called ocular dominance.

Ocular dominance The favoring of one eye over the other for the receipt of visual input.

Knowing which eye is dominant for a user has benefits, especially for eye-tracking technologies. It also points out that people with opposite eye dominance may see a scene or objects a bit differently. Knowing that people are paying more attention to the right side of the scene versus the left can change where you should place more important information. Technology is being developed that will be able to determine eye dominance through eye tracking, which could automatically customize the layout of the UI to individual users. You could even enhance the resolution of the rendering on the side of the current user’s dominance, which could allow for a lower quality (less processing power needed) on the opposite side. This could be a helpful way to make more efficient use of the limited processing power available on a device. This could replicate the natural blurring our eyes apply to objects that are in our peripheral vision.

Our brain plays a large part in understanding what we see. So, as we discuss sight, it makes sense to also explore how psychology plays an integral role in the process of perception.

Gestalt

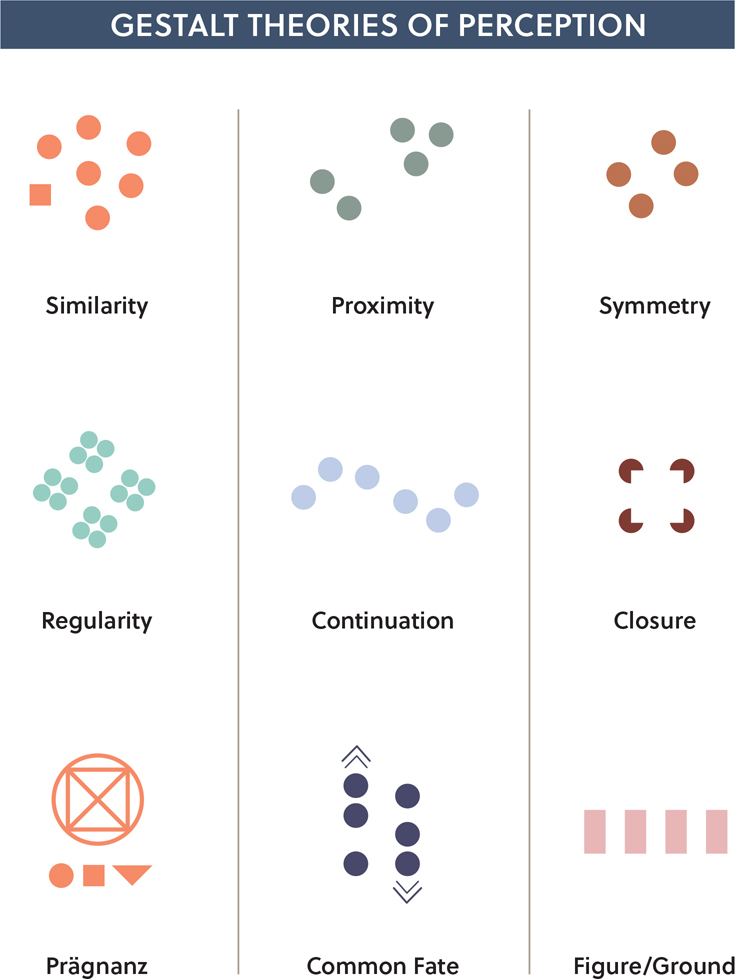

Any foundational design discussion or exercise will likely reference the gestalt principles of visual perception. This is, in essence, the psychology of what makes design successful. It was developed based on research from Germany in the 20th century, and the underlying concept is that the whole is greater than the sum of its parts. Many of the principles of gestalt, which means shape, have become essential tools in graphic design. It is rooted in human psychology, but it bloomed into addressing how visual communication can have layers to deepen the relationship of the user to the design. My favorite part about gestalt is how it makes people feel smart. A designer can work out a visual solution, and do so brilliantly, and when a user sees it and understands it—they feel smart.

Each gestalt principle and law is equally important, and they should all be learned and practiced by designers. However, here I’ll highlight the most important ones, as they relate to XR, to provide context for how you can use them in that space (FIGURE 8.6).

FIGURE 8.6 Gestalt Theories of Perception. A visual exploration of the gestalt theories of perception including similarity, proximity, symmetry, regularity, continuation, closure, prägnanz, common fate, and figure/ground.

To get started, here is the breakdown:

Similarity: Grouping of similar things.

Proximity: Association of near things.

Symmetry: Recognition and balance.

Regularity: Repeating pattern.

Continuation: We follow lines.

Closure: We fill in the gaps.

Prägnanz: Simple and clear.

Common Fate: Associated items travel together.

Figure/Ground: Foreground and background.

Some of these are really important in XR, like figure/ground, so we are going to spend extra time on that topic in the next chapter. But let’s break down some of the others.

Proximity and similarity

Proximity and similarity are part of every kind of design. As it sounds, similarity is the process where our brains group things that are similar. You can make use of this by designing everything the same or by choosing to make one thing stand out—the “one of these things is not like the other” concept. Proximity looks at the space around elements. Objects that appear closer together will be grouped together by the brain. The space around these objects plays an active role in helping the user connect these objects together as visual groups, even more so than the actual objects themselves.

This is an essential part of creating a hierarchy, which we will discuss more later in this chapter. In interaction design, similarity and proximity are often used together to create successful UI systems. Being able to see all the buttons and associate them together because they all look and act the same allows us to categorize them together. This same concept translates to XR—where similarity of elements can help the user make associations of the actions. Users will learn to expect that elements that look similar will have similar affordances. Then, of course, it is your job to meet that expectation and make sure that elements that you design the same do, in fact, act the same way.

Where you place items will also help the user understand the action based on that context. If you place an item close to the main navigation, then the user will expect that it is a navigational element. Our brains group things that are similar, and also group things that are close together. That is how our minds work, so make sure to use that to benefit the user, especially in your 2D and 3D UI where it can be extraordinarily effective.
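One way to check proximity grouping before testing with users is to estimate which elements are likely to read as a group based on spacing alone. The following is a minimal sketch, assuming each element has a 3D position in meters and that anything within a chosen gap of another element chains into the same group; the element names, positions, and the `max_gap` threshold are all hypothetical.

```python
import math


def group_by_proximity(positions, max_gap):
    """Group element names whose positions chain together within max_gap.

    positions maps an element name to an (x, y, z) tuple. Two elements land
    in the same group if they are within max_gap of each other, or are
    linked through a chain of such neighbors.
    """
    unvisited = set(positions)
    groups = []
    while unvisited:
        seed = unvisited.pop()
        group, frontier = {seed}, [seed]
        while frontier:
            current = frontier.pop()
            neighbors = {
                name for name in unvisited
                if math.dist(positions[current], positions[name]) <= max_gap
            }
            unvisited -= neighbors
            group |= neighbors
            frontier.extend(neighbors)
        groups.append(group)
    return groups


# Hypothetical layout: three navigation buttons in a row, one button far away.
layout = {
    "home": (0.0, 0.0, 1.0),
    "back": (0.1, 0.0, 1.0),
    "settings": (0.2, 0.0, 1.0),
    "buy": (1.5, 0.0, 1.0),
}

if __name__ == "__main__":
    print(group_by_proximity(layout, max_gap=0.15))
```

In this layout the three buttons form one group and the distant button stands alone, so a user would likely read it as something other than navigation. A real perceptual threshold depends on viewing distance and element size, so treat `max_gap` as something to tune rather than a fixed rule.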

You can see how this same concept will incorporate symmetry and regularity. Your design patterns create expectations and create systems. Be thoughtful about the decisions you make, so that you keep them consistent across a full experience. This is really important for the user experience.

Continuation

Have you ever taken a road trip and found it therapeutic to just be staring at the open road leading you to your destination? That feeling is thanks to gestalt and, more specifically, continuation. As humans, we like to follow lines. We even will find things that look like lines and associate them as lines so we can follow them. Dashes, dots, arrows, breadcrumbs: If it forms a line in any fashion, our natural instinct is to see where it goes. Knowing this, if there is somewhere you would like someone to look in a scene, how would you direct them there? With a line of some type. Navigation apps use this concept fluidly. If there is somewhere someone needs to go, they follow the path to get from where they are to where they need to go. When you are designing for a 360-degree environment, there is a chance that a user will not be looking in the right direction at the time to see your line. So, you need to add a visual cue to guide them to look where they need to. This also provides reassurance to the user who may have constant anxiety that they are missing out on whatever is not in their current field of view.
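Deciding when a guiding cue is needed comes down to comparing where the user is facing with where the target is. Here is a minimal sketch of that check, assuming a top-down (x, z) coordinate system in which a yaw of 0 degrees faces +z and positive yaw turns toward +x (the user's right); the function name, the 90-degree field of view, and the return labels are hypothetical.

```python
import math


def guidance_cue(user_pos, user_yaw_deg, target_pos, fov_deg=90.0):
    """Decide whether the user needs a cue to find the target.

    Positions are (x, z) pairs seen from above. Returns "none" when the
    target already falls inside the horizontal field of view, otherwise
    "turn_left" or "turn_right" for the shorter turn.
    """
    dx = target_pos[0] - user_pos[0]
    dz = target_pos[1] - user_pos[1]
    # Bearing of the target: 0 degrees is straight along +z, positive is toward +x.
    bearing_deg = math.degrees(math.atan2(dx, dz))
    # Signed difference between bearing and heading, wrapped into [-180, 180).
    offset_deg = (bearing_deg - user_yaw_deg + 180.0) % 360.0 - 180.0
    if abs(offset_deg) <= fov_deg / 2:
        return "none"
    return "turn_right" if offset_deg > 0 else "turn_left"


if __name__ == "__main__":
    print(guidance_cue((0, 0), 0, (0, 5)))   # target straight ahead
    print(guidance_cue((0, 0), 0, (5, 0)))   # target off to the right
    print(guidance_cue((0, 0), 0, (-5, 0)))  # target off to the left
```

The returned label could drive an arrow, a glow at the edge of the view, or a spatial audio cue. The point is that the cue only appears when the line you designed is outside the user's current view.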

Closure

We like to see complete, whole pictures. If there is a shape or image of something that we can recognize that is incomplete or not all in our view, then our minds complete it for us. Shapes, numbers, letters, symbols, imagery: With closure, our brains can complete what we may not see, if we know what it typically looks like in its entirety. This concept is really great in MR and with holograms, where a user may not always see the full image, yet can still complete the full form to understand what it is they are looking at.

Prägnanz

The primary visual cortex, which helps our brain understand what we are seeing, crosses over both hemispheres of the brain. As you may recall, each side of the brain processes different kinds of stimuli. Logic is processed on one side, while emotion is on the other, for example. This means that when we see something, both sides of our brain have to process it, together but in their own ways, to form the meaning that we then understand. So, anything that is too complicated is going to be a barrier for us and may even stop us from interacting with it. In contrast, we will gravitate to anything that is simple and clear, because our minds can easily understand it and process it for us. The Law of Prägnanz states that we prefer things that are simple. If something appears complex or ambiguous, then the brain will try to simplify it to understand it. Knowing this, you can consider the complexity of your environments and models. For example, a face has a lot of details and organic lines, but when we carve a pumpkin, we simplify each element into a geometric form to enable us to carve it out. While in this case the medium forces the simplification, this is in essence what our brain will naturally do. Using basic primitive shapes as the foundation for what you are working to create will help keep things simple.

Common fate

If you have ever been to a theater with lights lining the roof of the marquee, then you have experienced the power of common fate (FIGURE 8.7). By pacing out the pattern in the way the lights blink, it gives the illusion that the lights are moving along the edge of the roof, but in fact they are static and just share a common motion. This illustrates the principle of how our minds group together objects that move in the same motion. The idea is that objects that share in the same direction and motion, much like runners in a race, will share a destination, such as the finish line.

FIGURE 8.7 Marquee Lights. The historic Coronado Theater in downtown Rockford, Illinois, shows an intricate display of marquee lights that gives the illusion of motion.

Photographer: Eddie J. Rodriquez for Shutterstock

This concept is often used in interface design to form a relationship between elements that, although not similar, move or change in the same way. Our minds will connect them together into a group. The microinteractions we discussed in Chapter 7, “The UI of XR,” are good examples of the ways this principle can be effective. If you would like animated objects to be grouped together, have them move at the same time, velocity, and direction.
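In code, common fate amounts to driving every element in a group from one shared motion rather than animating each one separately. The sketch below assumes a simple per-frame update with positions in meters; the `Element` class, the element names, and the velocity values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Element:
    name: str
    x: float
    y: float
    z: float


def move_together(elements, velocity, dt):
    """Advance every element by the same velocity over the same time step,
    so the whole group shares one direction, speed, and timing."""
    vx, vy, vz = velocity
    for element in elements:
        element.x += vx * dt
        element.y += vy * dt
        element.z += vz * dt


if __name__ == "__main__":
    # Two visually different elements that should still read as one group.
    panel = Element("info_panel", 0.0, 1.0, 2.0)
    icon = Element("close_icon", 1.0, 1.5, 2.0)
    move_together([panel, icon], velocity=(0.5, 0.0, 0.0), dt=0.5)
    print(panel, icon)
```

Because both elements receive the identical offset in the same frame, the spacing between them never changes, and that constant relationship is what lets the eye group them.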

Creating hierarchy in 3D

Just as a deck of cards has an order of rank (ace, king, queen, jack), so does information. You need to rank it, however, and then adjust its appearance to create this visual hierarchy. To do this, you can alter its properties of size, weight, and position. By emphasizing some information, you will in turn deemphasize other information.

Hierarchy The organizational system for content that involves the emphasizing of some information and the deemphasizing of other information.

If you want one word in a line of text to stand out, you can make it bold or highlight it. Or you can surround it by whitespace and leave it all

alone.

Thanks to the gestalt principle of similarity, you will see the bold, highlighted, or isolated words before other words, because they are treated differently (FIGURE 8.8). We group things that are the same, so when something changes, even just a little bit, it will form a different visual group. Each of these visual groups will be ranked based on characteristics and placement, creating a hierarchical order. You need to identify what’s most important to understand: What does the user need first? What do they need second? What do they need third, and then what do they need after that? Then adjust the design properties to make that happen. You don’t need to change the size and the weight and the position for it to stand out. Changing one parameter is enough. But the more you change the visual weight of the content, and the way we perceive this invisible weight, the more importance our minds connect with it.

FIGURE 8.8 Hierarchy. Hierarchy is created by emphasizing some content while deemphasizing other content. You can achieve this through the use of size, weight, space, and position of the objects.

Imagine that you are looking at a design, in any medium, and it is falling flat—it doesn’t grab your attention, it doesn’t hold your attention, and you don’t learn anything from it. I guarantee you that the design of the content is missing hierarchy and does not make use of gestalt principles to engage you. Hierarchy is that essential.

You can control spatial relationships in 2D as it is a pretty controlled medium. If you move the position of a block of text, the relationship between that text box, the other elements on the page, and the page boundaries themselves will all stay the same.

In responsive design that adjusts for the device, you have less control, as the content will shift based on the screen dimensions and resolution. Here, you work in percentages to divide up the space and rely on math to adjust the layout per screen size. Another important step in responsive design is that you have to rank all your information. Images are ranked based on where they are on the page. The top of the page is more important, and elements in columns will be ordered from left to right in importance.

With type, you use character styles and style sheets to rank each level of type. You use CSS to identify Heading 1, Heading 2, Heading 3 and create a visual ranking that generally adjusts the size and weight for each subhead. This way, as the position of the type changes based on how the user is viewing it—mobile or desktop—it can rely on the other properties for emphasis of some, which will in turn deemphasize other elements.
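As a rough illustration of ranking type by size and weight, the sketch below generates three heading levels from a base size and a ratio. The base size, the ratio, the weight values, and the `type_scale` helper are all assumptions chosen for the example, not values recommended by this chapter; in practice the resulting numbers would be written into a style sheet.

```python
def type_scale(base_px=16.0, ratio=1.25, levels=3):
    """Return a size and weight for Heading 1..N, largest first."""
    styles = {}
    for level in range(1, levels + 1):
        # Heading 1 sits the most steps above the body size.
        steps_above_body = levels - level + 1
        size = round(base_px * ratio ** steps_above_body, 2)
        weight = 700 if level == 1 else 600
        styles[f"h{level}"] = {"font_size_px": size, "font_weight": weight}
    return styles


styles = type_scale()
```

Because each level keeps its own size and weight, the ranking survives even when the position of the type shifts between mobile and desktop layouts.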

In 3D this all changes with the addition of depth. The hierarchical system you create is dynamic and based on the position of the user. The things that the user is closest to will appear the biggest. Where elements end up could depend on where the user places them or on the position of the user in a space. To create a hierarchy in 3D, with so many variables that are in the control of the user, you will need to base design choices for hierarchy on the variables that you know will be constant.
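To see why closeness changes apparent size, it can help to compute the visual angle an object takes up at different distances. The sketch below uses the standard visual-angle formula; the function name and the sample sizes and distances are placeholders for illustration.

```python
import math


def angular_size_degrees(object_size, distance):
    """Visual angle (in degrees) of an object seen face-on.

    object_size and distance must use the same units.
    """
    if distance <= 0:
        raise ValueError("distance must be positive")
    return math.degrees(2 * math.atan(object_size / (2 * distance)))


near = angular_size_degrees(1.0, 1.0)   # a 1 m panel seen from 1 m
far = angular_size_degrees(1.0, 4.0)    # the same panel seen from 4 m
```

The same panel fills roughly 53 degrees of the view at 1 m and about 14 degrees at 4 m, so the user's movement alone can reorder which element reads as dominant.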

Position

Depending on the kind of experience you are creating, you may have more or less control over the exact position of elements. VR gives you the most control. You can design the full environment to be laid out in a specific and purposeful way. This allows you to decide spatial relationships that can help guide the viewer to see one element before another. As people navigate through a digital world, you may not have complete control of where they are looking, but you can create a hierarchy within the space and among the elements. With AR and MR, there are many uncontrollable variables. The first is the user’s physical space. Without knowing exactly what their space will look like, it is hard to design for it. Instead you have to make it work for any and all backgrounds (more on this in the next chapter).

In MR or advanced AR, the device you are working with will have a better understanding of the overall space that a user is in. This spatial awareness includes the dimensions of the room, how far away the walls are, what other objects may be in the space, and which planes, such as a floor, wall, or tabletop, have been identified. This is also helpful as you can adjust the position of a digital object to fit within the parameters of the scanned environment. Using this method allows a user to select a location in a mathematical way.

In AR, the user is in charge of selecting an element to which they want to anchor their scene or object. They scan to find a flat plane such as a floor or tabletop. Then, with a tap input from the user, that point becomes the 0, 0, 0 location and is the point from which the x, y, z coordinates will originate. The position of everything else will be based on that location, and all elements are positioned from that origin. Knowing this enables you to design accordingly, based on the type of medium used. If you are designing a mobile AR experience and you want one object to be seen up close and another to be far off in the distance, then you should position them that way in the 3D software. The distance relationship from that central origin location will be the same when it is anchored in the user’s physical environment.
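The relationship between the authored origin and the user's anchor can be sketched with simple vector addition. This is a simplified illustration: the `place_in_world` helper and all coordinates are hypothetical, and it handles translation only, ignoring any rotation or scale the anchor might also carry.

```python
import math


def place_in_world(anchor, offset):
    """Translate a scene-space offset by the anchor the user tapped."""
    return tuple(a + o for a, o in zip(anchor, offset))


# Offsets authored in the 3D software, relative to the scene origin (0, 0, 0).
near_object = (0.0, 0.0, -0.5)
far_object = (0.0, 0.0, -6.0)

# Hypothetical tap location reported by the device, in meters.
anchor = (1.2, 0.0, -2.0)

near_world = place_in_world(anchor, near_object)
far_world = place_in_world(anchor, far_object)

# The spacing between the two objects is the same before and after anchoring.
authored_gap = math.dist(near_object, far_object)
anchored_gap = math.dist(near_world, far_world)
```

Wherever the user taps, the 5.5 m gap between the two objects is preserved, which is why the spacing you set in the 3D software carries over to the physical environment.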

As you are trying to create visual groups in this space, you can look first at UI elements to group and then look at the positioning of 3D elements. Those that you place closer together in a digital space will have a similar relationship when added to the physical space. Of course, additional items might join with a digital element, and that is okay. Although you have no control over that, you can make full use of your control of the digital spacing and focus on the relationships you need to establish there as a starting point. For example, if you would like to have a text element appear in a box, then you want to make sure those two elements are in the exact same position, so they appear as one element.

Orientation vs. rotation

As you position elements, also consider how they are oriented toward the user. With 360-degree views of a 3D model, you can pick which view is shown on the launch of an experience or when it is anchored into a space. Depending on the user agency, the view of that object could be changed or manipulated, but you do have control of that initial orientation.

To clarify, there is a difference between orientation and rotation. Because many 3D software programs allow individual control of each of these variables, it is important to understand the difference. Orientation is the pose of the object, and the rotation is the specific motion path taken to each pose. Think of a dancer setting up to do a pirouette: they first bend their knees with one foot behind the other and hold their arms out, circling their body. Their body is facing the audience. This is the orientation. The spin that completes the turn is the rotation. The orientation may change or return to its original pose as the movement stops. As you are creating a hierarchy of elements, you want to choose the orientation to help the user see a specific part of the element or to guide them to look elsewhere.

Orientation The angular position and direction of a 3D object.

Rotation The motion taken around a specific axis: x, y, or z.
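The distinction can be made concrete with a small sketch that limits itself to one axis. Here orientation is stored as a single yaw angle about the y axis, and a rotation is the sequence of poses passed through on the way to a new orientation. The function names and step counts are hypothetical, and real 3D tools typically track all three axes.

```python
def rotate_about_y(yaw_degrees, delta_degrees):
    """Apply a rotation about the y axis and return the new orientation."""
    return (yaw_degrees + delta_degrees) % 360.0


def spin(start_yaw, total_degrees, steps):
    """Return every orientation passed through during a rotation."""
    step = total_degrees / steps
    poses = [start_yaw]
    for _ in range(steps):
        poses.append(rotate_about_y(poses[-1], step))
    return poses


facing_audience = 0.0
pirouette = spin(facing_audience, 360.0, 4)      # a full turn
quarter_turn = spin(facing_audience, 90.0, 3)    # ends facing sideways
```

The pirouette ends at the same orientation it started from even though a full rotation took place, while the quarter turn leaves the object in a new orientation.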

Viewing distance

At this point you need to design for the final device, but you can use this to your advantage. Each HMD and mobile device has different specifications for field of view and size of screens. If you know something will be viewed on the Oculus, then you need to design specifically for that. If you know something will be viewed on an iOS or Android device, then you have to design specifically for each device and use a different programming language to do so. By designing for the exact device that will be used for the experience, you can really focus on making it work precisely for how the experience will be viewed by the user.

Adaptive distance field

The lenses in our eyes play an integral role in the way we perceive space, especially depth. As you can imagine, this plays an important role in XR. In our real world, as we get closer to an object we see more detail than we did when we were far away. Our eyes naturally adapt to depth, so if you focus on something that is closer to your eyes, or farther away, you are changing the focus distance and the plane that is in focus. That process creates an adaptive distance field. This same concept can be replicated in digital forms, based on the distance to a shape and how much detail can be seen on the surface of the shape. As the user gets closer, their perception of the object can change or become clearer, and they should have a better sense of the outside and possibly the inside of the element.
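One simple way to approximate this idea digitally is a distance-based level-of-detail picker, sketched below. This is a substitute technique used for illustration rather than a definition of the term above, and the `detail_level` helper and its threshold distances are placeholder assumptions.

```python
def detail_level(distance_m, thresholds=(1.0, 3.0, 8.0)):
    """Return 0 for the most detailed version, rising as the viewer moves away.

    The thresholds (in meters) are placeholder values, not recommendations.
    """
    for level, limit in enumerate(thresholds):
        if distance_m <= limit:
            return level
    return len(thresholds)


levels = [detail_level(d) for d in (0.5, 2.0, 5.0, 20.0)]
```

An object half a meter away gets the most detailed version (level 0), while one 20 m away falls back to the simplest (level 3).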

You can and should design a hierarchy that can adapt based on space. This means having a larger, full-picture hierarchy that looks at all the overall elements. In addition, you need a secondary level of hierarchy for each object or element. If you have text, you can make it easier to read by adding hierarchy to the words. Similarly, you can make part of an object stand out to be noticed first, before other areas are explored. So, look at your hierarchy in different viewing scales. Think big picture, and then zoom in to add hierarchy to each detail.

Tip

Don’t forget to design the inside of a 3D object. Remember that XR allows users to explore inside elements just by stepping inside them with their virtual presence. This can be really fun to design and explore.

Vergence-accommodation conflict

If you can, find a place where you can see the horizon line, such as looking out a window or physically going outside and looking far off into the distance. Stare at the horizon for a moment, and then hold up your hand in front of you and quickly look at that instead. You will see a shift in focus that takes a few seconds, or longer, depending on your eyesight. This switch in focus is a process that your brain does automatically to help you adjust based on where you are looking, but more importantly, the distance involved. As you switch from looking far off into the distance to up close, the focus distance (accommodation) will shift. If you are wearing an HMD, this causes a big problem. The reason is that you may perceive the illusion of depth in a virtual environment, but in fact the screen is only centimeters from your eyes. So, your brain may be focusing up close, but the visuals appear as though they are far away. This is called vergence-accommodation conflict.

Vergence-accommodation conflict When your brain receives misaligned signals about the distance of a virtual 3D space or object which alters the eyes’ ability to focus on it.

This is a known issue in VR especially, but not much has been done from a technical aspect to correct it (FIGURE 8.9). It may prove to be a challenge without a perfect solution, but there are some things you can do to design to overcome this:

Place objects in the digital scene at similar distances to where they would be found in real-world situations.

Slow down any animations or motions that move objects toward a user and use this sparingly. Let the user control the speed of the motion based on their movement through the space.

Use depth cues such as shading, perspective, reflections, occlusion, textures, gradients, and lighting.

Minimize the overlapping of elements that are spaced out on the z-axis. Separate them by varying their x and y coordinates. This will reduce the number of times the user’s eyes will have to change focus depth in a short period of time.

FIGURE 8.9 Vergence-Accommodation Conflict. As you switch your focal distance from the real world to a digital screen, the positioning of the display in relation to your eyes will affect your ability to properly perceive depth.
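The size of the conflict described above can be estimated with a little arithmetic. The sketch below assumes the headset's optics hold focus at one fixed distance (`focal_plane`, a placeholder value) and uses a typical interpupillary distance of 63 mm; both numbers, and the function names, are assumptions for illustration. Focus demand is expressed in diopters, which is 1 divided by the distance in meters.

```python
import math


def vergence_angle_degrees(distance_m, ipd_m=0.063):
    """Angle between the two eyes' lines of sight when fixating a point."""
    return math.degrees(2 * math.atan((ipd_m / 2) / distance_m))


def conflict_diopters(virtual_distance_m, focal_distance_m):
    """Gap between where the eyes converge and where they must focus."""
    return abs(1 / virtual_distance_m - 1 / focal_distance_m)


focal_plane = 2.0  # assumed fixed focal distance of the headset optics, meters

close_up = conflict_diopters(0.5, focal_plane)      # object half a meter away
comfortable = conflict_diopters(2.0, focal_plane)   # object at the focal plane
distant = conflict_diopters(20.0, focal_plane)      # object far away
```

Under these assumptions the mismatch is largest for objects placed very close to the user (1.5 diopters at 0.5 m) and much smaller for distant ones (0.45 diopters at 20 m), which is one reason to be cautious about animating objects toward the user's face.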

Relationships

Establishing a hierarchy is really about organizing. In order to organize, you need to group elements together and establish a relationship. If you have one main element, you may want other elements to follow it, either in motion, position, or appearance. This creates a parent-child relationship. In a perfect world, parenting works by establishing a level of hierarchy. The parent is in charge, and the child listens, every time. In 3D animation, that actually does work, unlike in the real world. While we are working on designing alternate realities, we can dream and make magical things happen, right?

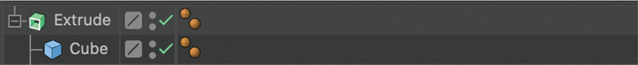

Parenting is a relationship of hierarchy that defines how multiple objects relate to one another. You establish which element is the parent and which are the children (FIGURE 8.10). You can have multiple child elements but only one main parent. This is also referred to as the root. Parenting can be seen as a chain system that links multiple elements together. Identifying relationships that can be grouped in this way not only helps you create a ranking of hierarchy but also speeds up your workflow. Instead of applying the same properties to multiple elements, parenting allows you to apply them as a group, where each child does what the parent is modeling. This can also help you place elements together to avoid the focusing issues discussed earlier.

FIGURE 8.10 Parenting. The Extrude layer, as shown in this screenshot from Cinema 4D, is the top layer (representing the parent), while each Cube layer becomes a child of that extrusion. The way layers are embedded shows the order of hierarchy.
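A parent-child chain like this can be sketched as a small tree in which each element stores its position relative to its parent. The `Node` class below is a hypothetical, translation-only illustration and does not reflect how Cinema 4D or any other tool stores its hierarchy; the names and coordinates are placeholders.

```python
class Node:
    """A hypothetical scene node positioned relative to its parent."""

    def __init__(self, name, local_position=(0.0, 0.0, 0.0)):
        self.name = name
        self.local_position = local_position
        self.parent = None
        self.children = []

    def add_child(self, child):
        """Attach a child so that it follows this node."""
        child.parent = self
        self.children.append(child)
        return child

    def world_position(self):
        """Accumulate offsets up the chain until the root is reached."""
        if self.parent is None:
            return self.local_position
        px, py, pz = self.parent.world_position()
        x, y, z = self.local_position
        return (px + x, py + y, pz + z)


root = Node("Extrude", (0.0, 1.0, -2.0))
cube_a = root.add_child(Node("Cube A", (0.5, 0.0, 0.0)))
cube_b = root.add_child(Node("Cube B", (-0.5, 0.0, 0.0)))

before = cube_a.world_position()
root.local_position = (0.0, 1.0, -3.0)   # move only the parent
after = cube_a.world_position()
```

Moving the root one meter back moves both cubes with it, without touching either child directly.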

Hierarchy of needs

Up to this point we have been looking at how to create a hierarchy ranking to provide order and organization of the content. But if you flip that around, you can instead focus on the hierarchy of human needs. What does the user need to be successful? This is the most important consideration to make your design successful. This concept works across all design, but it is especially important to review and understand this as you design for XR. The experience you create has to address the low-level needs first. So, it needs to function first, before the high-level needs can be tackled. Creativity and proficiency are the two highest-level needs. This concept is based on Abraham Maslow’s hierarchy of needs, which he introduced in his 1943 paper titled “A Theory of Human Motivation” and which is commonly drawn as a pyramid. It has been adapted in many forms since then, including how you can order the needs of a user, as shown in FIGURE 8.11.

FIGURE 8.11 Hierarchy of Needs Pyramid. This concept is based on Abraham Maslow’s Hierarchy of Needs pyramid to show the hierarchical order in which needs should be addressed for effective user experiences.

FUNCTIONALITY Does it work the way it is supposed to? Does the camera turn on for a mobile AR experience? Does the experience even launch? Does it do the thing that people are coming to experience? This can also address barriers to entry such as a sign-in or sign-up process that doesn’t work, which means no one can ever even get to the immersive experience.

RELIABILITY Is it stable and consistent? If a user comes back to the experience over and over, does it continuously work as intended? If things become glitchy, they are not meeting the reliability needs of the user. If the user has to reload an experience over and over to get it to work, then it will not be considered reliable.

USABILITY This is directly evaluated by determining if the product can be used to achieve the user’s goal. Is it usable? It is also directly connected to ease of use and satisfaction for the user. This need sits in the middle of the pyramid, so it must be addressed before the considerations that come above it.

PROFICIENCY Does this enable the user to improve, to get better at something than they were previously? This is about the gain for the user as a result of the experience itself. This comes from multiple uses, typically to further grow their skills in a specific area. This can relate to being proficient with the experience itself or with what the experience has taught them.

CREATIVITY Once all the other needs have been met, this is where creative thinking can come in. Users can start exploring and discovering new ways to experience something, or perhaps further the design beyond what the designer may have considered. Once a user reaches this point and has had all these needs met, they will likely be a repeat user of the experience as a result.

Because we create these XR interactions for other humans, you want to make sure that you are meeting all their needs for the experience. This hierarchy of needs chart is also a great way to work through the design process with technological needs as well. Before you spend the time designing a full experience, it is worth the time to see if your idea is even possible, assessing whether it will function. If it isn’t possible to get your idea to work, or maybe it does work but it is not reliable, those are key signs that it may not be worth moving forward in the design process yet, until those low-level needs are first met. With an industry that is changing and evolving as fast as this one, you will want to keep an eye on it—something that may not be as possible or reliable today, might be tomorrow.
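The bottom-up order of the pyramid can be expressed as a simple checklist walk. In this sketch the level names come from the pyramid above, while the `first_unmet_need` helper and the sample results are hypothetical.

```python
# Levels of the pyramid, from the lowest need to the highest.
NEEDS = ["functionality", "reliability", "usability", "proficiency", "creativity"]


def first_unmet_need(checks):
    """Return the lowest unmet level in the pyramid, or None if all are met.

    `checks` maps a need name to True or False; missing entries count as unmet.
    """
    for need in NEEDS:
        if not checks.get(need, False):
            return need
    return None


prototype = {"functionality": True, "reliability": False, "usability": True}
blocker = first_unmet_need(prototype)
```

Even though this prototype tests well for usability, the walk stops at reliability, signaling that the lower-level need should be resolved before moving forward.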

Human centered

When you are working within a field that is very technologically reliant, it is important to continue to bring humanity back to the central focus. You can often get so lost in the limitations and advances of what computers can do that you lose focus on who you are designing for. You want to design the full experience from start to end, focusing on each detail, for not just a user, but rather a living, breathing, creative individual with their own needs, perceptions, influences, and challenges. Make your designs as human as the people you are designing for.