Chapter 1

Pick Your Reality

To get things started, we will be exploring the different technology included in all forms of extended reality. As we start our design journey into this immersive space, it is important to understand a little bit about how this technology has been used and is currently being used. It is also essential that you become an XR user yourself, if you aren’t already.

THE TECH BEHIND THE ACRONYMS Breaking through the barrier of learning a lot of new terms and a lot of abbreviations, we dive into extended reality by simplifying the technology and discovering the reality of your preference.

YOU ARE ALREADY USING EXTENDED REALITY To learn where we are going, we need to see where we have already been. Here we look through a wide range of XR examples that have been and are making a difference in our daily lives.

PROSTHETIC KNOWLEDGE The process of learning something new, especially something of a large scale, is intimidating. So, we are going to break it down into baby steps.

The tech behind the acronyms

Have you had the opportunity to experience any of the seven natural wonders of the world? From the dancing light show provided by Aurora Borealis to the vast architecture of rock at the Grand Canyon (FIGURE 1.1)? Interestingly enough, the common theme among the seven is a unique use of space and light. Some of these wonders rise above the earth showing their majesty, such as Everest, the mountains surrounding the Rio de Janeiro harbor, and even the volcano Paricutín in Mexico. Others stand out because their beauty is in the opposite direction: down. Victoria Falls is created by a dramatic elevation dip allowing water to free fall over 354 feet into the lower Zambezi River. The mist of the water often delights with a rainbow and the occasional moonbow, or lunar rainbow, at night. The Great Barrier Reef is home to a variety of habitats and species across 133,000 square miles of the Coral Sea.

FIGURE 1.1 Grand Canyon. Havasu Falls in one of the seven wonders of the world, the Grand Canyon in Arizona.

Photographer: ronnybas for Shutterstock

There are so many things to learn about any of these amazing locations, and there are many ways that you can do so. However, hearing or reading about them isn’t the same as being there—surrounded by their wonder, experiencing it yourself. When you listen, read, or even look at a photograph, you are engaging only one of your senses at a time. When you are standing on the edge of a canyon, you can experience it through all of your senses. The way the experience is felt will vary from person to person: You could hear birds calling and water rushing; you could feel the wind against your face and the hard rock under your hands; you could see colorful hues of rocks for miles; you could smell the fresh juniper or ponderosa pine; you could taste the moist air after a thunderstorm. Then, of course, you could perceive your internal sense from the inside out, which is your awareness of self, essentially your presence. Perhaps you are balancing on a rock’s edge or adjusting to a different altitude, and this is all part of the physical experience. Each one of these details plays an important part of the experience.

The more immersed and engaged you are in an experience, the more strongly you are emotionally connected to it. The more senses you engage when experiencing something, the stronger your connection to it. This awareness is the language of the human experience. When we listen or read about a place, we might be able to mentally connect with it, and perhaps we might emotionally make a connection. But the physical experience is often missing. This physical presence is not possible to create in many technologies, but in extended reality (XR) it is. As the name suggests, these experiences are an extension of our real, physical, and three-dimensional world. They activate our senses and our perception of physical presence to replicate and enhance the actual experience.

There are a variety of ways this can be created and a wide range of technologies and platforms that allow you to engage with different content and spaces. Throughout this book we will be exploring these different technologies, but even more importantly, how to design and create 3D experiences that are more than just fun to look at, but also help improve our daily activities, the challenges we face, and the way we learn. They create an extension of our own reality. XR has the power to communicate and share more than just information; it can deliver an experience that we connect with mentally, physically, emotionally, and immersively.

While many terms and acronyms within this field may be new to you, the foundations of design remain constant, just as you would expect when moving between print media and interactive digital media. There are, of course, different considerations and topics that you will need to grasp as you start designing for 3D experiences. Immersive design is in essence an advanced version of motion design and UI (user interface) and UX (user experience) design, with enhanced audio design too. If you have any background in these areas, you may be looking to bring your skills to the next level, or really a different reality. If you have less experience in one or both of these areas, the pages ahead will guide you through these topics one by one.

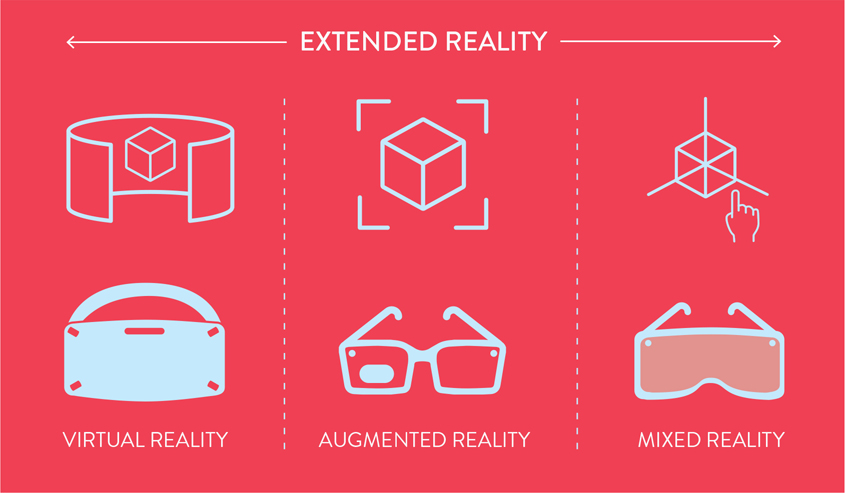

Within this exciting and quickly evolving field, we can ideate, design, build, and then be immersed within these digital experiences in a digital environment or as part of our physical world. As we start this journey, it is important to have a good understanding of how to categorize these different realities, and of course to know their acronyms, which are used very commonly in the industry. Extended reality, referred to as XR, is the space where humans and computers interact and communicate using virtual and physical elements. XR does not refer to a specific kind of technology; rather, it is an umbrella term that covers all the different realities:

Virtual reality (VR)

Augmented reality (AR)

Mixed reality (MR)

All of these are reliant on the use of technology that allows the user to view a fully virtual space or a hybrid of their current space with augmented, or layered, digital content (FIGURE 1.2).

FIGURE 1.2 XR Breakdown. Extended reality is the overarching term that includes VR, AR, and MR.

Virtual reality (VR) offers a fully immersive experience, provided by a head-mounted display (referred to as an HMD) or a computer-assisted virtual environment (which is referred to by the acronym CAVE) that showcases a fully digital environment, often without seeing your current physical space (FIGURE 1.3).

FIGURE 1.3 Virtual Reality. In VR the user is fully immersed into another virtual space, where they can’t see the physical space around them.

Virtual reality A fully immersive interactive computer-generated experience within a simulated digital environment.

Augmented reality (AR) offers a hybrid view allowing you to see your physical environment, but then augments, or adds, a layer of digital information into that view. This can be type, images, 3D models, video, and audio. The digital content enhances the physical space (FIGURE 1.4).

FIGURE 1.4 Augmented Reality. In AR the user is able to see the physical world around them while adding a layer of digital content into their view.

Augmented reality An interactive computer-generated experience that enhances the physical world with an overlay of digital content.

Mixed reality (MR) allows a more dynamic relationship between the physical and digital environments (FIGURE 1.5). It is often referred to as the best of VR and AR. You are still able to see your surroundings as you do in AR, but MR has the more advanced imaging capabilities you would see in VR, because this technology often has the power of spatial computing. This allows for a more customized experience that adapts to each physical space that a user occupies. In MR, through the use of different technologies, the device is able to get a mapping of the space before you launch into the full immersive experience, so it can learn and understand where the physical planes are and then anchor and adapt the digital planes to them. If you are confused about the difference between AR and MR, you are not alone. In fact, as AR gets smarter and able to adapt more and more to the unique spaces around the user, the lines between these two blur. At the time of writing this book, this term is still being used, but it is expected that AR will take over to include the MR space entirely. In case you hear the term MR, it is good to know the difference that existed as the technology was advancing.

FIGURE 1.5 Mixed Reality. In MR the user is able to see the physical world around them while adding a layer of digital content into their view, but with a more advanced understanding of the physical world using spatial computing.

Mixed reality An interactive, computer-generated experience that blends the physical world and a digital environment using advanced spatial computing and gesture recognition or controller input.

Throughout this book, we will explore the variables and challenges that arise when designing for these different formats. We will be discussing design practices for each as needed and appropriate. There will also be many topics that relate across all of XR, especially related to creating 3D objects and space. However, just to be clear, this book places more of an emphasis on the AR and MR space, as there are more variables that need to be considered. With more variables in this space, there are also more design challenges and problems to overcome.

Honestly, before you can even consider designing for XR, you need to have some experience interacting within the reality you are designing for. If you had never visited a website, how would you even begin to design for one? It would be impossible to know best practices without a basic understanding of how users interact with the computer and what kinds of interfaces already exist. One of the roles of an XR designer is to make a user’s first experience, as well as their one-hundredth, enjoyable and approachable. For many new users the new technology can feel overwhelming on its own, so anything that can add comfort will also smooth out the whole experience.

Thus, in order to design for XR users, you first need to become one. Some technology might be more accessible than others, so start with what you need. If you are working toward a specific end project, then you will want to use that equipment. For example, if you are designing an app for a Magic Leap, then you need to gain experience using other apps on a Magic Leap to learn what it is like, and note some of the initial hurdles and enjoyable moments that you have as you do so. This research is essential. If you don’t have a specific project you are working toward, then come up with one. Throughout this book we will be looking at the process of designing for an immersive experience, so even if you don’t have a concept as of now, keep an open mind and an open sketchbook to record ideas as they come to you. The best possible way to learn something is to go through the full experience: to fully immerse yourself. That is what we are learning about, after all.

If you are looking for a place to start or to continue to expand your experience interacting with XR applications, the most accessible technology is mobile AR. With a new or recent smartphone or tablet, you can pretty quickly test out different examples of AR. Thanks to ARCore (Android) and ARKit (iOS) this technology is now built into your device.

Tip

Ideas come to you when you are not focused on them, so always have a sketchbook or notes app at the ready to get your ideas down and out of your head so you can refer to them later.

If you have an iPhone running iOS 11.0+ with an A9 processor or later (iPhone 6s and newer), then you are AR capable. To get some quick experience, you can launch the Measure app, included with iOS 12+. This is a quick way to measure something in your physical world, by overlaying an augmented and dynamic ruler using the camera on your phone and, well, other technology that you will shortly understand more about. If you are on an Android, you can check the compatibility via the Google developer site. Google also has a built-in measure app using AR that you can check out on a Google Pixel 2 or Samsung Galaxy S9 phone.

It is important to understand that smartphones are actually a stepping stone to wearable AR glasses. They are the processing power behind AR wearables, many of which are still being developed. Though there are a few different models of these released, the future is really in the relationship between glasses and a smartphone. As you look at other phone accessories, such as smartwatches and Bluetooth headphones, they all bring us one step closer to AR glasses.

The goal for many companies in the XR space is to make the tech as mobile as possible. Many VR experiences require so much processing power that they must be tethered to a desktop PC. Because VR isn’t as reliant on moving around, tethering works for those experiences. However, AR and MR rely on location and on being able to move through a physical space. That means that a portable and mobile system is essential.

The many considerations around wearing something on your face and your head create many design challenges. A huge component of this is processing power. For a computer to process large amounts of data, such as mapping a room and interacting in a 3D space, it requires a lot of processing power. The more power needed, the more heat produced. No one is going to want to have a heavy and hot device on their face to experience this tech. So, a lot of development is looking at how to limit the processing that is done within the HMDs themselves. One option is to have an external processing source such as a smartphone or even a separate pack that the user carries on a belt or in a pocket (already seen on some devices).

As with other technologies, many are looking to use the cloud space as another alternative to reduce the processing required within the AR and MR accessories themselves. Nreal Light offers a unique product within the MR space, all while having a design that looks somewhat like normal glasses. It is reliant on the use of a smartphone using the 5G network, another important processing source.

Meanwhile, Qualcomm developed a reference design for a smart viewer based on their Snapdragon XR1 platform. Qualcomm is sharing the design with other companies as an example of how to create a headset with both AR and VR. These advancements start to blur the different areas of reality, which is why the term XR has been adopted to hold all these new advancements that blur boundaries.

Within the XR space, including specifically VR, AR, and MR, the technology is evolving at a rapid rate. Unfortunately, the concepts of the experiences are not as innovative. So, rather than prioritizing keeping up with each technological advancement, there is a benefit to keeping your focus on the end goal. Your goal should embrace the benefits of extending our reality by providing information in context and engaging the user through the full human experience.

Instead of just hearing someone recount their time as they stood on the edge of the Grand Canyon looking out at nature’s beauty, we could put on a headset and experience their story. Instead of learning science through 2D figures, we could put on a pair of glasses and explore immersively how metamorphosis works. We can gain empathy with others by experiencing interactions the way others do. We can learn and create within the same space.

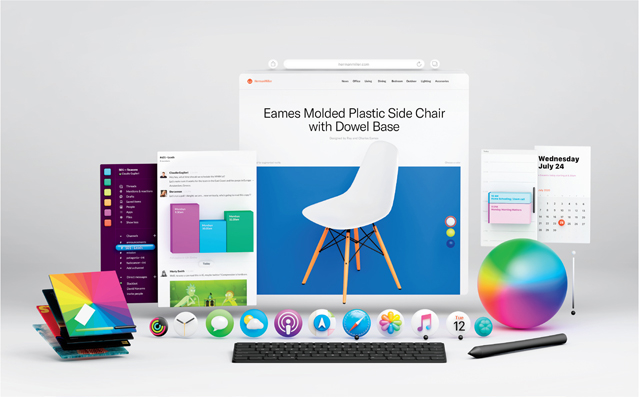

Most importantly, we can break out of the rectangular screens that confine so much of our interactions, communication, and workspaces and make our physical space our new interface (FIGURE 1.6). By untethering XR headsets from computers for processing power, we are also untethering ourselves: We are no longer limited to being where our computer is to be productive. That idea is the driving force behind XR. With every new experience, the need for new design solutions and practices surfaces, especially the challenge of making the technology as accessible as possible so that XR can start helping people in the variety of tasks in their lives. We need to learn more about the field, the challenges, the capabilities, and then how to best design immersive 3D experiences for XR. Let’s get started.

FIGURE 1.6 3D Workspace. What if there were no screens? This is what a mixed reality workstation could look like if a keyboard and pencil were the only physical objects.

Designer: Claudio Guglieri

You are already using extended reality

If you are concerned about the unfamiliarity of XR, the best news may be that all of this technology isn’t actually new. In fact, you may have been using parts of XR technology for years. Although this field often focuses heavily on the technology, an experience doesn’t have to be highly technological or even complicated for it to be XR—and to be effective.

Some of the earliest examples of AR were very low tech and were created to help solve a problem from our daily lives. One example is the gun sight on a hunting rifle. By looking through a collimating telescope’s small target icon on top of the rifle, a hunter could increase the accuracy of their shot. This invention dates back to 1901. It relies less on advanced technology and more on how our eyes focus on the foreground and background. Once you start looking, you will start to see examples of XR all around you.

1st & Ten

The first example that I recall was the highlight of the significant yard lines used in a television broadcast of an NFL game (FIGURE 1.7). These white, yellow, and sometimes blue lines that show up on the field are not part of the actual field: This augmented information allows viewers to more easily see the significance of each play. Because fans are able to see how much further players need to advance the ball, they can become more active viewers and more emotionally and consistently connected to each play. Without these lines, viewers would not see this proximity as accurately, and that uncertainty changes the intensity of their reaction to the play.

FIGURE 1.7 1st & Ten graphics system. Blue and yellow lines show the 10-yard distance on a football field needed for the offensive team to advance. These lines are augmented using a combination of green-screen technology and motion sensors. This technology has been in use since 1998.

This AR experience works through the use of motion sensors and green-screen technology. At the beginning of the game, a 3D scan of the field is created to map out the field and identify the exact green color of the field. During the broadcast they are then able to have the foreground players occlude the background lines. This way the players appear on top of the digital lines making the effect less distracting and even more realistic. Sports is an area that has a number of existing great XR use cases, and the potential for more, especially during a live broadcast or streaming.
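The occlusion idea described above can be sketched in a few lines of Python. This is only an illustration of the general green-screen (chroma key) principle, not the broadcaster's actual system: the function names, the sampled field color, the tolerance, and the tiny test frame are all hypothetical. The virtual line is painted only on pixels that look like grass, so anything that is not grass, such as a player, stays in front of it.

```python
def is_field_green(pixel, key=(40, 140, 60), tolerance=60):
    """Return True when a pixel is close to the sampled field color.

    The key color and tolerance are made-up values for illustration.
    """
    return all(abs(c - k) <= tolerance for c, k in zip(pixel, key))


def draw_line_with_occlusion(frame, line_columns, line_color=(255, 220, 0)):
    """Paint a virtual line only where the camera sees grass.

    frame is a list of rows, each row a list of (r, g, b) tuples.
    Pixels that are not field green (players, the ball) are left
    untouched, so they appear to stand in front of the line.
    """
    result = []
    for row in frame:
        new_row = []
        for x, pixel in enumerate(row):
            if x in line_columns and is_field_green(pixel):
                new_row.append(line_color)
            else:
                new_row.append(pixel)
        result.append(new_row)
    return result


# A tiny hypothetical frame: grass everywhere except one jersey pixel.
GRASS = (38, 135, 62)
JERSEY = (200, 30, 30)
frame = [
    [GRASS, GRASS, GRASS, GRASS],
    [GRASS, GRASS, JERSEY, GRASS],
]
composited = draw_line_with_occlusion(frame, line_columns={2})
```

In the composited frame, the line appears in column 2 of the first row, while the jersey pixel in the second row is preserved. A real broadcast system would also need the camera tracking data mentioned above to know where the line belongs in each frame.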

Although football was the first sport to use digital augmented information in a broadcast, it is not the last. This concept of applying digital content to the broadcast has since been used by a range of sports providing everything from visual analysis of a game, details on replays, pitch speeds and strike zones in baseball, and even customized advertising for soccer fans to help brands reach their target audiences around the world.

Head-up

Another way you might have seen or experienced AR is in a vehicle. Head-up displays, called HUDs, are a navigational feature that projects information for the driver onto the windshield, so the information appears to be right on the road in front of them (FIGURE 1.8). Though the display content varies by manufacturer, common features are a speedometer, navigational directions, engine rpm, blind spot warnings, and even the speed limit. The benefit of this, as implied by the name, is that the information is right where the driver needs it, so they can read it without looking down and away from the road. This technology has its roots in military aircraft, where it was first used for the navigation of planes, and was later adapted by General Motors for a race car. Today, it is a feature found in a number of vehicle makes and models.

FIGURE 1.8 HUD. The driver’s view of navigation using a head-up display featured in a BMW.

Seeing stars

A number of applications have used the concept of mobile AR, which relies on the use of the camera and often the location and position of the phone using the internal gyroscope, to display augmented information. Star Walk is a mobile application that was a front runner in creating a valuable use case for this tech. Vito Technology explains:

It will become your interactive guide to the night sky following your every movement in real time and allowing you to explore over 200,000 celestial bodies with extensive information.

The key term here is in real time. As you move your phone or tablet across the night sky, the app identifies the stars including some you may not be able to see. It displays other information, as well, about rising and setting times of planets plus historical context to many constellations and even satellites. You can see an example of it in FIGURE 1.9.

FIGURE 1.9 Star Walk. The constellation of Monoceros shown in the Star Walk 2 app. This celestial education app has been available since 2008.

Designer: Vito Technology
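To make the "in real time" idea more concrete, here is a minimal Python sketch of how an app of this kind might decide which objects to label as the device moves. It is not Star Walk's implementation. The function names, the 60-degree field of view, and the star positions are all hypothetical, and it assumes each object's azimuth and altitude have already been computed for the user's location and time. It also treats the view as a simple cone rather than a rectangular screen.

```python
import math


def angular_separation(az1, alt1, az2, alt2):
    """Angle in degrees between two sky directions given as azimuth/altitude."""
    az1, alt1, az2, alt2 = map(math.radians, (az1, alt1, az2, alt2))
    cos_d = (math.sin(alt1) * math.sin(alt2)
             + math.cos(alt1) * math.cos(alt2) * math.cos(az1 - az2))
    # Clamp to guard against floating-point drift outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_d))))


def objects_in_view(device_az, device_alt, sky_objects, field_of_view=60.0):
    """Names of objects within half the field of view of the device direction."""
    return [
        name
        for name, (az, alt) in sky_objects.items()
        if angular_separation(device_az, device_alt, az, alt) <= field_of_view / 2
    ]


# Made-up positions for illustration only, in degrees (azimuth, altitude).
sky = {"Star A": (180.0, 45.0), "Star B": (200.0, 50.0), "Star C": (0.0, 30.0)}

# Orientation as it might be reported by the device's motion sensors.
visible = objects_in_view(device_az=185.0, device_alt=45.0, sky_objects=sky)
```

Each time the sensors report a new orientation, the same check can be repeated, which is what lets the labels follow the user's movement.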

Try before you buy

AR especially has begun to change the marketing and advertising worlds. The concept of “try before you buy” was made virtual with IKEA’s Place app, which allows you to see the company’s furniture in your home before you commit to buying it. This concept has been brought into home appliances and even garden and landscape planning (FIGURE 1.10).

FIGURE 1.10 Virtual Showroom. MetaVRse 3D Web Game-Engine showing a virtual showroom.

Designer: MetaVRse Product Team

In the same theme of trying before you buy, the cosmetics industry has paid attention to the power of letting a consumer see what a specific color of makeup looks like to make sure they like it. It is often hard to see what the color looks like when it is packaged, let alone what it will look like once applied. L’Oréal partnered with Perfect Corp. to create an app called YouCam Makeup, so potential customers can see what the makeup will look like through the power of AR, before they buy.

The real estate market has also seen the benefits of allowing potential buyers to view a home before it has even been built, or from afar if they aren’t able to visit the home in person (FIGURE 1.11). This has become even more popular with social distancing rules during the COVID-19 pandemic. These benefits can go even a step further and allow a real estate agent to change the style of the home’s decoration to match the preference of their buyers, improving the chances of sale.

FIGURE 1.11 Real Estate. RealAR helps people see property before it’s built using mobile AR technology to visualize the completed house.

Designer: Dr Daniel Swan

Filters

XR is social. You can connect with others in multiple ways in XR, through gaming, virtual teleportation, and social media. Social media has helped launch the functionality of facial recognition and AR through the use of filters or stickers (FIGURE 1.12). Many of the front cameras on mobile phones are able to perform an in-depth analysis of your face and then apply fun dynamic filters that move as you do. It wouldn’t be fair to keep all that fun for yourself, so they are meant to be shared, and many people do this via their preferred social media, such as Snapchat and Instagram.

FIGURE 1.12 Fruit Frenzy. Augmented reality game on Instagram, Facebook and Snapchat using filters.

Designer: Oniric Labs

Experiences

When you are not able to be somewhere in person, then the next best thing is to experience it virtually. This works very well within the VR space, especially in combination with photogrammetry scans, which we will discover more about in Chapter 2, “Technology Check.” You can be transported to another location by just putting on a headset.

Using just a mobile device or tablet you can explore the work of famous artists in a new interactive way. Cuseum is creating AR experiences that push the limits on how museum attendees experience art. During the COVID pandemic Cuseum allowed users to bring the museum experience into homes. Using mobile AR a digital overlay is added to bring the art to life (FIGURES 1.13 and 1.14).

FIGURE 1.13 The Tree of Life. Gustav Klimt’s iconic masterpiece “The Tree of Life” brought to life with augmented reality on the occasion of the 100-year anniversary of one of the world’s most famous artists.

Designer: Cuseum

FIGURE 1.14 Hacking the Heist. Image of stolen artwork returned to its empty frame using augmented reality. Rembrandt’s “The Storm on the Sea of Galilee” at the Isabella Stewart Gardner Museum in Boston, Massachusetts.

Designer: Cuseum

Learning

There is a variety of research about the many different ways we learn, and one of the most interesting commonalities is that active learning results in a higher percentage of retained information. This is where XR comes in: Fully immersing yourself in a time in history or exploring the solar system in your bedroom brings engagement to a new level. Even better, XR utilizes multiple modes to communicate, offering auditory and visual elements, which can provide additional assistance to those who have a learning challenge. The ability to customize and personalize the teaching mode allows teachers and students alike to adjust experiences for each user, rather than relying on a one-size-fits-all teaching method.

Manufacturing plants, such as the GE Aviation plant in Cincinnati, use AR headsets to assist workers as they build jet engines. The program’s feedback lets them know when they have tightened the nuts and screws enough to ensure the best performance. They receive this feedback from the first time they perform these tasks, teaching them from the very beginning how to do it correctly and also continuously monitoring quality control. This interactive experience allows for more efficient and accurate production of airplanes.

Within the medical field, there is an enormous amount of critical information to learn. Gaining hands-on experience is also an essential component of growing knowledge. There are many ways in which XR can assist those learning within the medical field, from learning anatomy to assisting in unique surgeries. Though many procedures can be predictable, and even common for young medical professionals to experience, for the less routine instances medical personnel can benefit from learning about the procedures using a 3D visualization (FIGURE 1.15).

FIGURE 1.15 AR Brain Surgery. A visualization of surgeons performing brain surgery using augmented reality and an animated 3D brain.

Photographer: Gorodenkoff for Shutterstock

Stepping stones

As mentioned before, phone accessories such as smartwatches and Bluetooth headphones are helping develop what will become AR glasses. We are already using and helping to test many of the capabilities that are being developed for these accessories, which is in turn helping the development of the AR glasses.

Think about how smart Apple’s wireless headphones, AirPods, have become. When you first use them, you may think features like pausing a song when you remove an earbud from your ear or connecting to your device via Bluetooth once they are placed within your ear are helpful additions to your headphones. But these advances are more than just convenient features, as they show steps toward creating a technology that is needed in future devices that are worn close to the face, such as glasses. The creation of these smaller functionalities helps build an understanding of user needs and how to improve the technology on this smaller, more focused scale. This all leads toward future technology and use of audio in other new developments, such as glasses. This technology is already on the market, has been used in this AirPods format since 2016, and serves as a great stepping stone for things to come.

There are many expansive examples of how XR is already part of our society, and despite its reputation for being new, use cases can be found to date back decades. In Chapter 2 we will walk through additional key historical examples that have blazed the trail to where we are today. Great things take time, and with smart concepts and solutions, exciting potential emerges. As you start to learn and see how well-designed examples of AR have been entering our daily lives (at times unnoticeably), it showcases the power of creating transparency of the technology and the potential trajectory of this industry. Observe the world around you, and start noting where augmented information has crept in to assist within your daily life.

Prosthetic knowledge

The one thing that is always constant in consumer technology is change. It often feels like once you get used to something, it is updated or becomes outdated. One observation that I have about this within the XR space specifically is that it does in fact continue to evolve, but when XR evolves, it is usually to increase efficiency. Often I find that updates make the process of creating something a bit easier.

It may appear that it is worth waiting to dive in to learn until some of the back-end work has achieved a more stable status. But typically it is in those earlier days of trying to navigate how things work, even if you fail a lot, that you learn the most about how it works. And often, once we get to the more efficient place, some of the functionality doesn’t get the attention it deserves as you focus primarily on the visuals. This is unfortunate, because to truly design something effectively, you need to have a solid grasp on how it works.

An example of this in user interface (UI) design is prebuilt templates for websites. These can be a great way to maintain a foundational structure and also allow for a faster launch of the final product—all great things. However, if you, as the designer, don’t take the time to understand how the template works and to really understand the foundation of the site, then it is much harder to design for it. If you jump right into designing the UI without understanding the basics of HTML and CSS, as well as how the site is built and styled, then it is going to be much harder to understand how to customize the experience. You need to understand the box model to understand how HTML works, because without that, it is hard to understand why websites are built through blocks and modules.
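To make the box model point concrete, here is a minimal Python sketch of the arithmetic behind it. The function name `rendered_width` and the sample values are hypothetical and chosen only for illustration. The rule it encodes is standard CSS behavior: under the default `content-box` sizing, padding and border are added to the declared width, while under `border-box` they are included in it. Margin is never part of the box itself, but it does add to the space the element occupies.

```python
def rendered_width(width, padding=0, border=0, margin=0, box_sizing="content-box"):
    """Return (box_width, occupied_width) in CSS pixels.

    Assumes the same padding, border, and margin on the left and right.
    box_width is the visible box including padding and border.
    occupied_width also includes the margins on both sides.
    """
    if box_sizing == "border-box":
        # The declared width already includes padding and border.
        box_width = width
    else:
        # content-box: padding and border are added on both sides.
        box_width = width + 2 * padding + 2 * border
    return box_width, box_width + 2 * margin


# A hypothetical card: width 300px, padding 20px, border 2px, margin 10px.
default_box = rendered_width(300, padding=20, border=2, margin=10)
border_box = rendered_width(300, padding=20, border=2, margin=10,
                            box_sizing="border-box")
```

With these sample values the same declared width of 300 pixels produces a 344-pixel box under `content-box` and a 300-pixel box under `border-box`. That difference is the kind of detail that explains why a template's blocks do or do not fit side by side.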

The hardest part of starting something new is taking the first step. Once you are walking, you are going to figure out a place to put your foot with each step. The longer you wait to start, the more you will have to play catch up. This process is really just like walking, even though you can take only one step at a time. While there is time in the process to look at the project with a 100-foot view, it is also important to not get so overwhelmed that you never start. To do so, start with what you need for that first step. Then with the next step, learn more for what you need for that one. This concept is called prosthetic knowledge. Rich Oglesby used his long-running Tumblr blog to showcase case studies of creative concepts that were created one thought at a time (www.prostheticknowledge.tumblr.com/).

Prosthetic knowledge The act of learning information as it is needed.

New ideas and concepts can come as a bit of a shock initially. Once you have time to process them, which is really an analysis of the smaller details or steps, then new concepts can become easier to accept. Large ideas, especially new ones that are out of your comfort zone, are intimidating. But smaller, sequenced actions provide a roadmap that can make it all feel more approachable.

An article published in the July 11, 2011, Wall Street Journal describes the initial reactions to the idea of having electricity in our homes. Worry over electricity in the home may seem hard to imagine now, because it has become a standard across many cultures. The first time it was brought up as an idea, however, the shock of it provoked fear. As quoted within the article,

If you electrify homes you will make women and children vulnerable. Predators will be able to tell if they are home because the light will be on, and you will be able to see them. So electricity is going to make women vulnerable. Oh and children will be visible too and it will be predators, who seem to be lurking everywhere, who will attack.1

1 Rooney, B. (2011, July 11). Women and children first: Technology and moral panic. The Wall Street Journal. www.wsj.com/articles/BL-TEB-2814

The irony of this is, of course, that light makes it easier to see the things that darkness can more easily conceal. But, from the viewpoint of that time, the fear of the unknown could be overwhelming.

In the movie What About Bob?, the concept of taking baby steps is reiterated throughout the whole plot. As the main character, Bob, who suffers from a number of phobias, is preparing to leave his apartment, he puts this concept into action by taking one baby step at a time. First, he gets out of bed, then he gets dressed, then he puts on socks, then shoes, gets his coat, and then turns the knob on the door to open it and leave. The full concept of leaving the apartment was too overwhelming; he wasn’t able to move at all. As Bob found, focusing on the one next thing you need to do, and learning what you need to feel comfortable with that one thing, is a far more manageable task. This is a good lesson in prosthetic knowledge: Keep each accomplishment and learning curve small, and before you know it you will look back on a completed concept.

With technology, the constant change can, and will at times, feel confusing and likely overwhelming. But you can empower yourself by acknowledging from the beginning that this will happen and by preparing for it. The way forward into designing for XR is with the first step. Learn all you need to in order to get there. That first step might be the hardest one, but I promise you can’t get anywhere without starting. I assure you, the steps will continue to get easier. Once you have learned what you need to get to that first step, learn what is needed to take the next one. This approach allows you to continue making forward progress along an ever-changing path. If you wait to get started until you know and understand how to get to your end destination, then the roads may have completely changed by the time you reach them. In truth, as a designer with a type A personality, I find that having some sort of plan and control over a project and predicting a timeline adds a sense of comfort to the work. There will be times when you have to put that aside, however, and be open to the possibilities of what will come next.

Looking ahead

This book is organized to teach each part of the process using prosthetic knowledge. At the end of each chapter, you’ll find a design challenge to help you start putting some key concepts discussed within the chapter to use. Take the time to do them right away. They are intended to be short, but they will incrementally build skills that will help you take something you already know and feel comfortable with and expand it to another level of thinking. With an open mind and with an appreciation for dimensional planning, let’s immerse ourselves in the reality of your choice.