Virtualization has been one of the biggest trends in the past few years. Virtualization enables the engineer to share the hardware across different software instances. Docker is not really virtualization software, but it is conceptually the same.

With a pure virtualization solution, a new OS runs on top of a hypervisor sitting on top of an existing operating system (host OS). Running the full OS means that we may consume a few gigabytes of hard drive space in order to replicate the full stack from the kernel to the filesystem, which usually takes a good chunk of resources. The structure of a virtualization solution is shown in the following image:

Layers diagram for a virtual machine environment

With Docker, we only replicate the filesystem and binaries, so there is no need to run the full OS stack where we don't need it. Docker images are usually a few hundred megabytes instead of gigabytes, and they are quite lightweight, so we can run several containers on the same machine. The previous structure using Docker is shown as follows:

Layers diagram for Docker

With Docker, we also eliminate one of the biggest problems of software deployment, that is, the configuration management.

We are replacing complicated per-environment configuration management, where we need to worry about how the application is deployed and configured, with a container that is basically a software package that can be installed on any Docker-ready machine.

The only Docker-ready OS nowadays is Linux, as Docker needs to make use of the advanced kernel features, forcing Windows and Mac users to run a virtual machine with Linux in order to provide support to run Docker containers.

Docker comes with a very powerful and familiar way (for developers) of configuring the containers.

You can create containers based on an existing image (there are thousands of images on the Internet) and then modify the image to fulfil your needs by adding new software packages or altering the filesystem.

Once we are satisfied with it, we can use the new version of the image to create our containers using a version control system similar to Git.

However, we need to understand how Docker works first.

As mentioned before, Docker needs a virtual machine to provide support on Mac and Windows, so the installation on these systems may vary. The best way to install Docker on your system is to go to the official website and follow the steps:

https://docs.docker.com/engine/installation/

At the moment, it is a very active project, so you can expect changes every few weeks.

By default, Docker comes with no images. We can verify this by running docker images on the terminal, which will produce an output very similar to the following screenshot:

It is an empty list. There are no images stored on the local machine. The first thing we need to do is search for an image. In this case, we are going to use CentOS as our base for creating the images. CentOS is very close to Red Hat Enterprise Linux, which seems to be one of the most widespread Linux distributions in the industry. They provide great support and there is plenty of information available on the Internet to troubleshoot problems.

Let's search for a CentOS image by running docker search centos:

As you can see, there is a long list of images based on CentOS, but only the first one is official.

This list of images comes from something called the Registry in the Docker world. A Docker Registry is a simple repository of images available to the general public. You can also run your own Registry in order to prevent your images from going to the general one.

There is one column in the table in the preceding screenshot that should have caught your attention almost immediately, the column called STARS. This column represents the rating given by the users for a given image. We can narrow the search based on the number of stars that the users have given to an image by using the -s flag.

If you run the following command, you will see a list of images rated with 1000 or more stars:

docker search -s 1000 centos

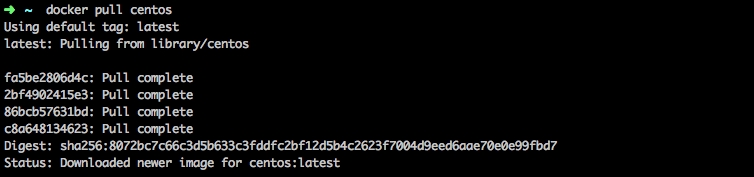

In order to fetch the CentOS image to the local machine, we need to run the following command:

docker pull centos

The output produced will be very similar to the following image:

Once the command finishes, if we run docker images again, we can see that centos now appears in the list:

As we specified earlier, Docker does not use the full image, but a reduced version of it, only virtualizing the last few layers of the OS. You can see this clearly: the image is not even 200 MB, whereas a full version of CentOS can take up a few GB.

Now that we have a copy of the image in our local machine, it is time to run it:

docker run -i -t centos /bin/bash

This will produce the following output:

As you can see, the prompt of the terminal has changed to something like root@debd09c7aa3b, which means that we are inside the container.

From now on, every single command that we run will be executed inside a contained version of CentOS Linux.

There is another interesting command in Docker:

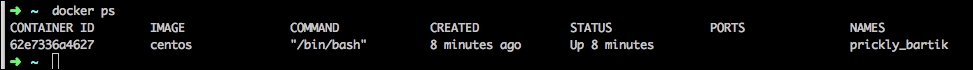

docker ps

If we run this command in a new terminal (without exiting from the running container), we will get the following output:

This output is self-explanatory; it is an easy way to see what is going on in our Docker containers.

Let's install Node.js in the container:

curl --silent --location https://rpm.nodesource.com/setup_4.x | bash -

This command will pull and execute the setup script for Node.js.

This will produce an output very similar to the following image:

Follow the instructions printed by the script to install Node.js:

yum install -y nodejs

It is highly recommended to install the development tools, as the installation process of a few modules requires a compilation step. Let's do it:

yum install -y gcc-c++ make

Once the command finishes, we are ready to run Node.js applications inside our container.

In the Docker world, an image is the configuration for a given container. We can use the image as a template to run as many containers as we want. First, however, we need to save the changes made in the previous section.

If you are a software developer, you are probably familiar with version control systems such as CVS, Subversion, or Git. Docker was built with their philosophy in mind: a container can be treated like a versionable software component, and changes to it can be committed.

In order to do it, run the following command:

docker ps -a

This command will show a list of containers that have run in the past, as shown in the following image:

In my case, there are a few containers, but the interesting one is the second; this is where Node.js is installed.

Now, we need to commit the status of the container in order to create a new image with our changes. We do it by running the following command:

docker commit -a dgonzalez 62e7336a4627 centos-microservices:1.0

Let's explain the command:

- The -a flag indicates the author. In this case, dgonzalez.
- The following parameter is the container ID. As we indicated earlier, the second container has the corresponding ID 62e7336a4627.
- The third parameter is a combination of the name given to the new image and the tag of the image. The tagging system can be very powerful when we are dealing with quite a few images, as it can get really complicated to identify small variations between them.
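To make the name and tag combination more concrete, here is a minimal sketch in Node.js. The helpers parseImageRef and commitArgs are hypothetical names introduced only for illustration; they are not part of Docker or of any library. The sketch assumes the simple name:tag form used in this chapter and does not handle digest references.

```javascript
// Hypothetical helper: split an image reference such as
// "centos-microservices:1.0" into its name and its tag.
function parseImageRef(ref) {
  var lastSlash = ref.lastIndexOf('/');
  var lastColon = ref.lastIndexOf(':');
  // A colon that appears before the last slash belongs to a registry
  // host:port prefix, not to a tag.
  if (lastColon > lastSlash) {
    return { name: ref.slice(0, lastColon), tag: ref.slice(lastColon + 1) };
  }
  // Docker falls back to the "latest" tag when none is given.
  return { name: ref, tag: 'latest' };
}

// Hypothetical helper: assemble the arguments for `docker commit`.
// It only builds an array of strings; it does not invoke Docker.
function commitArgs(author, containerId, imageRef) {
  var image = parseImageRef(imageRef);
  return ['commit', '-a', author, containerId, image.name + ':' + image.tag];
}

console.log('docker ' + commitArgs('dgonzalez', '62e7336a4627', 'centos-microservices:1.0').join(' '));
```

Keeping the tag explicit, instead of relying on the default, makes it easier to tell apart small variations of the same image.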

It might take a few seconds, but after finishing, the output of the command should be very similar to the following image:

This is the indication that we have a new image in our list with our software installed. Run docker images again and the output will confirm it, as shown in the following image:

In order to run a container based on the new image, we can run the following command:

docker run -i -t centos-microservices:1.0 /bin/bash

This will give us access to the shell in the container, and we can confirm that Node.js is installed by running node -v, which should output the version of Node, in this case, 4.2.4.

Now, it is time to deploy Node.js applications inside the container. In order to do it, we are going to need to expose the code from our local machine to the Docker container.

The correct way of doing it is by mounting a local folder in the Docker machine, but first, we need to create the small application to be run inside the container, as follows:

var express = require('express');
var app = express();

app.get('/hello', function (req, res) {
  res.send('Hello Earth!');
});

var port = 3000;

app.listen(port, function () {
  console.log('Listening on port ' + port);
});

It is a simple application using Express that basically renders Hello Earth! in a browser. If we run it from a terminal and access http://localhost:3000/hello, we can see the results.

Now, we are going to run it inside the container. In order to do it, we are going to mount a local folder as a volume in the Docker container and run it.

Docker comes from the experience of sysadmins and developers who have lately merged into a role called DevOps, which sits somewhere in between them. Before Docker, every single company had its own way of deploying apps and managing configurations, so there was no consensus on how to do things the right way.

Now with Docker, companies have a way to provide uniformity to deployments. No matter what your business is, everything comes down to building the container, deploying the application, and running the container on the appropriate machine.

Let's assume that the application is in the /apps/test/ folder. Now, in order to expose it to the container, we run the following command:

docker run -i -t -v /apps/test:/test_app -p 8000:3000 centos-microservices:1.0 /bin/bash

As you can see, Docker can get very verbose with parameters, but let's explain them, as follows:

- The -i and -t flags are familiar to us. They capture the input and send the output to a terminal.
- The -v flag is new. It specifies a volume from the local machine and where to mount it in the container. In this case, we are mounting /apps/test from the local machine into /test_app. Please note the colon symbol to separate the local and the remote path.
- The -p flag specifies the port on the local machine that will expose the remote port in the container. In this case, we expose the port 3000 in the container through the port 8000 in the Docker machine, so accessing docker-machine:8000 from the host machine will end up accessing the port 3000 in the container.
- The centos-microservices:1.0 is the name and tag of the image that we have created in the preceding section.
- The /bin/bash is the command that we want to execute inside the container. The /bin/bash is going to give us access to the prompt of the system.
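The way these parameters fit together can be sketched in Node.js as follows. The function buildRunArgs and the shape of its options object are assumptions made for this illustration only; the sketch just assembles the argument list and does not call Docker.

```javascript
// Hypothetical helper: turn a plain options object into the argument
// list for `docker run`. It only assembles strings.
function buildRunArgs(options) {
  var args = ['run'];
  if (options.interactive) { args.push('-i'); }
  if (options.tty) { args.push('-t'); }
  // -v takes "<path on the local machine>:<path inside the container>".
  (options.volumes || []).forEach(function (volume) {
    args.push('-v', volume.host + ':' + volume.container);
  });
  // -p takes "<port on the Docker machine>:<port inside the container>".
  (options.ports || []).forEach(function (port) {
    args.push('-p', port.host + ':' + port.container);
  });
  // The image comes after the flags, followed by the command to execute.
  args.push(options.image);
  if (options.command) { args.push(options.command); }
  return args;
}

var runArgs = buildRunArgs({
  interactive: true,
  tty: true,
  volumes: [{ host: '/apps/test', container: '/test_app' }],
  ports: [{ host: 8000, container: 3000 }],
  image: 'centos-microservices:1.0',
  command: '/bin/bash'
});

console.log('docker ' + runArgs.join(' '));
```

If we wanted to launch the container from a script, an array like this could be handed to child_process.spawn('docker', runArgs, { stdio: 'inherit' }), although for day-to-day work typing the command is usually enough.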

If everything worked well, we should have gotten access to the system prompt inside the container, as shown in the following image:

As you can see in the image, there is a folder called /test_app that contains our previous application, called small-script.js.

Now, it is time to access the app, but first, let's explain how Docker works.

Docker is written in Go, which is a modern language created by Google, combining the benefits of a compiled language such as C++ with the high-level features of a modern language such as Java.

It is fairly easy to learn and not hard to master. The philosophy of Go is to bring all the benefits of an interpreted language, such as reducing the compilation times (the complete language can be compiled in under a minute) to a compiled language.

Docker uses very specific features from the Linux kernel, which force Windows and Mac users to use a virtual machine to run Docker containers. This machine used to be called boot2docker, but the new version is called Docker Machine, which contains more advanced features such as deploying containers in remote virtual machines. For this example, we will only use the local capabilities.

Given that, if you are on Linux and you run the app located in the /test_app/ folder from within the container, accessing http://localhost:8000/hello is enough to reach the application.

When you are using Mac or Windows, Docker is running either in Docker Machine or in boot2docker, so the IP is given by this virtual machine. It is shown when the Docker terminal starts, as shown in the following image:

As you can see, the IP is 192.168.99.100, so in order to access our application, we need to visit the http://192.168.99.100:8000/hello URL.
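The rule for choosing the address can be written down as a small Node.js sketch. The function appUrl is a hypothetical helper, the platform values are the ones reported by process.platform, and the port 8000 is taken from the -p 8000:3000 mapping used to start the container. It reflects the virtual machine setup described in this section, not every possible Docker installation.

```javascript
// Hypothetical helper: build the URL that reaches a published container port.
// `platform` uses Node's process.platform values ('linux', 'darwin', 'win32').
// `machineIp` is the address printed by the Docker terminal on Mac and Windows.
function appUrl(platform, hostPort, path, machineIp) {
  if (platform === 'linux') {
    // On Linux the containers run directly on the host, so localhost works.
    return 'http://localhost:' + hostPort + path;
  }
  if (!machineIp) {
    throw new Error('The IP of the Docker virtual machine is required on ' + platform);
  }
  // On Mac and Windows the port is published on the virtual machine instead.
  return 'http://' + machineIp + ':' + hostPort + path;
}

console.log(appUrl('linux', 8000, '/hello'));
console.log(appUrl('darwin', 8000, '/hello', '192.168.99.100'));
```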

If you remember, in the previous chapters, one of the most important concepts was automation. Automation is the key when working with microservices. Instead of operating one server, you will probably need to operate a few dozen, reaching a point where you are almost fully booked on day-to-day tasks.

Docker designers had that in mind when allowing the users to create containers from a script written in a file called Dockerfile.

If you have ever coded in C or C++, even in college, you are probably familiar with Makefiles. A Makefile is a script where the developer specifies the steps to automatically build a software component. Here is an example:

all: my-awesome-app

my-awesome-app: main.o external-module.o app-core.o
	g++ main.o external-module.o app-core.o -o my-awesome-app

main.o: main.cc
	g++ -c main.cc

external-module.o: external-module.cc
	g++ -c external-module.cc

app-core.o: app-core.cc
	g++ -c app-core.cc

clean:
	rm *.o my-awesome-app

The preceding Makefile contains a list of tasks and dependencies to be executed. For example, if we execute make clean in the same folder where the Makefile is contained, it will remove the executable and all the files ending in .o.

A Dockerfile, unlike a Makefile, is not a list of tasks and dependencies (even though the concept is the same); it is a list of instructions to build a container from scratch to a ready state.
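To illustrate what a list of tasks and dependencies means in practice, the following Node.js sketch walks the targets of the Makefile above and prints the order in which they would need to be built. The names makefileRules and buildOrder are hypothetical, and the sketch is a simplification: the real make tool also compares file timestamps to skip work that is already up to date.

```javascript
// The rules of the Makefile above, written as target -> prerequisites.
var makefileRules = {
  'all': ['my-awesome-app'],
  'my-awesome-app': ['main.o', 'external-module.o', 'app-core.o'],
  'main.o': ['main.cc'],
  'external-module.o': ['external-module.cc'],
  'app-core.o': ['app-core.cc'],
  'clean': []
};

// Depth-first walk: every prerequisite is visited before the target itself.
function buildOrder(ruleSet, target, visited, order) {
  visited = visited || new Set();
  order = order || [];
  if (visited.has(target)) { return order; }
  visited.add(target);
  // Names without a rule (for example main.cc) are source files,
  // so there is nothing to build for them.
  if (!Object.prototype.hasOwnProperty.call(ruleSet, target)) { return order; }
  ruleSet[target].forEach(function (prerequisite) {
    buildOrder(ruleSet, prerequisite, visited, order);
  });
  order.push(target);
  return order;
}

console.log(buildOrder(makefileRules, 'all'));
console.log(buildOrder(makefileRules, 'clean'));
```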

Let's see an example:

FROM centos
MAINTAINER David Gonzalez
RUN curl --silent --location https://rpm.nodesource.com/setup_4.x | bash -
RUN yum -y install nodejs

These few lines are enough to build a container with Node.js installed.

Let's go through them:

- First, we choose the base image. In this case, it is centos as we used before. For doing this, we use the FROM command and then the name of the image.
- MAINTAINER specifies the name of the person who created the container. In this case, it is David Gonzalez.
- RUN, as its name indicates, runs a command. In this case, we use it twice: once to add the repository to yum, and then to install Node.js.

Dockerfiles can contain a number of different commands. The documentation for them is pretty clear, but let's take a look at the most common (aside from the ones seen before):

- CMD: This is similar to RUN, but it is executed when the container starts rather than while the image is being built. CMD is the command used to start an application once the container is instantiated.
- WORKDIR: This is to be used in conjunction with CMD. It specifies the working directory for the instructions that follow it, such as the next CMD command.
- ADD: This command is used to copy files from the local filesystem to the container instance filesystem. In the previous example, we can use ADD to copy the application from the host machine into the container, run npm install with the RUN command, and then run the app with the CMD command. It can also be used to copy the content from a URL to a destination folder inside the container.
- ENV: This is used to set environment variables. For example, you could specify a folder to store files uploaded by passing an environment variable to the container, as follows: ENV UPLOAD_PATH=/tmp/
- EXPOSE: This is used to expose ports to the rest of the containers in your cluster.
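Putting these commands together, the following Node.js sketch renders a possible Dockerfile for the small application of this chapter. The function renderDockerfile and its options are hypothetical names used only for illustration, and the resulting file is one reasonable arrangement of the commands, assuming the centos-microservices:1.0 image created earlier and an app that listens on port 3000.

```javascript
// Hypothetical helper: render a Dockerfile for a Node.js app as a string.
function renderDockerfile(options) {
  return [
    'FROM ' + options.baseImage,           // image with Node.js already installed
    'ADD . ' + options.appDir,             // copy the application into the image
    'WORKDIR ' + options.appDir,           // run the following commands from there
    'RUN npm install',                     // executed once, while building the image
    'ENV UPLOAD_PATH=' + options.uploadPath,
    'EXPOSE ' + options.port,
    // Executed every time a container is started from the image.
    'CMD ' + JSON.stringify(['node', options.entryPoint])
  ].join('\n') + '\n';
}

var dockerfile = renderDockerfile({
  baseImage: 'centos-microservices:1.0',
  appDir: '/test_app',
  uploadPath: '/tmp/',
  port: 3000,
  entryPoint: 'small-script.js'
});

console.log(dockerfile);
```

Note that RUN appears before CMD: the dependencies are installed while the image is built, and only the application itself is started when the container runs.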

As you can see, the domain-specific language (DSL) of Dockerfiles is quite rich and you can build pretty much every system required. There are hundreds of examples on the Internet to build pretty much everything: MySQL, MongoDB, Apache servers, and so on.

It is strongly recommended to create containers through Dockerfiles, as they can be used as scripts to replicate and make changes to the containers in the future, as well as to automatically deploy our software without much manual intervention.