CHAPTER 11

Editing Dialogue Tracks

What do I want from a dialogue edit? In a word, smooth: the ability for me as a mixer to hit play on the dialogue edit and not be taken out of a scene by any jarring ambience changes between angles/takes, bumps, or other sonic anomalies. A good dialogue edit is like a smooth handoff in a relay race—no interruption of flow.

David Barber, MPSE, rerecording mixer, supervising sound editor

The Frozen Ground

At last, it's time to edit. No more setup, no more preparation work. As you begin, keep the following two main purposes of the dialogue editing process in mind so that you can be efficient and focused.

- To serve the film by creating clean, focused tracks, free of anything that gets in the way. This is how you can help move the story along and create minute details that enhance plot, character development, and drama.

- To prepare tracks that can be mixed easily and productively. No matter how creative, clean, or detail-rich your tracks, if the mixer can't quickly make sense of them, all of your work is in vain. You'll never have enough time in a dialogue premix to give your tracks the love they deserve, so you must edit with an eye to the mix, presenting your tracks in such a way that the mixer spends her time creatively, bringing out the best in them, rather than trying to figure out your system.

Respect these two goals—craftsmanship and procedure—and the rest will work out.

Organizing Tracks

I like to know that the same mic/actor [sound angle] is on the same track throughout the scene, with no other takes in between—to be sure it will “always” be like this and not “sometimes.”

Diego Gat, rerecording mixer

San Martín: El cruce de los Andes; El Gato Desaparece

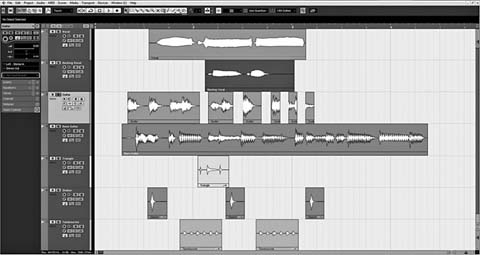

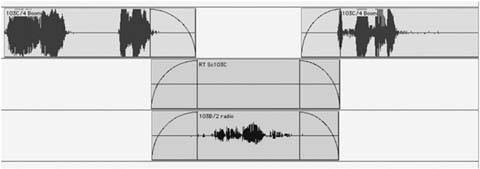

The first real step in editing is to organize the tracks within a scene so that each camera angle (shot) has its own track. If you've done any popular music production, you know that you organize the recording, editing, and mixing of each song by instrument (see Figure 11.1). You might have a track for the lead guitar, one for the bass, one for the synth, a few for the drums, and of course one for the singer. In your mix, you'll want a specific combination of EQ, dynamics, and effects for each instrument and voice throughout the song.

If the guitar lives on the same track throughout the song, you can easily apply its required processing without interfering with the other instruments and without any undue automation acrobatics. But if you record a few bars of guitar on a certain track and then drop in some drums followed by a few lines of vocals, you can imagine how difficult it becomes to control the music. You'll spend all your time executing exotic processing automation and lose focus on the real issue: telling a story through music.

Figure 11.1 Editing a pop song in Cubase. Each instrument occupies one track in a song. On another song, the track geography may be totally different, depending on the needs of the song.

In many ways you face the same issues in dialogue editing. Within a scene, each camera angle, each perspective, and to an extent, every character, behaves like an instrument in a pop song. By properly organizing the scene, you gain the same control you achieve in music mixing. Each shot will have its unique characteristics, and by putting each one on its own track—within that scene—you're making life much easier for yourself and for the mixer.

Be careful not to take this music analogy too far. The “rules” of a scene (who's on which track) are true only within the one scene you're working on. A music album is made up of many songs, each of which has its own track needs. The rock ballad on track one will not have the same layout as the romantic polka on track nine. Similarly, each scene in a film takes care of Number One, unconcerned about the plight of its colleagues. Just because Bill's close-up is on Dial C in scene 76, he doesn't have to appear on Dial C throughout the film. Nor do you need to create hundreds of tracks to accommodate all the people/angle combinations you'll encounter. On a film of average complexity, you should be able to elegantly edit the dialogue using no more than 16 tracks, still providing the order and logic needed to manage the tracks within each scene. This is possible only because you recycle the tracks as you enter a new scene. Unlike music production, where a track takes on the name of its “inhabitant,” dialogue tracks steadfastly hold onto their names: Dial A, Dial B, and so on.

Figure 11.2 Use the information in the clip to identify scene, shot, and take.

Fortunately, the key to organizing your session by shot presents itself as soon as you open the session. Unless you're especially unlucky, the scene, shot, and take information (or the slate and take number if the production used a sequential slate-numbering system) will appear on the region names (see Figure 11.2).

An Example of Scene Organization by Shot

Before the shoot, a scene must be broken into shots. At its most basic level, a scene will contain a master shot, close-ups for each speaking character, perhaps a two-shot or a dolly shot, and whatever other angles are needed to move the story along. Let's imagine a simple two-person interior scene and see how it can be broken into shots. In the drawing room, Fanny and Edmund are engaged in a bit of courtship:1

EDMUND

Now I must look at you Fanny, and tell you how I like you; and as well as I can judge by this light, you look very nicely indeed. What have you got on?

FANNY

The new dress that my uncle was so good as to give me on my cousin's marriage. I hope it is not too fine; but I thought I ought to wear it as soon as I could, and that I might not have such another opportunity all the winter. I hope you do not think me too fine.

EDMUND

A woman can never be too fine when she is all in white.

That's the script. The director will visualize it into shots, and the assistant director will organize a sensible shooting order based on locations, availability of actors, and weird production demands. You'll end up with a script breakdown like this:

Scene 32, INT day, drawing room, Edmund and Fanny

| Shot | Size | Description |
| --- | --- | --- |
| 32 | MWS | Establishing shot, Edmund + Fanny left of frame, large clock on right |
| 32A | MCU | Fanny |
| 32B | CU | Edmund |
| 32C | WS | Through window |

When the scene is shot, the script supervisor keeps track of which parts of the scene were successfully filmed from each angle. This lined script will enable the film editor, and later you, to see which text was covered during each shot and take. In the real world, the script report will include coverage details for each take so you can easily know what you have to work with and where your pitfalls lie. It's rare that all shots, or angles, will cover the entire scene. Usually, a scene is blocked so that certain sections are covered by specific angles. Otherwise, you end up wasting film and shooting time, both of which are expensive.

The lined script in Figure 11.3 is hideously oversimplified, but you see how the picture editor can use the script notes to learn what sort of coverage exists.2 He'll pick from this treasure trove of shots, selecting the best moments and the greatest performances, and piece together a believable, emotional story that pulls the viewer through the action and into the next scene.

Figure 11.3 A simplified example of a lined script.

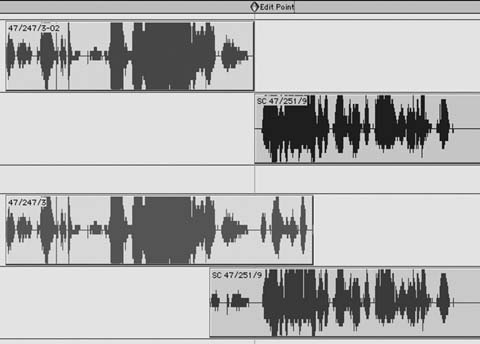

Organize by Sound Edits, Not Picture Edits

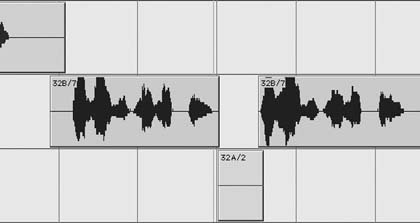

In the scene shown in Figure 11.3, begin by placing every appearance of shot 32 (the master shot) on Dial A, all 32A regions on Dial B, and so forth.3 (See Figure 11.4.) Later you might decide to further split the regions, but this is a good start—and it's very easy. Remember that even though you're splitting the dialogue regions by shot, you're not (or you are but very rarely) cutting with the picture. You're splitting by “original microphone angle” and your splits will be based on sound, not picture, edits. If picture editors always cut picture and sound at the same moment, films would be awfully boring, so sound and picture edits are often staggered.

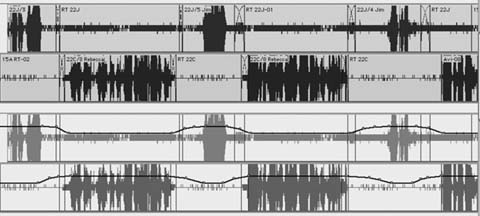

Figure 11.4 As you begin to split a scene, the most sensible place to start is by shot. In this example, scene 71, all of the regions from shot (or “slate” in this example) 206 go to Dial A, regions from shot 209 go to Dial B, and so forth. The sequence is a matter of taste, but many editors place the first shot to appear in a scene onto the first track used in that scene.
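The split-by-shot bookkeeping described above can be sketched in a few lines of Python. This is only an illustration, not a feature of any workstation: the clip-name pattern (scene number, optional shot letters, a `-` or `/` separator, then the take number, as in `39C-4` or `63C/1`) and the helper names `parse_clip_name` and `assign_tracks_by_shot` are assumptions made for the example. Your own region names may follow a different convention.

```python
import re
from string import ascii_uppercase

# Assumed naming convention: scene digits, optional shot letters,
# a "-" or "/" separator, then the take number (e.g. "32A-3").
CLIP_NAME = re.compile(r"^(?P<scene>\d+)(?P<shot>[A-Z]*)[-/](?P<take>\d+)$")


def parse_clip_name(name):
    """Split a clip name into (scene, shot, take)."""
    match = CLIP_NAME.match(name.strip())
    if match is None:
        raise ValueError(f"unrecognized clip name: {name!r}")
    return match["scene"], match["shot"], int(match["take"])


def assign_tracks_by_shot(clip_names):
    """Give every shot its own track, in order of first appearance.

    All takes of a shot share a track, because the split is by
    microphone angle (shot of origin), not by take.
    Supports up to 26 shots (Dial A to Dial Z).
    """
    shot_to_track = {}
    layout = []
    for name in clip_names:
        scene, shot, _take = parse_clip_name(name)
        key = scene + shot
        if key not in shot_to_track:
            shot_to_track[key] = "Dial " + ascii_uppercase[len(shot_to_track)]
        layout.append((name, shot_to_track[key]))
    return layout


if __name__ == "__main__":
    for clip, track in assign_tracks_by_shot(["32-2", "32B-1", "32A-3", "32B-4"]):
        print(clip, "->", track)
```

Assigning tracks in order of first appearance follows the habit, mentioned in the caption, of putting the first shot heard in a scene on the first track used in that scene. It is a starting layout only; later splits for noise or perspective are still made by ear.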

In the scene with Edmund and Fanny, the editor might cut to Fanny's close-up before Edmund completes his first speech. Although we see Fanny's appreciative face, we hear the end of Edmund's track. Still holding onto Fanny's CU, we hear her lines, now in sync with her picture. At the end of Fanny's lines, the picture editor chose to hang on to her CU for a few moments, even while we hear Edmund's retort. In all such cases, you will split the dialogue based solely on sound edits.

At this point, you don't know anything about the tracks, but you can make certain blind assumptions. By organizing the scene by shot, you can better understand its sound issues. Plus, this configuration will make the mix sensible and logical.

Additional Splits

At times you'll want to split your tracks beyond the basic shot-of-origin mode. Perspective cuts, telephone conversations, problematic noises, or places where you want extra focus are just a few of the reasons to do this. If, for example, the picture editor used three different takes of Edmund's close-up, two of which have the same room tone and another that suffers from a low-frequency rumble, you should split the rumble-infested take onto its own track. After all, it will unquestionably need special processing because of the noise, and it will likely benefit from longer room tone transitions.

Don't be shy about splitting; tracks are cheap. In general, splitting is better than not splitting, but thoughtless or unnecessary splitting creates more problems than it solves. Talk to the rerecording mixer about how many tracks you can use, and how detailed she wants the splits to be.

Organizing multitrack clips is covered in the section “Working with Many Channels of Dialogue,” later in this chapter.

Unnecessary Inserts

Just because the picture editor gives you a sound clip doesn't mean you have to use it. If, while talking to Fanny, Edmund (32B) takes a thoughtful pause and the picture cuts to Fanny silently nodding her approval, the picture editor will probably, out of habit, cut both picture and sound to Fanny's shot (32A). When the lecture continues, the editor will cut back to Edmund's track. What you're left with is a change of room tone when we cut to Fanny and another when we return to Edmund (see Figure 11.5).

Figure 11.5 When you cut from 32B to the silent cutaway of Fanny, 32A, you may introduce unnecessary room tone changes and gain nothing in exchange.

Clearly, if Fanny says something or makes an audible sigh or movement, you'll need her insert sound to maintain a sense of reality. However, if the sound from her insert offers no useful information, you'll end up moving back and forth between room tones for no reason. Not only does this make you work harder than necessary, but it subjects the viewer to yet another room tone transition. If the inserted room tone doesn't help the story, get rid of it and cover the inserted shot with room tone from Edmund's track (see Figure 11.6). The result: a smoother track that's easier to cut and easier to mix. Everyone wins.

Figure 11.6 If there's absolutely no interesting information in Fanny's short cutaway, remove it and fill with Edmund's room tone. Also, if Fanny's cutaway has useful information but is unacceptably noisy, remove it and fill with tone from 32B. Later, Foley may be added to liven up the gap.
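One way to find cutaways worth auditioning is to look for a short clip from one shot sandwiched between two clips of another shot. The sketch below is a hypothetical helper, not part of any editing system: the `Clip` record, the function name `cutaway_candidates`, and the three-second threshold are all assumptions chosen for illustration. It only lists candidates. Whether an insert carries useful sound is a decision you make by listening.

```python
from dataclasses import dataclass


@dataclass
class Clip:
    shot: str        # shot of origin, e.g. "32B"
    start: float     # timeline position in seconds
    duration: float  # clip length in seconds


def cutaway_candidates(clips, max_duration=3.0):
    """Return short clips that sit between two clips of one other shot.

    `clips` must be in timeline order. The threshold is arbitrary;
    tune it to the pace of the film.
    """
    candidates = []
    for before, middle, after in zip(clips, clips[1:], clips[2:]):
        sandwiched = before.shot == after.shot and middle.shot != before.shot
        if sandwiched and middle.duration <= max_duration:
            candidates.append(middle)
    return candidates


if __name__ == "__main__":
    scene = [
        Clip("32B", 0.0, 5.0),   # Edmund speaks
        Clip("32A", 5.0, 1.5),   # Fanny's silent cutaway
        Clip("32B", 6.5, 4.0),   # Edmund continues
    ]
    for clip in cutaway_candidates(scene):
        print("audition:", clip.shot, "at", clip.start)
```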

How Many Tracks?

In general, you want enough principal dialogue tracks to be able to cut two consecutive scenes of average complexity without reusing tracks. So if, on average, you use six tracks per scene, you'll want 12 to 14 primary dialogue tracks. Sometimes things get out of control and a long or madly complex scene uses ten or more tracks, so the next scene will necessarily reuse some. Don't worry.

On small films I typically use about 14 main dialogue tracks. By the time I get to the dialogue premix, I've added a few ADR tracks, some production effects, and X, Y, and Z tracks, ending up with about 30 altogether. Any mixer can handle 30 tracks, but if I'm working with a mixer or dubbing stage I don't know, I'll send a list of my tracks and ask if this arrangement is reasonable.

Track layouts are very much project-specific. Generally for small, simple projects I prefer to keep production sound to eight tracks (usually about four to five per scene, roughly checkerboarded) plus two to four for split-out production FX, and another four for ADR. It's not usually necessary to have more than 16 tracks for a DX tracklay, though this is DEFINITELY dependent on the scope of the show. On larger projects I have had sessions that are many, many tracks wide.

Polly McKinnon, dialogue editor

The Lord of the Rings: The Two Towers

No matter how many tracks you end up with, be sure to have the same number on each reel, in the same order. Even if you don't use every track on each reel, go to the mix with every track in its proper place. This way the tracks will always come up on the correct channels of the mixing desk. Repatching or rerouting is a nuisance and always leads to mistakes. If a track goes unused for an entire reel, you may choose to make it inactive, just to let the mixer know not to bother thinking about it. But this borders on obsessiveness.

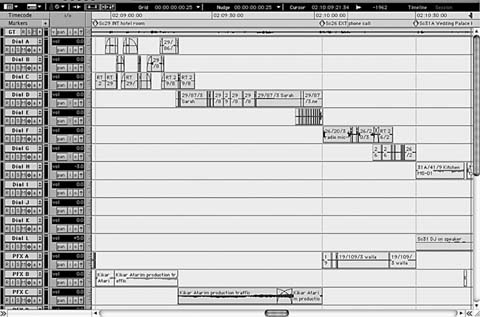

Scene-to-Scene Splits

Just as you split your tracks within a scene, you can split one scene against the next. The idea is to avoid recycling tracks on adjacent scenes in order to make for less heroic automation between scenes. Say that one scene uses eight tracks (Dials A → H); try to start the next scene on track 9 (Dial I).

Figure 11.7 Try not to put adjacent scenes on the same tracks. Here scene 29 is on Dials A, B, C, and D, plus PFX B and C. Scene 26 (including its split, which begins during scene 29) is on Dials E, F, and G plus PFX A. The beginnings of scene 31A can be seen on Dials H and L.

The third scene will then begin again on Dial A (see Figure 11.7). If you have a very long or complicated scene that won't allow checkerboarding, just make sure that its first couple of shots start on tracks that weren't used at the very end of the outgoing scene. (See Chapter 19, “Preparing for the Mix,” for more on this topic.)
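The checkerboarding described above amounts to a simple placement rule: start each scene on the track after the previous scene's last track, and wrap back to Dial A when the scene no longer fits. The following Python sketch shows that rule under stated assumptions: the function name `checkerboard`, the 16-track default, and the single-letter track names are illustrative choices, not a standard.

```python
from string import ascii_uppercase


def checkerboard(track_counts, total_tracks=16):
    """Lay out consecutive scenes so neighbours avoid sharing tracks.

    track_counts: number of dialogue tracks each scene needs, in order.
    Returns one (track_names, reuses_previous) pair per scene, where
    reuses_previous is True if the scene had to share a track with
    the scene just before it.
    """
    if total_tracks > len(ascii_uppercase):
        raise ValueError("this sketch only names tracks Dial A to Dial Z")
    layout = []
    previous = set()
    next_free = 0
    for count in track_counts:
        if count > total_tracks:
            raise ValueError("scene needs more tracks than the session has")
        # Continue after the previous scene if there is room, else wrap.
        start = next_free if next_free + count <= total_tracks else 0
        used = set(range(start, start + count))
        names = ["Dial " + ascii_uppercase[i] for i in sorted(used)]
        layout.append((names, bool(used & previous)))
        previous = used
        next_free = start + count
    return layout


if __name__ == "__main__":
    for names, reuses in checkerboard([8, 6, 4]):
        print(names[0], "to", names[-1], "(reuses previous)" if reuses else "")
```

With scenes needing 8, 6, and 4 tracks, this places the first on Dials A to H, the second from Dial I, and the third back on Dial A. When a scene is flagged as reusing tracks, that is the cue to hand-place its first couple of shots on tracks that were idle at the end of the outgoing scene.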

Where to Edit

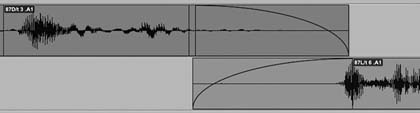

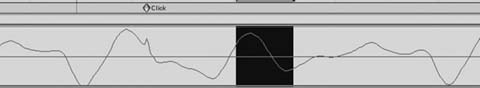

By necessity, editing dialogue involves making splices. You must get from this shot to the next—where do you jump? By far the most common place to move between clips is in the space between them. This allows for a natural transfer of energy from one source to another (see Figure 11.8), and it usually works.

Figure 11.8 The most common place to fade from one clip to the next is during the pause between them.

Longer crossfades, shorter crossfades, asymmetrical crossfades—these will influence the effectiveness of the transition. But if cutting between words or sentences doesn't yield believable speech, then you probably need to pick another place to edit. Here are some easy possibilities:

- Try forgoing the smooth crossfade between clips, opting instead for a hard (or almost hard) cut just before the first modulation of the incoming sentence; the energy of the incoming word may be strong enough to mask the underlying tone mismatch (see Figure 11.9). Such psychoacoustic masking is part of the thinking behind such wonders as MP3 encoding.

- Move the edit slightly while maintaining the same sync. This will work for you until there is a text or rhythm change between the clips.

- You may be able to edit just within the first word of the incoming shot.

These solutions involve cutting in or near the gap between the words. However, sometimes that just won't do; you must gather your wits and begin editing within words. This involves a certain familiarity with the workings of language.

Using the Structure of Language to Your Advantage

The sounds of language consist of vowels and consonants. Generally, vowels are created with an open vocal tract and consonants are the result of some sort of constriction in the mouth or throat. Understanding a bit about vowels and consonants can be helpful when you're trying to squeeze takes together to form a replacement sentence or when you need to tighten or loosen a phrase.

For the most part, it's the vowels that give you trouble. They're usually longer than consonants, so they have more opportunities to wreak havoc, and being open they tend to be more tonal, more musical, than their

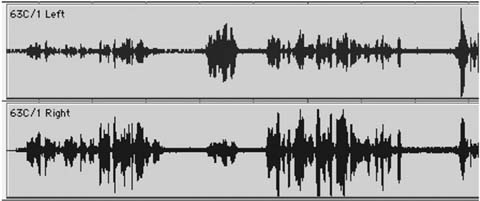

Figure 11.9 If the region you're fading to (in this case, 63C/1) doesn't have a usable handle, or if a crossfade between the clips results in audible changes in room tone, hide the shot transition in the strong initial modulation of 63C/1's beginning. Often you can mask moderate changes in room tone behind such a heavy attack. Listen carefully to the transition—with plenty of preroll—to make sure you're not fooling yourself.

percussive consonant cousins. It can be frustratingly difficult to cut within vowels. In fact, unless you're totally without options, don't try it. You'll likely create a bump since the complex tonal elements of the vowel won't line up properly at your cut. And if you try to smooth the edit with a crossfade, You'll create a double voice. Approach vowels as respected adversaries and focus instead on consonants.

Most consonants are useful landmarks for lining up alternate takes against a reference. Those like D, P, and T tend to show up quite clearly in a waveform, so they're ideal beacons for navigating through a sentence. The only problem with short consonants is that they're, well, short. The very attribute that makes them useful for alignment lends them little flexibility. You can't really stretch time by making a T sound longer. You can, however, play with the space around it. Don't be afraid to lose a tiny bit of time before a T or buy a little more pause after a P. Just don't expect to gain a lot of time.

Editing within Words

If the vowels are too dangerous and the short consonants are good as markers but not the least bit flexible, where can you edit within words to change the length of a sentence?

- Cut in the pauses when possible. You can make up a lot of ground by pulling a frame here and there from the spaces between words, but there aren't always real pauses in a sentence or it sounds unnatural when you tamper with them. Be careful not to hurt the breaths.

- If a vowel is terminated with a sharp consonant sound, you might be able to shorten the end of the vowel using the attacking consonant sound to mask the vowel glitch. Try it; it just might work.

- Look for a shorter or longer vowel sound from another take. This isn't the fastest approach, but it may give you just what you need.

- Use sibilants. Sibilant sounds are the “hisses” created by consonants such as Sh, Ss, or Ch. They inhabit an enchanted land between vowel and consonant sounds. Like other consonants, sibilants are easy to spot; in fact, their familiar pursed-lip shape is the most obvious of all waveforms. Unlike normal consonants, however, sibilants are long-lasting so there are usually many opportunities to make them longer or shorter. Very tonal vowels are all but impossible to splice, but sibilants are more like white noise than music, so you're rarely punished for editing them. In fact, you can do (almost) anything: cut, fade, loop—all within reason, of course.4

No matter how you go about splicing together a line, there's one truth you really ought to pay attention to: never fade during a line of dialogue. Put another way, there should never be any dialogue inside a fade. You can easily fool yourself into thinking that your quick fade at the beginning of a word—your attempt to fix the actor's flub—actually works. You may decide that the fade over the ringout at the end of a sentence really does remove that little noise. No, on both counts. Fading over dialogue just doesn't work, and it robs the line of its natural energy.
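The rule that no dialogue should sit inside a fade is an interval-overlap test, and it can be checked mechanically once the speech has been marked. The sketch below assumes you have hand-marked speech regions and fade regions as `(start, end)` times in seconds; the function name `fades_touching_dialogue` and the data layout are hypothetical, invented for this example.

```python
def fades_touching_dialogue(fades, speech):
    """Return every fade that overlaps a marked speech region.

    fades, speech: lists of (start, end) times in seconds.
    Intervals that merely touch at an endpoint do not count as overlap.
    """
    flagged = []
    for fade_start, fade_end in fades:
        for speech_start, speech_end in speech:
            if fade_start < speech_end and speech_start < fade_end:
                flagged.append((fade_start, fade_end))
                break  # one overlap is enough to flag this fade
    return flagged


if __name__ == "__main__":
    speech = [(10.0, 12.5)]               # one marked line of dialogue
    fades = [(9.8, 10.0), (12.4, 12.7)]   # fade-in before, fade-out over the ringout
    print(fades_touching_dialogue(fades, speech))
```

Here the fade-in ends exactly where the line begins and passes, while the fade-out starts before the line has finished and is flagged. A check like this catches only what was marked; breaths and ringouts still have to be judged by ear.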

Shot Balancing

You've got to know where to cut, but you also have to know how to do it. Dialogue editing's most important sleight of hand is taking the elements of a scene, often shot at different times or under dissimilar conditions, and nursing them into a living scene that viewers will truly believe to be real. Matching the voice qualities, the degree of “on-mic” sound, and the level and quality of the room tone, and creating narrative-enhancing perspective are what make a scene shine. Shot balancing removes the mechanics of filmmaking, but exploits the language of film to allow the sound of the dialogue to be more than just the carrier of the text.

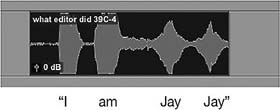

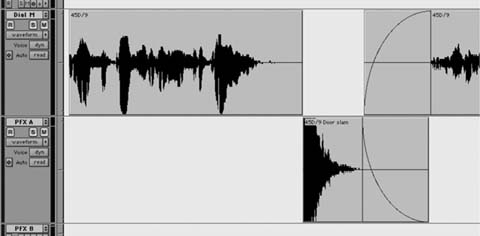

Sometimes film editors will lop off the ends of sentences and wonder why it doesn't sound right, and then expect you, the production dialogue editor, to fix it! Let's say an actor originally said “I am JJ, you idiot!” The director wanted to make this character more likable so the film editor cut off the words “you idiot” leaving the “JJ” dangling in midair. Figure 11.10a shows what the edit looks like in Pro Tools. Notice the scene and take number, 39C-4.

Figure 11.10a Sentence edited by the picture editor (39C-4).

Compare this to the original sentence:

Figure 11.10b Original line (39C-4).

In Figure 11.10b notice the little tail on the end of the second “Jay,” which is the word “you.” If the actor had said “I am JJ … you idiot!” it would have been easier to remove “you idiot” and have it sound almost like the end of the sentence. That's why looking for alternate takes is a good idea!

I found an alternate whose second “J” sounded like it would work as an ending of the sentence (Figure 11.10c).

Look at Figure 11.10c. Notice the scene and take number: 39C-2. The selected take was 39C-4. So even without looking at the sound reports, I know that there are at least takes 1 and 3 as well. After listening to all the coverage, this was the best candidate. I cut off the second “Jay” from 39C-2 and attached it to the original 39C-4 and added matching ambiance on either end. It's not perfect, but compared to just lopping off the words it's passable! (See Figure 11.10d.)

Figure 11.10d Completed sentence (39C-4 and 39C-2).

When you edit production sound, you have to be a “dialogue detective” and search around to find creative solutions for problems that you hear. Sometimes you find these solutions in alternate takes or alternate angles. By listening and keeping your mind and ears open, you will start to be more creative in finding “fixes.”

Victoria Rose Sampson, dialogue editor

Pirates of the Caribbean: The Curse of the Black Pearl; Sex and the City

Remember, films are shot with one camera, not in script order. Between each shot or setup (not each take), the camera relocates, the lights are reset, and all sorts of other things change. This results in a new sound for each angle. Sometimes the difference between shots is hardly noticeable; other times the contrast is shocking, leaving the edited scene all but unlistenable.

Compare cinema's single-camera approach with video studio production techniques. Soap operas and many TV comedies are shot with several cameras, either in front of an audience or designed to give you the impression that an audience exists and that it's live. Because scenes are shot more or less in real time, there's little difference in the sound characteristics from one shot to another.

There's usually no change in set or lighting between shots (since they're shot multicamera), so there's little reason for each angle to have a different sound. Besides, these productions are almost always shot on a sound stage, so the overall noise and the differences from one angle to another are under much better control. Finally, the sound source doesn't change with the picture: the sound is captured from a common studio boom or a battery of radio mics, so there's no resulting change in room tone or sound color (see Figure 11.11).

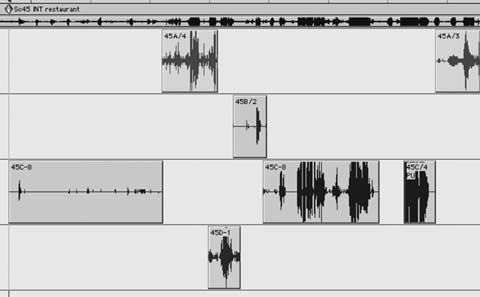

Now, back to film production. Imagine an interior restaurant scene (we'll call it scene 45) in which three people (Bob, Betty, and Blanche) are talking. Betty's back is to the kitchen, so her room tone includes refrigerator rumble in a large, hard space as well as some shuffling about. Bob's back is against a soft banquette, so his room tone is comparatively quiet. Behind Blanche is the rest of the restaurant; goodness knows what her shot sounds like, but it's probably full of air conditioner noise. (These shots have far more similarities than differences, but in this example we'll exaggerate things for the sake of clarity.)

Figure 11.11 Plan of multicamera studio recording. Shown are the characters, the boom microphone, and three video cameras. Sound is captured from studio boom microphones or from several wireless microphones. The character of the sound doesn't change from shot to shot.

Figure 11.12 A schematic of scene 45. Note the different camera setups for each shot. Also note the landmarks in the room and their relationships with the characters and the camera. The geography of the room helps define the room tone for each shot.

The scene consists of several shots (see Figure 11.12):

- Wide establishing shot of everyone at the table, scene 45.

- Medium close-up of each of the three characters:

a. Betty CU, scene 45A

b. Bob CU, scene 45B

c. Blanche CU, scene 45C

- Medium shot, POV from the kitchen, scene 45D.

Now piece together an imaginary scene from the shots just given. The picture editor will analyze each one and fashion a scene that exploits the strengths of the footage and conceals its weaknesses to best tell a story. Chances are, the resulting sound bumps aren't the editor's highest priority. The picture people tell the story; we try to make it work. Besides, “It all sounded pretty good in the Avid!”

As soon as you open the AAF, the scene is annoying. Cuts between Betty and Bob result in two mismatched kinds of kitchen noise, while moving to Blanche you lose the hum of the kitchen only to hear the din of the restaurant. And neither of the wider shots cuts well against the close-ups. Even if the scene is interesting and dramatically effective, it won't sound like a movie because of the bumps. How can this happen? Remember that picture editors have things on their minds other than the sound of shot transitions, so what to you seems an unforgivable lapse of judgment makes perfect sense to them. They're right. The story is what counts. Let the sound department deal with the bodies.

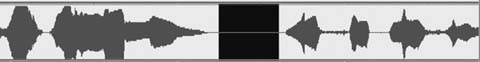

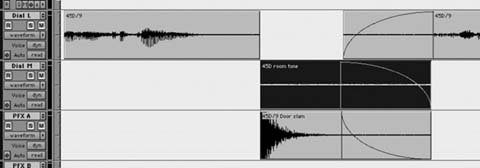

Scene 45 is sitting on your desk and, for whatever reason, it doesn't work. Before you can effectively attack the other issues (noises, overlaps, replacements, perspective, etc.), you must make the scene reasonably smooth. Otherwise, the irregularities caused by room tone mismatches will make it all but impossible to listen for the more subtle problems (see Figure 11.13).

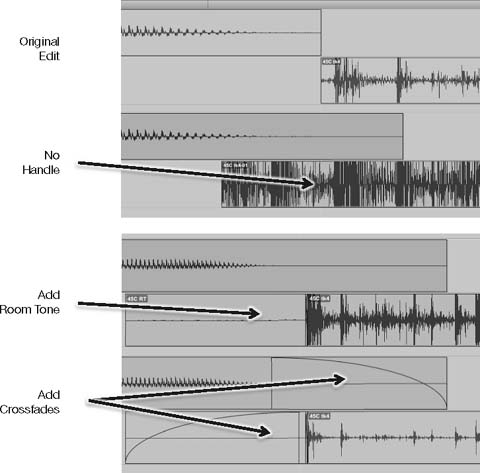

The easiest and quickest way to start smoothing the scene is to simply open up the handles of the clips on either side of the cut, make a crossfade, and hope for the best. As it turns out, we were able to create clean room tone bridges merely by widening the clips. Fortunately for Bob, Betty, and Blanche, the editing gods smiled on us; as we pulled out the handles, we didn't encounter any footsteps, breaths, or words to ruin the room tone. Finding good “free” room tone like this isn't unusual, but to find it for a whole scene is unheard of. Count your blessings.

Figure 11.13 Detail from scene 45 after you've organized the tracks but before editing. Each shot has different room tone, so the scene is bumpy. Note that Blanche (45A) and the shot from the kitchen (45D) have much heavier room tone than the other shots.

Figure 11.14 Just because you have a handle doesn't mean it's useful. Here you see the OMF edit (above) and after opening handles (below). The marker indicates the location of the original cut. Note that there's no useful room tone in either handle. Always listen for words, breaths, and motion sounds when opening handles for tone bridges.

What do you do when the handles don't provide the room tone you need for transitions, as shown in Figure 11.14? You must create room tone, attach it to the region, and then make your crossfade. The next section deals in depth with room tone—what it is, how to make it, how to manage it. But first let's look at an example of bridging shot transitions using room tone you've found or created.

Figure 11.15 When a clip's handle cannot be used, copy room tone from a nearby part of the same shot. Then attach it to the original sound at the edit point and fade.

Often when the room tone in the handle is of no use, you'll find enough elsewhere in the same shot. Since the character of most room tones varies over time, looking nearby has two advantages: it's easy and it's likely to match (see Figure 11.15).

Remember, the room tone you're attaching to a shot must come from that shot (but not necessarily from the same take). Doing otherwise defeats the point of splitting tracks and organizing your scenes by shot of origin. If you can't find appropriate room tone elsewhere in the shot, try copying the region to a work track and fishing through the entire take. This technique is described in the next section.

You've now smoothed shot transitions in two ways: the lucky way, where you find usable room tone in the shots' handles, and the more common method, where you copy or construct a tone transition. Although each set of regions poses unique transition problems that require their own solutions, all shot transition puzzles are solved with some variation of these techniques.

You soon discover, however, that smoothing scenes is a constant tradeoff between total noise and evenness, and many novice editors err on the side of noise. “Lay down a wall of room tone,” the logic goes, “and you can mask the differences between shots.” Or you can mask the differences between the shots by running a vacuum cleaner in your cutting room while listening to the scene, but that's hardly a solution (see Figure 11.16).

Figure 11.16 Room tone was applied for the entire duration of scene 45 in an attempt to mask shot mismatches. The underlying problem has not been solved, and the scene will now be much noisier.

Although there are (rare) occasions when you want to include a wall-to-wall carpet of room tone in a scene, you shouldn't count on this as a solution to shot mismatches. But because this ill-conceived practice is so common, it's worth looking at why you shouldn't work like this. Look again at Figure 11.16. We've done nothing to ease the transitions. True, the bumps are less audible, since the problematic transitions are hiding behind the room tone track. During the dialogue premix, however, you'll undoubtedly remove some of the ambient noise and the mask will lose its cloaking ability. The cleaner you make the tracks sound, the more this editing technique will betray you.

Aside from being ineffective, this technique makes for noisy tracks. Throughout the scene, at least two tracks of room tone are playing. Twice the room tone, double the noise floor. The original problem wasn't within the shots but only at the transitions, so why should we add unnecessary noise to the middle of perfectly respectable shots? To understand this you must know something about room tone.
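The cost of “twice the room tone” can be put into numbers. The sketch below assumes the room tones are uncorrelated noise, so their powers add; under that assumption two tones at the same level give double the noise power, a rise of about 3 dB. The function name `summed_level_db` and the levels in the example are illustrative only, not measurements from any film.

```python
import math


def summed_level_db(levels_db):
    """Combined level, in dB, of uncorrelated noise sources.

    Each input level is converted to relative power, the powers are
    added, and the total is converted back to dB.
    """
    total_power = sum(10 ** (level / 10) for level in levels_db)
    return 10 * math.log10(total_power)


if __name__ == "__main__":
    shot_tone = -50.0     # illustrative room tone level of the shot itself
    blanket_tone = -50.0  # illustrative level of the wall-to-wall tone track
    rise = summed_level_db([shot_tone, blanket_tone]) - shot_tone
    print(f"noise floor rises by {rise:.1f} dB")
```

If the blanket tone is laid in quieter than the shot, the rise is smaller, but then it also masks less, which is the tradeoff between noise and evenness described above.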

Room Tone

Room tone is the single most important tool of dialogue editing. Just as woodwind players are obsessive about their reeds, or fly fishermen have a mystical relationship with their flies, so too are dialogue editors fanatical about room tone. Without it, you can't edit.

At the same time, the term “room tone” is grossly misunderstood and misapplied. To many, it means “an interior atmosphere.” To some, it's any atmosphere. Wrong on both counts. Room tone is the “silence” in any location recording, what's left from a take when you remove the words, the footsteps, the breaths, and the rustle. It describes the location (interior or exterior) where a scene was recorded, and it helps define the essence of a shot.

Bumps in an edited scene are usually caused not by differences between characters' voices but rather by differences between their room tone. It's room tone that sets the shots apart, interfering with the magic of cinema continuity. And it's room tone that enables you to create a smooth and believable scene. Dialogue editors use room tone in every stage of editing. These are some of the most obvious examples:

- To bridge between different shots within a scene, lending the impression that the scene is a continuous action rather than a collection of shots.

- If added judiciously, to ease the transition between quiet close-ups and boomy wide shots.

- To remove noises from dollies, denture smacks, lip flaps, offscreen talking, and so on.

- To replace the environmental sound when dialogue must be rerecorded (ADR). Any missing body action sounds are replaced with Foley or with similar sound from elsewhere in the shot.

- To enhance international versions of films (M&E) when original room tone is added to atmospheres so that the international version resembles the original-language version.

Room Tone Versus Backgrounds or Atmospheres

Simply put, room tone belongs to the dialogue department, whereas atmospheres (also called “backgrounds” or “ambiances” depending on where you live) are the domain of the sound effects/background department. Dialogue recordings are mono (perhaps multitrack, but not stereo), and thus so is room tone. Atmosphere recordings are usually stereo or 5.1, although some are mono. Good room tone will have all of the characteristics of the shot that it's fixing. Level, color, pitch, “action”—all of these attributes must match the shot at the moment in the scene when it's used. Even if the location mixer records a stereo atmosphere of the set, this is not the same as a room tone and its uses are different.

Finding Room Tone

A location recordist will try to record room tone for each scene filmed. This will solve some of your problems, but it's not necessarily the answer to your prayers. If there are four shots for a given scene, for example, each one will have its own distinct sound. Maybe the lights have moved; perhaps there's a noisy highway behind a character in one angle but not in others; or the camera may be closer to the actor (and microphone) in one shot than in the others so the camera noise is discernibly louder. The reasons for mismatched room tone are endless (see Figure 11.17).

When the location mixer records 30 seconds of room tone for the scene, whose tone is he recording? The shot with the camera? The shot with the highway noise? The shot with more lighting ballast noise? Or perhaps an “average” room tone for the scene?

To make matters worse, it's often very difficult for the location mixer to finagle a few seconds of real silence on the set while she records. Her call for “Total silence!” doesn't mean “Whisper softly into your cell phone” or “Slowly open your lunch.” “Absolute quiet!!” doesn't mean that the continuity person can complete her notes or that the grip can play with his pliers. Bottom line: There's no guarantee you'll be able to find good room tone within the wild room tone recordings from the set. Often, you have to make it yourself.

Figure 11.17 Shooting on location means mismatched room tones. In this convenience store, there are several sources of steady noise, many of which can't be turned off. Add to that the traffic outside and the noises created by the shoot itself—ballasts and the generator—and you see how it's hard to depend on a “general” room tone recording intended to cover the whole scene.

Creating Room Tone

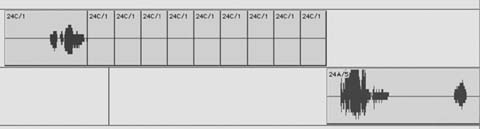

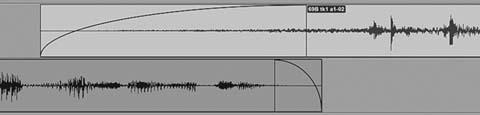

A common myth is that you need to locate only a small snippet of decent tone and then you can loop it to cover any duration. Wrong. Unless you've created a room tone so sterile that it totally lacks texture, any loop you attempt will be heard as just that: a loop. Reasonably long stretches of room tone can be looped, but even these usually require some tricks to avoid sounding repetitive (see Figure 11.18).

Figure 11.18 A room tone loop fills the hole between two shots. A string of regions that looks like this will sound like a loop. Anything this symmetrical means that the editor didn't listen while filling the gap.

Another myth is that you can successfully create loads of room tone in a sampler. It can be done, but the result is rarely natural, alive, or believable.5 For the most part, if you want good room tone for a shot you must collect bits and pieces of it from within the shot and then piece together a convincing construction (see Figure 11.19).

The new room tone must come from within the same shot as the material it's supporting. Using another shot's tone won't solve your problems. What do you do?

1. Make a copy of the clip for which you're trying to create room tone. Paste it onto one of your work tracks.

2. Open the handles of this clip all the way to its boundaries.

3. If you're working in Pro Tools, enter Shuffle mode. On other DAWs, use a mode that allows you to ripple tracks while editing. (Take note: One of the main reasons you created work tracks was to have a safe place for ripple editing. If you use Shuffle mode on a “real” track, you'll lose sync on all of the track's downstream regions.) Remove all dialogue and noises from this region. The region will become increasingly shorter and you'll be left with room tone only. You can actually remove most of the words and noises without listening to the track; let the graphic display and your gut be your guide.

4. Make a couple of fine passes, each time removing more and more unwanted noises, breaths, and rustle. You should end up with a very smooth chunk of room tone, devoid of recognizable landmarks. The process is much like cooking a reduced sauce—you simmer it for hours and hours until it becomes a small, perfect concentrate.

Figure 11.19 More room tone is needed for the highlighted section on Dial E. If a region's natural handles aren't useful, copy the region to a work track and completely open the region's handles. There'll be many possibilities for finding good room tone.

5. You can automate the process of isolating room tone with Pro Tools' Strip Silence function (see Figure 11.20). This feature, equivalents of which are found on most workstations, asks for a threshold volume and a duration, which it uses to define “analogue black.” If you dial in −35 dB and 500 ms, for example, it will create a new region wherever the peak level doesn't exceed −35 dB for at least half a second. Some people like to separate the wheat from the chaff using Strip Silence; I prefer to do it manually.

Figure 11.20 Pro Tools' Strip Silence dialogue box.

6. When your room tone is smooth and without transient sounds, perform crossfades at each of the edits you created (see Figure 11.21). This will smooth the cuts and prevent clicks (especially important if your room tone carries lots of low-frequency information).

Figure 11.21 Smooth out the room tone with crossfades.

7. You'll want to create a consolidated region from your jumble of crossfaded clips. One complete clip is easier to edit, fade, and automate. Plus, you'll have a properly named room tone file that will appear in your clips list and can be imported into other reels of the film. Before consolidating this string of edits, be certain that you're happy with the edits and the crossfades, since once consolidated the edits can't be fixed. Consolidating creates a new soundfile, which you must subsequently name.

8. Name the new file using a sensible, systematic, easily sorted naming scheme—for example, “RT Sc32 Bill WS (quiet version).” This provides all the information you need to find the right room tone without scratching your head. You don't want to end up with hundreds of room tone soundfiles named “Aud_1-1.” Be sure to include “RT” in the clip name so that you can easily find it. How you name your room tone creations is not important as long as you're consistent. Later in the scene, or perhaps when you edit the ADR, you'll need the room tone files you painstakingly created. Make it easy on yourself by giving them consistent, logical names.

9. If you're working in Pro Tools, return to the Slip mode. If you are using another workstation, leave the ripple enable mode and return to normal editing.

10. If you're going to immediately edit with your new room tone (which is usually the case), trim away the beginning and end of the soundfile before copying it to a track adjacent to the one you're editing, from which you'll cut and paste as necessary. Trimming the top and tail of the soundfile provides a handle you can use to fade or trim any new edit (see Figure 11.22).

Figure 11.22 Consolidate the tone into one manageable region. Trim off the region's outer edges (seen here beyond the highlighting) to facilitate edits and fades.
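The threshold-and-duration logic described in step 5 can be sketched in a few lines. This is not how Pro Tools implements Strip Silence. It is only a minimal illustration of the idea, assuming audio held as a list of floating-point samples where 1.0 is full scale. The function name and parameters are hypothetical.

```python
def find_quiet_regions(samples, sample_rate, threshold_db=-35.0, min_duration_s=0.5):
    """Return (start, end) sample index pairs where the peak level stays
    at or below threshold_db for at least min_duration_s."""
    threshold = 10 ** (threshold_db / 20.0)       # dB re full scale -> linear
    min_length = int(min_duration_s * sample_rate)
    regions = []
    start = None                                   # start of the current quiet run
    for index, sample in enumerate(samples):
        if abs(sample) <= threshold:
            if start is None:
                start = index
        else:
            if start is not None and index - start >= min_length:
                regions.append((start, index))
            start = None
    # Close a quiet run that lasts until the end of the clip.
    if start is not None and len(samples) - start >= min_length:
        regions.append((start, len(samples)))
    return regions

# Hypothetical clip at a toy sample rate of 1000 Hz:
# quiet tone, a word, a short pause, a tick, then more quiet tone.
clip = [0.001] * 600 + [0.5] * 100 + [0.001] * 300 + [0.5] * 10 + [0.001] * 500
quiet = find_quiet_regions(clip, sample_rate=1000)
```

Only the two stretches of at least half a second qualify. The 300 ms pause is skipped, which is why an automatic pass tends to miss short but usable bits of tone that you would catch by hand.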

Using All Takes to Create Room Tone

If, after you cull the words, noises, and other unwanted irregularities from your expanded take, there's not enough room tone to work with, you can extract what you need from all of the takes of the shot.

- Find all of the alternate takes of the shot in question, including the one used in the film. (See Chapter 15 to learn how to do this.) String the takes together, one after another, onto the work track.

- Follow the procedure described earlier for extracting quality room tone from the takes. When finished, consolidate and name the new room tone file.

Dealing With Changes in Room Tone Pitch or Volume

Small, recognizable sounds—that tiny click, the minuscule footstep, the quiet lip smack—will betray you when you repeat a section of room tone. Plus, it's not likely that the click, step, or smack riding along with room tone will happen to fall in an appropriate spot in the scene. Get rid of such signatures.

However, sometimes it's not enough to remove the telltale noises. Say you're looping a piece of room tone that rises in pitch or level over the course of its five-second life (nondescript background traffic rumble is an example). Merely repeating the cue will result in a “reset” of the pitch at each loop edit. Even though you carefully removed the noises, your loop still sounds like a loop (see Figure 11.23).

You can often create a good loop from a pitch-periodic room tone file by copying and reversing the room tone (see Figure 11.24).

- Make a copy of your clean room tone region. Make sure there are no acoustic sounds such as footsteps, birds, or voices in the clip.

- Use your DAW to create a new, reversed region.

- Trim a small amount from the end of the original file and the beginning of the reversed file. Edit these shortened soundfiles together and crossfade as necessary.

- Consolidate and name the resulting soundfile.

Figure 11.23 This room tone region tends to get louder with time, so looping it will result in a sudden drop in level followed by a slow rise. The same problem can arise when the level is even throughout the region but there's a steady change in pitch. Either way, there'll be a bump at the edit.

Figure 11.24 Assuming there are no sounds with patterns (voices, birds, car horns, etc.) or with a clear attack and decay, you may be able to loop a room tone with a pitch or level pattern by reversing the soundfile.

The room tone will still have a cyclical pattern, but it will be smooth and complete rather than cut off in mid-period. In a pinch, it will work fine as long as you don't overuse it.
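The forward-plus-reversed construction can be sketched as follows, assuming the room tone is a plain list of samples. The function name and the `trim` parameter are hypothetical, and the crossfade at the join is left out to keep the example short.

```python
def make_reversed_loop(tone, trim):
    """Join a room tone to its own reversal so that the level or pitch
    pattern rises and then falls instead of resetting at each loop point."""
    if trim < 0 or trim >= len(tone):
        raise ValueError("trim must be smaller than the tone length")
    forward = tone[:len(tone) - trim]      # trim the end of the original
    backward = tone[::-1][trim:]           # trim the beginning of the reversal
    return forward + backward

# Hypothetical tone whose level creeps upward over its length.
rising = [0, 1, 2, 3, 4, 5]
loop = make_reversed_loop(rising, trim=1)
```

Because the loop ends on the same value it starts with, repeating it avoids the sudden drop shown in Figure 11.23. The pattern is still cyclical, which is why the technique is best kept for emergencies.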

Matching Room Tone and Dialogue

Go to great lengths to avoid applying EQ or other processing to a room tone during editing. If you have to change the room tone to match a shot, there's probably something wrong with it. Try again to find a better match, since “fake” room tone will come back to haunt you. Remember, it's unlikely that you can hear as well in your cutting room as on the mixing stage, so bold processing of room tone clips will likely backfire.

If all honest attempts at finding acceptable room tone fail, then anything is fair game. Equalize if you must, but listen to your work in a mix room—before the premix—to make sure you haven't fooled yourself into believing that the edit works. It's horribly embarrassing to play these tracks in the dialogue premix—tracks you're so proud of because of the nerdy manipulations you've subjected them to—only to hear that they sound nothing like the dialogue you're trying to match.

You may be tempted to use room tone from sound libraries. It's logical that you can purge the shot of room tone, leaving only the “good” words, and then fill in the holes with tone from someone else's recording. This flies in the face of all things dear to generations of dialogue editors and it defies the logic of room tone editing. Having said that, if following all the rules has gotten you nowhere and your back is against the wall, that SFX library room tone option seems pretty good.

Using Room Tone to Remove Noises in Dialogue

Location recordings are filled with small noises that often go unnoticed on first listening. The causes of these short nuisances are limitless, but the most common are static discharge, cable problems, lip smacks and denture clacks, dolly track, and crew noises. Individually, these tiny sounds don't amount to much, but collectively they steal focus from the dialogue and leave the impression that the set is much noisier than it really is. Thankfully, these transient noises are easy to remove as long as they occur between words.

Small ticks and pops can usually be replaced with room tone, but first you have to find them. The easiest way is by scrubbing until you pinpoint the click. Then zoom in to the offending noise. Find a tiny piece of adjacent room tone, copy it and paste over the click, and add short crossfades (see Figures 11.25 and 11.26). If you're removing several clicks within a small region, use a different piece of room tone for each replacement so it doesn't sound like a loop.
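As a rough illustration of the copy, paste, and crossfade move, the sketch below covers a click with tone taken from elsewhere in the same list of samples and blends the edges with short linear fades. It assumes you have already located the click and a clean source by ear. All names and parameters are hypothetical, and a real edit would also line up the waveforms as described in Figure 11.25.

```python
def patch_click(samples, click_start, click_end, source_start, fade_len):
    """Return a copy of samples in which samples[click_start:click_end] is
    covered by tone copied from source_start, with a linear crossfade of
    fade_len samples on each side of the patch."""
    patch_begin = click_start - fade_len
    patch_end = click_end + fade_len
    length = patch_end - patch_begin
    if patch_begin < 0 or patch_end > len(samples):
        raise ValueError("not enough room around the click for the fades")
    if source_start < 0 or source_start + length > len(samples):
        raise ValueError("source tone runs past the clip boundaries")
    patch = samples[source_start:source_start + length]
    repaired = list(samples)
    for i in range(length):
        if i < fade_len:
            weight = (i + 1) / (fade_len + 1)        # patch fades in
        elif i >= length - fade_len:
            weight = (length - i) / (fade_len + 1)   # patch fades out
        else:
            weight = 1.0                             # patch fully covers the click
        original = samples[patch_begin + i]
        repaired[patch_begin + i] = (1.0 - weight) * original + weight * patch[i]
    return repaired

# Hypothetical clip: silence with a single-sample click at index 50.
clip = [0.0] * 100
clip[50] = 1.0
fixed = patch_click(clip, click_start=50, click_end=51, source_start=10, fade_len=4)
```

Calling the function with a different `source_start` for each click is the code equivalent of using a different piece of room tone for each replacement.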

Techniques for removing such noises are discussed in detail in Chapter 14.

Copying Less Room Tone Than You Need

Earlier, when you created room tone by extracting “clean” silence from a shot and consolidating it into a single new soundfile, you cut off the edges so that you'd have some “wiggle room” for making edits and creating crossfades. (Refer to Figure 11.22.) The same tip applies when copying room tone from a pause in order to cover a click—take less than what's available. Leave some clean tone in the handle beyond what you select so that when you edit it into your track you'll have some extra room tone to play with and use for crossfades (see Figure 11.27). Remember, if there's motion or clothing rustle in the area you're repairing, your “fill” room tone must contain matching action. Don't go to the trouble of adding clean room tone to a rustle-filled shot when the resulting quiet will be distracting.

Figure 11.25 Often clicks are very hard to spot visually. The click here, indicated by the marker, can be fixed by replacing it with adjacent material. The selected sound will be pasted over the matching part of the region containing the click. Line up the sine curves to avoid making new clicks.

Figure 11.26 The copied material covers the click; short crossfades prevent introducing other problems. Note that the line of the waveform follows a more or less predictable path even after the new material is inserted.

Figure 11.27 Room tone copied from a pause in dialogue. Don't copy the entire available room tone. Omit a bit on either side of the selection so you can easily adjust the edit point and make crossfades.

Shot Transitions: Basic Rules of Thumb

Moving smoothly from shot to shot is what makes a scene believable. Managing noise is what makes a scene bearable. Keeping processing to a minimum is what makes a scene natural. Balance these competing interests and a scene will work. In this section you will see many types of edits to use in many situations. Consider them starting points, tips to set you on the right path. They are guides, not gospels. However, there are a few overarching principles that are common to all edits from one shot to another. Work with these basic rules in mind and the rest will largely take care of itself.

Rule One: Whenever Possible, Play Only One Source of Room Tone at a Time

Let each shot speak for itself, overlapping room tones only at transitions. It's true that there must be constant room tone running the length of a scene. After all, it's the air that a scene breathes. However, in most circumstances the room tone comes from the regions themselves. Only when there's a hole in the production track do you add a steady track of tone.

In Figure 11.28, each shot crossfades into the next. Here we were lucky because there was sufficient clean room tone within the handles of each shot to allow for easy crossfade creation. There's no added, steady room tone track; instead, the room tone comes uniquely from the shots that make up the scene so it rarely has more than one source. “But,” you ask, “what about the crossfades? During these transitions two room tones are playing at once.” Yes, but as one shot fades in its partner fades out, so the sum of the two is never more than 100 percent of the average level of the shots. You haven't broken the rule about avoiding more than one simultaneous tone.

Figure 11.28 Detail of preliminary edit of scene 45. Regions overlap more or less symmetrically and there's no supplemental room tone track. The room tone for the scene is carried by the shots themselves.
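The claim that the two fading tones never add up to more than 100 percent can be checked with a small sketch. It assumes simple linear fades whose gains are complementary at every sample. Workstations offer other fade shapes, and the function name here is hypothetical.

```python
def linear_crossfade(outgoing, incoming):
    """Mix two equal-length overlaps using complementary linear gains,
    so that fade_out + fade_in equals 1.0 at every sample."""
    if len(outgoing) != len(incoming):
        raise ValueError("overlap regions must be the same length")
    count = len(outgoing)
    mixed = []
    for i in range(count):
        fade_in = (i + 1) / (count + 1)   # gain applied to the incoming shot
        fade_out = 1.0 - fade_in          # gain applied to the outgoing shot
        mixed.append(fade_out * outgoing[i] + fade_in * incoming[i])
    return mixed

# Two hypothetical handles of identical, steady tone: the mix stays level.
steady = linear_crossfade([1.0] * 4, [1.0] * 4)
# Tone fading into silence: the level simply ramps down.
ramp = linear_crossfade([1.0] * 3, [0.0] * 3)
```

With identical material on both sides the result never exceeds either input, which is the sense in which the rule about a single source of room tone still holds during the overlap.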

Creating crossfades between regions doesn't eliminate sound differences between shots. It merely lengthens the transition so that you don't notice the changes as much. Human “hearing memory” is surprisingly short, so it doesn't take much to fool the ear into thinking that a transition is smooth. Just spread the transition over a bit of time and most listeners won't hear a thing.

Once you construct smoothing crossfades between shots, the scene will almost certainly sound quieter, as though you equalized or otherwise cleaned it. There will likely remain “swells” as you move back and forth between shots. When the room tone characteristics of two adjacent shots are very different, there'll be peaks and troughs in the overall noise level as well as changes in the sound itself. This brings us to the next basic rule of dialogue editing.

Rule Two: Evenness Is a Trade-off Between Noise and Smoothness

When two shots don't match well, you'll inevitably hear their transitions despite crossfades. By lengthening a crossfade, you'll smooth the transition and give the impression of evenness. At the same time, this will increase the total noise since there'll be a longer period during which two room tones are playing. What's the “right” length? Of course, there's no answer to that. It's a choice, so use your judgment. Don't be afraid to experiment with fade lengths; a tiny change in overlap can make a huge difference in balance, noise, and smoothness of the crossover. Plus, the two fades that make up a transition needn't be symmetrical. Typically, the noisier of the two room tones will require a longer overlap and longer fade, since it's carrying more energy—your hearing needs more time to adapt (see Figure 11.29).

Figure 11.29 The louder side of a crossfade will require more time to lose and gain energy, and normally will require a longer fade.

Rule Three: Design Scenes That Require the Least Amount of Processing

An edit that will already play smoothly allows the mixer to use less processing, less camouflaging, and makes the predub/mix less of a technical endeavor. As a mixer you don't want to have to battle the edit, but rather to finesse it and focus more on the artistic vision of the director.

David Barber, MPSE, rerecording mixer,

supervising sound editor

House at the End of the Street

While it's true that you can smooth almost any shot transition if you throw enough EQ and noise reduction at it, you have to ask yourself, “At what cost?” Scenes not properly prepared prior to the mix require much more processing to achieve an acceptable amount of smoothness. Because of the excessive filtering they may lack life, or sound thin and metallic, or at worst like a cell phone.

When your transitions are smooth, they're less objectionable to the ear. Hence, they require less shot matching. Of course, certain shots will need to be “helped along” and the entire scene may need noise reduction, but since your dialogue transitions largely take care of themselves, you don't need to overcook it. This allows the rerecording mixer to put more energy into making the shots sound nice—robust, full, warm, articulate. The dialogue hasn't been stripped of its character, so there's more to play with.

Ironically, the noisier the location of the scene, the bumpier you can leave your dialogue edit. If you're cutting a scene with lots of traffic noise, in a location where traffic is logical, rest assured that the sound effects editor can sort out a problematic dialogue transition with a well-placed car-by or other background motion effect. No one will notice one more car amid a noisy scene, and the tiny added motion energy will bridge the gap.

On the other hand, no scene is harder to cut than an intimate conversation in a dead quiet bedroom at three in the morning. There's nothing to hide behind, and if the shots aren't naturally well matched, it's a nightmare to cut back and forth. If there's camera noise on one shot but not on the other, you have very few smoothing resources and you can't count on the SFX guys to save you. There are few appropriate atmospheric sound effects that can be used to mask the bumps, so you're on your own. Hopefully, you will have a few mics to choose from.

Working with Many Channels of Dialogue

When mono Nagra was the industry standard, most location recordings came to the dialogue editor on single-channel 35 mm mag stripe. For better or worse, you were at the mercy of the location mixer, whose job it was (and still is) to combine all of the on-set microphones into a mixed track. Then along came stereo Nagra and later DAT, which enabled location mixers to provide two separate mixes or two solo tracks, depending on the complexity and needs of a shot. There were pioneers like Robert Altman, who was recording on multitrack tape as early as 1975 with Nashville, but mono or two-track location recordings remained the norm until recently.

Hard disk recorders are now the standard, and multitrack field recordings are changing the way everyone works, not just the pioneers. A location mixer can now provide you a good live mix, recorded onto one or two tracks, along with prefader, unmixed microphone tracks. The picture editor, and later you, will get a production mix that is often acceptable, especially for quick-turnaround or low-budget productions. But often you want to dig into the tracks to find the mics that will make the scene alive and clean, or you must correct problems in the field mix. Given enough time, you can exercise great control over the tracks, bringing them to the mix well planned and highly focused. But when you're facing a scene in which every shot has several channels of original audio, it's easy to lose your perspective and choke on the abundance of sounds. Do you pick one track or play them all? Do you play two tracks at the same time? The answer, of course, is “it depends.”

The lower the budget, the less time you have to mix, so I appreciate the dialogue editor choosing the microphones. Besides, if there is a problem he has the recourse to recall the discarded mic track. If the location recordings contain more than four tracks, then the dialogue editor must select the useful tracks and mute the unusable ones.

Patrick Ghislain, rerecording mixer

La lengua de las Mariposas

Many shots—a close-up of a talking head, a two-shot of seated characters, even a group of people—can be recorded perfectly well onto one channel using a boom, a directional microphone, and a skilled boom operator. Assuming that this single track is well recorded, nothing could be easier for you. Just fix the head and tail of the shot, remove any unwanted noises, and you're done.

On the other hand, it's really satisfying to have at your disposal every microphone—radio mic and boom—so that you can choose exactly where you want to focus. You have fewer overlap problems and no worries about the location mixer or boom operator missing his cue. Again, the problem is having the time to sift through the material.

Deciding Which Tracks to Use

On most projects you'll work with the original, isolated tracks of each clip, keeping the location mix as a reference. It seems silly for a location mixer to record all those tracks, only for you to ignore them and head straight for the location mix. What you do with these extra channels is largely guided by common sense, but it will also depend on the wishes of the film's rerecording mixer. If you've never prepared tracks for the person who'll be mixing your dialogue, have a little talk. Find out how he likes to receive multimicrophone, multitrack elements. Does he want all decent tracks to be available (and prepared) for the mix, or does he prefer that you make the call? How should you present the main choice? The other channels? Muted? Unmuted? Find out. The rest is up to you.

I love when the sound editor tries to save the boom. When he doesn't try to take away contrasts, rhythm, and ellipses. When he keeps a scene alive and holds on to accidents when they are not disturbing. When he respects what happened during the shoot.

Cécile Chagnaud, film editor/sound editor/sound designer

L'aube; Lettres vidéo

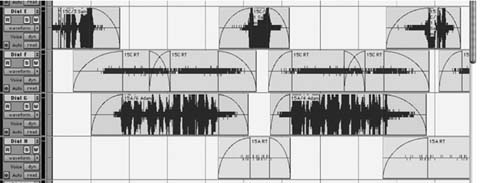

Deciding which channel to use is extraordinarily situation-specific, so the best I can offer you in a book like this is to set some basic rules, which, once learned, you can forget and then trust your gut. For example, earlier we learned the first rule of thumb of dialogue editing: Whenever possible, play only one source of room tone at a time. A simple, symmetrical crossfade often works well when crosscutting from one boom shot to another (see Figure 11.30). It also works with two radio mics in one shot. As long as the shots are reasonably similar, symmetrical crossfades almost always give you good results.

Figure 11.30 Boom shots crossfading against each other. This is the most straightforward and easiest of shot transitions.

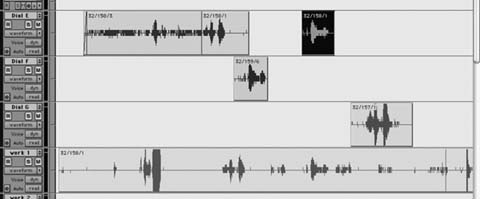

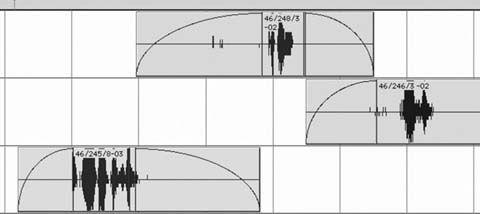

No matter how many tracks each shot carries, you have to get organized before you can edit. In Chapter 10 you learned that it's in everyone's interest that within a scene you organize the regions by shot (camera angle), all clips from a certain shot falling on the same track. The same rule applies for shots with lots of tracks. However, now there's one more thing to keep track of: the microphone used. Since the purpose of organizing your clips is to make for easier editing—and, more importantly, easier mixing—you've got to organize each clip by shot AND by microphone (see Figure 11.31). Otherwise, there's no point organizing anything at all.

It makes sense to me to put slates [shots] that will require the same treatment (EQ for example) on the same track, as opposed to religiously putting specific characters on specific tracks, which some editors do.

Polly McKinnon, dialogue editor

The Lord of the Rings: The Return of the King

Until you know how many dialogue tracks each shot will take up, you can't really organize, so the first thing to do is to pick your mics, more or less. As you get used to the clips you'll learn what each track (usually) represents; the sound reports are handy here, to let you know what's on each channel. If a channel is totally—yes, totally—useless, delete it (you always have the OMF/AAF original in case you later realize this was a terrible idea). Any track that you likely don't want, but is not without some merit, move to the junk tracks. Any tracks that seem to be of interest, keep. Organize the clips by shot—the normal way—but also make sure that each microphone within each clip lands on the same dialogue track in all appearances. If a certain channel is not used in a clip, leave it blank (see Figure 11.32). As long as you remember that the purpose of splitting is to make for an easy mix, you'll do fine.

Figure 11.32 When organizing a scene with multichannel clips, you must consider microphone as well as shot.
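One way to picture the "by shot AND by microphone" rule is as a lookup table in which every shot and microphone pair owns exactly one track for the whole scene. The sketch below is only an illustration. The shot names, microphone labels, and the "Dial A, Dial B" track naming are assumptions, and it handles at most 26 tracks.

```python
from string import ascii_uppercase

def assign_tracks(clips):
    """Map each (shot, mic) pair to one dialogue track, in order of first
    appearance, so the same pair always lands on the same track."""
    layout = {}
    for shot, mic in clips:
        key = (shot, mic)
        if key not in layout:
            if len(layout) >= len(ascii_uppercase):
                raise ValueError("this sketch supports at most 26 tracks")
            layout[key] = "Dial " + ascii_uppercase[len(layout)]
    return layout

# Hypothetical scene: shot 45A has a boom and one radio mic, 45B only a boom.
scene = [("45A", "boom"), ("45A", "radio 1"), ("45B", "boom"), ("45A", "boom")]
layout = assign_tracks(scene)
```

When shot 45A returns later in the scene, its boom goes back to the track it used the first time. A clip that lacks a given microphone simply leaves that track empty, as in Figure 11.32.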

Managing Multiple Mics

It's not always about finding the cleanest track. It's about finding the one that works for what we see on screen. I love when dialog editors dig through the production dailies and find alternate takes for problematic situations. It's always nice to present to your director another option instead of cueing the line for ADR.

Kunal Rajan, supervising sound editor

King of Norway; For the Love of Money

Editing with lots of options is really the same as editing with few. No matter how many mics you have to choose from, you usually end up editing from one microphone to another. That's it. The differences are only in microphone selection. Following are examples of shots with two microphones. They run from very simple to somewhat complicated.

One Shot, Two Mics, Similar Room Tone: No Dialogue Overlaps

Figure 11.33 A typical pair of radio microphone tracks. The characters are each wearing a wireless, and their room tones are similar. Normally, you won't want to let both tracks play at the same time, as all sorts of problems may arise.

Imagine a long shot in which two people walk toward the camera. There's no headroom for a boom, so both actors are wired with radio microphones (see Figure 11.33). Each carries her own room tone, but the tones aren't overwhelmingly different from each other. This doesn't sound altogether bad, and you may be tempted to do nothing at all, leaving both tracks. However, there are a few pitfalls in leaving both running simultaneously.

- You're unnecessarily playing two tracks, even though only one person is speaking at a time. This doubles the room tone noise.

- Since both tracks are always open, you increase the chances of radio microphone distortion, clothing rustle, and other nasties coming from the nonspeaking character's mic.

- Whenever one of the characters speaks, you hear her voice twice—once close and on-mic and again through her partner's microphone, muffled, off-axis, and slightly delayed. The resulting dialogue is less punchy because you now have two wavefronts: one tight and crisp; the other gooey and late. If you remove the off-mic track, you'll usually get better articulation and a sense of reduced ambient noise.

Contrary to general opinion, mixing two radio mics separated by a reasonable distance won't often result in phasing. In fact, you'll hear phasing only when the two characters are very close—kissing, hugging, or passing near each other. Cutting this shot is actually quite simple. Assuming that there's a pause between each character's lines, you can crosscut as though the sounds came from separate shots (see Figure 11.34).

If there's no room to crossfade between characters, you can likely cut in places where strong modulation will mask the edit. (Take another look at Figure 11.9.)

Figure 11.34 When two radio mic tracks carry similar room tone, you can usually crosscut between them. Listen to all of your fades (in solo) to make sure you're not including any of the “other” side of the conversation in your track.

Removing the off-mic information will make your shot sound crisper and more articulate. If you hear one character's room tone coming and going with her lines, first extend the room tone bridges. If this doesn't work, it's likely that the room tones are too dissimilar, either in volume or in texture, and you'll have to try a different approach. Read on.

One Shot, Two Mics, Similar Room Tone: Dialogue Overlaps

In the real world, people often interrupt each other. Sometimes it's an aggressive or excited attempt to make a point, but more often it's just part of the rhythm of speech. As if by some mandate from above, movie dialogue is much more serial—one person speaks, the other responds. It certainly makes for easier dialogue editing. When one character does interrupt another in a film, it's called an overlap. Usually, but not always, it's a problem (see Figure 11.35).

When a character's track is corrupted by the sound of her conversation mate's voice, you'll likely have to replace the end of the on-mic line as well as the beginning of the incoming line. At least that's the rule. However, when you're cutting back and forth between reasonably well-balanced and isolated microphones, overlaps don't always cause you grief. If there's very little crosstalk between mics, you can play both tracks for the duration of the overlap and hope for the best. The power of the interruption's attack will often mask the doubling of tone, so you won't notice a noise increase. If both sides are clean and on-mic, you can probably get away with playing both tracks for a few words.

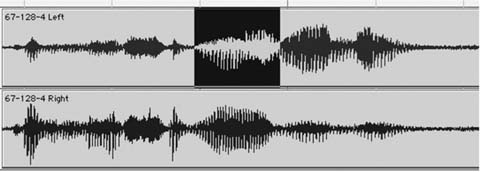

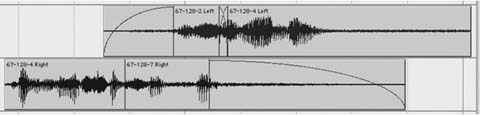

Figure 11.35 A typical two-radio microphone overlap. In the highlighted section, the top (left) track interrupts the bottom track. Outside the highlight, only one track is “on-mic” and the other can be eliminated. During the overlap, both characters are talking, which means that their lines are contaminated during the overlap and probably have to be replaced.

Figure 11.36 Both sides of the overlap have been repaired. A new beginning (67-128-2, left) replaced the damaged attack on the top track, and a clean ending (67-128-7, right) replaced the corrupted end of the bottom track.

Sometimes playing both tracks during a two-microphone overlap doesn't work. The increase in ambient noise due to room tone doubling may be audible. You may encounter strange coloring of the voices from the interaction of the two mics. Or you may hear a doubling of words if the two characters are far enough apart to create a timing difference but not so far as to keep them off of each other's tracks.6 In such cases, you must reconstruct from outtakes both the end of the corrupted line and the beginning of the interruption (see Figure 11.36). Refer to Chapter 14 for techniques of locating the alternate take material needed to salvage these overlaps.

Boom on Track 1, Radios on Track 2: Similar Sound

Occasionally a boom and a radio microphone will crosscut surprisingly well despite common sense telling you otherwise. Try using the crosscutting technique shown in Figure 11.34. Odds are good it will work. If not, continue reading.

One Shot, Two Microphones: Dissimilar Room Tone

Sometimes it's not possible to crossfade back and forth between the two microphones of a shot. At each crossfade you hear a wave of changing room tone energy and the scene loses its footing. Matters are made worse in “cutty” scenes, since the frequent cuts are constantly reminding the ear of tone mismatches. This is a common situation when editing a scene with a boom and one or more radio mics, but two radio mics can also result in the same problem.

Figure 11.37 What's left of the original pair is on Dial E and Dial G after cutting the scene. The tracks have dissimilar room tone, so a simple back-and-forth edit plan won't work. Here, all off-mic signal was replaced by the matching room tone and placed on the track beneath. This four-track plan is a bit cumbersome, but it gives the rerecording mixer the most flexibility in balancing smoothness versus noise.

Figure 11.37 shows a classic scheme for cutting between strongly mismatched shots. Room tone fills are edited for each angle and kept separate from the shots. This gives great flexibility, but may prove to be too much work. When one shot's room tone is much louder than the other's, try first filling the heavier track (usually the boom) with room tone and fading in and out on the quieter track.

- Eliminate all off-mic dialogue on the boom track (i.e., when the dialogue of the other person is heard on the boom channel, replace it with good boom room tone).

- If the radio microphone room tone isn't too loud, you can either create short tone bridges leading up to the dialogue or cut from silence to radio mic with no tone bridge, assuming that the dialogue attack is loud enough to mask the change in room tone level. Figure 11.38 shows how to construct such a shot.

- Use volume automation to slightly reduce the boom channel's level while there's dialogue on the radio microphone channel. Match the timing and curve of the automation with the fades on the radio track. How much can you lower the level of the channel that carries the dominant room tone? Probably not more than 3 dB, but it depends on the two tracks (see Figure 11.39).
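The third step above can be sketched as a list of automation breakpoints. This is only an illustration under stated assumptions: the function names, the 0.25-second fade, the linear ramps, and the region times are all hypothetical, and regions are assumed to be far enough apart that their ramps don't overlap. Your workstation will have its own way of drawing or importing automation.

```python
def duck_breakpoints(radio_regions, fade_s=0.25, dip_db=-3.0):
    """Build (time_s, gain_db) breakpoints that dip the boom track.

    radio_regions: (start_s, end_s) spans where the radio mic is at full level.
    The ramp down spans the radio mic's fade-in, the ramp up its fade-out.
    Assumes regions are separated by more than two fade lengths.
    """
    points = []
    for start, end in sorted(radio_regions):
        points += [
            (start - fade_s, 0.0),   # boom at unity before the fade begins
            (start, dip_db),         # fully dipped when the radio mic is up
            (end, dip_db),           # hold the dip through the line
            (end + fade_s, 0.0),     # back to unity after the fade-out
        ]
    return points

def gain_at(points, t):
    """Linearly interpolate the automation gain (dB) at time t."""
    if t <= points[0][0]:
        return points[0][1]
    for (t0, g0), (t1, g1) in zip(points, points[1:]):
        if t0 <= t <= t1:
            if t1 == t0:
                return g1
            return g0 + (g1 - g0) * (t - t0) / (t1 - t0)
    return points[-1][1]

# Hypothetical radio mic line running from 10.0 s to 14.5 s.
boom_automation = duck_breakpoints([(10.0, 14.5)])
print(boom_automation)
print(gain_at(boom_automation, 12.0))
```

The default dip of 3 dB reflects the rule of thumb in the text; in practice you would set it by ear for each pair of tracks.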

Figure 11.38 The top track is a boom and the bottom track is a radio microphone with much less room tone. In this case, Paul's mic can probably fade in and out without continuous tone, since the noise of the boom microphone masks any swells in his room tone.

Figure 11.39 Even though Paul's radio microphone track carries little tone compared to Beatrice's boom, its room tone will contribute to the overall noise level. Slightly reducing the level of the boom track while the radio mic is playing will help smooth the scene and make it quieter.

Figure 11.40 Occasional quiet radio microphone inserts can be balanced and controlled by providing a parallel track of the boom's room tone. Later, you'll use a similar technique for ADR and production FX.

Aggressive, detailed volume automation like this can be a bone of contention between you and the mixer. Some mixers will be thrilled that you did their grunt work by presenting a scene that basically works. Now they can start making beauty. Others will complain (perhaps rightly) that you created a mess. You never know. Ask.

You may encounter a scene largely covered by a boom microphone that also includes a few inserts from radio mics (see Figure 11.40). Often, the radio microphones will carry less room tone than the boom, so the inserts will stand out. An easy way to match these shots without increasing overall noise is to run a track of the boom's room tone parallel to the radio mic inserts. The mixer can then easily control the amount of room tone with respect to the shot. There are many other applications for this parallel tone technique, such as production effects (PFX) editing and ADR editing.

One Microphone per Channel: Dissimilar (and Loud) Room Tone

In the last example, one channel overpowered the other so you only had to create one track of continuous room tone. The other, quieter track could effectively “hide” behind the noise of the dominant track. Now you're facing two noisy tracks, each carrying its own room tone. This poses an unsettling choice between smoothness and noise. Certainly, the smoothest option would be to run both tracks all the time so there's no energy or tone change between the two. But this causes two problems.

First, as in any of the examples shown, the off-mic track, with its slightly out-of-sync, muddy sound, will compromise the clarity of the speaking character. Adding this inarticulate muck to an otherwise decent bit of dialogue will weaken attacks and add an unwanted “halo” around the words. Even with a noisy room tone problem, the normal rules of cutting still apply. The other problem is that running two tones all the time makes your edit awfully noisy. No getting around that.

Once you've tried other, less draconian solutions, you're left with the option of creating two fully toned tracks and then minimizing the double room tone noise as best you can with volume automation.

- On each track, remove the off-mic dialogue and replace it with appropriate room tone.

- Once you've finished the editing, use volume automation to reduce redundant room tone as much as possible (see Figure 11.41). When, for example, the character on Dial B is speaking, lower the level of the added room tone on Dial A until you reach a point where you can hear the room tone changes. At that point, back off a bit with the level reduction.

Figure 11.41 Complete tone fill for two noisy, nonmatching tracks (top). Filling off-mic sections with clean room tone can help with articulation, but it results in a noisy scene. Volume automation (bottom) reduces noise buildup.

Two Acceptable Tracks: Which to Use?

Dialogue editors must understand the importance of the boom. It's the lens through which the space is realized—it contains the life of the scene. You may not be able to use the boom, but listen to it.

Tully Chen, location mixer

Footnote; Watermarks

Rarely in life are you saddled with too many good choices, but this dilemma does occasionally appear in dialogue editing; you encounter a scene recorded with a radio microphone and a boom and, amazingly, both sound pretty good. Which one to use depends on your access to the rerecording mixer. If you can play the scene to the mixer (or, barring that, to the supervising sound editor), you can get an opinion as to which way to go. Some mixers will ask you to fully prepare both tracks so that the choice can be made in the mix. Others will leave the choice to you.

On zero-budget films, I prefer to make the decision myself. If I'm familiar with the way the mixer works, I'll move the unused track—unedited—to a junk track. If I don't know the mixer, I'll prepare the unused track (up to a point) and then move it to junk. I remove the unused source from the active dialogue tracks since I don't want it brought into play unless we encounter a problem mixing my selection. Of course, I could edit both tracks side by side and mute the unused side of the pair. But this ties up a lot of track real estate and takes too much of my time, so I avoid it. What you end up doing will inevitably result from a combination of the mixer's wishes and your gut feeling.

Bottom Line: Common Sense and Luck

We've looked at several archetypal shot-to-shot edits, each with its own specific set of problems. And we saw lots of rules. Yet most editorial messes fall into gray areas, so rarely will obediently following the rules bring the truth. Instead, you have to analyze each problem, try the most appropriate solution, and then wing it. These are the main points to remember:

- Transition editing is always a trade-off between smoothness and total noise level. Keep enough mics open and you can make anything smooth. But at what cost? Crosscut as much as you can without causing unacceptable changes in room tone.

- Talk to the mixer, if possible, to see if your edited scene can be mixed or if you need to rethink your plan. And remember: many scenes don't need to be perfectly void of room tone changes. A skilled sound effects editor, upon hearing your edited guide track, will add a quiet car-by here, a discreet child's cry there, and no one will notice the occasional foible in the dialogue track.

- Except when both characters are speaking simultaneously or when it's otherwise impracticable, get rid of all off-mic dialogue. It only muddies up the track.

- When you must run simultaneous room tones because of mismatched levels or color, try to automate the tracks in a way that reduces the total noise of the room tone.

There'll be times when you simply must use more than one channel simultaneously. If there's continuous action on several tracks, there's no way to cut back and forth. In such cases, remove all the unwanted noises you can from each track, present both to the mixer, and make a note about ADR. There's nothing more you can do.

Making Sense of a Scene

Dialogue editing is the last line of defense against a scene that doesn't make sense. Granted, there's not much you can do if the narrative itself is falling apart, but you can prevent the soundtrack from adding to or even creating the folly. For example, when a scene is supposed to be exciting or busy, a picture editor may stack up production sounds, feeling that “more is more.” This works when the sounds in the sonic soup—each and every one—make sense and have specific roles to play. But when sounds are dolloped indiscriminately, whether by a picture editor or a sound editor, the result isn't excitement. It's a mess.

Here are some examples of poorly thought out, faux-exciting scenes:

Three ten-year-old girls are playing in a bedroom. They begin to dance and spin. As they become more vivacious, they start to giggle and laugh, and the laughter builds until they're interrupted by their humorless father. Since the girls are partly hidden by a doorframe in the master long shot, the picture editor layers several takes of the girls' squealing. No major lip-sync problems, he reasoned, so why not make the scene “beefier” this way? On an initial screening of the film, this trick might go unnoticed. You'll play along with the intensifying frolicking and likely not realize that rather than just three voices—one for each girl—you're hearing six or nine or more. The catch: This doesn't make sense. Even if most viewers can't initially identify the problem with the scene, they'll feel the scene is clogged, undisciplined, and without dynamics.