HDR Imaging in Automotive Applications

U. Seger Robert Bosch GmbH, Leonberg, Germany

Abstract

“High dynamic range image sensors” are essential for automotive driver assistance systems, and the automotive industry inspired and sponsored their development to a large extent. The Programme for a European Traffic System with Highest Efficiency and Unprecedented Safety (PROMETHEUS), launched in 1986, led to requirements for automotive video systems in which the human observer was the reference model. The performance of the human eye (or other biological archetypes) was considered necessary for the detection of traffic signs and road markings as well as everything else that must be detected to navigate through road traffic. Key performance parameters such as a nearly constant contrast resolution over more than six orders of magnitude made high dynamic range sensors an enabler for safety-relevant applications. At the dawn of autonomous driving, high dynamic range sensors have become an indispensable building block for autonomous video systems.

Keywords

Automotive application; Human observer; Constant contrast resolution; Safety-relevant application; Autonomous driving

Acknowledgments

Without the inspiring working conditions and colleagues and later the excellent working groups I have had the chance to work with throughout the past 25 years of my professional life, both in research (with Institute of Microelectronics Stuttgart) and in product development (with Robert Bosch GmbH), and without the patience of our promoters in the research phase and our customers in the product phase, all the experiences with HDR sensor design and camera development would not have been possible. Driven by the goal to develop systems which help to avoid accidents or at least to mitigate the impact on the individuals involved, we can be proud of the current status, although there is still some need for improvement to reach the goal of “injury-free driving” with cars equipped with DAS or with autonomous driving cars. It has always been a motivation for me to come closer to the perfection of the best camera I ever experienced, the human eye, with its connection to an unrivaled, highly effective control unit, the visual cortex. Finally, I thank my family, who patiently accompanied me and sometimes suffered from my enthusiasm for a job that at times required long business hours and some weekends to get things done with the necessary degree of perfection.

18.1 History and Motivation for High Dynamic Range Sensors and Cameras

Investigations of the root causes of traffic accidents by authorities such as the German Bundesanstalt für Straßenwesen (BAST), which is responsible for research into improved safety and efficiency of street traffic, and their systematic analysis have motivated massive efforts to develop technical driver assistance systems (DAS) to avoid or mitigate the yearly death toll paid for the increased mobility offered by affordable motor vehicles. Enke (1979) pointed out that 50% of collision accidents could be avoided by a reaction that started 1 s earlier. At that time, however, Enke doubted that technical systems would be able to provide sufficient detection and recognition capabilities.

The European EUREKA Programme for a European Traffic System with Highest Efficiency and Unprecedented Safety (PROMETHEUS), which started in 1986, was a European answer to that challenge and an approach to fighting the increased risk of dying in street traffic.

European car companies such as Audi, BMW, Daimler, Jaguar, PSA Peugeot Citroën, and Volkswagen as well as their suppliers and subsuppliers teamed up with research institutes active in the area of remote sensing, fast signal computing, and actuation in PROMETHEUS. The target of the research activities was the development of systems and infrastructures to prevent or at least mitigate accidents. Common to all the approaches was the need to sense the environment so as to recognize potentially risky traffic situations. Numerous remote sensing technologies, from radar and ultrasound sensors to ultraviolet, visible, near-infrared (NIR), and far-infrared radiation sensors and lidar sensors, have been proposed for all kinds of accident mitigation or prevention systems.

Pro-Chip, a subprogram of PROMETHEUS, focused on silicon sensors and neuronal processing chips in VLSI technology. Pro-Chip covered different remote sensing and signal processing areas, and one of these was the high dynamic range (HDR) sensor development. Focusing on optical sensing, it defined the goal to create an eyelike camera as an input for a DAS.

After the goal had been defined and initial calculations and tests had been performed, it became obvious that there was a significant gap between the seeing capabilities of a human eye and the technical performance of the state-of-the-art image sensors for electronic cameras of that time, when mostly CCD cameras were used for machine vision. Their signal-to-noise ratio in low-light conditions below 1 lx and their dynamic range were far from usable for the intended applications under the uncontrolled lighting of standard road traffic situations.

To assist the driver in recognizing traffic situations, especially critical ones, the camera system should have performance capabilities similar to those of the driver’s eyes. Further requirements arose from the intention to recognize the objects that are also most important for drivers: traffic signs and road markings. The human observer as a reference model had been implied in the Vienna Convention on Road Signs and Signals, which had become effective by 1978. The visibility of signs for the driver is explicitly referenced on many occasions.

A most challenging goal was defined: the development of a video system with visual abilities similar to those of a human.

A lot of necessary spadework had already been done at this time by scientists in a different domain. In “Exploration of the primary visual cortex,” Hubel (1982) had developed a good understanding of the visual cortex and the function of the retinal structure. Shortly after PROMETHEUS started, Dowling (1987) published his comprehensive study on the retina, and Mead and Mahowald (1988) had started to transfer the biological model into the electronic VLSI domain. Chamberlain and Lee (1984) had already begun to expand the dynamic range of the already mature CCDs, and Mendis et al. (1994) were to begin the development of what were later called “active pixel structures” in CMOS technology around the same time.

Shortly after PROMETHEUS had begun, the Defense Advanced Research Projects Agency and the National Science Foundation sponsored a comparable research program, the MIT Vision Chip Project, with the target “to design and build prototype analog early vision systems that are remarkably low power, small, and fast” as Wyatt et al. (1991) described.

All the ingredients for a silicon seeing machine were known or under development, but it took some years before a combination of advanced CMOS technology, a suitable design approach, and a software implementation led to a successful demonstration of an HDR CMOS camera at the Institute for Microelectronics Stuttgart (Seger et al., 1993) within the PROMETHEUS framework.

In the automotive world the term “high dynamic range CMOS imager” has been established for an imager with an intrascene dynamic range similar to that of the human eye (eg, > 120 dB).
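The dB figure is usually computed with the convention applied to image sensor dynamic range, DR = 20·log10(Imax/Imin), so that 120 dB corresponds to a ratio of 10⁶:1, or six orders of magnitude. A minimal Python sketch of the conversion, assuming that 20·log10 convention:

```python
import math


def ratio_to_db(ratio: float) -> float:
    """Dynamic range in dB, using the 20*log10 convention common for image sensors."""
    return 20.0 * math.log10(ratio)


def db_to_ratio(db: float) -> float:
    """Inverse of ratio_to_db: maximum/minimum intensity ratio for a given dB figure."""
    return 10.0 ** (db / 20.0)


def db_to_decades(db: float) -> float:
    """Number of orders of magnitude (decades) covered by a dB figure."""
    return db / 20.0


for db in (60.0, 120.0, 140.0):
    print(f"{db:5.0f} dB -> {db_to_ratio(db):.0e}:1 ({db_to_decades(db):.0f} decades)")
```

Under this convention every additional decade of intrascene intensity costs 20 dB.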

The picture of a fully illuminated 100-W light bulb (see Fig. 18.1), with clearly recognizable details of the filament, the printing on the glass bulb, and the bulb fitting, had become a widely recognized symbol for the large dynamic range of HDR imaging devices, although the scene is not as challenging as an outdoor street scene with both the sun and shadowed regions in the field of view.

At the same time all the different research activities mentioned above generated numerous seeing chips, vision chips, artificial retinas, etc. Some research spin-outs reached a technical level which allowed industrialization by different companies. Although some approaches stayed close to the biological archetype, with a close-to-ideal logarithmic conversion characteristic, providing a constant contrast resolution over six to eight decades of received intensities (Seger et al., 1999), others tried to approximate the logarithmic transfer function by means of a piecewise linear approximation (Yang et al., 1999).
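The difference in contrast resolution between the two families can be illustrated numerically. The sketch below is a simplified, noise-free model under assumed parameters (a hypothetical 10-bit output, a six-decade input range, and made-up knee points for the piecewise-linear curve). It computes the relative intensity step between adjacent output codes, which is the smallest contrast the quantized output can resolve at a given intensity.

```python
from bisect import bisect_left, bisect_right

N_CODES = 1024            # assumed 10-bit output
E_MIN, E_MAX = 1e-3, 1e3  # assumed input range: six decades, arbitrary units

# Hypothetical knee points (input intensity, output code) of a piecewise-linear curve
KNEES = [(0.0, 0), (1.0, 512), (30.0, 768), (E_MAX, N_CODES - 1)]


def log_levels(n=N_CODES, e_min=E_MIN, e_max=E_MAX):
    """Input intensity mapped to each output code by an ideal logarithmic response."""
    ratio = e_max / e_min
    return [e_min * ratio ** (k / (n - 1)) for k in range(n)]


def pwl_levels(knees=KNEES, n=N_CODES):
    """Input intensity mapped to each output code by a piecewise-linear response."""
    codes = [c for _, c in knees]
    levels = []
    for k in range(n):
        i = min(bisect_right(codes, k), len(knees) - 1)  # index of the segment's upper knee
        (e0, c0), (e1, c1) = knees[i - 1], knees[i]
        levels.append(e0 + (e1 - e0) * (k - c0) / (c1 - c0))
    return levels


def contrast_step(levels, e):
    """Relative intensity step between adjacent codes at intensity e (smallest resolvable contrast)."""
    k = min(bisect_left(levels, e), len(levels) - 2)
    return (levels[k + 1] - levels[k]) / levels[k]


log_lv, pwl_lv = log_levels(), pwl_levels()
for e in (1e-3, 1e-1, 0.9, 1.1, 31.0, 900.0):
    print(f"E = {e:g}: log {100 * contrast_step(log_lv, e):6.2f} %   "
          f"piecewise-linear {100 * contrast_step(pwl_lv, e):6.2f} %")
```

With these assumed parameters the logarithmic curve resolves a step of roughly 1.4% everywhere, whereas the piecewise-linear curve is very fine just below a knee, coarse just above it, and very coarse at the dark end.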

The viability proof for all the different concepts, however, was the harsh environment of the automotive application. Temperature stability from −40 to 120°C, intense solar radiation focused on the chip for several hundred hours, electrostatic discharge, etc., brought many weaknesses of the research approaches to the surface and these were resolved in many small steps.

Fourteen years after PROMETHEUS started, the first real car application development began in 2000, and a vision of a complete electronic safety cocoon for vehicles, woven by various sensors (see Fig. 18.2), was presented to the public (Seger et al., 2000).

Although this vision was considered too progressive at that time, things turned out differently. When Daimler presented its 2005 Mercedes-Benz S-Class model (model 221), one of the innovative highlights was a night vision camera using an HDR CMOS-based sensor with enhanced NIR sensitivity in combination with an active NIR headlamp. This was both the first implementation of a CMOS night vision camera in a series-production automobile and the first appearance of an HDR sensor in an automotive application. Thanks to their HDR capability, HDR CMOS sensors outperformed CCD sensors at that time. Around the same year, for the first time in history, more CMOS sensors than CCD sensors were produced worldwide. Eight years later it was possible to buy a Mercedes-Benz S-Class automobile (model 222) equipped with:

• two near-range radar sensors, one long-range radar sensor, one night vision NIR camera, one night vision far-infrared camera, and one stereo multipurpose camera at the front;

• two near-range radar sensors and one multimode radar sensor at the back;

• 12 ultrasonic sensors distributed around the car (4 at the front, 4 at the back, and 4 at the sides);

• four surround-view cameras (distributed in the grill, the side mirrors, and the trunk).

One of the key technologies enabling this vision was HDR sensors with “eyelike” performance. Within 15 years of automotive camera development, it has been possible, in cooperation with sensor and lens suppliers, to resolve most of the optical problems such as optical cross talk, unwanted reflections from all optical surfaces, ghosts, and artificial false light structures caused by inappropriate materials or structures in the optical path. HDR imaging became a success story, and today we see a high percentage of HDR sensors in all developed automotive video sensing solutions. Some viewing applications still rely on linear sensors but will convert to HDR sensors as the benefits of HDR sensing are recognized. The reason for this success is explained in Section 18.2.

18.2 Requirements for Automotive Camera Sensors

The requirements in automotive video applications are as diverse as the applications themselves. From night vision to pedestrian detection, road sign recognition, lane detection, oncoming headlamp recognition, cross traffic alert, time-to-collision calculation, surround and rear vision, driver monitoring, etc., the applications are widespread. However, there is one common factor and one common goal behind all those applications: they have to deal with natural and technical illuminations and with an unpredictable variance in object appearance and have to work reliably and safely over a large temperature and voltage span.

18.2.1 The Illumination Condition

“Natural illumination condition” means that, unlike industrial image capture and processing systems and unlike any photographer, an automotive video system cannot avoid particularly bad situations but has to deal with uncontrolled illumination:

• Fast-changing illumination situations in tree-lined avenues or galleries. When one drives past the windows of arcades (see Fig. 18.3), the light situation changes rapidly from bright sunshine to darkness, which is difficult even for human eyes to adapt to. The same effects can be observed when one leaves or enters tunnels.

• Relevant objects with only little contrast to the background. The bicyclist hidden in the fog (see Fig. 18.4) might decide to cross the road and should be detected even in situations worse than this.

• As long as the information on the low contrast between the bicyclist and the background is preserved, postprocessing of the image will help to detect details (see Fig. 18.5). The human eye especially preserves contrasts and allows the detection of even small differences in reflectance.

• Challenging situations, for example, the sun shining from various directions. The surfaces of objects change their appearance with a change in the light conditions. The reflectance of a material describes how much light is absorbed and how much is reflected in which direction. The appearance changes with the position of the camera relative to the object and to the object’s illuminant. This can be demonstrated by simulating the appearance under different illumination conditions (see Figs. 18.6 and 18.7).

• Nearly no illumination on relevant objects on an overcast dark night. Automotive systems are expected to operate during the day and at night. Although the illumination differs greatly between the area inside the headlamp beam and the area outside it, an automotive video system is expected to see contrast in both dark and bright regions (Fig. 18.8).

• Challenging light situations also appear at night, when oncoming traffic brings headlamp beams directly into the viewing angle, or in daytime, when the sun appears in the field of view. Every photographer respects the advice never to take a photograph with the sun in the field of view unless using specific filters or trying to take special-effect photographs. The strong variance in illumination typically overstrains linear sensors, resulting either in saturation or in missing information due to insufficient gray-value resolution (Fig. 18.9).

A further challenge for automotive sensors is posed by motion effects in the image, which are of special importance because automotive systems are themselves moving, which shifts the image contents with every acceleration or deceleration. All the facilitating means that would normally be used in image capturing (flashlights to allow short integration times so as to reduce motion smear, long integration times with static objects, large or cooled sensors for high-sensitivity imaging in low-light situations) are not at hand for an automotive camera designer. Active illumination is not always possible, flashing is not allowed, prolonging the integration time is not a particularly good idea if the camera is mounted in a moving car, and supersensitive imagers with large pixels perform well but are far too expensive for a mass market product. The same holds for cooled sensors. Any kind of image postprocessing such as denoising, filtering, or tone mapping has to be done with limited resources in video real time, which means within 33–40 ms, corresponding to 25–30 frames/s.
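Why long integration times are problematic in a moving car can be estimated with a simple pinhole camera model (an assumption, not a model given in the text): a point moving laterally at speed v at distance Z crosses the image at f·v/Z, so during an integration time t it smears over f·v·t/(Z·p) pixels for a pixel pitch p. The focal length, pixel pitch, and speeds below are assumed example values.

```python
def smear_pixels(v_lateral_mps, distance_m, t_int_s, focal_m=6e-3, pitch_m=3e-6):
    """Motion smear in pixels for a point moving laterally (pinhole camera model).

    focal_m and pitch_m are assumed example values (6 mm lens, 3 um pixels).
    """
    image_speed_px_per_s = focal_m * v_lateral_mps / (distance_m * pitch_m)
    return image_speed_px_per_s * t_int_s


# Assumed scenario: a cyclist crossing at 5 m/s, 20 m ahead
for t_int in (0.001, 0.010, 0.033):
    print(f"t_int = {t_int * 1e3:4.0f} ms -> smear {smear_pixels(5.0, 20.0, t_int):5.1f} px")
```

In this assumed scenario, stretching the integration time to a full 33 ms frame smears the cyclist over more than 15 pixels, whereas 1 ms keeps the smear below one pixel.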

In the context of a viewing application, video real time is whatever a human observer sees as a continuous video image (33–40 ms, corresponding to 25–30 frames/s). In the context of safety-relevant applications, real time is equal to or faster than the system reaction time, which for actuation of warning, restraint, braking, or steering systems is typically less than 150 ms.
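The frame budget implied by these numbers can be checked quickly: at 25 or 30 frames/s the frame period is 40 or 33.3 ms, so a reaction-time budget of 150 ms contains only three or four complete frames. A minimal sketch, assuming frames are aligned with the start of the budget and ignoring processing latency:

```python
import math


def frame_period_ms(fps):
    """Duration of one video frame in milliseconds."""
    return 1000.0 / fps


def frames_within(budget_s, fps):
    """Number of complete frames that fit into a reaction-time budget."""
    return math.floor(budget_s * fps)


for fps in (25, 30):
    print(f"{fps} frames/s: {frame_period_ms(fps):.1f} ms per frame, "
          f"{frames_within(0.150, fps)} frames within 150 ms")
```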

The possibility to improve the illumination situation by a car-borne illumination system is very limited, unless variable active projection systems known as “matrix beams” or “multibeam headlamps” (see Section 18.4.4) are used. For cost reasons, however, the application of these illumination systems is still limited to top-range car models.

Further, the supporting effect of the illumination system is limited to a certain illuminated field of view and is limited in range, because for a luminous flux of more than 2000 lm, United Nations Economic Commission for Europe Regulation 48 restricts the maximum luminous intensity to 430,000 cd for all illumination components involved. Finally, outside the illuminated area there is darkness.
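To see why the headlamp contribution is limited in range, the inverse-square law E = I/d² can be applied to the 430,000 cd limit. This idealized sketch treats the limit as a single on-axis point source and ignores atmospheric losses and the actual beam pattern, so the values are upper bounds rather than measured illuminances.

```python
def illuminance_lx(intensity_cd, distance_m):
    """On-axis illuminance of an idealized point source (inverse-square law): E = I / d**2."""
    return intensity_cd / distance_m ** 2


MAX_INTENSITY_CD = 430_000  # upper limit quoted in the text (UNECE Regulation 48)

for d in (25, 50, 100, 200):
    print(f"{d:4d} m: {illuminance_lx(MAX_INTENSITY_CD, d):8.1f} lx")
```

At 100 m even this upper bound yields only 43 lx, roughly three orders of magnitude below direct sunlight, and the value falls by a factor of four with every doubling of distance.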

The light sources we encounter in road traffic are global sources such as sunlight, sky illumination ranging from clear blue sky to nightglow on a moonless night, the same but with an overcast sky, street lamps of various types, our vehicle’s own headlamps and those of other cars, different kinds of technical light sources such as building illumination, flashing signals, and recently also LED and laser illumination systems. The dynamic range caused by the different intensities of light sources easily exceeds nine orders of magnitude; however, for automotive cameras a range of “only” around six to seven orders of magnitude is relevant (see Fig. 18.10).

The radiant flux Φ (W) of the light source is given by

$$\Phi = \frac{dQ}{dt}$$

where Q (J) is the energy radiated from a source over optical wavelengths from the ultraviolet to the infrared (wavelengths from 0.01 to 1000 μm) within a unit time (s).

18.2.2 The Object Reflectance

The variance in surface properties of relevant objects is as important as the variance in illumination. Surfaces can be

• specularly reflecting (eg, glossy trim moldings, windscreens, or the cladding of modern buildings),

• diffusely reflecting (eg, matte-finish paints or roughened surfaces), or

• close-to-ideal absorbers such as black fur, which in the worst case is worn by a pedestrian on a dark winter night.

The illuminated surface of the objects we are interested in as well as their background may change their appearance depending on the angular position, distance, material structure, and spectral absorption.

Furthermore, material surface properties change with weather and usage, as wet or frosted surfaces behave differently from dry or clean surfaces.

Unfortunately, the relevant figure for the automotive image capturing system is the combined effect (essentially the product) of the irradiance applied to an object and the reflectance of the object of interest and its environment. The irradiance E is determined by the integral irradiation from all active light sources in the scene illuminating the object of interest and is

$$E = \frac{d\Phi}{dA}$$

where Φ is the radiant flux of a source and A is the part of the object surface that is hit by the flux. E is measured in watts per square centimeter. Material properties such as the spectral absorption coefficient and the surface orientation define the portion of radiant flux that will be sent in the direction of the observer, which in our case is the camera of the DAS.
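How irradiance and reflectance multiply into the intrascene dynamic range can be sketched for ideal diffuse (Lambertian) surfaces, whose reflected radiance is L = ρE/π. The Lambertian model and the example irradiances and reflectances below are illustrative assumptions, not values from the text.

```python
import math


def lambertian_radiance(irradiance, reflectance):
    """Radiance leaving an ideal diffuse (Lambertian) surface: L = rho * E / pi."""
    if not 0.0 <= reflectance <= 1.0:
        raise ValueError("reflectance must lie in [0, 1]")
    return reflectance * irradiance / math.pi


# Illustrative, assumed values (irradiance in arbitrary but consistent units)
bright = lambertian_radiance(irradiance=1e5, reflectance=0.9)   # sunlit light-colored surface
dark = lambertian_radiance(irradiance=10.0, reflectance=0.02)   # dark fur in deep shadow
print(f"intrascene radiance ratio: {bright / dark:,.0f}:1")
```

With these assumed values the two surfaces in one scene already differ by 450,000:1, because a 10⁴ spread in irradiance and a 45× spread in reflectance multiply.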

Figs. 18.6–18.8 show the same scenery with different illumination conditions. Figs. 18.6 and 18.7 show daylight conditions with the sun in different locations and Fig. 18.8 shows an overcast night situation with only the vehicle’s headlamps as the light source. Fig. 18.9 shows a hard shadow and objects with similar reflectance (eg, pavement) on the two sides of the shadow line.

18.2.3 The Medium Between the Object and the Image

The medium between the camera, the object, and the light source is in the best case transparent, sometimes behaves like a distortion filter (eg, a windscreen), and in the worst case acts as a low-pass filter (fog) or an image-disturbing noise source (light reflected from rain or snow). Fig. 18.4 shows the impact of fog on driver assistance systems. The luminance contrast of objects at the distance relevant for decision making (eg, braking or not braking) is significantly lowered and can reach values as low as 1%. For comparison, under optimal illumination conditions the human eye can distinguish objects whose brightness differs by 1% from that of their neighboring objects. The insert in Fig. 18.5 shows the potential of image postprocessing to recover information from an HDR image as long as the image content is still above the noise level.
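How quickly fog pushes object contrast toward the 1% level can be estimated with Koschmieder’s law, a standard atmospheric-optics approximation (not part of the original text) in which apparent contrast decays as C(d) = C0·exp(−σd). The extinction coefficient σ is derived here from meteorological visibility using the conventional 5% contrast threshold; the 100 m visibility and the intrinsic contrast of 0.3 are assumed example values.

```python
import math


def extinction_from_visibility(visibility_m, threshold=0.05):
    """Extinction coefficient sigma (1/m) from meteorological visibility (5 % contrast threshold)."""
    return math.log(1.0 / threshold) / visibility_m


def apparent_contrast(c0, distance_m, sigma):
    """Koschmieder attenuation: C(d) = C0 * exp(-sigma * d)."""
    return c0 * math.exp(-sigma * distance_m)


def distance_for_contrast(c0, c_min, sigma):
    """Distance at which the apparent contrast has dropped to c_min."""
    return math.log(c0 / c_min) / sigma


sigma = extinction_from_visibility(100.0)   # dense fog, assumed 100 m visibility
for d in (25.0, 50.0, 100.0):
    print(f"{d:5.0f} m: apparent contrast {100 * apparent_contrast(0.3, d, sigma):5.2f} %")
print(f"contrast of 0.3 reaches 1 % at {distance_for_contrast(0.3, 0.01, sigma):.0f} m")
```

Under these assumptions an object with moderate intrinsic contrast falls to about 1.5% at 100 m, which is why a sensor that preserves small contrasts without saturating is a precondition for any postprocessing.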

18.2.4 Functional Performance

Functions bundled in complex DAS, such as lane detection, pedestrian detection, headlamp detection, and traffic sign recognition, lead to irradiation requirements that span several orders of magnitude. At the same time, applications that require a steadily increasing field of view make it highly probable that objects of very different appearance must be captured by a single sensor within the same integration time.

A solution to reduce data complexity right at the location of detection in the focal plane, as occurs in the human retina, has been investigated from time to time and has resulted in many approaches, such as the massive lateral processing in the focal plane proposed by Tarr et al. (1996) or Schultz et al. (2014). These approaches aim to allow local, cell-based processing such as local signal inhibition, local amplification, or even digitization so as to relax the requirements for an “overall correct pixel setting.” However, these efforts have not led to an automotive-applicable solution. Image processing outside the focal-plane array therefore still requires image data that are as undistorted, unsaturated, and contrast preserving as possible. The envelope requirements are driven by various applications in different domains and result in a time resolution better than 1/30 s, a contrast resolution better than 1%, a ΔE resolution close to 2, a latency better than 40 ms, a spatial resolution better than 1200 × 900 pixels, and an intrascene dynamic range on the order of 1,000,000:1 between maximum and minimum irradiation. This combination is challenging enough to leave a nearly empty solution space, especially if contradictory requirements, such as high resolution of object motion and simultaneous suppression of the beat frequency of modulated light sources, are rated with similar priorities.
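Why a 1% contrast resolution over a 1,000,000:1 range practically rules out plain linear coding can be shown with an idealized, noise-free quantization count (a simplifying assumption; real sensors are additionally noise limited). A linear code must use a step no larger than 1% of the darkest signal across the whole range, whereas a logarithmic code needs a constant 1% relative step.

```python
import math


def bits_linear(dynamic_range, contrast):
    """Bits for a linear code whose step equals `contrast` times the darkest signal."""
    levels = dynamic_range / contrast
    return math.ceil(math.log2(levels))


def bits_log(dynamic_range, contrast):
    """Bits for a logarithmic code whose relative step is `contrast` everywhere."""
    levels = math.log(dynamic_range) / math.log1p(contrast)
    return math.ceil(math.log2(levels))


print("linear:", bits_linear(1e6, 0.01), "bits")
print("logarithmic:", bits_log(1e6, 0.01), "bits")
```

The linear code needs 10⁸ levels (27 bits), while about 1400 logarithmically spaced levels (11 bits) meet the same contrast target.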

18.2.5 Operating Range

A further challenge for automotive systems is the required operating temperature range.

The automotive system has to work within a temperature range spanning more than 120°C; to be exact, it has to withstand temperatures from −40 to 125°C and has to perform fully within an operating range of −40 to 85°C ambient temperature. Self-heating reduces the life expectancy of current imagers and may even cause damage if the junction temperature Tj exceeds 125°C for some 100 h; it also increases leakage currents, which double with every 7–9°C rise in temperature. With image sensor process technologies with feature sizes on the order of 50 nm and back-side illumination (BSI technology) available for recent image sensors, self-heating might easily produce an artificial signal that is indistinguishable from real light.
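The doubling rule can be turned into a scale factor for leakage (dark) current. The sketch below assumes a 25°C reference temperature and evaluates the 7–9°C doubling interval quoted in the text at the upper ends of the operating and survival ranges.

```python
def leakage_factor(t_c, t_ref_c=25.0, doubling_c=8.0):
    """Leakage current relative to its value at t_ref_c, assuming it doubles every doubling_c kelvin."""
    return 2.0 ** ((t_c - t_ref_c) / doubling_c)


for doubling in (7.0, 8.0, 9.0):
    at_85 = leakage_factor(85.0, doubling_c=doubling)
    at_125 = leakage_factor(125.0, doubling_c=doubling)
    print(f"doubling every {doubling:.0f} K: x{at_85:,.0f} at 85 °C, x{at_125:,.0f} at 125 °C")
```

Relative to the assumed 25°C reference, leakage grows by a factor of roughly 100 to 400 at 85°C, so every degree of self-heating saved directly reduces the artificial signal.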

18.2.6 Reliability

The sensor must not show drift effects during its lifetime (which might be 20 years or more in car applications), and because it cannot be protected against significant solar radiation, thermal cycling, humidity, and mechanical shock, it must be resilient to those conditions.

18.2.7 Power Considerations

The operating temperature and reliability requirements described earlier lead to an additional, indirect requirement for camera sensors. As self-heating reduces the operating range, the power needed for an HDR image becomes a relevant figure. Intrinsic HDR sensors, which generate an HDR image in a single frame, might outperform reconstructive approaches, which need storage for multiple integration steps and processing elements to reconstruct the image.

As the energy needed for each HDR image is a further key performance indicator, a target of 3 mJ per HDR image is clearly quite challenging to reach if a considerable amount of memory and processing logic is needed to reconstruct an HDR image from multiple exposures instead of deriving it by controlled charge skimming during integration.
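The energy target translates directly into an average power figure: power = energy per HDR frame × frame rate. A minimal sketch using the 3 mJ target and the 25–30 frames/s video rates from the text (all other overheads ignored):

```python
def average_power_mw(energy_per_frame_mj, fps):
    """Average power in mW for a given energy per HDR frame (mJ) and frame rate (1/s)."""
    return energy_per_frame_mj * fps


def energy_budget_mj(power_budget_mw, fps):
    """Energy available per HDR frame (mJ) for a given power budget (mW)."""
    return power_budget_mw / fps


for fps in (25, 30):
    print(f"3 mJ per HDR frame at {fps} frames/s -> {average_power_mw(3.0, fps):.0f} mW")
```

At 30 frames/s the 3 mJ target corresponds to 90 mW, and this budget has to cover the memory and reconstruction logic of a multi-exposure approach as well.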

18.2.8 Cost or the Democratization of a New Technology

In 2005 a night vision system providing a brilliant glare-free image displayed in the cockpit could be ordered for €1900 as optional equipment for a Mercedes-Benz S-Class vehicle. In 2018 back-over avoidance systems will become mandatory in the United States for every new passenger car, to reduce the more than 200 fatalities and more than 15,000 injuries that occur each year. It is assumed that equipment such as a back-over avoidance system will be affordable for customers of all car classes (not only for buyers of luxury cars), so that it is effective in reducing the number of traffic accident victims recorded in official reports, for example, those of the US National Highway Traffic Safety Administration (NHTSA). At the same time, economies of scale will help to produce cost-effective components for automotive video systems, which were originally intended as a niche market product.

18.2.9 Safety

The New Car Assessment Program (NCAP) initiated by the NHTSA and the corresponding regional NCAP labels judge the safety of new cars brought onto the market. The mission of the NHTSA with the NCAP is “to save lives, prevent injuries, and reduce traffic-related health care and other economic costs associated with a motor vehicle use and highway travel” (NHT, 2007). Regional NCAP organizations (for example, in Europe, Japan, Australia, New Zealand, and Latin America) adopted the target but made adaptations according to regional differences in accident statistics. The approach is to test new cars independently of the car manufacturers and to publish the test results so as to inform customers about the safety aspects of new cars and change their buying habits. The first standardized tests were released in May 1979 and the first results of crash tests were published in October 1979. Whereas in the beginning the tests focused on the safety of passengers and rated the probability of being injured in collisions, the newest ratings are increasingly oriented toward the avoidance of accidents and the protection of other individuals involved in potential accidents, such as pedestrians. Although improvements in the past were achieved by the implementation of safety belts, air bags, and impact protection structures, predictive and anticipatory systems have come into focus in recent years. Systems such as lane departure warning, front collision avoidance, and automated emergency braking need remote sensing techniques as provided by radar, lidar, and video systems.

In the United States, the NHTSA evaluates new technologies with respect to their potential impact on fatalities and decides on changes to the rating system depending on the expected effect of these technologies. Each star of the five-star rating system corresponds to a certain protective feature of a new car. However, the rating is adapted to technical progress, which means that what earns five stars today will not be sufficient in the future. Thus the NCAP keeps driving safety standards and equipment development, as NCAP stars are good marketing arguments. Among the different regional NCAP ratings, the European New Car Assessment Programme (Euro NCAP) tests are considered some of the most demanding. For currently requested features and their rating in the domains adult occupant protection, child occupant protection, pedestrian protection, and safety assist, see Euro NCAP Strategy Working Group (2015) and Euro NCAP Ratings Group (2015). The requirements are subject to ongoing adaptation. In the road map for 2020, especially in the sections on pedestrian protection and safety assist, new cars can receive high scores only if remote sensing systems are onboard. In most cases, signals from radar-, lidar-, and video-based systems are fused to allow highly reliable detection and the implementation of staged escalation systems, starting with acoustic or tactile warnings for the driver, continuing with preparatory actions such as priming the brake booster for a fast response, and finally taking over control if the driver does not react in time to prevent an accident. To judge the situation, video systems that work reliably even under harsh environmental conditions are a key factor. Although HDR sensors do a great job in managing the large variety of lighting conditions and fast-changing situations, further nonfunctional requirements exist.

The ISO 26262 standard (International Organization for Standardization Technical Committee ISO/TC 22/SC3, 2012) is another important driver for the development of automotive safety equipment, including video-based DAS. It was the reason to rethink the in situ testability of image sensors and marked the start of Automotive Safety Integrity Level (ASIL) support features on imagers. ISO 26262-10:2012 came into effect in July 2012. The standard is applicable to all safety-related electronic equipment for road vehicles of up to 3.5 t. In simple terms, ISO 26262 requires that all electronic equipment developed and brought onto the market must be shown to fulfill the safety targets and to remain functional within the system reaction time. Once the safety goals for a new car have been defined by the manufacturer, a safety plan has been established, and the safety requirements have been decomposed, it is up to the engineers to judge the impact of failures of electronic components on the safety goal (eg, “do not drive over a pedestrian entering the driving lane as long as it is physically possible to brake before reaching the pedestrian”). How, you might ask, can an image sensor contribute to that goal?

After a hazard and risk analysis has been performed and the ASIL classification of the system and its components has been derived, a failure budget is assigned to each component of the system, which should allow the safety goal to be reached. Depending largely on the architecture and implementation of the system, the permitted failure rates range from 1000 FIT (failures in time, ie, failures per 10⁹ h; <10⁻⁶/h) in an ASIL A case to 10 FIT (<10⁻⁸/h) in an ASIL D case. Note that this is for the complete system! Whereas a warning function might be classified as ASIL A, an automatic braking or steering system might be classified as ASIL C or D.
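Because the failure budget covers the complete system, each component receives only a fraction of it. The sketch below converts FIT values into failure rates per hour and splits a system budget proportionally to component weights; the proportional rule, the component list, and the weights are hypothetical illustrations, not a method prescribed by ISO 26262.

```python
FIT_HOURS = 1e9  # 1 FIT = one failure per 10**9 device-hours


def fit_to_rate_per_hour(fit):
    """Convert a FIT value into a failure rate per hour."""
    return fit / FIT_HOURS


def allocate_budget(system_fit, weights):
    """Split a system FIT budget proportionally to (hypothetical) component weights."""
    total = sum(weights.values())
    return {name: system_fit * w / total for name, w in weights.items()}


# Hypothetical ASIL D style budget of 10 FIT for the whole system
budget = allocate_budget(10.0, {"imager": 2, "processor": 5, "power_supply": 2, "interfaces": 1})
for name, fit in budget.items():
    print(f"{name:12s} {fit:4.1f} FIT = {fit_to_rate_per_hour(fit):.1e} /h")
```

Under these assumed weights the imager may contribute only 2 FIT, ie, 2 × 10⁻⁹ failures per hour.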

For each single component it has to be decided if redundancies are needed, special control measures are to be implemented, etc.

The image sensor, as a key component and a potential source of failures that might lead to wrong decisions (eg, not braking although a pedestrian is in the driving lane, because the pedestrian has not been recognized owing to saturation in the image), came into focus. The system designer and the component designer jointly develop an understanding of which failures are relevant to the system; this leads to a set of key failures per application as well as to strategies to correct failures or to detect a critical system status. In the above-mentioned situation, automatic braking for pedestrians, a single-pixel defect will not play a major role unless the defect rate becomes too high. Failures in the register setting for the integration time, however, might have a fatal impact. ASIL support features have been added to automotive imager designs to allow a functional test of analog and digital circuitry within the system reaction time. For automatic braking and steering, the system reaction time is typically less than 150 ms, which means that for practical reasons the functionality must be checked within each individual image frame!

Automotive imagers currently on the market allow one to read out test lines or columns that not only provide information on the correct function of basic circuits such as column gain amplifiers and analog-to-digital converters but also make it possible to check whether transferred register settings have been applied correctly.
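A per-frame host-side check of such test lines could look like the following sketch. The FrameStatus structure, the register address 0x3012, and the notion of a single pass/fail flag for the test columns are hypothetical; real imagers define their own embedded-data formats, which must be taken from the sensor documentation.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class FrameStatus:
    """Hypothetical per-frame status decoded from an imager's embedded test lines."""
    frame_id: int
    echoed_registers: Dict[int, int] = field(default_factory=dict)  # address -> value reported by sensor
    test_columns_ok: bool = True  # e.g. column amplifier / ADC test pattern within tolerance


def check_frame(status: FrameStatus, expected: Dict[int, int]) -> List[str]:
    """Compare echoed register values with the values the host wrote; empty list = frame passed."""
    problems = []
    for addr, value in expected.items():
        echoed = status.echoed_registers.get(addr)
        if echoed != value:
            problems.append(f"frame {status.frame_id}: register 0x{addr:04X} = {echoed}, expected {value}")
    if not status.test_columns_ok:
        problems.append(f"frame {status.frame_id}: analog/ADC test columns out of tolerance")
    return problems


INTEGRATION_TIME_REG = 0x3012  # hypothetical register address
expected = {INTEGRATION_TIME_REG: 800}
print(check_frame(FrameStatus(1, {INTEGRATION_TIME_REG: 800}), expected))
print(check_frame(FrameStatus(2, {INTEGRATION_TIME_REG: 80}, test_columns_ok=False), expected))
```

Running such a check on every frame keeps the detection latency at one frame period, well inside a 150 ms reaction time.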

Automotive HDR imagers, with their ability to cover the complete range of physically possible input intensities in the application, contribute significantly to safety, as a functional test over the complete potential intensity range is possible within a single frame time.

18.3 HDR Implementations

Currently, several implementations of HDR sensors are available on the market. We distinguish between intrinsic HDR concepts and reconstructed HDR concepts. Whereas intrinsic HDR concepts generate an HDR image in situ by controlled charge skimming or reset, reconstructed HDR concepts need a considerable amount of buffer memory to hold several subsequently captured images from which the HDR image is rebuilt.

The main difference between the two principles becomes apparent in scenes with a high illumination dynamic range combined with moving objects or modulated light sources. Sensors of the intrinsic HDR type generate no, or at least fewer, motion artifacts than sensors that reconstruct the image from exposures integrated in different time slots.

18.3.1 Intrinsic HDR Solutions

Among the intrinsic HDR solutions, a distinction can be made according to how closely the transfer function resembles an ideal logarithmic behavior. Imagers whose analog pixel output is a nearly ideal logarithmic response, or sensors that apply a nonlinear conversion to the generated cell output voltages to achieve a similar quasi-logarithmic behavior with sufficient resolution, exhibit significantly better contrast resolution than their piecewise linear counterparts with only a few knee points in their transfer characteristic.


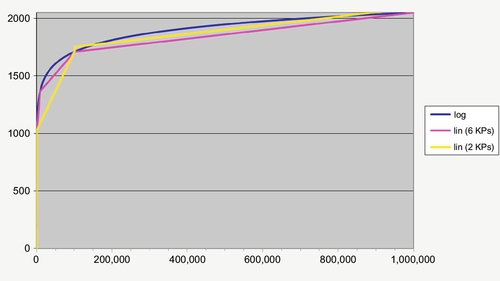

Fig. 18.11 shows the transfer characteristics of three different sensor implementations: direct logarithmic conversion, which represents an infinite number of knee points and results in a smooth transfer characteristic, and two quasi-logarithmic, piecewise linear approximations with six and two knee points, respectively, which result in segmented transfer characteristics.

For simplicity, the sensor signal is assumed to be noiseless, and the signal is assumed to be quantized in equidistant voltage steps by a linear analog-to-digital converter. Whereas the curves differ only slightly in linear intensity space, the differences become quite obvious in logarithmic intensity space, as shown in Fig. 18.12. A high number of knee points keeps the deviation from the logarithmic transfer characteristic small (an ideal logarithmic characteristic yields a constant increase of gray values when plotted vs a log illuminance scale), whereas few knee points produce a festoon-like curve with alternating regions of high and low gray-value resolution on a log scale.
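The shape comparison of Figs. 18.11 and 18.12 can be reproduced qualitatively with the Python/NumPy sketch below. It assumes a noiseless input range of six decades and places the knee points, hypothetically, on the ideal logarithmic curve at log-equidistant intensities; the knee positions of the sensors shown in the figures are not given in the text.

```python
import numpy as np

DECADES = 6                              # assumed input range: six decades
PHI_MIN, PHI_MAX = 1.0, 10.0 ** DECADES  # irradiance in arbitrary units


def log_response(phi):
    """Ideal logarithmic transfer characteristic, normalized to 0..1."""
    phi = np.clip(phi, PHI_MIN, PHI_MAX)
    return np.log10(phi / PHI_MIN) / DECADES


def piecewise_linear_response(phi, n_knees):
    """Piecewise linear approximation with n_knees knee points.

    Hypothetical layout: knees lie on the ideal log curve at log-equidistant
    intensities; each segment is linear in irradiance.
    """
    j = np.arange(1, n_knees + 1)
    knee_phi = PHI_MIN * 10.0 ** (DECADES * j / (n_knees + 1))
    xs = np.concatenate(([0.0], knee_phi, [PHI_MAX]))
    ys = np.concatenate(([0.0], j / (n_knees + 1), [1.0]))
    return np.interp(phi, xs, ys)


# Deviation from the ideal curve, sampled on a log illuminance scale.
phi = np.logspace(0, DECADES, 601)
for knees in (6, 2):
    err = np.abs(piecewise_linear_response(phi, knees) - log_response(phi))
    print(f"{knees} knees: max deviation {err.max():.3f} of full scale")
```

Between two knees each segment is a straight line in irradiance, so on a log axis it sags below the ideal curve; fewer knees mean longer segments and deeper festoons.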

Depending on the conversion function Vout = F(Φ), equidistant voltage steps represent different contrast steps. In the logarithmic case, if the 2048 quantization steps of an assumed 11-bit analog-to-digital converter span six decades of intensity, each step represents an identical contrast step, a 1.0068-fold intensity (10^(6/2048) ≈ 1.0068), which allows one to distinguish objects whose reflectance differs by 0.68%. This calculation is far too optimistic, as noise will consume a considerable part of the separation capability.
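The 1.0068 figure can be checked with a few lines of Python, assuming that the 2048 codes are spread evenly over six decades on a log scale and ignoring noise.

```python
def log_contrast_step(decades: float, bits: int) -> float:
    """Intensity ratio between adjacent codes of an ideal logarithmic sensor
    whose 2**bits codes span `decades` orders of magnitude (noise ignored)."""
    return 10.0 ** (decades / 2 ** bits)


step = log_contrast_step(6, 11)
print(f"{step:.4f}-fold intensity per LSB, ie, {100 * (step - 1):.2f}% contrast")
# prints: 1.0068-fold intensity per LSB, ie, 0.68% contrast
```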

Fig. 18.13 shows the difference in contrast necessary to reach the next least significant bit level for characteristics deviating from the ideal logarithmic transfer characteristic, that is, the minimum resolvable contrast across the complete dynamic range. Sensors with a logarithmic transfer characteristic show a constant contrast resolution, whereas piecewise approximated transfer characteristics show peaks in the minimum resolvable contrast at the knee points of the transfer function. Standard natural scenes (unless it is foggy) often span an intensity range of 1:30,000 to 1:80,000, and in extreme situations up to 1:500,000. A large deviation from logarithmic behavior therefore results in large variations in contrast resolution and leads to washed-out contrast in some intensity ranges. This can be mitigated by modulating the location of the knee points or by significantly increasing the number of bits used to represent intensity values; however, contrast wipeouts remain difficult to avoid. Since contrast, in either luminance or color, is what enables objects to be separated from the background and recognized, a constant contrast resolution makes it easy to maintain object tracking even through zones of widely varying illumination. How ingenious that the human retina is designed this way!

The contrast resolution achievable for different discrete numbers of knee points and for a given bit depth is given in Table 18.1.

Table 18.1

Theoretically Achievable Contrast Resolution

| | Ideal Logarithmic | Piecewise Linear (6 Knee Points) | Piecewise Linear (2 Knee Points) |
|---|---|---|---|
| Maximum least significant bit-equivalent contrast step | 0.68% | 2.6% | 14% |
| Minimum least significant bit-equivalent contrast step | 0.68% | 0.26% | 0.14% |
| Contrast resolution gap (1%) | – | 15% of digits | 30% of digits |

Table 18.1 shows that, under the assumption that the output signal is converted with 11-bit accuracy, each of the 2048 digitization levels of a piecewise linear-converting sensor represents a different contrast step. Constant contrast resolution can be achieved only with a purely logarithmic transfer characteristic, for which every digitization level corresponds to the same contrast factor needed to reach the next digit in the output signal.

The maximum least significant bit-equivalent contrast step indicates the contrast increase that is necessary, in the worst case, for two object areas to appear different to the sensor. Here the contrast step is defined as the ratio of the irradiances received from the objects.

The contrast resolution gap describes the probability of not being able to distinguish between surfaces whose brightness differs by 1%, because the converted gray value lies in an unfavorable region of the transfer curve; it is expressed as the share of digitization levels for which this occurs.
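The three metrics of Table 18.1 can be computed for any monotonic transfer curve by inverting it at every code boundary. The Python/NumPy sketch below does this for an ideal logarithmic curve and for two piecewise linear curves. It assumes six decades, 11 bits, no noise, and a hypothetical knee layout (knees on the log curve at log-equidistant intensities), so only the logarithmic column is expected to match the table; the piecewise linear values depend strongly on where the knees are placed.

```python
import numpy as np

DECADES, BITS = 6, 11                    # six decades digitized with an 11-bit ADC
PHI_MIN, PHI_MAX = 1.0, 10.0 ** DECADES  # input range in arbitrary units


def log_inverse(v):
    """Irradiance at normalized output v for the ideal logarithmic sensor."""
    return PHI_MIN * 10.0 ** (DECADES * v)


def piecewise_inverse(n_knees):
    """Inverse of a hypothetical piecewise linear curve whose knees lie on the
    ideal log curve at log-equidistant intensities."""
    j = np.arange(1, n_knees + 1)
    xs = np.concatenate(([0.0], PHI_MIN * 10.0 ** (DECADES * j / (n_knees + 1)), [PHI_MAX]))
    ys = np.concatenate(([0.0], j / (n_knees + 1), [1.0]))
    return lambda v: np.interp(v, ys, xs)  # ys is increasing, so this inverts the curve


def lsb_contrast_steps(inverse):
    """Relative irradiance increase needed to move up by one LSB, per code."""
    levels = np.arange(2 ** BITS + 1) / 2 ** BITS  # code boundaries on a 0..1 scale
    phi = inverse(levels)
    lo, hi = phi[:-1], phi[1:]
    valid = lo >= PHI_MIN                           # only codes inside the input range
    return hi[valid] / lo[valid] - 1.0


def table_metrics(steps, threshold=0.01):
    """Maximum step, minimum step, and share of codes coarser than `threshold`."""
    return steps.max(), steps.min(), float(np.mean(steps > threshold))


for name, inv in [("log", log_inverse), ("6 knees", piecewise_inverse(6)),
                  ("2 knees", piecewise_inverse(2))]:
    mx, mn, gap = table_metrics(lsb_contrast_steps(inv))
    print(f"{name:8s} max {100 * mx:5.2f}%  min {100 * mn:5.2f}%  gap {100 * gap:4.1f}%")
```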

18.3.2 Reconstructed HDR Solutions

Whereas the intrinsic HDR concept (with a sufficient number of knee points or bits) allows close to constant contrast resolution in moving or light-modulated scenes, its minimum contrast resolution is restricted by the position of the knee points, because the resolution is degraded around each knee point. Superfine contrast of less than 0.5% can be resolved with this technique only with considerable effort. HDR images reconstructed from multiple exposures with single- or multiple-slope linear sensing devices can achieve a sophisticated contrast resolution by combining the image values of multiple overlapping exposures. However, this requires that the illumination remain stable during the multiple exposures, that the objects not move significantly, and that the light not be modulated between exposures, unless a high computational effort is made to compensate for object motion and light modulation artifacts. Similar techniques are of interest for still photography as well, but they are not a preferred image recording technique for automotive use.
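A minimal version of such a reconstruction, merging one long and one short exposure of a linear sensor, is sketched below in Python/NumPy. The 12-bit full scale, the exposure ratio, and the 90% saturation threshold are assumptions for illustration. The last lines show the weakness discussed above: an LED that happens to be off during the short exposure disappears from the merged image.

```python
import numpy as np

FULL_SCALE = 4095  # assumed 12-bit linear sensor output at saturation


def merge_exposures(long_img, short_img, ratio, sat_fraction=0.9):
    """Reconstruct linear HDR values from a long and a short exposure.

    ratio = t_long / t_short. Pixels that are not near saturation in the long
    exposure keep their long-exposure value (finer resolution); the others are
    replaced by the short-exposure value scaled by the exposure ratio.
    """
    long_img = np.asarray(long_img, dtype=float)
    short_img = np.asarray(short_img, dtype=float)
    saturated = long_img >= sat_fraction * FULL_SCALE
    return np.where(saturated, short_img * ratio, long_img)


ratio = 16
scene = np.array([10.0, 3000.0, 50000.0])  # static scene, long-exposure units
long_img = np.minimum(scene, FULL_SCALE)
short_img = np.minimum(scene / ratio, FULL_SCALE)
print(merge_exposures(long_img, short_img, ratio))  # recovers 10, 3000, 50000

# A pulsed LED that is on during the long exposure but off during the short one
# saturates the long image, so the merge takes the dark short value instead.
print(merge_exposures([4095.0], [0.0], ratio))  # the LED is lost
```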

18.4 HDR Video-Based Driver Assistance Applications

A good understanding of the value of HDR sensors and cameras in the automotive field can be gained by describing, application by application, the major drivers of the performance requirements.

18.4.1 Night Vision

Night vision was one of the first applications on the European market, for several reasons. One reason was that the function was transparent to end users: they could see a new type of sensor entering the automotive field in operation and share their impressions with others, which is totally different from other systems (eg, the air bag trigger system, which nobody is keen to see in action). Another major reason was the possibility for vehicle manufacturers to differentiate their vehicles from those of their competitors and demonstrate a technological lead. At the beginning of the millennium, a camera in a car was still an eye-catcher.

The market penetration of this first vision product was not overwhelming on a global scale, but it served as a showcase for video-based applications. It allowed a lot of experience to be gained with a very demanding application, and it generated confidence in the technology, as the night vision system was able to show high-quality images even in situations challenging for the driver's eyes. The quality of the displayed image was the key performance indicator: only an image that was always free of saturation, with well-preserved contrast and temperature-stable low noise, could convince end users that a night vision system was valuable support for driving at night.

Achieving a well-contrasted, low-noise image under such precarious illumination conditions meant, at least from the perspective of a camera developer, dealing with differences in irradiance of up to six orders of magnitude while still distinguishing luminance contrasts of around 2%.
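The following Python sketch puts numbers on this requirement. Ignoring noise, it compares how many quantization codes an ideal logarithmic encoding and a plain linear encoding need in order to resolve 2% contrast over six decades. In the linear case the 2% must still be resolved at the darkest level, so that level fixes the step size for the whole range.

```python
import math


def codes_needed_log(decades: float, contrast: float) -> int:
    """Codes needed if every code step is the same intensity ratio (1 + contrast)."""
    return math.ceil(decades * math.log(10) / math.log1p(contrast))


def codes_needed_linear(decades: float, contrast: float) -> int:
    """Codes needed if equal intensity steps must still resolve `contrast`
    at the darkest level of the range."""
    return math.ceil(10 ** decades / contrast)


for name, n in [("logarithmic", codes_needed_log(6, 0.02)),
                ("linear", codes_needed_linear(6, 0.02))]:
    print(f"{name:12s} {n:>10d} codes -> {math.ceil(math.log2(n))} bits")
# logarithmic needs 698 codes (10 bits), linear needs 50,000,000 codes (26 bits)
```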

18.4.2 From Lane Detection to Lane Keeping Support

The transition from display functions, such as monochrome night vision or the color images of a rear-view camera, to computer vision systems was accompanied by the introduction of imagers with sparse color filter arrays. In lane detection systems, color separation was needed for essentially one purpose: to distinguish regular white lane markings from the yellow markings used in construction zones and to recognize the blue lines used in some countries.

A full-color Bayer color filter array was not necessary; only a coarse classification of lane markings into color categories was required. At the same time, high resolution was needed to maintain lane recognition at greater distances and thus provide a safe look-ahead range.

For lane recognition systems the HDR concept is especially helpful at night, when retroreflective fillers in the lane marking material cause very large gradients in reflectance that depend on the position of the lane marking relative to the headlamp. The irradiance received by the sensor therefore varies widely; however, the contrast between the lane marking and the surrounding pavement is close to constant, so the HDR sensor output is very similar over a large range of distances.
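Why a (quasi-)logarithmic response helps here can be shown with idealized, noiseless pixel models in Python: scaling the irradiance on marking and pavement by the same factor leaves the difference of their logarithmic outputs unchanged, whereas the difference of linear outputs grows with the irradiance until both clip. The 5:1 marking-to-pavement ratio and the linear full-scale value are assumptions.

```python
import math


def log_output(irradiance: float) -> float:
    """Idealized logarithmic pixel response (unit gain, no offset, no noise)."""
    return math.log10(irradiance)


def linear_output(irradiance: float, full_scale: float = 1000.0) -> float:
    """Idealized linear pixel response that clips at an assumed full scale."""
    return min(irradiance, full_scale)


# Marking assumed 5x brighter than the pavement; the headlamp irradiance on both
# changes with distance, modeled here as a common scale factor.
for scale in (1.0, 10.0, 100.0, 1000.0):
    pavement, marking = 2.0 * scale, 10.0 * scale
    log_diff = log_output(marking) - log_output(pavement)
    lin_diff = linear_output(marking) - linear_output(pavement)
    print(f"scale {scale:6.0f}: log difference {log_diff:.3f}, linear difference {lin_diff:6.1f}")
# The log difference stays at log10(5) = 0.699; the linear difference goes
# 8, 80, 800 and then drops to 0 when both values clip.
```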

Whereas simple systems issued a warning once the calculated time to lane crossing became too short for the driver to react before crossing the lane, lane-keeping support became the more elaborate function. It applies a carefully controlled torque, superimposed on the steering column, to keep the car's trajectory between the lane markings when they are present. It is still a comfort function, because the driver is always responsible and can easily override the system. This system is a precursor of autonomous driving, as knowledge of the car's location and direction of motion relative to its own lane is a prerequisite for navigating a car.
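The text does not specify how the time to lane crossing is calculated; the simplest estimate divides the lateral distance to the marking by the lateral velocity toward it. The Python sketch below uses that constant-velocity model, which ignores road curvature and yaw rate, together with a warning threshold that is a hypothetical value chosen for illustration.

```python
def time_to_lane_crossing(lateral_distance_m: float, lateral_speed_mps: float) -> float:
    """Constant-velocity estimate of the time until the wheel reaches the marking.

    lateral_speed_mps > 0 means drifting toward the marking; otherwise the
    car is not approaching it and infinity is returned.
    """
    if lateral_speed_mps <= 0.0:
        return float("inf")
    return lateral_distance_m / lateral_speed_mps


WARNING_THRESHOLD_S = 1.0  # hypothetical threshold for illustration only


def should_warn(lateral_distance_m: float, lateral_speed_mps: float,
                threshold_s: float = WARNING_THRESHOLD_S) -> bool:
    """Warn when the estimated time to lane crossing falls below the threshold."""
    return time_to_lane_crossing(lateral_distance_m, lateral_speed_mps) < threshold_s


print(should_warn(0.3, 0.5))  # 0.6 s to the marking: warn
print(should_warn(0.3, 0.1))  # 3 s to the marking: no warning yet
```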

18.4.3 Traffic Sign Recognition to Sign Reading

Very early on, the detection and recognition of traffic signs also became a popular and easily experienced function of video-based DAS. Made to be detected visually by the driver, traffic signs are predestined for automated visual detection systems. Because traffic signs are largely standardized by the Vienna Convention on Road Signs and Signals, are limited in the number of categories, and have expressive symbols, their recognition seemed to be an easy task for an optical detection system. But again it was the uncontrolled illumination and unpredictable surroundings that presented challenges to the developers. Traffic signs appearing next to the setting sun, or with contrast diluted by fog, produced situations in which a standard linear camera-based system needed several attempts to obtain the required information. This caused a significant additional processor load, which would be unnecessary if the sensor's dynamic range had enough margin to resolve details over several decades of intensity.

Future systems will need higher resolution to evolve from symbolic pattern matching machines into real character reading and syntax understanding systems. Resolution and viewing angle requirements, as well as the limited availability of high-resolution HDR sensors, still restrict applications in this field, but with upcoming technology changes we will see three- to five-megapixel HDR sensors suitable for tasks of this kind. Another development brought new challenges for the traffic sign recognition community: variable message signs equipped with LED illuminators, but with almost no standardization of their pulse frequency, forced camera makers to develop techniques to cope with millisecond light pulses, which are not easy to detect with a standard camera sensor.
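Why millisecond pulses are hard to catch can be estimated with a simple model: for ideal rectangular pulses and a random exposure start, the exposure overlaps an on-pulse with probability (t_on + t_exp)/T, capped at 1, where T is the pulse period. The Python sketch below evaluates this for a hypothetical LED driven at 100 Hz with 10% duty cycle; short exposures, such as a linear sensor uses in bright scenes, miss the pulse most of the time.

```python
def led_capture_probability(led_freq_hz: float, duty_cycle: float,
                            exposure_s: float) -> float:
    """Probability that an exposure starting at a random phase overlaps at least
    one on-pulse of a PWM-driven LED (ideal rectangular pulses, no readout gaps)."""
    period = 1.0 / led_freq_hz
    on_time = duty_cycle * period
    return min(1.0, (on_time + exposure_s) / period)


# Hypothetical sign: 100 Hz PWM at 10% duty cycle, ie, 1 ms pulses every 10 ms.
for exposure_ms in (0.05, 1.0, 9.5, 20.0):
    p = led_capture_probability(100.0, 0.10, exposure_ms / 1000.0)
    print(f"exposure {exposure_ms:5.2f} ms: LED captured with probability {p:.3f}")
# Only exposures at least as long as the 9 ms off-time are guaranteed to catch a pulse.
```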

18.4.4 Headlamp Control

Headlamp control is also a comfort system, but one that already has some impact on safety. Oncoming vehicles are detected several hundred meters before they pass, and their headlamps are distinguished from the lights of preceding vehicles and from other light sources in the vicinity.

Again, a dynamic range of more than six decades helps to distinguish the different headlamps, whereas merely detecting the presence of light sources is easily achieved even with a saturated linear sensor.

A higher level of sophistication, in both detection and processing, is required for matrix beams, grid light, intelligent headlamps, or multibeam headlamps, as they are sometimes called. These headlamps use separately controlled single beams to mask out oncoming or preceding vehicles while dynamically forming a variably shaped beam that illuminates the surroundings like a conventional high beam. Even beam shaping to follow a curved road has become possible. A precondition for this advantageous lighting system is a safe and absolutely reliable detection of other road users as oncoming or preceding traffic. Again it is the HDR sensor that is superior in detecting the 10,000 times brighter contour of an oncoming car's headlamp while at the same time capturing the light contour of the car's own shaped beam and the contours of nonilluminated objects beside the headlamp corridor.

Beam-shaping technology is being developed especially to increase safety at night, for both the driver and other road users, as unintended glare from headlamps is avoided and seeing conditions are improved. This also shows a new dimension in the functionality of DAS: whereas all the systems mentioned before increase the comfort or safety of the driver and passengers, headlamp control also takes care of the safety of others.

18.4.5 From Rear View to Autonomous Parking

Early rear-view cameras were NTSC cameras adapted for automotive use, and in the very beginning they even used CCD sensors. Rear-view cameras then became one of the fastest developing areas, evolving from a camera to look behind the car into surround-view systems that allow low-speed maneuvering with or without guidance from the driver. This kind of system opened a new chapter in autonomous driving.

Reconstructing a three-dimensional view from the images of several cameras, simulating a bird's-eye camera floating above the car that the driver can move virtually with a joystick and whose image is shown on a high-definition monitor in the cockpit, requires high uniformity in sensor performance and, because of the huge field of view, an extremely high ability to master widely varying illumination conditions. Somewhere in the 360-degree image there is always the sun, a bright headlamp, a strongly reflective building, or an especially dark corner, and the problems these pose can be overcome only with cameras with extreme dynamic range capabilities. Illumination with different color temperatures in different directions from the car (eg, a sunset on one side and artificial light on the other) creates challenging situations in which not a single color channel may saturate if color noise and disturbing artifacts are to be avoided. Again this is the domain of HDR sensors.
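One reason a single saturated channel is enough to spoil color is that hue is carried by the ratios between the channels. The Python sketch below clips a hypothetical bluish pixel whose blue channel exceeds full scale and shows how its normalized r, g, b proportions shift toward neutral; the pixel values and the 8-bit full scale are assumptions.

```python
def clip_rgb(rgb, full_scale=255.0):
    """Linear sensor response per color channel, clipped at full scale."""
    return tuple(min(c, full_scale) for c in rgb)


def chromaticity(rgb):
    """Normalized r, g, b proportions, which carry hue independent of brightness."""
    total = sum(rgb)
    return tuple(c / total for c in rgb)


# Hypothetical bluish surface under strong illumination: only the blue channel
# exceeds full scale, so clipping pulls the proportions toward neutral gray.
true_rgb = (120.0, 160.0, 320.0)
print([round(c, 3) for c in chromaticity(true_rgb)])            # true proportions
print([round(c, 3) for c in chromaticity(clip_rgb(true_rgb))])  # distorted proportions
```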

18.4.6 From Object Detection to Preemergency Braking

The first pedestrian-detecting camera was introduced in 2007 when night vision systems were upgraded to warn the driver of pedestrians close to the driving corridor or intruding on the driving corridor.

Again this development was the precursor of a significant step toward greater safety in road traffic. The system was used to gather experience in pedestrian detection without the high risk of an automated reaction. For warning systems a false positive is annoying but not critical; for automatic braking systems, which came onto the market in 2009, a much higher detection reliability is required, and it has been achieved.

18.4.7 Autonomous Driving

Since the first Google car navigated autonomously through the streets of North America, autonomous driving has become the new hype in car development and in public discussion.

The first automatic parking assist systems were introduced on the market by Toyota in 2003, and today parking assist systems are available for a large variety of cars, demonstrating that autonomous driving at low speed is both affordable and safe. Autonomous driving (or at least largely assisted driving) can help to avoid accidents caused by inattentive drivers and can allow drivers with disabilities to remain flexible in their choice to use a car. Google announced at the end of 2014 that it would start road tests of its autonomous driving prototypes by the beginning of 2015, and autonomous driving was one of the most discussed topics at the Consumer Electronics Show (CES) in Las Vegas in 2015. Thus DAS are moving from convenient companions to pilots, and driving becomes traveling.

As a logical consequence, automotive safety systems not only have to monitor the environment, measure distances to other road users, estimate trajectories, and plan the driving speed and path; they also have to monitor the driver and prepare for situations in which responsibility is to be handed back to the driver. Driver-monitoring cameras will be needed as well as surround-view cameras and other remote sensors.

The requirements on HDR imagers will increase, as will the pressure to find cost-effective implementations that democratize the technology, so that many cars can take care of their passengers.

18.4.8 What Is the Drawback of HDR Imaging?

Not every automotive camera is an HDR camera today (but the proportion is steadily approaching 100%). So what is the drawback? The drawback is that 60 years of linear thinking in imaging have produced a significant amount of literature dealing with the shortcomings of video systems (blooming, saturation, color aberration, etc.), which is confusing to newcomers to this area as well as to customers and users. This book might help to provide a profound overview and a better understanding of the advantages of nonlinear imaging and of its genius archetype, the human eye. It is a great honor for me to contribute a short chapter which might help to obtain an even better understanding of what HDR sensors can contribute to safe driving.