Embedded Software Quality, Integration, and Testing Techniques

Mark Pitchford, LDRA, Monks Ferry, United Kingdom

Abstract

Mission-critical applications with safety and security requirements, such as aircraft flight controllers, car braking systems, or medical devices, demand state-of-the-art quality, integration, and testing techniques. However, best practices in the development of embedded software should apply not only to high-integrity devices but also to lower-integrity applications, where limited test time and budget call for pragmatic decisions.

To complement this multitude of software test techniques there is a similar plethora of test tools available to automate them. These tools are commonplace in the development of safety- and security-critical applications, but elsewhere not everyone has the budget to buy all, or indeed any, of them. Of course, the providers of these tools would advocate the purchase of each and every one of them, so how can a limited budget best be allocated? And where no budget exists how can similar principles be applied to provide confidence that the finished item is of adequate quality?

That depends, at least partly, on the aims of the exercise. Is the test regime intended to prove compliance to a particular standard? Does the motivation for test come with the contractual insistence of a client organization? Or is the prime driver an internal one, perhaps with improved reliability and maintainability as the key goals?

It is also necessary to consider the starting point for this test regime. Ideally, all projects would be developed from a “clean sheet” beginning, using the latest best practices every step of the way. For many, that is a halcyon ideal; instead, they are tasked with the improvement of quality for legacy software, perhaps with its roots lying many years, developers, and outmoded “best practice” concepts in the past.

In addressing these issues, the chapter presents not only the concepts behind the techniques but also some “case study” software code examples that drill a little deeper and illustrate how some of them are implemented in practice.

Keywords

Bidirectional requirements traceability; Dynamic analysis; Functional safety standards; Integration test; Coding standards; MISRA; Object code verification (OCV); Static analysis; Structural coverage analysis; Unit test

1 What Is Software Test?

There is some inconsistency in how the word “test” is used in the context of software development. For some commentators “software test” implies the execution of software and the resulting confirmation that it performs as was intended by the development team—or not. Such a definition views the inspection or analysis of source code as a different field; that is, one to be contrasted with software test rather than a branch of it.

For the purposes of this chapter the Oxford English Dictionary’s definition of the word “test” is applied: “a procedure intended to establish the quality, performance, or reliability of something, especially before it is taken into widespread use.”

Any activity which fits that definition can therefore be regarded as a software test, whether it involves code execution or not.

The generic term “static analysis” is used to describe a branch of software test involving the analysis of software without the execution of the code. Conversely, “dynamic analysis” describes a branch of software test in which the code is indeed executed.

2 Why Should We Test Software?

Returning to the definition of “test,” software is tested to establish its “quality, performance, or reliability.” Testing itself only establishes these characteristics; it does not of itself guarantee that software meets any particular criteria for them.

The aim then is to quantify the standard of the software. Whether that standard is good enough depends very largely on the context in which it will be deployed.

3 How Much Testing Is Enough?

One approach to static analysis focuses on the checking for adherence to coding rules or the achievement of particular quality metrics. Such an approach is usually easy enough to scope. Either code meets the rules or it does not, and if it does not it is either justified or corrected.

Other static analysis tools are designed to predict the dynamic behavior of source code. Such heuristic mechanisms are most commonly used for “bug finding”—looking for evidence of where source code is likely to fail, rather than enforcing standards to be sure that it will not. These are arguably complementary to other static and dynamic techniques and are often easy to apply to get a marked improvement in code quality. However, they sometimes lack the thoroughness sought in the development of mission-, safety-, or security-critical software.

Dynamic testing is less easy to apply in even fairly trivial applications. The possible combinations and permutations of data values and execution paths can be large enough to make it wholly impractical to prove that all possible scenarios are correctly handled.

This means that, almost irrespective of how much time is spent performing software tests of whatever nature, an element of risk will remain with regard to the potential failure of those scenarios that remain unproven.

Consequently, the decision on what and how much to test becomes a question of cost vs. the impact of the risk outcomes identified. Those risk outcomes include not only the risk of software failure, but also factors such as the risk of delaying the launch of a commercial product and conceding the initiative in the market to a competitor.

Testing is not a cheap activity and there is the cost of both labor and associated test tools to take into account. On the opposite side of the equation lies the consequence of flawed software. What is the likely outcome of failure? Could it kill, maim, or cause temporary discomfort? Could it yield control of the application to bad actors or make personally identifiable information (PII) accessible? Or is a mildly irritating occasional need to restart the application the only feasible problem?

Clearly, the level of acceptable risk to health, safety, and security in each of these scenarios is significantly different, and the analysis is further complicated if there are also commercial risk factors to be added into that equation.

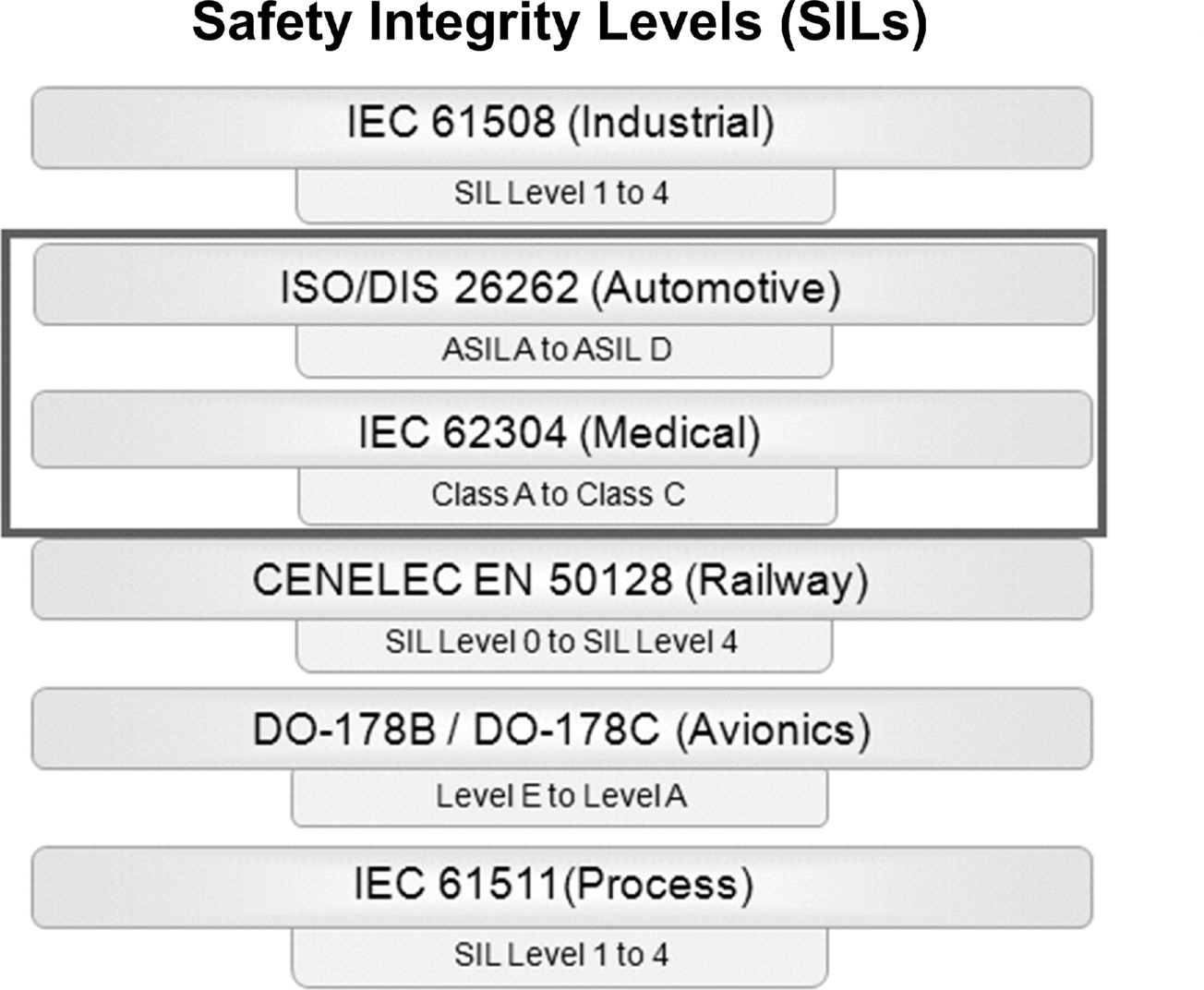

Some standards such as IEC 61508 (see Section 7) define a structured approach to this assessment. In this standard, safety integrity level (SIL) 1 is assigned to any parts of a system in continuous use for which a probability of dangerous failure per hour of 10⁻⁵ to 10⁻⁶ is permissible. SILs become more demanding the higher the number assigned, so that SIL 2 implies an acceptable probability of dangerous failure per hour of 10⁻⁶ to 10⁻⁷, SIL 3 of 10⁻⁷ to 10⁻⁸, and SIL 4 of 10⁻⁸ to 10⁻⁹.
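The banding quoted above can be sketched as a simple lookup. This is a minimal illustration only: the function name `sil_for_failure_rate`, the treatment of each band as a half-open interval, and the return value 0 for a rate outside every band are all assumptions made for the sketch, not part of the standard's text.

```c
/* Illustrative mapping from a permissible failure rate to the SIL band
   quoted in the text. Each band is treated as [lower, upper), which is
   an assumption of this sketch. */
int sil_for_failure_rate(double rate)
{
    if (rate >= 1e-6 && rate < 1e-5) { return 1; }
    if (rate >= 1e-7 && rate < 1e-6) { return 2; }
    if (rate >= 1e-8 && rate < 1e-7) { return 3; }
    if (rate >= 1e-9 && rate < 1e-8) { return 4; }
    return 0; /* outside the bands quoted above */
}
```

Each step up in SIL tightens the permissible rate by a factor of ten, which is why the cost of evidence tends to rise sharply with the level assigned.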

The standard recommends the application of many techniques to varying degrees for each of these SILs on the basis that the proficient application of the specified techniques will provide sufficient evidence to suggest that the maximum acceptable risk level will not be exceeded.

Ultimately, then, the decision is about how the software can be proven to be of adequate quality. In many cases this ethos allows different SIL levels to be applied to different elements of a project depending on the criticality of each such element.

That principle can, of course, be extended outside the realms of high-integrity applications. It always makes sense to apply more rigorous test to the most critical parts of an application.

4 When Should Testing Take Place?

To some extent that depends on the starting point. If there is a suite of legacy code to deal with, then clearly starting with a new test regime at the beginning of development is not an option! However, “the sooner, the better” is a reasonable rule of thumb.

In general terms the later a defect is found in product development, the more costly it is to fix, a concept first established in 1975 with the publication of Brooks’ “The Mythical Man-Month” and proven many times since through various studies.

The automation of any process changes the dynamic of justification, and that is especially true of test tools given that some are able to make earlier unit test much more feasible (Fig. 1).

5 Who Makes the Decisions?

It is clear that the myriad of interrelated decisions on what, when, why, how, and how much to test is highly dependent on the reasons for doing so.

The judgments are perhaps relatively straightforward if an outside agency is involved. For instance, the developers of a control system for use in an aircraft will have to adhere to DO-178C for their product to become commercially available for use in international airspace. It then becomes clear that if the product is to sell, then a level of test that is appropriate to the standard is unavoidable.

This extends further to the qualification or certification of any tools to be used. That can vary quite significantly from one standard to another, but there are usually guidelines or instructions laid down on what is required.

Conversely, the driver might be an internal one: to improve software quality, enhance corporate reputation, and reduce recall costs. In that case the matter is a decision for management, who will need to judge how much investment in the associated work and tools is appropriate.

6 Available Techniques

Enter the phrase “software test” into any search engine and the variation in scope of test techniques and test tools is daunting. Static analysis, coding standards, quality metrics, source code coverage, object code coverage, dynamic analysis, memory leak profiling, abstract interpretation … the list of buzzwords and techniques is seemingly endless.

The resulting confusion is compounded by the fact that the boundaries between different techniques and approaches are not as clear-cut as they might be. “Static analysis” is a prime example of a term that means different things to different observers.

6.1 Static and Dynamic Analysis

The generic term “static analysis” is used only to indicate that analysis of the software is performed without executing the code, whereas “dynamic analysis” indicates that the code is indeed executed. So, simple peer review of source code and functional test fit the definitions of static and dynamic analysis, respectively. The boundaries become blurred when it is understood that static analysis can be used to predict dynamic behavior. As a result, it is a precision tool in some contexts and yet in others it harbors approximations.

To cut through this vague terminology it is useful to consider five key elements of analysis. These are all deployed in one form or another by analysis tools, but many can be and frequently are implemented from first principles usually in combination to provide a “tool kit” of techniques.

The first three are approaches to static analysis. Note that these attributes do not comprehensively describe the categories of static analysis tools. Many tools include more than one of these attributes, and it is possible to approximate each of them without the use of tools at all.

6.1.1 Code Review

Code review traditionally takes the form of a peer review process that enforces coding rules. Those rules dictate coding style and naming conventions, and restrict the commands available to developers to a safe subset.

Peer review of software source code was established to achieve effective code review long before any tools automated it, and is still effective today. The key to effective peer reviews is to establish a mutually supportive environment so that the raising of nonconformities is not interpreted as negative criticism.

If manual peer review is to be adopted with such standards as the MISRA ones in mind, then a subset of the rules considered most important to the developing organization is likely to yield the best results.

Many software test tools automate this approach to provide a similar function with benefits in terms of the number and complexity of rules to be checked and in terms of speed and repeatability.

Code review does not predict dynamic behavior. However, code written in accordance with coding standards can be expected to include fewer flaws that might lead to dynamic failure, and assuring a consistent approach from individuals brings its own benefits in terms of readability and maintainability.

Code review can be applied whether the code under development is for a new project, an enhancement, or a new application using existing code. With legacy applications, automated code review is particularly strong for presenting the logic and layout of such code to establish an understanding of how it works with a view to further development. On the other hand, with a new development the analysis can begin as soon as any code is written—no need to wait for a compilable code set, let alone a complete system.

6.1.2 Theorem Proving

Theorem proving defines desired component behavior and individual runtime requirements.

The use of assertions within source code offers some of the benefits of the theorem-proving tools. Assertions placed before and after algorithms can be used to check that the data passing through them meet particular criteria or are within particular bounds.

These assertions can take the form of calls to an “assert” function as provided in languages such as C++, or the form of a user-defined mechanism to perhaps raise an error message or set a system to a safe state.
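A minimal sketch of both styles follows, written in C. The scaling routine, its limits (`RAW_MAX`, `SCALED_MAX`), and the `enter_safe_state` handler are hypothetical names invented for illustration. The first function uses the standard `assert` macro before and after the algorithm; the second replaces it with a user-defined mechanism that records the fault and returns a safe value.

```c
#include <assert.h>
#include <stdint.h>

#define RAW_MAX    4095u  /* hypothetical 12-bit sensor reading */
#define SCALED_MAX 1000u  /* hypothetical output range */

int safe_state_entered = 0;

/* User-defined alternative to assert(): record the fault so that the
   system can be driven to a safe state instead of aborting. */
void enter_safe_state(void)
{
    safe_state_entered = 1;
}

/* Variant 1: standard assertions placed before and after the algorithm. */
uint32_t scale_reading(uint32_t raw)
{
    assert(raw <= RAW_MAX);                     /* check on input  */
    uint32_t scaled = (raw * SCALED_MAX) / RAW_MAX;
    assert(scaled <= SCALED_MAX);               /* check on output */
    return scaled;
}

/* Variant 2: the same checks routed through the user-defined mechanism. */
uint32_t scale_reading_checked(uint32_t raw)
{
    if (raw > RAW_MAX) {
        enter_safe_state();
        return 0u;
    }
    uint32_t scaled = (raw * SCALED_MAX) / RAW_MAX;
    if (scaled > SCALED_MAX) {
        enter_safe_state();
        return 0u;
    }
    return scaled;
}
```

Note that `assert` is compiled out when `NDEBUG` is defined, so the second variant is the one that would still be active in a release build.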

Automated theorem proof tools often use specially formatted comments (or “annotations”) in the native language. A standard compiler ignores these comments, but they can be statically analyzed to confirm that the code accurately reflects the definitions they contain. Because of these annotations, verification can concentrate on verification conditions: that is, checking that when execution of a code fragment starts under given preconditions, the postcondition will be met.
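As an illustration of what such annotations can look like, the sketch below uses ACSL-style comments (the notation associated with the Frama-C tool). The choice of notation is an assumption, since the text names no particular tool, and the `clamp` function is hypothetical. The `requires` clause states the precondition and the `ensures` clauses state the postconditions a prover would try to discharge.

```c
#include <stdint.h>

/*@ requires lo <= hi;
  @ assigns \nothing;
  @ ensures lo <= \result <= hi;
  @ ensures (lo <= value <= hi) ==> \result == value;
  @*/
int32_t clamp(int32_t value, int32_t lo, int32_t hi)
{
    if (value < lo) {
        return lo;
    }
    if (value > hi) {
        return hi;
    }
    return value;
}
```

Because the contract lives in a comment, the file compiles unchanged with any standard C compiler. Only the analysis tool reads the annotation.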

The writing of annotations can be labor intensive and so these tools tend to be limited to highly safety-critical applications where functional integrity is absolutely paramount over any financial consideration (e.g., flight control systems).

Unlike the prediction of dynamic behavior through static analysis the use of “Design by Contract” principles often in the form of specially formatted comments in high-level code can accurately formalize and validate the expected runtime behavior of source code.

Such an approach requires a formal and structured development process, one that is textbook style and has uncompromising precision. Consequently, the retrospective application of such an approach to legacy code would involve completely rewriting it.

6.1.3 Prediction of Dynamic Behavior Through Static Analysis

The prediction of dynamic behavior through static analysis mathematically models the high-level code to predict the probable behavior of executable code that would be generated from it. All possible execution paths through that mathematical model are then simulated, mapping the flow of logic on those paths coupled with how and where data objects are created, used, and destroyed.

The net result consists of predictions of anomalous dynamic behavior that could possibly result in vulnerabilities, execution failure, or data corruption at runtime.

Although there is no practical way of exactly performing this technique manually, the use of defensive code and bounds checking within source code offers a different approach to yielding some of the benefits. For example, many of these heuristic tools use a technique called Abstract Interpretation to derive a computable semantic interpretation of the source code. In turn, this is used to analyze possible data ranges to predict any problematic scenarios at runtime. Defensive programming can make no such predictions but assertions, say, placed before and after algorithms can be used to defend against such scenarios by checking that the data passing through them meet particular criteria or are within particular bounds—and that includes checking for the circumstances that may cause runtime errors of the type generally sought out by tools of this nature.

As before, these assertions can take the form of calls to an “assert” function as provided in languages such as C++ or the form of a user-defined mechanism, and it is highly pragmatic to use assertions in the most difficult and complex algorithms where failures are most likely to occur.
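The kind of defensive code described here can be sketched as follows. The lookup table and both function names are hypothetical. Each routine rejects the inputs that would otherwise cause the runtime errors such tools typically look for: an out-of-bounds array access, a null pointer dereference, a division by zero, and the signed overflow of `INT32_MIN / -1`.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define TABLE_SIZE 8u

static const int32_t lookup_table[TABLE_SIZE] = {0, 10, 20, 30, 40, 50, 60, 70};

/* Bounds-checked read: returns false rather than indexing outside the table. */
bool table_read(size_t index, int32_t *out)
{
    if (out == NULL || index >= TABLE_SIZE) {
        return false;
    }
    *out = lookup_table[index];
    return true;
}

/* Guarded division: rejects a zero divisor and the one quotient that
   cannot be represented in int32_t. */
bool safe_divide(int32_t numerator, int32_t denominator, int32_t *out)
{
    if (out == NULL || denominator == 0) {
        return false;
    }
    if (numerator == INT32_MIN && denominator == -1) {
        return false;
    }
    *out = numerator / denominator;
    return true;
}
```

Unlike a static prediction, these checks say nothing about whether the bad input can ever occur. They only ensure that the failure is contained if it does.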

When tools are available the static prediction of dynamic behavior works well for existing code or less rigorously developed applications. It does not rely on a formal development approach and can simply be applied to the source code as it stands, even when there is no in-depth knowledge of it. That ability makes this methodology very appealing for a development team in a fix—perhaps when timescales are short, but catastrophic and unpredictable runtime errors keep coming up during system test.

There is, however, a downside. The code itself is not executing, but instead is being used as the basis for a mathematical model. As proven by the works of Church, Gödel, and Turing in the 1930s a precise representation of the code is mathematically insoluble for all but the most trivial examples. In other words the goal of finding every defect in a nontrivial program is unreachable unless approximations are included that by definition will lead to false-positive warnings.

The complexity of the mathematical model also increases dramatically as the size of the code sample under analysis gets bigger. This is often addressed by the application of simpler mathematical modeling for larger code samples, which keeps the processing time within reasonable bounds. However, the resulting increase in the number of “false positives” has a significant impact on the time required to interpret results, and can make this approach unusable for complex applications.

The last two of the “key attributes” concern dynamic analysis. Note that these attributes do not comprehensively describe the categories of dynamic analysis and that many tools include more than one of these attributes.

An overlap between static and dynamic analysis appears when there is a requirement to consider dynamic behavior. At that point the dynamic analysis of code that has been compiled, linked, and executed offers an alternative to the prediction of dynamic behavior through static analysis.

Dynamic analysis involves the compilation and execution of the source code either in its entirety or on a piecemeal basis. Again, while many different approaches can be included, these characteristics complete the list of the five key attributes that form the fundamental “toolbox of techniques.”

6.1.4 Structural Coverage Analysis

Structural coverage analysis details which parts of compiled and linked code have been executed, often by means of code instrumentation “probes.”

In its simplest form these probes can be implemented with manually inserted print statements as appropriate for the programming language of choice. Although such an approach demands in-depth knowledge of the code under test and carries the potential for human error, it does have a place in smaller projects or when only practiced on a critical subset of an application.
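A manual probe of this kind might look like the sketch below. The `PROBE` macro, the `probe_hits` array, and the `classify` function are hypothetical. Each branch carries one hand-placed probe that prints a message and counts the hit, and a helper reports how many probes were never reached.

```c
#include <stdio.h>

#define PROBE_COUNT 3

unsigned int probe_hits[PROBE_COUNT];

/* Manually inserted probe: print a trace line and count the hit. */
#define PROBE(id) \
    do { probe_hits[(id)]++; printf("probe %d hit\n", (id)); } while (0)

/* Code under test, with one probe per branch. */
int classify(int value)
{
    if (value < 0) {
        PROBE(0);
        return -1;
    } else if (value == 0) {
        PROBE(1);
        return 0;
    }
    PROBE(2);
    return 1;
}

/* Number of probes that no test has reached so far. */
int unexecuted_probes(void)
{
    int missing = 0;
    for (int i = 0; i < PROBE_COUNT; i++) {
        if (probe_hits[i] == 0u) {
            missing++;
        }
    }
    return missing;
}
```

Calling `classify` with only positive and negative values leaves one probe unexecuted, which points directly at the untested `value == 0` branch.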

A common approach is for automated test tools to automatically add probes to the high-level source code before compilation.

Adding instrumentation probes obviously changes the code under test, making it both bigger and slower. There are therefore limitations to what it can achieve and to the circumstances under which it can be used, especially when timing errors are a concern. However, within appropriate bounds it has been highly successful and in particular has made a major contribution to the sound safety record of software in commercial aircraft.

Some test tools can perform structural coverage analysis in isolation or in combination with unit, module, and/or integration testing.

6.1.5 Unit, Module, and Integration Testing

Unit, module, and integration testing (referred to collectively as unit testing hereafter) all describe an approach in which snippets of software code are compiled, linked, and built in order that test data (or “vectors”) can be specified and checked against expectations.

Traditionally, unit testing involves the development of a “harness” to provide an environment where the subset of code under test can be exposed to the desired parameters for the tester to ensure that it behaves as specified. More often than not in modern development environments the application of such techniques is achieved through the use of automated or semi-automated tools. However, a manual approach can still have a place in smaller projects or when only practiced on a critical subset of an application.
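A hand-written harness can be as small as the sketch below. The unit under test (`add_percent`) and the test vectors are hypothetical, chosen only to show the pattern: a table of inputs with expected outputs, and a loop that exposes the unit to each vector and counts mismatches.

```c
#include <stddef.h>
#include <stdio.h>

/* Unit under test (hypothetical): add two percentages, saturating at 100. */
int add_percent(int a, int b)
{
    int sum = a + b;
    return (sum > 100) ? 100 : sum;
}

/* One test vector: inputs plus the expected result. */
struct test_vector {
    int a;
    int b;
    int expected;
};

static const struct test_vector vectors[] = {
    {0, 0, 0},
    {40, 50, 90},
    {60, 50, 100},
    {100, 100, 100},
};

/* Runs every vector and returns the number of failures. */
int run_harness(void)
{
    int failures = 0;
    size_t count = sizeof vectors / sizeof vectors[0];

    for (size_t i = 0; i < count; i++) {
        int actual = add_percent(vectors[i].a, vectors[i].b);
        if (actual != vectors[i].expected) {
            printf("FAIL vector %zu: expected %d, got %d\n",
                   i, vectors[i].expected, actual);
            failures++;
        }
    }
    return failures;
}
```

Keeping the vectors in a table separates the test data from the harness logic, so new cases can be added without touching the loop.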

Some of the leading automated unit test tools can be extended to include the automatic definition of test vectors by the unit test tool itself.

Unit testing and structural coverage analysis focus on the behavior of an executing application and so are aspects of dynamic analysis. Unit, integration, and system test use code compiled and executed in a similar environment to that being used by the application under development.

Unit testing traditionally employs a bottom-up testing strategy in which units are tested and then integrated with other test units. In the course of such testing, individual test paths can be examined by means of structural coverage analysis. There is clearly no need to have a complete code set to hand to initiate tests such as these.

Unit testing is complemented by functional testing, a form of top-down testing. Functional testing executes functional test cases, perhaps in a simulator or in a target environment, at the system or subsystem level.

Clearly, these dynamic approaches test not only the source code, but also the compiler, linker, development environment, and potentially even target hardware. Static analysis techniques help to produce high-quality code that is less prone to error, but when it comes to proving correct functionality there is little alternative but to deploy dynamic analysis. Unit test or system test must deploy dynamic analysis to prove that the software actually does what it is meant to do.

Perhaps the most telling point with regard to the testing of dynamic behavior, whether by static or dynamic analysis, is precisely what is being tested. Intuitively, a mathematical model with inherent approximations leaves far more room for uncertainty than code that is compiled and executed in its native target environment.

If the requirement is for a quick fix solution for some legacy code that will find most problems without involving a deep understanding of the code, then the prediction of dynamic behavior via static analysis has merit. Similarly, this approach offers quick results for completed code that is subject to occasional dynamic failure in the field.

However, if there is a need not only to prove the functionality and robustness of the code, but also to provide a logical and coherent development environment and an integrated and progressive development process, then it makes more sense to use dynamic unit and system testing. This approach provides proof that the code is robust and that it does what it should do in the environment where it will ultimately operate.

6.2 Requirements Traceability

As a basis for all validation and verification tasks all high-quality software must start with a definition of requirements. This means that each high-level software requirement must map to a lower level requirement, design, and implementation. The objective is to ensure that the complete system has been implemented as defined and that there is no surplus code. Terminology may vary regarding what different requirement tiers are called, but this fundamental element of sound software engineering practice remains.

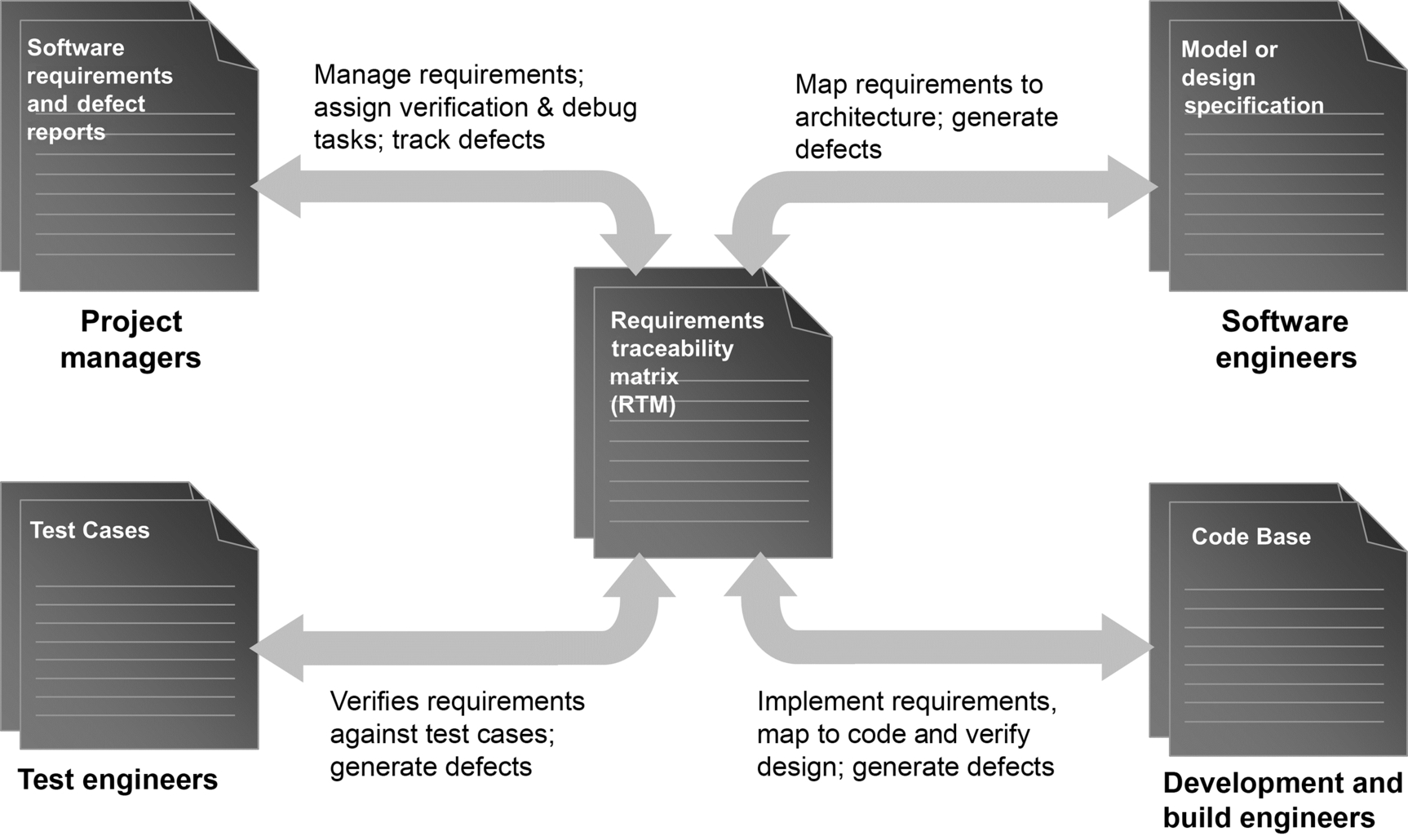

Simply ensuring that system-level requirements map to something tangible in the requirements decomposition tree, design and implementation is not enough. The complete set of requirements comes from multiple sources, including system-level requirements, high-level requirements, and low-level (or derived) requirements. As Fig. 2 illustrates, there is seldom a 1:1 mapping from system-level requirements to source code, so a traceability mechanism is required to map and record the dependency relationships of requirements throughout the requirements decomposition tree.

To complicate matters further each level of requirements might be captured using a different mechanism. For instance, a formal requirements capture tool might be used for system-level requirements while high-level requirements are captured in PDF and low-level requirements captured in a spreadsheet.

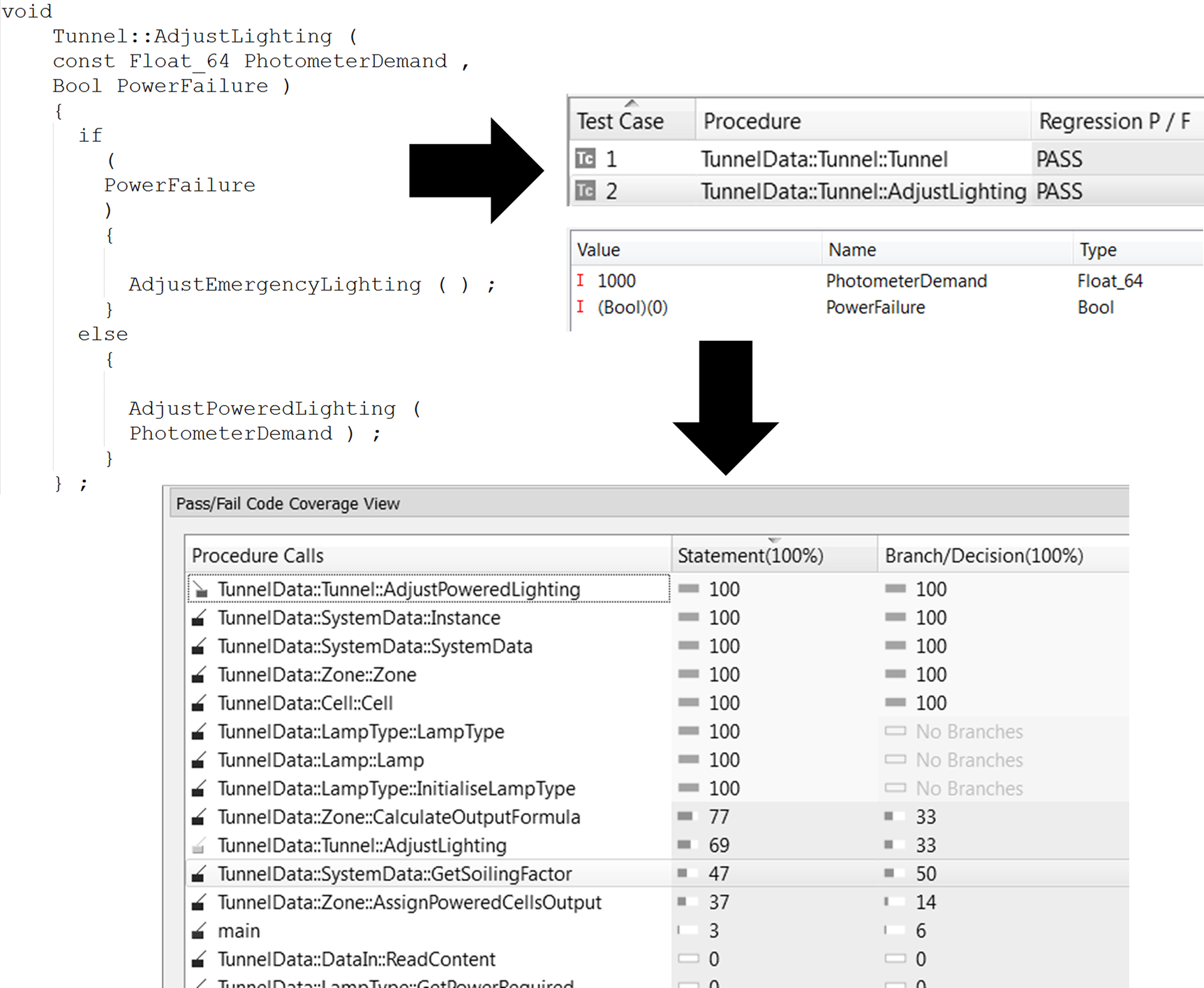

Modern requirements traceability solutions enable mapping throughout these levels right down to the verification tasks associated with the source code. The screenshot (Fig. 3) shows an example of this. Using this type of requirements traceability tool the 100% requirements coverage metric objective can clearly be measured, no matter how many layers of requirements, design, and implementation decomposition are used. This makes monitoring system completion progress an extremely straightforward activity.

It would be easy to overlook the requirements element of software development, but the fact is that even the best static and dynamic analysis in tandem do not prove that the software fulfills its requirements.

Widely accepted as a development best practice, bidirectional requirements traceability ensures that not only are all requirements implemented, but also that all development artifacts can be traced back to one or more requirements. Requirements traceability can also cover relationships with other entities such as intermediate and final work products, changes in design documentation, and test plans. Standards such as the automotive ISO 26262 or medical IEC 62304 demand bidirectional traceability, and place constant emphasis on the need for the complete and precise derivation of each development tier from the one above it.

When requirements are managed well, traceability can be established from the source requirement to its lower level requirements and from the lower level requirements back to their source. Such bidirectional traceability helps determine that all source requirements have been completely addressed and that all lower level requirements can be traced to a valid source.
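The two directions of the check can be sketched in a few lines of C. The requirement identifiers, function names, and link table below are all hypothetical, and a real project would hold them in a traceability tool rather than in source code. The forward check finds requirements with no implementing artifact, and the backward check finds artifacts with no requirement, which is one way surplus code shows up.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* One link in a (hypothetical) traceability record. */
struct trace_link {
    const char *requirement;
    const char *artifact;
};

#define COUNT_OF(a) (sizeof (a) / sizeof (a)[0])

static const char *const requirements[] = { "REQ-1", "REQ-2", "REQ-3" };
static const char *const artifacts[]    = { "brake_init", "brake_apply", "debug_dump" };

static const struct trace_link links[] = {
    { "REQ-1", "brake_init"  },
    { "REQ-2", "brake_init"  },
    { "REQ-2", "brake_apply" },
};

static bool requirement_is_linked(const char *requirement)
{
    for (size_t i = 0; i < COUNT_OF(links); i++) {
        if (strcmp(links[i].requirement, requirement) == 0) {
            return true;
        }
    }
    return false;
}

static bool artifact_is_linked(const char *artifact)
{
    for (size_t i = 0; i < COUNT_OF(links); i++) {
        if (strcmp(links[i].artifact, artifact) == 0) {
            return true;
        }
    }
    return false;
}

/* Forward direction: requirements that trace to no artifact. */
size_t count_unimplemented_requirements(void)
{
    size_t missing = 0;
    for (size_t i = 0; i < COUNT_OF(requirements); i++) {
        if (!requirement_is_linked(requirements[i])) {
            missing++;
        }
    }
    return missing;
}

/* Backward direction: artifacts that trace to no requirement. */
size_t count_untraced_artifacts(void)
{
    size_t surplus = 0;
    for (size_t i = 0; i < COUNT_OF(artifacts); i++) {
        if (!artifact_is_linked(artifacts[i])) {
            surplus++;
        }
    }
    return surplus;
}
```

With the sample data, `REQ-3` has no implementation and `debug_dump` has no requirement, so each check reports one gap.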

Such an approach lends itself to a model of continuous and progressive use: first, of automated code review, followed by unit test, and subsequently system test with its execution tracing capability to ensure that all code functions exactly as the requirements dictate, even on the target hardware itself—a requirement for more stringent levels of most such standards.

While this is and always has been a laudable principle, last-minute changes to requirements or code made to correct problems identified during test tend to leave such ideals in disarray.

Despite good intentions many projects fall into a pattern of disjointed software development in which requirements, design, implementation, and testing artifacts are produced from isolated development phases. Such isolation results in tenuous links between requirements, the development stages, and/or the development teams.

The traditional view of software development shows each phase flowing into the next, perhaps with feedback to earlier phases, and a surrounding framework of configuration management and process (e.g., Agile, RUP). Traceability is assumed to be part of the relationships between phases. However, the reality is that, while each individual phase may be conducted efficiently, the links between development tiers become increasingly poorly maintained over the duration of projects.

The answer to this conundrum lies in the requirements traceability matrix (RTM) that sits at the heart of any project even if it is not identified as such (see Fig. 4). Whether or not the links are physically recorded and managed they still exist. For example, a developer creates a link simply by reading a design specification and using that to drive the implementation.

Safety- and security-critical standards dictate that requirements should be traceable down to high-level code and in some cases object code, but elsewhere more pragmatism is usually required. A similar approach can be taken for any project with varying levels of detail depending on criticality both of the project as a whole and within an individual project. The important factor is to provide a level of traceability that is adequate for the circumstance.

This alternative view of the development landscape illustrates the importance that should be attached to the RTM. Due to this fundamental centrality it is vital that project managers place sufficient priority on investing in tooling for RTM construction. The RTM must also be represented explicitly in any life cycle model to emphasize its importance (as Fig. 5 illustrates). With this elevated focus the RTM is constructed and maintained efficiently and accurately.

When the RTM becomes the center of the development process it impacts on all stages of design from high-level requirements through to target-based deployment. Where an application is safety critical each tier is likely to be implemented in full, but once again a pragmatic interpretation of the principles can be applied to any project.

- Tier 1 high-level requirements might consist of a definitive statement of the system to be developed. This tier may be subdivided depending on the scale and complexity of the system.

- Tier 2 describes the design of the system level defined by Tier 1. Above all, this level must establish links or traceability with Tier 1 and begin the process of constructing the RTM. It involves the capture of low-level requirements that are specific to the design and implementation and have no impact on the functional criteria of the system.

- Tier 3’s implementation refers to the source/assembly code developed in accordance with Tier 2. Verification activities include code rule checking and quality analysis. Maintenance of the RTM presents many challenges at this level as tracing requirements to source code files may not be specific enough and developers may need to link to individual functions.

In many cases the system is likely to involve several functions. The traceability of those functions back to Tier 2 requirements includes many-to-few relationships. It is very easy to overlook one or more of these relationships in a manually managed matrix.

- In Tier 4 formal host-based verification begins. Once code has been proven to meet the relevant coding standards using automated code review, unit, then integration and system tests may be included in a test strategy that may be top-down, bottom-up, or a combination of both. Software simulation techniques help create automated test harnesses and test case generators as necessary, and execution histories provide evidence of the degree to which the code has been tested.

Such testing could be supplemented with robustness testing if required, perhaps by means of the automatic definition of unit test vectors or by static prediction of dynamic behavior.

Test cases from Tier 4 should be repeatable at Tier 5, if required.

This is the stage that confirms the software functions as intended within its development environment, even though there is no guarantee it will work when in its target environment. Testing in the host environment first allows the time-consuming target test to merely confirm that the tests remain sound in the target environment.

- Tier 5’s target-based verification represents the on-target testing element of formal verification. This frequently consists of a simple confirmation that the host-based verification performed previously can be duplicated in the target environment, although some tests may only be applicable in that environment itself.

Where reliability is paramount and budgets permit, the static analysis of dynamic behavior with its “full range” data sets would undoubtedly provide a complementary tool for such an approach. However, dynamic analysis would remain key to the process.

6.3 Static Analysis—Adherence to a Coding Standard

One of the most basic attributes of code that affects quality is readability. The more readable a piece of code is, the more testable it is. The more testable it is, the more likely it will have been tested to a reasonable level of completion. Unfortunately, as The International Obfuscated C Code Contest has demonstrated, there are many ways to create complex and unreadable code for the simplest of applications. This metric is about adopting even a basic coding standard to help enhance code quality by establishing the rules for a minimum level of readability for all the code created within a project.

Modern coding standards go way beyond just addressing readability, however. Encapsulating the wisdom and experience of their creators, coding standards, such as the Motor Industry Software Reliability Association (MISRA) C and C++ coding standards, the JSF Air Vehicle C++ standard, or the Barr Group (formerly Netrino) Embedded C Coding Standard, also identify specific code constructs that can affect overall code quality and reliability, such as areas of C or C++ that the ISO standards state are either undefined or implementation specific.

Coding standards, such as the CERT-C or C++ Secure Coding Standards and the Common Weakness Enumeration (CWE) list, also help to identify code constructs that can lead to potentially exploitable vulnerabilities in code.

The optimum coding standard for a project will depend on the project objectives. Fig. 6 provides a simple outline of the objectives for several coding standards.

In practice, most projects will create their own custom standard that uses one or more of these as a baseline, and modify the standard to suit their particular needs. Clearly, software that is safe AND secure is often desirable! Fortunately, the same attributes that make code safe very frequently also make it secure, and it is no coincidence that the MISRA organization, for example, have always focused their guidelines on critical systems, rather than safety-critical systems. The 2016 release of MISRA C:2012 AMD1, “Additional security guidelines for MISRA C:2012,” further reinforced that position.

One area, in particular, where these reliability and security-oriented coding standards excel is in identifying code constructs that lead to latent defects, which are defects that are not normally detected during the normal software verification process yet reveal themselves once the product is released. Consider the following simple example:

 1 #include <stdio.h>
 2 #include <stdint.h>
 3
 4 #define MAX_SIZE 16U
 5
 6 int32_t main(void)
 7 {
 8     uint16_t theArray[MAX_SIZE];
 9     uint16_t idx;
10     uint16_t *p_var;
11     uint16_t UR_var;
12
13     p_var = &UR_var;
14
15     for(idx = 0U; idx < MAX_SIZE; idx += *p_var)
16     {
17         theArray[idx] = 1U;
18     }
19
20     for(idx = 0U; idx <= MAX_SIZE; idx ++)
21     {
22         printf(" %d", theArray[idx]);
23     }
24
25     return(0);
26 }

It compiles without warnings using either GCC or Microsoft Visual Studio (the latter requires the user to provide a stdint.h implementation for versions earlier than 2010). On inspection an experienced programmer can find the errors in this fairly simple code, which contains both an array-out-of-bounds error (an off-by-one error on line 20 when variable idx == MAX_SIZE) and a reference to an uninitialized variable (UR_var is declared on line 11 without a value, aliased on line 13, and read by the loop counter increment on line 15). These violate MISRA C:2012 rules 18.1 (relating to out-of-bounds errors) and 9.1 (which prohibits an object from being read before it has been set), respectively.

At its most basic an array-out-of-bounds error is a buffer overflow, even though it is legal C and/or C++ code. For secure code, buffer overflow is one of the most common vulnerabilities leading to the worst possible type of exploit: the execution of arbitrary code.

Nondeterminism is also a problem with the uninitialized variables in the example. It is impossible to predict the behavior of an algorithm when the value of its variables cannot be guaranteed. What makes this issue even worse is that some compilers will actually assign default values to uninitialized variables! For example, the Microsoft Visual Studio compiler assigns the value 0xCCCC to the variable UR_var by default in debug mode. While this value is meaningless in the context of the algorithm above, it is deterministic so the code will always behave in the same way. Switch to release mode, however, and the value will be undefined resulting in nondeterministic behavior.

Although real code is never as straightforward as this, even in this example the latter issue is partly obscured, because the UR_var variable is read through a pointer alias rather than by name.

A further MISRA C:2012 violation (rule 14.2: “A for loop shall be well-formed”) relates to the complexity of the loop counter in this example. It is legitimate C code, but is difficult to understand and hence potentially error prone (Fig. 7).
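As an illustration only, the example might be reworked along the following lines so that the three issues discussed above no longer arise. This is a sketch rather than a checked MISRA-compliant listing: the helper names fill_array and sum_array are hypothetical, the printing loop has been replaced by a summation so that the result is easy to check, and the code has not been run through a static analysis tool.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define MAX_SIZE 16U

/* Hypothetical helper: set every element to 1 using a simple loop with a
   single counter, a constant step, and a bound checked with '<'. */
void fill_array(uint16_t theArray[], uint16_t size)
{
    uint16_t idx;

    assert(theArray != NULL);
    for (idx = 0U; idx < size; idx++)
    {
        theArray[idx] = 1U;
    }
}

/* Hypothetical helper: read back elements 0..size-1 only, so the
   off-by-one read of theArray[size] cannot occur. */
uint32_t sum_array(const uint16_t theArray[], uint16_t size)
{
    uint32_t total = 0U;   /* initialized before it is read */
    uint16_t idx;

    assert(theArray != NULL);
    for (idx = 0U; idx < size; idx++)
    {
        total += theArray[idx];
    }
    return total;
}
```

Calling fill_array and then sum_array on an array of MAX_SIZE elements should yield 16, and no object is read before it has been set.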

In addition to the obvious benefits of identifying and eliminating latent defects the most significant additional benefit of using static analysis tools for coding standards enforcement is that it helps peer review productivity. By ensuring that a piece of code submitted for peer review has contravened no mandatory rules the peer review team can get away from focusing on minutiae and on to what they do best: ensuring that the implementations under inspection are fit for purpose and the best that they can be.

Another area where enforcement of coding standards is beneficial is in identifying unnecessary code complexity.

Complexity is not in and of itself a bad thing; complex problems require complex solutions, but code should not be more complex than necessary. Unnecessary complexity leads to sections of code that are difficult to read, even more difficult to test, and as a result have higher defect rates than a more straightforward equivalent implementation. It follows that unnecessary complexity is to be avoided.

Several coding standards incorporate maximum code complexity limits as a measure for improving overall code quality. The following case study explains cyclomatic complexity and knots metrics and how they may be used to show how complex a function is. The case study goes on to explain how essential cyclomatic complexity and essential knots metrics can show whether or not a function has been written in a structured manner, in turn giving a measure of complexity.

6.3.1 Essential Knots and Essential Cyclomatic Complexity—Case Study

6.3.1.1 Basic Blocks and Control Flow Branches

It is initially useful to consider how the construction of high-level software code can be described.

A “basic block” is a sequence of one or more consecutive, executable statements in a source program such that the sequence has a start point, an end point, and no internal branches.

In other words, once the first executable statement in a basic block is executed, then all subsequent statements in that basic block can be assumed to be executed in sequence.

Control flow branches and natural succession provide the links between basic blocks.

6.3.1.2 Control, Static, and Dynamic Flow Graphs

The logical flow of source, object, or assembler code can be represented by a control flow graph as first conceived by American computer scientist Frances Elizabeth “Fran” Allen. A control flow graph consists of a number of representations of basic blocks (represented by circular “nodes”) interconnected with arrowed lines to represent the decision paths (called “links”).

During the static analysis of source code it is possible to detect and understand the structure of the logic associated with it.

Control flow graphs form the basis of static flow graphs to show code structure. Dynamic flow graphs superimpose that representation of the code with execution history information to show which parts have been executed. In both cases the exact color-coding and symbolism used to represent different aspects of these graphs varies between tools—a fact further complicated in this book by the absence of color!

In the following static and dynamic flow graphs diamond-shaped nodes are used where a basic block includes a function call; otherwise circles are used. Relevant use of shading is explained in the context of each illustration.

In languages such as C and C++ it is often necessary to reformat the code to show only one instruction per line. This circumvents the problem of nomenclature for identifying decision points and instructions that occur within a single line of source code (Fig. 8).

6.3.1.3 Calculating a Knots Value

A knot is a point where two control flows intersect.

Knot analysis measures the amount of disjointedness in the code and hence the amount of “jumping about” a code reader will be required to undertake. An excessive number of knots may mean that a program can be reordered to improve readability and reduce complexity. A knot is not in itself a “bad thing,” and knots appear in many perfectly acceptable constructs such as for, while, if/else, switch, and exception (Fig. 9).

Because they are a function of the chosen programming style and high-level language the number of knots in a function gives an indication of the complexity added to it as a result of program implementation. An excessive number of knots implies unnecessary complexity.
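The idea of counting knots can be sketched in a few lines of C. The sketch assumes that the code has been reformatted to one instruction per line, that each control flow jump is recorded as a pair of line numbers, and that two jumps form a knot when their line spans partially overlap. The Jump type and the function names are hypothetical, and commercial tools may apply more refined counting rules.

```c
#include <assert.h>
#include <stddef.h>

/* A control flow jump between two source line numbers (hypothetical type). */
typedef struct
{
    int from;
    int to;
} Jump;

static int min_int(int a, int b) { return (a < b) ? a : b; }
static int max_int(int a, int b) { return (a > b) ? a : b; }

/* Two jumps cross when one end of one jump lies strictly inside the line
   span of the other jump and its other end lies strictly outside it. */
static int jumps_cross(Jump x, Jump y)
{
    const int xlo = min_int(x.from, x.to);
    const int xhi = max_int(x.from, x.to);
    const int ylo = min_int(y.from, y.to);
    const int yhi = max_int(y.from, y.to);

    return ((xlo < ylo) && (ylo < xhi) && (xhi < yhi)) ||
           ((ylo < xlo) && (xlo < yhi) && (yhi < xhi));
}

/* Count the knots among n recorded jumps by testing every pair once. */
size_t count_knots(const Jump jumps[], size_t n)
{
    size_t knots = 0U;

    assert(jumps != NULL);
    for (size_t i = 0U; i < n; i++)
    {
        for (size_t j = i + 1U; j < n; j++)
        {
            if (jumps_cross(jumps[i], jumps[j]))
            {
                knots++;
            }
        }
    }
    return knots;
}
```

Under these assumptions an if/else written on lines 1 to 6 has one jump from the condition on line 1 to the else branch on line 4 and another from the end of the "then" branch on line 3 to line 6. The two spans partially overlap, so the construct contributes one knot, whereas nested or disjoint jumps contribute none.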

6.3.1.4 Calculating a Cyclomatic Complexity Value

Cyclomatic complexity is another measure of how complex a function is. It is a value derived from the geometry of the static flow graph for the function. The absolute value itself is therefore a little abstract and meaningless in isolation, but it provides a comparator to show the relative complexity of the problem addressed by one function vs. another.

Cyclomatic complexity is represented algebraically by the nomenclature V(G) and can be derived in a number of ways, the simplest perhaps being a count of the number of “regions” separated by the links and nodes of the control or static flow graph (Fig. 10).
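For a single connected flow graph, counting regions (including the region outside the graph) gives the same value as the widely used expression V(G) = E - N + 2, where E is the number of links and N the number of nodes. A minimal sketch of that calculation follows; the function name is hypothetical and the graph is assumed to have one entry and one exit.

```c
#include <assert.h>

/* V(G) = E - N + 2 for one connected flow graph with E links and N nodes.
   The graph is assumed to be connected, so it has at least N - 1 links. */
int cyclomatic_complexity(int links, int nodes)
{
    assert(nodes > 0);
    assert(links >= (nodes - 1));
    return links - nodes + 2;
}
```

A function consisting of a single basic block (one node, no links) gives V(G) = 1. A simple if/else with a decision node, two branch nodes, and a join node (four nodes, four links) gives V(G) = 2, matching the two regions visible on its flow graph.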

6.3.1.5 Identifying Structured Programming Templates—Structured Analysis

The concept of “structured” programming has been around since the 1960s, derived particularly from work by Böhm and Jacopini, and Edsger Dijkstra. In its modern implementation “structured elements” are defined as those constructs within the code that adhere to one of six “structured programming templates” as illustrated in Fig. 11.

Structured analysis is an iterative process where the static flow graph is repeatedly assessed to see whether it is possible to match one of the structured programming templates to a part of it. If so, that is “collapsed” to a single node as illustrated in Fig. 12.

The process is repeated until no more templates can be matched.

6.3.1.6 Essential Knots and Essential Cyclomatic Complexity

If this modified static flow graph is then used as the basis for knots and cyclomatic complexity calculations the resulting metrics are known as essential knots and essential cyclomatic complexity, respectively.

If there is only one node on any static flow graph it will exhibit no knots and only one region, meaning that a perfectly structured function will always have no essential knots and an essential cyclomatic complexity of 1.

The converse of this “perfect” result is that the essential measures will be greater than 0 and 1, respectively, showing that the code is not structured and hence may be unnecessarily complex.

6.4 Understanding Dynamic Analysis

As previously discussed, dynamic analysis involves the execution of some or all of the application source code. It is useful to consider some of the more widely used techniques that fall within this domain.

One such technique is a system-level functional test that defines perhaps the oldest test genre of them all. Simply described, when the code is written and completed the application is exercised using sample data and the tester confirms that everything works as it should.

The problem with applying this approach in isolation is that there is no way of knowing how much of the code has actually been exercised. Structural coverage analysis addresses this problem by reporting which areas of the application source code have been exercised by the test data and, more importantly, which areas have not. In its simplest form structural coverage analysis is reported in the form of statement coverage. More sophisticated reporting mechanisms can then build upon this to report coverage of decision points and perhaps even control flow paths.

Unit test is another widely used dynamic analysis technique that has been around almost as long as software development itself. The cornerstone of this technique at its most basic is that each application building block (unit), whether an individual procedure, function, or class, is built and executed in isolation with test data to make sure that it does just what it should do without any confusing input from the remainder of the application.

To support the necessary isolation of this process there needs to be a harness program to act as a holding mechanism that calls the unit, details any included files, “stubs” any procedure called by the unit, and prepares data structures for the unit under test to act upon.
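The parts of such a harness can be sketched by hand in C. Everything here is hypothetical and chosen only for illustration: is_over_temperature is the unit under test, read_temperature is a procedure it calls (stubbed so that no hardware is needed), and run_case is the harness entry point that prepares the data, calls the unit, and checks the result.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Stub: stands in for a driver procedure called by the unit. */
static int16_t stub_temperature;   /* value the stub will return     */
static uint32_t stub_call_count;   /* number of calls made by the unit */

int16_t read_temperature(void)
{
    stub_call_count++;
    return stub_temperature;
}

/* Unit under test (hypothetical); normally held in its own source file. */
bool is_over_temperature(int16_t limit)
{
    return read_temperature() > limit;
}

/* Harness: prepare the data, call the unit, and compare with expectations. */
bool run_case(int16_t stubbed_reading, int16_t limit, bool expected)
{
    stub_temperature = stubbed_reading;
    stub_call_count = 0U;

    const bool actual = is_over_temperature(limit);

    /* The unit must give the expected answer and call the stub exactly once. */
    return (actual == expected) && (stub_call_count == 1U);
}

/* A small set of cases, including the boundary where reading == limit. */
void run_all_cases(void)
{
    assert(run_case(90, 85, true));
    assert(run_case(85, 85, false));
    assert(run_case(-5, 0, false));
}
```

Even for a unit this small the harness is longer than the code it tests, which gives a sense of the effort involved when it is written manually.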

Not only is creating that harness from first principles a laborious task, but it also takes a lot of skill. More often than not the harness program requires at least as much testing as the unit under test.

Perhaps more importantly, a fundamental requirement of software testing is to provide an objective, independent view of the software. The very intimate code knowledge required to manually construct a harness compromises the independence of the test process, undermining the legitimacy of the exercise.

6.5 The Legacy From High-Integrity Systems

In developing applications for the medical, railway, aerospace, and defense industries, unit test is a mandatory part of a software development cycle—a necessary evil. For these high-integrity systems, unit test is compulsory and the only question is how it might be completed in the most efficient manner possible. It is therefore no coincidence that many of the companies developing tools to provide such efficiency have grown from this niche market.

In more mundane environments, perceived wisdom is often that unit testing is a nice idea in principle, but commercially unjustifiable. A significant factor in that stance is the natural optimism that abounds at the beginning of any project. At that stage why would anyone spend money on careful unit testing? There are great engineers in the team, the design is solid, and sound management is in place. What could possibly go wrong?

However, things can and do go wrong, and while unit test cannot guarantee success it can certainly help to minimize failure. It therefore makes sense to consider the principles proven to provide quick and easy unit tests in high-integrity systems in the context of less demanding environments.

6.6 Defining Unit, Module, and Integration Test

For some the terms “unit test” and “module test” are synonymous. For others the term “unit” implies the testing of a single procedure, whereas “module” suggests a collection of related procedures, perhaps designed to perform some particular purpose within the application.

Using the latter definitions manually developed module tests are likely to be easier to construct than unit tests, especially if the module represents a functional aspect of the application itself. In this case most of the calls to procedures are related and the code accesses related data structures, which makes the preparation of the harness code more straightforward.

Test tools render the distinction between unit and module tests redundant. It is perfectly possible to test a single procedure in isolation and equally possible to use the exact same processes to test multiple procedures, a file or multiple files of procedures, a class (where appropriate), or a functional subset of an entire system. As a result the distinction between unit and module test is one that has become increasingly irrelevant to the extent that the term “unit test” has come to include both concepts.

This flexibility facilitates progressive integration testing. Procedures are first unit-tested and then collated as part of the subsystems, which in turn are brought together to perform system tests.

It also provides options when a pragmatic approach is required for less critical applications. A single set of test cases can exercise a specified procedure, all procedures called as a result of exercising the single procedure as illustrated in Fig. 13, or anything in between. Test cases that prove the functionality of the whole call chain are easily constructed. Again, it is easy to “mix and match” the processes depending on the criticality of the code under review.

6.7 Defining Structural Coverage Analysis

The structural coverage analysis approach is all about ensuring that enough testing is performed on a system to meet its quality objectives.

For the most complete testing possible it is necessary to ensure that every possible execution path through the code under test is executed at least once. In practice, this is an unachievable aim. An observation made by G.J. Myers in 1976 explains why this is so; Myers described a 100-line program that had 10^18 unique paths. For comparative purposes he noted that the universe is only about 4 × 10^17 s old. With this observation Myers concluded that complete software execution path testing is impossible, so an approximate alternative and another metric are required to assess testing completeness.

Structural coverage analysis has proven to be an excellent technique for that purpose.

The closest structural coverage analysis metric to the 100% execution path ideal is based on the linear code sequence and jump (LCSAJ) software analysis technique, or jump-to-jump path (JJ-path) coverage as it is sometimes described. LCSAJ analysis identifies sections of code that have a single input path and a single output path, referred to as an interval. Within each interval each possible execution path is then identified. A structural coverage analysis metric is then determined by measuring which of these possible execution paths within an interval have been executed.

As with all these metrics the use of tools for measuring structural coverage analysis greatly increases measurement efficiency, effectiveness, and accuracy. In addition, the visualization of results provides excellent feedback on what additional test cases are required to improve overall coverage measurements. Test tools generally use coloring to represent coverage information. In Fig. 14 and elsewhere in this chapter black nodes and solid branch lines represent exercised code.

From these results it is a straightforward exercise to determine which test data need to be generated to exercise the remaining “cold” paths, and the same results make it straightforward to generate the quality-oriented reports required for certification.

6.8 Achieving Code Coverage With Unit Test and System Test in Tandem

Traditionally, many applications have been tested by functional means only—and no matter how carefully the test data are chosen the percentage of code actually exercised can be very limited.

That issue is compounded by the fact that the procedures tested in this way are only likely to handle data within the range of the current application and test environment. If anything changes a little—perhaps in the way the application is used or perhaps as a result of slight modifications to the code—the application could be running an entirely untested execution path in the field.

Of course, if all parts of the system are unit-tested and collated on a piecemeal basis through integration testing, then this will not happen. But what if timescales and resources do not permit such an exercise?

The more sophisticated unit test tools provide the facility to instrument code. This instrumented code is equipped to “track” execution paths, providing evidence of the parts of the application that have been exercised during execution. Such an approach provides the information to produce data such as those depicted in Fig. 14.
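The principle of instrumentation can be illustrated with a hand-written sketch. Test tools insert their probes automatically and record far more detail; here the probe function, the probe numbering, and the example function clamp_to_limit are all hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define PROBE_COUNT 3U

static bool probe_hit[PROBE_COUNT];   /* one flag per instrumented point */

/* Record that execution has reached the instrumented point 'id'. */
static void probe(size_t id)
{
    assert(id < PROBE_COUNT);
    probe_hit[id] = true;
}

/* Hypothetical function under test, instrumented at entry and on each branch. */
int32_t clamp_to_limit(int32_t value, int32_t limit)
{
    probe(0U);                /* function entry */
    if (value > limit)
    {
        probe(1U);            /* true branch */
        value = limit;
    }
    else
    {
        probe(2U);            /* false branch */
    }
    return value;
}

/* Percentage of instrumented points reached so far (rounded down). */
uint32_t coverage_percent(void)
{
    uint32_t hits = 0U;

    for (size_t i = 0U; i < PROBE_COUNT; i++)
    {
        if (probe_hit[i])
        {
            hits++;
        }
    }
    return (hits * 100U) / PROBE_COUNT;
}
```

A single call with a value below the limit reaches two of the three probes; only when a second call exceeds the limit does the reported coverage reach 100%.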

Code coverage is an important part of the testing process in that it shows the percentage of the code that has been exercised and proven during test. Proof that all code has been exercised correctly need not be based on unit tests alone. To that end some unit tests can be used in combination with system tests to provide a required level of execution coverage for a system as a whole.

Unit tests can complement system tests to execute code that would not normally be exercised in the running of the application. Examples include defensive code (e.g., to prevent crashes due to inadvertent division by zero), exception handlers, and interrupt handlers.

6.8.1 Unit Test and System Test in Tandem—Case Study

Consider the following function, taken from a lighting system written in C++. Line 7 includes defensive code designed to ensure that a divide by zero error cannot occur:

 1 Sint_32 LampType::GetPowerRequired(const Float_64 LumensRequired) const
 2 /* Assume a linear deterioration of efficiency from HighestPercentOutput lm/W output from each lamp at
 3    maximum output, down to LowestPercentOutput lm/W at 20% output. Calculate power required based on
 4    the resulting interpolation. */
 5 {
 6     Sint_32 Power = 0;
 7     if (((mMaximumLumens-mMinimumLumens)>Small) && (LumensRequired>=mMinimumLumens))
 8     {
 9         Power = (Sint_32)(mMinimumPower + (mMaximumPower-mMinimumPower)*
10             ((LumensRequired-mMinimumLumens)/(mMaximumLumens-mMinimumLumens)));
11     }
12     return Power;
13 }

The dynamic flow graph for this function after system test shows that most of the statements and control flow decisions have been exercised as part of system test. However, in a correctly configured system the values of “mMaximumLumens” and “mMinimumLumens” will never be similar enough to force the defensive aspect of the code to be exercised (Fig. 15).

A unit test that forces the defensive branch to be taken can be used to complement the code coverage achieved during system test (Fig. 16).

The coverage from the unit test and system test can then be combined so that full coverage is demonstrated (Fig. 17).
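To make the idea concrete, the following sketch recasts the function in plain C and adds two unit tests, one for the normal path and one that deliberately misconfigures the lamp so that the defensive branch is taken. It is an illustration under stated assumptions, not the case study code: the struct layout, the snake_case names, the value of SMALL, and the lamp data are all hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define SMALL 1.0e-6   /* assumed tolerance; the original value is not given */

/* Hypothetical C stand-in for the LampType class members. */
typedef struct
{
    double minimum_lumens;
    double maximum_lumens;
    double minimum_power;
    double maximum_power;
} LampType;

int32_t get_power_required(const LampType *lamp, double lumens_required)
{
    int32_t power = 0;

    assert(lamp != NULL);
    /* Defensive check: skip the division when the lumen range is (nearly) zero. */
    if (((lamp->maximum_lumens - lamp->minimum_lumens) > SMALL) &&
        (lumens_required >= lamp->minimum_lumens))
    {
        power = (int32_t)(lamp->minimum_power +
                (lamp->maximum_power - lamp->minimum_power) *
                ((lumens_required - lamp->minimum_lumens) /
                 (lamp->maximum_lumens - lamp->minimum_lumens)));
    }
    return power;
}

/* Normal path: the kind of data a system test would be expected to supply. */
void test_normal_interpolation(void)
{
    const LampType lamp = { 100.0, 1000.0, 10.0, 100.0 };
    assert(get_power_required(&lamp, 550.0) == 55);
}

/* Defensive path: equal lumen limits cannot occur in a correctly configured
   system, so only a unit test can force this branch. */
void test_defensive_branch(void)
{
    const LampType misconfigured = { 500.0, 500.0, 10.0, 100.0 };
    assert(get_power_required(&misconfigured, 500.0) == 0);
}
```

Run together, the two tests exercise both outcomes of the decision, which is the combination of coverage that the system test alone could not provide.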

6.9 Using Regression Testing to Ensure Unchanged Functionality

During the course of development, ongoing changes can compromise the functionality of software that is considered complete.

As software evolves it is therefore essential to keep reapplying existing tests and monitor the subsequent test outcomes against previously determined expected results. This is a process known as regression testing. Often this is achieved by using test case files to store sequences of tests, and it is then possible to recall and reapply them to any revised code to prove that none of the original functionality has been compromised.

Once configured, more sophisticated regression test processes can be initiated as a background task and run perhaps every evening. Reports can highlight any changes to the output generated by earlier test runs. In this way any code modifications leading to unintentional changes in application behavior can be identified and rectified immediately and the impact of regression tests against other, concurrent, test processes can be kept to a minimum.
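The mechanism of storing test cases and reapplying them can be sketched as a simple table of inputs and previously accepted outputs. In practice the cases would be read from test case files; here they are held in an array, and the function under test (scale_percent) and all other names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical function whose behavior must not change between releases. */
int32_t scale_percent(int32_t value, int32_t percent)
{
    return (value * percent) / 100;
}

/* One stored test case: the inputs and the previously accepted result. */
typedef struct
{
    int32_t value;
    int32_t percent;
    int32_t expected;
} RegressionCase;

/* Baseline captured from an earlier, accepted version of the code. */
static const RegressionCase baseline[] = {
    { 200, 50, 100 },
    { 0, 75, 0 },
    { -40, 25, -10 },
};

/* Reapply every stored case and return the number of changed results. */
size_t run_regression(const RegressionCase cases[], size_t count)
{
    size_t failures = 0U;

    assert(cases != NULL);
    for (size_t i = 0U; i < count; i++)
    {
        if (scale_percent(cases[i].value, cases[i].percent) != cases[i].expected)
        {
            failures++;
        }
    }
    return failures;
}

/* Convenience wrapper suitable for a nightly background run. */
size_t run_baseline(void)
{
    return run_regression(baseline, sizeof(baseline) / sizeof(baseline[0]));
}
```

A return value of zero indicates that the revised code still reproduces every stored result; any other value identifies how many cases need investigation.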

Modern unit test tools come equipped with user-friendly, point-and-click graphical user interfaces, which are easy and intuitive to use. However, a GUI is not always the most efficient way to implement the thousands of test cases likely to be required in a full-scale development. In recognition of this, the more sophisticated test tools are designed to allow these test case files to be directly developed from applications such as Microsoft Excel. As before, the “regression test” mechanism can then be used to run the test cases held in these files.

6.10 Unit Test and Test-Driven Development

In addition to proving developed code, unit test tools can be used to develop test cases for code that is still in the conception phase, an approach known as test-driven development (TDD). As illustrated, TDD is a software development technique that uses short development iterations based on prewritten unit test cases that define desired improvements or new functions. Each iteration produces code necessary to pass the set of tests that are specific to it. The programmer or team refactors the code to accommodate changes (Fig. 18).

6.11 Automatically Generating Test Cases

Unit tests are usually performed to demonstrate adherence to requirements, to show that elements of the code perform the function they were designed to perform.

Generally, then, the output data generated through unit tests are important in themselves, but this is not necessarily always the case.

There may be occasions when the fact that the unit tests have successfully been completed is more important than the test data themselves. To address these circumstances as efficiently as possible, the more sophisticated unit test tools can generate test cases automatically, based on information gleaned by means of the initial static analysis of the software under test. For example:

- Source code may be required to pass robustness tests.

- The functionality of source code may already be proven, but the required level of code coverage is unsatisfied.

- A “personality profile” of source code may be required prior to the modification of source code. Sequences of test cases can be generated based on the unchanged code and then exercised again when the source has been modified to prove that there has been no inadvertent detrimental effect on existing functionality.

To tune test cases generated in this way, tools provide a range of options to allow different aspects of the code to be considered. For example, options may include

- generation of test cases to exercise upper and lower boundary values;

- generation of minimum/mean/maximum values; and

- generation of the optimal number of test cases in maximizing code coverage.
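The first two options can be illustrated with a small sketch that derives five input values from the declared range of a parameter. This is not how any particular tool works; the function name, the choice of five values, and the use of the midpoint are assumptions made for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define BOUNDARY_VECTOR_COUNT 5U

/* Fill 'out' with: lower bound, lower bound + 1, midpoint, upper bound - 1,
   and upper bound. The range must contain at least three values. */
void boundary_values(int32_t lower, int32_t upper,
                     int32_t out[BOUNDARY_VECTOR_COUNT])
{
    /* Widen to 64 bits so that upper - lower cannot overflow. */
    const int64_t span = (int64_t)upper - (int64_t)lower;

    assert(out != NULL);
    assert(span >= 2);

    out[0] = lower;
    out[1] = lower + 1;
    out[2] = (int32_t)((int64_t)lower + (span / 2));
    out[3] = upper - 1;
    out[4] = upper;
}
```

For a parameter documented as lying between 0 and 100, the sketch produces 0, 1, 50, 99, and 100. Each value still needs an expected result before it becomes a meaningful test case.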

6.11.1 A Word of Caution

It would be easy to view the automatic generation of test cases as a potential “silver bullet,” an answer to all possible test questions with the minimum of effort.

It certainly represents an easy way to generate test cases, although it does require caution to ensure, for instance, that test cases do not result in infinite loops or null pointers.

However, there is an intrinsic compromise in the basic premise. The tests themselves are based on the source code—not on any external requirements. Detractors of the technique might argue that automatically generated test cases prove only that the source code does what it was written to do and that they would prove nothing if interpretation of the requirements were to be fundamentally flawed.

It is clearly true that such a test process compromises the principle of test independence and it is certainly not being suggested that automatically generated tests can or should replace functional testing, either at the system or unit test level.

However, once the test cases have been generated they can be executed in an identical manner to that provided for conventionally defined unit tests. The input to and output from each of the test cases are available for inspection, so that the correctness of the response from the software to each generated case can be confirmed if required.

7 Setting the Standard

Recent quality concerns are driving many industries to start looking seriously at ways to improve the quality of software development. Not surprisingly, there are marked differences not only in the quality of software in the different sectors, but also in the rate of change of that quality. For example, both the railway and process industries have long had standards governing the entire development cycle of electrical, electronic, and programmable electronic systems, including the need to track all requirements. On the other hand, although a similar standard in the automotive sector was a relatively recent introduction, there has been an explosion in demand for very high–quality embedded software as it moves toward autonomous vehicle production.

The connectivity demanded by autonomous vehicles has also become a significant factor across other safety-critical sectors. The advent of the Industrial Internet of Things (IIoT), connected medical devices, and connectivity in aircraft has seen a new emphasis on a need for security in parallel with functional safety.

Clearly, a safety-critical device cannot be safe if it is vulnerable to attack, but the implications go beyond that. For example, the vulnerability of credit card details held in an automotive head unit does not represent a safety issue, but it demands a similar level of care in software development to ensure that they are protected.

In short, the connected world poses threats to product safety and performance, data integrity and access, privacy, and interoperability.

7.1 The Terminology of Standards

In layman’s terms there are documents that define how a process should be managed and standards that dictate the instructions and style to be used by programmers in the process of writing the code itself.

These groups can be further subdivided. For example, there are many collections of these instructions for the use of development teams looking to seek approval for their efforts efficiently. But what are these collections of rules called collectively?

Unfortunately, there is little consensus for this terminology among the learned committees responsible for what these documents are actually called.

The MISRA C:2012 document, for example, is entitled “Guidelines for the use of the C language in critical systems” and hence each individual instruction within the document is a “guideline.” These guidelines are further subdivided into “rules” and “directives.”

Conversely, the HIC++ document is known as a “coding standards manual” and calls each individual instruction a “rule.”

To discuss and compare these documents it is therefore necessary to settle on some umbrella terminology to use for them collectively. For that reason this chapter refers to “Process standards,” “Coding standards,” and “Coding rules” throughout and distinguishes “internal standards” used within an organization from “recognized standards” such as those established by expert committees.

7.2 The Evolution of a Recognized Process Standard

It is interesting to consider the evolution of the medical software standard IEC 62304 because it mirrors earlier experience in many other sectors.

The US government is well aware of the incongruence of the situation and is considering ways to counter it with the Drug and Device Accountability Act (http://www.govtrack.us/congress/bill.xpd?bill=s111-882). Recently, the FDA took punitive action against Baxter Healthcare and their infusion pumps, which the FDA has forced the company to recall (https://www.lawyersandsettlements.com/lawsuit/baxter-colleague-infusion-pumps-recall.html).

The net result is that many medical device providers are being driven to improve their software development processes as a result of commercial pressures. In short, they are doing so because it affects the “bottom line.”

A common concept in the standards applied in the safety-critical sectors is the use of a tiered, risk-based approach for determining the criticality of each function within the system under development (Fig. 19). These grades, typically known as safety integrity levels (SILs) and usually four or five in number, specify the necessary safety measures to avoid an unreasonable residual risk in the whole system or a system component. The SIL is assigned based on the risk of a hazardous event occurring depending on the frequency of the situation, the impact of possible damage, and the extent to which the situation can be controlled or managed (Fig. 20).

For a company to make the transition to developing certified software, it must integrate the standard’s technical safety requirements into its design. To ensure that a design follows the standard, a company must be able to outline the fulfillment of these safety requirements from design through coding, testing, and verification.

To ease the adoption of this standard and manage the shift in requirements many companies use gap analysis. Gap analysis begins by gathering and analyzing data to gauge the difference between where the business is currently and where it wants to be. Gap analysis examines operating processes and artifacts generated, typically employing a third party for the assessment. The outcome will be notes and findings on which the company or individual project may act.

7.2.1 ISO 26262 Recognized Process Standard—Case Study

In parallel with the advances in the medical device sector, and in response to the increased application of electronic systems to automotive safety-critical functions, the ISO 26262 standard was created to meet needs specific to the application sector of electrical/electronic/programmable electronic (E/E/PE) systems within road vehicles.

In addition to its roots in the IEC 61508 generic standard it has much in common with the DO-178B/DO-178C standards seen in aerospace applications. In particular, the requirement for MC/DC (Modified Condition/Decision Coverage—a technique to dictate the tests required to adequately test lines of code with multiple conditions) and the structural coverage analysis process is very similar.

Safety is already a significant factor in the development of automobile systems. With the ever-increasing use of E/E/PE systems in areas such as driver assistance, braking and steering systems, and safety systems this significance is set to increase.

The standard provides detailed industry-specific guidelines for the production of all software for automotive systems and equipment, whether it is safety critical or not. It provides a risk management approach including the determination of risk classes (automotive safety integrity levels, ASILs).

There are four levels of ASILs (A–D in ISO 26262) to specify the necessary safety measures for avoiding an unreasonable residual risk, with D representing the most stringent level.

The ASIL is a property of a given safety function—not a property of the whole system or a system component. It follows that each safety function in a safety-related system needs to have an appropriate ASIL assigned, with the risk of each hazardous event being evaluated based on the following attributes:

- frequency of the situation (or “exposure”)

- impact of possible damage (or “severity”)

- controllability.

Depending on the values of these three attributes the appropriate ASIL for a given functional defect is evaluated. This determines the overall ASIL for a given safety function.
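The way the three attributes combine can be sketched in a few lines of C. This is a minimal illustration rather than a normative implementation. It assumes the class ranges commonly used with ISO 26262 (severity S1 to S3, exposure E1 to E4, controllability C1 to C3), which are not spelled out in this chapter, and it uses a widely quoted additive shorthand for the ASIL determination table. The names `asil_t` and `determine_asil` are hypothetical, and the table in Part 3 of the standard remains the authority.

```c
#include <stddef.h>

/* Hypothetical type: QM means no ASIL is assigned and normal quality
 * management is considered sufficient. */
typedef enum { ASIL_QM, ASIL_A, ASIL_B, ASIL_C, ASIL_D } asil_t;

/*
 * Assumed class ranges (not taken from this chapter):
 *   severity        1..3  (S1..S3)
 *   exposure        1..4  (E1..E4)
 *   controllability 1..3  (C1..C3)
 *
 * Returns 0 and writes the result to *asil on success, or -1 if any
 * argument is out of range. Rejecting bad input is safer than silently
 * mapping it to QM.
 */
int determine_asil(int severity, int exposure, int controllability,
                   asil_t *asil)
{
    if (asil == NULL ||
        severity < 1 || severity > 3 ||
        exposure < 1 || exposure > 4 ||
        controllability < 1 || controllability > 3) {
        return -1;
    }

    /* Additive shorthand for the determination table: the higher the
     * sum of the three class indices, the more stringent the ASIL. */
    switch (severity + exposure + controllability) {
    case 10: *asil = ASIL_D;  break;
    case 9:  *asil = ASIL_C;  break;
    case 8:  *asil = ASIL_B;  break;
    case 7:  *asil = ASIL_A;  break;
    default: *asil = ASIL_QM; break;
    }
    return 0;
}
```

Under these assumptions, a hazardous event rated S3, E4, C3 would yield ASIL D, whereas the same severity with the lowest exposure and moderate controllability (S3, E1, C2) would fall back to QM.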

ISO 26262 translates these safety levels into safety-specific objectives that must be satisfied during the development process. An assigned ASIL therefore determines the level of effort required to show compliance with the standard. This means that the effort and expense of producing a system critical to the continued safe operation of an automobile (e.g., a steer-by-wire system) is necessarily higher than that required to produce a system with only a minor impact in the case of a failure (e.g., the in-car entertainment system).

The standard demands a mature development environment that focuses on requirements that are specified in this standard. To claim compliance to ISO 26262 most requirements need to be formally verified, aside from exceptional cases where the requirement does not apply or where noncompliance is acceptable.

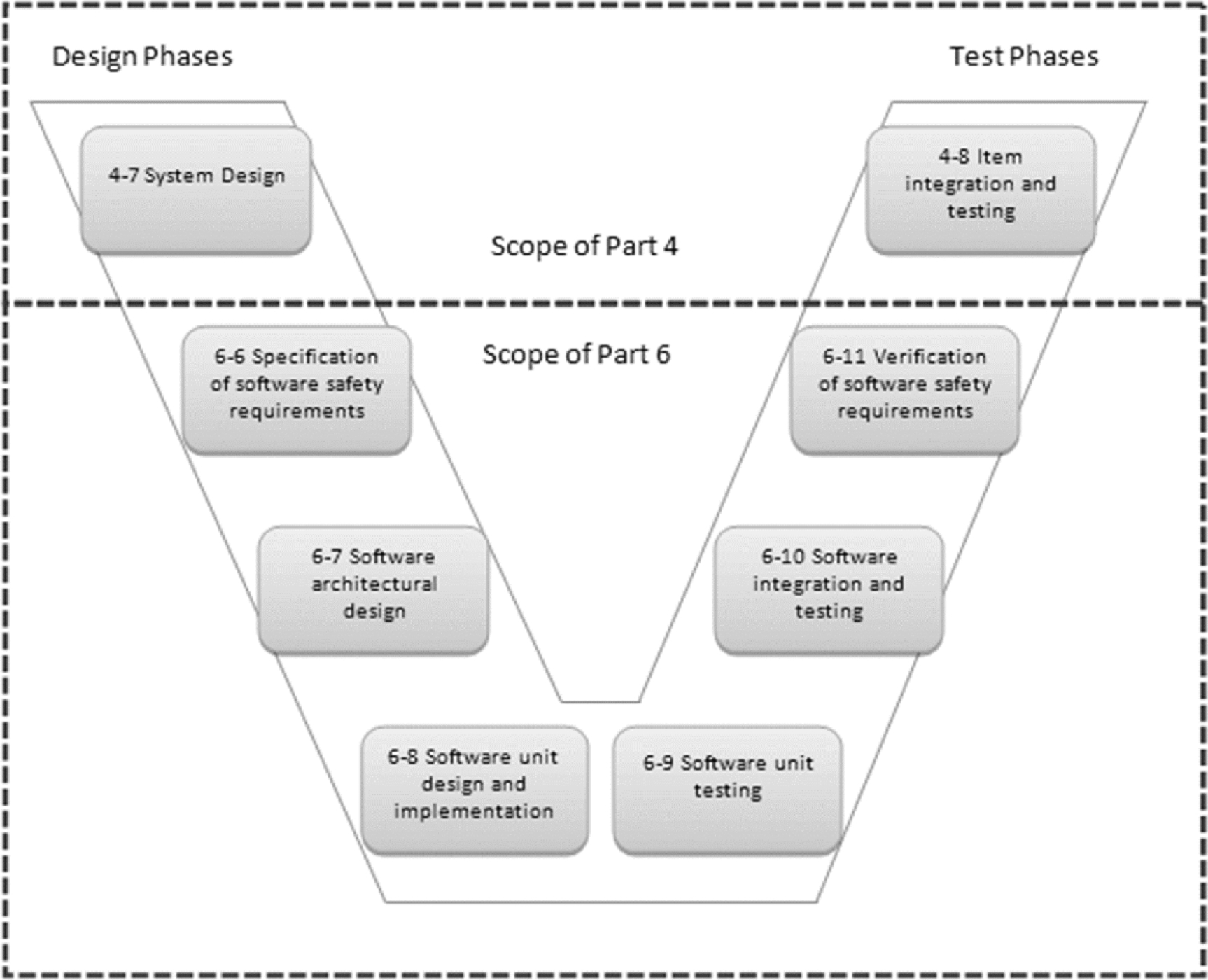

Part 4 of the standard concerns product development at the system level, and Part 6 of the standard concerns product development at the software level. The scope of these documents may be mapped on to any process diagram such as the familiar “V” model (Fig. 21).

Software analysis, requirements management, and requirements traceability tools are usually considered essential for large, international, cost-critical projects.

7.2.2 ISO 26262 Process Objectives

ISO 26262 recognizes that software safety and security must be addressed in a systematic way throughout the software development life cycle. This includes the safety requirements traceability, software design, coding, and verification processes used to ensure correctness, control, and confidence both in the software and in the E/E/PE systems to which that software contributes.

A key element of ISO 26262 (Part 4) is the practice of allocating technical safety requirements in the system design and developing that design further to derive an item integration and testing plan and subsequently the tests themselves. It implicitly includes software elements of the system, with the explicit subdivision of hardware and software development practices being dealt with further down the “V” model.

ISO 26262 (Part 6) refers more specifically to the development of the software aspect of the product. It is concerned with:

- initiation of product development at the software level;

- derivation of software safety requirements from the system level (following from Part 4) and their subsequent verification;

- software architectural design;

- software unit design and implementation;

- software unit testing; and

- software integration and testing.

Traceability (or requirements traceability) refers to the ability to link system requirements to software safety requirements, and then software safety requirements to design requirements, and then to source code and associated test cases. Although traceability is not explicitly identified as a requirement in the main body of the text, it is certainly desirable in ensuring the verifiability deemed necessary in Section 7.4.2. Moreover, the need for “bidirectional traceability” (or upstream/downstream traceability) is noted in the same section.
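As a rough illustration of what bidirectional traceability means in practice, the following C sketch checks a set of trace links in both directions. It is only a sketch under stated assumptions: the `trace_link_t` structure, the function names, and the identifier strings are all hypothetical, and a real requirements traceability tool would also cover design artifacts and source code rather than requirements and tests alone.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical trace link: one software safety requirement that is
 * verified by one test case. */
typedef struct {
    const char *requirement_id;
    const char *test_id;
} trace_link_t;

/* Returns 1 if id appears in the list of known identifiers. */
static int is_known(const char *id, const char *const ids[], size_t n)
{
    for (size_t i = 0; i < n; i++) {
        if (strcmp(id, ids[i]) == 0) {
            return 1;
        }
    }
    return 0;
}

/* Downstream check: count requirements that no test case traces to. */
size_t count_untested_requirements(const char *const reqs[], size_t n_reqs,
                                   const trace_link_t links[], size_t n_links)
{
    size_t missing = 0;
    for (size_t i = 0; i < n_reqs; i++) {
        int covered = 0;
        for (size_t j = 0; j < n_links && !covered; j++) {
            covered = (strcmp(reqs[i], links[j].requirement_id) == 0);
        }
        if (!covered) {
            missing++;
        }
    }
    return missing;
}

/* Upstream check: count test cases that trace to no known requirement. */
size_t count_orphan_tests(const char *const tests[], size_t n_tests,
                          const char *const reqs[], size_t n_reqs,
                          const trace_link_t links[], size_t n_links)
{
    size_t orphans = 0;
    for (size_t i = 0; i < n_tests; i++) {
        int justified = 0;
        for (size_t j = 0; j < n_links && !justified; j++) {
            justified = (strcmp(tests[i], links[j].test_id) == 0) &&
                        is_known(links[j].requirement_id, reqs, n_reqs);
        }
        if (!justified) {
            orphans++;
        }
    }
    return orphans;
}
```

A nonzero result from the first function indicates a requirement with no verification evidence. A nonzero result from the second indicates a test (and possibly code) that exists for no stated reason.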

7.2.3 Verification Tasks

The methods to be deployed in the development of an ISO 26262 system vary depending on the specified ASIL. This can be illustrated by reference to the verification tasks recommendations (as presented in Fig. 22).

Table 1 in Section 5.4.7 of Part 6 recommends that design and coding guidelines are used, and as an example cites the use of the MISRA C coding standard. It lists a number of topics that are to be covered by modeling and design guidelines. For instance, the enforcement of low complexity is highly recommended for all ASILs.

Modern test tools not only have the potential to cover all the obligatory elements for each ASIL, but they also have the flexibility in configuration to allow less critical code in the same project to be associated with less demanding standards. That principle extends to mixed C and C++ code, where appropriate standards are assigned to each file in accordance with its extension (Fig. 23).

Table 12 from Section 9 of Part 6 shows, for instance, that measuring statement coverage is highly recommended for all ASILs, and that branch coverage is recommended for ASIL A and highly recommended for other ASILs. For the highest ASIL D, MC/DC is also highly recommended.

Each of these coverage metrics implies a different level of test intensity. For example, 100% statement coverage is achieved when every statement in the code is executed at least once; 100% branch (or decision) coverage is achieved only when every statement is executed at least once AND each branch (or outcome) of each decision is tested, that is, both false and true branches are executed.
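The difference between the two metrics can be seen with a single `if` statement that has no `else`. In the sketch below, the function `clamp_pressure` and the `outcome_hits` counters are hypothetical; the counters stand in, very loosely, for the probes that a coverage tool would insert automatically.

```c
/* Hypothetical probe data: how often the decision evaluated to
 * false ([0]) and to true ([1]). */
static unsigned outcome_hits[2];

/* Hypothetical function under test: limits a demanded pressure. */
int clamp_pressure(int demand, int limit)
{
    int result = demand;
    int over_limit = (demand > limit);

    outcome_hits[over_limit]++;   /* probe: record the decision outcome */

    if (over_limit) {
        result = limit;           /* the only conditionally executed statement */
    }
    return result;
}

/* Branch coverage of the decision is complete only when both the
 * true and the false outcome have been observed. */
int branch_coverage_complete(void)
{
    return outcome_hits[0] > 0 && outcome_hits[1] > 0;
}
```

A single call such as `clamp_pressure(120, 100)` executes every statement in the function, so statement coverage is already 100%, yet `branch_coverage_complete()` still returns 0 because the false outcome has never been taken. A second call with a demand below the limit, such as `clamp_pressure(50, 100)`, is needed to complete branch coverage.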

Statement, branch, and MC/DC coverage can all be automated through the use of test tools. Some packages can also operate in tandem so that, for instance, coverage can be generated for most of the source code through a dynamic system test, and can be complemented using unit tests to exercise defensive code and other aspects that are inaccessible during normal system operation.
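MC/DC is the most demanding of the three metrics because each condition within a decision must be shown to affect the outcome independently. The following C sketch checks whether a set of test vectors achieves that for one decision. It assumes the "unique-cause" interpretation of MC/DC, in which two tests differ in exactly one condition and produce different outcomes. The decision `brake_assist_enabled` and all other names are hypothetical.

```c
#include <stdbool.h>
#include <stddef.h>

#define N_CONDITIONS 3

/* One test vector: a truth value for each condition in the decision. */
typedef struct {
    bool cond[N_CONDITIONS];
} test_vector_t;

/* Hypothetical decision under test: a && (b || c). */
static bool brake_assist_enabled(const test_vector_t *v)
{
    return v->cond[0] && (v->cond[1] || v->cond[2]);
}

/* True if x and y differ in condition k and in no other condition. */
static bool differ_only_in(const test_vector_t *x, const test_vector_t *y,
                           size_t k)
{
    for (size_t i = 0; i < N_CONDITIONS; i++) {
        bool differs = (x->cond[i] != y->cond[i]);
        if (differs != (i == k)) {
            return false;
        }
    }
    return true;
}

/* True if, for every condition, some pair of tests toggles only that
 * condition and changes the outcome of the decision. */
bool mcdc_achieved(const test_vector_t tests[], size_t n)
{
    for (size_t k = 0; k < N_CONDITIONS; k++) {
        bool shown = false;
        for (size_t i = 0; i < n && !shown; i++) {
            for (size_t j = i + 1; j < n && !shown; j++) {
                shown = differ_only_in(&tests[i], &tests[j], k) &&
                        (brake_assist_enabled(&tests[i]) !=
                         brake_assist_enabled(&tests[j]));
            }
        }
        if (!shown) {
            return false;
        }
    }
    return true;
}
```

For this decision, the four vectors (a, b, c) = (1,1,0), (0,1,0), (1,0,0), and (1,0,1) are sufficient: the first two isolate `a`, the first and third isolate `b`, and the last two isolate `c`. The first two vectors alone already give 100% branch coverage, but they do not satisfy MC/DC.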

Similarly, Table 15 in Section 10.4.6 shows the structural coverage metrics at the software architectural level (Fig. 24).

7.2.4 SAE J3061 and ISO 26262

ISO 26262 requires any threats to functional safety to be adequately addressed, implicitly including those relating to security threats, but it gives no explicit guidance relating to cybersecurity.

At the time of ISO 26262’s publication, that omission was perhaps to be expected. Automotive-embedded applications have traditionally been isolated, static, fixed-function, device-specific implementations, and practices and processes have relied on that status. But the rate of change in the industry has been such that SAE International’s Surface Vehicle Recommended Practice SAE J3061 was much anticipated by the time it was published in 2016.

Note the wording here. SAE J3061 is not a standard. It constitutes “recommended practice,” and at the time of writing there are plans for it to be replaced by an ISO/SAE 21434 standard—the first result of a partnership between the ISO and SAE standards organizations.

SAE J3061 can be considered complementary to ISO 26262 in that it provides guidance on best development practices from a cybersecurity perspective, just as ISO 26262 provides guidance on practices to address functional safety.

It calls for a similarly sound development process to that of ISO 26262. For example, hazard analyses are performed to assess risks associated with safety, whereas threat analyses identify risks associated with security. The considerations for the resulting security requirements for a system can be incorporated in the process described by ISO 26262 Part 8 Section 6: “Specification and management of safety requirements.”

Another parallel can be found in the use of static analysis, which is used in safety-critical system development to identify constructs, errors, and faults that could directly affect primary functionality. In cybersecurity-critical system development, static code analysis is used instead to identify potential vulnerabilities in the code.

7.2.4.1 Beyond Functional Safety

Despite the clear synergy between the two documents it is important to note that SAE J3061 does more than simply formalize the need to include security considerations in functional safety requirements. It is easy to focus on an appropriate process once functional, safety, and security requirements are established, but the significance of malicious intent in the definition of those requirements should not be underestimated.

SAE J3061 emphasizes this point throughout. It argues that cybersecurity is likely to be even more challenging than functional safety, stating that “Since potential threats involve intentional, malicious, and planned actions, they are more difficult to address than potential hazards. Addressing potential threats fully, requires the analysts to think like the attackers, but it can be difficult to anticipate the exact moves an attacker may make.”

Perhaps less obviously, the introduction of cybersecurity into an ISO 26262-like formal development implies the extension of similarly rigorous techniques to applications that are NOT safety critical, and perhaps to organizations with no previous obligation to apply them. SAE J3061 discusses privacy in general and personally identifiable information (PII) in particular, and highlights both as key targets for a bad actor of no less significance than the potential compromise of safety systems.

In practical terms, there is a call for ISO 26262-like rigor in the defense of a plethora of personal details potentially accessed via a connected car, including personal contact details, credit card and other financial information, and browsing histories. It could be argued that this is an extreme example of the general case cited by SAE J3061, which states that “…there is no direct correspondence between an ASIL rating and the potential risk associated with a safety-related threat.”

Not only does SAE J3061 bring formal development to less safety-critical domains, it also extends the scope of that development far beyond the traditional project development life cycle. Consideration of incident response processes, over-the-air (OTA) updates, and changes in vehicle ownership are all examples of that.

7.3 Freedom to Choose Adequate Standards

Not every development organization of every application is obliged to follow a set of process or coding standards that has been laid down by a client or a regulatory body. Indeed, it is probably reasonable to suggest that most are not in that position.

However, everyone wants their software to be as sound and as robust as possible. Even if it is a trivial application to be used by the writer as a one-off utility, no developer wants their application to crash. Even if such a utility expands into an application with wider uses, no one wants to have to deal with product recalls. No one wants to deal with irate end users. Even removing all external factors entirely, most people want the satisfaction of a job well done in an efficient manner.

So, it follows that if the use of process and coding standards is appropriate when safety or security issues dictate that software must be robust and reliable, then it is sensible to adopt appropriate standards even if an application is not going to threaten anyone’s well-being if it fails.

Once that is established a sound pragmatic approach is required to decide what form those standards should take.

7.4 Establishing an Internal Process Standard

Many recognized standards are best suited to deployment within large organizations. However, there are many software development teams that consist of two or three people all resident in the same office. It would clearly be overkill to deploy the same tools and techniques here as in a high-integrity team of hundreds of developers spread across the world.