Human Factors in the Chemical Process Industries

Kathryn Mearns¹, Human Factors Consultant

¹ Corresponding author: email address: [email protected]

Abstract

Human Factors is a multifaceted discipline incorporating ergonomics, engineering psychology, human–machine interaction, the working environment, and human and organizational factors. This chapter provides definitions of the terms associated with human factors, including human error and performance influencing factors. It then discusses the techniques used to identify the potential for human error in the workplace before discussing some of the performance influencing factors under the headings of individual factors, job factors, and organizational factors. Factors discussed include competence and skills, personality, occupational stress, design of control rooms, and procedures. Above all, the importance of understanding the safety culture or safety climate of an organization or site is stressed, as is the role of management at all levels, i.e., senior managers, middle managers, and supervisors. In conclusion, the chapter advocates integrating Human Factors into the safety management system (SMS). This will ensure the proper design of hardware, software, processes, and procedures to facilitate good practice in terms of operation and maintenance. Training and competence and management of change should also be considered as an integrated part of the SMS. Ultimately, although everyone who works in the process industries is responsible for safety, senior management is accountable for safety. They are the individuals who would be found culpable in a court of law in the event of a major incident as testified by the outcomes of Public Inquiries such as Flixborough, Piper Alpha, Texas City, and Deepwater Horizon.

Keywords

Human error; Human factors; Job factors; Individual factors; Organizational factors

1 Introduction

The chemical process industries encompass a number of different hazardous substances and processes, ranging from the manufacture of pharmaceuticals and industrial chemicals through to processing hydrocarbons into petrochemicals. Nevertheless, some common principles are necessary to prevent the major accident hazard (MAH) risks that are inherent in these industries from being realized. These principles have been covered elsewhere in this volume, and this chapter focuses on how the human element can contribute to major accidents and how contributory “performance influencing factors” (PIFs) can be managed and mitigated.

Of course, the chemical process industries are not just susceptible to MAH risks, i.e., loss of containment, fires, etc. The likelihood of an MAH risk being realized is fortunately very small, and the vast majority of incidents in the chemical process industries tend to be occupational injuries arising from slips, trips, and falls. What is relevant about human factors is that the same personal and system failings can lead to occupational injuries as well as to major accident harm. While it is unacceptable for people to suffer any accident or injury at work, the main focus for most human factors work in the chemical process industries is to prevent major accidents. These accidents have not only the potential to affect the people working directly at the plant but also local populations in residence close by and the physical environment.

Major accidents such as Seveso, Flixborough, Bhopal, and Texas City serve to highlight the costs both in human life and financially. For example, 15 people died and 180 were injured at Texas City and the financial losses were in the order of $1.5 billion. The immediate and underlying causes of this accident are well documented in the US Chemical Safety Board Report (2007). In relation to the “Key Issues” identified as being underlying causes of this accident, it is worth noting that safety culture and human factors are two of the four, the other two being process safety metrics and regulatory oversight. The panel reports that simply focusing on the actions of the frontline operators at the plant and their immediate supervisors misses the point. Tackling the underlying causes of the accident such as a poor safety culture; overfocus on occupational safety indicators rather than process safety indicators; and a cost-cutting regime and lack of incident reporting will have a greater impact on preventing future major accidents. This recommendation is supported by the incident investigations of other major accidents over the decades in a number of safety critical industries.

This chapter is organized in the following way. First, there is an outline of the human factors definitions and terminology, to ensure that readers have a consistent understanding of the terms used throughout the chapter. Second, there is a section on human error and violations (also known as noncompliances/nonconformances), which are often the final triggering actions that can lead to an adverse event. Third, there is a description of the factors that can influence human performance (so-called PIFs), which need to be managed in order to keep the risk of human error as low as reasonably practicable. Finally, techniques used to measure and manage human error and PIFs are discussed throughout the chapter.

2 Human Factors Definitions and Terminology

Human Factors (HF) or Human and Organizational Factors (HOF), as it is sometimes called, is a wide-ranging discipline incorporating elements of psychology, physiology, ergonomics, sociology, engineering, and management science. The discipline is often labeled Ergonomics or Human Factors Engineering, but in reality its scope is much broader than that. There is an increasing emphasis on the knowledge, skills, and techniques that characterize social sciences such as sociology and management studies, i.e., qualitative techniques such as interviews, focus groups, and workshops. These complement the quantitative techniques traditionally associated with the measurement and management of human error and human factors, e.g., Human Reliability Analysis (HRA), Human Error Quantification, and questionnaire survey design and deployment.

For the purposes of this chapter, the terminology used in research and assessment of human factors is clarified below since many of these terms are used interchangeably and inconsistently in the literature:

1. “Ergonomics” literally means “laws of work” and is the term mainly used in Europe. The term Human Factors originated in the United States but is now used more widely. Ergonomics tends to be associated more with physical workplace assessment, which can also be referred to as Human Factors Engineering.

2. Cognitive Ergonomics or Engineering Psychology emphasizes the study of cognitive or mental aspects of work, especially where there is a high level of human–machine interaction, automation, decision making, and mental workload.

3. Human–Machine Interaction or Human–Computer Interaction is the applied study of how people interact with technology.

4. Working Environment emphasizes the environmental and task factors that influence human performance.

5. Human and Organizational Factors (HOF) emphasizes the organizational aspects that influence human performance such as leadership style, management systems, safety culture and climate, training and competency arrangements, incident reporting systems, behavioral safety, and human resource practices. As such, HOF mainly concerns itself with PIFs, which will be covered later in the chapter.

Members of the human factors community will often specialize in more than one of these subdisciplines and will be familiar with a range of different techniques to measure and manage both human error and PIFs.

The UK Health and Safety Executive (HSE), the UK regulator, considers Human Factors to encompass “environmental, organizational and job factors, and human and individual factors, which influence behavior at work in a way which can affect health and safety” (HSE, 1999). Using this definition as a basis, the HSE has grouped human factors issues under three themes (see Table 1).

Table 1

Human Factors Issues According to HSG48 (HSE, 1999)

| Individual | Job | Organization |
| --- | --- | --- |
| Competence | Task | Culture |
| Skills | Workload | Leadership |
| Personality | Environment | Work patterns |
| Attributes | Displays and controls | Resources |
| Risk Perception | Procedures | Communications |

Table 1 provides a useful framework for considering the topic of human factors, although it is often difficult (and indeed inadvisable) to consider these factors in isolation, since they are often interrelated and interact with each other. For example, “competence” could be considered an individual responsibility, in the sense that individuals should ensure that they are trained and competent for the tasks they are involved in. It could also be considered an organizational responsibility, in the sense that the organization should ensure that arrangements are in place for its staff to be properly trained and competent for their roles and responsibilities.

It is not possible to discuss all the issues in Table 1 in great detail; therefore, this chapter will only focus on a few issues under each heading. For individual factors, the focus will be on skills and personality, thereby covering competence and attributes. For job factors the focus will be on displays and controls and procedures, thereby including task and environment. For organizational factors the focus will be on culture and leadership, thereby encompassing resources and communication, both of which are key aspects of leadership.

One of the first points to be addressed is the definition of human error (human failure), which can be caused by many of the PIFs outlined in Table 1.

2.1 Human Error

Professor James Reason defines Human Error as “a generic term to encompass all the occasions in which a planned sequence of mental or physical activities fails to achieve its intended outcome and when these failures cannot be attributed to the intervention of some chance agency” (Reason, 1991, p. 9).

Reason further distinguishes between slips, lapses, and mistakes. Slips are associated with faulty actions, where actions do not proceed as intended, e.g., misreading a display. Lapses are failures of memory, e.g., forgetting to press a switch. Slips and lapses tend to occur during routine tasks in familiar surroundings where the operator may be on “automatic pilot” or attention is captured by something other than the task in hand, e.g., a work colleague distracting the operator with a question. They are categorized as “skill-based” errors and are relatively easy to recover from because the operator will receive direct feedback that their actions have not led to the anticipated outcome.

Mistakes occur when the intended plans for action are wrong in the first place. In other words, intended actions may proceed as planned, but fail to achieve their intended outcome. There are two types of mistake: “rule-based mistakes” and “knowledge-based mistakes.” Rule-based mistakes are where an incorrect diagnostic rule is applied. An example of this is where an experienced operator on a batch reactor may have learned diagnostic rules that are inappropriate for continuous process operations. If an attempt is made to apply these rules to work out the cause of a continuous process upset, a misdiagnosis can occur, leading to an inappropriate action. There is also a tendency for people to apply strong but successful rules that they have applied in the past, even if they are not appropriate for the situation.

Knowledge-based mistakes occur when the information-processing capabilities of the operator are being tested by an unfamiliar situation that has to be worked out from first principles and there are no apparent rules or procedures to deal with the situation. Quite often, only information that is readily available will be used to evaluate the situation or people will depend on a “gut feel” that their course of action is correct, perhaps based on similar but unrelated incidents in the past. Sometimes “attention capture” occurs where the operators become focused on one small part of an overall problem, e.g., at Three-Mile Island. In other situations, operators might switch their attention between one task and another, thereby not really solving the problem.

Mistakes are difficult to detect and are more subtle, complex, and dangerous than slips. Detection may depend on someone else intervening or unwanted consequences becoming apparent.

The final type of “error” is a violation or nonconformance/noncompliance. These are considered “intentional” because the operator deliberately carries out actions that contravene the organization's policies, rules, or safe operating procedures. However, violations often tend to be well intentioned, because their objective is either to complete a task or to simplify it. Fortunately, violations are very rarely conducted in order to sabotage a process or a plant.

It is important to note that violations can occur due to both internal and external pressures on the operator (i.e., pressure to get a task done on time, which can come from the individual or it can come from perceived pressures from supervisors and managers). Violations can be routine, situational, optimizing, or exceptional.

Routine violations occur when violations become the norm, i.e., what is normally done in the workplace. They are learned by others and therefore become embedded as the way of doing things. They are usually shortcuts taken to get the job done more quickly, more efficiently, or more easily. Unless these types of violation are monitored and controlled, the organization can develop a culture that tolerates violations. Ways of counteracting routine violations are through good supervision, proper training (with explanations of why certain procedures are in place), good procedures and work practices, and, as a final measure, behavioral safety programs, which use coaching to reinforce correct behavior and to challenge and change incorrect behavior.

Situational violations tend to occur when there is a gap between what the rules or procedures say should be done, and what is actually available in order to get the job done. For example, a lack of trained and competent staff to conduct a task or lack of procedural clarity can lead to a situational violation. This can often occur when management are unaware of what resources are required, when procedures are out of date, or when there has been a cost-cutting program and staff have been made redundant, thus leading to reduced manning levels. Obviously, the same measures that are applied to manage routine violations can be used to manage situational violations.

Optimizing violations refer to when individuals carry out an activity for personal gain or simply for “kicks,” e.g., seeing how far they can go by testing the system to its limits. However, organizations often provide incentives such as bonuses for meeting production targets, which can encourage “organizational” optimizing violations. If brought out into the open through incident/near miss reporting programs and good communication up and down the organizational hierarchy, these types of violation can help to identify measures that can be taken to improve both production and safety within the organization.

Exceptional violations occur when there is an unusual or unanticipated situation where no rules or procedures apply or where the rules/procedures cannot be applied. Perhaps the most poignant example of an exceptional violation can be seen during the Piper Alpha disaster, where personnel had been told that jumping off the platform into the sea was not survivable. In reality, many of those who jumped into the sea that night did survive, unlike those who followed their procedures and training by staying on the platform and making their way to the muster point in the accommodation block, where they perished.

This example serves to remind us that human beings do not always follow rules and procedures blindly but are capable of interpreting and adapting to situations and solving problems in situ. This inherent behavioral flexibility and adaptability is what keeps us safe in a complex and constantly changing environment, an issue that we will return to later in the chapter.

Human error can be seen as a consequence or outcome of our performance limitations, and we all make errors on an everyday basis. Even highly trained and competent individuals working in control rooms and on maintenance tasks within the chemical process industries are error-prone, but fortunately, most errors are captured before they develop into something more serious. There are a number of challenges facing the human factors specialist. One challenge is to design plant, equipment, and systems that make the chance of human error very low or As Low As Reasonably Practicable (ALARP), i.e., at a point where the costs are not so prohibitive that the benefits are seriously undermined. Another challenge is to identify where human error is most likely to occur, what type of error might occur, and how likely it is to occur. A third challenge is to measure human error and identify what factors make it more likely to occur (the so-called PIFs). A final challenge is to understand how errors, once they have occurred, are identified and recovered from.

The remainder of this chapter covers how these many challenges can be identified and overcome. Due to the diverse and extensive nature of the human factors subject matter, not all of the factors identified by the HSE or other international bodies will be reported here. Those areas in which the author has relevant knowledge and expertise will be the main focus of attention. As a result, most of the discussion will be centered on human performance issues and will cover individual and organizational factors, since these are the areas in which the author has conducted most of her research. Ergonomics and Human Factors Engineering, particularly regarding workplace design, human–machine interaction, and characteristics of the working environment, are not areas that the author is familiar with and therefore will only be discussed in passing. Nevertheless, it is worth noting that “Designing for Humans” (Noyes, 2001) is an important exercise and can potentially prevent the development of many of the human performance issues that will be discussed in this chapter.

2.2 Measuring and Managing Human Error

In a paper prepared for presentation at the Institution of Chemical Engineers (IChemE) Hazards XX symposium in April 2008, Visscher of the US Chemical Safety Board presented summaries of investigations of some of the 50 chemical-related incidents in the United States since 1998. In his conclusions, he notes that many of the incidents occurred in facilities where chemicals are stored and used for other purposes rather than at chemical processing companies per se. He also observes that the controls and safeguards that rely on human judgment and reliability are revealed as a particular area of vulnerability and that management should focus on these issues in their operations. Furthermore, Visscher reports that the major accidents that have occurred at the larger companies have highlighted the important role of corporate leadership and oversight in assuring process safety integrity. Corporate leadership and oversight and the important role they play in maintaining safety are explored later on in this chapter.

The chemical process industries use a number of techniques for measuring and managing human error/human failure. The Center for Chemical Process Safety (CCPS) (1994) has produced a set of guidelines for preventing human error in process safety, to which the interested reader is referred.

This section will only deal with a few of these techniques. It is also important to remember that it is difficult to estimate when and where human failure is likely to occur and that the most effective way to prevent errors is to focus on managing the PIFs in general. Thorough and detailed incident investigation, with a strong focus on human and organizational factors, is important to identify which PIFs should be focused on in order to prevent further incidents. In the author's experience the following PIFs are the most commonly identified contributory factors in major accidents: poor design, poor procedures, lack of supervision, fatigue, poor safety climate/culture and lack of safety leadership, and underinvestment by senior management in safety improvements.

2.3 Human Reliability Analysis

Human factors specialists attempt to measure the likelihood of human error in predetermined situations through HRA and Human Error Probability (HEP). HRA techniques are used to support the minimization of risks associated with human failure. They are both quantitative (e.g., HEP) and qualitative (e.g., safety critical task analysis (SCTA)) in nature; however, the application of quantitative techniques can be difficult because HEPs are often used without sufficient justification. In particular, new processes and new technologies will not have sufficient data available to generate HEPs. SCTA (see Energy Institute, 2011) considers the impact that PIFs can have on the likelihood of error, which will be affected by job and organizational factors such as design of plant and equipment, the quality of procedures, and the time available to get the job done. Using HEPs without task context can therefore lead to inaccuracies in analysis.

Henderson and Embrey (2012) produced guidance for the Energy Institute on Quantified Human Reliability Analysis (QHRA) (see Energy Institute, 2012), in order to reduce instances of poorly developed or executed analyses. These authors recommend an eight-stage Generic HRA process as follows:

1. Preparation and problem definition

2. Task analysis

3. Failure identification

4. Modeling

5. Quantification

6. Impact assessment

7. Failure reduction

8. Review

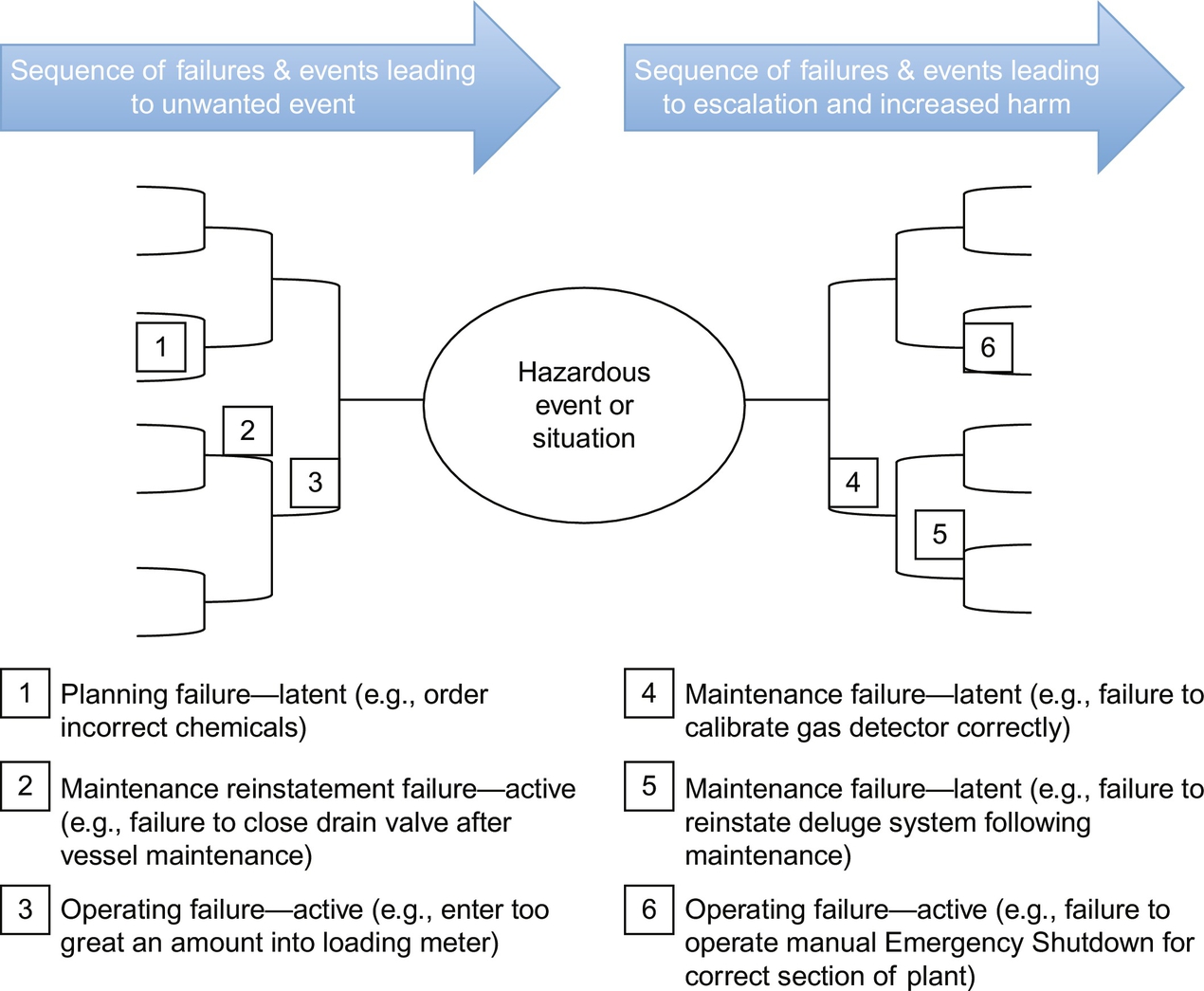

Bow-tie diagrams have become a popular way of illustrating how initiating and response failures can occur. For example, Henderson and Embrey (2012) use the following figure to show how different human failures can affect the initiation and mitigation and escalation of a hypothetical event (see Fig. 1).

From a practical point of view, a number of factors can undermine the validity of an HRA. As a starting point, the analyst will need a thorough understanding of the task and the environment it is conducted in. Therefore, the input of skilled and experienced operators will be required. A walk-through of the tasks and subtasks involved in the activity at the location and/or a detailed talk-through of the tasks and subtasks in a task analysis workshop is necessary. It is important to understand which PIFs might be exerting an influence in the actual working situation. Also, if any HEPs have been imported (usually from a general database if such a database exists), the rationale for including these HEPs must be clearly articulated. It is normal practice to use a set of guidewords to identify the potential failures.

There are a number of guides to HRA (see Health and Safety Executive, 2009; Kirwan, 1994). The HSE (2009) report RR679 provides a review of human reliability assessment methods. Out of a total of 72 human reliability tools identified, the report authors considered 35 to be potentially relevant to hazardous industries such as the chemical process industries. This list was then reduced to 17 tools, most of which had only been applied in the nuclear industry. Only five of these tools were considered to have “Generic” capability, although some of the nuclear tools were considered to have wider application.

Lack of space precludes detailed discussion of all 17 HRA tools covered in RR679, and the interested reader is advised to access the report, which is available on the Health and Safety Executive website, for further details. Two of the tools are discussed here because they are generic or have the potential to be applied more widely than in the nuclear sector: Human Error Assessment and Reliability Technique (HEART) and Technique for Human Error Rate Prediction (THERP). In addition, HEART and THERP are two of the few HRA methods that have been empirically validated (see Kirwan, 1996; Kirwan, Kennedy, Taylor-Adams, & Lambert, 1997).

2.3.1 Human Error Assessment and Reliability Technique

Williams (1985, cited in HSE, 2009) is credited as being the first to refer to HEART in a series of conference papers. According to the review of HRA methods in RR679, HEART has been applied across a number of high-hazard industries where human reliability is critical, including the chemical process industry. It is designed to be relatively quick to apply and is easily understood by both human factors specialists and engineers. HEART describes nine Generic Task Types (GTTs), each with an associated nominal HEP, and 38 Error Producing Conditions (EPCs). EPCs affect task reliability, and each has a maximum amount by which the nominal HEP can be multiplied. The key stages of HEART are the following:

• classify the task for analysis into one of the nine GTTs;

• assign the nominal HEP to the task;

• identify which EPCs may affect task reliability;

• consider the proportion of effect for each EPC;

• calculate the task HEP.

There are a number of premises that have to be taken into consideration when the technique is applied: (1) human reliability will be dependent upon the task to be performed; (2) this level of human reliability will tend to be achieved with a given likelihood within probabilistic limits in perfect conditions; (3) since perfect conditions rarely exist, human reliability will degrade as a function of the extent to which EPCs apply. It should be noted that the total probability of failure should never be more than 1.00, so if the multiplication of factors goes above 1.00, the probability of failure can only ever be assumed to be 1.00.
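The final calculation stage can be sketched in a few lines of Python. The text above does not spell out the formula, so this sketch assumes the commonly cited HEART form: each EPC contributes an assessed effect of (maximum multiplier − 1) × proportion of effect + 1, the nominal HEP is multiplied by every assessed effect, and the result is capped at 1.00. The class name, function name, EPC labels, and all numerical values are illustrative assumptions and are not taken from the HEART tables.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class ErrorProducingCondition:
    """One EPC as judged by the analyst (illustrative structure)."""
    name: str
    max_multiplier: float  # maximum amount by which the nominal HEP can be multiplied
    proportion: float      # assessed proportion of effect, between 0 and 1


def heart_hep(nominal_hep: float, epcs: Sequence[ErrorProducingCondition]) -> float:
    """Return the task HEP, assuming the commonly cited HEART formula."""
    if not 0.0 <= nominal_hep <= 1.0:
        raise ValueError("nominal HEP must be a probability")
    hep = nominal_hep
    for epc in epcs:
        if not 0.0 <= epc.proportion <= 1.0:
            raise ValueError(f"proportion of effect for {epc.name!r} must be in [0, 1]")
        if epc.max_multiplier < 1.0:
            raise ValueError(f"maximum multiplier for {epc.name!r} must be at least 1")
        # Assessed effect: scales between 1 (no effect) and the maximum multiplier.
        hep *= (epc.max_multiplier - 1.0) * epc.proportion + 1.0
    # A probability of failure can never exceed 1.00.
    return min(hep, 1.0)


if __name__ == "__main__":
    # Hypothetical task: nominal HEP and EPC values are made up for illustration.
    conditions = [
        ErrorProducingCondition("time shortage (hypothetical)", 11.0, 0.4),
        ErrorProducingCondition("inexperience (hypothetical)", 3.0, 0.5),
    ]
    print(f"Task HEP: {heart_hep(0.003, conditions):.4f}")
```

With these made-up inputs the assessed effects are 5 and 2, so the nominal HEP of 0.003 rises by a factor of 10. In practice the judgment of each proportion of effect is the step that most needs input from experienced operators.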

2.3.2 Technique for Human Error Rate Prediction

Swain and Guttman (1983) developed THERP for the US Nuclear Regulatory Commission (NRC). According to Kirwan (1994), THERP is a total methodology for assessing human reliability and, like HEART, it has also been validated by Kirwan et al. (1997). The THERP handbook prepared by Swain and Guttman (1983) for the NRC presents methods, models, and estimated HEPs to allow analysts to make either quantitative or qualitative assessments of human errors in nuclear power plants. It includes task analyses, error identification, and quantification of HEPs. Although THERP was developed for and has been used extensively in the nuclear industry, it has also been applied to the offshore and medical sectors and would no doubt also have applications in the chemical process industries. RR679 (HSE, 2009) outlines the key steps for applying THERP as:

• Decomposition of tasks into elements

• Assignment of nominal HEPs to each element

• Determination of effects of performance shaping factors (PSFs) on each element

• Calculation of the effects of dependence between tasks

• Modeling in an HRA event tree

• Quantification of total task HEP

Estimating the overall probability of failure involves summing the probabilities of all failure paths in the event tree. Summing only the primary failure paths and ignoring all the success limbs, when all the HEPs are 0.01 or smaller, can give an approximation of failure, expressed as an equation.
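The difference between the full sum over failure paths and the approximation can be illustrated with a short Python sketch. It assumes the simplest possible HRA event tree: a series of task elements in which each element either succeeds (the tree continues) or fails (the path ends in task failure), with independent elements and no recovery branches. The function names and HEP values are hypothetical; a real THERP analysis would also model dependence between tasks and recovery.

```python
from typing import List, Sequence


def failure_path_probabilities(heps: Sequence[float]) -> List[float]:
    """Probability of each failure path in a simple series event tree.

    Path i means: every earlier element succeeded, then element i failed.
    """
    paths = []
    success_so_far = 1.0
    for p in heps:
        if not 0.0 <= p <= 1.0:
            raise ValueError("each HEP must be a probability")
        paths.append(success_so_far * p)
        success_so_far *= 1.0 - p
    return paths


def total_failure_probability(heps: Sequence[float]) -> float:
    """Sum the probabilities of all failure paths in the tree."""
    return sum(failure_path_probabilities(heps))


def approximate_failure_probability(heps: Sequence[float]) -> float:
    """Ignore the success limbs and sum the element HEPs directly."""
    return sum(heps)


if __name__ == "__main__":
    element_heps = [0.01, 0.003, 0.001]  # hypothetical values, all 0.01 or smaller
    full = total_failure_probability(element_heps)
    approx = approximate_failure_probability(element_heps)
    print(f"all failure paths: {full:.6f}")
    print(f"approximation:     {approx:.6f}")
    print(f"difference:        {approx - full:.6f}")
```

Because every success limb has a probability close to 1 when the HEPs are small, the approximation slightly overestimates the total and is therefore conservative. The gap widens quickly once individual HEPs rise much above 0.01.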

In conclusion, the human factors community has developed a range of different techniques to assess human reliability across a range of high hazard industries. The HSE report RR679 presents a review of 17 of these methods out of the total 72 identified. Most of the HRA tools have been developed for the nuclear industry; however, five of these tools were considered to have “Generic” application having been used in other industries, including the chemical process industry. It is worth noting that there have been three generations of HRA tools and the selection of an appropriate tool may be dependent upon the maturity of the site where it is being applied. For sites that are just attempting to quantify the risk of human error for the first time, first-generation tools may be the most useful. They may not be able to give insight into dependency or errors of commission, but they will be able to give a fundamental insight into the issue of human error. HEART and THERP are examples of these first-generation tools. Second-generation tools are more appropriate for more “mature” sites, with a long tradition of applying the first-generation tools but that want to understand context and errors of commission when predicting human error. CREAM, ATHEANA, and MERMOS are examples of second-generation tools, but they do not seem to be widely applied and they have yet to be empirically validated. Third-generation tools are now being developed using first-generation tools such as HEART as a basis. It is the current author's belief that if an organization does not have a full understanding of the context under which tasks are executed, there is little chance of accurately assessing human reliability and therefore the impact of PIFs should be the foremost consideration in the application of any tool.

2.4 Safety Critical Task Analysis

SCTA describes a process whereby the impact of potential human error on MAHs can be assessed. SCTA is an extension of Task Analysis, which is the study of the actions and mental processes an employee is expected to carry out in order to achieve a goal. Task Analysis can be used for activities such as assessing staff levels and improving training programs; however, it is not discussed in any detail in this chapter. For the interested reader, there are some excellent resources available, e.g., Kirwan and Ainsworth (1992) and Shepherd (2001). The purpose of SCTA is to identify where human failure can contribute to MAHs and can include initiating events, prevention and detection, control and mitigation, maintenance tasks, and emergency response. The process involves identifying which tasks on a site are safety critical in relation to MAHs, understanding whether human error or violations might contribute to initiating an adverse event, and understanding what preventative measures or layers of protection could be put in place to reduce the likelihood or mitigate the consequences of human failure.

A number of publications exist to support the implementation of SCTA. The Energy Institute (2011) has developed a clear set of guidance on SCTA and the UK Health and Safety Executive has produced a guidance paper for its inspectors (identifying human failures, HSE Core Topic 3), describing a seven-step approach for SCTA. This seven-step approach consists of the following:

1. Identify the main hazards—e.g., from HAZIDs, Safety Reports, or Risk Assessments.

2. Identify safety critical tasks associated with those main hazards and prioritize those tasks where there are many MAHs. Procedures and discussions with staff are the main techniques recommended.

3. Understand the safety critical tasks, i.e., who does what, when and in what sequence? Again this can be derived from procedures, checklists, interviews with staff (walkthroughs/talkthroughs), and observations of staff conducting the tasks.

4. Represent the safety critical tasks, i.e., through breakdown of the tasks in tables or diagrams in sufficient detail for further analysis.

5. List all the potential human failures and their consequences, through representing the safety critical tasks from step 4. Also, list the potential PIFs that could influence human performance for each task and consider what safety measures are already in place. The different types of human failure/error and PIFs used in SCTA are listed in Tables 2 and 3.

Table 2

Human Error Guidewords for Use in SCTA (Energy Institute, 2011)

| Action failures | Checking failures |

| Operation omitted; Operation incomplete; Operation mistimed; Operation in wrong direction; Operation too long/short; Operation too little/much; Operation too fast/slow; Operation too early/late; Operation in wrong order; Right operation on wrong object; Wrong operation on right object; Misalignment; Misplacement | Check omitted; Check incomplete; Right check on wrong object; Wrong check on right object; Wrong check on wrong object; Check mistimed |

| Retrieval failures | Selection failures |

| Information not obtained; Wrong information obtained; Information retrieval incomplete; Information incorrectly interpreted | Selection omitted; Wrong selection made |

| Communication failures | Planning failures |

| Information not communicated; Wrong information communicated; Information communication incomplete; Information communication unclear | Plan omitted; Plan incorrect |

Table 3

PIFs for SCTA (Energy Institute, 2011)

| Individual factors | Job factors | Organizational factors |

| Physical capability; Fatigue; Stress/morale; Work overload/underload; Competence; Motivation | Clarity of signs, signals, instructions; System/equipment interface (labeling, alarms); Difficulty/complexity of task; Routine or unusual task; Divided attention; Inadequate procedures; Task preparation (PTW, risk assessment, checklists); Time available/required; Tools appropriate for the task; Working environment (noise, heat, space, lighting) | Work pressure; Supervision/leadership; Communication; Staffing levels; Peer pressure; Clarity of roles and responsibilities; Consequences of failure to follow rules/procedures; Effectiveness of learning from incidents; Safety culture; Change management |

Adapted from Health and Safety Executive (1999). Reducing error and influencing behaviour (HSG48). Suffolk: HSE Books.

6. Identify any additional safety measures that could be implemented to further mitigate PIFs and the risk of human failure.

7. Review the effectiveness of the process. This contributes to a wider understanding of SCTA and improvements to the technique.
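The output of steps 4 to 6 is essentially a structured record for each task step. The following is a minimal sketch of how such a record might be held and screened, not a format prescribed by the Energy Institute or the HSE. The class name `FailureEntry`, the helper `needs_attention`, and the chemical offloading example are hypothetical and are used for illustration only; the guidewords are a subset of those in Table 2.

```python
from dataclasses import dataclass, field
from typing import List

# A subset of the Table 2 guidewords, grouped by failure type.
GUIDEWORDS = {
    "action": ["Operation omitted", "Operation in wrong order",
               "Right operation on wrong object"],
    "checking": ["Check omitted", "Check incomplete"],
    "communication": ["Information not communicated"],
}


@dataclass
class FailureEntry:
    """One row of a hypothetical SCTA worksheet (steps 4 to 6)."""
    task_step: str                      # step 4: the represented task step
    guideword: str                      # step 5: potential human failure
    consequence: str                    # step 5: what could follow
    pifs: List[str] = field(default_factory=list)               # step 5
    existing_measures: List[str] = field(default_factory=list)  # step 5
    additional_measures: List[str] = field(default_factory=list)  # step 6

    def __post_init__(self):
        # Reject failure descriptions that are not in the guideword list.
        known = {w for words in GUIDEWORDS.values() for w in words}
        if self.guideword not in known:
            raise ValueError(f"Unknown guideword: {self.guideword}")


def needs_attention(entries):
    """Return entries with no existing or additional safety measure recorded."""
    return [e for e in entries
            if not e.existing_measures and not e.additional_measures]


# Hypothetical example: two steps from a chemical offloading task.
entries = [
    FailureEntry(
        task_step="Connect hose to tanker outlet",
        guideword="Right operation on wrong object",
        consequence="Chemical transferred to the wrong tank",
        pifs=["Clarity of signs, signals, instructions"],
        existing_measures=["Unique coupling for each product"],
    ),
    FailureEntry(
        task_step="Confirm receiving tank level",
        guideword="Check omitted",
        consequence="Tank overfill",
        pifs=["Time available/required", "Fatigue"],
    ),
]

for entry in needs_attention(entries):
    print(entry.task_step, "->", entry.guideword)
```

In this sketch, the second entry is flagged because no safety measure has yet been recorded against it, which is the kind of gap that step 6 is intended to close.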

A common problem is that all tasks are considered to be “safety critical.” Usually, it is the operational tasks that are the focus of the SCTA, e.g., chemical offloading operations, control room operations, or blending chemicals; however, checking tasks, emergency response, and maintenance tasks might also be included.

The UK Health and Safety Executive has made SCTA a requirement for acceptance of its Control of Major Accident Hazards (COMAH) Safety Reports. Table 2 lists the human error guidewords suggested by the HSE.

3 Performance Influencing Factors

The management of human failure is dependent upon understanding and responding appropriately to PIFs. The list of factors identified by the HSE (1999) shown in Table 1 outlines some PIFs; however, for the purposes of SCTA, more comprehensive lists should be used (see Table 3).

Having outlined techniques used to identify different types of human error, the remainder of the chapter discusses some of the PIFs identified under the HSE's HSG48 framework shown in Table 1.

4 Individual Factors

The HSE (1999) lists the individual factors that can affect performance such as competence, skills, personality, and various person attributes such as attitudes to risk and safety. Competence can be defined as “A cluster of related abilities, commitments, knowledge, and skills that enable a person (or an organization) to act effectively in a job or situation” (Business Dictionary, accessed October 2016). The same source defines “skills” as “An ability or capacity acquired through deliberate, systematic and sustained effort to carry out complex activities or job functions involving ideas (cognitive skills), things (technical skills), and/or people (interpersonal skills).” Personality refers to a “relatively stable, consistent and distinctive set of mental and emotional characteristics a person exhibits when alone, or when interacting with people and his or her external environment.” Within the context of the chemical process industry, attitudes to risk and safety would refer to a tendency to respond positively or negatively toward hazards, their likelihood, their consequences, and the measures in place to mitigate the realization of the risks inherent in those hazards (author's own definition). Hence, someone could hold a positive attitude toward risk and a negative attitude to safety or a negative attitude toward risk and a positive attitude toward safety. This section will now expand on each of these individual factors.

4.1 Competence and Skills

Competence and skills are clearly of prime importance; however, there can be some debate about who is responsible for developing skills and competencies and keeping them up to date. Depending on the nature of the job/tasks and the skills and competencies associated with it, initial responsibility may lie with the individual or with the schools, colleges, and universities that are responsible for ensuring that people receive the necessary education. No matter what the job or tasks entail, it is safe to assume that basic numeracy and literacy skills will be required. It is up to the organization to provide an accurate job description and outline the relevant person characteristics required to carry out the job so that the right people can be selected from the outset. In the author's own experience, these job descriptions and person characteristics are sometimes inadequate and the suspicion is that the Human Resources Department has been left to write them without any input from skilled practitioners; as a result, there is a lack of understanding of the true nature of the job and the type of person required for it. In order to recruit and retain the right people for the job roles and tasks in the organization, it is crucial that the right people are involved in writing the job description and person characteristics.

If one assumes that the right person is selected for the right job, then the organization may need to deliver some “on-the-job” training so that the new recruit can learn the tasks that he or she will be required to carry out in context. Again, in the author's experience, a school-leaver or graduate can very rarely come straight into a job and conduct their activities with the requisite level of competency; development “in role” under the tutelage of an experienced supervisor or mentor will be critical.

Once the basic skills and knowledge are acquired, there is a need for a period of on-the-job supervision to determine whether the individual is competent to carry out the assigned tasks. Competencies should also be kept up to date, for example, when there are changes to the job or when there are new developments in systems, technology, legislation, or equipment. Refresher training may also be required for some tasks. Employees in the chemical industry will be trained and competent in the technical skills they require for their job, e.g., control room operations, chemical engineering, or maintenance; however, there is a set of nontechnical skills that are widely used in other high-hazard, high-reliability industries but do not appear to be prevalent in the chemical process industries.

4.2 Nontechnical Skills

Nontechnical skills training began in the aviation industry as far back as the 1970s, after the industry identified that many aviation accidents did not occur due to technical problems with the aircraft or the pilots’ lack of technical flying ability. Instead, flight data recorders and cockpit voice recorders identified nontechnical issues, such as poor situation awareness, communication, teamwork, decision making, and leadership, together with fatigue and stress, as major contributors to aviation accidents. The industry therefore started to develop nontechnical skills training as part of Crew Resource Management (CRM), which has now become mandatory for all pilots and cabin crew. CRM refers to the flight crew's use of all necessary resources (systems, technology, equipment, and human) to ensure safe and efficient operation of the aircraft. Nontechnical skills are a critical part of CRM, particularly in the identification of and recovery from errors (threat and error management). Nontechnical skills are generally divided into two subgroups: (1) cognitive skills (decision making, situation awareness) and (2) social skills (leadership, teamwork, and communication).

The use of simulators and Line-Oriented Flight Training (LOFT), which involves testing the crew's nontechnical skills, i.e., situation awareness, team working, decision making, in abnormal situations which have not been prebriefed, has facilitated this type of training over the decades. Test scenarios can be developed from various sources, but accident reports with a full emphasis on human factors issues are most often used. CRM and nontechnical skills are examined both in the simulator and in normal flight operations, and pilots have to keep these skills up to date in order to fly commercial aircraft. Pilots and cabin crew are also required to undertake regular refresher training.

Other sectors such as aircraft maintenance (Sian, Robertson, & Watson, 2016), maritime (STCW, 2010), nuclear (INPO, 1993), offshore production platforms (O’Connor & Flin, 2003), hospital operating theatres (Flin & Maran, 2004; Mitchell & Flin, 2008; Yule, Flin, Paterson-Brown, & Maran, 2006), and offshore well operations (Energy Institute, 2014; International Association of Oil and Gas Producers (OGP), 2014) have also adopted the principles of CRM and nontechnical skills training. It is worth noting that the development of guidance for CRM/nontechnical skills training in well operations has arisen directly as a result of the findings of the inquiries into the Deepwater Horizon disaster.

The content of CRM and associated nontechnical skills training will, out of necessity, reflect the industry in which the training is being designed and implemented (see Flin, O’Connor, & Mearns, 2002 for a review). Nonetheless, reference to the research papers and training development around these programs indicates that the subject areas of situation awareness, decision making, team working, leadership, and managing stress and fatigue are key components. Communication is considered to be the cornerstone of CRM and nontechnical skills training.

The author is not aware of CRM and nontechnical skills training in the onshore chemical process industries; however, it is likely that the components outlined earlier, particularly team working, leadership, and communication, are covered in some training programs for the industry. The importance and utility of CRM and nontechnical skills training is that it takes incidents and accidents as its starting point and, based on the detailed human factors analysis of those incidents, trains the skills that have been found to be lacking and have led to the incident occurring. It is therefore a way of “closing the loop” (Flin et al., 2002) to reduce the likelihood of human failure in the future. Through a focus on CRM and nontechnical skills, personnel can also be trained to recognize and trap errors before they develop into more serious adverse events. The other interesting point about this type of training is that the aviation industry has developed and persisted with it, whereas other industries “chop and change” their training regimes to adopt whatever is fashionable at the time (the offshore oil and gas industry is a case in point). This consistency of approach means that these programs have had time to be integrated into the systems and processes of the industry and deliver the performance improvements they were designed to deliver.

Proper development of nontechnical skills training also requires sets of “behavioral markers” that can be used to identify whether employees are exhibiting the trained behaviors or not. Obviously, the development of behavioral markers will be industry specific and will require the combined skills of operational personnel (e.g., operators, engineers, maintenance staff) and human factors specialists. Performance can be assessed in training simulators, if they are available, or on the job, where personnel are assessed while actually conducting their tasks. Studies into the development of behavioral markers for nontechnical skills training in the aviation sector and hospital operating theaters include Flin and Martin (2001), Flin (2003), Fletcher et al. (2003), and Yule et al. (2008). CRM/nontechnical skills training courses will tend to consist of both theoretical and applied teaching.

In conclusion, nontechnical skills training provides a means by which human performance can be managed and improved. A number of safety critical industries have adopted this type of training, and it could be considered as a form of training for the chemical process industries.

4.3 The Role of Personality

The role of personality in determining safety performance has been well researched starting back in the 1950s with the idea of the “accident-prone” personality. Subsequent research and development has shown that so-called accident proneness may be a misnomer and again we find that a focus on PIFs is a more productive route to follow. Nonetheless, there are clearly individual differences in intelligence, aptitude, dexterity, skills, education, and motivation, and potential employees will tend to be selected for these characteristics rather than their personality, per se.

There is a vast literature on personality and a wide range of personality tests developed by psychologists over many decades. According to the American Psychological Association (APA, 2016), “personality” is defined as “individual differences in characteristic patterns of thinking, feeling and behaving.” The assumption is that “personality” is relatively stable and resistant to change and can be measured along a number of “dimensions,” “factors,” or “traits.” The biological definition of “traits” is characteristics or attributes of an organism as expressed by genes and/or by the environment. When it comes to personality, a combination of genetics and the environment will lead to a particular trait developing in an individual. Many of the personality tests developed by psychologists are designed to identify individuals who are suffering from clinical psychological conditions such as anxiety, depression, dementia, obsessive–compulsive disorders, and schizophrenia. From an occupational or industrial/organizational perspective, a number of personality tests exist for selection and development purposes. These tests are designed not just to measure personality but also to assess aptitudes such as problem solving, social interaction, and situation judgment. One of the best-known occupational personality tests is the Myers Briggs, which assesses employees’ tendencies toward Introversion/Extraversion; Thinking/Feeling; Judging/Perceiving; and Intuition/Sensing. Results from these tests place people into one of a range of 16 different personality types, each of which has its own strengths and weaknesses. Although the Myers Briggs claims to identify 16 different personality types, most psychologists working in the area of personality testing nowadays recognize five main dimensions (i.e., the so-called Big 5, also known by the acronyms OCEAN or CANOE) with people scoring “high” to “low” on each trait:

• Openness to Experience—This personality trait reflects preferring variety in life, being attentive to inner feelings and having an active imagination, aesthetic sensitivity, and intellectual curiosity.

• Conscientiousness—Individuals who are conscientious tend to have high levels of self-discipline and are good at planning and striving to achieve long-term goals. They are often perceived as being responsible and reliable, but they can also be perfectionists and workaholics.

• Extraversion—Extraverts score highly on scales measuring sociability, assertiveness, energy, and talkativeness. By contrast, those scoring on the other end of the scale are Introverts, who enjoy spending time alone with their own thoughts and prefer solitary work and hobbies.

• Agreeableness—This trait speaks for itself. People who score highly tend to be warm, friendly, and tactful, with a positive opinion of others and are able to get along well with other people.

• Neuroticism—Neurotic individuals display the characteristics of being anxious, worried, moody, frustrated, or afraid. These characteristics are generally not considered to be conducive to good work performance; however, when it comes to risk and safety, neuroticism may have an important role to play.

The research evidence for the role of personality in industrial safety and accident involvement tends to be quite limited, with much of the literature focusing on driver personality and accident involvement rather than how personality traits affect attitudes to risk and safety in the workplace, particularly the chemical process industries. It is important to remember that any personality trait will not be exerting its influence in isolation but will also be subject to influence from the working environment (job factors) and the social environment (organizational factors) that the individual is exposed to.

4.3.1 Personality and Safety in the Workplace

A study conducted by Hansen (1989) investigated the relationship between accidents, biodata (e.g., age, job experience), cognitive factors, and personality in a sample of 362 chemical industry workers in the United States. The only personality trait investigated appears to have been neuroticism, in particular the social maladjustment and distractibility components of neuroses, both of which were found to have a relationship with accident involvement. Thus, individuals who were more socially maladjusted and prone to distractibility were more likely to have experienced a work accident.

Another study by Cellar, Yorke, Nelson, and Carroll (2004) examined the relationships between the Big 5 and workplace accidents in a sample of 202 undergraduate volunteers (134 women and 68 men). Clearly, this is not the best sample of “industrial workers” to focus on and the validity of this study could be challenged since it can be assumed that these students would probably not be working full time and may only have temporary jobs while completing their studies. The results showed that more Agreeable and Conscientious individuals were less likely to have been involved in accidents.

Clarke (2006a) investigated the role of perceptions of safety (known as “safety climate”; see later in this chapter), attitudes to safety (a personal expression of favor or disfavor toward risk and safety), and personality characteristics or traits on accident involvement in a wide variety of industries. The study took a “meta-analytic” approach, where relevant studies were identified from a literature search and the correlations between the variables of interest, i.e., safety perceptions, attitudes to safety, personality traits, and accident involvement, were analyzed. After an initial screening exercise against a set of criteria, only 19 studies out of a total of 51 originally identified were included in the meta-analysis.

The results showed that negative safety perceptions were correlated most highly with accident involvement, followed by negative attitudes to safety and last of all, the personality characteristics with relatively low correlations. However, the personality trait “Agreeableness” was a better predictor of accident involvement than either safety attitudes or safety climate. Basically, people who demonstrate lower levels of agreeableness are more likely to be involved in accidents. This could be due to the fact that such individuals are less likely to be socialized into the norms of the organization and may also be less compliant with regulations, rules, procedures, and work instructions. Such individuals may be more likely to violate the rules and procedures that are in place to keep plant, people, and the environment safe. However, this is only conjecture on my part.

Clarke and Robertson (2008) demonstrated the role of agreeableness in accident involvement in another meta-analytic study. They identified 24 studies that had investigated the relationship between the “Big 5” Personality dimensions and self-reported accidents or personal injuries. The studies covered a wide range of occupations and nationalities, including Hansen's study on US chemical processing workers (production and maintenance) and UK personnel on offshore drilling rigs and production platforms. It should be noted that several of the studies were conducted on taxi or bus drivers from India, Turkey, South Africa, and the United States, thus covering the area of driver behavior rather than industrial safety. Although other personality dimensions were measured in some of the 24 studies, Clarke and Robertson (2008) only focused on the measurements of Openness, Conscientiousness, Extraversion, Agreeableness, and Neuroticism. Their meta-analytic study found that low Agreeableness, low Conscientiousness, and high levels of Openness and Neuroticism were all positively correlated with accident involvement, with little evidence of a relationship between Extraversion and accidents. In other words, people who were disagreeable and lacked conscientiousness were more likely to have been involved in accidents as were people who were more open to experience. This would seem to make sense because, as noted earlier, such individuals would have less tendency to comply with social norms and rules and regulations and a higher tendency to explore new ways of working, which again may not be compliant with the organization's way of doing things. However, somewhat surprisingly, people who were more neurotic also showed higher levels of accident involvement. This seems counterintuitive since one would have expected highly neurotic individuals to be risk averse and safety conscious. 
However, all personality dimensions except Agreeableness demonstrated evidence of being situation specific according to certain statistical controls used in the meta-analysis. This means that the effects of the personality characteristics were only evident in specific contexts, for example, a particular industry or occupation. For Agreeableness, the relationship with workplace accidents appears to be consistent across occupations.
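To illustrate what pooling correlations and testing for situation specificity can involve, the following is a minimal sketch of one widely used approach, the “bare-bones” method associated with Hunter and Schmidt. The text above does not specify which statistical controls were applied in the studies cited, so the choice of method is an assumption, and the sample sizes and correlations shown are invented for illustration only. The function name `pool_correlations` is hypothetical.

```python
def pool_correlations(studies):
    """Pool (sample_size, correlation) pairs using sample-size weights.

    Returns the weighted mean correlation, the observed variance of the
    correlations, the variance expected from sampling error alone, and the
    percentage of observed variance attributable to sampling error.
    """
    total_n = sum(n for n, _ in studies)
    mean_r = sum(n * r for n, r in studies) / total_n

    # Sample-size-weighted variance of the observed correlations.
    var_observed = sum(n * (r - mean_r) ** 2 for n, r in studies) / total_n

    # Variance expected if all studies shared one true correlation.
    mean_n = total_n / len(studies)
    var_sampling = (1 - mean_r ** 2) ** 2 / (mean_n - 1)

    if var_observed == 0:
        pct_sampling = 100.0
    else:
        pct_sampling = min(100.0, 100.0 * var_sampling / var_observed)
    return mean_r, var_observed, var_sampling, pct_sampling


# Invented data: (sample size, correlation between a trait and accidents).
hypothetical_studies = [(100, 0.20), (300, 0.40)]

mean_r, var_obs, var_err, pct = pool_correlations(hypothetical_studies)
print(f"Weighted mean r = {mean_r:.3f}")
print(f"Variance due to sampling error = {pct:.1f}%")
```

Under a common rule of thumb, if sampling error accounts for roughly 75% or more of the observed variance, the relationship is treated as generalizing across settings; a lower figure suggests that the effect may depend on the context, i.e., that it is situation specific.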

There is one last study worth mentioning in the context of personality and accident involvement in a chemical process industry. This study was conducted in the UK offshore oil and gas industry (Sutherland & Cooper, 1991) and did not focus on the Big 5 but instead took measurements of the Type A/Type B personality types, along with neuroticism and extraversion/introversion. Type As typically display behavior patterns such as competitiveness, hostility, and a sense of time pressure. These individuals are often categorized as “workaholics” and appear to live with high levels of stress in their lives. Type Bs, on the other hand, display more relaxed behavior, less competitiveness, and less hostility. Apart from questionnaire measurements of Type A/Type B personality, Sutherland and Cooper (1991) also used the questionnaire to measure neuroticism, extraversion/introversion, job satisfaction, self-reported stress, and accident involvement. The study involved 360 personnel working in the European offshore oil and gas industry on both production platforms and drilling rigs. These personnel had been surveyed previously, and this was a follow-up study 1 year later. The results showed that both the Type A behavior patterns and neuroticism were associated with increased accident involvement, lower job satisfaction, and higher levels of stress. Extraverts actually seemed to report more accidents than introverts, but Sutherland and Cooper found that introverts had also been involved in accidents leading to personal injury; however, they were less likely to report them. The propensity to report may therefore be a characteristic of the extraverts.
Type As were more prevalent in the sample of offshore workers used in this study and they also seem to be more characteristic of offshore workers in general (author's own observations and results from unpublished research), but Type Bs do exist offshore and there may be aspects of self-selection into offshore work and certain job roles, according to personality type. Not everyone can cope with the rigors of offshore life and long periods of isolation from family and friends. The role of stress was also examined in this study, and this topic will be covered in the next section.

In conclusion, the meta-analytic studies mentioned earlier indicate that only the personality trait of “Agreeableness” seems to have a consistent relationship with accident involvement across a wide range of industries, professions, and occupations with disagreeable personalities being more likely to have been involved in self-reported accidents. The other personality traits appear to exert their influence via associations with other factors such as safety climate, attitudes to safety, stress, and job satisfaction, which are factors that also exert an influence on the likelihood of being involved in an accident. It is therefore difficult to disentangle cause and effect here.

From the perspective of making interventions to improve safety, it is the author's personal belief that there is little an organization can do to change someone's personality; however, safety climate, levels of stress, job satisfaction, and attitudes to risk and safety can be managed and modified. Of course, organizations can select for particular personality types; for example, one could argue that only highly agreeable people should be selected to work for high-risk industries such as the chemical process industry. However, selecting for one particular personality trait may mean selecting out other personality traits that are beneficial for the organization in other ways. For example, the organization may also want personnel who are conscientious or competitive, or are quiet, thoughtful, analytic, and introverted. Therefore, it is suggested that personality is not an area an organization should focus on if it wants a viable, flexible, and competitive business. Nevertheless, it may be a factor to take into consideration when candidates are closely matched on a person specification for a job description and recruiters are having difficulty in making a recruitment selection. This may be particularly relevant when selecting candidates for a safety critical position in the chemical process industry.

4.4 Occupational Stress

No discussion of PIFs would be complete without reference to occupational stress, although where it fits within the individual, job, and organizational factors framework is a point of debate, since all these factors can be associated with the experience of occupational stress. There is an extensive literature on the subject and it is one factor that has been consistently related to accident involvement in a number of industries. Sutherland and Cooper's (1991) study on stress has already been mentioned, but there are many other studies, some of which have been conducted in the chemical process industries. The word “stress” is widely used, but occupational or job stress is specific to the workplace and arises from the conditions experienced there. Like so many human factors issues, stress does not exist in isolation from other PIFs, and in reality it can span all three categories. For example, personality, lack of skills and competence, excessive workload, inadequate supervision, badly written procedures, and a poor safety climate all have an impact on stress and therefore on the performance of both the individual and the organization. Of course, factors external to the work environment will also play a role in creating stress, for example, problems with work–life balance due to shift work or excessive overtime. Fatigue is often treated as a separate issue, but when considered within an occupational setting the author considers fatigue to be closely related to job stress, creating a vicious circle where the stress leads to tension, worry, and lack of sleep, and the increase in fatigue makes it harder to cope and therefore creates more stress. The techniques to manage both stress and fatigue are very similar and will be covered in the discussion later.

Occupational stress has been defined as the physical and psychological states that arise when an individual no longer has the resources to cope with the demands and pressures of the situation (see Michie, 2002 for a comprehensive review). It is considered to be the result of the interaction between the person and the environment. This is important to remember, since one person's “stress” may be another's “challenge”; therefore the feeling of being stressed and unable to cope is very much an individual-level phenomenon.

The causes of stress are manifold. They include factors that are intrinsic to the job such as work overload, time pressure, and poor working environment, e.g., noise, cramped conditions, lack of adequate lighting. The person's job role may also be an issue, such as role ambiguity and conflict; i.e., goals and expectations are not clear. Employees might have a poor relationship with their boss or with colleagues or they may feel that they are not being promoted quickly enough or are insecure about their job. Finally, the climate, culture, and structure of the organization may be a problem with a lack of participation in decision making, communication, and consultation.

We have already covered some of the factors that the individual will bring to the situation that contribute to feelings of being stressed. These include personality characteristics such as anxiety, neuroticism, and the Type A behavioral pattern. Factors external to the job such as family problems or life crises can be stressors, but research has shown that the main contributor to job stress tends to be the organization itself and how people are being managed within that organization. Well-designed workplaces with clear roles, responsibilities, and expectations for their staff will tend to experience less work-related stress. Adequate training and competency programs, well-written procedures, an engaged workforce, and supportive supervision and management will also contribute to a reduction in stress. As is so often the case, addressing PIFs is the key to good safety performance.

The consequences of stress for the individual include physiological responses such as increased blood pressure and heart rate and behavioral responses such as increased smoking and drinking and either over- or under-eating. Needless to say, prolonged exposure to stress can have a long-term impact on both physical and mental health, e.g., coronary heart disease and depression. Researchers and medical professionals distinguish between acute and chronic stress. Acute stress is the immediate “fight or flight” response to a perceived threat, which leads to physiological changes in the body such as the release of adrenalin (associated with “butterflies in the stomach” and increased breathing rate). The body is then activated to deal with the threat either by fighting it or by running away, but in modern-day society, this is not necessarily a practical solution and so the individual starts to suffer from chronic stress, which is the prolonged exposure to stressors from the environment. It is this prolonged exposure that is considered to be dangerous and can lead to mental and physical consequences.

The consequences for the organization of chronic stress can include reduced quantity and quality of work, increased absenteeism and high turnover of staff, and reduced job satisfaction and morale. Ultimately, if employees are increasingly stressed and end up leaving, the organization's reputation might be damaged and it might find it harder to recruit personnel in the future. Of course, other potential negative consequences for both the individual and the organization are reduced levels of safety and increased accident involvement. There are many studies reporting the impact of stress on safety performance in the industrial context, although very few appear to have been conducted in the onshore chemical process industries. However, by way of example, some of the studies conducted in the offshore oil and gas industry will be discussed here.

4.4.1 Occupational Stress in the Offshore Oil and Gas Industry

The offshore environment is an inhospitable place; installations are usually located far from land and are effectively chemical processing plants with accommodation built on top. It is immediately apparent to anyone who has visited an offshore installation that they are not built with humans in mind. Space is at a premium and stairways, doors, and gangways can be small and narrow. Parts of the plant can be difficult to access, and there appears to have been minimal ergonomic input into the design of installations. Because installations are remote, workers are often required to spend up to 3 weeks offshore, which adds the stressor of being away from family and friends. Apart from being exposed to the risks arising from loss of containment and from flying in a helicopter to reach the installation, offshore workers are exposed to other psychosocial stressors such as noise, vibration, weather (cold, heat, wind, rain, snow), 12-h shifts, and sometimes dull monotonous work with little opportunity for developing new skills.

Sutherland and Cooper (1986, 1991, 1996) conducted some of the earliest studies investigating the relationship between occupational stress, mental health, and accidents in the offshore industry. Their studies included two samples of offshore personnel from the European offshore industry (mostly British): a sample of 190 in 1986 and a sample of 310 in 1991. A questionnaire for “stress auditing” was developed from interviews with offshore personnel, asking about aspects of their job, lifestyle, and accident involvement. Job satisfaction, psychological health, and social support were also measured. Analysis of the “stressor” questionnaire indicated 12 factors: career prospects and reward; safety and insecurity at work; home/work interface; understimulation, i.e., low demand; physical conditions (working and living); unpredictability of work pattern; living conditions; physical climate and work; organization structure and climate; physical well-being; work overload; and transportation (e.g., flying in a helicopter). The top three causes of stress related to pay and conditions, i.e., rate of pay, lack of paid holidays, and pay differentials between operating and contracting staff, but relationships at home and at work were also of significance, and these measures were correlated with job satisfaction and mental health. Accident victims reported less job satisfaction and poorer mental health, but it is difficult to ascertain cause and effect here. In relation to this chapter, the key issue is whether stress impacts on safety performance and leads to accidents.

Sutherland and Cooper (1986, 1991) raised the possibility of this relationship, and Rundmo (1992) suggested that stress could play an indirect role in accident causation among Norwegian offshore workers. However, in an extensive study of health and well-being in the UK offshore industry sponsored by the Health and Safety Executive, Mearns and Hope (2005) used the “Health Offshore” questionnaire to survey 1928 offshore workers on 31 installations to evaluate their perceptions of Health Climate and the measures that had been put in place to manage their health and well-being. In one section, respondents were asked to rate the extent to which they felt they were able to cope with any pressures experienced at work. Most respondents felt they coped well and there did not seem to be a significant relationship between self-reported levels of stress and accident involvement. Overall, 21% of respondents indicated that they had received support (in the form of advice, information, guidance) to help them cope with the stress they experienced in the workplace. When the effectiveness of this support was rated, there was considerable variation between installations, but the general consensus was that current forms of support offered were only moderately useful. This is somewhat surprising given that 50% of the offshore medical staff who responded to the questionnaire reported that they were trained in stress management. Furthermore, 37% of the 31 installations involved in the study offered training courses on stress management for their workforce.

In conclusion, the research evidence suggests that it is the consequences of work-related stress, such as psychological and physical ill-health, fatigue, and the way that people are thinking and feeling, that can cause human error or violations, and it is these that cause accidents rather than stress in itself. It is also worth noting that people suffering from the symptoms of stress may be self-medicating or receiving assistance from medical practitioners to alleviate those symptoms; the medication may in turn impair performance by causing drowsiness, disturbed vision, and so on.

4.4.2 Managing Occupational Stress

The best way to manage occupational stress is to address the organizational issues that affect it such as improving employee awareness of stress; implementing regular stress assessments; developing a stress management policy and procedures; mitigating the impact of organizational change and job uncertainty on the workforce by good communications; and developing a positive health and safety culture which includes the reporting of psychological ill-health and considers the impact of stress and its associated symptoms when investigating accidents and incidents. Furthermore, as the next section will demonstrate, attending to job factors such as good design, procedures, and working environment will further mitigate the causes and consequences of occupational stress.

5 Job Factors

Job factors include the nature of the task and the environment it is conducted in. This covers factors such as equipment, workload, procedures, and displays and controls. As for all the other sections of this chapter, it is not possible to focus in any detail on the entire list of job factors, so this section will only discuss a few. As a starting point, it is important to know that a raft of measures exist to ensure that equipment, control rooms, plant, and processes are designed in accordance with key ergonomics standards (see the Engineering Equipment & Material Users Association—EEMUA). For example, equipment should be designed in accordance with EN614 Parts 1 and 2 and control rooms should be designed in accordance with BS EN11064, EEMUA 191, and EEMUA 201. The reader is referred to the EEMUA website and these standards for further information. Other recommendations are that consideration should be given to the operators’ body size, strength, and mental capability, and both plant and process should be designed to facilitate operation and maintenance. The design should take account of all phases of the plant life cycle, including decommissioning and all foreseeable operating conditions such as plant upsets and emergencies. Finally, consideration should be given to the interface between the end user and the system and one way to ensure this is to involve users in the design process. Users should include plant operators, control room operators (CROs), maintenance staff, and systems support personnel. Unfortunately, this involvement often seems to be overlooked in the design process. Cost also seems to play a role in the suboptimum design; however, it is important to note that the proper design with humans in mind can prevent accidents and incidents further down the line in the life history of the plant and equipment.

5.1 Design of Control Rooms

Control rooms provide an important safety critical barrier to MAHs in the chemical process industries; however, CROs can have a number of challenges to deal with. These challenges include having to deal with too many alarms simultaneously (alarm flooding), several safety critical tasks that have to be performed simultaneously (workload), communications equipment and display equipment positioned apart in the control room even when they need to be used together (design), and uneven workloads, with long periods of monitoring tasks interspersed with periods of high intensity when dealing with abnormal situations.

It is therefore recommended that due care is put into designing control rooms for normal, abnormal, and emergency situations. There are six main areas that require attention:

1. Layout

2. Working Environment

3. Control and Safety Systems

4. Job Organization

5. Procedures and Work Descriptions

6. Training and Competence

The Safety and Reliability Group at SINTEF (2004) developed the CRIOP methodology to contribute to “verification and validation of the ability of a control center to safely and efficiently handle all modes of operation including start-up, normal operations, maintenance and revision maintenance, process disturbances, safety critical situations and shut down” (p. 2). CRIOP is a registered trademark and stands for a scenario method for Crisis Intervention and Operability Analysis. Organizations such as Statoil and Norsk Hydro were involved in its development for the Norwegian offshore sector, and the methodology was based on interviews, user discussions, workshops, and contributions from experts.

e-Operations was added as a seventh area in the 2004 version of CRIOP because remote control and remote operations were a recent development for the Norwegian offshore industry. Remote operation is intended partly to reduce risk to personnel, since fewer people will be required to travel offshore to conduct the work. It can also be considered a cost-efficiency measure.

CRIOP works on the basis of checklists and scenarios. The checklists used in design and operations are based on “best practice,” including standards and guidelines such as ISO 11064, EEMUA 191, and IEC 61508. The Norwegian Petroleum Directorate regulations have also been taken into account. The checklists have been laid out in a particular way for clear and easy usage. There is a checklist for each of the six areas, and each checklist comprises numbered points, e.g., 1, 1.1, 1.2, etc.; a Description, e.g., “Are breaks planned/coordinated with control center tasks?”, answered Yes/No/Not Applicable; a Reference to Documentation, e.g., ISO 11064-1; Comments and Recommendations; and Responses.
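The checklist layout described above can be pictured as a simple record. The following Python fragment is a minimal illustrative sketch only and is not part of the CRIOP methodology or any SINTEF tool: the names `ChecklistItem`, `Answer`, and `open_items` are hypothetical, the second example question is invented, the pairing of the example question with ISO 11064-1 is for illustration, and treating a “No” answer as an item needing follow-up is an assumption.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Answer(Enum):
    """The three possible answers to a checklist question."""
    YES = "Yes"
    NO = "No"
    NOT_APPLICABLE = "Not Applicable"


@dataclass
class ChecklistItem:
    """One row of a checklist (hypothetical structure, for illustration)."""
    point: str            # numbered point, e.g. "1.1"
    description: str      # the question put to the assessors
    answer: Answer        # Yes / No / Not Applicable
    reference: str = ""   # reference to documentation, e.g. "ISO 11064-1"
    comments: str = ""    # comments and recommendations
    response: str = ""    # response recorded against the recommendation


def open_items(items: List[ChecklistItem]) -> List[ChecklistItem]:
    """Return items answered "No" (assumed here to need follow-up)."""
    return [item for item in items if item.answer is Answer.NO]


example = [
    ChecklistItem(
        point="1.1",
        description="Are breaks planned/coordinated with control center tasks?",
        answer=Answer.NO,
        reference="ISO 11064-1",  # illustrative pairing only
    ),
    ChecklistItem(
        point="1.2",
        description="Hypothetical second question",  # invented for the sketch
        answer=Answer.NOT_APPLICABLE,
    ),
]

# List the numbered points that would be carried forward for discussion.
print([item.point for item in open_items(example)])
```

A record of this kind makes it easy to carry unanswered or negative items forward into the later discussion stages, although how an assessment team actually tracks them is a matter for the team.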

The final stage of the CRIOP process is Scenario Analysis, which consists of Introduction, Planning, Participants, Duration, Group discussions, Documentation, Number of Scenarios, Organizational Learning, and Framework. This is followed by the identification of actors who will be involved in the events making up the scenario. Observations are made of how the events of the scenario are enacted and interpreted, including planning, decision making, and action/execution. A checklist of PIFs (referred to as Sociotechnical factors in CRIOP) is used in the scenario analysis. Action plans are developed from the outcomes of the scenario analysis, with the implication that management implements these action plans.

In conclusion, CRIOP provides a method whereby the full complement of human factors issues can be addressed through a systemic, applied enactment of events within process industry-specific scenarios, particularly those involving the all-important control room at the center of operations.

5.2 Procedures

In the author's personal experience in over 25 years as an academic and consultant, frontline workers in the chemical process industries constantly raise the usability of procedures as an issue. This is particularly the case for maintenance personnel, who seem to be largely ignored when it comes to procedural usability. One of the main complaints raised in questionnaires and focus groups with frontline workers is that the procedures are written by “engineers” who never visit the worksite to understand the context in which those procedures are to be used. Furthermore, work as “planned” rarely fits exactly with work as “executed,” with the exception of the simplest of tasks. This means that frontline workers constantly have to adapt and adjust the procedures they use in order to achieve their work objectives. When this happens, the new way of working can gradually become the “norm” and drift away from the original intent of the procedure. New recruits observe, copy, and conduct their work in the new way, thereby being “normalized” by the more experienced workers, who may have forgotten what the correct procedure is. Another problem with procedures is that they can grow “arms and legs” and become lengthy and unwieldy. This often occurs in response to accidents and incidents, where a new section (or sections) is incorporated to prevent such an incident happening again.