3

AWS Networking Services

The odds are 100% that you will be using networking services at AWS. Networking at AWS is called the virtual private cloud, or EC2-VPC. This chapter explores in detail the networking options available for virtual private clouds (VPCs) and how they are set up and configured at AWS. By the end of this chapter, you will have a strong understanding of VPCs and the networking services and options available, and you will understand why your networking services must be properly planned.

The reality is that you may need to read this chapter a few times to become comfortable with the networking services at AWS. Setting up and managing networking in the cloud involves some real changes, even though many of the terms might look familiar. In the previous chapter, we looked at the big picture of regions, availability zones, and edge locations: the bedrock services at AWS that we really can’t touch. When it comes to networking at AWS, the subnet is the lowest level we can gain access to, and it’s all virtual. Yes, there is a physical network at AWS, but we don’t get to access it directly. Starting with the lowest point of the stack that we are allowed to control, let’s get into networking.

When you order an EC2 instance, Amazon WorkSpaces (AWS’s hosted VDI solution), or Amazon RDS (its hosted database solution), one of the first configuration questions you will be asked is: which VPC and which subnet do you want to use? The topics for this chapter include the following:

VPC networking

Subnets and IP address types

Route tables

Security groups and NACLs

VPN connectivity options

Route 53—the domain name system (DNS) service

Questions we will ponder and reflect on for this networking chapter focus on our case study Terra Firma and its human resources customer relationship management (CRM) software system. To recap, the company’s current CRM system was developed in-house several years ago and is running successfully on VMware server images in the corporate data center. Other details include:

Management agrees that the current HR system must be migrated to AWS as soon as possible.

The company’s HR system needs to be able to properly scale to handle the increased demand of upper management.

The human resources system must be available 24 hours a day and needs to be accessed across the Internet and from corporate head and branch offices.

Some of the questions they need to get answered include these:

How many VPCs should Terra Firma consider using?

How many AZs should Terra Firma use to design for failover?

How many subnets should Terra Firma use?

Do the Web servers need public or private IP addresses?

Can we privately access AWS resources from within a VPC?

Are NAT services required?

Compliance rules mandate security at the subnet level. Is that possible at AWS?

VPC Networking

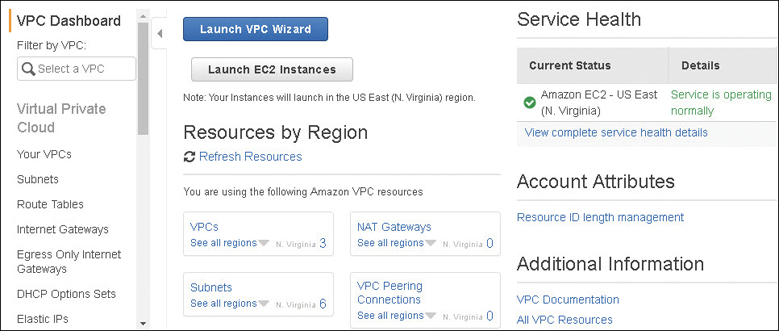

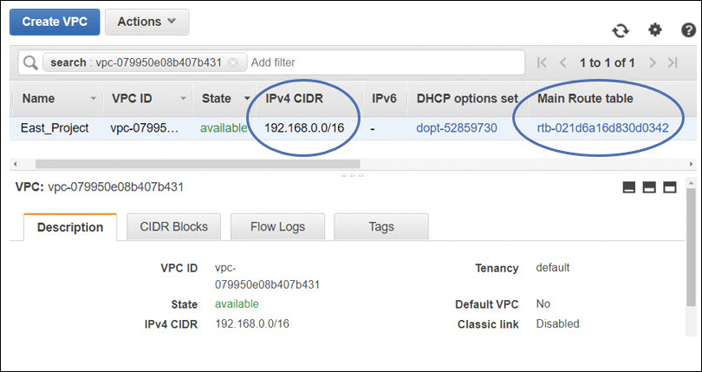

The networking layer at AWS is a VPC, and we generally work with networking services using the VPC console, as shown in Figure 3-1. The official name of a VPC is EC2-VPC, even though we typically just use the VPC moniker. The EC2 (Elastic Compute Cloud) designation indicates that EC2 instances are usually hosted within a VPC. Each VPC is a logically isolated “data center” where your compute instances and various AWS services reside. Any software that can run on a Windows or Linux virtual server can be hosted on EC2 instances in a VPC, whether they run as Web/application servers, databases, NAT servers, or any third-party software appliance you can think of.

When you order a VPC, AWS’s job is to provision, host, and secure it as a private, isolated software data center linked to your AWS account; the rest is up to us. There is also plenty of AWS management going on below the surface of a VPC: your network traffic must be routed and protected based on your network design and needs. In addition, Amazon must ensure the continued separation of your VPC from those of all other AWS customers. A failure on Amazon’s end to safeguard and protect VPC networks simply can’t be allowed to happen.

There are lots of moving parts and pieces at AWS, which I like to describe as a large toolbox containing a variety of tools and attachments that you can mesh or cobble together in any way that suits your needs. Within the VPC “toolbox” are many configurable options, including route tables, public and private subnets, VPN connections, gateways, and private endpoints, to name just a few. In addition, security choices are available at every network level, allowing you to fully protect your EC2 instances; these choices include security groups and network access control lists (NACLs). A VPC also has multiple connectivity options, allowing you to connect your VPC to the Internet, to your own private data center, to other VPCs within or outside your region, or to VPCs belonging to other AWS account holders.

Partnering with AWS

Hosting your applications in the Amazon public cloud means you have implicitly agreed to work in partnership with AWS under what is typically called a shared security model (AWS calls it the shared responsibility model). AWS is responsible for building and securing its cloud infrastructure; this is typically defined as Security of the Cloud. Your responsibility as an AWS customer is to design acceptable security provisions for your applications and data hosted within the AWS cloud. The level of acceptable security is entirely your choice. The customer’s responsibility for securing their resources at AWS is called Security in the Cloud. Customers can choose to use any of the managed services and utilities provided to accomplish their deployment and security goals. Within each VPC, your compute (EC2) instances are hosted on subnets that you create in selected availability zones (AZs) within the AWS region you choose to operate in. Although the VPC is a managed service, customers make most of the choices and decisions on their own after AWS carries out the initial creation of the VPC.

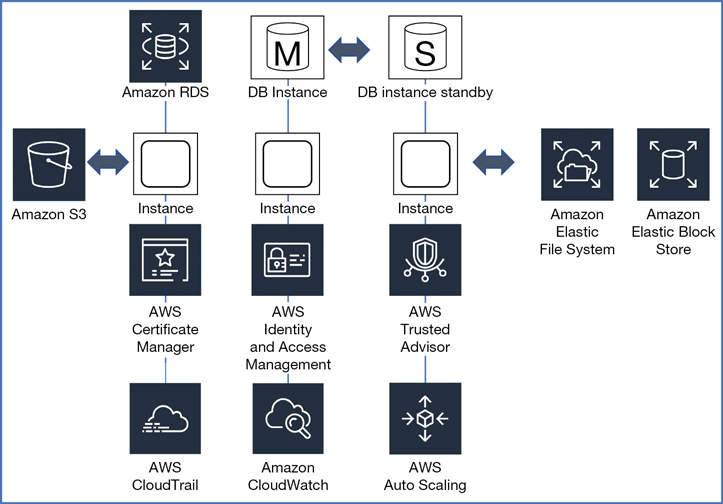

Because a VPC is a private, logically isolated network, it’s easy to forget sometimes that you’re still participating in a shared environment when hosting applications in the AWS cloud. AWS customers all share the private network backbone linking shared compute, storage, and services, as shown in Figure 3-2. Of course, the one issue you don’t want to experience in the AWS cloud is an unexpected loss of privacy.

Amazon’s private global network that hosts all the VPCs has terabits of networking capacity available. AWS also owns and operates all the fiber connections linking its data centers, AZs, regions, and edge location equipment. However, the networking services exposed to each customer at AWS are not running on the same network hardware devices that are deployed in your own data centers. Some of the networking service terms we are going to define may be new to you, while others will hopefully sound familiar: terms like subnets, public and private IP addresses, and route tables. But you will find that your overall design of networking services at AWS is still different from what you would use on-premise. Hosting hundreds of thousands of customers in a massive shared networking environment means networking can’t be the same as your on-premise network, due to the size and scope of Amazon’s overall operation.

Note

If you’re a new customer at AWS, the VPC is the only networking choice currently available for hosting your workloads. There used to be another networking option at AWS called EC2-Classic. We won’t spend any time on this older style of networking: it hasn’t been available to new customers since December 2013, and you wouldn’t want to use it instead of a VPC anyway. To begin with, EC2-Classic was a flat network design shared with all other EC2-Classic AWS customers, and its feature set is quite minimal compared to the expansive options available with a VPC. However, your company may still have EC2-Classic networking if it began working with Amazon before December 2013.

To Host or to Associate?

Some services can be hosted within a VPC, and others can be associated with a VPC. There are many additional AWS management and compliance services that you will probably want to use. Some of these services will have relationships with the services and components hosted inside a VPC, but the services themselves are not actually installed in the VPC. AWS services such as AWS Config (a compliance tool) and ELB (Elastic Load Balancing) aren’t installed or hosted within a VPC; instead, they reside on the private or public Amazon network and, once ordered or selected, are associated or linked with the chosen VPC to carry out their tasks. Table 3-1 lists several examples of AWS services that are either hosted in or associated with a VPC.

Table 3-1 AWS Services That Host or Link to a VPC

| Hosted VPC Services | Associated Services |
|---|---|
| EC2 instances | Trusted Advisor |
| Elastic Beanstalk | AWS Config |
| Amazon Redshift | VPN connections |
| ElastiCache | Auto Scaling |
| Amazon EMR | Elastic Load Balancing |
| Amazon RDS | S3 |
| Amazon WorkSpaces | DynamoDB |

The reality is that most of Amazon’s offerings are not actually installed inside a VPC. The primary service hosted in a VPC is the EC2 instance.

What’s Behind the Networking Curtain?

How is it possible to manage thousands of customers who require hosted private networking services? To complicate this reality, customers change their minds all the time. It’s a daunting task. Customers who order a VPC are working within their own private walled garden of virtual networking. The first major concept of networking at AWS is that within each VPC, the networking exposed to each customer is designed and managed at the subnet level—specifically, the Layer 3 subnet address space contained within each availability zone. That’s as deep as we’re going to get in the network stack at AWS. Full stop.

Your on-premise networking environment is probably composed of virtual local area networks (VLANs), Layer 2 networks, and Multiprotocol Label Switching (MPLS) connections. Why does a customer’s exposure to the AWS network then start and end at Layer 3? Because thousands of customers are running on a massively shared network infrastructure, and AWS is running at a scale that far exceeds the scale utilized within your own data centers. As a result, the internal network design offered to each customer needs to be different as well.

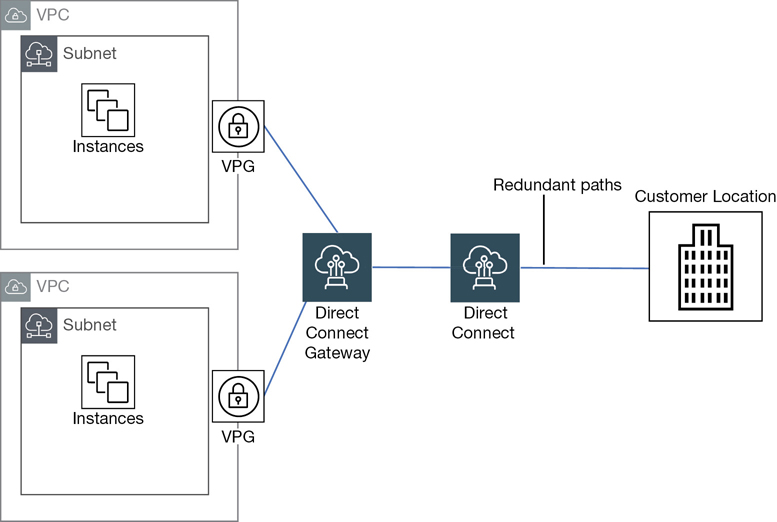

AWS is not using VLANs on the internal private network because these technologies cannot scale to the number of customers that Amazon hosts. A VPC also doesn’t use MPLS for communications; however, you may be utilizing MPLS connections when connecting to a VPC using an external Direct Connect connection from your on-premise network to AWS. You can find more details on external VPC connections later in this chapter. If you have worked with public cloud providers for several years, you may have experienced ordering networks with an email request and waiting a few days to get set up; or perhaps you waited just a few hours. Waiting a few hours or even days might be acceptable within your own data center. In the hosted public cloud, however, we demand and get cloud resources instantly. Amazon had to figure out a way to combine scale, automation, and instant gratification, and they did. Ordering a VPC takes seconds; if a VLAN had to be set up for each customer network, the process would ultimately take too much time and, as discussed, not be able to scale as required.

Note

If you expect to design a VMware-like network using a stretched Layer 2 design at AWS, it’s technically possible between two separate AWS data centers, but it is not recommended. Each data center has separate power and cooling, and you don’t have access to or control of these components at the physical layer; that’s Amazon’s domain. What happens if a connection goes down between the two stretched data centers? There are multiple redundant connections between the data centers at AWS, but again, the customer does not have access to the physical components or connections between AWS data centers.

Each VPC is a software-defined network built on Amazon’s own code and on custom network hardware developed by AWS to match its required scale of network operations. There are really two networks at AWS. The first is the real physical network maintained by AWS, with physical switches, routers, and other familiar networking components; component-wise, it would be quite recognizable. However, we don’t have access to the physical network; that’s the job of the folks at AWS. The second is the software-defined VPC network that we do work with. It may be obvious, but I’m going to mention it anyway: each VPC runs on top of the physical AWS network infrastructure.

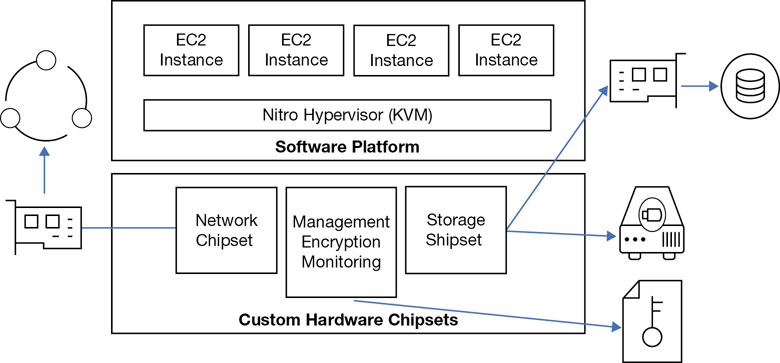

Your instances are running on a hypervisor installed on custom-designed bare-metal servers, as shown in Figure 3-3. For a number of years, the standard hypervisor at AWS was a customized version of Xen, but many changes are happening. For several instance types, including the C5d (compute-optimized instances), the M5d (general-purpose instances), and the new bare-metal EC2 instances, AWS has moved to what is called the Invocation platform with the Nitro system. The Nitro hypervisor is a customized version of the KVM hypervisor, with a published performance overhead of less than 1% when comparing virtual EC2 instance performance to bare-metal system performance. Nitro systems are also matched with a customized Nitro security chipset that monitors the firmware and hardware at boot; they support enhanced networking and provide NVMe block storage specifically designed for access to high-speed local storage over a PCIe interface, with transparent encryption provided on the fly by hardware. Scotty, I need more power! Wait a minute. I’m more than okay.

Note

If your job involves managing VMware or Hyper-V hypervisors, note that you have no exposure to the hypervisor at AWS. Maintaining and operating the hypervisor is Amazon’s job.

Customized bare-metal servers therefore host multiple AWS customers and their EC2 instances, and all instances are hosted in VPCs. There are approximately 80,000 bare-metal servers in a typical AWS data center. For many years, when Xen was the hypervisor of choice at AWS, an emulated software router providing additional security resided just below the hypervisor. On the latest and greatest Nitro platform, however, a hardware router is embedded directly in the physical host. These routers sitting below the hypervisor provide the majority of VPC functionality, and the hardware version does so at much greater speed.

It’s All About Packet Flow

I find that reducing the network to the packet level is a simple, functional way of thinking about networking at AWS. You have packets that need to be sent from a specific EC2 instance, on a specific subnet, in a specific VPC, to a specific destination or location. Routing decisions for the EC2 instances on each subnet are made at the subnet level. For example, instances may need access to the Internet, access to the head office, or access to data records stored somewhere on the AWS network.
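To make the per-subnet routing decision concrete, here is a minimal Python sketch of a longest-prefix-match lookup against a subnet’s route table. It assumes the route table is a simple mapping from destination CIDR to target; the target names `local`, `vgw-example`, and `igw-example` are hypothetical placeholders rather than real resource IDs, and the sketch models the idea, not AWS’s internal implementation.

```python
import ipaddress

# Hypothetical route table for one subnet: destination CIDR -> target.
# "local" covers the VPC's own CIDR block; the gateway names are placeholders.
ROUTE_TABLE = {
    "192.168.0.0/16": "local",
    "10.0.0.0/8": "vgw-example",   # e.g., head office reached over a VPN
    "0.0.0.0/0": "igw-example",    # everything else goes to the Internet
}


def choose_target(destination_ip: str, routes: dict[str, str]) -> str:
    """Return the target of the most specific (longest-prefix) matching route."""
    dest = ipaddress.ip_address(destination_ip)
    matches = [
        (ipaddress.ip_network(cidr), target)
        for cidr, target in routes.items()
        if dest in ipaddress.ip_network(cidr)
    ]
    if not matches:
        raise LookupError(f"no route to {destination_ip}")
    # The matching route with the longest prefix length wins.
    _network, target = max(matches, key=lambda match: match[0].prefixlen)
    return target


for ip in ("192.168.10.5", "10.20.30.40", "203.0.113.10"):
    print(ip, "->", choose_target(ip, ROUTE_TABLE))
```

An instance address inside the VPC resolves to `local`, a head-office address resolves to the VPN target, and anything else falls through to the default `0.0.0.0/0` route.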

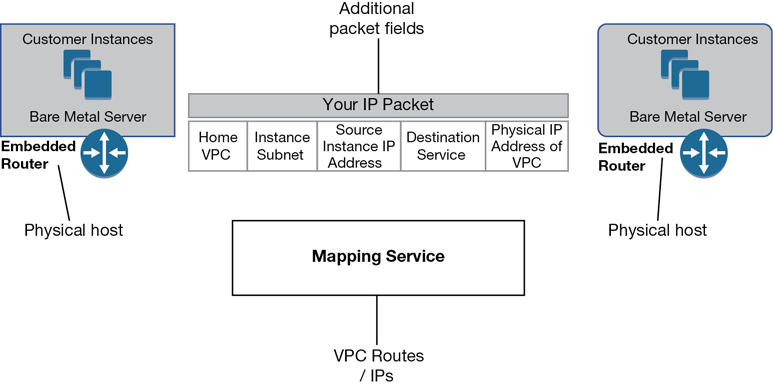

How is each packet going to get to its destination? The answer is through the specialized routers/routing services hosted on each bare-metal server and some custom external hardware. Before each packet exits the subnet for its destination, AWS stamps the required proprietary data records onto it, including the VPC encapsulation details shown in Figure 3-4, which answer these essential questions:

Which VPC is home base for the packet?

What subnet does the instance reside on?

What is the IP address of the instance that sent the packet?

Where is the destination service the packet needs to be sent to?

What is the IP on the physical network where your VPC is located?

Figure 3-4 VPC encapsulation details
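The encapsulation details listed above can be pictured as a small record wrapped around the instance’s original packet. The following Python sketch is purely illustrative: the field names, the `encapsulate` helper, and the example IDs and addresses are hypothetical, and AWS’s actual encapsulation format is proprietary.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VpcEncapsulation:
    """Illustrative model of the details listed above; NOT AWS's real wire format."""
    vpc_id: str            # which VPC is home base for the packet
    subnet_id: str         # which subnet the sending instance resides on
    source_ip: str         # IP address of the instance that sent the packet
    destination: str       # where the packet needs to be sent
    physical_host_ip: str  # IP on the physical network where the VPC is located


@dataclass(frozen=True)
class EncapsulatedPacket:
    outer: VpcEncapsulation
    payload: bytes         # the instance's original, unmodified packet


def encapsulate(payload: bytes, vpc_id: str, subnet_id: str, source_ip: str,
                destination: str, physical_host_ip: str) -> EncapsulatedPacket:
    """Wrap an instance's packet with VPC details before it leaves the host."""
    header = VpcEncapsulation(vpc_id, subnet_id, source_ip, destination,
                              physical_host_ip)
    return EncapsulatedPacket(outer=header, payload=payload)


packet = encapsulate(b"original IP packet", vpc_id="vpc-example",
                     subnet_id="subnet-example", source_ip="192.168.1.10",
                     destination="192.168.2.20", physical_host_ip="10.255.0.7")
print(packet.outer)
```

The key design point the sketch shows is that the original packet is carried untouched; only the outer record changes as the packet moves across the physical network.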

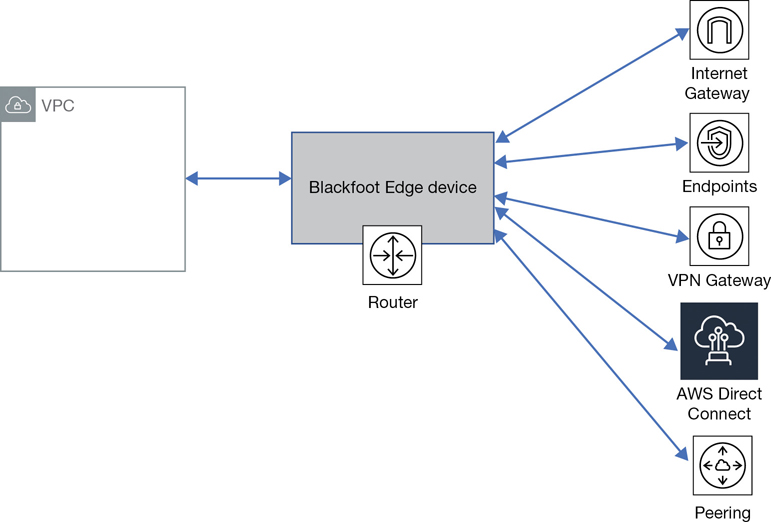

Packets flow in and out of each VPC through customized hardware components called Blackfoot devices, as shown in Figure 3-5. These smart devices sit on the edge of the VPC and convert the network traffic based on the final destination each packet is headed toward. Each packet is also encapsulated with its essential travel information before beginning its journey. The outermost IP destination identifies the target physical host the packet is being sent to. In addition, each packet carries the destination VPC and destination network interface identification.

Note

Blackfoot edge devices translate the traffic residing on the internal VPC encapsulation network to the outside IP network, sending each packet to its desired destination.

For example, if the traffic needs to go to the Internet, the VPC encapsulation is stripped from the packet, and its private source IP address is changed to a public IP address. If the destination is an external private connection, the traffic is directed to the requested VPN service. The Blackfoot device also adds protection by performing network address translation as internal network traffic transitions to an external packet flow. With this VPC design, AWS has moved past the limitations imposed by VLANs.
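The edge-device behavior described above can be sketched as a function that strips the encapsulation and, for Internet-bound traffic, performs network address translation. This is a hypothetical model: the `PUBLIC_IP_FOR` table, the `"internet"`/`"vpn"` destination labels, and the documentation-range addresses are assumptions made for illustration only.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class InnerPacket:
    src_ip: str
    dst_ip: str
    data: bytes


@dataclass(frozen=True)
class EncapsulatedPacket:
    vpc_id: str
    inner: InnerPacket


# Hypothetical 1:1 private-to-public address mapping held at the edge.
PUBLIC_IP_FOR = {"192.168.1.10": "203.0.113.25"}


def egress(packet: EncapsulatedPacket, destination_kind: str) -> tuple[str, InnerPacket]:
    """Handle traffic leaving the VPC; destination_kind is "internet" or "vpn"."""
    inner = packet.inner  # strip the VPC encapsulation
    if destination_kind == "internet":
        public_ip = PUBLIC_IP_FOR.get(inner.src_ip)
        if public_ip is None:
            raise PermissionError(f"{inner.src_ip} has no public IP; packet dropped")
        # Network address translation: swap the private source for the public one.
        return "internet", replace(inner, src_ip=public_ip)
    if destination_kind == "vpn":
        # External private connection: hand the packet to the VPN service.
        return "vpn", inner
    raise ValueError(f"unknown destination kind: {destination_kind}")


pkt = EncapsulatedPacket("vpc-example", InnerPacket("192.168.1.10", "198.51.100.7", b"GET /"))
print(egress(pkt, "internet"))
```

An instance without a public address mapping simply cannot reach the Internet in this model, which mirrors why private-subnet instances need other arrangements (such as NAT services) for outbound access.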

The Mapping Service

How can all the required networking information be gathered in a timely fashion? AWS uses a process called the mapping service. The mapping service is a fully distributed service in charge of mapping VPCs, their associated network interfaces, and the physical locations of each VPC. Mapping decisions are highly optimized using high-speed lookup memory caches. With support for microsecond-scale latencies, mappings are cached where they are most used and are proactively validated when routing information is updated. According to AWS, with this design, the overall system latency is reduced to tens of microseconds. The central mapping server knows everything about every IP address, every Media Access Control (MAC) address, and where each component physically lives on the AWS network, as shown in Figure 3-6.

The mapping server replaces the traditional processes of broadcasting packets to identify MAC addresses and multicasting to distribute information to everybody on the network. You could also think of the VPC as a tagging mechanism: the tag of each VPC is inserted into the packet information, so the VPC tag identifies where the packet needs to flow. Both the source and the destination of each network packet are verified, and any security rules are upheld and maintained by the mapping service.
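The lookup-instead-of-broadcast idea can be sketched as a keyed table. In this minimal Python model, which is a toy single-process stand-in for a distributed service, the `(vpc_id, private_ip)` key plays the role of the VPC tag described above, so identical private IPs in different VPCs never collide. The class name, method names, and the MAC and host addresses are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Location:
    mac: str            # MAC address of the destination network interface
    physical_host: str  # IP of the physical server hosting that interface


class MappingService:
    """Toy stand-in for the mapping service: lookups replace ARP broadcasts."""

    def __init__(self) -> None:
        self._table: dict[tuple[str, str], Location] = {}
        self._cache: dict[tuple[str, str], Location] = {}

    def register(self, vpc_id: str, private_ip: str, location: Location) -> None:
        key = (vpc_id, private_ip)
        self._table[key] = location
        self._cache.pop(key, None)  # invalidate any stale cached mapping

    def resolve(self, vpc_id: str, private_ip: str) -> Location:
        """Answer 'where does this IP live?' without broadcasting to anyone."""
        key = (vpc_id, private_ip)
        if key not in self._cache:
            if key not in self._table:
                raise LookupError(f"{private_ip} is unknown in {vpc_id}; packet dropped")
            self._cache[key] = self._table[key]
        return self._cache[key]


mapping = MappingService()
mapping.register("vpc-a", "10.0.1.5", Location("0a:00:00:00:00:01", "172.16.0.11"))
mapping.register("vpc-b", "10.0.1.5", Location("0a:00:00:00:00:02", "172.16.0.42"))
print(mapping.resolve("vpc-a", "10.0.1.5"))
print(mapping.resolve("vpc-b", "10.0.1.5"))
```

Re-registering an address evicts its cached entry, loosely mirroring how cached mappings are revalidated when routing information changes.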

There are no broadcast domains at AWS; the need for network-wide broadcasts has been removed. All networking information is instead held and maintained within the distributed mapping tables. On a traditional network, we would first broadcast to find out the destination information and then create the packet and send it off. Not at AWS. Broadcasts from millions of customers would bring down the network; therefore, broadcasting is not supported within a VPC. Hopefully you found the last few pages an interesting summary of some of the cool networking components running inside Amazon’s data centers. Now that we have some background, let’s look at creating our first VPC.

Creating Your First VPC

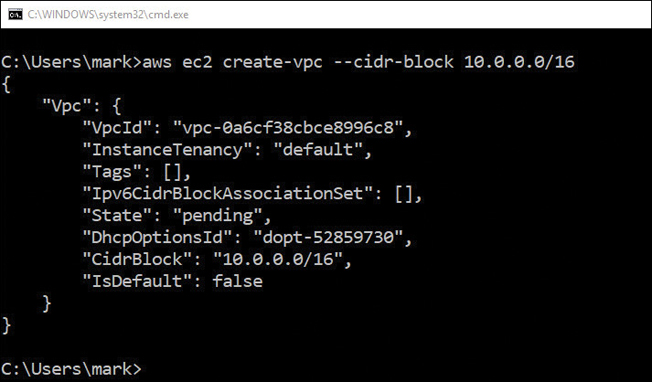

The initial creation of a VPC is either a very simple or a slightly more complex process, depending on the tool you choose. Two wizards are available: the Launch VPC Wizard and the Create VPC wizard. Each wizard appears at a different level of the VPC management console, which adds a bit of confusion because each wizard asks different questions and then carries out different tasks. You can also use the AWS Command Line Interface (CLI) and enter a simple command to create a VPC. Each of these methods carries out the creation task a little differently.

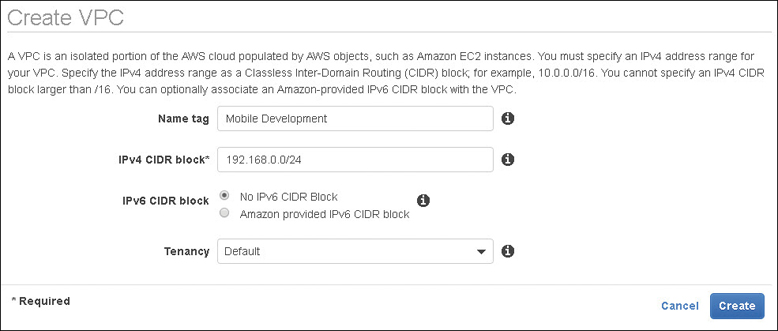

Example 1: Create the VPC

For our first example, we will click the Your VPCs link on the main VPC Dashboard. You must answer a couple of questions when creating a VPC with the Create VPC wizard:

What is the name tag? That’s the name of the VPC being created.

What is the initial IPv4 CIDR block you want to use for the VPC?

There are two additional menu choices, which allow you to add an IPv6 classless inter-domain routing (CIDR) range and to choose the VPC tenancy. The tenancy option allows you to change from the default mode of running your future instances on shared hardware, called multitenancy, to single-tenancy hardware.

Hosting instances at AWS in a VPC on shared hardware resources means that other AWS customers will also be sharing the underlying bare-metal server hardware and hypervisor along with you. You will not know which customers you are sharing AWS resources with. Selecting dedicated tenancy forces the EC2 instances you launch in that VPC to run on single-tenant hardware, with you as the only tenant, or customer. This may be an important consideration if you’re bound by strict governance rules requiring you to operate with dedicated compute instances in the AWS cloud.

Running dedicated EC2 instances at AWS is more expensive than multitenancy operation: in addition to the instance charges, a $2-per-hour fee is added for each region in which at least one dedicated instance is running. Let’s return to the steps for creating a VPC with the Create VPC wizard.
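A quick way to gauge the dedicated-tenancy surcharge is to multiply the $2 hourly charge by the hours it applies. This Python sketch assumes the charge accrues for every hour dedicated instances are running, separate from the instance charges themselves; the 730-hour month and the business-hours scenario are illustrative assumptions, so check current AWS pricing before budgeting.

```python
HOURS_PER_MONTH = 24 * 365 / 12  # 730.0, a common approximation of one month


def dedicated_fee(hours_running: float, hourly_fee: float = 2.0) -> float:
    """Extra charge for the hours in which dedicated instances are running."""
    return hours_running * hourly_fee


print(dedicated_fee(HOURS_PER_MONTH))  # 1460.0
print(dedicated_fee(8 * 22))           # 352.0 (8 hours a day, 22 workdays)
```

Even before instance charges, an always-on dedicated footprint adds roughly $1,460 a month, which is why dedicated tenancy is usually reserved for workloads with a compliance requirement.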

Using the Management Console, click Services, and under Networking and Content Delivery, select VPC.

From the VPC Dashboard under Virtual Private Cloud, select Your VPCs.

Click the blue Create VPC button.

In the Name tag dialog box shown in Figure 3-7, enter the name of your VPC.

In the IP CIDR Block dialog box, enter the range of IP addresses you want to use in CIDR notation. For example, enter 192.168.0.0/16, which would allow you to create subnets within the VPC that could total approximately 65,530 possible hosts.

Optionally change the default tenancy from shared to dedicated.

Figure 3-7 VPC questions using the Create VPC wizard
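Before typing a CIDR block into the wizard, it can help to sanity-check it. This Python sketch uses the standard `ipaddress` module and assumes the /16 to /28 netmask range and the five reserved addresses per subnet that AWS applies to VPC IPv4 blocks; the helper names are hypothetical.

```python
import ipaddress

RESERVED_PER_SUBNET = 5  # first four addresses plus the last one in each subnet


def validate_vpc_cidr(cidr: str) -> ipaddress.IPv4Network:
    """Check a proposed VPC IPv4 CIDR block against the /16 to /28 size range."""
    network = ipaddress.IPv4Network(cidr)  # raises ValueError if host bits are set
    if not 16 <= network.prefixlen <= 28:
        raise ValueError(f"{cidr}: VPC netmask must be between /16 and /28")
    return network


def usable_hosts(cidr: str) -> int:
    """Usable addresses if the whole block were carved into a single subnet."""
    return validate_vpc_cidr(cidr).num_addresses - RESERVED_PER_SUBNET


print(usable_hosts("192.168.0.0/16"))  # 65531
```

Because AWS reserves five addresses in every subnet, splitting the /16 into many subnets yields slightly fewer usable hosts than this single-subnet figure, which is why the wizard example describes the total as approximate.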

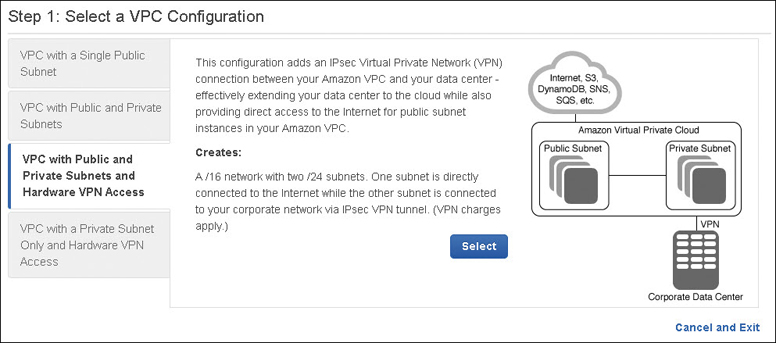

Example 2: Launch the VPC Wizard

From the main VPC Dashboard for this example, click the Launch VPC Wizard button.

This more powerful wizard prompts you for answers to additional questions, depending on the VPC design you choose. In addition to specifying the primary CIDR block for the VPC, you also specify the CIDR blocks for the subnets you want to create.

Other networking details, such as NAT servers and customer gateway configuration settings, can also be added. These VPC wizards can be handy for getting started, but they are also limiting; for example, you can’t create multiple public and private subnets in multiple availability zones with the wizard. However, the Launch VPC Wizard, shown in Figure 3-8, is useful for visualizing the components required by the available VPC designs. You can use the VPC management console to add subnets at any time.

The following are VPC design choices we can consider:

VPC with a Single Public Subnet: This option creates a single public subnet, which could be useful for a simple test environment or for a public-facing software as a service (SaaS) application. It might also be useful if you want to design with multiple VPCs to control incoming traffic flow through a transit network.

VPC with Public and Private Subnets: Creating a VPC with public and private subnets allows you to create an environment for a multi-tier application. The public subnets would be used for network address translation (NAT) servers or additional public-facing services such as load-balancing services. Multiple private subnets could be created and used for hosting Web/application servers and database servers.

VPC with Public and Private Subnets and Hardware VPN Access: This choice is like the previous option but also allows you to add a private VPN connection.

VPC with a Private Subnet Only and Hardware VPN Access: This choice is also like the second option, but no public subnets are created.

After using the Launch VPC Wizard to create your initial VPC infrastructure, you can add additional subnets or connectivity that you require using the other options in the VPC console. The wizards are merely a starting point for most real-world network designs. You can also choose to use the AWS CLI tools to create a VPC, as shown in Figure 3-9.
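The AWS CLI route can mirror the Create VPC wizard’s answers. The `aws ec2 create-vpc` command with its `--cidr-block`, `--instance-tenancy`, and `--tag-specifications` options is part of the AWS CLI; the Python wrapper below only assembles and prints the command line (it does not call AWS), and the `create_vpc_command` helper and the `TerraFirma-HR` name are hypothetical. Check the current AWS CLI reference before relying on these options.

```python
import shlex


def create_vpc_command(cidr: str, name: str, tenancy: str = "default") -> list[str]:
    """Build an `aws ec2 create-vpc` command matching the Create VPC wizard answers."""
    if tenancy not in ("default", "dedicated"):
        raise ValueError("tenancy must be 'default' (shared) or 'dedicated'")
    return [
        "aws", "ec2", "create-vpc",
        "--cidr-block", cidr,            # the initial IPv4 CIDR block
        "--instance-tenancy", tenancy,   # shared (default) or dedicated hardware
        "--tag-specifications",          # the wizard's Name tag
        f"ResourceType=vpc,Tags=[{{Key=Name,Value={name}}}]",
    ]


print(shlex.join(create_vpc_command("192.168.0.0/16", "TerraFirma-HR")))
```

Building the argument list first and quoting it with `shlex.join` keeps the bracketed tag specification intact when the command is pasted into a shell.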

To access the companion videos, register your book at informit.com/register.

If you used one of the VPC wizards or the AWS CLI, congratulations! You have created a VPC. Depending on the wizard or the creation process used, the VPC might be completely empty with just the required IPv4 CIDR block, or you may have fleshed out your design by choosing public, or public and private subnets, and associated network connectivity infrastructure. This is probably a good time to pause and think about how many VPCs you might need. This is a long-term question for your company. You may have many developers with many accounts creating many VPCs without regard for other developers who are also creating VPCs. You might need a single VPC today, but what about tomorrow? How much expansion will you need over the next two years?

Note

Terra Firma’s human resources system requires at least one VPC, but how many it will need in the future needs to be discussed. Will its network design require a separate test bed VPC for development? Perhaps an additional VPC for quality control testing, and of course, a VPC for the live application? What if Terra Firma decided to operate in multiple regions? What if multiple developers with multiple accounts worked on the development of the human resources system? You can see how complicated this decision could get.

How Many VPCs?

There are initial limits to the number of VPCs that we can create. The default “soft limit” is five VPCs per AWS account per region. You can request additional VPC resources up to the defined hard limit. The current hard limit for VPCs is defined as the number of VPCs in the region multiplied by the number of security groups per VPC; that total cannot exceed 1,000. This is but one example of the hard and soft limits AWS defines for each service. Check the AWS documentation for each AWS service you are planning to deploy to find its current hard and soft limits, and plan accordingly.

Note

Hard and soft limits are per AWS account, per region. With 20 regions available at AWS, a single AWS account can create 100 VPCs using the default limits: 5 per region.
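The limit arithmetic above is easy to sketch. This Python example models the rules as this chapter states them (5 VPCs per region by default, 20 regions, and a VPCs × security-groups-per-VPC product capped at 1,000); these figures change over time, so treat them as assumptions and confirm current values in AWS Service Quotas.

```python
DEFAULT_VPCS_PER_REGION = 5  # default soft limit quoted above
REGION_COUNT = 20            # region count quoted above; it grows over time


def max_vpcs_per_account(regions: int = REGION_COUNT,
                         per_region: int = DEFAULT_VPCS_PER_REGION) -> int:
    """Limits apply per account, per region, so the account-wide total multiplies."""
    return regions * per_region


def within_hard_limit(vpcs_in_region: int, security_groups_per_vpc: int,
                      ceiling: int = 1_000) -> bool:
    """The product rule described above: VPCs x security groups per VPC <= 1,000."""
    return vpcs_in_region * security_groups_per_vpc <= ceiling


print(max_vpcs_per_account())      # 100
print(within_hard_limit(10, 100))  # True  (10 x 100 = 1,000)
print(within_hard_limit(20, 100))  # False (20 x 100 = 2,000)
```

The product rule means raising one quota can force you to lower the other, so request increases with both numbers in mind.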

Consider these possible design options for calculating the number of VPCs required:

Your company wants to extend, or burst, into the cloud utilizing resources in the corporate data center and at AWS. Your primary need is additional compute resources at certain times of the month. In this scenario, one VPC could be the solution. A single VPC can host many subnets and instances and have private connectivity back to the corporate data center.

You are an independent developer creating an application that will be available across the Internet to users around the world. You have no corporate data center. The solution is to start with one VPC. Perhaps you will soon want to have a development workspace, a test workspace, and a production workspace, and you want them all to be separate. Now you have potentially three VPCs within a single region.

You’re an administrator who has been tasked with utilizing cloud storage at AWS. You need unlimited storage, and you don’t know the upper limit of your storage requirements. Your solution doesn’t need a VPC. You need storage—perhaps S3 object storage or Glacier archiving. This is an example of the service itself not residing within a VPC. You can find more details on S3 storage in Chapter 6, “Cloud Storage.”

You work for a large company, with several developers and administrators and many departments spread across many continents. You may have many AWS accounts and many VPCs to contend with. Don’t worry! We can work with multiple VPCs and multiple accounts with VPCs, but it’s a great idea to have a long-term plan, perhaps 2 to 3 years out, if possible. At the end of this chapter, we will look at some discussion points to save you some potential future pain.

You work for a large company that is hosted in many different countries with different languages. Your company wants absolute separation; multiple VPCs can be created.

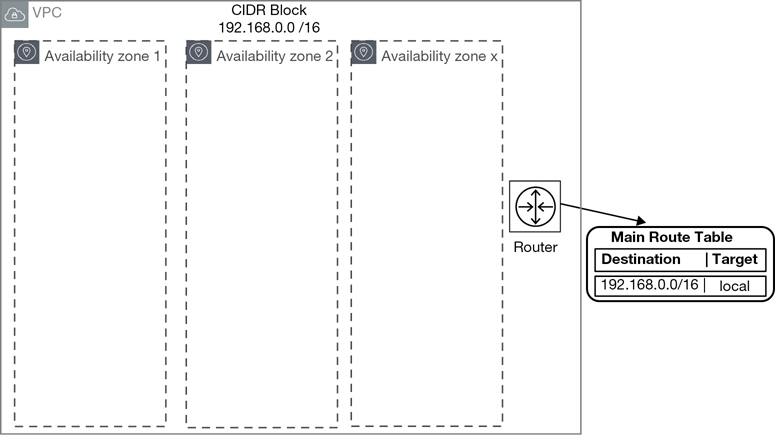

Creating the VPC CIDR Block

A VPC created using either a simple AWS CLI command or the main Create VPC wizard is really a blank slate except for the primary IPv4 CIDR block and the main route table with its local route. Here are some CIDR details to be aware of:

Both IPv4 and IPv6 subnets are supported within a VPC; however, it is required that VPCs and subnets have an initial IPv4 CIDR block defined first.

IPv6 CIDR blocks can be associated with your VPC, but only after an initial IPv4 CIDR block has been created.

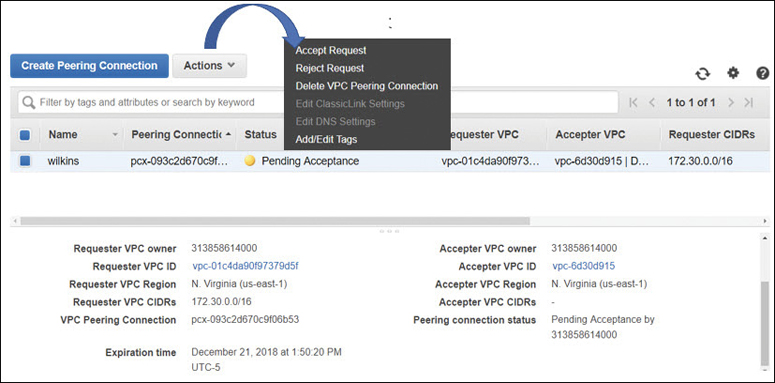

CIDR blocks must not overlap with any existing CIDR block associated with the VPC or with another VPC connected through a peering connection. Avoid overlapping CIDR blocks unless you have deliberately decided that a VPC must never be able to connect to another VPC.

The size of an existing CIDR block cannot be increased or decreased; it is locked after creation.
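Overlap problems are much cheaper to catch on paper than after peering or VPN connections are in place. This Python sketch uses the standard `ipaddress` module to list every existing block a candidate overlaps; the address plan (this VPC, a peered VPC, and a corporate network) is a hypothetical example, and the same check is worth running against your on-premise ranges before choosing a block.

```python
import ipaddress


def conflicting_blocks(candidate: str, existing: list[str]) -> list[str]:
    """Return every existing CIDR block that overlaps the candidate block."""
    new = ipaddress.ip_network(candidate)
    return [cidr for cidr in existing if new.overlaps(ipaddress.ip_network(cidr))]


# Hypothetical address plan: this VPC, a peered VPC, and the corporate network.
in_use = ["192.168.0.0/16", "10.1.0.0/16", "172.16.0.0/12"]

print(conflicting_blocks("10.1.128.0/17", in_use))  # ['10.1.0.0/16']
print(conflicting_blocks("10.2.0.0/16", in_use))    # []
```

An empty result means the candidate can coexist with everything already in the plan; any returned block names exactly what it collides with.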

Planning Your Primary VPC CIDR Block

There are many questions, and many possible answers, when planning the IP addressing for your VPC. I can’t stress enough that if you are not the prime networking expert at your company, you should talk to your networking team and get advice on what IP address ranges to use at AWS. Two or three years down the road, you may want to connect your networking hosted at AWS to your corporate network, and you might find out that the IP address range you selected wasn’t the best choice. Meeting with your network team could save you hours of future rework and save you from a serious meltdown. Your initial IP addressing choices could come back to haunt you without proper planning. We’ll explore these issues when we discuss joining VPCs together.

Note

The primary IPv4 CIDR block that you choose for your VPC will determine the number and size of IPv4 addresses that can be assigned to the subnets created within the VPC. The initial CIDR block that was added when you created the VPC can’t be changed; however, you have the option of adding additional secondary CIDR blocks to an existing VPC. We will expand upon using additional secondary CIDR blocks in a few pages.

Let’s start with the example of 192.168.0.0, as shown in Figure 3-10. For your VPC starting CIDR address, the network mask you choose will determine the number of possible hosts that can be contained on subnets within your single VPC.

Note

Amazon supports netmask sizes from /16 to /28.

As discussed, during the creation of a VPC, it’s mandatory that an IPv4 CIDR block is assigned to a VPC even if you’re planning to use IPv6. However, VPCs can also operate in a dual stack mode communicating over both IPv4 and IPv6 protocols. The subnet CIDR block for IPv6 is fixed at /64. During or after VPC creation, you can choose to associate an IPv6 CIDR block to your VPC and subnets. Reviewing the sample address ranges shown in Table 3-2, you will be able to find an acceptable range of hosts and addresses to match your project. When in doubt, explore increasing the subnet CIDR block size to accommodate more hosts.
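Because every IPv6 subnet is a fixed /64, the question is how many subnets a VPC’s IPv6 block can hold. This Python sketch assumes the /56 IPv6 block that AWS assigns to a VPC (the text above only fixes the subnet size) and uses the documentation prefix `2001:db8::/56` as a stand-in for a real allocation.

```python
import ipaddress

# Documentation prefix standing in for a VPC's /56 IPv6 block (an assumption).
vpc_ipv6 = ipaddress.IPv6Network("2001:db8::/56")

# Every IPv6 subnet in a VPC is a /64, so carve the /56 into /64s.
subnets = list(vpc_ipv6.subnets(new_prefix=64))

print(len(subnets))                       # 256
print(subnets[0])                         # 2001:db8::/64
print(subnets[1])                         # 2001:db8:0:1::/64
print(subnets[0].num_addresses == 2**64)  # True
```

With 256 subnets of 2^64 addresses each, IPv6 subnet sizing is never the constraint; the IPv4 block is what you actually have to plan carefully.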

Table 3-2 VPC CIDR Block Examples

| CIDR Block | Usable Address Range | Usable Hosts |
|---|---|---|
| 192.168.0.0/16 | 192.168.0.4–192.168.255.254 | 65,531 |
| 192.168.0.0/18 | 192.168.0.4–192.168.63.254 | 16,379 |
| 192.168.0.0/19 | 192.168.0.4–192.168.31.254 | 8,187 |
| 192.168.0.0/20 | 192.168.0.4–192.168.15.254 | 4,091 |
| 192.168.0.0/21 | 192.168.0.4–192.168.7.254 | 2,043 |
| 192.168.0.0/22 | 192.168.0.4–192.168.3.254 | 1,019 |
| 192.168.0.0/23 | 192.168.0.4–192.168.1.254 | 507 |
| 192.168.0.0/24 | 192.168.0.4–192.168.0.254 | 251 |
| 192.168.0.0/28 | 192.168.0.4–192.168.0.14 | 11 |

Note that the first four IP addresses (0, 1, 2, and 3) and the last IP address (for example, 255 in a /24) in each subnet’s CIDR block are reserved for Amazon’s use. Using /22 as a standard netmask for all subnets, the maximum number of hosts is 1,019, which for a lot of use cases would be fine. However, if you’re creating a subnet for hosting thousands of clients utilizing a VDI solution, make sure to pick a larger range for future expansion. On a private subnet for databases, you could go as small as /28; you are unlikely to need more than 11 database hosts on a single subnet.
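The host counts in Table 3-2 follow from simple arithmetic: a /n block holds 2^(32 - n) addresses, and AWS reserves five of them. The following sketch reproduces the table using only Python’s standard ipaddress module; the function name usable_range is illustrative and is not an AWS API.

```python
import ipaddress

# AWS reserves the first four addresses and the last address of every subnet.
RESERVED_AT_START = 4
RESERVED_AT_END = 1


def usable_range(cidr: str) -> tuple[str, str, int]:
    """Return (first usable, last usable, usable host count) for a subnet CIDR."""
    net = ipaddress.ip_network(cidr)
    if not 16 <= net.prefixlen <= 28:
        raise ValueError("AWS netmasks must be between /16 and /28")
    first = net.network_address + RESERVED_AT_START
    last = net.broadcast_address - RESERVED_AT_END
    hosts = net.num_addresses - RESERVED_AT_START - RESERVED_AT_END
    return str(first), str(last), hosts


# Rebuild the rows of Table 3-2
for prefix in (16, 18, 19, 20, 21, 22, 23, 24, 28):
    first, last, hosts = usable_range(f"192.168.0.0/{prefix}")
    print(f"192.168.0.0/{prefix:<3} {first}-{last}  {hosts:,}")
```

Rejecting prefixes outside /16 to /28 mirrors the netmask limits noted earlier, so the function fails loudly on a size AWS would not accept.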

Adding a Secondary CIDR Block

By default, up to four secondary IPv4 CIDR blocks can also be associated with an existing VPC. After you add a CIDR block, a local route for the new range is automatically added to the VPC’s route tables, enabling local routing throughout the VPC. Keep in mind that a secondary CIDR block cannot overlap the VPC’s existing CIDR blocks, and it cannot be the same as or larger than the destination range of an existing route in any of the VPC’s route tables. For example, if a route table already contains a route with a destination of 10.0.0.0/24, an additional CIDR block of 10.0.0.0/24 or larger is not allowed. However, a CIDR block of 10.0.0.0/25 is allowed because it’s a smaller range.

The primary advantage of being able to add additional secondary CIDR blocks to an existing VPC is having the ability to add future expansion when necessary. If the initial primary CIDR block caused address space limitations, additional secondary CIDR blocks can be added allowing you to increase the number of IP addresses that can be used within the VPC.
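A proposed secondary block can be sanity-checked before you submit it. This Python sketch assumes the restrictions AWS documents for secondary IPv4 blocks: the netmask must be between /16 and /28, the block must not overlap any CIDR block already associated with the VPC, and it must not be the same as or larger than (that is, contain) the destination of an existing route in the VPC’s route tables. AWS enforces further restrictions that this sketch does not model. The helper check_secondary_cidr and the sample route are hypothetical.

```python
import ipaddress


def check_secondary_cidr(candidate, vpc_cidrs, route_destinations):
    """Return reasons a proposed secondary IPv4 block would be rejected.

    An empty list means the block passes the checks modeled here only.
    """
    new = ipaddress.ip_network(candidate)
    problems = []
    if not 16 <= new.prefixlen <= 28:
        problems.append("netmask must be between /16 and /28")
    for existing in map(ipaddress.ip_network, vpc_cidrs):
        if new.overlaps(existing):
            problems.append(f"overlaps VPC block {existing}")
    for dest in map(ipaddress.ip_network, route_destinations):
        # "Same as or larger than" a route destination means it contains it
        if dest.subnet_of(new):
            problems.append(f"same as or larger than route destination {dest}")
    return problems


primary = ["10.2.0.0/16"]
vgw_routes = ["10.0.0.0/24"]  # hypothetical route to the corporate network
print(check_secondary_cidr("10.0.0.0/24", primary, vgw_routes))
print(check_secondary_cidr("10.0.0.0/25", primary, vgw_routes))  # []
```

Returning a list of reasons rather than a single boolean makes it easier to see which rule a candidate block breaks.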

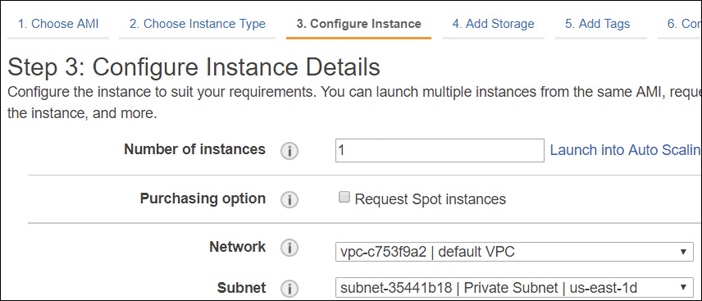

The Default VPC

You may have come across the default VPC if you have been playing around with AWS and adding an initial instance. The default VPC is available within each AWS region and is created with an IPv4 CIDR block of 172.31.0.0/16, which provides up to 65,531 private IPv4 addresses. In addition, an Internet gateway has been created and attached to the default VPC with a route table entry that sends all IP traffic intended for the Internet to the attached Internet gateway. A default security group and default network ACL are also associated with the default VPC. In each default VPC, a default subnet has been created in each AZ of the region. All that’s left for you to do is to add instances. Instances placed on a default public subnet within the default VPC receive both a public and a private IPv4 address and public and private DNS host names. Potentially, your Web application is ready to go. Well, hold on just a second.

The idea behind AWS already providing a prebuilt default networking VPC environment is to enable you to start working quickly with AWS, even if you have limited network knowledge. The default VPC can be handy if you want to do a quick demo and don’t want to bother setting up subnets and Internet connectivity or making any CIDR decisions. These networking decisions have already been carried out for the default VPC.

Note

The default VPC’s infrastructure is set up for public Internet access. However, the customer still makes the final decision for allowing public Internet access to a VPC by associating a public IP address with an EC2 instance during its creation. Allowing public access to AWS resources is always the customer’s choice.

Perhaps having a separate demo AWS account utilizing the default VPC would be useful for demonstrations and more. However, the default VPC can easily cause deployment issues when you are selecting a service that requires network placement. If you’re not paying attention, the default VPC will be preselected, as shown in Figure 3-11. And you may not want Internet access, which has been defined for the public subnets of the default VPC. For these reasons, for most deployment options other than a demo account, I would recommend deleting the default VPC from every AWS region in your AWS account. Yes, this means you would have to set up all your networking from scratch. But perhaps long term you’ll be happier knowing there’s no default VPC with easy Internet access provided to the unsuspecting user. It’s a really good idea to understand and control all your networking options when operating in the cloud.

Note

The default VPC can be deleted. If you want to bring it back, you can re-create it from the VPC console or with the aws ec2 create-default-vpc CLI command. However, you can’t mark an existing VPC as the default VPC.

Revisiting Availability Zones

Availability zones were discussed previously in Chapter 2, “Designing with AWS Global Services.” If you are comfortable with the concept of availability zones, you can skip this section. As you may remember, the number of availability zones available to each VPC depends on the region the VPC is created in.

Creating a VPC in the N. Virginia region will give you the option of selecting up to six AZs that can be used within a single VPC to design a resilient network. Choosing the Canadian region, you would have just two AZs to work with. The stated long-term goal is that new AWS regions will have three or more zones. Subnets are created in each AZ. Next, instances are placed on the appropriate subnets. Utilizing AZs allows you to do the following:

Design for resiliency and failover with multiple AZs in a single VPC.

Load-balance instances hosted on subnets in different AZs. (See Chapter 5, “Planning for Scale and Resiliency.”)

Auto Scale instances hosted on subnets in different AZs. (See Chapter 5.)

Deploy RDS primary and standby database servers hosted on subnets in different AZs. (See Chapter 6.)

Creating Subnets

After the initial VPC is created, your next step is to create subnets within the VPC in one or more AZs, depending on your required network design. The AZs that you select for each subnet are already hosted and available within the VPC. It’s usually stated that a VPC spans “all of the availability zones within its region,” and certainly, there is the potential to include all the AZs within a VPC if your design includes subnets for each AZ. However, AZs don’t show up automatically in each VPC; they are added during subnet creation when you select each subnet’s location.

Each subnet that you create resides within its assigned AZ, and although AWS doesn’t share the physical details, each subnet is hosted in exactly one data center within the selected AZ, as shown in Figure 3-12. If you choose to design your applications for resiliency and uptime, you’ll want to design your solution using at least two AZs. As we know, AZs have been designed with isolation from other AZs; a failure in one availability zone will not affect the other AZs within the VPC and region.

AWS also balances customers, in a sense, across the listed AZs within a region. If I create a subnet in the N. Virginia region and select availability zone-a, and you also create a subnet in the N. Virginia region, selecting availability zone-a, the odds are high that my subnets are not in the same physical AZ as your subnets. We each have subnets in a selected AZ in N. Virginia. That’s as much location detail as we’re going to get in the AWS cloud.

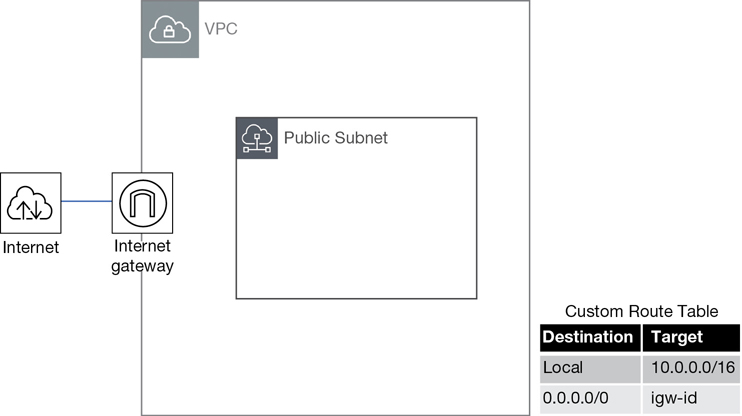

Every subnet that you create begins life as a private subnet with no external connectivity. You may intend to create a subnet with Internet access, but you have a few tasks to carry out first: you must create an Internet gateway, attach it to the VPC, and then manually update the route table associated with the public subnet with a route table entry pointing to the Internet gateway. Only after every one of these steps is taken will you have an official public subnet.

Note

Subnets are defined by their connectivity options.

If subnet traffic is routed to the Internet through an Internet gateway, the subnet is defined as a public subnet.

If a subnet has no gateway or endpoint to direct traffic to, it is a private subnet. Traffic remains within the VPC’s local network and has nowhere else to go. A subnet with no external gateway connectivity is the true definition of a private subnet.
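The definitions in this Note can be applied mechanically to a subnet’s route table. In this Python sketch, a route table is a hypothetical {destination: target} dictionary, and targets are recognized by AWS resource ID prefixes ("igw-" for an Internet gateway, "vgw-" for a virtual private gateway). The sketch assumes that any subnet without an Internet gateway or virtual private gateway route is private. Under that assumption, a subnet that reaches the Internet only through a NAT gateway is still classed as private, which matches how this chapter labels Terra Firma’s application subnets.

```python
def classify_subnet(routes: dict) -> str:
    """Classify a subnet from its route table, given as {destination: target}.

    Targets use AWS resource ID prefixes: "igw-" for an Internet gateway and
    "vgw-" for a virtual private gateway; "local" is the built-in VPC route.
    """
    targets = list(routes.values())
    if any(t.startswith("igw-") for t in targets):
        return "public"    # traffic can reach the Internet gateway
    if any(t.startswith("vgw-") for t in targets):
        return "vpn-only"  # another network is reachable through the VGW
    return "private"       # no Internet gateway or VPN gateway route


# Gateway IDs below are hypothetical placeholders
print(classify_subnet({"10.0.0.0/16": "local"}))  # private
print(classify_subnet({"10.0.0.0/16": "local", "0.0.0.0/0": "igw-0example"}))  # public
print(classify_subnet({"10.0.0.0/16": "local", "172.16.0.0/12": "vgw-0example"}))  # vpn-only
```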

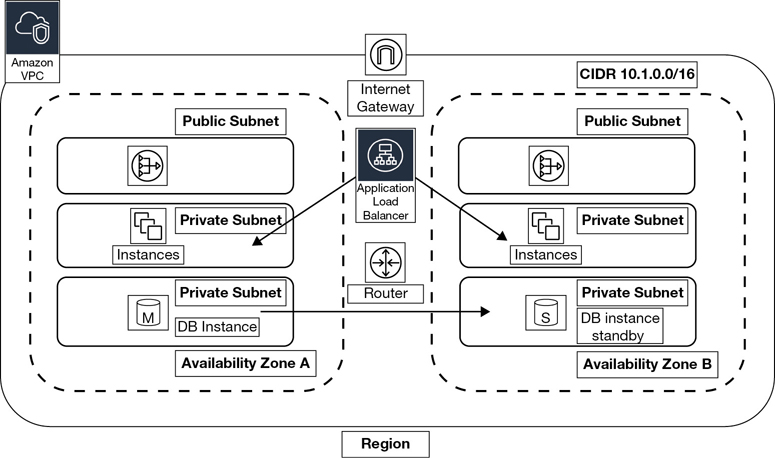

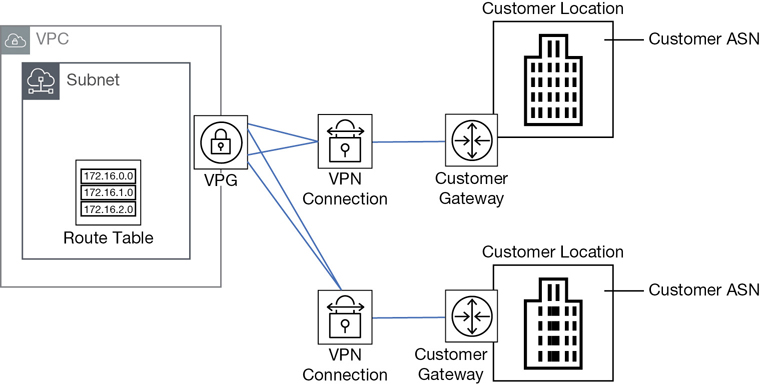

Amazon documentation sometimes refers to subnets that are routed to a virtual private gateway as VPN-only subnets, to indicate that there is another network to connect to: a private gateway location. A VPN-only subnet is therefore not as private as a subnet with no gateway connections. Terra Firma and its human resource application will need the following subnet types:

Public subnets for load balancers and NAT services

Private subnets for the database servers

Private subnets for the Web servers

Subnet Summary

Subnets are contained within an AZ.

Subnets host compute instances.

Public subnets allow you access to the Internet.

Public subnets are for infrastructure.

Private subnets are private with no Internet access.

Private subnets are where instances live privately.

VPN-only subnets have access to a VPN connection, typically to and from the head office.

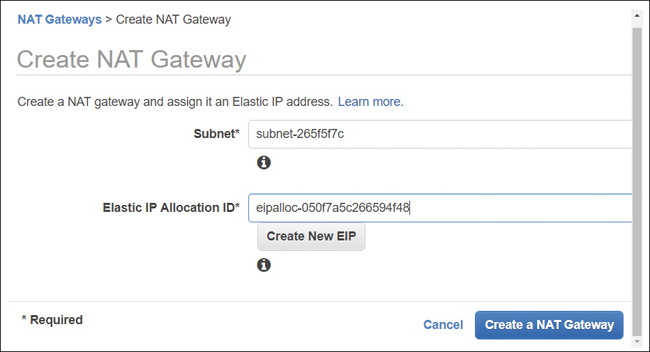

NAT Services

At AWS, a NAT gateway service can be ordered and linked to a public subnet to allow EC2 instances in a private subnet to connect to the Internet and receive required operating system and application updates. The NAT gateway service is always placed in a public subnet and configured with an elastic IP address, which is a static public IP address, as shown in Figure 3-13. Each NAT gateway is created with a network interface that is assigned a private IP address from the IP address range of your subnet. The elastic IP address is used for public communications, and the private IP address is used for private communications with the private subnets. For additional redundancy, create a NAT gateway in each AZ, and make sure the route table entries for each private subnet point the EC2 instances to the NAT gateway in the same AZ.

Note

The NAT gateway service initially supports up to 5 Gbps of bandwidth throughput and can scale up to 45 Gbps as required.

A NAT EC2 instance could also be built and hosted in a public subnet to allow instances in a private subnet to connect to the Internet through the NAT connection and receive required updates as necessary. However, you must completely configure and manage each NAT instance. If you decide this is the method that you want to utilize to provide NAT services, Amazon recommends that you build your NAT instance using the latest version of the NAT AMI. A NAT security group must also be created and assigned to the NAT instance, and, quite possibly, you will have to create an HA pair of NAT instances for redundancy. When building NAT instances, one configuration change must be made: source/destination checks must be disabled because the NAT EC2 instance is not the final source and destination for the traffic it sends and receives. The only reason I can think of for building your own NAT instances is if your environment needs to support port forwarding. The NAT gateway service that AWS supplies does not support port forwarding.

NAT Gateway Summary

NAT services are used so EC2 instances residing on private subnets can get required updates.

The NAT gateway service is a hosted AWS cloud service with no need to install or maintain an EC2 instance.

A NAT EC2 instance is merely an instance configured for NAT duties.

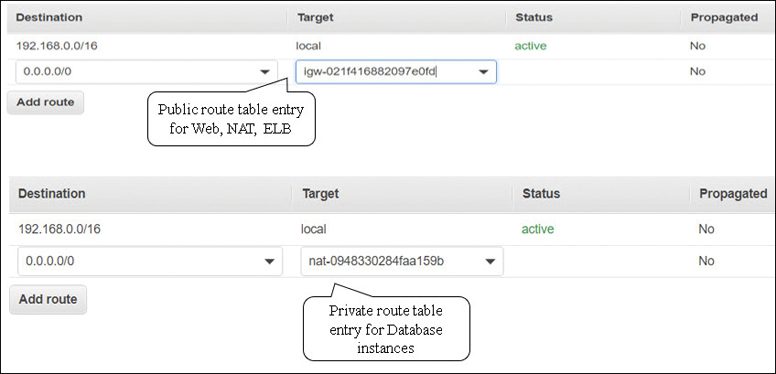

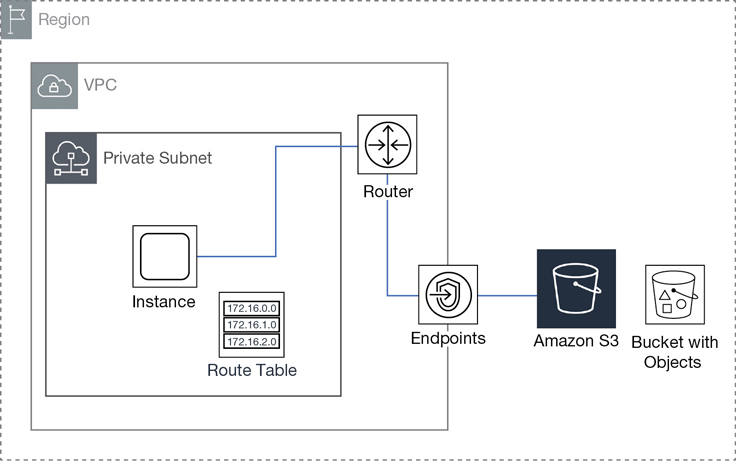

Working with Route Tables

A subnet route table is a defined collection of rules that dictate where egress subnet network traffic can flow. As previously mentioned in the subnet discussion in this chapter, each subnet must be associated with a route table, and each VPC has local routing functionality built in implicitly. A single route table can be associated with multiple subnets. For example, you may have multiple private subnets that need routes to the same service, such as a NAT gateway service to get required updates, or a virtual private gateway for connections to the head office. Here are some common route table considerations:

Each VPC has a main route table that provides local routing throughout the VPC.

The main route table can be modified, but don’t do this. Instead, leave the main route table with just the local routes defined within the VPC. Any custom routes required by a subnet should be defined in a custom route table. Leaving the main route table in its default state ensures that if you assign the main route table to a subnet by mistake, the worst that can happen is local routing.

The main route table cannot be deleted. However, it can be ignored and remain unassigned if you do not associate it with any subnets within the VPC.

Each route table entry consists of a destination, defined in CIDR notation, and a target. For example, a common destination is the corporate network, which can be reached through the virtual private gateway as the target.

Subnet traffic is matched with the most specific route in the route table that matches the traffic’s destination (the longest prefix match).

Existing route tables are not automatically populated with routes to network services that you order within your account. When routing services such as an Internet gateway (for IPv4 public Internet traffic) or an egress-only Internet gateway (for IPv6 traffic) are attached to a nondefault VPC, routes to them are not automatically added to a route table; they must be added manually. The exceptions to this rule are the default VPC and VPCs created with the Launch VPC Wizard.
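The "most specific route wins" rule is a longest-prefix match. The following Python sketch models a route table as a {destination: target} dictionary and picks the matching route with the longest prefix. The gateway IDs and the 192.168.10.0/24 corporate range are hypothetical, and select_route is an illustrative helper, not an AWS API.

```python
import ipaddress


def select_route(routes, destination_ip):
    """Return the target of the most specific route matching destination_ip."""
    ip = ipaddress.ip_address(destination_ip)
    matches = [
        (ipaddress.ip_network(dest), target)
        for dest, target in routes.items()
        if ip in ipaddress.ip_network(dest)
    ]
    if not matches:
        return None  # no route: the traffic is dropped
    # The longest prefix (largest prefixlen) is the most specific route
    _, target = max(matches, key=lambda m: m[0].prefixlen)
    return target


routes = {
    "10.0.0.0/16": "local",                    # built-in VPC local route
    "0.0.0.0/0": "nat-0aaaabbbbcccc1111",      # default route to a NAT gateway
    "192.168.10.0/24": "vgw-0ddddeeeeffff2222",  # corporate network via VGW
}
print(select_route(routes, "10.0.3.25"))     # local
print(select_route(routes, "192.168.10.7"))  # vgw-0ddddeeeeffff2222
print(select_route(routes, "8.8.8.8"))       # nat-0aaaabbbbcccc1111
```

Because 0.0.0.0/0 matches every IPv4 address with the shortest possible prefix, it only wins when nothing more specific does, which is why it works as a catch-all default route.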

Note

After the initial association of the main route table to a newly created VPC, AWS makes no additional routing decisions.

The Main Route Table

As mentioned, the main route table provides local routing services throughout the VPC across all defined AZs, as shown in Figure 3-14. Each route table, whether custom or default, has an entry containing the VPC’s initial CIDR designations, and this entry cannot be changed. The main route table also defines the routing for all subnets that are not explicitly associated with any other custom route table after they are created. Only after a subnet is created can the associated route table be changed from the main route table to the desired route table.

Note

Every subnet, when it’s created, is automatically assigned to the main route table of a VPC. This is a protective step as the assumption is made that the main route table will only provide local routing throughout the VPC.

Custom Route Tables

It is considered a best practice to create custom route tables for each tier in your network design. Creation of custom route tables allows you granular control of traffic flow within each subnet. Let’s look at an example: Terra Firma is thinking of starting with a two-tier design for its human resource CRM application. It has decided to use two AZs within the VPC to provide for additional availability and failover for the application and database servers.

Public and private subnets will be created within each AZ, with public subnets for hosting the NAT gateway service, which allows instances on the private subnets to get updates as required, and a public-facing load balancer for balancing the user requests across the availability zones. Separate private subnets will also be created for the EC2 instances hosting the application servers, as well as the MySQL database instances, as shown in Figure 3-15. The database servers will utilize synchronous replication from the primary to the secondary server, ensuring the database records remain up to date.

Note

This example is merely a starting design. The actual number of VPCs to be created is still under discussion by Terra Firma. Perhaps the first VPC should be considered for initial design and testing purposes, with other VPCs created for production and quality control. The server and database migration tools provided by AWS also need to be tested and approved.

For our initial design, after the subnets have been created, custom route tables need to be created for the following subnet groupings, as shown in Figure 3-16:

Public subnet infrastructure—ELB, NAT gateway service. A custom route table will be created for the public subnets by adding a route for the Internet gateway enabling public network traffic between network interfaces hosted on the public subnets assigned with public IP addresses and the attached Internet gateway. (Of course, the first task is ordering an Internet gateway; next you must associate the Internet gateway with the VPC.) Internet gateway routes are usually set with a destination route of 0.0.0.0/0 because the destination may be anywhere on the public Internet. For our initial design, perhaps the destination route could be a smaller range of Terra Firma’s public IP addresses.

Application and database tiers—The application servers will be hosted on private subnets within each AZ. The primary and secondary database instances will be either a custom implementation or managed by the relational database service (RDS). To enable EC2 instances hosted on a private subnet to securely connect to the Internet and receive required updates, the NAT gateway service will be hosted on public subnets within each AZ. Routes pointing to the NAT gateway service must be defined in the route table associated with the private subnets.
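Terra Firma’s two-AZ routing plan can be written down as data before anything is built. In this Python sketch, the VPC CIDR, gateway IDs, and AZ names are hypothetical placeholders. Each private route table sends Internet-bound traffic to the NAT gateway in its own AZ, which is one reasonable way to keep a failure in one AZ from stranding the other.

```python
def build_route_tables(vpc_cidr, igw_id, nat_by_az):
    """Return {route_table_name: {destination: target}} for a multi-AZ design."""
    tables = {
        # Public subnets (ELB, NAT gateways): default route to the Internet gateway
        "public": {vpc_cidr: "local", "0.0.0.0/0": igw_id},
    }
    for az, nat_id in sorted(nat_by_az.items()):
        # Private subnets in each AZ use the NAT gateway hosted in that same AZ
        tables[f"private-{az}"] = {vpc_cidr: "local", "0.0.0.0/0": nat_id}
    return tables


tables = build_route_tables(
    "10.0.0.0/16",
    "igw-0example",
    {"us-east-1a": "nat-0example-a", "us-east-1b": "nat-0example-b"},
)
for name, routes in tables.items():
    print(name, routes)
```

Every table keeps the local route for the VPC CIDR, so instances in all tiers can still reach each other across AZs.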

Figure 3-16 Terra Firma’s custom route tables

Route Table Summary

Configure the main route table for local routing.

Configure custom routes for public subnets.

Configure custom routes for private subnets.

We now need to talk about TCP/IP address types. IP addresses that are assigned to EC2 instances depend on the subnet types that are selected during the creation of the instance. Let’s look at the IP address options available at AWS, starting with private IPv4 addresses.

Private IPV4 Addresses

When a client launches a new EC2 instance, by default, Amazon assigns a private IP address to the primary virtual network interface card from the range of available IP subnet addresses. (Of course, there could be more network interfaces as well, and they would also have addresses assigned.) Private IP addresses communicate across the private network at AWS. A private DNS host name that points to the associated private IPv4 address is also assigned to each instance. If you choose to manually assign a primary private IP address, the IP address chosen must be available in the subnet’s IP address range where the EC2 instance will reside. You can assign any IP address in the assigned subnet range if it is not in use or reserved by AWS.
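The rule for manually choosing a primary private IP address can be expressed as three checks: the address must fall inside the subnet, must not be one of the five addresses AWS reserves, and must not already be in use. This Python sketch uses a hypothetical helper, can_assign_private_ip, and hypothetical addresses. AWS performs these checks itself when you launch the instance.

```python
import ipaddress


def can_assign_private_ip(subnet_cidr, requested_ip, in_use=()):
    """Return (ok, reason) for a manually chosen primary private IPv4 address."""
    net = ipaddress.ip_network(subnet_cidr)
    ip = ipaddress.ip_address(requested_ip)
    if ip not in net:
        return False, "outside the subnet's range"
    # AWS reserves the first four addresses and the last address of every subnet
    if int(ip) - int(net.network_address) < 4 or ip == net.broadcast_address:
        return False, "reserved by AWS"
    if ip in {ipaddress.ip_address(a) for a in in_use}:
        return False, "already in use"
    return True, "available"


print(can_assign_private_ip("10.0.1.0/24", "10.0.1.3"))   # (False, 'reserved by AWS')
print(can_assign_private_ip("10.0.1.0/24", "10.0.1.25", in_use=["10.0.1.25"]))
print(can_assign_private_ip("10.0.1.0/24", "10.0.1.26"))  # (True, 'available')
```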

Note

Once a primary private IP address is assigned, the EC2 instance retains the address for the lifetime of the instance.

Additional (secondary) private IP addresses can also be assigned to the network interfaces of an EC2 instance, and these addresses can be unassigned and moved between other EC2 instances that reside on the same subnet at any time. Any network interface other than the primary network interface (eth0) can also be detached and moved to other EC2 instances hosted within the same AZ and within the same VPC.

Note

Let’s again discuss the concept of cost at AWS. Communication between EC2 instances residing on the same subnet using their private IP addresses is free of charge. However, EC2 instances using private IP addresses located on subnets in different AZs are charged an outbound data transfer fee for communicating with each other.

The AWS Pricing Calculator, which has replaced the Simple Monthly Calculator, is useful for carrying out detailed pricing scenarios: https://calculator.aws

Private IP Summary

EC2 instances don’t need public IP addresses; they need private IP addresses.

Private IP addresses are cheaper cost-wise than public IP addresses when sending network traffic.

Public IPv4 Addresses

Another addressing option available at AWS is a public IP address that is typically assigned from AWS’s pool of public IP addresses, as shown in Figure 3-17. Public IP addresses from AWS’s own pool are managed and controlled by AWS and are therefore not permanently assigned to an EC2 instance. Whether or not your EC2 instance receives a public IP address during creation is dependent on how the public IP addressing attribute has been defined on the subnet where the EC2 instance is to be hosted. At the subnet attribute level of any subnet you have created, the IPv4 public addressing attribute is initially set to false, meaning no public IPv4 address will be assigned to any EC2 instances at creation. This is another example of the customer being in control of what exactly is made public at AWS.

The public IP addressing attribute can also be enabled during the creation of an EC2 instance, resulting in an AWS-controlled public IP address being assigned. This setting overrides the subnet’s public IP address attribute. If you create a subnet and don’t change the subnet defaults, public IP addresses are assigned only to EC2 instances that enable the public IP addressing attribute at launch.

Let’s explore the launch of an EC2 instance that is assigned an AWS-controlled public IP address.

You create an EC2 instance and enable the option to assign a public IP address to the instance during launch, overruling the subnet defaults.

After successfully creating your EC2 instance, you open a PuTTY session and connect to the instance successfully. Everything seems terrific.

You turn off your EC2 instance. Then you turn your instance back on and attempt to connect once again using PuTTY.

This time, your attempt to connect fails. You check your credentials and your key pair. Everything seems okay on your end.

Then you check your assigned public IP address. It has changed!

Here’s what’s happening in the background. Remember, the assigned public IP address is from Amazon’s controlled pool of public IP addresses. There’s a finite number of these addresses available. To make sure that AWS doesn’t run out of addresses, the AWS-assigned public IP address is released back to the pool when an EC2 instance is turned off (stopped). Once the instance is turned back on, AWS assigns a new public IP address. You may be thinking: “I don’t want my public IP address to change when I turn my instances off and on.” The good news: there is a solution, which is to assign what is called an elastic IP address.
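The difference between an auto-assigned public IP address and an elastic IP address on stop/start can be captured in a small toy model. This Python sketch is not an AWS API. The ToyInstance class and its shared pool are hypothetical, and the pool draws from the 203.0.113.0/24 documentation range. The model simplifies by always handing out a fresh address after a restart.

```python
import ipaddress


class ToyInstance:
    """Toy model of public IPv4 behavior on stop/start; not an AWS API."""

    # Hypothetical shared pool drawn from the 203.0.113.0/24 documentation range
    _pool = ipaddress.ip_network("203.0.113.0/24").hosts()

    def __init__(self, elastic_ip=None):
        self.elastic_ip = elastic_ip  # an EIP stays with the instance if set
        self.public_ip = None
        self.start()

    def start(self):
        # An EIP is reused; otherwise a fresh address comes from the shared pool
        self.public_ip = self.elastic_ip or str(next(ToyInstance._pool))

    def stop(self):
        # Auto-assigned addresses go back to the pool; an EIP remains associated
        if self.elastic_ip is None:
            self.public_ip = None


web = ToyInstance()
before = web.public_ip
web.stop()
web.start()
print(before, "->", web.public_ip)  # two different addresses

pinned = ToyInstance(elastic_ip="198.51.100.7")
pinned.stop()
pinned.start()
print(pinned.public_ip)  # still 198.51.100.7
```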

Note

The reality is that if you have a Web/application server hosted at Amazon in a VPC, you don’t need a public IP address. The private address is a much better solution. How do customers connect to a Web server hosted in a private subnet? Easy. Place a public-facing load balancer hosted on the public subnet in your VPC, and direct the incoming traffic to the private subnet where your Web/app servers are located.

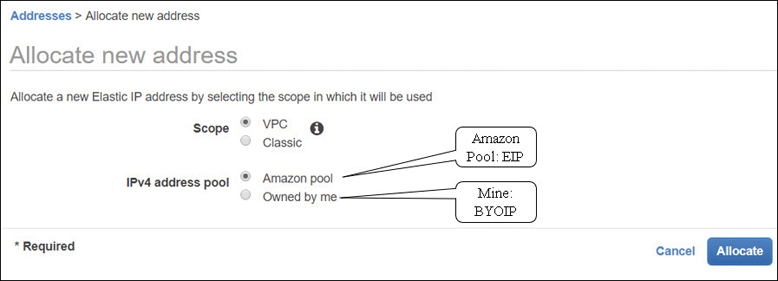

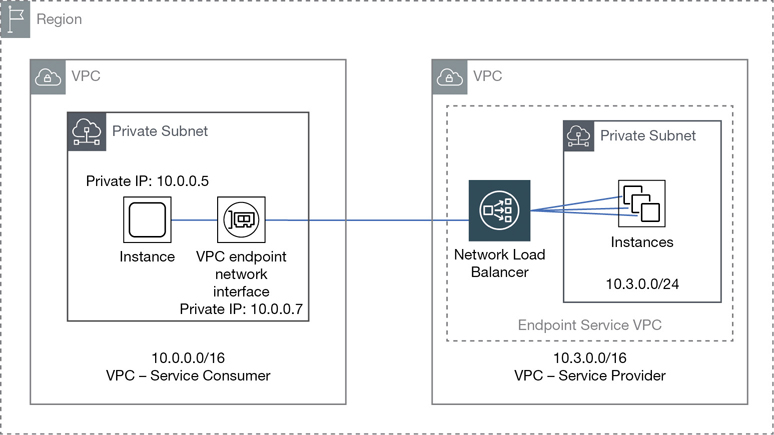

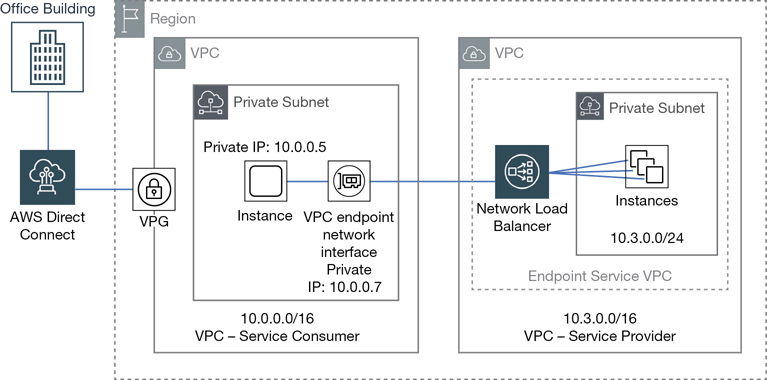

Elastic IP Addresses

A public elastic IP address (EIP) is a static public IP address that, after creation, is assigned to your account at AWS. There are two types of EIPs: a public version and a private one. First let’s discuss the public version; later in the chapter we will discuss a technology called PrivateLink, which uses the private EIPs. Requesting an elastic IP address is simple. You simply request an elastic IP address, as shown in Figure 3-18, and voilà, it’s added to your account from the regional pool of available EIPs. It arrives unassigned; your next job is to assign the EIP to the desired VPC in the region and finally assign the EIP to the EC2 instance.

AWS advertises each regional pool of elastic IP addresses across the public Internet and to all other AWS regions. Because of this advertising, EIPs hosted at AWS can be located across the public Internet and within the public AWS address space.

Note

AWS publishes a public listing of its IP address ranges, which can be found at https://docs.aws.amazon.com/general/latest/gr/aws-ip-ranges.html.
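The published ranges are also available as a JSON file (https://ip-ranges.amazonaws.com/ip-ranges.json), so checking whether an address belongs to AWS is a simple lookup. This Python sketch parses a tiny inline excerpt in that file’s format instead of downloading it. The single sample entry uses a documentation range and is made up for illustration. Download the real file for actual lookups.

```python
import ipaddress
import json

# Tiny made-up excerpt in the format of ip-ranges.json (illustrative only)
SAMPLE = """{
  "syncToken": "0",
  "createDate": "2024-01-01-00-00-00",
  "prefixes": [
    {"ip_prefix": "203.0.113.0/24", "region": "us-east-1",
     "service": "EC2", "network_border_group": "us-east-1"}
  ],
  "ipv6_prefixes": []
}"""


def aws_prefixes_for(ip, ranges_json):
    """Return (prefix, region, service) entries whose IPv4 prefix contains ip."""
    addr = ipaddress.ip_address(ip)
    data = json.loads(ranges_json)
    return [
        (p["ip_prefix"], p["region"], p["service"])
        for p in data["prefixes"]
        if addr in ipaddress.ip_network(p["ip_prefix"])
    ]


print(aws_prefixes_for("203.0.113.50", SAMPLE))  # [('203.0.113.0/24', 'us-east-1', 'EC2')]
print(aws_prefixes_for("192.0.2.1", SAMPLE))     # []
```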

Stopping and restarting an EC2 instance that has an EIP is no longer an issue; the EIP remains attached because it’s assigned to your AWS account and to the EC2 instance. And there’s no additional charge if you order and assign an EIP to an instance. However, if you don’t assign an EIP address after you have received it, AWS charges you an additional fee, as EIP addresses are in limited supply. Therefore, at AWS, there are two public pools of IP addresses to consider: dynamically assigned public IP addresses, which are returned to the common public pool of AWS addresses, and elastic IP addresses, which are allocated to a customer’s account after ordering and assigned as desired. Let’s work through another common scenario:

You spin up an instance and forget to assign a public IP address to the instance during the creation.

Your attempts to connect to the instance using PuTTY are not successful.

You can’t connect because there is no public IP address; you’re trying to connect to the private IP address assigned. Aha!

After requesting and receiving an EIP address, you associate it with your instance.

You successfully connect to the public elastic IP address using PuTTY.

The public IPv4 address will be displayed as an attribute of the network interface when viewed through the management console, but the internal wiring is a little more complicated. On an EC2 instance with a public IP address, this address is internally mapped to the instance’s primary private IPv4 address using NAT services. When traffic arrives for the public IP address, it is translated and delivered to your instance’s private internal IP address.

Assuming your EC2 instance is directly addressable from the Internet, when someone wants to reach your instance directly, the inbound destination is the public IP address. When the instance communicates outbound across the Internet, the source address is its public IP address. Queries on the private network of the EC2 instance always use the private address of the instance. The takeaway from this example is that AWS attempts to use the private IP address, whenever possible, for network communication with an EC2 instance.
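The one-to-one mapping between an instance’s public and private IPv4 addresses can be modeled as two rewrites: inbound packets have their destination changed, and Internet-bound outbound packets have their source changed. This Python sketch is a simplified illustration, not how AWS implements NAT internally. The packet dictionaries and all addresses are hypothetical.

```python
# Hypothetical one-to-one mapping for a single instance
PUBLIC_TO_PRIVATE = {"198.51.100.20": "10.0.1.15"}
PRIVATE_TO_PUBLIC = {priv: pub for pub, priv in PUBLIC_TO_PRIVATE.items()}


def translate_inbound(packet: dict) -> dict:
    """Internet -> instance: the public destination becomes the private IP."""
    dst = PUBLIC_TO_PRIVATE.get(packet["dst"], packet["dst"])
    return {**packet, "dst": dst}


def translate_outbound(packet: dict) -> dict:
    """Instance -> Internet: the private source becomes the public IP.

    Traffic that stays on the VPC's private network is not passed through
    this function, so it keeps using the private address.
    """
    src = PRIVATE_TO_PUBLIC.get(packet["src"], packet["src"])
    return {**packet, "src": src}


print(translate_inbound({"src": "192.0.2.9", "dst": "198.51.100.20"}))
# {'src': '192.0.2.9', 'dst': '10.0.1.15'}
print(translate_outbound({"src": "10.0.1.15", "dst": "192.0.2.9"}))
# {'src': '198.51.100.20', 'dst': '192.0.2.9'}
```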

Each EC2 instance that receives a public IP address at AWS is also provided with an external DNS hostname. As a result, the external DNS hostname is resolved to the public IP address of the EC2 instance when queries are external to AWS.

Public IP Summary

Use EIPs for NAT instances.

EIPs are also used for both public-facing and private load balancers.

EIPs are used for the NAT gateway services.

Public IP addresses are not necessary for EC2 instances.

Traffic Charges

It’s important to realize that public traffic and private traffic are charged differently at AWS. Costs at AWS can become extremely expensive if you don’t pay attention. This is but one example to consider: public traffic sent to a public IP address traveling across the Internet will have a much higher data transfer charge than private IP address traffic. Private traffic traveling within AWS data centers is always cheaper than traffic on a public subnet, as shown in Figure 3-19. Therefore, whenever possible, AWS uses the private network for communication.

Private traffic that stays within a single subnet has no additional charges, whereas private traffic that travels across multiple private subnets hosted in different AZs has an egress charge of $0.01 per GB. This might sound perfectly fair and inexpensive, but think about database replication from the primary database instance to the secondary database instance, with each instance located in a different AZ. If the database is a production database, expect a significant charge for the synchronous replication of your data from the primary to the secondary database instance. This is but one of the many ways AWS charges you for using hosted cloud resources. One of the jobs Terra Firma must carry out is a detailed costing of its initial design, including the replication of the databases across AZs, the cost of the load balancer, the monthly charge, plus the traffic flow.
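A rough estimate of the cross-AZ replication charge is simple arithmetic: gigabytes per day times days times the per-GB rate. This Python sketch uses the $0.01 per GB rate quoted above as a default parameter, because pricing changes, and a hypothetical replication volume of 200 GB per day. Confirm current rates with the AWS pricing tools before relying on the result.

```python
def monthly_cross_az_cost(gb_per_day: float, rate_per_gb: float = 0.01,
                          days: int = 30) -> float:
    """Estimate a month of cross-AZ data transfer charges, rounded to cents."""
    return round(gb_per_day * days * rate_per_gb, 2)


# Hypothetical production database replicating 200 GB of changes per day
print(monthly_cross_az_cost(200))  # 60.0
```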

Note

Inbound data transfer from the public Internet to an EC2 instance is free, as is private traffic within a single AZ. However, traffic that crosses AZs, such as database replication traffic, is charged a data transfer fee.

Bring Your Own IP (BYOIP)

If you have a public IP address range that has been approved for use by your compliance rules, or a specific public IP address has been white-listed as a reputable IP address for a well-known hosted public service, you may want to use these public IP addresses at AWS. The good news is that it’s now possible to have control over your own public IP addresses at AWS. BYOIP allows customers to move their existing public IPv4 address space to AWS, as shown in Figure 3-20. Each customer still owns her public IP range; however, Amazon hosts and advertises the public IP address range across the Internet and AWS regions for you. Bringing your own public IPv4 address space to AWS allows you to accomplish the following tasks:

Maintain your public IP address reputation.

Avoid any changes to public IP addresses that have been white-listed.

Avoid changing IP addresses used by legacy applications.

Use a public IP address as a hot standby failover for on-premises resources.

Figure 3-20 BYOIP address architecture at AWS

Reasons for wanting control of your own public address space in the cloud could include the following situations:

What if you want to keep a recognizable public IP address but have the service assigned to that address hosted on AWS?

What if you had 10,000 hard-coded lottery machines and you wanted to change the hardware devices to virtual ones at AWS with your public IP addresses?

What if you had 2,000 hard-coded public IP addresses within your data center and you wanted to change the physical location of your data center to AWS but keep the same public IP addresses?

What if you had legacy workloads—or older applications relying on specific fixed public IP addresses? These IP addresses can now be moved to AWS.

Note

The most specific IPv4 prefix supported by BYOIP at AWS is /24.
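Before starting the BYOIP process, you can sanity-check a candidate range locally. This Python sketch assumes, as AWS documents, that /24 is the most specific IPv4 prefix you can bring. It also requires the range to be a properly written, globally routable IPv4 prefix. The helper byoip_prefix_ok is hypothetical and does not check ownership or the authorization records AWS validates during provisioning.

```python
import ipaddress


def byoip_prefix_ok(cidr: str) -> bool:
    """True if cidr is a globally routable IPv4 prefix of /24 or larger."""
    try:
        net = ipaddress.ip_network(cidr)  # strict: host bits must be zero
    except ValueError:
        return False
    return net.version == 4 and net.prefixlen <= 24 and net.is_global


print(byoip_prefix_ok("198.51.100.0/25"))  # False: more specific than /24
```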

The BYOIP Process

Import the public IP address, or the range of public IP addresses, into AWS. AWS creates a pool of these addresses and assigns the address pool to you.

Advertise the public address range.

Allocate EIP addresses from your AWS hosted pool of public IP addresses.

Step One: Provision Your Public IP Addresses

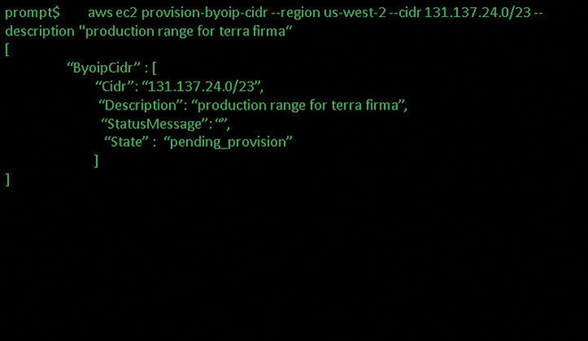

aws ec2 provision-byoip-cidr --cidr <cidr-range> --cidr-authorization-context Message="<message>",Signature="<signature>" --region <region>

After AWS has analyzed and accepted the range of public IP addresses, the state of the public address range to be hosted at AWS changes to “provisioned” indicating that the IP address request has been accepted. At this point you will be able to use these public IP addresses, but they have not yet been advertised across the Internet or to the peering partners of AWS.

Step Two: Advertise the Newly Created IP Address Pool Using the Advertise Command

aws ec2 advertise-byoip-cidr --cidr <cidr-range> --region <region>

After this command has executed, the state of the address space changes from provisioned to advertised, as shown in Figure 3-21. The address range is now advertised across the Internet and to all the peering partners of AWS. Once AWS has accepted the range and started advertising it, stop advertising the same public IP addresses from any other location, such as your on-premises network, to avoid duplicate routing conflicts.

Step Three: Allocate an EIP from Your Range of IP Addresses

aws ec2 allocate-address --public-ipv4-pool <pool ID> [--address <specific IP address>]

When using the hosted pool of addresses at AWS to allocate elastic IP addresses, you can select a random IP address from the hosted pool or select a specific IP address.

If, in the future, you decide you don’t want AWS to advertise and host your pool of public IP addresses, you can run the withdraw command (aws ec2 withdraw-byoip-cidr) to change the state of the address range from advertised back to provisioned. At this point, AWS no longer advertises your public IP addresses. Before the final step, release any Elastic IP addresses you allocated from the pool; then run the deprovision command (aws ec2 deprovision-byoip-cidr) to remove the address range from your AWS account.
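The lifecycle just described (provision, advertise, allocate, withdraw, deprovision) behaves like a small state machine. The toy model below is a sketch under simplifying assumptions: the class `ByoipRange` and the label `not-provisioned` are hypothetical, and AWS's real state list also includes pending and failed states that are not modeled here. It enforces the ordering from the text, including releasing Elastic IPs before deprovisioning.

```python
class ByoipRange:
    """Toy model of the BYOIP lifecycle (simplified; not an AWS API)."""

    def __init__(self, cidr):
        self.cidr = cidr
        self.state = "not-provisioned"  # hypothetical label for a range not yet imported
        self.eips = set()               # Elastic IPs allocated from this pool

    def _require(self, *allowed):
        if self.state not in allowed:
            raise RuntimeError(f"{self.cidr}: state '{self.state}' is not one of {allowed}")

    def provision(self):
        self._require("not-provisioned")
        self.state = "provisioned"

    def advertise(self):
        self._require("provisioned")
        self.state = "advertised"

    def allocate(self, address):
        # Addresses can be allocated once the range is provisioned.
        self._require("provisioned", "advertised")
        self.eips.add(address)

    def release(self, address):
        self.eips.discard(address)

    def withdraw(self):
        self._require("advertised")
        self.state = "provisioned"

    def deprovision(self):
        self._require("provisioned")
        if self.eips:
            raise RuntimeError("release all Elastic IPs allocated from the pool first")
        self.state = "deprovisioned"

r = ByoipRange("203.0.113.0/24")  # placeholder documentation range
r.provision(); r.advertise(); r.allocate("203.0.113.10")
r.withdraw(); r.release("203.0.113.10"); r.deprovision()
print(r.state)
```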

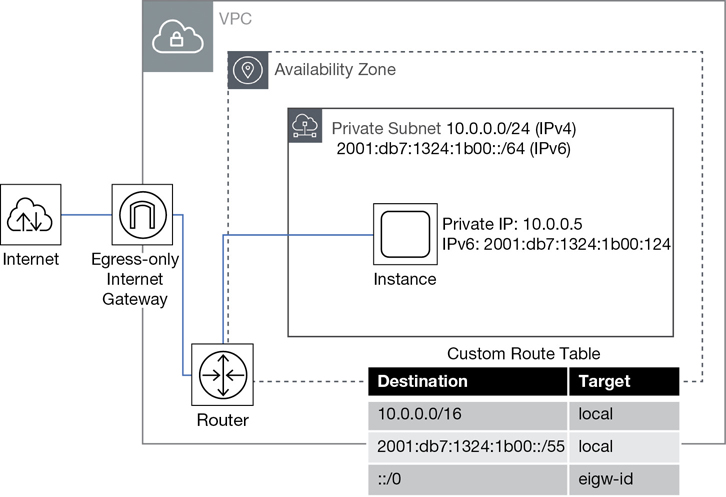

IPv6 Addresses

Remember that even though IPv6 addresses are fully supported within a VPC, an IPv4 CIDR block must be created first. IPv6 addresses are 128 bits long, and the IPv6 CIDR block assigned to a VPC has a fixed size of /56. Amazon controls IPv6 addressing; you cannot select your own CIDR range. Note also that at AWS there is no distinction between public and private IPv6 addresses.
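A /56 block is much larger than it looks. The arithmetic below uses Python's `ipaddress` module on a hypothetical block from the IPv6 documentation range; the /64 subnet size is an assumption (the conventional IPv6 subnet size) rather than something stated above, and `ipv6_subnets` is a hypothetical helper.

```python
import ipaddress

def ipv6_subnets(vpc_cidr: str, subnet_prefix: int = 64):
    """Split a VPC's IPv6 block into equal-sized subnets (/64 by default)."""
    block = ipaddress.ip_network(vpc_cidr)
    if block.version != 6:
        raise ValueError("expected an IPv6 CIDR block")
    return list(block.subnets(new_prefix=subnet_prefix))

vpc = "2001:db8:1234:ab00::/56"  # hypothetical block, not one AWS assigned
subnets = ipv6_subnets(vpc)
print(ipaddress.ip_network(vpc).num_addresses == 2 ** 72)  # True: 128 - 56 = 72 bits remain
print(len(subnets))                                         # 256 possible /64 subnets
print(subnets[0], subnets[-1])
```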

Note

At AWS, IPv6 addresses are public addresses. Amazon also does not provide support for a DNS hostname for assigned IPv6 addresses.

If your instance is configured to receive an IPv6 address at launch, the address is associated with the primary network interface. Assigned IPv6 addresses are also persistent; you can stop and start your instance, and the IPv6 addresses remain assigned. IPv6 addresses are globally unique, so no network address translation is needed for an instance to communicate with the Internet. Whether that communication is permitted is controlled by the subnet route table, together with security groups and network ACLs.

If you are planning to utilize IPv6 addressing at AWS for Internet access, here is a short summary of the steps to follow:

Associate the AWS-provided IPv6 CIDR block with your VPC.

Create an egress-only Internet gateway (EOIG), which allows outbound traffic only.

This allows instances in your private subnet to initiate outbound communications to the Internet over IPv6, while the egress-only Internet gateway prevents hosts on the Internet from initiating inbound connections to those instances.

Update your route tables to route your IPv6 traffic to the EOIG.

For instances hosted on IPv6 public subnets, add a route that directs all IPv6 traffic (::/0) from the subnet to the Internet gateway. Note that this is the regular Internet gateway, not the EOIG; the EOIG handles outbound-only traffic from private subnets.

For instances on IPv6 private subnets, create a route that directs all Internet-bound IPv6 traffic to the EOIG.

Review and update your security group rules, adding rules for IPv6 addresses.
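Route tables pick a target by longest-prefix match: the most specific route that contains the destination wins. The sketch below models a private subnet's route table using hypothetical CIDR blocks and a placeholder gateway ID (`eigw-EXAMPLE`). A public subnet would point `::/0` at its Internet gateway instead. The `lookup` helper is illustrative and is not an AWS API.

```python
import ipaddress

# Hypothetical private-subnet route table; the CIDRs and the gateway ID are placeholders.
PRIVATE_ROUTES = {
    "10.0.0.0/16": "local",
    "2001:db8:1234:ab00::/56": "local",
    "::/0": "eigw-EXAMPLE",  # all other IPv6 traffic goes to the egress-only gateway
}

def lookup(routes, destination):
    """Return the target of the most specific route containing `destination`, or None."""
    addr = ipaddress.ip_address(destination)
    best = None
    for cidr, target in routes.items():
        net = ipaddress.ip_network(cidr)
        if addr.version == net.version and addr in net:
            if best is None or net.prefixlen > best[0].prefixlen:
                best = (net, target)
    return best[1] if best else None

print(lookup(PRIVATE_ROUTES, "2001:db8:1234:ab10::5"))  # stays inside the VPC
print(lookup(PRIVATE_ROUTES, "2606:4700::1"))           # Internet-bound IPv6
```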

Security Groups

Security groups operate at the EC2 instance level. They filter your server’s inbound and outbound network traffic by placing a software firewall around each attached network interface. Each EC2 instance has a primary network interface (defined as eth0); you may also have EC2 instances with multiple network interfaces, and each interface is protected by a security group.

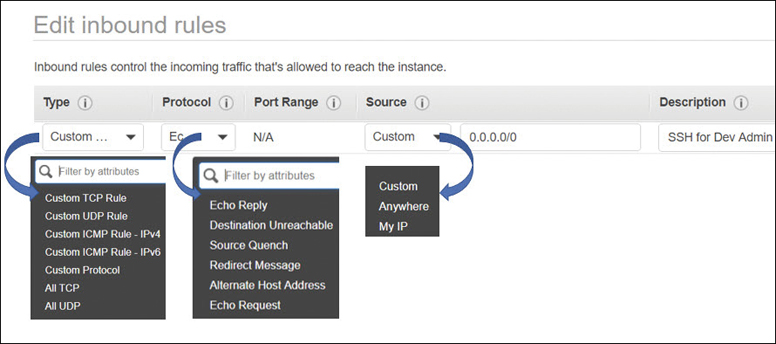

Each security group is a collection of inbound and outbound rules that designate the port and protocols allowed into and out of each network interface, as shown in Figure 3-22.

It may not be obvious, but each network interface is attached to a single EC2 instance, and each security group is assigned to a specific VPC. Here are some details of what we can do with security groups:

Security groups are associated with a particular VPC.

Each network interface assigned to an instance hosted within a VPC can be associated with up to five security groups (the default quota).

We can create allow rules; we cannot specify explicit deny rules with security groups. However, any traffic that is not specifically allowed is implicitly denied.

When you create a new security group, if you don’t review and change your outbound rules, all outbound traffic is allowed.

Outbound rules can be changed to a more specific outbound destination.

Security group rules are stateful. Traffic flowing through a security group is tracked, so if inbound traffic is allowed in, the response to that traffic is always allowed out. The reverse is also true: responses to allowed outbound traffic are allowed back in. Once security groups have been assigned, you can change the security groups assigned to a network interface while the instance is running. Security group changes usually take effect within a few seconds.
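Stateful filtering is easiest to see with connection tracking. The toy filter below is a minimal sketch under stated assumptions: it matches on destination port only, and the class and field names are hypothetical, not a description of how AWS implements security groups. It records each allowed flow and lets the reversed flow (the response) through regardless of the rules for the other direction.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Packet:
    src: str
    sport: int
    dst: str
    dport: int
    proto: str = "tcp"

class StatefulFilter:
    """Toy stateful filter that mimics security-group behavior (sketch only)."""

    def __init__(self, inbound_ports, outbound_ports):
        self.inbound_ports = set(inbound_ports)
        self.outbound_ports = set(outbound_ports)
        self.flows = set()  # tracked flows that were allowed when first seen

    @staticmethod
    def _reverse(p):
        return Packet(p.dst, p.dport, p.src, p.sport, p.proto)

    def _check(self, p, allowed_ports):
        if self._reverse(p) in self.flows:  # response to a tracked flow: always allowed
            return True
        if p.dport in allowed_ports:        # new flow that matches an allow rule
            self.flows.add(p)
            return True
        return False                        # no rule matched: implicitly denied

    def inbound(self, p):
        return self._check(p, self.inbound_ports)

    def outbound(self, p):
        return self._check(p, self.outbound_ports)

sg = StatefulFilter(inbound_ports={80}, outbound_ports=set())  # no outbound rules at all
request = Packet("198.51.100.7", 50123, "10.0.1.10", 80)
response = Packet("10.0.1.10", 80, "198.51.100.7", 50123)
print(sg.inbound(request), sg.outbound(response))  # the response is allowed out
```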

Security groups show up in several locations in the management console; they appear in both the EC2 and the VPC console. If you were using EC2-Classic networking, the security groups you would have created would have shown up only in the EC2 console.

Think of each security group as a reusable security template stored in your AWS account. Once a security group has been created, it can be assigned multiple times within the VPC where it was created, protecting one or many EC2 instances. Up to five security groups can be applied to a single network adapter, providing an effective set of allow rules mandating what ports and protocols are allowed for both inbound and outbound traffic.

The main characteristics of security group rules are as follows:

Security groups define allow rules. (You can’t create rules that explicitly deny access.)

Security group rules allow you to direct traffic outbound from one security group and inbound to another security group within the same VPC.

Security groups don’t deny traffic explicitly; instead, they deny traffic implicitly by only stating what is allowed.

Security groups are stateful; if requests are allowed in, response traffic is allowed out regardless of the defined outbound rules.

For each rule, you define the protocol, the port or port range, and the source (for inbound rules) or destination (for outbound rules) of the traffic.

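The most commonly used protocols are TCP, UDP, and ICMP; you can also select all traffic, or specify another protocol by its IP protocol number.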

Port Range: for TCP, UDP, or a custom protocol, this is the range of ports to allow. You can specify a single port such as port 22, or a range of ports if you’re dealing with outbound dynamic port mapping. Custom protocols are identified by their IANA-assigned IP protocol numbers; RFC 5237 and RFC 7045 cover how those protocol numbers are allocated.

One important concept to grasp about security groups is that they don’t deny traffic flow; they only allow it. Another equally important concept is the “direction of traffic flow” allowed by a security group. As we know, the response to traffic that a security group rule allows in is also allowed out. However, the response is not addressed to the same port number as the request. For example, if a rule allows inbound HTTP traffic on port 80, the response is allowed out, but it is sent from port 80 to the client’s ephemeral port: a dynamically assigned port chosen by the operating system of the client that made the request. We will explore ephemeral ports when we cover network ACLs later in this chapter.
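You can watch an operating system pick an ephemeral port on your own machine. The sketch below opens a listener on the loopback interface, connects a client to it, and reports the port the server sees the client coming from. Binding the listener to port 0 also lets the OS choose a free port, so nothing on a fixed port like 80 is needed. The helper name `observe_client_port` is hypothetical.

```python
import socket

def observe_client_port():
    """Return (server_port, client_port_seen_by_server, client_port_seen_by_client)."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        server.bind(("127.0.0.1", 0))  # port 0: let the OS choose a free port
        server.listen(1)
        server_port = server.getsockname()[1]
        with socket.create_connection(("127.0.0.1", server_port)) as client:
            conn, (_peer_ip, peer_port) = server.accept()
            with conn:
                # Responses from the server go back to peer_port, the client's ephemeral port.
                return server_port, peer_port, client.getsockname()[1]

server_port, peer_port, client_port = observe_client_port()
print(f"server listens on {server_port}; replies go to ephemeral port {peer_port}")
```

Each run reports different numbers, because the client's port is assigned dynamically.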

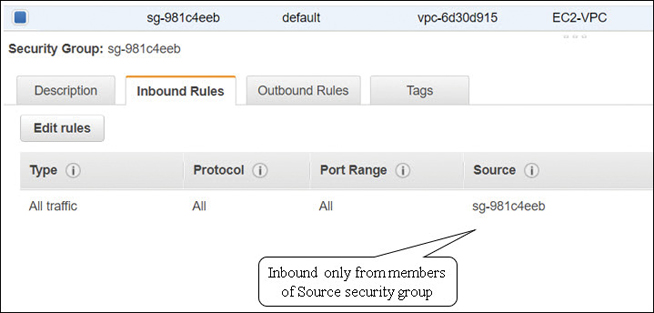

When a VPC is created, a default security group is also created, as shown in Figure 3-23. The default security group allows all inbound traffic from EC2 instances that are assigned the same security group, and it allows all outbound traffic; any other inbound traffic is implicitly denied. Therefore no one in the outside world can get into any of the EC2 instances, because the security group does not allow any external inbound traffic. It’s also important to understand that you can’t delete a default security group. You don’t have to use it, though; you can ignore it and create and assign custom security groups.
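The default security group’s inbound behavior can be written as a single rule whose source is the group itself. The evaluator below is a sketch, not AWS’s implementation: the `Rule` type, the `inbound_allowed` helper, and the group ID `sg-default-EXAMPLE` are all hypothetical. Any allow rule that matches admits the traffic; if nothing matches, the traffic is implicitly denied.

```python
import ipaddress
from dataclasses import dataclass

@dataclass(frozen=True)
class Rule:
    protocol: str  # "tcp", "udp", "icmp", or "all"
    ports: range   # ignored when protocol == "all"
    source: str    # a CIDR block, or a security group ID starting with "sg-"

def inbound_allowed(rules, protocol, port, src_ip, src_groups=()):
    """True if any allow rule matches; otherwise the traffic is implicitly denied."""
    for rule in rules:
        if rule.protocol not in ("all", protocol):
            continue
        if rule.protocol != "all" and port not in rule.ports:
            continue
        if rule.source.startswith("sg-"):
            if rule.source in src_groups:  # sender's interface carries this group
                return True
        elif ipaddress.ip_address(src_ip) in ipaddress.ip_network(rule.source):
            return True
    return False

DEFAULT_SG = "sg-default-EXAMPLE"  # hypothetical ID
DEFAULT_INBOUND = [Rule("all", range(0, 65536), DEFAULT_SG)]  # self-referencing rule

print(inbound_allowed(DEFAULT_INBOUND, "tcp", 22, "10.0.1.20", {DEFAULT_SG}))  # same group
print(inbound_allowed(DEFAULT_INBOUND, "tcp", 22, "198.51.100.7"))             # outside world
```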

If you don’t pay attention and don’t specify a custom security group when an EC2 instance is created, the default security group is associated automatically at launch. As we now know, the default security group allows all outbound traffic from the associated instance, but access to the instance from an external location is not allowed.

Custom Security Groups

What happens when you create a custom security group? First, you must associate the security group with a specific VPC. When it is first created, a custom security group allows no inbound traffic but allows all outbound traffic by default. After creating the custom security group, select its properties, and on the Inbound Rules tab create the inbound rules defining the network traffic that’s allowed in. On the Outbound Rules tab, define the network traffic or security groups that are allowed to communicate outbound.

Inbound rules—Define the source of the traffic—that is, where it is coming from, and what the destination port or port range is. The actual source of the traffic could be a single IP address (IPv4 or IPv6), a range of addresses, or another security group.

Outbound rules—Define the destination of the traffic—that is, where it is going to, and the destination port, or port range. The actual destination of the traffic could be a single IP address (IPv4 or IPv6), a range of addresses, or another security group.

A prefix list ID for a specific AWS service, such as the prefix list for Amazon S3 used with a gateway VPC endpoint

Another security group—This feature allows instances that are associated with one security group to access instances associated with another security group. The security group can reside in the same VPC or can be from a VPC that has been peered together through a VPC peering connection.
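Each kind of source maps to a different field when a rule is expressed through the EC2 API. The helper below is a sketch that builds one entry shaped like the `IpPermissions` structure accepted by boto3’s `authorize_security_group_ingress`. It makes no API call, and the helper name and the IDs in the usage lines are hypothetical. It picks the field from the source’s form: a security group ID, a prefix list ID, or an IPv4 or IPv6 CIDR.

```python
import ipaddress

def ingress_permission(protocol, port, source, description=""):
    """Build one IpPermissions-style entry, choosing the field from the source type."""
    perm = {"IpProtocol": protocol, "FromPort": port, "ToPort": port}
    if source.startswith("sg-"):
        perm["UserIdGroupPairs"] = [{"GroupId": source, "Description": description}]
    elif source.startswith("pl-"):
        perm["PrefixListIds"] = [{"PrefixListId": source, "Description": description}]
    else:
        net = ipaddress.ip_network(source)  # a bare address becomes a /32 or /128
        if net.version == 4:
            perm["IpRanges"] = [{"CidrIp": str(net), "Description": description}]
        else:
            perm["Ipv6Ranges"] = [{"CidrIpv6": str(net), "Description": description}]
    return perm

print(ingress_permission("tcp", 443, "sg-web-EXAMPLE", "from web tier"))
print(ingress_permission("tcp", 22, "203.0.113.10", "admin workstation"))
```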

Note

Security groups “allow” access; however, security groups are also said to “deny by default.” If an incoming port is not allowed, technically access is denied.

The best practice when designing security groups is to restrict what ports need to be opened. If you place a load balancer in front of your EC2 instances, the only ports that need to be allowed by the security group protecting the load balancer are the port or ports your application requires.

For your production applications, it’s a good idea to consider picking unique ports based on each production server’s tier placement. For example, in a three-tier design, Web servers could be assigned port 3020 and application servers port 3030, and database instances could use custom ports. That’s just an extra level of security to consider. In addition, plan for a clean security audit and for successful troubleshooting by designing and documenting your security groups’ naming scheme up front. Putting real thought into all naming conventions pays off when you are under pressure. We have all been in this situation; fill in the blank: “What’s the blasted name of the --------? Who named it that?” Here are some examples to consider for your security group configurations and initial setup.
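Tier-specific ports and a documented naming scheme combine well with security group references, because each tier can then accept traffic only from the tier in front of it. The sketch below is illustrative only. The naming convention `<env>-<tier>-<port>-sg` and the group IDs are hypothetical, ports 3020 and 3030 come from the text, and 443 (load balancer) and 5432 (database) are assumed.

```python
# Hypothetical tiers: (tier name, security group ID, listening port).
TIERS = [
    ("alb", "sg-alb-EXAMPLE", 443),   # assumed port for the load balancer
    ("web", "sg-web-EXAMPLE", 3020),  # from the text
    ("app", "sg-app-EXAMPLE", 3030),  # from the text
    ("db", "sg-db-EXAMPLE", 5432),    # assumed custom database port
]

def sg_name(env: str, tier: str, port: int) -> str:
    """Hypothetical naming convention: <environment>-<tier>-<port>-sg."""
    return f"{env}-{tier}-{port}-sg"

def chained_rules(env, tiers):
    """Each tier accepts only its own port, and only from the tier directly in front of it."""
    rules = {}
    for (_, front_sg, _), (tier, sg_id, port) in zip(tiers, tiers[1:]):
        rules[sg_name(env, tier, port)] = {
            "GroupId": sg_id,
            "IpProtocol": "tcp",
            "FromPort": port,
            "ToPort": port,
            "UserIdGroupPairs": [{"GroupId": front_sg}],
        }
    return rules

for name, rule in chained_rules("prod", TIERS).items():
    print(name, rule["FromPort"], "from", rule["UserIdGroupPairs"][0]["GroupId"])
```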

App Server Inbound Ports

This security group has rules to allow inbound access of HTTP and HTTPS from any IPv4 address as the traffic is arriving inbound from the public Internet. As shown in Table 3-3, the source IP address of 0.0.0.0/0 indicates the traffic is allowed from any Internet location.

Table 3-3 Security Group Allowing HTTP Access

Inbound Rules

| Protocol | Number | Port | Source IP | Comment |
|---|---|---|---|---|
| TCP | 6 | 80 (HTTP) | 0.0.0.0/0 | Inbound from anywhere (IPv4) |

Outbound Rules

| Protocol | Number | Port | Destination IP | Comment |
|---|---|---|---|---|
| ALL | 6 | 80 (HTTP) | 0.0.0.0/0 | Outbound IPv4 traffic |

Database Server Inbound Ports

At AWS, there are a number of managed database server options available with RDS; during configuration, you can choose to use the default port address assigned based on the database product’s default port access. You could change this to a custom port number to add an additional element of security. The source IP address could be specified as a single IP address or a range of IP addresses from your subnet of application servers that need to query the database. Default RDS security group database port options are listed in Table 3-4.

Table 3-4 Default Database Inbound Ports

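| Protocol | Number | Port | Source IP | Product | Comment |
|---|---|---|---|---|---|
| TCP | 6 | 1433 | Single IP address or a range of IP addresses | Microsoft SQL | Default port to access selected database instance |
| TCP | 6 | 3306 | Single IP address or a range of IP addresses | Aurora / MySQL | Default port to access selected database instance |
| TCP | 6 | 5432 | Single IP address or a range of IP addresses | PostgreSQL | Default port to access selected database instance |
| TCP | 6 | 1521 | Single IP address or a range of IP addresses | Oracle | Default port to access selected database instance |
| TCP | 6 | 5439 | Single IP address or a range of IP addresses | Redshift | Default port to access selected database instance |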
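The ports in Table 3-4 can be turned into an ingress rule that admits only the application servers’ subnet, with an optional custom port as suggested above. This is a sketch: the engine keys, the helper name `db_ingress`, and the subnet values are hypothetical, and the returned dictionary only mimics the shape of an EC2 `IpPermissions` entry. It refuses a rule that opens the database port to the whole Internet.

```python
import ipaddress

# Default ports from Table 3-4 (engine keys are hypothetical labels).
DEFAULT_DB_PORTS = {
    "sqlserver": 1433,
    "mysql": 3306,  # Aurora / MySQL
    "postgresql": 5432,
    "oracle": 1521,
    "redshift": 5439,
}

def db_ingress(engine, app_subnet_cidr, custom_port=None):
    """Allow the database port only from the application servers' IPv4 subnet."""
    port = custom_port if custom_port is not None else DEFAULT_DB_PORTS[engine]
    net = ipaddress.ip_network(app_subnet_cidr, strict=True)
    if net.version != 4:
        raise ValueError("this sketch handles IPv4 sources only")
    if net.prefixlen == 0:
        raise ValueError("database ports should not be open to the entire Internet")
    return {
        "IpProtocol": "tcp",
        "FromPort": port,
        "ToPort": port,
        "IpRanges": [{"CidrIp": str(net), "Description": f"{engine} from app servers"}],
    }

print(db_ingress("postgresql", "10.0.2.0/24"))
print(db_ingress("mysql", "10.0.2.0/24", custom_port=13306)["FromPort"])
```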

Administration Access

If you need to connect to an EC2 instance to perform direct administration, you must associate a security group that has inbound rules allowing Secure Shell (SSH) or Remote Desktop Protocol (RDP) access, depending on the host operating system of the instance (see Table 3-5), with the instance’s network interface. An instance such as a database server may be hosted on a private subnet and not be directly accessible from the Internet. However, setting up a bastion host allows administrators to first authenticate to the bastion host and then “jump” to the associated EC2 instance in the private subnet.

Table 3-5 Security Groups Allowing Administrative Access

| Protocol | Number | Port | Operating system | Comment |
|---|---|---|---|---|
| TCP | 6 | 22 | Linux | Public IPv4 address, or IPv6 address or range of addresses |
| TCP | 6 | 3389 | Windows | Public IPv4 address, or IPv6 address or range of addresses |
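With OpenSSH, the bastion “jump” described above can be done in one command using the `-J` (ProxyJump) option. The helper below only assembles the argument list and does not run it. The host names and the helper name are hypothetical, and `ec2-user` is assumed as the login name (the default on Amazon Linux). It also assumes that the bastion’s security group allows port 22 from your admin network and that the private instance’s security group allows port 22 from the bastion’s security group.

```python
import shlex

def jump_command(bastion: str, target: str, user: str = "ec2-user") -> list:
    """Build an OpenSSH command that hops through `bastion` to reach `target`."""
    return ["ssh", "-J", f"{user}@{bastion}", f"{user}@{target}"]

if __name__ == "__main__":
    # Hypothetical hosts: a public bastion name and a private-subnet address.
    print(shlex.join(jump_command("bastion.example.com", "10.0.1.25")))
```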

Pinging an EC2 Instance

Pinging an instance requires that ICMP traffic be allowed. Testing with ping is almost an automatic process: drop to a command prompt and ping the public IP address of the newly created EC2 instance. If no reply comes back, the likely reason is that the security group is not allowing ICMP, so the instance never receives the ping request. The ping request is inbound, so you must add an inbound ICMP rule, as shown in Table 3-6.

Table 3-6 Allowing PING Access

| Protocol | Number | Port (ICMP type) | Source IP | Comment |
|---|---|---|---|---|
| ICMP | 1 | 8 (ECHO) | Admin workstation network | Public IPv4 address or range of addresses |
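When an ICMP rule is expressed through the EC2 API, the port fields carry ICMP values instead of ports: `FromPort` holds the ICMP type and `ToPort` holds the ICMP code, with -1 meaning any code. The sketch below builds such an entry for echo requests from an admin network. The helper name and the CIDR in the usage line are hypothetical. No rule is needed for the echo reply, because security groups are stateful.

```python
import ipaddress

ICMP_ECHO_REQUEST = 8  # ICMP type for echo request (ping); replies are type 0

def icmp_echo_ingress(admin_cidr: str) -> dict:
    """IpPermissions-style entry allowing ping from an admin network (IPv4 only)."""
    net = ipaddress.ip_network(admin_cidr)
    if net.version != 4:
        raise ValueError("this sketch handles IPv4 ICMP only")
    return {
        "IpProtocol": "icmp",
        "FromPort": ICMP_ECHO_REQUEST,  # for ICMP, FromPort holds the ICMP type
        "ToPort": -1,                   # and ToPort holds the ICMP code; -1 = any code
        "IpRanges": [{"CidrIp": str(net), "Description": "ping from admin workstation network"}],
    }

print(icmp_echo_ingress("203.0.113.0/28"))
```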

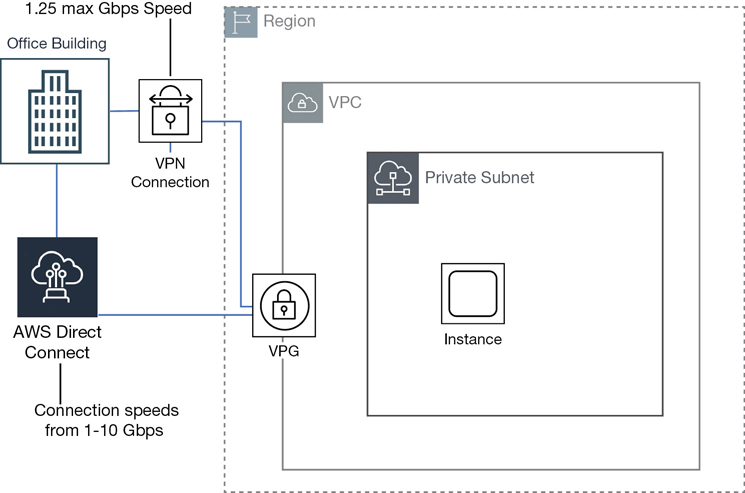

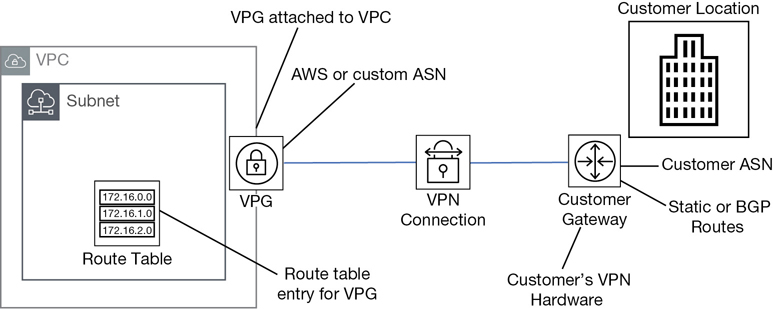

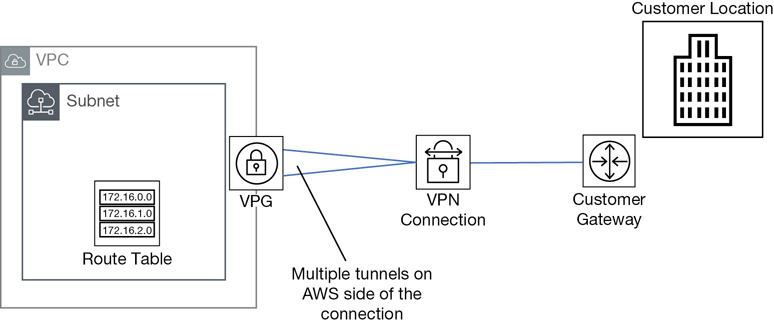

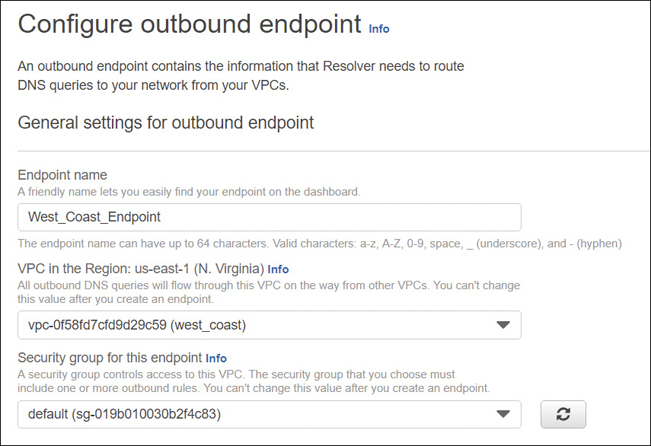

Elastic Load Balancing (ELB)