Chapter 5. Technical Debt and the Source Code

Comprehensive analysis of technical debt requires understanding of short-term and long-term issues with business goals, source code, architecture, testing, and build and deployment infrastructure, as well as how they are all related to each other. While you might conduct separate analyses for each of these artifacts, it is important to recognize that they are intertwined. When you make decisions about remediating technical debt, their interrelationships are especially important, as discussed in later chapters. In this chapter, we explain how to use source code as input to recognizing technical debt.

Looking for the Magic Wand

A web search for “analyzing technical debt” returns many vendor web pages describing tools, mostly those that conduct automated static program analysis. They promise that such analysis will help measure, and consequently reduce, your technical debt.

When you are faced with technical debt for the first time in a software development project, you might feel tempted to rush out to acquire one of these tools, hoping that you can identify and measure all your technical debt in one magic stroke. But do these tools provide the right approach to understanding your technical debt? And are they sufficiently comprehensive?

Let us look at an example from the Phoebe project. During a quarterly project review, the project manager became concerned about the increasing number of defects. She noted, “Our maintenance costs are increasing.” The developers felt that this was the result of spaghetti code, or unnecessarily convoluted and unstructured source code. They looked into using a static program analysis tool to help them understand the complexity of their system, and they selected SonarQube, an open-source but well-tested tool that supports their Java-based project. Running such code quality analyzers typically yields results similar to those shown in Figure 5.1.

This snapshot demonstrates some potentially confusing results about the quality of the Phoebe project’s source code. Static analyzers are likely to provide a long list of issues related to your code. Those issues may or may not be technical debt, may or may not be essential to resolve, and may or may not be related to your current business objectives. Understanding how to use static analyzers to locate your technical debt without getting lost in overall defects or bad code quality is one of the most daunting aspects of technical debt management.

For the Phoebe project, the tool found a total of 13,417 issues in the code, most of which it listed with the severity code blocker, critical, or major. The tool does further sorting by bug, vulnerability, and code smell. Code smells and some of the bugs and vulnerabilities could be symptoms of deeper underlying issues related to technical debt. What should the development team do about them? Should team members record each one as a technical debt item in the issue tracker? Overwhelmed by the result, the team created one new issue and added it to the backlog: “Resolve technical debt based on the results of the static analysis.” And there it lingered. Needless to say, this is not a well-defined technical debt description.

We propose a more focused and deliberate approach to technical debt analysis, which includes using static analysis tools only after deciding what you will do with the information they provide. Depending on where you are on the technical debt timeline, you may consider using source code as input for technical debt analysis for three reasons:

The team is struggling to meet a deadline, and there are increasing numbers of defects. These symptoms should trigger code analysis.

The team conducted a Technical Debt Credit Check (described in Chapter 11, “Technical Debt Credit Check”) and identified causes such as staff turnover, lack of skill development, and time pressure. Such business issues should trigger analysis of the code because mistakes and complexities have likely been introduced.

There are no immediate concerns, but the team would like to be proactive with code quality by performing regular lightweight checks of the code. This is a best-case scenario.

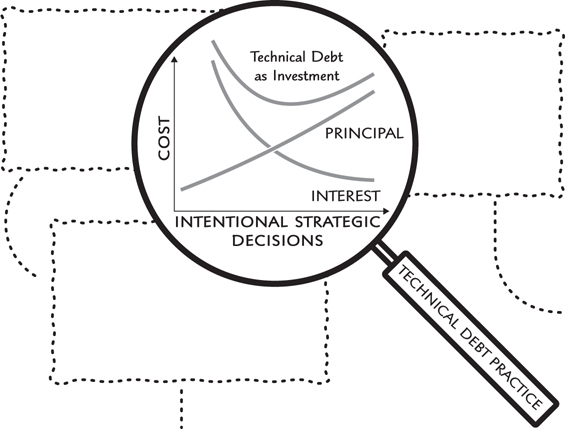

The information you get from a source code analysis can help you recognize and describe technical debt items and determine where you are on the technical debt timeline, especially whether you’ve passed the tipping point. In other words, are you approaching the suffering period—when the cost of technical debt surpasses the original value of incurring it—or are you well within it? The following sections proceed through the activities of technical debt analysis described in Chapter 4, “Recognizing Technical Debt.”

Understand Key Business Goals

If you don’t know where you are going, any route will do. Simply answering the question “How much technical debt does this code have?” is not useful. Technical debt should be identified as an enumeration of meaningful technical debt descriptions, not as an enumeration of code quality violations. Identifying the amount of technical debt occurs in the context of addressing a business goal about system quality and functionality. Investigating system quality and assessing whether it meets the business goals may reveal a portion of the code that is producing the symptoms of debt. The consequences of this piece of code ultimately give rise to two kinds of technical debt interest: recurring (constant additional effort incurred due to keeping this piece of code in the system—that is, living with this debt) and accruing (the cost of changing the system and retrofitting parts).

Each software development organization has its own distinct business goals and objectives. These are highly dependent on the context and product of the organization. In Chapter 3, “Moons of Saturn—The Crucial Role of Context,” we cover the many factors that can create conditions for the occurrence of technical debt. Business goals and associated risks serve as a good starting point to articulate how to go about technical debt analysis. Knowing your position on the technical debt timeline and your plans for remediating the debt helps you align the technical debt analysis with your business goals.

Table 5.1 provides some common examples of business goals related to productivity, quality, cost, and time to market. The associated pain points are symptoms that can inform code analysis for identifying technical debt. Some organizations do a good job of clearly communicating short-term and long-term business goals, while some development teams have to infer the goals through the pain felt across their organization as a consequence of the debt they are carrying.

The first row in Table 5.1 shows the business goal “Create an easy-to-evolve product,” one of the goals driving the Phoebe project. Symptoms of technical debt have become visible outside the development team, and management has noticed that features are being delayed and maintenance costs are increasing. When management asked the developers why, they pointed to buggy code of increasing complexity. The team conducted a Technical Debt Credit Check (described in Chapter 11), which revealed that the cause is the frequent addition of new team members to the project without appropriate onboarding. The organization has just reached the awareness point on the technical debt timeline.

Table 5.1 Examples of mapping business goals to the technical debt timeline

| Business Goal | Pain Point | Causes | Point in TD Timeline |
|---|---|---|---|
| Create an easy-to-evolve product | Our maintenance and evolution costs are increasing. Developers are new to the project and say we have spaghetti code, resulting in an increased number of defects. We need to understand the extent of the problem before taking any action. | Inexperienced team members create conditions for the occurrence of technical debt. | Awareness |
| Increase market share | Customers have started switching services. We have had at least two security breaches in the past six months. We keep patching things up, but we need to step back and understand what is going on in the codebase. More security breaches could result in further loss of business. | The teams stopped following standard procedures and did not understand key architectural requirements—security in particular. | Tipping point: The project is experiencing symptoms, and the team needs to do something now. |
| Reduce development costs | If we reuse this piece of software, we anticipate reducing our development time, which is currently quite lengthy, but we are not sure if we will incur technical debt in the future if we go forward with the reuse strategy. | Building on a product that already has debt could create more debt; the team does not completely understand the future contexts where reuse may be needed. | Occurrence |
| Reduce time to market | Our velocity keeps dropping. It takes forever to implement even a simple change and test it, and we don’t know what is causing the delays. | Teams do not create sufficient documentation or follow many of the standard processes. | Past the tipping point |

Identify Questions About the Source Code

Your business goals and position on the technical debt timeline will inform the specific questions and concerns about your source code. Let’s keep building on our example. The pain experienced by the Phoebe project in the context of its business goal led the team to ask key questions about the system and consequently its source code: “Where is the maintenance cost being spent? How do we trace symptoms such as defects to the codebase?” Table 5.2 provides the driving questions for the source code analysis of Phoebe’s business goals from Table 5.1.

Table 5.2 Common questions for source code analysis

| Business Goal | Pain Point | Driving Analysis Questions |
|---|---|---|
| Create an easy-to-evolve product | Our maintenance and evolution costs are increasing. Developers are new to the project and say we have spaghetti code, resulting in an increased number of defects. We need to understand the extent of the problem before taking any action. | |
| Increase market share | Customers have started switching services. We have had at least two security breaches in the past six months. We keep patching things up, but we need to step back and understand what is going on in the codebase. More security breaches could result in further loss of business. | |
| Reduce development costs | If we reuse this piece of software, we anticipate reducing our development time, which is currently quite lengthy, but we are not sure if we will incur technical debt in the future if we go forward with the reuse strategy. | |
| Reduce time to market | Our velocity keeps dropping. It takes forever to implement even a simple change and test it, and we don’t know what is causing the delays. | |

Team Phoebe needs to collect two categories of data to answer the driving analysis questions. One is code measurement criteria that can be evaluated with code analysis. The other is symptom measures, such as the number of defects or lingering issues and the time spent resolving such issues and adding new functionality, which can be obtained from solid issue-tracking procedures, along with configuration management and code check-in/check-out procedures. The team can now correlate the results of the code analysis with the symptom measures by answering questions such as these:

How much time have we spent patching vulnerabilities?

Where in the code are maintenance costs increasing during development?

How are defects related to the areas of the code that are causing increased maintenance?

How often do developers change these areas of the system?

In how many places in the code do the developers need to implement changes?

How many change requests are developers able to complete per sprint/iteration? How long does each one take, including testing?

Where in the codebase do the developers spend most of their time?

These examples suggest that the kinds of questions that code analysis can help the team answer are often related to modifiability, maintainability, and secure coding. There may be other related concerns; for example, to enable reusability, the team may consider moving to decoupled microservices. Static analysis results alone would not return enough information to assess that approach, but they could provide input to the decision-making process.
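As a minimal sketch of how a team might correlate code analysis results with symptom measures, the following Python function tallies static-analysis issues, defects, and changes per module and ranks the modules. The input format, the ranking order, and the Reporting module are assumptions made for illustration; AdapterCore and CoreLibrary are borrowed from the Phoebe example. In practice the tallies would come from whatever exports your analyzer, issue tracker, and version control system provide.

```python
from collections import Counter


def rank_hotspots(issue_modules, defect_modules, change_modules):
    """Combine three per-module tallies and rank modules by pain.

    Each argument is an iterable of module names: one entry per
    static-analysis issue, per defect, and per committed change.
    """
    issues = Counter(issue_modules)
    defects = Counter(defect_modules)
    changes = Counter(change_modules)

    rows = [
        {"module": m, "issues": issues[m], "defects": defects[m], "changes": changes[m]}
        for m in set(issues) | set(defects) | set(changes)
    ]
    # Most defects first, then most changes, then most issues; name breaks ties.
    rows.sort(key=lambda r: (-r["defects"], -r["changes"], -r["issues"], r["module"]))
    return rows


if __name__ == "__main__":
    # Hypothetical tallies exported from the analyzer, issue tracker, and version control.
    ranked = rank_hotspots(
        issue_modules=["AdapterCore", "AdapterCore", "Reporting"],
        defect_modules=["AdapterCore", "CoreLibrary"],
        change_modules=["CoreLibrary", "CoreLibrary", "AdapterCore"],
    )
    for row in ranked:
        print(row)
```

Sorting by defects before issue counts is a deliberate choice in this sketch: it keeps the symptom the business cares about in front and treats analyzer findings as supporting evidence.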

User-observable operational issues, such as frequent crashes, and unintended functional results may also prompt code analysis for evaluating design fitness. Some examples of checking for design fitness for operational concerns include analyzing memory management, data flow, exception handling, performance, and security. While limited, static analyzers do have analysis rules that check for design fitness. Examples include the following:

Exception classes should be immutable (performance and security)

NullPointerException should not be explicitly thrown (performance and security)

The user interface layer shouldn’t directly use database types (enforce Model-View-Controller pattern)

Avoid the Singleton pattern (improve testability)
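To make the idea of a design fitness rule concrete, here is a rough Python sketch of the layering rule from the list above. It scans the text of a Java source file and reports database imports in user interface code. This is a text-based approximation and not how any particular static analyzer implements its rules; the package prefix `com.example.ui` and the choice of `java.sql` and `javax.sql` as the "database types" are assumptions for this example.

```python
import re

# Match "import x.y.Z;" and "import static x.y.Z.member;" at the start of a line.
IMPORT_RE = re.compile(r"^\s*import\s+(?:static\s+)?([\w.]+)", re.MULTILINE)
PACKAGE_RE = re.compile(r"^\s*package\s+([\w.]+)\s*;", re.MULTILINE)


def layering_violations(java_source,
                        ui_prefix="com.example.ui",
                        forbidden_prefixes=("java.sql.", "javax.sql.")):
    """Return forbidden imports found in a Java source file from the UI layer."""
    match = PACKAGE_RE.search(java_source)
    if match is None:
        return []  # No package declaration, so we cannot place the file in a layer.
    package = match.group(1)
    in_ui_layer = package == ui_prefix or package.startswith(ui_prefix + ".")
    if not in_ui_layer:
        return []  # The rule applies only to user interface code.
    imports = IMPORT_RE.findall(java_source)
    return [name for name in imports if name.startswith(forbidden_prefixes)]
```

A check like this misses fully qualified type names used without an import, which is one reason dedicated analyzers work on the parsed program rather than on raw text.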

Define the Observable Measurement Criteria

By now we hope we have made the point clear: Static analyzers provide useful information, but there is no magical metric or tool for identifying technical debt with code analysis. There are some common maintainability/modifiability threads among business goals and concerns about source code, as our examples demonstrate, but one-size-fits-all measurement criteria for driving business goals do not exist. The choice of technology and development language, itself a consequence of the business goals, also influences the measurement criteria. Therefore, a development team should determine the measures that will help members analyze a system in light of the analysis questions the team generated.

If your source code is messy, then you are probably paying a lot of recurring interest. Recurring interest occurs in the form of added time to implement new features or test the system, added complexity that results in increased maintenance costs, and system structure and behavior that are hard to understand and explain. In such cases, there is no one area that you can scope as the location where the debt resides, but overall the code has become too brittle. Creating concrete technical debt items helps you focus on the problem and document supporting evidence as you apply different kinds of analysis, be it tool-supported code analysis, architecture and design reviews, or infrastructure monitoring metrics. We will discuss the latter in subsequent chapters.

Table 5.3 associates symptom measures and code measurement criteria with the Phoebe project’s business goals to better elaborate the pain points and driving analysis questions. The quality measures provide a means to measure the pain and to check whether the symptoms are decreasing as the Phoebe team makes changes to repay the debt and improve the system. Conducting the source code analysis against measurement criteria associated with business goals will generate an initial list of candidate technical debt items.

Table 5.3 Examples of symptom measures and code measurement criteria

| Business Goals | Symptom Measures | Code Measurement Criteria |
|---|---|---|
| Create an easy-to-evolve product | Defect trends (new defects per iteration, defects lingering over multiple iterations) | |
| Increase market share | Security bug trends; amount of time spent patching | |
| Reduce development costs | Propagation of change | |
| Reduce time to market | Changing velocity | |
| Improve governance | Potential effort spent per violation | |

These are examples of starting points to help you recognize how multiple sources of information are related to each other. One theme that emerges from these examples is that unmaintainable code can result in declining development efficiency. The development team needs to ensure that members minimize accidental complexity in order to manage the system with minimal unintentional technical debt and keep the codebase understandable.

The devil is in the details. Writing clean, understandable, and well-thought-out code is every team member’s responsibility. Integrated development environments, automated code review, and unit testing software as well as static code analyzers have increasing capabilities to assist developers in writing high-quality code. Improving the capabilities of these tools is an ongoing challenge for the software industry, especially in minimizing false-positive rates and warning messages and making it easy for teams to incorporate them into their day-to-day development activities.

Select and Apply an Analysis Tool

System quality goals for the Phoebe project include minimizing new defects at each iteration and the amount of time existing defects linger on the backlog. Accordingly, the team established code quality criteria that included industry standards for writing maintainable code and avoiding code complexity. However, despite these actions, the team found itself struggling with unresolved defects, in particular hard-to-trace defects in the code. According to the developers, one cause of messy code is the tendency to copy and paste blocks of code. To avoid this practice, the team had begun implementing a new packaging scheme, but the developers suspected that it wasn’t fully in place yet.

To help evaluate the code quality, the Phoebe team selected SonarQube as its static analysis tool because it is open source, has a community to address developer questions, has a reasonably well-established rule set for Java, and incorporates maintainability as well as security measures. SonarQube can detect duplicate code blocks and the presence of packaging schemes. Developers’ knowledge of the areas of messy code helped them configure the tool to run on these areas of interest. The code measurement criteria also helped them configure the rules and set their priority.

As the team interpreted the analysis results, a closer look revealed that about one-fourth of the 13,417 issues were related to duplicate code blocks around adapters. This observation overlapped with the developers’ observation about spaghetti code that was hard to understand. Using the navigation features of the tool, the developers located the areas in the code that were impacted most severely. The results also showed a large number of empty Java packages; while such issues are usually minor, they significantly increase the recurring interest on the debt because they increase the software footprint and reduce the clarity of the system. The developers also mapped these areas to the number and kinds of defects they had observed.
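The kind of triage described above can be sketched in a few lines of Python. The sketch assumes the issues have been exported as a list of records with a `rule` and a `component` field; both field names and the rule names in the usage note are hypothetical and are not SonarQube rule keys or an actual export format.

```python
from collections import Counter


def summarize_issues(issues):
    """Return (rule, count, share of total) tuples, most frequent rule first."""
    total = len(issues)
    by_rule = Counter(issue["rule"] for issue in issues)
    return [(rule, count, count / total) for rule, count in by_rule.most_common()]


def components_for_rule(issues, rule, top=3):
    """Return the components with the most issues for one rule."""
    hits = Counter(issue["component"] for issue in issues if issue["rule"] == rule)
    return hits.most_common(top)
```

Calling `summarize_issues` first shows which rules dominate the list, such as a hypothetical `duplicated-block` rule accounting for a quarter of the findings, and `components_for_rule` then points to where in the codebase those findings cluster.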

Document the Technical Debt Items

Once the development team has generated the initial data, the next step is to ensure that members record the relevant results as technical debt items so that they can start managing them. This is the role of the technical debt registry, which can be any tool already used by the project: an issue tracker, a defect tracker, or a backlog management tool. The team should take two actions:

Document existing technical debt and create a strategy for paying it back.

Address how to ensure that the team does not inject new debt into the source code so no one has to deal with this many thousands of issues again.

These actions require establishing and enforcing some development practices. Here we focus on documenting the existing technical debt items. Later, we summarize development practices that minimize unintentional technical debt in the code.

The Phoebe team wondered if it should look at all 13,417 issues tagged as techdebt. Or should the team focus only on the blockers, which total 155 items? Or should it also include the major and critical issues in the registry? Team Phoebe started this analysis of the source code to see if any of the findings would overlap with the increasing defects and maintenance costs. The team recognized that the duplicate code and empty Java packages contributed significant amounts of recurring interest in the form of decreased understandability and small but annoying defects that ripple through the duplicated code snippets.

The team decided to introduce two major technical debt items into the registry: remove empty Java packages (see Table 5.4) and remove duplicate code (see Table 5.5). The experienced developers on the team also recognized that while they uncovered these technical debt items through a static code analysis of their codebase, resolving them would probably require some architectural thinking and analysis. For example, rather than duplicating the code, they would need to think about a common service that could be invoked.

Table 5.4 Techdebt on empty packages from the registry of the Phoebe project

| Name | Phoebe #345: Remove empty Java packages |
|---|---|
| Summary | The re-architecting of the source code to support multiple adapter specifications has introduced a new Java packaging scheme. Numerous empty Java package folders are present across multiple projects. |
| Consequences | No impact to functionality; however, may lead to confusion for users implementing enhancements or modifications to the source code. |
| Remediation approach | Using SonarQube, the team identified the empty packages. New and existing classes have been moved into new package folders; however, the previous package folders have been left in place with no class files. Cleaning up these packages should be trivial and ensure that there are no unintended calls left. |
| Reporter/assignee | A composite technical debt item as a result of our SonarQube analysis retrospective. Will be assigned to the Adapter team. |

Table 5.5 Techdebt on duplicate code

| Name | Phoebe #346: Remove duplicate code |
|---|---|
| Summary | AdapterCore and CoreLibrary grew organically with a lot of copy/paste code, resulting in over 40 blocks of code duplicated within each of the modules in these subsystems. |
| Consequences | No immediate impact to functionality; however, every time a change needs to be made, several small defects are injected due to the inability to propagate the changes to the blocks of code duplicated. |
| Remediation approach | See the results of the SonarQube analysis to identify the classes. The remediation will need to include a re-architecting effort and possibly introduce a factory class to handle the common functionality across the duplicated blocks. This should have been undertaken with the adapter architecture changes. |
| Reporter/assignee | A composite technical debt item as a result of our SonarQube analysis retrospective. We will have to postpone this to the next sprint as the effort involved is higher than we anticipated. |
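If your registry tool allows custom fields or scripted checks, the fields used in Tables 5.4 and 5.5 can be captured in a small record type. The Python sketch below is one possible shape, not a prescribed schema; the class name and the `missing_fields` helper are our own inventions for illustration.

```python
from dataclasses import dataclass


@dataclass
class TechnicalDebtItem:
    """One registry entry, mirroring the fields of Tables 5.4 and 5.5."""
    name: str
    summary: str
    consequences: str
    remediation_approach: str
    reporter_assignee: str

    def missing_fields(self):
        """Return the names of fields left blank, so vague entries are caught early."""
        return [field for field, value in vars(self).items() if not value.strip()]
```

An entry such as “Resolve technical debt based on the results of the static analysis” would have little to say in most of these fields, which is a quick signal that the item is not yet well defined.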

The team members recognized that the accrued cost of removing the empty packages was currently low, but it could increase over time if developers started adding code, creating a drift between the implementation and the initial architecture of the system. Removing the empty Java packages took care of about 250 of the issues returned. Once the packages were removed, several hundred minor issues also disappeared. Alternatively, the team could have elected to exclude these packages from the source code analysis. In this case, however, including them helped the Phoebe team recognize a recurring interest that it had been paying every sprint, in addition to complexities arising from the unnecessarily increased deployment footprint of the system.
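Empty packages are also easy to look for without an analyzer. As a rough sketch, the following Python function walks a source tree and lists leaf directories that contain no `.java` files. It assumes the conventional layout in which each package is a directory, and it deliberately ignores directories that only contain other directories; it is an illustration of the check, not the analysis SonarQube performed for the Phoebe team.

```python
import os


def find_empty_packages(source_root):
    """Return leaf package directories under source_root with no .java files.

    Paths are returned relative to source_root, in sorted order.
    """
    empty = []
    for dirpath, dirnames, filenames in os.walk(source_root):
        if dirpath == source_root:
            continue  # The source root itself is not a package.
        has_java = any(name.endswith(".java") for name in filenames)
        if not has_java and not dirnames:
            empty.append(os.path.relpath(dirpath, source_root))
    return sorted(empty)
```

Before deleting anything the function reports, it is worth confirming that no build script or configuration file still refers to the old package names.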

Dealing with duplicate code is not as simple as removing empty packages because the remediation strategy requires architecting a new solution to pay the current principal. To address the accruing interest, Phoebe’s development team recognized that it needed to retrofit a significant number of classes with duplicate blocks, which introduces a risk and adds time commitment, especially in testing. Therefore, the remediation approach field emphasizes that the team needs to conduct further re-architecting.

A large percentage of the major violations that the tool reported are related to how exceptions are handled, how errors are logged, and how comments and commented-out code are handled. These violations signaled to the Phoebe project manager that the development team needed a reminder about using development practices that avoid introducing unintentional debt, in particular by focusing on good software craftsmanship and understanding of software design.

Then Iterate

Following the process for identifying technical debt items that the Phoebe team demonstrated, team members decided to understand the complexity of the system first. The business goal and questions about the source code that they struggled with were related to increasing defects as a consequence of spaghetti code. So, they decided to analyze the structure of their code as well as its quality with the help of static code analyzers and prioritize what areas would need to be remediated. They did this prioritization based on the areas that were evolving the most and where they observed the most defects.

When you complete a first analysis iteration, the goals and frequency of subsequent iterations should become clear. You might learn that analysis for security and analysis for maintainability require different analysis questions, criteria, and tools, so you might conduct these analyses in two different iterations of the technical debt analysis. In another scenario, once you identify the technical debt items, you can decide on a frequency of analysis to ensure that similar code quality issues do not accumulate, in which case you may only apply the tool and document the issues.

When the software undergoes major changes or when business goals change, it makes sense to iterate all the activities to align the analysis process with the new software or new business goals. If you are intentionally taking on technical debt, then you are motivated to optimize your effort for a business goal anyway. Consequently, the measurement criteria derive from the intentional design decisions that lead to technical debt. Having observable measures within the code that map to the design decisions allows proactive management of technical debt within the code.

What Happens Next?

After selecting analysis criteria, running tools, and inspecting the code, you probably have a handful of technical debt items in your technical debt registry. The process for identifying technical debt items also assumes that you are performing the analysis in retrospect. The registry does not answer questions about the overall assessment of the system’s technical debt, but individually the items do address problematic areas. At this point, you can take two courses of action:

Address each technical debt item in isolation through local refactorings within an iteration/sprint boundary.

Consider the dependencies between the technical debt items. Larger projects may require some planning across iteration boundaries.

Refactoring is the process of restructuring existing code without changing its external behavior. Depending on the nature of each technical debt item, refactoring the code locally without inducing architectural change may be the best strategy for removing the debt. To determine the most cost-efficient approach to paying back your debt, the technical debt description provides a starting point for estimating the cost of each technical debt item in isolation. The consequences identified help you understand the recurring interest. The analysis of change provides input about accruing interest. Using the following simple formula, assess each item over several iterations:

Recurring interest (consequence) + Accruing interest (propagating cost of change) × Probability of high-cost change scenarios

In this formula, the greater the probability of change, the higher the total cost of debt. When deciding whether to pay down debt, compare the cost of the impact of different change scenarios. If the technical debt items do not have the potential to cause ripple effects or if they have no dependencies on other items, the approach of focusing on one item at a time might work. We elaborate this first course of action in Chapter 8, “Costing the Technical Debt.”
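A small worked example may help. The Python sketch below applies the formula to two items, reading it with the usual operator precedence so that the probability scales only the accruing interest; that reading is our assumption. The numbers are invented estimates in person-days per iteration and are not data from the Phoebe project.

```python
def cost_of_debt(recurring_interest, accruing_interest, change_probability):
    """Recurring interest + accruing interest x probability of high-cost change."""
    if not 0.0 <= change_probability <= 1.0:
        raise ValueError("change_probability must be between 0 and 1")
    return recurring_interest + accruing_interest * change_probability


# Hypothetical estimates in person-days per iteration.
duplicate_code = cost_of_debt(recurring_interest=4, accruing_interest=20, change_probability=0.25)
empty_packages = cost_of_debt(recurring_interest=1, accruing_interest=2, change_probability=0.5)
print(duplicate_code, empty_packages)  # 9.0 2.0
```

With these made-up inputs, the duplicate code item costs more per iteration even though a high-cost change is less likely, because its accruing interest is so much larger. Re-estimating the inputs over several iterations shows whether the gap is widening.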

However, software development is rarely that simple. More often than not, you will have to treat the technical debt items in reference to each other. Therefore, the second approach requires a more elaborate design and traceability analysis to assess the dependencies within the system boundaries as well as the technical debt items. We tackle this second course of action in Chapter 9, “Servicing the Technical Debt.”

What Can You Do Today?

Now that you know how to use source code to recognize technical debt, you can begin looking beyond external quality issues such as defects and recognize when you have internal code quality issues that may require you to deal with technical debt. Start by conducting these activities:

Understand the business context to guide the use of source code as input for technical debt analysis.

Acquire and deploy in your development environment a static code analyzer to detect code-level issues.

Analyze the code for the presence of unintentional technical debt and respond by including debt items in the technical debt registry.

As an example, the Phoebe project realized the importance of maintainability for a system. Lack of maintainability and technical debt are not the same thing, but unmaintainable code will have a lot of unintentional technical debt. It is never too late to make maintainability a non-negotiable software design principle for your projects.

For Further Reading

There are several guidelines and standards for code quality. The ISO/IEC 25010:2011 System and Software Quality Models standard summarizes quality characteristics, internal metrics (metrics that do not rely on the execution of the software), and external metrics (those applicable to running software) (ISO/IEC 2011).

The Consortium for IT Software Quality (CISQ) publishes standards for automated measures of quality characteristics in security, reliability, performance efficiency, and maintainability. These standards are a concrete place to start defining the code measurement criteria related to your quality attributes.

The CERT Division of the Carnegie Mellon University Software Engineering Institute has published secure coding criteria for C/C++ and Java that have become an industry standard (SEI 2018).

A book by Visser and his colleagues (2016), Building Maintainable Software, has successfully simplified the otherwise complex and ubiquitous problem of maintainability to ten guidelines, with examples in C# or Java. The book reflects the decades-long experience of the Software Improvement Group in the Netherlands in assessing software projects.

In addition, the industry has developed methods to improve automation of software quality. For example, Software Quality Assessment based on Lifecycle Expectations (SQALE) is a method developed by Inspearit, Inc., that identifies code violations based on a categorization of testability, reliability, changeability, efficiency, security, maintainability, portability, and extensibility. The method creates an assessment on technical debt reduction based on fixing these issues (Letouzey 2016; Letouzey & Ilkiewicz 2012).

Static code analysis is widely used to assess technical debt. Two examples are work by Arcelli-Fontana and colleagues (2015) and Zazworka and colleagues (2014). They show how to analyze for code smells that can lead to technical debt and summarize similar challenges of using the existing tools, as discussed in this chapter.