Event-driven architecture (EDA) requires a quite novel mind-set when it comes to application design and IT architecture. The goal of this chapter is to get you started on “thinking EDA” as you approach your architectural and application development work. Luckily for you, some of the groundwork for thinking in EDA terms has already been covered with the advent of object-oriented programming and the Web. However, old habits die hard, and thinking in EDA terms typically means erasing a lot of conventional software architecture concepts from your mind. When you think EDA, you want to reduce central control of application flow. You need to enable autonomous behavior in application components and allow for asynchronous parallel execution. You must design for unanticipated use whenever possible. And, in what might be the greatest challenge to conventional thinking, you must enable the event itself to carry its own state information. At the end of this chapter, we look at some situations where EDA is not the answer, and show how thinking EDA sometimes means not doing EDA at all. We also take a look at an example of an existing commercial EDA suite and show how thinking EDA is now being baked into standard industrial offerings.

If you’ve watched TV in the last 40 years, you might understand that CONTROL is trying to save the world from KAOS (aka “Chaos”). So it is in IT as well, and we all need to “get smart” to combat chaos, although we might need less control than we thought. Unlike Agents 86 and 99 in the classic TV show, as aspiring EDA professionals, we should aspire to less control. However, even as we release the brakes on control, we will never yield to chaos.

The key to thinking EDA is to reduce your reliance on central control of applications. Reducing central control is as much a change in your state of mind about application design and architecture as it is a change in the way that you design and architect applications in practical terms. To get to the point where you have reduced central control enough to realize a functioning EDA, you must take several steps. First, you have to reduce the presence of actual controllers themselves and replace them with trusted executors that function as elements of an EDA. These EDA elements must then be able to function autonomously and operate asynchronously in parallel. They must be designed for unanticipated use (to the greatest extent possible) and deployed with minimal dependencies on one another. Each of these factors is highly relevant to putting together a real EDA. Let’s take a look at each one and improve our understanding of how to make it all happen.

Controllers are software elements that maintain state and control process flow. They can be discrete applications, a part of a larger application, or even the enterprise application integration (EAI) suite controlling multiple system interactions. What is significant about controllers is their assigned duty of imposing control over a sequence of programming activities.

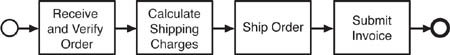

For example, suppose you have an order entry process that contains four business process steps, as shown in Figure 6.1. If you were developing an application to manage this order entry process, your first thought might be to create a controller that manages the sequential flow. The controller would receive the new order, verify the data, and then call out to the shipping system to calculate shipping charges. Upon completion, it would call the warehouse system to pack the order and then call the financial system to bill the customer.

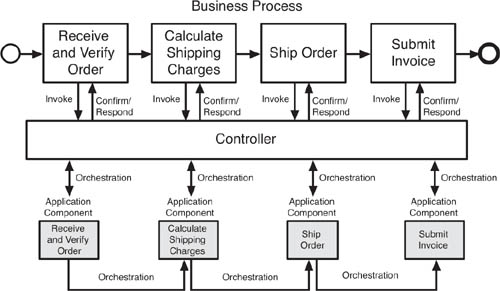

The conventional, controller-based approach to executing the New Order business process, as shown in Figure 6.2, is fine, but also contains a number of execution constraints. Changes in the application functions that affect order entry might require changes in the controller, and vice versa. The execution of the functional steps in order entry is reliant on the controller.

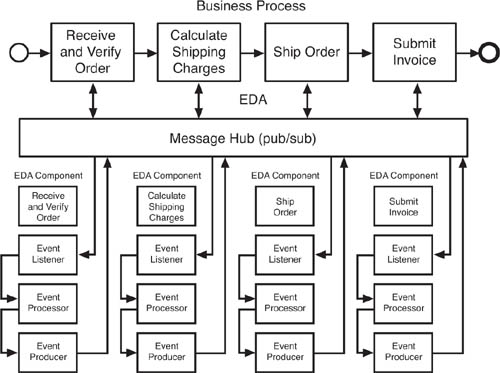

Let’s try to think EDA in this order entry process. Thinking EDA, shown in Figure 6.3, helps us envision that each step in the process can be carried out through trusted executors. The application function corresponding to each business process step could act upon detection of an event trigger. Each function would have an event producer, event listener, and event processor. Each application component listens for its particular event. The New Order component receives the new_order_received event, verifies the information, changes the event type to order_verified, and pushes it to the bus. The shipping component receives the event, processes it, adds the shipping charges to the event data, changes the event type to order_charges_calculated, and pushes it to the bus. The warehouse system receives the event, processes it, changes the event type to order_shipped, and pushes it to the bus. Finally, the financial system receives the event, processes it, changes the event type to order_billed, and pushes it to the bus.
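The flow just described can be sketched in a few lines of Python. This is a minimal illustration, not a real ESB: the `Bus` class, the `make_component` helper, the event fields, and the flat shipping rate are all assumptions made for the example. Only the event type names come from the text.

```python
from collections import defaultdict


class Bus:
    """Tiny in-memory stand-in for an event bus (hypothetical, not a real ESB API)."""

    def __init__(self):
        self.subscribers = defaultdict(list)  # event type -> handlers
        self.history = []                     # every event type published, in order

    def subscribe(self, event_type, handler):
        self.subscribers[event_type].append(handler)

    def publish(self, event):
        self.history.append(event["type"])
        for handler in list(self.subscribers[event["type"]]):
            handler(dict(event))  # each listener receives its own copy


def make_component(bus, listens_for, emits, process):
    """Wire an event listener, processor, and producer for one process step."""

    def on_event(event):
        process(event)         # event processor: act on or enrich the data
        event["type"] = emits  # change the event type
        bus.publish(event)     # event producer: push it back to the bus

    bus.subscribe(listens_for, on_event)


def verify(event):
    event["verified"] = True


def calc_shipping(event):
    event["shipping_charge"] = 5.00 * len(event["items"])  # made-up flat rate


def pack_and_ship(event):
    event["shipped"] = True


def bill(event):
    event["billed_total"] = event["subtotal"] + event["shipping_charge"]


bus = Bus()
make_component(bus, "new_order_received", "order_verified", verify)
make_component(bus, "order_verified", "order_charges_calculated", calc_shipping)
make_component(bus, "order_charges_calculated", "order_shipped", pack_and_ship)
make_component(bus, "order_shipped", "order_billed", bill)

completed = []
bus.subscribe("order_billed", completed.append)

bus.publish({"type": "new_order_received", "order_id": "Y",
             "items": ["widget", "gadget"], "subtotal": 40.00})
```

No component calls another directly. Each one knows only the event type it listens for and the event type it emits, and the order of steps emerges from those subscriptions.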

The simple scenario shows how the EDA approach exposed key data that was buried in the controller model. To be clear, although this approach might require additional work to set up the first time around, thinking EDA should confer a number of architectural benefits right away and down the line as well. For example, modifying the business process for order entry becomes simpler in the EDA approach. Analysis and monitoring of the process can also happen without disrupting the design. Parallel processing becomes easier. For simplicity’s sake, we did not execute multiple steps in parallel, though we could easily have run the tasks that calculate the shipping cost and ship the order in parallel.

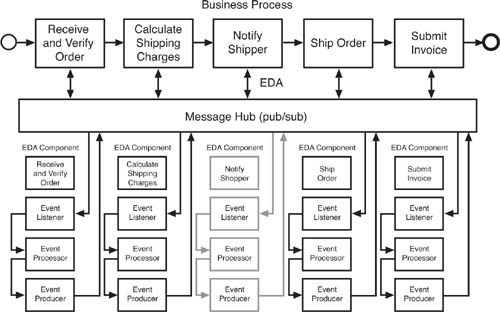

Let’s take this EDA example and demonstrate how modifying the process has become easier. In Figure 6.4, we have added the Notify_Shipper component. The Notify_Shipper component will take advantage of the exposed state change event order_charges_calculated, process the data, and then notify the shipper with an advanced notice of the demand. Adding the new functionality was as simple as adding a new component. The addition of the new component didn’t disrupt the remaining components nor the flow. We can also turn off the new functionality just as easily without impacting the flow. In contrast, adding this new functionality within the central controller model would have been tough and would have required downstream regression testing, downtime, deployment coordination, and the like.
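As a rough sketch of why this change is cheap, the snippet below attaches and later detaches a Notify_Shipper listener without touching the warehouse listener. The module-level `subscribe`, `unsubscribe`, and `publish` functions and the event fields are hypothetical stand-ins for whatever bus is actually in use.

```python
from collections import defaultdict

subscribers = defaultdict(list)  # event type -> list of handlers


def subscribe(event_type, handler):
    subscribers[event_type].append(handler)


def unsubscribe(event_type, handler):
    subscribers[event_type].remove(handler)


def publish(event):
    for handler in list(subscribers[event["type"]]):
        handler(dict(event))  # each listener gets its own copy


shipped, notices = [], []


def warehouse(event):
    shipped.append(event["order_id"])


def notify_shipper(event):
    notices.append(f"Advance notice: order {event['order_id']}, "
                   f"charge {event['shipping_charge']}")


def charges_event(order_id):
    return {"type": "order_charges_calculated",
            "order_id": order_id, "shipping_charge": 10.0}


subscribe("order_charges_calculated", warehouse)
publish(charges_event("A1"))                                # original flow only

subscribe("order_charges_calculated", notify_shipper)      # switch the feature on
publish(charges_event("A2"))

unsubscribe("order_charges_calculated", notify_shipper)    # switch it off again
publish(charges_event("A3"))
```

The warehouse listener processes all three orders the same way, whether or not the new component is attached.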

Thinking EDA by reducing your dependency on a central controller requires that you also maintain state and process information within the events themselves. In EDA, state is not centrally maintained but is carried throughout the system by the events. EDA components are inherently stateless because they carry state in their messaging. We realize this sounds like a contradiction—how could something carry state but be stateless at the same time? Yet, that is just it: By carrying state, the EDA component does not rely on the central controller for its state. This is the essence of getting an EDA to work in real life. The events must carry their own state information to break free of the central controller.

Right now you might be thinking, “Hold on a second. Without the controller, nothing ensures successful order processing.” EDA architectures rely heavily on trust, but that does not mean monitoring isn’t implemented. You actually have more ways to ensure the process executed than before. Each new order event can trigger a watcher component to monitor for the order_billed event. The sole purpose of this component could be to wait for the order_billed event and change the event to order_complete. If it doesn’t receive the event after a predetermined time, the watcher can send an alert. The alert might flow to an event listener that flags the time lag and forwards it to an event processor for correction or to a person who can intervene. Also, monitoring tools can be used to watch for stale events on the bus, monitor event volumes, or look out for component faults.
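One way such a watcher could look is sketched below. The `OrderWatcher` class, the `order_stalled_alert` event type, and the practice of passing the current time in explicitly (which keeps the example deterministic) are assumptions for illustration. A production watcher would more likely be driven by a scheduler or by the bus itself.

```python
class OrderWatcher:
    """Watches for order_billed within a deadline after new_order_received."""

    def __init__(self, timeout_seconds):
        self.timeout = timeout_seconds
        self.pending = {}  # order_id -> time the order was first seen

    def on_event(self, event, now):
        """Feed bus events here; returns an order_complete event or None."""
        if event["type"] == "new_order_received":
            self.pending[event["order_id"]] = now
        elif event["type"] == "order_billed":
            if self.pending.pop(event["order_id"], None) is not None:
                return {**event, "type": "order_complete"}
        return None

    def check(self, now):
        """Return alert events for orders that have waited longer than the timeout."""
        overdue = [oid for oid, seen in self.pending.items()
                   if now - seen > self.timeout]
        for oid in overdue:
            del self.pending[oid]  # alert once, then stop tracking
        return [{"type": "order_stalled_alert", "order_id": oid} for oid in overdue]


watcher = OrderWatcher(timeout_seconds=60)
watcher.on_event({"type": "new_order_received", "order_id": "Y1"}, now=0)
watcher.on_event({"type": "new_order_received", "order_id": "Y2"}, now=5)
done = watcher.on_event({"type": "order_billed", "order_id": "Y1"}, now=30)
alerts = watcher.check(now=120)  # Y2 was never billed
```

The watcher holds only the minimum state it needs (which orders are outstanding) and drops each entry as soon as the order completes or an alert is raised.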

This decoupling might actually undo a secondary benefit of a central controller. Because the decoupling of components eliminates preconception, it also makes it more difficult (in the absence of very good documentation) to understand exactly how a process functions. A central controller, whatever its other drawbacks, shows in one place how otherwise siloed process steps fit together. This isn’t a technology problem as much as it is a process/organizational/business problem, but it’s a good idea to be aware of the potential issues.

In keeping with our heavy reliance on caveats, though, we need to temper our enthusiasm for thinking EDA slightly at this point. We do need to point out that shifting away from the central control model can introduce some complexity to the design of the EDA components. The components must carry event state. And, the components might need to be configured to listen for new event triggers when new process steps and components have been added to the process flow. In our example, if you need to insert a new event processor ahead of an existing one (for example, if you need to have Notify Shipper before Shipping Order), you must either change Shipping Order to respond to a different event (Notification Sent) or block it from executing until Notification is sent. These kinds of requirements might make moving to EDA unappealing, depending on the nature of your process flows and the way your application developers like to work.

It is also possible, today, to get some of the same benefits of decoupled business process components using workflow orchestration engines, such as Microsoft BizTalk or Oracle BPEL, as flexible controllers that allow easy plug in of stateless application components. This is an overlap between the EDA approach and the emerging service-oriented architecture (SOA)-based business process modeling approach that is gaining in popularity. We note this overlap because we believe that non-EDA approaches are often preferable to EDA based on many specific requirements in a particular situation. We never think that EDA is always the best way to go. The good news is that you have a growing number of choices in today’s market.

Events carry current state data. Perhaps the best way to understand this is to contrast it with the alternative, which is to preserve state in the central controller. In our shipping example, under the centrally controlled approach, the controller would contain the state of the transaction. For instance, the controller would know that Customer X needs shipping for Order Y to Address Z and pass this information along to the application components it needed to process the order. In this example, the controller might send a procedure call to ShipOrder that contains Customer X, Order Y, and Address Z. Upon shipment, the ShipOrder component would send an acknowledgement back to the controller that said, simply, “Order Y, Shipped.” The controller would then send a procedure call to SubmitInvoice that asked for Customer X to be invoiced for Order Y.

In contrast, when thinking EDA, you store process information in the event. Process flow information can be removed from the controller and stored in the event. This allows EDA components to change the flow if the context changes midstream. In the EDA approach, each component would carry the full event state in its messaging. Each component would know that it was Customer X, Order Y, and Address Z. To contrast with the central controller model, for example, instead of the ShipOrder component sending an acknowledgement to the controller that said, “Order Y, Shipped,” it would send an Extensible Markup Language (XML) message to the enterprise service bus (ESB) that said, “Customer X, Order Y, Shipped to Address Z.” This might seem like a lot of extra work, but there is a very good reason for the event components to carry this kind of state information. Doing so helps decouple them and lets them operate autonomously. That way, when you add a new component, like NotifyShipper, it can operate fully without reliance on a central controller because it picks up all the needed event state information from the CalculateShippingCharges component, and it can forward the shipping information to the ShipOrder component without any need for central control. The result is an increase in flexibility in modifying the application.
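To make the idea concrete, here is a small sketch of an event that carries its own state as XML. The element names, the `build_event` helper, and the event type strings are invented for illustration. The text does not prescribe a schema.

```python
import xml.etree.ElementTree as ET


def build_event(event_type, customer, order, address):
    """Serialize an event that carries the full transaction state."""
    root = ET.Element("event", {"type": event_type})
    ET.SubElement(root, "customer").text = customer
    ET.SubElement(root, "order").text = order
    ET.SubElement(root, "address").text = address
    return ET.tostring(root, encoding="unicode")


def ship_order(xml_in):
    """ShipOrder reads everything it needs from the incoming event itself."""
    incoming = ET.fromstring(xml_in)
    # ... the actual shipping work would happen here ...
    return build_event("order_shipped",
                       incoming.findtext("customer"),
                       incoming.findtext("order"),
                       incoming.findtext("address"))


charges_event = build_event("order_charges_calculated", "X", "Y", "Z")
shipped_event = ship_order(charges_event)
```

Because the outgoing message repeats the customer, order, and address, any listener added later can act on it without asking a controller what the transaction was about.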

EDA is autonomous behavior. When thinking EDA, strive to produce stateless, autonomous components. Think about breaking down sequential steps into multiple parallel executions. In many situations, these actions must be joined to continue processing. These cases might require a composite event processing component or a complex event processing agent. Either component will have to maintain a state while waiting for both actions to complete. The component should then combine the events and return to a stateless condition.
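A join of two parallel branches might be sketched as follows. The `JoinAgent` class and the `order_packed` and `order_ready_to_ship` event types are hypothetical. The point is only that the component holds state while it waits and discards it once both events have arrived.

```python
class JoinAgent:
    """Holds partial results only until every required event has arrived."""

    def __init__(self, required_types, emits):
        self.required = set(required_types)
        self.emits = emits
        self.waiting = {}  # order_id -> {event_type: event}

    def on_event(self, event):
        if event["type"] not in self.required:
            return None
        parts = self.waiting.setdefault(event["order_id"], {})
        parts[event["type"]] = event
        if set(parts) != self.required:
            return None                        # still waiting for the other branch
        del self.waiting[event["order_id"]]    # return to a stateless condition
        combined = {}
        for part in parts.values():
            combined.update(part)              # merge the data from both branches
        combined["type"] = self.emits
        return combined


join = JoinAgent({"order_charges_calculated", "order_packed"},
                 emits="order_ready_to_ship")
first = join.on_event({"type": "order_packed", "order_id": "Y", "boxes": 2})
second = join.on_event({"type": "order_charges_calculated", "order_id": "Y",
                        "shipping_charge": 10.0})
```

After the second event arrives, `join.waiting` is empty again, so the agent carries no memory of the order it just combined.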

The most important aspect of thinking EDA is designing for unanticipated use. Key data should be appropriately exposed. As seen in our previous example, the best way to do this is to break down the business processing into discrete components. Central controllers tend to hide the relevant state change information that future components might find helpful. The process should be broken down into similar areas of concern and delegated. Event data then travels between these components exposing key business data.

In the previous example, the Notify_Shipper component took advantage of the exposed state change event order_charges_calculated. In that same vein, we could introduce an event wiretap component to analyze the order_charges_calculated events and produce a daily report of which shipper was used the most, was the cheapest, was the highest priced, and so forth. Both of these examples demonstrate the concept of unanticipated use.
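A wiretap of this kind could be as small as the sketch below. It assumes each order_charges_calculated event carries `shipper` and `shipping_charge` fields, which the text does not specify, and it compares shippers by average charge. The shipper names are made up.

```python
from collections import defaultdict


class ShippingWiretap:
    """Passively collects shipping charges seen on the bus for reporting."""

    def __init__(self):
        self.charges = defaultdict(list)  # shipper -> list of charges seen

    def on_event(self, event):
        if event["type"] == "order_charges_calculated":
            self.charges[event["shipper"]].append(event["shipping_charge"])

    def daily_report(self):
        # Assumes at least one event was seen; max()/min() fail on empty data.
        averages = {s: sum(c) / len(c) for s, c in self.charges.items()}
        return {
            "most_used": max(self.charges, key=lambda s: len(self.charges[s])),
            "cheapest": min(averages, key=averages.get),
            "highest_priced": max(averages, key=averages.get),
        }


wiretap = ShippingWiretap()
for shipper, charge in [("FastShip", 12.0), ("BudgetFreight", 6.0),
                        ("FastShip", 14.0), ("PremiumAir", 30.0)]:
    wiretap.on_event({"type": "order_charges_calculated",
                      "shipper": shipper, "shipping_charge": charge})
report = wiretap.daily_report()
```

The wiretap never publishes anything, so adding or removing it has no effect on the order flow.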

To gain a feel for some of the in-depth work you will need to do in realizing an EDA, you need some detail about recommended practices for EDA implementation. As part of this exercise, we introduce you to the term agent, which is sometimes used in the industry as an alternative to describe event listeners, producers, and processors. Although this might be confusing at first, it will hopefully give you some preparation to deal with conversations that use this term.

Agents are responsible for receiving events, filtering, processing, forwarding, and transmitting new events. They are constructed to be self-contained and as self-sufficient as possible, allowing business event processing without outside intervention. They do not directly interact with other agents. However, they might interact with other systems, components, or services. This approach gives the agent collective an extremely agile architecture.

The parallel nature of EDA increases architectural agility by easing refactoring and component deprecation. With EDA, new functionally equivalent components can be plugged into the architecture and executed in unison. An audit of the new agent’s output compared with the deprecated agent will confirm their functional equivalence. And all of this can be done without interference to the enterprise system. We didn’t have to recompile any flow controller or register our new agent’s location. We simply had to attach new Web service components to the desired enterprise service bus.
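The audit step can be approximated with a small comparison harness. The sketch below treats agents as plain functions from event to event. The `audit_equivalence` function and the two shipping agents are hypothetical examples, not part of any product.

```python
def audit_equivalence(deprecated_agent, new_agent, events):
    """Run both agents on copies of the same events and collect any differences."""
    mismatches = []
    for event in events:
        old_out = deprecated_agent(dict(event))
        new_out = new_agent(dict(event))
        if old_out != new_out:
            mismatches.append({"input": event, "deprecated": old_out, "new": new_out})
    return mismatches


def shipping_v1(event):
    """Deprecated agent: mutates and returns its copy of the event."""
    event["shipping_charge"] = 5.0 * len(event["items"])
    event["type"] = "order_charges_calculated"
    return event


def shipping_v2(event):
    """Replacement agent: different implementation, same intended output."""
    charge = sum(5.0 for _ in event["items"])
    return {**event, "shipping_charge": charge, "type": "order_charges_calculated"}


sample_events = [
    {"type": "order_verified", "order_id": "Y1", "items": ["widget"]},
    {"type": "order_verified", "order_id": "Y2", "items": ["widget", "gadget", "gizmo"]},
]
mismatches = audit_equivalence(shipping_v1, shipping_v2, sample_events)
```

An empty `mismatches` list on representative traffic is evidence, though not proof, that the deprecated agent can be retired.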

Of course, there are drawbacks to the parallel paradigm. The three main constraints are lack of flow control (statelessness), indeterminate event processing sequences, and debugging difficulty. In the next section, we see how to counteract these disadvantages by interjecting some SOA into the architecture. However, for now we discuss the larger aspects of the inherent difficulties in managing event-driven architectures.

To facilitate EDA manageability, you should follow two best practices:

Event management

Agent typing

Event management is made up of two practices: mandatory event metadata and event dictionaries. Predefining some elemental mandatory event metadata pays for itself tenfold when it comes to debugging the system. Mandatory event metadata is data that must be present for all events on the bus. For example, event metadata should contain event type, sender, time stamp, causality, unique ID, trace data, and payload. Depending on design requirements, metadata might also require delivery, process, and security attributes. If using Java Message Service (JMS), this metadata can reside in name-value pairs at the message header level, which increases performance because subscribers can filter on it without parsing the message body.
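As an illustration, the mandatory fields listed above could be captured in a single immutable type. The `Event` class below and its `follow_up` helper are assumptions made for this sketch. In particular, representing causality as the ID of the triggering event and trace data as a tuple of ancestor IDs is just one plausible choice.

```python
import time
import uuid
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass(frozen=True)
class Event:
    """Mandatory metadata every event on the bus must carry (illustrative)."""
    event_type: str
    sender: str
    payload: Any
    causality: Optional[str] = None  # unique ID of the event that caused this one
    trace: tuple = ()                # unique IDs of all ancestor events, oldest first
    unique_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    timestamp: float = field(default_factory=time.time)

    def follow_up(self, event_type, sender, payload):
        """Create a successor event that records where it came from."""
        return Event(event_type, sender, payload,
                     causality=self.unique_id,
                     trace=self.trace + (self.unique_id,))


first = Event("new_order_received", "OrderEntry", {"order_id": "Y"})
second = first.follow_up("order_verified", "NewOrder", first.payload)
third = second.follow_up("order_charges_calculated", "Shipping", second.payload)
```

With causality and trace populated on every event, a debugging session can reconstruct the chain that led to any given event from the event alone.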

Agents attach to the bus using subject-based subscriptions to filter unwanted events. This requires an enterprisewide homogeneous event namespace to ensure unique names. Event names should use hierarchical uniform resource identifier (URI) notation. This can be as simple as Control.Category.Event_Type.Event_Name (or, in this example, Production.WarehouseManagement.RFID.PackageReceived). The Control attribute allows similar messages to be released in the same environment without interfering. A control attribute could be set to “Beta” or “Deprecated” and used in conjunction with other systems with minimal impact. The other attributes further define the event. Agents can also use the * wildcard to subscribe to whole categories of events, for example, Production.WarehouseManagement.RFID.*.
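Subject-based filtering with a wildcard can be sketched with the standard library’s `fnmatch` module. The `SubjectBus` class is hypothetical, and real brokers define their own wildcard semantics. Note that `fnmatchcase` lets `*` match across dots, which may be broader than a given product allows.

```python
from fnmatch import fnmatchcase


class SubjectBus:
    """In-memory bus that delivers events by subject pattern (illustrative only)."""

    def __init__(self):
        self.subscriptions = []  # (pattern, handler) pairs

    def subscribe(self, pattern, handler):
        self.subscriptions.append((pattern, handler))

    def publish(self, subject, payload):
        for pattern, handler in self.subscriptions:
            if fnmatchcase(subject, pattern):  # case-sensitive wildcard match
                handler(subject, payload)


bus = SubjectBus()
rfid_seen, beta_seen = [], []
bus.subscribe("Production.WarehouseManagement.RFID.*",
              lambda subject, payload: rfid_seen.append(subject))
bus.subscribe("Beta.*",
              lambda subject, payload: beta_seen.append(subject))

bus.publish("Production.WarehouseManagement.RFID.PackageReceived", {"tag": "0001"})
bus.publish("Beta.WarehouseManagement.RFID.PackageReceived", {"tag": "0002"})
bus.publish("Production.Billing.Invoice.InvoiceSent", {"invoice": "42"})
```

The Beta event shares a name with the production event but reaches only the Beta subscriber, which is the isolation the Control attribute is meant to provide.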

An event dictionary should be constructed containing an organized view of all business events. Predefine your business event types based on your business process models and store these interface specifications in the event dictionary. The dictionary should contain the interface definition, pertinent service-level agreement information, time to live, auditing, and cross-references. Predetermined semantics are needed—otherwise, the dictionary could grow with similar event definitions that are vastly different implementations.

Making the event dictionary accessible and editable by everyone involved is important. One dictionary format is a hierarchical wiki Web page. This creates a living document allowing designers to update the dictionary as new business processes are defined. As new events are defined, corresponding new agents will be deployed to handle the processing.

EDA architectures employ many different types of agents. Take some time during design to define your project’s agents and their types. An agent’s type will characterize the expected behavior, increasing reusability while decreasing unpredictability. You’ll find that most agents can be classified as simple, infrastructure, or domain specific:

Simple agents: The most reusable agents will be simple agents. These agents receive events, process the information, and output an event. The most common simple agents are transformation agents, augmenting agents, and persistent agents.

Infrastructure agents: These agents are used to manage, control, and monitor the EDA environment. This includes agents such as the following:

Domain agents: These agents provide domain-specific implementations like Fulfill FDA Audit, Send Inventory Count, and so on. They mostly consist of the other agent types but are customized versions to suit a specific domain or vertical market.

All agents will rest on a message backbone. The bus itself is a logical term, meaning there could be multiple physical message backbones that can be bridged together. Events travel on the bus through channels or topics. Ideally, you want as few topics as possible, but for logical and performance reasons, separate topics make perfect sense. You might have a separate channel for auditing and logging or a separate channel for high-priority events. It’s common for agents to listen to more than one channel and even send output to multiple channels.

Part of thinking EDA is knowing when not to do EDA, which we occasionally recommend when circumstances dictate. You might be thinking, now wait a minute! EDA is not always the answer? Yes, there are situations, many in fact, when EDA is not the optimal way to approach enterprise architecture or application design. In this IT version of “When Good Pets Go Bad,” we look at some of the factors that diminish the benefit or feasibility of EDA. These include application logic constraints, system performance constraints, governance and security barriers to EDA, organizational challenges, and cost-benefit misalignments.

The most basic reason not to do an EDA is if there is no inherent purpose for one in the application requirements nor any future benefit that anyone can discern from reuse down the line. This might sound obvious, but there is an unfortunate tendency in the IT field to get hyped up by trends and want to make every system you are building play by the new rules. Remember “Web enabling” a few years back? EDA might hold the same Svengali sway over even the most hard-minded of us.

As a rule, the higher up the application stack a process is, the better a candidate for EDA it will be. Lower-level processes, such as database mirroring, database access by applications, or packet routing, are not good candidates for EDA. The reason is that these deep processes are often highly reliant on fixed, fast-performing, proprietary protocols, but with no ill effect on integration or agility.

Performance can be a huge issue in migrating toward an EDA or SOA. XML and Web services, the base units of EDA, tend to be inefficient compared with other interfaces. They tend to create “chatter” on networks when they require authentication and they are generally difficult to accelerate. For these reasons, systems that require a high level of performance might be poor candidates for EDA. For example, the waiting time experienced by an ATM user might affect the customer’s opinion of the bank. If the ATM is excessively and consistently slow, the bank’s business might suffer. As a result, a slower functioning but more agile EDA might be exactly the wrong choice for an ATM network.

EDAs also have the potential to generate unpredictable and possibly disruptive amounts of traffic on networks. Because EDAs are dynamic in design, and patterns of message exchange are harder to predict in an EDA than in a conventional architecture, EDAs can strain networks. When combined with the fact that EDAs can span multiple networks, under no one entity’s direct control, the network management aspects of EDA can become daunting. If network performance and utilization is not a major issue, then network problems should not stand in the way of an EDA. However, if network utilization and performance is critical, or network performance could affect overall system performance, then the network could be a barrier to EDA that makes other options look more appealing.

Ultimately, all of these issues relate to the EDA’s cost and benefit calculation. It is possible to overcome virtually any challenge to EDA, but it might not be worth the cost. Any EDA component can be secured, at a cost. Any network performance issue can be overcome, at a cost. However, the EDA has to deliver a value in excess of those costs to be worthwhile. The costs might not be financial, and the same goes for value. For example, there might be a compelling national security value in developing an EDA for counterterrorism, although the cost might be quite high in dollars. Yet, it could be worth it. In contrast, it might be costly to convert a set of well-designed and properly functioning legacy systems to EDA, but if the value realized is not high, the project is probably not worth undertaking.

The solution to the challenge of cost versus benefit is to evaluate where in a chain of processing steps EDA really adds value and where it is a “nice-to-have” feature. As you examine the total flow of business process steps and underlying systems that your architecture supports, you will likely see areas that are ripe for EDA and service-orientation, and other places where a migration to EDA can take place later. The important learning in this vein is to see where reuse and extensibility of EDA components can add value to the enterprise and focus your efforts and resources on those places first.

It turns out we are not the only ones thinking EDA. Some of the major IT suppliers are also beginning to roll out their versions of EDA. That’s a good thing. It would be a shame to do all this great thinking and find out we were the only true geniuses in the world and no one cared what we thought.

We thought it would be helpful to look at an example of an actual EDA package that is on the market today. So, let’s explore one of the big vendor EDA suites and see how it compares with our ideal EDA, for better or worse. We are very gratified that so many large vendors have embraced EDA to the point where they are willing to stake engineering and marketing dollars on the paradigm.

The datasheet for our sample EDA suite contains the following statement:

...enables customers to monitor, analyze, and respond to business events in real time. Organizations need to analyze increasingly large volumes of information and more rapidly respond to business events by adapting their applications and IT systems to become event driven. However, current infrastructures for processing and managing events are limited and require complex and expensive code development....EDA Suite complements the service-interaction model of SOA, providing infrastructure to manage event-based interactions and complex event analysis in real time.1

As you can see from this description, the folks at our selected vendor totally get what EDA is all about. They have tried to put together a solution offering that enables users to monitor, analyze, and respond to business events in real time. To make this work, they assembled a suite of software products. The suite contains an enterprise service bus (ESB), an event listener, and an event producer. The event listener/producer can connect with Radio Frequency Identification (RFID) sensors as well as temperature gauges and other devices that translate physical events into EDA-ready data. The EDA suite comes with JMS and a J2EE developer kit. With these elements, a user of this suite can develop event processors and specialized event listeners and producers. Event-based reactions can be programmed in J2EE as well.

Our sample suite’s datasheet describes a feature set that includes Business Activity Monitoring (BAM), real-time analytics, data enrichment, content-based routing, content filtering, asynchronous messaging, business rules, and a rules software development kit. Some of these terms refer to concepts that we have already covered, but with different names. For example, data enrichment describes a type of event processing that modifies data detected in an event so it can be acted upon correctly by the event processor.

Content filtering is equivalent to event stream processing. Content-based routing is a type of event processing that moves event data messages from one place to another, depending on rules that respond to the content of the event data. For example, let’s say that the EDA suite is detecting temperatures and needs to respond one way to high temperatures (over 75 degrees) and another way to low temperatures (under 75 degrees). Content-based routing provides a way for the EDA to make its different responses to the two temperature choices. Structurally, in this case, an event Web service would transmit temperature information to an event listener, which would route high or low temperature data to an event processor. You can accomplish this goal of content-based routing in two ways, and the pragmatic issues of performance and cost in an EDA make it worth the time to delve into them.

One approach to content-based routing in the temperature-detecting EDA is to have the event listener parse the entire body of the Simple Object Access Protocol (SOAP) message containing the temperature event data, and use a rule to determine if the temperature is high or low. In this situation, there would be an event Web service that transmits raw temperature data to the event listener, which analyzes the SOAP body and reads the temperatures. The advantage of this approach is that the event listener can essentially wiretap an existing temperature reporting Web service that wasn’t necessarily designed to function as an event producer. The disadvantage of this approach is that it is cumbersome and slow to have an event listener parsing the entire SOAP body to determine if the temperature is high or low.

A more efficient approach, from a performance perspective, is to build the content-routing into the event Web service itself. In this case, the Web service that reported the temperature would send one of two messages, called either HighTemp or LowTemp, depending on the temperature, and the name of the message, contained in the message header, would make life a lot easier for the event listener, which would not have to parse the entire SOAP message and apply an involved business rule. Performance-wise this approach is much better. Depending on your circumstances, the latter approach might be preferable because of cost considerations.
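The two routing approaches can be contrasted in a short sketch. The message is simplified to a dictionary with `headers` and an XML `body` rather than a real SOAP envelope, and the function names and processor names are invented for illustration. The text leaves a reading of exactly 75 undefined, so this sketch treats it as low.

```python
import xml.etree.ElementTree as ET

THRESHOLD = 75  # degrees, from the example in the text

ROUTES = {"HighTemp": "high_temp_processor", "LowTemp": "low_temp_processor"}


def route_by_body(message):
    """Approach 1: the listener parses the whole body and applies the rule."""
    reading = float(ET.fromstring(message["body"]).findtext("temperature"))
    return ROUTES["HighTemp"] if reading > THRESHOLD else ROUTES["LowTemp"]


def produce(reading):
    """Approach 2, producer side: apply the rule once and name the message."""
    name = "HighTemp" if reading > THRESHOLD else "LowTemp"
    body = f"<reading><temperature>{reading}</temperature></reading>"
    return {"headers": {"name": name}, "body": body}


def route_by_header(message):
    """Approach 2, listener side: a dictionary lookup, no body parsing."""
    return ROUTES[message["headers"]["name"]]


raw = {"headers": {}, "body": "<reading><temperature>82.5</temperature></reading>"}
destination_1 = route_by_body(raw)               # works on an unmodified service
destination_2 = route_by_header(produce(68))     # requires a cooperating producer
```

The first function works against any existing service but pays a parsing cost on every message. The second is cheap for the listener but only works if the producer was built, or changed, to name its messages.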

Our sample suite uses BAM to “define and monitor events and event patterns that occur throughout an organization.” The suite’s Business rules engine captures, automates, and enables change to business policy. The interesting point here is that the vendor provides a specific toolset to help the developer design an event processor. Of course, you could develop a wholly new event processor using the suite’s J2EE kit, but the suite makes it possible to establish and enforce business policies, or rules, that process event data. For example, let’s say you were using this suite to create an EDA for a property management company. You could define a business rule that said, “If the temperature goes above 90 degrees, then switch on the air conditioner, keep track of the time that the air is on, and invoice the tenant for the air conditioning service used.” The Business rules engine streamlines the process of putting that event processing logic into action.
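Stripped of any vendor tooling, the property management rule might reduce to something like the following hand-written sketch. The `AirConditioningRule` class, the hourly rate, and the decision to switch the unit off once the temperature falls back to the threshold are all assumptions. A rules engine would express the same logic declaratively rather than in code.

```python
class AirConditioningRule:
    """If the temperature goes above the threshold, run the AC and bill for it."""

    RATE_PER_HOUR = 2.50  # made-up price for illustration

    def __init__(self, threshold=90):
        self.threshold = threshold
        self.on_since = None  # hour the AC was switched on, or None if off
        self.hours_on = 0.0

    def on_temperature(self, degrees, hour):
        """Process one temperature event; return the actions it triggers."""
        actions = []
        if degrees > self.threshold and self.on_since is None:
            self.on_since = hour
            actions.append("switch_on_air_conditioner")
        elif degrees <= self.threshold and self.on_since is not None:
            self.hours_on += hour - self.on_since  # keep track of running time
            self.on_since = None
            actions.append("switch_off_air_conditioner")
        return actions

    def invoice(self, tenant):
        """Produce an invoice event for the air conditioning used so far."""
        amount = round(self.hours_on * self.RATE_PER_HOUR, 2)
        return {"type": "invoice_tenant", "tenant": tenant, "amount": amount}


rule = AirConditioningRule()
log = [rule.on_temperature(88, hour=12),
       rule.on_temperature(93, hour=13),
       rule.on_temperature(95, hour=14),
       rule.on_temperature(89, hour=16)]
bill = rule.invoice("Tenant A")
```

Here the unit runs from hour 13 to hour 16, so the tenant is billed for three hours at the assumed rate.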

Our sample EDA suite contains a “multi-protocol Enterprise Service Bus (ESB) to connect applications and route messages...[the] ESB provides multi-protocol messaging, routing, and transformation capabilities to distribute event messages and integrate services.” The ESB also comes with adapters, which provide “standards based access to virtually any data source.” To get specific, this ESB supports JMS, SOAP, JCA, WSIF, JDBC, HTTP, and FTP messaging protocols. It provides configurable JMS qualities of service with different types of persistence stores including database, file, and in-memory. The combination of the adapters and the multiprotocol capabilities are very powerful in EDA terms. They show that the EDA suite has the capability, at least in theory, to connect event processors, listeners, and producers at virtually any point in an enterprise.

Commercial EDA offerings are not without gaps, however. One gap in most of these solutions is their reliance on the developer and architect to be able to think EDA to deliver the kind of truly dynamic, complex, and implicit functioning that we want from an event-driven architecture. If the developers do not have a far-reaching understanding of thinking EDA, with its emphasis on decoupling of application components at the design stage and reducing dependencies, then the EDA that is developed through the product suite (or its analogs from other vendors) runs the risk of becoming a tightly coupled EDA. A tightly coupled EDA has lost a majority of its potential for dynamic, complex use.

That being said, there are few shortcuts to EDA. As with so many other endeavors in IT, there is an advantage to the user-friendly, wizard-based approach in that it enables fast deployment and shares advanced technologies with relatively untrained users. However, there is also no substitute for true expertise. (And if you’re reading this book, we congratulate you on your drive to learn the real stuff!) The challenge with deploying a packaged EDA solution like this suite is to know enough about event-driven architecture to bring out all of the suite’s best EDA qualities but not let any of its embedded logic throw you off track on the way to complex, implicit, and dynamic EDA.

Another potentially major gap in most of the commercial EDA suites that we see is the risk of becoming dependent on proprietary elements of the suite. For example, although many vendor-packaged ESBs are standards based, some of their subtler aspects can lock the developer into the vendor stack. Once you are locked in, it can become costly or difficult to extend the EDA to non-vendor-based event components. At the very least, such extensibility might require the purchase of proprietary adapters and similar add-ons, which limits how freely the EDA can function.

Admittedly, this kind of lock-in can occur with virtually any vendor solution. With an open source solution, the lock-in might instead be to the architects and developers who built the components. Relying on any proprietary knowledge risks leaving you hostage to whoever holds it when the framework needs maintenance or extension. There are no easy answers to this problem.

One possible mitigation of the vendor lock-in dilemma is to build a mediation component into the EDA itself at the design stage. There are several solutions on the market today that can mediate the XML and SOAP message traffic among multiple ESBs, Web service consumers and providers, and the other network elements that make up a truly broad, complex, and implicit EDA. With a mediation solution, you can develop an EDA with the confidence that an event component, such as an event service, will be able to communicate with event listeners that are based on any ESB in any enterprise, regardless of platform, transport protocol, or SOAP version in use.
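The mediation idea can be sketched in a few lines. The example below assumes two made-up message layouts, one XML and one JSON, arriving from two hypothetical sources labeled `esb_a` and `esb_b`. The `CanonicalEvent` type, the `mediate` function, and both layouts are illustrative assumptions; a commercial mediation product would handle far more (SOAP versions, transports, security) than this shows.

```python
import json
import xml.etree.ElementTree as ET
from dataclasses import dataclass


@dataclass(frozen=True)
class CanonicalEvent:
    """Vendor-neutral event shape that every listener can rely on."""
    event_type: str
    order_id: str


def from_xml(payload: str) -> CanonicalEvent:
    # Assumed layout: <event type="..."><orderId>...</orderId></event>
    root = ET.fromstring(payload)
    return CanonicalEvent(root.attrib["type"], root.findtext("orderId"))


def from_json(payload: str) -> CanonicalEvent:
    # Assumed layout: {"kind": "...", "order": {"id": "..."}}
    doc = json.loads(payload)
    return CanonicalEvent(doc["kind"], doc["order"]["id"])


# Registry of translators, keyed by a made-up source label.
TRANSLATORS = {"esb_a": from_xml, "esb_b": from_json}


def mediate(source: str, payload: str) -> CanonicalEvent:
    """Translate a source-specific message into the canonical event."""
    try:
        translate = TRANSLATORS[source]
    except KeyError:
        raise ValueError(f"no translator registered for {source!r}") from None
    return translate(payload)


a = mediate("esb_a", '<event type="order.shipped"><orderId>A-100</orderId></event>')
b = mediate("esb_b", '{"kind": "order.shipped", "order": {"id": "A-100"}}')
```

Both messages arrive in different formats but leave the mediator as equal `CanonicalEvent` values, so a listener written against the canonical shape does not need to know which ESB produced the event. Supporting a new source means registering one more translator rather than changing the listeners.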

This chapter starts us on the path to practical use of EDA theory by getting us to “think EDA” as an approach to application design and enterprise architecture. In particular, to think EDA, we need to keep the following distinctive EDA factors in mind:

Reducing central control—The essence of EDA is the reduction or elimination of central control from the application design. In an EDA, each event component functions autonomously. Of course, there is a flow of event processing steps, but the flow is not reliant on a central controller or main method for its functioning.

Carrying state inside the event—To operate without central control, event components must carry event state. In the example used in this chapter, an event component that notifies shippers of shipment status needs to send and receive the full order detail as its state information. This carriage of event state enables the event component to operate autonomously and makes possible more flexible modifications to the application.

Designing for unanticipated use—EDA components should be designed for unanticipated use. If you can design an EDA component that can serve multiple uses, it is easier to assemble and reassemble them into event-driven composite applications.

Understanding that the term agents is an alternative name for event listeners, processors, and producers. Recommended best practices for EDA implementation include typing each agent and requiring EDA agents to carry event metadata.

In this chapter, we considered some situations where EDA is not the answer. Many factors could make an EDA a poor option, including high cost that cannot be justified by business outcomes of EDA, performance issues, and organizational readiness.

Finally, in this chapter, we looked at a sample commercial EDA offering to see what is available on the market today. We chose a high-end EDA suite, which includes an ESB, adapters, rules engine, and RFID server. It appears to be a robust solution, with many positive attributes, though any package of this type carries the risk of vendor lock-in and a reduction in the practical EDA skills of the development staff, who might rely on the prebuilt product to think EDA for them.
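As a compact recap of the point about carrying state inside the event, the following sketch shows a shipment event that holds the order detail itself, so the component that notifies the shipper needs no central controller or shared lookup to do its work. The `ShipmentEvent` fields, the `notify_shipper` function, and the sample values are hypothetical and chosen only to mirror the shipping example.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class ShipmentEvent:
    """Event that carries the order detail as its own state."""
    order_id: str
    status: str
    shipper: str
    items: Tuple[str, ...]


def notify_shipper(event: ShipmentEvent) -> str:
    """Build the shipper notice using only what the event carries."""
    item_list = ", ".join(event.items)
    return f"{event.shipper}: order {event.order_id} is {event.status} ({item_list})"


event = ShipmentEvent("A-100", "shipped", "Acme Freight", ("widget", "gasket"))
notice = notify_shipper(event)
```

Because `notify_shipper` reads nothing outside its argument, it can be moved, duplicated, or reused by another application without bringing a controller along with it.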

1. Oracle product datasheet