Event-driven architecture (EDA) falls into the maddening category of a technology paradigm that is half understood by many people who claim to know everything about it. Although we recognize that we, too, might not know absolutely everything there is to know about EDA, we believe that it is necessary to set out a working definition of EDA that we can adhere to throughout this book. Getting to an effective working definition is challenging because EDA must be described at a level high enough to be comprehensible to nontechnologists, but not so high as to sound vague or irrelevant.

An event-driven architecture is one that has the ability to detect events and react intelligently to them. For our purposes—and we discuss this in great detail later on—an event is a change in state that merits attention from systems. Brenda Michelson, a technology analyst, writes, “In an event-driven architecture, a notable thing happens inside or outside your business, which disseminates immediately to all interested parties (human or automated). The interested parties evaluate the event, and optionally take action.”1

One of the simplest examples of an event-driven system is actually from the noncomputer world. It is known as a thermostat. The thermostat is a mechanical device that turns the heat on or off based on its programmed reaction to an event, which is a change in temperature. The shift in temperature is the event, the “change in state” that triggers the reaction of the thermostat, which, in turn, affects the action of the heater.
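The thermostat can be sketched in a few lines of Python. This is only an illustration under our own assumptions: the class name `Thermostat`, the 20-degree setpoint, and the `on_reading` method are hypothetical and do not describe any real device. What it tries to show is that the reaction is driven by a change in state (the reading crossing the setpoint), not by every individual reading.

```python
class Thermostat:
    """Toy thermostat: reacts only when the heater state must change."""

    def __init__(self, setpoint_c=20.0):
        self.setpoint_c = setpoint_c  # hypothetical target temperature
        self.heater_on = False        # current state of the heater
        self.actions = []             # log of reactions, for inspection

    def on_reading(self, temperature_c):
        """Handle one temperature reading."""
        should_heat = temperature_c < self.setpoint_c
        if should_heat != self.heater_on:
            # The state changed: this is the event that merits a reaction.
            self.heater_on = should_heat
            self.actions.append("heat on" if should_heat else "heat off")


thermostat = Thermostat()
for reading in [21.0, 19.5, 19.0, 20.5]:
    thermostat.on_reading(reading)
print(thermostat.actions)  # ['heat on', 'heat off']
```

Four readings arrive, but only two of them change the state, so only two reactions occur.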

We can see another simple example in the evolution of the automobile. Cars are becoming increasingly intelligent in how they react to their surroundings. If rain hits the windshield, the automobile recognizes the rain event and automatically turns on the windshield wipers, turns on the headlights, and adjusts the front windshield defroster. All of these things were formerly the driver’s responsibility, but now the car’s internal system uses its intelligence to react. An EDA is an architecture that acts in the same way: It detects events and reacts to them in an intelligent way. To do so, an EDA must have certain capabilities: It must detect events, transmit messages among its components announcing that an event has occurred, process the event to decide on a reaction, and initiate that reaction if one is called for. In generic architectural terms, these capabilities translate into the concepts of event producers, event consumers, messaging backbones, and event processors. These go by many different names in practice, and this is one of the great hurdles to getting a feel for what an EDA is at its core.
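To make the four roles concrete, here is a minimal in-memory sketch in Python based on the rain example. All of the names (`Event`, `Backbone`, `Car`, `rain_sensor`, `make_rain_processor`) are our own hypothetical choices, and a real messaging backbone would be a separate piece of infrastructure rather than a small class; the sketch only shows how the responsibilities might be divided.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """A notable change in state, described by a kind and a payload."""
    kind: str
    payload: dict


class Backbone:
    """Messaging backbone: carries events from producers to processors."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def subscribe(self, kind, handler):
        self._handlers[kind].append(handler)

    def publish(self, event):
        # Deliver the event to every handler registered for its kind.
        for handler in self._handlers.get(event.kind, []):
            handler(event)


class Car:
    """Event consumer: carries out the actions it is asked to perform."""

    def __init__(self):
        self.actions = []

    def perform(self, action):
        self.actions.append(action)


def make_rain_processor(car):
    """Event processor: decides which reaction, if any, is called for."""
    def process(event):
        if event.payload["raining"]:
            car.perform("wipers on")
            car.perform("headlights on")
    return process


def rain_sensor(backbone, raining):
    """Event producer: detects the change and announces it."""
    backbone.publish(Event("rain", {"raining": raining}))


backbone = Backbone()
car = Car()
backbone.subscribe("rain", make_rain_processor(car))
rain_sensor(backbone, raining=True)
print(car.actions)  # ['wipers on', 'headlights on']
```

Note that the sensor never refers to the car; it only knows about the backbone.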

Many examples of EDAs occur in the realm of information systems, though most of the ones currently deployed are limited in scope. For example, if your credit card is simultaneously used in two separate geographical locations, those two events can be “heard” by the credit card processing systems and examined for a potential fraud pattern. The credit card fraud detection EDA is set up to listen for events that indicate potential fraud and respond—or not respond—depending on a set of rules that are programmed into the event processors. If the charge occurring out of state is at a mail order merchant where you have shopped before, the system might not deem the event pattern to be a fraud. If the second charge is for a high-dollar value at a merchant where you have not shopped before, the EDA might trigger a response that places a warning or “watch” status on your credit card account. Or, the activity might prompt a person to call you and find out if you have lost your card.
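The rules described above might be expressed roughly as follows. This is a sketch under stated assumptions, not a description of how any real card processor works: the `Charge` fields, the `assess` function, the 500-dollar threshold, and the three outcomes (`"ok"`, `"watch"`, `"call"`) are all hypothetical.

```python
from dataclasses import dataclass

HIGH_VALUE = 500.00  # assumed threshold; the text gives no figure


@dataclass(frozen=True)
class Charge:
    location: str
    merchant: str
    amount: float
    mail_order: bool = False


def assess(first, second, known_merchants):
    """Evaluate two near-simultaneous charges on the same card."""
    if first.location == second.location:
        return "ok"  # no geographic conflict, so no pattern to examine
    if second.mail_order and second.merchant in known_merchants:
        return "ok"  # familiar mail order merchant: not deemed fraud
    if second.merchant not in known_merchants and second.amount >= HIGH_VALUE:
        return "watch"  # high-value charge at an unfamiliar merchant
    return "call"  # ambiguous: prompt a person to contact the cardholder


known = {"Acme Catalog"}
local = Charge("Ohio", "Corner Grocery", 42.10)
catalog = Charge("Texas", "Acme Catalog", 80.00, mail_order=True)
jeweler = Charge("Texas", "Unknown Jewelers", 2400.00)
diner = Charge("Texas", "Roadside Diner", 18.75)

print(assess(local, catalog, known))  # ok
print(assess(local, jeweler, known))  # watch
print(assess(local, diner, known))    # call
```

Because the rules live in the event processor, they can be changed without touching the systems that report the charges.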

Or, imagine that an FAA air traffic control application needs to know the probability of rain in a certain location. At the same time, the Air Force needs the same data, as does NASA. Assuming that the weather data is collected and available on a server somewhere, it is possible to tightly couple that server, and the software running on it, with the FAA, Air Force, and NASA’s respective systems. Although this type of approach is frequently used, it is far easier to arrange for the weather application to publish the weather data and enable the subscribers (the FAA et al.) to get the data they need and use it however they need to use it. Each change in weather status is an event: The weather application publishes it, and the subscribers’ reactions to it drive the architecture. This is an event-driven architecture.

In this EDA, the FAA, Air Force, and NASA are integrated with the weather system even though there is no specific coupling between the applications. Of course, they exchange data, but the applications are completely separate and have no inherent knowledge of one another. The developers do not need to know each other, and there is no need to coordinate. However, for it to work, they do need standards. To effectively disseminate and process events, the publisher and the subscribers must agree on a commonly understood message format and a compatible transport mechanism.
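The weather example can be sketched as a tiny publish/subscribe arrangement in Python. We assume, purely for illustration, that the agreed message format is a JSON object with `location` and `rain_probability` fields and that the transport is a direct function call; the `WeatherFeed` class and the handler names are hypothetical.

```python
import json


class WeatherFeed:
    """Hypothetical publisher: knows nothing about who is listening."""

    def __init__(self):
        self._subscribers = []

    def subscribe(self, callback):
        self._subscribers.append(callback)

    def publish(self, location, rain_probability):
        # The agreed message format: a JSON object with two fields.
        message = json.dumps(
            {"location": location, "rain_probability": rain_probability}
        )
        for callback in self._subscribers:
            callback(message)


log = []


def faa_handler(message):
    """One subscriber: only cares about likely delays."""
    data = json.loads(message)
    if data["rain_probability"] > 0.7:
        log.append(f"FAA: expect delays at {data['location']}")


def nasa_handler(message):
    """Another subscriber: records every reading."""
    data = json.loads(message)
    log.append(
        f"NASA: recorded {data['rain_probability']:.0%} for {data['location']}"
    )


feed = WeatherFeed()
feed.subscribe(faa_handler)
feed.subscribe(nasa_handler)
feed.publish("JFK", 0.8)
print(log)
```

The two handlers use the same message in different ways, and neither knows the other exists. The only things they share with the publisher are the message format and the means of delivery.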

One commonly used technology that is analogous to EDA is the Web itself. When you use a browser, you are initiating an integrated session with a remote system of which you have no specific knowledge. In all probability, you have no idea who programmed it, what language it’s written in, where it is, and so on. Yet, your browser can pull whatever information it is permitted to get and show it to you in a format that you can understand. The event of requesting the uniform resource locator (URL) triggers the action that results in the display of the Hypertext Markup Language (HTML) content in your browser window. As we develop our explanation of EDA, though, you will see that the Web is a very simple EDA.

An EDA consists of applications that are programmed to publish events, subscribe to them, or take other actions on events triggered by applications with which they share no formal coupling. For this reason, EDA has been likened to a “nervous system” for the enterprise.

Where would the IT industry be without metaphors? Even the idea of using the word architecture to describe how Byzantine networks of hardware, software, and data are configured shows how reliant we are on abstraction to achieve an understanding of what we are trying to accomplish in enterprise IT. In the spirit of metaphors, then, we shall borrow a concept from human physiology, the central nervous system, to further our understanding of EDA.

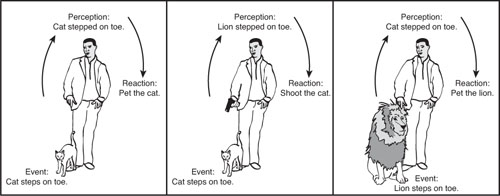

If your cat steps on your toe, how do you know it? How do you know it’s a cat, and not a lion? You might want to pet the cat, but shoot the lion. When the cat’s paw presses against your toe, the nerve cells in your toe fire off a signal to your brain saying, “Hey, someone stepped on my toe.” Also, they send a message that says something like, “It doesn’t hurt that much” and “It was probably a cat.” Or, if you saw the cat, the signals from your optic nerve are synthesized with those from your toe, each invoking your mental data store of animals and likely toe steppers, and you should know pretty quickly that it was, indeed, a cat that stepped on your toe. Your central nervous system is a massively complex set of sensory receptors, wires, and integration points, known as synapses. The nervous system is critical to your functioning and survival in the world. Just imagine if your central nervous system didn’t work well and you confused the cat with the lion. As Figure I.1 shows, you might shoot your cat and pet the lion, which would then eat you.

Our enterprises have their own nervous systems, too. Our Web sites, enterprise resource planning (ERP) systems, customer relationship management (CRM) systems, databases, and network infrastructures, for example, all work to feed the corporate equivalent of sensory information to the corporate “brain.” The corporate brain, in turn, assesses the input and reacts. Of course, the corporate brain might contain a few actual brains as well, in the form of employees, but their sensory input is determined by the enterprise nervous system. For example, if there is an increase in cash withdrawals at a bank, the banking systems, acting like nerve sensors in our toe, fire off withdrawal data to the corporate brain. The neurons in the corporate brain then route the data to its destination, which could be an automated bank cash reserve management system, the executive management team of the bank, or a combination. As our brain assesses and reacts to the cat stepping on our toe, the corporate brain of the bank must assess the input of the withdrawal spike and react.

If our enterprises were living beings, most of them would need some pretty intensive neurological care. Unlike a well-functioning person, whose nervous system can learn how to react to different stimuli and determine the best way to handle a given situation based on sensory input and mental processing, the typical enterprise has a nervous system that is hardwired to react in a specific set of ways that might not be ideal for every situation. In the cat-on-toe situation, most of our enterprises would probably expect the worst and then shoot in the general direction of the cat. Or, in perhaps a more realistic version of the metaphor, the enterprise wouldn’t even know that anything had stepped on its toe, or that it even had a toe. It would be completely unaware, most likely because it was never given the ability to be aware.

Like the person whose knee-jerk reaction is to shoot the cat regardless of what is going on, most of our enterprises have a nervous system that is not well set up to receive the data equivalent of sensory input, process it, know what it is, and react in an appropriate way. Event-driven architecture is an approach to IT that gives the enterprise the ability to improve its nervous system and have a level of adaptability and awareness that it needs. This is what we typically hear described as agility: the ability to react intelligently to stimuli and also continually reshape the reaction as circumstances change.

Data is powerful, if you can see it and know what to do with it. To paraphrase Levitt and Dubner and their great book, Freakonomics, an EDA provides potential adaptation data that exists in streams that we can’t possibly see on our own. Levitt and Dubner characterize the Internet as a “gigantic horseshoe magnet waved over an endless sea of haystacks, plucking the needle out of each one.” Similarly, an EDA—the nervous system—gives us a way to acquire data and to make the data we have meaningful. For example, if we knew that every day we experience what it feels like to have a lion stepping on our toe, followed by no negative reaction, we might learn to ignore it as unimportant—or begin to assume that it’s not a lion. That’s fine, until a lion does step on our toe...

Building an EDA to instill good functioning in the enterprise nervous system involves getting the various sensors, message pathways, and reacting logic processors to work together. In broad terms, this is known as interoperation, and it is the heart of the new EDA discussion going on today.

Reading about EDA as a “new” idea might give you a sense of déjà vu. As we saw with the familiar credit card fraud example, EDA is not a new concept. However, the current crop of EDAs uses proprietary standards for communication, and although they work well, they are, in effect, tightly coupled EDAs that can only share information among systems that use a compatible standard. For instance, it is possible to set up a fairly effective EDA if all systems are built on the same platform. Vendors have long provided high-performance pub/sub engines for compatible systems. The only problem is, as we know, not everyone is on the same platform, despite the dramatic sales efforts of some of Silicon Valley’s best and brightest minds. The good news is that many platform vendors have released new service-based EDA products, which do not rely on tight coupling.

The quest for a well-functioning enterprise nervous system has been the catalyst for the development of EDA for many years. Why, then, is EDA receiving such renewed and intense interest today? The reason has to do with the explosion in interoperability and the standardization of data across multiple enterprises, which changes the game of EDA.

Ultimately, the existence of an EDA is dependent on interoperability among systems. You can’t have awareness and reaction to events if the systems cannot communicate with one another. Existing EDA setups are invariably tightly constrained and narrow in their functionality because it has been so difficult, or costly, to achieve the level of interoperation of EDA components needed for any kind of dynamic or complex EDA functionality. That is now changing. Today, with the advent of open standards and the breakthroughs in system interoperability from service-oriented architectures (SOAs), it is now possible to establish EDAs that are far more intelligent, dynamic, and far-reaching than ever before.

To put the interoperability evolution in context, we will share a lunch conversation we had recently with a man who had been responsible for designing and implementing the basic underpinnings of the worldwide airline reservation and automated teller machine infrastructures. In the last few years, he had been involved in other pursuits, so he was eager to learn about SOA and EDA, the new tech trends that he had been hearing so much about in the industry media.

When we explained how the related concepts of SOA and EDA allowed, for the first time ever, truly open interoperation among heterogeneous software applications, regardless of operating system, network protocol, or programming language, he gave us a perplexed look. “That’s new?” he asked with a slight smirk. “That idea has been around since 1961.”

And, of course, he was right. The idea of open interoperability has been in the air for decades, just as automobiles have been around since the late 1800s. Even today, we still use a combustion engine to drive the wheels, so the essence hasn’t changed much. However, never before have cars been so reactive to our needs and their surroundings.

The same evolution is true for software and the circumstances that we find ourselves in now, in 2009. Over the last eight years, from 2001 to 2009, we have seen an unprecedented shift in the IT industry toward the use of open standards for the purpose of integrating diverse software applications. Also, more and more companies are exposing their data in a standardized fashion, further expanding the circle of opportunity.

This all started back in 2001.... An unusually broad group of major IT companies, including IBM, Oracle, Microsoft, BEA, and others, agreed to conform to a specific set of Extensible Markup Language (XML) standards for interoperation between software applications. These standards, known collectively as the Web Services protocol, provide a technological basis for any application in the world to exchange data or procedure calls with any other application, regardless of location, network, operating system, or programming language.

Specifically, the major standards that were ratified included Simple Object Access Protocol (SOAP), which is the message formatting standard; Web Services Description Language (WSDL), which sets out a standard document format with which to describe a Web service; and Universal Description, Discovery, and Integration (UDDI), a registry application programming interface (API) for listing the Web services that are available for use in a particular domain.
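To give a feel for what a SOAP message looks like, the following Python sketch builds and reads a minimal SOAP 1.1 envelope using only the standard library. The envelope namespace is the one defined for SOAP 1.1; everything else is an assumption made for illustration, including the `http://example.com/weather` namespace, the `GetRainProbability` operation, and the `Location` element. In practice, a SOAP toolkit would generate such messages from the service’s WSDL document.

```python
import xml.etree.ElementTree as ET

SOAP_NS = "http://schemas.xmlsoap.org/soap/envelope/"  # SOAP 1.1 envelope
APP_NS = "http://example.com/weather"  # hypothetical service namespace


def build_request(location):
    """Return a SOAP request for the hypothetical GetRainProbability call."""
    envelope = ET.Element(f"{{{SOAP_NS}}}Envelope")
    body = ET.SubElement(envelope, f"{{{SOAP_NS}}}Body")
    call = ET.SubElement(body, f"{{{APP_NS}}}GetRainProbability")
    ET.SubElement(call, f"{{{APP_NS}}}Location").text = location
    return ET.tostring(envelope, encoding="unicode")


def read_location(xml_text):
    """Extract the location the way a receiving service might."""
    root = ET.fromstring(xml_text)
    path = (
        f"{{{SOAP_NS}}}Body/"
        f"{{{APP_NS}}}GetRainProbability/"
        f"{{{APP_NS}}}Location"
    )
    return root.find(path).text


request = build_request("JFK")
print(request)
print(read_location(request))  # JFK
```

Because the message is plain XML in an agreed structure, the sender and receiver can be written in different languages and run on different platforms.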

Thus, Web services are software-based interfaces that are universally understandable, self-describing, and universally discoverable. As our colleague Jnan Dash, the legendary lead engineer of the Oracle Database, puts it, the combination of the Internet and Web services makes possible a kind of “universal dial tone” for all applications. (The Internet is the dial tone and Web services give you the ability to “dial.”) With Web services, it is entirely possible for an application written in C to interoperate with a J2EE application without the need for any proprietary middleware. As a whole, the large-scale development and integration of Web services is a key step toward developing a service-oriented architecture (SOA). SOA represents a model in which functionality is decomposed into small, distinct units (services—for example, Web services), which can be distributed over a network and can be combined together and reused to create business applications. These services communicate with each other by passing data from one service to another or by coordinating an activity between two or more services.2 The industry vision that is fueling the SOA trend is that one day virtually any application needed in your enterprise (whether it is inside your firewall or not) will be available as a Web service and will be freely interoperable with other applications, enabling the decomposition of application functionality into small units that can interoperate or be orchestrated in composite applications. This vision is idealized, and it is likely that a full-blown SOA of this type will never actually come into existence. However, many are approaching the paradigm in steps.

Many enterprises have begun to introduce service-orientation in their architectures, selectively exposing capabilities through Web services in configurations that suit specific business needs and selectively service-enabling core legacy systems. This is a remarkable achievement for an industry that was very much in the doghouse after the Y2K panic and dot-com fiascos of 2001. The most striking thing about SOA, beyond the fact that Web services standards were adopted simultaneously by many large IT vendors, is the fact that it actually works. SOA is very much the technological trend of the moment, and it is everywhere. You see SOA as a prominent feature set in products from Microsoft, Oracle, IBM, SAP, and so on. Virtually every major technology company has announced an SOA strategy or even shifted its entire market focus to being service-oriented. A sure sign that SOA had reached prime time was when Accenture announced that it was going to spend $450 million on an SOA consulting initiative for its global clients.

SOA removes much, if not all, of the proprietary middleware and network compatibility blockages that inhibit rapid changes in application integration. As a result, it can loosen the coupling between applications. Given how important agility is, tight coupling is rightly held out as the enemy of agility. Loose coupling is the enabler of agility, and SOA delivers loose coupling. Changes become simpler, faster, and cheaper. As integration agility becomes reality, so does EDA and its increased awareness. Therefore, SOA delivers the agility required for an EDA. However, achieving this goal of EDA through loose coupling without destroying a range of security, governance, and performance standards requires a great deal of planning and work. And, as we will see, the path from where we are now, to SOA and then EDA, is not always clear.

This is not a cookbook, but it can put you on track for finding the right use for EDA in your organization and getting it started. At the very least, our intent is to familiarize you with this exciting new technological paradigm—and you will need this familiarity if you are a professional working in technology today. EDA and SOA are appearing in a myriad of commercial IT offerings and technological media articles. You need to know about EDA.

How you approach EDA is up to you, and if your career has been like ours, you might agree that rushing is seldom a good idea, especially when a new technology is involved. Our goal is to inform and stimulate your thinking on the subject. Whatever the ETA is for your EDA, only you will know the right way to proceed. Our wish is to give you the knowledge and insight you need to make it a success.

1. Michelson, Brenda. “Event Driven Architecture Overview.” Paper published by Patricia Seybold Group (2/2/2006).