Monitors are language features for concurrent programming. A monitor encapsulates data, which can only be observed and modified by monitor access procedures. Only a single access procedure may be active at a time. An access procedure thus has mutually exclusive access to the data variables encapsulated in the monitor. Monitors should sound familiar since we have already seen the monitor concept in the last chapter, though explained using different terminology. An object satisfies the data access requirement of a monitor since it encapsulates data which, if declared private, can be accessed only by the object's methods. These methods can be synchronized to provide mutually exclusive access. Thus, a monitor is simply represented in Java as a class that has synchronized methods.

Monitors support condition synchronization in addition to ensuring that access to the data they encapsulate is mutually exclusive. Condition synchronization, as the term suggests, permits a monitor to block threads until a particular condition holds, such as a count becoming non-zero, a buffer becoming empty or new input becoming available. This chapter describes how condition synchronization in monitors is modeled and how it is implemented in Java.

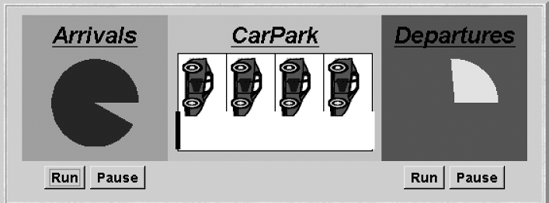

We illustrate condition synchronization using a simple example. A controller is required for a car park, which only permits cars to enter when the car park is not full and, for consistency, does not permit cars to leave when there are no cars in the car park. A snapshot of our Java simulation of the car park is given in Figure 5.1. It depicts the situation in which the car park is full, the barrier is down and no further cars are permitted to enter. Car arrivals and departures are simulated by separate threads. In Figure 5.1, the departures thread has been stopped to allow the car park to become full. The arrivals thread is therefore blocked from further progress.

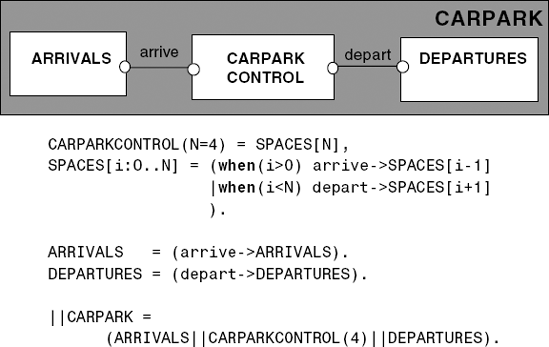

The first step in modeling a system is to decide which events or actions are of interest. In the car park system, we can abstract away details such as display panel rotation and the starting and stopping of the display threads. We thus omit the actions concerned with running, rotation, pausing and terminating threads that we modeled in section 3.2.1. Instead, we concern ourselves with only two actions: car arrival at the car park and car departure from the car park. These actions are named arrive and depart respectively. The next step is to identify the processes. These are the arrivals process, the departures process and the process that controls access to the car park. Both the arrivals process and the departures process are trivial. They attempt to generate, respectively, a sequence of arrival actions and a sequence of departure actions. The car park control must only permit arrival actions to occur when there is space in the car park and departures to occur when there are cars in the car park. This expresses the synchronization conditions that must be satisfied by the other processes when interacting with the car park.

The car park model is given in Figure 5.2. The CARPARKCONTROL process uses the indexed state SPACES to record the number of available spaces in the car park. The control requirements described above have been modeled using the FSP guarded action construct (see section 2.1.5). Thus in state SPACES[0], arrive actions are not accepted and in state SPACES[N], depart actions are not accepted.

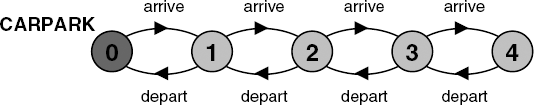

The behavior of the car park system is depicted as an LTS in Figure 5.3. The LTS has been generated directly from the model of Figure 5.2. It clearly shows that a maximum of four arrive actions can be accepted before a depart action must occur.
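The LTS of Figure 5.3 can also be enumerated by hand. The sketch below is a hypothetical helper (CarParkLts is not part of the book's code) that walks the states SPACES[0..N] and applies the two guards of CARPARKCONTROL; it only illustrates what the guarded actions permit, not how LTSA works internally.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: the states SPACES[i] of CARPARKCONTROL, where i is the number of free spaces.
class CarParkLts {
    // Every state 0..n is reachable from SPACES[n]; list each permitted transition.
    static List<String> transitions(int n) {
        List<String> result = new ArrayList<>();
        for (int i = n; i >= 0; i--) {
            if (i > 0) result.add("SPACES[" + i + "] --arrive--> SPACES[" + (i - 1) + "]"); // when(i>0) arrive
            if (i < n) result.add("SPACES[" + i + "] --depart--> SPACES[" + (i + 1) + "]"); // when(i<N) depart
        }
        return result;
    }

    // Follow only arrive transitions from the initial state until the guard blocks them.
    static int maxConsecutiveArrivals(int n) {
        int spaces = n, count = 0;
        while (spaces > 0) {
            spaces--;
            count++;
        }
        return count;
    }

    public static void main(String[] args) {
        transitions(4).forEach(System.out::println);
        System.out.println("arrivals before a depart is forced: " + maxConsecutiveArrivals(4));
    }
}
```

For N = 4 this lists four arrive and four depart transitions, matching the observation that at most four arrivals can occur before a departure.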

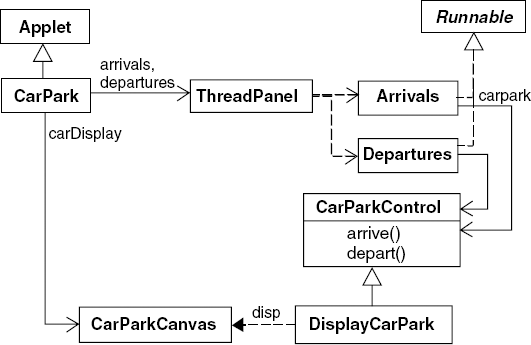

Our models of concurrent systems represent all the entities in a system as processes. In implementing the behavior of a model as a Java program, we must decide which entities are active and which are passive. By active, we mean an entity that initiates actions; this is implemented as a thread. By passive, we mean an entity that responds to actions; this is implemented as a monitor. As we will see in subsequent examples, the decision as to which processes in a model become threads in the implementation and which become monitors is not always clear-cut. However, in the car park example, the decision is clear. The processes ARRIVALS and DEPARTURES, which initiate arrive and depart actions, should be implemented as threads. The CARPARKCONTROL process, which responds to arrive and depart actions, should be a monitor. The class structure of the car park program is depicted in Figure 5.4.

We have omitted the DisplayThread and GraphicCanvas threads managed by ThreadPanel to simplify Figure 5.4. These are organized in exactly the same way as depicted in the class diagram for ThreadDemo in Chapter 3. The classes that are relevant to the concurrent execution of the program are the two Runnable classes, Arrivals and Departures, and the CarParkControl class, which controls arrivals and departures. Instances of these classes are created by the CarPark applet start() method:

```java
public void start() {
    CarParkControl c = new DisplayCarPark(carDisplay, Places);
    arrivals.start(new Arrivals(c));
    departures.start(new Departures(c));
}
```

The variables arrivals and departures are instances of the ThreadPanel class and carDisplay is an instance of CarParkCanvas, as shown in the class diagram.

The code for the Arrivals and Departures classes is listed in Program 5.1. These classes use a ThreadPanel.rotate() method which takes as its parameter the number of degrees the rotating display segment is moved. The CarParkControl class must block the activation of arrive() by the arrivals thread if the car park is full and block the activation of depart() by the departures thread if the car park is empty. How do we implement this in Java?

Program 5.1. Arrivals and Departures classes.

```java
class Arrivals implements Runnable {
    CarParkControl carpark;

    Arrivals(CarParkControl c) { carpark = c; }

    public void run() {
        try {
            while (true) {
                ThreadPanel.rotate(330);
                carpark.arrive();
                ThreadPanel.rotate(30);
            }
        } catch (InterruptedException e) {}
    }
}

class Departures implements Runnable {
    CarParkControl carpark;

    Departures(CarParkControl c) { carpark = c; }

    public void run() {
        try {
            while (true) {
                ThreadPanel.rotate(180);
                carpark.depart();
                ThreadPanel.rotate(180);
            }
        } catch (InterruptedException e) {}
    }
}
```

Java provides a thread wait set per monitor; actually, per object, since any object may have a monitor synchronization lock associated with it. The following methods are provided by class Object from which all other classes are derived.

Note

public final void notify()
Wakes up a single thread that is waiting on this object's wait set.

public final void notifyAll()
Wakes up all threads that are waiting on this object's wait set.

public final void wait() throws InterruptedException
Waits to be notified by another thread. The waiting thread releases the synchronization lock associated with the monitor. When notified, the thread must wait to reacquire the monitor before resuming execution.

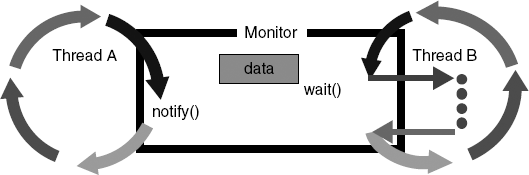

The operations fail, throwing an IllegalMonitorStateException, if called by a thread that does not currently "own" the monitor (i.e. one that has not previously acquired the synchronization lock by executing a synchronized method or statement). We say that a thread enters a monitor when it acquires the mutual exclusion lock associated with the monitor and exits the monitor when it releases the lock. From the above definitions, it can be seen that a thread calling wait() exits the monitor. This allows other threads to enter the monitor and, when the appropriate condition is satisfied, to call notify() or notifyAll() to awaken waiting threads. The operation of wait() and notify() is depicted in Figure 5.5.
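The following sketch, which relies only on java.lang, illustrates both points: calling wait() without owning the monitor fails, and a thread blocked in wait() no longer holds the lock, so another thread can enter the monitor and notify it. MonitorOwnershipDemo and its methods are hypothetical names used only for illustration.

```java
// Hypothetical sketch: monitor ownership rules for wait()/notify().
class MonitorOwnershipDemo {
    private final Object lock = new Object();
    private boolean signalled = false;  // the condition, guarded by lock

    // wait() called by a thread that does not own o's monitor throws IllegalMonitorStateException.
    static boolean waitWithoutLockFails() {
        Object o = new Object();
        try {
            o.wait();
            return false;
        } catch (IllegalMonitorStateException e) {
            return true;
        } catch (InterruptedException e) {
            return false;
        }
    }

    // The waiter enters the monitor and calls wait(), thereby exiting it; the main thread
    // can then enter the same monitor, set the condition and notify the waiter.
    static boolean waitReleasesLock() {
        MonitorOwnershipDemo m = new MonitorOwnershipDemo();
        Thread waiter = new Thread(() -> {
            synchronized (m.lock) {
                try {
                    while (!m.signalled) m.lock.wait();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            }
        });
        waiter.start();
        // WAITING means the waiter is in the wait set and has released m.lock.
        while (waiter.getState() != Thread.State.WAITING) Thread.onSpinWait();
        synchronized (m.lock) {  // succeeds only because wait() released the lock
            m.signalled = true;
            m.lock.notify();
        }
        try {
            waiter.join(1000);
        } catch (InterruptedException e) {
            return false;
        }
        return !waiter.isAlive();
    }

    public static void main(String[] args) {
        System.out.println("wait without lock fails: " + waitWithoutLockFails());
        System.out.println("wait releases lock: " + waitReleasesLock());
    }
}
```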

The basic format for modeling a guarded action for some condition cond and action act using FSP is shown below:

FSP:

```
when cond act -> NEWSTAT
```

The corresponding format for implementing the guarded action for condition cond and action act using Java is as follows:

```java
public synchronized void act() throws InterruptedException {
    while (!cond) wait();
    // modify monitor data
    notifyAll();
}
```

The while loop is necessary to ensure that cond is indeed satisfied when a thread re-enters the monitor. Although the thread invoking wait() may have been notified that cond is satisfied, thereby releasing it from the monitor wait set, cond may be invalidated by another thread that runs between the time that the waiting thread is awakened and the time it re-enters the monitor (by acquiring the lock).
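To see why the guard must be re-tested, consider the hypothetical GuardDemo monitor below, which implements when(value > 0) take either with the recommended while loop or, incorrectly, with an if statement. Two takers wait on an empty monitor and a single give() wakes both via notifyAll(). This is a sketch for experimentation, not code from the book.

```java
// Hypothetical sketch: one guarded action written two ways.
// The "if" variant is deliberately wrong and exists only for comparison.
class GuardDemo {
    private int value = 0;
    private final boolean useWhile;

    GuardDemo(boolean useWhile) { this.useWhile = useWhile; }

    synchronized void take() throws InterruptedException {
        if (useWhile) {
            while (!(value > 0)) wait();  // re-test the guard after every wake-up
        } else {
            if (!(value > 0)) wait();     // BUG: assumes the guard still holds on wake-up
        }
        --value;
        notifyAll();
    }

    synchronized void give() {
        ++value;
        notifyAll();                      // wakes every waiting taker
    }

    synchronized int value() { return value; }

    private static void awaitWaiting(Thread t) {
        while (t.getState() != Thread.State.WAITING && t.getState() != Thread.State.TERMINATED) {
            Thread.onSpinWait();
        }
    }

    // Two takers block on an empty monitor, then one give() wakes both.
    // Returns the final value after giving each taker up to 500 ms to finish.
    static int race(boolean useWhile) {
        GuardDemo m = new GuardDemo(useWhile);
        Runnable taker = () -> {
            try {
                m.take();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        };
        Thread t1 = new Thread(taker);
        Thread t2 = new Thread(taker);
        t1.setDaemon(true);  // a taker left waiting must not keep the JVM alive
        t2.setDaemon(true);
        t1.start();
        t2.start();
        awaitWaiting(t1);
        awaitWaiting(t2);
        m.give();
        try {
            t1.join(500);
            t2.join(500);
        } catch (InterruptedException e) {
            throw new RuntimeException(e);
        }
        return m.value();
    }

    public static void main(String[] args) {
        System.out.println("while: " + race(true) + ", if: " + race(false));
    }
}
```

With the while loop, one taker proceeds and the other returns to the wait set, so value ends at 0; with the if statement, both proceed and value is driven to -1, violating the guard.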

If an action modifies the data of the monitor, it can call notifyAll() to awaken all other threads that may be waiting for a particular condition to hold with respect to this data. If it is not certain that only a single thread needs to be awakened, it is safer to call notifyAll() than notify() to make sure that threads are not kept waiting unnecessarily.

Returning to the car park example, the implementation of the CarParkControl monitor is given in Program 5.2. Since either the ARRIVALS thread is blocked waiting for space or the DEPARTURES thread is blocked waiting for cars, and these conditions are mutually exclusive, only a single thread may be waiting on the monitor queue at any one time. Consequently, we can use notify() rather than notifyAll(). Note that we have made the spaces and capacity variables protected rather than private so that they can be accessed by the display class that is derived from CarParkControl.

The general rules for guiding the translation of the process model into a Java monitor are as follows:

Note

Each guarded action in the model of a monitor is implemented as a synchronized method which uses a while loop and wait() to implement the guard. The while loop condition is the negation of the model guard condition.

and

Note

Changes in the state of the monitor are signaled to waiting threads using notify() or notifyAll().

Program 5.2. CarParkControl monitor.

```java
class CarParkControl {
    protected int spaces;
    protected int capacity;

    CarParkControl(int n) { capacity = spaces = n; }

    synchronized void arrive() throws InterruptedException {
        while (spaces == 0) wait();
        --spaces;
        notify();
    }

    synchronized void depart() throws InterruptedException {
        while (spaces == capacity) wait();
        ++spaces;
        notify();
    }
}
```

Thus in the car park model:

FSP: when(i>0) arrive->SPACES[i-1]

becomes

Java: while (spaces==0) wait(); --spaces;

and

FSP: when(i<N) depart->SPACES[i+1]

becomes

Java: while (spaces==N) wait(); ++spaces;

where N corresponds to the capacity variable of Program 5.2. The state of the car park monitor is the integer variable spaces. Each method modifies spaces and, consequently, the change is signaled by notify() at the end of each method.
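As a sketch, Program 5.2 can be exercised without the applet by driving it from two plain threads. The checkInvariant() method, the invariantBroken flag and simulate() are hypothetical additions for testing; they record whether spaces ever leaves the range 0..capacity. notify() is kept because, as argued above, at most one thread waits at any time.

```java
// Program 5.2 plus hypothetical test scaffolding (checkInvariant, invariantBroken, simulate).
class CarParkControl {
    protected int spaces;
    protected int capacity;
    private boolean invariantBroken = false;  // set if 0 <= spaces <= capacity ever fails

    CarParkControl(int n) { capacity = spaces = n; }

    synchronized void arrive() throws InterruptedException {
        while (spaces == 0) wait();
        --spaces;
        checkInvariant();
        notify();
    }

    synchronized void depart() throws InterruptedException {
        while (spaces == capacity) wait();
        ++spaces;
        checkInvariant();
        notify();
    }

    private void checkInvariant() {
        if (spaces < 0 || spaces > capacity) invariantBroken = true;
    }

    // One arrivals thread and one departures thread each perform `cars` actions.
    // Returns true if the invariant always held and the car park ends empty.
    static boolean simulate(int capacity, int cars) {
        CarParkControl carpark = new CarParkControl(capacity);
        Thread arrivals = new Thread(() -> {
            try {
                for (int i = 0; i < cars; i++) carpark.arrive();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        Thread departures = new Thread(() -> {
            try {
                for (int i = 0; i < cars; i++) carpark.depart();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        arrivals.start();
        departures.start();
        try {
            arrivals.join();
            departures.join();
        } catch (InterruptedException e) {
            throw new RuntimeException(e);
        }
        synchronized (carpark) {
            return !carpark.invariantBroken && carpark.spaces == capacity;
        }
    }

    public static void main(String[] args) {
        System.out.println("invariant held: " + simulate(4, 10_000));
    }
}
```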

Semaphores, introduced by Dijkstra (1968a), were one of the first mechanisms proposed to deal with inter-process synchronization problems. A semaphore s is an integer variable that can take only non-negative values. Once s has been given an initial value, the only operations permitted on s are up(s) and down(s) defined as follows:

down(s): when s> 0 do decrement s;

up(s): increment s

In Dijkstra's original proposal, the down operation was called P (for the first letter in the Dutch word passeren, which means "to pass"). The up operation was called V (for the first letter of the Dutch word vrijgeven, which means "to release"). Semaphores are implemented in the kernels of many operating systems and real-time executives. The above definition of semaphores seems to imply some sort of busy wait by down(s) until s becomes non-zero. In fact, in the kernel of an operating system, semaphores are usually implemented by a blocking wait as shown below:

```
down(s): if s > 0 then
             decrement s
         else
             block execution of the calling process

up(s):   if processes blocked on s then
             awaken one of them
         else
             increment s
```

Implementations of semaphores usually manage the processes blocked on a semaphore by down as a first-in-first-out (FIFO) queue. The up operation awakens the process at the head of the queue. However, FIFO queuing should not be relied on when reasoning about the correctness of semaphore programs.
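For comparison, the Java standard library provides java.util.concurrent.Semaphore, whose acquire() corresponds to down and release() to up. Its constructor Semaphore(int permits, boolean fair) lets the caller request FIFO granting to blocked acquire() calls explicitly, which fits the advice above: fairness is a property one selects, not one to assume. The sketch below uses tryAcquire(), the non-blocking variant, so that a refused down can be shown without blocking the calling thread; LibrarySemaphoreDemo is a hypothetical name.

```java
import java.util.concurrent.Semaphore;

// Hypothetical sketch: down/up expressed with the standard library semaphore.
class LibrarySemaphoreDemo {
    // Records the semaphore value around a successful down, a refused down and an up.
    static String trace() {
        Semaphore s = new Semaphore(1, true);  // initial value 1; fair = FIFO order for blocked acquire() calls
        StringBuilder sb = new StringBuilder();
        sb.append("s=").append(s.availablePermits());
        sb.append(s.tryAcquire() ? " down" : " refused");  // s > 0, so the permit is taken
        sb.append(" s=").append(s.availablePermits());
        sb.append(s.tryAcquire() ? " down" : " refused");  // s == 0: acquire() would block here
        s.release();                                        // up(s)
        sb.append(" up s=").append(s.availablePermits());
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.println(trace());  // s=1 down s=0 refused up s=1
    }
}
```

Like the Semaphore class developed later in this chapter, the library class does not stop release() from raising the value above its initial count.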

In the following, we describe how semaphores are modeled and how they can be implemented using Java monitors. However, it should be realized that semaphores are a low-level mechanism sometimes used in implementing the higher-level monitor construct, rather than vice versa as presented here for pedagogic reasons.

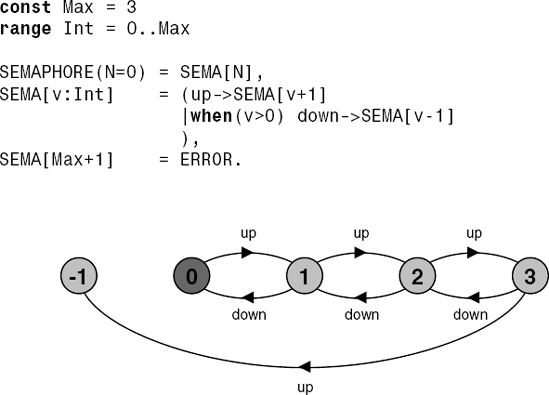

The models of concurrent systems that we can describe in FSP are finite, to ensure they can be analyzed. Consequently, we can only model semaphores that take a finite range of values. If the range is exceeded then this is regarded as an error in the model as described below:

```
const Max = 3
range Int = 0..Max

SEMAPHORE(N=0) = SEMA[N],
SEMA[v:Int]    = (up->SEMA[v+1]
                 |when(v>0) down->SEMA[v-1]
                 ),
SEMA[Max+1]    = ERROR.
```

The behavior of the semaphore is depicted in Figure 5.6, with the ERROR state indicated by state (−1). In fact, since the FSP compiler automatically maps undefined states to the ERROR state, we can omit the last line of the description and model a semaphore more succinctly as:

```
SEMAPHORE(N=0) = SEMA[N],
SEMA[v:Int]    = (up->SEMA[v+1]
                 |when(v>0) down->SEMA[v-1]
                 ).
```

The model follows directly from the first definition of a semaphore in the previous section. The action down is only accepted when the value v of the SEMAPHORE is greater than zero. The action up is not guarded. SEMAPHORE can take values in the range 0..Max and has an initial value N. If an up action causes Max to be exceeded then SEMAPHORE moves to the ERROR state. When SEMAPHORE is used in a larger model, we must ensure that this ERROR state does not occur. As an example, we model the use of semaphores to provide mutual exclusion.
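The ERROR state has no direct counterpart in the Semaphore class presented later in this chapter, which does not check for overflow. If one wanted a Java semaphore that mirrors the bounded FSP model during testing, a sketch might look like the following; BoundedSemaphore and its max parameter are assumptions introduced here, with IllegalStateException standing in for the ERROR state.

```java
// Hypothetical sketch: a semaphore restricted to 0..max, mirroring SEMA[Max+1] = ERROR.
class BoundedSemaphore {
    private int value;
    private final int max;

    BoundedSemaphore(int initial, int max) {
        if (initial < 0 || initial > max) throw new IllegalArgumentException("initial value out of range");
        this.value = initial;
        this.max = max;
    }

    // up is unguarded in the model; exceeding max corresponds to the ERROR state.
    synchronized void up() {
        if (value == max) throw new IllegalStateException("semaphore exceeded Max (ERROR state)");
        ++value;
        notify();
    }

    // when(v>0) down
    synchronized void down() throws InterruptedException {
        while (value == 0) wait();
        --value;
    }

    synchronized int value() { return value; }
}
```

A test harness could treat any IllegalStateException as the Java analogue of reaching ERROR in the model.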

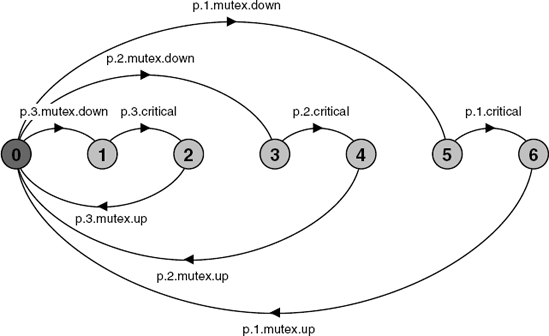

Figure 5.7 depicts a model in which three processes p[1..3] use a shared semaphore mutex to ensure mutually exclusive access to some resource. Each process performs the action mutex.down to get exclusive access and mutex.up to release it. Access to the resource is modeled as the action critical (for the critical section of code used to access the shared resource). The model for each of the processes is as shown below:

LOOP = (mutex.down->critical->mutex.up->LOOP).

The composite process SEMADEMO, which combines processes and semaphore and which is depicted graphically in Figure 5.7, is defined as:

```
||SEMADEMO = (p[1..3]:LOOP
             ||{p[1..3]}::mutex:SEMAPHORE(1)).
```

Note that for mutual exclusion, the semaphore must be given the initial value one. The first process that tries to execute its critical action performs a mutex.down action, making the value of mutex zero. No further process can perform mutex.down until the original process releases exclusion by mutex.up. This can be seen clearly from the SEMADEMO labeled transition system in Figure 5.8.

It should also be clear from Figure 5.8 that no ERROR state is reachable in SEMADEMO since it does not appear in the LTS. In fact, the value of the semaphore does not exceed one (from the LTS, we can see that a trace of two consecutive mutex.up actions without an intermediate mutex.down cannot occur). For mutual exclusion, it is sufficient to use a binary semaphore which takes the values 0 or 1. We have already seen in the previous chapter that we can use the analysis tool to check mechanically for errors. We will see in subsequent chapters that we do not have to rely on visual inspection of the LTS to assert properties concerning sequences of actions. Of course, in this example, we can quickly check if mutex ever goes above 1 by setting Max to 1 and searching for errors.
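A Java analogue of SEMADEMO can check the same property by measurement rather than by inspecting an LTS. The sketch below copies the Semaphore class of Program 5.3 and runs several threads through the down/critical/up loop, recording the largest number of threads ever seen inside the critical section. SemaDemo and maxInCritical are hypothetical names; unlike an LTSA check, a run like this can only fail to find a violation, not prove its absence.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Semaphore as in Program 5.3.
class Semaphore {
    private int value;

    public Semaphore(int initial) { value = initial; }

    synchronized public void up() {
        ++value;
        notify();
    }

    synchronized public void down() throws InterruptedException {
        while (value == 0) wait();
        --value;
    }
}

class SemaDemo {
    // Hypothetical: runs `threads` copies of LOOP = (mutex.down->critical->mutex.up->LOOP)
    // for `rounds` iterations each and returns the peak number of threads in critical.
    static int maxInCritical(int threads, int rounds) {
        Semaphore mutex = new Semaphore(1);          // SEMAPHORE(1)
        AtomicInteger inside = new AtomicInteger();  // threads currently in critical
        AtomicInteger peak = new AtomicInteger();
        Thread[] p = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            p[i] = new Thread(() -> {
                try {
                    for (int r = 0; r < rounds; r++) {
                        mutex.down();                                              // mutex.down
                        peak.accumulateAndGet(inside.incrementAndGet(), Math::max); // enter critical
                        Thread.yield();                                            // invite interleaving
                        inside.decrementAndGet();                                  // leave critical
                        mutex.up();                                                // mutex.up
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            p[i].start();
        }
        for (Thread t : p) {
            try {
                t.join();
            } catch (InterruptedException e) {
                throw new RuntimeException(e);
            }
        }
        return peak.get();
    }

    public static void main(String[] args) {
        System.out.println("peak threads in critical: " + maxInCritical(3, 10_000));
    }
}
```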

Semaphores are passive objects that react to up and down actions; they do not initiate actions. Consequently, we implement a semaphore in Java as a monitor class. The actions up and down become synchronized methods. The guard on the down action in the model is implemented using condition synchronization as we saw in section 5.1. The class that implements semaphores is listed in Program 5.3.

Program 5.3. Semaphore class.

```java
public class Semaphore {
    private int value;

    public Semaphore(int initial) { value = initial; }

    synchronized public void up() {
        ++value;
        notify();
    }

    synchronized public void down() throws InterruptedException {
        while (value == 0) wait();
        --value;
    }
}
```

Even though the down() method in Program 5.3 changes the state of the monitor by decrementing value, we do not use notify() to signal the change in state. This is because threads only wait for the value of the semaphore to be incremented; they do not wait for it to be decremented. The semaphore implementation does not check for overflow on increment. This is usually the case for semaphores implemented by operating systems. It is the responsibility of the programmer to ensure, during design, that overflow cannot occur. We advocate the use of analyzable models to check such properties.

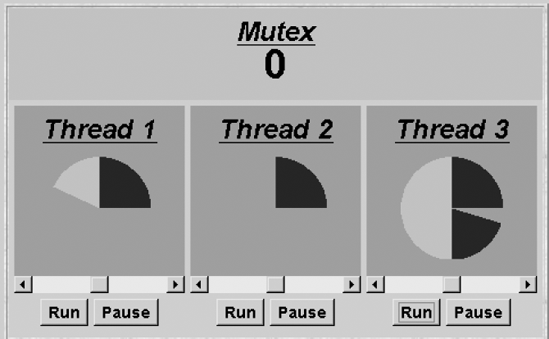

Figure 5.9 depicts the display of the semaphore demonstration program modeled in the previous section. A thread executing in its critical section is indicated by a lighter-colored segment. Each thread display rotates counter-clockwise. In Figure 5.9, Thread 1 is executing in its critical section, Thread 2 is blocked waiting to enter its critical section and Thread 3 has finished its critical section and is executing non-critical actions. The sliders underneath each thread adjust the time a thread spends executing critical, as opposed to non-critical, actions. If the total time spent in critical sections by all three threads is less than a full rotation then it is possible to get all three threads to execute concurrently. In other words, there need be no conflict for access to the critical resource. This is often the case in real systems. Mechanisms for mutual exclusion only take effect when there is conflicting access to a shared resource. In real systems, it is therefore advisable to keep the time spent in critical sections as short as possible so as to reduce the likelihood of conflict.

Program 5.4. Extended version of ThreadPanel class.

```java
public class ThreadPanel extends Panel {
    // construct display with title and rotating arc color c
    public ThreadPanel(String title, Color c) {...}

    // hasSlider == true creates panel with slider
    public ThreadPanel(String title, Color c, boolean hasSlider) {...}

    // rotate display of currently running thread 6 degrees
    // return false when in initial color
    // return true when in second color
    public static boolean rotate() throws InterruptedException {...}

    // rotate display of currently running thread by degrees
    public static void rotate(int degrees) throws InterruptedException {...}

    // create a new thread with target r and start it running
    public void start(Runnable r) {...}

    // stop the thread using Thread.interrupt()
    public void stop() {...}
}
```

The program behind the display of Figure 5.9 uses the same ThreadPanel class as before; however, it uses a different constructor to create the display with multi-colored segments. The interface offered by the class, extended with the methods used in this chapter, is shown in Program 5.4.

The MutexLoop class, which provides the run() method for each thread, is listed in Program 5.5. The critical (mutually exclusive) actions are the rotate() actions which are executed when the segment changes to the lighter color. This is indicated by the rotate() method returning false when the rotating arc is dark-colored and true when light-colored.

The threads and semaphore are created in the usual way by the applet start() method:

```java
public void start() {
    Semaphore mutex = new DisplaySemaphore(semaDisplay, 1);
    thread1.start(new MutexLoop(mutex));
    thread2.start(new MutexLoop(mutex));
    thread3.start(new MutexLoop(mutex));
}
```

where thread1, thread2 and thread3 are ThreadPanel instances and semaDisplay is an instance of NumberCanvas.

Program 5.5. MutexLoop class.

```java
class MutexLoop implements Runnable {
    Semaphore mutex;

    MutexLoop(Semaphore sema) { mutex = sema; }

    public void run() {
        try {
            while (true) {
                while (!ThreadPanel.rotate());  // non-critical actions
                mutex.down();                   // get mutual exclusion
                while (ThreadPanel.rotate());   // critical actions
                mutex.up();                     // release mutual exclusion
            }
        } catch (InterruptedException e) {}
    }
}
```

Buffers are frequently used in concurrent systems to smooth out information transfer rates between the producers of data and the consumers of that data. Consider, for example, a keyboard device driver that is supplying characters typed at a keyboard to an editor program. The editor can consume characters at a much faster rate, on average, than a person can type at a keyboard. However, some characters can take longer than others to process, for example a character that causes the screen to scroll or a keyboard command that invokes a formatting command. When the editor is processing a character that takes a long time to process, it is necessary to buffer characters from the keyboard, otherwise they would be lost. This buffer is sometimes referred to as the type-ahead buffer. It is an example of the sort of buffer that we describe in the following.

In this section we model and program a bounded buffer, which consists of a fixed number of slots. Items are put into the buffer by a producer process and removed by a consumer process. The buffer is organized so that the first item put into it will be the first item out (FIFO).

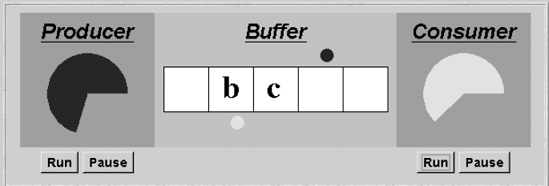

Figure 5.10 depicts the display of our example system in which a producer process communicates characters to a consumer process via a five-slot buffer. The small circle above the buffer indicates the next free slot into which the producer process can place a character. The circle below the buffer indicates the next slot from which the consumer process can take a character. The reader may note the similarity between this example and the initial car park example in section 5.1. In fact, the synchronization requirements are the same. The producer is only allowed to put a character into the buffer when there is a free slot and the consumer process can only get a character when there is at least one in the buffer. These are exactly the requirements for the car park, if we substitute space for slot and car for character. What is different between the two examples is the FIFO discipline enforced by the buffer, in contrast to the car park where cars can occupy any free space and need not leave in arrival order.

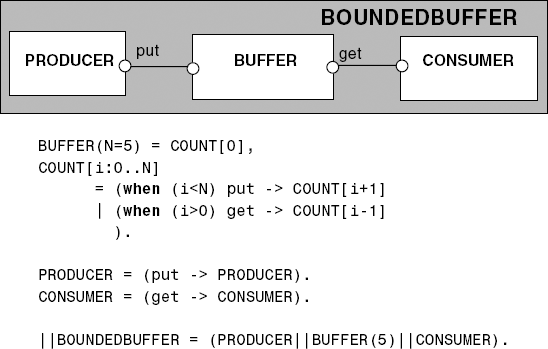

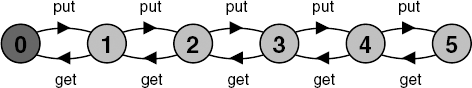

The producer–consumer system with a bounded buffer is an example of a program that handles data items without altering them. In addition, the behavior of the producer, the consumer and the buffer itself are not affected by the value of the items they handle. In other words, they do not test the value of these data items. The behavior is said to be data-independent. If data independence can be established then models can be simplified by omitting the detailed representation of parameters and data structures. This leads to much smaller and more tractable models. The get and put operations in Figure 5.11 are simple actions that do not have parameters. The LTS for the bounded buffer system, depicted in Figure 5.12, should be compared with the car park LTS of Figure 5.3 to see the similarity between the synchronization behavior of the two systems.

The BUFFER of the model becomes a monitor in the Java implementation, with synchronized methods put and get (Program 5.6). We have separated the interface of the buffer from its implementation since we will provide an alternative implementation in the next section.

The buffer has been implemented as a general-purpose class that can buffer any type of Java object. The buffer data structure is a fixed-size array buf, indexed by in, which points to the next free slot, and out, which points to the next slot to be emptied. These indexes are incremented modulo the size of the buffer. The code for the producer and consumer programs is listed in Program 5.7.
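The index arithmetic can be traced without any threads. The hypothetical RingIndexDemo below keeps only the in, out and count bookkeeping of BufferImpl, using a char array, and throws where the real put() and get() would call wait(). With three slots, a fourth item is written back into slot 0 once the first item has been removed.

```java
// Hypothetical single-threaded sketch of BufferImpl's circular indexing.
class RingIndexDemo {
    private final char[] buf;
    private int in = 0, out = 0, count = 0;

    RingIndexDemo(int size) { buf = new char[size]; }

    void put(char c) {
        if (count == buf.length) throw new IllegalStateException("full: the monitor's put() would wait()");
        buf[in] = c;
        ++count;
        in = (in + 1) % buf.length;   // wraps from size-1 back to 0
    }

    char get() {
        if (count == 0) throw new IllegalStateException("empty: the monitor's get() would wait()");
        char c = buf[out];
        --count;
        out = (out + 1) % buf.length;
        return c;
    }

    int in() { return in; }
    int out() { return out; }

    public static void main(String[] args) {
        RingIndexDemo r = new RingIndexDemo(3);
        r.put('a'); r.put('b'); r.put('c');   // in wraps back to 0
        StringBuilder sb = new StringBuilder().append(r.get());
        r.put('d');                           // stored in slot 0
        sb.append(r.get()).append(r.get()).append(r.get());
        System.out.println(sb + " in=" + r.in() + " out=" + r.out());  // abcd in=1 out=1
    }
}
```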

Program 5.6. Buffer interface and BufferImpl class.

```java
public interface Buffer<E> {
    public void put(E o) throws InterruptedException; // put object into buffer
    public E get() throws InterruptedException;        // get object from buffer
}

public class BufferImpl<E> implements Buffer<E> {
    protected E[] buf;
    protected int in = 0;
    protected int out = 0;
    protected int count = 0;
    protected int size;

    @SuppressWarnings("unchecked")
    public BufferImpl(int size) {
        this.size = size;
        buf = (E[]) new Object[size];
    }

    public synchronized void put(E o) throws InterruptedException {
        while (count == size) wait();
        buf[in] = o;
        ++count;
        in = (in + 1) % size;
        notifyAll();
    }

    public synchronized E get() throws InterruptedException {
        while (count == 0) wait();
        E o = buf[out];
        buf[out] = null;
        --count;
        out = (out + 1) % size;
        notifyAll();
        return (o);
    }
}
```

Program 5.7. Producer and Consumer classes.

```java
class Producer implements Runnable {
    Buffer<Character> buf;
    String alphabet = "abcdefghijklmnopqrstuvwxyz";

    Producer(Buffer<Character> b) { buf = b; }

    public void run() {
        try {
            int ai = 0;
            while (true) {
                ThreadPanel.rotate(12);
                buf.put(alphabet.charAt(ai));
                ai = (ai + 1) % alphabet.length();
                ThreadPanel.rotate(348);
            }
        } catch (InterruptedException e) {}
    }
}

class Consumer implements Runnable {
    Buffer<Character> buf;

    Consumer(Buffer<Character> b) { buf = b; }

    public void run() {
        try {
            while (true) {
                ThreadPanel.rotate(180);
                Character c = buf.get();
                ThreadPanel.rotate(180);
            }
        } catch (InterruptedException e) {}
    }
}
```
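Without the ThreadPanel display, the producer and consumer reduce to two loops around put() and get(). The sketch below copies Buffer and BufferImpl from Program 5.6 and adds a hypothetical ProducerConsumerDemo that sends a string through the buffer and returns what the consumer received, which should be the same string in the same order, whatever the relative thread speeds.

```java
interface Buffer<E> {
    void put(E o) throws InterruptedException;  // put object into buffer
    E get() throws InterruptedException;        // get object from buffer
}

// As in Program 5.6.
class BufferImpl<E> implements Buffer<E> {
    protected E[] buf;
    protected int in = 0, out = 0, count = 0, size;

    @SuppressWarnings("unchecked")
    public BufferImpl(int size) {
        this.size = size;
        buf = (E[]) new Object[size];
    }

    public synchronized void put(E o) throws InterruptedException {
        while (count == size) wait();
        buf[in] = o;
        ++count;
        in = (in + 1) % size;
        notifyAll();
    }

    public synchronized E get() throws InterruptedException {
        while (count == 0) wait();
        E o = buf[out];
        buf[out] = null;
        --count;
        out = (out + 1) % size;
        notifyAll();
        return o;
    }
}

// Hypothetical driver: one producer thread and one consumer thread, no display.
class ProducerConsumerDemo {
    static String run(int size, String data) {
        Buffer<Character> buf = new BufferImpl<>(size);
        StringBuilder received = new StringBuilder();  // touched only by the consumer until join()
        Thread producer = new Thread(() -> {
            try {
                for (int i = 0; i < data.length(); i++) buf.put(data.charAt(i));
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        Thread consumer = new Thread(() -> {
            try {
                for (int i = 0; i < data.length(); i++) received.append(buf.get());
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        consumer.start();
        try {
            producer.join();
            consumer.join();
        } catch (InterruptedException e) {
            throw new RuntimeException(e);
        }
        return received.toString();
    }

    public static void main(String[] args) {
        System.out.println(run(5, "abcdefghijklmnopqrstuvwxyz"));
    }
}
```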

Suppose that we did not wish to use condition synchronization directly in the implementation of the buffer monitor class but instead we decided to use two semaphores full and empty to reflect the state of the buffer. The semaphore empty counts the number of spaces and is decremented during a put operation. The put is, of course, blocked if the value of empty is zero. Similarly, full counts the number of items in the buffer and is decremented by a get operation. The get is therefore blocked if the value of full is zero. The modified buffer class is shown in Program 5.8.

Program 5.8. Buffer class using semaphores.

```java
class SemaBuffer<E> implements Buffer<E> {
    protected E[] buf;
    protected int in = 0;
    protected int out = 0;
    protected int count = 0;
    protected int size;

    Semaphore full;  // counts number of items
    Semaphore empty; // counts number of spaces

    @SuppressWarnings("unchecked")
    SemaBuffer(int size) {
        this.size = size;
        buf = (E[]) new Object[size];
        full = new Semaphore(0);
        empty = new Semaphore(size);
    }

    synchronized public void put(E o) throws InterruptedException {
        empty.down();
        buf[in] = o;
        ++count;
        in = (in + 1) % size;
        full.up();
    }

    synchronized public E get() throws InterruptedException {
        full.down();
        E o = buf[out];
        buf[out] = null;
        --count;
        out = (out + 1) % size;
        empty.up();
        return (o);
    }
}
```

The semaphores of Program 5.8 replace the count variable in the original implementation, the conditional waits on the value of count and the notification of changes in its value. An updated model to reflect the changes in the buffer implementation is shown below:

```
const Max = 5
range Int = 0..Max

SEMAPHORE(I=0) = SEMA[I],
SEMA[v:Int]    = (up->SEMA[v+1]
                 |when(v>0) down->SEMA[v-1]
                 ).

BUFFER = (put -> empty.down -> full.up -> BUFFER
         |get -> full.down -> empty.up -> BUFFER
         ).

PRODUCER = (put -> PRODUCER).
CONSUMER = (get -> CONSUMER).

||BOUNDEDBUFFER = (PRODUCER || BUFFER || CONSUMER
                  || empty:SEMAPHORE(5)
                  || full:SEMAPHORE(0)
                  )@{put,get}.
```

A problem occurs when we check this model using the analyzer tool LTSA and find that it reports a potential deadlock together with a trace of actions to that deadlock:

```
Composing
  potential DEADLOCK
States Composed: 28 Transitions: 32 in 60ms
Trace to DEADLOCK:
  get
```

We discuss deadlock in more detail in the next chapter. In essence, however, it means that a system can make no further progress since there are no further actions it can take. The deadlock in the model can be seen in the demonstration version of the program by starting the consumer and letting it block, waiting to get a character from the empty buffer. When the producer is then started, it cannot put a character into the buffer. Why? The reason has to do with the use of two levels of synchronization lock: the first gives mutually exclusive access to the buffer monitor and the second gives mutually exclusive access to the semaphores.

When the consumer calls get, it acquires the Buffer monitor lock and then acquires the monitor lock for the full semaphore by calling full.down() to check whether there is something in the buffer. Since the buffer is initially empty, the call to full.down() blocks the consumer thread (using wait()) and releases the monitor lock for the full semaphore. However, it does not release the monitor lock for Buffer. Consequently, the producer cannot enter the monitor to put a character into the buffer, and so neither producer nor consumer can make progress; hence the deadlock. The situation described above is known as the nested monitor problem. The only way to avoid it in Java is by careful design. In our example, the deadlock can be removed by ensuring that the monitor lock for the buffer is not acquired until after the semaphores are decremented (Program 5.9).
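The nested monitor lockout can be reproduced deterministically. The sketch below, a cut-down one-slot version of Program 5.8 together with the Semaphore class of Program 5.3, starts the consumer first, waits until it is parked inside full.down(), and then checks that the producer is stuck trying to enter put(). NestedMonitorBuffer, awaitState and demonstrateLockout are hypothetical names; the consumer is interrupted at the end only so that both threads can exit.

```java
// Semaphore as in Program 5.3.
class Semaphore {
    private int value;

    public Semaphore(int initial) { value = initial; }

    synchronized public void up() {
        ++value;
        notify();
    }

    synchronized public void down() throws InterruptedException {
        while (value == 0) wait();
        --value;
    }
}

// Hypothetical one-slot buffer with the same lock structure as Program 5.8.
class NestedMonitorBuffer {
    private final Semaphore full = new Semaphore(0);   // counts items
    private final Semaphore empty = new Semaphore(1);  // counts spaces
    private Object slot;

    synchronized void put(Object o) throws InterruptedException {
        empty.down();
        slot = o;
        full.up();
    }

    synchronized Object get() throws InterruptedException {
        full.down();  // waits on full's monitor while still holding this buffer's lock
        Object o = slot;
        slot = null;
        empty.up();
        return o;
    }

    // Polls until t reaches state s, giving up after timeoutMillis.
    static boolean awaitState(Thread t, Thread.State s, long timeoutMillis) {
        long deadline = System.currentTimeMillis() + timeoutMillis;
        while (t.getState() != s) {
            if (System.currentTimeMillis() > deadline) return false;
            Thread.onSpinWait();
        }
        return true;
    }

    // True if the consumer is parked in full.down() and the producer cannot enter put().
    static boolean demonstrateLockout() {
        NestedMonitorBuffer b = new NestedMonitorBuffer();
        Thread consumer = new Thread(() -> {
            try { b.get(); } catch (InterruptedException e) { /* released by interrupt below */ }
        });
        Thread producer = new Thread(() -> {
            try { b.put("x"); } catch (InterruptedException e) { }
        });
        consumer.setDaemon(true);
        producer.setDaemon(true);
        consumer.start();
        boolean consumerWaiting = awaitState(consumer, Thread.State.WAITING, 2000);
        producer.start();
        boolean producerBlocked = awaitState(producer, Thread.State.BLOCKED, 2000);
        consumer.interrupt();  // wait() throws, get() exits and the buffer lock is released
        return consumerWaiting && producerBlocked;
    }

    public static void main(String[] args) {
        System.out.println("nested monitor lockout observed: " + demonstrateLockout());
    }
}
```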

Program 5.9. Fixed bounded buffer using semaphores.

```java
class FixedSemaBuffer<E> implements Buffer<E> {
    protected E[] buf;
    protected int in = 0;
    protected int out = 0;
    protected int count = 0; // only used for display purposes
    protected int size;

    Semaphore full;  // counts number of items
    Semaphore empty; // counts number of spaces

    @SuppressWarnings("unchecked")
    FixedSemaBuffer(int size) {
        this.size = size;
        buf = (E[]) new Object[size];
        full = new Semaphore(0);
        empty = new Semaphore(size);
    }

    public void put(E o) throws InterruptedException {
        empty.down();
        synchronized (this) {
            buf[in] = o;
            ++count;
            in = (in + 1) % size;
        }
        full.up();
    }

    public E get() throws InterruptedException {
        full.down();
        E o;
        synchronized (this) {
            o = buf[out];
            buf[out] = null;
            --count;
            out = (out + 1) % size;
        }
        empty.up();
        return (o);
    }
}
```

As mentioned before, in this book we advocate the use of the model and analysis to aid in the process of "careful design". Those parts of the model which need to be revised to take into account the changes to the buffer, documented in Program 5.9, are shown below:

```
BUFFER = (put -> BUFFER
         |get -> BUFFER
         ).

PRODUCER = (empty.down -> put -> full.up -> PRODUCER).
CONSUMER = (full.down -> get -> empty.up -> CONSUMER).
```

Moving the semaphore actions from the buffer process to the producer and consumer processes reflects the change in the implementation where the semaphore actions are performed outside the monitor (i.e. before acquiring the monitor lock). If this modified model is composed and minimized, it generates an identical LTS to that depicted in Figure 5.12 for the original model. This gives us confidence that our revised semaphore implementation of the bounded buffer is equivalent to the original one which used wait() and notify() directly.
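The lockout scenario can be rerun against the fixed structure. In the sketch below, a trimmed, self-contained copy of Program 5.9 (the display-only count field is dropped and the Semaphore class of Program 5.3 is included), the consumer again starts first and blocks on an empty buffer; because it blocks before entering the buffer's synchronized block, the producer can still deposit an item. consumerFirst() is a hypothetical helper.

```java
// Semaphore as in Program 5.3.
class Semaphore {
    private int value;

    public Semaphore(int initial) { value = initial; }

    synchronized public void up() {
        ++value;
        notify();
    }

    synchronized public void down() throws InterruptedException {
        while (value == 0) wait();
        --value;
    }
}

// Trimmed copy of Program 5.9: semaphores are decremented before the buffer lock is taken.
class FixedSemaBuffer<E> {
    private final E[] buf;
    private int in = 0, out = 0;
    private final Semaphore full;   // counts number of items
    private final Semaphore empty;  // counts number of spaces

    @SuppressWarnings("unchecked")
    FixedSemaBuffer(int size) {
        buf = (E[]) new Object[size];
        full = new Semaphore(0);
        empty = new Semaphore(size);
    }

    void put(E o) throws InterruptedException {
        empty.down();  // may block, but no buffer lock is held yet
        synchronized (this) {
            buf[in] = o;
            in = (in + 1) % buf.length;
        }
        full.up();
    }

    E get() throws InterruptedException {
        full.down();   // may block, but no buffer lock is held yet
        E o;
        synchronized (this) {
            o = buf[out];
            buf[out] = null;
            out = (out + 1) % buf.length;
        }
        empty.up();
        return o;
    }

    // Consumer starts first and blocks on the empty buffer; the producer must still get in.
    static String consumerFirst() {
        FixedSemaBuffer<String> b = new FixedSemaBuffer<>(5);
        String[] received = new String[1];
        Thread consumer = new Thread(() -> {
            try { received[0] = b.get(); } catch (InterruptedException e) { }
        });
        consumer.setDaemon(true);
        consumer.start();
        while (consumer.getState() != Thread.State.WAITING) Thread.onSpinWait();
        try {
            b.put("x");           // would block forever with the structure of Program 5.8
            consumer.join(2000);
        } catch (InterruptedException e) {
            throw new RuntimeException(e);
        }
        return received[0];
    }

    public static void main(String[] args) {
        System.out.println("consumer received: " + consumerFirst());
    }
}
```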

An invariant for a monitor is an assertion concerning the variables it encapsulates. This assertion must hold whenever there is no thread executing inside the monitor. Consequently, the invariant must be true at any point that a thread releases the monitor lock – when a thread returns from a synchronized method call and when a thread is blocked by a wait(). A formal proof of the correctness of a monitor can be achieved by demonstrating that the constructor for a monitor establishes the invariant and that the invariant holds after the execution of each access method and just before a wait() is executed. Such an approach requires a programming logic, a formal logical system that facilitates making precise statements about program execution. Greg Andrews (1991) uses this approach in his book. Similarly, Fred Schneider (1997) discusses formal derivation and reasoning about concurrent programs in his book.

Instead, for the reasons outlined at length in Chapter 1, we have chosen to use a model-based approach amenable to mechanical proof. The disadvantage is that our mechanical proofs only apply to specific cases or models while the manual proof-theoretic approach used by Andrews permits correctness proofs for the general case. For example, a proof method could establish monitor correctness for all sizes of a bounded buffer rather than just a specific size. However, it is usually the case that if a model for a specific case is shown to be correct, the general case can be inferred by induction.

Although we do not use invariants in formal correctness proofs, they are useful program documentation that aids informal reasoning about correctness. The invariants for the monitor programs developed in this chapter are:

CarParkControl Invariant: $0 \le \mathit{spaces} \le N$

The invariant for the car park controller simply states that the number of spaces available must always be greater than or equal to zero and less than or equal to the maximum size of the car park (N).
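Invariants can also be checked at run time while a program is being developed. The sketch below assumes a CarParkControl whose spaces field starts at the capacity N, with arrive and depart guarded as in the car park model. It checks 0 ≤ spaces ≤ N at each point where the lock is released: at the end of the constructor, just before each wait(), and just before each return. The checkInvariant helper and the spaces() accessor are hypothetical additions. The sketch throws an exception rather than using Java's assert, so the checks run without the -ea flag.

```java
// CarParkControl monitor with its invariant 0 <= spaces <= N checked
// wherever the monitor lock is released.
class CarParkControl {
    private final int capacity;   // N
    private int spaces;           // number of free spaces

    CarParkControl(int capacity) {
        if (capacity < 0) throw new IllegalArgumentException("capacity < 0");
        this.capacity = capacity;
        this.spaces = capacity;
        checkInvariant();                  // constructor establishes the invariant
    }

    // Hypothetical helper: throws if 0 <= spaces <= N does not hold.
    private void checkInvariant() {
        if (spaces < 0 || spaces > capacity)
            throw new IllegalStateException("invariant violated: spaces=" + spaces);
    }

    synchronized void arrive() throws InterruptedException {
        while (spaces == 0) {
            checkInvariant();              // wait() is about to release the lock
            wait();
        }
        --spaces;
        notifyAll();
        checkInvariant();                  // returning releases the lock
    }

    synchronized void depart() throws InterruptedException {
        while (spaces == capacity) {
            checkInvariant();
            wait();
        }
        ++spaces;
        notifyAll();
        checkInvariant();
    }

    synchronized int spaces() { return spaces; }

    public static void main(String[] args) throws InterruptedException {
        CarParkControl c = new CarParkControl(2);
        c.arrive();
        c.arrive();                        // car park now full
        c.depart();
        System.out.println(c.spaces());    // prints 1
    }
}
```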

Semaphore Invariant: $0 \le \mathit{value}$

The semaphore invariant simply asserts that the value of a semaphore must always be a non-negative value.

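Buffer Invariant: $0 \le \mathit{count} \le \mathit{size}$ and $0 \le \mathit{in} < \mathit{size}$ and $0 \le \mathit{out} < \mathit{size}$ and $\mathit{in} = (\mathit{out} + \mathit{count}) \bmod \mathit{size}$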

The bounded buffer invariant asserts that the number of items in the buffer must lie in the range zero to size and that the indexes in and out must lie in the range zero to size −1. It states that the in index must always be count items "ahead" of the out index where ahead means addition modulo size.
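To make the "ahead modulo size" condition concrete, the sketch below evaluates the buffer invariant for a few snapshots of (count, in, out) with size 5. The class and method names are hypothetical.

```java
// Evaluates the bounded-buffer invariant for a snapshot of its variables:
//   0 <= count <= size, 0 <= in < size, 0 <= out < size,
//   in == (out + count) mod size
class BufferInvariant {
    static boolean holds(int count, int in, int out, int size) {
        return 0 <= count && count <= size
            && 0 <= in && in < size
            && 0 <= out && out < size
            && in == (out + count) % size;
    }

    public static void main(String[] args) {
        int size = 5;
        System.out.println(holds(0, 2, 2, size)); // empty buffer: true
        System.out.println(holds(3, 1, 3, size)); // wrapped: (3 + 3) % 5 == 1, true
        System.out.println(holds(5, 2, 2, size)); // full buffer: (2 + 5) % 5 == 2, true
        System.out.println(holds(2, 4, 3, size)); // (3 + 2) % 5 == 0, not 4: false
    }
}
```

Note that in == out for both the empty and the full buffer. Only count distinguishes the two, which is why the invariant relates all three variables.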

Invariants are also used to reason about the correctness of classes in sequential object-oriented programs. The invariant is required to hold after each method execution. The difference in concurrent programs is the additional responsibility to establish that the invariant holds at any point where the object's monitor lock is released. These additional points are where a wait() can be executed.

In this chapter, we introduced condition synchronization, which, in combination with the mutual exclusion provided by synchronized methods, supports the concept of a monitor in Java. Condition synchronization is implemented using the wait(), notify() and notifyAll() primitives, which operate on a wait queue that can be associated with any Java object. Operation wait() suspends the calling thread on the wait queue, notify() unblocks one of the threads on the wait queue and notifyAll() unblocks all the threads on the wait queue. When a thread suspends itself by calling wait(), the monitor mutual exclusion lock is released. Calling any of these primitives when the invoking thread does not currently hold the monitor lock causes an IllegalMonitorStateException.
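Two of these properties can be observed directly. The first is that calling notify() without holding the lock throws IllegalMonitorStateException. The second is that wait() releases the lock, so another thread can enter a synchronized block on the same object and notify the waiter. This is a minimal sketch: the class name, the lock object and the ready flag are hypothetical.

```java
// Demonstrates that notify() requires the monitor lock and that wait()
// releases it while the caller is suspended.
class WaitNotifyDemo {
    private final Object lock = new Object();
    private boolean ready = false;

    static boolean notifyWithoutLockThrows() {
        Object o = new Object();
        try {
            o.notify();                    // caller does not hold o's lock
            return false;
        } catch (IllegalMonitorStateException e) {
            return true;
        }
    }

    boolean waitReleasesLock() throws InterruptedException {
        Thread signaller = new Thread(() -> {
            synchronized (lock) {          // can only enter once the waiter has released the lock
                ready = true;
                lock.notifyAll();
            }
        });
        synchronized (lock) {
            signaller.start();             // signaller blocks: we still hold the lock
            while (!ready) lock.wait();    // releases the lock while suspended
        }
        signaller.join();
        return ready;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(notifyWithoutLockThrows());               // prints true
        System.out.println(new WaitNotifyDemo().waitReleasesLock()); // prints true
    }
}
```

If wait() did not release the lock, waitReleasesLock() would never return, because the signaller could never enter its synchronized block.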

Model processes that react to actions rather than instigate them are usually translated into monitors in the program that implements the model. Each guarded action in the model of a monitor is implemented as a synchronized method which uses a while loop and wait() to implement the guard. The while loop condition is the negation of the model guard condition. Changes in the state of the monitor are signaled to waiting threads using notify() or notifyAll().
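As a sketch of this translation, take a counting model of a bounded buffer. The FSP in the leading comment is written here for illustration, and the class name BoundedBuffer is hypothetical. Each guarded action becomes a synchronized method, and each guard becomes a while loop over its negation. The sketch uses notifyAll() because putters and getters share the object's single wait queue.

```java
// FSP model being translated (written here for illustration):
//   BUFFER(N=5) = COUNT[0],
//   COUNT[i:0..N] = (when (i<N) put->COUNT[i+1]
//                   |when (i>0) get->COUNT[i-1]).
class BoundedBuffer<E> {
    private final Object[] buf;
    private int in = 0, out = 0, count = 0;

    BoundedBuffer(int size) { buf = new Object[size]; }

    synchronized void put(E item) throws InterruptedException {
        while (!(count < buf.length)) wait();  // negation of guard: when (i<N) put
        buf[in] = item;
        in = (in + 1) % buf.length;
        ++count;
        notifyAll();                           // state changed: wake waiting getters
    }

    @SuppressWarnings("unchecked")
    synchronized E get() throws InterruptedException {
        while (!(count > 0)) wait();           // negation of guard: when (i>0) get
        E item = (E) buf[out];
        buf[out] = null;
        out = (out + 1) % buf.length;
        --count;
        notifyAll();                           // state changed: wake waiting putters
        return item;
    }

    public static void main(String[] args) throws InterruptedException {
        BoundedBuffer<String> b = new BoundedBuffer<>(1);
        Thread consumer = new Thread(() -> {
            try {
                System.out.println(b.get() + b.get()); // prints xy
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.start();
        b.put("x");
        b.put("y");                            // blocks until "x" has been taken
        consumer.join();
    }
}
```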

Nested monitor calls should be used with great care, as they can cause a program to deadlock. This can occur because a thread that waits in a monitor releases only that monitor's lock, not the lock of any monitor from which it was called.
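The deadlock can be reproduced with two small monitors. In the sketch below, the classes Outer and Inner and the run() method are hypothetical. Outer's synchronized get() calls Inner's get(), which waits for a value. Because the waiting thread still holds Outer's lock, a second thread calling Outer's put() can never get through to supply the value. Both threads are daemons so that the JVM can exit despite the deadlock.

```java
// Nested monitor problem: waiting inside Inner releases only Inner's lock;
// the waiter keeps Outer's lock, so Outer.put() can never run.
class NestedMonitorDemo {
    static class Inner {
        private Integer value = null;

        synchronized int get() throws InterruptedException {
            while (value == null) wait();  // releases Inner's lock ONLY
            int v = value;
            value = null;
            return v;
        }

        synchronized void put(int v) {
            value = v;
            notifyAll();
        }
    }

    static class Outer {
        private final Inner inner = new Inner();
        synchronized int get() throws InterruptedException { return inner.get(); } // nested call
        synchronized void put(int v) { inner.put(v); }                            // needs Outer's lock
    }

    // Returns the states of both threads after giving the putter 500 ms to finish.
    static String run() throws InterruptedException {
        Outer outer = new Outer();
        Thread getter = new Thread(() -> {
            try {
                outer.get();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        Thread putter = new Thread(() -> outer.put(42));
        getter.setDaemon(true);
        putter.setDaemon(true);
        getter.start();
        while (getter.getState() != Thread.State.WAITING) Thread.sleep(10);
        putter.start();
        putter.join(500);
        return "getter: " + getter.getState() + ", putter: " + putter.getState();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(run()); // prints getter: WAITING, putter: BLOCKED
    }
}
```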

The idea of associating data encapsulation with mutual exclusion, which is the essence of the monitor concept, is jointly due to Edsger W. Dijkstra (1972b), Per Brinch-Hansen (1972) and C.A.R. Hoare (1974). The monitors in C.A.R. Hoare's classic paper differ in a number of respects from the way monitors appear in Java. Condition wait queues are declared explicitly in the original proposal and more than one can be declared in a monitor. This contrasts with the Java monitor, which permits only a single implicit condition queue. Multiple queues allow less rescheduling and thread-awakening if multiple threads are waiting on different conditions. In Java, all threads must be awakened to re-test their waiting conditions. If a thread's condition does not hold, it blocks again. In practice, threads waiting on different conditions usually wait at different times and consequently there is no extra thread-activation cost. Even when it does occur, the extra scheduling does not usually cause a problem.
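For comparison, the java.util.concurrent.locks package, added in Java 5, lets a single lock own several Condition queues, in the manner of Hoare's proposal. The sketch below is a car park controller with separate queues for arrivals and departures. The class name CarParkConditions and its fields are hypothetical. Condition.signal() still has signal-and-continue semantics, so each guard is re-tested in a while loop.

```java
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

// Car park controller with two explicit condition queues: arrivals wait on
// spaceFree and departures wait on carPresent.
class CarParkConditions {
    private final ReentrantLock lock = new ReentrantLock();
    private final Condition spaceFree = lock.newCondition();
    private final Condition carPresent = lock.newCondition();
    private final int capacity;
    private int spaces;

    CarParkConditions(int capacity) {
        this.capacity = capacity;
        this.spaces = capacity;
    }

    void arrive() throws InterruptedException {
        lock.lock();
        try {
            while (spaces == 0) spaceFree.await();        // guard still re-tested
            --spaces;
            carPresent.signal();                          // wake one waiting departure
        } finally {
            lock.unlock();
        }
    }

    void depart() throws InterruptedException {
        lock.lock();
        try {
            while (spaces == capacity) carPresent.await();
            ++spaces;
            spaceFree.signal();                           // wake one waiting arrival
        } finally {
            lock.unlock();
        }
    }

    int spaces() {
        lock.lock();
        try { return spaces; } finally { lock.unlock(); }
    }

    public static void main(String[] args) throws InterruptedException {
        CarParkConditions park = new CarParkConditions(1);
        park.arrive();                                    // car park now full
        Thread late = new Thread(() -> {
            try {
                park.arrive();                            // waits on spaceFree if still full
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        late.start();
        park.depart();                                    // frees a space, signals spaceFree
        late.join();
        System.out.println(park.spaces());                // prints 0
    }
}
```

A single signal() is used here because each arrive or depart changes the state by one car, which can enable at most one waiter on the other queue. With a single implicit queue, notifyAll() would be needed instead.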

Another difference is the semantics of notify(). The Java semantics for notify() are known as signal and continue. This means that the notified thread is taken off the wait queue and put into the scheduler's ready queue. However, the thread invoking the notify operation does not necessarily give up the processor and can continue running. Conditions in Java are thus always re-tested when the notified thread regains the monitor, since the condition may have been invalidated between the time it was notified and the time the monitor lock is re-acquired. In contrast, the notify operation of the original proposal had signal and urgent wait semantics. Notify would cause the notified thread to be executed immediately and the notifying thread to be suspended. However, the suspended notifying thread would regain the monitor lock before any thread newly entering the monitor. Signal and urgent wait has the advantage that wait conditions do not need to be re-tested. The disadvantages are additional implementation complexity and, in a Java context, the fact that the semantics do not fit well with having a single wait queue. An extensive discussion of the different semantics possible for condition synchronization may be found in Greg Andrews' book (1991).

The development of monitors was inspired by the class concept of SIMULA-67 (Birtwistle, Dahl, Myhrhaug, et al., 1973). Monitors have been included in a wide range of early concurrent programming languages. Concurrent Pascal (Brinch-Hansen, 1975) was the first concurrent programming language to include monitors. Subsequent influential concurrent programming languages with monitors include Modula (Wirth, 1977), Mesa (Lampson and Redell, 1980), Pascal Plus (Welsh and Bustard, 1979), Concurrent Euclid (Holt, 1983) and Turing Plus (Holt and Cordy, 1988). The problem of nested monitor calls was raised by Andrew Lister (1977). Java has brought the development of monitors full circle by including the concept in an object-oriented programming language.

5.1 A single-slot buffer may be modeled by:

ONEBUF = (put->get->ONEBUF).

Program a Java class, OneBuf, that implements this one-slot buffer as a monitor.

5.2 Replace the condition synchronization in your implementation of the one-slot buffer by using semaphores. Given that Java defines assignment to scalar types (with the exception of long and double) and reference types to be atomic, does your revised implementation require the use of the monitor's mutual exclusion lock?

5.3 In the museum example (Chapter 3, exercise 3.6), identify which of the processes EAST, WEST, CONTROL and DIRECTOR should be threads and which should be monitors. Provide an implementation of the monitors.

5.4 The Dining Savages: A tribe of savages eats communal dinners from a large pot that can hold M servings of stewed missionary. When a savage wants to eat, he helps himself from the pot unless it is empty, in which case he waits for the pot to be filled. If the pot is empty, the cook refills the pot with M servings. The behavior of the savages and the cook is described by:

SAVAGE = (getserving -> SAVAGE).
COOK = (fillpot -> COOK).

Model the behavior of the pot as an FSP process and then implement it as a Java monitor.

5.5 FSP allows multiple processes to synchronize on a single action. A set of processes with the action sync in their alphabets must all perform this action before any of them can proceed. Implement a monitor called Barrier in Java with a sync method that ensures that all of N threads must call sync before any of them can proceed.

5.6 The Savings Account Problem: A savings account is shared by several people. Each person may deposit or withdraw funds from the account subject to the constraint that the balance of the account must never become negative. Develop a model for the problem and from the model derive a Java implementation of a monitor for the savings account.