Design matters. What architect or other design professional doesn't believe that? We only have to listen to friends and co-workers to know how much people care about the places where they live, work, and play. Many, if not most, of us also believe that design matters tangibly by affecting health, learning, retail sales, athletic performance, productivity, and other aspects of our lives. THE ENVIRONMENTS DESIGNERS CREATE ARE THE SETTINGS THAT ENABLE OR INHIBIT HUMAN INTERACTION; PROMOTE OR STIFLE HUMAN DEVELOPMENT; AND FOSTER OR HARM HUMAN WELL-BEING.

Architects' clients often want design to matter beyond its artfulness. The people who hire designers are responsible for providing places that will help their companies or institutions excel. They seek design that will enhance performance, however performance is measured in their work context.

Designers know this intuitively. Many even market the notion of design that matters in terms of organizational outcome: "If you hire us, we will help your organization achieve...." Yet few architects understand specifically which aspects of design relate to their clients' needs, nor can they quantify that impact in a believable way that will help their clients justify the cost. Often there's no basis for their assertions about enhanced organizational performance other than professional intuition. So the question isn't whether design matters; rather, it's in what way, how much, why, and to what effect people respond to the environments around them. IF ARCHITECTS AND INTERIOR DESIGNERS COULD ANSWER THOSE QUESTIONS, THEY WOULD HAVE KNOWLEDGE TO CREATE HIGHER VALUE PLACES AND GAIN GREATER CREDIBILITY WITH CLIENTS.

To succeed, the model for evidence-based design practice must embrace creativity and avoid dictating cookie-cutter design solutions. Good architecture is more than rote application of rules. It is innovative problem-solving at its best, wholly addressing needs, including the human need for delight and invention. Prior experience and intuition serve their purpose in design, as they do in all the decisions we make in life. Yet when faced with major life decisions, most of us do some research and seek advice so that we are well informed. We're not enslaved to that information, but we consider it. Making the best design recommendations we can, respectful of clients' value systems, is a responsibility of design professionals who wish to be treated as trusted advisors. That demands being both creative and informed by transparent knowledge.

Lessons from the social sciences can help. Much social science research occurs in complex real-life settings and uses disciplined methods to sort through the messiness and come to a valid understanding of causes and effects. Other social science research is laboratory-based but also links human behavior with environmental influences. Most importantly, because it's about people and what affects their perceptions and actions, social science research can help designers understand how to manipulate physical environmental attributes to support the outcomes sought by their clients and to do more interesting work.

SOCIAL SCIENCES, PARTICULARLY SOCIOLOGY AND PSYCHOLOGY, AND DESIGN HAVE BEEN MARRIED FOR OVER 50 YEARS. IN SOME WAYS, IT'S BEEN A ROCKY MARRIAGE BUT ONE WITH MANY MORE HIGHS THAN LOWS. AFTER THESE MANY YEARS, THESE DISCIPLINES TOGETHER PROFFER EXTRAORDINARY PROMISE FOR INFORMED DESIGN. INNOVATION IS OCCURRING ON TWO FRONTS. THE FIRST IS THE USE OF SOCIAL SCIENCE METHODOLOGY TO CREATE EVIDENCE THAT WILL HELP US BETTER PREDICT OUTCOMES OF DESIGN DECISIONS. THE SECOND IS ABOUT THE APPLICATION OF KNOWLEDGE ABOUT THE CAUSES OF HUMAN BEHAVIOR, E.G., HOW PEOPLE LEARN (COGNITIVE SCIENCE) AND HOW PHYSICAL SETTINGS AFFECT LEARNING (ENVIRONMENTAL PSYCHOLOGY).

Methods (to study behavior) and knowledge (about behavior): both can inform the creation of places where people can thrive. Data-hungry clients can gain confidence in design recommendations when they're backed up by performance metrics or even just a thorough needs assessment. Social science methods can create business value by linking a design choice (say, color or access to daylight) with a desired behavioral outcome, such as healing in the context of a health-care client. Some such research already exists, and the challenge for the average design practitioner is to access it.

In his interview in this chapter, John Zeisel, PhD, claimed that some research exists on virtually any design question. Therefore, it could be claimed that there is always an opportunity for informed design. However, the amount of information may not always be enough, depending on the risks and costs associated with the decision being made. As the stakes become higher, the data must be more compelling to be an effective decision tool. WHEN EXISTING RESEARCH IS NOT SUFFICIENT FOR THE QUESTION AT HAND, THE PROCESS BECOMES USING THE BEST AVAILABLE INFORMATION, WHILE APPLYING THE TOOLS AND METHODS OF SOCIAL SCIENCE TO AUGMENT EXISTING DATA WITH NEW INFORMATION DEMANDED BY THE SITUATION.

Frequently, project fees and timetables won't support original research (although some practices have done well in finding clients who will fund it). When practical constraints limit research, the predesign planning process itself may provide an opportunity to study the issue systematically using internal client data: programming on steroids, intended to reveal how design could affect organizational behavior, not just a room list. Project-centric research may not apply broadly beyond the project, because it may reflect a specific client's idiosyncratic context, but many researchers will claim that patterns recur across organizations often enough to allow generalizations from one project to many. Further, as Andrew Laing and David Craig point out in their interview, even when the results can't be generalized, systematic analysis of client needs provides a type of evidence: information about that client that can help that client understand how a design change could affect their organization's performance.

The work highlighted in this chapter addresses both methods and behavioral knowledge. Several themes emerge.

THERE ARE MANY RESEARCH METHODS THAT APPLY TO DESIGN. THE USE OF SEVERAL, RATHER THAN ONE, ENHANCES RESULTS. One can even cross the lines of this book's chapters and mix data mining, prototyping, and social and natural sciences!

The choice of approach depends on the question. For example, if your goal is to zero in on one very specific design attribute, it might be best to use highly controlled protocols in a laboratory setting. If what most interests you is how the influences come together as a system, however, the question may be better researched in complex environments with multiple variables at play.

REGARDLESS OF THE SPECIFIC METHODS OR SETTINGS YOU CHOOSE, THE RELIABILITY OF THE RESULTS WILL DEPEND ON USING RIGOROUS METHODS. Architects are seldom trained researchers. Often even PhD students in Architecture have no prior exposure to research methods. Because design professionals as a group haven't been sensitized to valid research practices, there is much poorly done research resulting in misleading information. Though well-intended, this work can lead to false expectations and failure of the resulting designs to yield positive results. Such information is also readily available to the profession through conferences and publications, so it is adopted as truth and the damage spreads.

We can do better by engaging people with research skills whenever possible, either as collaborators or in-house experts. Multidisciplinary collaboration is an ever-present concept advocated by proponents of evidence-based practice. Innovative designers are forging external relationships with consultants and academia, or they're hiring people with diverse backgrounds who understand the social sciences.

EVEN WITH THE RIGHT SKILL SETS INVOLVED, IT'S ESSENTIAL TO CLEARLY COMMUNICATE THE RESEARCH CONTEXT—THE HYPOTHESIS, SETTING, SUBJECT POPULATION, CONTROLS, AND MEASURES—ALONG WITH THE FINDINGS. That way, clients and other designers can make their own judgments about how much weight to put on the data in their decisions, on their projects. How we communicate the evidence is as important as the evidence itself.

Fortunately, over many years of environmental design research, a strong foundation has been developed from which design practice can move forward. Social science methods and knowledge—both are there to enhance evidence-based design practice, as long as the designers understand where to find them and how to use them.

Environmental psychology is arguably the social science discipline most directly related to evidence-based design. In the mid-twentieth century, design, largely of urban and interior spaces, began to intersect with the work of social scientists.

Although not a social scientist by her early training, Jane Jacobs (1916–2006) addressed social issues in her advocacy of more humane urban development, including the importance of enhancing existing urban neighborhood patterns. Her theory of neighbors keeping "eyes on the street" emphasized citizen engagement and encouraged a redefinition of neighborhoods. Linking socioeconomic, political, and cultural anthropology to the history of urban growth patterns, she advocated urban economic policies favoring denser, higher-quality cities over suburban development. "Eyes on the street," as Sherry Ahrentzen, PhD, argues in her interview, has too often been lifted out of context by architects, who thereby use evidence to misinform design. Nevertheless, Jacobs's work resonated with architects and helped bridge the gap between design intent and outcome, even if it did not connect design with scientific methodology.

Another urban theorist, William H. Whyte, introduced many designers to a social science method. In his Street Life Project, Whyte conducted more than 16 years' worth of observational studies of pedestrians in Manhattan and their patterns of behavior: walking patterns, use of open space, points of gathering, and interaction. Everyday people were photographed with still and movie cameras, and interviews were conducted, interpreted, and analyzed. Whyte was an advocate of field observation of people in natural conditions rather than under experimental laboratory conditions.

Much of the early focus of environmental psychology was on interior environments. Robert Sommer (Personal Space), a social psychologist, studied how people alter their space to meet their needs and how, in turn, those spaces affect their behavior. Edward Hall (The Hidden Dimension), an anthropologist, identified how different cultures interpret space in social relations. A number of behavioralists believed that design would control behavior, and many credit their work on office environments as a precursor to the discipline of architectural programming. Once it was recognized that space could influence behavior, the logical next step was a systematic inquiry process to articulate the design problem.

In 1968, the Environmental Design Research Association (EDRA) was established by social scientists, design and facilities professionals, students, and educators. It remains committed to the development and dissemination of research to improve understanding of the interrelationships between people and their surroundings, and thereby help to create environments responsive to human needs.

A review of environmental psychology literature reveals six primary areas of investigation (Garling and Golledge 1993; Kaplan and Kaplan 1982):

Attention: How people notice the environment, either (1) involuntarily, through distracting stimuli that command notice, or (2) voluntarily, through directed effort that often results in fatigue. Understanding attention is the starting point for designing to enhance human behavior.

Perception and cognitive maps: The ability of people to imagine the natural and built environment, retain these images in the brain as spatial networks called "cognitive maps," and later recall them. These neural networks aid the recall of images, which in turn influence the perception and understanding of new experiences.

Preferred environments: People seek the environments that are most comfortable and that build confidence and the ability to engage others. Research focuses on qualities that provide coherence (order), legibility (understanding), complexity (stimulating variety), and mystery (the prospect of gaining more information).

Environmental stress and coping: In addition to the study of environmental stressors such as acoustics and temperature change, other cognitive stressors such as prolonged uncertainty, lack of predictability, and stimulus overload are common points of study.

Participation: Much research is oriented toward enhancing citizen participation in environmental design, management, and restoration.

Conservation behavior: Studies explore environmental attitudes, perceptions, and values as well as devising intervention techniques for promoting environmentally appropriate behavior.

In addition to research that attempts to establish direct links between environmental variables and behavioral responses, designers have a rich resource in related research fields, such as developmental and cognitive psychology. For example, how people learn, concentrate, and create is well researched and can give the designer of educational settings a deep understanding of the behavioral characteristics the space is intended to support for optimal human function. This type of "hypothesis-based design" does involve an element of inference, but it is at least informed design and, in the best circumstances, can then be directly tested and measured for outcomes to confirm the hypothesis. Did this setting indeed influence the desired result?

John Zeisel's work with people living with Alzheimer's disease is an excellent example of an evidence-based process that starts with knowledge about behavior. People rely on natural mapping and mental cues to find their way, and brain deterioration can impair these processes. That knowledge informs a hypothesized relationship to specific design elements (e.g., mementos), which is ultimately measured in a research-based assessment of the outcomes of the design intervention.

The body of knowledge is rich and relevant to designers who want to positively affect human behavior, and social science research methods are suited to complex environmental settings. Two of these methods, surveys and observation, may be the most commonly used tools for assessing design performance, particularly in postoccupancy evaluations (POEs). Because they are so familiar and seem so easy to use, they have the dubious distinction of being both very powerful and very dangerous.

RESEARCH DESIGN IS AS IMPORTANT WHEN USING SOCIAL SCIENCE METHODS AS IT IS IN OTHER SCIENCES. Most designers wouldn't presume to venture into a lab setting and hook up electrodes to experimental subjects. How many, on the other hand, would see no problem with writing a survey, even if they have no training in how to do that? And this is the tip of the iceberg.

THERE IS A SYSTEM OF STEPS. THERE ARE SAMPLING PROTOCOLS TO AVOID BIASED RESULTS. PROCEDURES ARE NEEDED TO KNOW WHICH OF MANY CO-PRESENT VARIABLES IS THE CAUSE—OR ARE THE CAUSES—OF THE OUTCOME. EXPERIMENTAL DESIGN NEEDS TO ANTICIPATE AND CONTROL FOR CONFOUNDING VARIABLES WHEN APPROPRIATE AND/OR NEUTRALIZE THEM IN THE ANALYSIS OF THE DATA. CHOICE OF RESEARCH INSTRUMENT ITSELF MUST BE KEYED TO THE QUESTIONS BEING QUERIED AND THE SPECIFIC DESIGN OF THE TOOLS IS CRITICAL. STATISTICS HELP CLEANSE DATA SO THAT ANOMALIES DON'T CREATE MISLEADING RESULTS. LASTLY, ONCE THE MEANING OF THE DATA IS UNDERSTOOD, THE COMMUNICATION MUST BE CLEAR TO AVOID IT BEING USED OUT OF CONTEXT BY ANOTHER DESIGNER.

Here are ten typical steps in the process and some questions one would answer before moving on to the next step.

Problem Statement

What are the obstacles in terms of knowledge, data availability, time, resources, and cost/benefit?

Theory Assumption, Background Literature

Is there relevant literature to address my issue? Any prior failures? Why?

Variables and Hypothesis

Can we define the independent, dependent, and control variables? Can we define the relationships between the variables?

Operational Definitions and Measurements

What is the level of aggregation? The unit of measure? What degree of error will be tolerable? Will others agree?

Research Design and Methodology

What are the threats to internal or external validity?

Sampling

How will I choose my samples? Is it important to be representative?

Instrumentation

Are valid and reliable instruments available or must I construct my own?

Data Collection and Ethical Considerations

How much training will be required of interviewers, observers, and analysts? What level of inter-rater reliability is acceptable? How will subjects' rights be protected?

Data Analysis

What combination of analytical and statistical processes will be applied to the data? How large must a numerical difference be to count as significant?

Conclusions, Interpretations, Recommendations

Was my initial hypothesis supported? What if my findings are negative? What are the implications of the findings for the theory base? What representations can be reliably made to the public and other researchers?
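The Data Collection step asks what level of inter-rater reliability is acceptable. One widely used statistic for two observers coding the same events is Cohen's kappa, which corrects raw percent agreement for the agreement expected by chance. The sketch below is a minimal illustration, not a method taken from any study in this chapter; it assumes two observers have each assigned one categorical code to the same sequence of observations, and the codes ("desk", "meeting", "away") are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who coded the same items.

    kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and
    p_e is the agreement expected by chance from each rater's category shares.
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must code the same, non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items both raters coded identically.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: for each category, multiply the two raters' shares.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    categories = set(counts_a) | set(counts_b)
    p_e = sum((counts_a[c] / n) * (counts_b[c] / n) for c in categories)
    if p_e == 1:
        # Both raters used one identical category throughout; kappa is
        # undefined, so this sketch treats it as perfect agreement.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Hypothetical codes from two observers watching the same ten moments.
observer_1 = ["desk", "desk", "meeting", "away", "desk",
              "meeting", "away", "desk", "desk", "meeting"]
observer_2 = ["desk", "meeting", "meeting", "away", "desk",
              "meeting", "desk", "desk", "desk", "meeting"]
print(round(cohens_kappa(observer_1, observer_2), 3))  # 0.672
```

Here the observers agree on 80 percent of moments, but kappa is lower because some of that agreement would occur by chance. What counts as "acceptable" remains a judgment the research team must make and report alongside the findings.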

This brief discussion of tools is an overview of some frequently used methods. It's less a "do it yourself at your own risk" guide than a way to raise awareness of the variety of tools available for different situations.

Nondirective and Structured Interviews: These are an excellent way to uncover the drivers and needs of which the subject is aware, as well as his or her perceptions and preferences. Structure enables consistent analysis of data from more than one subject or project and works well when the questions have already been framed. Less structure gives the subject more opportunity to raise unexpected issues. (Picture the psychoanalyst encouraging the patient to talk about a deep-seated issue: "I see... and you're saying that made you feel...?") Active listening techniques help ensure that needs and perceptions are raised rather than shut down by narrow lines of questioning.

One-on-one interviews provide a safe context for the subject to openly share feelings. Small group interviews are often intended to provide peer support for raising controversial topics and may also be used to develop ideas and themes.

Focus Groups: One of the earliest methods used in design inquiry, focus groups are an efficient way to poll representative opinions and to provide context for diverse views. They are good for gathering information, but their social dynamics make them better at raising issues than at reaching closure. Skilled facilitation is critical.

Surveys/Questionnaires: These are very powerful for developing large, well-controlled data sets of specific information with demographic controls. Questionnaires traditionally enable high participation, which is good both for learning about needs from the perspectives of multiple users and for engaging many people as part of the change management process. Web surveys have become particularly useful for reaching large populations of subjects. Their main limitation is that, for a good return rate, the questions must be narrow and few.

Trace Observation: How we actually use space and how we manipulate it to overcome shortcomings often reveals truths about needs and behaviors that are unconscious or not socially comfortable to discuss when asked. Trace observation documents the artifacts we leave in our surroundings.

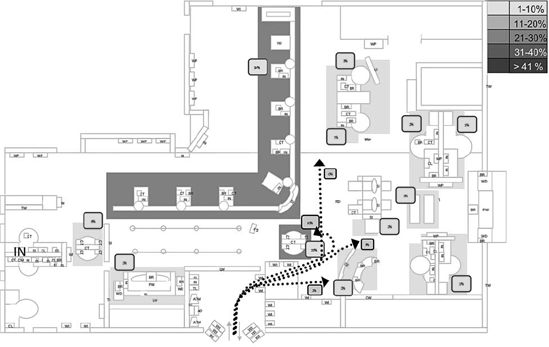

Behavioral Mapping: Space use is dynamic; people move from setting to setting. Their perceptions often differ from what actually happens in a real world of varied, sometimes random actions. But that activity can be visually documented to reveal repeated patterns and anomalies. The map documents movement from point A to point B, the most likely places for interaction or congregation, and sources of delay and conflicting behaviors.
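One simple way to turn behavioral-mapping records into the point-to-point movement patterns described above is to tally transitions between zones. The sketch below assumes each observed person's route has already been coded as an ordered list of zone names; the zone names and routes are hypothetical, and a real study would also record times and plot the results on a floor plan.

```python
from collections import Counter


def transition_counts(paths):
    """Count zone-to-zone movements across observed routes.

    paths: list of sequences of zone names, one per observed person, in the
    order the zones were visited. Consecutive repeats (staying put) are skipped.
    Returns a Counter mapping (from_zone, to_zone) -> number of movements.
    """
    counts = Counter()
    for path in paths:
        # Pair each zone with the next one along the same route.
        for here, there in zip(path, path[1:]):
            if here != there:
                counts[(here, there)] += 1
    return counts


# Hypothetical routes for three people observed over one morning.
routes = [
    ["entry", "desk", "kitchen", "desk"],
    ["entry", "desk", "desk", "meeting"],
    ["desk", "kitchen", "desk", "kitchen"],
]
for (origin, destination), n in transition_counts(routes).most_common():
    print(f"{origin} -> {destination}: {n}")
```

Frequent pairs point to likely circulation paths and places of congregation; anomalies, such as an unexpectedly busy route, are candidates for closer observation.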

Ethnography: Observing people as they go about their daily lives provides an objective perspective on what is actually happening, as compared with what people say is happening. Ethnographic studies use many tools: shadowing, in which the researcher is present and follows the subject; activity logs and photo ethnography, in which subjects document their own behaviors in a rigorous format that helps get beyond perception; video, which may be less intrusive than shadowing but raises its own regulatory concerns about protecting privacy and participant well-being; and "bed checks," in which behavior is documented at set times and places.
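A "bed check" study produces a grid of observations: each space is checked at set times and recorded as in use or not. The sketch below shows one way such a grid might be summarized into a utilization rate per space. The space identifiers, the hourly schedule, and the simple occupied/vacant coding are assumptions for illustration, not a protocol used by any firm in this chapter.

```python
from collections import defaultdict


def utilization_by_space(observations):
    """Summarize time-sampled ("bed check") observations into utilization rates.

    observations: iterable of (space_id, time_slot, occupied) tuples, where
    occupied is True if anyone was using the space at that check.
    Returns {space_id: fraction of checks at which the space was occupied}.
    """
    checks = defaultdict(int)    # number of checks made at each space
    occupied = defaultdict(int)  # number of those checks that found it in use
    for space_id, _time_slot, is_occupied in observations:
        checks[space_id] += 1
        if is_occupied:
            occupied[space_id] += 1
    return {space: occupied[space] / checks[space] for space in checks}


# Hypothetical walk-through: two workstations and one meeting room,
# each checked hourly from 9:00 to 12:00.
obs = [
    ("desk-01", "09:00", True), ("desk-01", "10:00", False),
    ("desk-01", "11:00", False), ("desk-01", "12:00", True),
    ("desk-02", "09:00", False), ("desk-02", "10:00", False),
    ("desk-02", "11:00", False), ("desk-02", "12:00", False),
    ("room-A", "09:00", True), ("room-A", "10:00", True),
    ("room-A", "11:00", False), ("room-A", "12:00", True),
]
rates = utilization_by_space(obs)
print(rates)  # {'desk-01': 0.5, 'desk-02': 0.0, 'room-A': 0.75}
```

Comparing observed rates like these with what occupants report about their own use is one way to expose the gap between perception and behavior that ethnographic methods are meant to reveal.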

How to write an effective survey, facilitate a focus group, or control an observational study is the topic of many other books. This book's purpose is to illustrate, through examples, the work of people who are using social science methods and integrating knowledge from sociology, psychology, and anthropology into design.

Despite the long history of environmental psychology and the rich data it has produced, hurdles still need to be overcome. What are best practices in using the information and skills from the social sciences? Or is the better question: "WHAT CAN WE LEARN FROM THE BEST PRACTICES OF TODAY TO TRANSFORM DESIGN PRACTICE TOMORROW?"

Andrew Laing is Managing Director, DEGW North America. With 19 years of experience with DEGW in London and New York, Laing combines an interest in research on the design and use of the workplace with active involvement in leading client services. Since establishing the North American business in 1998, Laing has worked with many significant clients including Accenture, Fidelity Investments, Google, JWT, Microsoft, GSK, Nike, and the United Nations. Laing is the co-author of New Environments for Working and The Responsible Workplace. He has published many articles and speaks regularly at conferences. He is a visiting lecturer in the School of Architecture at Princeton University and received his PhD from MIT.

David Craig is a Director of DEGW North America in New York, where he contributes to global workplace strategy projects and oversees the development and application of DEGW's methods and tools in North America. Craig has focused on developing innovative approaches for measuring the impact of the workplace on businesses, including most recently impacts on employee productivity and workplace culture. He has also pioneered methods for developing distributed workplace practices for organizations seeking to enhance and capitalize on increasing employee mobility. Recently he led a global company-wide effort on tools and methods. Craig's recent clients include Capital One, Fidelity Investments, Marsh & McLennan Companies, Microsoft, and Pfizer.

He speaks regularly at industry conferences and has taught at Harvard's Executive Education program and at Georgia Tech.

How do you use evidence in your work?

DEGW has been very focused for a long time on how we work with clients to capture what they need in the design of their workplaces; how we collect information or evidence to support the briefing for these design projects; and also how we use that information as a means of measuring the success of those environments once they are implemented.

The real focus for us has been a very user-centric approach. We're interested in understanding the user perspective at different levels: at the senior leadership level, where we learn about the corporate (or government or institutional) direction for organizational intent and strategy, and, in parallel, across the organization, where we learn how people see their own participation in that environment and its ability to support their success.

What types of methods do you use to develop information to inform that understanding?

We've developed a series of tools that are quantitative and qualitative. Qualitative: High-level discussions with leadership and staff about objectives (what they're trying to achieve) and metrics that might relate to that. Quantitative: Observational techniques and workplace performance surveys that capture critical qualities of the environment that relate to how people get their work done. We also use this data to benchmark "before" and "after" a design implementation. With our clients, we try to estimate the impact of the changed environment on their productivity. We look at the whole environment, not just the physical design but also technology, the way they manage their time, and the whole host of factors that contribute to workplace performance and user satisfaction. We do social network analysis, drawn from sociology. We employ some ethnographic methods but these are qualitative in nature.

The point of it is to understand where the business wants to go and how people should be working to get there. Different sorts of data, often from direct interaction with business leaders, come into play. It's difficult to get people to think beyond more conference rooms, or whatever other physical changes they have in mind that might affect work, and instead to think more abstractly about the way people interact with each other.

Are the questions you ask centered around facilities?

We're pretty careful to make sure the questions we ask are not only about facilities-based issues. Our strategy is often not about facility-based issues but about the organizational change they're trying to create or the business direction they want to move in.

Our approach is related to a historical change in the nature of the workplace, especially with the new technologies of the last 15 years, which challenge what the "office" is: where people get their work done and how they collaborate and communicate. The facility is no longer the container of the organization. We have to ask questions that are more about how people work and what they want to achieve. These questions enable us to think about how the workplace can respond to help our clients achieve their goals, with workplace considered in a very broad sense: work from home, how you use space over time, and the way you use technology.

Does the process you use to understand new workplaces inhibit or enhance design creativity?

The work we do is driven primarily by our research and consulting—the urge to understand how organizations behave and the requirements they have for the environment. In terms of creativity, we're finding a paradox. As work becomes less place-dependent—as we work in many different ways, virtually and otherwise—the value of the design of the place itself actually becomes more important. There is an interesting value-inflation. It emphasizes the role of design.

We develop concepts, always partner with an architect, and usually hand off the project after a transition period. The research findings will reveal opportunities and important areas where a client should focus, but there's really a big step between that and actually creating designs. Therefore, the solutions we come up with often do involve design concepts. We often think about the physical design as well as the needs, and we're very creative with space, especially because we're also thinking of new work styles, new policies, and new ways of using technology that organizations haven't seen before. The creative side of it is much broader than just thinking about space. We think of ourselves as designers of work, more than anything, tying together space, technology, policies, that sort of thing.

What types of measurements and metrics do you use in data development and analysis?

Our 2004 project with Capital One may be the best example. We started working with the executives, group by group, to determine what they believed drove productivity in their group. We also explored their metrics, formal and not, such as milestones, pipeline, etc.—all the usual business metrics—and then we worked back from those to define actual, observable behaviors which affect the metrics. For us, the further back you go into the details of how people actually work, the more easily measured these things are. It's more difficult with the aggregate business metrics we started with because these are going to be influenced by different variables. So we developed a constellation of things that drove business performance. We came up with a consensus set (12 to 20 metrics in total), such as "amount of time waiting for a colleague" and "time answering an email." Our job was to measure, before and after the design change, those things the business leaders thought were valid indicators of what made people more innovative. This is far from scientific. The goal is to capture how the client defines productivity, measure it with reliable methods, and bring them back to the appropriate level.

We developed a series of hypotheses (e.g., how the new workplace would address an identified issue), which together defined a new workplace environment we could then measure. There are many tools for measuring, among them electronic surveys, comprehensive before-and-after observations, and tracking the average time spent on different behaviors.
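The before-and-after logic described in this answer can be sketched as a comparison of each agreed metric across the two measurement periods. The metric names below echo the interview's examples, but the values are invented for illustration and the function is a hypothetical helper, not DEGW's analysis. Consistent with the interviewees' point that they measure total impact, the output describes change in each metric without attributing it to any single design decision.

```python
def before_after_summary(before, after):
    """Compare mean values of agreed metrics before and after a design change.

    before, after: {metric_name: mean value per person per day}, same keys.
    Returns {metric_name: (absolute_change, percent_change)}; percent change
    is None when the baseline is zero, because it is undefined there.
    """
    summary = {}
    for metric, old in before.items():
        new = after[metric]
        pct = None if old == 0 else round((new - old) / old * 100, 1)
        summary[metric] = (round(new - old, 2), pct)
    return summary


# Hypothetical daily averages, in minutes per person.
before = {"minutes waiting for a colleague": 40.0, "minutes answering email": 90.0}
after = {"minutes waiting for a colleague": 30.0, "minutes answering email": 81.0}
print(before_after_summary(before, after))
# {'minutes waiting for a colleague': (-10.0, -25.0), 'minutes answering email': (-9.0, -10.0)}
```

Whether a given change counts as an improvement depends on how the client defines productivity for that metric, which is why the sketch reports the change and leaves its interpretation to the people who agreed on the metrics.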

The work you address involves a number of variables. Can you isolate the impact of each of those; or is it even important to do that?

We don't ever try to separate the effect of different changes from one another. The client organization doesn't have that luxury of trying many different things and putting together what they learned. It has a relatively short time for the study and it's banking on the analysis succeeding. You do as much as you can to get it right the first time. We look at the total impact.

When you do interviews, focus groups, and surveys of large populations, you get very strong indications of what issues are important and how well these are performing in current conditions, even without doing the postoccupancy. We can take that as a leading indicator of what needs to be provided in a new environment.

The perspective we have is that this kind of measurement is "intentional"; it's not like a laboratory study. The metrics are driven by those intentions of the organization. One of the things we all have to think about here is the validity of different types of measurement. Which types of measures are in a sense more independent or more objective than others which are more driven by perception? The combination of methods we use varies. For example, "time in a meeting room" is independent of user perception of how well the environment is supporting them as users; but our position is that the user's perspective is also a valid measure because they think and believe they have seen changes in how the environment supports them. Therefore the change is having a result that's part of the intention.

How about the use of statistics?

We rely heavily on descriptive statistics but it's partly to demonstrate to the corporate organization that there is a demand for something. We have a very rigorous way to use observation to measure how space is being used over time. For example, we know how many observations over how much time would lead you to relevant conclusions. We don't typically use any type of reliability statistics, although we typically do work to get the right samples based on the probabilities. That's to ensure we have a decent representation over all the subgroups we're cutting the data by; but we're not looking for stability within work patterns or other types of responses. We take individual responses as meaningful; we're not always just seeking the averages or aggregate statistic.

The work patterns we've observed across similar organizations have been consistent over time, which has given us a fair amount of confidence in them. There is a difference between what people report about their use of space and what rigorous observational studies over a decade reveal. (We see less occupancy than people report.) That difference is pretty consistent, so we've explored what drives it. Our goal is to provide enough credibility to convince managers; it's not necessarily to draw firm conclusions that would convince a researcher outside the organization.
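The interviewees mention knowing "how many observations over how much time would lead you to relevant conclusions." Their actual rules are not given here. As a general illustration only, a textbook way to size a sample for estimating a proportion (such as the share of checks at which a desk is occupied) uses the normal approximation below, with an optional finite-population correction. The parameter names and defaults are assumptions of this sketch, and it treats observations as independent, which repeated checks of the same space are not, so the result is a starting point rather than a guarantee.

```python
import math


def sample_size_for_proportion(margin, confidence_z=1.96, expected_p=0.5, population=None):
    """Observations needed to estimate a proportion within +/- margin.

    Normal-approximation formula: n0 = z^2 * p * (1 - p) / margin^2.
    If population (N) is given, applies the finite-population correction
    n = n0 / (1 + (n0 - 1) / N). expected_p = 0.5 is the conservative choice
    (largest n) when the true rate is unknown; z = 1.96 is ~95% confidence.
    """
    if not 0 < margin < 1:
        raise ValueError("margin must be between 0 and 1")
    n0 = confidence_z ** 2 * expected_p * (1 - expected_p) / margin ** 2
    if population is not None:
        n0 = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n0)


print(sample_size_for_proportion(0.05))                   # 385
print(sample_size_for_proportion(0.05, population=1000))  # 278
```

To get a decent representation across every subgroup the data will be cut by, the same calculation would be applied within each subgroup rather than to the whole sample.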

Do you use an interdisciplinary research approach?

Yes, both internal and external. Within the firm, we employ a varied set of expertise in the social sciences: people with some kind of social science training and some design training as well. We've found that mix of disciplines to be quite valuable. We also often collaborate with others, depending on the assignment, and are most likely to partner with psychologists and sociologists.

We probably talk more in a design language than in anything else. I'm aware there's a lot of workplace research, but much of it takes an individual-employee perspective: how productive an individual is. The data on teams and on organizations as a whole is somewhat sparser and usually more qualitative. So we essentially invented our own analysis and process for developing data, which no one else does. It's a relatively small market we work in.

How much of your research is project-centric versus contributory to a broader knowledge base?

We collect a similar set of data across clients, and we're looking at mining that data across organizations. There are limits, though, to how reliably the data can be generalized in terms of performance, because we're often trying to be very specific to each business and its particular metrics. In that sense, the data isn't completely standardized; it is driven by organizational objectives.

Most of the data describes "before" conditions, often in dated facilities. We do postoccupancy evaluations maybe a quarter of the time. Often, organizations are onto something new and different. We are sitting on a mountain of data: 10,000 survey responses every month, and observations of 50,000 employees a year.

How would you change the curriculum or practice model to encourage use of evidence to enhance creativity?

It does seem to us there are big gaps in architectural design training where this kind of approach is largely absent from the curriculum. I don't see many schools that are developing a rigorous understanding of the social science tools and methods that architects could be using in programming efforts. I think programming is seen as a kind of routine effort. The schools don't spend much time on the rigorous thinking.

I'd even be cautious about calling what we do "programming." There is a very creative side to understanding demand—what an organization needs, how they currently work, and so forth. We have a lot of methodologies and they come together in different, very creative ways, and get expressed in unique ways, depending on what the organization is dealing with.

It would be ideal if the schools introduced that in their curriculum. It's a huge opportunity to reinvent and add value to what the architect is offering to the client.

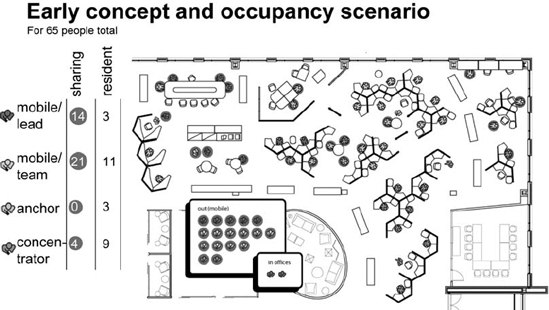

The company was already more progressive than most of its peers with regard to its workplace, having implemented an open work environment designed to emphasize collaboration over individual status. It wanted to further increase the efficiency of its space, co-locate staff who worked together more closely, and improve the work experience.

Several research methods were used. Because there were very few examples of the kind of workplace they were envisioning, the answers to their questions came primarily from research on how people at the company worked at that time and from how people said they would like to work, particularly when given new scenarios to consider. Data was collected through observations of behavior in existing spaces that tracked how often space was used and for what purposes; surveys that gauged work patterns and measured the importance and performance of various aspects of the existing workplace; focus groups that explored future scenarios; and interviews with managers to better understand business drivers and objectives.

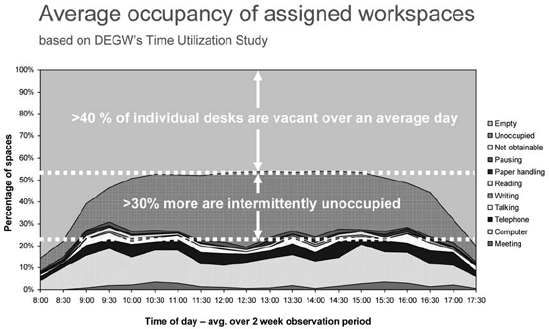

The research revealed some compelling information. Observations showed that assigned individual workspaces were only occupied around 35 percent of the time, indicating that their conventional space was inefficient, at least in the sense that their biggest piece of infrastructure was not well used, and that most work already took place away from an assigned desk.
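The 35 percent figure is a utilization rate: the share of observation sweeps in which an assigned desk was found occupied. A minimal sketch of that arithmetic follows, assuming observations are recorded as hypothetical (desk_id, occupied) pairs, one per desk per sweep; the function name and sample data are invented for illustration.

```python
from collections import defaultdict
from collections.abc import Iterable


def utilization(observations: Iterable[tuple[str, bool]]) -> tuple[dict[str, float], float]:
    """Occupancy rate per desk and overall from spot-check sweeps.

    `observations` holds (desk_id, occupied) pairs, one pair per desk per
    sweep; a desk counts as occupied if someone was working there.
    """
    seen: dict[str, int] = defaultdict(int)
    occupied: dict[str, int] = defaultdict(int)
    for desk_id, is_occupied in observations:
        seen[desk_id] += 1
        if is_occupied:
            occupied[desk_id] += 1
    if not seen:
        raise ValueError("no observations recorded")
    per_desk = {desk: occupied[desk] / seen[desk] for desk in seen}
    overall = sum(occupied.values()) / sum(seen.values())
    return per_desk, overall


# Hypothetical data: five desks checked on four sweeps.
sweeps = [
    ("A", True), ("B", False), ("C", False), ("D", True), ("E", False),   # sweep 1
    ("A", True), ("B", False), ("C", True), ("D", False), ("E", False),   # sweep 2
    ("A", False), ("B", False), ("C", True), ("D", True), ("E", False),   # sweep 3
    ("A", True), ("B", False), ("C", False), ("D", False), ("E", False),  # sweep 4
]
per_desk, overall = utilization(sweeps)
print(round(overall, 2))  # 0.35 (7 occupied observations out of 20)
print(per_desk["A"])      # 0.75
```

Reporting the per-desk rates alongside the overall figure shows whether low utilization is spread evenly or concentrated in a few rarely used desks.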

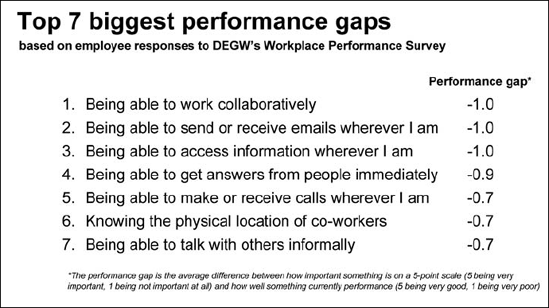

Low desk utilization along with high meeting room occupancy also revealed a polarized work pattern, with people commonly spending many hours in meetings every day and then struggling to keep up with individual responsibilities in their remaining time. This finding was consistent with data showing that people desperately wanted more spontaneous interaction (versus scheduled meetings) and more accessibility with their colleagues.

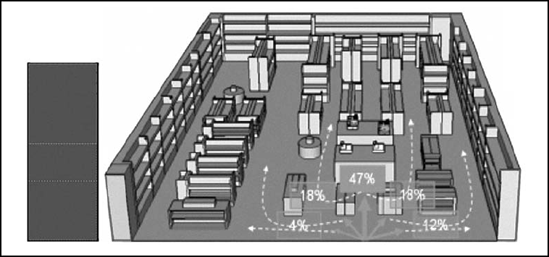

Figure 3.3. Average Occupancy of Assigned Workspaces: Systematic observation reveals much less time spent at an assigned desk than self-reported. This often-repeated pattern creates an opportunity for shared spaces that will reduce occupancy costs and provide more interesting work settings.

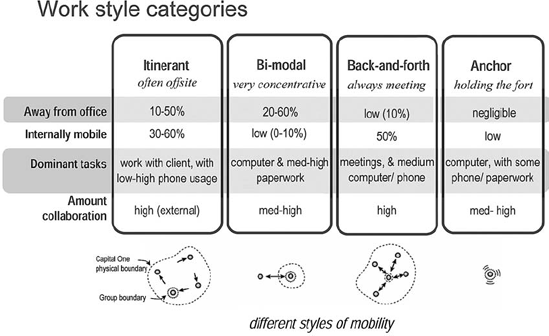

Finally, the research showed that there was not one universal work pattern but several different ones, ranging from extremely mobile to extremely desk-bound. Four different work-style categories were derived from the data and used for planning purposes to represent four different types of needs. Some roles predicted specific work styles, but many did not, suggesting individual differences.

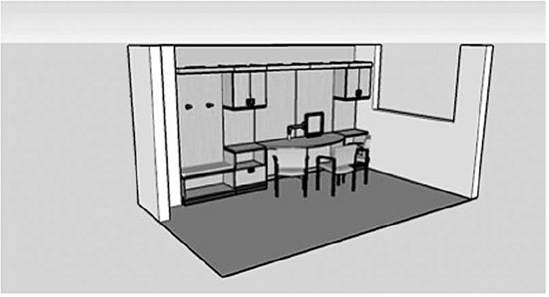

The research outcomes therefore led to a notably different workplace and a new way of supporting, even transforming, work patterns. Observational data showing low utilization helped confirm the hypothesis that work could be supported without assigned space. Data regarding specific performance gaps, in conjunction with manager input about what helped and hindered productivity in their teams, led to a decision that the core work environment should be even more open than before and should have more settings designed specifically for spontaneous meetings. Since control over distractions was also an issue uncovered in the survey, quiet zones—areas of the workplace designated as distraction-free—were also added to the design.

Figure 3.4. The Workplace Performance Survey also quantified specific functional deficiencies and thereby suggested the most important design interventions to improve productivity.

Figure 3.5. The Workplace Performance Survey also quantified specific functional deficiencies and thereby suggested the most important design interventions to improve productivity.

Figure 3.6. Current Work Patterns by Job Type: Through analysis of work processes, workers were categorized by their degree of mobility. This enabled the planners to quantify an optimal mix of settings to support various needs.

Lastly, to ensure that the right balance of settings, from very collaborative to very individual, was provided, work-style categories were used to quantify specific types of demand (e.g., how many meeting rooms one would use over the course of a week if one were categorized as a heavy "collaborator"), and the prevalence of the different work styles was in turn used to develop a space program for the final concepts. Ultimately, the idea that every employee would have access to a range of specialized spaces, rather than being assigned one all-purpose space, allowed the space program to be finely customized based on the activities and preferences of each team.
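The demand-based sizing described above can be sketched as a weighted sum: headcount in each work-style category times that category's weekly meeting-room demand, converted to room-hours and divided by the hours one room can realistically supply. The category names loosely echo the text, but every number, parameter, and the function itself are hypothetical assumptions, not figures from the project.

```python
import math

# Hypothetical inputs for illustration only.
HEADCOUNT = {"collaborator": 120, "mobile": 80, "hybrid": 140, "desk-bound": 60}
# Hours per week each person spends in meeting rooms, by work style (hypothetical).
MEETING_HOURS_PER_PERSON = {"collaborator": 12.0, "mobile": 6.0, "hybrid": 8.0, "desk-bound": 2.0}


def meeting_rooms_needed(
    headcount: dict[str, int],
    hours_per_person: dict[str, float],
    avg_attendees: float = 4.0,
    room_hours_per_week: float = 40.0,
    target_utilization: float = 0.7,
) -> int:
    """Estimate how many meeting rooms a team needs from its mix of work styles.

    Person-hours of meeting time are divided by average attendance to get
    room-hours, then by the bookable hours one room supplies at the target
    utilization. Ignores peak-hour clustering and differences in room size.
    """
    person_hours = sum(headcount[style] * hours_per_person[style] for style in headcount)
    room_hours = person_hours / avg_attendees
    capacity_per_room = room_hours_per_week * target_utilization
    return math.ceil(room_hours / capacity_per_room)


print(meeting_rooms_needed(HEADCOUNT, MEETING_HOURS_PER_PERSON))  # 29
```

Because demand is computed per category, shifting people between work styles (for example, a team with more heavy collaborators) changes the room count directly, which is what lets the program be tuned team by team.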

Figure 3.7. Translating survey data into space concepts is a critical transition. This mixed setting concept provided places that were appropriate for different work patterns identified by the research.

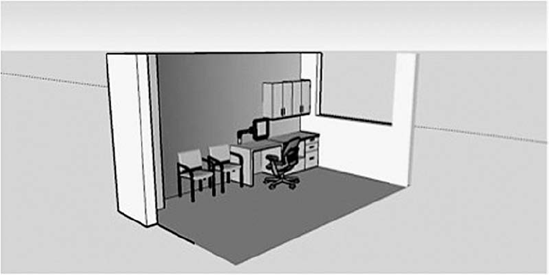

Figure 3.8. Desks are provided for mobile workers on a shared basis, substantially reducing real estate costs.

Joyce Bromberg is Director of WorkSpace Futures—Research at Steelcase Inc. In this role, Bromberg is responsible for user-centered research for vertical markets and Phase 0 research for new product development. Sometimes called "pioneering research," this activity draws on her 25 years of industry experience in observation, synthesis, and design. Bromberg was appointed to this position in 2002. Prior to this, she was Director of Space-Planning Research and Environment Design. In these roles, she was responsible for all research and development activities related to space planning and the design of Steelcase environments, and she was the lead developer of community-based planning, a space-planning methodology and web-based toolset. Bromberg joined Steelcase in 1982 as an interior designer. In 1986, she was promoted to Interior Design Project Manager, designing many award-winning showrooms and industry/trade show events. She was then appointed Manager of Strategic Planning for the architect and design market. She has also served as Director of Surface Materials and Advanced Concepts. Bromberg is a graduate of the High School of Music and Art and has a BA in Art History from the State University of New York at Stony Brook.

How do you use evidence for design in your work?

My team uses a human-centered design research methodology to help Steelcase identify user needs that translate into better health-care products. In a sense, because products are always conceived in terms of an application or the setting in which they are to be used, we use the research to develop new kinds of applications at an architectural level. We also freely share the findings from our research with our clients and anyone else who's interested. We're very interested in building a body of evidence and adding to it through collaborations with health-care institutions and organizations like the Center for Health Design.

Could you talk about various methodologies you're using to do this research?

I don't know if anyone has come up with a definitive way to conduct evidence-based design research but we're experimenting with different approaches and hopefully adding to the body of knowledge about how one might approach such research.

We have a basic six-step process that is not proprietary to Steelcase:

Understand: It's necessary to comprehend secondary research with the goal of being as smart as we can about a topic or discipline—the vocabulary, process, roles, design precedent, and the forces impacting them today, e.g., insurance and medical error affecting health-care. We always do the reading with the physical environment in mind. For example, how might medical error be reduced by the design of an environment or the way we craft a new product?

Observe: The secondary research helps us choose observation sites, but we're always looking for a wide variety of places to observe. We generally spend a week at a time, around the clock, at any given location, and we have developed a number of methods that help us observe. Part of what we do is "asking": using interviews, questionnaires, and focus groups. The asking reveals the explicit process, for example, what people say they do. The second part of observation allows us to understand the tacit, the unconscious: what people do without even thinking about why they're doing it. For this, we might shadow people or do time utilization studies, camera studies, or video ethnography, among a whole variety of techniques. The third part is Participatory Design. By engaging people, we get at their latent, emotional needs. One of our tools is an online collage tool that allows participants to describe the existing condition and the desired, or "should be," condition. It allows you to uncover deep feelings about experience. Our process is about asking, observing, and engaging. When you're designing with intent, you have to look not just at your space but also at the people, their behavior, and how they interact with objects and each other; at their technology; and at their process. These have to be understood and designed for at the same time. When we observe, we look at all of these, and at how people work around deficiencies.

Synthesize: We're very stringent about documenting all observations and secondary research and about keeping this data separate from our opinions and from the concepts that come out of it. So in this third step, we state an observation and how we feel about it, and then track the concepts back to the original research source. You begin to see patterns in the data. We share observation stories and then group those stories into clusters (patterns of behavior or patterns of need) until we have gone from insights to sets of actionable design principles.

Realize: The next step is to use the principles, much like design criteria, to create solutions. We hold charrettes and do a lot of brainstorming, considering the needs, the application context, and the aesthetics. The solutions are not developed in isolation. In conjunction with industrial designers, we consider products in the context of what the new space will look like, the experience of the staff, and all the protocols, behaviors, and technologies needed to make those spaces effective.

Test: Then, the product always goes into prototyping. We build full-scale models and do user testing.

Measure: Whenever possible (and it isn't always), we measure what we've accomplished. For us, measurement always goes beyond satisfaction alone to outcomes, such as time to treatment.
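The Synthesize step depends on keeping raw observations separate from interpretation while still being able to trace every principle back to its evidence. The interview doesn't describe any tooling, so the sketch below is a hypothetical data model for that traceability; the class names, fields, and example notes are all invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Observation:
    """A raw field note, recorded without interpretation."""
    obs_id: str
    source: str  # how it was gathered, e.g. "interview" or "shadowing"
    text: str


@dataclass
class Insight:
    """A cluster of observation stories sharing a pattern of behavior or need."""
    label: str
    observation_ids: list[str] = field(default_factory=list)


@dataclass
class Principle:
    """An actionable design principle distilled from one or more insights."""
    statement: str
    insights: list[Insight] = field(default_factory=list)

    def trace(self, notes: dict[str, Observation]) -> list[Observation]:
        """Return the observations behind this principle, in order, without duplicates."""
        found: dict[str, Observation] = {}
        for insight in self.insights:
            for obs_id in insight.observation_ids:
                found.setdefault(obs_id, notes[obs_id])
        return list(found.values())


# Hypothetical example data.
notes = {
    "o1": Observation("o1", "shadowing", "Clinician turns the monitor to show a result"),
    "o2": Observation("o2", "interview", "Patient says they cannot see the screen"),
    "o3": Observation("o3", "camera study", "Family member stands to look over a shoulder"),
}
principle = Principle(
    "Give everyone in the room access to shared information",
    [
        Insight("Patients and families want to see the data", ["o2", "o3"]),
        Insight("Clinicians already work around the layout", ["o1", "o2"]),
    ],
)
print([obs.obs_id for obs in principle.trace(notes)])  # ['o2', 'o3', 'o1']
```

Keeping `Observation` immutable and storing only IDs in the clusters is one way to make sure interpretation never overwrites the original record.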

Might this research process inhibit creativity?

The converse is true. I think people mix up creativity and aesthetics. When I was starting as a designer, I was always worried about how something was going to look and where my next idea would come from. It was very stressful, particularly if you didn't want to pore over design journals and rely on what was already being done. When I discovered this practice of human-centric design, it calmed me as a designer because it allowed me to come up with solutions that, as a given, had to be aesthetically pleasing but, in the best sense, were more creative because they were solving an unmet need and were deeply aware of the processes that were going on. Sometimes architects and designers aren't aware that when they mess with the physical environment, they're messing with an ecology of behaviors. If they're not as mindful as they should be, they're going to cause harm. So for me, ours is a very affirming process that allows my whole team to be much more creative in their solutions, and the solutions to be more effective for the users as well. It does anything but limit creativity.

Do you use a wide variety of interdisciplinary expertise?

Not only do I believe in cross-disciplinary teams but I believe we're creating new kinds of professions or practices. On our team, we have people with lots of different backgrounds—designers, architects, researchers, and social scientists—and we always work with doctors, nurses, and patients, and often architectural firms as well. When you're handling complex problems, it's very difficult to do anything alone anymore. There must be collaboration. You have to have smart, curious people who have been trained to think and solve problems. In that sense, the broader the backgrounds, the better, because you have diverse people with diverse experiences to solve a hard problem.

There's a need to give people a way to understand what it is they're designing for. That way, they can design something that will help people be more effective or have some kind of positive, measurable outcome. Design education can't be about aesthetics alone. The curriculum will have to change dramatically. You have to give people tools to understand performance and process. For example, what can we learn from neuroscience about how the brain works that we can apply in order to design environments that essentially help the brain perform better? We're beginning to know how people learn. Are we taking advantage of that knowledge in the design of classrooms? So, designers can not only learn a method of investigation; they can be opened up to other areas of inquiry and take that information and make it useful for the practice of architecture.

There's another aspect of practice that has to change. Architecture firms aren't going to be able to do the level of research we're talking about alone. We've got to come together in consortia to do it, where we share everything we learn in the spirit of furthering the science. There's more to be gained in doing that than there is to be lost. There has to be this notion of altruism, if only to be good citizens of the world. In science, everything is shared and everything has to be peer-reviewed. The reward comes in being there first. It goes nowhere if you keep it a secret. If you don't allow it to be scrutinized and measured, it reduces the work we do as designers to a commodity.

The design of outpatient consultation rooms has remained generally unchanged despite major changes to patient care practices, processes, and technology. A study funded by Steelcase and conducted in the Division of General Internal Medicine of the Mayo Clinic in Rochester, Minnesota, utilizes a collaborative research model and rigorous experimental controls and statistical analysis to explore the relationships between specific aspects of room design and patient outcomes. This case study, excerpted from a monograph by the research team, illustrates the explicit use of controlled research techniques to assess design impacts on a setting of great importance to quality of care.

The exam or consultation room, in which millions of outpatient visits take place yearly, may critically impact the quality of health-care in general and of the patient-clinician interaction in particular. While there has been much work examining the patient-clinician interaction, little has been done to understand the extent to which it can be affected by the design of the consultation room.

The traditional consultation room involves a desk designed for the physician's individual use, with the clinician sitting in the primary work position on a chair with enhanced mobility. The patient and care partner (e.g., a family member) sit to the side on fixed chairs, with limited access to the computer screen. Three trends in health-care suggest the need to reconsider the design of the consultation room in terms of its potential effect on patient-clinician interaction: patient-centered care as a manifestation of high-quality care; the shift from acute-care models in the outpatient setting to relationship-based care in the context of chronic care delivery; and the evolution toward information-intensive encounters, in which the electronic medical record and health information on the Internet play key roles in supporting clinical decision-making and patient education.

The Space and Interaction Trial (SIT) was conducted to understand the extent to which a consultation room designed to support modern clinical encounters could affect the patient-clinician interaction compared to a traditional room. SIT was a pilot randomized controlled trial of real clinical encounters to measure, through participant self-report surveys, the effect of a design intervention. In particular, the study evaluated the impact of three specific design features that potentially support the evolved nature of health-care today: the placement of the computer screen, the type and position of the desk, and the arrangement of the seats.

Two consultation rooms, a standard room and an experimental room, were designed. The standard room (Figure 3.11) was modeled as a composite of design guidelines from the Mayo Clinic, the Veterans Health Administration, and design reference books. Key features of this room recognized the physician as the primary user and included: (a) distinct designated spaces for patients and clinicians; (b) a desk surface for clinician use; and (c) a setup of the computer monitor and input devices that favored physician use.

The experimental room (Figure 3.12) was designed to enhance patient-centered care with the use of technology in the consultation. This room featured a half-round table with a computer monitor on an extendable arm mounted on the wall above the table, and it differed from the standard room in the following ways: (a) the desk surface was a shared space for patients and clinicians; (b) the computer monitor and input devices allowed similar access for patients and clinicians; and (c) participants sat on three adjustable chairs of the same design (with glides on the patient chairs for safety and casters on the clinician's chair for mobility) set around the table. The arm-mounted monitor was in full view of everyone sitting at the table and could be adjusted as needed. The computer input devices (i.e., wireless keyboard and mouse) were similarly accessible to patient, care partner, and clinician. Otherwise, the standard and experimental rooms were identical in terms of finishes and equipment.

Figure 3.11. The traditional consultation layout was designed around the physician's access to technology.

Figure 3.12. Research findings demonstrate increased patient involvement in the consultative process was supported by a new layout.

From August until October of 2007, six physicians and 65 patients participated in the study. Sixty-three patients completed the postvisit survey and are included in the analyses. Patients had a median age of 69, and 48 percent were women. Of these, 44 (70 percent) had had prior episodes of care with the same clinician, and 14 (22 percent) had not received care at Mayo Clinic prior to the current episode of care. Table 3.1 describes the baseline characteristics of patients by trial arm. Table 3.2 describes the results of the study across the six questionnaire domains.

Table 3.1. Baseline Characteristics

| Characteristics | Standard room (N = 30) | Experimental room (N = 33) | Total (N = 63) | P-value |
|---|---|---|---|---|
| Age, median (interquartile range) | 68 (58, 74) | 73 (67, 75) | 69 (61, 75) | 0.20[a] |
| Women, n (%) | 14 (47%) | 16 (48%) | 30 (48%) | 1.0[b] |
| Care partner in the room, n (%) | 14 (47%) | 15 (45%) | 29 (46%) | 1.0[b] |
| New to Mayo Clinic, n (%) | 5 (17%) | 9 (27%) | 14 (22%) | 0.37[b] |
| New to this clinician, n (%) | 8 (27%) | 11 (33%) | 19 (30%) | 0.60[b] |

[a] Wilcoxon rank sum test. [b] Fisher exact test.
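The P-values marked [b] come from Fisher's exact test, which suits small counts like these. As a sketch (not the study team's code), the test can be computed from the table's counts with Python's standard library: it sums the hypergeometric probabilities of every 2x2 table with the same margins that is no more likely than the observed one. The function name is our own.

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher exact p-value for the 2x2 table [[a, b], [c, d]].

    Rows are the two rooms; columns are "has the characteristic" / "does not".
    Holding the row and column totals fixed, the top-left cell follows a
    hypergeometric distribution. The p-value sums the probabilities of every
    possible table that is no more likely than the one observed.
    """
    row1 = a + b  # patients in the standard room
    col1 = a + c  # patients with the characteristic, both rooms
    n = a + b + c + d
    denom = comb(n, row1)

    def prob(x: int) -> float:
        # P(top-left cell == x) given the fixed margins.
        return comb(col1, x) * comb(n - col1, row1 - x) / denom

    observed = prob(a)
    lowest, highest = max(0, row1 + col1 - n), min(row1, col1)
    p_value = 0.0
    for x in range(lowest, highest + 1):
        p = prob(x)
        if p <= observed * (1 + 1e-7):  # small tolerance so floating-point ties count
            p_value += p
    return min(1.0, p_value)


# Counts from Table 3.1: (standard yes, standard no, experimental yes, experimental no).
women = fisher_exact_two_sided(14, 30 - 14, 16, 33 - 16)
new_to_mayo = fisher_exact_two_sided(5, 30 - 5, 9, 33 - 9)
print(f"Women: p = {women:.2f}")                     # Women: p = 1.00
print(f"New to Mayo Clinic: p = {new_to_mayo:.2f}")  # New to Mayo Clinic: p = 0.37
```

Both values agree with the table, which is a quick check that the two arms were balanced on these baseline characteristics.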

Table 3.2. Results by Domain

| Domain | Standard room (N = 30) | Experimental room (N = 33) | P-value[b] |
|---|---|---|---|
| Predefined domains, median (interquartile range)[a] | | | |
| Patient satisfaction with the visit | 99.3 (97.2, 100) | 100 (98.6, 100) | 0.127 |
| Mutual respect | 100 (100, 100) | 100 (100, 100) | 0.305 |
| Trust in physician scale | 100 (100, 100) | 100 (100, 100) | 0.984 |
| Communication quality | 100 (92.9, 100) | 100 (92.9, 100) | 0.362 |
| People-room interactions (comfort, clear where to sit and place belongings, access to the computer monitor and input devices) | 81.8 (72.7, 88.6) | 86.4 (77.3, 90.9) | 0.140 |
| Interpersonal-room interactions (clinician-computer-patient interactions) | 87.5 (68.8, 100) | 93.8 (81.3, 100) | 0.145 |

[a] Higher scores are better. [b] Wilcoxon rank sum test.

The data suggests that redesigning the consultation room to accommodate the nature of modern outpatient care (i.e., increased patient involvement in information-intensive encounters) can have a positive impact on the way patients experience the consultation, even in environments in which satisfaction with the standard consultation room is very high.

The experimental room was particularly successful in improving access to the computer display for patients and their care partners. Patients reported physicians making greater use of the electronic information (e.g., their medical record data, radiological images, health information on the Internet) to explain treatments and make decisions. Notably, patients in both rooms endorsed to a similar extent the notion that they participated in conversations about information on the computer screen, while patients in the experimental room were also able to see this information directly on the screen. This experience appears to be reliably captured in the questionnaire, as judged by the extent of interobserver agreement between patients and care partners. The ability to interact with the computer was an area of disagreement, mostly due to care partners indicating greater levels of dissatisfaction than patients with their lack of access to the computer screen in the standard room (as they were seated further away from the screen than the patients).

Although few designers have the research expertise to conduct research of this type, the collaborative nature of this experiment can be emulated to create evidence that benefits from experience outside traditional design realms. Further, although a high level of experimental rigor was used—and that is often off-putting to designers—the applicability to design is clear. The findings point the way to design adding tangible value for the people who own and use the space and they help make a case for a new design solution, thereby enhancing innovation.

Kevin Powell is the Research Director for the General Services Administration (GSA), Public Buildings Service (PBS). His group identifies new technologies and approaches needed to improve PBS's business process. Recent research has focused on three primary areas: sustainability and high-performance building strategies; workplace effectiveness; and optimizing energy efficiency and operations. GSA-sponsored research includes several groundbreaking studies documented in "Workplace Matters" (2006), "Sustainability Matters" (2008), "Assessing Green Building Performance" (2008), "Energy Savings and Performance Gains in GSA Workplaces" (2009), and "The New Federal Workplace" (2009), all published by the GSA (www.gsa.gov). Prior to joining the GSA, Mr. Powell worked with the Center for the Built Environment, University of California, Berkeley.

What sort of research have you been engaged in and for what reason?

I am guided by the philosophy that the buildings we build, the places we make, need to grow out of the requirements of the people who are going to inhabit those buildings and places. The goal is to get things right and the way you get things right is to know what's working and what's not, and get that knowledge to the people who can act on it.

We are an agency that manages over 350 million square feet of commercial space where over a million people work. We know that to make the best decisions, we need the best information. We are mindful that we are public servants, and that a key part of our mission is to deliver superior workplaces for the federal workforce, at the best value to the American taxpayer.

In terms of researching building performance, where are we headed? Broadly, the research program is guided by a Six Sigma-structured approach—Define, Measure, Analyze, Design, and Verify. We also use the Balanced Scorecard technique, so that we're not just looking at the financial domain, but are also looking at our customer, employee, and business process domains. We are committed to postoccupancy evaluations to complete a feedback loop. POEs help us know if we are specifying the performance our tenants need, and if we are getting the performance that we are specifying. Currently, we are developing an exciting new metric called Integrated Design Excellence Analysis (IDEA). The IDEA tool will measure the success of Design Excellence from initial concept designs through fully developed design documents. The IDEA tool will provide GSA a framework of integrated design factors to use in rating projects within a standard scale. We believe that an extension of the IDEA tool, using the same set of factors, will become the basis for future postoccupancy evaluations.

Are these tools an asset to design creativity, or conversely, do they inhibit creative thinking?

I believe—strongly—that an evidence-based framework enhances creativity. A clear framework provides freedom, the spurring of thought that happens when the problem and success criteria are well-defined, and you can fully focus on pushing the envelope of the solution. People want innovation and creativity. There is no machine that manufactures that. What you need is context where you're encouraged—even forced—to consider multiple factors.

Experts bring an enormous amount of information and experience to bear. What spurs their creativity is the capacity to make unexpected leaps, where the undefined becomes defined. But all of this can only happen naturally if you have a framework: Where are you starting from? Where are you going? What does success look like?

The tools we rely on to make our decision-making stronger spur our creativity. The inception of GSA's research program began with a series of workplace analysis tools—time utilization studies, social network analysis, end-user satisfaction surveys, and focus groups. Taken together, these tools showed that people were no longer tethered to their offices to access information. Technology enabled them to work anywhere, anytime. From this analysis, we knew we couldn't just continue to build office space as if nothing had changed since the 1970s; we needed to creatively identify new solutions.

Objective information also spurs buy-in. When we showed a customer evidence that two-thirds of the time, across multiple industries, knowledge workers are not at their desk, they thought that was interesting. When we showed them that we had observed that exact pattern to be true in their particular space, a lot of conversation followed, and the designer earned a license to be creative in developing a wide range of spaces, to change the size and location of offices and cubes in a way that could never have occurred without that underlying framework of analysis.

How rigorous does evidence have to be to be credible? How much is enough to be considered "proof" and is the process scalable to all design projects?

As a public institution, we are required to be both transparent and accountable. We are currently facing mandates that require us to pursue very ambitious sustainability goals: a 55 percent reduction in energy by 2015 and carbon neutral buildings by 2030. Research implies innovation, risk, and reward. We are fortunate that our initial efforts appear to be paying off. We just completed a study confirming that, compared to national baselines, our recent buildings use 26 percent less energy, emit a third less carbon dioxide, and cost 13 percent less to operate, while their occupants are 27 percent more satisfied. Having access to these objective facts has been important in validating, and further shaping, our approach to high-performance building.

We are also fortunate at GSA that the scale of our organization—we have nearly 9,000 buildings in our inventory—allows us to research the best design, operation, and management strategies with an investment of only a small percentage of our operating revenue.

What aspects of design education and the current practice model do you think should change?

Every assignment in the design studio begins with a program of requirements. Solving that program with bold creativity and unexpected innovation is the goal of every design student, and it should be the goal of every design professional. What needs to be emphasized is that being clear at the outset about the criteria for success, and being able at the end of the day to provide evidence that those criteria have been achieved, is the foundation of a happy ending.

Being a public agency, the GSA has responsibilities to the environment and the taxpayers. Reliable measurement is key to understanding and managing the performance of its portfolio of facilities, including energy use, operating costs, and occupant productivity. With approximately 342 million square feet of office space housing 1.1 million workers, this is a large opportunity and challenge.

The foundation for sustainable design has been in place for several years, providing a number of use case opportunities. GSA has applied sustainable design principles to its building projects since 1999; and in 2003 established a target of U.S. Green Building Council (USGBC) Leadership in Energy and Environmental Design (LEED) Silver–level certification for new construction. Federal government mandates enacted in 2005 and later have raised the bar further, demanding a way to ensure that building performance is measured.

In this 2008 whole building performance measurement (WBPM) study, 12 GSA buildings were evaluated to determine how well GSA's sustainably designed buildings are performing. The study's intent was to gather holistic evidence about the outcomes of sustainable design approaches, enabling the GSA to make effective decisions about future design.

Figure 3.16. Building orientation along with horizontal and vertical fins helps to optimize the energy performance of the EPA Headquarters in Denver, Colorado

Measures included environmental performance, financial metrics, and occupant satisfaction. For each building in the study, at least 12 months of data were collected from utility bills, facilities maintenance schedules and budgets, and surveys. Performance data were identified, normalized, and analyzed using the Building Cost and Performance Metrics: Data Collection Protocol of the Department of Energy Federal Energy Management Program. The performance of the GSA buildings was compared with the average performance of U.S. commercial buildings, using these benchmarks: energy use intensity (EUI) from the Commercial Buildings Energy Consumption Survey (CBECS) for commercial buildings constructed between 1990 and 2003; CO2 emissions from Energy Star; maintenance costs from the 2006/2007 IFMA and BOMA surveys; water use from the Federal Water Use Index; and occupant satisfaction from the Center for the Built Environment (UC Berkeley) Occupant Satisfaction Survey.
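The normalization step can be illustrated with energy use intensity (EUI), the per-square-foot figure behind comparisons such as 65 vs. 88 kBtu/sf/yr. The sketch below is a generic illustration, not the DOE/FEMP data collection protocol itself. It assumes the utility bills report electricity in kWh and natural gas in therms and uses standard site-energy conversion factors (1 kWh = 3.412 kBtu, 1 therm = 100 kBtu); the function name and its inputs are hypothetical.

```python
# Standard site-energy conversion factors
KBTU_PER_KWH = 3.412     # electricity
KBTU_PER_THERM = 100.0   # natural gas


def energy_use_intensity(monthly_kwh, monthly_therms, gross_sf):
    """Hypothetical helper: annual site EUI in kBtu per square foot per year.

    Assumes exactly 12 monthly utility-bill readings for each fuel and the
    building's gross floor area in square feet.
    """
    if len(monthly_kwh) != 12 or len(monthly_therms) != 12:
        raise ValueError("expected 12 months of utility data per fuel")
    if gross_sf <= 0:
        raise ValueError("gross floor area must be positive")
    # Convert each fuel to a common unit (kBtu) before summing
    total_kbtu = sum(monthly_kwh) * KBTU_PER_KWH + sum(monthly_therms) * KBTU_PER_THERM
    # Dividing by floor area makes buildings of different sizes comparable
    return total_kbtu / gross_sf


# Hypothetical 100,000 sf building with flat monthly consumption
eui = energy_use_intensity([150_000] * 12, [1_500] * 12, 100_000)
print(f"{eui:.1f} kBtu/sf/yr")
```

For that hypothetical building the result is about 79.4 kBtu/sf/yr. Requiring exactly 12 months keeps the figure annual; a real protocol would also need to handle other fuels and missing or partial months.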

Overall, the best performance was achieved by buildings designed with an integrated approach to the various aspects of sustainability defined by LEED: site development, water savings, energy efficiency, materials selection, and indoor environmental quality. The two LEED Gold–level buildings were the only ones that performed at consistently high levels, although they did not lead on every measure. The evidence shows that integrated sustainable design is helping the GSA deliver buildings that use less energy, have lower operating costs, and foster occupant satisfaction (which other research has linked to productivity).

Specifically, the buildings in this study use 26 percent less energy (65 kBtu/sf/yr vs. 88 kBtu/sf/yr), have 13 percent lower aggregate maintenance costs ($2.88/sf vs. $3.30/sf), and produce 33 percent less CO2 (19 lbs/sf/yr vs. 29 lbs/sf/yr). Buildings designed with an integrated approach tended to perform strongly across the board, while others achieved high ratings on only certain measures. Buildings designed to the California Title 24 energy code or to Energy Star had high energy performance. Operating costs were lowest when sustainability was considered in all aspects of design, including recycling and cleaning.
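The percentages follow directly from the per-square-foot figures. The short check below recomputes each reduction as (baseline - GSA) / baseline. It reads the maintenance baseline as $3.30/sf, the value consistent with the stated 13 percent, and the helper name is hypothetical.

```python
def percent_reduction(baseline: float, observed: float) -> float:
    """Percentage by which `observed` falls below `baseline` (hypothetical helper)."""
    return (baseline - observed) / baseline * 100


# (national baseline, GSA study buildings), per square foot
metrics = {
    "energy use (kBtu/sf/yr)": (88, 65),
    "maintenance cost ($/sf)": (3.30, 2.88),  # baseline read as $3.30/sf
    "CO2 emissions (lbs/sf/yr)": (29, 19),
}

for name, (baseline, gsa) in metrics.items():
    print(f"{name}: {percent_reduction(baseline, gsa):.1f}% lower")
```

Energy and maintenance come out at about 26 and 13 percent, matching the text. The rounded CO2 figures give about 34 percent, which is consistent with "roughly a third."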

A frequent question from corporate real estate and facilities managers is whether there is an ROI for sustainable design beyond energy savings. There are indications that there is. A 2002 survey of 800 MBAs found that 80 percent said their motivation and company loyalty were enhanced by the company's sustainability initiatives. In the same study, 79 percent said they would forgo income to work for a company with a credible sustainability strategy.

Figure 3.17. Department of Homeland Security, Omaha, Nebraska. Recycled brick mulch from local brick plant. Full cut-off light fixtures to reduce light pollution. Building is 66 percent more efficient than ASHRAE 90.1 requires. Soffit overhang on western facade. White roof to reduce heat island effect.

This survey strengthens such evidence with higher-than-average satisfaction ratings for the GSA buildings on air quality, cleanliness, thermal comfort, acoustics, and lighting. Change management and periodic fine-tuning of building systems also appear to add value.

This research gives the GSA data to objectively assess building performance and the return on investment in LEED and other design approaches. It showed that the top-performing quartile of buildings already meets the 2015 federal mandates for reducing metered energy and water use, providing guidance for future building programs. Areas for improvement and questions for future research also surfaced.

More broadly, practitioners can apply the methodology to their own work. The full study cited here contains tools, formats, and standards that may be of use.

K. M. Fowler and E. M. Rauch, Assessing Green Building Performance: A Post-Occupancy Evaluation of 12 GSA Buildings, PNNL-17393, Pacific Northwest National Laboratory, Richland, WA, 2008.

www.gsa.gov/appliedresearch.

Survey of 800 MBAs from 11 Top International Business Schools, Stanford Graduate School of Business, 2002 GlobeScan International Survey, MORI.

Paco Underhill has spent more than 25 years researching the different aspects of shopping behavior, earning his status as a leading expert and pioneer in the field. Envirosell, the firm he founded, is the world's principal testing agency for prototype stores and retail bank branches. Beyond retail, the firm's practice includes public libraries, airports, train stations, stadiums, museums, and medical offices. His research shows how factors such as gender, "trial and touch," and human anatomy shape today's world. His first book, Why We Buy: The Science of Shopping, has been published in 27 languages and has sold more copies than any other retail book in history. It was reissued in 2009 as Why We Buy for the 21st Century. His second book, Call of the Mall: The Geography of Shopping, was published in 2004.

How do you use evidence, what kinds, and why is it important to your clients and your work?

I am delighted that twenty-first-century designers recognize that their responsibility isn't the construction of monuments but is focused on being able to serve the needs of people.

My job as a researcher is making frames. Let me construct the frame within which you focus your creative energies. I can make you a better designer without limiting your artistic or creative palette.

The architecture profession is struggling with its relationship to financial success. An architect can design an iconic headquarters building, but that building will have no effect on the financial health of the company. An architectural firm's fees are based on selling hours rather than on any form of creative impact on the client's bottom line. In store design, there is a long history of award-winning, widely published projects that were financial disasters and closed before the prizes were awarded or the magazine spread was published.

Design desperately needs to be more answerable to its clients and evidence is one of the ways of starting that process. So, how do you do it?

In 2009 it's easy to collect data. Turning that data into something actionable and useful to a building operator or designer is a lot tougher. For 25 years, I've used observation, interviews, mapping, and tracking systems to try to better understand what happens.

There is a new generation of designers who are empowered by collaboration with researchers. Early in my career I had the opportunity to interact with a number of name-brand architects, all of them talented and driven...but breathtakingly arrogant. Over the past ten years the design profession has changed. The very fact that you are writing your book is evidence of that.

Some of the changes are driven by very practical economic realities. To charge a premium for design services, there has to be some concrete evidence of value created ("This package did this for this brand"; "This store design increased sales") or the perception of value ("This building or home resulted in this measurable result"). Once you start to take measurements, design is no longer a strictly creative process but one that carries accountability.

Do you believe that being answerable to your clients will necessarily result in the best solutions for the community?

If you design only what someone asked you to, then why did they need you in the first place? Clients often don't know what to ask for, so you have to be proactive in understanding their operating culture. That often involves some sort of discovery process at the front end. One of the historic problems for the design professions is whether that process happens in-house or is farmed out. If it happens in-house, it becomes subservient to the design process itself. I think it's better to have someone who could, if need be, turn around and yell at you for turning in preliminary design concepts that have no relationship to the fact-finding done at the front end of the project.

The design profession has to be a champion of the process of getting to design. Often our work with design firms is making sure we have everybody on the bus; then it's the designer's job to drive the bus. But getting everybody on board first is really critical.

How much evidence is enough and is it hard or transitory?

Think about what made a good house in 1985 and what makes one in 2009. Some things are the same and some are different. Yes, there are energy management issues and advances in building technologies, but we as people have changed, too. According to the most recent census data, fewer than 24 percent of American households have a mother, a father, and dependent children living under the same roof. Yet how much new home construction is still about one master bedroom and a bunch of smaller ones? Where are the home gym and the home office? Ever tried to keep a McMansion clean?

What tools and methods do you use to create or capture evidence?

We capture data in a variety of ways. For our observational data, our primary technology is humans and paper. We supplement this with video recordings using a combination of HDD and 8mm cameras. Video is analyzed by humans using video splitters to view multiple points simultaneously.

For survey data, we use PDAs, mostly Palm E2 and Palm CENTRO, with wireless capabilities to transmit data. Wireless transmission allows us to monitor the interviewers' progress every few hours. The Palms are used mostly for surveys but have also been used for observational data when the capture points are linear.

Data is analyzed using a combination of SPSS, SPSS Desktop Reporter, MS Access, and MS Excel. While many deliverables are similar, each project requires a manual run of the data to account for its particular differences. We use a proprietary global database to compare data points across categories as well as countries.

Maps are made with a combination of software, from basic PowerPoint shapes to Google SketchUp and Adobe Photoshop. Much of the mapping is done manually, again because of the unique nature of every map and the data points it illustrates. In some cases macros are used to speed up the process.

We use technology selectively. There is so much technology out there that could make our data collection more efficient but with every piece of technology you give up something. Much of our work depends on seeing things that are not always obvious and much of this seeing needs to be done by a pair of eyes and not a CPU.

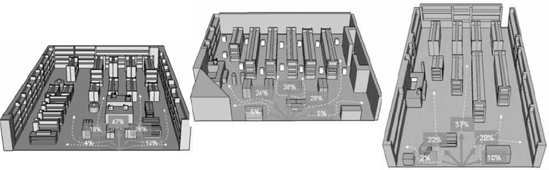

Figure 3.19. Entrance pathway mapping research reveals shoppers' immediate directional tendencies upon entering the store. Percentages do not reflect the shopping of specific fixtures. Fixtures are included for illustration purposes only.

Figure 3.20. This store coverage map illustrates the percentage of shoppers moving from the front to the rear of the store. The stores are divided consistently into four roughly equivalent sections for this measurement.

A simple example of this is something that we do routinely—a store entrance count. This is basically recording how many people enter the store and capturing some demographic information like age and gender.

There is facial recognition software that we can use to count the number of people entering while simultaneously recording the age and gender of each person. Once you set up your parameters, the software analyzes the video and gives you your data. However, the fact that you have to set up these parameters limits what the computer sees. In contrast, when we use humans to capture these numbers, they see not only the parameters but also the surrounding area. So in a simple entrance count, the human observer can also note that the entrance is too narrow for two carts, that traffic jams are common at the entrance, or that customers peek into the store but do not enter. (Maybe the cash wrap line is visible from the entrance and it is too long.) A computer can never tell you that, because you can't anticipate what you might see, and so you can't set the parameter. When we purchase technology, we look not only at how productive it makes us but also at what we would lose and gain by using it.

Figure 3.21. The first-destination map documents the percentage of entering shoppers who make each setting their first stop.

In the world of store design, some technologies have intruded where the fascination is with the technology itself rather than with the human intelligence needed to turn it into something useful. One of the important qualifications of a new tool is how often you can use it and still add value. How often can I go back and redo a process, and redo it again, and learn something new that I can apply? For example, if I have a floor plan with all the tracks of where people are walking, is it something I want every two weeks or every two years? Something might be interesting once but not as an ongoing process. As a toolmaker looking at the world of design, I often look for the least amount of technology I need to get my processing engine working.
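A front-to-rear coverage measure like the one in Figure 3.20 can be derived from floor-plan tracks once they have been coded. The sketch below is a hypothetical illustration, not Envirosell's procedure. It assumes a human observer has already reduced each tracked shopper to the deepest of four store sections reached (1 = front, 4 = rear), and it reports the percentage of shoppers who reached each section or went beyond it. The function name and input format are assumptions.

```python
from collections import Counter


def coverage_by_section(deepest_sections: list[int], n_sections: int = 4) -> dict[int, float]:
    """Hypothetical helper: percentage of tracked shoppers reaching each section or beyond.

    deepest_sections holds one entry per shopper: the deepest section reached,
    where 1 is the front of the store and n_sections is the rear.
    """
    total = len(deepest_sections)
    if total == 0:
        return {k: 0.0 for k in range(1, n_sections + 1)}
    counts = Counter(deepest_sections)
    coverage = {}
    reached = 0
    # Walk from rear to front so each section also counts everyone who went deeper
    for k in range(n_sections, 0, -1):
        reached += counts.get(k, 0)
        coverage[k] = 100 * reached / total
    return dict(sorted(coverage.items()))


tracks = [1, 2, 2, 3, 4, 4, 1, 2]  # hypothetical coded tracks for eight shoppers
print(coverage_by_section(tracks))
```

For these eight hypothetical tracks the result is 100, 75, 37.5, and 25 percent from front to rear. Coding the tracks by hand first keeps the human observation Underhill describes, and the arithmetic only summarizes it.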

Does evidence enrich or inhibit design creativity?

As a culture that consumes culture, we can no longer tolerate a one-to-one relationship between an artist and their work. That's an act of masturbation. It is exciting that there is a generation of designers out there now that recognizes that there are collaborative processes which are the focal point of getting desires and information out and into the design process...and that making it happen is really exciting. If we look at what's exciting in our culture, such as making a movie, those things are not solo acts.