The second experiment came about in an unexpected way. The company sd&m held a technical conference near Frankfurt, Germany, sometime in 1997. A number of speakers were invited, and I was among them. I talked about design patterns, our early results with pattern documentation, and how important it was to see whether design patterns really worked. I ended by saying that I was looking for subjects for a pattern experiment and that, as a benefit for participating, I would offer an intensive pattern course discussing all the major patterns known at the time. The president and founder of the company, Ernst Denert, sat in the front row. When I had finished, he stood up, walked to the stage, and asked for my microphone. What was going to happen next? Taking away the speaker’s microphone is not exactly how the question-and-answer period after a talk works. He then turned to his employees (there were perhaps 150 in the room, most of them developers) and said: “You heard what Professor Tichy said. I don’t have anything to add to that. Who is going to participate?” After a few seconds of silence, a lot of hands went up! I’ve never had more effective encouragement for participation in any experiment, ever. After preparation, and allowing for various scheduling conflicts, we ended up with 29 professional developers from sd&m participating. The experiment was conducted at sd&m’s Munich office in November 1997.

The experiment sought empirical evidence about whether using design patterns is beneficial, and whether some patterns are more difficult to use than others. In particular, we had noticed that developers sometimes went overboard in their pattern enthusiasm and used them in situations where simpler solutions would have been good enough. Was this overkill a problem? Chunking theory would suggest that it was not, because the size of a chunk does not matter. But the answers could be discovered only by observing programmers modifying programs with patterns and equivalent ones without patterns.

As before, the experiment investigated software maintenance scenarios that employ various design patterns. This time we worked with professionals. The structure of the experiment was similar to the previous one, except that it employed a pre- and a post-test, and it took two full days. On the morning of day 1, the pre-test assessed the performance of programmers who had no knowledge of design patterns. On the afternoon of day 1 and the morning of day 2, participants took an intensive pattern course, followed by a post-test on the second afternoon. The participants were 29 professional software engineers with an average of 4.1 years of experience. Participants worked on paper and had unlimited time; they all handed in their solutions within three hours. There were four programs on which maintenance tasks were performed. Each program existed in two versions: a pattern version containing one or two design patterns and an alternative version that was functionally equivalent but used a simpler structure instead of the pattern. Maintenance tasks were performed on both the pattern and the alternative versions, in both the pre- and the post-test. The pre- and post-test allowed us to check whether pattern knowledge improved performance and whether there were any patterns that were easy to grasp without prior familiarity.

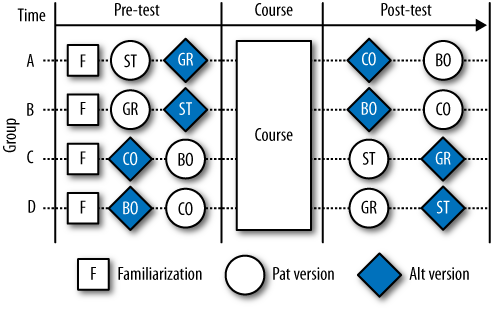

Figure 22-3 summarizes the experimental design. To control for learning and fatigue effects, the order of programs is varied (counterbalancing), and data are collected from each subject on both pattern (Pat) and alternative (Alt) programs. ST, GR, CO, and BO are the programs. The familiarization phase (letter F) introduces participants to the experiment process.
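The idea of counterbalancing can be sketched in a few lines of Python. This is a hypothetical illustration, not the actual assignment shown in Figure 22-3: the function name `counterbalanced_schedule`, the use of one group per program, and the rule for alternating Pat and Alt versions are assumptions made for the example. Only the program labels ST, GR, CO, and BO come from the text.

```python
PROGRAMS = ["ST", "GR", "CO", "BO"]

def counterbalanced_schedule(programs):
    """Build one task order per group (hypothetical scheme, not Figure 22-3).

    Each group gets a cyclic rotation of the program list, so every program
    appears once in every position across groups. Versions alternate between
    Pat and Alt, and the starting version flips for the second half of the
    groups, so no program is tied to a single version.
    """
    n = len(programs)
    schedule = {}
    for g in range(n):
        order = programs[g:] + programs[:g]  # cyclic rotation by g
        versions = [
            "Pat" if (i + g // 2) % 2 == 0 else "Alt" for i in range(n)
        ]
        schedule[f"group{g + 1}"] = list(zip(order, versions))
    return schedule

if __name__ == "__main__":
    for group, tasks in counterbalanced_schedule(PROGRAMS).items():
        print(group, tasks)
```

Under these assumptions, every group works on all four programs, sees two pattern and two alternative versions, and each program is handled in its Pat version by half of the groups and in its Alt version by the other half. Differences caused by task order or tiredness are thereby spread evenly over both versions instead of favoring one.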

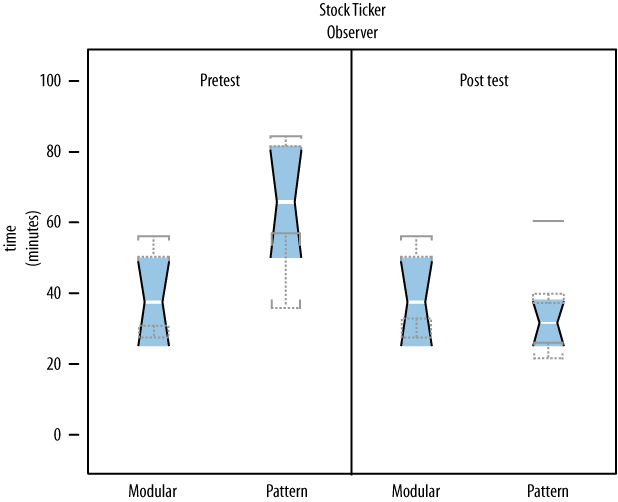

The results varied by pattern. Figure 22-4 shows the time participants took to complete maintenance tasks on the Observer pattern in program ST. The diagram contains four boxplots showing how modification times are distributed. Inside the hourglasses are the inner 50% of the data points; the median value is in the middle of the hourglass. The left side shows the pre-test. The pattern solution took longer to modify than the alternative (“modular”) solution. Apparently, an unknown pattern introduces additional complexity that costs extra time. After the pattern course, however, the situation changes. The alternative version still takes about the same time, but now the pattern solution is completed more quickly, even though it is more complex. This situation is exactly what chunking theory predicts.
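To make the boxplot reading concrete, the following minimal sketch computes the quantities such a plot displays: the median and the quartiles that bound the inner 50% of the data points. The completion times are invented purely for illustration and are not data from the experiment; `box_summary` is a hypothetical helper, and the quartiles use the default method of Python's `statistics.quantiles`, which may differ slightly from the method used for Figure 22-4.

```python
from statistics import median, quantiles

def box_summary(times):
    """Return the values a boxplot shows for one group of measurements."""
    # quantiles(..., n=4) returns the three cut points Q1, Q2, Q3.
    q1, _, q3 = quantiles(times, n=4)
    return {
        "q1": q1,                 # lower edge of the box
        "median": median(times),  # line in the middle of the box
        "q3": q3,                 # upper edge of the box
        "iqr": q3 - q1,           # height of the box (inner 50% of the data)
    }

# Invented completion times in minutes, NOT measurements from the study.
made_up_times = [30, 35, 40, 45, 50]
summary = box_summary(made_up_times)

if __name__ == "__main__":
    print(summary)
```

Comparing two groups then amounts to comparing their medians and checking how much their boxes overlap, which is how the pre-test and post-test results in the figure are read.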

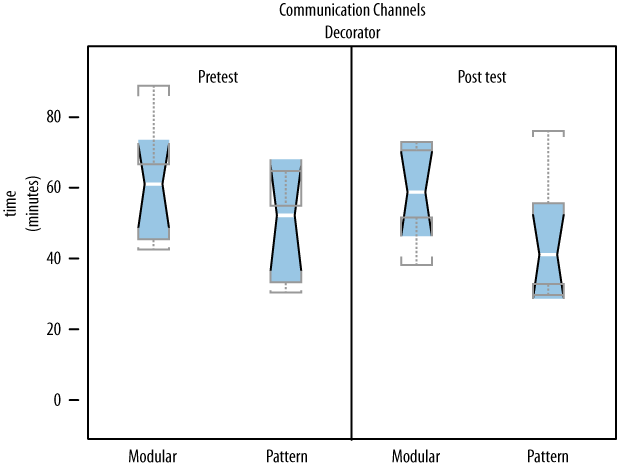

Figure 22-5 shows the time taken for Decorator. Apparently, working with the pattern rather than the alternative saves quite a bit of time. However, there is a surprise: there is no difference between pre- and post-test! Apparently, Decorator is easy to grasp and modify, even if not known to the programmers. Participants need no prior exposure to the Decorator chunk.

Not all results are this clear, though. For instance, Composite and Abstract Factory together showed no difference between the two versions in either pre- or post-test. Visitor caused great confusion. In the pre-test, the alternative solution took longer than the Visitor solution, whereas in the post-test the relation was reversed. Apparently, Visitor is difficult to understand in the relatively short time it was covered in the course. Altogether, there were nine maintenance tasks. In most, we found positive effects from using design patterns: either their inherent extra flexibility required no more maintenance time, or maintenance time was reduced compared to the simpler alternative. In a few cases, we found negative effects: the alternative solution was less error-prone or required less maintenance time. In other words, not all design patterns are equally beneficial. More details, broken down by individual maintenance task, can be found in [Prechelt et al. 2001].

The original experiment was replicated at the Simula Lab in Norway in 2002, with major extensions. We used the same sample programs and maintenance tasks, and I even gave the same course with identical slides. However, realism was increased by using a real programming environment instead of pen and paper. A total of 44 paid professionals from multiple major consultancy companies participated. Elapsed time and correctness were analyzed using regression models. Together with online logging of participants’ work, a better understanding of the results was possible. The data indicate quite strongly that some patterns are much easier to use than others. The patterns Observer and Decorator were grasped and used intuitively, even by subjects with little or no knowledge of patterns. Visitor caused difficulties. This confirmation of the earlier observations was important, because it applied to actual programming, not just exercises on paper. Also, participants varied greatly in professional experience (from less than a year to 20 years or more), so the results generalize much better.

The implication is that design patterns are not universally good or bad, but must be used in a way that matches the problem and the programmers’ knowledge. When approaching a program with documented design patterns, even short training can improve speed and quality of maintenance activities. When choosing between patterns and simpler, less flexible alternatives, it is probably wise to choose the patterns because unexpected new requirements often appear and the extra flexibility will be useful.