Scalability, reliability, and availability architectures

IBM Content Manager OnDemand (Content Manager OnDemand) is a lightweight process; that is, the Content Manager OnDemand code itself does not require extensive system resources to perform its functions. Content Manager OnDemand installations scale to handle both large quantities of data and many users. The main contributor to resource consumption on the server is the total quantity of data that is being stored or retrieved at any time. This chapter focuses on the scalability, reliability, and availability of Content Manager OnDemand systems.

In this chapter, we cover the following topics:

12.1 Scalability, reliability, and availability defined

This section defines scalability, reliability, and availability and how they relate to a Content Manager OnDemand system.

Scalability

Scalability is the ability of a Content Manager OnDemand system to handle a growing amount of work with no performance degradation. Because a Content Manager OnDemand system’s performance improves as hardware and network resources are added, it is defined as a scalable system. Two types of scalability are defined:

•Horizontal scalability (or scale out): This type of scalability is achieved by adding more nodes, systems, or logical partitions (LPARs) to a Content Manager OnDemand instance. An example of horizontal scalability is adding more object servers to a Content Manager OnDemand instance.

•Vertical scalability (or scale up): This type of scalability is achieved by adding more resources to a single node in a Content Manager OnDemand instance. Typically, this type of scalability involves faster processors, more processors, or more memory, disks, or networking hardware.

Content Manager OnDemand is both horizontally and vertically scalable.

Reliability

Reliability is the ability of Content Manager OnDemand to perform and maintain functionality during regular workloads and during peak workloads. Peak workloads might occur regularly (for example, when everyone signs on at 9:00 a.m.) or periodically (at the end of the month when more processing than usual occurs). Or, peak workloads might occur sporadically (for example, when a special event occurs, such as a sales drive that results in more users using the system).

Availability

Availability is a measure of the time that a Content Manager OnDemand server or process functions normally, and of the time that the recovery process requires after a component failure. System availability is defined by its downtime (unavailability): availability is the amount of time that the system is fully functional and accessible by all users.

Availability requires that the system provides a degree of redundancy to eliminate single points of failure (SPOFs). The greater the redundancy that is provided, the higher the availability of the system. A single physical machine, even one with redundant components, is still a SPOF. For this reason, a high availability system topology typically involves horizontal scaling and redundancy across multiple machines.

High availability

High availability implies that no human intervention is needed to restore operation if a failure or outage occurs. A highly available system has an availability of at least 99%, which allows an average of about 15 minutes (1% of 1,440 minutes, or 14.4 minutes) each day to perform maintenance tasks, during which the system is inaccessible to users. The degree of high availability that is achieved depends on the amount of redundancy within the system and the degree to which this redundancy is automatically enabled.
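The relationship between an availability percentage and the downtime it permits is simple arithmetic. The following Python sketch converts an availability target into permitted downtime per day and per year; the function name is illustrative only and is not part of Content Manager OnDemand.

```python
MINUTES_PER_DAY = 24 * 60  # 1,440 minutes


def allowed_downtime_minutes(availability_pct: float,
                             period_minutes: float = MINUTES_PER_DAY) -> float:
    """Return the downtime, in minutes, that an availability target permits over a period."""
    if not 0.0 <= availability_pct <= 100.0:
        raise ValueError("availability must be between 0 and 100 percent")
    return period_minutes * (1.0 - availability_pct / 100.0)


if __name__ == "__main__":
    for pct in (99.0, 99.9, 99.99):
        per_day = allowed_downtime_minutes(pct)
        per_year_hours = allowed_downtime_minutes(pct, 365 * MINUTES_PER_DAY) / 60
        print(f"{pct}%: {per_day:.1f} minutes/day, {per_year_hours:.1f} hours/year")
```

At 99%, the result is 14.4 minutes per day (87.6 hours per year); each additional "nine" of availability reduces the permitted downtime by a factor of 10.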

Basically, two redundancy techniques are available:

•Passive redundancy: This redundancy is achieved by including enough excess capacity in the design to keep the system running, with a decline in performance, when a component fails. An example is two Content Manager OnDemand servers (known as ARSSOCKD on z/OS and Multiplatforms) that access the same system tables and archive. If one server fails, the other server is available to take on the workload.

•Active redundancy: This redundancy is used to achieve high availability with no performance decline. In this case, at least double the required resources are allocated to the Content Manager OnDemand system. For example, if the peak workload requires 1.5 Content Manager OnDemand servers, three Content Manager OnDemand servers are configured to work in parallel. If one of the servers fails, the other two servers can take on the full workload with no performance degradation.
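The difference between passive and active redundancy can be made concrete with a small capacity calculation. This Python sketch assumes that every server has the same capacity (one unit) and that the workload spreads evenly over the surviving servers; real workloads are rarely that uniform, and the function names are hypothetical.

```python
import math


def active_redundancy_servers(peak_servers: float) -> int:
    """Servers to configure when at least double the peak requirement is allocated."""
    return math.ceil(2 * peak_servers)


def capacity_after_failures(configured: int, peak_servers: float, failures: int = 1) -> float:
    """Fraction of the peak workload that the surviving servers can carry.

    Assumes equal-capacity servers (1.0 unit each) and an evenly spread
    workload; 1.0 means no performance decline.
    """
    surviving = max(configured - failures, 0)
    return min(surviving / peak_servers, 1.0)


if __name__ == "__main__":
    peak = 1.5  # peak workload, in units of one server's capacity
    print("active redundancy:", active_redundancy_servers(peak), "servers")
    for configured in (2, 3):
        share = capacity_after_failures(configured, peak)
        print(f"{configured} servers, one failed: {share:.0%} of peak served")
```

With a peak of 1.5 servers, two servers (passive redundancy) can serve only about two-thirds of the peak after one fails, whereas three servers (active redundancy) still serve all of it.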

Systems typically become unavailable because of the lack of one or more of the following activities:

•Change control procedures (a failure to implement the appropriate procedures from installation verification through performance testing before you place the system into production)

•Monitoring of production system components (including total system workload, hardware, and network issues)

•Implementing high availability solutions (redundant systems and network connections)

•A comprehensive backup (and restore) process that is tested on a routine basis

Implementing highly available, high-performance systems has a cost, which must be weighed against the cost of not implementing such systems.

The following sections provide more information about example system implementations that allow high performance, scalability, reliability, and availability.

12.2 Scaling a Content Manager OnDemand system

A Content Manager OnDemand instance can be scaled from a single system image that performs all of the required tasks (data loading, library storage, and object storage) to a multiple system/multiple LPAR configuration, which offers higher levels of performance and availability. When a Content Manager OnDemand instance is distributed among multiple systems, these systems might be configured in the following ways:

•Single technology systems: The Content Manager OnDemand instance consists of systems that are of the same architecture. For example, all systems might be AIX systems.

•Multiple technology systems: The Content Manager OnDemand instance might consist of systems of different architectures. For example, the library server and an object server might be on a z/OS system; two other object servers might be on AIX systems; and another object server might be on a Microsoft Windows system.

In both of these scenarios, the configuration results in a single Content Manager OnDemand instance view from both the administrative and user perspectives.

With this flexibility and scalability, you can configure Content Manager OnDemand systems so that they meet a wide range of both workload and operational requirements.

Examples of these configurations are illustrated in the figures in this section. These figures show only a sample of the possible configurations and are used to illustrate the basic scalability features.

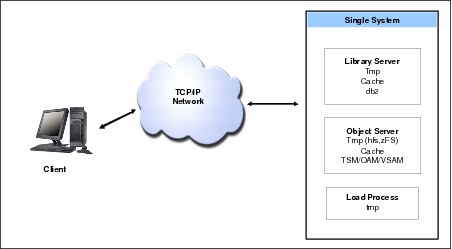

Figure 12-1 illustrates a single Content Manager OnDemand instance. In this figure, the Content Manager OnDemand server supports the library server, one or more object servers, and one or more load processes. The following sections provide examples of how the Content Manager OnDemand server can be scaled both vertically and horizontally.

Figure 12-1 Scalability: A single instance (simple client/server) setup

12.2.1 Vertical scalability

You can scale Content Manager OnDemand vertically by expanding the system, by using a larger system, through application design, or through parallel archive access.

Expanding the system

Content Manager OnDemand is vertically scalable if the system that it is running on is scalable. Vertical scalability is achieved by adding more hardware to the system. This hardware might be in the form of faster processors, more processors, memory, disks, I/O, or network capacity.

Vertical scalability is limited by the architectural hardware constraints of the system. For example, if the system supports only 24 GB of memory, that memory limitation can be overcome only by buying a larger system.

Using a larger system

You can scale a Content Manager OnDemand system vertically by using a larger system in one of two ways:

•Installing a larger system within the same family and architecture, for example, moving from an entry-level AIX system to an enterprise-level AIX system

•Installing a larger system from a different architecture and family, for example, moving a Content Manager OnDemand server from a Windows system to an AIX system

Application design

Modern computer systems contain multiple cores and can perform multithreaded processing, and their operating systems allow operations to run in parallel. To take advantage of these hardware and software features, an application must be designed to run in parallel at multiple levels. Content Manager OnDemand takes advantage of both, running in parallel at the process level and at the thread level.

At the process level, the Content Manager OnDemand server runs multiple processes:

•A library server

•One or more object servers

•One or more load jobs

•The expiration process

At the thread level:

•The library server is multithreaded: it can service multiple incoming data requests on different threads and perform multiple database queries in parallel.

•The object server is also multithreaded so that multiple users can concurrently retrieve data from the Content Manager OnDemand archive.

Parallel archive access

When you access the Tivoli Storage Manager or object access method (OAM) archives, a store or retrieve request is sent to Archive Storage Manager (ASM). ASM then either stores or retrieves the data and returns the result to the Content Manager OnDemand server. If these requests are processed serially, the archive storage access mechanism becomes a bottleneck at high transaction rates. To overcome this potential bottleneck, Content Manager OnDemand implements connection pooling to the storage archives.

Content Manager OnDemand maintains a pool of connections to the archive. When an archive store or retrieve request is received, an available connection is selected from the pool to perform the request. Pooling provides faster access to the archive (by eliminating the startup process each time a connection is requested) and allows store and retrieve operations to run in parallel.
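The general connection pooling technique can be sketched in Python as follows. This is not Content Manager OnDemand code: ArchiveConnection is a hypothetical stand-in for a storage manager session, and the pool size and worker count are arbitrary. The sketch shows the two benefits described above: sessions are opened once and reused, and several requests run in parallel, each on its own pooled connection.

```python
import queue
import threading
from concurrent.futures import ThreadPoolExecutor
from contextlib import contextmanager
from typing import Iterator


class ArchiveConnection:
    """Hypothetical stand-in for a session with an archive storage manager."""

    opened = 0                      # how many sessions were ever opened
    _count_lock = threading.Lock()

    def __init__(self) -> None:
        # Opening a session is the costly start-up step that pooling avoids repeating.
        with ArchiveConnection._count_lock:
            ArchiveConnection.opened += 1

    def retrieve(self, object_id: str) -> bytes:
        return f"data-for-{object_id}".encode()


class ConnectionPool:
    """Fixed-size pool: connections are opened once and then reused."""

    def __init__(self, size: int) -> None:
        self._idle: "queue.Queue[ArchiveConnection]" = queue.Queue()
        for _ in range(size):
            self._idle.put(ArchiveConnection())

    @contextmanager
    def connection(self) -> Iterator[ArchiveConnection]:
        conn = self._idle.get()     # blocks until a connection is free
        try:
            yield conn
        finally:
            self._idle.put(conn)    # return it to the pool instead of closing it


def retrieve_all(pool: ConnectionPool, object_ids: list[str], workers: int) -> list[bytes]:
    """Run retrieve requests in parallel, each on a pooled connection."""
    def fetch(object_id: str) -> bytes:
        with pool.connection() as conn:
            return conn.retrieve(object_id)

    with ThreadPoolExecutor(max_workers=workers) as executor:
        return list(executor.map(fetch, object_ids))
```

With a pool of four connections and 20 requests spread over eight threads, only four sessions are ever opened; threads beyond the fourth wait for a free connection instead of opening a new one.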

On IBM i, connection pooling is not required for store requests when you access the ASM archives. When a store request is made, ASM opens a connection and keeps it open until the data store request is complete. In addition, ASM can aggregate objects, so fewer objects are sent to the storage media than would be sent without aggregation.

On Multiplatforms and z/OS, you can aggregate documents that are loaded from Content Manager OnDemand Web Enablement Kit (ODWEK) before you store them in the archive. Each document is stored in cache, where it is appended to the current storage object until that object reaches 10 MB (the defined storage object size). At that point, the object is migrated to a storage manager, such as Tivoli Storage Manager. For more information about this topic, see the following website:
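The aggregation behavior described for ODWEK-loaded documents can be illustrated with a small Python sketch: documents are appended to an open storage object, and the object is handed off for migration once it reaches the defined size. The class, the migrate callback, and the reading of "10 MB" as 10 × 1024 × 1024 bytes are assumptions for illustration; in this sketch, an object can exceed the limit by part of the last document appended.

```python
from dataclasses import dataclass, field
from typing import Callable

# Assumption: "10 MB" is treated here as 10 * 1024 * 1024 bytes.
STORAGE_OBJECT_SIZE = 10 * 1024 * 1024


@dataclass
class StorageObjectAggregator:
    """Appends documents to an open storage object and migrates it when it is full."""

    migrate: Callable[[bytes], None]          # hypothetical hand-off to the storage manager
    limit: int = STORAGE_OBJECT_SIZE
    _current: bytearray = field(default_factory=bytearray, init=False, repr=False)

    def add_document(self, document: bytes) -> None:
        self._current.extend(document)        # the document lands in cache first
        if len(self._current) >= self.limit:  # object reached its defined size
            self.flush()

    def flush(self) -> None:
        """Migrate whatever is buffered and start a new storage object."""
        if self._current:
            self.migrate(bytes(self._current))
            self._current = bytearray()
```

Aggregating many small documents into fewer, larger objects reduces the number of objects that the storage manager must track and store.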

12.2.2 Horizontal scalability: Library server

Although Content Manager OnDemand allows only one library server for each instance, this library server can be scaled horizontally by using one or both of the following methods:

•The database tables (both the system and the application group tables) can be placed in different databases (z/OS) or different table spaces (Multiplatforms and z/OS) at the table level. Therefore, each of these tables can scale to the maximum practical size that the database supports within the operational constraints of maintenance and performance. Content Manager OnDemand itself imposes no limit.

•The application group data table design facilitates the following actions:

– You can create as many application groups as you need to support the required data to be archived.

– Each application group can be segmented into multiple tables where the table segmentation is based on size.

– Each of these application group data tables can be placed in a separate database (z/OS) or table space (Multiplatforms and z/OS).
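The size-based segmentation and table-space placement described above can be modeled with the following Python sketch. It is a hypothetical illustration only: the segment naming scheme (prefix plus sequence number), the row-count threshold, and the round-robin assignment of table spaces are assumptions, not the way Content Manager OnDemand names or places its tables.

```python
from dataclasses import dataclass, field


@dataclass
class Segment:
    table_name: str
    table_space: str
    rows: int = 0


@dataclass
class SegmentedApplicationGroup:
    """Hypothetical model of an application group whose data spans several tables."""

    prefix: str                # hypothetical application group identifier
    max_rows: int              # assumed size threshold at which a segment is closed
    table_spaces: list[str]    # table spaces across which new segments are spread
    segments: list[Segment] = field(default_factory=list)

    def _open_segment(self) -> Segment:
        number = len(self.segments) + 1
        # Place each new segment in the next table space, round-robin.
        space = self.table_spaces[(number - 1) % len(self.table_spaces)]
        segment = Segment(f"{self.prefix}{number}", space)
        self.segments.append(segment)
        return segment

    def add_rows(self, count: int) -> None:
        """Load index rows, opening a new segment whenever the current one is full."""
        while count > 0:
            current = self.segments[-1] if self.segments else self._open_segment()
            if current.rows >= self.max_rows:
                current = self._open_segment()
            taken = min(count, self.max_rows - current.rows)
            current.rows += taken
            count -= taken
```

For example, loading 2,500 rows into a group with prefix ABC, a 1,000-row threshold, and table spaces TS1 and TS2 produces three segments (ABC1, ABC2, ABC3) that hold 1,000, 1,000, and 500 rows in TS1, TS2, and TS1, so no single table grows without bound.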

12.2.3 Horizontal scalability: Multiple object servers

For Multiplatforms and z/OS, you can scale a Content Manager OnDemand system horizontally by using multiple object servers.

In the example that is shown in Figure 12-2, the Content Manager OnDemand system is horizontally scaled by placing the library server, object servers, and load processes on multiple systems.

Figure 12-2 Horizontal scaling: Multiple object servers (z/OS and Multiplatforms)

This form of horizontal scalability provides better performance, reliability, and scalability by distributing the storage and retrieval workload over multiple systems.

From a Content Manager OnDemand perspective, no limit exists to the number of object and load process servers, and each server can run at its maximum capacity. Operational limits are imposed by the bandwidth of the TCP/IP network that connects all of the servers and by the available data center floor space. Both of these constraints can be reduced by placing multiple servers in a rack-mounted configuration.

From an external perspective, this example and all of the following examples show a single Content Manager OnDemand instance. The fact that the system consists of multiple distributed systems is transparent to both of the following groups:

•The Content Manager OnDemand administrator, who continues to administer the system through the Content Manager OnDemand Administrator Client as though it is a single physical system.

•The Content Manager OnDemand users, who continue to access the whole system through a single IP address (that of the library server) and see only a single system from their perspective.

12.2.4 Horizontal and vertical scalability: Storage manager

This form of horizontal scalability provides better performance, reliability, and scalability by distributing the storage and retrieval workload over multiple storage subsystems within each object server.

An object server controls the storage and retrieval of the archived data, which is stored in a storage subsystem. These subsystems can be scaled in number and architecture up to the limits of each subsystem. Each object server can support one or more storage subsystems, and each storage subsystem can consist of multiple storage devices, as shown in Figure 12-3.

Figure 12-3 Horizontal and vertical scaling: Multiple storage managers

Each object server can have multiple storage subsystems of different types:

•Cache: The cache storage subsystem is controlled directly by the object server. Data is written to and read directly from cache. Cache consists of one or more cache file systems. Each cache file system can be mounted on a different device in its own directory. Each device can be placed on its own independent I/O interface/channel. Content Manager OnDemand does not impose a limit on the number of devices.

•IBM Tivoli Storage Manager: Tivoli Storage Manager is an archive storage subsystem. The Content Manager OnDemand object server sends data to and requests data from Tivoli Storage Manager. Each Tivoli Storage Manager server can be installed on its own system (for example, an AIX server), and the Content Manager OnDemand object server can connect to multiple Tivoli Storage Manager servers. For example, if the Content Manager OnDemand object server is an AIX system and the data that it manages is stored in three Tivoli Storage Manager archives (all on AIX systems), the total processing capacity for that object server is four AIX systems. Each of these AIX systems can be configured with as many processors and as much memory, disk, and I/O capacity as needed, up to its architectural limit. If more capacity is needed, more Tivoli Storage Manager servers or object servers can be added.

•Object access method (OAM): OAM is an archive storage subsystem that is available only on z/OS. Only one OAM archive exists for each system. Scalability within the archive is achieved by increasing the number of storage groups. A z/OS system can grow by increasing the number of processors, the amount of memory, the number of disks, and I/O capacity. If more capacity is needed than a single system can provide, z/OS allows multiple systems to be connected in a Parallel Sysplex. All of these systems can then access the same OAM subsystem, which provides unparalleled scalability, reliability, availability, and performance.

Both Tivoli Storage Manager and OAM provide hierarchical data management facilities: data can be stored on different devices based on its age or predicted frequency of access. For example, frequently accessed data might be placed on high-speed disk and infrequently accessed data might be placed on tape. When a user requests the data, its location is transparent to the user. The only perceived difference is the response time, which depends mainly on the type of device on which the data is stored. In this example, tape access is slower than disk access.
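Hierarchical placement is configured through Tivoli Storage Manager or OAM policies, not through application code. Purely to illustrate age-based placement, the following Python sketch picks a storage tier from a hypothetical policy in which objects stay on disk for their first 90 days and then move to tape; the thresholds and tier names are assumptions.

```python
from datetime import date

# Hypothetical policy: (minimum age in days, storage tier), ordered by age.
MIGRATION_POLICY = [(0, "disk"), (90, "tape")]


def tier_for(stored_on: date, today: date,
             policy: list[tuple[int, str]] = MIGRATION_POLICY) -> str:
    """Return the storage tier that the policy assigns to an object of a given age."""
    age_days = (today - stored_on).days
    tier = policy[0][1]
    for min_age, name in policy:
        if age_days >= min_age:
            tier = name    # the oldest threshold that the object has passed wins
    return tier
```

The user-visible effect is the one described above: the request is the same wherever the object lives, and only the response time differs.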

In summary, better performance is achieved by distributing the storage and retrieval workload over multiple systems and multiple devices.

12.2.5 Horizontal scalability: Multiple logical partitions and systems

This scenario is similar to the multiple object server scenario where each object server is running on a separate system. In this case, the library server and one or more object servers are installed in separate LPARs on one or more physical systems, as shown in Figure 12-4 on page 291.

Figure 12-4 Horizontal and vertical scaling with multiple LPARs

This scenario suits organizations that have large systems, such as AIX or z/OS systems, installed with enough available capacity to support the required Content Manager OnDemand workload. One advantage of this configuration is that you can control the priority of work and the distribution of computer resources to each LPAR, such as the number of processors or the processing priority (depending on the computer system and operating system architecture) that is allocated to each LPAR. For example, load jobs can be assigned a low priority during the day, when the focus is on data retrieval, and a high priority during the night, when the focus is on data loading.
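In practice, LPAR priorities are set through the platform's partition or workload management facilities, not through application code. The following Python sketch only makes the day/night rule in the example concrete; the 07:00 - 19:00 day window is an assumption.

```python
from datetime import time

# Assumed window during which data retrieval is the focus; outside it, loading is.
DAY_START = time(7, 0)
DAY_END = time(19, 0)


def load_job_priority(now: time) -> str:
    """Low priority for load-job LPARs during the day, high priority at night."""
    return "low" if DAY_START <= now < DAY_END else "high"
```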

This setup supports horizontal scalability by using multiple technologies as appropriate. The main constraint is that clients must have access to all systems through TCP/IP.

12.2.6 Multiple server configuration rules

The following general rules apply when you configure multiple Content Manager OnDemand servers. In all cases, for additional guidance, see the appropriate Content Manager OnDemand documentation or contact Content Manager OnDemand Lab Services.

•Each Content Manager OnDemand server has its own set of configuration files.

•The parameters in all configuration files must be set so that all of the servers are part of the same instance.

•The Content Manager OnDemand clients connect to the IP address and listening port of the Content Manager OnDemand server (library server module).

•The documents are retrieved from the various object servers based on the location information that is returned by the library server. This retrieval is transparent to the client systems.

•Parallel load processes must have separate temp directories.

Figure 12-5 on page 292 depicts this configuration type.

Figure 12-5 Multiple server configuration
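The retrieval flow that these rules describe (the client asks the library server for a document's location, then fetches the document from the object server named in the reply) can be sketched in Python as follows. The class names, in-memory dictionaries, and document and object identifiers are hypothetical stand-ins; they are not the Content Manager OnDemand client API or network protocol.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Location:
    object_server: str   # which object server holds the document
    object_name: str     # the storage object that contains it


class LibraryServer:
    """Hypothetical index that maps document IDs to their storage locations."""

    def __init__(self, index: dict[str, Location]) -> None:
        self._index = index

    def locate(self, document_id: str) -> Location:
        return self._index[document_id]


class ObjectServer:
    """Hypothetical object server that holds storage objects."""

    def __init__(self, objects: dict[str, bytes]) -> None:
        self._objects = objects

    def retrieve(self, object_name: str) -> bytes:
        return self._objects[object_name]


def client_retrieve(library: LibraryServer, object_servers: dict[str, ObjectServer],
                    document_id: str) -> bytes:
    location = library.locate(document_id)            # 1. ask the library server
    server = object_servers[location.object_server]   # 2. pick the named object server
    return server.retrieve(location.object_name)      # 3. fetch the document from it
```

Because only the library server's address is configured in the client, object servers can be added or moved without any change to the client.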

12.3 High availability

The concept of high availability roughly equates to a system and its data being available (accessible by users) almost all of the time: 24 hours a day, 7 days a week, and 365 days a year. In practice, 100% availability is not a cost-effective reality today for most implementations; rather, it is a goal. The goal is to design and build systems that are highly available by minimizing both planned and unplanned outages that can be caused by SPOFs.

12.3.1 Redundant systems: All platforms

Various techniques are employed on all platforms to achieve near high availability. These techniques are based on creating as much redundancy as possible within the systems and the data that they contain:

•Preventing data loss: Employing various levels of RAID to store the data on disk.

•Duplicating the data: Creating near real-time copies of the data on backup devices that replace the online devices if the online devices fail.

•Duplicate systems: A duplicate system (hardware, software, and data) is maintained (either locally or remotely), and when the main system fails, users are automatically directed to the duplicate system.

•Network redundancy: Creating multiple paths through the network so that if one path (or router) fails, the network continues to function.

All of these techniques work well and provide various levels of near real-time high availability based on the degree to which the redundant systems are created and are kept in active-standby mode.

12.3.2 Multiple LPAR sysplex: z/OS

The z/OS operating system has a high availability architecture built into it. A z/OS Parallel Sysplex is a tightly coupled cluster of independent z/OS systems that connect through an Internet Protocol network. A sysplex consists of 2 - 32 independent systems, which can be located locally or geographically dispersed. Communication between the z/OS systems in the sysplex is handled through the cross-system Coupling Facility (XCF). A z/OS Parallel Sysplex implementation provides the highest level of high availability in the industry.

Figure 12-6 illustrates a Content Manager OnDemand implementation of a two-system highly available z/OS sysplex system.

Figure 12-6 Scalability: Parallel Sysplex/multiple LPARs (z/OS)

Figure 12-6 illustrates an example of a two-system Content Manager OnDemand Parallel Sysplex implementation. z/OS system A contains a library server and an object server. The library server and object server can be either combined in a single executable file (most common z/OS implementation) or separated into two executable files, in which case they are installed in separate LPARs. z/OS system B shows a multiple LPAR system with a combined library/object server that is installed in each of the LPARs.

Both of these systems (all LPARs and all instances of the Content Manager OnDemand server) access a single set of Content Manager OnDemand database tables through DB2 data sharing. They also access a single OAM archive system through an OAMplex. Not shown in the figure is access to a single Job Entry Subsystem (JES) spool and to a shared file system, which consists of a set of hierarchical file systems (HFS) or z/OS file systems (zFS). The term “single” means that the same set of data is available to all systems concurrently. Each of these single systems consists of highly redundant components and therefore does not represent a SPOF.

The z/OS Parallel Sysplex technology enables the Content Manager OnDemand servers to share configuration files, database, JES, HFS, and archive. For performance reasons, all HFS read/write directories that are used for temporary storage of data are configured as unique to each Content Manager OnDemand server.

From a client perspective, the “cluster” is a single IP address. Incoming client requests are received by the sysplex distributor/Workload Manager (WLM). WLM monitors the various systems in the Parallel Sysplex and, based on the current system workload and availability, selects the Content Manager OnDemand server to which the request is forwarded, so that the more available (less busy) system receives the request.
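The selection that the sysplex distributor and WLM perform is far more sophisticated than any short example, but its basic effect (send each request to the least busy available server) can be sketched as follows. The ServerStatus fields and the single utilization number are simplifying assumptions; this is not how the sysplex distributor or WLM is configured or programmed.

```python
from dataclasses import dataclass


@dataclass
class ServerStatus:
    """Hypothetical snapshot of one Content Manager OnDemand server in the sysplex."""

    name: str
    available: bool
    utilization: float  # 0.0 = idle, 1.0 = fully busy


def select_server(servers: list[ServerStatus]) -> str:
    """Pick the least busy server among those that are currently available."""
    candidates = [s for s in servers if s.available]
    if not candidates:
        raise RuntimeError("no Content Manager OnDemand server is available")
    return min(candidates, key=lambda s: s.utilization).name
```

An unavailable server is skipped even if it reports low utilization, which is how a failed LPAR drops out of the rotation without any change on the client side.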

12.3.3 High availability: IBM i

IBM PowerHA® SystemMirror® for i is the integrated IBM storage-based clustering solution for high availability and disaster recovery. The data and applications are deployed into storage pools, which are called independent auxiliary storage pools (IASPs). IASPs can be deployed by using either internal or external storage. At any time, the nodes in the cluster can switch roles and become either a primary or backup node. PowerHA SystemMirror can be used for on-demand role swap operations.

The IBM Power Systems™ Capacity BackUp (CBU) offerings support disaster recovery and high availability needs. The CBU offerings recognize that true high availability or disaster recovery solutions require at least two systems. If one system is not available, the other system takes over. The CBU offering provides flexible and economic options for deploying business continuity operations.

In a high availability environment on IBM i, you might not want to replicate the following directories, because Content Manager OnDemand places only temporary data in them and this data might occupy a large amount of space:

•Do not replicate the temporary integrated file system (IFS) directories for your instances. For example, do not replicate /QIBM/UserData/OnDemand/QUSROND/TMP or /QIBM/UserData/OnDemand/QUSROND/PRTTMP, where QUSROND is your instance name.

•Do not replicate the home directory for the user that is storing data. For example, if JOHNDOE is the name of the user profile that stores data into Content Manager OnDemand, do not replicate /home/JOHNDOE.

•Do not replicate the /tmp directory.
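As an illustration of the exclusion rules above, the following Python sketch decides whether a given IFS path falls under one of the directories that need not be replicated. It is a hypothetical helper, not a PowerHA SystemMirror configuration method; the instance name QUSROND and the user profile JOHNDOE are the examples from the text, and the path comparison is case-sensitive, which is a simplification.

```python
from pathlib import PurePosixPath


def excluded_roots(instance: str, storing_user: str) -> list[PurePosixPath]:
    """Directories that hold only temporary Content Manager OnDemand data."""
    instance_dir = PurePosixPath("/QIBM/UserData/OnDemand") / instance
    return [
        instance_dir / "TMP",                   # temporary IFS directory
        instance_dir / "PRTTMP",                # temporary IFS directory
        PurePosixPath("/home") / storing_user,  # home directory of the storing user
        PurePosixPath("/tmp"),
    ]


def should_replicate(path: str, excluded: list[PurePosixPath]) -> bool:
    """True unless the path is an excluded directory or lies beneath one."""
    candidate = PurePosixPath(path)
    return not any(candidate == root or root in candidate.parents for root in excluded)
```

Comparing whole path components (rather than string prefixes) keeps a directory such as /tmpdata from being excluded just because its name starts with /tmp.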

12.3.4 Horizontal and vertical scalability summary

You can use the architectural flexibility of Content Manager OnDemand (Figure 12-7 on page 295) to select the correctly sized system based on your needs. A Content Manager OnDemand implementation can be scaled both vertically (by using larger and larger systems) and horizontally (by increasing the number of systems that are part of the Content Manager OnDemand instance).

Figure 12-7 Horizontal and vertical scalability

A Content Manager OnDemand server can scale from a Windows server up to a cluster of z/OS systems. It is important to initially select an installation that meets the following requirements:

•Appropriate for your current workload in terms of the following items:

– Performance

– Reliability

– Availability

– Scalability

•Support for your future growth requirements if the following actions are necessary:

– Increase the number of users that access the system

– Increase the quantity of data that is stored in the system

– Change the types of archived data

– Change the preprocessing requirements
