Solution architecture

This chapter provides a technical overview of the architecture of IBM Spectrum Virtualize for Public Cloud in the Microsoft Azure environment. It also explains the components of the Azure solution and how they interact with each other.

This chapter includes the following topics:

•3.6, “Solution architecture: IBM Spectrum Virtualize as a storage for all-in-cloud model”

3.1 IBM Spectrum Virtualize

IBM Spectrum Virtualize is a software-enabled storage virtualization engine that provides a single point of control for storage resources within a data center. It is the core software engine of established IBM storage virtualization solutions, such as IBM SAN Volume Controller and all versions of the IBM FlashSystem family of products. This technology is now available in Azure, which provides increased flexibility in data center infrastructure and cloud systems. This section describes the components of IBM Spectrum Virtualize as they are deployed in the cloud.

3.1.1 Nodes

IBM Spectrum Virtualize software is installed on Azure VM instances that are provisioned in the Azure cloud. Each Azure VM instance is called a node. The node provides the virtualization for a set of volumes, cache, and copy services functions. The nodes are deployed in pairs (I/O groups), and one to four pairs make up a clustered system.

Note: At the time of this writing, IBM Spectrum Virtualize for Public Cloud on Azure is limited to a single I/O group. However, plans are in place to expand to four I/O groups in the future.

One of the nodes within the system is assigned the role of the configuration node. The configuration node manages the configuration activity for the system and owns the cluster IP address that is used to access the management GUI and command-line interface (CLI) connections. If this node fails, the system chooses a new node to become the configuration node. Because active nodes are installed in pairs, each node maintains cache coherence with its partner to provide seamless failover functions and fault tolerance.

3.1.2 I/O groups

Each pair of IBM Spectrum Virtualize nodes is referred to as an I/O group. A specific volume is always presented to a host server by a single I/O group in the system.

When a host server performs I/O to one of its volumes, all the I/Os for a specific volume are directed to one specific I/O group in the system. Under normal conditions, the I/Os for that specific volume are always processed by the same node within the I/O group. This node is referred to as the preferred node for this specific volume. When the preferred node receives a write into its cache, that write is mirrored to the partner node before the write is acknowledged back to the host. Reads are serviced by the preferred node.

Both nodes of an I/O group act as the preferred node for their own specific subset of the total number of volumes that the I/O group presents to the host servers. However, both nodes also act as failover nodes for their respective partner node within the I/O group.

Therefore, a node takes over the I/O workload from its partner node, if required. For this reason, servers that are connected to the system must use multipath drivers to handle these failover situations.
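The preferred-node behavior that is described above can be illustrated with a small model. The following is a minimal sketch and not IBM Spectrum Virtualize code: the class and method names (`Node`, `IOGroup`, `owner_for`, `write`) are hypothetical, and the alternating assignment of preferred nodes is an assumption that is made only to give each node its own subset of volumes.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    online: bool = True
    cache: dict = field(default_factory=dict)  # (volume, lba) -> data


class IOGroup:
    """Toy model of a two-node I/O group (hypothetical names)."""

    def __init__(self, node_a: Node, node_b: Node):
        self.nodes = (node_a, node_b)
        self.preferred = {}  # volume name -> index of its preferred node

    def add_volume(self, volume: str) -> None:
        # Alternate preferred nodes so each node owns a subset of volumes.
        self.preferred[volume] = len(self.preferred) % 2

    def owner_for(self, volume: str) -> Node:
        """Return the node that services I/O for the volume right now."""
        index = self.preferred[volume]
        preferred, partner = self.nodes[index], self.nodes[1 - index]
        if preferred.online:
            return preferred
        if partner.online:
            return partner  # failover to the partner node
        raise RuntimeError("no node available in this I/O group")

    def write(self, volume: str, lba: int, data: bytes) -> str:
        owner = self.owner_for(volume)
        owner.cache[(volume, lba)] = data
        # Mirror the write to the partner cache before acknowledging.
        for node in self.nodes:
            if node is not owner and node.online:
                node.cache[(volume, lba)] = data
        return f"ack from {owner.name}"


group = IOGroup(Node("node1"), Node("node2"))
group.add_volume("vol0")
group.add_volume("vol1")
first_ack = group.write("vol0", 0, b"abc")   # served by the preferred node
group.nodes[0].online = False                # simulate a node failure
second_ack = group.write("vol0", 1, b"def")  # served by the partner node
```

In this sketch, the host sees the change of owner only as a change of path, which is why the host side needs a multipath driver.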

If required, host servers can be mapped to more than one I/O group within the IBM Spectrum Virtualize system. Therefore, they can access volumes from separate I/O groups.

You can move volumes between I/O groups to redistribute the load between the I/O groups. The I/O group that services a volume can be changed concurrently with I/O operations if the host supports nondisruptive volume moves and is zoned to support access to the target I/O group. The change also requires a rescan at the host level to ensure that the multipathing driver is notified that the preferred node and the ports by which the volume is accessed changed. This modification is useful when one pair of nodes becomes overutilized.

Note: For more information about restrictions around Non-Disruptive Volume Move (NDVM), see this IBM Documentation web page.

3.1.3 Systems

The current IBM Spectrum Virtualize for Public Cloud in Azure supports a clustered system that consists of one I/O group (support for more I/O groups is planned). Specific configuration limits are set for the individual system. For example, the maximum managed disk (MDisk) capacity that is supported is 992 TB per system. The current Azure implementation is optimized around 31 Azure MDisks, and the largest single Azure disk is 32 TB.
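The 992 TB limit follows directly from the two other figures in the preceding paragraph. The short calculation below only restates that arithmetic; the constant names are illustrative.

```python
MAX_MDISKS = 31          # Azure MDisks per system, from the text
MAX_DISK_SIZE_TB = 32    # largest single Azure disk in TB, from the text

max_capacity_tb = MAX_MDISKS * MAX_DISK_SIZE_TB
print(max_capacity_tb)   # 992
```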

All configuration, monitoring, and service tasks are performed at the system level. Configuration settings are replicated to all nodes in the system. To facilitate these tasks, a management IP address is set for the system.

Note: The management IP is also referred to as the system IP or cluster IP and is active on the configuration node. Each node in the system is also assigned a service IP to allow for interacting with the node directly.

A process is provided to back up the system configuration data onto disk so that it can be restored if a disaster occurs. This method does not back up application data; only the IBM Spectrum Virtualize system configuration information is backed up.

For the purposes of remote data mirroring, two or more systems must form a partnership before relationships between mirrored volumes are created. For more information, see this IBM Documentation web page.

3.1.4 MDisks

IBM Spectrum Virtualize for Public Cloud on Azure uses Azure MDisks as back-end storage. Azure MDisks are offered with two storage redundancy options: zone-redundant storage (ZRS) and locally redundant storage (LRS). ZRS provides higher availability for MDisks than LRS does. However, the write latency for LRS disks is lower than for ZRS disks because LRS disks synchronously write data to three copies in a single data center rather than across availability zones.

IBM Spectrum Virtualize for Public Cloud supports only LRS types of storage. Azure MDisks are presented to the Azure VM instance (Spectrum Virtualize nodes) as disks or LUNs. IBM Spectrum Virtualize does not attempt to provide recovery from physical failures within the back-end controllers; an MDisk in Azure is provisioned from LRS storage.

LRS disk volumes are suitably protected and include built-in redundancy. LRS replicates your disk data three times within a single data center in the selected region. LRS protects your data against server rack and drive failures.

The application servers do not see the MDisks. Rather, they see several logical disks, which are known as virtual disks or volumes. These virtual disks are presented by the I/O groups through the LAN (iSCSI) to the servers. The MDisks are placed into storage pools where they are divided into extents that are used to create the virtual disks or volumes.

MDisks that are presented to IBM Spectrum Virtualize can have the following operating modes:

•Unmanaged MDisk

An MDisk is reported as unmanaged when it is not a member of any storage pool. An unmanaged MDisk is not associated with any volumes and has no metadata that is stored on it. IBM Spectrum Virtualize does not write to an MDisk that is in unmanaged mode, except when it attempts to change the mode of the MDisk to one of the other modes.

•Managed MDisk

Managed MDisks are members of a storage pool and they contribute extents to the storage pool. This mode is the most common and normal mode for an MDisk.

3.1.5 Storage pools

A storage pool or MDisk group is a collection of MDisks that provides the pool of storage from which volumes are provisioned. The size of these pools can be changed (expanded or shrunk) nondisruptively by adding or removing MDisks without taking the storage pool or the volumes offline. At any point, an MDisk can be a member in one storage pool only.

Each MDisk in the storage pool is divided into extents. The size of the extent is selected by the administrator when the storage pool is created and cannot be changed later (although methods are available to address this issue by using volume mirroring). The extent size can be from 16 MiB (mebibytes) to 8192 MiB, with a default of 1024 MiB.

It is a best practice to use the same extent size for all storage pools in a system. This approach is a prerequisite for supporting volume migration between two storage pools. If the storage pool extent sizes are not the same, you must use volume mirroring to copy volumes between pools.
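The relationship between extent size, pool capacity, and the way a volume can be moved between pools can be sketched as follows. This is a minimal illustration under stated assumptions: `StoragePool`, `copy_method`, and the MDisk sizes are hypothetical names and values, and only the extent size range, the default, and the same-extent-size rule come from the text.

```python
from dataclasses import dataclass, field

MIB_PER_GIB = 1024


@dataclass
class StoragePool:
    name: str
    extent_size_mib: int = 1024                      # default from the text
    mdisks_gib: dict = field(default_factory=dict)   # MDisk name -> size in GiB

    def __post_init__(self):
        if not 16 <= self.extent_size_mib <= 8192:
            raise ValueError("extent size must be from 16 MiB to 8192 MiB")

    def extents(self) -> int:
        """Total whole extents contributed by all MDisks in the pool."""
        return sum(size * MIB_PER_GIB // self.extent_size_mib
                   for size in self.mdisks_gib.values())


def copy_method(source: StoragePool, target: StoragePool) -> str:
    """Pick how a volume can move between pools, per the rule above."""
    if source.extent_size_mib == target.extent_size_mib:
        return "volume migration"
    return "volume mirroring"


pool_a = StoragePool("pool_a", mdisks_gib={"mdisk0": 2048, "mdisk1": 2048})
pool_b = StoragePool("pool_b", extent_size_mib=256)
print(pool_a.extents())               # 4096
print(copy_method(pool_a, pool_b))    # volume mirroring
```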

For more information, see this IBM Documentation web page.

3.1.6 Child pools

A subset of a pool can be created for administrative isolation. This feature can be useful when thin-provisioned volumes are used to prevent a single application from using all available space in the pool.

Another important use of child pools was introduced in IBM Spectrum Virtualize Version 8.4.2: the safeguarded child pool. This object allows the creation of unalterable snapshots of important volumes to guard against accidental or malicious corruption.

For more information, see 2.4, “Safeguarded Copy”.

3.1.7 Volumes

Volumes are logical disks that are presented to the host or application servers by IBM Spectrum Virtualize. The hosts cannot see the MDisks; they can see only the logical volumes that are created from combining extents from a storage pool.

For more information, see this IBM Documentation web page.

3.1.8 Hosts

Volumes can be mapped to a host to allow a specific server to access a set of volumes. A host within IBM Spectrum Virtualize is a collection of iSCSI-qualified names (IQNs) that are defined on the specific server. As a result, a node failover (when a node is restarted) can be handled without having a multipath driver installed on the iSCSI-attached server.

3.1.9 Host clusters

A host cluster is a host object in IBM Spectrum Virtualize that combines two or more servers that are connected to IBM Spectrum Virtualize through an iSCSI connection. All hosts in a host cluster object can see the same set of volumes; therefore, volumes can be mapped to a host cluster to give all of its hosts a common mapping.

For more information, see this IBM Documentation web page.

3.1.10 iSCSI

The iSCSI function is a software function that is provided by the IBM Spectrum Virtualize code. IBM introduced software capabilities to allow the underlying virtualized storage to attach to IBM Spectrum Virtualize by using the iSCSI protocol.

The major functions of iSCSI include encapsulation and the reliable delivery of Command Descriptor Block (CDB) transactions between initiators and targets through the IP network, especially over a potentially unreliable IP network.

Every iSCSI node in the network must have the following iSCSI components:

•An iSCSI name is a location-independent, permanent identifier for an iSCSI node. An iSCSI node has one iSCSI name, which stays constant for the life of the node. The terms initiator name and target name also refer to an iSCSI name.

•An iSCSI address specifies the iSCSI name and location of an iSCSI node. The address consists of a hostname or IP address, a TCP port number (for the target), and the iSCSI name of the node. An iSCSI node can have any number of addresses, which can change at any time, particularly if they are assigned by way of Dynamic Host Configuration Protocol (DHCP). An IBM Spectrum Virtualize node represents an iSCSI node and provides statically allocated IP addresses.
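The distinction between an iSCSI name and an iSCSI address can be sketched as a small data structure. This is an illustrative sketch, not part of IBM Spectrum Virtualize: the `IscsiAddress` class, the simplified IQN pattern, and the example name and IP addresses are assumptions. The IQN layout and the default target port 3260 follow the iSCSI specification (RFC 3720).

```python
import re
from dataclasses import dataclass

# Simplified IQN layout: iqn.<yyyy-mm>.<reversed domain>[:<identifier>]
IQN_PATTERN = re.compile(r"^iqn\.\d{4}-\d{2}\.[a-z0-9.-]+(:.+)?$")
ISCSI_DEFAULT_PORT = 3260  # well-known iSCSI target port


@dataclass(frozen=True)
class IscsiAddress:
    host: str          # hostname or IP address; can change over time
    iscsi_name: str    # location-independent, permanent name
    port: int = ISCSI_DEFAULT_PORT

    def __post_init__(self):
        if not IQN_PATTERN.match(self.iscsi_name):
            raise ValueError(f"not a valid IQN: {self.iscsi_name}")


# Example values are made up for illustration.
name = "iqn.2024-01.com.example:node1"
primary = IscsiAddress("10.0.1.4", name)
secondary = IscsiAddress("10.0.1.5", name)
```

Both addresses carry the same name, which reflects that one iSCSI node can be reached through any number of addresses.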

3.1.11 Cache

The primary benefit of storage cache is to improve I/O response time. Reads and writes to a magnetic disk drive experience seek and latency time at the drive level, which can result in 1 ms - 10 ms of response time (for an enterprise-class disk).

IBM Spectrum Virtualize provides a flexible cache model, and the node’s memory can be used as read or write cache. The cache management algorithms improve the performance of many types of underlying disk technologies. Because IBM Spectrum Virtualize manages the destaging operations that are incurred by writes in the background while still maintaining full data integrity, it can achieve good database performance.

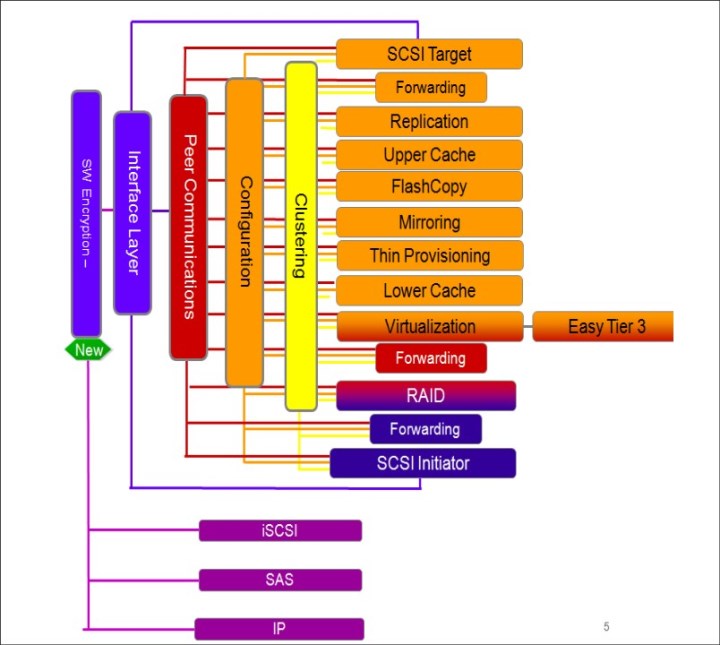

The cache is separated into two layers: upper cache and lower cache (see Figure 3-1).

Figure 3-1 Separation of upper and lower cache

3.1.12 IBM Easy Tier

IBM Easy Tier is a performance function that automatically migrates or moves extents of a volume to or from one MDisk storage tier to another MDisk storage tier. IBM Spectrum Virtualize code can support a three-tier implementation.

Easy Tier monitors the host I/O activity and latency on the extents of all volumes that have the Easy Tier function turned on in a multitier storage pool over a 24-hour period.

Next, it creates an extent migration plan that is based on this activity, and then dynamically moves high-activity (hot) extents to a higher disk tier within the storage pool. It also moves extents whose activity dropped off (cooled down) from the high-tier MDisks back to a lower-tier MDisk. The condition for hot extents is frequent small block (64 KB or less) reads.
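The idea of building a migration plan from observed activity can be illustrated with a toy heuristic. The sketch below is not the Easy Tier algorithm: the function name `plan_migrations`, the log format, and the rule of promoting the most frequently read extents up to the capacity of the higher tier are all assumptions. Only the small-block read criterion comes from the text.

```python
from collections import Counter

SMALL_BLOCK_BYTES = 64 * 1024   # "small block" threshold from the text


def plan_migrations(io_log, fast_tier, fast_capacity):
    """Return (promote, demote) sets of extent IDs.

    io_log: iterable of (extent_id, op, size_bytes) seen in the window.
    fast_tier: set of extent IDs currently on the higher tier.
    fast_capacity: number of extents that the higher tier can hold.
    """
    # Heat counts only small-block reads, per the hot-extent condition.
    heat = Counter(
        extent for extent, op, size in io_log
        if op == "read" and size <= SMALL_BLOCK_BYTES
    )
    hottest = {extent for extent, _ in heat.most_common(fast_capacity)}
    promote = hottest - fast_tier    # hot extents not yet on the fast tier
    demote = fast_tier - hottest     # extents that cooled down
    return promote, demote


log = (
    [(1, "read", 4096)] * 50         # hot: many small reads
    + [(2, "read", 8192)] * 30       # hot: many small reads
    + [(3, "read", 1 << 20)] * 90    # large reads do not count
    + [(4, "write", 4096)] * 70      # writes do not count
)
promote, demote = plan_migrations(log, fast_tier={3}, fast_capacity=2)
```

With this sample log, extents 1 and 2 are promoted and extent 3 is demoted, because its activity consists only of large reads.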

Easy Tier: The Easy Tier function can be turned on or off at the storage pool and volume level.

The automatic load-balancing (auto-rebalance) function is enabled by default on each volume and cannot be turned off by using the GUI. This load-balancing feature is not considered the same as the Easy Tier function, but it uses the same principles. Auto-rebalance evens the load for a pool across MDisks. Therefore, adding MDisks, or having MDisks of different sizes within a pool, does not adversely affect performance.

The Easy Tier function can make it more suitable to use smaller storage pool extent sizes. The usage statistics file can be offloaded from the IBM Spectrum Virtualize nodes. Then, you can use the IBM Storage Tier Advisor Tool (STAT) to create a summary report. STAT is available on the web at no initial cost at IBM Storage Tier Advisor.

3.1.13 IP replication

IP replication allows data replication between IBM Spectrum Virtualize family members. IP replication uses IP-based ports of the cluster nodes.

The configuration of the system is straightforward and IBM FlashSystem family systems normally find each other in the network and can be selected from the GUI.

IP replication includes Bridgeworks SANSlide network optimization technology and is available at no extra charge. Remote Mirror is a chargeable option, but the price does not change with IP replication. Existing Remote Mirror users can access the function at no extra charge.

IP connections that are used for replication can have long latency (the time to transmit a signal from one end to the other), which can be caused by distance or by many “hops” between switches and other appliances in the network. Traditional replication solutions transmit data, wait for a response, and then transmit more data, which can result in network utilization as low as 20% (based on IBM measurements). In addition, utilization worsens as latency increases.
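The effect of latency on a transmit-then-wait sender can be approximated with a simple model: if each burst takes time t to put on the wire and the sender then idles for one round trip (RTT), the link is busy for t / (t + RTT) of the time. The following sketch applies that model. It is a simplification and does not reproduce the IBM measurements; the function name, burst size, and link speed are assumed values.

```python
def link_utilization(window_bytes, bandwidth_bps, rtt_seconds):
    """Fraction of link capacity used by a send-then-wait sender."""
    transmit_time = window_bytes * 8 / bandwidth_bps
    return transmit_time / (transmit_time + rtt_seconds)


# Assumed values: 256 KiB per burst on a 1 Gbps link.
for rtt_ms in (1, 10, 40, 80):
    utilization = link_utilization(256 * 1024, 1e9, rtt_ms / 1000)
    print(f"RTT {rtt_ms:>2} ms -> {utilization:.0%} utilization")
```

In this model, utilization falls steadily as the round-trip time grows, which is the motivation for sending multiple data streams in parallel.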

Bridgeworks SANSlide technology, which is integrated with the IBM FlashSystem family, requires no separate appliances and so requires no extra cost or configuration steps. It uses artificial intelligence (AI) technology to transmit multiple data streams in parallel, adjusting automatically to changing network environments and workloads.

Bridgeworks SANSlide improves network bandwidth utilization up to 3x. Therefore, customers can deploy a less costly network infrastructure, or take advantage of faster data transfer to speed replication cycles, improve remote data currency, and enjoy faster recovery.

3.1.14 IBM FlashCopy

FlashCopy is sometimes described as an instance of a time-zero (T0) copy or a point-in-time (PiT) copy technology.

FlashCopy can be performed on multiple source and target volumes. FlashCopy permits the management operations to be coordinated so that a common single PiT is chosen for copying target volumes from their respective source volumes.

With IBM Spectrum Virtualize, multiple target volumes can undergo FlashCopy from the same source volume. This capability can be used to create images from separate PiTs for the source volume, and to create multiple images from a source volume at a common PiT. Source and target volumes can be thin-provisioned volumes.

Reverse FlashCopy enables target volumes to become restore points for the source volume without breaking the FlashCopy relationship, and without waiting for the original copy operation to complete. IBM Spectrum Virtualize supports multiple targets, and has multiple rollback points.
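The point-in-time semantics and the idea of a target that serves as a restore point can be illustrated with a generic copy-on-write model. This sketch is illustrative only and makes no claim about how FlashCopy is implemented internally: the `PointInTimeCopy` class and its methods are hypothetical, and it models a single target for a single source.

```python
class PointInTimeCopy:
    """Generic copy-on-write snapshot of a block volume (illustrative only)."""

    def __init__(self, source: list):
        self.source = source
        self.saved = {}  # block index -> contents at the time of the copy

    def write_source(self, index: int, data: str) -> None:
        # Preserve the original block the first time it is overwritten.
        self.saved.setdefault(index, self.source[index])
        self.source[index] = data

    def read_target(self, index: int) -> str:
        # The target shows the source as it was at time zero.
        return self.saved.get(index, self.source[index])

    def restore(self) -> None:
        # Roll the source back to the point-in-time image.
        for index, data in self.saved.items():
            self.source[index] = data
        self.saved.clear()


volume = ["a", "b", "c"]
snap = PointInTimeCopy(volume)
snap.write_source(1, "B")           # the source changes after time zero
image_block = snap.read_target(1)   # the target still returns "b"
```

Calling `snap.restore()` returns the source to its time-zero contents, which corresponds to using the target as a restore point.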

Most clients aim to integrate the FlashCopy feature for PiT copies and quick recovery of their applications and databases. An IBM solution for this purpose is IBM Spectrum Protect, which the web page “What can IBM Spectrum Protect do for your business?” describes.

3.2 Azure terminology

Azure terminology is listed in Table 3-1.

Table 3-1 Azure terminology

| Azure term | Explanation |
|---|---|
| Azure resource group | A container that holds all of the related resources for an Azure solution. For more information, see this web page. |
| Azure key vault | A vault that is used to store credentials in Azure. It also stores the temporary key for cluster configuration, which is deleted after the cluster configuration is complete. For more information, see this web page. |
| Azure network security groups | A group that contains security rules that govern inbound and outbound network traffic between Azure resources. For more information, see this web page. |
| VNet | The Azure virtual network (VNet) facilitates secure communication between different types of Azure resources, such as Azure virtual machines (VMs), and with external networks and the internet. For more information, see this web page. |
| Subnet | A range of IP addresses in the virtual network. You can divide a virtual network into multiple subnets for organization and security. Each NIC in a VM is connected to one subnet in one virtual network. For more information, see this web page. |
| Proximity placement group | An Azure construct that is used to specify proximity and placement input to deployment when many logically grouped Azure VMs are deployed. When specified, Azure ensures that associated compute resources are physically located close to each other. For more information, see this web page. |
| Availability set | A logical grouping of VMs that allows Azure to understand how your application is built to provide for redundancy and availability. For more information, see this web page. |
| Fault domain | A set of hardware components that share a single point of failure. |
| Update domain | A group of resources that can be updated and brought down for maintenance and patching (for example, system patches and software updates), possibly at the same time. Typically, only one update domain is brought down at a time for patching. |

3.3 Key components of the Azure solution

Figure 3-2 shows the high-level architecture of Spectrum Virtualize for Public Cloud on Microsoft Azure.

Figure 3-2 Components of the Azure solution

The IBM Spectrum Virtualize for Azure IaaS solution includes the following building blocks:

•Azure virtual machines

•Azure managed disks

•Azure networking elements, such as VNet, subnets, load balancer, and vNICs

•Azure constructs, such as resource group, availability set, and proximity placement group

•Azure policies for network security groups

3.3.1 Azure Resource Manager template-based publishing

IBM Spectrum Virtualize for Public Cloud is published in the Azure commercial marketplace as an Azure Application with a solution template. It employs a nested Azure Resource Manager (ARM) template, which is an infrastructure-as-code primitive in Azure. The customer manages the solution, and transactions are billed through a separate plan. ARM template-based deployment automates the deployment of several VMs along with customized network infrastructure, security groups, key vault management, and managed disks in the customer's Azure subscription.

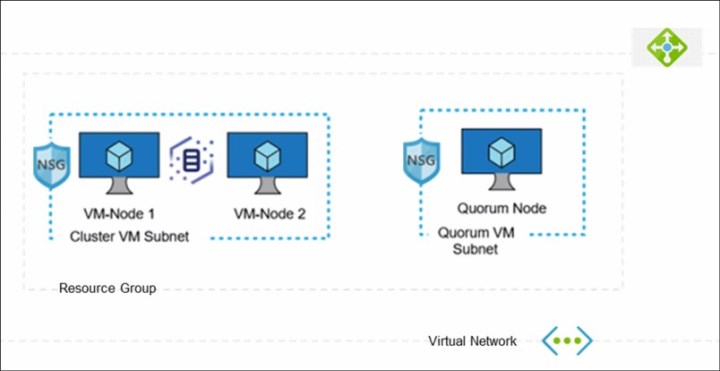

3.3.2 Resource group and vNets

A resource group in Azure is a unit of management; therefore, each Spectrum Virtualize cluster is deployed in a fresh resource group. All resources that are part of the cluster are held in their respective resource group.

Although multiple clusters cannot be deployed in the same resource group, multiple resource groups can share a virtual network across different clusters (see Figure 3-3).

Figure 3-3 Resource group and vNets

VNet is the building block for a customer's private network in Azure. VNet enables Azure resources to communicate securely with each other, with external resources over the internet, and with on-premises networks. Azure VNet is a virtual network environment that provides the basis for provisioning resources and services in the Azure cloud.

IBM Spectrum Virtualize can be deployed in a new or an existing VNet. For nodes and quorum, a separate subnet is required. Each Azure VNet subnet is defined by the IP address block that is assigned to it.

Note: Multiple resource groups can share a VNet across different clusters.

3.4 Highly available infrastructure

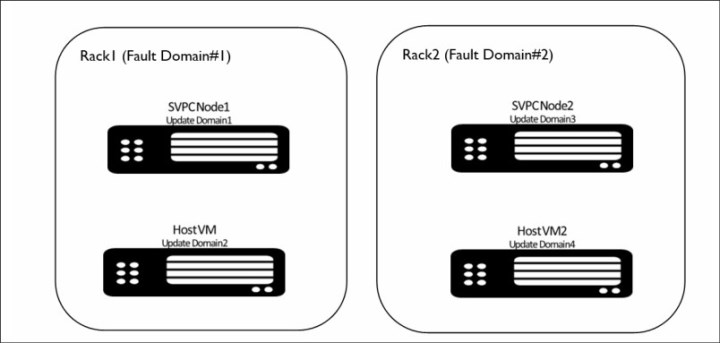

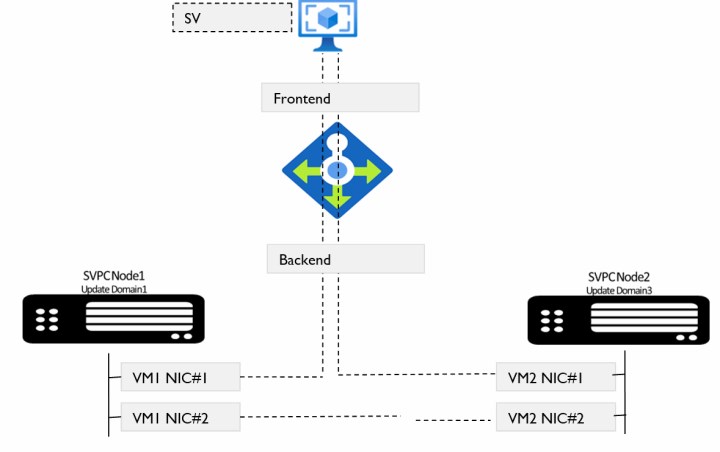

The IBM Spectrum Virtualize nodes of an I/O group must be isolated in different fault domains and different update domains so that, as far as possible, Azure does not bring them down simultaneously for maintenance activity (see Figure 3-4).

Figure 3-4 Highly available infrastructure

The IBM Spectrum Virtualize design uses an availability set construct to help Azure understand how the solution is designed to provide for redundancy and availability. Azure’s Availability Set construct allows us to express logical groupings of IBM Spectrum Virtualize VMs and define the high availability requirement.

Note: Consider the following points:

•Update domains indicate groups of VMs and underlying physical hardware that are brought down at the same time for Azure maintenance. With each node VM from an I/O group in a different update domain, Azure ensures high availability at the controller level.

•Fault domains define the group of VMs that share a common power source and network switch. IBM Spectrum Virtualize node VMs that are configured within an availability set are separated across up to three fault domains. Therefore, if a fault occurs, not all nodes in an I/O group go down because they are in different fault domains.
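The isolation requirement for the two nodes of an I/O group can be expressed as a simple check. This is a minimal sketch with hypothetical names (`NodePlacement`, `is_isolated`) and made-up domain numbers; in a real deployment, Azure assigns fault and update domains when VMs are placed in an availability set.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodePlacement:
    name: str
    fault_domain: int
    update_domain: int


def is_isolated(a: NodePlacement, b: NodePlacement) -> bool:
    """True if the two nodes share neither a fault nor an update domain."""
    return (a.fault_domain != b.fault_domain
            and a.update_domain != b.update_domain)


# Nodes in different fault and update domains satisfy the requirement.
good = is_isolated(NodePlacement("node1", 0, 0), NodePlacement("node2", 1, 1))
# Nodes that share a fault domain can fail together.
bad = is_isolated(NodePlacement("node1", 0, 0), NodePlacement("node2", 0, 1))
```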

3.4.1 Controller node proximity

A proximity placement group is a logical grouping that ensures that Azure places the node compute resources close to each other.

IBM Spectrum Virtualize node VMs must be separated by a low network latency. Placing node VMs in an availability zone helps to reduce the physical distance between the instances. However, it might still result in a network latency that is not acceptable for cluster functioning. Therefore, to place node VMs as close together as possible with the lowest possible latency, the design uses the proximity placement group construct.

Note: IBM Spectrum Virtualize nodes in a cluster are all added to the same proximity placement group.

3.4.2 Disk types and multi-attach shared disk support

IBM Spectrum Virtualize VMs interact with attached MDisks to serve I/Os. When one VM from an I/O group goes down, the I/O services that are provided by that VM must fail over to the surviving VM, which requires that the MDisks are quickly reattached to the partner VM. This reattachment is best served by using the Azure multi-attach support for certain types of disks.

The multi-attach feature (see Figure 3-5) allows two or more node VMs to simultaneously connect to the same disk so that when one node VM goes down, the disk is still immediately reachable from the other VMs.

Note: Microsoft provides the multi-attach (shared disk capability) support for standard and premium SSDs.

Figure 3-5 Multi-attach shared disk support

In this design, multi-attached disks are simultaneously connected with multiple nodes in the specific I/O group. The IBM Spectrum Virtualize requirement is to attach Azure managed disks to two VMs from the same I/O group (see Figure 3-5).

Note: Disks that are attached to IBM Spectrum Virtualize must be multi-attach capable, with the number of mount points (num_share) set to 2 or more.
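The disk requirements in this section can be summarized as a small eligibility check. The sketch below is an assumption-laden illustration: `ManagedDisk` and `usable_as_mdisk` are hypothetical names, the `num_share` field mirrors the term that is used in the note above, and the SKU strings are assumed to follow the Azure naming for locally redundant standard and premium SSDs.

```python
from dataclasses import dataclass

# Assumed SKU strings for locally redundant standard and premium SSDs.
SUPPORTED_SKUS = {"StandardSSD_LRS", "Premium_LRS"}
MIN_SHARES = 2  # one mount point for each node in the I/O group


@dataclass
class ManagedDisk:
    name: str
    sku: str
    num_share: int   # maximum number of VMs that can attach the disk


def usable_as_mdisk(disk: ManagedDisk) -> bool:
    """Check the multi-attach requirements described above."""
    return disk.sku in SUPPORTED_SKUS and disk.num_share >= MIN_SHARES


shared_premium = ManagedDisk("mdisk0", "Premium_LRS", num_share=2)
single_attach = ManagedDisk("mdisk1", "Premium_LRS", num_share=1)
zone_redundant = ManagedDisk("mdisk2", "Premium_ZRS", num_share=2)
```

Only the first disk passes the check: the second cannot be attached to both nodes, and the third is not an LRS disk.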

IBM Spectrum Virtualize block storage allows for the creation of raw storage volumes, to which server-based operating systems can connect. Table 3-2 lists the Azure disk types that are required for different use cases and the workloads that each type serves best.

Table 3-2 Disk types required for different use cases and workloads

| Disk type | Block storage workload | Use cases |
|---|---|---|
| Standard SSDs | Large data processing workloads; enterprise applications; file storage, VM file system | Hybrid cloud replication use case; hybrid cloud DR use case; low-end, all-in-cloud infrastructure |
| Premium SSDs | High-performance database storage; mission critical enterprise applications (Oracle, SAP, Microsoft Exchange, and Microsoft SharePoint) | High-end, all-in-cloud infrastructure |

3.4.3 Load balancer for management traffic failover

In Azure, IP reassignment through IP failover from one VM to another VM can take a long time. To solve this issue, Azure suggests the use of an Azure Load Balancer based design.

The IBM Spectrum Virtualize design uses the services of the Azure Load Balancer for management IP failover. The load balancer redirects traffic that is received on a front-end IP address to the back-end IP address of the node that is running the management service.

If the node fails, the load balancer service switches the traffic to a back-end IP address of the surviving node (see Figure 3-6).

Figure 3-6 Load balancer for management traffic failover
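The redirection behavior can be modeled in a few lines. This is a toy sketch, not the Azure Load Balancer API: the class `ManagementLoadBalancer`, its methods, and all IP addresses are hypothetical, and the way the load balancer learns which node runs the management service (for example, through a health probe) is left out.

```python
class ManagementLoadBalancer:
    """Toy front end that forwards to the node running the management service."""

    def __init__(self, frontend_ip: str, backends: dict):
        self.frontend_ip = frontend_ip
        self.backends = backends       # node name -> back-end IP address
        self.config_node = None        # node that runs the management service

    def set_config_node(self, node: str) -> None:
        if node not in self.backends:
            raise KeyError(f"unknown node: {node}")
        self.config_node = node

    def route(self) -> str:
        """Return the back-end IP that receives management traffic."""
        if self.config_node is None:
            raise RuntimeError("no node is running the management service")
        return self.backends[self.config_node]


lb = ManagementLoadBalancer(
    "10.0.0.10", {"node1": "10.0.0.4", "node2": "10.0.0.5"}
)
lb.set_config_node("node1")
before = lb.route()
lb.set_config_node("node2")   # node1 failed; node2 took over management
after = lb.route()
```

Clients keep using the same front-end address before and after the failover; only the back-end target changes.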

3.4.4 Multipath for data traffic failover and better performance

For I/O traffic failover, the IBM Spectrum Virtualize design relies on proven host multipathing technology by configuring redundant iSCSI I/O paths from a host to both controller nodes. When a node fails in Azure, the original path (with a target IP address) is not presented by the partner node after a failover. Consequently, that path is unavailable until the failed node is repaired. For this reason, multiple paths are required from the host server to different nodes in the I/O group. Having multiple links to each node also helps to protect the server against link failures in the network.

Note: Consider the following points:

•The IBM Spectrum Virtualize design does not use load balancer technology for data traffic. Instead, it relies on multipathing technology.

•Because an individual iSCSI connection from a VM can be throttled in the cloud environment, increase the number of iSCSI sessions from the host to achieve high throughput.
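Host-side multipathing over several iSCSI sessions can be sketched as follows. This is an illustration only and does not represent any specific multipath driver: the `MultipathDevice` class, the round-robin policy, and the IP addresses are assumptions.

```python
class MultipathDevice:
    """Toy host-side multipath device over iSCSI sessions (illustrative)."""

    def __init__(self, paths: dict):
        self.paths = paths       # target IP -> node that owns the IP
        self.failed = set()      # target IPs that are currently unreachable
        self._next = 0

    def fail_node(self, node: str) -> None:
        # A failed node's target IPs are not taken over by its partner.
        self.failed |= {ip for ip, owner in self.paths.items() if owner == node}

    def pick_path(self) -> str:
        """Round-robin over the paths that are still active."""
        active = [ip for ip in self.paths if ip not in self.failed]
        if not active:
            raise RuntimeError("all paths are down")
        ip = active[self._next % len(active)]
        self._next += 1
        return ip


# Two sessions per node: more sessions also help with per-connection throttling.
device = MultipathDevice({
    "10.0.1.4": "node1", "10.0.1.5": "node1",
    "10.0.1.6": "node2", "10.0.1.7": "node2",
})
device.fail_node("node1")
used_after_failure = {device.pick_path() for _ in range(4)}
```

After the node failure, I/O continues over the two paths to the surviving node.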

3.5 Security design considerations

Cloud computing features a shared responsibility model of security between the cloud provider, IBM Spectrum Virtualize, and customers. It is important to understand this model.

Azure cloud provides security for the compute, network, and physical resources in cloud data centers, and for various virtual resources, such as VMs, virtual networks, MDisk storage, and associated IaaS services. Azure also provides a list of tools and services to manage the security of Azure resources. For more information about Azure security benchmark documentation and guidelines, see this web page.

Consider the following aspects of security of IBM Spectrum Virtualize for Public Cloud in Azure:

•The core IBM Spectrum Virtualize software provides several basic common security features.

•IBM Spectrum Virtualize for Public Cloud provides other areas of security (see Figure 3-7) that factor in Azure-specific public cloud environment:

– Network security (all-in-cloud private deployment and restrictive network security rules)

– Azure access security (Azure Bastion access and hybrid cloud access)

– Operating system security (operating system patch update management and Azure Update Manager)

Figure 3-7 Security design aspects

3.5.1 Restrictive network security rules

When an IBM Spectrum Virtualize network is deployed by using the Azure Resource Manager (ARM) template, network security groups (NSGs) are created and applied at the subnet and network interface level. NSGs are automatically configured for each subnet or interface to protect the virtual network. The default NSG rules are deliberately restrictive to tighten network security.

For more information, see this Microsoft Docs web page.

To bolster security at network level, consider the following points:

•Default network security group rules that are provided with IBM Spectrum Virtualize deployment use only private IP addresses and provide access to or from only private IP addresses.

•By default (unless assigned), no public endpoints exist to IBM Spectrum Virtualize configuration IPs or data traffic IPs.

•To reach out to some specific services (for example, entitlement), some outbound access rules are enabled.

•Restrictive NSG rules are defined to create a secure design.
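The private-address property of the default rules can be checked with the Python standard library. The sketch below is a hedged illustration: the rule dictionaries are a made-up representation rather than the NSG rule schema, the names, ports, and prefixes are examples, and the outbound exceptions for services such as entitlement are not modeled.

```python
import ipaddress


def uses_only_private_addresses(rules) -> bool:
    """True if every source and destination prefix is a private range."""
    for rule in rules:
        for prefix in (rule["source"], rule["destination"]):
            if not ipaddress.ip_network(prefix).is_private:
                return False
    return True


# Hypothetical rules; names, ports, and prefixes are examples only.
default_rules = [
    {"name": "allow-iscsi", "source": "10.0.2.0/24",
     "destination": "10.0.1.0/24", "port": 3260},
    {"name": "allow-mgmt-ssh", "source": "10.0.3.0/24",
     "destination": "10.0.1.0/24", "port": 22},
]
public_rule = {"name": "allow-public", "source": "8.8.8.0/24",
               "destination": "10.0.1.0/24", "port": 22}

private_only = uses_only_private_addresses(default_rules)
with_public = uses_only_private_addresses(default_rules + [public_rule])
```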

3.5.2 Bastion access

Azure Bastion is a fully managed service that provides secure and seamless Remote Desktop Protocol (RDP) and Secure Shell Protocol (SSH) access to VMs without any exposure through public IP addresses. By using Azure Bastion, private and time-bound access can be provided through SSH and RDP. Azure Bastion acts like a gateway proxy, which allows users to connect to resources in a private subnet.

3.5.3 Operating system patch update interface and Azure Update Manager integration

Figure 3-8 shows the construction of a node. In Azure, IBM Spectrum Virtualize is packaged in a container and runs in an Azure VM. Red Hat Enterprise Linux (RHEL) is used as the operating system in the VM. Managing patches and updates is an important security requirement, especially in a public cloud. The following methods can be used to meet this security requirement:

•Manually by using Spectrum Virtualize CLIs

•Automatically by using Azure Update Manager

Figure 3-8 Operating system patch update interface and Azure Update Manager integration

3.5.4 Manual patching using IBM Spectrum Virtualize CLIs

IBM Spectrum Virtualize provides service node task (sntask) commands for managing hosts in public cloud offerings. In the Azure solution, these sntask commands and the service node information (sninfo) commands are stored and run as terminal commands on the RHEL operating system of the VM instances.

One of these CLI commands (sntask applysecuritypatch) can be used to manually apply security patches to the RHEL OS. The Azure Red Hat repository is used to retrieve and apply security patches to the node VMs.

3.5.5 Automated patching by using Azure Update Manager

Azure Update Management is an important security service that uses Azure Automation to manage operating system updates in Linux VMs. The following process is used to configure automated patching for all the Spectrum Virtualize nodes:

1. Create an Azure automation account (for more information, see this web page).

2. Configure this automation account with Log Analytics workspace (for more information, see this web page).

3. Configure the required roles and permissions for automation.

4. Create runbooks and configure IBM Spectrum Virtualize scripts under them.

5. Add IBM Spectrum Virtualize node VMs under the update management.

6. Configure patching schedules and policies.

After the configuration is done, Update Manager automatically evaluates Azure VMs to maintain security compliance regarding released Critical and Security updates.

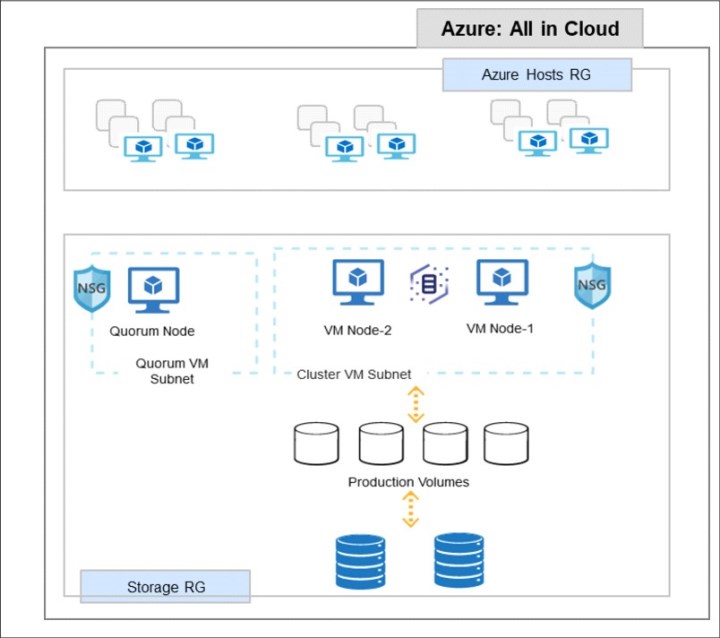

3.6 Solution architecture: IBM Spectrum Virtualize as a storage for all-in-cloud model

In the all-in-cloud model, as shown in Figure 3-9, IBM Spectrum Virtualize for Public Cloud is deployed as a clustered pair of Azure Compute VM instances. It arbitrates between the Cloud Block Storage and the workload hosts. In the specific context of all-in-cloud deployment, IBM Spectrum Virtualize for Public Cloud supports extra features that enhance the public cloud block-storage offering.

Figure 3-9 IBM Spectrum Virtualize as a storage for all-in-cloud model

In this use case, a clustered system of IBM Spectrum Virtualize nodes (realized by using memory-rich, network-rich Azure VMs) presents volumes to the hosts (virtualized hosts that are realized as Azure VMs) that run customer workloads. The hosts access these volumes to read and write data. Most of the advanced functions that are provided by Spectrum Virtualize are defined on volumes. These volumes are created from managed disks (Azure MDisks). All data transfer occurs through the IBM Spectrum Virtualize nodes, which is described as symmetric virtualization.
