CHAPTER 17

Digital Resources Versus Cognitive Tools: A Discussion of Learning Science with Technology

Over the past two decades, computers and network technologies have become commonplace in many aspects of our work and personal lives. Despite widespread use of technology by scientists across many disciplines, computers and network technologies are often underutilized and poorly integrated into core science education activities in K–16 classrooms. How can this underutilization be explained? This chapter reviews research on technological tools for learning science with respect to the meaningful use of technology in science classrooms. In particular, the chapter presents a framework for examining whether a technological tool functions as a cognitive tool, that is, whether it is focused on specific audiences, learning goals, learning activities, and learning performances. To illustrate this perspective, examples of technologies associated with four areas of learning science are discussed in relation to the Cognitive Tools Framework, and the chapter closes by revisiting the question of why technology is underutilized in science classrooms.

Technology permeates many dimensions of our work and personal lives. Evidence of this is apparent in the many instances of technology that are sometimes taken for granted or invisible to us, including cars, telephones, household appliances, and toys (Tinker & Vahey, 2002). Increasingly, institutions and industries are being dramatically altered through the integration of computers and network technologies such as the Internet. Daily business in banking, the postal service, the stock market, and neighborhood grocery stores has changed substantially as a result of computers and network technologies. As technology becomes an increasingly ubiquitous and essential component of our daily lives, speculation grows about even more profound changes in how we will live, work, and play in the future. To quote New York Times columnist Thomas L. Friedman on technology, “Clearly it is now possible for more people than ever to collaborate and compete in real time with more other people on more different kinds of work from more different corners of the planet and on a more equal footing than at any previous time in the history of the world—using computers, e-mail, networks, teleconferencing, and dynamic new software” (Friedman, 2005, p. 8).

Like their adult counterparts, American children are incorporating the Internet into their daily lives for many different purposes. One recent study documented that 78% of American children ages 12–17 go online on a regular basis (Levin & Arafeh, 2002). However, as education research documents, increasing levels of computer use do not necessarily translate into increasing use of technology in American schools. Recent studies document that across a wide variety of settings, schools are struggling to achieve widespread adoption of computers and network technologies in mainstream classroom activities (e.g., Levin & Arafeh, 2002; Cuban, 2001). Although policy makers and the general public generally agree that K–16 students need many varied, productive experiences learning with and through technology (e.g., United States House of Representatives, 2001; Department of Education, 2004), most educators agree that much remains to be understood about the productive integration of technology into today's classroom activities. Within specific content areas such as science, the focus of this chapter, a gap exists between technology for doing science and technology for learning science. What do we know about using technology to learn science, and how can this gap be understood and bridged?

This chapter investigates whether technology is underutilized in American science classrooms and, if so, what we can learn from exemplary cases of learning science with technology to guide more widespread integration. The chapter begins with a definition of technology in schools, followed by a discussion of the possible underutilization of technology in American science classrooms. It then presents a framework for understanding why technology is not used more productively for learning in science classrooms, along with several exemplary cases.

ARE TECHNOLOGIES UNDERUTILIZED IN SCIENCE CLASSROOMS?

According to the U.S. Department of Education (2003), technology in schools comprises six categories: (1) computers and computer-driven equipment; (2) servers, routers, and other equipment that support wired and wireless communication; (3) telephone-based technology; (4) display equipment used in classrooms; (5) infrastructure of wires and cables; and (6) software applications and programs. This chapter uses the following working definition of technology: computers, network technologies, and education-based software, corresponding to categories 1 and 6 above.

According to the Department of Education, American schools have nearly ubiquitous access to computers and the Internet: 99 percent of American schools are connected to the Internet, and the student-to-computer ratio is 5:1 (Department of Education, 2004). In addition, computer use is widespread; 90 percent of American children between the ages of 5 and 17 use computers on a regular basis (Department of Education, 2004). Interestingly, computers are increasingly a resource that is not limited to older students or adults; one study identifies children aged 2–5 as the largest group of new Internet and computer users (Department of Education, 2004).

Yet although statistics suggest that access and use are widespread, other studies investigating meaningful use of technology to learn science or other subjects suggest that the presence of computers in schools does not necessarily imply productive use of technology within classrooms (e.g., Department of Education, 2004; Cuban, 2001). In one study, although access to computers in school was widespread, most teachers and students used computers infrequently (once a month or less), and most uses focused on mundane or routine tasks (Cuban, 2001).

Science education researchers offer possible explanations for the lack of widespread integration of technology into science learning activities. Concerning Internet-based materials, some researchers suggest that limited use might result from the poor quality of web-based science material available for K–12 science learning. Linn, Davis, and Bell (2004) state that despite nearly universal Internet access in classrooms, much of the material available on the Internet is of questionable scientific content or appropriateness. Others attribute low use to the limited availability of software that is well integrated into curricular programs (U.S. Department of Education, 2003) or that promotes critical thinking (Lee & Songer, 2003). In addition, classroom teachers describe a gap between building or district expectations for their use of technology and the support structures needed to successfully integrate technology into their classrooms (American Association of University Women, 2000).

Collectively, these studies suggest that although computers and network technologies are available in nearly all American schools, many factors may contribute to the underutilization of technology in American science classrooms. One approach to untangling this underutilization is to define exemplary roles and activities for the use of technology to learn science. This approach provides one means to begin to address and overcome the barriers impeding meaningful use of technology in science classrooms.

WHAT ROLE DOES TECHNOLOGY PLAY IN SCIENTIFIC LITERACY?

A discussion of exemplary uses of technology to learn science must begin with how we define learning or knowing science. What is scientific literacy? As defined by the National Science Education Standards (National Research Council, 1996, 2001), scientific literacy includes both science content knowledge (declarative facts and conceptual knowledge) and reasoning knowledge, such as analyzing data, building explanations from evidence, and engaging with scientific questions. In the National Science Education Standards, a central component of scientific literacy is the appropriate use of technology to support learning goals (Bransford, Brown, & Cocking, 2000; National Research Council, 1996). But what is meant by “appropriate use of technology”?

To investigate this question, we look first at how professional scientists use technology. Scientists use technology for many specific purposes that involve working with and developing both science content and scientific reasoning skills. For example, scientists use computers and network technologies for advanced analysis, data modeling, and data representation. A scan of research articles from a recent issue of Science reveals several examples of essential use of technology by scientists, including simulation modeling to explain patterns in complex ecological systems at various levels of organization (Grimm et al., 2005) and DNA analysis of ancient remains to characterize evidence of human evolution (Haak et al., 2005). These examples show scientists using technology to develop both scientific content knowledge (generating patterns about complex ecological systems) and scientific reasoning skills (e.g., analyzing data; making hypotheses based on empirical data).

Similarly, in science classrooms, students have used technology productively for both content and reasoning in the form of modeling (Gobert & Pallant, 2004), data analysis, and data representation. Examples of technology that guides content development in atmospheric and environmental science, and reasoning in the form of making hypotheses based on empirical data, are the approaches developed by Edelson and Reiser (2006), in which students use technological tools to analyze historical data on climate (WorldWatcher) or ecosystems (Galapagos Finches).

Additional examples in science education describe research-based technologies that can guide the scientific reasoning we desire among K–16 students. Using technology to guide the development of reasoning can take many forms, including resources that provide (a) organized dialogue with peers and/or scientists toward collaborative understandings (O'Neill & Gomez, 1998; O'Neill, 2004), (b) scaffolded guidance in the development of scientific explanations (e.g., Explanation Constructor by Reiser, 2004), or (c) guidance in reflection on steps taken and progress within more open-ended investigations (e.g., WISE by Linn & Slotta, 2000; Knowledge Forum [formerly called CSILE] by Scardamalia & Bereiter, 1994; Symphony by Quintana et al., 2002).

A SHIFT IN RESEARCH FOCUS

An interesting commonality among the research studies briefly mentioned above is their emphasis on technology as a resource for complex reasoning in science, such as data analysis (e.g., WorldWatcher), the development of explanations (e.g., Explanation Constructor), or reflection (e.g., WISE). This emphasis on technology as a resource for scientific reasoning marks a shift from earlier studies of technology in schools, which focused on counting either the amount of access to technology or the amount of use. Shifting research on technology from counting studies to the quality and character of use is a major undertaking. Why is this shift necessary or desirable?

Leaders analyzing the needs of educated citizens of the future, such as Susan Patrick, the Director of Educational Technology for the United States Department of Education, argue that the focus should be on using technology to transform education rather than on the mere placement or integration of technology in schools: “The paper-based system does not make any sense to kids who are coming up in school. Is our educational system geared towards innovation? Do we want an 18th-century model or a 21st-century model for our schools? The 18th-century model is the one we have now… . The ed-tech community loves the term ‘integration’. But our schools need transformation not integration” (NSTA Reports, 2005, p. 1).

Similarly, a group of researchers from diverse educational perspectives found that earlier examinations of the allocation of educational resources, such as curricular programs, provided insufficient information to explain student outcomes (e.g., Cohen, Raudenbush, & Ball, 2000). These researchers argue that a more extensive examination of how materials are used in particular learning contexts is needed to understand the value of a learning resource and the best means of widespread incorporation. Will a similar shift in research focus, toward a critique of the use of technology in particular science education settings, yield new insights into the best means to overcome the underutilization of technology to learn science? Might this kind of analysis, which goes beyond counting studies, yield insights into what is meant by the “appropriate use of technology” (National Research Council, 1996)?

In choosing any focus, trade-offs are required. Focusing this chapter on the quality of technology use rather than on counting studies precludes discussion of several other topics associated with learning science with technology. It rules out, for example, a detailed historical review of trends in technology and science education over the past several decades; fortunately, this topic is well represented elsewhere (see Linn, 2003, for a good example). Similarly, this chapter does not provide an extensive list of all the technological tools available for teaching or learning science. Instead, the chapter focuses on a particular thesis and an associated framework for analyzing the character and quality of the use of technology for learning science, sacrificing some breadth for greater depth. In addition, the chapter discusses a handful of exemplary cases of the meaningful use of technology to learn science that shed light on principles that might guide more widespread productive use of technology for learning science.

Digital Resources and Cognitive Tools

To begin our discussion of the use of technology for learning science, we present two critical and contrasting definitions. A Digital Resource can be defined as any computer-available information source containing facts, perspectives, or information on a topic of interest. Digital resources often contain valuable information, such as science information presented in the form of text, pictures, simulations, video, or other interactive formats. The National Aeronautics and Space Administration (NASA) article describing a newly discovered planet candidate in the constellation Hydra, Hubble's Infrared Eyes Home in on Suspected Extrasolar Planet (www.nasa.gov/home/hqnews/2005/jan/HQ_05012_hubble.html), is an example of a valuable digital resource. Figure 17.1 presents an excerpt from this NASA article.

FIGURE 17–1. NASA article describing new planet candidate as example of a digital resource.

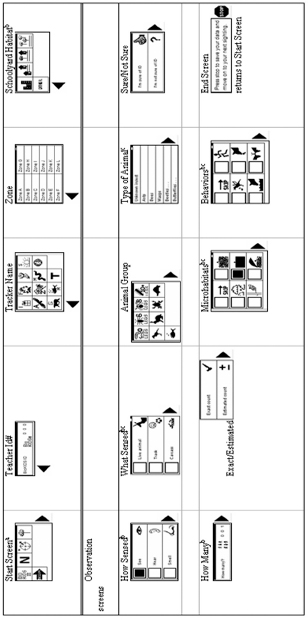

In contrast, a Cognitive Tool is defined as a computer-available information source or resource presenting focused information specifically tailored to particular learning goals on a particular topic of interest for a particular target audience. An example of a cognitive tool is the BioKIDS Sequence of CyberTracker (Fig. 17.2), a modification of the original Sequence of CyberTracker (Fig. 17.3), designed to suit the learning goals of sixth-grade students studying a particular biodiversity unit called BioKIDS (www.biokids.umich.edu).

To further define these constructs, we provide comparisons of digital resources and cognitive tools in three major areas. The first area is Audience/Knowledge. A digital resource is designed for a general audience to serve a range of possible roles. In reviewing the full NASA article available on the NASA website, it is not difficult to imagine scientists, high school students, teachers, and other adults reading this article and finding it to be of value. In contrast, a cognitive tool is designed for a specific audience and is focused on a particular knowledge goal. For example, the BioKIDS Sequence of CyberTracker is software specifically designed for the collection of schoolyard animal data by fifth- and sixth-graders in Midwestern states. Unlike the original CyberTracker sequence, the BioKIDS Sequence has a limited set of data types and a limited range of animals available for entry (e.g., Michigan-based animals). These limitations allow more focused data collection and more focused learning around particular curricular goals.

FIGURE 17–2. The BioKIDS sequence, as an example of a cognitive tool.

FIGURE 17–3. Sample screens from CyberTracker sequence for gathering field-based animal data.

A second area of comparison between digital resources and cognitive tools is the area of Learning Activities. Digital resources do not specify how the particular resource is to be used for learning. For example, the NASA article may be used by scientists for a range of purposes and by high school students for a completely different set of purposes. In contrast, cognitive tools are designed to be used in particular ways to achieve particular learning goals. Our example cognitive tool, the BioKIDS Sequence, is used by fifth- and sixth-graders to achieve specific learning goals focusing on gathering data, data analysis, and ecology that are articulated in curricular activities aligned with national (NRC, 1996), state, and district science education standards.

The third area of comparison is Learning Performances. Because they lack specificity with regard to audience and activities, digital resources also do not specify the kinds of products learners produce as a result of working with the digital resource. A high school student might use the NASA article for a report in an astronomy class; however, the performance or outcome that the student develops from the article will likely never be known to NASA or to any source associated with the digital resource. In contrast, the products that result from the use of a cognitive tool can be examined and evaluated, as well as compared with the original predictions about audience and learning goals. With the BioKIDS Sequence, research studies provided empirical evidence of the usability and learning outcomes associated with the use of this tool (see Parr, Jones, & Songer, 2004).

An interesting outcome of comparing digital resources and cognitive tools in the three areas of audience, activities, and performances concerns the kinds of conclusions that can be drawn about the value of the technology for learning. In the case of digital resources, where audiences, activities, and performances are not articulated, no clean comparisons can be made between intended goals, activities, and products to determine relative “learning success” with the resource. This lack of specificity with respect to knowledge, activities, and performances might be one explanation for why some earlier studies intending to evaluate the “learning success” of technologies were not successful.

In contrast, when audience, activities, and performances are articulated for a cognitive tool, empirical evidence can be gathered on the degree to which actual outcomes demonstrate the intended goals. This evidence can both document the effectiveness of the resource and guide its empirically driven redesign. With the BioKIDS Sequence, data on the accuracy of students' data collection, their data analysis, and their use of data to develop scientific explanations can be compared with the original estimations of learning goals and audience. The cognitive tool can then be redesigned, resulting in stronger learning outcomes and more meaningful use of the technology.

In summary, we defined digital resources and cognitive tools and contrasted them in three areas. The next section outlines a framework for examining technologies with respect to their potential as cognitive tools and for transforming digital resources into cognitive tools.

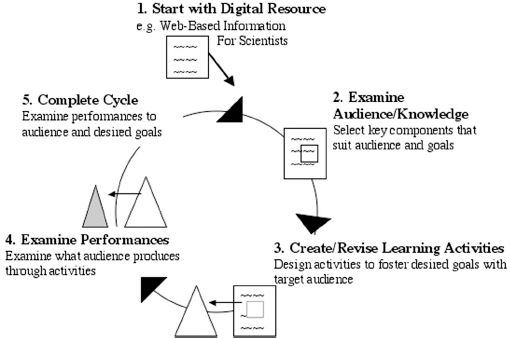

Transformation via the Cognitive Tools Framework

The Cognitive Tools Framework allows a detailed examination of a particular technological resource relative to its predicted role and value in learning science. The development of this framework draws on work in related areas, such as the design of high-quality assessment instruments that are well matched to instruction (National Research Council, 2001). Like other frameworks, and drawing on the three areas of comparison outlined earlier, the Cognitive Tools Framework examines a technological tool along three dimensions of the learning experience: (1) the target audience and learning goals, (2) the specific learning activities that are performed with the digital resource, and (3) the particular learning outcomes, such as student performances, that are produced as a result of using the technology within the particular learning context.

As outlined in the previous section, digital resources often provide a rich scientific milieu with strong but unrealized or unfocused potential as a way of learning science. An examination of a digital resource in the three focus areas outlined earlier can lead to the development of a more focused technological tool targeted for a particular audience, learning goal, task, and outcome.

In many cases, it may be advantageous for science teachers or researchers to work from these existing rich scientific resources in developing cognitive tools for learning science. In these cases, the resource can be redesigned in particular ways, transforming the digital resource into a cognitive tool by following the steps of the Cognitive Tools Framework. Figure 17.4 presents the cycle of activities involved in the transformation of a digital resource into a cognitive tool following the Cognitive Tools Framework.

FIGURE 17–4. Steps involved in examining or transforming a digital resource into a cognitive tool via the Cognitive Tools Framework.

The framework calls for examination in three areas. Examination relative to Audience/Knowledge refers to a clear definition of the target audience (age, abilities, prior knowledge, and beliefs) as well as the learning goals (science content, scientific reasoning, beliefs/attitudes about science) associated with the use of the digital resource. Learning Activities refers to the specific tasks that the target audience will perform with the digital resource. Note that the digital learning activities must correspond directly to the audience and learning goals in the Audience/Knowledge area. Learning Performances refers to the specific products that students generate as a result of interacting with the digital learning activities. Note that digital learning performances should be a clear demonstration of the knowledge outlined in Audience/Knowledge. Correspondence among the three areas of the framework provides a stronger prediction that learning with the resource will achieve the desired goals.
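For readers who design or review educational software, the correspondence requirement just described can be made concrete as a simple checklist. The Python sketch below is our own illustration, not part of the framework as published: the `ToolSpec` class, the `correspondence_gaps` function, and the goal and activity labels are all hypothetical, and the sketch assumes that "correspondence" can be approximated as set coverage, so that every activity and performance maps onto stated goals and every goal has at least one activity and one performance.

```python
from dataclasses import dataclass, field


@dataclass
class ToolSpec:
    """Hypothetical encoding of the three areas of the Cognitive Tools Framework."""
    audience: str        # target audience (age, abilities, prior knowledge, beliefs)
    goals: set[str]      # Audience/Knowledge: intended learning goals
    # Learning Activities: task name -> goals the task addresses
    activities: dict[str, set[str]] = field(default_factory=dict)
    # Learning Performances: student product -> goals the product demonstrates
    performances: dict[str, set[str]] = field(default_factory=dict)


def correspondence_gaps(spec: ToolSpec) -> list[str]:
    """Return readable mismatches; an empty list means the three areas correspond."""
    # A resource with no articulated audience or goals cannot be checked at all.
    if not spec.audience or not spec.goals:
        return ["audience or learning goals not articulated"]
    gaps = []
    # Every activity and every performance must point back to stated goals.
    for area, items in (("activity", spec.activities), ("performance", spec.performances)):
        for name, targets in items.items():
            if not targets or not targets <= spec.goals:
                gaps.append(f"{area} '{name}' does not map onto stated goals")
    # Every stated goal needs at least one activity and one performance.
    for goal in sorted(spec.goals):
        if not any(goal in t for t in spec.activities.values()):
            gaps.append(f"goal '{goal}' has no learning activity")
        if not any(goal in t for t in spec.performances.values()):
            gaps.append(f"goal '{goal}' has no learning performance")
    return gaps


# A digital resource such as a general-audience article: nothing is specified.
nasa_article = ToolSpec(audience="", goals=set())

# Hypothetical cognitive tool loosely modeled on the BioKIDS example (labels are ours).
biokids_like = ToolSpec(
    audience="fifth- and sixth-grade students",
    goals={"gather data", "analyze data"},
    activities={"record schoolyard animals": {"gather data"},
                "summarize class data": {"analyze data"}},
    performances={"data entries": {"gather data"},
                  "explanation from data": {"analyze data"}},
)

print(correspondence_gaps(nasa_article))  # ['audience or learning goals not articulated']
print(correspondence_gaps(biokids_like))  # []
```

Treating correspondence as set coverage is a deliberate simplification. The framework itself calls for empirical evidence from actual student performances, which no static check of this kind can replace.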

Why Transform Digital Resources into Cognitive Tools via This Framework?

We present the transformation of digital resources into cognitive tools via the Cognitive Tools Framework as one means of examining technological resources focused on learning science. We also present this framework as a guide for redesigning a digital resource into a cognitive tool that is more likely to contribute to the intended learning goals.

The idea of transformation into cognitive tools builds on the work of others who developed instructional design frameworks to guide design decisions for educational technologies (e.g., Schwartz, Lin, Brophy, & Bransford, 1999; learner-centered design by Soloway, Guzdial, & Hay, 1994). In addition, this work builds on the recent trend of tailoring educational technologies to specific topics, populations, or disciplines (Linn, 2003). In these studies, expertise on how individuals learn was combined with human-computer interface expertise on how individuals interact with technology to produce guidelines for the design of learner-focused technologies. For example, the work by Soloway and colleagues (Soloway et al., 1994) combined components of learning theory such as “learn by doing” with expertise about interface design, resulting in concrete issues to be addressed when designing for learners. These issues included a focus on understanding, motivation, design for a diverse audience, and software that is sensitive to learners’ growth of understanding. In the work of Soloway and colleagues (1994) and others, articulating guidelines for designing technologies for learners, and subsequently evaluating technologies against these criteria, was considered essential to making informed decisions about the role of technology in learning science.

The work presented here also involves an analysis of software presentation and the role of technology; however, the Cognitive Tools Framework provides more specificity about the necessary areas of focus, and it emphasizes the transformation of existing resources rather than the creation of new educational technologies from scratch.

Concerning areas of focus, audience/knowledge is an essential area of the Cognitive Tools Framework. Examining a technological resource via the Cognitive Tools Framework requires a detailed understanding of the knowledge to be learned and, ideally, of the placement of the target concept within a sequence of concepts to be learned by the target audience. Without an articulation of the knowledge (e.g., facts, conceptual understandings, reasoning skills) and the sequence of target science concepts, it is not possible to make appropriate design decisions about whether the technology might be valuable as a learning resource within this learning environment and for the particular target audience.

Building cognitive tools from digital resources using the Cognitive Tools Framework also reflects the idea that many existing digital resources have strong educational potential and can serve as a foundation for a more focused cognitive tool. Many rich resources that contain excellent scientific material already exist, yet they are often unusable in their current form because their presentation is unfocused, too narrowly focused, or overly technical. Guidelines for the productive reworking of these resources could expand the repertoire of productive learning resources for pre-college science students. In addition, transformation using the Cognitive Tools Framework may be simpler than creating educational software from scratch, particularly when a rich resource on the topic already exists.

AN EXAMINATION OF SCIENCE LEARNING WITH TECHNOLOGY VIA THE COGNITIVE TOOLS FRAMEWORK

This section explores cases of technology in the research literature relative to the Cognitive Tools Framework. To begin this examination, it is necessary to identify the kinds of science knowledge we might wish technology to support. The National Science Education Standards suggest that in order to develop scientific inquiry abilities in K–12 education, learners should be engaging with rich scientific questions, gathering and analyzing data, formulating scientific explanations from scientific evidence, critically examining relevant data and explanations, and communicating scientific claims, evidence, and explanations to others (National Research Council, 2000).

Drawing on these dimensions of scientific inquiry, we select four areas that are considered essential to developing scientific knowledge and in which we believe technology can play an important role:

- Learners think critically and logically about scientific ideas and compare them with real-life conditions.

- Learners critically evaluate and communicate scientific ideas.

- Learners formulate scientific explanations from evidence.

- Learners use appropriate tools to gather, analyze, and interpret data (National Research Council, 2000, p. 19).

In the following sections, we identify categories of technologies that might be fruitful in supporting each learning dimension. The learning dimensions and their associated technologies are summarized in Table 17.1.

The following sections present exemplary cases of associated technologies and learning dimensions. Each case discussion focuses on the role of the technology in the learning environment relative to the specified learning dimension and the components of the Cognitive Tools Framework. Organizing the discussion around the areas of the framework allows us to review each technology's use as a cognitive tool for learning science by a particular audience, as opposed to a digital resource. In each technology category, the cases discussed are only some of the resources that might have been selected from the research literature; this discussion is therefore not intended to be a comprehensive review of all the technologies that might have been discussed.

TABLE 17.1

Learning Dimensions and Their Associated Technologies

| Learning dimension | Associated technologies |
| --- | --- |
| Learners think critically about scientific ideas and/or compare them with real-life conditions | Modeling, simulations, and visualization tools |
| Learners critically evaluate and communicate scientific ideas | Online critique and discussion resources |
| Learners formulate knowledge such as scientific explanations from evidence | Online scaffolding tools |
| Learners use appropriate tools to gather, analyze, and interpret data | Computer-based data collection and analysis |

Thinking Critically with Modeling, Visualization, and Simulation Tools

In learning science, it is frequently not possible to manipulate objects firsthand in order to develop deep conceptual understandings of scientific concepts. Sometimes the necessary materials are too toxic or costly. At other times, the objects of study are too large or too small to work with firsthand, as when a class studies the movement of solar systems, weather systems, or individual electrons.

Digital resources transformed into cognitive tools can help science learners to experiment and think critically about real-life phenomena through study in controlled, hypothetical or virtual environments. Subsequently, learners can compare what they have learned in the hypothetical or virtual environment with real-life phenomena.

One example of how technology can support students in thinking critically and logically about complex systems is the use of computer models, such as models of very large and complex three-dimensional systems. The Virtual Solar System (VSS) project supports students in creating and analyzing three-dimensional models of the solar system that simulate the relative rotation, size, and distance between planets (e.g., Keating, Barnett, Barab, & Hay, 2002). In this study, specific curricular activities were developed to complement the visualization tools and to support undergraduate students’ abilities to visualize abstract three-dimensional concepts, leading to more sophisticated understandings of basic astronomy concepts. Research on the development of students’ explanations for the seasons, the phases of the moon, and eclipses reveals significant improvements in students’ understanding of these concepts as a result of constructing and analyzing solar system models. In this case, the researchers articulated the target audience and learning goals, the learning activities, and the learning performances for work with the VSS 3D models. The models served as cognitive tools for critical thinking about sun-moon-earth concepts by allowing students to visualize abstract concepts about the solar system from a range of reference points or perspectives (Keating et al., 2002). Because students could run tests and view a three-dimensional system from a range of reference points, they could use the cognitive tool as an interactive representation of the actual scientific phenomena, which facilitated greater understanding of the scientific concepts than a non-interactive, two-dimensional textbook picture or model might allow. The emphasis on the needs of the audience, learning goals, specific activities, and specific performances enabled the technology's use as a cognitive tool as opposed to a mere digital resource.

Another exemplary case of a cognitive modeling tool is ThinkerTools (e.g., White & Frederiksen, 1998). Grounded in theories of learning, ThinkerTools was designed to coordinate the three elements of the Cognitive Tools Framework: audience/learning goals, activities, and performances. Middle school students are guided in physics problem solving while they are also guided in developing metacognitive skills about their own learning process. Outcome measures of conceptual understanding of physics, applied physics, and attitudes about science all show significant gains. Similarly, a third modeling tool engaged middle and high school students in creating dynamic models of plate tectonics phenomena, leading to sophisticated conceptual understandings of basic geology and of the value of models (Gobert & Pallant, 2004). Again, the coordination of audience/learning goals, activities, and performances made it possible for students to demonstrate strong learning outcomes with this cognitive tool.

Critically Evaluating and Communicating Scientific Ideas with Online Scaffolding Tools

Many network technologies are interactive and therefore provide opportunities for learners to critically evaluate and communicate their scientific ideas with others. Some technologies facilitate feedback and variations of tutoring, including feedback built into the tool (e.g., intelligent tutors), whereas others facilitate feedback and communication among individuals through the tool (e.g., Scardamalia & Bereiter, 1994). In one exemplary case, students using the interactive tool called Computer Supported Intentional Learning Environment (CSILE) achieved significantly higher scores on standardized tests and demonstrated greater depth in their explanations than students without CSILE (Scardamalia & Bereiter, 1994).

Discussion tools (e.g., online tools that provide opportunities for individuals to discuss, collaborate, or share ideas or materials) can also serve as rich examples of cognitive tools that help learners critically evaluate or communicate their ideas about science. In one exemplary case, Guzdial and Turns (2000) found that computer-mediated discussions focused specifically on topics related to class learning goals (i.e., the Audience/Knowledge area) were more effective than discussions with a more open format. The Multimedia Forum Kiosk (MFK; Hsi & Hoadley, 1997) supported much greater participation by a more widely representative part of the class than did a more traditional classroom discussion. Similarly, a tool focused on supporting ongoing conversations between mentors and students can provide guidance in science concept development, reasoning skills, or more productive views of the nature of science (e.g., Bruckman, 2000; O'Neill & Gomez, 1998). In these cases, special attention to areas of the Cognitive Tools Framework, such as tailoring online discussions to learning goals or tailoring activities to allow more anonymous participation (Hsi & Hoadley, 1997), resulted in strong desired outcomes (productive views of the nature of science, conceptual development, high participation levels).

In a contrasting case of critical evaluation and communication of scientific ideas, the Kids as Global Scientists’ live prediction tool was used to support globally distributed middle school students’ predictions of tomorrow's weather. Once weather data were reviewed and predictions were communicated, online atmospheric scientists evaluated these predictions against the actual weather outcomes and provided feedback (Lee & Songer, 2003). In this case, the online prediction environment provided opportunities not just for the development of conceptual knowledge but also for the introduction of real-time problem solving for students (“bringing real world problems into the classroom for students to explore and solve”; Bransford et al., 2000, p. 207). However, because of the complexity of the real-world situation, the Audience/Knowledge component of the Cognitive Tools Framework must be carefully matched to the learner's prior knowledge and abilities for maximum effectiveness (Lee & Songer, 2003).

Formulating Knowledge Such as Scientific Explanations with Online Scaffolding Tools

A third category of technologies that can support productive learning of science is online scaffolding tools, which can provide a range of different kinds of scaffolding. Scaffolding is commonly defined as the process by which a learner is assisted in solving problems or performing cognitive tasks that would be too difficult to perform on his or her own (Wood, Bruner, & Ross, 1976; Quintana et al., 2004). In technologies for learning science, scaffolds commonly take the form of written prompts, links, or diagrams built into the software to guide students in up to three different types of scientific knowledge development: conceptual knowledge development, reasoning skills such as the development of an evidence-based explanation, or understanding of the nature of science.

Zembal-Saul and Land (2002) provide evidence of an online scaffolding tool that helps prospective teachers construct scientific arguments related to physics concepts. Using the Progress Portfolio tool developed by Loh and colleagues at Northwestern University (Loh et al., 1997), the prospective teachers received online guidance in two areas: organizing and reflecting on evidence from experiments, and developing scientific explanations based on evidence. An examination of the preservice teachers’ performances after they used Progress Portfolio demonstrated that the cognitive tool provided valuable assistance in guiding the target audience to interpret their results and to refine their scientific explanations iteratively. In this case, it appears that matching the learning goals (reflection on data collected; development of strong scientific explanations) to the observed performances allowed a more detailed explanation of the ways in which the cognitive tool was and was not leading to the desired outcomes.

In two different papers, Davis (2003) and Reiser (2004) discuss research results with middle and high school students that explore the differential benefits of online scaffolding tools for formulating knowledge such as scientific explanations. Davis (2003) explored different kinds of online prompts to guide eighth-graders’ reflection. She found that when working with complex problem situations, students using generic prompts developed more coherent understandings than students using directed prompts. Reiser (2004), by contrast, presents a review of research results from studies exploring online scaffolding tools. Taking a bird's-eye view of these studies, Reiser discusses two ways in which online scaffolds can assist students in learning science: (a) structuring the learning task itself and (b) problematizing essential scientific content. Reflecting on these research results relative to the Cognitive Tools Framework, one hypothesis is that Davis's (2003) generic prompts and Reiser's (2004) scaffolds for problematizing scientific content define, but do not overly restrict, key aspects of the knowledge component, so that the learning activities and learning performances can be coupled accordingly.

Using Cognitive Tools to Gather, Analyze, or Interpret Data

The fourth area of learning science in which technology can play essential roles is the gathering and analysis of scientific data or information. A relatively new and promising use of technology in learning science is small handheld computers, such as personal digital assistants (PDAs), for quick data gathering and feedback in teaching and learning environments. In one example, Project WHIRL: Wireless Handhelds in Reflection on Learning (Penuel & Yarnall, 2005), teachers use handheld computers, drawing on their interactivity and capacity for feedback, to improve their ability to assess student learning rapidly within their classrooms.

A second example of technology for data collection and analysis is CyberTracker, the tool presented earlier. CyberTracker (Fig. 17.2 and www.cybertracker.co.za) is an icon-based software tool that runs on PDAs to support professional African animal trackers in quickly recording and identifying animals in the field. Recognizing early on the learning potential of the CyberTracker digital resource, researchers began to explore transforming it into a tool better suited to middle school students in Michigan. The examination of how to transform CyberTracker followed the Cognitive Tools Framework.

First, researchers examined CyberTracker with respect to audience/knowledge. At the outset, the icon-based data entry format of CyberTracker was a good fit for the target audience of language-diverse fifth- and sixth-graders in the Midwestern states. However, the learning goals focused on ecology and on the inquiry reasoning involved in building scientific explanations, as outlined in the National Science Education Standards (National Research Council, 1996), and the original CyberTracker sequence contained data collection prompts that were irrelevant to these goals. Therefore, transforming CyberTracker with respect to audience/knowledge required little adjustment in the area of language for the target audience; however, large adjustments were necessary to focus the data collection on supporting content development in ecology and the inquiry reasoning associated with building scientific explanations from evidence (Songer, 2006).

For all of our learning goals, the collection and organization of accurate scientific data were essential. Therefore, the second step of transforming CyberTracker via the Cognitive Tools Framework involved designing learning activities that focused on children's accurate collection of animal data in their schoolyards. This step involved both reworking the manner in which animal entries were organized into animal groups and streamlining the data-entry sequence to a small number of data types centered on key unit concepts (habitat, animal group, animal, number, and zone). Figure 17.2 illustrates an example of the CyberTracker sequence after transformation via the Cognitive Tools Framework, now called the BioKIDS Sequence.
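The five data types named above can be read as the fields of a single observation record. The sketch below is a hypothetical illustration only: it is not the actual CyberTracker or BioKIDS software, whose internal data format is not described in this chapter, and the names `Observation` and `habitat_summary` as well as the sample records are invented for the example. It shows how records restricted to these fields might be tallied by habitat and animal group, similar in spirit to the habitat summary discussed below.

```python
from collections import Counter
from dataclasses import dataclass


# Hypothetical record holding only the five data types of the streamlined sequence.
@dataclass(frozen=True)
class Observation:
    habitat: str
    animal_group: str
    animal: str
    number: int
    zone: str

    def __post_init__(self):
        # An entry records at least one sighted animal.
        if self.number < 1:
            raise ValueError("number must be a positive count")


def habitat_summary(observations):
    """Total number of animals recorded for each (habitat, animal group) pair."""
    totals = Counter()
    for obs in observations:
        totals[(obs.habitat, obs.animal_group)] += obs.number
    return dict(totals)


# Made-up schoolyard entries, for illustration only.
records = [
    Observation("lawn", "insects", "ant", 12, "zone A"),
    Observation("lawn", "birds", "robin", 2, "zone A"),
    Observation("trees", "insects", "beetle", 3, "zone B"),
    Observation("lawn", "insects", "beetle", 4, "zone A"),
]
print(habitat_summary(records))
# {('lawn', 'insects'): 16, ('lawn', 'birds'): 2, ('trees', 'insects'): 3}
```

Limiting each record to a few fixed fields mirrors the design choice described above: every entry is one that can be aggregated directly into evidence for claims about habitats and animal groups.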

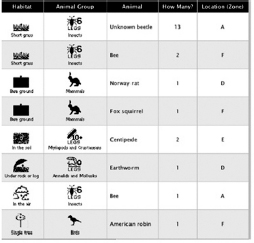

Finally, CyberTracker was examined with respect to the third area, learning performances. This examination involved analyzing the products students generated from their data collection with the BioKIDS Sequence to determine whether the information would support their ability to reach the desired learning goals. Figure 17.5 displays the student data collected by sixth-graders in one schoolyard in the Midwestern United States with the BioKIDS Sequence.

FIGURE 17.5. Sample habitat summary for Michigan-collected animal data.

DISCUSSION

This chapter began with a discussion of the rapid integration of technology into our lives and the comparative underutilization of technology in science classrooms, despite children's growing use of technology outside classroom walls. Most large-scale research on technology and science education reports largely quantitative indicators (e.g., access, frequency of use); few studies provide an analytical lens to help explain why technology is underutilized in science classrooms. This is the case despite high-profile requests for research studies that investigate technology designed with the principles of learning in mind:

[T]he process of using technology to improve learning is never solely a technical matter, concerned only with properties of educational hardware and software… . Good educational software and teacher-support tools, developed with a full understanding of principles of learning, have not yet become the norm. Software developers are generally driven more by the game and play market than by the learning potential of their products… . Much remains to be learned about using technology's potential: to make this happen, learning research will need to become the constant companion of software development. (Bransford et al., 2000)

To address the need to examine technologies driven by learning principles, this chapter presents an analytical framework and a discussion of several exemplary cases designed or transformed with respect to audience, learning principles, and outcomes. Through the analytical framework and associated cases, the chapter discusses the factors necessary for designing or transforming cognitive tools for learning science. The Cognitive Tools Framework identifies the areas of examination necessary to design a cognitive tool or to transform a digital resource into one, so that the technological resource has greater potential to contribute to strong learning outcomes. The Cognitive Tools Framework and the exemplary cases discussed present a perspective on how technological tools can be designed or transformed so that the gap in classroom utilization can be understood and overcome.

Each of the exemplary cases discussed illustrates ways in which technologies can be designed to focus on issues of audience, knowledge, learning activities, and performances. In many of these cases, the technology was evaluated, and strong learning results were found that support the value of a cognitive tool as opposed to merely a digital resource. For example, empirical studies of the designed cognitive tool ThinkerTools demonstrate middle school students’ significant gains on a variety of physics concepts relative to peers and older students (White & Frederiksen, 1998). Similarly, empirical results on the transformed cognitive tool, the BioKIDS Sequence, demonstrate high levels of usability by fifth- and sixth-grade students and strong learning outcomes in both science content understanding and scientific reasoning (Songer, 2005; Parr, Jones, & Songer, 2004). Interestingly, although the idea of designing or transforming technologies to be cognitive tools has been around for some time, a review of the literature in science education suggests that greater empirical evidence of learning outcomes associated with cognitive tools is needed.

In conclusion, we encourage additional research on the design of cognitive tools for learning science, including studies that use quasi-experimental comparative designs to examine the relative value of different designs with respect to the factors of the Cognitive Tools Framework. As long as few research studies examine learning-focused technologies, it will remain difficult to respond to critics who claim there is little empirical evidence to support the widespread use of technology in science classrooms. Furthermore, without a solid research base on learning-focused technologies, it will be impossible to make informed decisions on how and when to use technology for learning science. We also encourage additional research on the potential of technology for the learning of science, such as research that couples learning research with research on software and resource design. Coupled research of this kind is necessary to understand the effective use of technology in science classrooms and the transformations necessary to develop powerful cognitive tools that advance the learning of science by all learners.

ACKNOWLEDGMENTS

Thanks to Barbara Crawford and Bob Sherwood, who reviewed this chapter.

REFERENCES

American Association of University Women. (2000). Tech-Savvy: Educating girls in the new computer age. Washington, DC: American Association of University Women.

Bransford, J., Brown, A. L., & Cocking, R. R. (2000). How people learn: Brain, mind, experience and school. Washington, DC: National Academy Press.

Bruckman, A. (2000). Situated support for learning: Storm's weekend with Rachael. The Journal of the Learning Sciences, 9(3), 329–372.

Cohen, D., Raudenbush, S., & Ball, D. (2000). Resources, instruction, and research. Seattle, WA: University of Washington, Center for the Study of Teaching and Policy.

Cuban, L. (2001). Oversold and underused: Computers in the classroom. Cambridge, MA: Harvard University Press.

Davis, E. (2003). Prompting middle school science students for productive reflection: Generic and directed prompts. The Journal of the Learning Sciences, 12(1), 91–142.

Edelson, D., & Reiser, B. (2006). Making authentic practices accessible to learners. In R. K. Sawyer (Ed.), The Cambridge handbook of the learning sciences (pp. 335–354). New York: Cambridge University Press.

Friedman, T. L. (2005). The world is flat: A brief history of the twenty-first century. New York: Farrar, Straus & Giroux.

Gobert, J. D., & Pallant, A. (2004). Fostering students’ epistemologies of models via authentic model-based tasks. Journal of Science Education and Technology, 13(1), 7–22 (invited paper).

Grimm, V., Revilla, E., Berger, I., Jeltsch, F., Mooij, W., Railsback, S., et al. (2005). Pattern-oriented modeling of agent-based complex systems: Lessons from ecology. Science, 310(5750), 987–991.

Guzdial, M., & Turns, J. (2000). Effective discussion through a computer-mediated anchored forum. The Journal of the Learning Sciences, 9(4), 437–469.

Haak, W., Forster, P., Bramanti, B., Matsumura, S., Brandt, G., Tanzer, M., et al. (2005). Ancient DNA from the first European farmers in 7500-year-old Neolithic sites. Science, 310(5750), 1016–1018.

Hsi, S., & Hoadley, C. (1997). Productive discussion in science: Gender equity through electronic discourse. Journal of Science Education and Technology, 6(1), 23–36.

Keating, T., Barnett, M., Barab, S., & Hay, K. (2002). The Virtual Solar System Project: Developing conceptual understanding of astronomical concepts through building three-dimensional computational models. Journal of Science Education and Technology, 11(2), 261–275.

Lee, H. S., & Songer, N. B. (2003). Making authentic science accessible to students. International Journal of Science Education, 25(1), 1–26.

Levin, D., & Arafeh, S. (2002). The digital disconnect: The widening gap between internet-savvy students and their schools. Pew Internet and American Life Project. Retrieved November 14, 2005 from www.pewinternet.org

Linn, M. C. (2003). Technology and science education: Starting points, research programs and trends. International Journal of Science Education, 25(6), 727–758.

Linn, M. C., Davis, E., & Bell, P. (2004). Internet environments for science education. Mahwah, NJ: Lawrence Erlbaum Associates.

Linn, M. C., & Slotta, J. D. (2002). WISE science. Educational Leadership, x(x), 29–32.

Loh, B., Radinsky, J., Reiser, B., Gomez, L., Edelson, D., & Russell, E. (1997). The Progress Portfolio: Promoting reflective inquiry in complex investigation environments. Paper presented at the Computer Supported Collaborative Learning (CSCL) meeting, Toronto, Canada.

National Research Council. (1996). National science education standards. Washington, DC: National Academy Press.

National Research Council. (2000). Inquiry and the national science education standards: A guide for teaching and learning. Washington, DC: National Academy Press.

National Research Council. (2001). Knowing what students know: The science and design of educational assessment. Washington, DC: National Academy Press.

O'Neill, K. D. (2004). Building social capital in a knowledge-building community: Telementoring as a catalyst. Interactive Learning Environments, 12(3), 179–208.

O'Neill, K., & Gomez, L. (1998). Sustaining mentoring relationships on-line. Proceedings of Computer Supported Cooperative Work 1998, Seattle, WA, pp. 325–334.

Parr, C., Jones, T., & Songer, N. B. (2004). Evaluation of a handheld data collection interface for science. Journal of Science Education and Technology, 13(2), 233–242.

Penuel, W. R., & Yarnall, L. (2005). Designing handheld software to support classroom assessment: An analysis of conditions for teacher adoption. The Journal of Technology, Learning and Assessment, 3(5), 3–45.

Quintana, C., Reiser, B., Davis, E., Krajcik, J., Fretz, E., Duncan, R., et al. (2004). A scaffolding design framework for software to support science inquiry. The Journal of the Learning Sciences, 13(3), 337–386.

Reiser, B. J. (2004). Scaffolding complex learning: The mechanisms of structuring and problematizing student work. The Journal of the Learning Sciences, 13(3), 273–304.

Scardamalia, M., & Bereiter, C. (1994). Computer support for knowledge-building communities. The Journal of the Learning Sciences, 3(3), 265–283.

Schwartz, D. L., Lin, X., Brophy, S., & Bransford, J. (1999). Towards the development of flexibly adaptive instructional designs. In C. Reigeluth (Ed.), Instructional-design theories and models: A new paradigm of instructional theory (Vol. II, pp. 183–213). Mahwah, NJ: Lawrence Erlbaum Associates.

Soloway, E., Guzdial, M., & Hay, K. (1994). Learner-centered design: The challenge for HCI in the 21st century. Interactions, 1(2), 36–48.

Songer, N. B. (2005). Persistence of inquiry: Evidence of complex reasoning among inner city middle school students. Paper presented at the American Educational Research Association (AERA) annual meeting.

Songer, N. B. (2006). BioKIDS: An animated conversation on the development of curricular activity structures for inquiry science. In R. K. Sawyer (Ed.), The Cambridge handbook of the learning sciences (pp. 355–369). New York: Cambridge University Press.

Tinker, R., & Vahey, P. (2002). CILT2000: Ubiquitous computing—Spanning the digital divide. Journal of Science Education and Technology, 11(3), 301–304.

U.S. Department of Education. (2003). Technology in schools: Suggestions, tools and guidelines for assessing technology in elementary and secondary education. NCES 2003-313. Washington, DC: Technology in Schools Task Force, National Forum on Educational Statistics.

U.S. Department of Education. (2004). Toward a new golden age in American education: How the internet, the law and today's students are revolutionizing expectations. Retrieved November 15, 2005 from http://www.ed.gov/about/offices/list/os/technology/plan/2004/plan_pg20.html

U.S. House of Representatives. (2001). Hearing charter: Classrooms as laboratories: The science of learning meets the practice of teaching. Retrieved November 14, 2005, from http://www.house.gov/science/research/may10/res_charter_051001.htm

White, B. Y., & Frederiksen, J. R. (1998). Inquiry, modeling, and metacognition: Making science accessible to all students. Cognition and Instruction, 16(1), 3–118.

Wood, D., Bruner, J., & Ross, G. (1976). The role of tutoring in problem solving. Journal of Child Psychology and Psychiatry and Allied Disciplines, 17, 89–100.

Zembal-Saul, C., & Land, S. (2002). Scaffolding the construction of scientific arguments by prospective teachers using inquiry-empowering technologies. Paper presented at the annual meeting of the American Educational Research Association, New Orleans.