CHAPTER 30

Systemic Reform: Research, Vision, and Politics

Systemic: of a system; specifically, in physiology, of or affecting the entire bodily system. (Webster's New World Dictionary, p. 1481)

Reform: v. to make better by removing faults and defects; n. an improvement; correction of faults or evils. (Ibid., p. 1222)

Combining the above definitions yields a basic definition of systemic reform: to make an entire system better by removing its faults and defects. Although that definition applies to systemic reform of science education, it does not specify what counts as a “system” in educational reform, nor does it address the time that systemic reform requires. This chapter addresses the first issue by limiting its discussion to large-scale reforms that affect multiple parts of the education system, and the second by focusing on long-term reforms. These two parameters necessarily exclude many regional and local reforms, both in the United States and internationally.1 Such limitations are needed, however, both to focus the discussion and to allow a historical perspective.

It takes time to change any system, especially one as complex and entrenched as public education. Lee (2002) documented that changes in student achievement, the desired outcome of any educational reform, may require up to 30 years. When reform is expanded to include a whole system, the issue of time becomes paramount. Therefore, this chapter presents a historical overview of large-scale reform efforts in U.S. science education from the end of World War II to the present. Across the 60 years discussed, three themes have emerged in the United States: Texts and Teaching, Courses and Competencies, and Excellence and Equity. Those themes drove systemic reforms that occurred in three waves: from Sputnik through Man: A Course of Study (MACOS); from A Nation at Risk through national standards; and from the National Science Foundation's systemic initiative program to the No Child Left Behind legislation.

Although the launch of Sputnik in 1957 is commonly accepted as the catalyst for the first wave of systemic science education reform, both Dow (1991) and Jackson (1983) argue that the wave began earlier. It focused on educating the scientific and technical workforce needed to out-compete the Russians in the race to space. The first wave of reform primarily addressed two parts of the educational system: texts and teaching. It involved federal support for the development of new curricula and for Teacher Training Institutes that upgraded teachers' content knowledge through graduate courses at colleges and universities across the nation. However, because of public fears of a national curriculum, the Teacher Training Institutes were not officially tied to the new curricula.

A second wave of reform was catalyzed by the publication of A Nation at Risk in 1983. This time the impetus was the need to improve the scientific literacy of all citizens in the new technological age. The U.S. Secretary of Education appointed a commission that had 18 months to report on the quality of America's education. The report focused on high schools and was devastating in its description of their weaknesses (Goodlad, 2003; Sizer, 2003). Although it stopped short of calling for comprehensive, systemic reform, many states began to regulate education in ways intended to change the system. During the second wave of reform (approximately 1983 to 1991), another theme emerged: courses and competencies. State policies were directed toward increasing scientific literacy and using science to improve the quality of life by ensuring that all students graduated from high school with adequate courses and competencies. The political context of the time involved issues of economic security within the emerging global economy.

The third wave of reform differed from the two previous ones because it was based on theoretical insights. O'Day and Smith defined systemic reform as an effort “to upgrade significantly the quality of the curriculum and instruction delivered to all children … [requiring] major changes in the way states and local school systems make and implement policy” (1993, pp. 250–251). Their thinking and that of others2 were taken up by the newly reorganized science education directorate at the National Science Foundation (NSF) and its director, Luther Williams. Indeed, the focus of the third wave of reform, as presented in this chapter, is reform of a state's educational system, and it was catalyzed by the NSF's Statewide Systemic Initiative (SSI) program. Although systemic reforms in the third wave addressed a variety of components in the educational system, the themes that focused activities and policies at several levels were excellence and equity.

It has been impossible to review the research on systemic reform without placing both the reforms and the studies of them in their educational and political contexts, because both contexts have shaped the nature of the research. A comprehensive review of the literature also revealed that the research base is uneven and mostly evaluative. The first three sections of the chapter address each wave of systemic reform and the research associated with it. A time line to guide the reader through the U.S. reforms is provided in Fig. 30–1. Participants in systemic reform commonly speak of “lessons learned,” and the chapter concludes by considering what lessons research has taught about systemic reform in science education.

| Year | Event |
|------|-------|
| 1945 | Science, the Endless Frontier |
| 1950 | National Science Foundation Founded |
| 1954 | Physical Science Study Committee Funded |
| 1957 | Sputnik Launched |
| 1958 | National Defense Education Act |
| 1963 | Man: A Course of Study (MACOS) Funded |
| 1969 | Apollo Moon Walk |
| 1975 | Congressional Review of Man: A Course of Study |
| 1981 | NSF Education Directorate Disbanded |
| 1983 | A Nation at Risk and NSF Education Directorate Reestablished |
| 1984 | Education for Economic Security Act (Title II) |
| 1989 | NCTM Mathematics Education Standards and AAAS Benchmarks |
| 1990 | NSF Statewide Systemic Initiatives |
| 1996 | NRC Science Education Standards |
| 2000 | NCTM Standards Revised |
| 2002 | No Child Left Behind |

FIGURE 30–1. Timeline of systemic science education reforms in the U.S.

WAVE I: TEXTS AND TEACHING (FROM SPUTNIK TO MACOS)

The three full decades after World War II are an intriguing period in the history of American education, for it was during those years—the 1950s through the 1970s, roughly speaking—that the federal government made an extraordinary effort, unprecedented in scale, to involve itself in educational affairs for the purpose of improving the quality of schooling for a significant portion, if not all, of our nation's youth. (Jackson, 1983, p. 143)

Although the launching of Sputnik in 1957 is credited with starting the first wave of systemic reform in the United States, Dow (1991) suggests that “Long before Sputnik, postwar criticism of American schooling had been gaining momentum both within and outside the education profession” (p. 1). For example, Vannevar Bush's 1945 report to President Truman, “Science, the Endless Frontier,” provided the basis for founding the National Science Foundation (NSF) in 1950 (Bush in Jackson, 1983).3 The report articulated an urgent need to improve mathematics and science education. NSF responded by initiating Teacher Training Institutes and, in 1954, funding the first curriculum project, the Physical Science Study Committee (PSSC) (Jackson, 1983). Both initiatives were accelerated and expanded after Sputnik, when Congress passed the National Defense Education Act of 1958, which provided funds for local districts to build laboratories and to sponsor teacher education in science, math, and foreign languages. Concurrently, Congress increased funding for education at the National Science Foundation until education accounted for about one-half of NSF's budget (Office of Technology Assessment [OTA], 1988). To convey the scale of this funding, Table 30.1 converts NSF's curriculum development funds into equivalent 2003 dollars.

Teaching

The first wave of science education reform did not address all parts of the system, but it did address two aspects that were seen, both educationally and politically, as weaknesses: the quality of the science teaching force and outdated science texts. From 1954 to 1974, NSF focused on teaching by funding Teacher Training Institutes at a total cost of $500 million (OTA, 1988). Because the consensus at the time was that teachers lacked adequate science content knowledge, the institutes focused on increasing that knowledge by developing graduate, degree-granting programs in the individual sciences.

If judged by the number of teachers reached, the institutes were very successful. At their peak in the early 1960s, about 1,000 institutes were offered annually, with over 40,000 teachers participating each year (about 15 percent of the math and science teaching force). Although participation was highest among supervisors (80 percent in mathematics and science) and upper secondary school teachers (37 percent in mathematics and 47 percent in science), 5 percent of early elementary teachers also took part (OTA, 1988). At the time, NSF judged that the program had reached about as many teachers as any voluntary program could. By comparison, in the late 1980s (the second wave), all of NSF's programs together reached only 2 to 3 percent of secondary math and science teachers (OTA, 1988), and in the third wave (the 1990s) the statewide systemic initiative program reached only 8 percent of the science and math teachers in the 25 states and territories involved (Zucker, Shields, Adelman, Corcoran, & Goertz, 1998).

TABLE 30.1

NSF's Curriculum Development Funds

| Year | Actual dollars* | Equivalent 2003 dollars** |
|------|-----------------|---------------------------|
| 1954 | $1,725 | $11,815 |
| 1955 | $5,000 | $34,247 |
| 1957 | $500,000 | $3,267,974 |
| 1959 | $5,500,000 | $34,810,127 |
| 1968 | $12,250,000 | $64,814,815 |

Note: *From “The reform of science education: A cautionary tale,” by P. W. Jackson, 1983, Daedalus, 112, pp. 147–148.

**Calculated using: oregonstate.edu/Dept/pol_sci/fac/sahr/cv2003.xls
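The second column of Table 30.1 can be reproduced with simple division. The sketch below assumes each amount was divided by a year-specific conversion factor of the kind tabulated in the cited spreadsheet; the factor values are back-calculated from the table itself (for example, $1,725 / $11,815 ≈ 0.146) and were not read from the spreadsheet, so treat them as illustrative.

```python
# Sketch: reproduce the "Equivalent 2003 dollars" column of Table 30.1.
# ASSUMPTION: each amount was divided by a year-specific conversion factor.
# These factors are back-calculated from the table, not taken from the source spreadsheet.
CONVERSION_FACTORS = {1954: 0.146, 1955: 0.146, 1957: 0.153, 1959: 0.158, 1968: 0.189}

# "Actual dollars" column of Table 30.1.
ACTUAL_DOLLARS = {1954: 1_725, 1955: 5_000, 1957: 500_000, 1959: 5_500_000, 1968: 12_250_000}


def to_2003_dollars(amount: float, factor: float) -> int:
    """Convert a historical amount to 2003 dollars, rounded to the nearest dollar."""
    return round(amount / factor)


if __name__ == "__main__":
    for year, amount in ACTUAL_DOLLARS.items():
        converted = to_2003_dollars(amount, CONVERSION_FACTORS[year])
        print(f"{year}: ${amount:,} -> ${converted:,}")
```

With these factors every row matches the published table to the dollar; for 1968, for instance, $12,250,000 / 0.189 rounds to $64,814,815.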

Although there is little research about the institutes or evaluation of their success, the Office of Technology Assessment reviewed their efficacy in the late 1980s. The OTA report is one of the few documents that include research and evaluation findings on the institute program. It reports, for example, that a General Accounting Office review of research on the NSF-funded institutes “found little or no evidence that such institutes had improved student achievement scores” (OTA, 1988, p. 120). However, the OTA review identified the following important effects of the Teacher Training Institute program:

- It brought teachers up to date with current scientific developments.

- It brought teachers closer to the actual processes of science, thereby improving their sense of competence in science.

- It created a network of peers who provided professional support long after the institutes ended.

- It allowed teachers to do experimental work in science and encouraged them to replicate such experiences with their students.

- It helped define leaders for the science education community.

- It recognized the importance of the work of science teachers.

- It inspired and invigorated teachers to continue teaching.

In retrospect, many science educators agree that the two outcomes with the most lasting effects were the development of a network of peers and the defining of leaders. Both of these effects substantively changed the nature of the science teaching force through the next several decades (Helgeson, 1974).

Because NSF did not require evaluations of the institutes, little information other than the OTA (1988) report is available. However, in 1976, Willson and Garibaldi examined the results of a large-scale independent evaluation of five NSF Comprehensive Teacher Training Institutes to determine whether there was evidence that the cognitive achievement of junior and senior high students increased because their teachers participated in an NSF-sponsored institute. According to Willson and Garibaldi, previous findings indicating that students benefited from their teachers' attendance at NSF institutes were confounded by differences between institute and non-institute teachers. Those findings were also compromised because students were not assigned to teachers randomly.4

For their analysis, Willson and Garibaldi (1976) identified the most theoretically relevant factors affecting student achievement and examined each with respect to any observed differences in student achievement in classes of institute and non-institute teachers. For example, they examined the possible differential assignment of institute teachers to higher-ability classes and found that the distribution of teacher assignment by student ability was independent of NSF institute participation. As they summarize, “Thus, it appears unlikely that students of institute attending teachers would do better than students of nonattending teachers because of differential ability” (Willson & Garibaldi, 1976, p. 433). They also examined the possibility that institute teachers might teach in larger cities but rejected this factor because urban representation in the study was small.5 After controlling for student ability and school location, they found that institute participation was a positive and significant factor affecting student achievement. As they summarized, “[T]eacher attendance at institutes is associated with higher student achievement than no attendance, and … students of teachers with high institute attendance perform better than students of teachers who have attended only one or two institutes” (Willson & Garibaldi, 1976, p. 437).

Texts

The second focal point of the first wave of reform was texts. Science textbooks in the 1950s lagged far behind current scientific advances. In 1954, Jerrold Zacharias and a group of physicists in Cambridge formed the Physical Science Study Committee (PSSC) and began to develop the first course in the NSF curriculum development program. It was soon followed by curricula in mathematics, chemistry, biology, and finally social science. Collectively, these became known as the alphabet soup curricula (BSCS, CHEM Study, CBA, SCIS, ESC, etc.). By 1975, NSF had funded 53 curriculum projects at a cost of over $117 million. In all cases, teams of scientists, science teachers, and science educators worked on the materials. According to Nelkin (1977), the materials were designed to be “teacher-proof” in that they presented current scientific knowledge and concepts regardless of local norms. The innovative curricula of the 1960s not only updated content; they also focused on methods of inquiry and stressed individual judgment. Welch and Walberg's (1972) analysis of the differences between Project Physics, one of the NSF-funded curricula, and traditional physics texts provides insight into the educational vision of the time:

The developers of Project Physics were originally concerned about the continuing drop in the proportion of students who take physics in high school. To attract students who are not bound for mathematical, scientific, or technical careers, and without compromising on the physics content, they attempted to develop an interest-awakening, module system of course components using a variety of media and methods for learning… . Perhaps the most distinctive aspect of Project Physics is its humanistic orientation … (Welch & Walberg, 1972, pp. 373–374)

Indeed, the texts developed in the first wave of reform differed from traditional texts in many ways. But all were directed toward improving and enlarging the scientific, mathematical, and technical workforce for the nation, the political necessity of the day.

Two curriculum projects merit discussion because they expanded the scope of the curriculum efforts and illustrate how politics, not research findings, affect educational reform. In 1958, the Biological Sciences Curriculum Study (BSCS), initiated by the American Institute of Biological Sciences, became the first group to address the needs of a diverse student body, developing three versions of an introductory high school biology text. The need was great. High school biology texts were at least 20 years behind developments in the discipline, which especially hampered the teaching of evolution.

Given the politics of the day, it is not surprising that field testing and marketing of the BSCS books ran into trouble. In 1961 a field test in Dade County, Florida, was stopped because the books contained diagrams of the human reproductive system. When BSCS refused to remove the diagrams, Dade County school officials blacked them out before allowing the field test to continue (Nelkin, 1977). In addition, state boards of education in both Texas and New Mexico objected to the inclusion of evolution. BSCS stood its ground, and its books, evolution included, were placed on both states' adoption lists. However, the resulting publicity ensured continuing political conflict at both the state and the local district level.

The second curriculum project affected by political reality was Man: A Course of Study (MACOS). Although NSF had approached curriculum development in the social sciences cautiously, in 1963 it funded a year-long program for fifth- and sixth-graders that used an ethnographic approach to studying human behavior. MACOS did not avoid controversial issues, and it urged students to cultivate independent attitudes and to raise questions. Although MACOS clearly was treading on sensitive ground, it was successfully field tested in over 300 classrooms before commercial publishers pulled out. After NSF provided a supplement to offset publishing and distribution costs, the books were finally published in 1970. In 1974 MACOS was used in over 1,700 schools in 47 states. A year later, however, sales plummeted amid a wave of community disputes. The congressional hearings that followed sealed the fate not only of MACOS but also of NSF's curriculum development efforts and, in 1981, of its education directorate. A quote from the congressional hearings of that period illustrates the political environment: “You [MACOS] are not suggesting the philosophy … that is being taught. [That] is a local option of the school district” (Senator Talcott, 1976, in Nelkin, 1977, p. 24). Although the MACOS controversy is generally seen as ending the first wave of reform, Peter Dow, one of the MACOS authors, dates its end to the Apollo moon walk in July 1969. According to him, after that success in space, “the so-called ‘education gap’ seemed as ephemeral as the ‘missile gap,’ and federal support for curriculum reform began to wane” (1991, p. 7).

Although NSF was more or less comfortable with supporting projects to develop curricular materials, it approached the implementation of those materials with trepidation. Furthermore, NSF did not endorse or claim responsibility for the educational value of the curricula. It was hoped that by supporting several choices in different subject areas, the Foundation would not be accused of sponsoring a national curriculum. NSF was sensitive to any claims of “federal intervention” and was under constant congressional pressure to avoid such “interference.” As Nelkin stated, “[NSF's] role was to make materials available but not to mandate their use” (1977, p. 27). However, Senator Allott put it more forcefully, saying, “I don't want these things rammed down the throat of educators” (Senator Allott, 1964, in Nelkin, 1977, p. 24).

Unlike the situation with the Teacher Training Institutes, there was considerable research on the efficacy of the NSF-supported curriculum materials. One study of the time summarized the situation this way: “After the urgency and excitement of the early years of this new curriculum movement had passed, many individuals and groups, including the Congress of the United States, demanded objective evidence of the value of the new courses” (Walker & Schaffarzick, 1974, p. 83). Research focused on student achievement and overall program impact is summarized next. It is important to note, however, that student achievement research was clouded by controversy over the type of test used to obtain the data. Therefore, two studies that specifically addressed the issue of test bias are discussed in detail. The findings of other studies are included in the major meta-analytic and qualitative syntheses of the 1970s and 1980s, which are also discussed.

Some researchers attempted to overcome test content bias by using two achievement tests, one based on the innovative curriculum used and the other based on a comparable traditional curriculum (Heath, 1964; Heath & Stickell, 1963; Lisonbee & Fullerton, 1964; Rainey, 1964; Wallace, 1963). Others examined test content to determine if scores on subscales reflected patterns of emphasis present in the curricula (Herron, 1966; Wasik, 1971). However, findings were mixed, and the concern about test bias continued to cloud research done on student achievement and the NSF-supported curricula.

Two studies, one of CHEM Study and the other of Project Physics, were unique in that both controlled for test bias and the Project Physics study also used an experimental design. In 1966, Herron compared the cognitive ability of students taught with CHEM Study with that of students who used a conventional chemistry text.6 He carefully constructed a chemistry test that measured cognitive ability independently of the specific content studied. He administered the test to chemistry students in four suburban schools and analyzed the results at three student ability levels, as measured by the Iowa Tests of Educational Development. Although findings differed among the three ability groups, he found that at all ability levels CHEM Study students scored significantly higher on Application items than did students using conventional texts (Herron, 1966).

Welch and Walberg (1972) also addressed the need for controlled, experimental studies to assess student achievement with NSF-supported curricula. From a national list of 16,911 physics teachers, 136 were randomly selected to participate in the study. Of that number, 72 agreed to participate and were randomly assigned to either the experimental group (which attended a six-week briefing session on Project Physics) or the control group (which participated in a two-day physics workshop at Harvard University). Within the classes of both groups, students were randomly assigned to take one of two instruments that together assessed the following factors: cognitive, affective, learning environment, course reaction, semantic differential, and physics perception. Controlling for student ability, Welch and Walberg concluded that Project Physics students performed as well as students in other courses on cognitive measures and that they enjoyed their text and found physics less difficult than did students in other physics courses. In addition, Project Physics had a special appeal to students in the middle-range IQ group (112 to 119), students who, they noted, had increasingly been choosing not to take high school physics (Welch & Walberg, 1972).
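The Project Physics study combined random selection of teachers with random assignment at two levels: teachers to conditions and, within classes, students to instruments. The Python sketch below illustrates that logic only. The teacher and student IDs, the instrument labels ("Form A", "Form B"), and the even split of the 72 volunteers are hypothetical, since the chapter does not report group sizes, and the step in which sampled teachers chose whether to participate is deliberately not modeled.

```python
import random
from typing import Dict, List, Sequence, Tuple


def select_and_assign_teachers(
    roster_size: int, n_sampled: int, n_agreed: int, rng: random.Random
) -> Tuple[List[int], List[int]]:
    """Randomly sample teachers, then randomly split the volunteers into two groups."""
    # Stage 1: simple random sample of teacher IDs from the national roster.
    sampled = rng.sample(range(roster_size), n_sampled)
    # In the study, teachers decided whether to participate. Self-selection is NOT
    # modeled here: this hypothetical sketch keeps the first n_agreed sampled IDs.
    volunteers = sampled[:n_agreed]
    # Stage 2: random assignment of volunteers to conditions.
    # ASSUMPTION: an even split; the chapter does not give the group sizes.
    rng.shuffle(volunteers)
    half = len(volunteers) // 2
    return volunteers[:half], volunteers[half:]


def assign_instruments(student_ids: Sequence[int], rng: random.Random) -> Dict[int, str]:
    """Within one class, randomly give each student one of two (hypothetical) instruments."""
    shuffled = list(student_ids)
    rng.shuffle(shuffled)
    # Alternating after the shuffle splits the two forms as evenly as possible.
    return {sid: ("Form A" if i % 2 == 0 else "Form B") for i, sid in enumerate(shuffled)}


if __name__ == "__main__":
    rng = random.Random(1972)
    experimental, control = select_and_assign_teachers(16_911, 136, 72, rng)
    print(len(experimental), len(control))
    forms = assign_instruments(range(30), rng)
    print(sum(form == "Form A" for form in forms.values()))
```

Randomizing at both levels is what lets differences between groups be attributed to the curriculum rather than to which teachers or students happened to end up in each condition.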

In response to what many researchers, policy makers, and educators interpreted as a lack of substantive research on the quality of the curriculum and its influence on student learning, researchers began to use synthesis techniques, both qualitative and quantitative, to analyze findings across studies. One synthesis by Walker and Schaffarzick (1974) examined studies “in which an attempt was made to discriminate finer and more subtle differences between innovative and traditional curricula than a single score on an achievement test” (p. 94). They reviewed research since 1957 that compared the achievement of students using innovative curricula with that of students using traditional curricula. The review included studies in science, mathematics, social studies, and English. Of the 23 studies that allowed direct comparison of their results, a majority (12) were in science. Walker and Schaffarzick's (1974) review revealed an advantage for students using innovative curricula over students using traditional curricula. They concluded that “[t]he innovative groups were superior about four times as often as the traditional groups in these comparisons” (p. 90). However, they also reported that “innovative students do better when the criterion is well-matched to the innovative curriculum, and traditional students do better when the criterion is matched to the traditional curriculum” (p. 94). According to their synthesis, “different curricula produce different patterns of achievement, not necessarily greater overall achievement” (Walker & Schaffarzick, 1974, p. 97).

Boulanger (1981) synthesized research published between 1963 and 1978 that addressed science education for grades 6–12. The findings of over 50 studies were synthesized to determine the impact of certain aspects of instruction on student learning. Boulanger's synthesis “provides some insight into the effectiveness of systematic innovation in instruction” (p. 319). For example, the use of pre-instructional strategies (e.g., behavioral objectives, set induction, advance organizers) was found to improve student conceptual learning. Training in scientific thinking, increased structure in the verbal content of materials, and increased realism or concreteness in adjunct materials also were found to improve cognitive outcomes. He also synthesized research on the amount of time spent on a topic, reporting that “simply expanding the amount of time spent on a given unit of material holds no special relationship to amount learned” (Boulanger, 1981, p. 321).

To provide a quantitative treatment of the research and reduce the potential for investigator bias, Shymansky, Kyle, and Alport (1983) synthesized 25 years (1955 to 1980) of experimental and quasi-experimental research on the effects of the new science curricula on student performance. They used meta-analytic procedures to examine 105 studies, representing a total sample of 45,626 students and 27 of the new science curricula. They measured student performance on 18 criterion variables individually, in combinations called criterion clusters (e.g., analytic skills, process skills, achievement), and by subject area.7

Shymansky and his colleagues found that the average student studying the new materials exceeded the performance of 63 percent of the students in traditional science courses on the aggregate criterion variable (i.e., general achievement, process skills, analytic skills, and attitudes toward science).8 The greatest gains were in process skills, attitudes, and achievement. They also reported that overall performance scores were more positive for mixed-gender samples than for samples that were predominantly male (fewer than 25 percent female) or predominantly female (more than 75 percent female). When the results were analyzed by subject area, the biology curricula developed by BSCS not only had the most positive impact on student performance but also had the most research supporting them. Furthermore, students using the new biology and physics programs showed the greatest gains across the 18 criteria measured, whereas students using the new chemistry and earth science materials showed the smallest gains.

As Shymansky and his colleagues note, their findings concerning overall improvements in achievement were especially important because much of the criticism of the new curricula assumed that improvements in process skills (a focus of all the new materials) could only be achieved at the expense of content knowledge (Shymansky, Kyle, & Alport, 1983).

Bredderman's (1983) meta-analysis of 57 controlled studies on three activity-based elementary science programs (Elementary Science Study, ESS; Science—A Process Approach, S-APA; and Science Curriculum Improvement Study, SCIS) found that 32 percent of the 400 comparisons favored the activity-based programs at least at the .05 level of significance. As he concluded,

The results of this meta-analysis of program effects help in estimating what the impact would be if activity-based programs were adopted across a wide variety of districts. Performance on tests of science process, creativity, and perhaps intelligence would show increases of 10 to 20 percentile units. Reading and math scores might be positively affected, and attitudes toward science and science classes would probably show a small improvement. Student performance on standardized achievement tests in science content, if affected at all, might go up slightly when averaged across all student populations. (p. 511)

On the other hand, Bredderman cautioned that gains from using the activity-based curricula were not sustained unless similar types of materials were used in subsequent grades.
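Both the Shymansky and the Bredderman syntheses express results as percentile positions. Under a common meta-analytic convention, which assumes normally distributed scores with equal spread in the two groups (an assumption this sketch makes, not one the chapter documents for these studies), an effect size d places the average treated student at the Φ(d) percentile of the comparison group, where Φ is the standard normal cumulative distribution function. The sketch converts in both directions with Python's standard-library `statistics.NormalDist`; the effect sizes it prints are back-calculated from the percentages in the text, not values quoted from the original studies.

```python
from statistics import NormalDist

# Standard normal distribution used for the conventional effect-size/percentile conversion.
STANDARD_NORMAL = NormalDist(mu=0.0, sigma=1.0)


def percentile_from_effect_size(d: float) -> float:
    """Percentile of the comparison group reached by the average treated student."""
    return 100 * STANDARD_NORMAL.cdf(d)


def effect_size_from_percentile(percentile: float) -> float:
    """Standardized effect size implied by a given percentile (inverse of the above)."""
    return STANDARD_NORMAL.inv_cdf(percentile / 100)


if __name__ == "__main__":
    # Shymansky et al.: the average new-curriculum student exceeded 63% of traditional students.
    print(f"63rd percentile -> d = {effect_size_from_percentile(63):.2f}")
    # Bredderman: gains of 10 to 20 percentile units, ASSUMING a start at the 50th percentile.
    for pct in (60, 70):
        print(f"{pct}th percentile -> d = {effect_size_from_percentile(pct):.2f}")
```

Under these assumptions, the 63 percent figure corresponds to an effect size of roughly one-third of a standard deviation, and a gain of 10 to 20 percentile units from the median corresponds to roughly 0.25 to 0.52 standard deviations.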

In 1982, Weinstein and colleagues reported on a meta-analysis of 33 studies, involving over 19,000 junior high and high school students in the United States, Britain, and Israel, that was designed to assess the impact on achievement of the innovative U.S. secondary science curricula developed since 1960. They found a ratio of approximately 4:1 in favor of outcomes associated with the innovative materials (Weinstein, Boulanger, & Walberg, 1982). In 1986, Blosser synthesized many of these studies, concluding that “Data exist to support the idea that the science curriculum improvement project materials developed after 1955 were successful in promoting student achievement in the use of science process skills, in creativity, in higher cognitive skills at both the elementary and secondary school levels” (Blosser, 1986, p. 517).

TABLE 30.2

Estimates of Percentages of U.S. High School Physics Students Enrolled in PSSC

| Source | 1962–63 | 1964–65 |
|--------|---------|---------|
| U.S. Office of Education | 12.6 (50,300)** | 20.6 (99,900) |
| National Science Foundation | 25.0 (125,000) | 50.0 (200,000) |
| College Entrance Examination Board | 19.6 | 29.6 |

Note: **The figures in parentheses are the estimated total PSSC enrollments. From “The impact of national curriculum projects—the need for accurate assessment” by W. W. Welch, 1968, School Science and Mathematics, 68, 230.

In summary, much of the research concerning the effectiveness of the NSF-supported curricula in improving student achievement was compromised by two issues: first, the problem of test bias, and second, the lack of random sampling. Most of the evidence for improved student achievement is based on secondary analyses conducted after the period of funding. Nevertheless, the research generally indicates that many curriculum projects were successful in improving student achievement.

Another line of research involved the impact of the NSF-supported curricula. By 1970, approximately a quarter of all secondary students in the United States used NSF-funded materials in their science courses (Nelkin, 1977).9 Together, the curricula spanned all levels of mathematics and science education, including the college level, and, at times, extended across levels and areas. According to Schlessinger and Helgeson (1969), the science curriculum projects were more numerous, but less comprehensive, than those in mathematics. However, both the science and the mathematics programs fell short in their attempts to reach the total school population. Schlessinger and Helgeson (1969) described the situation in the following way: “Varying with the new course content projects, we are reaching only from twenty to about fifty percent of the students within our classrooms. This is true even where the teachers have been given special institutes in preparation for teaching these courses” (pp. 639–640).

Using the Physical Science Study Committee (PSSC), the first NSF-supported project, as a case study, Welch (1968) described how three separate studies produced three different sets of percentages estimating the enrollment of students in PSSC courses (see Table 30.2). Welch concluded that the discrepancy among the data sets "leads one to doubt the accuracy of any of the reported figures" (p. 231). As he wrote, "Thus it becomes apparent that an assessment of the impact of the course content improvement program, even at the gross level of counting heads, is not reliable" (p. 234).

In spite of varying estimates of the use of the NSF-supported curricula, the program was a massive effort that updated science content, changed instruction, and influenced more traditional texts. For example, in biology, although only 50 percent of American secondary schools eventually used BSCS materials, the BSCS books influenced change in nearly 70 percent of the content of the most widely used text at that time, Modern Biology (Quick, in Jackson, 1983, p. 149). However, the success of the curricular reform in wave one also had a political pitfall. As Nelkin wrote, "By improving the public school curriculum, the NSF, despite its denial of responsibility, found itself involved not in an isolated neutral research endeavor but in a major social intervention filled with political implications" (1977, p. 27).

Summary

Although the term systemic reform was not used in the 1960s and 1970s, the United States embarked on large-scale reform efforts that addressed two major parts of the educational system: texts and teaching. Both efforts substantively changed science education, and the synergy between the two foci enhanced their separate effects. However, the various projects were not required to conduct evaluations, and few research studies exist, particularly for the teacher training institutes. Although more research exists for the curriculum projects, most studies were done after a project's completion; that is, results were not used to refine or continue the reform. The research usually used synthesis techniques, and findings generally supported the effectiveness of the new curricula in improving student attitudes, process skills, and achievement levels in science. The reforms of the 1960s and 1970s were national in scope and reflected a shared vision of improved science education, but in the end they were limited by political issues and concerns.

WAVE II: COURSES AND COMPETENCIES

The 1980s produced two distinct approaches to educational reform. One was a ‘top-down’ strategy that sought to change schools through state programs and regulatory activity. The other has been a ‘bottom-up’ reform movement in the form of school-based ‘restructuring.’ Both strategies have proved inadequate in substantial part because of the fragmentation of the current education system. (Smith, O'Day, & Fuhrman, 1992, p. 31)

The next wave of reform was signaled by the release of A Nation at Risk in 1983, which stated: "If an unfriendly foreign power had attempted to impose on America the mediocre educational performance that exists today, we might well have viewed it as an act of war" (National Commission on Excellence in Education, 1983, p. 5). Indeed, one researcher referred to reform efforts in the 1980s as "[attempts] to forcefully repair the sinking vessel" (Hawley, 1988, p. 418). Although the nation was aroused, the report did not address the funding needed to change the educational system. However, in 1984, Congress passed the Education for Economic Security Act (EESA), which was designed to promote the teaching of mathematics, science, and foreign languages. Title II of that act directed the U.S. Department of Education to provide grants to school districts to address the shortfalls highlighted in A Nation at Risk. A second impetus for the reforms of the 1980s was the growing national awareness that the United States no longer dominated the global marketplace.

Although reform efforts occurred at both local and state levels, this section focuses on changes that were state, or top-down, efforts, because they were more systemic in their approach and effect.10 Two major changes occurred at the state level. First, states assumed the responsibility for determining high school graduation requirements; and, second, states mandated competency tests to ensure that graduating students had the skills and knowledge to be effective citizens in a global economy. The themes of the second wave of reform, therefore, are courses and competencies.

Courses

In total, states enacted more rules and regulations affecting education in the three- to four-year period following the release of A Nation at Risk than at any time since the early 1960s (Timar & Kirp, 1989). Between 1983 and 1985, approximately 700 new policies were legislated (Darling-Hammond & Berry, 1988), and much of the activity focused on trying to ensure that all students reached high school graduation standards and, in many states, passed competency tests. In order to reach those goals, states increased the number of academic units or credits needed for graduation while concomitantly reducing the number and type of electives that would count toward graduation. In addition, states mandated passage of competency tests, added “pass to play” provisions (Texas), and developed grade-to-grade promotion standards (Wilson & Rossman, 1993).

The impetus for increasing course requirements was based on research, particularly in mathematics, indicating that student achievement as measured by standardized tests is improved by increased course taking (Alexander & Pallas, 1984; Schmidt, 1983a, b). Changes also were influenced by the National Commission on Excellence in Education's (1983) delineation of minimum high school graduation requirements. Those requirements included four year-long courses in English, three in mathematics, three in science, three in social science, and a semester course in computer science. Furthermore, a foreign language was recommended for college-bound students. In 1980, 37 states defined minimal graduation requirements, but a decade later, 43 states had assumed that responsibility (Wilson & Rossman, 1993). Math or science requirements were increased in 32 of those states, and 25 raised graduation requirements in both math and science. One result of increased graduation requirements was a proliferation of science courses. Indeed, in the four years between 1982 and 1986, the largest enrollment increases were in science and foreign languages. Furthermore, to ensure that all students passed competency tests, schools offered more remedial courses.

Most of the research on course offerings relied on large national databases that provided profiles of course offerings, on state assessments of change, or on both. Researchers at the Educational Testing Service used the High School and Beyond database to analyze course-taking patterns across the nation, with the 1982–83 school year providing baseline data. According to Wilson and Rossman, "One topic of high interest in several of the studies was student enrollment in mathematics and science and whether there was systemic variation by track, race, gender, socioeconomic status, or other demographic variables" (1993, pp. 36, 37). As expected, analyses found that enrollments differed by school characteristics.

Clune, White, and Patterson (1989) collected interview data in six states, 24 districts, and 32 high schools to analyze the effects of the new graduation requirements. They found that slightly more than a quarter of students enrolled in an additional math class, and a third took an additional science class. They concluded that the increased graduation requirements both succeeded and failed, stating, "They succeeded in getting a lot more students into basic academic courses; … they failed in getting students into the most rigorous possible courses, in producing a reasonably uniform education for all students, and, probably, in conveying the higher-order skills necessary for a competitive economy" (p. 47).

Another study analyzed the effects of state policies on teachers’ content decisions in elementary mathematics (Schwille et al., 1986, July). The seven states involved in the study ranged from ones with high direct state control (New York and South Carolina) to ones with a mix of direct and indirect control (Florida and California) to ones where control of school policies was at the district level or there was indirect control (Ohio, Indiana, and Michigan). Although differences were found between direct and indirect control states, the authors concluded that in both types of states, teachers had little autonomy over policies on instructional objectives, student testing, student placement, textbook selection, time allocation, and teacher qualifications. Indeed, the top-down component of the second wave of reform did not involve teachers in decision-making.

Retrospectively, Porter and his associates analyzed reforms during the 1980s from the perspective of opportunities to learn (Porter, 1994). The analysis focused on three aspects of the reform during the 1980s. First, did increased graduation requirements lead to higher dropout rates? Second, did schools accommodate students by offering more remedial courses? And, third, was instruction weakened in standard courses? Porter cites a study by the U.S. Department of Education that indicated that dropout rates, particularly among poor and/or minority students, did not increase (U.S. Department of Education, in Porter, 1994). The second question was answered by Clune and White's (1992) analysis of high school course-taking patterns. Using schools in four states that enrolled mostly low-achieving students, they concluded that course-taking patterns had not been affected by increased graduation requirements. That is, the additional academic credits were earned in courses of varying levels of difficulty, not only in basic or remedial courses. Porter focused on answering the third question. As he wrote, "[N]o evidence was found that requiring more students to take more advanced mathematics and science resulted in compromising the curricula of the courses experiencing the increased enrollments… . The required Chemistry/Physics course looked as challenging in terms of topics covered as did the college prep Physical Science course, and the actual quality of instruction looked better" (Porter, 1994, p. 6).

The second wave of reform was characterized by a proliferation of state graduation requirements that spawned new courses and varied types of instruction. However, the dire predictions that such top-down reform would lead to increased dropout rates or watered-down courses are not supported by the available research. The second characteristic of the second wave of systemic reform was an increase in state-mandated testing of competencies.

Competencies

In addition to increasing academic course requirements, state reform policies addressed competencies by increasing the number and importance of state-mandated tests. Although state-mandated testing was intended to drive changes in the curriculum, it often resulted in narrowing the curriculum, thereby "[creating] adverse conditions for reform" (Fullan & Miles, 1992, p. 747). For example, a study by Corbett and Wilson (1990) found that new statewide testing requirements in Maryland and Pennsylvania resulted in narrowing of the curriculum by aligning subjects taught to a specific test. Similarly, a study of the impact of state-mandated assessments in mathematics in two states (Maine and Maryland) found that the new testing mandates resulted in teachers focusing their teaching activities on the tests, especially when testing was combined with high-stakes conditions (Firestone, Mayrowetz, & Fairman, 1998).

Wilson and Rossman (1993) summarize the findings concerning the effects of competency testing on student enrollments, stating, "What stands out, however, are the low levels of enrollment in mathematics and science courses relative to vocational courses and the suggestion that competency testing pushes students toward basic or remedial courses, at least in mathematics" (pp. 37, 38). Furthermore, they state that "one main effect of the reform of graduation requirements was … a redistribution of course offerings and staffing patterns across departments within schools" (p. 41). For example, although math enrollments remained fairly stable, there was a substantial redistribution of types of courses offered and enrollments in various courses.

Porter (1983, January-February) examined the research concerning testing and school effectiveness. He cites multiple studies that support testing as one of five factors contributing to effective schools, writing, “Within the context of the debate on testing, the effective schools literature must be seen as aligned squarely with the advocates of testing” (p. 25). Other analyses, such as the one discussed next, concurred that testing was effective in improving student achievement and in closing achievement gaps among subgroups of students.

A comprehensive analysis of three decades of math and reading scores from NAEP and SAT provides further evidence of the combined effects of increased graduation requirements (leading to diverse course offerings within a subject) and competency testing (Lee, 2002). Although the analyses do not include science, the results are pertinent. Using NAEP data, Lee reports that "During the period from 1978 to 1986, greater academic improvement of lower performing students across all racial and ethnic groups was observed" (p. 4). This change narrowed the achievement gaps between Black and White students and between Hispanic and White students. Similar results were found with SAT scores. As Lee summarized, "It appears that low-performing students gained more than high-performing students when minimum competency was emphasized during the 1970s and early 1980s" (2002, p. 5).

Summary

In looking back over the 1980s, two issues were paramount in affecting large-scale educational reforms. First, states increased graduation requirements while simultaneously restricting the types of elective courses that could be used to meet those requirements. Second, states began to institute high-stakes tests, which defined the skills and knowledge students should have after successfully completing the required courses. However, many state policies were mandated without substantive input from teachers, resulting in the establishment of unrealistic timelines (Fullan & Miles, 1992; Schwille et al., 1986, July). Furthermore, states often reversed policies before achievement could be affected.11 As noted in one report, "The ‘top-down’ mandates of the 1980s did little … to change the content of instruction (especially its focus on basic skills) or to alter the reigning notions of teaching and learning because, as some argued, fragmented and contradictory policies diverted teachers’ attention and provided little or no support for the type of professional learning necessary" (Goertz, Floden, & O'Day, 1996, p. xi). As the decade drew to a close, the concept of a systemic approach to reforming science and mathematics education began to emerge in the literature (Horizon Research, 1994). Reform programs previously described as "comprehensive" came to be seen as less extensive than systemic ones, and researchers and policy makers began to define the ways in which reform could address an entire educational system.

WAVE III: EXCELLENCE AND EQUITY

Systemic education reform, in theory and practice, is at its heart: (a) ambitious—in that it establishes new learning standards for all students, (b) comprehensive—in that it simultaneously targets many pieces of the educational system for change toward a common goal, and (c) coherent—in that it supports mutually reinforcing practices and policies that send a strong, consistent message to students, educators, and society about what is important in education. (Fuhrman, 1994, in Heck, 1998, p. 162)

In 1990, the National Science Foundation (NSF) acknowledged the need to address whole state educational systems by establishing the Statewide Systemic Initiative (SSI) program. The SSI program marked the beginning of the third wave of reform in science education. The program's goal was to improve classroom practice and, ultimately, student achievement through major systemic change in science and mathematics education (Fuhrman et al., 1995). As noted in one report, “In framing its solicitation for proposals, NSF emphasized the need for states to deal comprehensively with the key factors that shape instructional practice, the policies governing education, and the economic and political contexts in which science and mathematics education must operate” (Horizon Research, 1994, p. 3). Between 1991 and 1993, NSF entered into five-year cooperative agreements with 24 states and the Commonwealth of Puerto Rico to carry out standards-based systemic reform in mathematics and science education.

The path for systemic reform was paved by the publication of standards in mathematics (National Council of Teachers of Mathematics [NCTM], 1989) and, in science, by the American Association for the Advancement of Science's (AAAS) Benchmarks for Scientific Literacy (AAAS, 1993). The Benchmarks were used by the state systemic initiatives as standards for science education until the publication of the National Science Education Standards (National Research Council [NRC], 1996). All three documents stress both excellence and equity. For example, the National Science Education Standards (1996) state, "[t]he intent of the Standards can be expressed in a single phrase: science standards for all students. The phrase embodies both excellence and equity" (p. 2). Furthermore, both the NCTM and the NRC standards are based on the premise that common, minimum standards do not promote equity, whereas common, high standards of excellence do. NSF embraced that premise, and excellence and equity became cornerstones of systemic reform in science and mathematics in the 1990s.

Note: From A report on the evaluation of the National Science Foundation's Statewide Systemic Initiatives (SSI) Program, by A. A. Zucker, P. M. Shields, N. E. Adelman, T. B. Corcoran, & M. E. Goertz, 1998, (Report No. NSF 98-147) p. viii. Arlington, VA: National Science Foundation.

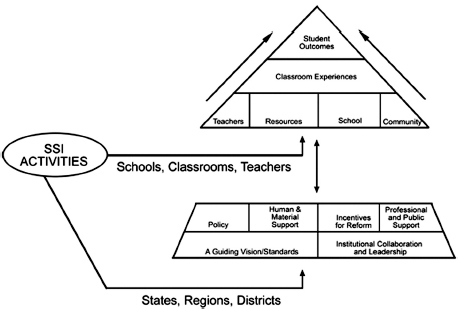

FIGURE 30–2. A model of systemic reform.

Perhaps learning from the reforms of the 1960s and 1970s, NSF made comprehensive evaluations a cornerstone of its systemic initiative program. Several groups were contracted to evaluate the reforms through multiple studies that used both quantitative and qualitative methods.12 In addition, a model of systemic reform was developed that helped focus evaluation and research questions (Zucker, Shields, Adelman, Corcoran, & Goertz, 1998). Figure 30–2 illustrates components of state educational systems in the United States. With the overall goal of improving student achievement in mathematics and science, the SSI reforms generally entered the system at two levels, as shown in Fig. 30–2. The majority of the reform activities entered in the middle and worked up through local classrooms, students, and teachers to improve student achievement. However, other activities began at the state, region, or district level to affect policies and public support for the reforms.

At the classroom level, the SSIs’ primary focus was on teacher professional development (20 states), on developing instructional materials (initially 6 states but, by 1997, 13 states), and on supporting model schools (7 states). The states involved in systemic reform mainly used five strategies to affect state and district levels. Twenty-two states created an infrastructure to support systemic reform, 13 addressed teacher education, 14 mobilized public opinion, 9 funded local systemic initiatives, and 3 attempted to align state policy as a primary target of the reform effort (Zucker, Shields, Adelman, Corcoran, & Goertz, 1998).13

Zucker and Shields (1995, 1998a, 1998b) conducted school-level case studies in 12 of the SSI states from 1992 through 1997 in order to assess the SSI program's impact on classroom practice and to identify characteristics of successful SSI strategies. Wherever possible, data from an SSI's internal evaluation were incorporated into the study. There was general agreement among the SSIs on what the problems were in mathematics and science classrooms and on the kinds of reforms needed to address the problems. The SSIs recognized the need for new, integrated forms of assessing student progress (e.g., portfolios, exhibitions) and the need for instructional strategies that focused more on active and interactive learning. Collectively, the SSIs called for new curriculum materials that placed increased emphasis on learning by all students, conceptual understanding of fundamental mathematical and scientific concepts, applications of mathematics and science to real-life situations, and interrelatedness across disciplines. Another study found that, overall, the SSIs’ professional development stressed opportunities for teachers to enhance their content knowledge (Shields, Marsh, & Adelman, 1997).

Although the NSF-funded evaluations of the systemic initiatives are available, to date little research on the SSIs has been published. This is partly due to the location of the initiatives within state departments of education (7), state higher education councils (3), or private groups (7), some of which were created to run the initiatives. Only three SSIs were housed at universities. Much of the research focused on the two aspects of the third wave of reform that both the national standards and the NSF program addressed: excellence and equity. The review of research for the third wave of systemic reform traces both themes.

Excellence

Because improved student achievement was the overall goal of the program, excellence and equity are addressed here in relation to changes in student achievement. However, the SSIs varied in both the type and amount of student achievement data collected. Heck (1998) characterized the gathering of student achievement data by the SSIs as "problematic," because the measures of achievement did not fully reflect the standards the initiative had adopted, were not given at the grade levels the initiative had targeted, did not aggregate and report scores at the level of the system the initiative primarily addressed, were given infrequently or changed too frequently to provide stable longitudinal data, or simply did not include science and/or mathematics.

A comprehensive review of the SSIs’ impact on student achievement in science limited its findings to seven states “that were likely to generate the most credible evidence that student achievement has risen” (Laguarda, 1998, p. 1).14 In spite of varying methodological and logistical problems, there was evidence of gains in achievement in science in Louisiana, Montana, Ohio, and Puerto Rico. In addition, findings in Ohio and Louisiana indicated that the reforms particularly benefited African American students. Laguarda (1998) concluded that student achievement gains were most likely to be found in states that focused their reform activities primarily at the school, classroom, and teacher level, as shown in Fig. 30–2. She stated:

Of the seven SSIs that were reviewed, the four with the most credible evidence of changes in student achievement—Louisiana, Montana, Ohio, and Puerto Rico—were those with the most intensive interventions aimed directly at classrooms… . [T]hose SSIs that invested most heavily in activities directed at state-level policy alignment (such as New Mexico and Vermont) or concentrated on building a state-level infrastructure to support change or on building local capacity to reform instruction (such as Kentucky and Vermont) … found it much more difficult to produce evidence of changes in student achievement that could be attributed directly to the SSI. (Laguarda, 1998, pp. 5, 6)

Laguarda's conclusion is supported by Zucker, Shields, Adelman, Corcoran, and Goertz's (1998) study. Although Zucker and his colleagues’ evidence is based upon findings from four states, they concurred that improved student achievement in science occurred when a state-level reform focused on the school, classroom, and teacher levels.

Clune (1998) synthesized data across nine SSI states to test his theory of systemic reform; that is, systemic reform (SR) leads to systemic policy (SP) that leads to a rigorous implemented curriculum (SC) that results in higher student achievement (SA). He posited that those components could be represented as a continuous causal sequence:

SR → SP → SC → SA

In testing his theory, he included several indicators of student achievement, such as overall achievement gains, gains in equity (closing any achievement gaps), gains in course enrollments, and achievement levels in subsequent courses. Rating the nine case-study states on two dimensions, breadth of change (number of students) and depth of change (size of change and quality of the data), Clune found that, on a five-point scale, the nine states averaged 2.3 points on both breadth and depth of student achievement gain.15 The results of his study suggest that the goal of excellence, as indicated by overall achievement gains, was not reached in the five years of NSF support.

Near the end of the decade, NSF funded RAND to conduct a study of teaching practices and student achievement in six states and urban districts that had participated in the systemic initiative program (Klein, Hamilton, McCaffrey, Stecher, Robyn, & Burroughs, 2000). The research design included: (a) a measure of instructional practices, (b) an assessment of student achievement, and (c) an analysis of any relationship between them after controlling for student demographics. There are several concerns with the design. First, although large samples of teachers at each site responded to questionnaires, there was no effort to distinguish teachers who had participated in the SSI from those who had not. Second, there was no effort to link student responses with those of the teachers.16 And, third, in order to reduce testing burden, scores from existing statewide or district-wide tests were used when available. The study included grades three through seven, and over 300 teachers and approximately 9000 students were included in the data analyses. Klein and his colleagues report, "After controlling for student background characteristics, we found a generally weak but positive relationship between the frequency with which a teacher used the reform practices and student achievement. This relationship was somewhat stronger when achievement was measured with open-ended response tests than with multiple-choice tests" (2000, p. xiv). They note that the findings may be compromised by inaccurate teacher reporting of frequency of use of various instructional practices, lack of alignment between tests and reform curricula, differences in student groups (unrelated to frequency of teacher use of reform practices), and length of time students were taught by teachers using reform practices. However, they suggest that the consistency of findings across sites, despite differences among the sites, is encouraging.

A carefully controlled, smaller study focused on student achievement in eight urban junior-high/middle schools in one SSI (Kahle, Meece, & Scantlebury, 2000). In this study, frequency of use of reform teaching practices was reported by both students and teachers, with student responses validating those of the teachers. Student and teacher data were linked, and teachers were identified as participating (or not) in the SSI's professional development. Furthermore, only one measure of achievement was used, and it was aligned with standards-based science content. However, results were reported only for African American students, because the number of European American students in the schools was too low to use in statistical analyses. Kahle and her colleagues reported that "students of teachers who participated in the SSI professional development, compared with students whose teachers had not participated, scored higher on the science achievement test. Second, students of SSI teachers rated their teachers as more frequently using standards-based teaching practices than did students in non-SSI teachers’ classes" (Kahle, Meece, & Scantlebury, 2000, p. 1034). However, participation in the SSI's professional development was not a significant predictor of student achievement; rather, the use of standards-based teaching practices was predictive.17

Huffman and Lawrenz (2003) investigated the extent to which one state's systemic initiative reformed science education. Eighth-grade science teachers were involved in an ex post facto comparative study that assessed impacts on teachers’ instructional practices, external relations, professional communities, school policies, and family involvement. They compared schools with high or low participation in the SSI, matching them on demographic characteristics, such as geographical location (urban/rural/etc.), size of school, and socioeconomic and ethnic characteristics of the students. In schools with high participation, they found that the use of standards-based instructional techniques increased and that external groups had more influence over science instruction. However, no differences were found for (1) teachers’ influence on school policy (low in both types of schools), (2) teachers’ involvement in professional communities (high in both), or (3) family involvement with the reform (low in both). From their findings, they concluded, “Perhaps comprehensive systemic reform is an unrealistic goal, but it does suggest we need to better understand systemic reform and use research to help design future reform efforts” (p. 376).

Although individual research studies identified successful components of the state systemic initiatives, Anderson, Brown, and Lopez-Ferrao (2003) synthesized official evaluation reports to analyze the effectiveness of the program. They concluded that the systemic initiative program contributed in three significant ways to the improvement of science and mathematics education. First, it led to the broad acceptance of a systemic approach in addressing education problems; second, it made improvement of mathematics and science education a priority nationally; and, third, the systemic reform efforts made serving all students a national mandate.

Equity

Although NSF officially defined equity as the "reduction in attainment difference between those traditionally underserved and their peers" (Zucker, Shields, Adelman, Corcoran, & Goertz, 1998, p. 37), states approached equity in different ways. For example, most states focused on elementary and middle school science and mathematics, because all children would benefit from improvements in instruction and curriculum—improvements that might lead to more equitable enrollments in elective courses (Kahle, 1997). In addition, many states found that leadership both in the SSI and at the state and regional levels was not representative, and some took measures to expand the pool of effective leaders. Furthermore, states addressed the lack of minority teachers of science and mathematics. Overall, states reported that 28 percent of teachers served by the SSIs’ professional development activities were nonwhite, more than double the proportion of minority science and math teachers in the workforce (Zucker, Shields, Adelman, & Powell, 1995).

However, most of the research on equity focused on reducing achievement gaps among identifiable subgroups of students. States varied in relation to the subgroups addressed. For example, racial/ethnic minorities were a focus in 21 states, females in 19, and low-income students in 4.18 The different equity emphases, in most cases, reflected population or achievement differences across the states; for example, Vermont, with few racial/ethnic minorities, did not have that focus, whereas Puerto Rico, with girls achieving higher than boys, did not focus on females. States also differed in the strategies used to improve equity, although the two most commonly identified ones were professional development and targeted funds. Professional development involved specific equity workshops (Georgia, Louisiana, and Ohio), whereas targeted funds were directed to low-performing schools (Connecticut, Michigan, New York, Louisiana, South Dakota, and Puerto Rico) (Zucker, Shields, Adelman, & Powell, 1995).

Much has been written about the “equity challenge” for the SSIs and for standards-based education in general (Boone, 1998; Kahle, 1998). For example, Massell, Kirst, and Hoppe (1997) conducted research on standards-based systemic reform in nine states. Their findings are based on in-depth interviews with policy makers and educators at state and district levels in California, Connecticut, Florida, Georgia, Kentucky, Minnesota (a non-SSI state), New Jersey, South Carolina, and Texas. They concluded, “[F]or the most part, attention to equity issues within the context of standards-based reform remained episodic and weak. With few notable exceptions [Kentucky], desegregation and school finance policies were pursued as separate, independent initiatives” (p. 9).

In 1996, the Office of Educational Research and Improvement (OERI) of the U.S. Department of Education funded 12 studies of different aspects of current education reform, including a study of the systemic reform movement (Goertz, Floden, & O'Day, 1996). The systemic reform study focused on math and reading and was conducted in three stages: first, a comprehensive review of the literature; second, intensive case studies of 12 reforming schools in six districts of three SSI states (California, Michigan, and Vermont); and, third, analyses of the findings in the context of state policies and sustainability. The study focused on all parts of the education system, analyzing capacity for change, governance structures, and teaching practices. It also included a section on equity, noting that both California and Michigan targeted some of their funds for schools and districts with large concentrations of low-performing and/or minority students. Incentives were offered to entice such schools to participate. Goertz and her colleagues reported that both districts and schools were restructured to be more responsive to diverse social, cultural, and educational needs of their students. Furthermore, they noted many examples of restructured curricula across the sites, particularly introducing curricula that embraced state and national standards and providing for bilingual education. Last, they reported that academic tracking declined.

Individual studies also addressed equity. Using hierarchical linear modeling, Kahle, Meece, and Scantlebury (2000) concluded that standards-based teaching, as advocated by Ohio's SSI and promulgated through its sustained professional development programs, improved the attitudes and achievement levels of urban African American youth. Furthermore, the gender gap in achievement between African American girls and boys (favoring girls) was narrower in SSI classes than in non-SSI classes in the same schools. Their findings are supported by Laguarda (1989), who states, “Special analyses conducted by both of these SSIs [Louisiana and Ohio] show that the gains demonstrated by students in SSI classrooms were greatest among African American students, students who received free or reduced-price lunches, and girls” (p. 3).

Fenster's (1998) study of the New Jersey SSI probed three questions: (a) Do students learn more because of the SSI? (b) Are they better equipped to apply what they learn to everyday problems? and (c) Have inequities in performance among different groups of students been reduced? Using scores on state-mandated assessments, he found that students in NJSSI schools did not learn more than their counterparts in schools without NJSSI affiliation, that the evidence for question (b) was too limited to draw conclusions, and that inequities in performance had widened between underserved school districts and the rest of the state on the required eighth-grade assessment.

Lee (2002) researched changes in racial and ethnic achievement gaps across 30 years. He assessed trends in both NAEP and SAT data for mathematics and reading. Although his study did not include science scores, other analyses suggest that his findings for NAEP mathematics are similar to those for science (Kahle, 2004). His synthesis did not separate SSI states from non-SSI states. His overall finding, that achievement gaps between Black and White students as well as between Hispanic and White students widened in the 1990s, underscores the importance of the success some systemic initiatives had in narrowing similar gaps.

Summary

The SSIs were designed to improve all parts of state educational systems. In doing so, it was assumed that the resulting changes would enhance student achievement in science and mathematics (Weiss & Webb, 2003). The extensive NSF evaluations of the program, as well as a smattering of research studies, suggest, however, that the overall goal of excellence (as defined by improved student achievement) was not reached in the five years of NSF funding. Lee's (2002) comprehensive review of achievement changes indicates that 30 years are needed for widespread improvement in achievement. Nevertheless, individual research studies and case studies of selected SSI states indicate that the reforms of the 1990s strove for excellence and achieved it, albeit in small increments at various parts of the educational system.

In summary, Heck (1989) notes that excellence and equity were both mandated by NSF's systemic reforms of science and mathematics. He writes, “Equity, as NSF has conceived it and asserted it in the SSI program, is not a concept reserved for the traditionally underserved and underachieving groups” (p. 169). Rather, the SSIs were directed toward changing the entire system so that all children were able to reach their full potential. Heck recommended that research questions address both excellence and equity at all levels of the educational system, as shown in Fig. 30–2. Clearly, in the third wave, evaluation and research concerning excellence and equity were needed to address changes in multiple parts of the system. However, the published literature primarily addresses improved student achievement, the ultimate goal of systemic reform.

LESSONS LEARNED

Systemic reform is an extremely complex process, and it is not clear whether systemic reform is even possible. (Huffman & Lawrenz, 2003, p. 358)

So, across 50 years, what has been learned about systemic reform? In each wave of reform (from Sputnik to MACOS, from A Nation at Risk to the math standards and science benchmarks, from the SSIs to No Child Left Behind), interactions between educational vision and political realities have shaped the reforms as well as the research about them. As research has documented, the vision of improved curricula in the first wave of reform was compromised by several political as well as educational realities. First, some of the new materials did not adhere to local norms concerning human behavior, evolution, and other topics; second, research concerning their effectiveness was seriously compromised by the lack of independent tests aligned with the goals of the curricula. Furthermore, although the first wave addressed two major components of the education system (texts and teaching), the politics of the day prevented articulation between those two massive efforts to improve science education, and each was weakened by that reality. In retrospect, there is a considerable body of research suggesting that the new curricula improved both student attitudes and achievement. However, there is little evidence of the effect of the Teacher Training Institutes on either classroom practice or student achievement. On the other hand, positive gains from that program were evident in changes in the science teaching profession.

It is arguable whether the second wave of reform was systemic. However, authors have identified it as a time of major educational reform, and it involved both top-down strategies and bottom-up reforms (e.g., comprehensive school reform at the local level). The research reviewed focused on state-level, or top-down, reforms during the 1980s, assessing any effects of increased courses in science as well as competency testing on student skills and achievement. Lee (2002) found that the achievement gap between subgroups of students narrowed in the 1980s, a finding based on increases in minority scores while majority scores remained flat. Generally, a review of the research suggests that course proliferation was primarily at the basic level, and that competency testing, rather than raising the bar, lowered it, pushing “students toward basic or remedial courses” (Wilson & Rossman, 1993, p. 38).

Indeed, the proliferation of science courses in the 1980s may have contributed to what was, in effect, tracking in science, the elimination of which was one of the goals of the NSF's systemic initiative program. The third wave of large-scale reform, discussed here in relation to the statewide systemic initiatives, had a theoretical underpinning, was driven by an educational vision that excellence for all leads to equity, and was supported politically at the national and state levels. Although much can be learned from multiple and broad-scale evaluations of the SSIs, research concerning the third wave is somewhat elusive. The published literature is mixed concerning achievement of the dual goals of excellence and equity. There are several reasons for the lack of research concerning the third wave: first, there is the issue of time (the lag between collecting data and publishing results); second, there was more emphasis (at the state level) on doing the reforms than on studying their effectiveness; and, third, the driving vision of systemic reform as articulated through the SSIs was changed dramatically by the politics of the new century, and science education researchers may have shifted their focus before definitive studies were completed.

Across the three waves of reform, two basic lessons have been learned: first, large-scale reform of science education takes time; and, second, systemic reforms must include both top-down and bottom-up approaches. Research supports those lessons. McLaughlin (1990) reviewed a RAND study of 293 local projects in 18 states from 1973 to 1978. All of the projects involved significant federal funds. McLaughlin drew the following conclusions:

- Adoption of a project consistent with federal goals does not ensure successful implementation.

- Successful implementation does not predict long-run continuation.

- Success depends primarily on local factors, not federal guidelines or funding levels.

- Local factors enhancing or detracting from success include commitment of district leadership, the project's scope, and the project's implementation strategy.

Likewise, a summary of research concerning systemic reform provides insights into prerequisites for success as well as for sustainability of systemic reform within and across schools (Carpenter et al., 2004, February). The authors identify the following factors:

- Learning communities through which teachers can articulate and examine new ideas about mathematics, science, student learning, and instructional practice.

- Professional development that enables and supports teachers’ inquiry into subject matter, student learning, and teaching practice.

- Infusions of material and human resources from outside the school or the educational system, which are necessary to initiate and sustain reform.

Synergy across various levels of the educational system, indeed, leads to sustained change. This synergy is articulated in the findings of the OERI review of research on systemic reform (Goertz, Floden, & O'Day, 1996). Those findings are summarized in Fig. 30–3. Systemic or large-scale reform across the last half-century has attempted changes at both local and state levels. Success (in terms of student outcomes) has come when reform has been guided by vision and supported by the politics of the day. Research across the past 50 years has sought to understand systemic reform, to develop models to guide it, and to generate findings to inform it. However, both the type of research and the type of questions asked have been affected by the politics of the day.19

EPILOGUE

One reviewer of this chapter suggested that I include insights that I gained about systemic reform as a principal investigator of one of the initial Statewide Systemic Initiatives, one that continues with state funding. Although I studiously avoided personal thoughts in the chapter, the longer I thought about her suggestion, the more I liked it, for too few researchers are actively involved in systemic reform, and the field suffers from their absence. So, if my personal reflections can entice other scholars into the fray, then I will modestly add them.

- Coherence among state policies and a guiding vision is needed.

- Communication about the reform must reach parents, students, and teachers as well as policy makers.

- Reform takes time: time to learn new content and new skills, time to change instruction, time to enhance learning.

- Goals of the reform may have to strike a balance between current and desired practice.

- Deliberate, consistent, and pervasive strategies are needed to infuse equity into the reforms.

- Capacity building must include individual as well as organizational and systemic needs.

- Consistency, alignment, and coherence are needed across and throughout the system.

- Teachers and administrators need to be involved at multiple levels, not only at the school/classroom level.

- Capacity-building strategies must pay attention to diversity at all levels of the system.

- Outside stakeholders and supporters must be involved if the reform is to be sustained.

Note. From Systemic Reform. [Volume I: Findings and conclusions.] Studies of education reform, by M. E. Goertz, R. E. Floden, and J. A. O'Day, 1996, (ERIC Document Reproduction Service No. ED397553). East Lansing, MI: National Center for Research on Teacher Learning.

FIGURE 30–3. Findings of OERI review on systemic reform.