14

Multimode Multiband Terminal Design Challenges

Jean-Marc Lemenager, Luigi Di Capua, Victor Wilkerson, Mikaël Guenais, Thierry Meslet, and Laurent Noël

The mobile phone industry has experienced unprecedented growth since it was first launched over 20 years ago using 2G GSM, GPRS, and EDGE (EGPRS) technologies. Combined with 3G standards, such as WCDMA/HSPA, and more recently, 4G/LTE (FDD and TDD), mobile phone shipments have continuously beaten records, tripling volumes from 533 million devices in 2003 to more than 1.8 billion devices in 2013. Looking ahead to 2017, shipments of mobile phones are expected to reach 2.1 billion units worldwide [1]. Put into perspective, this means that nearly 70 devices will be shipped every second! Beyond these impressive production volumes, the introduction of smartphones in 2007, unleashed by Apple's original iPhone, is the other major factor that has completely reshaped the definition of mobile terminals. A high-end non-touch-screen 2009 device would today be qualified as a “feature phone” or “voice-centric phone.” The year 2013 was a key turning point in the history of this industry, as shipments of “data-centric / do-it-all” smartphones exceeded the volumes for these feature phones. Based on current trends, what are now collectively called “smartphones” are likely to transition to a market segmented into three retail price ranges: low end/cost (<$100), mid-end (<$300), and high-end super-phones (>$500). Given this expectation, it will become difficult to make a distinction between these segments, based on a common classification as “smartphones.” This is not unlike the trend seen in the world of PCs. In the high-end segment, the differentiator is likely to translate into a race to be the first to deliver new hardware (HW) or telecom features, such as being the first device to support LTE-Advanced. In the lower-end segment, retail prices will be the primary differentiator.

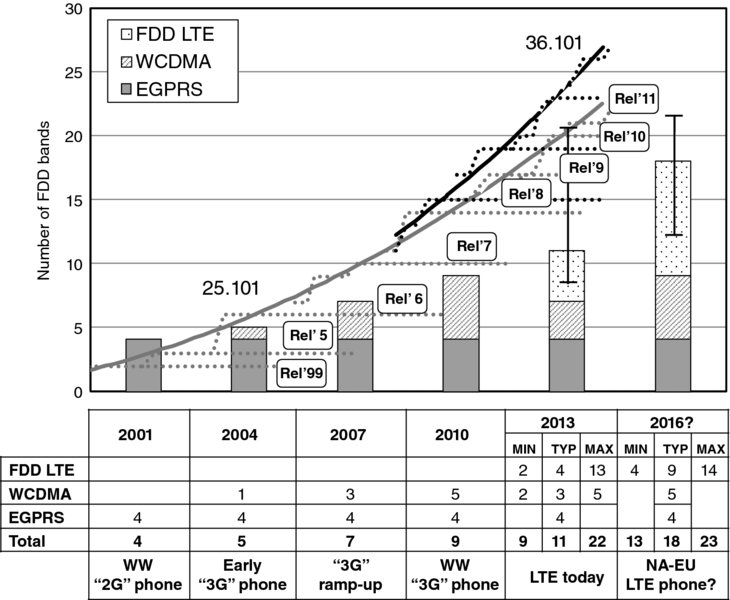

The other key factor which has influenced the situation is the quick pace at which 3GPP has been delivering new telecom features and air interfaces, moving from the well-established mono-mode EGPRS terminal, then to dual-mode EGPRS-WCDMA, and more recently to the triple-mode EGPRS/WCDMA/LTE user equipment (UE). Some of the most recent smartphones also have to support a fourth air interface: TD-LTE. Commensurate with support for this evolution comes the necessity to support more frequency bands. The rate at which bands have been introduced by 3GPP is illustrated in Figure 14.1, which plots the number of bands vs. the initial introductory years for LTE-FDD (36.101) and WCDMA/HSPA (25.101). With the introduction of LTE, the number of bands standardized at 3GPP as of October 2013 is 29 FDD bands and 12 TDD bands (TDD bands are not shown in Figure 14.1). This trend is not expected to abate as the UARFCN formulae are modified to accommodate up to 128 bands in future releases [2]. The table inserted in Figure 14.1 shows that the number of bands supported in mobile phones has followed a similar trend. From 2003 to 2006–2007, dual-mode terminals only needed to support a small number of bands: band I for most networks, plus bands II and V in North America. Today, worldwide roaming is achieved in dual-mode terminals by supporting a total of nine bands: four EGPRS bands (850, 900, 1800, 1900) and five WCDMA bands (I, II, IV, V, VIII).

Figure 14.1 Number of frequency bands for WCDMA (TS 25.101 – UTRA) and LTE (TS 36.101 – E-UTRA) air interfaces vs. typical commercial UE band support per air interface (bar graph)

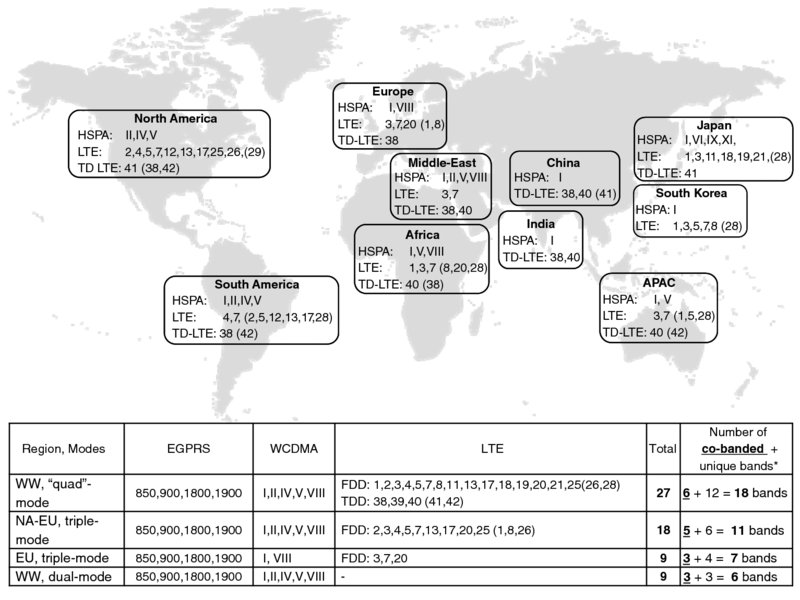

Figure 14.2 is a depiction of the most common bands deployed worldwide, and helps to better illustrate the complexity of multiple band support for multimode terminals. The map is complemented with a short table listing the band support requirements for three hypothetical geographical regions: worldwide (WW), North America – Europe combined (NA-EU), and EU only triple-mode terminals. Global WW dual-mode requirements are provided as a baseline for comparison purposes. These examples highlight the impact of adding LTE support: while 9 bands is all that is required to cover the world in a dual-mode terminal, the triple-mode UE needs to support 24 bands (20 FDD, 4 EGPRS); and this becomes 27 if TD-LTE is to be supported. With 9 bands, the triple-mode terminal can only cover the EU market. Remarkably, the number of bands required to provide just NA-EU coverage in a triple-mode terminal¹ is double that of a dual-mode WW phone: 18 bands vs. 9 bands for the dual-mode handset. Of course, this situation is somewhat offset by the fact that doubling the number of bands does not necessarily translate into doubling the number of power amplifiers and/or RF filters, thanks to the concept of co-banding². However, even with co-banding, the explosion in the number of bands supported imposes some new constraints. Today, the triple-mode WW “flagship” terminal must be realized using several variants, each covering a particular region of the world/ecosystem. Even dual-mode terminals are frequently produced using several variants to deliver the best roaming/cost compromise. However, the need to support a large number of bands is not, in itself, the most critical problem. Not long ago, the same situation existed for the dual-mode phone, where supporting five WCDMA bands seemed difficult to achieve at minimal cost increase. Rather, the primary challenge introduced by the existing ecosystems is that each has telecom operator-specific and/or region-specific band combinations which do not overlap. 
This in turn translates into having to develop several variants of a mobile phone. The problem is not expected to improve in the longer term as carrier aggregation introduces additional operator-specific frequency band combinations.

Figure 14.2 Main commercial frequency bands and band requirements for multimode terminals. Future LTE commercial bands are shown in brackets. The number of bands that can be shared amongst RATs, or “co-banded,” is underlined

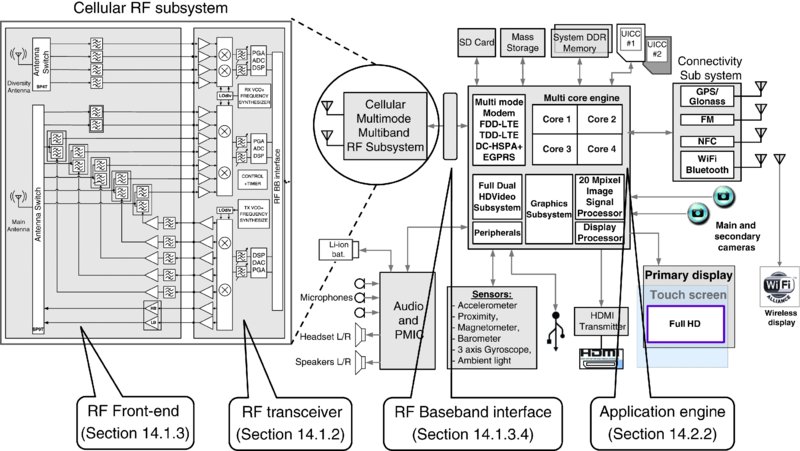

In this competitive and changing environment, the dynamics of the mobile phone industry translate into seemingly conflicting cost/feature/performance requirements – can the next generation platform deliver more hardware features (e.g., more bands, modes, peripherals, etc.) at the same cost, or even at a lower cost, than the previous generation, and yet offer an improvement in performance? This chapter aims to illustrate the innovations of the past 10 years, and to use these as a basis to describe the future opportunities to address these ever-increasing challenges. A discussion on the tradeoffs between cost and performance resulting from these requirements is presented using two sets of metrics: component count and PCB footprint area for cost constraints, and power consumption for performance needs. This chapter invites readers to take a journey to the center of a multimode multiband terminal through the selected subsystems of Figure 14.3. Each subsection provides a focused view of that particular area or function. Section 14.1 covers design tradeoffs within the constraints of cost reduction. Section 14.2 presents techniques and challenges experienced in delivering the optimum power consumption with a focus on two key contributors: the application engine and the cellular power amplifier. Many other key aspects encountered in modern terminal designs deserve attention, but cannot be covered within the scope of this short overview.

Figure 14.3 Generic multimode multiband terminal top level block diagram

This chapter aims to show that, in this highly competitive and complex ecosystem, the key to winning designs is to deliver easily reconfigurable, highly integrated hardware solutions. In this respect, LTE is included in most subsections, since it becomes less and less cost-effective to deliver dual-mode-only chipsets.

14.1 Cost Reduction in Multimode Multiband Terminals

14.1.1 Evolution of Silicon Area and Component Count

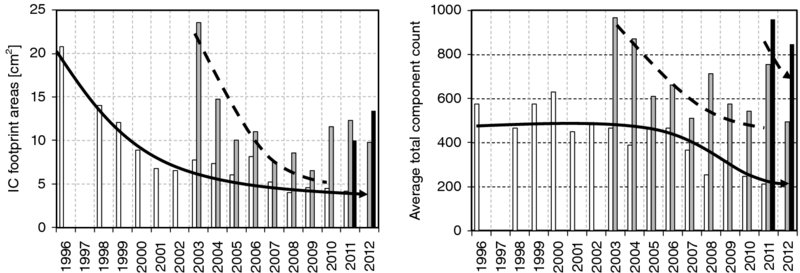

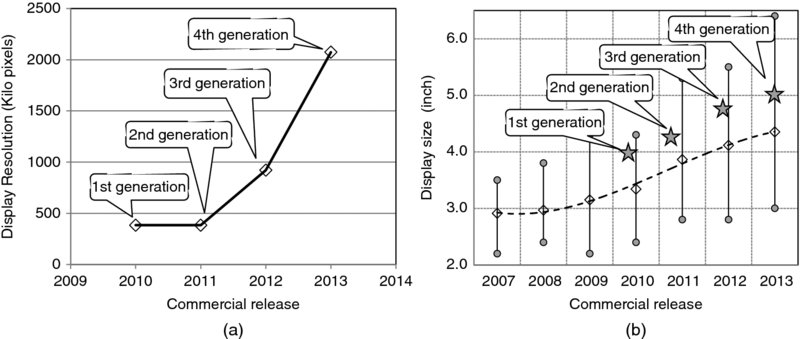

The trend in mobile phone hardware cost metrics is illustrated in Figure 14.4, by plotting component footprint area and total component count vs. year of introduction. The graph is generated with data extracted from the teardown reports of 69 mono-mode, 48 dual-mode, and 7 triple-mode handsets [3]. While the total number of components in EGPRS has remained virtually constant from 1996 to 2005, the required area has been reduced considerably over that time – from 14 cm² (1998) to reach a minimum at approximately 5 cm² (2006–present). This represents a reduction of about 65%. The maturity plateau has been reached thanks to highly integrated single chip solutions in which four key components are integrated into a single die: RF transceiver, baseband modem, power management unit, and multimedia processor. It took dual-mode handsets only four to five years to reach the level of complexity that took nearly eight years to reach in the GSM realm. Due to the low number of teardown reports available at the time of printing, it is difficult to make an accurate assessment of the trend in triple-mode devices. The metrics tend to show that the introduction of LTE has not significantly increased the cost of a dual-mode smartphone. The step occurring around 2009–2010 is primarily due to the shift in complexity between the voice-centric “feature” phones and feature-rich smartphones. The trend shows that these remarkable achievements were accomplished by higher levels of component integration and miniaturization. These improvements must also be offset against the simultaneous increase in mobile phone features. In 1998, handsets used monochrome LCD screens and lacked any significant multimedia capabilities. This is in stark contrast to the latest “super-phone” devices, some supporting full HD screens, which will soon be able to decode 4K video resolution, a feature that is barely supported by cable TV decoders. 
Section 14.1.2 zooms in on the RF subsystem and shows how fast the 2013 LTE solutions managed to reach the same level of complexity as the most optimized dual-mode platforms.

Figure 14.4 Mobile phone stacked IC footprint area³ and total component count vs. year vs. supported modes. White: mono-mode EGPRS feature phones, Gray: dual-mode EGPRS-WCDMA (2003–2009 “feature” phones, 2010–2012 “smartphones”), Black: triple-mode EGPRS, WCDMA, LTE “smartphones”

14.1.2 Transceiver Architecture Evolutions

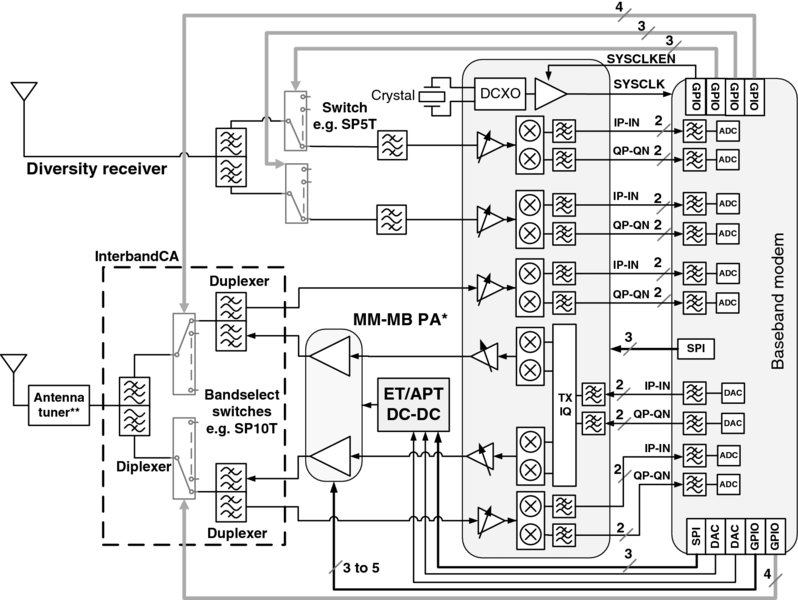

WCDMA has been in production for a little more than 10 years, and over this decade the RF subsystem has undergone tremendous changes. Through the selection of various RF transceiver architectures, as presented in Figure 14.5, this section aims to illustrate the efforts made by the industry to reduce costs, while also answering the demand for more modes and more bands. What a change between the early mono-band (band I) WCDMA UE (solutions (a, b, c) in Figure 14.5) and the recent triple-mode LTE-HSPA-EGPRS phone. This section traces the design evolution that reduced PCB area by a factor of 4 and component count by a factor of 1.6, all while supporting nearly 3 times more frequency bands and one extra mode: LTE.

Figure 14.5 10 years of RF subsystem PCB evolutions⁴. The scaling of the PCB pictures is adjusted to allow relative size comparison. (a) WCDMA super-heterodyne RX IC, (b) WCDMA super-heterodyne TX IC, (c) EGPRS transceiver IC, (d) WCDMA DCA transceiver, (e) EGPRS transceiver, (f) Single-chip dual-mode EGPRS and WCDMA transceiver, (g) Single-chip dual-mode with RX diversity EGPRS and WCDMA transceiver, (h and i) single-chip triple-mode with RX diversity EGPRS, WCDMA, LTE FDD (and GPS RX)

In the early years of deployment, WCDMA received criticism for lack of devices and poor battery life. Under this pressure, the challenge for first-generation transceiver (TRX) designs consisted of delivering low risk, yet quick time-to-market, solutions, sometimes at the expense of cost (high BOM) and power consumption. The choice of architecture was frequently influenced by the two- to three-year-long development cycle required for a cellular chip set. And, once a platform is released, OEMs need at least an extra five to eight months to get a UE ready for mass production. To minimize risk, some of these early generations selected the super-heterodyne architecture, requiring three RF ICs (Figure 14.5a, b, c): one WCDMA receiver and transmitter IC, and a companion EGPRS transceiver (TRX). With two Intermediate Frequency (IF) Surface Acoustic Wave (SAW) filters and two RF filters required per chain and per band, an associated complex local oscillator (LO) frequency plan, a high power consumption, and an intrinsically-high component count, super-heterodynes were not suited to meet the longer-term multiband and multimode requirements of modern handsets. The resulting priority, then, was to focus R&D efforts on reducing component count by delivering single chip TRX solutions.

Direct Conversion Architecture (DCA) is an ideal solution to achieve this goal because the received carrier is converted, in a single RF mixing conversion process, to baseband in-phase/quadrature (IQ) signals. The same principle, in reverse, applies to the transmit chain, a concept sometimes referred to as Zero-IF. For a long time, the DCA was not put into production due to problems with DC-offsets/self-mixing, sensitivity to IQ mismatches, and flicker noise. The use of fully-differential architectures, along with adequate manufacturing processes and design models, made IC production using these principles a reality around 1995 for EGPRS terminals. Numerous advantages make this technology essential in dual-mode handsets: substantial cost and PCB footprint area are saved by removing the heterodyne IF filters. An example of this is presented in Figure 14.5d and e, where early WCDMA single-chip DCA solutions (2006–2007) reduced PCB area by a factor of 4 and used 2.5 times fewer components than the super-heterodyne solution (Figure 14.5a, b, c). Moreover, with its single PLL, the DCA not only contributes to reducing power consumption, but also provides a flexible solution to the design of multiple band RF subsystems. Additionally, this architectural approach is future-proof, since multimode operation consists of simply reconfiguring the cut-off frequency of the I/Q low pass channel filters.
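The single-step conversion to baseband IQ can be sketched numerically. The following is a minimal illustration only, not a model of any transceiver in Figure 14.5: the function `direct_convert`, the sample rate, the carrier frequency, and the moving-average low-pass filter are all assumptions chosen to keep the example short.

```python
import cmath
import math


def direct_convert(rf_samples, f_lo_hz, fs_hz, avg_len):
    """Mix a real RF sequence with a complex LO, then low-pass filter it.

    Returns complex baseband samples (I + jQ).
    """
    # Single mixing step: multiply by exp(-j*2*pi*f_lo*t). The factor 2
    # restores the amplitude that is halved when one sideband is kept.
    mixed = [
        2.0 * x * cmath.exp(-2j * math.pi * f_lo_hz * n / fs_hz)
        for n, x in enumerate(rf_samples)
    ]
    # Moving-average low-pass: suppresses the image at twice the LO frequency.
    return [
        sum(mixed[n - avg_len + 1 : n + 1]) / avg_len
        for n in range(avg_len - 1, len(mixed))
    ]


# Hypothetical test signal: one constant symbol I + jQ on a carrier.
FS_HZ = 16e6
F_CARRIER_HZ = 2e6
I_SYM, Q_SYM = 0.6, -0.8
rf = [
    I_SYM * math.cos(2 * math.pi * F_CARRIER_HZ * n / FS_HZ)
    - Q_SYM * math.sin(2 * math.pi * F_CARRIER_HZ * n / FS_HZ)
    for n in range(64)
]

# LO tuned exactly to the carrier: the output is the baseband symbol.
baseband = direct_convert(rf, F_CARRIER_HZ, FS_HZ, avg_len=4)
print(baseband[0])
```

With the LO set to the carrier frequency, the averaged output is approximately I + jQ, because the four-sample average spans exactly one period of the unwanted 4 MHz term. In this idealized model, any DC offset added to `mixed` would pass straight through the low-pass filter, which is one way to see why DC-offsets are a concern in this architecture.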

As WCDMA technology started gaining commercial momentum, staying in the lead meant racing to deliver solutions that supported multiple WCDMA bands. Despite its numerous advantages, multiband support was not cost-efficient for early DCA commercial implementations because it required two external inter-stage RF SAW filters per band: one filter between the LNA and mixer in receive, and another between the TX modulator output and the power amplifier (PA) input in transmit.

In the receiver chain, filtering is required to reject the transmitter carrier leakage when the UE is operated near its maximum output power. In this case, it is not unusual for PAs to operate at 26–27 dBm output power to compensate for front-end insertion losses. The requirement to support more bands, and in the case of LTE the need to support more complex carrier aggregation scenarios, results in increasing RF front-end insertion losses. This, in turn, means that the PA maximum output power requirements may exceed 27 dBm in certain platforms. Because duplexer isolation is finite, TX carrier leakage can reach the LNA input port with a power as high as −25 dBm. In the presence of this “jammer,” the mixer generates enough baseband products through second order distortion⁵ to degrade sensitivity, thereby partially contributing to a phenomenon known as UE self-desensitization, or “desense” [4]. In this case, and depending on system dimensioning assumptions, RX SAW-less operation sets a stringent mixer IIP2 requirement on the order of 48 to 53 dBm (LNA input-referred) [4].

In the transmitter chain, further UE self-desense occurs due to TRX and PA noise emissions generated at the duplex distance. Depending on duplexer isolation, SAW-less operation sets TX noise requirements in the range of −160 dBc/Hz [4]. Meeting this requirement in bands with short duplex distances, such as band 13 or 17, constitutes a serious challenge to both TRX and duplexer designers, as performance may become dominated by PA ACLR emissions rather than by the far-out-of-band noise level. The high Q factor required of the duplexer often pushes designers to trade isolation against insertion loss. In these bands, 3GPP has introduced relaxations to ease these design constraints [5].
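The link between TX noise, duplexer isolation, and desense can be illustrated with a simple noise-power summation. This is a sketch under stated assumptions, not the dimensioning method of [4]: the function names are hypothetical, the isolation values are illustrative, the PA's own noise contribution is ignored, and the 3 dB noise figure is the LNA input-referred value assumed in the caption of Figure 14.6.

```python
import math

THERMAL_NOISE_DBM_HZ = -174.0  # thermal noise density near room temperature


def tx_noise_at_lna_dbm_hz(pa_out_dbm, tx_noise_dbc_hz, isolation_db):
    """TX noise density reaching the LNA input, in dBm/Hz.

    Assumes the relative noise level is unchanged through the PA.
    """
    return pa_out_dbm + tx_noise_dbc_hz - isolation_db


def desense_db(interferer_dbm_hz, noise_figure_db):
    """Rise of the receiver noise floor caused by the added noise, in dB."""
    floor_dbm_hz = THERMAL_NOISE_DBM_HZ + noise_figure_db
    ratio = 10.0 ** ((interferer_dbm_hz - floor_dbm_hz) / 10.0)
    return 10.0 * math.log10(1.0 + ratio)


# Hypothetical duplexer TX-to-RX isolation values at the RX frequency.
for isolation_db in (40.0, 45.0, 50.0):
    noise = tx_noise_at_lna_dbm_hz(27.0, -160.0, isolation_db)
    print(isolation_db, round(desense_db(noise, 3.0), 2))
```

Under these assumptions, a transmitter at −160 dBc/Hz driving 27 dBm keeps the desense below 1 dB for the 45 dB and 50 dB isolation cases, while the 40 dB case exceeds it. This shows how strongly the TX noise requirement depends on the duplexer.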

As the number of bands to be supported was increasing rapidly, the race towards continuous cost reduction called for novel TRX architectures that would enable both TX and RX SAW-less operation. For example, removing the interstage filters in a penta-band RF subsystem may save the cost of a PA and the area of almost an entire RF transceiver⁶. SAW-less operation also means that RF transmitter ports can be shared between several RF bands. For example, a single low-band TX port could be used to support all UHF bands, such as LTE bands 8, 13, 17, and 20. Similarly, a high-band port could be shared to support either WCDMA or LTE transmissions in bands 1, 2, 3, 4. The SAW-less TX architecture is therefore the sine qua non to enable replacing discrete single-band PAs with a single multiband, multimode (MMMB) PA. The cost savings and design tradeoffs associated with the use of MMMB PAs are presented in Section 14.1.3.2 using a North American variant. Initial RX SAW-less commercial solutions appeared around 2008 (Figure 14.5f). The techniques developed to meet the high IIP2 requirements include careful design of fully differential structures, use of passive mixers or trap filters to reject TX leakage, and the integration of IIP2 calibration circuitry.

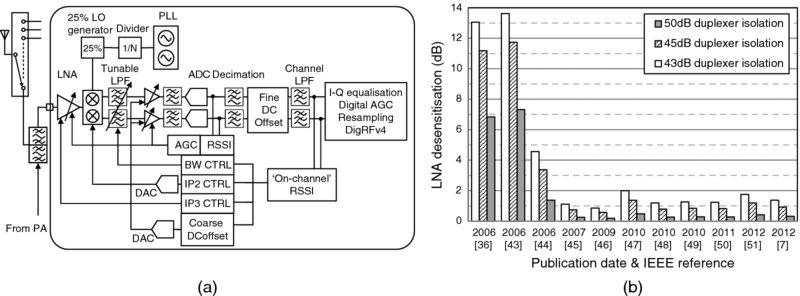

In contrast, TX SAW-less operation is more challenging and took a longer time to emerge since every block of the transmitter chain impacts the overall performance. The efforts required to meet the stringent −160 dBc/Hz requirement are illustrated in Figure 14.6b. This graph plots UE self-desense in a SAW-less application using the reported band I TRX noise performance⁷. With a desense of less than a decibel under typical duplexer isolation, TX SAW removal started to become a reality around 2007–2008, and the first handsets using this technique made their appearance a couple of years later (Figure 14.5g). Note that bands with low duplex gap and distance, such as bands 13 and 17, may still require inter-stage filtering for certain vendors (Figure 14.5i). The primary principle for achieving low noise consists in minimizing the number of cascaded gain stages at RF. One example [6] proposes to carefully balance the 74 dB gain control range between digital, analog IQ, and a bank of parallel RF mixers. In [7] a single pre-amplifier RF stage made up of multiple parallel amplifiers delivers the equivalent of an RF power DAC. Each solution provides a mixture of pros and cons. For example, controlling carrier leakage in an architecture based on a bank of mixers can be a challenge at minimum output power, due to mixer mismatches. The mixers must deliver approximately −65 dBm⁸ output power with 15 to 20 dB LO rejection, so keeping LO leakage below −85 dBm while transmitting around 0 dBm sets a non-trivial requirement on the LO-to-RF port isolation when dealing with multiple mixers. The key problem is to implement an architecture that not only meets low noise requirements at high transmit powers, but also meets the high WCDMA dynamic range requirements with better than 0.5 dB gain step accuracy. The step size accuracy challenge can partially be solved using a high dynamic range IQ DAC. But for many years, these were either not available, or they consumed too much power.

Figure 14.6 (a): Modern RF CMOS DCA receiver. Adapted from Xie et al. [8], (b): Evolution of LNA desensitization due to TX noise in a hypothetical TX SAW-less application (IEEE survey). LNA input-referred noise figure of transceiver is assumed to be 3 dB

Despite achieving an impressive level of integration, the BiCMOS solution in Figure 14.5f did not support RX diversity, a feature which was not absolutely necessary for early Cat-6 HSDPA terminals, but which quickly became mandatory for LTE operation. A series of factors pushed this technology from the forefront, in favor of CMOS. With digital BB production volumes moving rapidly from 130 nm to 28 nm, the lower cost of manufacturing made this technology process very attractive. Beyond the intrinsic cost advantage, using CMOS for the RF-IC implementation enabled novel architectures to deliver an unprecedented level of performance and power consumption. With low operating supply voltages, CMOS significantly lowers the power consumption of functions that were implemented in an analog fashion in BiCMOS designs. High dynamic range (HDR) ADCs [5] and DACs are key blocks which allow for a reduction in complexity for the analog low pass filters. For example, due to limited ADC resolution, the receiver in Figure 14.5f introduced rather complex auto-calibrated analog I/Q group delay equalizers to flatten the channel filter group delay distortions [9]. With high dynamic range ADCs, the role of the analog filter can be changed from a channel filtering to a simple anti-aliasing function. Not only is the filter complexity reduced as the filter order is relaxed, but also EVM performance is improved since the level of in-band distortion is kept to a minimum. Moreover, with most of the channel filtering implemented in digital filters, the difficulties in supporting multimode operation are eased, since reconfigurability then becomes a matter of reprogramming an FIR or an IIR filter. The emergence of a standardized digital baseband interface such as DigRF℠ v4 (Section 14.1.3.4) has further facilitated the transition towards a more digital-oriented CMOS transceiver design. 
With microcontrollers now available at lower cost impact, traditional digital BB functions can be moved into the RF transceiver. An illustration of a modern DCA receiver is shown in Figure 14.6a, where the use of a Digital Signal Processor (DSP) allows automatic calibration of LNA IIP3 and mixer IIP2, digital DC offset compensation, and I/Q image rejection correction to deliver a level of performance that would have been nearly impossible to achieve in analog BiCMOS designs. Comparing receiver [8] with solution (f) in Figure 14.5, 70 dBm IIP2 (vs. 58 dBm) provides ample margin for SAW-less operation and, with an EVM performance below 3% (vs. 6%), near zero impact on LTE/HSPA+ demodulation performance is expected. All of this is also achieved at half the power consumption (43 mW vs. 92 mW). In Figure 14.5, transceivers (g), (h), and (i) are all designed in CMOS technology. Finally, designing in CMOS paves the way for a low-cost, highly integrated single-chip solution in which the RF TRX, the digital BB, and power management ICs may be manufactured in a single die. Initially developed for EGPRS low-cost terminals, monolithic solutions are now in production for HSPA and have recently been announced for triple-mode LTE platforms [10,11]. With such a high level of integration, only a handful of ICs are required to build the platform.
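The remark above that multimode reconfiguration reduces to reprogramming a digital filter can be sketched as follows. This is a minimal illustration, not the filter of any cited receiver: the windowed-sinc design, the tap count, the assumed 61.44 MHz sample rate, and the per-mode cut-off values in `MODE_CUTOFF_HZ` are all assumptions made for the example.

```python
import math

# Illustrative one-sided channel-filter cut-offs per mode (assumed values).
MODE_CUTOFF_HZ = {"WCDMA": 1.92e6, "LTE10": 4.5e6, "LTE20": 9.0e6}
FS_HZ = 61.44e6   # assumed ADC output sample rate
NUM_TAPS = 129    # assumed FIR length (odd, so the filter is symmetric)


def design_lowpass(cutoff_hz, fs_hz, num_taps):
    """Hamming-windowed sinc low-pass FIR, normalized to unity DC gain."""
    mid = (num_taps - 1) / 2.0
    fc = cutoff_hz / fs_hz  # normalized cut-off in cycles per sample
    taps = []
    for n in range(num_taps):
        k = n - mid
        if k == 0:
            ideal = 2.0 * fc
        else:
            ideal = math.sin(2.0 * math.pi * fc * k) / (math.pi * k)
        window = 0.54 - 0.46 * math.cos(2.0 * math.pi * n / (num_taps - 1))
        taps.append(ideal * window)
    dc_gain = sum(taps)
    return [t / dc_gain for t in taps]


def gain_db(taps, freq_hz, fs_hz):
    """Magnitude response of the FIR at a single frequency, in dB."""
    w = 2.0 * math.pi * freq_hz / fs_hz
    re = sum(t * math.cos(w * n) for n, t in enumerate(taps))
    im = sum(t * math.sin(w * n) for n, t in enumerate(taps))
    return 20.0 * math.log10(math.hypot(re, im))


# "Reconfiguring" the receiver amounts to loading another coefficient set.
filters = {
    mode: design_lowpass(fc, FS_HZ, NUM_TAPS)
    for mode, fc in MODE_CUTOFF_HZ.items()
}
for mode in filters:
    print(mode, round(gain_db(filters[mode], 5e6, FS_HZ), 1))
```

The same hardware structure serves every mode; only the coefficient table changes. A tone at 5 MHz, for instance, is passed almost unattenuated by the LTE20 coefficients and strongly rejected by the WCDMA ones.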

With the removal-of-SAW-filters challenge solved for the majority of commercial chip sets and frequency bands, what are the new challenges the industry faces today?

In single-chip devices (also sometimes denoted as System-On-Chip, or “SoC”), the collocation of multiple noisy circuits next to the sensitive RF TX/RX chains creates numerous co-existence challenges. Solving this myriad of co-existence issues is a fertile field of innovation, and is briefly introduced in Section 14.1.3.5.

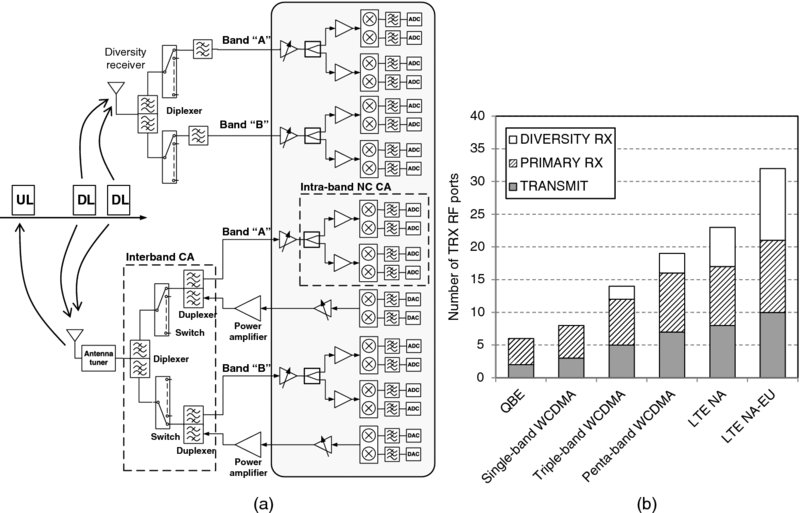

Another interesting challenge lies in TRX IC area reduction. With the large number of bands required for a WW LTE solution, the number of LNAs to be integrated increases. The problem is exacerbated by the requirement for RX diversity in each band of operation. The resulting increase in the number of RF ports is shown in Figure 14.7b. For example, a NA-EU triple-mode LTE, HSPA+, EGPRS variant may require up to 32 RF ports⁹. This is a 50% increase compared to a typical dual-mode WW phone. Unfortunately, RF blocks, such as LNAs and RF PGAs, do not typically shrink with decreasing CMOS nodes. As Figure 14.5 shows, the area occupied by TRX packages has shrunk over the years, reaching, in early 2013, the ultimate Wafer Level Packages (WLP), in which the package is basically the size of the active die (25 mm²). If pin count continues to grow, the modern RF CMOS transceiver package size may be dominated by pin count. In solutions using differential LNAs, the RF ports of the NA-EU variant would require a total of 54 RF pins. With such a high number of ports, ensuring acceptable pin-to-pin isolation is also further complicated. Lastly, PCB routing becomes more complex because high pin density packages often require additional PCB layers, which further raises the total manufacturing cost.
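The relation between port count and pin count quoted above can be checked with a short calculation. The sketch below assumes that only the receive (LNA) ports are differential and that each transmit port uses a single pin. This split is an assumption not stated in the text, and the helper names are hypothetical.

```python
def rf_pin_count(rx_ports, tx_ports, differential_rx=True):
    """RF pins needed when each differential RX port uses two pins."""
    rx_pins = rx_ports * (2 if differential_rx else 1)
    return rx_pins + tx_ports


def split_ports(total_ports, total_pins):
    """Infer (rx_ports, tx_ports) from the port and pin totals.

    Solves rx + tx = total_ports together with 2*rx + tx = total_pins.
    """
    rx_ports = total_pins - total_ports
    return rx_ports, total_ports - rx_ports


# Totals quoted for the NA-EU triple-mode variant: 32 ports, 54 pins.
rx, tx = split_ports(32, 54)
print(rx, tx, rf_pin_count(rx, tx))  # prints: 22 10 54
```

Under this assumption the quoted totals are consistent with 22 receive ports and 10 transmit ports. Each additional band with RX diversity then adds four pins on the receive side alone, which illustrates why package size can become pin-limited.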

Figure 14.7 (a): Example RF front end complexity in supporting triple-mode and multiple downlink only carrier aggregation scenarios: contiguous and non-contiguous, intra and inter band. Only a pair of bands is shown. (b): RF transceiver number of RF ports required per application

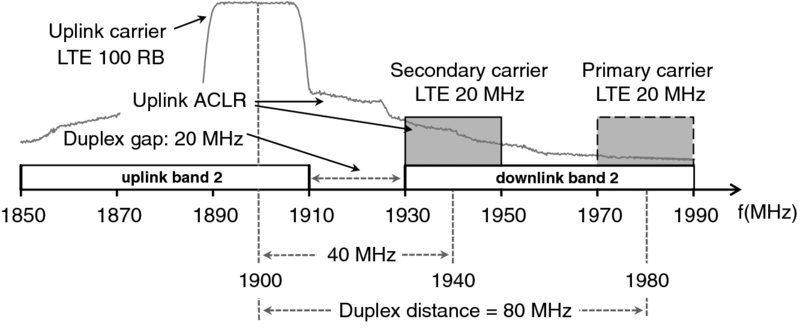

Finally, the introduction of Carrier Aggregation (CA) adds complexity to the entire RF subsystem as illustrated in Figure 14.7a. There are three categories of CA scenarios from 3GPP: inter-band, intra-band contiguous (IB-C-CA), and intra-band non-contiguous (IB-NC-CA). The immediate impact on TRX design is the need to support greater modulation bandwidth. The initial commercial deployments started in August 2013 with 10+10 MHz. The next phase, 20+20 MHz, will start during 2014, thereby requiring UE receivers to support a total bandwidth of 40 MHz. There are numerous interesting challenges associated with CA, but a detailed description would go far beyond the scope of this introduction. While most challenges impact the RF-FE linearity, filter rejection, and topology, the special case of IB-NC-CA is problematic for the design of a single-chip TRX solution. In this CA category, the wanted downlink carriers may be located at, or near, the edges of a given band as depicted in Figure 14.8. This situation creates at least two types of new challenges.

Figure 14.8 Example of worst case uplink–downlink carrier frequency spacing in the case of band 2 intra-band CA

The first problem results from the fact that, while the primary carrier is located at the duplex distance, the secondary carrier could be located at a distance slightly greater than the duplex gap (‘DG’ – cf. Figure 14.8). In bands with a large DG, such as band 4, this is not an issue. But, for example, in the case of band 2, where the DG is only 20 MHz, the secondary carrier can be desensitized by the PA ACLR emissions. Thus, even if inter-stage SAW filtering is applied, for certain combinations of uplink and downlink resource block allocations, the secondary downlink carrier sensitivity performance is degraded. This case is similar in nature to the desensitization occurring in bands 13 and 17, where only a 3GPP performance relaxation can solve the issue. At the time of writing, these relaxations are being debated.
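The duplex distance and duplex gap discussed above follow directly from the band edges. The sketch below uses the uplink and downlink edges of bands 2, 4, 13, and 17 as defined in TS 36.101. The function names and the choice of a 10 MHz carrier for the worst-case spacing are assumptions made for illustration.

```python
# E-UTRA FDD band edges in MHz: (UL low, UL high, DL low, DL high).
BANDS_MHZ = {
    2: (1850.0, 1910.0, 1930.0, 1990.0),
    4: (1710.0, 1755.0, 2110.0, 2155.0),
    13: (777.0, 787.0, 746.0, 756.0),   # reverse duplex: UL above DL
    17: (704.0, 716.0, 734.0, 746.0),
}


def duplex_distance_mhz(band):
    """Default spacing between paired UL and DL carrier frequencies."""
    ul_low, _, dl_low, _ = BANDS_MHZ[band]
    return abs(dl_low - ul_low)


def duplex_gap_mhz(band):
    """Spacing between the closest edges of the UL and DL blocks."""
    ul_low, ul_high, dl_low, dl_high = BANDS_MHZ[band]
    return max(dl_low - ul_high, ul_low - dl_high)


def worst_case_spacing_mhz(band, carrier_bw_mhz):
    """Smallest centre-to-centre spacing between the UL carrier and a
    secondary DL carrier (valid for bands where DL lies above UL)."""
    _, ul_high, dl_low, _ = BANDS_MHZ[band]
    ul_centre = ul_high - carrier_bw_mhz / 2.0
    dl_centre = dl_low + carrier_bw_mhz / 2.0
    return dl_centre - ul_centre


for band in BANDS_MHZ:
    print(band, duplex_distance_mhz(band), duplex_gap_mhz(band))
print(worst_case_spacing_mhz(2, 10.0))
```

For band 2 this gives an 80 MHz duplex distance and the 20 MHz gap quoted above, against a 355 MHz gap for band 4. With 10 MHz carriers at the facing band edges, the secondary downlink carrier sits only 30 MHz from the uplink carrier, well inside the region reached by PA ACLR emissions.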

Secondly, it becomes apparent that opting for a single LNA/mixer chain to demodulate two carriers that could be located at the opposite edges of the band does not appear feasible for linearity and bandwidth reasons. As a consequence, a dual receiver and dual LO architecture is required to down-convert each carrier, as shown in Figure 14.7a. Contribution [12] summarizes the implications in great detail, and shows that, due to limited isolation in a single-chip solution, cross-LO leakage occurs. In practice, this means that each mixer will not only be driven by its intended LO, but also by the leakage of the second LO, and by the multiple IMD products generated by non-linear mixing of the two LOs. The analysis indicates that requirements on cross-LO leakage and LO IMD product rejection might be difficult to achieve in a single chip. VCO-pulling is another phenomenon which is difficult to solve in single-chip devices. Pulling occurs when a VCO couples to another VCO operating at a close, or harmonically related, frequency, which may push the PLL out of lock. In the case of IB-NC-CA [13], since carrier frequencies are signaled by the network, the reprogramming of VCO divide ratios has to be done “on the fly.” During this time, PLL synthesizers must be disabled and consequently IQ samples are interrupted. Only a 3GPP relaxation can help solve this issue. It is suggested to introduce a 2-ms interruption, a penalty equivalent to 4 LTE time-slots. Note that this issue is also applicable to inter-band CA. Finally, the problem is not expected to abate, as the recent introduction of triple-band downlink carrier aggregation requires the UE to simultaneously activate four local oscillators (LOs): one for the uplink and one for each downlink carrier, that is, 3 LOs in RX. Most triple-band combinations call for inter-band CA, such as CA 4+17+30 or 2+4+13. 
But one special requirement combines all of the above mentioned challenges: CA 2+2+13 requires the UE to perform IB-NC-CA in band 2, plus inter-band CA with a carrier located in band 13.

14.1.3 RF Front End

The RF-FE includes a rich diversity of components ranging from RF filters, duplexers, diplexers, antenna switches, power amplifiers (PA), and other circuitry such as antenna tuners, sensors, directional couplers, and obviously antennae. In the RF TRX, multimode multiband operation is made possible via the use of reconfigurable architectures, mostly in the IQ domain and in the PLL/VCO blocks. Reconfigurability in the front-end is much more problematic, since it is difficult to implement tuneable RF filters without incurring either insertion loss or attenuation penalties. This section aims to illustrate the solutions found by the industry to address cost reduction in the front-end, while also meeting the needs posed by greater complexity.

14.1.3.1 Trends in Filters and Switches

In the receiver path, filters are used to eliminate the unwanted RF signals. In the transmit direction, they attenuate out-of-band noise and spurious emissions to ease coexistence effects between bands and terminals. For each supported FDD band of operation, Figure 14.10 shows that three filters and an antenna switch are required: two band-pass filters (BPF) in the duplexer and one BPF in the diversity receiver branch. The number of throws in antenna switches is driven by the number of bands that must be supported. For example, Figure 14.5 shows that switches have evolved from single pole 6 throw (SP6T) devices in 2003, to SP12T in 2013. This number is obviously bound to grow in coming years with increasing band count, support for LTE-TDD and Carrier Aggregation. Carrier Aggregation also raises additional RF performance requirements – improved linearity for example (Section 14.1.3.5) – and the need for a diplexer to further separate the aggregated bands.

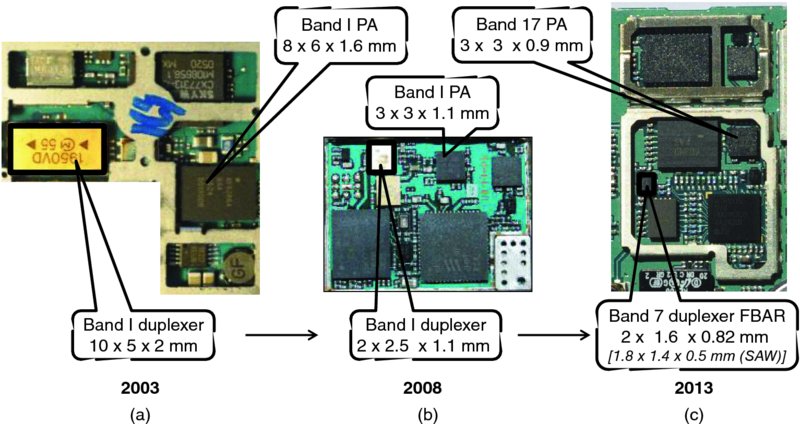

Figure 14.9 Example of duplexer miniaturization. (a): Ceramic 2003, (b): SAW 2008, (c): FBAR 2013 – PCB pictures are extracted from Figure 14.5 and scaled to reflect exact relative sizes

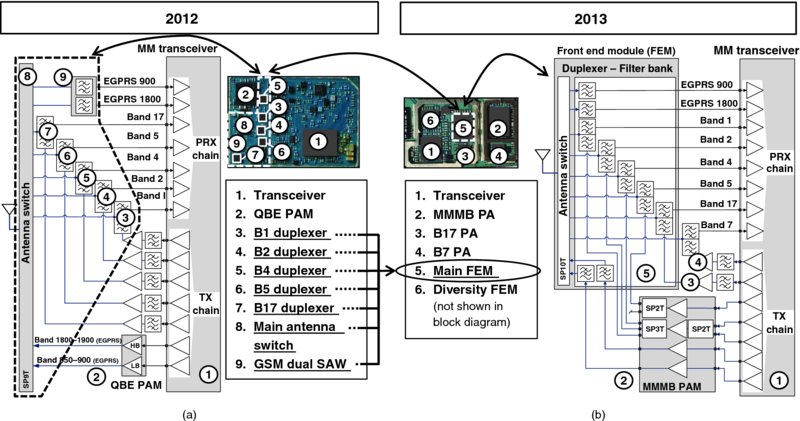

Figure 14.10 Enlargement of the RF front end (block diagram¹¹ and PCB pictures) of the 2012 and 2013 terminals from Figure 14.5. (a): Discrete solution, (b): Integrated front end module example which supports quad-band EGPRS, WCDMA bands I, II, IV, V, and LTE bands 1, 2, 4, 5, 7, 17

For switches, only an improvement in technology can lead to significant cost reductions. Legacy GaAs PHEMT solutions are being replaced with cheaper single die SOI (Silicon on Insulator) semiconductor technology. In the world of RF filtering, the ideal solution would make use of tuneable RF filters to address cost reduction. Research is ongoing in this area and initial tuneable duplexers [14] show promising results, but these are far from meeting the stringent FDD operation isolation/insertion loss requirements. In practice, the approaches taken to reduce cost/PCB footprint area essentially fall into three categories: component miniaturization, co-banding, and module integration.

The main challenge in filter miniaturization has been and still remains to maintain low insertion loss while delivering high attenuation. Filter technology rapidly evolved from ceramic to SAW filters, a technology based on ceramic piezoelectric materials. It is complemented by silicon BAW/FBAR10 technology for the most demanding requirements related to short duplex distance and for frequency of operation beyond 2 GHz. An illustration of the efforts made for duplexers is shown in Figure 14.9: the size of the 2003 ceramic duplexer was 10 × 5 × 2 mm. SAW-based duplexers introduced around 2005–2008 shrank to 2 × 2.5 × 1.1 mm (Figure 14.9b), whereas the latest mass-produced models come in packages of 1.8 × 1.4 × 0.5 mm for SAW and 2.0 × 1.6 × 0.9 mm for FBAR (Figure 14.9c). Compared to the initial ceramic devices, the size is remarkably reduced to 1/15 in area ratio and 1/60 in volume ratio for SAW devices.

Co-banding is a technique which consists of sharing RF filters in bands for which multimode operation must be supported. For example, in band II (PCS 1900), rather than implementing an EGPRS RX chain with a dedicated PCS RX BPF, it is tempting to reuse the antenna to RX path of the band II/band 2 FDD duplexer required for LTE and HSPA operation. By routing EGPRS through the duplexer, one RF BPF is saved. The drawback of this approach is that the duplexer insertion losses are usually higher than that of a standalone BPF, thereby leading to a slight degradation in EGPRS sensitivity. An example of co-banding is shown in Figure 14.5g (2011). In practical terms, if co-banding was applied to UE [15], the 17 frequency bands supported by this terminal could be reduced to only 8 unique RF receiver paths and 4 transmitter line-ups: bands 1, 2, 5, and 8 could be common to LTE, WCDMA, and EGPRS, band 4 common to LTE and WCDMA, band 3 common to LTE and EGPRS, leaving band 20 and band 7 as specific bands to LTE. In reality, the tradeoff between cost savings and performance degradation depends on OEMs/operator targets. For example, in many instances, the performance loss of co-banding EGPRS with LTE in band 3 is not acceptable and leads to separate RF paths for each air interface.
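The band bookkeeping behind this co-banding example can be checked with a short sketch. The band-to-mode mapping below is an assumption made for illustration: quad-band EGPRS (850/900/1800/1900) is taken to correspond to bands 5, 8, 3, and 2, so band 1 is treated as shared by LTE and WCDMA only, which is what makes the total come to 17. The names `BAND_MODES` and `count_rx_paths` are hypothetical.

```python
# Air interfaces assumed per band for the terminal discussed above.
# Assumption: quad-band EGPRS maps to bands 5, 8, 3 and 2, so band 1
# carries LTE and WCDMA only.
BAND_MODES = {
    1: {"LTE", "WCDMA"},
    2: {"LTE", "WCDMA", "EGPRS"},
    3: {"LTE", "EGPRS"},
    4: {"LTE", "WCDMA"},
    5: {"LTE", "WCDMA", "EGPRS"},
    7: {"LTE"},
    8: {"LTE", "WCDMA", "EGPRS"},
    20: {"LTE"},
}


def count_rx_paths(band_modes, co_banded):
    """Count receiver paths with or without filter sharing across modes."""
    if co_banded:
        # One shared RF path per band, whatever the number of air interfaces.
        return len(band_modes)
    # One dedicated RF path per (band, air interface) pair.
    return sum(len(modes) for modes in band_modes.values())


dedicated_paths = count_rx_paths(BAND_MODES, co_banded=False)
shared_paths = count_rx_paths(BAND_MODES, co_banded=True)
print(dedicated_paths, shared_paths)
```

Under these assumptions the dedicated count matches the 17 frequency bands quoted above and the shared count matches the 8 unique receiver paths. Removing a shared entry, for example treating EGPRS in band 3 as a separate path, adds one path back, which is the tradeoff described at the end of the paragraph.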

For a higher level of integration, and therefore in a move to achieve further PCB area savings, the switching and filtering functionalities may be integrated into a module also commonly known as a FEM (Front-End Module). An example of a block diagram of a FEM is presented in Figure 14.10. Compared to a discrete filtering solution, such modules enable significant component count savings. Nevertheless FEMs show less flexibility when adapting the band support configuration. Therefore, existing solutions are customized to match the specific requirements of a phone manufacturer for a given variant. In this respect, FEMs make the most economical sense when applied to a band combination needed in a large number of variants where volumes are high and therefore production costs are lower. The 3G WW band combination I, II, IV, V, VIII is one example. The more exotic the band combination, the more difficult it becomes to justify a custom FEM design. With the number of band combinations increasing, OEMs need to carefully study which band combinations are worth integrating for future products. At the time of writing, the most advanced FEMs deliver further savings by integrating some of the TRX LNA matching components.

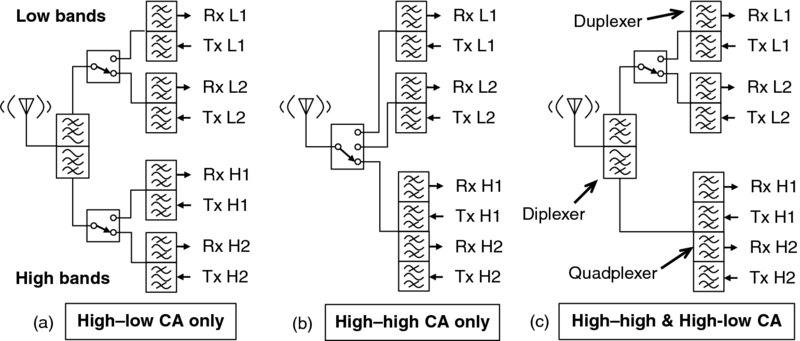

Looking ahead into Carrier Aggregation (CA) solutions, the optimized bank of duplexers may not always lead to the best cost/performance tradeoff, depending on the CA band combinations. Solutions based on more complex RF multiplexers, such as quadplexers or hexaplexers, could deliver interesting alternatives. Quadplexers are not the simple concatenation of two duplexers: they consist of four individual bandpass filters (BPF) connected to a common antenna port. As such, each BPF differs from those used in duplexer designs. For example, the TX BPF of a duplexer is designed with the main constraint of delivering high TX noise rejection at the RX band (cf. Section 14.1.2). In a quadplexer, each TX BPF has to reject TX noise in two distinct RX frequency bands. This increases the number of constraints (zeros in the stopband) under which the BPF must be designed. Depending on the band combination, the designer may have to trade off isolation against insertion loss. One additional factor leading to higher losses comes from the matching networks required to ensure that the filters do not load one another. The main advantage of multiplexers is that they simplify the RF front-end architecture. An example using two high and two low hypothetical frequency bands is shown in Figure 14.11 below and illustrates the tradeoff between cost and complexity.

Figure 14.11 (a): Duplexer bank, high–low band switches, diplexer solution, (b): quadplexer, antenna switch solution, (c): duplexer, quadplexer, switch, diplexer solution

Figure 14.11a uses a diplexer common to all bands and supports only high–low CA, for example band 1–band 8, or band 2–band 5. The main drawback of this approach is that bands and air interfaces which are not meant to be used in Carrier Aggregation pay an insertion-loss penalty in both uplink and downlink. The use of a quadplexer in Figure 14.11b considerably simplifies the front-end circuitry, since only one antenna switch is required, and each band of operation benefits from the removal of the diplexer. In this example, only high–high CA is supported, for example band 2–band 4. Figure 14.11c supports both high–high and high–low CA, but the price that must be paid for this extra capability is that of adding a diplexer. Note that this latter configuration also supports triple-band CA at little or no extra cost compared to the dual-CA solution (a). For example, solution (c) could support inter-band triple-band CA of bands 2–4–13. It can be seen that with CA, a variety of front-end architectures must be assessed to deliver the optimum cost/performance tradeoff. For each combination, a tradeoff must be found between complexity and performance. These tradeoffs have generated many discussions at 3GPP on ways to determine the insertion loss associated with each configuration, and how these extra losses should be taken into consideration to define reference sensitivity and maximum output power relaxations. As a consequence, for each dual downlink CA scenario, data from many filter manufacturers had to be collected to agree upon an average insertion loss per type of multiplexer. A further complication is that insertion losses are heavily design dependent, as well as band, temperature, and process dependent. An example of the tedious and hard-fought agreements can be found in [16].
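The CA capabilities of the three architectures of Figure 14.11 can be summarized in a small sketch. The high/low classification of the bands, the rule that at most one low band and two high bands can be aggregated, and the names `FrontEnd` and `BAND_GROUP` are assumptions made for illustration; they only encode the examples quoted in the text.

```python
from dataclasses import dataclass

# Assumed grouping of the bands named in the text: bands below 1 GHz are
# treated as "low", the others as "high".
BAND_GROUP = {1: "high", 2: "high", 4: "high", 5: "low", 8: "low", 13: "low"}


@dataclass(frozen=True)
class FrontEnd:
    name: str
    high_low: bool   # a diplexer separates the high and low band groups
    high_high: bool  # a quadplexer combines two high bands

    def supports(self, bands):
        """Return True if this architecture can aggregate the given bands."""
        groups = [BAND_GROUP[band] for band in bands]
        n_high, n_low = groups.count("high"), groups.count("low")
        if n_low > 1 or n_high > 2:
            return False  # not covered by any of the three sketched options
        if n_high == 2 and not self.high_high:
            return False
        if n_high >= 1 and n_low == 1 and not self.high_low:
            return False
        return True


ARCH_A = FrontEnd("(a) duplexer bank + diplexer", high_low=True, high_high=False)
ARCH_B = FrontEnd("(b) quadplexer + antenna switch", high_low=False, high_high=True)
ARCH_C = FrontEnd("(c) duplexer + quadplexer + diplexer", high_low=True, high_high=True)

for arch in (ARCH_A, ARCH_B, ARCH_C):
    print(arch.name, [combo for combo in ((1, 8), (2, 4), (2, 4, 13)) if arch.supports(combo)])
```

The sketch captures capability only. The insertion-loss penalty of the diplexer in (a) and (c), which drives the actual selection, would have to be added per band from supplier data.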

14.1.3.2 Power Amplifiers

Power amplifiers are no exception to the design constraints and tradeoffs previously mentioned. With highly integrated single chip transceivers, the RF subsystem area is heavily influenced by the area occupied by FE components and in particular PAs. The ideal solution would call for a single PA which could be reconfigured to support all bands and all air interface standards. In practice, two approaches are taken to deliver the best compromise between performance and cost: a discrete architecture based on several single-band PAs, or a multimode multiband (MMMB) PA architecture complemented by a few single-band PAs.

In discrete PA architectures, the most common solution uses a quad-band EGPRS (QBE) power amplifier module (PAM) and dedicated single-band PAs for each WCDMA/LTE band. When the band is common to both WCDMA and LTE, a unique PA is used for both modes, usually with different linearity settings to accommodate the slightly higher PAPR of LTE transmissions.

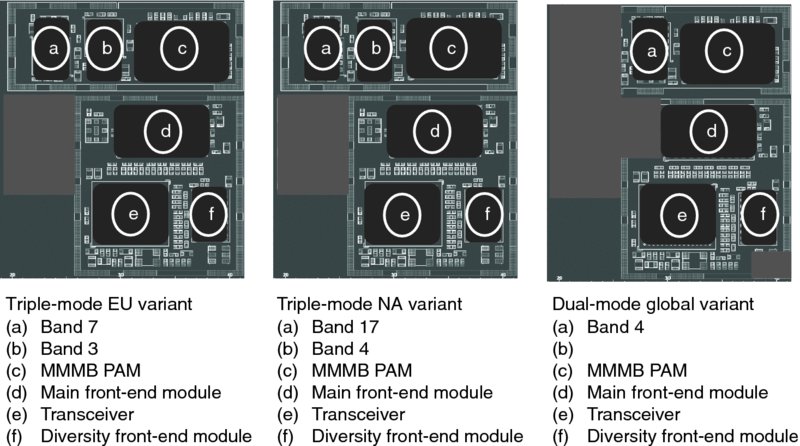

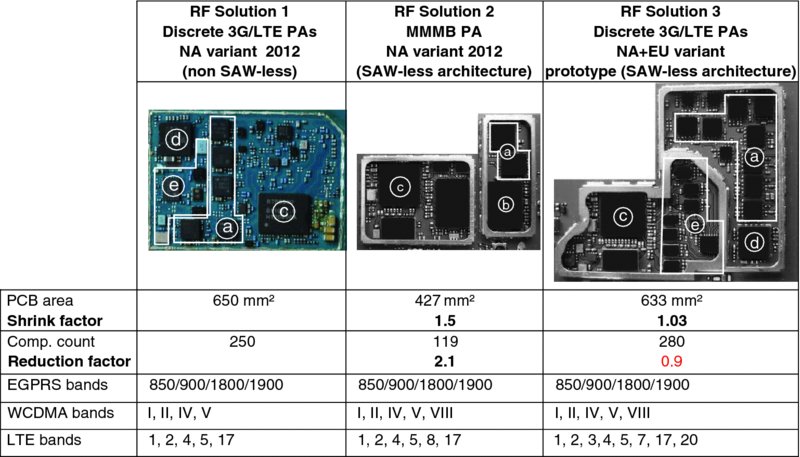

In MMMB PA architectures, a single triple-mode PAM replaces the QBE PAM, and some of the WCDMA/LTE single-band PAs. Yet, certain LTE specific bands, such as band 7, are not yet covered by MMMB PAM and therefore require a dedicated single-band PA. Examples of implementations of the two approaches are presented in Figure 14.15: solutions 1 and 3 are discrete architectures; solution 2 uses a MMMB PAM complemented by two single-band PAs (band 4 and band 17).

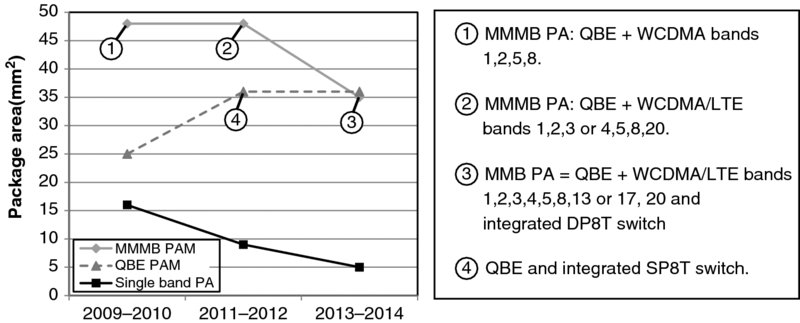

Figure 14.12 PA package size evolution and number of integrated bands – compiled from major suppliers' public domain data

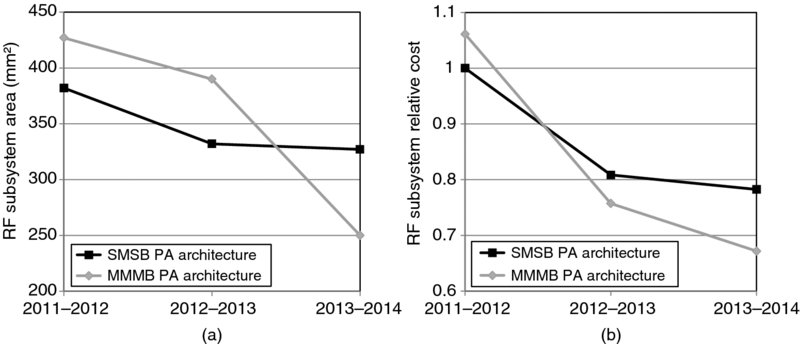

Figure 14.13 Single band vs. MM-MB PAs: RF subsystem area (a) and cost (b) tradeoffs in a NA triple-mode application

Figure 14.14 Reconfigurability concept with MMMB PA architecture. MMMB PAM covers quad-band EGPRS, penta band 1, 2, 5, 8, 20 in WCDMA and LTE modes [17]. Triple-mode NA variant: QBE, WCDMA I, II, IV, V, VIII, LTE 1, 2, 4, 5, 8, 17, triple-mode European (EU) variant: QBE, WCDMA I, II, IV, V, VIII, LTE 1, 2, 3, 5, 7, 8, 20, WW dual-mode variant: QBE, WCDMA I, II, IV, V, VIII. Renesas 2012 [17]. Reproduced with permission of Renesas Mobile Corporation

Figure 14.15 Examples of triple-mode RF solutions (discrete and MMMB) – (a) Single-Band PAs (3 × 3 mm), (b) MMMB PAM (5 × 7 mm), (c) Triple-mode transceiver IC, (d) 2.5G Quad-band amplifier module (5 × 5 mm), (e) Duplexers (2.0 × 2.5 mm and 2.0 × 1.6 mm) + antenna switch

The evolution of PA package area (L × W in mm²) over the last 4–5 years is shown in Figure 14.12 for three families: single-band PAs, QBE PAMs, and MMMB PAMs.

Single-band PAs (squares) have reduced their footprint by a factor of 3, from about 16 mm² to about 5 mm². This category has reached a maturity plateau and future packaging technology improvements are unlikely to significantly impact the overall radio area. With a 25 mm² package area, and a long history of production and optimization, QBE PAMs (triangles) have also reached a level of maturity and will most likely remain at this level for the coming years. The recent increase in area to 36 mm² is due to the integration of an SP8T antenna switch. The initial 2009–2010 MMMB PAMs (diamonds) combined a QBE and support for quad-band WCDMA operation. They rapidly evolved to support dual-mode penta-band, and then triple-mode octa-band operation. Remarkably, the 2013–2014 MMMB PAM, which integrates a QBE PAM, an octa-band WCDMA/LTE PAM, and a DP8T band distribution switch, comes in a package size (35 mm²) comparable to that of a QBE–SP8T module. It is expected that PA suppliers will invest further efforts into these solutions as triple-mode will soon become a de facto requirement.

The choice of architecture is primarily driven by the tradeoff between cost and size for a given band-set. The vast majority of dual-mode EGPRS-WCDMA terminals support two or three WCDMA bands. In these solutions, the discrete PA architecture is often the preferred solution as there is little or no cost advantage to using an MMMB PAM. An MMMB PAM becomes advantageous when there are four or more WCDMA/LTE bands to be supported. An example of cost tradeoff selection metrics is shown in Figure 14.13 in the specific case of a North America (NA), triple-mode product12. Over time, the MMMB PA architecture is expected to provide both size and cost benefits over the discrete PA architecture. From an RF subsystem PCB area perspective, it requires an octa-band MMMB PAM to better the discrete PA solution. From a cost perspective, the dynamics are less trivial as the introduction of such highly integrated products often benefits from a positive spiral effect: the introduction of a smaller package with increased band coverage induces a cost reduction, which induces a production volume increase, which in itself induces a reduction in sales price.

Several other factors come into play when making a selection of architecture:

- Power consumption: single-band PAs exhibit better power consumption than MMMB PAMs. This is mainly because discrete PA matching networks can be optimized for a narrow frequency range while MMMB PAMs must be matched over a wider band. In addition, MMMB PAM efficiency is lower as they absorb the insertion losses of the band distribution switch.

- Design effort: MMMB PAMs considerably simplify the PCB place and route task, especially in variants covering a large number of bands. An example of complexity in attempting to cover a future NA-EU variant using discrete PAs is shown for illustration purposes in Figure 14.15.

- Multiple vendor sourcing management: MMMB PAMs bring a significant advantage over discrete solutions as they reduce the number of references for a terminal model/variant.

- Ease of reconfigurability: MMMB PAMs, complemented with one or two single-band PAs, provide the best tradeoff today between cost, PCB place and route complexity, area, and ease of reconfiguration to support multiple ecosystems. An illustration of the reconfigurability concept is shown in Figure 14.14 [17], where a MMMB PAM is used to cover QBE and quad-band WCDMA (I, II, V, VIII) and LTE band 20. This example shows that a single reference design may be quickly adapted to serve three different regions of the world with minimal changes, and yet can achieve rather aggressive, and nearly identical, PCB area and component count metrics.

Finally, Figure 14.15 summarizes all of the previous discussions in one place. RF solutions 1 and 2 address the same NA, triple-mode telecom operator variant and illustrate both graphically and quantitatively the significant gains that MMMB PAMs bring to such products: the area is reduced by 150%, and the component count is halved. Solution 3 is a prototype which uses single-band PAs and a QBE PAM to target a NA and EU variant in triple-mode operation. In comparison with solution 1, one can see that this PCB is extremely well-optimized, and it covers three additional LTE bands and one extra WCDMA band with nearly identical PCB metrics. Yet, it is easy to note that the complexity and the cost associated with such an architecture would most likely be unacceptable for OEMs. It is evident from this prototype that next-generation variants which attempt to target more than one ecosystem, such as this effort for NA-EU coverage, can only be cost effective through the use of MMMB PAMs. It is estimated that in this example, the use of the latest octa-band MMMB PAM (Figure 14.12, 2013–2014) could save 7 out of the 8 single-band PAs, with band 7 being the only band which would need a dedicated PA. It thus comes as no surprise that the focus for future PA design will almost surely target the use of MMMB modules.

14.1.3.3 Over-the-Air (OTA) Performance

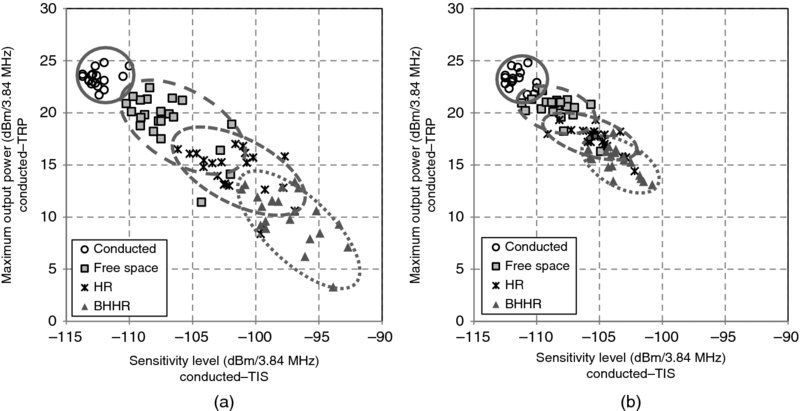

While chip set suppliers are benchmarked by OEMs to deliver the best RF performance in conducted test conditions, and often have to iterate a design to improve performance by a fraction of a dB, telecom operators, on the other hand, are primarily interested in OTA performance. Between these, the OEMs are more concerned with ensuring their products will pass the impressive set of 3GPP/PTCRB/GCF tests. Typically, the test plan of a triple-mode phone involves approximately 1500 tests, the majority of which are conducted tests, and which typically lead to several months of costly testing. When it comes to OTA performance, the dual-mode EGPRS/HSPA UE is measured against two simple Figures of Merit (FOM)13: Total Radiated Power (TRP) and Total Isotropic Sensitivity (TIS). Each FOM is then re-measured in different user interaction (UI) scenarios: UE in Free Space (FS), held in a hand phantom14, placed against a head phantom or held in hand and placed against the head phantom [18]. Figure 14.16 plots the WCDMA TRP vs. TIS performance, in band V and band II, across a sample of 30 class 3 recently PTCRB-certified smartphones. Looking at the conducted results (circles), UEs generally perform within 1 or 2 dB from each other in both axes. In output power, performance ranges across the class 3 tolerances (24 dBm +1/−3 dB), the majority being calibrated to deliver 23 dBm. In sensitivity, all UEs pass with a comfortable margin, but because this metric is used by OEMs to benchmark chip set platforms, the performance spread is much tighter than in TX power. With OTA TRP and TIS, the spread across UI increases dramatically between bands.

Figure 14.16 Example of modern WCDMA OTA TRP vs. TIS performance of 30 recent smartphones extracted from PTCRB reports based on CTIA OTA test plan. (a): band V mid-channel, (b): band II mid-channel. (HR: Phantom Hand Right only, BHHR: Beside Head and Hand Right Side, that is, head and hand). These graphs compiled with permission of PTCRB

For example, in band V the spread between conducted and BHHR varies between 10 and 18 dB. But in band II, the spread is nearly half that of band V, ranging from 5 to 9 dB in both TRP and TIS. In a given band, the difference between conducted and FS gives a good indication of antenna gain, with superior performance in band II as compared to that in band V. This illustrates that designing an antenna with the desired performance in low bands is a significant challenge in modern smartphones. Figure 14.17b shows that the task of the antenna designer is not becoming any easier, since the volume made available is continually reduced. Because of these constraints, it is not unusual for antennae to reach alarming VSWR values as high as 10:1, corresponding to mismatch losses (ML) of 4.8 dB. This loss impacts both TRP and TIS. One can see that with chip sets no longer being loaded/sourced with the ideal 50 Ohm of the conducted tests, the 0 dBi antenna gain assumption made in 3GPP is far from being met in actual situations.
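The 4.8 dB figure quoted for a 10:1 VSWR follows from the standard relations between VSWR, reflection coefficient magnitude, and mismatch loss, $|\Gamma| = (\mathrm{VSWR}-1)/(\mathrm{VSWR}+1)$ and $\mathrm{ML} = -10\log_{10}(1-|\Gamma|^2)$. A minimal sketch, with hypothetical function names and assuming a lossless mismatch:

```python
import math


def reflection_magnitude(vswr):
    """Magnitude of the reflection coefficient for a given VSWR (>= 1)."""
    return (vswr - 1.0) / (vswr + 1.0)


def mismatch_loss_db(vswr):
    """Power lost to reflection, in dB, for a given VSWR."""
    gamma = reflection_magnitude(vswr)
    return -10.0 * math.log10(1.0 - gamma ** 2)


for vswr in (1.5, 3.0, 10.0):
    print(f"VSWR {vswr:>4}:1 -> mismatch loss {mismatch_loss_db(vswr):.2f} dB")
```

At 10:1 the reflection coefficient magnitude is 9/11, so only about one third of the available power is delivered, which is where the roughly 4.8 dB comes from.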

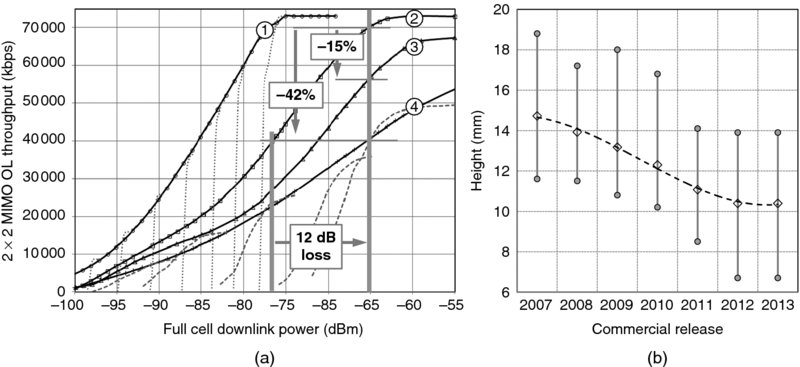

Figure 14.17 (a): example of MIMO throughput loss vs. antenna correlation: static bypassed RF channel (dots), low correlation EPA model (squares), medium correlation EPA model (triangles), high correlation EPA model (crosses), (b): trends in smartphone heights. Reproduced with permission of Videotron Ltd.

With user interaction, absorption losses (AL), due to the proximity of body tissues, further degrade antenna performance. Absorption losses depend on grip tightness [19] or on the gap between the UE and the human body, with the hand-only test case usually presenting the lowest loss.

Antenna design becomes further complicated for LTE, since devices must then operate over a wider range of frequencies, from the new ultra-high 2600 MHz band to the ultra-low UHF bands (700–800 MHz) at the lower end of the spectrum [15]. The traditional way to optimize matching, which normally consisted of using a single matching network to deliver the best gain, thus becomes much more difficult to achieve over such a large range. These constraints have pushed the industry to look into solutions that enable antenna reconfigurability or antenna tuning capabilities. Two approaches are currently being investigated: one uses antenna tuners connected to a non-tuneable antenna, and the other uses antenna tuners associated with antennae with tuneable resonators. Antenna tuners basically consist of a digitally tuneable impedance matching network. Tuners deliver variable reactances by using an array of switched capacitors and fixed inductors often arranged in a pi topology. The digital settings which optimize performance for each band can be pre-calibrated using look-up tables. However, the best agility is accomplished by using a coupler at the antenna port, so that real-time VSWR can be monitored and used in a closed-loop operation to continuously deliver optimal performance, independent of UI. In this respect, standardizing an RF-FE digital control bus, such as MIPI® RFFE, has the advantage of allowing components from multiple vendors to be easily interchanged (cf. Section 14.1.3.4). In theory, an antenna tuner should aim at delivering close to 50 Ohm impedance. In practice, depending on the band and antenna structure, the tuner will only be able to partially correct mismatch losses. For example, the study on the impact of grip style in [19] indicates that in most configurations performance is largely dominated by absorption losses, with values ranging from 1.5 to 7 dB loss, while impedance MLs only account for 0.5 to 2.5 dB.
In this example, an antenna tuner connected to a non-tuneable antenna would not bring significant gains. These moderate gains must be weighed against design challenges associated with front-end circuitry. The linearity requirements for components are high15 [20], and yet components must deliver minimal losses while meeting the low cost and low PCB footprint targets. This is one of the primary reasons why the industry is looking at ways to improve radiated efficiency by developing tuneable antennae. If the antenna is capable of tuning its resonators to the band of operation, then theoretically it should be possible to deliver enhanced antenna efficiency. In this case, antenna tuners become mandatory since the resonator tuning process induces impedance mismatches. Looking further ahead, some companies are also investigating ways of solving AL degradation by designing antennae with preset radiation patterns. With the set of proximity sensors embedded in modern smartphones, one could imagine a phone capable of adapting the antenna tuners based upon proximity detection of the user. At this time it is difficult to assess which technique will be the most promising. Some of the most advanced techniques in mass production today rely on antenna selection switching, with or without limited impedance tuning. This technique consists of replacing the diversity receive antenna with an antenna capable of supporting both transmit and receive frequency bands. In this case the platform may swap the primary and diversity antennae at any time so as to maximize system performance. Note that this scheme will not be applicable to future platforms needing to support transmit diversity.
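The antenna tuner principle described above (switched capacitor arrays and a fixed inductor in a pi topology, with settings chosen to minimize VSWR) can be illustrated with a small numerical sketch. Everything here is hypothetical: the component values, the 5-bit capacitor arrays, the antenna impedance, and the function names. The exhaustive search over a circuit model stands in for the closed loop, which in a real terminal would act on VSWR measured through the coupler at the antenna port.

```python
import math
from itertools import product

Z0 = 50.0          # reference impedance in ohms
L_SERIES = 4.7e-9  # fixed series inductor (hypothetical value)
C_MIN = 0.5e-12    # shunt capacitance at code 0 (hypothetical value)
C_STEP = 0.25e-12  # capacitance added per code step (hypothetical value)
N_CODES = 32       # two 5-bit switched-capacitor arrays (assumption)


def parallel(z1, z2):
    """Impedance of two branches connected in parallel."""
    return z1 * z2 / (z1 + z2)


def input_impedance(z_ant, freq_hz, code_in, code_ant):
    """Impedance seen by the radio through a shunt-C, series-L, shunt-C pi network."""
    w = 2.0 * math.pi * freq_hz
    z_c_in = 1.0 / (1j * w * (C_MIN + code_in * C_STEP))
    z_c_ant = 1.0 / (1j * w * (C_MIN + code_ant * C_STEP))
    return parallel(z_c_in, 1j * w * L_SERIES + parallel(z_c_ant, z_ant))


def vswr(z):
    """VSWR of impedance z relative to Z0."""
    gamma = abs((z - Z0) / (z + Z0))
    return (1.0 + gamma) / (1.0 - gamma)


def tune(z_ant, freq_hz):
    """Return the (code_in, code_ant) pair giving the lowest modelled VSWR."""
    return min(
        product(range(N_CODES), repeat=2),
        key=lambda codes: vswr(input_impedance(z_ant, freq_hz, *codes)),
    )


# Hypothetical low-band antenna impedance, e.g. detuned by a hand grip.
z_antenna, freq = complex(15.0, -20.0), 750e6
best = tune(z_antenna, freq)
print(best, round(vswr(input_impedance(z_antenna, freq, *best)), 2))
```

Storing the result of `tune` per band and per use case would give the pre-calibrated look-up table mentioned in the text. Note that such a network only addresses mismatch loss: absorption losses are unaffected by any capacitor setting.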

With MIMO LTE, downlink throughput is the new FOM. MIMO throughput depends on the end-to-end correlation between the two data streams. Consequently, the UE demodulator experiences the product of three matrices: eNodeB TX antennae, RF propagation channel, and UE RX antennae correlation matrix.

Figure 14.17a shows the impact of the RF channel correlation matrix on a recent triple-mode UE in a conducted test setup. The measurement is performed in the AWS band, with a 10 MHz cell BW, using a 2 × 2 MIMO RF multipath fader. The graph plots UE DL throughput measured by an Anritsu 8820c eNodeB emulator vs. DL full cell BW power under four RF propagation conditions: static bypass mode (dots), low (squares), mid (triangles), and high (crosses) correlation matrices of the 3GPP EPA fading model. For each RF fading model, the DL MCS index is varied to capture the UE behavior across its entire range of demodulation capabilities. To avoid overloading the figure, only a few waterfall curves are shown in light gray dotted lines. The resulting envelope of each waterfall is plotted in plain lines.

This graph provides insights into the susceptibility of LTE MIMO performance to correlation. There are two ways to read this experimental data:

- Case of a user located under an eNodeB, experiencing a high DL RF power of, say, −65 dBm. The difference between low vs. mid, and low vs. high correlation results in a 19% and 42% throughput loss, respectively. In the latter case, the throughput drops from 70 Mbit/s to about 40 Mbit/s. Under high correlation conditions, the impairment is so bad that the BB is unable to deliver more than 54 Mbit/s even at maximum input power (not shown on graph). From a user experience perspective, it is unlikely that this lower-than-expected performance would be noticed, since absolute throughput is not a sufficient metric to guarantee a good user experience (see Chapter 13 for more details). From a telecom operator's perspective, the situation is quite different as this loss prevents the cell from delivering the maximum theoretical performance.

- At a given target throughput, increasing correlation leads to a degraded UE RF sensitivity. For example, at a target 40 Mbit/s, a user experiencing high correlation suffers from a 12 dB penalty in link budget. This penalty increases with increasing target throughput because of the floor in performance of that particular test case. This graph can also be interpreted as a fair illustration of the differences one can expect between operation in high bands (e.g., 2600 MHz) where the antenna should perform well, and low band operation (e.g. 700 MHz). Note that at cell edge, in low SNR conditions, there is little difference in performance.
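The figures quoted in the two readings above can be related to each other with a few lines of arithmetic. The sketch below only reuses numbers from the text (70 Mbit/s at low correlation, 19% and 42% losses, a 12 dB penalty); the function names are hypothetical.

```python
def throughput_after_loss(baseline_mbps, loss_percent):
    """Throughput remaining after a relative loss given in percent."""
    return baseline_mbps * (1.0 - loss_percent / 100.0)


def db_to_power_ratio(db):
    """Convert a decibel value to a linear power ratio."""
    return 10.0 ** (db / 10.0)


LOW_CORR_MBPS = 70.0  # low-correlation throughput at -65 dBm, from the text

mid_corr_mbps = throughput_after_loss(LOW_CORR_MBPS, 19.0)
high_corr_mbps = throughput_after_loss(LOW_CORR_MBPS, 42.0)
penalty_ratio = db_to_power_ratio(12.0)

print(f"mid correlation:  {mid_corr_mbps:.1f} Mbit/s")
print(f"high correlation: {high_corr_mbps:.1f} Mbit/s")
print(f"12 dB penalty = {penalty_ratio:.1f}x more received power needed")
```

The high-correlation result is consistent with the "about 40 Mbit/s" quoted above, and the 12 dB link budget penalty corresponds to roughly sixteen times more received power for the same 40 Mbit/s target.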

This graph shows how crucial it is for telecom operators to jointly define with 3GPP a standardized OTA test method. In an ideal world, OTA testing should be able to reproduce and predict field performance. In practice, predicting OTA MIMO user experience appears to be a nearly impossible task because so many variables can impact performance. At worst, OTA testing will deliver a reliable tool to benchmark terminals against one another. Here are a few examples of the challenges, based on recent contributions:

- Contribution [21] has shown that antenna correlation in a smartphone is so sensitive to its close surroundings that the presence of tiny co-axial semi-rigid cables impacts the measurement accuracy. Using optical fiber feeds, FS correlation reached 0.8, a high value, while measurements with semi-rigid feeds indicated a correlation on the order of 0.4. If tiny semi-rigid cables can change the correlation by a factor of two, it is reasonable to assume that the presence of larger objects could induce greater impairments. Unfortunately, the nature of interactions is so complex that no general rule governing the degradation of antenna correlation can be drawn. For example, the study in [22] shows that a smartphone with good FS performance can turn into a poor terminal in the presence of a human body – but the exact opposite is also true. The study in [23] goes a step further by measuring the impact of a real user on antenna correlation vs. FS and phantom head. The study uses prototypes specifically designed to deliver high and low correlation performance in the 700 and 2600 MHz bands. Measurements demonstrate that the difference between high and low correlation decreases with UI, so much so that at 700 MHz, it becomes difficult to distinguish the two prototypes in the presence of a real person. This suggests that any ranking between good and bad devices based on FS and phantom head measurements might not be in agreement with the more subjective rankings resulting from actual use (see also [24]).

- In addition, antenna correlation varies vs. carrier frequency in a given band.

- From the above results, it may be intuitively understood that the angle of arrival also plays an important role. For example, one may expect that a phantom head alone might not block as many incident waves as would be blocked by a real person. This implies that measuring user experience requires a 3D isotropic test setup. 3GPP is assessing three test methods that could fulfill this requirement: anechoic chambers, reverberation chambers, and a two-stage method. With their intrinsic 3D isotropic properties, reverberation chambers are an attractive solution to this problem.

- Another difference between lab measurements and practical user experience is that 3GPP test cases do not include closed-loop interactions between an eNodeB and a UE. Good MIMO performance is about making the best of the instantaneous radio conditions, either by using frequency selective scheduling or by adapting rank. These closed-loop algorithms are proprietary to each network equipment vendor and cannot be replicated using eNodeB emulators.

These are some of the complex challenges that OEMs, telecom operators, and chip set vendors are currently facing when it comes to defining OTA performance test cases. Not only must 3GPP assess and recommend test methods (AC, RC, or two-stage) but it must also define test conditions and pass/fail criteria to provide operators with a reliable and realistic tool to benchmark devices. Considering the complexity of the interactions between UE antennae and the surroundings, this task is far from trivial.

14.1.3.4 RF Subsystem Control and IQ Interfaces

The conventional RF subsystem architecture relies on analog IQ RF-BB interfaces and a myriad of control interfaces specific to each of the RF-FE components. The primary disadvantages of this approach are twofold:

- – The associated number of pins tends to be larger, which increases package size, cost, and further complicates PCB routing.

- – Dealing with a wide variety of proprietary interfaces restricts the ease with which handset makers can “mix and match” RF-FE components, and often leads to software segmentation.

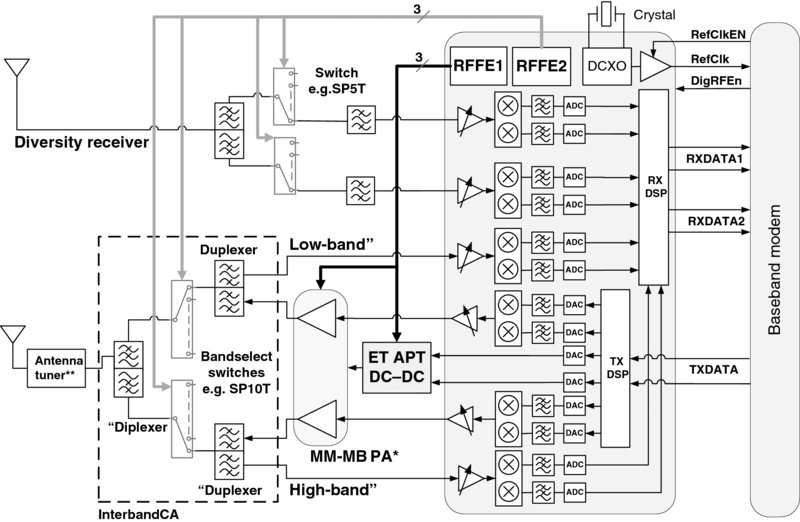

The example in Figure 14.18 shows a hypothetical downlink-only carrier aggregation RF subsystem focusing on control and IQ interfaces only. In total, 47 pins are required. This number depends on the choice of ICs, such as the type of power amplifier, the number of bands and antennae that are selected for a given handset variant.

Figure 14.18 LTE inter-band carrier aggregation hypothetical block diagram using conventional buses. Pin count: 14 (switches) +20 (IQ) + 8 (PA-CTL) + 3 (SPI) + 2 (sysclk) = 47 pins. Interconnect for MM-MB PA temperature sensor (*), and antenna directional coupler (**) not included. For illustration purposes only. Pin count required for RF switch control could be reduced by sharing one or several sets of GPIO lines

The MIPI Alliance16 RFFE and DigRF v4 digital interfaces have been designed to specifically address these issues. DigRF v4 offers features and capabilities specifically designed for BBIC-RFIC bidirectional exchange of both data and control. With a minimum bus speed of 1.248 Gbit/s, DigRF v4 is a high speed, low voltage swing, point-to-point digital interface. It provides numerous flexible options for implementers, such that aspects like pin count, spectral emissions, power consumption, and other parameters may be optimized for various operating conditions imposed by a given design. While these interface options allow for tradeoffs to be made in a current design, they also provide adaptive growth potential for new services, such as carrier aggregation, without undue modifications to either the interface specification or to existing Intellectual Property (IP) used for building implementations. DigRF uses a minimum of seven pins: a pair of differential lines for RX and a pair for TX with both carrying IQ data and control/status information, a reference clock pin (RefClk), a reference clock enable pin (RefClkEn), and a DigRF interface enable pin (DigRFEn).

The Radio Frequency Front-End Control Interface Specification, or RFFE as it is commonly known, has emerged in recent years as the de facto standard for implementing RF front-end control. Even though other digital control interfaces might provide some of the basic functionality required, RFFE has been designed to address specific needs that are frequently presented, or are increasingly desired, in modern UEs. A single RFFE bus instance supports a single master, along with up to 15 slave devices connected in a point-to-multipoint configuration. Comparatively, RFFE is a low speed, high voltage-swing interface. The master, or major controlling entity for an RFFE interface instantiation, may be hosted within an RFIC, or in some other component such as a BBIC or other device which is suitable for the slightly higher level of integration required for an RFFE controller. An RFFE bus uses three pins: a unidirectional clock line driven by the master (SCLK), a common unidirectional (optionally bidirectional) data line called SDATA, and a common line for voltage referencing, and optionally for supply, called VIO. Some of the high-level characteristics for each of these interfaces are summarized in Table 14.1.

Table 14.1 Selected DigRF v4 and RFFE characteristics. DigRF clock frequencies (*) assume a 26 MHz reference crystal oscillator. DigRF v4 has defined a set of alternate clock frequencies associated with 19.2 MHz crystal reference oscillators

| | DigRF v4 | RFFE |
| --- | --- | --- |
| Bus topology | Point-to-point: Transceiver ↔ Baseband | Point-to-multipoint: Transceiver or Baseband → RF-FE |
| Termination | Terminated or unterminated | Unterminated |
| Voltage swing | 100 to 200 mV pk-pk differential | 1.8 or 1.2 V single-ended |
| Clock frequencies | 1248, 1456, 2496, 2912, 4992, 5824* MHz | 32 kHz to 26 MHz |
| Pin count | Minimum 7 pins | 3 pins |

Figure 14.19 shows that applying both interfaces to the example of Figure 14.18 may save up to 32 pins. Note that the system partitioning is implementation-specific and Figure 14.19 is only presented to illustrate cost/pin savings. If the RF IC is already pin count limited, it might be decided to “host” some, or all, of the RFFE buses either in the BBIC or in the PMIC.

Figure 14.19 LTE inter-band carrier aggregation hypothetical block diagram using DigRF and RFFE. Assumes DigRF v4 is clocked at 2496 MHz, and assumes a separate RFFE bus for switches and for PA control. Interconnect for MM-MB PA temperature sensor (*), and antenna directional coupler (**) not included. Pin count: 9 (DigRF) + 6 (RFFE) = 15 pins
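The pin totals quoted for Figure 14.19 can be reproduced with a small tally. The sketch below is illustrative only: the function names are hypothetical, and the split of the nine DigRF pins into two RX differential pairs plus one TX differential pair is an assumption that happens to match the caption total, not a partitioning stated in the text.

```python
# Illustrative pin tally for a DigRF v4 + RFFE partitioning.
# Assumption: each differential pair costs 2 pins, and DigRF always
# needs 3 sideband pins (RefClk, RefClkEn, DigRFEn), per the text.
DIGRF_SIDEBAND_PINS = 3
PINS_PER_DIFF_PAIR = 2
RFFE_PINS_PER_BUS = 3  # SCLK, SDATA, VIO


def digrf_pins(rx_pairs: int, tx_pairs: int) -> int:
    """Pins used by one DigRF v4 link with the given number of differential pairs."""
    return PINS_PER_DIFF_PAIR * (rx_pairs + tx_pairs) + DIGRF_SIDEBAND_PINS


def rffe_pins(buses: int) -> int:
    """Pins used by the given number of independent RFFE buses."""
    return RFFE_PINS_PER_BUS * buses


minimum_digrf = digrf_pins(rx_pairs=1, tx_pairs=1)   # minimum configuration
assumed_ca_digrf = digrf_pins(rx_pairs=2, tx_pairs=1)  # assumed split for Figure 14.19
total = assumed_ca_digrf + rffe_pins(buses=2)          # switches bus + PA control bus
print(minimum_digrf, assumed_ca_digrf, total)
```

Under these assumptions the minimum DigRF configuration uses seven pins, and the carrier aggregation example uses nine DigRF pins plus six RFFE pins.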

Beyond these direct hardware savings, both interfaces provide additional advantages which can be of benefit to both component suppliers and platform designers.

With DigRF, IQ ADCs and DACs are located in the RF TRX. The BB IC may then become a pure digital IC, which helps in decoupling the CMOS node used in each IC. The BB may then be shrunk/ported to the latest CMOS node more easily, while the RF transceiver can remain in an “older” CMOS node which offers a more appropriate performance/cost/power consumption tradeoff specific to the needs of the RF IC. This is particularly important as the latest, most advanced CMOS nodes rarely offer the design libraries required to simulate and design all portions of the complex RF transceiver. Further, the DigRF interface was also defined to assist handset makers to interface RF ICs and BB ICs from different suppliers, which allows more flexibility in component selection. DigRF also provides a standardized and low pin count debug interface to monitor traffic at the IQ interface. Indeed, a number of well-known test equipment providers distribute systems to enable this capability. For chip set makers, DigRF v4 is built upon MIPI's M-PHY, a physical layer IP which, once developed for DigRF, may be reused for other protocols and applications, since an increasing number of applicable standards use the M-PHY physical layer. IP reuse is increasingly important in meeting tough time-to-market deadlines. For example, future generations of DDR memories could make use of M-PHY, and an existing standard for FLASH already leverages M-PHY.

For RF front-end components, the current situation with regard to the types, numbers, and suppliers of these is perhaps even more diverse and far-reaching. To handset makers, RFFE is a tool which considerably simplifies the selection, ease of integration, and swapping of RF-FE components. To RF-FE IC makers, it offers increased opportunities to integrate with many chip set platforms, while implementing a single, widely-deployed control interface. Its wide array of optional features, particularly for slaves, ensures that a bus and, in particular, the FE components may be optimized for the features implemented vs. cost and the implementation technology. RFFE's wide scope of applicability to FEMs provides increased potential for IP reuse of hardware and/or software.

As with any interface deployed in an RF-sensitive area, one must pay special attention to the issues of EMI/co-existence management. The specifics of each of these interfaces lead to different co-existence challenges. The main issue with DigRF v4 in this respect is that even at its lowest operating clock frequency of 1248 MHz, the first spectrum lobe of a pair of lanes overlaps all UHF cellular frequency bands. At 2496 MHz, it is nearly the entire set of 3GPP operating bands which fall under the first lobe. In addition, with high duty cycle operation for standards such as LTE, the probability of collision in the time domain is high. For example, in the case of LTE single carrier 20 MHz operation, the load of a single RX pair of lanes ranges from 137% at 1248 MHz (and thus requires two pairs of lanes) to 67% at 2496 MHz using one lane pair [5]. The inherent flat decay of the common mode PSD also poses some threats to differential LNA input structures. Factors which tend to help in reducing co-existence issues are that the interface may be operated using low voltage swings, in a controlled transmission impedance, and with bus routing that in most handset designs is unlikely to be routed at, or near, the sensitive front-end components such as antennae. RF transceiver pin-to-pin isolation is therefore the main coupling mechanism.

With RFFE being a control interface only, the bus load is often bursty, with only rare instances where higher bus loading might be required. Therefore, the probability of collision in the time domain is lowered. In addition, its relatively low frequency range of operation (32 kHz to 26 MHz) is such that even the lowest cellular frequency bands, such as the LTE 700 MHz bands, are located at the 28th and 22 500th harmonic of the extreme interface rates of 26 MHz and 32 kHz, respectively. This provides plenty of “room” to implement spectrally efficient pulse shaping techniques. Yet, in contrast to DigRF, RFFE voltage swing is higher, and its point-to-multipoint topology can lead to longer PCB traces, some of which may be routed close to sensitive front-end components. Further, since the bus is not designed to be terminated, its impedance is more difficult to control, and any reflections will tend to impair the decay of spectral lobes. Because PSD decays slowly at high harmonic numbers, and pin-to-pin isolation decreases as operating frequency increases, RFFE co-existence issues may dominate above 1 GHz of operation. The most sensitive victim of this may be GPS, which requires an aggressor noise PSD below −180 dBm/Hz to keep desense under 0.2 dB.
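The harmonic orders quoted above follow from a simple ceiling division. The sketch below assumes a reference point of 720 MHz within the 700 MHz range; that value is not given in the text and is chosen here only because it reproduces the quoted harmonic numbers. The function name is hypothetical.

```python
# Harmonic order of an RFFE clock that first reaches a given RF frequency.
# Frequencies are integers in Hz so the ceiling division is exact.

def first_harmonic_at_or_above(target_hz: int, clock_hz: int) -> int:
    """Smallest n such that n * clock_hz >= target_hz."""
    return -(-target_hz // clock_hz)  # integer ceiling division


TARGET_HZ = 720_000_000  # assumed reference point in the LTE 700 MHz range

n_fast = first_harmonic_at_or_above(TARGET_HZ, 26_000_000)  # fastest RFFE clock
n_slow = first_harmonic_at_or_above(TARGET_HZ, 32_000)      # slowest RFFE clock
print(n_fast, n_slow)
```

With such high harmonic orders, even modest edge shaping leaves very little clock energy at cellular frequencies, which is the “room” referred to above.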

To help both chip set vendors and handset makers foresee co-existence issues in the early phase of product development, and to prepare for them, both DigRF and RFFE working groups anticipated the need to include a set of EMI mitigation tools. These unique features provide a rich, efficient, and yet relatively simple-to-implement set of techniques for reducing the associated impacts in the frequency and the power domains.

- Pulse shaping/slew rate control: Perhaps the most efficient of all EMI tools made available to handset makers. This feature allows shaping of the rising and falling edges of each transmitted bit so as to reduce the power spectral density (PSD) of the digital bit stream. This is available in both DigRF [5] and in RFFE.

- Amplitude control: A simple and yet efficient way of reducing the aggressor PSD. This option is available in DigRF, and also to a certain extent in RFFE, where 1.2 V is also specified in addition to 1.8 V.

- Clock dithering: A useful feature in cases when repetitive and frequent transmissions of a given pulse pattern generate discrete spurs colliding with a cellular victim. This can be the case, for example, for the DigRF training sequence transmitted at the beginning of each burst to ease clock recovery. This tool is available as a part of the DigRF specification. It is also inherent in RFFE, where the master clock may be dithered between messages, since the clock used for control information need not be related to RF data. Also in RFFE, the timing between messages may be randomized, offering some ability to affect the “signature” for both clock and data streams.

- Alternate clock frequency: This feature is available in both interfaces and can be used either to place the victim in the vicinity of a spectral “zero” or “null,” or to place the victim under a higher order spectral lobe. For example, in DigRF v4, if GPS RX is desensitized when the bus is operated at 1248 MHz, the alternate clock rate of 1456 MHz places the GPS RX in close proximity of the first DigRF spectral null. In this instance, the aggressor PSD is significantly reduced with minimal impact on DigRF performance. In RFFE the extent of any harmonics is relatively narrow, and a slight change in the fundamental may often be utilized to move a resultant spike away from a specific band frequency of interest.
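The benefit of the alternate clock frequency can be estimated with a textbook model. The sketch below assumes an ideal random NRZ bit stream with rectangular pulses and equal amplitude at both rates, whose PSD follows T·sinc²(fT) with nulls at multiples of the bit rate. It ignores slew rate control, common-mode content, and coupling paths, so it is only a first-order indication and not the method of the DigRF specification. The 1456 MHz rate is the alternate frequency listed in Table 14.1; the function name is hypothetical.

```python
import math

GPS_L1_MHZ = 1575.42  # GPS L1 carrier frequency


def nrz_psd_db(f_mhz: float, bit_rate_mbps: float) -> float:
    """Relative PSD in dB of an ideal random NRZ stream: T * sinc^2(f * T)."""
    t_bit = 1.0 / bit_rate_mbps           # bit period (arbitrary consistent units)
    x = f_mhz * t_bit                     # frequency normalized to the bit rate
    sinc = 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)
    power = t_bit * sinc * sinc
    return 10.0 * math.log10(power) if power > 0 else float("-inf")


psd_1248 = nrz_psd_db(GPS_L1_MHZ, 1248.0)  # default rate
psd_1456 = nrz_psd_db(GPS_L1_MHZ, 1456.0)  # alternate rate, null closer to GPS
improvement_db = psd_1248 - psd_1456
print(f"Aggressor PSD at GPS L1 drops by about {improvement_db:.1f} dB")
```

In this idealized model the aggressor PSD at GPS L1 drops by roughly 8 to 9 dB when moving from 1248 MHz to 1456 MHz, because the first spectral null moves from 1248 MHz to 1456 MHz, closer to the GPS carrier.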

With the increasing need for more reconfigurable RF subsystems, and the mounting complexity in RF-FEs, these interfaces offer handset designers effective means for the solution of complex challenges. The ability to tailor them to specific needs provides component suppliers with an efficient method to achieve faster design cycles and to foster reuse. And, because EMI effects have been taken into consideration from the outset, these interfaces provide features well-suited to the goals and situations of all those involved in UE design. The DigRF interface will be adding 4992 MHz operation as a means to extend applicability to the future higher bandwidth requirements of carrier aggregation. And work is underway on RFFE to further enhance interface throughput, which will help to improve critical timing, and provide even more bandwidth for increasingly complex RF-FEs. Thus both of these interfaces offer continued promise for the future of handset design through cost minimization and complexity reduction, while also maximizing business opportunities.

14.1.3.5 Co-Existence Challenges

The principles of co-existence have been introduced in Section 14.1.2, where due to the full duplex nature of WCDMA, a UE located at cell edge may be desensitized due to its own transmitter being operated at maximum output power. In this relatively simple example, solutions range from the use of external SAW filters, to novel RF TRX architectures, and in the most challenging cases to 3GPP relaxations.

Co-existence issues become significantly more complex when multiple radios start to interact with each other, or when a radio is operated in the presence of internal jammers that are not enabled during conformance tests. For example, WCDMA receiver sensitivity may be impacted by USB high speed harmonics during a UE to external PC media transfer. In SoCs, aggressors range from simple clock harmonics, to noisy high speed digital buses associated with DSP, CPUs, camera and display interfaces, high current rating DC–DC converters, USB and HDMI ports. As for victims, nearly all RF subsystems are susceptible to EMI. Further complexity arises from the fact that RF transmitter chains are not exempt from becoming victims of these digital aggressors. Desensitization occurs when aggressors collide with victims in time, frequency, and power domains. In SoCs, both conducted and electromagnetic coupling may occur. The WCDMA UE RF self-desense is one example of conducted coupling. Coupling via power supply rails and/or ground currents is another. Electromagnetic coupling may occur via pin-to-pin interactions, bonding wires, PCB track-to-track, and even antenna-to-antenna coupling. Problems are exacerbated when collisions occur between the cellular and the connectivity radios. For example, the third harmonics of band 5 fall into WLAN 2.4 GHz, while the WLAN 5 GHz receiver might become victim of either band 2, or band 4 third harmonics, or band 5 seventh harmonics. The number of scenarios may become so great that co-existence studies often have to be performed using a multidimensional systems analysis. This topic constitutes an excellent playing field for innovative mitigation techniques, which commonly fall into two categories:

- Improve victim's immunity by increasing isolation in all possible domains: floor plan optimization, careful PCB layout, the use of RF and power supply filters as well as RF shielding. Victims may also be protected by ensuring non-concurrent operations in time.

- Reduce the aggressor signal level. Slew rate and voltage swing control are two of the most efficient techniques to reduce the level of interference at RF. Slew rate control consists of shaping the rising and falling edges of digital signals. This results in an equivalent low-pass filtering of the digital signal high-frequency content, and therefore helps to reduce the aggressor power spectral density in the victim's bands. For example, DigRF v4 line drivers are equipped with slew rate control as a tool to reduce EMI. Frequency evasion is another commonly used technique [25]. For example, if the harmonic of a digital clock is identified as a source of desensitization, it is tempting to slightly alter the clock frequency only when the victim is tuned to the blocked channel. Frequency avoidance can be implemented by either simple frequency offset, or by dithering (e.g., DigRF v4, Section 14.1.3.4), frequency hopping, or even by using direct sequence spreading of the clock.
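Frequency evasion by simple frequency offset can be sketched as a search for the smallest clock shift that moves every harmonic out of the victim channel. The example below is a minimal illustration under stated assumptions: the 26 MHz clock, the 3.84 MHz wide victim channel centered on 2132 MHz, the 1 kHz step, and the function names are all hypothetical and are not taken from the text or from any specification.

```python
from typing import List, Optional


def colliding_harmonics(clock_hz: int, ch_lo_hz: int, ch_hi_hz: int) -> List[int]:
    """Harmonic orders n for which n * clock_hz lies inside [ch_lo_hz, ch_hi_hz]."""
    n_min = -(-ch_lo_hz // clock_hz)  # ceiling division
    n_max = ch_hi_hz // clock_hz      # floor division
    return list(range(n_min, n_max + 1))


def evade(clock_hz: int, ch_lo_hz: int, ch_hi_hz: int,
          step_hz: int = 1_000, max_offset_hz: int = 100_000) -> Optional[int]:
    """Return the clock closest to clock_hz (upward tried first) with no harmonic in the channel."""
    for k in range(max_offset_hz // step_hz + 1):
        for sign in (1, -1):
            candidate = clock_hz + sign * k * step_hz
            if not colliding_harmonics(candidate, ch_lo_hz, ch_hi_hz):
                return candidate
    return None  # no acceptable offset within the search range


# Hypothetical victim: 3.84 MHz channel centered on 2132 MHz (82nd harmonic of 26 MHz).
CH_LO, CH_HI = 2_130_080_000, 2_133_920_000
blocked = colliding_harmonics(26_000_000, CH_LO, CH_HI)
new_clock = evade(26_000_000, CH_LO, CH_HI)
print(blocked, new_clock)
```

Because the offset is multiplied by the harmonic order, a shift of only a few tens of kHz at the fundamental is enough in this hypothetical case to clear the channel, which is why the offset need only be applied when the victim is tuned to the blocked channel.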

The well-documented example of Band 4 (B4) – Band 17 (B17) carrier aggregation is a good illustration of co-existence issues within the cellular RF subsystem. B17 transmitter chain third harmonics (H3) can fall entirely within the B4 receiver frequency band. Nearly all components in the RF-FE generate B17 H3, with the PA being, of course, the dominant source. It has been shown in [26] that the sole contribution of the PA would cause 36 to 43 dB B4 RX desensitization, therefore calling for the insertion of a harmonic rejection filter. This might not solve the problem entirely since other sources of leakage, such as PCB coupling and TRX pin-to-pin isolation, may dominate system performance [27]. Given reasonable PCB isolation and typical component contributions, B4 RX desense should not exceed 7 to 9 dB for 10 MHz and 5 MHz bandwidth operation respectively [28].
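The harmonic collisions mentioned in this section, namely B17 H3 into the B4 receiver and the cellular harmonics falling into the WLAN bands, can be checked with a short frequency-domain overlap test. The sketch below uses the 3GPP uplink and downlink band edges in MHz. Treating the WLAN 5 GHz range as a single 5150 to 5850 MHz span is a simplifying assumption, and the function name is hypothetical. It says nothing about the power or time domains, which also have to collide for desensitization to occur.

```python
from typing import Optional, Tuple

Band = Tuple[float, float]  # (lower edge, upper edge) in MHz

UL: dict = {"B2": (1850, 1910), "B4": (1710, 1755), "B5": (824, 849), "B17": (704, 716)}
B4_RX: Band = (2110, 2155)
WLAN_2G4: Band = (2400, 2483.5)
WLAN_5G: Band = (5150, 5850)  # assumption: one contiguous span


def harmonic_overlap(tx: Band, order: int, victim: Band) -> Optional[Band]:
    """Frequency range where the given TX harmonic overlaps the victim band, if any."""
    lo = max(order * tx[0], victim[0])
    hi = min(order * tx[1], victim[1])
    return (lo, hi) if lo < hi else None


cases = [
    ("B17 H3 -> B4 RX", harmonic_overlap(UL["B17"], 3, B4_RX)),
    ("B5 H3 -> WLAN 2.4 GHz", harmonic_overlap(UL["B5"], 3, WLAN_2G4)),
    ("B2 H3 -> WLAN 5 GHz", harmonic_overlap(UL["B2"], 3, WLAN_5G)),
    ("B4 H3 -> WLAN 5 GHz", harmonic_overlap(UL["B4"], 3, WLAN_5G)),
    ("B5 H7 -> WLAN 5 GHz", harmonic_overlap(UL["B5"], 7, WLAN_5G)),
]
for name, overlap in cases:
    print(name, overlap)
```

The B17 third harmonic spans 2112 to 2148 MHz, which lies wholly inside the B4 downlink band, so no choice of B17 uplink channel avoids the B4 receiver in the frequency domain.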

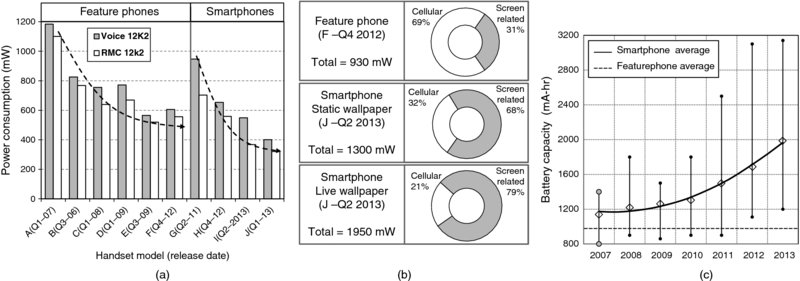

14.2 Power Consumption Reduction in Terminals

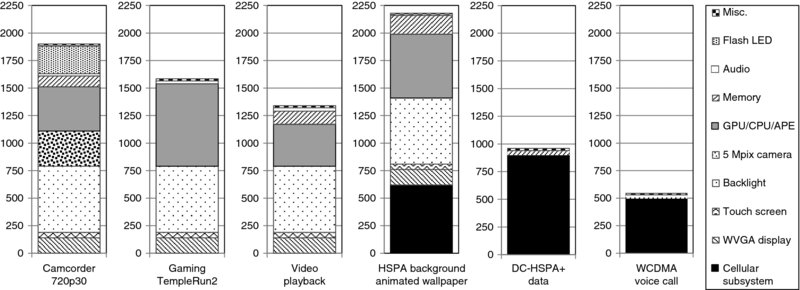

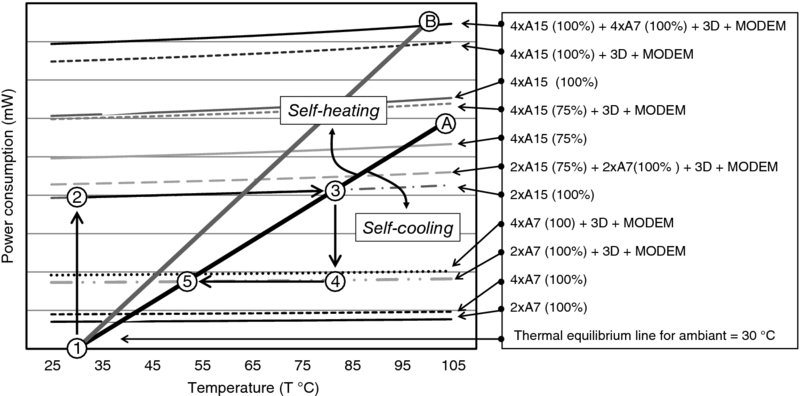

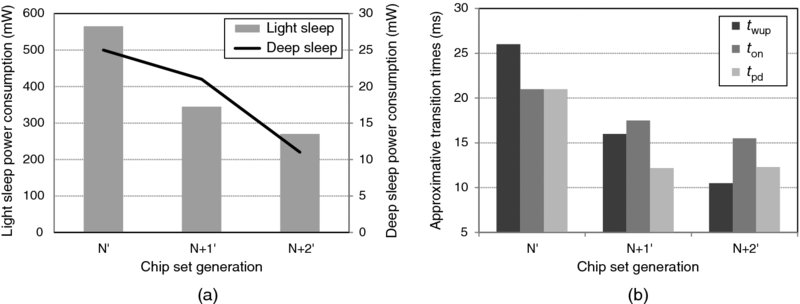

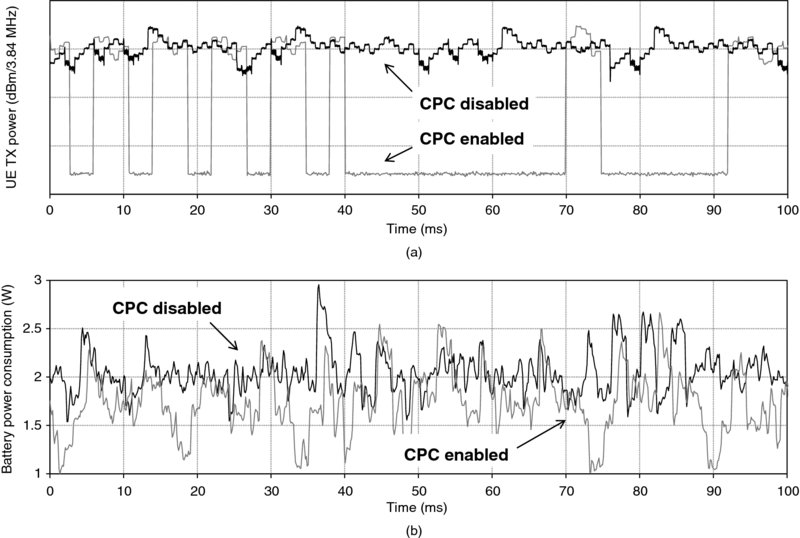

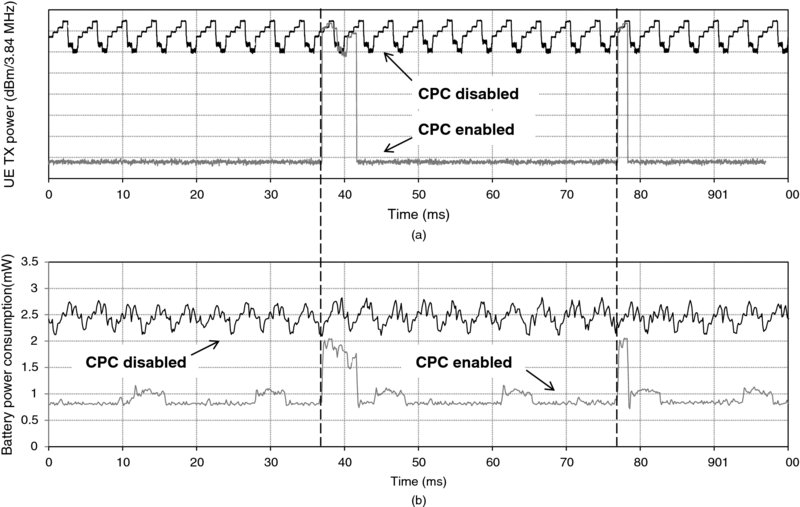

14.2.1 Smartphone Power Consumption