7

Cognitive Computing

T Gunasekhar* and Marella Surya Teja

Department of CSE, K L E F, Guntur, Andhra Pradesh, India

Abstract

Advances in network-enabled sensors and Artificial Intelligence (AI) have given rise to a range of human-centered smart technologies that offer high-quality services, for example, efficient collaboration, autonomous driving, and smart healthcare. Considering cognitive computing a crucial technology for building these smart systems, this paper proposes human-centered cognitive computing supported by cloud computing. First, we give a complete description and analysis of the evolution of cognitive computing systems, including their progression from data discovery and big data to the cognitive cloud. Next, we propose an architecture for cognitive computing built on three basic technologies: IoT (networking), big data analytics (which enables technologies such as reinforcement learning, deep learning, and image understanding), and cloud computing. Finally, we describe applications of human-centered cognitive computing, including chatbot creation, expressive communication systems such as sentiment analysis and face/emotion detection, medical cognitive techniques, and risk assessment.

Keywords: Cognitive computing, artificial intelligence, internet of things, cloud computing, reinforcement learning, deep learning, sentiment analysis, natural language processing

7.1 Introduction

Recently, cognitive computing has attracted significant attention in industry and research, driven by rapid developments in information technology, artificial intelligence, and big data. Cognition is the mental process of creating knowledge and understanding by building a conceptual model of the world. In theory, cognitive computing has been defined in several ways by different researchers [1].

Cognitive computing tries to create machines with reasoning abilities comparable to those of the human brain. It is the development of self-learning techniques that use data mining, natural language processing, and pattern recognition to mirror the way the human brain functions. It draws on strategies from biology, psychology, information theory, physics, mathematics, statistics, and signal processing. It is a centralized, universal mechanism motivated by cognitive informatics, analytical intelligence, and mental abilities. It includes the information processing of the biological nervous system (the processing of a human brain), decision making, reasoning, and natural language processing in real time to tackle real-world problems. It can also be defined as a way of summarizing detailed behavior, goals, plans, limitations, and techniques at a high level.

In industry, such a cognitive system could learn from records without supervision and perform natural language processing. However, modern cognitive computing is still far from realizing human-like intelligence, such as emotional sentiment and intellect. Without a continuous supply and upkeep of big data, it is difficult to discover the knowledge that drives the growth of machine learning. Most prevailing AI-based industrial applications are built on neural networks and deep learning but do not yet extend to realistic settings. It is therefore considered important for AI to overcome the constraints of data. Human-like cognitive computing should consist of both hardware facilities and an information storage space. Using that space, it can interact with machine and human intelligence at a deep algorithmic level, reduce the dependency of machine learning on data, and explore human needs, enabling deep human cognition. Users are thus provided with a more intelligent cognitive system [2].

To understand cognitive computing better, it is necessary to define its relevant areas and understand the associated terms. According to its various definitions, cognitive computing is an overall combination of cognitive sciences, cognitive informatics, cognitive psychology, knowledge, reasoning, the nervous system, the human brain, biological processing, decision making, neurobiology, Artificial Intelligence (AI), behavioural science, and the manipulation of large amounts of information such as big data. AI is concerned with reasoning and its fundamental processes of thinking, beliefs, and emotions, with the aim of creating an intelligent system that can behave and understand rationally, just as humans do. Reasoning is a succession of steps that helps in reaching conclusions or knowledge. Knowledge is the interpretation of a problem or topic attained through study or experience. Extracting such knowledge from a large amount of data to support decision making is the role of big data, where decision making is defined as the process of analyzing and selecting the best alternative from a given set of choices, based on some standard or conditions, to achieve an objective. The nervous system is composed of neurons, reacts to physical impulses, and exchanges electrochemical signals between neurons to coordinate the body's reactions. All of these are naturally related to cognitive computing, in which mind and brain are two different things. The mind comprises a set of processes that enable humans to think, understand, and feel, whereas the brain is the organ that gives humans the ability to think, understand, and feel. Therefore, cognitive computing should emphasize simulating the mechanisms of the brain in order to give computers the brain's abilities.

Cognitive computing describes technology systems that combine machine learning, reasoning, natural language processing, speech, vision, and human-computer interaction in a way that mimics the functioning of the human brain and helps to improve human decision making. Cognitive computing applications link data analysis with adaptive page displays to tailor content to a particular type of audience.

The motivation behind cognitive computing is to reproduce human thought processes in a computerized model. Using self-learning algorithms that draw on data mining, pattern recognition, and natural language processing, the computer can mimic the way the human mind works.

7.2 Evolution of Cognitive System

Cognitive computing represents the third era of computing. In the first era (19th century), Charles Babbage, also known as the 'father of the computer', introduced the concept of a programmable computer. Intended for navigational calculations, his machine was designed to tabulate polynomial functions. The second era (around 1950) saw digital programmable computers such as ENIAC and ushered in the age of modern computing and programmable systems [3]. The evolution of cognitive computing started 65 years ago [4], with the test proposed by Alan Turing to validate intelligent behavior in a computing machine and with Shannon's detailed analysis of chess playing as search. John McCarthy, the inventor of Lisp, coined the term Artificial Intelligence in connection with the Dartmouth conference. The first smart programs, such as a checkers-playing program, were developed during the 50s. McCarthy and M. Minsky founded the MIT AI lab. Masterman and colleagues designed semantic nets for machine translation at the University of Cambridge. In the early 60s, Solomonoff laid the mathematical foundations of AI by introducing universal Bayesian methods for prediction and inference. Danny Bobrow, a student at MIT, demonstrated that computers could be programmed to understand natural language well enough to solve algebra word problems correctly. At that time, before the first database systems had even appeared, machine translation was receiving negative reports because of the complexity of the task and the inadequacy of the available technology. In the late 60s, J. Moses demonstrated the power of symbolic reasoning, and the first good chess-playing program appeared.

Then, around the start of the 70s, Minsky and Papert published Perceptrons, which exposed the limitations of basic neural network systems. At about the same time, Robinson and Walker established an influential NLP group. The first machine learning algorithms were introduced during the 80s. By then, probability and decision theory had been brought into Artificial Intelligence, and neural networks came to be widely adopted thanks to backpropagation algorithms. A neural network is a system of hardware and software modeled on the human central nervous system, used to estimate functions that depend on a large number of unknown inputs [5].

Then, in the early 90s, reinforcement learning was used successfully to create strong backgammon software. The 90s saw considerable advances in all areas of AI, including the combination of semantic classification and probabilistic parsing.

In the 2000s, question answering (QA) systems gained importance. Watson is an elegant piece of work by IBM that further developed and enhanced Piquant (Practical Intelligent Question Answering Technology). Figure 7.1 depicts the evolution of cognitive computing over the years. From solving checkers effectively to advances in robotics, each step has moved computers toward the ability to identify images and videos with great accuracy. This brings us to cognitive computing, which relies on deep learning algorithms and big data analytics to provide insights. In this sense, the brain of a cognitive system is the neural network, the basic idea behind deep learning.

Figure 7.1 Evolution of cognitive computing [6].

7.3 Cognitive Computing Architecture

A cognitive architecture is a hypothesis inspired by the human mind, in which systems operate together with the necessary resources to achieve intelligent behavior in any complex environment. Cognitive architecture [7] focuses on developing both artificial intelligence and inherent intelligence. It should be able to incorporate intelligence and capabilities gained from interactions, and to represent entities from both short-term and long-term memory concerning the assumptions, motives, ideas, and knowledge of the system. It should clearly define its functional methods in terms of the operations available in the system, which include mechanisms of learning and performance. The assumptions underlying a cognitive architecture can change over time, just as the human mind does. A cognitive architecture helps identify the infrastructure essential for an intelligent system. Some cognitive architectures are based on mind-computer similarity principles, while others are based on general principles. An ideal cognitive architecture should be able to improve its decision-making ability by learning. It should be capable of developing plans and solving problems so that intelligent systems can achieve their intended purposes. Traditional AI is designed to learn by operating on rules, while cognitive architecture uses bio-inspired methods, particularly for interaction-based learning.

Researchers at IBM are developing a new computer architecture that can process data efficiently for AI workloads [7, 8]. The researchers point out that traditional computers were built on the Von Neumann architecture, which has a central processor that executes arithmetic and logic, storage, a memory unit, and input-output devices. However, recent industry requirements have made heterogeneous computing architectures essential, rather than reliance on a homogeneous architecture, leading to intensified research in neuroscience and applied materials. AI workloads call for a new heterogeneous architecture, which in turn requires changes to existing computing architectures. Two important requirements of AI workloads have sparked interest in brain-inspired computer architecture:

Memory: The main challenge is that multi-core processors have reached their performance and efficiency limits. AI workloads depend on processing large amounts of data and need faster memory access. Traditional processors have a multi-level cache architecture, which is not well suited to AI.

Parallel Processing: Architectures for AI workloads have to be designed so that they can execute and compute in parallel. Such architectures need parallel computing technologies that can run AI functions successfully over these large amounts of data.
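
As a rough illustration of the parallel processing requirement, the following Python sketch splits a dot product (the basic operation inside a neural network layer) into chunks, computes partial results concurrently, and then combines them. The function names are hypothetical, and threads are used only to keep the sketch portable; in CPython, a real CPU-bound workload would more likely use separate processes or a hardware accelerator.

```python
from concurrent.futures import ThreadPoolExecutor


def chunked(seq, n_chunks):
    """Split seq into at most n_chunks contiguous slices of near-equal size."""
    size = max(1, -(-len(seq) // n_chunks))  # ceiling division
    return [seq[i:i + size] for i in range(0, len(seq), size)]


def partial_dot(pair):
    """Dot product of one chunk of weights with the matching chunk of inputs."""
    weights, inputs = pair
    return sum(w * x for w, x in zip(weights, inputs))


def parallel_dot(weights, inputs, workers=4):
    """Map partial dot products over chunks, then reduce them by summation.

    Assumes weights and inputs have the same length.
    """
    pairs = list(zip(chunked(weights, workers), chunked(inputs, workers)))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(partial_dot, pairs))
```

The split-compute-combine pattern is the point here, not the speed of this particular code: the same structure applies when the chunks are dispatched to many cores or devices.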

This has led to growing work on cognitive architecture modeling that simulates the brain, on cognitive architecture designs in which the system learns in a specific environment, and on applying both to products. Such cognitive architecture builds on three features. The first is the brain's combination of memory and processing, imitated by memory devices that execute computational tasks in place. The second is the structure of the brain's synaptic network, which can be implemented as arrays of devices to speed up the training of deep neural networks. The last is the stochastic nature of neurons and synapses, which provides a powerful computational substrate for neural networks. These systems are expected to be more efficient than conventional computers. Researchers found that the brain-inspired memory approach performs unsupervised learning 200× faster than conventional computing systems.

Figure 7.2 shows the system architecture of cognitive computing. With the help of underlying technologies such as 5G networks, robotics, and deep learning, together with IoT/cloud infrastructure, tasks involving human-machine interaction, voice recognition, and computer vision will be carried out at large scale. The upper-layer applications supported include health monitoring, cognitive healthcare, smart city, smart transportation, and scientific experiments. Each layer in the system architecture comes with corresponding technological challenges and system requirements. The relationship between cognitive computing and each layer is therefore considered and examined in detail in this paper [9].

7.3.1 Cognitive Computing and Internet of Things

The Internet of Things (IoT) and cognitive computing are ideas that have been developing since the 1950s under differing terminology. Enabled by advances in technology, such as lower-cost, lower-power, smaller microchips and microcontrollers, cellular/wireless chips, and increasingly widespread machine-to-machine communication, IoT technologies are now much more widely deployed. Cognitive IoT is the next stage in improving the precision and potential of complex sensor-operated systems by learning and by embedding more human awareness into highly connected devices and settings. Cognitive IoT combines the data generated by connected devices, and the actions they perform, with cognitive computing technologies. Cognition implies thinking, which will take the IoT to its most advanced level, making it more intelligent and more interactive. Cognitive IoT is thus regarded as a combination of the modern IoT with cognitive and cooperative mechanisms aimed at increasing overall performance and achieving intelligence.

Figure 7.2 The system architecture of cognitive computing.

The IoT is an important aspect of AI-enabled cognitive computing, which enhances two contrasting and critical outputs of data-centered processes: machine-automated activity and human decision making. Including the IoT and its data sources in cognitive computing allows automation to improve decision making and brings out the best of both. To automate data modeling, machine learning and neural networks are the best tools: they can produce targeted algorithms and refine the data control and management aspects of integration and transformation, speeding up the initial data ingestion for predictive analytics. The ubiquity of the IoT is to be expected given its potential to produce real-time action from data. IoT applications supported by cognitive computing automation achieve the predictive-analytics precision needed for the best decision making.

It is clear from the description above that cognitive computing depends on data. The field of communications emphasizes information processing, while the field of computing emphasizes knowledge consumption. In real cognitive computing applications, information is conveyed mainly by data, both structured and unstructured [10]. Cognition embodies three key elements: interpreting, reasoning, and learning. In computing terms, interpreting is the ability to take in large amounts of structured and unstructured data and derive meaning from it, for example by building a model of concepts, entities (elements), and the relationships among them. Reasoning is the use of that model to reach conclusions and solve similar problems without having specific solutions programmed in advance. Learning is the ability to acquire new knowledge automatically from information, and it is a crucial component of understanding.

The IoT collects diverse, valuable real-time information about objects of interest in the physical world and forms a huge network that interconnects large sensing systems, fusing the data world with the physical world. Some advanced distributed information fusion technologies can also be employed to improve the accuracy of the sensed large-scale network information [11]. First, the IoT obtains knowledge about tracked objects through perception technologies such as RFID and wireless sensors, satellite positioning, and WiFi and fingerprint tracking. Second, it disseminates the relevant information within the network using various efficient means of communication, and shares and integrates it. Finally, it performs knowledge collection and retrieval using advanced software technology such as cloud storage, deep learning, and data mining, to realize intelligent decision making and control within the information fusion framework [12].
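
The three stages just described (perception, dissemination, and decision making) can be sketched as a small Python pipeline. This is a minimal illustration under stated assumptions: the sensor identifiers, the tracked objects, the temperature readings, and the 8 °C limit are all hypothetical, and averaging stands in for the far richer information fusion techniques the text refers to.

```python
from collections import defaultdict
from statistics import mean


def perceive():
    """Stage 1: hypothetical readings as (sensor_id, object_id, temperature_c)."""
    return [
        ("rfid-1", "pallet-A", 4.0),
        ("wsn-7", "pallet-A", 6.0),
        ("wsn-9", "pallet-B", 12.0),
        ("rfid-2", "pallet-B", 14.0),
    ]


def disseminate(readings):
    """Stage 2: group readings by tracked object to form a shared network view."""
    shared = defaultdict(list)
    for _sensor_id, object_id, value in readings:
        shared[object_id].append(value)
    return dict(shared)


def decide(shared, limit_c=8.0):
    """Stage 3: fuse each object's readings by averaging, then flag overheating."""
    return {
        obj: ("alert" if mean(values) > limit_c else "ok")
        for obj, values in shared.items()
    }


decisions = decide(disseminate(perceive()))
```

Fusing two sensors per object before deciding is what lets the final stage act on a more reliable estimate than any single reading would give.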

Cognitive computing is essential to the IoT for several important reasons. The first is the rate and scale of data generation. Learning makes systems and processes more efficient by combining sensor data about a system with its actions, at a volume that can exceed the human ability to recognize the necessary patterns and insights; machine learning is used to handle this scale. The second reason is the movement of computation into the physical world. As people from various demographics and with different skill levels interact with the IoT, there is a need to move beyond existing machine interfaces that require humans to learn interface concepts in order to communicate with machines. A human-centred interface is needed, in which the system can communicate with humans in natural language. The last reason is the integration of multiple data sources and categories that may provide related information for better understanding and decision making. This requires the ability to understand and handle many kinds of data, such as digital sensor data, textual data, audio, video, and location data, and to perceive the correlations across these data types, for example by linking sensor data with acoustic data.

The IoT handles how information is captured and distributed. The spread and mainstream use of the IoT will produce more and more data, offering valuable sources of knowledge for cognitive computing. In turn, cognitive computing, as a modern computing paradigm, can provide better and more energy-efficient means of interpreting and processing data in the IoT.

7.3.2 Cognitive Computing and Big Data Analysis

In the era of big data, the relentless growth of data volumes and the steady improvement of computing resources are plainly apparent [13]. Compared with the growth of traditional structured data, unstructured data such as social networking and mobile internet data continues to expand exponentially. Structured and unstructured data together form cognitive big data, which can be characterized by the 5Vs: volume (large quantity), velocity (rapid change and high speed), variety, value (meaning-oriented), and veracity. These features create a number of difficulties in collecting and delivering knowledge. Big data modeling and cognitive computing provide powerful approaches to these problems. We present the connections and the differences between big data processing and cognitive computing.

However, the biggest problem with big data is that there is not enough skill available to manage and exploit such large amounts of data. The ever-rising demand for this skill cannot be met by the existing supply of data scientists and analysts. Many advanced data platforms are available, but they are of little use given the shortage of experienced professionals who can manage and operate them. One solution is to train both people and computers so that less experienced staff can handle the complexities of the data. This approach can succeed with the help of improvements in cognitive computing.

Cognitive computing brings a high level of flexibility to analytics, and natural language processing makes communication with machines much easier. Less experienced users, who do not know the data languages and processing techniques needed for competent analytical work, can still interact with a data platform and its related programs much as they would interact with another person. This is done by accepting simple commands in natural language: AI-based data platforms interpret ordinary speech, conversation, and requests, translate them into a query language, and then deliver the results in the same form in which the request was received. Such functionality makes it easier for everyone to manage any platform.
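
To make the idea of translating an everyday request into a query language concrete, here is a deliberately small Python sketch. It is a toy under stated assumptions, not how any production platform works: real systems rely on trained NLP models, whereas this example uses one regular expression, and the `readings` table, its column names, and the `device_id` field are hypothetical.

```python
import re

# Hypothetical vocabulary: everyday aggregate words mapped to SQL functions.
AGGREGATES = {"average": "AVG", "maximum": "MAX", "minimum": "MIN", "total": "SUM"}

# Hypothetical columns of an assumed `readings` table; anything else is rejected.
ALLOWED_COLUMNS = {"temperature", "humidity"}

PATTERN = re.compile(
    r"(?:show|what is)\s+(?:the\s+)?(?P<agg>\w+)\s+(?P<column>\w+)"
    r"\s+(?:for|of)\s+device\s+(?P<device>\d+)",
    re.IGNORECASE,
)


def to_query(request):
    """Translate a narrow class of English requests into a parameterized query.

    Returns (sql, params), or None when the request is not understood.
    """
    match = PATTERN.search(request)
    if not match:
        return None
    agg = AGGREGATES.get(match.group("agg").lower())
    column = match.group("column").lower()
    if agg is None or column not in ALLOWED_COLUMNS:
        return None
    sql = f"SELECT {agg}({column}) FROM readings WHERE device_id = ?"
    return sql, (int(match.group("device")),)
```

For example, `to_query("Show the average temperature for device 7")` yields the query text `SELECT AVG(temperature) FROM readings WHERE device_id = ?` with the parameter tuple `(7,)`. Whitelisting the column and passing the device number as a parameter keeps user-supplied words out of the query text.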

Cognitive computing emulates human senses. One connection between big data processing and cognitive computing is the big data thinking of humans. Throughout human life, knowledge accumulates continually. When the amount of information gathered from different interactions is large, the individual in question may embody human big data thinking, which is hierarchical, like deep learning. The first level concerns the improvement of material life and living conditions. The second level concerns the advancement of spiritual values, and the third concerns the meaning of life. The number of people at the top level is the smallest. At present, the thinking simulated by machine intelligence focuses mainly on the first and second levels, addressing people's living standards and emotional state. The corresponding applications are health monitoring, smart healthcare, smart home, smart city, and emotional care [14]. The third level goes deeper: it concerns the meaning of life and offers personalized recommendations for a user's lifelong development, helping the user lead a happy yet more meaningful life. Machines cannot do this at present, and it remains a great challenge for artificial intelligence in the future. When a data set exhibits the features of big data, adopting current machine learning techniques is the most direct way to analyze and process the data [15].

The main point that separates big data analysis from cognitive computing is the "brain-like" approach to data processing. An approach that focuses on the meaning carried by data should be used, so that the machine can reach the third level by grasping the connotation of data and the image information contained in it, and by understanding environmental information much as a human being does.

One difference between big data analysis and cognitive computing is data size. Processing big data is not inherently cognitive computing, whatever the data set. Big data analysis emphasizes mining value and gaining insight from a huge amount of data. Without a very large amount of data, the accuracy and robustness of its predictions cannot be guaranteed. For cognitive computing, by contrast, taking the size of a data set into account does not mean that the approach depends on data scale.

Cognitive science, based on reasoning and intuition like the human brain, aims to address the challenges of fuzziness and uncertainty in biological systems. Cognitive computing thereby realizes different levels of processes, such as cognition, memory, reasoning, thinking, and problem solving. Even when the data size is not large, cognitive computing can still be used to process the data. Consider laypeople and domain experts: given identical data, the meaning obtained by laypeople may differ from that obtained by domain experts. Because their depth of thinking differs, the angle from which they interpret the data may differ as well. Using cognitive computing, a machine can mine more hidden meaning from limited data [16].

Some solutions already exist, with notable examples such as Watson, DeepMind (Google's project), and the Zeroth platform by Qualcomm. Watson is a cognitive computing system with built-in natural language processing (NLP), hypothesis generation and evaluation, and active learning. It is more advanced than the virtual assistants on our mobile devices. In fact, the system works well enough that users can ask questions in plain, ordinary speech, which the system then translates into a data query language.

DeepMind has built an advanced system designed to mimic some of the short-term memory characteristics of the brain. In practice, the system is a neural network that interacts adaptively with an external memory. It stores memories that it can later retrieve and use to understand new information and to perform tasks it was never explicitly programmed to do. These brain-like capabilities let analysts rely on it with information and commands: the program can compare such information with past data queries and respond without any need for continual supervision.

The Zeroth [17] cognitive computing platform is designed around visual and auditory cognitive computing so that it can mirror human-like thinking and actions; that is, a device running the platform could identify objects, read handwriting, recognize people, and understand the overall context of a situation and environment. For example, a device such as a mobile phone could adapt automatically when taking snapshots on a sunny beach or on a hike in moonlight. It could also automatically adjust its microphone settings whenever there is a lot of background noise, to provide satisfactory sound quality. Its ability to reproduce instinctive experiences opens up several opportunities in sentiment analytics. Its ability to interpret scenes, events, and context means that, when supported by the right imaging devices, it could work out how a person is feeling from facial expressions and could recognize levels of stress or anger from the person's voice.

Finally, shifting from cloud-based cognitive platforms to on-device decision making reduces the number of back-and-forth transmissions to the cloud, so devices can improve performance and security while saving power. Advances in cognitive computing, and the embedding of AI, machine learning, and deep learning into big data platforms and tools, will therefore not only help less experienced professionals deal with the complexities of data analytics but also improve the quality of the results. Transferring large amounts of data, information, and work to our machines will allow us to respond rapidly and make decisions in real time using real-time analytics.

7.3.3 Cognitive Computing and Cloud Computing

Cognitive computing is highly resource-intensive: it requires powerful servers, deep technical expertise, and considerable technical agility, and it frequently leads to a high level of technical debt. For instance, building machine learning models not only consumes substantial computing power but also adds technical debt as the learning process keeps growing. For a long time, therefore, such computing was restricted to large enterprises, for example, the Fortune 500. The cloud has entirely reversed this.

The cloud overturned everything. It enables developers and architects to create cognitive models, experiment with solutions, test, debug, and integrate with existing systems without requiring physical infrastructure. Hence, although resource expenses and asset costs are still involved, businesses can flexibly allocate cloud resources for cognitive development and scale down whenever necessary [18].

Traditionally, from a basic ROI perspective, cognitive computing made sense only for large enterprises. They could dedicate considerable time, effort, and investment to research and development, and could afford delays or uncertainties in delivering value. Today, even small and mid-size companies can use the cognitive cloud to apply AI within their everyday IT ecosystem, quickly generating value without infrastructure or vendor dependencies.

Cloud computing virtualizes computing, storage, and bandwidth. This reduces the cost of deploying information infrastructure, encourages industrialization, and facilitates intelligent computing technologies. In addition, the strong computing and storage capability of cloud computing provides cognitive computing with dynamic, flexible, virtual, shared, and efficient computing resource services [19].

In practice, for the huge amounts of data produced by big data analysis on the cloud computing platform, technologies such as machine learning are adopted to perform data mining, and the findings are applied in different areas. Different classes of data correspond to different processing technologies. For example, textual data and image data correspond to natural language processing and machine vision, respectively.

IBM's cognitive services for language and Google's cognitive computing applications both emphasize realizing brain-like cognition and judgment by deploying a cloud service model to provide accurate decision support. Cloud infrastructure and the IoT provide cognitive computing with its software and hardware foundation, while big data analysis offers methods and ways of thinking for discovering and interpreting new opportunities and value in technology [20].

Conventional cloud-based communications provide powerful cloud computing services. However, supporting intensive data processing alone is not enough, particularly when capacity is limited and ultra-low latency is required. Hence, it is essential to propose new AI-enabled heterogeneous networks that include various terminal networks and clouds. Derived from cognitive science and data analytics, cognitive computing can imitate or augment human intelligence. When such cognitive intelligence is integrated with communications, conventional services will be upgraded with higher accuracy and lower latency.

With the assistance of cognitive cloud computing, we can:

- Optimize resource usage: Enterprises no longer need to invest in cognitive-ready infrastructure. The cognitive cloud can be used whenever required and released when idle.

- Access a broader range of skills: Instead of hiring a data scientist or AI modeling expert, enterprises can partner with cognitive cloud vendors at a flexible monthly rate. This is especially valuable for those facing a slow digital transformation.

- Speed up investments: The lengthy planning, design, investment, and set-up period is replaced by a ready-to-deploy solution. Some cloud vendors even offer customizable default AI models.

7.4 Enabling Technologies in Cognitive Computing

7.4.1 Cognitive Computing and Reinforcement Learning

Conventional machine learning approaches can be divided into supervised learning and unsupervised learning. In these approaches, machines train models on data that are usually in a fixed format, and complete tasks such as regression, classification, and clustering. The information that machines can access is nevertheless limited. It is hard for machines to learn in nonlinear cases because they can only make predictions according to the data they have received. The label for the same data may also differ under different circumstances, which means that the usability of machine-learned information differs among users. Conventional supervised and unsupervised learning rely on closed training with input data. These conventional learning methods cannot meet the demands of continuing improvement in machine intelligence. Reinforcement learning has therefore become a popular research branch in the field of machine learning.

Learning about actions and outcomes fundamentally shapes social cognition and behavior. For instance, to help others, we need to know how our choices reward or avoid harming another person. Before we decide what to choose for ourselves, we can engage in observational learning by watching the positive or negative things that happen to others, and we can infer others’ mental states by tracking their actions and outcomes over time. One of the most significant influences on psychology, neuroscience, and economics has come from associative or reinforcement learning theory, which precisely and mathematically describes how decisions are associated with outcomes over time. Researchers proposed a learning model that describes how learning occurs via a prediction error, the difference between what we expect to happen and what actually happens. This error-correction learning process can also be described mathematically.
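
The error-correction idea can be sketched in a few lines. This is a minimal illustration of a generic delta-rule update (the expectation moves toward the outcome by a fraction of the prediction error), not the specific model the cited researchers proposed. The function names, the learning rate, and the reward sequence are assumptions chosen for illustration.

```python
def update_expectation(expected, outcome, learning_rate=0.1):
    """One error-correction step: move the expectation toward the outcome."""
    # Prediction error: what actually happened minus what was expected.
    prediction_error = outcome - expected
    return expected + learning_rate * prediction_error, prediction_error


def learn(outcomes, learning_rate=0.1, initial=0.0):
    """Apply the update once per observed outcome and return the trajectory."""
    expected = initial
    history = []
    for outcome in outcomes:
        expected, error = update_expectation(expected, outcome, learning_rate)
        history.append((expected, error))
    return history


if __name__ == "__main__":
    # Hypothetical scenario: an action is rewarded (1.0) on every trial.
    trajectory = learn([1.0] * 5, learning_rate=0.5)
    for trial, (value, error) in enumerate(trajectory, start=1):
        print(f"trial {trial}: expectation={value:.3f}, prediction error={error:.3f}")
```

As the expectation approaches the outcome, the prediction error shrinks, so later trials change the expectation less than early ones.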

In social settings, these prediction errors can be social in nature. Examples of such social prediction errors are the expectation that my action will help someone versus the outcome that it did or did not, or my expectation that I will be liked by another person versus the outcome that I was or was not. While brain regions classically associated with reinforcement learning, such as the ventromedial prefrontal cortex and ventral striatum, have been linked to processing both social and non-social prediction errors, there may also be social prediction error signals in regions somewhat specialized for social processing. A broader discussion of the distinction between brain regions coding social and non-social prediction errors is covered in several other recent reviews.

Reinforcement learning is concerned with taking suitable actions to maximize reward in a given situation. Various software systems and machines use it to find the best possible behavior or path to take in a given situation. Consider, for example, how an infant learns to talk. When a child learns a word, an adult will usually say the word repeatedly and point to the thing it denotes or perform the action it denotes. If the child’s understanding is wrong because of a mistaken judgment, the adult corrects it immediately. When the child gets it right, the adult gives a reward. The surrounding environment is likewise a major factor in the human learning process. Reinforcement learning follows this model: it learns from the environment and reinforces behavior. The reward takes various forms: an action is rewarded when it benefits the objective and penalized when it does the opposite. Consequently, the choice at each step is not necessarily the optimal one, but it must help the machine obtain rewards.

Take AlphaGo [21] as an example. After absorbing a large number of games of Go through deep learning, it plays Go against itself through reinforcement learning. During the self-learning stage, each move is not necessarily optimal, but it is the move most likely to lead to the final win according to global planning. In this process, the machine does not depend only on past experience but also tries new paths to maximize the target reward. Just as in learning to draw, improvisation is added once the basic skills are mastered. Data are generated in the course of the machine’s attempts; the final objective is not regression, classification, or clustering but the maximum reward. For this reason, both successful and unsuccessful attempts are valuable to the machine, which learns from the experience of previous attempts at each subsequent step.
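
The balance between relying on past experience and trying new paths can be illustrated with a toy example. The sketch below is an epsilon-greedy multi-armed bandit, a far simpler setting than AlphaGo and not the method it uses. The reward probabilities, the value of `epsilon`, and all names are hypothetical.

```python
import random


def run_bandit(reward_probs, steps=5000, epsilon=0.1, seed=0):
    """Epsilon-greedy learning: mostly exploit the best-known action, sometimes explore."""
    rng = random.Random(seed)
    n_arms = len(reward_probs)
    estimates = [0.0] * n_arms   # running estimate of each action's average reward
    counts = [0] * n_arms        # how often each action was tried
    total_reward = 0.0
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)                            # explore a random action
        else:
            arm = max(range(n_arms), key=lambda a: estimates[a])   # exploit current knowledge
        reward = 1.0 if rng.random() < reward_probs[arm] else 0.0
        counts[arm] += 1
        # Incremental mean: both successes and failures update the estimate.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
        total_reward += reward
    return estimates, counts, total_reward


if __name__ == "__main__":
    estimates, counts, total = run_bandit([0.2, 0.5, 0.8])
    print("estimated rewards:", [round(e, 2) for e in estimates])
    print("times each action was chosen:", counts)
    print("total reward:", total)
```

Individual choices are not always optimal, since exploration sometimes picks a worse action, but exploration is what lets the learner discover the action with the highest long-run reward.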

Yet if a machine interacts only with itself, its intelligence is unsatisfactory. A learning system that pays no attention to external conditions is not a good cognitive system. Therefore, a cognitive system should interact with humans directly. If a person were specifically assigned to interact with a machine, however, it would take a great deal of time and manpower. Fortunately, crowd-sourcing can make machine-human interaction natural.

One typical case is the game Foldit. In this game, a target protein is given, and the players assemble it from various amino acids until the whole protein is pieced together. The players volunteer to take part in the amino acid assembly process. Moreover, if there are enough players, the collective intelligence of this crowd of non-professional players will surpass that of a few professionals. This approach can be used in specific application areas to improve machine intelligence by encouraging users to interact implicitly with the system through customized cognitive computing applications. The diverse data that crowd-sourcing users generate unintentionally also reduces the reliance of cognitive learning on data and at the same time offers a different kind of data processing method.
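
One way to see why a large enough crowd can outperform a few experts is a simple majority-vote model. The sketch below rests on a strong assumption that is not taken from Foldit: every participant answers a yes/no question independently and is correct with the same probability. The accuracy figures are hypothetical.

```python
from math import comb


def majority_accuracy(p_correct, n_voters):
    """Probability that a strict majority of n independent voters is correct."""
    if n_voters % 2 == 0:
        raise ValueError("use an odd number of voters to avoid ties")
    needed = n_voters // 2 + 1
    # Binomial tail: sum the probabilities of every outcome with a correct majority.
    return sum(
        comb(n_voters, k) * p_correct**k * (1 - p_correct) ** (n_voters - k)
        for k in range(needed, n_voters + 1)
    )


if __name__ == "__main__":
    expert = 0.9  # hypothetical accuracy of a single professional
    for crowd_size in (1, 11, 101):
        crowd = majority_accuracy(0.6, crowd_size)  # each amateur is right 60% of the time
        print(f"crowd of {crowd_size}: {crowd:.3f} (single expert: {expert})")
```

Under these assumptions, a small crowd of modestly accurate players falls short of the expert, while a sufficiently large one exceeds it. Real crowds are rarely independent, so the model only indicates the direction of the effect.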

Significantly, the utility of reinforcement learning (RL) models has been supported by their neural plausibility: the discovery that the phasic activity of dopamine neurons in the midbrain encodes a prediction error. This model has also changed classical neuroimaging analysis techniques. Model-based fMRI may help connect different levels of explanation, from the cognitive and behavioral to the neural. Studies in social neuroscience have started to apply these models to examine how and whether quantities predicted by reinforcement learning are represented in the brain during social situations.

7.4.2 Cognitive Computing and Deep Learning

The technological revolution, computer integration, and the advances in the Internet that followed the industrial revolution have affected all parts of our everyday lives. In addition, digital devices have helped to shape our societies. This has come about because of the constant presence of digital information and a change in the way people think and act.

Moreover, digital integration has driven the development of many computing disciplines that have proven effective. One notable area that has changed the perception of computer behavior and of how machines work is the discipline of deep learning, a subset of artificial intelligence. Deep learning makes possible multi-layered neural networks that can be used to tune machines to accomplish desired tasks. Indeed, deep learning has been viewed as a state-of-the-art approach that can deliver many accurate inferences, which has also changed the way intelligent decisions are made by computers. One example is a generic framework through which deep learning cognitive computing concepts and techniques can be incorporated into cyber forensics to make forensic investigation with AI approaches more effective.

Deep learning is an AI function that mimics the way the human brain processes data. It essentially comprises machine learning methods that are used to represent facts. It is also a recent development in AI that can be applied in various fields.

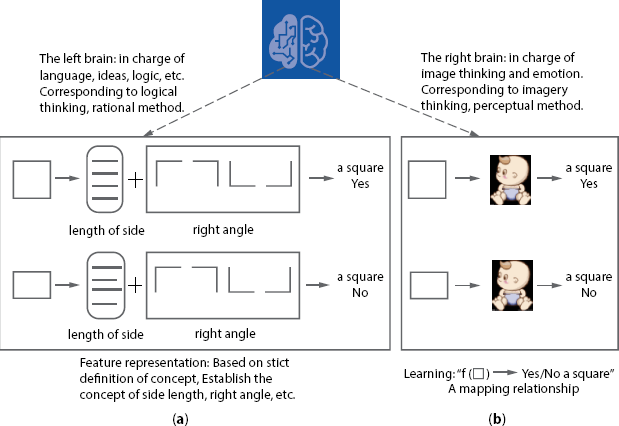

7.4.2.1 Rational Method and Perceptual Method

The human cerebral cortex is divided into two hemispheres with clearly different functions. In most individuals, the left brain is responsible for language, concepts, logic, and so on, while the right brain is responsible for imagination and visual recognition. People with a developed left brain usually have strong logic and are more rational (for example, scientists), whereas people with a developed right brain usually have strong imagination and are good at perceiving space and the shapes of objects (for example, artists). Accordingly, human thinking is divided into logical thinking and visual thinking. Humans understand and change the natural world through both rational methods and perceptual methods, reflecting these different modes of thinking [22].

The rational method is based on strict concepts and definitions, whereas the perceptual method is characterized as a certain mapping relation between input and output. It is still unclear how the human brain achieves the coding, transmission, and storage of information with its 100 billion nerve cells. However, human thinking can be simulated in a cognitive system by means of data analysis. The manual feature design method is precisely defined and corresponds to the rational method. The feature learning method learns the mapping relation between input and output; it is a kind of perceptual method, that is, it simulates the human ability to perceive.
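
The rational method can be made concrete with the example of recognizing a square. The sketch below applies an explicit definition (four equal sides and two equal diagonals) to four vertices given in order. The function name, the input representation, and the tolerance are assumptions for illustration. A perceptual method would instead learn the input-to-output mapping from labeled examples and is not shown here.

```python
import math


def is_square(vertices, tol=1e-9):
    """Rational method: test four vertices (given in order) against the definition of a square."""
    if len(vertices) != 4:
        return False
    # Lengths of the four sides, walking around the shape.
    sides = [math.dist(vertices[i], vertices[(i + 1) % 4]) for i in range(4)]
    # Lengths of the two diagonals.
    diagonals = [math.dist(vertices[0], vertices[2]), math.dist(vertices[1], vertices[3])]
    if sides[0] <= tol:
        return False  # degenerate shape with zero-length sides
    equal_sides = all(abs(s - sides[0]) <= tol for s in sides)
    equal_diagonals = abs(diagonals[0] - diagonals[1]) <= tol
    # Equal sides alone give a rhombus; equal diagonals make it a square.
    return equal_sides and equal_diagonals


if __name__ == "__main__":
    print(is_square([(0, 0), (1, 0), (1, 1), (0, 1)]))    # axis-aligned square
    print(is_square([(0, 1), (1, 0), (0, -1), (-1, 0)]))  # rotated square
    print(is_square([(0, 0), (2, 0), (2, 1), (0, 1)]))    # rectangle
    print(is_square([(0, 0), (2, 1), (4, 0), (2, -1)]))   # rhombus
```

The rule is exact and easy to explain, but every condition had to be designed by hand, which is the limitation the feature learning method is meant to address.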

Figure 7.3 Perceptual and rational method to recognize a square. (a) Rational method.

Recent research on perceptual learning, that is, experience-induced changes in complex cognitive operations, together with neuroscientific research, reveals relationships among cognition, perception, and learning. We outline three revisions to common assumptions about these relations. First, perceptual systems provide complex and abstract descriptions of reality. Second, perceptual representations are often amodal and not limited to modality-specific sensory features. Third, perception is highly selective [23]. These three properties enable dynamic relations between perception and cognition, and they make possible perceptual learning processes that adapt information extraction to improve task performance. Researchers have described an emerging perceptual learning technology that has already produced dramatic learning gains in a variety of academic and professional learning settings, including mathematics, science, aviation, and medicine. This process is illustrated in Figure 7.3.

Continued scientific progress in perceptual learning, and its practical application, will be facilitated by a better understanding of the relations between perception, cognition, and learning. One may assume that these relations are well understood, but in fact they are not. A primary reason is that progress in understanding perception in recent years requires a rethinking of some of these relations, invalidating some ways of thinking and paving the way for new insights. Until recently, for example, if someone proposed a role for perception in learning mathematics, it would likely involve shaded diagrams illustrating fractions, which might give students some concrete grasp of rational numbers. Such applications differ from the idea of perceptual learning, by which students progressively change the way they extract structure and relations from symbolic equations. Recently, there have been trends in cognitive science arguing for a close connection between perception and cognition.

Cognition is situated. Cognitive activity takes place in the context of a real-world environment, and it inherently involves perception and action. We off-load cognitive work onto the environment by exploiting it to hold and manipulate information for us, and we harvest that information only on a need-to-know basis. The environment is part of the cognitive system. The information flow between mind and world is so dense and continuous that, for scientists studying the nature of cognitive activity, the mind alone is not a meaningful unit of analysis. Cognition is for action. The function of the mind is to guide action, and cognitive mechanisms such as perception and memory must be understood in terms of their ultimate contribution to situation-appropriate behavior. Cognition is body-based. Even when decoupled from the environment, the activity of the mind is grounded in mechanisms that evolved for interaction with the environment.

7.4.2.2 Cognitive Computing and Image Understanding

If a machine is to solve real-world problems, the best approach is to simulate the thinking of the human brain. In a cognitive system, features can either be extracted from raw data for classification and prediction models using manual feature design, which simulates the logical thinking ability of the human brain, or be learned through deep learning, which simulates the visual thinking ability of the human brain.

There are two methods of feature extraction for face images: manual feature design and feature learning. In the manual feature design method, the computer simulates the rational method of recognition and determines which features of a face image can be used to identify a person. However, designing and extracting such features is very difficult owing to factors such as expression, makeup, beard, glasses, changes in illumination, and camera angle [24].

However, when a person recognizes a face, he or she seldom considers specific features in the image; rather, the person makes a judgment purely by intuition. Expression, lighting, camera angle, and glasses in the photos hardly affect the recognition result at all [25].

In other words, effective data features cannot be designed with the manual feature design method, and it is quite difficult for a computer to learn the feature representation. Image feature extraction is the basis of image understanding regardless of the application. Face recognition is one example [26].

Conventional image processing is a field of science and technology developed to facilitate human-centered image management and manipulation. Today, however, huge amounts of visual data flood our surroundings because of the explosive growth of image-capturing devices, the proliferation of Internet communication means, and video-sharing services on the World Wide Web (WWW), so human-centered handling of big data has become impossible. It must therefore be replaced with a machine-supported counterpart. Such an artificial counterpart must be equipped with some cognitive abilities that are usually characteristic of a human being. Cognitive image understanding will certainly be one of its main duties. Today, the shift from a ‘computational data-processing paradigm’ to a ‘cognitive information-processing paradigm’ affects many fields of science, technology, and engineering. This shift is a welcome novelty, but its success is hampered by the unclear distinction between the notion of data and the notion of information. As computer applications become increasingly complex, researchers realize that many real-world problems that are easy for humans are hard to describe with the rational method, which makes the rational analysis method ineffective or completely impossible for a computer.

In summary, when humans recognize a face, the mapping relation between the input (the image) and the output (i.e., who the person is) is established by intuition. When image classification is performed with deep learning, this human perceptual method of image recognition is simulated [27].

7.5 Applications of Cognitive Computing

7.5.1 Chatbots

Chatbots [28] are applications that can simulate a human conversation with a cognitive understanding of the interaction. Intelligent personal bots are becoming pervasive by being embedded in mobile phones, wearables, and Internet of Things endpoints, offering autonomous intelligence in more parts of the physical world. Perhaps the right name for this kind of intelligent personal agent is the cognitive chatbot.

This new wave of intelligent personal agents originates in the spread of cloud-based cognitive computing environments such as IBM’s Watson. Through their ability to use AI to drive natural language conversations, cognitive chatbots can be distinguished from the long line of “chatterbots” that came before. Moreover, as natural language conversational bots such as Siri and Amazon’s Alexa flourish in the consumer world, we consider these agents to be cognitive IoT chatbots. As developers shift their focus to cognitive chatbots, they need to adopt fresh thinking, practices, and frameworks for building these capabilities as reusable services for deployment in cloud, mobile, IoT, and other environments. Beyond the primitives associated with the user interface, cognitive chatbot development needs patterns that are generalizable enough to serve a wide range of use cases. These patterns depend on how much individual bots learn, persist, and act upon content and state variables associated with specific users, channels, and conversations. One example is a thought-provoking discussion of analytics-intensive Twitter chatbot applications.
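
The idea of a bot that persists and acts on state tied to specific users and channels can be sketched as follows. This is a deliberately small, rule-based illustration and does not represent how Watson, Siri, or Alexa work. The class name, the greeting rule, and the sample identifiers are hypothetical.

```python
from collections import defaultdict


class StatefulBot:
    """Toy chatbot that keeps separate memory for each (user, channel) pair."""

    def __init__(self):
        # State variables are stored per user and per channel.
        self.state = defaultdict(dict)

    def reply(self, user, channel, message):
        memory = self.state[(user, channel)]
        text = message.strip()
        prefix = "my name is "
        if text.lower().startswith(prefix):
            # Persist what was learned so that later turns can act on it.
            memory["name"] = text[len(prefix):].strip()
            return f"Nice to meet you, {memory['name']}."
        if "name" in memory:
            return f"Welcome back, {memory['name']}. You said: {text}"
        return "Hello. What is your name?"


if __name__ == "__main__":
    bot = StatefulBot()
    print(bot.reply("user-1", "web", "hi"))
    print(bot.reply("user-1", "web", "My name is Ada"))
    print(bot.reply("user-1", "web", "What is my order status?"))
    print(bot.reply("user-2", "web", "What is my order status?"))
```

Because memory is keyed by both user and channel, what the bot learns in one conversation does not leak into another. A cognitive chatbot would replace the hard-coded rule with natural language understanding.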

Developments in cognitive chatbot technologies, algorithms, and applications are coming to market at a remarkable pace. As the field matures over the next several years and best practices take shape, integrated development frameworks will very likely emerge.

7.5.2 Sentiment Analysis

Sentiment analysis (also called opinion mining or emotion AI) refers to the use of natural language processing, text analysis, computational linguistics, and biometrics to systematically identify, extract, quantify, and study affective states and subjective information.

Sentiment analysis is the study of detecting the sentiments expressed in a message. It is widely used to evaluate social network messages, including posts, comments, ratings, and complaints.

Sentiment analysis is widely applied to voice-of-the-customer materials such as reviews and survey responses, online and social media, and healthcare materials, for applications that range from marketing to customer service to clinical medicine. It helps organizations make sense of this unstructured text by tagging it automatically.

Sentiment analysis is a machine learning technique that detects polarity (for example, a positive or negative opinion) within text, whether a whole document, paragraph, sentence, or clause. For instance, using sentiment analysis to automatically analyze more than four thousand reviews of your product could help you find out whether customers are happy with your pricing plans and customer service. It is estimated that eighty percent of the world’s data is unstructured. Huge volumes of text data, such as emails, support tickets, chats, social media conversations, surveys, articles, and documents, are created every day, yet they are hard to analyze and understand, and doing so manually is tedious and expensive.
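
Polarity detection can be illustrated with a small sketch. Note that it swaps in a hand-written word list in place of the machine learning models described above, so it shows only the input and output of the task, not a production technique. The word lists, the one-word negation rule, and the sample reviews are assumptions.

```python
import re

# Hypothetical, deliberately tiny sentiment lexicon.
POSITIVE = {"good", "great", "love", "excellent", "happy", "fast"}
NEGATIVE = {"bad", "poor", "hate", "terrible", "slow", "expensive"}
NEGATIONS = {"not", "never", "no"}


def polarity(text):
    """Label text as positive, negative, or neutral by counting lexicon words."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    negate = False
    for token in tokens:
        if token in NEGATIONS:
            negate = True
            continue
        value = (token in POSITIVE) - (token in NEGATIVE)  # +1, -1, or 0
        score += -value if negate else value
        negate = False  # a negation flips only the word immediately after it
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    reviews = [
        "I love the pricing plans",
        "Customer service was not good",
        "The box arrived on Tuesday",
    ]
    for review in reviews:
        print(f"{polarity(review):8s} {review}")
```

A learned classifier would replace the fixed word lists with weights estimated from labeled reviews, which is what allows it to handle context that a lexicon misses.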

Understanding people’s emotions is essential for businesses because customers can express their thoughts and feelings more openly than ever before. By automatically analyzing customer feedback, from survey responses to social media conversations, brands can listen attentively to their customers and tailor products and services to meet their needs.

Hence, cognitive AI includes sentiment analysis and contextual references to understand, identify, and mine data such as syntax, time, location, domain, business requirements, a specific user need, tasks or goals, and combinations thereof, emulating human cognition well beyond the AI we know. Cognitive AI may draw inferences from various data models and external sources of information, including structured and unstructured data and visual, auditory, or machine data and metadata.

7.5.3 Face Detection

Facial recognition is a specialized stage of image processing. A cognitive system uses facial data such as shape, contours, and eye color to distinguish one face from others.

Apple has acquired Emotient, a face detection startup that uses artificial intelligence technology to assess emotions from people’s facial expressions. It can be used by doctors to interpret the pain of patients who cannot communicate. It can also be used by a company to gauge the reactions of shoppers to the products in its stores.

7.5.4 Risk Assessment

Risk management in financial services involves an analyst going through market trends, historical data, and so on to predict the uncertainty associated with an investment. However, this analysis is related not only to data but also to trends, gut feeling, behavioral analysis, and so forth. Businesses and public sector organizations are using increasingly large amounts of internal and external data to take a more preventive risk posture. With that increase in data volume, however, the effectiveness of conventional analysis methods is diminishing. Cognitive capabilities, including AI, natural language processing, and many other kinds of cognitive technology, provide a modern alternative to traditional analytics and are being applied to massive data sets to help find indicators of known and unknown risks. Cognitive computing can help organizations identify and assess emerging strategic risks that can threaten any or all of what executives care most about, before those risks cause potentially significant damage or lead to higher costs or investments.

At the same time, cognitive computing is especially effective at handling and evaluating unstructured data, the kind of information that does not fit neatly into structured rows and columns. Cognitive technologies such as natural language processing, semantic analysis, and image understanding use advanced algorithms to analyze unstructured data and derive insights and sentiment. Since about ninety percent of the data generated today is unstructured, the use of cognitive computing can help organizations stay ahead of emerging threats. This is where cognitive analytics and risk converge. Cognitive computing can also help organizations identify other emerging trends, understand the risk/reward tradeoffs inherent in value creation, and improve investment decisions and resource allocation. Leaders who leverage cognitive capabilities can gain a competitive advantage and use risk to drive their organizations’ performance.

Also, as cognitive fraud detection systems continue to learn, they can detect more complex fraud, an advantage that may have the greatest impact on risk management. By uncovering emerging patterns that humans could never identify, cognitive technologies create new patterns to look for, a virtuous cycle that in theory never ends, which is a real advantage when fraudsters are continually evolving their fraud schemes.
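
The notion of a detector that keeps learning as new data arrives can be sketched with a running statistical baseline. The example below uses a simple z-score rule with incrementally updated mean and variance, which is a much simpler stand-in for the cognitive fraud detection systems described above. The threshold, warm-up length, class name, and transaction amounts are hypothetical.

```python
import math


class RunningAnomalyDetector:
    """Flags values far from the running mean and keeps learning from normal ones."""

    def __init__(self, threshold=3.0, warmup=5):
        self.threshold = threshold  # how many standard deviations count as anomalous
        self.warmup = warmup        # observations to collect before flagging anything
        self.count = 0
        self.mean = 0.0
        self.m2 = 0.0               # running sum of squared deviations from the mean

    def score(self, value):
        """Distance of value from the learned mean, in standard deviations."""
        if self.count < 2:
            return 0.0
        std = math.sqrt(self.m2 / (self.count - 1))
        if std == 0.0:
            return 0.0 if value == self.mean else math.inf
        return abs(value - self.mean) / std

    def observe(self, value):
        """Return True if value looks anomalous; otherwise learn from it."""
        flagged = self.count >= self.warmup and self.score(value) > self.threshold
        if not flagged:
            # Incremental (Welford-style) update of mean and variance.
            self.count += 1
            delta = value - self.mean
            self.mean += delta / self.count
            self.m2 += delta * (value - self.mean)
        return flagged


if __name__ == "__main__":
    detector = RunningAnomalyDetector()
    for amount in [20, 22, 19, 21, 20, 23, 500, 21]:
        print(amount, "suspicious" if detector.observe(amount) else "ok")
```

Flagged values are excluded from the update so that a suspicious transaction does not shift the baseline it is judged against. This is a design choice made for the sketch and not a claim about real systems.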

7.6 Future of Cognitive Computing

Cognitive computing simulates human thought and reasoning in a computerized environment. It is a collection of self-learning systems that incorporate pattern recognition, data mining, and natural language processing (NLP) to imitate the way the human brain works. The primary objective of cognitive computing is to create self-operating computerized systems that are capable of solving even complex problems without human assistance or intervention [29].

The cognitive process can be understood more simply as the mechanism that uses existing knowledge to generate new knowledge. The main theme of cognition is closely related to abstract concepts such as mind, reasoning, perception, and intelligence. It resembles understanding the workings of a human brain and applying them to the kinds of problems humans deal with. Such systems continuously acquire knowledge from data. A cognitive computing system synthesizes data from diverse and varied information sources while weighing context and conflicting evidence to suggest the best possible answers.

It is one of a class of technologies that use machine learning and natural language processing (NLP) to enable people and machines to interact and gain understanding more naturally, so as to extend and magnify human expertise, perception, and cognition.

Using computer systems to solve the diverse classes of problems that humans are usually concerned with requires an extensive amount of structured and unstructured data fed to machine learning algorithms. As technology advances, cognitive systems can refine the way they recognize patterns and process data so that they become capable of anticipating new problems and modeling possible solutions. To achieve this capability, cognitive systems must have the following key qualities, as listed by the Cognitive Computing Consortium [30].

- Adaptive

This is the first step in building a machine learning based cognitive system. The solutions should mimic the ability of the human brain to learn and adapt from its surroundings. The systems cannot be programmed for an isolated task. They need to be dynamic in gathering data and in understanding goals and requirements.

- Interactive

Like the brain, the cognitive solution must interact with all elements in the system: processors, devices, cloud services, and users. Cognitive systems should interact in all directions. They should understand human input and provide relevant results using natural language processing and machine learning.

- Iterative and Stateful

The system should ‘remember’ previous interactions in a process and return information that is suitable for the specific application at that point in time. It should be able to define the problem by asking questions or finding an additional source. This feature needs careful application of data quality and validation methodologies to ensure that the system is always provided with enough information and that the data sources it operates on deliver reliable and up-to-date input.

- Contextual

They should understand, identify, and extract contextual elements such as syntax, time, location, appropriate domain, regulations, user’s profile, process, task, and goal. They may draw on multiple sources of information, including both structured and unstructured digital information, as well as sensory inputs such as visual, gestural, auditory, or sensor-based.

Some of the scopes of cognitive computing are as follows:

- Engagement

It is the ability to gain deep insights into a domain and to offer expert assistance. Cognitive systems hold vast stores of structured and unstructured data, from which they can develop deep domain insights and provide expert assistance. The models built by these systems include the contextual relationships between various entities in a system’s world that enable it to form hypotheses and arguments. Chatbot technology is a good example of the engagement model. Many AI chatbots are pre-trained with domain knowledge for quick adoption in different business-specific applications [31].

- Decision

These systems make decisions autonomously through improved reasoning. A step ahead of engagement systems, they have decision-making capabilities and are modeled using reinforcement learning. Decisions made by cognitive systems continually evolve based on new information, outcomes, and actions. Autonomous decision making depends on the ability to trace why a particular decision was made and to adjust the confidence score of a system’s response. For instance, offering decision support that improves the capabilities of clinicians and reduces paperwork allows them to spend more time with patients.

- Discovery

Discovery is the most advanced scope of cognitive computing. It involves finding insights, understanding vast amounts of information, and developing skills. These models are built on deep learning and unsupervised machine learning. While still in the early stages, some discovery capabilities have already emerged, and the value propositions for future applications are compelling.

7.7 Conclusion

To summarize, cognitive computing does not bring radical novelty to the AI and big data industry. Rather, it pushes digital solutions toward meeting human-centric requirements: to act, think, and behave like a human in order to achieve maximum synergy from human-machine interaction.

In this paper, we attempted to break this broad, high-level concept down into specific technical challenges and offered some general suggestions on how they can be addressed. We believe that soon every digital system will be measured by its cognitive abilities. Just as user experience was the next big step toward improving application usability, cognitive computing will be a significant step toward digital humanism. The impact of cognitive technology on organizations is expected to rise substantially in less than 5 years. Despite all the obstacles and barriers, we cannot neglect the advantages of cognitive technologies. Starting the transformation process and embracing innovative technologies for a promising and far more productive future would be in the interest of both organizations and society at large [31].

References

1. Octavio, J. and Lopez, Cognitive Computing: A brief survey and open research challenges. 3rd International Conference on Applied Computing and Information Technology, IEEE, 2015.

2. Karie, N.M., Kebande, V.R., Venter, H.S., Diverging deep learning cognitive computing techniques into cyber forensics. Forensic Science International: Synergy, 1, 61–67, 2019.

3. Chen, M., Mao, S., Liu, Y., Big data: A survey. Mobile Netw. Appl., 19, 2, 171–209, 2014.

4. Leonel, J., Cognitive Computing: Basic concepts and timeline, Retrieved from Medium: https://medium.com/@jorgesleonel/cognitive-computing-reflects-a-new-computing-era-4945aeb0e843.

5. Johnston, V.S., Why we feel: The science of human emotions. Perseus Publishing, 1999.

6. Chen, M., Mao, S., Liu, Y., Big data: A survey. Mobile Netw. Appl., 19, 2, 171–209, 2014.

7. Techopedia, Cognitive architecture, Retrieved from Techopedia: https://www.techopedia.com/definition/6537/cognitive-architecture, 2017.

8. Bhatia, R., Brain-Inspired Cognitive Architecture is now solving computational challenges faced by AI, Retrieved from Analytics India Magazine: analyticsindiamag.com/brain-inspired-cognitive-architecture-is-now-solving-computational-challenges-faced-by-ai/, 2018.

9. Chen, M., Hao, Y., Hu, L., Huang, K., Lau, V., Green and mobility-aware caching in 5G networks. IEEE Trans. Wireless Commun., 16, 12, 8347–8361, 2017.

10. Chen, M. and Miao, Y., Narrow band Internet of Things. IEEE Access, 5, 20557–20577, 2017.

11. Sheth, A., Internet of Things to smart IoT through semantic, cognitive, and perceptual computing. IEEE Intell. Syst., 31, 2, 108–112, 2016.

12. Tian, D., Zhou, J., Sheng, Z., An adaptive fusion strategy for distributed information estimation over cooperative multi-agent networks. IEEE Trans. Inf. Theory, 63, 5, 3076–3091, 2017.

13. Fernández, A., Big data with cloud computing: An insight on the computing environment, mapreduce, and programming frameworks. Wiley Interdiscip. Rev., Data Min. Knowl. Discov., 4, 5, 380–409, 2014.

14. Chen, M., Zhou, P., Fortino, G., Emotion communication system. IEEE Access, 5, 326–337, 2017.

15. Chaturvedi, I., Cambria, E., Welsch, R.E., Herrera, F., Distinguishing between facts and opinions for sentiment analysis: Survey and challenges. Inf. Fusion, 44, 65–77, 2018.

16. Hurwitz, J., Kaufman, M., Bowles, A., Cognitive Computing and Big Data Analytics, Wiley, Hoboken, NJ, USA, 2015.

17. Delgado, R., Cognitive Computing: Solving the Big Data Problem?, Retrieved from KDnuggets: https://www.kdnuggets.com/2015/06/cognitive-computing-solving-big-data-problem.html, 2015.

18. Zhou, L., On data-driven delay estimation for media cloud. IEEE Trans. Multimedia, 18, 5, 905–915, 2016.

19. Armbrust, M., Above the clouds: A Berkeley view of cloud computing, vol. 53, no. 4, pp. 50–58, EECS Dept., Univ. California, Berkeley, 2009.

20. Botta, A., Donato, W.D., Persico, V., Pescapé, A.E., On the integration of cloud computing and Internet of Things. Proc. IEEE Int. Conf. Future Internet Things Cloud, pp. 23–30, 2014.

21. Silver, D., Mastering the game of go with deep neural networks and tree search. Nature, 529, 7587, 484–489, 2016.

22. Chen, M., Herrera, F., Hwang, K., Cognitive Computing: Architecture, Technologies and Intelligent Applications. IEEE Access, 6, 19774–19783, 2018.

23. Kellman, P.J. and Massey, C.M., Perceptual learning, cognition, and expertise, in: Psychology of Learning and Motivation, vol. 58, pp. 117–165, Academic Press, 2013.

24. Fasel, B. and Luettin, J., Automatic facial expression analysis: A survey. Pattern Recognit., 36, 259–275, 2003.

25. Chen, M., Hao, Y., Kai, H., Wang, L., Wang, L., Disease prediction by machine learning over big data from healthcare communities. IEEE Access, 5, 1, 8869–8879, 2017.

26. Charte, D., Charte, F., García, S., Jesus, M.J., Herrera, F., A practical tutorial on autoencoders for nonlinear feature fusion: Taxonomy, models, software and guidelines. Inf. Fusion, 44, 78–96, 2018.

27. Chen, M., Shi, X., Zhang, Y., Wu, D., Mohsen, G., Deep features learning for medical image analysis with convolutional autoencoder neural network. IEEE Trans. Big Data, 2017.

28. Leonel, J., Cognitive-computing-reflects-a-new-computing-era, Retrieved from Medium: https://medium.com/@jorgesleonel/cognitive-computing-reflects-a-new-computing-era-4945aeb0e843.

29. Makadia, M., What-is-cognitive-computing-how-are-enterprises-benefitting-from-cognitive-technology, Retrieved from Towards Data Science: https://towardsdatascience.com/what-is-cognitive-computing-how-are-enterprises-benefitting-from-cognitive-technology-6441d0c9067b, 2019.

30. Cognitive Computing Definition, Retrieved from Cognitive Computing Consortium: https://cognitivecomputingconsortium.com/definition-of-cognitive-computing/, 2018.

31. Witan World, Cognitive computing, Retrieved from Witan World: https://witanworld.com/article/2018/10/08/cognitive-computing/, 2018.

*Corresponding author: [email protected]