6

Cloud Cryptography for Cloud Data Analytics in IoT

N. Jayashri1* and K. Kalaiselvi2†

1Department of Computer Applications, Dr. M.G.R. Educational and Research Institute, Chennai, Tamilnadu, India

2Department of Computer Applications, School of Computing Sciences, Vels Institute of Science, Technology and Advanced Studies (Formerly Vels University), Chennai, Tamil Nadu, India

Abstract

The Internet of Things (IoT) is expected to have a profound economic and social impact on our lives. Nodes in IoT systems are typically resource-constrained, which makes them attractive targets for cyberattacks. Consequently, extensive efforts have been devoted to identifying the security and privacy challenges of modern IoT, primarily through conventional cryptographic approaches. However, the distinctive characteristics of IoT nodes follow from the main objective of IoT: interaction and cooperation among objects that communicate over wireless networks to accomplish the goals set for them as a unified entity, creating a better environment for big data applications. Moreover, driven by advances in wireless networking, cloud platforms and IoT are likely to grow rapidly together. This chapter systematically reviews the security requirements, attack vectors, and current security solutions for IoT systems. It also offers insights into current machine learning solutions for various security issues in IoT systems and outlines a few future directions for cryptography in cloud analytics.

Keywords: Internet of Things, cloud computing, big data, security, privacy

6.1 Introduction

The introduction of cloud-based services presents many challenges for organizations. When an organization migrates to cloud services, and especially to public cloud services, much of its computing infrastructure comes under the direct control of a third-party cloud service provider (CSP). Many of these challenges can and should be addressed through management initiatives [1]. These initiatives require a clear delineation of the ownership and responsibilities of the CSP (which may be the organization itself) and of the organization in its role as the customer. Security managers must also be able to determine which detective and preventive controls exist so that the organization's security posture can be clearly defined. Although appropriate security controls must be implemented on the basis of asset, threat, and vulnerability risk assessments, and depend on the level of data protection required, some general management processes are needed regardless of the nature of the organization's business [2]. These include the following:

- Security policy implementation

- Computer intrusion detection and response

- Virtualization security management

Cloud security management can also develop forensic capabilities and conduct investigations using a cloud-based forensic framework. In this area, rapid and thorough clarity is required when custom threats are exploited or data are lost in the cloud. Cloud security managers can also make improvements by automating practices and by achieving better service-management levels in areas such as security interoperability, operational design, accounting, provisioning, and APIs. The APIs used to control cloud resources, whether through GUI frameworks or remote APIs, must be designed so that their use can be logged and monitored [3]. Management can use information security applications to control large-scale operations by taking advantage of elasticity, pay-per-use cost models, on-demand use and provisioning, and delegation of data-handling activities to the cloud operator.

6.2 Cloud Computing Software Security Fundamentals

Security is a primary consideration when an organization entrusts its critical data to geographically dispersed cloud platforms. In addition to conventional IT information system security procedures, the cloud attack surface can be reduced considerably by designing security into software throughout the software development life cycle. Secure software has the following three properties [4].

Dependability: Software that executes predictably and operates correctly under a variety of conditions, including when it is under attack or running on a malicious host.

Trustworthiness: Software that contains few, if any, vulnerabilities or weaknesses that could be exploited to subvert its dependability. It should also be resistant to malicious logic.

Survivability: Software that is resistant to or tolerant of attacks and that can recover as quickly as possible, with as little damage as possible, from attacks it cannot resist.

Seven complementary principles support information assurance: confidentiality, integrity, availability, authentication, authorization, auditing, and accountability.

Confidentiality, Integrity, and Availability

The confidentiality, integrity, and availability of an information system are often referred to as the CIA triad and are essential pillars of cloud software security.

Confidentiality

Confidentiality refers to preventing the intentional or unintentional unauthorized disclosure of information. In the cloud, confidentiality is related to the areas of intellectual property rights, covert channels, traffic analysis, encryption, and inference [5].

- Intellectual Property (IP) Rights: IP includes inventions, designs, and artistic, musical, and literary works. Rights to IP are protected by copyright laws, which cover creative works; by trademarks, which protect distinctive marks; and by patents, which are granted for new inventions.

- Covert Channels: A covert channel is an unauthorized and unintended communication path that allows information to be exchanged. Covert channels can be created by timing messages or by using storage mechanisms in an unintended manner.

- Traffic Analysis: Traffic analysis is a form of confidentiality breach that can be carried out by analyzing the volume, rate, source, and destination of message traffic, even if the traffic is encrypted. Increased message activity and sudden bursts of traffic can indicate that a major event is taking place. Countermeasures to traffic analysis include maintaining a near-constant rate of message traffic and disguising the source and destination of the traffic.

- Encryption: Encryption involves scrambling messages so that, if they are intercepted, they cannot be read by an unauthorized entity. The amount of effort needed to decrypt a message (the work factor) is a function of the strength of the encryption key and the robustness and quality of the encryption algorithm.

- Inference: Inference is usually associated with database security. It is the ability of an entity to use and correlate information protected at one level of security to uncover information that is protected at a higher security level.
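The Encryption item above ties the work factor to key strength. As a rough illustration only, the following Python sketch estimates the cost of an exhaustive key search. It assumes a pure brute-force attack and a hypothetical attacker speed of 10^12 keys per second; it ignores algorithmic weaknesses, which can lower the real work factor dramatically.

```python
# Rough work-factor estimate for an exhaustive (brute-force) key search.
# Assumptions: no shortcut attack exists, and the attacker tests a fixed,
# hypothetical number of keys per second.
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def keyspace(key_bits: int) -> int:
    """Total number of possible keys for a key of `key_bits` bits."""
    return 2 ** key_bits


def expected_trials(key_bits: int) -> int:
    """On average, the correct key is found after searching half the keyspace."""
    return keyspace(key_bits) // 2


def years_to_search(key_bits: int, keys_per_second: float = 1e12) -> float:
    """Expected brute-force time in years at the given (hypothetical) rate."""
    return expected_trials(key_bits) / keys_per_second / SECONDS_PER_YEAR


if __name__ == "__main__":
    for bits in (56, 128, 256):
        print(f"{bits}-bit key: about {years_to_search(bits):.3g} years")
```

The 56-bit row corresponds to the effective key length of DES. Each additional key bit doubles the expected effort, which is why moving from 56 to 128 bits changes the estimate from a fraction of a year to an astronomically large number.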

6.3 Security Management

Effective security management is essential to the practice of cloud computing. Proper management and administration of cloud security, including monitoring, vulnerability assessment, patch management, incident response, fault tolerance, disaster recovery, and business continuity planning, addresses the management-related problems of the cloud. Correctly implementing and analyzing cloud security controls strengthens and safeguards these systems. The following classification levels are those used in the private sector, and they are appropriate for cloud data [6].

Public Information: Information that is similar to unclassified information; all of an organization's information that does not fit into one of the other categories can be considered public. Although its unauthorized disclosure may be against policy, it is not expected to affect the organization, its employees, or its customers seriously or adversely.

Sensitive Data: Information that requires a higher level of classification than normal data. It is protected from loss of confidentiality and from loss of integrity due to unauthorized modification. This classification applies to information that requires special precautions to protect it against unauthorized alteration or deletion, and that requires a higher-than-normal assurance of accuracy and completeness.

Private Data: This classification applies to personal information that is intended for use within the organization. Its unauthorized disclosure could seriously and adversely affect the organization and its employees.

Confidential Information: This classification applies to the most sensitive business information, which is intended strictly for use within the organization. Its unauthorized disclosure could seriously and adversely affect the organization, its stockholders, its business partners, or its customers. Information about new product development, trade secrets, and merger negotiations is considered confidential.

Controls: Cloud security controls are intended to reduce vulnerabilities to a tolerable level and to minimize the effects of an attack. To achieve this, an organization must determine the impact an attack might have and the likelihood of loss. Examples of loss include compromise of sensitive information, financial theft, loss of reputation, and physical destruction. The process of analyzing various threat scenarios and producing a representative value for the estimated potential loss is called a risk analysis (RA). Controls function as countermeasures to the risks identified [7].
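The text does not fix a particular risk-analysis method. One widely used quantitative approach expresses the single loss expectancy as SLE = asset value × exposure factor, and the annualized loss expectancy as ALE = SLE × annualized rate of occurrence (ARO). The Python sketch below applies it; the scenario figures are hypothetical, and the cost-effectiveness rule (a control is worth buying when the yearly loss it prevents exceeds its yearly cost) is a simplification.

```python
from dataclasses import dataclass


@dataclass
class ThreatScenario:
    """One hypothetical threat scenario for a quantitative risk analysis."""
    name: str
    asset_value: float      # AV: value of the asset, in currency units
    exposure_factor: float  # EF: fraction of the asset lost per incident (0..1)
    annual_rate: float      # ARO: expected number of incidents per year

    def single_loss_expectancy(self) -> float:
        # SLE = AV x EF
        return self.asset_value * self.exposure_factor

    def annualized_loss_expectancy(self) -> float:
        # ALE = SLE x ARO
        return self.single_loss_expectancy() * self.annual_rate


def control_is_cost_effective(ale_before: float, ale_after: float,
                              annual_control_cost: float) -> bool:
    """A control pays off if the yearly loss it prevents exceeds its yearly cost."""
    return (ale_before - ale_after) > annual_control_cost


if __name__ == "__main__":
    breach = ThreatScenario("customer database breach", 200_000, 0.25, 0.5)
    print(breach.single_loss_expectancy())      # 50000.0
    print(breach.annualized_loss_expectancy())  # 25000.0
```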

6.4 Cryptography Algorithms

Is it naive to believe that security can be provided without basic safeguards? Security is a major concern in this era of direct communication among tens of millions of people, and the Internet is increasingly used as a platform for commerce. Security requirements are complex, and users' basic needs include secure business applications, private communications, and password-protected transactions. Encryption is therefore necessary because it provides a secure messaging environment. The main purpose of cryptography is to prevent unauthorized access to protected information [8]. Cryptography is the study and practice of techniques for secure communication in the presence of third parties; the study of hidden writing encompasses both cryptography and its analysis (cryptanalysis). It is the art and science of transforming plaintext into ciphertext. In cryptographic terms, the original message is called plaintext, and the process of disguising it so that its content cannot be revealed to outsiders is called encryption. A digital signature is a number or code attached to a message to identify the sender, and it ensures that the original message or document has not been modified. Digital signatures cannot be forged by anyone else, are easily transmitted, and can be time-stamped automatically. Because the signature proves that the message was authenticated, the signer cannot later repudiate the original signed message. A digital signature can be used with any kind of message, whether encrypted or not, so that the receiver can be sure of the sender's identity and that the message arrived intact and unaltered [9].
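The sign-and-verify cycle described above can be shown with textbook RSA in Python. This is a teaching sketch under loud assumptions: the primes are two well-known Mersenne primes (far too small and too public to be secure), no padding scheme is applied, and real systems should use a vetted library with a standard signature scheme. It shows only that the private exponent produces the signature, the public key checks it, and any change to the message breaks verification.

```python
import hashlib

# Toy key material (assumption): two well-known Mersenne primes.
# Far too small and too public for real use; no padding scheme is applied.
P = 2 ** 61 - 1
Q = 2 ** 89 - 1
N = P * Q                              # public modulus
E = 65537                              # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))      # private exponent (Python 3.8+)


def digest_as_int(message: bytes) -> int:
    """Hash the message with SHA-256 and reduce it modulo N (hash-then-sign)."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N


def sign(message: bytes) -> int:
    """Only the holder of the private exponent D can compute this value."""
    return pow(digest_as_int(message), D, N)


def verify(message: bytes, signature: int) -> bool:
    """Anyone holding the public key (N, E) can check the signature."""
    return pow(signature, E, N) == digest_as_int(message)


if __name__ == "__main__":
    sig = sign(b"transfer 100 to Bob")
    print(verify(b"transfer 100 to Bob", sig))  # True
    print(verify(b"transfer 900 to Bob", sig))  # False
```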

6.4.1 Types of Cryptography

Symmetric Key Cryptography: This is also known as private-key, secret-key, or single-key encryption. The sender and the receiver use the same key for encryption and decryption [10]. Symmetric algorithms fall into two categories. The first is the block cipher, which divides the data into fixed-size blocks of bits and encrypts and decrypts one block at a time; AES is the best-known block cipher, and Triple DES is another widely used symmetric algorithm. The second is the stream cipher, which operates on the data one bit or byte at a time. A weakness of symmetric cryptography is the risk of a breach of trust when the secret key must be transferred over an insecure network. On the other hand, symmetric encryption offers several benefits because it is simpler: single-key encryption requires far fewer computing resources than public-key encryption. A different secret key is used for communication with each party, so if a key is compromised, only the messages between that particular sender and receiver are affected; the communications of other parties remain secure.
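To illustrate the single-key property, the sketch below builds a toy stream cipher in Python: a keystream is derived from SHA-256 over the key, a nonce, and a block counter, and it is XORed with the data, so the same function with the same key both encrypts and decrypts. This construction is an assumption chosen because it needs only the standard library; it is not AES or any standardized cipher, it provides no integrity protection, and a nonce must never be reused with the same key.

```python
import hashlib
import secrets


def keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Toy keystream: SHA-256(key || nonce || counter) blocks, concatenated."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out.extend(hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest())
        counter += 1
    return bytes(out[:length])


def xor_cipher(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """XOR data with the keystream; the same call encrypts and decrypts."""
    return bytes(d ^ k for d, k in zip(data, keystream(key, nonce, len(data))))


if __name__ == "__main__":
    shared_key = secrets.token_bytes(32)   # known to both sender and receiver
    nonce = secrets.token_bytes(12)        # must never repeat for the same key
    ciphertext = xor_cipher(shared_key, nonce, b"meter reading: 42.7 kWh")
    assert xor_cipher(shared_key, nonce, ciphertext) == b"meter reading: 42.7 kWh"
```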

Asymmetric Key Cryptography: This is also known as public-key encryption. It uses two keys: a public key for encryption and a private key for decryption. Only the owning user or device knows the private key, while the public key is available to everyone who wants to communicate with that user or device. Asymmetric algorithms are considerably slower than symmetric algorithms, but they make key sharing far more convenient; speed and the computational cost of achieving adequate strength are their main drawbacks. Popular asymmetric algorithms include RSA, elliptic curve cryptography, the Diffie-Hellman key exchange algorithm, and the Digital Signature Algorithm (DSA). The main advantage of the asymmetric approach is that it overcomes the key distribution problem of symmetric cryptography: public keys are published, while private keys are kept secret [11]. Public-key cryptography also enables digital signatures, which ensure that a communication comes from a specific source. A digitally signed record cannot be altered without invalidating the signature.
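The Diffie-Hellman key exchange mentioned above lets two parties agree on a shared secret while exchanging only public values. The Python sketch below uses deliberately small toy parameters (the Mersenne prime 2^127 − 1 as modulus and 3 as the base, both assumptions for illustration); real deployments use standardized groups or elliptic curves and authenticate the exchanged public values to stop man-in-the-middle attacks.

```python
import secrets
from typing import Tuple

# Toy parameters (assumption): a Mersenne prime modulus and a small base.
# Far too weak for real use; the base is not verified to be a primitive root.
P = 2 ** 127 - 1
G = 3


def generate_keypair() -> Tuple[int, int]:
    """Return (private, public): a random exponent and G**private mod P."""
    private = secrets.randbelow(P - 3) + 2  # private exponent in [2, P - 2]
    public = pow(G, private, P)
    return private, public


def shared_secret(own_private: int, peer_public: int) -> int:
    """Both parties arrive at G**(a*b) mod P using only their own private value."""
    return pow(peer_public, own_private, P)


if __name__ == "__main__":
    alice_private, alice_public = generate_keypair()
    bob_private, bob_public = generate_keypair()
    # Only the public values travel over the network.
    assert shared_secret(alice_private, bob_public) == shared_secret(bob_private, alice_public)
    print("shared secret established")
```

In practice the shared value would be passed through a key-derivation function to produce a symmetric session key, combining the convenience of asymmetric key agreement with the speed of symmetric encryption.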

Algorithms

DES

In the early 1970s, the US government sought a standard for protecting sensitive data and information, but none of the initial submissions was acceptable to the National Bureau of Standards (now NIST). In 1973–1974, IBM developed an algorithm based on the Feistel structure, called the Lucifer cipher. The Data Encryption Standard (DES), a symmetric block cipher for encrypting data, was derived from this Lucifer algorithm. DES was developed in the mid-1970s and published as a proposed standard in 1975; it operates on a fixed-length 64-bit plaintext block, which it transforms into ciphertext through a series of operations.

Blowfish

Bruce Schneier designed Blowfish in 1993, and it has been used in many encryption products. Blowfish has a variable key length of 32 to 448 bits, a 64-bit block size, and a 16-round Feistel structure that uses large key-dependent S-boxes. Blowfish uses round subkeys, and all of its S-boxes are generated by repeatedly running the cipher itself. This complex key schedule strengthens the cipher, and Blowfish was long regarded as one of the most secure symmetric algorithms, although its 64-bit block size is now considered a limitation when encrypting large volumes of data.

3-Way

Joan Daemen developed the 3-Way block cipher in 1994. It performs efficiently on a broad range of hardware platforms. Its key length is 96 bits, and it processes data as three 32-bit words, hence the name 3-Way. The block size is also 96 bits, and the cipher is an 11-round substitution-permutation (SP) network.

GOST

The GOST block cipher was created by the Soviet government as a national standard. GOST operates on 64-bit blocks with a 256-bit key. It is a 32-round Feistel network that uses S-boxes.

IDEA

The International Data Encryption Algorithm (IDEA) was originally called the Improved Proposed Encryption Standard (IPES). It was developed as a replacement for DES. It uses 52 subkeys, each 16 bits long, operates on 64-bit blocks, and has 8 rounds.

LOKI 97

LOKI97 descends from the original LOKI designs, LOKI89 and LOKI91, which were proposed as replacements for DES; these designs differ from DES chiefly in their S-boxes and expansion tables.

RC2

RC2 is a symmetric-key block cipher designed by Ron Rivest, and Lotus supported it in Lotus Notes. RC2 has a 64-bit block size and a variable key length, and it uses 18 rounds (16 mixing rounds and 2 mashing rounds).

RC5

RC5, designed in 1994, is a symmetric block cipher. It is fast and well suited to hardware implementation. RC5 has a variable number of rounds, a variable word (and hence block) size, and a variable-length secret key. It makes extensive use of data-dependent rotations.

RC6

RC6 was derived from RC5 as an upgraded design that provides better protection against attacks than RC5.

Information Concealment Engine (ICE)

Kwan developed the Information Concealment Engine (ICE), a symmetric block cipher presented at the Fast Software Encryption workshop in January 1997. Like DES, ICE is a 64-bit Feistel cipher, but it adds a key-dependent permutation that contributes to its resistance to linear and differential cryptanalysis. Standard ICE operates on 64-bit blocks with a 64-bit key, 16 rounds, and 16 subkeys. A fast variant called Thin-ICE uses 8 rounds on 64-bit blocks with a 64-bit key, and the open-ended ICE-n variants use 16n rounds with 64n-bit keys. The 64-bit ICE input is divided into two 32-bit halves. In each round, the F function takes the 32-bit right half and a 60-bit subkey; its output is XORed with the left half, and the two halves are then swapped. This is repeated for every round except the last, in which the swap is omitted, and the two halves are then concatenated to form the ciphertext. The F function consists of an expansion step, a key-dependent permutation, an XOR operation, S-boxes, and a permutation, with subkeys supplied by the key schedule [12].
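DES, Blowfish, GOST, and ICE all build on the Feistel structure described above: split the block into halves, XOR the output of a round function F into one half, swap, and omit the swap after the last round. The Python sketch below shows that skeleton with a 64-bit block and 16 rounds. The round function and key schedule are hypothetical stand-ins based on SHA-256, not those of ICE or any other named cipher; the point is that decryption runs the same network with the subkeys in reverse order, even though F itself is never inverted.

```python
import hashlib
from typing import List

MASK32 = 0xFFFFFFFF


def toy_key_schedule(key: bytes, rounds: int = 16) -> List[int]:
    """Hypothetical key schedule: one 60-bit subkey per round, derived by hashing."""
    return [
        int.from_bytes(hashlib.sha256(key + bytes([i])).digest()[:8], "big") >> 4
        for i in range(rounds)
    ]


def round_function(half: int, subkey: int) -> int:
    """Toy F function: mixes a 32-bit half with a subkey and returns 32 bits."""
    data = half.to_bytes(4, "big") + subkey.to_bytes(8, "big")
    return int.from_bytes(hashlib.sha256(data).digest()[:4], "big")


def feistel(block: int, subkeys: List[int]) -> int:
    """Run a 64-bit block through a Feistel network with the given subkeys."""
    left, right = (block >> 32) & MASK32, block & MASK32
    for i, subkey in enumerate(subkeys):
        left ^= round_function(right, subkey)
        if i != len(subkeys) - 1:  # no swap after the final round
            left, right = right, left
    return (left << 32) | right


if __name__ == "__main__":
    subkeys = toy_key_schedule(b"example key")
    ciphertext = feistel(0x0123456789ABCDEF, subkeys)
    # Decryption: the same network, subkeys applied in reverse order.
    assert feistel(ciphertext, subkeys[::-1]) == 0x0123456789ABCDEF
```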

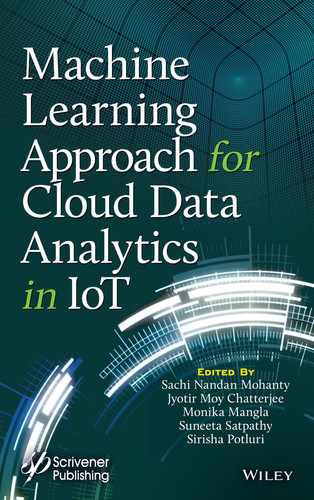

Figure 6.1 compares these algorithms in terms of their modes of processing and their sizes.

Figure 6.1 Comparison of algorithms.

KASUMI

KASUMI is a block cipher with a 64-bit block and a 128-bit key, derived from the MISTY1 algorithm, which was designed for efficient hardware implementation. KASUMI is an eight-round Feistel network with non-invertible round functions; each round function uses a round key made up of 16-bit subkeys derived from the 128-bit key by a fixed key schedule [31], [13]. KASUMI is used mainly in mobile communications systems, including the Universal Mobile Telecommunications System (UMTS), GSM, and the General Packet Radio Service (GPRS).

Threefish

Threefish is a symmetric-key block cipher designed in 2008 by Bruce Schneier, Jesse Walker, Niels Ferguson, Stefan Lucks, Doug Whiting, Mihir Bellare, and others. Although its name echoes Blowfish, Threefish is a different design. It supports three block sizes, 256, 512, and 1,024 bits, with a key of the same size as the block. It uses 72 rounds for the 256- and 512-bit versions and 80 rounds for the 1,024-bit version. Threefish is also a tweakable block cipher: it takes three inputs, the key, a tweak value, and the message block, and the tweak value is used in encrypting each message block. To avoid timing attacks, Threefish uses no S-boxes or other table lookups [14].

6.5 Secure Communications

Because developing cloud applications requires reevaluating communications security, that security is no longer limited to providing controlled, secure communication between storage assets within an enterprise. These controls are essential both for data in motion and for data at rest. Secure cloud communications comprise the structures, transmission methods, transport formats, and security measures that provide confidentiality, integrity, availability, and authentication for transmissions over private or public communications networks. Secure cloud computing should ensure the following [15–17]:

Confidentiality: It ensures that information is accessible only to authorized persons. A loss of confidentiality can occur when private business data are intentionally disclosed or when system privileges are misused. Some of the telecommunication elements used to ensure confidentiality are as follows [18]:

- Network security protocols.

- Network authentication services.

- Data encryption services.

Integrity: It ensures that data are not altered through mismanagement or malice. Integrity is the guarantee that the message sent is the message received and that it has not been altered, intentionally or unintentionally. Integrity also includes the principle of nonrepudiation of a message's source. Some of the elements used to ensure integrity are as follows:

- Firewall services.

- Communications security management.

- Intrusion detection services.
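Message integrity of the kind described above is commonly checked with a keyed message authentication code. The Python sketch below uses HMAC-SHA-256 from the standard library; the shared key and messages are hypothetical. Note the limitation: because both parties hold the same key, an HMAC detects alteration but cannot by itself provide nonrepudiation of the source, which requires a digital signature.

```python
import hashlib
import hmac


def make_tag(key: bytes, message: bytes) -> bytes:
    """Compute an HMAC-SHA-256 tag that the sender attaches to the message."""
    return hmac.new(key, message, hashlib.sha256).digest()


def is_intact(key: bytes, message: bytes, received_tag: bytes) -> bool:
    """Recompute the tag and compare in constant time to detect any change."""
    return hmac.compare_digest(make_tag(key, message), received_tag)


if __name__ == "__main__":
    key = b"hypothetical shared key"
    tag = make_tag(key, b"set valve 7 to 40%")
    print(is_intact(key, b"set valve 7 to 40%", tag))  # True
    print(is_intact(key, b"set valve 7 to 90%", tag))  # False
```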

Availability: It ensures that authorized users can access the system when and where they need it and that the required functionality is available when needed. It also includes the assurance that security services are available to security practitioners when required. Some of the elements used to ensure availability are as follows:

- Fault tolerance for data availability, such as backups and redundant disk systems.

- Acceptable logins and operating process performance.

- Reliable and interoperable security processes and network security mechanisms.

APIs

Common vulnerabilities that can affect communications, such as weak antivirus software, unattended computing platforms, poor passwords, weak authentication mechanisms, and inadequate intrusion detection, must be analyzed more stringently, and appropriate APIs must be used [19].

Virtual Private Networks

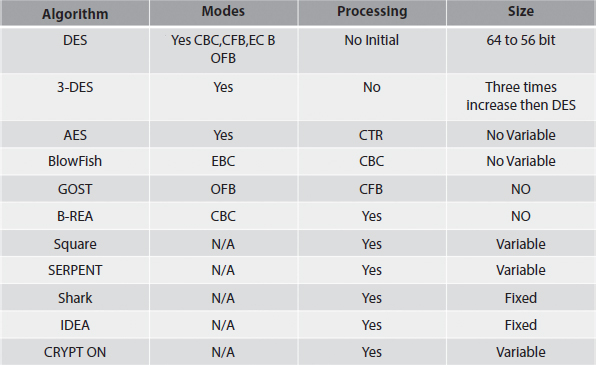

A virtual private network (VPN) is another key technique for securing Internet communications. A VPN is created by establishing secure connections between two nodes that emulate the properties of a point-to-point private network. A VPN can be used to provide secure remote access to the cloud, to protect connections between two networks, or to create a secure data tunnel within a network. The portion of the connection in which the private data are encapsulated is known as the tunnel. It may be referred to as a secure, encrypted tunnel, although it is more accurately described as an encapsulated tunnel, because encryption may or may not be used. The data are encapsulated, or wrapped, with a header that provides routing information so that the connection emulates a point-to-point link. For confidentiality, the data are usually encrypted, and this encrypted portion of the link is the actual virtual private network. Figure 6.2 shows a common VPN configuration, with example IP addresses, for remote access over the Internet to an organization's intranet. The organization's router address is 192.168.123.2 [20].

Figure 6.2 VPN configuration.

Remote access VPNs and network-to-network VPNs are two common types of VPN that are important for cloud computing. Both are described in the following sections.

Remote Access VPNs

A VPN can be configured to provide remote access to corporate resources over the public Internet while maintaining confidentiality and integrity. It allows a remote user to connect to the Internet through whatever local ISP is available, instead of placing long-distance or 800-number calls to a third-party access provider. The VPN software creates a virtual private connection between the dial-up client and the corporate VPN server over the Internet by way of the local ISP connection. A remote VPN client connection is shown in Figure 6.2 [21].

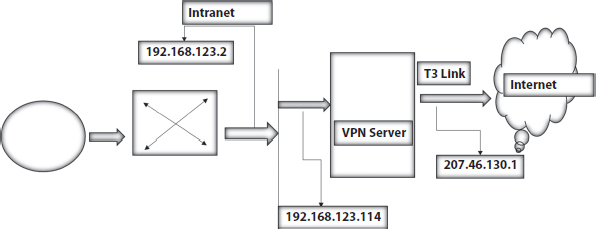

Network-to-Network VPNs

A VPN is commonly used to connect two networks, such as a main corporate LAN and a remote branch-office LAN, over the Internet. The connection can use either dedicated lines to the Internet or dial-up connections. However, if the VPN server must be available 24 hours a day, the corporate hub router that acts as the VPN server must be connected to the local ISP by a dedicated line. The VPN software uses this connection to create a VPN tunnel between the branch-office router and the corporate hub router across the Internet. Figure 6.3 shows a remote branch office connected to the main corporate office through a network-to-network VPN tunnel [22].

Figure 6.3 Remote access VPN configuration.

VPN Tunneling

Tunneling is a technique for transferring data from one network to another by encapsulating it in an additional header [23]. The additional header provides routing information so that the encapsulated payload can traverse the intermediate networks, as shown in Figure 6.4. The tunnel client and the tunnel server must use the same tunneling protocol to create a tunnel. Tunneling technology can be based on either a Layer 2 or a Layer 3 tunneling protocol; these layers correspond to the Open Systems Interconnection (OSI) Reference Model. Tunneling and the use of a VPN are not intended as a substitute for encryption and decryption. If a high level of security is required, the strongest possible encryption should be used within the VPN, and tunneling should serve only as a convenience (Figure 6.5).

Figure 6.4 A network-to-network VPN configuration.
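The encapsulation idea from the VPN Tunneling discussion can be sketched in Python: an outer header carrying the tunnel endpoints is prepended to the original packet and stripped at the far end. The 10-byte header layout is hypothetical and does not match IPsec, GRE, or any real protocol; 192.168.123.2 is the router address from the VPN example, and 203.0.113.10 is a documentation-range address standing in for a remote client. The payload is deliberately left unencrypted to underline that tunneling alone is not encryption.

```python
import ipaddress
import struct
from typing import Tuple

# Hypothetical outer header (assumption): 4-byte tunnel source address,
# 4-byte tunnel destination address, 2-byte payload length, network byte order.
HEADER = struct.Struct("!4s4sH")


def encapsulate(payload: bytes, tunnel_src: str, tunnel_dst: str) -> bytes:
    """Prepend the outer header so the packet can cross intermediate networks."""
    header = HEADER.pack(
        ipaddress.IPv4Address(tunnel_src).packed,
        ipaddress.IPv4Address(tunnel_dst).packed,
        len(payload),
    )
    return header + payload


def decapsulate(frame: bytes) -> Tuple[str, str, bytes]:
    """Strip the outer header at the tunnel endpoint and recover the payload."""
    src, dst, length = HEADER.unpack_from(frame)
    payload = frame[HEADER.size:HEADER.size + length]
    return str(ipaddress.IPv4Address(src)), str(ipaddress.IPv4Address(dst)), payload


if __name__ == "__main__":
    frame = encapsulate(b"inner packet", "203.0.113.10", "192.168.123.2")
    print(decapsulate(frame))
    # The inner packet is still readable inside the frame: no encryption here.
    assert b"inner packet" in frame
```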

IPsec, which adds its own headers alongside the IP header, is a well-known network tunneling protocol. IPsec operates at the Network Layer of the OSI Reference Model, and it allows multiple simultaneous tunnels. IPsec provides the ability to encrypt and authenticate IP data. It was designed into the IPv6 standard and serves as an add-on to the current IPv4. In IPsec tunnel mode, IP packets can be encrypted and then encapsulated in a new cleartext IP header for transmission across a private corporate IP intranet or a public IP internetwork such as the Internet [24]. The IPsec Authentication Header (AH) provides source authentication and integrity without encryption, whereas the Encapsulating Security Payload (ESP) also provides encryption. The keys used by IPsec are known only to the sender and the receiver.

Public Key Infrastructure and Encryption Key Management

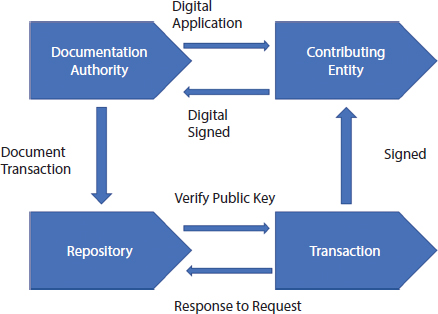

In the cloud, public key cryptography can be used to exchange keys for encrypting communications, to authenticate connections to other private networks, and to protect data in use and in storage. Digital certificates are used to bind individuals to their public keys for public key encryption purposes. A certificate authority (CA) verifies a person's identity, much as a notary does, and issues a certificate vouching that the public key belongs to the named claimant. The certified individual signs messages with his or her private key, so that when the receiver opens the files, the sender is identified. The infrastructure of digital signatures, certificates, and related services for e-commerce is called a public key infrastructure (PKI) [25]. It provides electronic transactions with authentication, integrity, confidentiality, and nonrepudiation.

Figure 6.5 A VPN tunnel and payload.

Digital Certificates

The core elements of a PKI are the digital certificate and certificate management. Digital certificates are designed to show that the public key attributed to an individual, as posted on a public “key ring,” really belongs to that individual, as illustrated in Figure 6.6. A trusted third-party CA verifies that the public key belongs to the named person and issues a certificate attesting to that fact. The CA creates the certificate by digitally signing the person's public key and associated information. Certificates and certificate revocation lists (CRLs) are stored in a repository under agreements between the repository and the CA, and the repository defines how these tasks are carried out. At a public location, the repository interoperates with other repositories, CAs, and clients. The CA deposits its certificates and CRLs in the repository, where clients can later retrieve them [26].

Key Management

Encryption keys must be handled with the same protections as the physical keys that secure areas or resources [27]. The following areas represent the components of key management.

Key Distribution

Because symmetric secret keys are difficult to distribute securely, asymmetric cryptosystems can be used to send them. Other ways to distribute secret keys include face-to-face meetings, trusted couriers, or some other secure channel for key exchange. Another option is to encrypt the secret key with another secret key, called a key-encryption key, and send the encrypted secret key to the intended recipient [28]. Key-encryption keys can be distributed manually, but they need not be distributed often. Keys can also be split into parts, with each part sent through a separate medium. In large networks, key distribution can become a serious problem, because a scheme with N users requires N(N − 1)/2 keys, one for each pair of users. To manage this problem, public key cryptography can be used, or session keys can be generated and exchanged that are valid only for the duration of a single session. A key can also be updated by deriving a new key from the old one: if Alice and Bob share a secret key, they can both apply the same transformation (a hash algorithm) to obtain the same new key.

Figure 6.6 A transaction with digital certificates.
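The key-distribution arithmetic in the Key Distribution discussion is easy to check. The Python sketch below compares the N(N − 1)/2 secret keys needed when every pair of users shares its own symmetric key with the N key pairs needed when each user has one public/private pair, and shows the hash-based key update in which Alice and Bob derive the same new key from their old one. The "key-update" label mixed into the hash is a hypothetical choice for illustration.

```python
import hashlib


def symmetric_keys_needed(n_users: int) -> int:
    """One secret key per pair of users: N(N - 1)/2."""
    return n_users * (n_users - 1) // 2


def key_pairs_needed(n_users: int) -> int:
    """With public-key cryptography, each user needs only one key pair."""
    return n_users


def next_key(old_key: bytes) -> bytes:
    """Derive a new key from the old one with a one-way hash (hypothetical label)."""
    return hashlib.sha256(b"key-update" + old_key).digest()


if __name__ == "__main__":
    for n in (10, 100, 1000):
        print(n, symmetric_keys_needed(n), key_pairs_needed(n))
    shared = b"old secret shared by Alice and Bob"
    assert next_key(shared) == next_key(shared)  # both sides derive the same key
```

For 1,000 users this gives 499,500 symmetric keys versus 1,000 key pairs. Because the hash is one-way, an attacker who learns the new key cannot work backward to the old one.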

Multiple Keys

An individual can have more than one public/private key pair. The keys can be of different sizes for different levels of security: a larger key size can be used for digitally signing documents, while a smaller key size can be used for encryption. An individual may also need to sign messages with different signatures in different roles or capacities. For example, one key pair can be used for business, another for personal purposes, and another for other activities, such as serving on a school board [29].

Distributed Versus Centralized Key Management

A CA is a centralized key authority: a central location where certificates are issued and CRLs are maintained. The alternative, distributed key management, establishes a “chain of trust” or “web of trust” among users who know each other. Because they know each other, they can trust that each one's public key is valid. Some of these users may know other users and can therefore vouch for their public keys. The chain extends outward from the initial acquaintances [30]. This is an informal validation process based on people knowing and trusting one another.
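The distributed "web of trust" can be modeled as a graph in which an edge from one user to another means that the first has vouched for the second's public key. The Python sketch below finds the shortest vouching chain with a breadth-first search. The users and relationships are hypothetical, and a real system would also have to decide how long a chain it is willing to accept.

```python
from collections import deque
from typing import Dict, List, Optional, Set


def find_trust_chain(vouches: Dict[str, Set[str]], start: str,
                     target: str) -> Optional[List[str]]:
    """Breadth-first search for the shortest chain of users vouching for each other."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == target:
            return path
        for nxt in sorted(vouches.get(path[-1], set())):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None  # no chain of trust reaches the target


if __name__ == "__main__":
    vouches = {"alice": {"bob"}, "bob": {"carol"}, "carol": {"dave"}}
    print(find_trust_chain(vouches, "alice", "dave"))  # ['alice', 'bob', 'carol', 'dave']
```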

6.6 Identity Management and Access Control

Identification and access control are key capabilities for secure cloud computing [31]. The simplest form of identity management is a system that uses a username and a password. However, true identity management for cloud computing requires stronger authentication, authorization, and access control. It should determine which resources a user is authorized to access, using credentials such as biometrics or smart cards, and it should detect attempts at unauthorized access.

Identity Management

Identification is the foundation of most access control systems. Identification is the act of a user presenting an identity to a system, usually in the form of a user logon ID. Identification establishes user accountability for actions on the system. User IDs should be unique and should not be shared among individuals. In many large organizations, user IDs follow a standard format, such as the first initial followed by the last name. To enhance security and reduce the amount of information available to an intruder, an ID should not reflect the user's job title or function. Authentication verifies that the claimed identity is valid and is usually implemented with a user password. Authentication is based on three types of factors. Type 1 is something you know, such as a personal identification number (PIN) or a password. Type 2 is something you have, such as an ATM card or a smart card. Type 3 is something you are, such as a fingerprint or a retina scan. A fourth factor, something you do, is sometimes added; it might be typing your name or other phrases on a keyboard. Two-factor authentication requires factors of two of the three types in the authentication process.
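The key point about two-factor authentication is that the two credentials must come from different factor types. The Python sketch below encodes the three types as an enumeration (the member names are hypothetical labels for Types 1 to 3) and shows why a password plus a PIN, both Type 1, does not qualify.

```python
from enum import Enum
from typing import Iterable


class FactorType(Enum):
    KNOWLEDGE = 1   # Type 1: something you know (PIN, password)
    POSSESSION = 2  # Type 2: something you have (ATM card, smart card)
    INHERENCE = 3   # Type 3: something you are (fingerprint, retina scan)


def is_multifactor(presented: Iterable[FactorType], required_types: int = 2) -> bool:
    """True if the credentials cover at least `required_types` distinct factor types."""
    return len(set(presented)) >= required_types


if __name__ == "__main__":
    print(is_multifactor([FactorType.KNOWLEDGE, FactorType.POSSESSION]))  # True
    print(is_multifactor([FactorType.KNOWLEDGE, FactorType.KNOWLEDGE]))   # False
```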

Passwords

Passwords must be protected because they can be compromised. Ideally, a password should be used only once [33]. This "one-time password," or OTP, provides maximum security because a new password is required for every new logon. A password that is the same for each logon is called a static password, and a password that changes with every logon is called a dynamic password. Passwords can also be changed periodically: depending on the criticality of the information requiring protection and how often the password is used, passwords may need to be changed weekly, annually, or at some other interval. The more often a password is used, the more likely it is to be compromised. A passphrase is a sequence of characters that is usually longer than the number allotted for a password; the system converts the passphrase into a virtual password. All of these approaches can be checked by a front-end authentication device or a back-end authentication server that supports multiple workstations or hosts. Various devices, such as tokens, memory cards, and smart cards, can provide passwords.
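One common way to generate the one-time passwords described above is the HMAC-based one-time password algorithm (HOTP) of RFC 4226, in which a token and a server share a secret and a counter. The source does not name a specific OTP scheme, so treat this Python sketch as one illustrative option; the secret is the RFC's published test value, not a real credential.

```python
import hashlib
import hmac
import struct


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HOTP value for `counter` (RFC 4226): HMAC-SHA-1 plus dynamic truncation."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F                  # low 4 bits select a 4-byte window
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


if __name__ == "__main__":
    test_secret = b"12345678901234567890"  # RFC 4226 test secret
    print(hotp(test_secret, 0))  # 755224
    print(hotp(test_secret, 1))  # 287082
```

Because the server accepts each counter value only once, a captured password cannot be replayed for a later logon.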

Tokens

Tokens are small handheld devices that provide passwords. The four basic types of tokens are described next.

Static Password Tokens

- Owners authenticate themselves to the token by typing in a secret password.

- If the password is correct, the token authenticates the owner to an information system.

Synchronous Dynamic Password Tokens, Clock-Based

The token generates a new, unique password at fixed time intervals, synchronized with the same password on an authentication server (this password is the time of day encrypted with a secret key). The unique password is entered into a workstation or system along with the owner’s PIN. The authentication entity in the workstation or system knows the owner’s secret key and PIN, and it verifies that the entered password is valid and was entered within the valid time window. A counter-based synchronous dynamic password token works in the same way, except that the password is derived from a counter kept in step with the authentication server rather than from the time of day.
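A clock-based token can be sketched with the time-based one-time password (TOTP) algorithm of RFC 6238, built on the HOTP algorithm of RFC 4226. Note that TOTP derives the password with an HMAC over the number of elapsed time steps rather than by encrypting the time of day, so this is a swapped-in standard, not necessarily what a given token does. The 30-second step, six-digit code, one-step drift window, and separate PIN comparison are assumptions.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-SHA1 one-time password with dynamic truncation (RFC 4226)."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # low 4 bits choose a 4-byte window
    value = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


def totp(secret: bytes, unix_time: float, step: int = 30, digits: int = 6) -> str:
    """Clock-based password: the counter is the number of elapsed time steps."""
    return hotp(secret, int(unix_time // step), digits)


def verify(secret: bytes, stored_pin: str, entered_pin: str, entered_code: str,
           unix_time: Optional[float] = None, window: int = 1,
           step: int = 30, digits: int = 6) -> bool:
    """Check the owner's PIN, then accept the code if it matches the current
    time step or up to `window` steps either side (allowing for clock drift)."""
    if not hmac.compare_digest(stored_pin.encode(), entered_pin.encode()):
        return False
    now = time.time() if unix_time is None else unix_time
    current = int(now // step)
    for counter in range(max(0, current - window), current + window + 1):
        candidate = hotp(secret, counter, digits)
        if hmac.compare_digest(candidate.encode(), entered_code.encode()):
            return True
    return False


# RFC 6238 test secret; the PIN "4321" is hypothetical.
SECRET = b"12345678901234567890"
print(totp(SECRET, 59, digits=8))  # RFC 6238 SHA-1 test vector: 94287082
```

The drift window trades a slightly larger acceptance set for tolerance of small clock differences between the token and the server.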

Asynchronous Tokens, Challenge-Response

- A workstation or system generates a random challenge string, and the owner enters the string into the token along with the proper PIN.

- The token performs a calculation on the string using the PIN and generates a response value, which is then entered into the workstation or system.

- The authentication mechanism in the workstation or system performs the same calculation as the token, using the owner’s PIN and the challenge string, and compares the result with the value the owner entered. If the two match, the owner is authenticated.
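The three steps above can be sketched as follows. The text does not specify the token's calculation, so HMAC-SHA256 keyed with a per-token secret (`device_key`, hypothetical) over the PIN and challenge, truncated to eight hex characters, is an assumed choice.

```python
import hashlib
import hmac
import secrets


def new_challenge() -> str:
    """Workstation side: generate a random challenge string."""
    return secrets.token_hex(8)


def token_response(device_key: bytes, pin: str, challenge: str) -> str:
    """Token side: compute a short response from the PIN and the challenge."""
    msg = f"{pin}:{challenge}".encode()
    return hmac.new(device_key, msg, hashlib.sha256).hexdigest()[:8]


def authenticate(device_key: bytes, stored_pin: str, challenge: str, entered: str) -> bool:
    """Workstation side: repeat the token's calculation and compare results."""
    expected = token_response(device_key, stored_pin, challenge)
    return hmac.compare_digest(expected.encode(), entered.encode())


KEY = b"hypothetical-token-key"
challenge = new_challenge()                         # shown to the owner
response = token_response(KEY, "2468", challenge)   # typed back from the token
print(authenticate(KEY, "2468", challenge, response))  # True
```

Because each challenge is random, a captured response is useless for a later logon, which is what makes the scheme asynchronous yet resistant to replay.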

Memory Cards

Memory cards provide non-volatile storage of information, but they have no processing capability. A memory card stores encrypted passwords and other related identifying information. Telephone calling cards and ATM cards are examples of memory cards.

Smart Cards

Smart cards provide more capability than memory cards by incorporating processing power on the card. These credit-card-sized devices contain a microprocessor and memory and are used to store digital signatures, private keys, passwords, and other personal information.

Biometrics

Biometrics is an alternative to passwords for authentication in logical or technical access control. Biometrics relies on the Type 3 authentication factor: something you are. It is defined as an automated means of identifying or authenticating the identity of a living person based on physiological or behavioral characteristics. In biometrics, identification is a one-to-many search of an individual’s characteristics against a database of stored images, whereas authentication is a one-to-one comparison that verifies an identity claimed by a person [34]. Biometrics is used for identification in physical access controls and for authentication in logical access controls.
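The difference between one-to-one authentication and one-to-many identification can be illustrated with feature vectors and a distance threshold. The enrolled templates, the three-element vectors, and the threshold are all hypothetical; real systems use far richer features and matchers.

```python
import math

# Hypothetical enrolled templates: user ID -> extracted feature vector.
TEMPLATES = {
    "alice": (0.12, 0.80, 0.45),
    "bob": (0.90, 0.10, 0.33),
    "carol": (0.50, 0.52, 0.95),
}
THRESHOLD = 0.15  # assumed maximum distance that still counts as a match


def authenticate(claimed_id, sample):
    """One-to-one: compare the sample only with the claimed identity's template."""
    template = TEMPLATES.get(claimed_id)
    return template is not None and math.dist(template, sample) <= THRESHOLD


def identify(sample):
    """One-to-many: search every template and return the closest match, if any."""
    best_id, best_template = min(TEMPLATES.items(),
                                 key=lambda item: math.dist(item[1], sample))
    return best_id if math.dist(best_template, sample) <= THRESHOLD else None


sample = (0.13, 0.79, 0.47)
print(authenticate("alice", sample))  # True
print(identify(sample))               # alice
```

Authentication touches one stored template, whereas identification must scan the whole database, which is why identification is the costlier operation on large populations.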

There are three main measures of performance in biometrics:

- False rejection rate (FRR), or Type I error: the percentage of valid subjects that are falsely rejected.

- False acceptance rate (FAR), or Type II error: the percentage of invalid subjects that are falsely accepted.

- Crossover error rate (CER): the percentage at which the FRR equals the FAR. The smaller the CER, the better the device is performing.
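These three measures can be computed from match scores. The sketch below uses made-up scores, treats a sample as accepted when its score is at or above the threshold, and estimates the CER by scanning the observed scores as candidate thresholds.

```python
def error_rates(genuine, impostor, threshold):
    """Return (FRR, FAR) when a sample is accepted if its score >= threshold."""
    frr = sum(score < threshold for score in genuine) / len(genuine)     # valid users rejected
    far = sum(score >= threshold for score in impostor) / len(impostor)  # impostors accepted
    return frr, far


def crossover(genuine, impostor):
    """Try every observed score as a threshold and return the one where FRR and
    FAR are closest, with the CER estimated as their mean at that point."""
    def gap(threshold):
        frr, far = error_rates(genuine, impostor, threshold)
        return abs(frr - far)

    best = min(sorted(set(genuine) | set(impostor)), key=gap)
    frr, far = error_rates(genuine, impostor, best)
    return best, (frr + far) / 2


# Hypothetical similarity scores (1.0 = perfect match).
genuine_scores = [0.9, 0.8, 0.75, 0.6, 0.4]
impostor_scores = [0.1, 0.2, 0.35, 0.5, 0.7]
threshold, cer = crossover(genuine_scores, impostor_scores)
print(f"threshold={threshold}, CER={cer:.0%}")  # prints threshold=0.6, CER=20%
```

Raising the threshold lowers the FAR but raises the FRR; the CER summarizes that trade-off in a single number, which is why a smaller CER indicates a better device.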

Other factors, including enrolment time, throughput rate, and acceptability, must also be considered when evaluating a biometric system. Enrolment time is the time it takes to register with the system initially by providing samples of the biometric characteristic to be evaluated; an acceptable enrolment time is about 2 minutes. In fingerprint systems, for example, the actual fingerprint is stored, which requires up to 250 KB per finger for a high-quality image. This level of detail is needed for one-to-many searches of very large databases in forensic applications. In finger-scan technology, by contrast, the full fingerprint is not stored; instead, the features extracted from it are stored in a small template that needs about 500 to 1,000 bytes of space. Enrolment information may need to be updated because some biometric characteristics, such as voice and signature, can change over time. The throughput rate is the rate at which the system processes and identifies or authenticates individuals [35–38].
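To put the storage figures above in perspective, consider a hypothetical database of one million enrolled users with one finger each, taking 1 KB as 1,000 bytes:

```latex
\begin{aligned}
\text{Full images: } & 10^{6}\ \text{users} \times 250\ \text{KB} = 2.5 \times 10^{8}\ \text{KB} = 250\ \text{GB} \\
\text{Templates: } & 10^{6}\ \text{users} \times (500\ \text{to}\ 1{,}000)\ \text{B} = 0.5\ \text{to}\ 1\ \text{GB} \\
\text{Ratio: } & \frac{250{,}000\ \text{B}}{1{,}000\ \text{B}} = 250 \quad \text{to} \quad \frac{250{,}000\ \text{B}}{500\ \text{B}} = 500
\end{aligned}
```

Templates are therefore roughly 250 to 500 times smaller than full images, which is why finger-scan systems favor them when forensic-grade one-to-many searches are not required.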

Acceptable throughput rates are in the range of 10 subjects per minute. Acceptability refers to considerations of privacy, invasiveness, and physical and psychological comfort when the system is used. For example, with retina-scanning systems, the exchange of body fluids on the eyepiece may be a concern. Collected biometric images are stored in an area referred to as a corpus, which is kept in a database of images. Potential sources of error include the corruption of images during collection and mislabeling or other transcription problems associated with the image database. Therefore, the image collection and storage process must be carried out carefully, with constant checking [39]. These images are collected during the enrolment process and are therefore critical to the correct operation of the biometric device.

6.7 Autonomic Security

Autonomic computing refers to a self-managing computing model in which computer systems reconfigure and heal themselves. It will take several years for autonomic computing to be fully realized, but it offers capabilities that can improve the security of information systems and of cloud computing in particular. The ability of autonomic systems to collect and interpret data and to recommend or implement solutions can help to improve security and support recovery from harmful events [40].

Autonomic Systems

Autonomic systems are modeled on the human autonomic nervous system, which is self-managing, monitors changes that affect the body, and maintains internal balance. Accordingly, an autonomic computing system aims to manage itself so as to maintain correct operation despite perturbations to the system. Such a system requires sensory inputs, decision-making capability, and the ability to carry out remedial activities in order to maintain an equilibrium state of normal operation. Examples of events that would have to be handled autonomously include the following:

- Malicious attacks

- Hardware or software faults

- Excessive CPU utilization

- Power failures

- Organizational policies

- Inadvertent operator errors

- Interaction with other systems

- Software updates

Self-management is the basic concept of autonomic systems: the software system maintains consistent operation in the face of changing external and internal conditions, determines the need for upgrades, performs the upgrades and regression tests, and, in general, identifies and overrides problem conditions.
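The cycle of sensory input, decision-making, and remedial action described above is often organized as a monitor, analyze, plan, and execute loop (the MAPE-K reference model from IBM's autonomic computing work, which the text does not name). The sketch below is a toy version: the reading fields, thresholds, and action names are hypothetical, and "execution" only records the chosen action.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Reading:
    """One sensory input from the managed system (hypothetical fields)."""
    cpu_percent: float
    failed_logins: int
    component_healthy: bool


CPU_LIMIT = 90.0   # assumed policy thresholds
LOGIN_LIMIT = 5

# Plan: map each symptom to a remedial action (hypothetical action names).
REMEDIES = {
    "excessive_cpu": "add_capacity",
    "possible_attack": "lock_account",
    "component_fault": "restart_component",
}


def analyze(reading: Reading) -> List[str]:
    """Turn a raw reading into named symptoms."""
    symptoms = []
    if reading.cpu_percent > CPU_LIMIT:
        symptoms.append("excessive_cpu")
    if reading.failed_logins > LOGIN_LIMIT:
        symptoms.append("possible_attack")
    if not reading.component_healthy:
        symptoms.append("component_fault")
    return symptoms


def control_loop(readings: List[Reading]) -> List[str]:
    """Monitor each reading, analyze it, plan a remedy, and 'execute' it by
    recording the action that would be taken."""
    executed = []
    for reading in readings:                  # monitor
        for symptom in analyze(reading):      # analyze
            executed.append(REMEDIES[symptom])  # plan + execute (logged only)
    return executed


print(control_loop([Reading(95.0, 0, True), Reading(10.0, 8, False)]))
```

Keeping policies in data (`REMEDIES`, the limits) rather than in code is one way to let an autonomic manager adapt its behavior without redeployment.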

Autonomic Protection

Autonomic self-protection involves detecting a harmful situation and taking actions to mitigate it. Such systems should also be designed to predict problems by analyzing sensory inputs and to initiate corrective measures. An autonomic security response is based on network knowledge, the capabilities of connected resources, information aggregation, the complexity of the situation, and the impact on the affected applications. Taking into account the current security posture and the security context of the system to be protected, the decision-making element of autonomic computing can take actions such as changing the strength of the required authentication or updating encryption keys. The security context is derived from data obtained from network and system management elements and then aggregated into a higher-level representation of the system’s security status. An often-overlooked aspect of autonomic systems is that configuration changes, and other autonomous activities intended to address different computing areas, can introduce security vulnerabilities.
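The decision-making element described above can be sketched as a function that aggregates alerts into a threat level and, above a threshold, strengthens authentication and rotates the encryption key. The alert names, weights, and threshold are hypothetical, and a real system would also have to re-encrypt or re-wrap data protected by the old key.

```python
import secrets
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class SecurityPosture:
    """Current protective settings of the system (hypothetical fields)."""
    required_factors: int = 1
    encryption_key: bytes = field(default_factory=lambda: secrets.token_bytes(32))


def threat_level(alerts: Dict[str, int]) -> int:
    """Aggregate alert counts from network and system monitors into one score.
    The weights are assumptions for illustration."""
    weights = {"intrusion_alert": 5, "malware_detected": 8, "failed_login": 1}
    return sum(weights.get(name, 0) * count for name, count in alerts.items())


def respond(posture: SecurityPosture, alerts: Dict[str, int],
            high: int = 10) -> SecurityPosture:
    """Raise authentication strength and rotate the key when the aggregated
    threat level is high; otherwise keep the current posture."""
    if threat_level(alerts) >= high:
        return SecurityPosture(required_factors=2,
                               encryption_key=secrets.token_bytes(32))
    return posture


posture = SecurityPosture()
posture = respond(posture, {"intrusion_alert": 1, "malware_detected": 1})
print(posture.required_factors)  # 2
```

Because the response is itself a configuration change, it illustrates the closing point of the paragraph: an automated key rotation that is not coordinated with dependent services could lock out legitimate users or leave data under an unmanaged key.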

Autonomic Self-Healing

Diagnosing and repairing failures in IT systems can be difficult and time-consuming and usually requires considerable effort. Autonomic self-healing systems can detect and repair software problems and identify hardware faults without manual intervention. Cloud computing is commonly described in terms of five essential characteristics, three service models, and four deployment models. The five essential characteristics are as follows:

- On-demand self-service

- Ubiquitous network access

- Location-independent resource pooling

- Rapid elasticity

- Measured service

The three service models are as follows:

- Cloud Software as a Service (SaaS): customers use the provider’s applications over a network.

- Cloud Platform as a Service (PaaS): customers deploy the applications they have created to a cloud.

- Cloud Infrastructure as a Service (IaaS): customers rent processing, storage, network capacity, and other fundamental computing resources.

Autonomic management records and monitors data and diagnoses the problem area. This approach is often directed by an autonomic manager that controls managed resource elements through well-defined interfaces supporting diagnostic and mitigation operations. Managed elements control their internal states and have defined performance characteristics and relationships with other computational elements. The objective of self-healing is to keep the elements operating according to their design specifications.
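The relationship between an autonomic manager and its managed elements can be sketched as follows. Each element exposes a diagnostic operation (is it within its design specification?) and a mitigation operation (restart), and the manager logs what it observes and repairs. The latency-based specification and the restart remedy are hypothetical simplifications.

```python
from dataclasses import dataclass


@dataclass
class ManagedElement:
    """A managed resource with a defined interface for diagnosis and mitigation."""
    name: str
    expected_latency_ms: float  # design specification (hypothetical)
    latency_ms: float = 0.0
    running: bool = True
    restarts: int = 0

    def diagnose(self) -> bool:
        """Return True if the element is operating within its specification."""
        return self.running and self.latency_ms <= self.expected_latency_ms

    def restart(self) -> None:
        """Mitigation operation: restore the element to a known-good state."""
        self.running = True
        self.latency_ms = 0.0
        self.restarts += 1


class AutonomicManager:
    """Checks each managed element and repairs those out of specification."""

    def __init__(self, elements):
        self.elements = list(elements)
        self.log = []  # recorded observations for later analysis

    def heal(self):
        for element in self.elements:
            if not element.diagnose():
                self.log.append(f"{element.name}: out of spec, restarting")
                element.restart()
        return self.log


db = ManagedElement("db", expected_latency_ms=50, latency_ms=120)
web = ManagedElement("web", expected_latency_ms=100, latency_ms=40)
print(AutonomicManager([db, web]).heal())  # ['db: out of spec, restarting']
```

The manager never inspects an element's internals; it relies only on the diagnose and restart interface, which mirrors the text's emphasis on well-defined interfaces between manager and managed elements.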

6.8 Conclusion

Security is a key component of cloud computing. Trust in cloud usage depends on trusted computing, sound identity management, and access control methods that support secure execution. This chapter has focused on information security for cloud systems, based on the traditional concepts of confidentiality, integrity, and availability and implemented through administrative, physical, and technical controls. Fundamental secure software design and implementation principles include least privilege, separation of duties, defense in depth, fail-safe defaults, and open design. Secure software requirements, design principles, secure coding practices, secure deployment and decommissioning methods, and secure software testing are also important for secure cloud applications. Penetration testing, functional testing, runtime testing, and vulnerability testing are cost-effective types of software testing. A primary concern in using cloud services is the availability of cloud server applications and information to the enterprise. Trusted computing provides a reliable foundation for the cloud, and establishing trust is an important requirement for setting up a trusted cloud system. Building such an environment requires reliable autonomic managers that can handle cloud users’ resources efficiently and without human intervention.

References

1. Security Guidance for Critical Areas of Focus in Cloud Computing V3.0, 177 pp, 2011 (http://www.cloudsecurityalliance.org/guidance/csaguide.pdf).

2. Information Assurance Technology Analysis Center (IATAC), Information Assurance Technology Analysis Center Falls Church VA. July 31, 2007.

3. Komaroff, M. and Baldwin, K., DoD Software Assurance Initiative. Software Security Assurance: A State-of-the-Art Report (SOAR). Information Assurance Technology Analysis Center (IATAC), Herndon, VA, September 13, 2005, (https://acc.dau.mil/CommunityBrowser.aspx?id=25749).

4. Goertzel, K., Winograd, T. et al., Enhancing the Development Life Cycle to Produce Secure Software, Draft Version 2.0, United States Department of Defense Data and Analysis Center for Software, Rome, New York, July 2008.

5. Saltzer, J.H. and Schroeder, M.D., The Protection of Information in Computer Systems. Fourth ACM Symposium on Operating Systems Principles, October 1974.

6. Davis, J. F., Information systems security engineering: A critical component of the systems engineering lifecycle. ACM SIGAda Ada Letters, 24, 4, 13–18, 2004.

7. Goertzel, K., Winograd, T. et al., Enhancing the development life cycle to produce secure software, vol. 1, pp. i-iv, U.S. Department of Defense, 2008.

8. van Lamsweerde, A., Brohez, S., De Landtsheer, R., Janssens, D., From System Goals to Intruder Anti-Goals: Attack Generation and Resolution for Security Requirements Engineering, in: Proceedings of the Requirements for High Assurance Workshop, Monterey Bay, CA, September 8, 2003, pp. 49–56.

9. American Institute of Certified Public Accountants (AICPA), Accounting for the Costs of Computer Software Developed or Obtained for Internal Use, AICPA Statement of Position (SOP) No. 98–1, The Evolution of Computer Software on Business Practices and Standards. Academy of Legal, Ethical and Regulatory Issues, 19., March 1998, www.aicpa.org.

10. ISACA, IS Auditing Guideline on Due Professional Care, Information Systems Audit and Control Association, March 1, 2008, Mangla, M., Akhare, R., Ambarkar, S., Context-Aware Automation Based Energy Conservation Techniques for IoT Ecosystem, in: Energy Conservation for IoT Devices, pp. 129–153, Springer, Singapore, 2019.

11. Akhare, R., Mangla, M., Deokar, S., Wadhwa, V., Proposed Framework for Fog Computing to Improve Quality-of-Service in IoT Applications, in: Fog Data Analytics for IoT Applications, pp. 123–143, Springer, Singapore, 2020.

12. Deokar, S., Mangla, M., Akhare, R., A Secure Fog Computing Architecture for Continuous Health Monitoring, in: Fog Computing for Healthcare 4.0 Environments, pp. 269–290, Springer, Cham., 2021.

13. Potluri, S., Quality of Service based Task Scheduling Algorithms in Cloud Computing. Int. J. Electr. Comput. Eng., 7, 2, 1088–1095, April 2017.

14. Potluri, S., Efficient Hybrid QoS Driven Task Scheduling Algorithm in Cloud Computing Using a Toolkit: Clouds. JARDCS, 12-Special Issue, 1270–1283, 2017.

15. Potluri, S., A study on technologies in cloud-based design and manufacturing. IJMPERD, 8, 6, 187–192, 2018.

16. Potluri, S., Software virtualization using containers in google cloud platform. IJITEE, 8, 7, 2430–2432, May 2019.

17. Potluri, S., Simulation of QoS-Based Task Scheduling Policy for Dependent and Independent Tasks in a Cloud Environment, in: Smart Intelligent Computing and Applications, vol. 159, pp. 515–525, May 2019.

18. Potluri, S., Quality of Service-Based Cloud Models in Manufacturing Process Automation, in: Lecture Notes in Networks and Systems, vol. 32, pp. 231–240, 2019.

19. Potluri, S., Optimization model for QoS based task scheduling in cloud computing environment. IJEECS, 18, 2, 1081–1088, 2020.

20. Potluri, S., IOT Enabled Cloud Based Healthcare System Using Fog Computing: A Case Study. J. Crit. Rev., 7, 6, 1068–1072, 2020.

21. Potluri, S., Improved quality of service-based cloud service ranking and recommendation model. TELKOMNIKA Telecommun. Comput. Electron. Control, 18, 3, 1252–1258, June 2020.

22. Potluri, S., A Hybrid PSO Based Task Selection and Recommended System for Cloud Data. Test Eng. Manage., 83, 10210–10217, March-April 2020.

23. Potluri, S., A Hybrid Self-Adaptive PSO and QoS Based Machine Learning Model for Cloud Service Data. Test Eng. Manage., 83, 23736–23748, May-June 2020.

24. Le, D.N., Kumar, R., Nguyen, G.N., Chatterjee, J.M., Cloud computing and virtualization, John Wiley & Sons, India, 2018.

25. Jha, S., Kumar, R., Chatterjee, J.M., Khari, M., Collaborative handshaking approaches between internet of computing and internet of things towards a smart world: a review from 2009–2017. Telecommun. Syst., 70, 4, 617–634, 2019.

26. Chatterjee, J.M., Kumar, R., Khari, M., Hung, D.T., Le, D.N., Internet of Things based system for Smart Kitchen. Int. J. Eng. Manuf., 8, 4, 29, 2018.

27. Sujath, R., Chatterjee, J.M., Hassanien, A.E., A machine learning forecasting model for COVID-19 pandemic in India. Stochastic Environ. Res. Risk Assess., 1, 34, 2020.

28. Chatterjee, J., IoT with Big Data Framework using Machine Learning Approach. Int. J. Mach. Learn. Networked Collab. Eng., 2, 02, 75–85, 2018.

29. Chatterjee, J.M., Fog computing: beginning of a new era in cloud computing. Int. Res. J. Eng. Technol. (IRJET), 4, 05, 735, 2017.

30. Iwendi, C., Bashir, A.K., Peshkar, A., Sujatha, R., Chatterjee, J.M., Pasupuleti, S., Jo, O., COVID-19 Patient Health Prediction Using Boosted Random Forest Algorithm. Front. Public Health, 8, 357, 2020.

31. Kumar, A., Chatterjee, J.M., Díaz, V.G., A novel hybrid approach of SVM combined with NLP and probabilistic neural network for email phishing. Int. J. Electr. Comput. Eng., 10, 1, 486, 2020.

32. Chatterjee, J.M., Priyadarshini, I., Le, D.N., Fog Computing and Its security issues, in: Security Designs for the Cloud, Iot, and Social Networking, pp. 59–76, 2019.

33. Choudhuri, A., Chatterjee, J.M., Garg, S., Internet of Things in Healthcare: A Brief Overview, in: Internet of Things in Biomedical Engineering, pp. 131–160, Academic Press, India, 2019.

34. Chatterjee, J.M., Bioinformatics Using Machine Learning. Global J. Internet Interv. Fusion, 1, 1, 28–35, 2018.

35. Shri, M.L., Devi, E.G., Balusamy, B., Chatterjee, J.M., Ontology-Based Information Retrieval and Matching in IoT Applications, in: Natural Language Processing in Artificial Intelligence, pp. 113–130, Apple Academic Press, India, 13, 4, 2020.

36. Radhakrishnan, S., Lakshminarayanan, A.S., Chatterjee, J.M., Hemanth, D.J., Forest data visualization and land mapping using support vector machines and decision trees. Earth Sci. Inf., 1–19, 2020.

37. Jain, V. and Chatterjee, J.M. (Eds.), Machine Learning with Health Care Perspective: Machine Learning and Healthcare, vol. 13, Springer Nature, India, 2020.

38. Chatterjee, J.M., COVID-19 Mortality Prediction for India using Statistical Neural Network Models. Front. Public Health, 8, 441, 2020.

39. Kumar, A., Payal, M., Dixit, P., Chatterjee, J.M., Framework for Realization of Green Smart Cities Through the Internet of Things (IoT), in: Trends in Cloud-based IoT, pp. 85–111, Springer, Cham, 2020.

40. Sujatha, R., Nathiya, S., Chatterjee, J.M., Clinical Data Analysis Using IoT Data Analytics Platforms, in: Internet of Things Use Cases for the Healthcare Industry, pp. 271–293, Springer, Cham, 2020.

- *Corresponding author

- †Corresponding author