CHAPTER THREE

Databases

A cloud database is a database service that is built and deployed on cloud infrastructure. Easily via the internet, cloud databases serve the same functions as any other modern relational or nonrelational databases, but with the added flexibility that comes with cloud computing. With a cloud database, you don't have to buy any dedicated hardware, and you don't have to worry about the infrastructure to run and scale the database. Depending on your needs and your team's abilities, you can choose a fully managed or a self-managed service.

Advantages of databases in the cloud:

- Managed: Cloud databases offer options to automate database provisioning, storage capacity management, and other time-consuming management tasks.

- Scalable: As the amount of data stored in your cloud database grows or shrinks, the storage capacity can be adjusted at runtime to accommodate the changes.

- Easy to access: Cloud databases can be easily accessed over the internet via APIs or a web console.

- Disaster recovery: Managed databases offer automated backups and recovery to restore instances to an earlier state.

- Secure: Cloud databases are secure, offering data encryption at rest and in transit, along with private connectivity to the applications that interact with them.

This chapter covers the two broad categories of cloud databases (relational and nonrelational), their advantages and common use cases, and options available for running them on Google Cloud.

Databases are critical parts of an application; they store data so that the application can easily access it when needed. For example: On a retail website you are browsing products to purchase. These items are stored in a database and rendered on the page when you request them. There are two broad categories of databases: relational (SQL) and nonrelational (NoSQL) databases.

Relational Databases

In relational databases information is stored in tables, rows, and columns, an arrangement that typically works best for structured data. As a result, they are used for applications in which the structure of the data does not change often. SQL (Structured Query Language) is used when interacting with most relational databases. They offer ACID consistency mode for the data, which means:

- Atomic: All operations in a transaction succeed or the operation is rolled back.

- Consistent: On the completion of a transaction, the database is structurally sound.

- Isolated: Transactions do not contend with one another. Contentious access to data is moderated by the database so that transactions appear to run sequentially.

- Durable: The results of applying a transaction are permanent, even in the presence of failures.

Because of these properties, relational databases are used in applications that require high accuracy and for transactional queries such as financial and retail transactions. For example: In banking when a customer makes a funds transfer request, you want to make sure that the transaction is possible and that it happens on the most up-to-date account balance; in this case, an error or resubmit request is likely fine but stale response is not.

Nonrelational databases

Nonrelational databases (or NoSQL databases) store complex, unstructured data in a nontabular form such as documents. Nonrelational databases are often used when large quantities of complex and diverse data need to be organized, or where the structure of the data is regularly evolving to meet new business requirements. Unlike relational databases, they perform faster because a query doesn't have to access several tables to deliver an answer, making them ideal for storing data that may change frequently or for applications that handle many different kinds of data. For example: An apparel store might have a database in which shirts have their own document containing all their information, including size, brand, and color, with room for adding more parameters such as sleeve size and collars later.

Qualities that make NoSQL databases fast:

- Optimized: They are typically optimized for a specific workload pattern (i.e., key-value, graph, wide-column).

- Horizontal scaling: They use range or hashed distributions to provide horizontal scaling.

- Eventual consistency: Many NoSQL stores usually exhibit consistency at some later point (e.g., lazily at read time). However, Firestore offers strong global consistency.

- Transactions: A majority of NoSQL stores don't support cross-shard transactions or flexible isolation modes. However, Firestore provides ACID transactions across shards with serializable isolation.

Because of these properties, nonrelational databases are used in applications that require reliability, availability, and large-scale, frequent data changes. They can easily scale horizontally by adding more servers, unlike some relational databases, which scale vertically by increasing the machine size as the data grows (although some relational databases such as Cloud Spanner support scale-out and strict consistency). Nonrelational databases can store a variety of unstructured data such as documents, key-value, graphs, wide columns, and more.

Which One Is Best?

Choosing a relational or a nonrelational database largely depends on the use case. Generally, if your application requires ACID transactions and your data is not going to change much, select a relational database. If your data may change later and if scale and availability is a bigger requirement than consistency, then a nonrelational database is a preferable choice.

In this chapter we will explore various types of databases available in Google Cloud and their use cases.

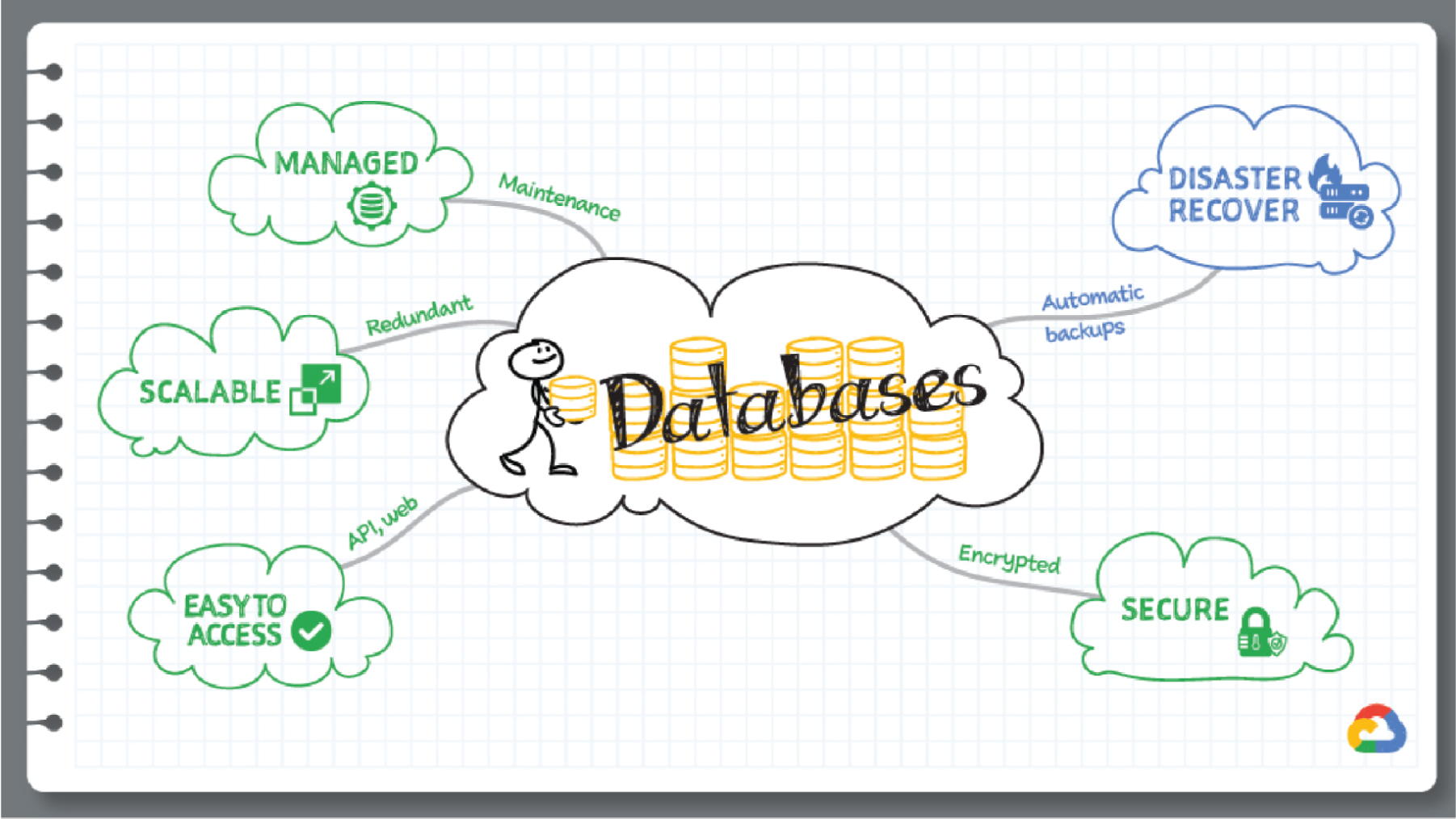

When you are building an application, chances are you will need a relational database for transaction processing. That's where Cloud SQL comes in. It is a fully managed relational database for MySQL, PostgreSQL, and SQL Server. It reduces maintenance cost and automates database provisioning, storage capacity management, backups, and out-of-the-box high availability and disaster recovery/failover. Cloud SQL offers quick setup with standard connection drivers and built-in migration tools.

How to Set Up Cloud SQL

Cloud SQL is easy to set up:

- Select the region and zone where you would like the instances to be and they are created.

- Configure the machine type with the right number of CPU and the amount of memory your application needs.

- Choose the storage type, solid-state or hard disk drives, depending on latency, queries per second (QPS), and cost requirements.

Reliability and Availability

Cloud SQL also offers automated backups and point-in-time recovery options. You can set time slots and locations for backups. For production applications, it is recommended that you enable the built-in high availability (HA) option, which supports 99.95% SLA. With this, Google Cloud continuously monitors the Cloud SQL instance with a heartbeat signal, and when a primary fails, an automatic failover is triggered to another zone in your selected region in case of an outage. You can also create replicas across regions to protect from regional failure. And you can enable automatic storage increase so that more storage is added when nearing capacity.

Cloud SQL Insights, a free tool, helps detect, diagnose, and identify problems in a query for Cloud SQL databases. It provides self-service, intuitive monitoring, and diagnostic information that goes beyond detection to help you identify the root cause of performance problems.

Migrating an Existing MySQL Database to Cloud SQL

If you have an existing application that you are moving to the cloud, chances are you need to migrate your existing SQL database to Cloud SQL. Database Migration Service (DMS) simplifies the migration of MySQL and PostgreSQL databases from on-premises, Google Compute Engine, and other clouds to Cloud SQL. It is serverless, easy to set up, and available at no additional cost. It replicates data continuously for minimal downtime migrations.

Here's how it works:

- Provide your data source details—type of database engine, such as MySQL, PostgreSQL, Amazon RDS, or others. Pick one time or continuous replication for minimal downtime.

- Create a Cloud SQL instance as your destination.

- DMS makes connectivity to the source instance easy by providing multiple options. You can allow-list an IP address, create a reverse SSH tunnel via a cloud-hosted virtual machine, or set up VPC peering.

- Finally, test and promote the migrated instance to the primary Cloud SQL instance.

Security and Compliance

The data in Cloud SQL is automatically encrypted at rest and in transit. External connections can be enforced to be SSL-only. For secure connectivity you can also use Cloud SQL Proxy, a tool that helps you connect to your Cloud SQL instance from your local machine. You can control network access with firewall protection.

Cloud SQL in Action

Cloud SQL can be used in multiple use cases in conjunction with different compute options. You can use it in any application as a transactional database, long-term analytics backend with BigQuery, predictive analytics with Vertex AI, and event-driven messaging with Pub/Sub. Cloud SQL, when combined with Datastream (Change Data Capture), makes a great real-time analysis solution for any incoming data. Some examples include web/mobile apps, gaming, predictive analytics and inventory tracking.

Databases are part of virtually every application you run in your organization, and great apps need great databases. This section is focused on one such great database: Cloud Spanner.

Cloud Spanner is the only enterprise-grade, globally distributed, and strongly consistent database service built for the cloud, specifically to combine the benefits of relational database structure with nonrelational horizontal scale. It is a unique database that combines transactions, SQL queries, and relational structure with the scalability that you typically associate with nonrelational or NoSQL databases.

How Does Spanner Work?

You can deploy your Cloud Spanner instance in a configuration of your choice with a specific number of nodes, databases, and zones. In the image you see a four-node regional Cloud Spanner instance hosting two databases. A node is a measure of compute in Spanner. Node servers serve the read and write/commit transaction requests, but they don't store the data. Each node is replicated across three zones in the region. The database storage is also replicated across the three zones. Nodes in a zone are responsible for reading and writing to the storage in their zone. The data is stored in Google's underlying Colossus distributed replicated filesystem. This provides huge advantages when it comes to redistributing load, because the data is not linked to individual nodes. If a node or a zone fails, the database remains available, being served by the remaining nodes. No manual intervention is needed to maintain availability.

How Does Spanner Provide High Availability and Scalability?

Each table in the database is stored, sorted by primary key. Tables are divided by ranges of the primary key, and these divisions are known as splits. Each split is managed completely independently by different Spanner nodes. The number of splits for a table varies according to the amount of data; empty tables have only a single split. The splits are rebalanced dynamically depending on the amount of data and the load (dynamic resharding). But remember that the table and nodes are replicated across three zones. How does that work?

Everything is replicated across the three zones; the same goes for split management. Split replicas are associated with a group (Paxos) that spans zones. Using Paxos consensus protocols, one of the zones is determined to be a leader. The leader is responsible for managing write transactions for that split, whereas the other replicas can be used for reads. If a leader fails, the consensus is redetermined, and a new leader may be chosen. For different splits, different zones can become leaders, thus distributing the leadership roles among all the Cloud Spanner compute nodes. Nodes will likely be both leaders for some splits and replicas for others. Using this distributed mechanism of splits, leaders, and replicas, Cloud Spanner achieves both high availability and scalability.

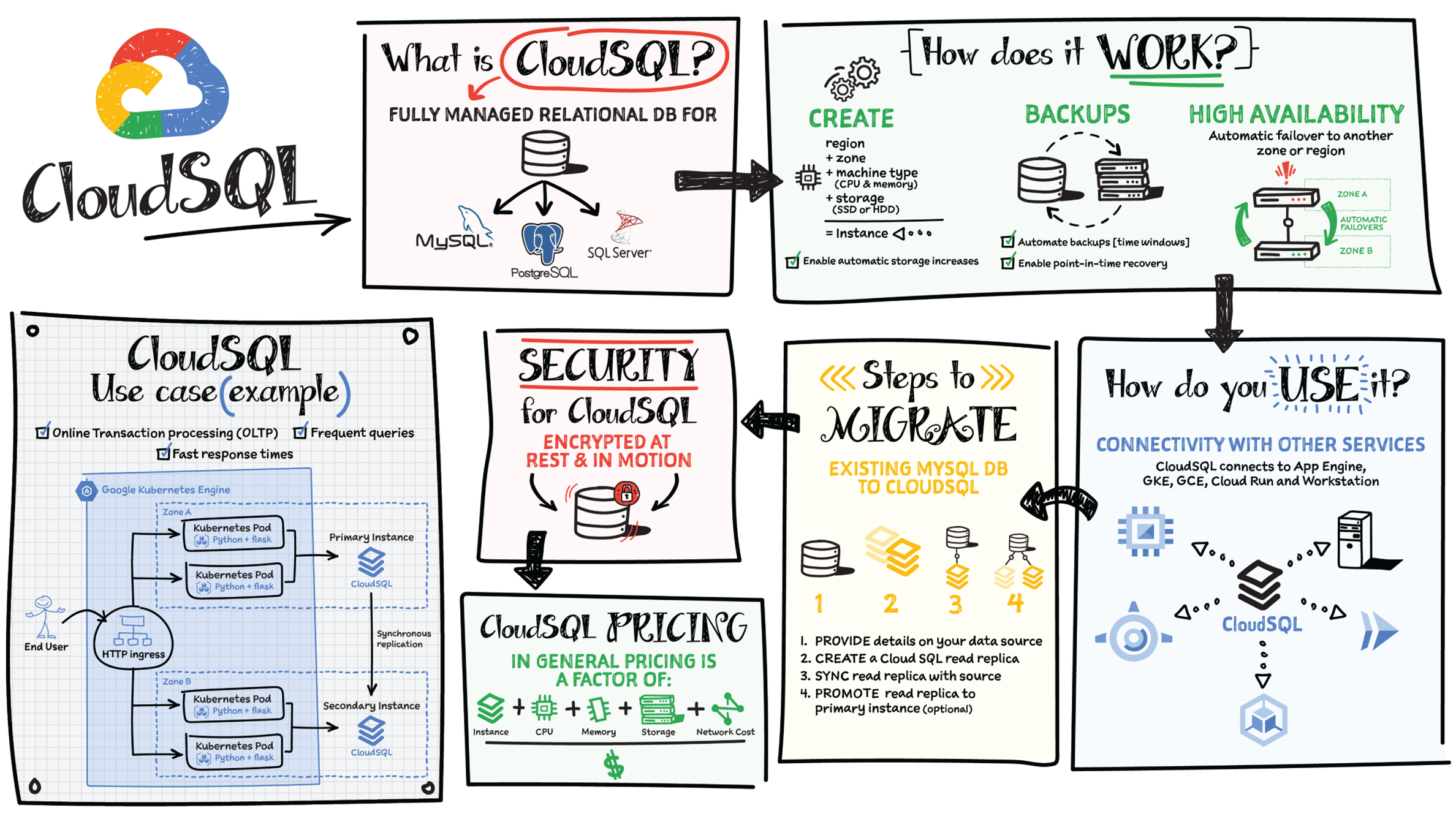

How Do Reads and Writes Work?

There are two types of reads in Cloud Spanner:

- Strong reads are used when the absolute latest value needs to be read. Here's how it works:

- The Cloud Spanner API identifies the split, looks up the Paxos group to use for the split, and routes the request to one of the replicas (usually in the same zone as the client). In this example, the request is sent to the read-only replica in zone 1.

- The replica requests from the leader if it is OK to read, and it asks for the TrueTime timestamp of the latest transaction on this row.

- The leader responds, and the replica compares the response with its own state.

- If the row is up-to-date, it can return the result. Otherwise, it needs to wait for the leader to send updates.

- The response is sent back to the client.

In some cases—for example, when the row has just been updated while the read request is in transit—the state of the replica is sufficiently up-to-date that it does not even need to ask the leader for the latest transaction.

- Stale reads are used when low read latency is more important than getting the latest values, so some data staleness is tolerated. In a stale read, the client does not request the absolute latest version, just the data that is most recent (e.g., up to n seconds old). If the staleness factor is at least 15 seconds, the replica in most cases can return the data without even querying the leader since its internal state will show that the data is sufficiently up-to-date. You can see that in each of these read requests, no row locking was required—the ability for any node to respond to reads is what makes Cloud Spanner so fast and scalable.

How Does Spanner Provide Global Consistency?

TrueTime is essential to make Spanner work as well as it does…so, what is it, and how does it help?

TrueTime is a way to synchronize clocks in all machines across multiple datacenters. The system uses a combination of GPS and atomic clocks, each correcting for the failure modes of the other. Combining the two sources (using multiple redundancy, of course) gives an accurate source of time for all Google applications. But clock drift on each machine can still occur, and even with a sync every 30 seconds, the difference between the server's clock and the reference clock can be as much as 2 ms. The drift will look like a sawtooth graph, with the uncertainty increasing until corrected by a clock sync. Since 2 ms is quite a long duration (in computing terms, at least), TrueTime includes this uncertainty as part of the time signal.

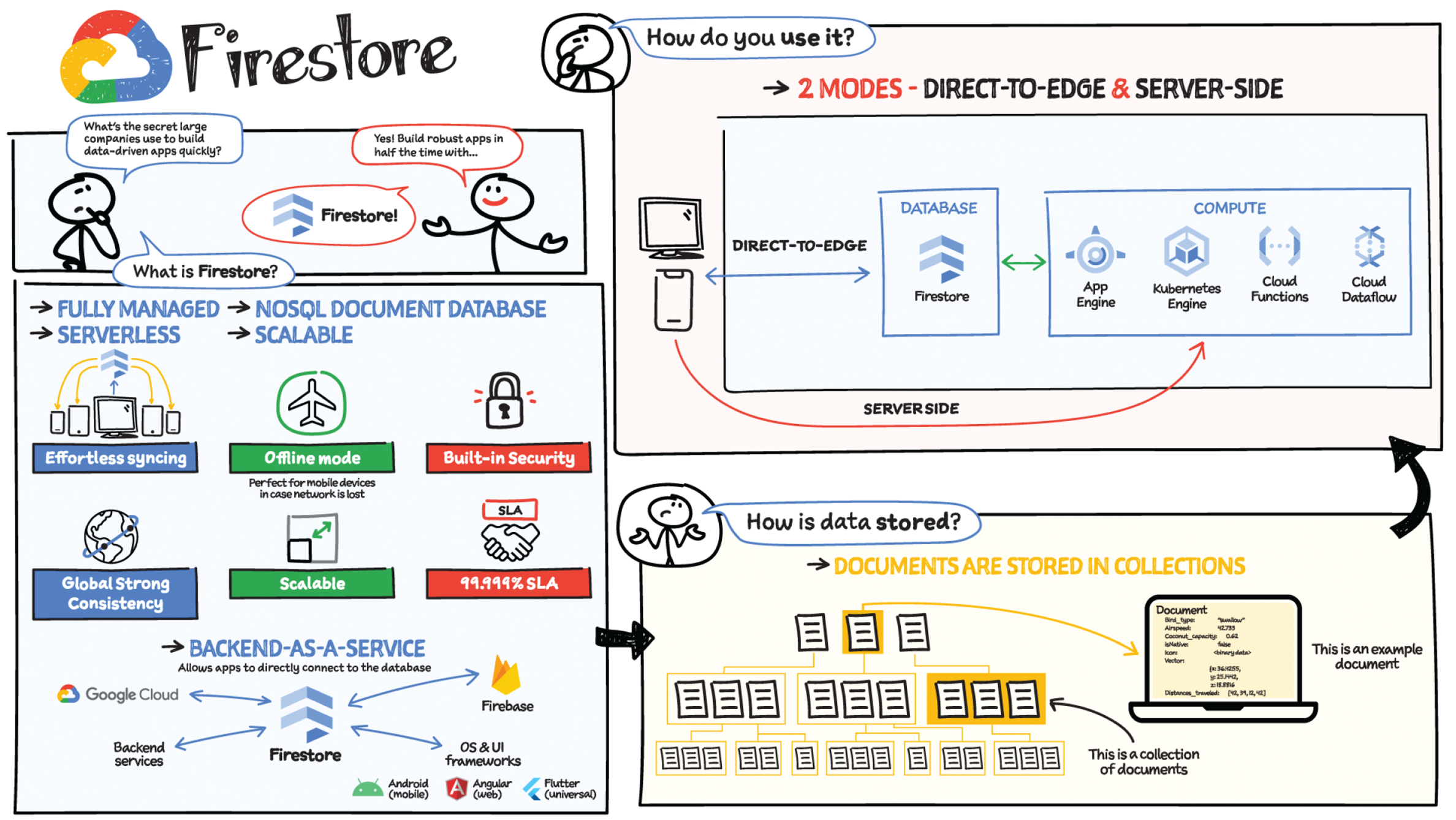

Your product teams may wonder why it takes so long to build a new feature or application. One reason that building applications is such a heavy lift is technical complexity, which includes the complexity of backend services that are used to manage and store data. Time spent dealing with this technical complexity is a distraction from delivering on core business value. Firestore eliminates this distraction by having Google Cloud manage backend complexity through a complete backend-as-a-service! Firestore acts as a glue that intelligently brings together the complete Google Cloud backend ecosystem, in-app services from Firebase, and core UI frameworks and OS from Google.

What Is Firestore?

Firestore is a serverless, fully managed NoSQL document database that scales from zero to global scale without configuration or downtime. Here's what makes Firestore unique:

- Ideal for rapid, flexible, and scalable web and mobile development with direct connectivity to the database.

- Supports effortless real-time data synchronization with changes in your database as they happen.

- Robust support for offline mode, so your users can keep interacting with your app even when the internet isn't available or is unreliable.

- Fully customizable security and data validation rules to ensure data is always protected.

- Built-in strong consistency, elastic scaling, high performance, and best-in-class 99.999% availability.

- Integration with Firebase and Google Cloud services like Cloud Functions and BigQuery.

- In addition to a rich set of Google Cloud service integrations, Firestore offers deep one-click integrations with a growing set of third-party partners via Firebase Extensions to help you build applications even more rapidly.

Document-Model Database

Firestore is a document-model database. All of your data is stored in documents and then collections. You can think of a document as a JSON object. It's a dictionary with a set of key-value mappings, where the values can be several different supported data types, including strings, numbers, or binary values.

Documents are stored in collections. Documents can't directly contain other documents, but they can point to subcollections that contain other documents, which can point to subcollections, and so on. This structure brings with it a number of advantages. For starters, all queries that you make are shallow, meaning that you can grab a document without worrying about grabbing all the data underneath it. And this means that you can structure your data hierarchically in a way that makes sense to you logically, without having to worry about grabbing tons of unnecessary data.

How Do You Use Firestore?

Firestore can be used in two modes:

- Firestore in Native mode: This mode is differentiated by its ability to directly connect your web and mobile apps to Firestore. Native Mode supports up to 10K writes per second and over a million connections.

- Firestore in Datastore mode: This mode supports only server-side usage of Firestore, but it supports unlimited scaling, including writes.

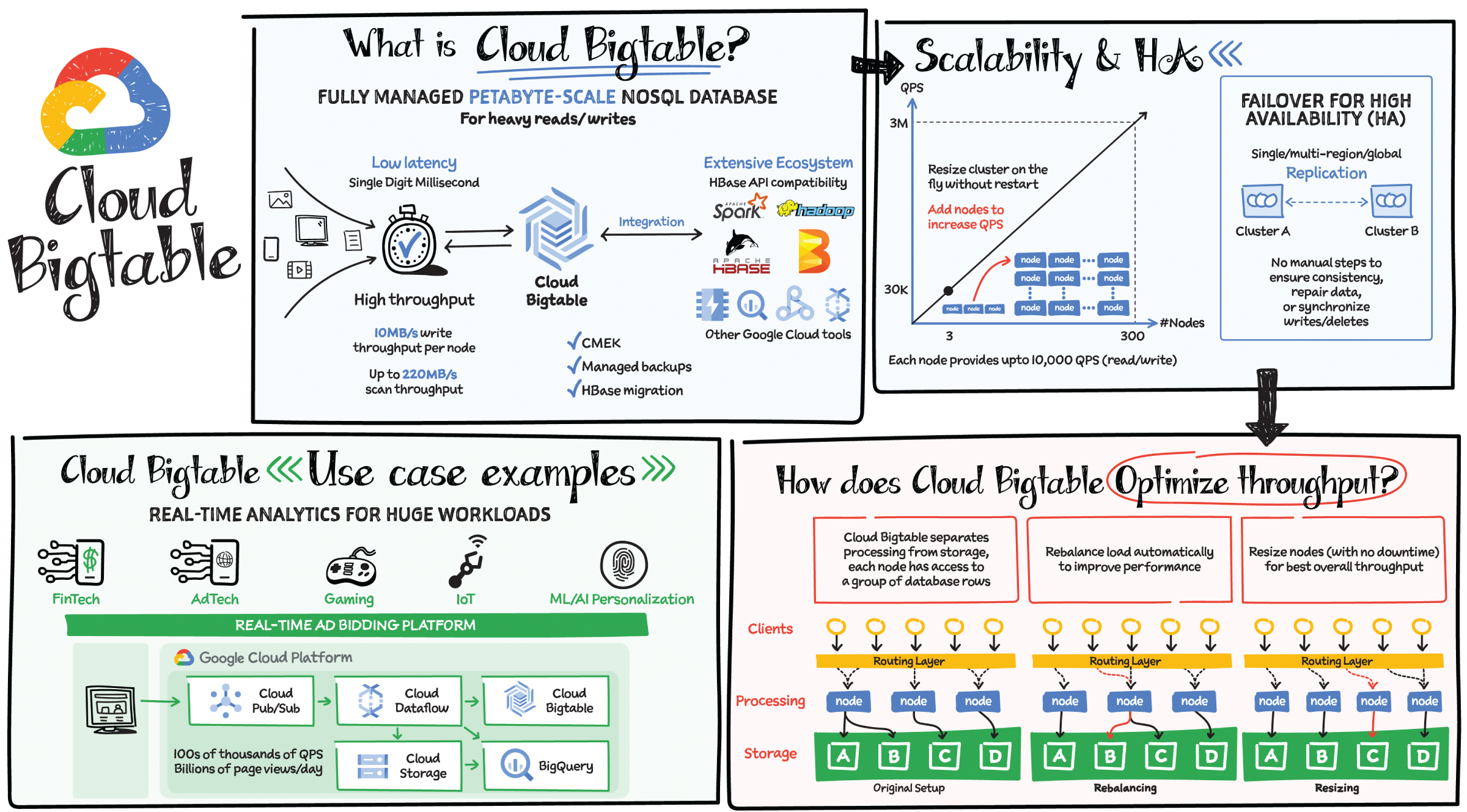

Building an application that needs low latency and high throughput? You need a database that can scale for a large number of reads and writes. Cloud Bigtable is designed to handle just that.

Cloud Bigtable is a fully managed wide-column NoSQL database that scales to petabyte-scale. It's optimized for low latency, large numbers of reads and writes, and maintaining performance at scale. It offers really low latency of the order of single-digit milliseconds. It is an ideal data source for time series and MapReduce-style operations. Bigtable supports the open source HBase API standard to easily integrate with the Apache ecosystem, including HBase, Beam, Hadoop, and Spark. It also integrates with the Google Cloud ecosystem, including Memorystore, BigQuery, Dataproc, Dataflow, and more.

Some Cloud Bigtable Features

- Data is by default encrypted with Google managed encryption keys, but for specific compliance and regulatory requirements if customers need to manage their own keys, customer managed encryption keys (CMEKs) are also supported.

- Bigtable backups let you save a copy of a table's schema and data, then restore from the backup to a new table at a later time. Backups can help you recover from application-level data corruption or from operator errors such as accidentally deleting a table.

Scale and High Availability (HA)

How BIG is Bigtable? Bigtable has nearly 10 exabytes of data under management.

It delivers highly predictable performance that is linearly scalable. Throughput can be adjusted by adding/removing nodes—each node provides up to 10,000 operations per second (read and write). You can use Bigtable as the storage engine for large-scale, low-latency applications as well as throughput-intensive data processing and analytics. It offers high availability with an SLA of 99.9% for zonal instances. It's strongly consistent in a single cluster; replication between clusters adds eventual consistency. If you leverage Bigtable's multicluster routing across two clusters, the SLA increases to 99.99%, and if that routing policy is utilized across clusters in three different regions, you get a 99.999% uptime SLA.

Replication for Cloud Bigtable enables you to increase the availability and durability of your data by copying it across multiple regions or multiple zones within the same region. To use replication in a Bigtable instance, just create an instance with more than one cluster or add clusters to an existing instance. Bigtable supports up to four replicated clusters located in Google Cloud zones where Bigtable is available. Placing clusters in different zones or regions enables you to access your data even if one zone or region becomes unavailable. Bigtable treats each cluster in your instance as a primary cluster, so you can perform reads and writes in each cluster. You can also set up your instance so that requests from different types of applications are routed to different clusters. The data and changes to data are synchronized automatically across clusters.

How Does It Optimize Throughput?

Through separation of processing and storage, Cloud Bigtable is able to automatically configure throughput by adjusting the association of nodes and data. In the rebalancing example, if Node A is experiencing a heavy load, the routing layer can move some of the traffic to a less heavily loaded node, improving overall performance. Resizing comes into play when a node is added to again ensure a balanced load across nodes, ensuring best overall throughput.

Choice of app profile and traffic routing can also affect performance. An app profile with multicluster routing automatically routes requests to the closest cluster in an instance from the perspective of the application, and the writes are then replicated to the other clusters in the instance. This automatic choice of the shortest distance results in the lowest possible latency. An app profile that uses single-cluster routing can be optimal for certain use cases, like separating workloads or having read-after-write semantics on a single cluster, but it will not reduce latency in the way multicluster routing does.

Replication can improve read throughput, especially when you use multicluster routing. And it can reduce read latency by placing your data geographically closer to your users. Write throughput does not increase with replication because write to one cluster must be replicated to all other clusters in the instance, resulting in each cluster spending the CPU resources to pull changes from the other clusters.

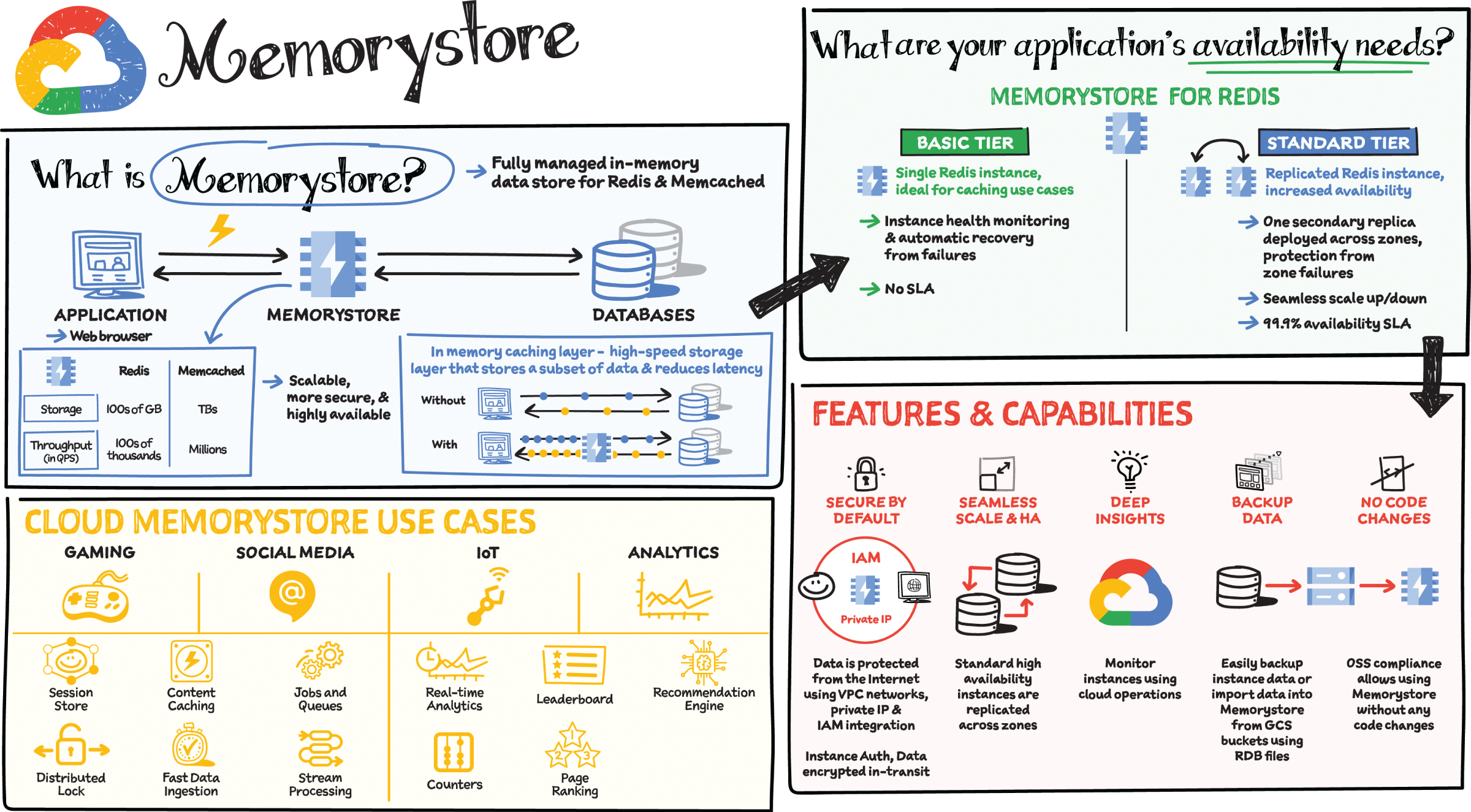

Many of today's applications, ranging from gaming, to cybersecurity, to social media, require processing data at sub-millisecond latency to deliver real-time experiences. To meet demands of low latency at increased scale and reduced cost, you need an in-memory data store. Redis and Memcached are among the most popular. Memorystore is a fully managed in-memory data store service for Redis and Memcached at Google Cloud. Like any other Google Cloud service, it is fast, scalable, highly available, and secure. It automates the complex tasks of provisioning, replication, failover, and patching so that you can spend more time on other activities. It comes with a 99.9% SLA and integrates seamlessly with your apps within Google Cloud.

Memorystore is used for different types of in-memory caches and transient stores. Memorystore for Redis is also used as a highly available key-value store. This serves multiple use cases, including web content caches, session stores, distributed locks, stream processing, recommendations, capacity caches, gaming leaderboards, fraud/threat detection, personalization, and AdTech.

What are Your Application's Availability Needs?

Memorystore for Redis offers Basic and Standard tiers. The Basic tier is best suited for applications that use Redis as a cache and that can withstand a cold restart and full data flush. Standard tier instances provide high availability using replication and automatic failover.

Memorystore for Memcached instances are provisioned on a node basis with vCPU and memory per cores per node, which means you can select them based on your specific application requirements.

Features and Capabilities

- Secure: Memorystore is protected from the internet using VPC networks and private IP and comes with IAM integration to protect your data. Memorystore for Redis also offers instance-level AUTH and in-transit encryption. It is also compliant with major certifications (e.g., HIPAA, FedRAMP, and SOC2).

- Observability: You can monitor your instance and set up custom alerts with Cloud Monitoring. You can also integrate with OpenCensus to get more insights into client-side metrics.

- Scalable: Start with the lowest tier and smallest size and then grow your instance as needed. Memorystore provides automated scaling using APIs and optimized node placement across zones for redundancy. Memorystore for Memcached can support clusters as large as 5 TB, enabling millions of QPS at very low latency.

- Highly available: Memorystore for Redis instances are replicated across two zones and provide a 99.9% availability SLA. Instances are monitored constantly and with automatic failover—applications experience minimal disruption.

- Migrate with no code changes: Memorystore is open source software compliant, which makes it easy to switch your applications with no code changes.

- Backups: Memorystore for Redis offers an import/export feature to migrate Redis instances to Google Cloud using RDS snapshots.

Use Cases

Memorystore is great for use cases that require fast, real-time processing of data. Simple caching, gaming leaderboards, and real-time analytics are just a few examples.

- Caching: Caches are an integral part of modern application architectures. Memorystore is used in caching use cases such as session management, frequently accessed queries, scripts, and pages.

- Gaming: With data structures like Sorted Set, Memorystore makes it easy to maintain a sorted list of scores for a leaderboard while providing uniqueness of elements. Redis hash makes it fast and easy to store and access player profiles.

- Stream Processing: Whether processing a Twitter feed or stream of data from IoT devices, Memorystore is a perfect fit for streaming solutions combined with Dataflow and Pub/Sub.

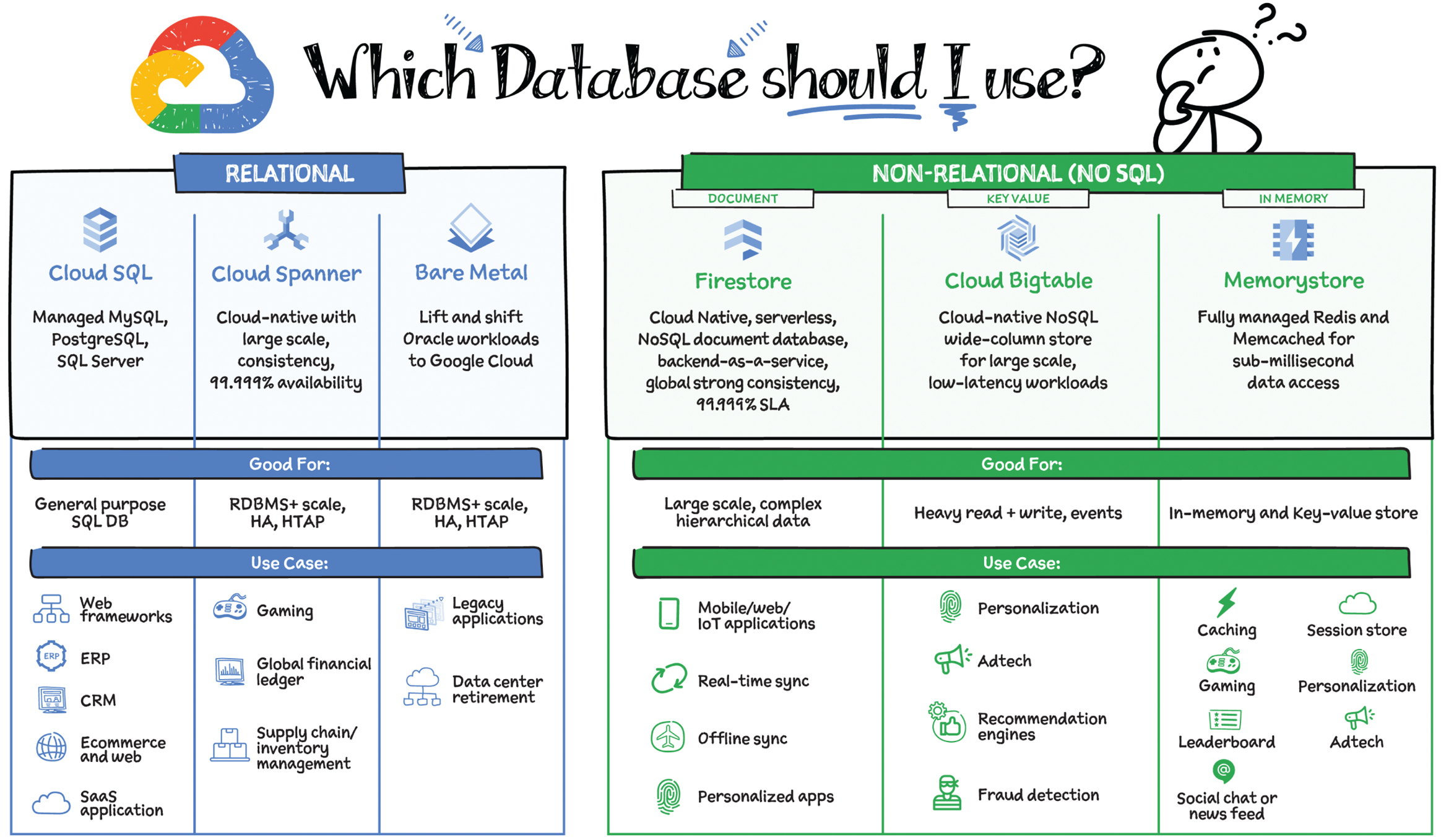

Picking the right database for your application is not easy. The choice depends on the use case: transactional processing, analytical processing, in-memory database, and so forth. In the beginning of this chapter, you learned when to use relational (SQL) and nonrelational (NoSQL) databases, and throughout this chapter we covered each of the databases in Google Cloud. Here let's stack them side by side to get a clear understanding of the use cases they support.

We have three different relational database options:

- Cloud SQL: Provides managed MySQL, PostgreSQL, and SQL Server databases on Google Cloud. It reduces maintenance cost and automates database provisioning, storage capacity management, backups, and out-of-the-box high availability and disaster recovery/failover. For these reasons, it is best for general-purpose web frameworks, CRM, ERP, SaaS, and e-commerce applications.

- Cloud Spanner: Cloud Spanner is an enterprise-grade, globally distributed, and strongly consistent database that offers up to 99.999% availability, built specifically to combine the benefits of relational database structure with nonrelational horizontal scale. It is a unique database that combines ACID transactions, SQL queries, and relational structure with the scalability that you typically associate with nonrelational or NoSQL databases. As a result, Spanner is best used for applications such as gaming, payment solutions, global financial ledgers, retail banking, and inventory management that require the ability to scale limitlessly with strong consistency and high availability.

- Bare Metal Solution: Provides hardware to run specialized workloads with low latency on Google Cloud. This is specifically useful if there is an Oracle database that you want to lift and shift into Google Cloud. This enables data center retirements and paves a path to modernize legacy applications. For specifics on Bare Metal solution, refer to Chapter 1 where we covered this in detail.

We have three nonrelational databases in Google Cloud:

- Firestore: Firestore is a serverless document database that scales on demand, is strongly consistent, supports ACID transactions, offers up to 99.999% availability, and acts as a backend-as-a-service. It is a DBaaS that is optimized for building applications. It is perfect for all general-purpose use cases such as e-commerce, gaming, IoT, and real-time dashboards. With Firestore, users can interact with and collaborate on live and offline data, making it great for real-time application and mobile apps.

- Cloud Bigtable: Cloud Bigtable is a sparsely populated table that can scale to billions of rows and thousands of columns, enabling you to store terabytes or even petabytes of data. It is ideal for storing large amounts of single-keyed data with very low latency. It supports high read and write throughput at single-digit-millisecond latency, and it is an ideal data source for MapReduce operations. It also supports the open source HBase API standard, so it easily integrates with the Apache ecosystem, including HBase, Beam, Hadoop, and Spark, along with Google Cloud ecosystem.

- Memorystore: Memorystore is a fully managed in-memory data store service for Redis and Memcached at Google Cloud. It is best for in-memory and transient data stores and automates the complex tasks of provisioning, replication, failover, and patching so that you can spend more time coding. Because it offers extremely low latency and high performance, Memorystore is great for web and mobile, gaming, leaderboard, social, chat, and newsfeed applications.