CHAPTER FIVE

Application Development and Modernization

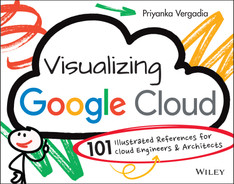

While virtually all applications share the same basic elements (compute, storage, database access, and networking), there are different ways to develop and deploy those applications in the cloud, depending on your specific requirements. You can migrate an existing on-premises application to the cloud, deploy your application to a hybrid or multicloud environment, or build a cloud-native, serverless application. Most modern applications benefit from a microservices-based, event-driven architecture. In this type of architecture, each microservice can be developed and deployed independently of other services, which gives developers flexibility and increases development velocity. Building or modernizing an application requires:

- Service orchestration and choreography

- DevOps for continuous integration and continuous delivery from code to deployed applications

- Operations to monitor, log, and troubleshoot applications

- Security to protect the data and the infrastructure

This chapter covers the Google Cloud tools used to develop and modernize an application, whether you're migrating, deploying to hybrid and multicloud environments, or creating cloud-native, microservices-based applications.

Picture this: You are building or enhancing an application to fulfill a business need. You know that you want to deploy this application in the cloud. At the start, you'll need to answer a few questions that will shape the entire effort and the tools you will need:

- Will you migrate an existing on-premises application to the cloud and then add new functionality?

- Will you deploy to a hybrid or a multicloud environment?

- Will you build a cloud-native application?

Building and Modernizing Cloud Applications

- Migrating an application to the cloud: Migrating an existing application can increase the speed of deployment, while enabling you to capitalize on the benefits of the cloud, modernize your application in the process, and focus more on day 2 operations like maintenance and optimization.

- Hybrid and multicloud applications: Sometimes simple migration is not the best way forward. You may have applications that need to be deployed across multiple clouds and on-premises. To support such applications, you must be able to build, deploy, and optimize them consistently, easily, and securely across hybrid and multicloud environments.

- Cloud-native applications: Cloud-native applications are built to run in the cloud, focusing more on services and code than on the underlying infrastructure that is used to run them. Often based on a microservices architecture, they typically rely on containers for packaging and deployment of code. This speeds delivery and supports better DevOps practices where development and operations teams work closely together.

You can deploy cloud-native applications in fully managed serverless environments where you develop, deploy, and scale applications rapidly and securely without having to manage the infrastructure as you scale. This helps reduce time-to-market and simplifies the developer experience.

Microservices or Monolith?

Whether you are building an application from scratch or migrating and enhancing it, you want to think about modernizing your application for scale, agility, manageability, and delivery speed. Most modern-day requirements call for a microservices architecture because:

- Services are standalone or modular and can be reused across multiple applications.

- A failure in one service does not have to bring down the whole application.

- You can independently release services.

- Development teams can use different programming languages for different services.

- DevOps practices have matured to support the speed of microservices deployments.

A monolithic application is built as a single unit; to make any change to the system, a developer must build and deploy an updated version of the server-side application. When you are migrating a monolithic application to the cloud, you can approach it in three ways:

- Lift and shift: You can migrate the entire monolith to a virtual machine, or to the hardware it needs, in the cloud. This is often the fastest, simplest way to migrate, but it is unlikely to be a long-term solution.

- Move and improve: You could migrate one service at a time, decoupling the service and its dependencies while containerizing your applications and adopting a microservices architecture. This approach takes longer, but you will modernize your application in the process, helping you reap the benefits of the cloud.

- Refactor: If you choose to refactor your application, you can adopt serverless deployments and gain even more of the cloud's benefits.
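One common way to "move and improve" one service at a time is a routing facade, often called the strangler fig pattern (a technique this chapter does not name): requests for capabilities that have already been migrated go to the new microservice, and everything else still reaches the monolith. The sketch below assumes path-prefix routing, and the backend names are hypothetical. In practice a load balancer or API gateway usually plays this role rather than application code.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class RoutingFacade:
    """Routes requests by path prefix while a monolith is migrated piece by piece.

    Backend names are hypothetical placeholders, not real endpoints.
    """

    monolith: str = "legacy-monolith"
    migrated: dict[str, str] = field(default_factory=dict)

    def migrate(self, prefix: str, service: str) -> None:
        # Cut one capability over to its new microservice.
        self.migrated[prefix.rstrip("/")] = service

    def route(self, path: str) -> str:
        # Match whole path segments so "/orders" does not capture "/orders-archive".
        matches = [p for p in self.migrated if path == p or path.startswith(p + "/")]
        if not matches:
            return self.monolith  # Not migrated yet.
        # Prefer the most specific (longest) migrated prefix.
        return self.migrated[max(matches, key=len)]


facade = RoutingFacade()
facade.migrate("/orders", "orders-service")
print(facade.route("/orders/42"))   # orders-service
print(facade.route("/invoices/7"))  # legacy-monolith
```

As more capabilities move out, more prefixes are registered, until the monolith receives no traffic and can be retired.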

What Do Most Microservices Need?

Hybrid and multicloud applications are often deployed in containers so that they can be orchestrated consistently across multiple environments. But these microservices need to communicate with each other, need a proper DevOps process for continuous deployment, and have to be monitored and secured.

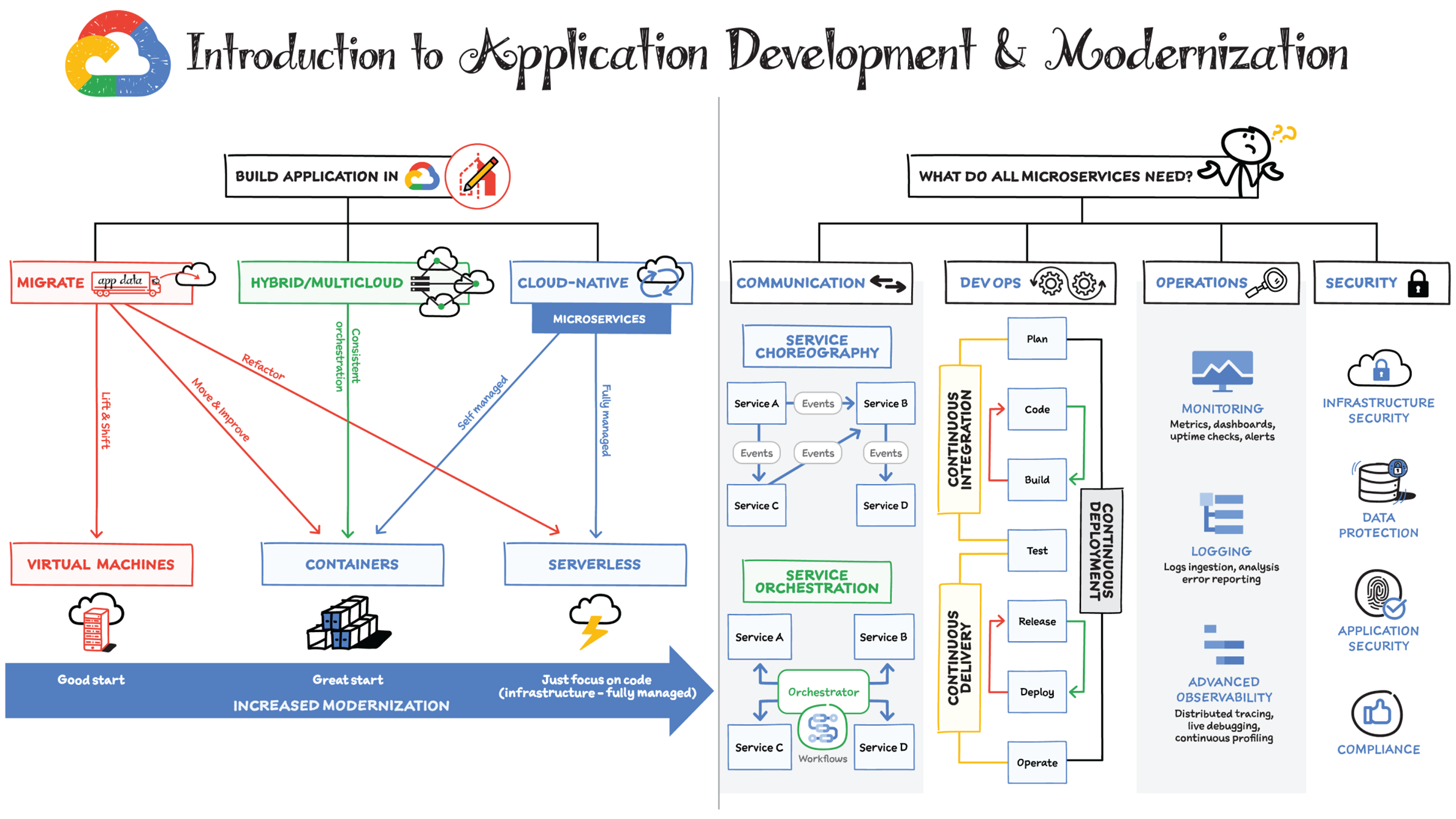

Where to Begin?

Understanding your starting point is essential to planning and executing a successful application migration strategy. Take a comprehensive approach that considers not only technical requirements but also your business goals (both present and future), any critical timelines, and your own internal capabilities. Depending on your situation, you might fall into any of the categories below with respect to time-to-value. There is no one-size-fits-all approach to migration, but whichever path you choose, you can always build on top of it and continue to gain more of the cloud's advantages incrementally.

Should You Migrate to Google Cloud?

To determine whether your application can and should migrate to cloud, begin by asking yourself the following questions:

- Are the components of my application stack virtualized or virtualizable?

- Can my application stack run in a cloud environment while still supporting any and all licensing, security, privacy, and compliance requirements?

- Can all application dependencies (e.g., third-party languages, frameworks, libraries) be supported in the cloud?

If you answer “no” to any of these questions, you should evaluate whether it is feasible to replace those application components with a cloud offering. If not, leave those components on-premises during the initial phase of your digital transformation while you focus on the migration of your other application components.
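The three questions act as a per-component filter. The sketch below assumes you have already answered them for each component of your stack; the component names and the `cloud_replacement` field are hypothetical, and a real assessment would draw on your own inventory data.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Component:
    """One part of an application stack, with answers to the three questions."""

    name: str
    virtualizable: bool           # Virtualized or virtualizable?
    meets_requirements: bool      # Licensing, security, privacy, compliance OK in the cloud?
    dependencies_supported: bool  # Languages, frameworks, libraries supported in the cloud?
    cloud_replacement: str | None = None  # Cloud offering that could replace it, if any.


def plan_initial_phase(components: list[Component]) -> dict[str, list[str]]:
    """Sort components into migrate / replace / keep on-premises for the first phase."""
    plan: dict[str, list[str]] = {"migrate": [], "replace": [], "keep_on_prem": []}
    for c in components:
        if c.virtualizable and c.meets_requirements and c.dependencies_supported:
            plan["migrate"].append(c.name)
        elif c.cloud_replacement:
            # A "no" answer, but a cloud offering can feasibly replace the component.
            plan["replace"].append(f"{c.name} -> {c.cloud_replacement}")
        else:
            # A "no" answer and no replacement: leave it on-premises for now.
            plan["keep_on_prem"].append(c.name)
    return plan


stack = [
    Component("web-frontend", True, True, True),
    Component("legacy-db", True, True, False, cloud_replacement="Cloud SQL"),
    Component("licensed-app", True, False, True),
]
print(plan_initial_phase(stack))
```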

If retention on-premises is no longer viable (e.g., if you must completely shut down your data center) or if you want to increase proximity to cloud resources, then recommended alternatives include taking advantage of Google Cloud's Bare Metal Solution, or shifting to a co-location facility (colo) adjacent to the appropriate cloud region.

Which Migration Path Is Right for You?

As you embark on your transformation journey, we recommend considering five key types of migration to Google Cloud:

- Migrating to Google Cloud managed services

- Migrating to containers on Google Kubernetes Engine (GKE) or Anthos

- Migrating to VMs (“Lift and Shift”) on Google Compute Engine (GCE)

- Migrating to Google Cloud VMware Engine

- Migrating to the Google Cloud Bare Metal Solution

Here are example scenarios:

If you are dealing with aggressive timelines, “lift and shift” might be a good choice: relocating to the cloud gives you immediate infrastructure modernization, and you can follow up with further modernization later.

If you want to take immediate advantage of moving to the cloud but are still constrained by time and skills, then “lift and optimize” is a great choice. Using Compute Engine VMs or Google Cloud VMware Engine, you keep the same familiar virtualized environment but can now take advantage of cloud elasticity and scale.

If you are seeking to immediately leverage the full benefits of the cloud (e.g., elasticity, scale, managed services), it might be most efficient to modernize more aggressively (such as by adopting container technology) in conjunction with migration. “Move and improve” and “refactoring” are great fits in this situation, but either strategy will take a bit longer to execute because the current apps need changes to make them container-friendly and/or serverless.

This decision tree will help you decide which path is right for your application.

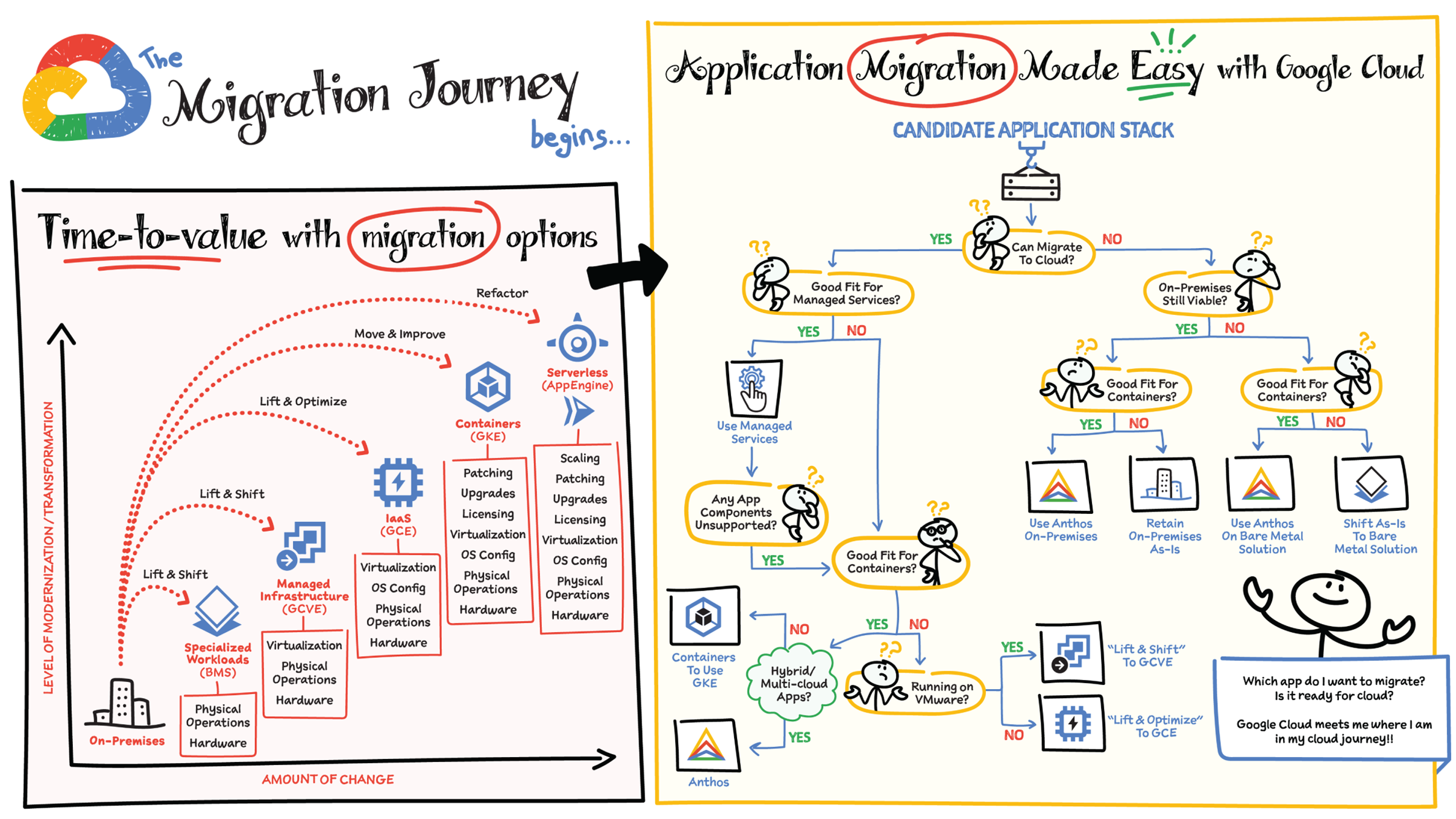
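The example scenarios above can also be read as a small decision function. This sketch encodes only those three scenarios, using hypothetical parameter names; it is not a transcription of the decision tree figure.

```python
def choose_migration_path(
    aggressive_timeline: bool,
    constrained_time_or_skills: bool,
    ready_for_serverless: bool = False,
) -> str:
    """Map the example scenarios to a migration path (a simplification)."""
    if aggressive_timeline:
        # Relocate first; modernize later.
        return "lift and shift"
    if constrained_time_or_skills:
        # Familiar VMs (Compute Engine or VMware Engine) plus cloud elasticity.
        return "lift and optimize"
    # Enough time and skills to modernize during the migration itself.
    return "refactor" if ready_for_serverless else "move and improve"


print(choose_migration_path(aggressive_timeline=False, constrained_time_or_skills=False))
```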

Common Cloud Migration Use Cases

Use Case 1: Hybrid Cloud Burst

- Set up the connectivity between on-premises and the cloud using Cloud Interconnect.

- Create a cloud landing zone; this includes creating the project and the resources such as Google Compute Engine (GCE), Google Kubernetes Engine (GKE), Google Cloud VMware Engine (GCVE), or Anthos.

- Then “lift and shift” or “lift and optimize” your workloads from on-premises to the appropriate cloud resource.

- At this point, you are ready to send the traffic bursts or excess traffic to Google Cloud to lower the stress on the existing data center.
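The burst step boils down to a capacity threshold. This is a minimal sketch, assuming traffic is measured in requests per second and that the on-premises capacity is a figure you determine yourself. In a real deployment the split is enforced by load balancing over the Cloud Interconnect link, not by application code like this.

```python
def split_burst(total_rps: int, on_prem_capacity_rps: int) -> tuple[int, int]:
    """Return (on_prem_rps, cloud_rps) for a simple cloud-burst policy.

    On-premises serves traffic up to its capacity; only the excess bursts to the cloud.
    """
    if total_rps < 0 or on_prem_capacity_rps < 0:
        raise ValueError("request rates must be non-negative")
    on_prem = min(total_rps, on_prem_capacity_rps)
    return on_prem, total_rps - on_prem


# Hypothetical loads against an on-premises capacity of 1,000 requests/second.
for load in (600, 1000, 1800):
    print(load, split_burst(load, 1000))
```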

Use Case 2: Modernize with Anthos

- Establish network connectivity to Google Cloud using Cloud Interconnect.

- Create the cloud landing zone.

- Then, “lift and shift” workloads to free up capacity on-premises.

- Build an Anthos landing zone on-premises.

- Then, modernize apps both on-premises and in the cloud.

Use Case 3: Land, Expand, Retire

- Establish network connectivity to Google Cloud using Cloud Interconnect.

- Create the cloud landing zone.

- Then, migrate all workloads.

- Finally, retire the data center once complete. Iterate through hardware retirement as needed.

Use Case 4: DR Site Promotion

- Establish network connectivity to Google Cloud using Cloud Interconnect.

- Create the cloud landing zone.

- You are then ready to duplicate all workloads in the cloud.

- Then, swap user connectivity to make the cloud the primary site.

- Finally, retire the colo all at once.

Whether you are starting or in the middle of your digital transformation journey, Google Cloud meets you wherever you are and makes it easy for you to move toward a more flexible and agile infrastructure. We hope these steps serve as a starting point and make your digital transformation easier.

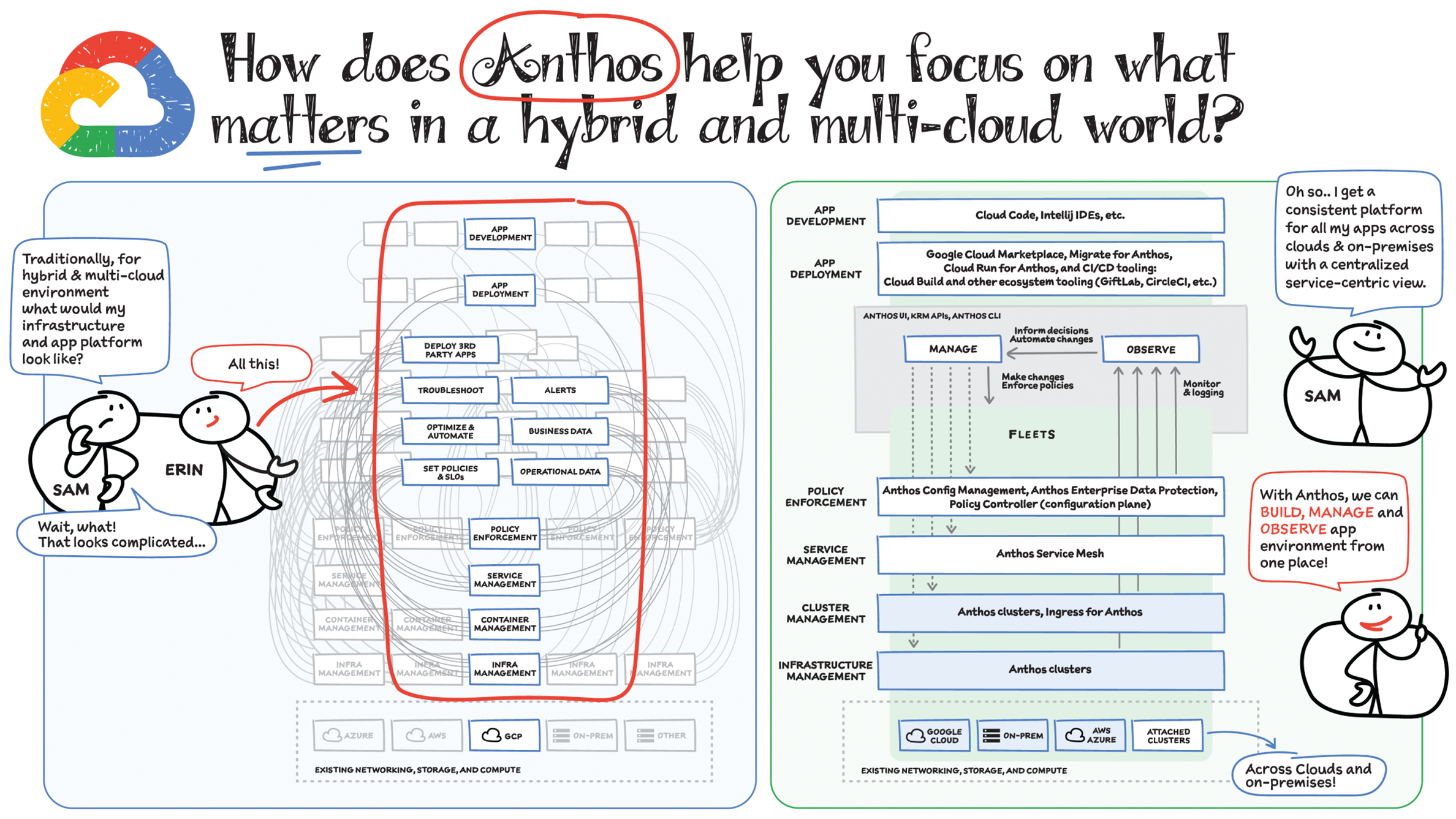

Most enterprises have applications in disparate locations — in their own data centers, in multiple public clouds, and at the edge. These apps run on different proprietary technology stacks, which reduces developer velocity, wastes computing resources, and hinders scalability. How can you consistently secure and operate existing apps while developing and deploying new apps across hybrid and multicloud environments? How can you get centralized visibility and management of the resources? Well, that is why Anthos exists. Here we explore why traditional hybrid and multicloud deployments are difficult, and then see how Anthos makes it easy to manage applications across multiple environments.

Why Is Traditional Hybrid and Multicloud Difficult?

In hybrid and multicloud environments, you need to manage infrastructure. Let's say you run containers on more than one cloud, and you develop apps using services on Google Cloud and Amazon Web Services (AWS). Regardless of environment, you need policy enforcement across your IT footprint. To manage your apps across the environment, you need monitoring and logging systems, and you must integrate that data into meaningful categories, like business data, operational data, and alerts.

Digging further, you might use operational data and alerts to inform optimizations, implement automations, and set policies or service-level objectives (SLOs). You might use business data to do all those things and to deploy third-party apps. Then, to actually enact the changes you decide to implement, you must act on different parts of the system. That means digging into each tool for policy enforcement, securing services, orchestrating containers, and managing infrastructure. Don't forget, all of this work is in addition to what it takes to develop and deploy your own apps.

Now, consider repeating this set of tasks across a hybrid and multicloud landscape. It becomes very complex, very quickly. Your platform admins, site reliability engineering (SRE), and DevOps teams who are responsible for security and efficiency have to do manual, cluster-by-cluster management, data collection, and information synthesis. With this complexity, it's hard to stay current, to understand business implications, and to ensure compliance (not to mention the difficulty of onboarding a new hire). Anthos helps solve these challenges!

How Does Anthos Make Hybrid and Multicloud Easy?

With Anthos, which is built on open source, you get a consistent way to manage your landscape, with the same infrastructure management, container management, service management, and policy enforcement across all your environments.

As a result, you have observability across all your platforms in one place, including access to business information, alerts, and operations information. With this information you might decide to optimize, automate, and set policies or SLOs.

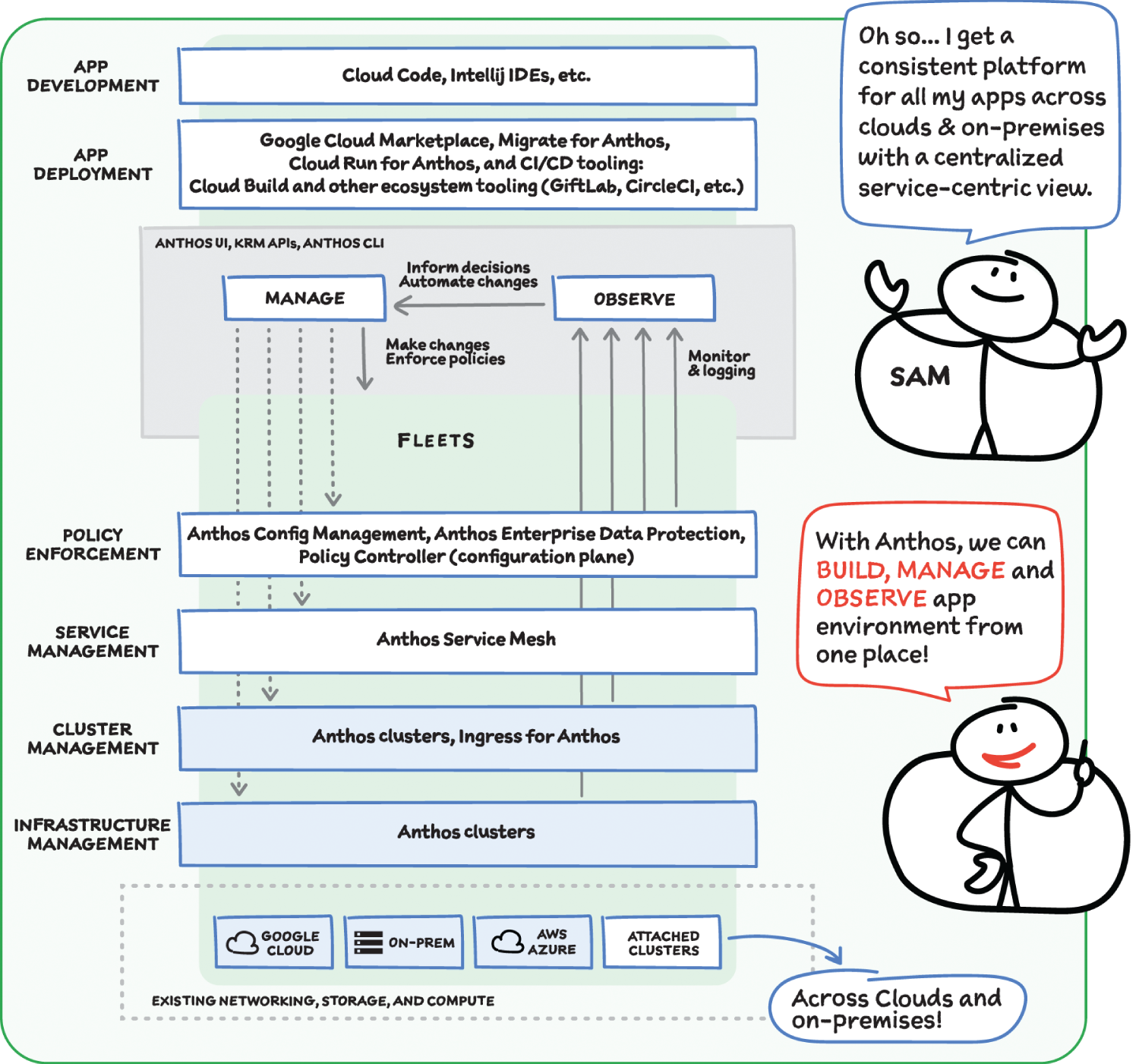

Digging Deeper into Anthos

Fleets

You may have different regions that need different policies, as well as different development, staging, or production environments that need different permissions. Some parts of your work may need more security. That's where fleets (formerly called environs) come in! Fleets are a way to create logical sets of underlying Kubernetes clusters, regardless of which platform those clusters live on. By grouping and managing sets of clusters as logical fleets, you can think about and work with your applications at the right level of detail for what you need to do, be it acquiring business insights over the entire system, updating settings for a dev environment, or troubleshooting data for a specific cluster. Using fleets, each part of the functional stack can take declarative direction about configuration, compliance, and more.
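Conceptually, a fleet is a grouping that spans platforms, with configuration declared once per group instead of per cluster. The sketch below models only that idea in plain Python; the cluster names and the `allow_privileged_containers` setting are hypothetical, and real fleets are managed through Anthos and Google Cloud, not through code like this.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Cluster:
    name: str
    platform: str     # e.g. "gke", "vmware", "bare-metal", "aws"
    environment: str  # e.g. "dev", "prod"


# Hypothetical per-fleet settings, declared once per fleet instead of per cluster.
FLEET_POLICIES = {
    "dev": {"allow_privileged_containers": True},
    "prod": {"allow_privileged_containers": False},
}


def group_into_fleets(clusters: list[Cluster]) -> dict[str, list[str]]:
    """Group cluster names by environment, regardless of the platform they run on."""
    fleets: dict[str, list[str]] = defaultdict(list)
    for c in clusters:
        fleets[c.environment].append(c.name)
    return dict(fleets)


def policy_for(cluster: Cluster) -> dict:
    # Every cluster inherits the settings declared for its fleet.
    return FLEET_POLICIES.get(cluster.environment, {})


clusters = [
    Cluster("gke-us", "gke", "prod"),
    Cluster("onprem-vmw", "vmware", "prod"),
    Cluster("aws-test", "aws", "dev"),
]
print(group_into_fleets(clusters))
```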

Modernize Application Development

Anthos also helps modernize application development: it uses fleets to enforce policies and processes, and it abstracts cluster and container management away from application teams. This makes it easy for those teams to incorporate a wide variety of continuous integration and continuous delivery (CI/CD) solutions on top of fleets. Anthos lets you view and manage your applications at the right level of detail, be it business insights for services across the entire system or troubleshooting data for a specific cluster. Anthos also works with container-based tools like buildpacks, which transform your application source code into images that can run on any cloud, to simplify the packaging process. And it offers Migrate for Anthos to move applications out of VMs and into a more modern, container-based hosting environment.

What's in It for Platform Administrators?

Anthos provides platform administrators with a single place to monitor and manage their landscape, with policy control and marketplace access. This reduces person-hours needed for management, enforcement, discovery, and communication. Anthos also provides administrators with an out-of-the-box structured view of their entire system, including services, clusters, and more, so they can improve security, use resources more efficiently, and demonstrate measurable success. Administrators also save time and effort by managing declaratively, and they can communicate the success, cost savings, and efficiency of the platforms without having to manually combine data.

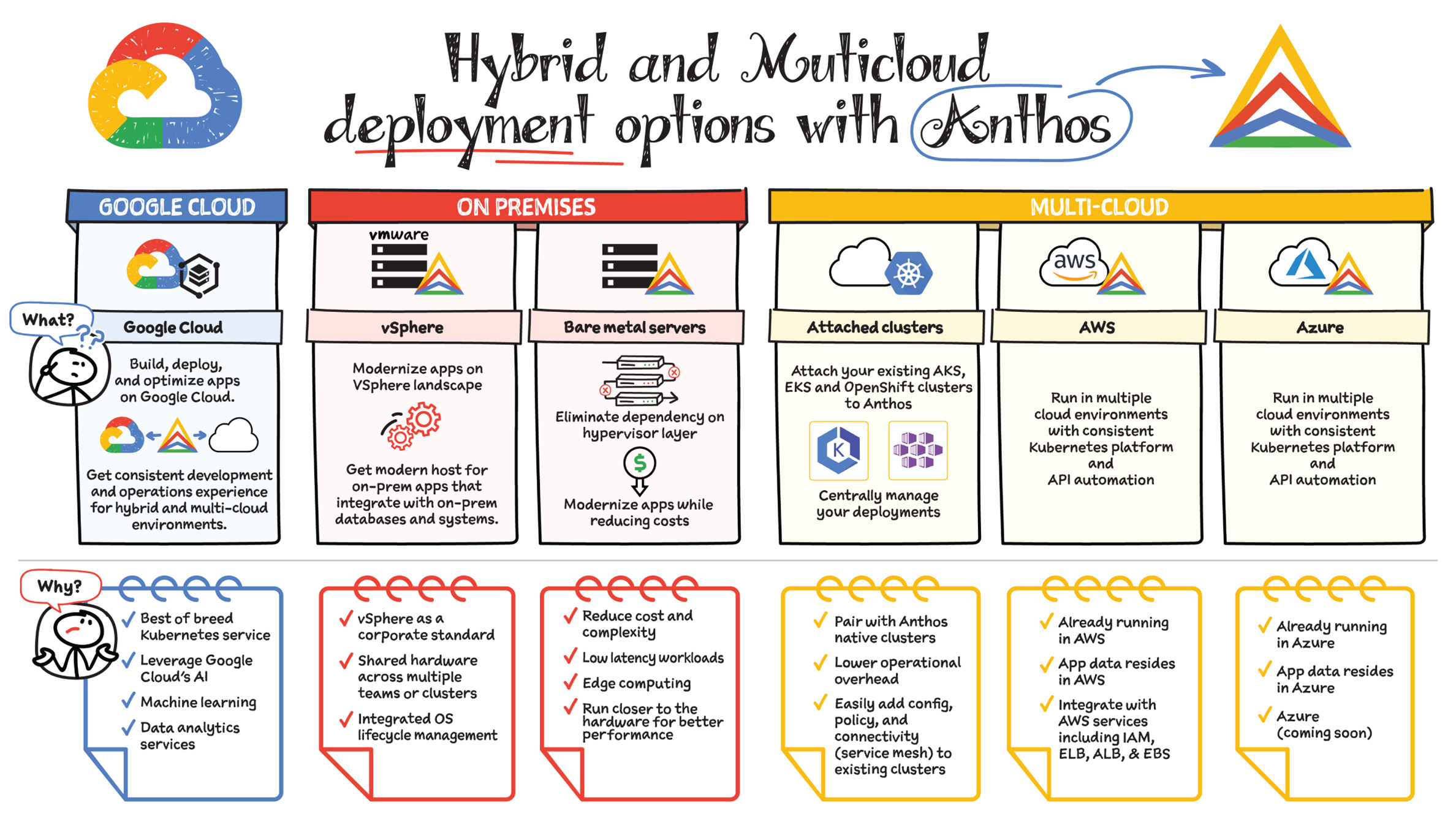

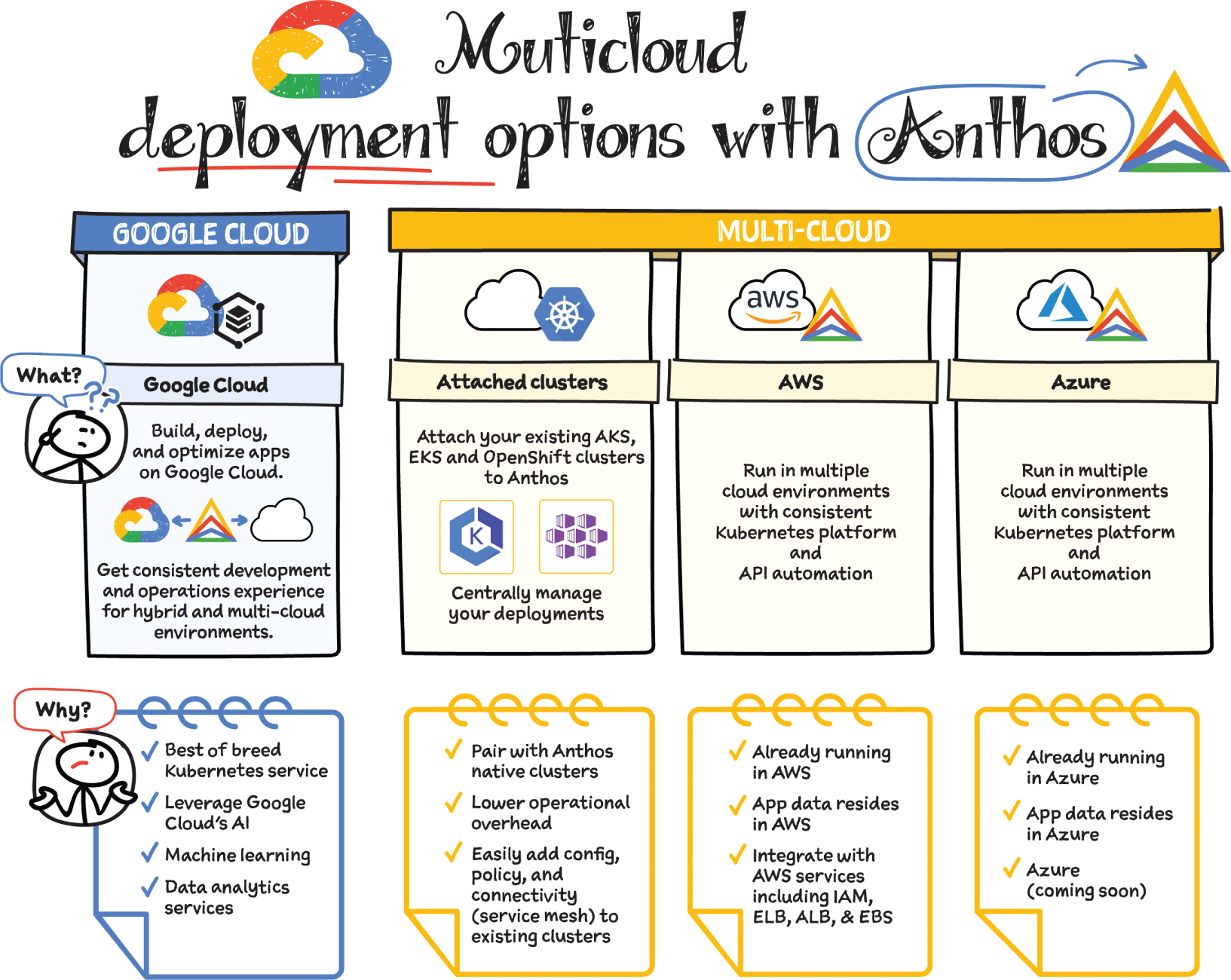

Anthos is a managed application platform that extends Google Cloud services and engineering practices to your environments so that you can modernize apps faster and establish operational consistency across them. With Anthos, you can build enterprise-grade containerized applications faster with managed Kubernetes on Google Cloud, on-premises, and other cloud providers. In this section, we outline each of Anthos’s deployment options:

- Google Cloud

- VMware vSphere

- Bare-metal servers

- Anthos attached clusters

- AWS

- Microsoft Azure

Deployment Option 1: Google Cloud

One way to improve your apps' performance is to run your compute closer to your data. So, if you are already running your services on Google Cloud, then it's best to use Anthos to build, deploy, and optimize your containerized workloads directly on Google Cloud. You can take advantage of Google Cloud AI, machine learning, and data analytics services to gain critical business insights, improve decision-making, and accelerate innovation.

Deployment Option 2: VMware vSphere

If you are using VMware vSphere in your own environment, then you can choose to run Anthos clusters on VMware, which enables you to create, manage, and upgrade Kubernetes clusters on your existing infrastructure. This is a good option if vSphere is a corporate standard for your organization, if you share hardware across multiple teams or clusters, and if you want integrated OS life cycle management. With Anthos clusters on VMware, you can keep all your existing workloads on-premises without significant infrastructure updates. At the same time, you can modernize legacy applications by transforming them from VM-based to container-based using Migrate for Anthos. Going forward, you might decide to keep the newly containerized apps on-premises or move them to the cloud. Either way, Anthos helps you manage and modernize your apps with ease and at your own pace.

Deployment Option 3: Bare-Metal Servers

Though virtual machines are unquestionably useful for a wide variety of workloads, a growing number of organizations are running Kubernetes on bare-metal servers to reduce complexity, cost, and hypervisor overhead. Anthos on bare metal lets you run Anthos on physical servers, on an operating system you provide, without a hypervisor layer. It comes with built-in networking, life cycle management, diagnostics, health checks, logging, and monitoring. Mission-critical applications often demand the highest levels of performance and lowest latency from the compute, storage, and networking stack. By removing the latency introduced by the hypervisor layer, Anthos on bare metal lets you run computationally intensive applications, such as GPU-based video processing and machine learning, cost-effectively. It also lets you leverage existing investments in hardware, OS, and networking infrastructure. Because its system requirements are minimal, you can even run Anthos on bare metal at the edge on resource-constrained hardware. This means that you can capitalize on all the benefits of Anthos (centralized management, increased flexibility, and developer agility) even for your most demanding applications.

Deployment Option 4: Anthos Attached Clusters

When thinking about deploying Anthos, you may be wondering about what you'll do with your existing Kubernetes clusters. With Anthos attached clusters, you can retain your existing Kubernetes clusters while taking advantage of key Anthos features. Whether you're running Amazon EKS, Microsoft AKS, or Red Hat OpenShift, you can attach your existing clusters to Anthos. That means you can centrally manage your deployments in Google Cloud Console, enforce policies and configuration using Anthos Config Management, and centrally monitor and collect logs. Of course, Anthos doesn't manage everything; you still must manually maintain your clusters and keep them up to date. This deployment option does, however, enable you to begin your Anthos journey at a pace that works well for you, and it eases the transition to Anthos in other cloud environments.

Deployment Option 5: AWS

If your organization has more than a few teams, chances are good that they're using different technologies, and perhaps even different cloud platforms. Anthos is designed to abstract these details and provide you with a consistent application platform. Anthos on AWS enables you to create Kubernetes clusters on AWS with all the Anthos features you'd expect on Google Cloud. This includes easy deployment using Kubernetes-native tooling, Anthos Config Management for policy and configuration enforcement, and Anthos Service Mesh for managing the increasing sprawl of microservices. The Google Cloud Console gives you a single pane of glass for managing all your applications in one place, no matter where they are deployed.

Deployment Option 6: Microsoft Azure

Google Cloud is always extending Anthos to support more kinds of workloads, in more kinds of environments, and in more locations. Google has announced that Anthos is coming to Azure; at the time of writing, support for Microsoft Azure is in preview, so stay tuned for more details!

So there you have it: six different hybrid and multicloud deployment options for Anthos! Depending on where your infrastructure and data are today, one or perhaps a combination of these options will help you power your application modernization journey, with a modern application platform that just works on-premises or in a public cloud, ties in seamlessly with legacy data center infrastructure, enables platform teams to cost-optimize, and supports a modern security posture anywhere.

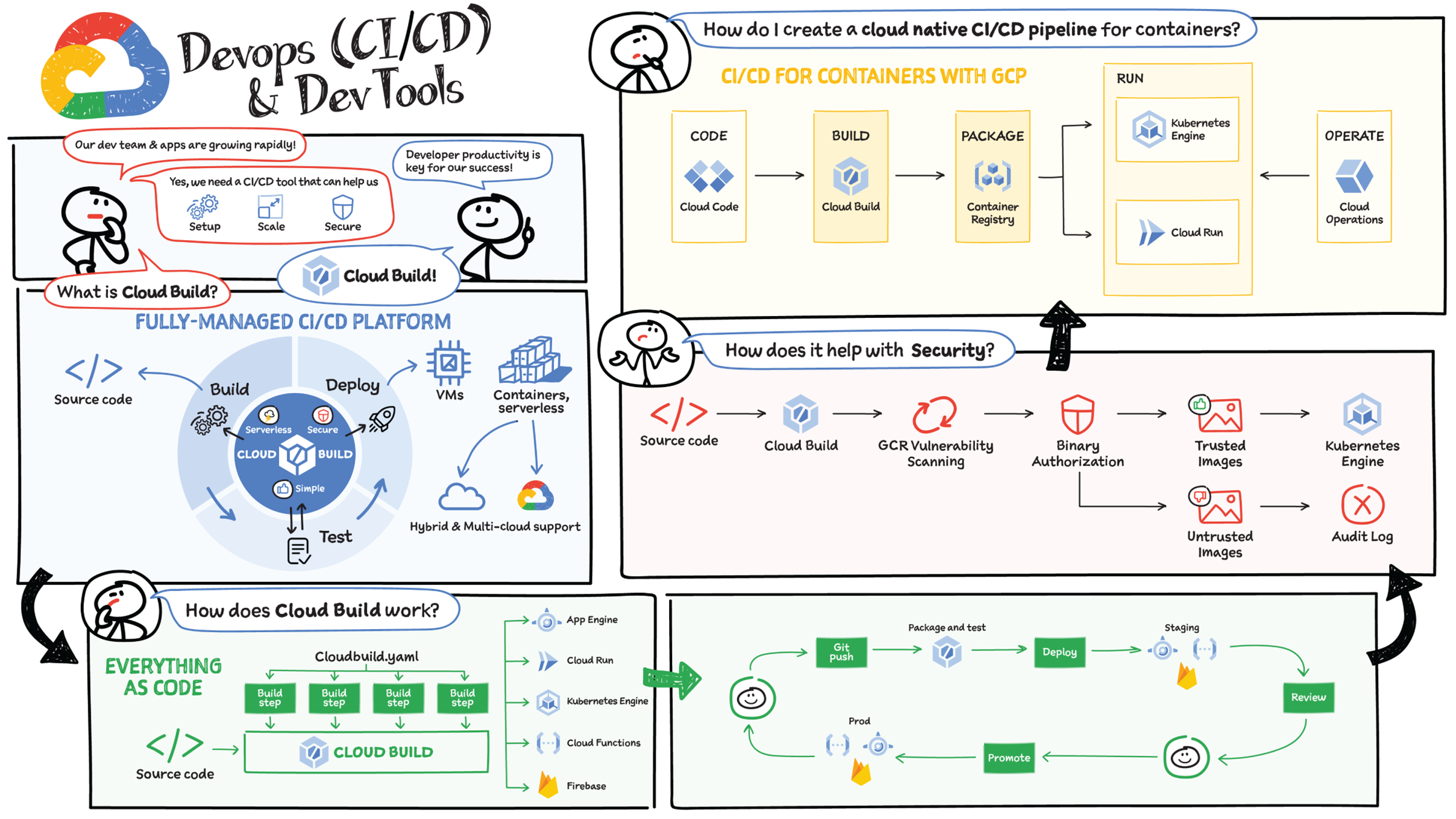

Continuous integration (CI) is a practice that helps you identify and correct problems early in the development process. With CI, you integrate your work frequently, instead of waiting for one large integration later on. Each integration is verified with an automated build, which enables you to detect integration issues as quickly as possible and reduce problems downstream.

Continuous delivery (CD) extends CI. CD is about packaging and preparing the software with the goal of delivering incremental changes to users. Deployment strategies such as red/black and canary deployments can help reduce release risk and increase confidence in releases. CD lowers risk and makes the release process safer, faster, and when done well, boring. Once deployments are made painless with CD, developers can focus on writing code, not tweaking deployment scripts.
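A canary deployment sends a small share of traffic to the new version before rolling it out fully. The sketch below shows one common way to choose that share, by hashing a user ID into percentage buckets. The function name and the choice of SHA-256 are assumptions for illustration; managed platforms such as Cloud Run or a service mesh typically split traffic for you.

```python
import hashlib


def in_canary(user_id: str, canary_percent: int) -> bool:
    """Decide whether a user sees the canary release.

    Hashing the user ID puts each user in a stable bucket from 0 to 99,
    so the same user always gets the same version during the rollout.
    """
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100
    return bucket < canary_percent


users = [f"user-{i}" for i in range(10_000)]
share = sum(in_canary(u, 10) for u in users) / len(users)
print(f"{share:.1%} of users routed to the canary")  # roughly 10%
```

Because a user in bucket 7 is in the canary at 10% and stays in it at 20%, widening the rollout only adds users; nobody flips back to the old version mid-rollout.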

How Has the Application Development Landscape Changed?

Much has changed in the app development space recently, and you'll want to take these changes into account as part of your CI/CD strategy.

- Hybrid and multicloud deployments — Large enterprises want to deploy applications in hybrid cloud environments, with tools and services that don't lock them into a specific vendor.

- The shift from monolith to microservices — Teams are breaking down large monoliths into microservices for greater agility. This makes it possible for different teams to use different languages, tech stacks, and development life cycles, which means deployment patterns, tooling needs, and scaling patterns are changing.

- Cloud-native applications — It's not just VMs anymore; companies are shifting paradigms and embracing serverless, containers, and Kubernetes. While simplifying some aspects of app development, this move adds complexity in other areas.

Ideally, developers should be focused on their code, not on ushering their changes through a CI/CD process. CI/CD steps should be triggered and run behind the scenes as soon as code is checked in. So, your CI/CD pipeline should support:

- Packaging of source code

- Automated unit and integration tests

- Consistent build environments

- Approvals before deploying to production

- Blue/green and canary rollouts

That's where Cloud Build comes in.
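The control flow such a pipeline needs fits in a few lines: stages run in order, the first failure stops the run, and a production stage waits for approval. This is a hypothetical model of the requirements listed above, with made-up stage names; it is not Cloud Build's API, and the lambdas stand in for real packaging, test, and deployment commands.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Stage:
    name: str
    action: Callable[[], bool]    # Returns True on success.
    needs_approval: bool = False  # e.g. a production deployment.


def run_pipeline(stages: list[Stage], approved: bool) -> list[str]:
    """Run stages in order, stop at the first failure, and gate approval-required stages."""
    log = []
    for stage in stages:
        if stage.needs_approval and not approved:
            log.append(f"{stage.name}: waiting for approval")
            break
        ok = stage.action()
        log.append(f"{stage.name}: {'ok' if ok else 'failed'}")
        if not ok:
            break
    return log


pipeline = [
    Stage("package", lambda: True),
    Stage("unit-and-integration-tests", lambda: True),
    Stage("deploy-staging", lambda: True),
    Stage("deploy-production", lambda: True, needs_approval=True),
]
print(run_pipeline(pipeline, approved=False))
```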

Cloud Build

Cloud Build is a fully managed CI/CD platform that lets you build, test, and deploy across hybrid and multicloud environments that include VMs, serverless, Kubernetes, and Firebase. Cloud Build can import source code from Cloud Storage, Cloud Source Repositories, GitHub, or Bitbucket; execute a build to your specifications; and produce artifacts such as Docker container images or Java archives.

Cloud Build executes your build as a series of build steps, with each step run in a Docker container. A build step can do anything that can be done from a container, regardless of the environment. To perform your tasks, you can either use the supported build steps provided by Cloud Build or write your own. One of those steps can deploy the app to the platform of your choice. You can also scan container images for vulnerabilities within the CI/CD pipeline and use Binary Authorization to ensure that only trusted container images are deployed to production.
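A build configuration lists those steps, each naming the container image it runs in. The sketch below generates a minimal config in Cloud Build's JSON format (YAML is also accepted) that builds an image, pushes it to Artifact Registry, and deploys it to Cloud Run. The region, the repository `my-repo`, and the service name `my-app` are hypothetical; `$PROJECT_ID` is deliberately left for Cloud Build to substitute at build time. Check the current Cloud Build documentation before relying on the exact builder images.

```python
import json

# Hypothetical names: substitute your own region, repository, and service.
REGION = "us-central1"
SERVICE = "my-app"
# $PROJECT_ID is a built-in substitution that Cloud Build fills in when the build runs.
IMAGE = f"{REGION}-docker.pkg.dev/$PROJECT_ID/my-repo/{SERVICE}"

build_config = {
    "steps": [
        # Step 1: build the container image with the Docker builder.
        {"name": "gcr.io/cloud-builders/docker", "args": ["build", "-t", IMAGE, "."]},
        # Step 2: push the image so the deploy step can use it.
        {"name": "gcr.io/cloud-builders/docker", "args": ["push", IMAGE]},
        # Step 3: deploy the pushed image to Cloud Run with the gcloud CLI.
        {
            "name": "gcr.io/google.com/cloudsdktool/cloud-sdk",
            "entrypoint": "gcloud",
            "args": ["run", "deploy", SERVICE, "--image", IMAGE, "--region", REGION],
        },
    ],
    # Images listed here are recorded in the build results when the build completes.
    "images": [IMAGE],
}

print(json.dumps(build_config, indent=2))
```

Saved as `cloudbuild.json`, a config like this can be submitted with `gcloud builds submit --config=cloudbuild.json .`, or attached to a trigger so it runs on every check-in.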

Cloud Build private pools help you meet enterprise security and compliance requirements. These are private, dedicated pools of workers that offer greater customization over the build environment, including the ability to access resources in a private network. For instance, you can trigger fully managed DevOps workflows from source code repositories hosted in private networks, including GitHub Enterprise.

Cloud Code

If you are working entirely in a cloud-native environment, then you'll want to use Cloud Code to kick off your CI/CD pipeline. Cloud Code works inside your IDE and comes with tools to help you write, run, and debug cloud-native applications quickly and easily. Then push your code to Cloud Build for the build process, store the resulting container image in Artifact Registry, and run it on GKE or Cloud Run. You can get all the visibility and metrics you want for the deployment in Google Cloud's operations suite.

Cloud Deploy

Google Cloud Deploy (in preview at the time of this writing) is a managed continuous delivery service that makes continuous delivery to GKE easier, faster, and more reliable. It has built-in security controls and can be integrated with your existing DevOps ecosystem.

The evolution of technologies such as containerization, container orchestration, and cloud-native serverless services has given us the ability to develop distributed solutions based on microservices architectures that are both more scalable and more reliable.

What Is Microservices Architecture?

Microservices architecture (often shortened to microservices) refers to an architectural style for developing applications. Microservices enable you to break down a large application into smaller independent services, with each service having its own realm of responsibility. To serve a single user request, a microservices-based application can call on many individual microservices to compose its response.
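For example, a product page might be assembled from separate catalog, pricing, and reviews services. The sketch below shows that fan-out-and-merge shape; the three services are hypothetical local functions so the example runs on its own (in production each would be a network call to its own deployment), and calling them concurrently is a design choice, not a requirement of microservices.

```python
from concurrent.futures import ThreadPoolExecutor


# Hypothetical stand-ins for independently deployed services.
def catalog_service(product_id: str) -> dict:
    return {"name": f"Product {product_id}"}


def pricing_service(product_id: str) -> dict:
    return {"price_cents": 1999}


def reviews_service(product_id: str) -> dict:
    return {"average_rating": 4.5}


def product_page(product_id: str) -> dict:
    """Compose one response by calling several services concurrently."""
    services = [catalog_service, pricing_service, reviews_service]
    with ThreadPoolExecutor(max_workers=len(services)) as pool:
        parts = list(pool.map(lambda svc: svc(product_id), services))
    response = {"product_id": product_id}
    for part in parts:
        response.update(part)
    return response


print(product_page("p-123"))
```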

Containers are well suited to microservices, since each container packages a service together with its dependencies, letting you focus on developing the services without worrying about dependency conflicts. Modern cloud-native applications are usually built as microservices using containers.

When you use Google Cloud, you can easily deploy microservices using either the managed container service, Google Kubernetes Engine (GKE), or the fully managed serverless offering, Cloud Run. Depending on your use case, Cloud SQL and other Google Cloud products and services can be integrated to support your microservices architecture.

How Are Monolithic and Microservices Architectures Different?

With a monolithic architecture, the solution is built as one large system, often with a single codebase. It is extremely difficult to change a monolith's technology, language, or framework because all components are tightly coupled and dependent on each other. As a result, even relatively small changes can require lengthy development and deployment times.

With a microservices architecture, the solution is built as a set of independent modules based on business functionality. Each module, or service, is small (“micro”), which decreases overall build and development time and makes it easy to create CI/CD pipelines. Smaller functional units also make it easy to change technology and frameworks or use different languages for different services. It's easier to isolate faults since they are usually limited to a specific service. Services can be scaled independently, letting you scale out subsystems that require more resources without scaling out the entire application.

Microservices Use Cases

Let's consider a scenario in which you are migrating a monolithic web application, or developing a new one, with a microservices architecture. Microservices architectures are often event-driven and use the publish/subscribe (pub/sub) model, in which one service publishes events and other services subscribe to those events and act on them.

In this example scenario there are four services: Order, Packaging, Shipping, and Notification:

- When a user places an order on the website, the Order service receives the order, does some preliminary processing, and sends the event to Google Pub/Sub.

- The Packaging and Notification services, which are subscribed to the events from the Order service, start the packaging process for the order and send an email notification to the customer.

- The Packaging service sends an order packaging event to Pub/Sub. The Shipping service, which has subscribed to these events, processes shipping and sends an event to Pub/Sub. The Notification service consumes this event and sends another message to the customer with order shipment info.
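The order flow above can be simulated with an in-memory broker to make the event chain concrete. This is a sketch under stated assumptions: `InMemoryBroker` is a hypothetical stand-in, not the Pub/Sub client library, and it ignores acknowledgments, retries, delivery ordering guarantees, and persistence, all of which the real service handles. The topic names are made up.

```python
from __future__ import annotations

from collections import defaultdict, deque
from typing import Callable

Handler = Callable[[dict], None]


class InMemoryBroker:
    """Toy stand-in for Pub/Sub: publishing returns immediately, and each
    event is later delivered to every handler subscribed to its topic."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)
        self._pending: deque[tuple[str, dict]] = deque()

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> None:
        # The publisher neither waits for nor knows about the subscribers.
        self._pending.append((topic, event))

    def deliver_all(self) -> None:
        # Drain queued events in publication order, fanning each one out.
        while self._pending:
            topic, event = self._pending.popleft()
            for handler in self._subscribers[topic]:
                handler(event)


broker = InMemoryBroker()
emails: list[str] = []  # Stands in for an email provider.


def order_service(order_id: str) -> None:
    # Preliminary processing would happen here, then the order is announced.
    broker.publish("orders", {"order_id": order_id, "status": "received"})


def packaging_service(event: dict) -> None:
    broker.publish("packaged", {"order_id": event["order_id"], "status": "packaged"})


def shipping_service(event: dict) -> None:
    broker.publish("shipped", {"order_id": event["order_id"], "status": "shipped"})


def notification_service(event: dict) -> None:
    emails.append(f"Order {event['order_id']}: {event['status']}")


broker.subscribe("orders", packaging_service)
broker.subscribe("orders", notification_service)
broker.subscribe("packaged", shipping_service)
broker.subscribe("shipped", notification_service)

order_service("A100")
broker.deliver_all()
print(emails)  # ['Order A100: received', 'Order A100: shipped']
```

Note that `order_service` never calls the other services directly, so adding another subscriber (say, an analytics service) would not require changing it.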

Of course, there are multiple ways of deploying a website like this, and the best option depends on your team's specific requirements and preferences. Notice that in the example, the Notification service uses Cloud Functions to run the logic for sending notifications and a Firestore database to look up user email addresses. The Shipping and Order services are deployed on Cloud Run, while the Packaging service is deployed on GKE. They all connect to a Cloud SQL database.

Some other microservices use cases are:

- Media content: Using a microservices architecture, images and video assets can be stored in a scalable object storage system and served directly to web or mobile apps.

- Transactions and invoices: Payment processing and ordering can be separated as independent services, so payments continue to be accepted even if there is a service disruption with invoicing.

- Data processing: A microservices platform can extend cloud support for existing modular data processing.
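The payments-and-invoices case is about fault isolation. A minimal sketch, assuming a hypothetical invoicing client that raises an exception during an outage: the payment service records the payment regardless and defers the invoice for a later retry. In a real system, publishing an event to Pub/Sub would usually take the place of the in-process `deferred` queue.

```python
from __future__ import annotations

from collections import deque
from typing import Callable


class InvoicingUnavailable(Exception):
    """Raised by the (hypothetical) invoicing client during an outage."""


class PaymentService:
    """Keeps accepting payments while invoicing is down by deferring invoice requests."""

    def __init__(self, send_invoice: Callable[[str, int], None]) -> None:
        self.send_invoice = send_invoice
        self.accepted: list[tuple[str, int]] = []
        self.deferred: deque[tuple[str, int]] = deque()

    def pay(self, order_id: str, amount_cents: int) -> str:
        self.accepted.append((order_id, amount_cents))
        try:
            self.send_invoice(order_id, amount_cents)
        except InvoicingUnavailable:
            # Invoicing failed, but the payment still succeeds; retry later.
            self.deferred.append((order_id, amount_cents))
        return "accepted"

    def retry_deferred(self) -> int:
        """Resend deferred invoices in order; stop at the first new failure."""
        sent = 0
        while self.deferred:
            order_id, amount = self.deferred[0]
            try:
                self.send_invoice(order_id, amount)
            except InvoicingUnavailable:
                break
            self.deferred.popleft()
            sent += 1
        return sent


invoicing_up = False
invoices: list[str] = []


def send_invoice(order_id: str, amount_cents: int) -> None:
    if not invoicing_up:
        raise InvoicingUnavailable("invoicing service is down")
    invoices.append(order_id)


payments = PaymentService(send_invoice)
print(payments.pay("A100", 1999))  # accepted, even though invoicing is down
invoicing_up = True
print(payments.retry_deferred())   # 1
```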

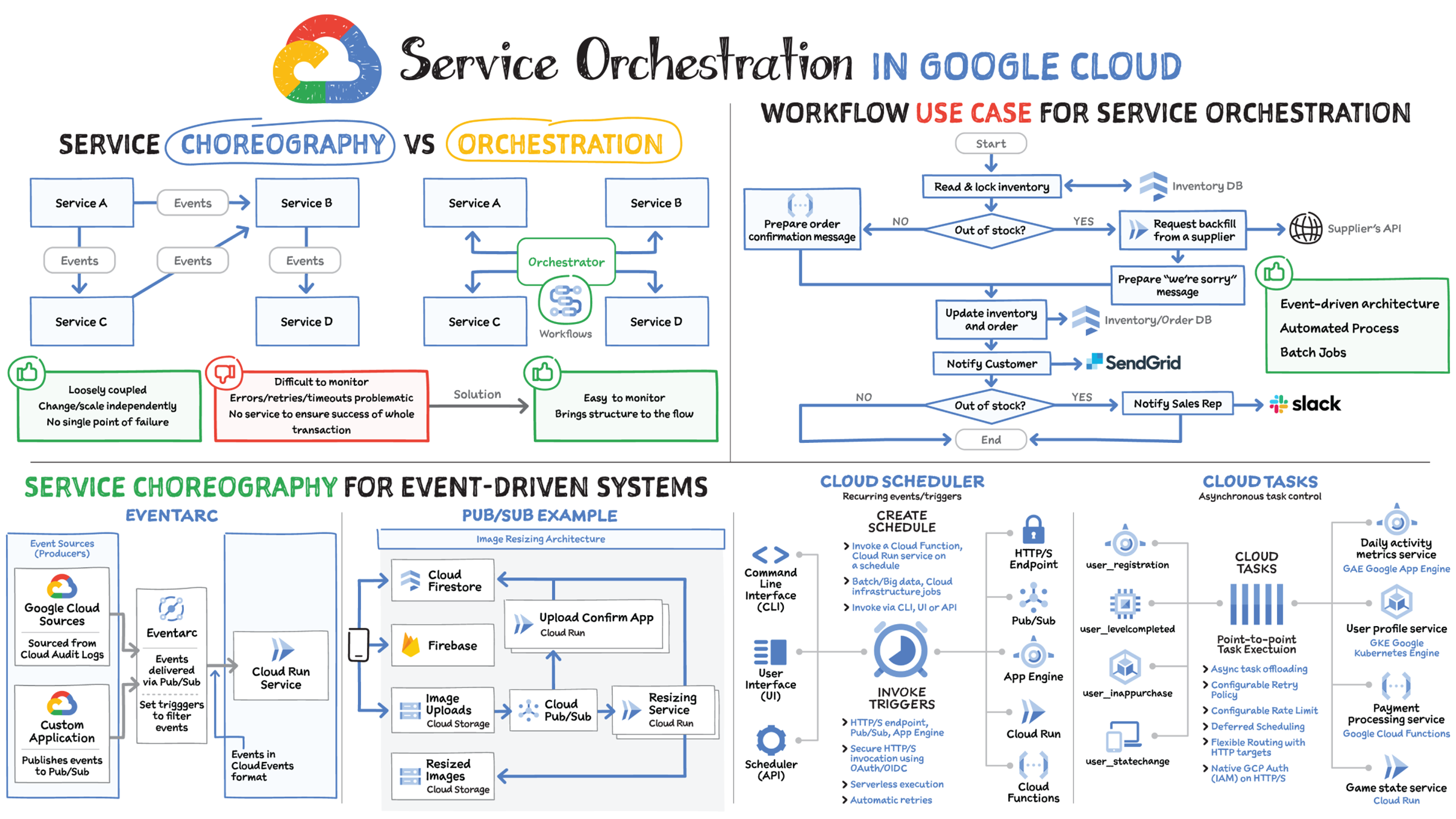

Going from a monolithic architecture to microservices has clear benefits, including reusability, scalability, and ease of change. Most of the time, business problems are solved by coordinating multiple microservices. This coordination is based on event-driven architectures, which can be implemented via two approaches: choreography and orchestration.

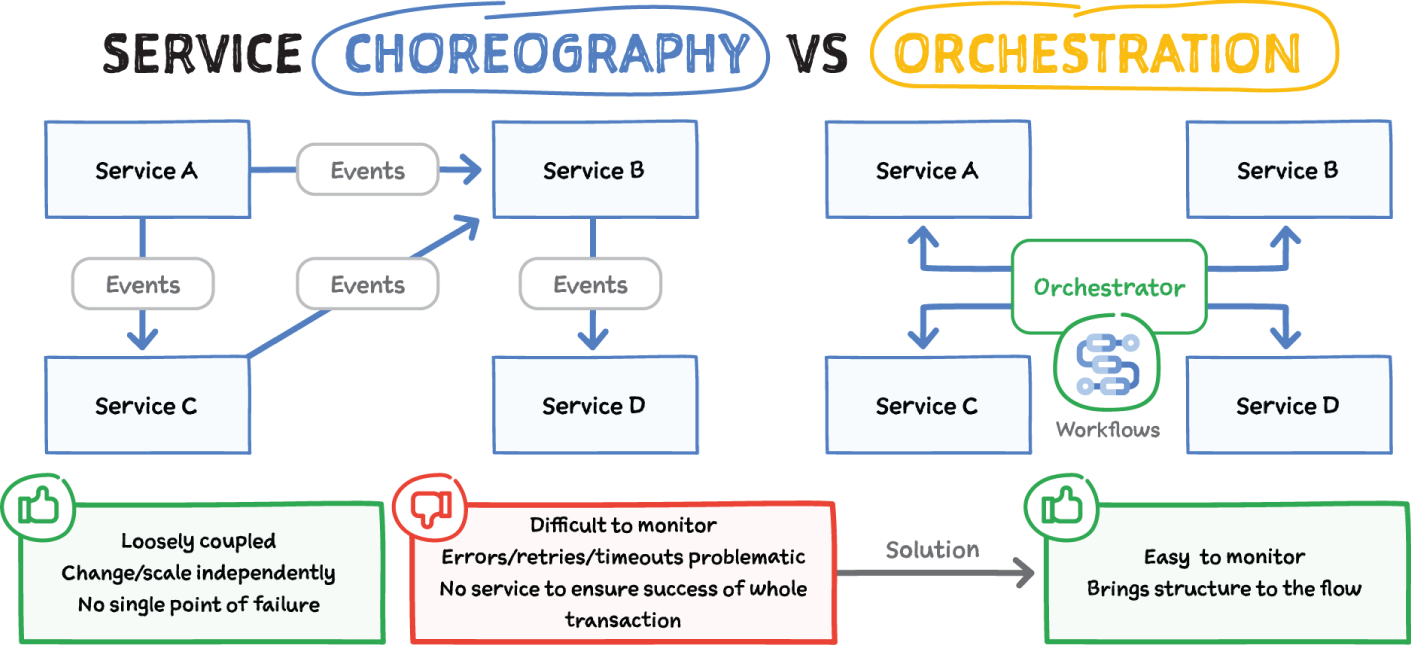

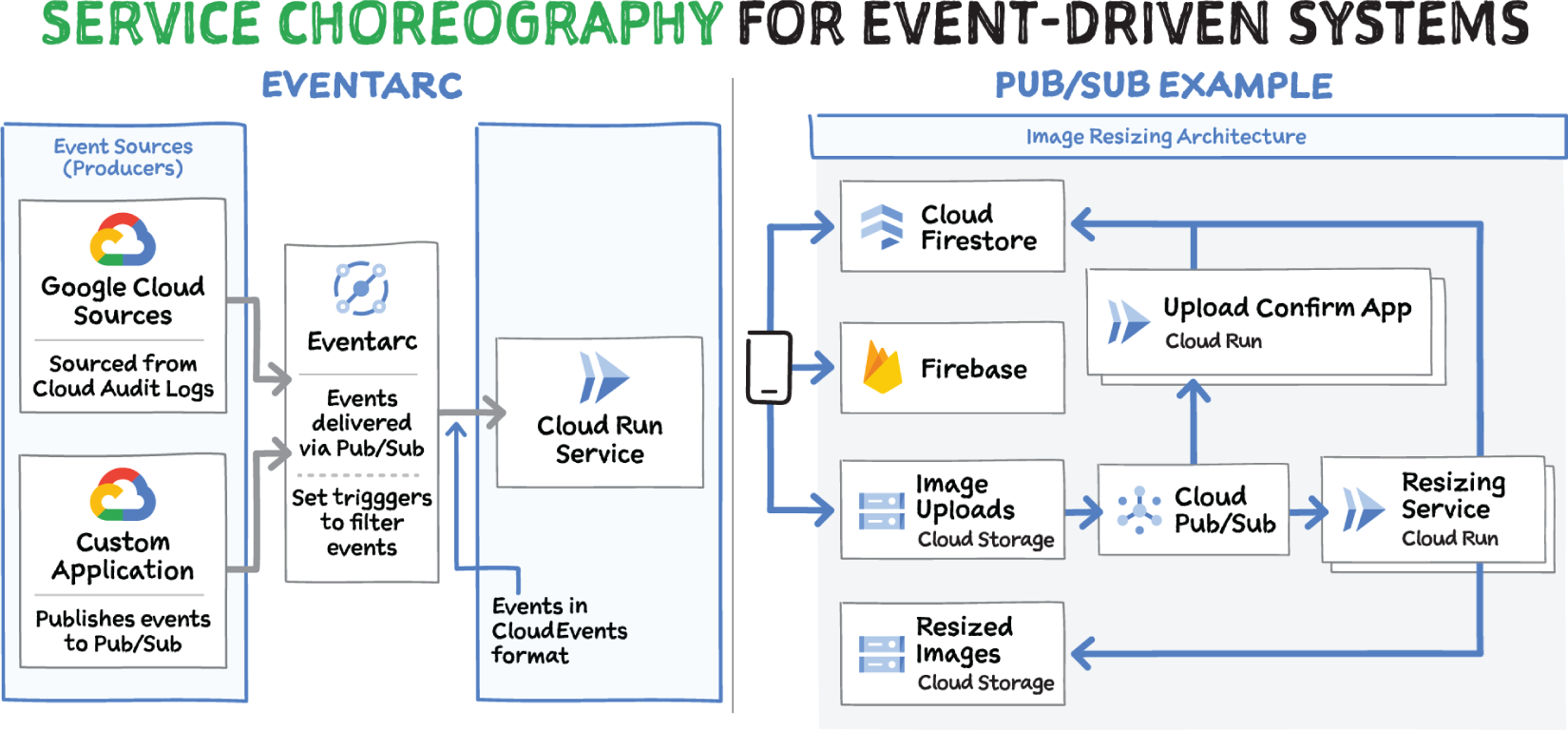

Service Choreography and Service Orchestration

- Service Choreography: With service choreography, each service works independently and interacts with other services in a loosely coupled way through events. Loosely coupled services can be changed and scaled independently, which means there is no single point of failure. But so many events flying around between services makes the system quite hard to monitor. Business logic is distributed across multiple services, so there is no single, central place to go for troubleshooting and no central source of truth for understanding the system. Understanding, updating, and troubleshooting are all distributed.

- Service Orchestration: To handle the monitoring challenges of choreography, developers need to bring structure to the flow of events while retaining the loosely coupled nature of event-driven services. With service orchestration, the services interact with each other via a central orchestrator that controls all interactions between them. This orchestrator provides a high-level view of the business processes, making it easier to track execution and troubleshoot issues. In Google Cloud, Workflows is the service for orchestration.
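To contrast with choreography, the sketch below shows the orchestration shape: one coordinator calls each service in turn and keeps a single execution history. It is a hypothetical, in-process illustration of the pattern only, with made-up step names; Workflows expresses the same idea declaratively in its YAML-based definition language and calls services over HTTP.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable

Step = Callable[[dict], dict]


@dataclass
class Orchestrator:
    """Central coordinator: calls each service step in order and records what happened."""

    steps: list[tuple[str, Step]]
    history: list[str] = field(default_factory=list)

    def run(self, payload: dict) -> dict:
        state = dict(payload)
        for name, step in self.steps:
            try:
                state.update(step(state))
            except Exception as exc:
                # One place to see which step failed and why.
                self.history.append(f"{name}: failed ({exc})")
                raise
            self.history.append(f"{name}: ok")
        return state


# Hypothetical services the orchestrator calls.
def reserve_inventory(state: dict) -> dict:
    return {"reserved": True}


def charge_payment(state: dict) -> dict:
    if state["amount_cents"] <= 0:
        raise ValueError("invalid amount")
    return {"charged": state["amount_cents"]}


def create_shipment(state: dict) -> dict:
    return {"shipment_id": "S-" + state["order_id"]}


flow = Orchestrator([
    ("reserve", reserve_inventory),
    ("charge", charge_payment),
    ("ship", create_shipment),
])
result = flow.run({"order_id": "A100", "amount_cents": 1999})
print(flow.history)  # ['reserve: ok', 'charge: ok', 'ship: ok']
```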

Choosing between the two approaches depends largely on the characteristics of the services and the use case. As a rule of thumb, prefer orchestration within the bounded context of a microservice and choreography between bounded contexts. In practice, you will likely have choreography at a higher level and orchestration at lower levels, both in the same system.

Google Cloud provides services supporting both orchestration and choreography approaches. Pub/Sub and Eventarc are both suited for choreography of event-driven services, whereas Workflows is suited for centrally orchestrated services.

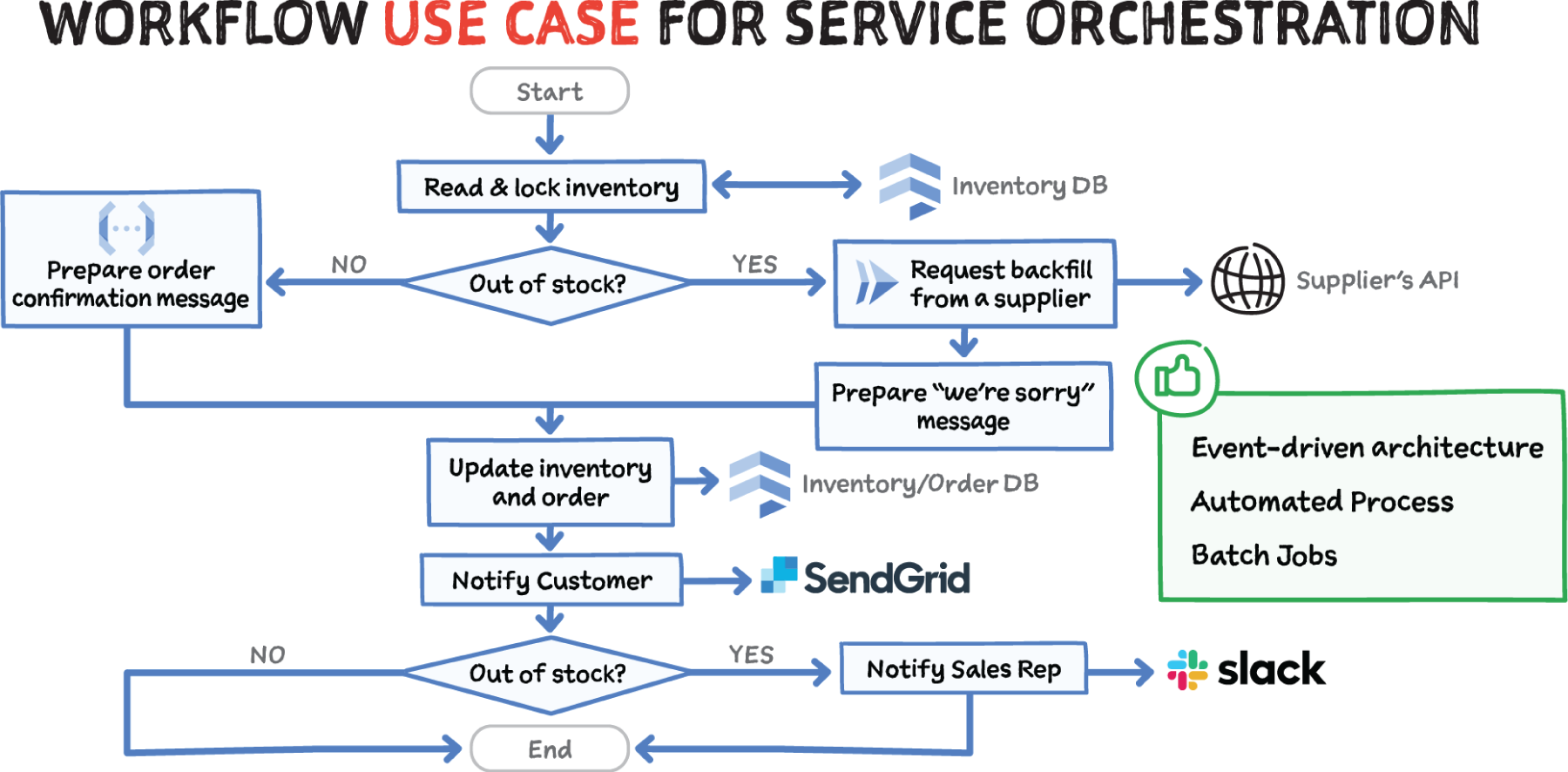

Google Cloud Support for Service Orchestration

Workflows

You use Workflows to orchestrate and automate Google Cloud and HTTP-based API services with serverless workflows. It is a fully managed, scalable, and observable way to define a business process and orchestrate calls to several services, which Workflows calls as simple web APIs. Using Workflows, you define the flow of your business logic in a YAML-based workflow definition language and use the UI or API to trigger the workflow. You can use Workflows to automate complex processes, including event-driven and batch jobs, error handling logic, sequences of operations, and more. Workflows is particularly helpful with Google Cloud services that perform long-running operations: Workflows waits for them to complete, even if they take hours. With callbacks, Workflows can wait for external events for days or months.
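A central orchestrator can be sketched as a single function that calls each step in order, retries failures, and keeps an execution history, which is the central view that choreography lacks. This is a hypothetical Python illustration of the orchestration pattern, not Workflows syntax or the Workflows API; the step names, the `run_workflow` function, and the retry policy are all assumptions.

```python
from __future__ import annotations

from typing import Callable

Step = tuple[str, Callable[[dict], dict]]


def run_workflow(steps: list[Step], state: dict, max_attempts: int = 3) -> tuple[dict, list[str]]:
    """Run steps in order, retrying each up to max_attempts; return final state and history."""
    history: list[str] = []
    for name, step in steps:
        for attempt in range(1, max_attempts + 1):
            try:
                state = step(state)
                history.append(f"{name}: succeeded on attempt {attempt}")
                break
            except RuntimeError as err:  # only RuntimeError is treated as retryable here
                history.append(f"{name}: attempt {attempt} failed ({err})")
        else:
            # Every attempt failed: stop, and report exactly where.
            raise RuntimeError(f"workflow failed at step {name!r}; history: {history}")
    return state, history


calls = {"count": 0}


def flaky_payment(state: dict) -> dict:
    calls["count"] += 1
    if calls["count"] < 2:  # fail once, then succeed
        raise RuntimeError("payment backend timeout")
    return {**state, "paid": True}


final, history = run_workflow(
    [
        ("reserve_inventory", lambda s: {**s, "reserved": True}),
        ("charge_payment", flaky_payment),
        ("send_invoice", lambda s: {**s, "invoiced": True}),
    ],
    {"order_id": "A-1"},
)
for line in history:
    print(line)
```

The history printed here is the kind of per-step record that makes an orchestrated process easy to trace and troubleshoot.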

Google Cloud Support for Service Choreography

Pub/Sub

Pub/Sub enables services to communicate asynchronously, with latencies on the order of 100 milliseconds. Pub/Sub is used as messaging-oriented middleware for service integration or as a queue to parallelize tasks. Publishers send events to the Pub/Sub service without regard to how or when these events will be processed. Pub/Sub then delivers the events to all services that need to react to them (subscribers). Pub/Sub is also used in streaming analytics and data integration pipelines to ingest and distribute data (as covered in Chapter 4, “Data Analytics”).
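Pub/Sub delivers each message to every subscription attached to a topic, while subscribers that share a single subscription split its messages between them. The in-memory sketch below models only that fan-out behavior, using hypothetical `Topic` and `Subscription` classes; it ignores acknowledgements, retries, and ordering, and it is not the Pub/Sub client library.

```python
from __future__ import annotations

from collections import deque


class Subscription:
    def __init__(self, name: str) -> None:
        self.name = name
        self.backlog: deque[str] = deque()

    def pull(self) -> str | None:
        return self.backlog.popleft() if self.backlog else None


class Topic:
    def __init__(self) -> None:
        self.subscriptions: list[Subscription] = []

    def attach(self, sub: Subscription) -> Subscription:
        self.subscriptions.append(sub)
        return sub

    def publish(self, message: str) -> None:
        # The publisher does not know who will process the message, or when.
        for sub in self.subscriptions:
            sub.backlog.append(message)


orders = Topic()
analytics = orders.attach(Subscription("analytics"))
shipping = orders.attach(Subscription("shipping"))

for msg in ["order-1", "order-2"]:
    orders.publish(msg)

# Two workers pulling from the same "shipping" subscription share its messages...
worker_a, worker_b = shipping.pull(), shipping.pull()
# ...while the "analytics" subscription independently received its own copy of both.
print(worker_a, worker_b, list(analytics.backlog))  # order-1 order-2 ['order-1', 'order-2']
```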

Eventarc

Eventarc enables you to build event-driven architectures without having to implement, customize, or maintain the underlying infrastructure. It offers a standardized solution to manage the flow of state changes, also known as events, between decoupled microservices. Eventarc routes these events to Cloud Run while managing delivery, security, authorization, observability, and error handling for you. Through its Audit Log and Pub/Sub integration, Eventarc provides an easy way to receive events not only from Pub/Sub topics but from a number of Google Cloud sources. Any service with Audit Log integration, or any application that can send a message to a Pub/Sub topic, can be an event source for Eventarc.
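Eventarc delivers events to a Cloud Run service as HTTP requests in the CloudEvents format, with attributes such as the event type carried in `ce-` prefixed headers. The sketch below shows only the routing idea inside a receiving service: plain dictionaries stand in for the request, and the handler functions are hypothetical. It does not use a web framework or the CloudEvents SDK.

```python
from __future__ import annotations

from typing import Callable

Handler = Callable[[dict], str]


def on_object_finalized(data: dict) -> str:
    # Hypothetical handler for a newly written Cloud Storage object.
    return f"new object {data.get('name')} in {data.get('bucket')}"


def on_audit_log(data: dict) -> str:
    # Hypothetical handler for an Audit Log entry.
    return f"audit entry for {data.get('protoPayload', {}).get('methodName')}"


HANDLERS: dict[str, Handler] = {
    "google.cloud.storage.object.v1.finalized": on_object_finalized,
    "google.cloud.audit.log.v1.written": on_audit_log,
}


def dispatch(headers: dict[str, str], body: dict) -> tuple[int, str]:
    """Route an incoming CloudEvent by its type; return an HTTP-style status and message."""
    event_type = headers.get("ce-type", "")
    handler = HANDLERS.get(event_type)
    if handler is None:
        return 400, f"unsupported event type {event_type!r}"
    return 200, handler(body)


status, message = dispatch(
    {"ce-type": "google.cloud.storage.object.v1.finalized", "ce-id": "1234"},
    {"bucket": "example-bucket", "name": "photo.jpg"},
)
print(status, message)  # 200 new object photo.jpg in example-bucket
```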

Additional Services That Help with Both Choreography and Orchestration

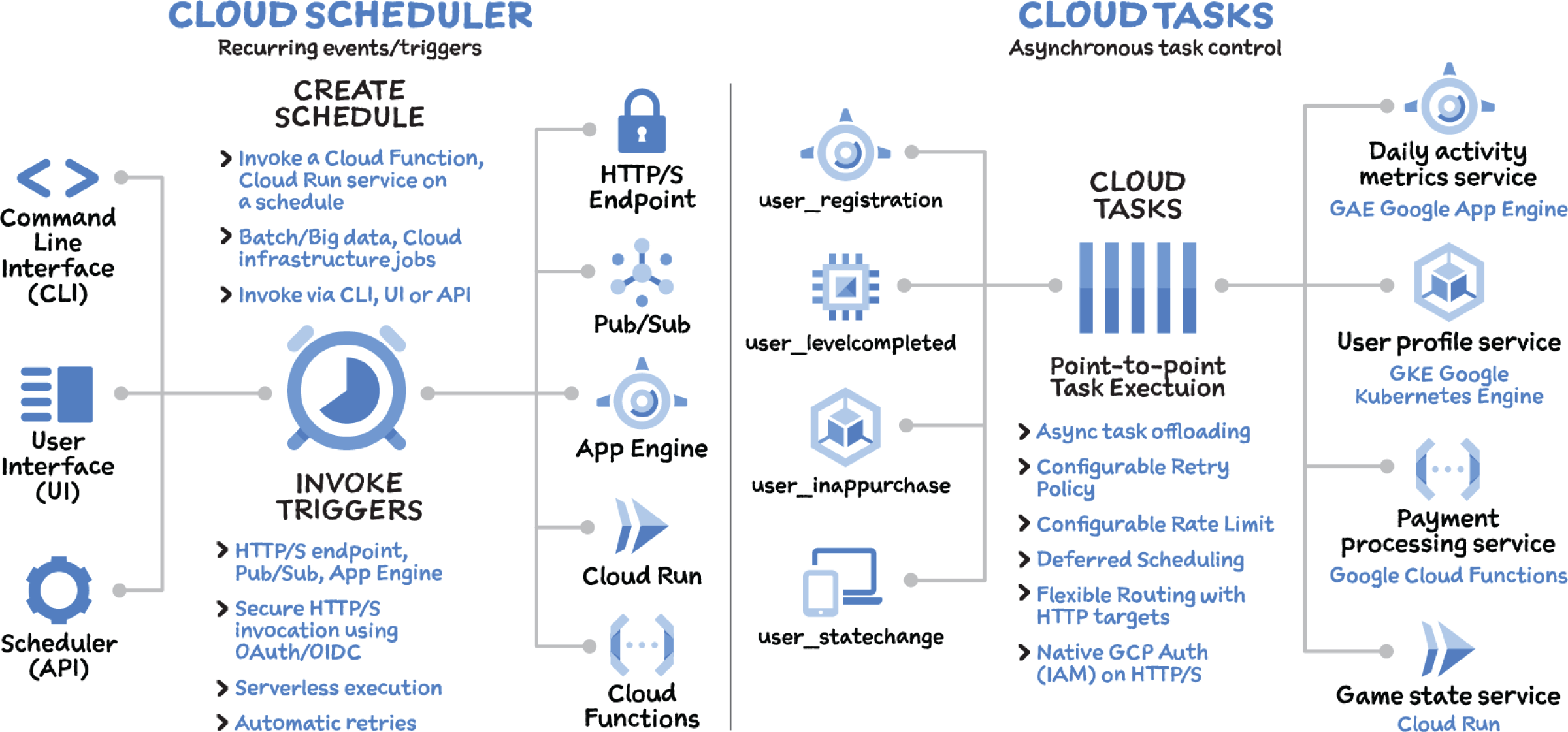

Cloud Tasks

Cloud Tasks lets you separate pieces of work that can be performed independently, outside of your main application flow, and send them off to be processed asynchronously using handlers that you create. These independent pieces of work are called tasks. Cloud Tasks helps speed user response times by delegating potentially slow background operations like database updates to a worker. It can also help smooth traffic spikes by removing non-user-facing tasks from the main user flow.

Difference Between Pub/Sub and Cloud Tasks

Pub/Sub supports implicit invocation: a publisher implicitly causes the subscribers to execute by publishing an event. Cloud Tasks is aimed at explicit invocation, where the publisher retains full control of execution, including specifying the endpoint where each message is to be delivered. Unlike Pub/Sub, Cloud Tasks provides tools for queue and task management, including scheduling specific delivery times, rate controls, retries, and deduplication.
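The explicit-invocation model is easiest to see in code. The sketch below is a hypothetical in-memory queue, not the Cloud Tasks client library: the caller names the exact handler for each task and its earliest dispatch time, a task name can be used only once (a simplified form of deduplication), and the queue enforces a per-tick dispatch limit and retries failed tasks after a fixed delay. `Task`, `TaskQueue`, and `tick` are illustrative names.

```python
from __future__ import annotations

import heapq
from dataclasses import dataclass, field
from typing import Callable


@dataclass(order=True)
class Task:
    schedule_time: float                                    # earliest dispatch time
    name: str                                               # unique name, used for deduplication
    handler: Callable[[dict], None] = field(compare=False)  # the explicit target
    payload: dict = field(compare=False, default_factory=dict)
    attempts: int = field(compare=False, default=0)


class TaskQueue:
    """Hypothetical in-memory queue illustrating explicit, rate-limited dispatch."""

    def __init__(self, max_dispatches_per_tick: int, max_attempts: int) -> None:
        self.max_dispatches_per_tick = max_dispatches_per_tick  # rate control
        self.max_attempts = max_attempts                        # retry limit
        self._heap: list[Task] = []
        self._used_names: set[str] = set()

    def create_task(self, task: Task) -> bool:
        if task.name in self._used_names:
            return False  # deduplication: a task name cannot be reused
        self._used_names.add(task.name)
        heapq.heappush(self._heap, task)
        return True

    def tick(self, now: float) -> list[str]:
        """Dispatch due tasks, up to the rate limit; return the names that succeeded."""
        succeeded: list[str] = []
        dispatched = 0
        while (self._heap and self._heap[0].schedule_time <= now
               and dispatched < self.max_dispatches_per_tick):
            task = heapq.heappop(self._heap)
            task.attempts += 1
            dispatched += 1
            try:
                task.handler(task.payload)  # call the handler the caller chose
                succeeded.append(task.name)
            except Exception:
                if task.attempts < self.max_attempts:
                    task.schedule_time = now + 1.0  # retry after a fixed delay
                    heapq.heappush(self._heap, task)
        return succeeded


sent: list[str] = []
queue = TaskQueue(max_dispatches_per_tick=2, max_attempts=3)
for i in range(3):
    queue.create_task(Task(0.0, f"email-{i}", lambda p: sent.append(p["to"]), {"to": f"user{i}"}))

print(queue.create_task(Task(0.0, "email-0", lambda p: None)))  # False: duplicate name
print(queue.tick(now=0.0))  # ['email-0', 'email-1']: rate limit of 2 per tick
print(queue.tick(now=1.0))  # ['email-2']
```

Unlike the publish-and-forget style of Pub/Sub, every decision here (target, timing, pacing, retries) is controlled by whoever creates the task or configures the queue.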

Cloud Scheduler

With Cloud Scheduler, you set up scheduled units of work to be executed at defined times or regular intervals, commonly known as cron jobs. Cloud Scheduler can trigger a workflow (orchestration) or generate a Pub/Sub message (choreography). Typical use cases include sending out a report email on a daily basis, updating some cached data every x minutes, or updating summary information once an hour.
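Cloud Scheduler schedules are written in the unix-cron format: five fields for minute, hour, day of month, month, and day of week. The hypothetical `cron_matches` function below is a deliberately simplified matcher that shows how such an expression is read; it supports only `*`, `*/n`, single numbers, and comma lists, and it omits ranges, names, time zones, and the special day-of-month/day-of-week rule.

```python
from __future__ import annotations

from datetime import datetime


def _field_matches(expr: str, value: int, low: int) -> bool:
    """Match one cron field: '*', '*/n', 'n', or a comma-separated list of those."""
    for part in expr.split(","):
        if part == "*":
            return True
        if part.startswith("*/"):
            if (value - low) % int(part[2:]) == 0:  # steps count from the field's minimum
                return True
        elif int(part) == value:
            return True
    return False


def cron_matches(schedule: str, when: datetime) -> bool:
    """Simplified unix-cron check (see limitations above)."""
    minute, hour, dom, month, dow = schedule.split()
    cron_weekday = (when.weekday() + 1) % 7  # Python: Monday=0; cron: Sunday=0
    return (_field_matches(minute, when.minute, 0)
            and _field_matches(hour, when.hour, 0)
            and _field_matches(dom, when.day, 1)
            and _field_matches(month, when.month, 1)
            and _field_matches(dow, cron_weekday, 0))


daily_report = "0 9 * * *"      # every day at 09:00
refresh_cache = "*/15 * * * *"  # every 15 minutes
print(cron_matches(daily_report, datetime(2024, 3, 4, 9, 0)))    # True
print(cron_matches(refresh_cache, datetime(2024, 3, 4, 9, 30)))  # True
print(cron_matches(refresh_cache, datetime(2024, 3, 4, 9, 31)))  # False
```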

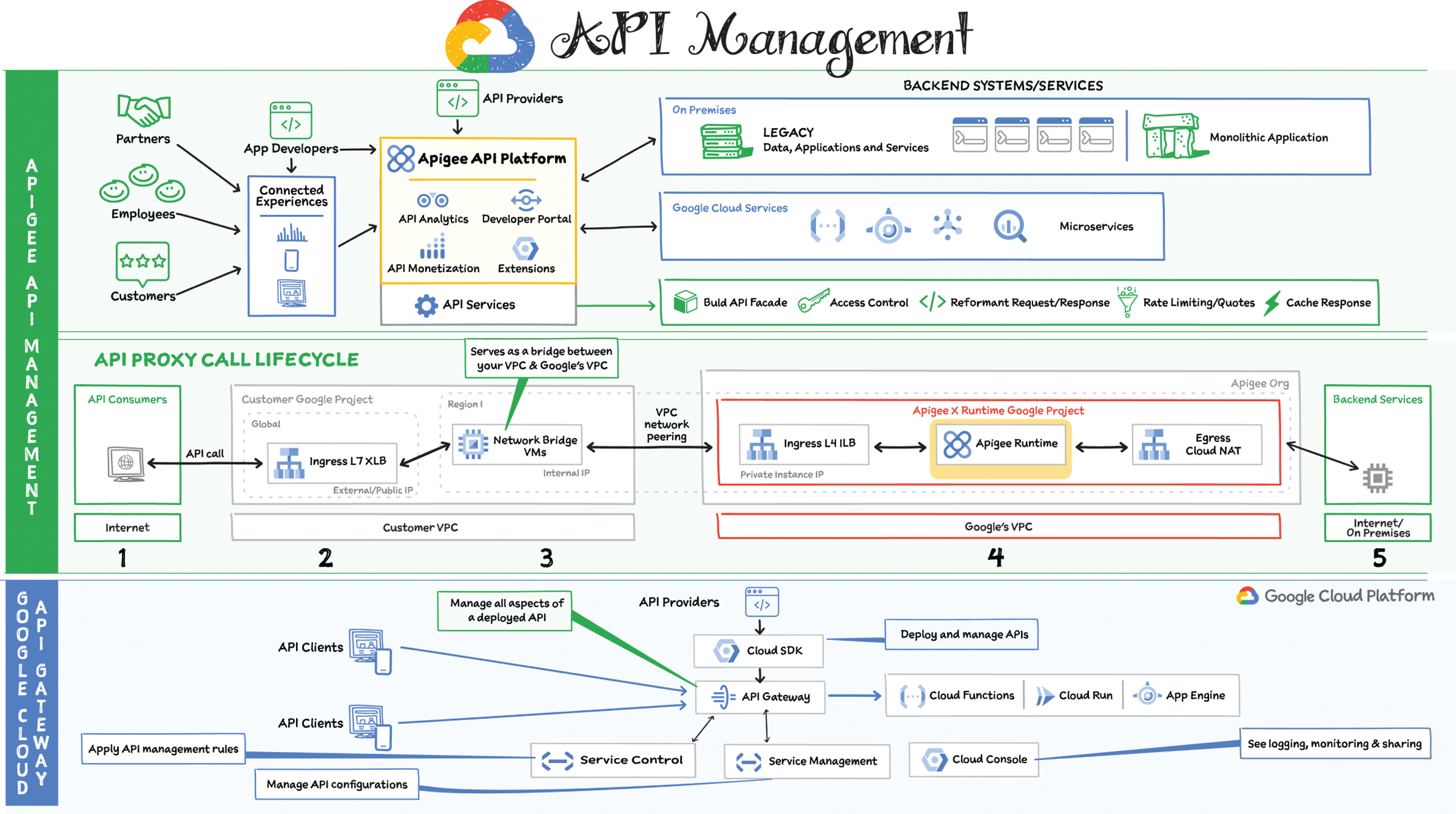

APIs are the de facto standard for building and sharing the modern applications that power today's enterprises. All modern businesses leverage APIs to move fast and stay competitive. But securely delivering, managing, and analyzing APIs, data, and services is complex and critical — and it's getting more challenging as enterprise ecosystems expand beyond on-premises data centers to include private and public clouds, SaaS, and other IT endpoints. To navigate this complexity, businesses need API management.

What Is API Management?

Let's consider a scenario. Suppose you have some backend services, including REST/SOAP services, microservices, a service bus, and maybe some more third-party services. You've got some consumers of these services on the other side: partners, employees, and customers. They have applications that need to get data from your backend services or trigger an action with an API.

Developers are composing new and compelling applications using APIs to build and participate in ecosystems. Productizing those APIs underpins many of the compelling new business applications we see in the internet economy. Everything it takes to productize APIs (a developer portal, API packaging, flexible security options, and turning operational metrics into business analytics) is what API management is about, and what Apigee offers.

What Is Apigee?

Apigee API management helps modernize your applications and monetize your APIs. It helps control an application's access to backend data and services. It also gives application developers the tools they need to access the APIs, and gives API providers the tools they need to manage and provision those APIs.

- API Services: This is the part that routes traffic from applications to the backend services. It acts as an enterprise gateway that orchestrates those backend services and protects them from abuse; this is where an API provider can enforce throttling and quotas to protect the backend. You can build a facade over the backend services and present external applications with a set of interfaces that differs from what's inside. You can control access so that different applications can reach different services and might get different results when they call them. You can reformat a request as it comes in to make it appropriate for your backend services, and transform the response from the backend services before sending it back to the applications. You can also add caching to improve performance by responding directly from the cache and avoiding a call to the backend.

- Developer Portal: Apigee offers a developer portal to serve the API consumer or the application developer. They can sign up to use the API, get credentials to access the service, and access documentation to learn how to use the API. If there's a need to serve the APIs as products, API providers can take all the services and bundle them together into different packages that provide different access. You can even brand the portal to match the branding of your website.

- API Monetization: You can create a variety of monetization plans that charge developers (or pay them through revenue sharing) for the use of your APIs.

- API Analytics: API Analytics helps your API team measure everything from developer engagement metrics to business and operational metrics. These analytics help API teams improve their APIs and app developers improve their apps. They also help answer questions about traffic patterns, top developers, which API methods are popular, API response times, and other such metrics needed to improve the service.
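Two of the API Services capabilities above, quota enforcement and response caching, can be sketched in a few lines, together with a simple response transformation. This is a hypothetical in-memory facade, not Apigee's policy configuration: `ApiFacade`, the per-app call-count quota (a real quota would reset per time window), and the JSON wrapping are all illustrative assumptions.

```python
from __future__ import annotations

import json
from typing import Callable


class ApiFacade:
    """Hypothetical gateway: per-app quota, response caching, and response reshaping."""

    def __init__(self, backend: Callable[[str], str], quota_per_app: int) -> None:
        self.backend = backend
        self.quota_per_app = quota_per_app  # simple call-count quota (no reset window)
        self.calls_by_app: dict[str, int] = {}
        self.cache: dict[str, str] = {}
        self.backend_calls = 0

    def handle(self, app_key: str, path: str) -> tuple[int, str]:
        used = self.calls_by_app.get(app_key, 0)
        if used >= self.quota_per_app:
            return 429, "quota exceeded"  # HTTP 429 Too Many Requests
        self.calls_by_app[app_key] = used + 1
        if path in self.cache:
            return 200, self.cache[path]  # served from cache; backend untouched
        self.backend_calls += 1
        body = json.dumps({"data": self.backend(path)})  # wrap the raw backend reply
        self.cache[path] = body
        return 200, body


facade = ApiFacade(backend=lambda path: f"rows for {path}", quota_per_app=2)
print(facade.handle("app-1", "/products"))  # (200, '{"data": "rows for /products"}')
print(facade.handle("app-1", "/products"))  # same response, served from cache
print(facade.handle("app-1", "/products"))  # (429, 'quota exceeded')
print(facade.backend_calls)                 # 1
```

The backend was called only once for three requests: the cache absorbed the repeat, and the quota rejected the third call before it could reach the backend at all.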

Apigee gives you a choice between configuration and coding. A number of out-of-the-box policies handle basic tasks; you can drop them in to, for example, request a token or add response caching without writing code. If you prefer code-driven development, you can build your own policies in JavaScript, Java, or Python, drop them in, and mix them with the out-of-the-box policies.

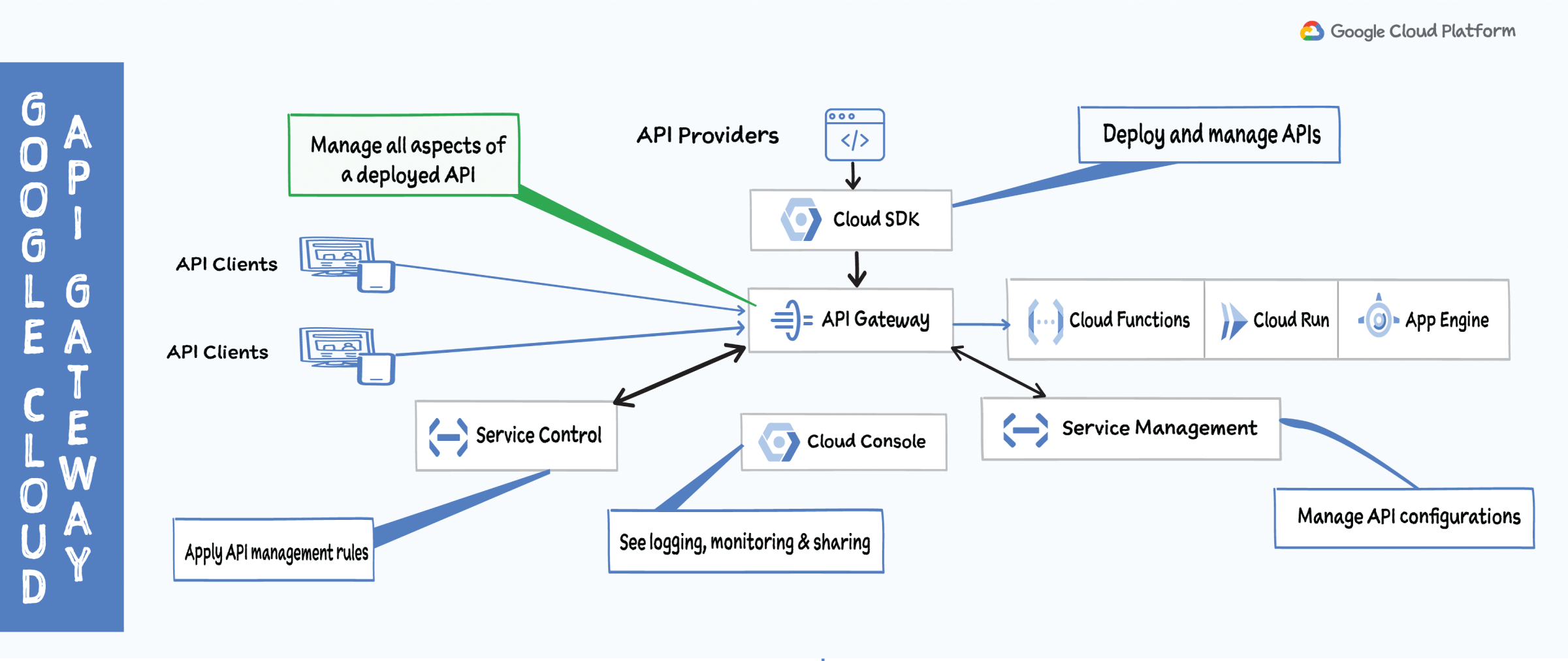

What is API Gateway?

API Gateway is an API management system that provides management, monitoring, and authentication for your APIs. It enables you to provide secure access to your services through a well-defined REST API that is consistent across all of your services, regardless of service implementation. A consistent API:

- Makes it easy for app developers to consume your services

- Enables you to change the backend service implementation without affecting the public API

- Enables you to take advantage of the scaling, monitoring, and security features built into Google Cloud

API Gateway Architecture

The API provider is responsible for creating and deploying an API on API Gateway. Each API is defined by a file written as an OpenAPI 2.0 spec. The OpenAPI spec defines the publicly facing URL of the REST endpoint for the API, the backend service accessed by the API, and any other characteristics of the API such as authentication, data format, and response options.

The API client makes a REST request to an API hosted on API Gateway to access backend services. An API client can be any app capable of making a REST call, such as a browser, mobile app, or web app. The API client only needs to know the URL of the API, the request verb (such as GET, PUT, POST, DELETE), any authentication requirements, and the format of any data sent to or received from the API. The API client does not need to know anything about the backend implementation. In fact, a single API hosted on API Gateway can be configured to access different backends based on information passed in the request.
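A minimal OpenAPI 2.0 document for API Gateway might look like the structure built below. The `x-google-backend` extension is how an API Gateway spec points an operation at a backend; giving each path its own backend illustrates how one public API can front different services. The API title, paths, operation IDs, and backend URLs are hypothetical placeholders, and the sketch simply builds the spec as a Python dictionary and prints it as JSON for inspection.

```python
from __future__ import annotations

import json


def make_spec(title: str, routes: dict[str, str]) -> dict:
    """Build an OpenAPI 2.0 spec where each GET path is served by its own backend."""
    paths = {}
    for path, backend_url in routes.items():
        operation_id = path.strip("/").replace("/", "_") or "root"
        paths[path] = {
            "get": {
                "operationId": operation_id,
                "x-google-backend": {"address": backend_url},
                "responses": {"200": {"description": "OK"}},
            }
        }
    return {
        "swagger": "2.0",
        "info": {"title": title, "version": "1.0.0"},
        "schemes": ["https"],
        "produces": ["application/json"],
        "paths": paths,
    }


spec = make_spec(
    "foo-api",  # hypothetical API name
    {
        "/inventory": "https://inventory.example.com",     # placeholder backend URL
        "/payments": "https://payments.example.com/pay",   # placeholder backend URL
    },
)
print(json.dumps(spec, indent=2))
```

The client sees only `/inventory` and `/payments`; which service answers each path is decided entirely in the spec.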

What's the Difference Between API Gateway and Apigee API Management Platform?

API Gateway covers a small subset of what an API management platform does. It provides secure access to, and exposure of, your services in Google Cloud (Cloud Functions, App Engine, Cloud Run, Compute Engine, GKE) through a well-defined REST API that is consistent across all of your services, regardless of service implementation, with the benefits of a consistent API described above.

Apigee, on the other hand, includes a gateway, but it helps drive API consumption because it also includes a developer portal, monitoring, monetization, advanced API operations, and other extension possibilities. The gateway itself is more capable, with built-in policies. Apigee can connect to arbitrary backends, including but not limited to upstreams hosted in Google Cloud.

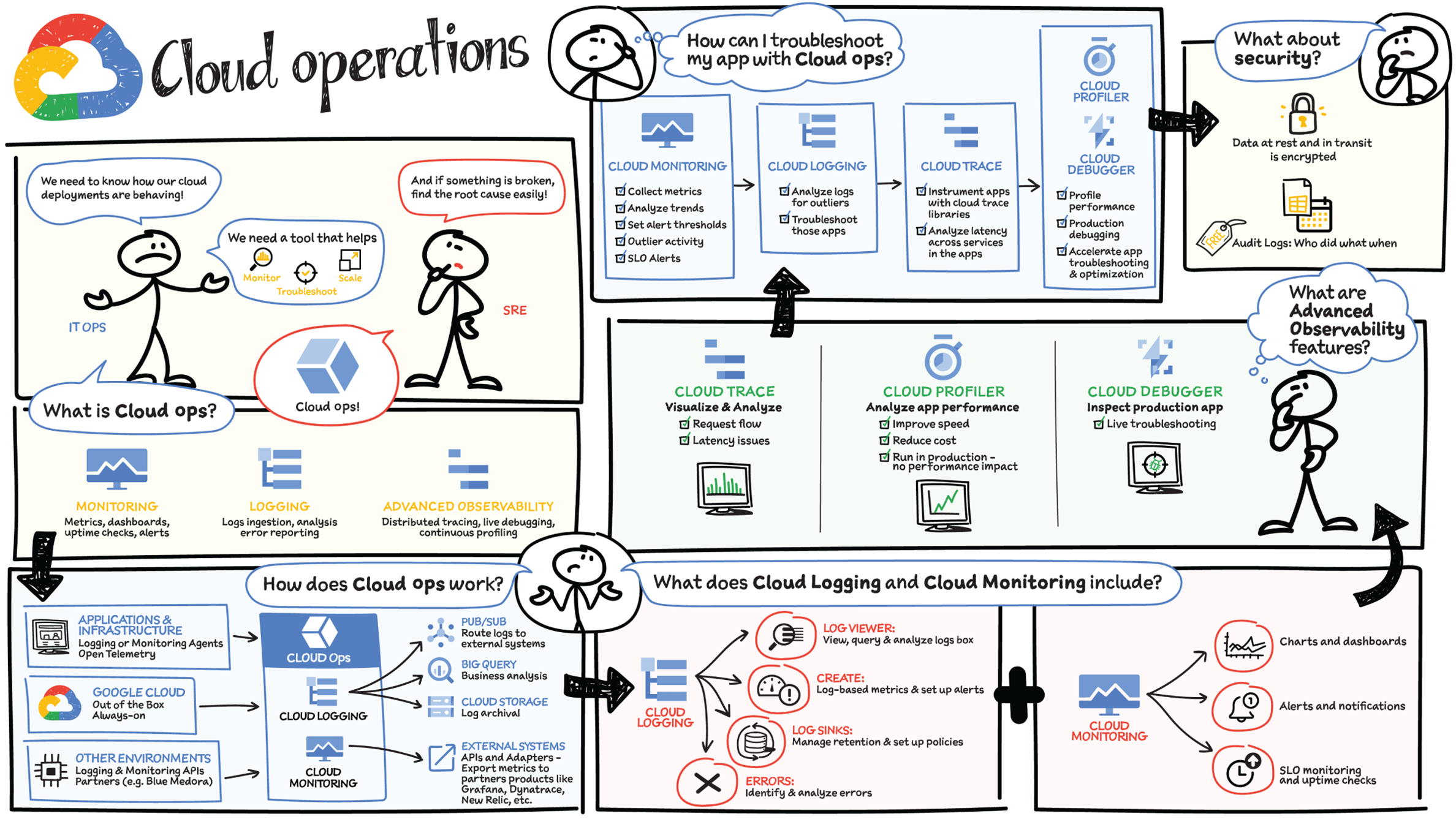

You know the feeling: you want to see how your cloud deployment is behaving, or something is broken in production and your users might be affected. You are scrambling because there are too many tools to look through to find and resolve the issue. That's where Google Cloud's operations suite comes in.

What Is the Operations Suite?

Google Cloud's operations suite is made up of products to monitor, troubleshoot, and operate your services at scale, enabling your DevOps, SRE, or ITOps teams to apply Google's SRE best practices. It offers integrated capabilities for monitoring and logging, plus advanced observability services such as Cloud Trace, Cloud Debugger, and Cloud Profiler. The end-to-end operations solution includes built-in telemetry, out-of-the-box dashboards, recommendations, alerts, and more, helping you capture signals, monitor systems, manage incidents, and troubleshoot issues.

What Does Cloud Operations Include?

Cloud Logging is a fully managed and highly scalable service that aggregates log data from all your infrastructure and applications across Google Cloud into a single location. It collects log data from Google Cloud services automatically, and it can ingest logs from any custom source, such as applications, on-premises systems, or other clouds, through the Ops Agent, open source fluentd, or the API. You have complete control over how and where to store the logs: you can keep them in Cloud Logging, export them to Cloud Storage, or stream them via Cloud Pub/Sub to custom third-party destinations. Logs Explorer gives you powerful capabilities to filter logs, and you can convert logs into log-based metrics for monitoring, alerting, analyzing, and visualizing.
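Applications can produce structured, filterable entries in Cloud Logging without a client library: on services such as Cloud Run, a single-line JSON object written to standard output is parsed into a structured log entry, and fields such as `severity` and `message` are given special meaning. The helper below is a hypothetical minimal sketch of that approach; the extra keys (`order_id`, `provider`) are illustrative and would typically appear in the entry's JSON payload, where Logs Explorer can filter on them.

```python
from __future__ import annotations

import json


def log_structured(severity: str, message: str, **fields: object) -> str:
    """Print one JSON log line to stdout and return it."""
    entry = {"severity": severity, "message": message, **fields}
    line = json.dumps(entry)
    print(line, flush=True)  # one JSON object per line
    return line


log_structured("INFO", "order received", order_id="A-1")
log_structured("ERROR", "payment declined", order_id="A-1", provider="example-psp")
```

Emitting one JSON object per line keeps each entry self-contained, so a filter such as severity equal to ERROR works without any text parsing.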

Cloud Monitoring provides observability across your apps and infrastructure, regardless of where they run: Google Cloud, on-premises, or other clouds. It supports a variety of metrics integrations, lets you define custom metrics unique to your use cases, and can even send those metrics to external systems. Using Metrics Explorer and Monitoring Query Language, you can analyze these metrics on the fly, identify correlations, and easily add the corresponding charts to a dashboard. You can use out-of-the-box or custom dashboards to get a consolidated view of the health of your infrastructure, services, or applications and easily spot anomalies. But no one can sit around all day looking at dashboards, so Cloud Monitoring provides alerting, which lets you create policies that alert on performance metrics, uptime checks, and service level indicators.
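Alerting policies are built from conditions such as "metric above a threshold for some duration." The hypothetical `should_alert` function below evaluates that kind of condition over a list of samples to show why a duration window avoids paging on a single spike; it is an illustration of the idea, not the Cloud Monitoring API, and the latency samples are invented.

```python
from __future__ import annotations


def should_alert(samples: list[float], threshold: float, duration_points: int) -> bool:
    """Return True only if the most recent `duration_points` samples all exceed `threshold`."""
    if duration_points < 1:
        raise ValueError("duration_points must be at least 1")
    if len(samples) < duration_points:
        return False
    return all(value > threshold for value in samples[-duration_points:])


# Hypothetical p95 latency samples in milliseconds, one per minute.
latency_ms = [120, 135, 620, 140, 510, 530, 560]
print(should_alert(latency_ms[:4], threshold=500, duration_points=3))  # False: 620 was a one-minute spike
print(should_alert(latency_ms, threshold=500, duration_points=3))      # True: 510, 530, 560
```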

Application Performance Management (APM) combines monitoring and troubleshooting capabilities of Cloud Logging and Cloud Monitoring with Cloud Trace, Cloud Debugger, and Cloud Profiler, to help you reduce latency and cost so that you can run more efficient applications.

- Cloud Trace provides visualization and analysis to understand request flow, service topology, and latency issues in your app.

- Cloud Debugger allows you to inspect the state of running applications after deployment, without needing to stop or slow them down.

- Cloud Profiler continually analyzes your code's performance on each service so that you can improve its speed and reduce your costs. And it is designed to run in production with effectively no performance impact.

While Cloud Trace tracks relationships and latency between services, Cloud Profiler tracks resource consumption, such as CPU time and memory, across the individual functions in your codebase, and Cloud Debugger helps you drill down from the method to the problematic piece of code.
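Cloud Trace is built around spans: named, timed units of work that nest inside a request. The hypothetical recorder below measures nested spans with a context manager to illustrate the idea; in practice you would instrument code with a tracing library such as OpenTelemetry and export the spans to Cloud Trace rather than write your own recorder.

```python
from __future__ import annotations

import time
from contextlib import contextmanager
from typing import Iterator

spans: list[tuple[str, int, float]] = []  # (name, nesting depth, duration in ms)
_depth = 0


@contextmanager
def span(name: str) -> Iterator[None]:
    """Record how long the enclosed block takes and how deeply it is nested."""
    global _depth
    start = time.perf_counter()
    _depth += 1
    try:
        yield
    finally:
        _depth -= 1
        spans.append((name, _depth, (time.perf_counter() - start) * 1000))


with span("GET /checkout"):
    with span("inventory-service"):
        time.sleep(0.01)  # simulated downstream call
    with span("payment-service"):
        time.sleep(0.02)  # simulated downstream call

# Spans finish innermost-first; sort by depth to print the request as a tree.
for name, depth, ms in sorted(spans, key=lambda s: s[1]):
    print(f"{'  ' * depth}{name}: {ms:.1f} ms")
```

Reading the output top-down shows how much of the request's total latency each downstream service contributed, which is the question a trace view answers.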

How Does Cloud Operations Work?

You can use the tools directly in the Cloud Console or via APIs. Cloud Logging automatically ingests Google Cloud audit and platform logs, and you can use the API to ingest logs and metrics from other environments, including on-premises systems. You can then:

- Use Logs Explorer to view, query, and analyze logs

- Create log-based metrics to set up alerts

- Create log sinks to manage retention and set up policies

Cloud Monitoring provides a view of all system metrics created by Google Cloud services, at no cost. Cloud Monitoring also integrates with a variety of third-party providers and supports custom metrics ingested from non-Google Cloud sources via agents or the API.

From a security perspective, all your data is encrypted at rest and in transit. All your security-focused audit logs are automatically available in Cloud Logging, telling you who did what, where, and when. Access Transparency logs capture the actions Google personnel take when accessing your content, for example while providing support, which helps you meet compliance requirements.

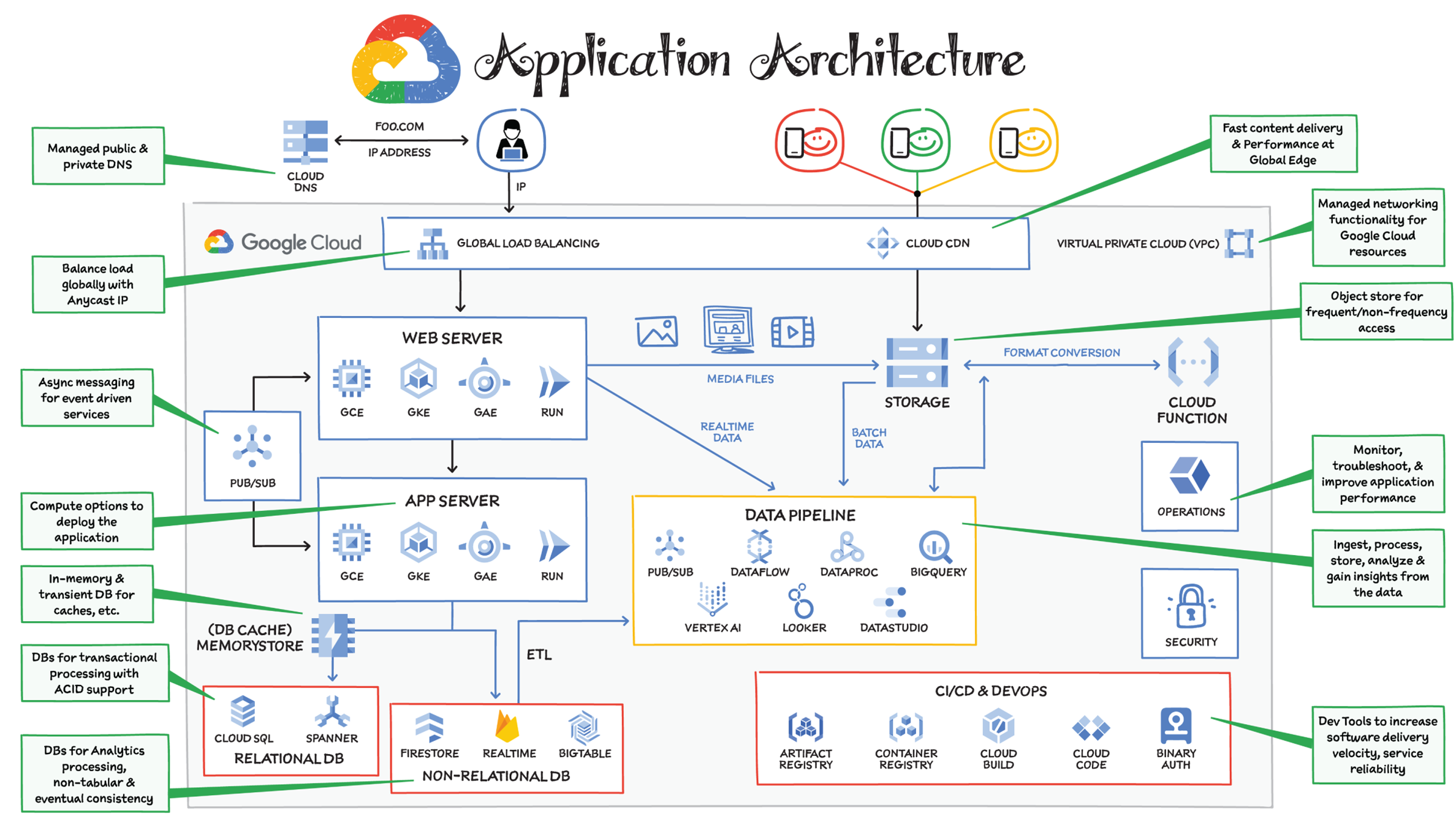

The critical pieces of a typical web application include the web server, application server, databases, and load balancer, among other components. Let's consider one such application to see how it would be deployed on Google Cloud.

Sample Application Architecture

Imagine you are a Google Cloud Architect for foo.com, an internet-accessible application. There are many different ways to architect such an application on Google Cloud; no one way is right or wrong. Let's examine one approach, from the perspective of a generic request flow for when a user opens the browser and types foo.com in the address bar.

Domain Name System (DNS)

The request goes to the DNS server, which responds with an IP address. Cloud DNS is Google's infrastructure for high-volume authoritative DNS serving, and it is backed by a 100% availability SLA. It uses Google's global network of anycast name servers to serve DNS zones from redundant locations around the world, providing high availability and low latency for your users.

Web and Application Servers

The user's computer uses the IP address obtained from DNS to connect to the web server where the foo.com front end is deployed. The application's business logic is deployed on the application server; this includes functionality such as authentication, inventory, and payment services. Access to the application server is usually limited to the web servers and internal services. The web and application servers are housed inside a VPC network, which provides managed networking functionality for all Google Cloud resources.

For web and application servers, you have multiple options across Cloud Run, App Engine, GKE, and Compute Engine. Check out “Where should I run my stuff?” for more details.

- Serverless: If you have a small team of developers, you want them to focus on coding and not worry about infrastructure and scaling tasks. Cloud Run or App Engine would be great picks. Both are serverless and scale from low to high traffic as needed. If you want to run serverless containers serving web and event-driven microservice architectures, then Cloud Run is recommended. Cloud Run should work for most use cases, but you should also check out App Engine if you are developing websites with built-in static file hosting.

- GKE: If you want to run containerized apps with more configuration options and flexibility, you can use GKE. It helps you easily deploy containerized apps with Kubernetes while giving you control over the configuration of nodes. Scaling is also easy; you can define the number of nodes to scale to as traffic grows.

- Compute Engine: For maximum control, choose Compute Engine. It is straight-up virtual machines (VMs), so you can precisely configure your machines with the amount of memory and CPU you need. This level of control, however, means you have more responsibility to scale, manage, patch, and maintain the VMs. Compute Engine works well for legacy applications with specific needs and in situations that truly require full control.

Database

Of course, foo.com needs one or more databases to store information. These could be relational or nonrelational databases depending on the type of data and the use case. (For more detailed guidance on picking the right database for your use case, see “Your Google Cloud database options, explained.”)

Google Cloud relational databases include Cloud SQL and Cloud Spanner, which are both managed.

- Cloud SQL is perfect for generic SQL needs — MySQL, PostgreSQL, and SQL Server.

- Spanner is best for massive scale relational databases that need horizontal scalability. (Massive here means thousands of writes per second and tens of thousands of reads per second, while supporting ACID transactions.)

For nonrelational databases, Google Cloud has three major options: Firestore, Bigtable, and Memorystore.

- Firestore is a serverless document database that provides strong consistency, supports ACID transactions, and delivers fast results to complex queries. It also supports offline data and sync, which makes it a great choice for mobile use cases along with web, IoT, and gaming.

- Bigtable is a wide-column NoSQL database that supports heavy reads and writes with extremely low latency. This makes it a perfect choice for events, time series data from IoT devices, click stream data, ad events, fraud detection, recommendations, and other personalization-related use cases.

- Memorystore is a fully managed in-memory data store service for Redis and Memcached. It's best for transient stores and database caches.
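A common way to use Memorystore as a database cache is the cache-aside pattern: read from the cache, fall back to the database on a miss, and store the result with a time-to-live. The sketch below uses a plain dictionary in place of Redis and a hypothetical `load_from_db` function so that it runs anywhere; with Redis you would use its GET command and SET with an expiry instead.

```python
from __future__ import annotations

import time
from typing import Callable


class CacheAside:
    def __init__(self, load: Callable[[str], str], ttl_seconds: float) -> None:
        self.load = load
        self.ttl = ttl_seconds
        self._store: dict[str, tuple[str, float]] = {}  # key -> (value, expiry time)
        self.misses = 0

    def get(self, key: str, now: float | None = None) -> str:
        now = time.monotonic() if now is None else now
        hit = self._store.get(key)
        if hit is not None and hit[1] > now:
            return hit[0]  # fresh cache hit
        self.misses += 1
        value = self.load(key)  # miss or expired: go to the database
        self._store[key] = (value, now + self.ttl)
        return value


def load_from_db(key: str) -> str:  # hypothetical database lookup
    return f"profile:{key}"


cache = CacheAside(load_from_db, ttl_seconds=60)
cache.get("user-42", now=0)   # miss, loads from the database
cache.get("user-42", now=30)  # hit
cache.get("user-42", now=61)  # expired, loads again
print(cache.misses)           # 2
```

The TTL bounds how stale a cached value can get, which is what makes an in-memory store suitable for the transient data described above.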

Load Balancing and Scale

As your traffic grows, you will need to scale the web and application servers with it. And as the number of servers grows, you will need a load balancer to route traffic to them. Cloud Load Balancing is a fully distributed, software-defined system based on anycast IP addresses, which means you can set up your frontend with a single IP address. It is also global, so it can serve content as close as possible to your users and respond to over a million queries per second. You can make content-based routing decisions based on attributes such as the HTTP header and the uniform resource identifier (URI). It also offers internal load balancing for internal application servers so that you can route traffic among them as needed.
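Content-based routing picks a backend from attributes of the request, such as the URI path. The sketch below imitates that decision with longest-prefix path matching; the route table and backend names are hypothetical, and this is an illustration of the idea, not the load balancer's URL map configuration format.

```python
from __future__ import annotations

ROUTES = {
    "/static/": "cloud-storage-bucket-backend",  # hypothetical backend names
    "/api/payments/": "payments-backend",
    "/api/": "app-server-backend",
}
DEFAULT_BACKEND = "web-server-backend"


def pick_backend(path: str) -> str:
    """Return the backend whose prefix is the longest match for `path`."""
    matches = [prefix for prefix in ROUTES if path.startswith(prefix)]
    return ROUTES[max(matches, key=len)] if matches else DEFAULT_BACKEND


for path in ["/static/logo.png", "/api/payments/charge", "/api/inventory", "/"]:
    print(path, "->", pick_backend(path))
```

Preferring the longest match lets a specific rule (`/api/payments/`) override a broader one (`/api/`) without depending on the order in which rules were written.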

Content Delivery Network (CDN)

Static files don't change often, so a CDN can cache them and serve them from a location close to the user, which reduces latency. Right at the load balancer, you have the option to enable Cloud CDN to cache frequently requested media and web content at the edge location closest to your users. This optimizes last-mile performance, and it also saves cost by fielding requests right at the edge, so they don't have to be handled by the backend.

Object Store

All static files for foo.com such as media files and images as well as CSS and JavaScript can be stored in an object store. In Google Cloud, Cloud Storage is your object store for both long- and short-term storage needs.

Serverless Functions

Let's say foo.com is also available on mobile devices, which need images rendered in smaller mobile formats. You can decouple functionality like this from the web server and make it a function-as-a-service with Cloud Functions. This approach enables you to apply your image resizing logic to other applications as well. You can trigger the serverless function as soon as a file is added to Cloud Storage and convert the file into multiple formats and put them back into storage, where they are used by the web server. You could also use serverless functions for other use cases such as address lookups, chatbots, machine learning, and more.
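The resize step can be sketched as a function that receives the data for a newly written object and plans the smaller renditions. The `bucket` and `name` fields mirror Cloud Storage object metadata; the target widths, the `resized/` naming scheme, the `plan_resizes` function, and passing the original dimensions in as arguments are hypothetical, and the actual pixel resizing (for example, with an imaging library) is left out.

```python
from __future__ import annotations

TARGET_WIDTHS = (320, 640, 1280)  # hypothetical mobile-friendly widths


def plan_resizes(event_data: dict, width: int, height: int) -> list[tuple[str, int, int]]:
    """Return (output object name, width, height) for each smaller rendition.

    Heights preserve the original aspect ratio; widths at or above the
    original are skipped so images are never upscaled.
    """
    name = event_data["name"]
    if name.startswith("resized/"):
        return []  # ignore our own outputs to avoid an endless trigger loop
    stem, dot, ext = name.rpartition(".")
    if not dot:  # no extension in the object name
        stem, ext = name, ""
    plans = []
    for target in TARGET_WIDTHS:
        if target < width:
            target_height = round(height * target / width)
            plans.append((f"resized/{stem}-{target}w{dot}{ext}", target, target_height))
    return plans


print(plan_resizes({"bucket": "foo-media", "name": "photos/cat.jpg"}, width=1024, height=768))
# [('resized/photos/cat-320w.jpg', 320, 240), ('resized/photos/cat-640w.jpg', 640, 480)]
```

The early return matters when outputs are written back to the same bucket: without it, each resized file would trigger the function again.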

Events

In certain situations, foo.com might need to send messages, notifications to the user, or events between various microservices. This is where an asynchronous messaging service such as Cloud Pub/Sub can be used to push notifications to a topic and have other services subscribe to the topic and take appropriate action on it asynchronously.

Data Analytics

Applications like foo.com generate real-time data (e.g., clickstream data) and batch data (e.g., logs). This data needs to be ingested, processed, and made ready for downstream systems in a data warehouse. From there it can be analyzed further by data analysts, data scientists, and ML engineers to gain insights and make predictions. You can ingest batch data from Cloud Storage or BigQuery and real-time data from the application using Pub/Sub, and scale to ingesting millions of events per second. Dataflow, based on open source Apache Beam, can then be used to process and enrich the batch and streaming data. If you are in the Hadoop ecosystem, you can use Dataproc for processing; it is a managed Hadoop and Spark platform that lets you focus on analysis instead of worrying about managing and standing up your Hadoop cluster.
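Streaming pipelines in Dataflow commonly group events into time windows before aggregating them; Apache Beam expresses this with windowing transforms. The plain-Python sketch below only illustrates what one-minute fixed windows compute for some hypothetical clickstream events; it is not Beam code and ignores late data and watermarks.

```python
from __future__ import annotations

from collections import Counter


def count_per_fixed_window(events: list[tuple[float, str]], window_seconds: int) -> dict[tuple[int, str], int]:
    """Count events per (window start, page), where each event is (timestamp_seconds, page)."""
    counts: Counter[tuple[int, str]] = Counter()
    for timestamp, page in events:
        window_start = int(timestamp // window_seconds) * window_seconds
        counts[(window_start, page)] += 1
    return dict(counts)


clicks = [(5.0, "/home"), (42.0, "/home"), (61.0, "/cart"), (75.5, "/home"), (119.9, "/cart")]
print(count_per_fixed_window(clicks, window_seconds=60))
# {(0, '/home'): 2, (60, '/cart'): 2, (60, '/home'): 1}
```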

To store the processed data, you need a data warehouse. BigQuery is a serverless data warehouse that supports SQL queries and can scale to petabytes of storage. It can also act as long-term storage and a data lake along with Cloud Storage. You can use data from BigQuery to create dashboards in Looker or Data Studio. With BigQuery ML, you can create ML models and make predictions using standard SQL queries.

Machine Learning

For ML/AI projects, you can use the data in BigQuery to train models in Vertex AI. Your media, image, and other static file datasets from Cloud Storage can be directly imported into Vertex AI. You can create your own custom model or use pretrained models. It's a good idea to start with a pretrained model and see if it works for you; most common use cases are covered (including image, text, video, and tabular data). If a pretrained model does not work for your use case, use AutoML in Vertex AI to train a custom model on your own dataset. AutoML supports all the common use cases and requires no code. If you have lots of ML and data science expertise in house, you may decide to write your own custom model code in the framework of your choice.

Operations

foo.com needs to be holistically monitored to make sure the servers and every part of its architecture are healthy. Google Cloud's operations suite offers all the tools needed for logging, monitoring, debugging, and troubleshooting your application and infrastructure.

DevOps

You also need to make sure the foo.com development and operations teams have the right access and the right tools to build and deploy the application. As developers write code for the app, they can use Cloud Code within the IDE to push the code to Cloud Build. Cloud Build packages and tests the code, runs vulnerability scans, and invokes Binary Authorization to check for trusted container images; once the tests pass, it deploys the package to staging. From there, you can create a process to review the release and promote it to production. Container images are stored in Artifact Registry, from which they can be deployed to GKE or Cloud Run. Compute Engine images are stored in your project.
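The gated flow described above can be modeled as an ordered list of checks that must all pass before a deployment proceeds. Everything in the sketch is hypothetical (the gate names, the `Build` record, the image tags, and the trusted-attestor set); it mirrors the idea of build, test, and scan steps followed by a Binary Authorization-style attestation check, not either product's configuration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Build:
    image: str
    tests_passed: bool
    critical_vulnerabilities: int
    attestations: set[str] = field(default_factory=set)


TRUSTED_ATTESTORS = {"qa-attestor"}  # hypothetical attestor name


def deploy_to_staging(build: Build) -> tuple[bool, str]:
    """Check the gates in order and report the first one that fails."""
    gates = [
        ("unit tests", build.tests_passed),
        ("vulnerability scan", build.critical_vulnerabilities == 0),
        ("attestation check", bool(build.attestations & TRUSTED_ATTESTORS)),
    ]
    for gate_name, passed in gates:
        if not passed:
            return False, f"blocked at {gate_name}"
    return True, f"deployed {build.image} to staging"


good = Build("foo-web:1.4.0", True, 0, {"qa-attestor"})
risky = Build("foo-web:1.4.1", True, 2, {"qa-attestor"})
print(deploy_to_staging(good))   # (True, 'deployed foo-web:1.4.0 to staging')
print(deploy_to_staging(risky))  # (False, 'blocked at vulnerability scan')
```

Putting the attestation check last means only an image that has already passed testing and scanning can even be considered for deployment.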

Security

foo.com needs to be secured at the data, application, user/identity, infrastructure, and compliance levels. This topic will be covered in detail in Chapter 8, “Security.”