CHAPTER 3

Playbook for Phase 1 – Deployment Coaching

In 2015, I flew to Paris to facilitate an OKRs training workshop. My client requested that I spend a full day with their leadership team to introduce OKRs. So, I took the project and did exactly what I was hired to do. At the time, I was still figuring out my approach. I did not know about the three phases outlined in Chapter 2. I was not clear about how best to prepare for the workshop. My client suggested we have a couple of calls prior to the workshop to align on the agenda. After these two brief calls, I packed my bags and headed to the airport.

The workshop in Paris did not go so well. Although we eventually drafted some decent OKRs, we spent most of the afternoon debating how to deploy OKRs. I was bombarded with questions like (1) “How will these OKRs work with our current system of KPIs?” (2) “I read that OKRs should be decoupled from performance reviews, but how is that possible given they represent our most important goals?” and (3) “If top‐level key results do not become team‐level objectives like John Doerr's football team example, how can we ensure alignment?”1

I had plenty of time to reflect on that training workshop in Paris. I spent the 12‐hour flight home writing in my journal, uncovering the critical questions that must be answered prior to an OKRs training workshop. I named the answers to these questions deployment parameters. Your client's deployment parameters are the foundation for their OKRs program. Your client uses these parameters to communicate how they are deploying OKRs to the entire organization.

There is no one‐size‐fits‐all, definitive list of deployment parameters; however, we recommend covering 10 parameters with every client. Therefore, we refer to these parameters as “universal.” Aligning on the 10 universal parameters with your client prior to delivering a training workshop is always time well spent.

This chapter provides a detailed analysis of the 10 universal deployment parameters and concludes with a sample list of other deployment parameters that you may find important to cover with certain clients.2 To create context for aligning on these parameters, begin by confirming why your client wants to leverage OKRs.

BEGIN WITH “WHY?”

Most workers are busier than ever. To cut through the noise and get a commitment to OKRs, your client's leadership team must be able to provide a compelling answer to why they are implementing OKRs and how employees will benefit. As for the answer itself, each organization should provide a unique response. Bad answers come in two flavors:

- Imitation. “We're implementing OKRs because Google does it,” or “We read Measure What Matters and lots of successful companies are using it, so we will, too.”

- Too broad. “We're implementing OKRs to improve focus, alignment, execution, accountability, transparency, engagement, clarity, continuous improvement, and organizational learning, as well as shifting our mindset from output to outcomes.” Listing too many buzzwords when explaining “Why OKRs?” dilutes the message.

Good answers explain the specific problem that leadership seeks to solve by introducing OKRs. Here are some examples taken directly from CEOs explaining why they decided to deploy OKRs, each followed by a brief analysis of how the answer informs the selection of deployment parameters:

Cross‐Functional Alignment

- CEO on “Why OKRs?” “We've doubled in size over the last year. As we scale, we are seeing silo effects typical of larger corporations. To preserve our collaborative culture, we are introducing OKRs. We believe the common goal language of OKRs can prevent these silo effects, improving alignment and communication across teams.”

- Impact on Deployment. Consider starting with company‐level OKRs only. Have each team explore how they can work together to achieve higher‐level goals.

Focus

- CEO on “Why OKRs?” “We have too much on our plates. There are endless potential projects to take on. However, if we try to take on more over the next few years, we risk failing in many areas rather than succeeding in our core growth areas. We're bringing in OKRs to help us focus on what matters most.”

- Impact on Deployment. Consider defining a single OKR for each team. It is not the goal for teams to include all their work in this OKR. Simply define one focus area for near‐term improvement.

Better Communicate Company Strategy

- CEO on “Why OKRs?” “As a leadership team, we feel we have a well‐defined strategy. However, we keep getting feedback from our staff that they do not even know we have a strategy. We need to better articulate our strategy and ensure leadership clearly presents the strategy at quarterly updates. We are adopting OKRs to communicate our company's strategy in a consistent format.”

- Impact on Deployment. Start with company‐level OKRs only. Communicate OKRs at recurring meetings, such as a quarterly all‐hands.

In practice, it is quite rare to ask your client, “Why OKRs?” and get a concise answer. Ask this question several times and get input from multiple sources. Leadership's answer to this question will be most critical, but test to see that leadership's motivation for deploying OKRs resonates with OKRs project leads, team leads, and team members.3 Your client's motivation for deploying OKRs informs their selection of deployment parameters.

TEN UNIVERSAL DEPLOYMENT PARAMETERS

1. At What Level Will We Set OKRs?

This parameter explores if, how, and when to define OKRs at various levels in an organization. These levels include top/company, team, and individual. Rather than rolling out OKRs at multiple levels all at once, we suggest introducing various levels over time. For example, you may choose to begin with top‐level OKRs only and plan to introduce team‐level OKRs after succeeding at the top level. As you read through this section, notice that how you define “top/company level” as well as “team level” is not quite as obvious as it might seem at first.

Top/Company Level

We advise beginning OKRs coaching engagements with a mindset that your client will benefit by defining OKRs at the company level. OKRs at higher levels create context for OKRs at lower levels in the organization. To set OKRs at the company level, you must interface directly with the CEO. Assuming you have access to the CEO and can confirm that your client wants to define OKRs for the overall company, the company‐level OKRs become the top‐level OKRs. However, defining OKRs at the company level is not always the right place to start.

Your client may define a set of top‐level OKRs that exist below the company level. There are two reasons for this: (1) feasibility and (2) effectiveness. Feasibility is obvious: You may not have access to the CEO, especially in large companies with thousands of employees. The second reason is less obvious: Company‐level OKRs may not be effective. That is, the process of defining company‐level OKRs may not be of value. Many of our clients in the Global 1000 with hundreds of products and departments already have a strategic planning system in place that obviates the need for company‐level OKRs. In these massive organizations, introducing OKRs at a lower level often makes more sense.

Our clients have defined the top level as the office of the CIO, a business unit, and even a single product team. One of our larger clients with dozens of business units chose to define top‐level OKRs for each business unit. The company maintains an annual budget and metrics dashboard to capture the financials of each business unit and felt it would be a redundant exercise to define OKRs for the overall company.

Team Level

We recommend getting top‐level OKRs right before rolling out OKRs to lower‐level teams. This enables leaders to lead by example and may provide the context teams need to connect their OKRs to top‐level goals. If your client is eager to roll out OKRs across teams on day one, advise them to pilot OKRs with several teams prior to scaling their program. How your client chooses to implement OKRs at the team level is a critical predictor of their success with OKRs.

Your client's definition of team can make or break their OKRs program. When I worked at Betterworks, the leading OKRs software solution in 2013, we started every implementation by loading the org chart into our software. This automated the process of defining OKRs teams. Oh, wouldn't it be nice if we could simply define teams based on a one‐to‐one mapping to the org chart? While the org chart approach is simple and scalable, we find it is not always effective. In fact, we have encountered very few cases in which the org chart approach is effective across an entire organization over the long haul.

Dozens of organizations have approached us for coaching and guidance after concluding that their org chart approach failed. One of the most common complaints is that a functional team approach increased silo effects rather than alignment across teams. Defining team‐level OKRs based solely on the org chart often conflicts with the “work together” part of the definition of OKRs.

While no single approach works with every organization to define an optimal set of teams that should set OKRs, there are several approaches to consider based on what is proven to work in the field. Here are the three most common ways we've seen organizations think beyond their org charts to set up team‐level OKRs that encourage cross‐functional alignment. As you review each of the three approaches, keep in mind that they are not mutually exclusive. For example, your client might merge highly dependent teams, case 1, as well as define a cross‐functional team based on a top‐level OKR, case 3.

Case 1: Merge Highly Dependent Teams

In an OKRs engagement with a mid‐sized tech company, an engineering manager asked, “Do we set up separate OKRs for the product team and a separate set of OKRs for the engineering team?” I replied with, “Given you have a separate head of product and head of engineering, then yes, we should define one set of OKRs for the product team and another for engineering.” In other words, I was sticking to the assumption that the org chart structure defines OKRs teams. But this was not the right answer. After a few minutes attempting to draft OKRs with just the product team, we concluded that nearly every key result was dependent on engineering. As my client's motivation for implementing OKRs was to increase alignment across teams, we immediately adjusted the game plan to ensure we did not reinforce silo effects by strictly adhering to the org chart.

In the spirit of keeping things simple, we merged product and engineering into a single OKRs team and named this team “ProdEng.” In the next cycle, we identified critical dependencies with marketing, so we expanded the team to include a few members from marketing. As this OKRs team loosened the constraint of defining their OKRs based purely on the org chart, we took a step toward creating more alignment across teams. We adjusted the default approach of using the org chart to define teams and leveraged OKRs as a common framework for aligning on a smaller number of OKRs that were shared across the org chart.

Given the reality that product and engineering were still functional teams and had their own cadence and sprint cycles, we agreed to identify a key result champion from the product team and co‐champion from engineering for each key result. Simply developing OKRs that spanned the org chart and included the right people from the start improved alignment. This same approach often works with sales and marketing, IT and finance, or any other collection of highly dependent teams.

Case 2: Leverage Existing Cross‐Functional Squads

From 2014 to 2017, I was lucky enough to coach several leading online classified companies using OKRs in the United States, Canada, and the Netherlands. The online classified company in Canada completely abandoned the notion of functional team OKRs. They had an org chart with a clearly defined reporting structure. However, the org chart was totally decoupled from the teams that set OKRs.

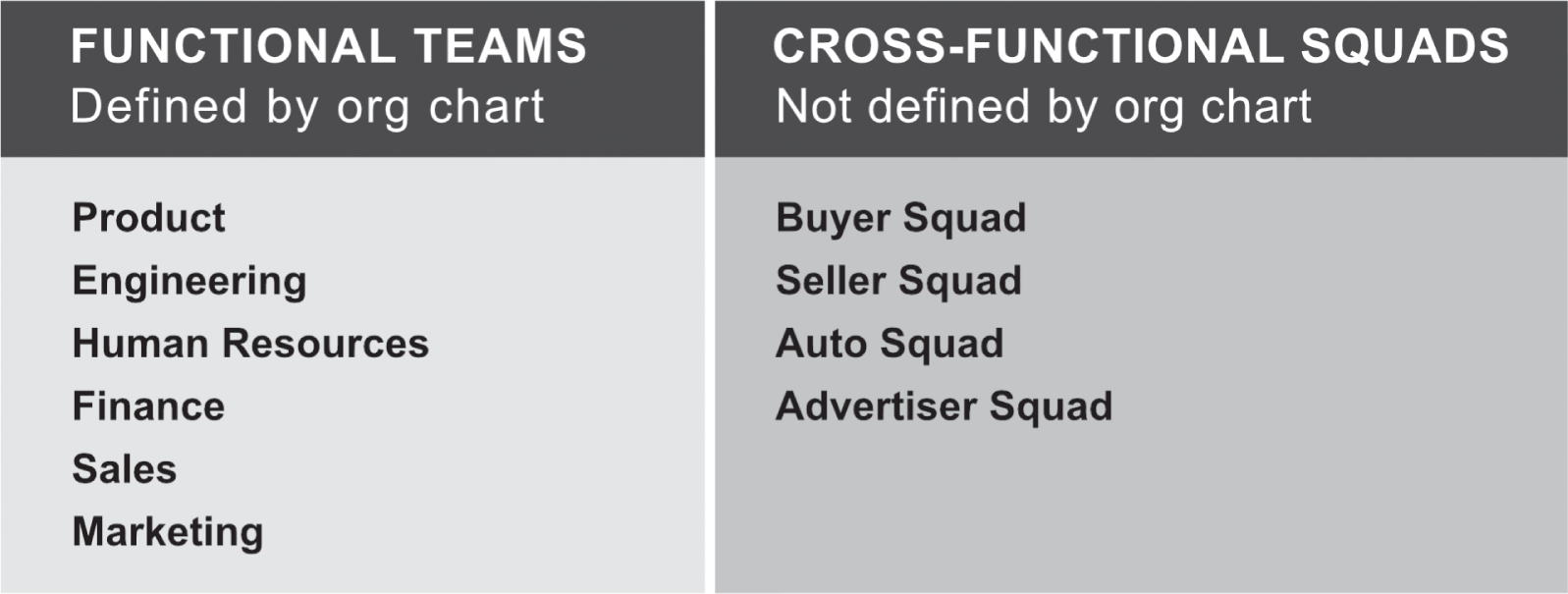

Rather than a list of OKRs teams based on the org chart (e.g., product, engineering, human resources, finance, sales), the teams that set OKRs were mapped to the needs of various customer segments. This mapping reflected their cultural focus on customer centricity. Team‐level OKRs were associated with cross‐functional squads, each named after their customer segment. They included “Buyer Squad,” “Seller Squad,” “Auto Squad,” and “Advertiser Squad,” as shown in Figure 3.1.

FIGURE 3.1 Two common ways to define OKRs teams

Figure 3.1 lists four squads defined by customer segments. However, this is just one example. One of our clients, a leading global e‐commerce company, defines squads based on different parts of their customer's journey. Its customer journey squads include: “Account Registration,” “Checkout,” “Payment and Fraud,” “Last Mile Delivery,” and “Recommendations.”

In his book Inspired, Marty Cagan describes a squad as a best‐practice approach for designing product teams:

A product team is a cross‐functional set of professionals, typically comprised of a product manager, a product designer, and a small number of engineers. In addition, there are sometimes additional people with specialized skills included on the team such as a data analyst, a user researcher, or a test automation engineer… . The key is that these people with their different skill sets usually come from different functional departments in the company, but they sit and work all day, everyday… . It's not unusual in larger organizations to have on the order of 20 to 50 of these cross‐functional product teams, each responsible for different areas, and each product team with its own objectives to work on.4

In 2017, we started seeing more and more organizations, especially in high tech, move beyond the org chart to define their OKRs based on cross‐functional squads. Leading organizations such as eBay, PayPal, Nike, and Walmart have implemented product squads. When such squads are in place, organizations find it intuitive to define OKRs at the team level for each cross‐functional squad.

Case 3: Define Cross‐Functional Teams Based on Top‐Level OKRs

Our clients mentioned in case 2 defined cross‐functional squads prior to launching OKRs. In such cases, we advise defining team‐level OKRs based on existing squads. However, many organizations do not have such cross‐functional squads in place. Identifying potential cross‐functional squads is an unexpected benefit of the OKRs process.

Starting with top‐level OKRs may lead to the formation of OKRs teams that go beyond the org chart. One of our clients did just this. After facilitating a training workshop with key leaders, we created a top‐level objective focused on organic growth. The CFO pointed out that the five‐year financial model depended on maintaining an organic growth rate of at least 50%. However, there was no team responsible for monitoring and maintaining organic growth. Rather than asking each functional team to define OKRs in the context of top‐level objectives, our client decided to create a new team focused on organic growth. This team included leaders from several functional areas and was charged with defining and managing the company's organic growth objective.

Individual Level

If your client wants to set individual‐level OKRs, we advise making this practice optional. Other than Google, we have not seen an organization succeed when mandating individual‐level OKRs for all employees. Some organizations force individuals to define their OKRs by a certain date and load them into a certain location as a compliance ritual. Such practices introduce overhead and often create unintended consequences. Here's an anonymous story from a former customer success manager of an OKRs software vendor that illustrates some of these consequences.

One of our clients was an irate inbound call center manager. Her company had recently purchased OKRs software licenses for every employee and instructed all staff to publish their OKRs into the software tool by a certain date. She asked me how a given person working in the call center could possibly benefit by loading their personal goals into an OKRs software tool given that she already installed a call‐center tracking system that monitored every metric. She claimed, “No one at our company other than me cares about the individual performance of the workers in our call center.” Call center employees already had a real‐time dashboard that measured every metric that could possibly be worth tracking. The tracking tool cost quite a bit and was running well. We agreed with the call center manager that it would actually be a step backward if each of the nearly 200 call center workers were forced to copy and paste their metrics and load them into our software tool as a compliance exercise.

—Anonymous OKRs software customer success manager

We recommend that your client explore individual‐level OKRs only after succeeding with team‐level OKRs for at least two cycles. Even then, we advise organizations not to require that all employees define individual‐level OKRs, as this often turns an OKRs program into yet another compliance exercise. When individuals set OKRs for themselves, they often worry, rightfully so, that the achievement of OKRs will be used to evaluate their performance. As such, rather than setting stretch goals, they define key results with low targets so they can underpromise and overdeliver.

Yes, Google has thousands of employees setting OKRs at the individual level. While this approach has worked well for some workers at Google, we've spoken with Googlers who report that individual‐level OKRs are a waste of their time. In extreme cases, individual‐level OKRs become personal goals that are totally disconnected from business goals.5

We've met with a few teams at Twitter who abandoned the practice of setting OKRs at the individual level. These teams stopped setting individual‐level OKRs for two reasons. First, most OKRs at the individual level looked like a list of key tasks rather than key results. Second, some teams failed to make progress on team‐level OKRs, even though most individual‐level OKRs were achieved. In this case, the practice of defining individual‐level OKRs led to unintended consequences. Individual contributors tended to focus more on individual‐level OKRs rather than working as a team to achieve higher‐level OKRs.6 So, if individuals do not define their own OKRs, how do individuals connect their work to OKRs? How do individual contributors stay engaged?

Individual contributors can – and should – be actively engaged with OKRs at higher levels. Some individuals engage with OKRs by taking on roles such as team member, internal OKRs coach, and/or key result champion. Individuals often discuss OKRs during their regular 1:1s with their manager. Some use OKRs as context for focusing their effort on activities that drive OKRs forward. However, as OKRs do not capture all work, individuals also allocate their time to business‐as‐usual activities that maintain health metrics (e.g., update software to ensure website uptime remains >99.99%) or are simply required for compliance (e.g., provide reports to support the auditors).7

The guidance to begin with top‐level OKRs and to avoid requiring individual‐level OKRs is universal. However, your approach to choosing the teams that will set OKRs is perhaps the most important deployment parameter of all. This is an opportunity for your client to translate their corporate culture into the fabric of their OKRs program. Let's summarize three cases:

- You want a hierarchical culture. Maybe you will choose the default approach to define OKRs teams based on the functional teams in the org chart. In this case, OKRs can help reinforce the org chart hierarchy.

- You want a culture that fosters cross‐functional execution. Consider merging highly dependent functional teams to build your OKRs teams. This approach embraces dependencies and meets them head on. You may also define top‐level OKRs first and see if teams emerge as illustrated in case 3 in which our client created a cross‐functional squad to manage organic growth.

- You want a customer‐centric culture. Consider defining team‐level OKRs based on the cross‐functional squad model as illustrated in case 2. Leverage this approach with clients that already have squads in place.

2. How Many OKRs Will We Set? Will We Include an Internal Objective?

Back in the 1980s, most teams set five to seven objectives, each with three to five key results. Now, in the 2020s, we find it unusual for a team to set more than three objectives. The fact that teams are defining a smaller set of OKRs is probably a good thing. After all, OKRs are intended to increase focus. As OKRs coaches, we live by the mantra less is more.

We advise limiting OKRs to a narrow area of focus rather than attempting to map all work to OKRs. In general, we advise teams to define at most three objectives and four key results per objective. The optimal number of OKRs varies from team to team, so don't be too rigid about the number of OKRs.8 Given that OKRs should not attempt to capture all work, most of our clients require any teams that are setting OKRs to focus on at most three objectives that capture 40% to 80% of their total work effort.9 Although we advise adhering to the less is more mantra, certain teams may benefit from another approach.

Some teams capture more than 90% of their work effort in their OKRs. This can work well for resource‐constrained teams that serve multiple stakeholders and are constantly inundated with requests to take on new projects. These teams use OKRs as a shield to protect their team members from getting pulled into new directions in the middle of the OKRs cycle. They use OKRs to send the message, “If you don't see it in our OKRs, we're not working on it right now.” These teams tend to define as many as five objectives, each with as many as five key results. While we do not advise that teams attempt to capture 90% of their work in their OKRs, we recognize this approach may work for teams looking to adopt OKRs as a way of communicating what they are not prioritizing. In our experience, engineering, platform, and infrastructure teams may benefit from this approach.

At the other extreme, some OKRs experts recommend teams align on a single objective.10 As of 2020, we find that most teams we coach prefer to focus on a single OKR during their learning cycle. Then, after succeeding with one OKR, they explore multiple OKRs in their next cycle. In general, we advise that teams just starting out with OKRs aim for just one or two objectives, at most three. While we would never recommend every team begin by defining exactly two objectives, we do advise challenging your client to go through the exercise of drafting two objectives to balance internal and external goals.

Some of our clients define two OKRs, an internal OKR and a separate, external OKR. They find it critical to focus on making a positive impact on both external customers and their internal team. External objectives are aimed at making an impact outside the team. Nearly all our clients are focused on external objectives. Examples include:

- External Objective 1: Win Belgium market.

- External Objective 2: Successfully launch add‐on product ABC.

- External Objective 3: Win more big accounts to accelerate growth.

While customer‐facing teams often focus on external objectives, support teams such as HR and finance often have internal objectives. Internal objectives are about improving processes that impact our employees and how we can work better as a team. Examples of internal objectives include:11

- Internal Objective 1: Improve onboarding process for new engineers.

- Internal Objective 2: Create a culture of cybersecurity readiness.

- Internal Objective 3: Figure out where the money we make comes from.

As you help your client decide how many and what types of objectives to define, consider asking:

- If you could only set one objective, would it be internal or external?

- Would it be useful to draft an internal and an external objective?

- Is it acceptable for a team to have only an internal objective even if the top‐level OKRs are all external?12

3. How Will We Score OKRs? How Will We Update Progress?

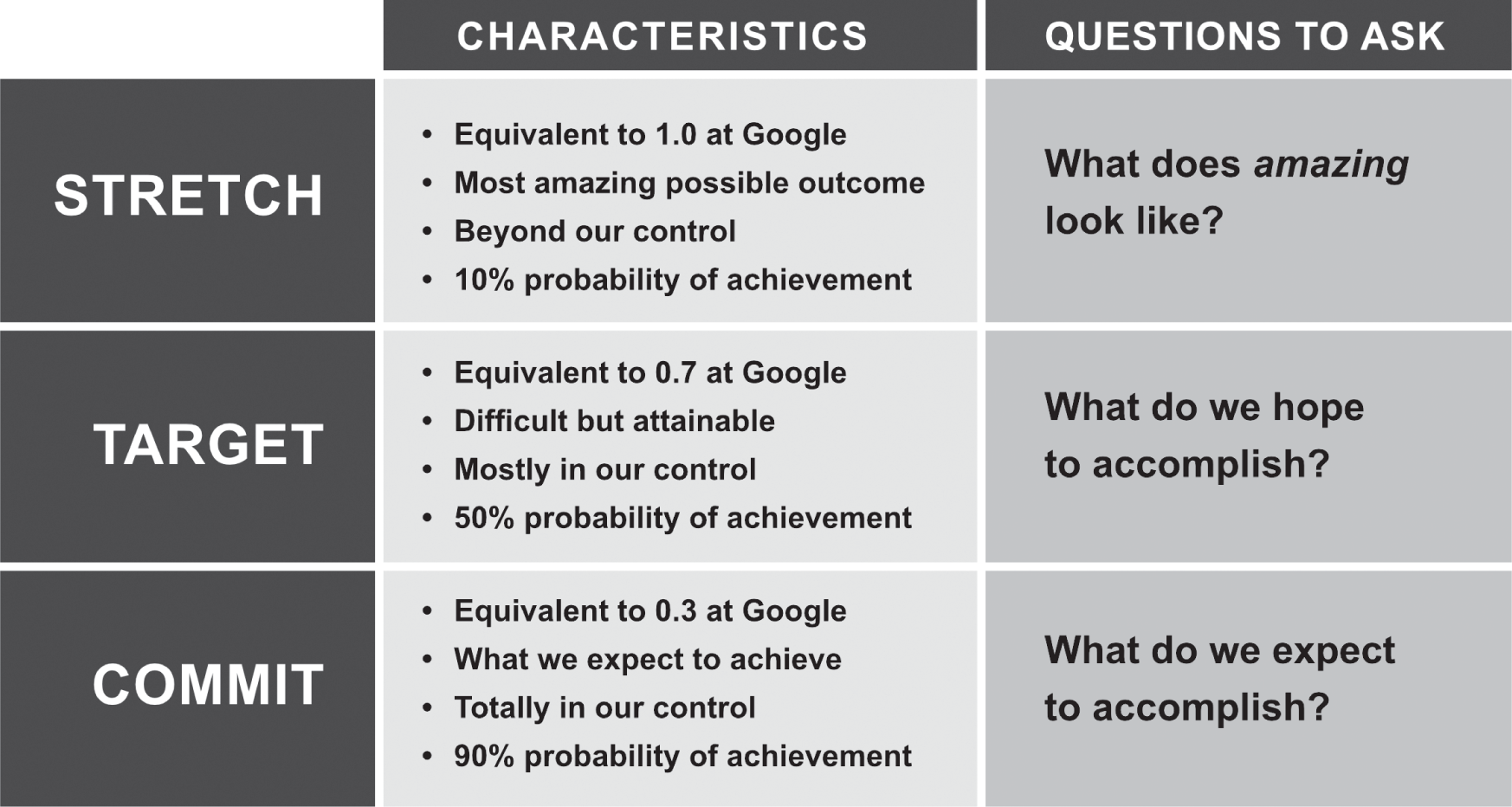

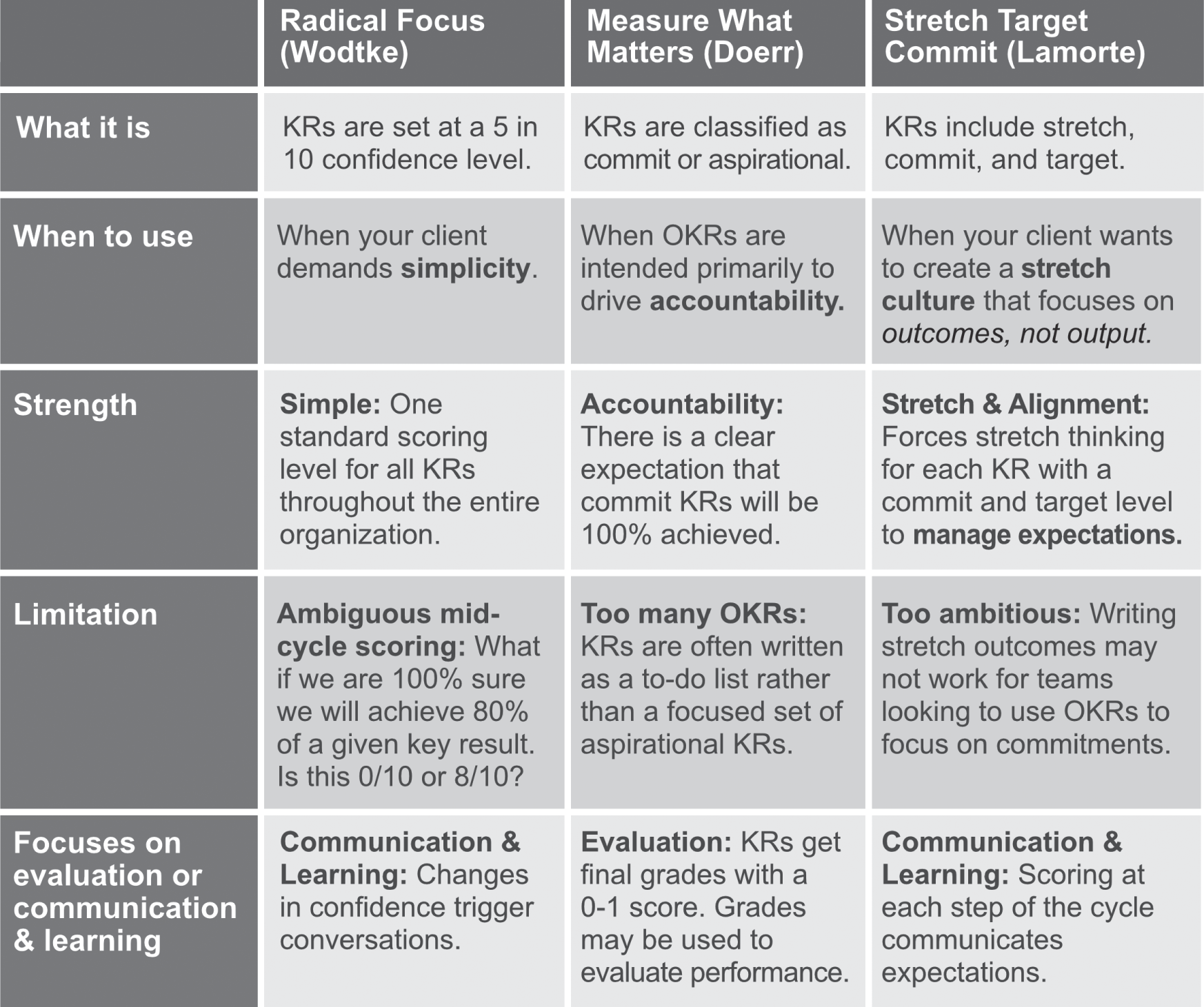

While scoring key results is essential to success with OKRs, we advise you not to score objectives.13 In my early years as an OKRs coach, I realized many organizations did not have a clear approach to scoring key results. So, I developed a standard scoring system and named it “Stretch Target Commit.”14 While most of our clients adopt this scoring system, no one approach to scoring key results is ideal for every organization. As a coach you should be familiar with the three most common scoring systems used in the field:

- Radical Focus. Key results are set at a 50% confidence level; key results are achieved or not.

- Measure What Matters. Key results are classified as “commit” or “aspirational.” Key results are scored on a 0–1 scale. Commit key results are expected to be fully achieved with a score of 1.0. The target score for aspirational key results is 0.7.

- Stretch Target Commit. Key results are defined by: (1) a stretch with 10% confidence of achievement, (2) a target with 50% confidence, and (3) a commit with 90% confidence.

Regardless of which scoring system your client adopts, be sure they agree to deploy a single system. In several of our early OKRs projects, we provided teams with various options for scoring key results. Several OKRs project leads gave us direct feedback that they would like us to require that all teams adopt a single scoring system when getting started.

Binary Scoring (Oracle)

I first used OKRs in 2011 at a small startup company. We adopted a scoring system based on what worked for Oracle back in the late 1980s. Each key result was either achieved or not. Things were simple. It was binary. If a key result was “Sign 10 new customers by end of quarter” and the quarter ended with nine new customers, the key result was not met. In fact, it was assumed that you'd hit 10 customers midway through the quarter, cross out the 10, raise the bar to 15, and achieve 20 by end of quarter. This approach to key result scoring is known as “set the bar high and overachieve.”15

It was an unwritten rule that if your team achieved its objectives, your team would be celebrated, and individuals comprising the team would be more likely to be promoted. Your team was successful to the extent that OKRs were achieved.

Oracle's scoring system was not perfect. Consider the previous situation, where the key result was “Sign 10 new customers” but the quarter ended with nine new customers. The binary scoring system could create a sense of failure, as signing nine new customers was interpreted as falling short. Not by much, but still, the feeling was one of losing. Oracle's binary approach based on setting the bar high and overachieving was diametrically opposed to the culture of OKRs at Google, where the worst thing you can do is blow out all your OKRs.
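The binary rule described in this section can be sketched in a few lines of Python. This is only an illustration of the "achieved or not" logic, not a description of any tool Oracle used. The function name `binary_score` is hypothetical, and the sketch assumes a key result where a higher number is better.

```python
def binary_score(actual: float, target: float) -> int:
    """Return 1 if the key result met or beat its target, otherwise 0.

    Assumes a "higher is better" metric; there is no partial credit.
    """
    return 1 if actual >= target else 0


# Key result: "Sign 10 new customers by end of quarter"
nine_customers = binary_score(actual=9, target=10)   # falls short by one
ten_customers = binary_score(actual=10, target=10)   # meets the bar

print(nine_customers, ten_customers)
```

Because nine customers and zero customers both receive the same score, the sketch makes the "sense of failure" problem easy to see: the score carries no information about how close the team came.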

Grading on a 0–1 Scale at End of Cycle (Google)

I learned about how Google grades16 OKRs right about the time the Google Ventures video came out in 2013. The idea was to standardize how all key results are scored across the organization. This normalized scoring model gives everyone a way of knowing how to measure success. A score of zero is bad, a score of 0.3 indicates some progress, a score of 0.7 reflects the target level of achievement, and a score of 1.0 reflects success beyond the target.

The culture at Google emphasizes stretch goals. In fact, scoring all 1s on key results implies gaming the system. I heard a story about a Googler who set lofty goals and then went on to achieve them all. Apparently, everyone assumed he sandbagged. Pranksters brought sandbags into his office as a practical joke.17 While it might not be perfect, Google's scoring approach standardizes conversations and streamlines communication about the level of achievement for each objective.

Stretch Target Commit with Predefined Scores (Lamorte)

Nearly all organizations score key results at the end of the cycle. Many score key results at several intervals throughout the cycle. However, very few define scoring criteria when defining key results. Nearly all our clients find it valuable to establish scoring criteria up front. The conversation about what makes a “0.3” or a “0.7” is more meaningful when we translate these numbers into English. After discussing this with Vincent Drucker – and yes, Vincent is Peter Drucker's son – I developed the guidelines shown in Figure 3.2 that our clients find useful.

FIGURE 3.2 Defining a stretch, target, and commit “prescore” for each key result

Here is a story that illustrates the value of aligning on key result scoring up front. The story begins with a VP of engineering creating the following OKR:

The key result as written represents the 1.0/stretch scoring level. Aligning on the 0.3/commit and 0.7/target scoring levels before finalizing key results forces a conversation about what is a commitment versus what is a stretch (or aspirational) outcome early in the OKRs cycle. When presented with this scoring model, some of our clients are initially concerned that defining three levels will require too much time. However, in practice, defining these scoring levels takes just minutes.

In this case, the engineering manager let the VP know that the drafted 0.7/target score was not even possible and that the 0.3/commit score would be quite a stretch. The engineering manager had a contact in the legal department who explained that the organization did not have permission to even sell the product until the following quarter. Surprisingly, the VP was not aware of this legal barrier.

The prescoring exercise aligned expectations. It enabled the VP to learn that it would be impossible to get paying users by the end of the quarter. Having these conversations before finalizing the key result ensured everyone was on the same page from the start. In this case, to better manage expectations and resources, the engineering team refined the key result. Ironically, the proposed commit level of the key result as drafted by the VP ended up as the stretch level of the finalized key result:

Even though Google uses a 0–1 numerical scoring system, most of our clients do not adopt a numerical scoring scale. Rather than using 0.3, 0.7, and 1.0, they simply use words such as commit, target, and stretch to align on three levels of progress for each key result.
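For readers who think in code, the idea of agreeing on three levels up front can be sketched as a small data structure. This is a minimal sketch under stated assumptions: the class name `PrescoredKeyResult`, the method `level_reached`, and the thresholds in the example (6, 10, and 15 customers) are all hypothetical, and the sketch assumes a metric where higher is better. The confidence percentages in the comments come from the Stretch Target Commit definition earlier in this chapter.

```python
from dataclasses import dataclass


@dataclass
class PrescoredKeyResult:
    """A key result with commit, target, and stretch levels agreed up front."""
    description: str
    commit: float   # roughly 90% confidence of achievement
    target: float   # roughly 50% confidence of achievement
    stretch: float  # roughly 10% confidence of achievement

    def __post_init__(self) -> None:
        # For a "higher is better" metric the levels must be ordered.
        if not (self.commit <= self.target <= self.stretch):
            raise ValueError("expected commit <= target <= stretch")

    def level_reached(self, actual: float) -> str:
        """Translate an actual result into the agreed wording."""
        if actual >= self.stretch:
            return "stretch"
        if actual >= self.target:
            return "target"
        if actual >= self.commit:
            return "commit"
        return "below commit"


# Hypothetical thresholds, for illustration only.
kr = PrescoredKeyResult("Sign new customers this quarter",
                        commit=6, target=10, stretch=15)
print(kr.level_reached(9))
```

Writing the three levels down before the cycle starts is what surfaces disagreements early, such as a commit level that one party considers impossible.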

Mid‐Cycle Scoring: Historical versus Predictive

Many organizations that approach us for help with OKRs have already started working with OKRs and have implemented a scoring system that focuses on progress to date. As such, they measure historical progress on each key result in the form of “X% complete.” While we do not discourage you from looking back and monitoring historical progress on key results, we highly recommend including a predictive element. Let's go back to the “10 new customers” key result to analyze why predictive scoring is so valuable.

Suppose we sign six customers in the first month of the quarter. Great, the key result is 60% complete! However, suppose this same team is not confident that they can sign 10 total customers by quarter end; perhaps the pipeline dried up or a key sales rep just resigned. It would be nice to have a scoring system that communicates this concern.

Predictive scoring serves as an early‐warning system to better manage expectations and reduce the surprises many leadership teams would rather avoid. The simplest form of predictive scoring features a single confidence level that a given key result will be achieved or not by the end of the cycle. Radical Focus adopts such a system. All key results begin with a confidence score of 5/10 by definition and scores for each key result are updated weekly throughout the cycle. In this case, we could communicate that the confidence score dropped from 50% to just 20% to reflect the unlikelihood of signing 10 customers by quarter end. It is the change that then triggers conversations about progress.18
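The difference between historical and predictive scoring can be sketched as follows. This is a rough illustration, not a definitive implementation of any published system. The class name `TrackedKeyResult` and its methods are hypothetical; the starting confidence of 5 out of 10 and the weekly update follow the description above.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class TrackedKeyResult:
    """Tracks historical progress and a predictive confidence score."""
    description: str
    target: float
    actual: float = 0.0
    # Confidence on a 1-10 scale; every key result starts at 5.
    confidence_history: List[int] = field(default_factory=lambda: [5])

    def percent_complete(self) -> float:
        """Historical view: progress to date as a percentage of target."""
        return 100.0 * self.actual / self.target

    def update_confidence(self, new_confidence: int) -> bool:
        """Predictive view: record this week's confidence.

        Returns True when the confidence changed, which is the signal
        to start a conversation about the key result.
        """
        if not 1 <= new_confidence <= 10:
            raise ValueError("confidence must be between 1 and 10")
        changed = new_confidence != self.confidence_history[-1]
        self.confidence_history.append(new_confidence)
        return changed


kr = TrackedKeyResult("Sign 10 new customers", target=10, actual=6)
print(kr.percent_complete())       # historical: looks healthy
print(kr.update_confidence(2))     # predictive: confidence fell, so discuss
```

The two numbers answer different questions. Percent complete reports what has happened, while the confidence score reports what the team expects to happen by the end of the cycle.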

Predictive Scoring That Goes beyond the Numbers

Our analysis of scoring thus far has focused on the actual achievement level of the key result. However, the numerical score of a key result often does not tell the full story. For example, your client may be on track to achieve a key result to onboard five new customers, but achieving the key result requires excessive overtime. The key result champion may provide a high confidence score but feel like saying, “We're on track to achieve the key result, but . . .” If there is something that feels bad about the key result, they could flag this by coloring the key result red or selecting a sad‐face emoji to escalate and resolve as a team. While some systems use color coding based on numerical scores, other organizations introduce color coding to look beyond the numerical progress of a key result.19

One of our clients had a key result to get 100,000 mobile app downloads. They were on track to get 200,000, but the quality of these downloads was horrible. In fact, they were gaming the system by purchasing low‐quality downloads to achieve the key result. The key result was not driving the right behavior. Given that the quality was so low, the key result scored a “red 1.0.” The opposite case of a “green zero” can also occur.

In the case of a “green zero,” there is no progress on the numerical score of the key result, but there is a positive development. Consider the key result, add five new customers this quarter. If your client is on track to add zero customers this quarter, the key result scores a zero. However, they might have 10 new customers lined up to sign next quarter and feel compelled to color the key result green.
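One way to picture a “red 1.0” and a “green zero” is to store the numerical score and the color flag as two independent fields. The sketch below is hypothetical: the `KeyResultStatus` class, the `Flag` values, and the 0.7 cutoff used to decide whether a score counts as on track are all assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Flag(Enum):
    GREEN = "green"
    RED = "red"


@dataclass
class KeyResultStatus:
    description: str
    score: float  # numerical progress, 0.0 to 1.0
    flag: Flag    # qualitative judgment set by the key result champion
    note: str     # the "but ..." behind the number

    def label(self) -> str:
        return f"{self.flag.value} {self.score:.1f}"

    def needs_discussion(self) -> bool:
        """True when the color and the number tell different stories."""
        on_track = self.score >= 0.7  # assumed cutoff for this example
        return (self.flag is Flag.RED) == on_track


downloads = KeyResultStatus(
    "Get 100,000 mobile app downloads", 1.0, Flag.RED,
    "Downloads were purchased and are low quality",
)
customers = KeyResultStatus(
    "Add five new customers this quarter", 0.0, Flag.GREEN,
    "10 new customers lined up to sign next quarter",
)

for kr in (downloads, customers):
    if kr.needs_discussion():
        print(f"{kr.label()}: {kr.description} ({kr.note})")
```

Keeping the flag separate from the score means neither value can silently overwrite the other, so a mismatch between them surfaces as a prompt for a team conversation.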

Systems for scoring OKRs are still evolving. We predict that the topic of scoring key results will become more widely discussed as organizations hit a roadblock due to their lack of a clear approach to key result scoring.

Analysis of the Three Most Common Scoring Systems

In the Radical Focus scoring system, all key results are set at a 5 in 10 confidence level. It is expected that each team setting OKRs will achieve roughly half of its key results. Key results are updated weekly with a predictive confidence score using a 1–10 rating. Any change in confidence level should trigger conversations. At the end of the period, each key result is either “achieved” or “not achieved.” If you achieve 80% of a given key result, it receives a final score of zero. We find teams often achieve “all or nothing.” That is, some teams tend to achieve all their key results and others tend to achieve none of them. Teams learn to adjust their level of stretch to better manage expectations as they define future OKRs. Radical Focus scoring often works well for organizations just getting started with OKRs that demand simplicity.

The Measure What Matters scoring model requires each key result to be classified as a “commitment” or an “aspiration.” At the end of the period, each key result is given a final score, often called a grade, between 0 and 1. Although this system encourages teams to set both commit and aspirational key results, most teams emphasize commit key results. In our experience, teams adopting this system tend to create commit key results that look like a lengthy to‐do list. Aspirational key results, if any are included, are often added as an afterthought. With most key results written as commitments in the form of binary milestones, the scoring process can create confusion. We once saw a final score of 0.4 for the aspirational key result “End Q3 with a permit to build in Portland.” What does that even mean?

In the Stretch Target Commit approach, all key results are written as stretch outcomes that are typically defined at a confidence level of 1 in 10. The same key result also has a predefined commit and target level. The commit reflects a 9 in 10 confidence level and should be highly controllable with minimal dependence on external factors. The target level reflects a 5 in 10 confidence level, falling somewhere between the commit and stretch.20 While we find this approach works best in most cases, some organizations benefit by adopting one of the other scoring systems. Figure 3.3 compares the three most common scoring systems.

FIGURE 3.3 Comparing the three most common systems for scoring key results
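The end-of-cycle rules of the three systems can be contrasted with a short Python sketch. The function names are hypothetical, the example assumes a metric where higher is better, and the linear grade used for the Measure What Matters style is an assumption of this sketch, since grades in practice often involve judgment. The commit and target levels of 5 and 8 are invented for illustration.

```python
def radical_focus_final(actual: float, goal: float) -> int:
    """Radical Focus: a key result is either achieved (1) or not achieved (0)."""
    return 1 if actual >= goal else 0


def graded_final(actual: float, goal: float) -> float:
    """Grade between 0 and 1. The linear proportion is an assumption of this sketch."""
    return max(0.0, min(actual / goal, 1.0))


def stretch_target_commit_final(actual: float, commit: float,
                                target: float, stretch: float) -> str:
    """Stretch Target Commit: report the highest predefined level reached."""
    if actual >= stretch:
        return "stretch"
    if actual >= target:
        return "target"
    if actual >= commit:
        return "commit"
    return "below commit"


# Hypothetical outcome: 8 customers signed against a stretch goal of 10.
actual, stretch = 8, 10
print(radical_focus_final(actual, stretch))
print(graded_final(actual, stretch))
print(stretch_target_commit_final(actual, commit=5, target=8, stretch=stretch))
```

The same outcome reads as a zero under the binary rule, as a partial grade under the graded rule, and as a named level under Stretch Target Commit, which is why aligning on one system before the cycle starts matters.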

4. How Long Is an OKRs Cycle?

The first OKRs cycle ranges from a rapid, one‐month pilot to a full year. We recommend avoiding a one‐month cycle, as it is difficult to write OKRs for such a short period. Encourage your client to adopt a common cycle time when getting started. Beginning with a single cycle time ensures each team completes a cycle together and shares learnings along the way. Most teams define OKRs on a quarterly basis. However, as noted in the introduction, some organizations prefer a four‐month cycle to avoid holiday delays and the quarterly crunch.

After using OKRs for a year or so, many organizations do not require a single cycle time for all teams.21 While most teams stick to the default timing, other teams may adjust their cycle time after completing a cycle or two. This is especially common for teams like research and development that require more time to make measurable progress. In addition, the default cycle duration may vary depending on the level within the organization. Higher‐level OKRs tend to be more strategic and therefore are defined for a longer period. OKRs at lower levels in the organization tend to be more operational and are often set for shorter durations.

Top‐level objectives often do not change throughout the course of a year. In fact, keeping top‐level objectives consistent quarter over quarter can be a good thing. This consistency provides workers at lower levels in the organization more time to understand top‐level objectives and use them as context for creating their team's OKRs.

Team‐level OKRs tend to be defined quarterly or on four‐month cycles, but this is not required. Some organizations define several layers of team‐level OKRs with various cycle times. One of our larger clients in the banking sector had three levels for team OKRs. They referred to each of the three levels as “tiers.” Tier 1 teams set annual objectives with annual key results that were reviewed quarterly. Tier 2 teams set annual objectives with key results defined for a six‐month period with quarterly check‐ins. Tier 3 teams set quarterly objectives with quarterly key results and conducted their check‐ins midway through the quarter.22

5. What Are the Three Types of Key Results? Are Milestones Appropriate?

There are three types of key results: metric, baseline, and milestone. Metric key results are the most common. They look like “move metric A from X to Y.” Baseline key results are used when X is not being measured and your client seeks a metric to reflect progress on a given objective. Your client should only put in the effort to establish a baseline if they expect to use that baseline as the starting point for a metric key result in a future OKRs cycle.23 Most leadership teams define a solid set of metric key results for top‐level objectives. However, many teams struggle to define metric key results.

Dozens of teams send us their OKRs for feedback each year. Their key results often look more like a list of tasks that reflect work output rather than measurable outcomes. Unlike metric key results, milestone key results tend not to include numbers. Milestones are binary – they are either achieved or not. Given that milestones are notorious for reflecting work output rather than outcomes, should milestone key results even be allowed?

Some OKRs coaches advise avoiding milestone key results entirely. On page seven of his OKRs book, John Doerr credits Marissa Mayer with her observation, “It's not a key result unless it has a number.” However, in this same book, Doerr provides examples of milestone key results such as “Develop a demo.”24 Marissa might not be happy with this key result! As an OKRs coach, you work with your client to transform draft key results that often look like a to‐do list into refined key results that reflect measurable outcomes. Here is a hypothetical OKRs coaching conversation to make this concrete:

Client: My key result is to develop a demo.

Coach: What is the intended outcome of developing this demo? How will we know the demo is a success?

Client: Well, the demo is a success if we can get positive customer feedback, but all I can commit to is developing the demo this quarter. It will be quite a stretch to get feedback.

Coach: OK, what will be demo'd and how will we know it is developed?

Client: We're developing a demo for product X and our sales team decides if it's developed and ready to be used. Ultimately, it is our customers that will decide if it's a valuable product.

Coach: Are you committing to presenting the demo to the sales team or to customers?

Client: I can't commit to showing it to customers. That is the decision of the sales team. I can commit to presenting the demo to our sales team.

A bit more OKRs coaching might lead to the following refined key result that (1) focuses on outcome, (2) distinguishes between a commitment and a stretch outcome, and (3) specifies what is being “demo'd” and who decides it is “developed.”

In this hypothetical coaching conversation, the draft key result, “Develop a demo,” becomes the commit level of progress. However, the stretch key result now reflects customer interest in the product. It is the number of customers interested in the product that reflects the needle the client is ultimately trying to move. Marissa would likely approve now that the key result has a number.

As an OKRs coach, you help your client translate milestone key results like “produce a demo of product X” into aspirational outcomes like “three customers sign an agreement for product X” that move a metric rather than simply represent completion of a task. Therefore, we might conclude that all key results should be metrics. However, while we recommend defining mostly metric key results, our clients often choose to define milestone key results as well.

Rather than declaring all milestone key results are bad, we invite you to consider the possibility that milestone key results can be used to reflect outcomes, not output. Consider the following two milestone key results one of our clients drafted: (1) Present requirements to obtain a permit to build houses in Portland to leadership team; and (2) Obtain a permit to begin new construction in Portland. The first milestone is a task that reflects work output. One person should be able to research required documentation for a permit and schedule a meeting with leadership. However, the second milestone is not a task; it is a potential key result that reflects a binary outcome. Ask questions to guide your client to move from task‐like milestones that reflect work output to key results that reflect outcomes.

6. Where Will We Draft, Publish, and Track OKRs?

When I got started as an OKRs coach, my clients and I included the word “DRAFT” in all capital letters at the top of each team's OKRs drafting template. As each team refined their OKRs, they did so in their own drafting template in Microsoft Word. Each team published their OKRs in a single spreadsheet only when they were final. Today, our clients still prefer to draft OKRs in one environment and publish their final OKRs in a single location, most often a spreadsheet. Many of our clients still use Microsoft Word (or Google Docs) for drafting and Microsoft Excel (or Google Sheets) for publishing.

As the initial wave of OKRs software applications gained traction in 2015, to our knowledge none of them supported a “draft mode.” In other words, once you typed your OKRs into the system, there they were. The CEO of one OKRs software solution suggested that drafting OKRs into the software right from the start would keep things lightweight and be great for collaboration. This CEO felt that adding a “draft mode” would just add more work and make the process too complex. In practice, we find that many of our clients are not comfortable entering their OKRs into a transparent software system until their OKRs are reviewed and approved. Even worse, if draft OKRs are entered into the software, there is no way to know which OKRs are final and which are still in draft mode. Keeping draft OKRs separate from the published set of OKRs resonates with all our clients.25

Be flexible when drafting OKRs. We advise providing your client with an OKRs drafting template.26 Most of our clients encourage their teams to use whatever environment feels most comfortable when drafting OKRs. However, all our clients require OKRs be published in the same format in a single location. Documenting all OKRs in one location helps drive visibility, accountability, and alignment. We refer to this single location as the “OKRs tracker.”27

Advise your client to create their own OKRs tracker prior to exploring dedicated OKRs software. You can help your client create and even populate their OKRs tracker in an hour or two. About once a month, an organization approaches us right after purchasing a dedicated OKRs application before even attempting to define their OKRs. Somehow, these organizations purchased software to help automate a process that they did not yet have in place. We advise completing at least one OKRs cycle with several pilot teams prior to shopping for OKRs software. Once your OKRs process is established, it often makes sense to explore software to automate and scale that process.

7. How Will OKRs Relate to Performance Reviews?

Be sure to involve your client's HR leadership and executive sponsor to align on how best to relate OKRs to performance reviews. While there is no one right answer to this question, let's agree on two wrong answers.

The first wrong answer is that OKRs are the official system for performance reviews and compensation. As OKRs are designed to reflect stretch thinking, it should be obvious that an OKRs program is doomed from the start if OKRs are the basis for determining incentive compensation and evaluation of staff.

To ensure that OKRs are not interpreted as the performance management system itself, advise your clients not to define OKRs at the individual level when getting started. Defining OKRs only at the company or team levels makes it clear that OKRs are distinct from individual performance evaluation. We advise caution when using HR software that tracks both performance review data and OKRs. Employees that access such integrated HR tools often report that managing OKRs and performance reviews in a single tool makes OKRs and performance reviews feel as if they are a single system. Our clients also struggle when introducing OKRs and a new performance review process concurrently, as this conflates the two systems.

The second wrong answer is that OKRs have absolutely nothing to do with performance reviews and compensation. An executive at a leading tech company was once asked what he wished he had done differently after launching a successful OKRs program. He answered, “I wish I didn't say that OKRs are decoupled from performance management. That's just what I thought I was supposed to say based on watching the Google Video on OKRs.” He went on to explain that OKRs and performance management should be distinct but related. So, the question becomes: What does “distinct but related” mean? We are right back to our original question: How should OKRs relate to performance reviews?

Begin by interviewing your client to learn about their existing performance management system if such a system is in place. Next, consider the following two principles that resonate with all organizations that take time to define how OKRs relate to performance reviews and incentive compensation:

- OKRs should be included in performance review discussions via structured questions to position managers as coaches.

- Scores on key results should not be used to calculate bonuses.

Principle 1: OKRs Should Be Included in Performance Review Discussions via Structured Questions to Position Managers as Coaches

Given that team‐level OKRs reflect a focus for improvement, it is only natural that individual performance reviews incorporate a structured discussion of their team's OKRs. We advise our clients to consider incorporating OKRs‐related questions into their performance review process. These questions are informed by why they have chosen to implement OKRs. In addition, managers may bring these questions into 1:1s with their direct reports.28 Here are sample questions our clients have adopted to address impact, focus, communication, and learning:

- Impact. Which key result/s do you feel you impacted?

- Focus. How did you use OKRs to focus on high‐priority work?

- Communication. How did you leverage OKRs to better communicate?

- Learning. Which key result did you learn from? How will you apply this learning going forward?

While some organizations include OKRs questions in their formal performance reviews, others distinguish between a performance review 1:1 and an OKRs review 1:1. One of our clients already had a quarterly performance review process in place. They happened to define a four‐month OKRs cycle time. This client stumbled into two unexpected benefits resulting from these distinct time frames: (1) it was easier to decouple OKRs from the performance review process; and (2) the number of structured 1:1 conversations increased from four to seven each year (four quarterly performance reviews plus three OKRs reviews).

Managers can certainly have more than seven such 1:1s over the course of the year, but these seven touch points are required and documented with HR. While there may be some overlap between OKRs and performance management, there is an agreement to address OKRs and performance management in separate meetings. This approach may resonate with your client if they are looking to create more engagement between managers and their direct reports.

Principle 2: Scores on Key Results Should Not Be Used to Calculate Incentive Compensation

Incentive compensation should be calculated independently of OKRs. Some key results may not connect to incentive compensation at all. Other key results may correlate with bonuses. For example, the value of a metric key result based on revenue is often used as part of a bonus calculation. That's fine. It is the value of the metric key result that determines compensation, not the score. The distinction is subtle, but important. OKRs should enable us to think big as we align on stretch goals. Linking key result scores to compensation creates incentives to set low targets and may reinforce silo thinking. Two examples are:

Example 1: Key result value (not score) tied to bonus

- Sales key result: Increase new sales from $1,000,000 to $2,000,000 this quarter (prescoring: commit = $800,000; target = $1,200,000)

- In this case, the sales team is paid a bonus based on revenue from new sales, so the actual value of this metric is directly correlated to the bonus. However, bonuses are not determined based on whether a score of commit, target, or stretch is achieved. The bonus is calculated based on the value of the actual metric itself.

Example 2: Key result not tied to bonus

- Human resources key result: Increase the number of phone screens for VP candidates from 40 last month to 80 this month (prescoring: commit = 40; target = 60)

- In this case, there were no bonuses calculated based on the number of candidates screened over the phone. This is still an important metric to the organization, but neither the scores on the key result nor the value of this metric determine bonus payout.
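The distinction in Example 1 between the value of a metric and the score on a key result can be made concrete with a small Python sketch. Everything here is hypothetical: the `PrescoredKeyResult` class, the `sales_bonus` function, and the 2% bonus rate are assumptions chosen only to illustrate that the bonus calculation never reads the commit, target, or stretch level.

```python
from dataclasses import dataclass


@dataclass
class PrescoredKeyResult:
    commit: float
    target: float
    stretch: float

    def level_reached(self, actual: float) -> str:
        """Score the key result by the highest predefined level reached."""
        if actual >= self.stretch:
            return "stretch"
        if actual >= self.target:
            return "target"
        if actual >= self.commit:
            return "commit"
        return "below commit"


def sales_bonus(new_sales_revenue: float, bonus_rate: float = 0.02) -> float:
    """Bonus depends only on the metric's actual value. The rate is a made-up example."""
    return new_sales_revenue * bonus_rate


# Levels from the sales key result in Example 1.
sales_kr = PrescoredKeyResult(commit=800_000, target=1_200_000, stretch=2_000_000)

for actual in (1_150_000, 1_250_000):
    level = sales_kr.level_reached(actual)
    print(f"${actual:,} in new sales scores '{level}'; bonus = ${sales_bonus(actual):,.0f}")
```

Because `sales_bonus` takes only revenue as input, the score could be redefined or set more aggressively without changing anyone's payout, which removes the incentive to set low targets.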

8. How Are OKRs Different from KPIs?

If your client is already using key performance indicators to evaluate performance or make calculations for incentive compensation, they must distinguish between OKRs and KPIs. However, many of our clients report KPIs, even though they do not have a formal performance review process in place. Such organizations tend to worry about how and if they can integrate OKRs with KPIs. They often worry that the two systems are either redundant or are somehow in conflict. Here is a high‐level overview followed by a detailed comparison of KPIs and OKRs.

High‐Level Overview: OKRs versus KPIs

Based on our experience with hundreds of organizations that use KPIs, we can tell you one thing about KPIs for certain: the term “KPI” does not have a standard definition. Some companies use KPI interchangeably with metric. That is, every metric that is reported is also referred to as a KPI. While some companies have just one KPI, others have thousands.29 Some organizations use KPIs as the basis for calculating incentive compensation for all staff while other organizations define KPIs to evaluate performance of the overall company and do not even offer incentive compensation.

Unlike KPIs, key results do have a standard definition. Key results answer the question, “How will we know we've made measurable progress on a specific objective by a certain date?”

Organizations with an established KPI system in place often ask if they should replace KPIs with OKRs. The fact that they are asking this question reflects a deep misunderstanding. Choosing between OKRs and KPIs is a false choice. OKRs and KPIs work in tandem. A given KPI is a key result if it is the focus for near‐term improvement. A KPI is classified as a health metric if it is important to monitor but is not the focus for near‐term improvement. Metric key results are typically based on underlying KPIs.

To illustrate how OKRs and KPIs work together, consider a company with the objective: “Achieve financial targets” and the following three key results:

- Double **company revenue** from $5M in Q1 to $10M in Q2.

- Increase **gross profit margin** from 20% in Q1 to 25% in Q2.

- Increase **recurring revenue from existing install base** from $400k in Q1 to $600k in Q2.

Each of these metric key results has the KPI in bold built in. In fact, metric key results equate to moving a given KPI from X to Y within a set time frame.

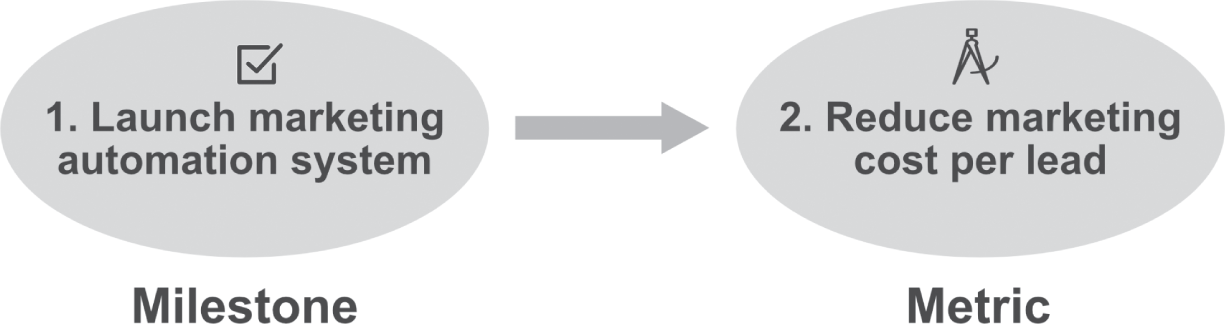

At the team level, milestone key results are more prevalent. Milestone key results do not translate directly to KPIs. Differentiating between metric and milestone key results helps clarify how OKRs and KPIs work together. Suppose a marketing department has the following OKR:

Notice that marketing cost per lead, in bold, is a KPI at the marketing team level. However, “sending our first set of nurture emails” is unlikely to be classified as a KPI; this statement is classified as a milestone key result. Although a milestone key result is not defined as movement of a numerical KPI from X to Y, milestone key results should be designed to impact a KPI in the future. For example, achieving the milestone of launching a marketing automation system may not impact marketing cost per lead in the current period. However, it is expected to have an impact on a KPI in the future, as illustrated in Figure 3.4.

FIGURE 3.4 A milestone key result designed to impact a KPI in the future

Detailed Analysis of KPIs versus OKRs

The original question of how OKRs differ from KPIs is better framed as: “How do key results differ from key performance indicators?” Objectives are qualitative statements, and as such, should not be confused with quantitative KPIs. To distinguish between KPIs and key results, some of our clients like to include a chart like the one shown in Figure 3.5 in their OKRs training materials.

FIGURE 3.5 Distinguishing between key results and KPIs

Defined in Context of an Objective?

The most obvious difference between key results and KPIs is the fact that key results are defined in the context of an objective. The objectives that contain key results should include a brief analysis of why the objective is so important now. While KPIs are sometimes defined in context of higher‐level goals, they often lack context and appear as a list of metrics to quantify the performance of some part of the organization.

Achievement Tied to Compensation?

While KPIs are often designed specifically to determine incentive compensation structures, OKRs are not. OKRs should be decoupled from compensation. While scores on OKRs should not be used as the basis for determining incentive compensation, OKRs are often incorporated, qualitatively, as part of the performance review process. The most critical reason for separating OKRs from incentive compensation goes back to Andy Grove's original intent that OKRs should be stretch goals.

Visible to All Employees?

Management by Objectives (MBOs), Andy Grove's inspiration for creating the OKRs model, is often used as a system for determining incentive compensation. Thus, MBOs are often kept secret between manager and employee. Similarly, KPIs are often tied to compensation and may therefore be kept private between manager and employee.

Andy Grove wanted to take the MBO model to the next level. Grove insisted that OKRs be made visible across the entire organization. The fact that everyone can see a set of well‐defined goals in a standard format sets the stage for alignment. With OKRs, the question is not whether to make them visible. The question is to what extent.30

Most coaches advise their clients to make OKRs visible across the entire organization. However, some of our clients begin their OKRs programs by giving access to OKRs only to certain groups of employees, for example, director and above.31 Given that nearly all organizations decide to make OKRs visible to all staff by default, we do not consider this a universal deployment parameter. Nonetheless, as some organizations choose to specify restrictions, we've added visibility to the list of “other deployment parameters” at the end of this chapter.

Focused on Maintenance Work and Health Metrics?

Many organizations classify every metric they measure as a KPI. However, the fact that you are measuring something does not make it a key result. If we think of KPIs as the set of metrics a company measures, then a KPI is either a key result or a health metric.

A given KPI is classified as a key result if the company decides to focus on improving the value of the KPI in the near term. If a KPI is not the focus for near‐term improvement, it is classified as a health metric. Health metrics are already within an acceptable range. For example, while website uptime may be a KPI, if the website is currently at 99.99% uptime, it is unlikely to be a key result that is a focus for near‐term improvement. Does it really make sense to define a key result to drive a team to “improve website uptime from 99.99% to 99.999%”?
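The classification rule above can be expressed as a small Python sketch. The `Kpi` class, its field names, and the acceptable minimum values are assumptions made for this illustration; note that whether a KPI is a near-term focus is a decision made by the team, so the sketch takes it as an input rather than deriving it from data.

```python
from dataclasses import dataclass


@dataclass
class Kpi:
    name: str
    current_value: float
    acceptable_minimum: float          # hypothetical lower bound of the acceptable range
    near_term_improvement_focus: bool  # decided by the team, not computed


def classify(kpi: Kpi) -> str:
    """A KPI is a key result only if it is the focus for near-term improvement."""
    return "key result" if kpi.near_term_improvement_focus else "health metric"


def health_metric_out_of_range(kpi: Kpi) -> bool:
    """True when a health metric has slipped below its acceptable range."""
    return classify(kpi) == "health metric" and kpi.current_value < kpi.acceptable_minimum


uptime = Kpi("Website uptime (%)", 99.99, acceptable_minimum=99.9,
             near_term_improvement_focus=False)
margin = Kpi("Gross profit margin (%)", 20.0, acceptable_minimum=15.0,
             near_term_improvement_focus=True)

for kpi in (uptime, margin):
    print(f"{kpi.name}: {classify(kpi)}")
```

A health metric that falls out of its acceptable range is a natural candidate to become a key result in a later cycle, which is why the sketch includes a separate range check.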

Intended to Increase Alignment across Teams?

KPIs are often created to measure a given team's performance. Team members care a lot about their own team's KPIs. However, they are often uninterested in the extent to which other teams achieve their KPIs. In fact, a team member might even get a feeling of superiority when their team achieves its KPIs and other teams do not. In contrast, the OKRs framework seeks to get employees working together to make measurable progress. OKRs are often defined and shared across functional teams. As noted in the first deployment parameter, defining OKRs may even lead to the formation of cross‐functional squads.

Informs Near‐Term Focus and Prioritization?

KPIs do not require a specific time frame. They are simply metrics such as “revenue,” “room nights booked,” and “net promoter score.” KPIs may or may not have target values within a set time frame. As KPIs include health metrics, lists of KPIs can become massive. KPIs are often intended to be reported in dashboards rather than to communicate the near‐term priorities.

Conversely, key results articulate how an organization will measure progress of an objective within a set time frame. While KPIs tend to stick around year after year, most key results are modified or removed as part of the reflect and reset step in the OKRs cycle.

Controllable by a Single Team?

Given that KPIs are often used to evaluate a team's performance, it is only natural that KPIs tend to be totally controllable by a single team. However, key results are often written as amazing outcomes. Amazing outcomes tend to depend on external factors that may not be totally controllable by a single team. And that's fine. Both the Measure What Matters and the Stretch Target Commit scoring systems define “commit” key results that should be mostly controllable by the team. Nonetheless, all scoring systems, including Radical Focus, advocate setting key results that stretch teams out of their comfort zone rather than what they feel is completely within their control.

Originates from Team Members?

As KPIs are often used to measure performance and even determine bonuses, they tend to come from the top. While most objectives come from the top, most key results originate from team members rather than the boss. To clarify, some key results will (and should) come from top‐level leadership. However, the process of creating key results involves a dialog between team members and leadership. This leads us nicely into our last two universal parameters where we explore how to align OKRs and ensure that most key results originate bottom‐up.

9. How Will We Ensure OKRs Are Aligned?

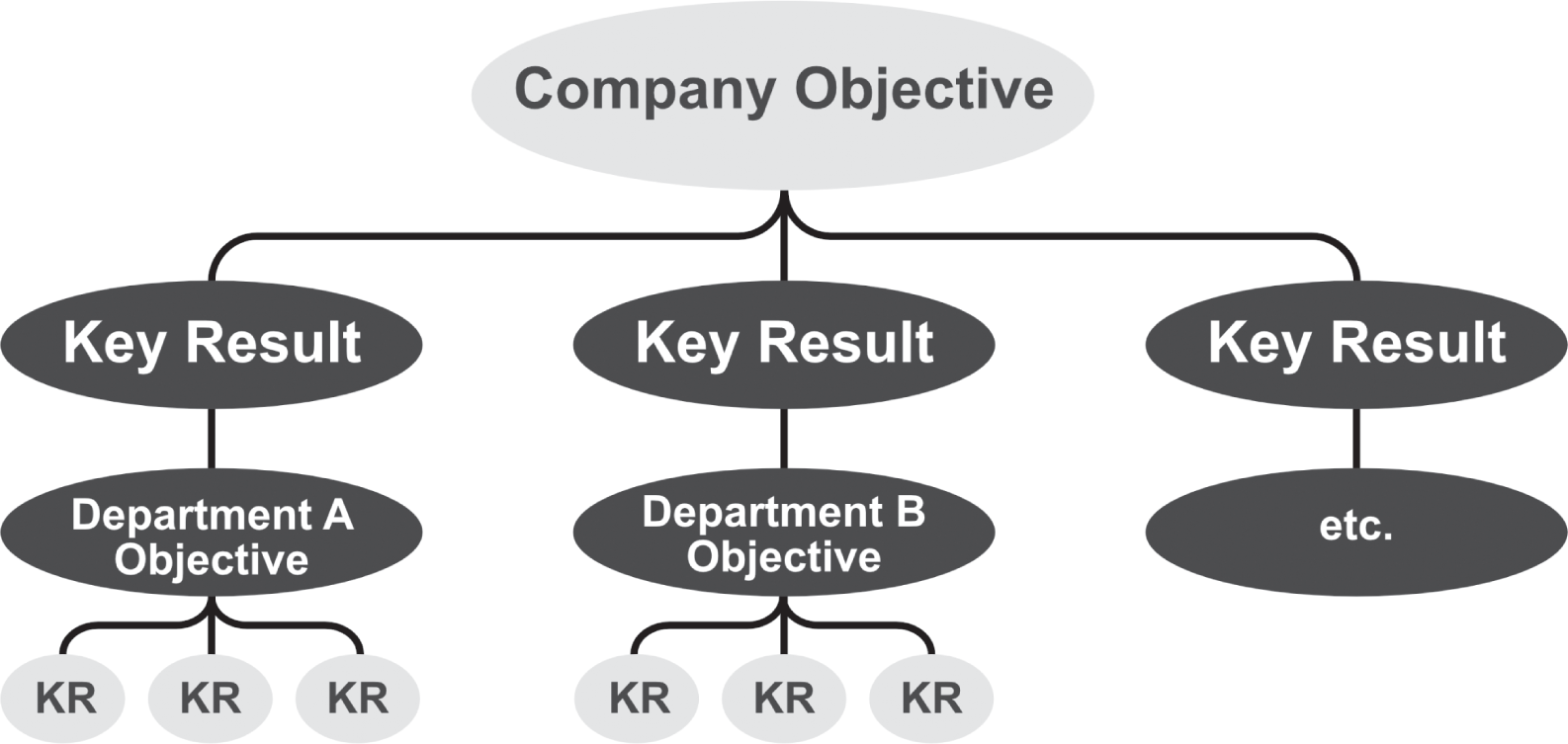

Before exploring how to help your client ensure that their OKRs are aligned, let's look at the wrong approach, the direct cascade. This approach begins with OKRs at the highest level, which is a good thing. However, in a direct cascade, lower‐level OKRs are required to be subsets of higher‐level OKRs. John Doerr provided the OKRs community with a great example of a direct cascade with his hypothetical football team example. This example illustrates how higher‐level key results become the objectives for teams at lower levels.32

We have seen several OKRs software vendors demonstrate how OKRs cascade to illustrate the simplicity of aligning OKRs at every level in an organization. We have even seen presentations of the direct cascade that refer to higher‐level key results as “parents of lower‐level child objectives.” While it is appealing in theory, the practice of cascading OKRs is rarely, if ever, a viable approach for aligning OKRs.

The direct cascade approach violates two foundational elements of OKRs. First, it implies that a higher‐level key result becomes the objective for a lower‐level department or team as shown in Figure 3.6. This violates the fundamental distinction between an objective and a key result. An objective is broad and qualitative; a key result is measurable and quantitative. Objectives are measured by the achievement of their underlying key results; however, key results should not be measured by achievement of an underlying objective.

The direct cascade approach also violates a second foundational element, as it inherently does not engage lower‐level teams. Lower‐level teams begin their drafting sessions by copying and pasting OKRs from higher levels. They are forced to choose from a list of predefined options rather than creating and aligning on their team's objective through critical thinking.

A direct cascade might work for an organization seeking to use OKRs to reinforce a hierarchical culture. The direct cascade provides a visual chart that illustrates how OKRs are connected from the top down. In theory, this approach holds lower‐level workers accountable for impacting higher‐level goals and enables all teams to see how they contribute to top‐level goals. However, every organization that we have seen attempt to implement the direct cascade approach has failed. Even worse, we often find cascading OKRs reduces cross‐functional alignment. If each top‐level key result is designed to be owned by a single team, then each team simply focuses on their assignments and returns to their silos. So, if the direct cascade doesn't work, what does?

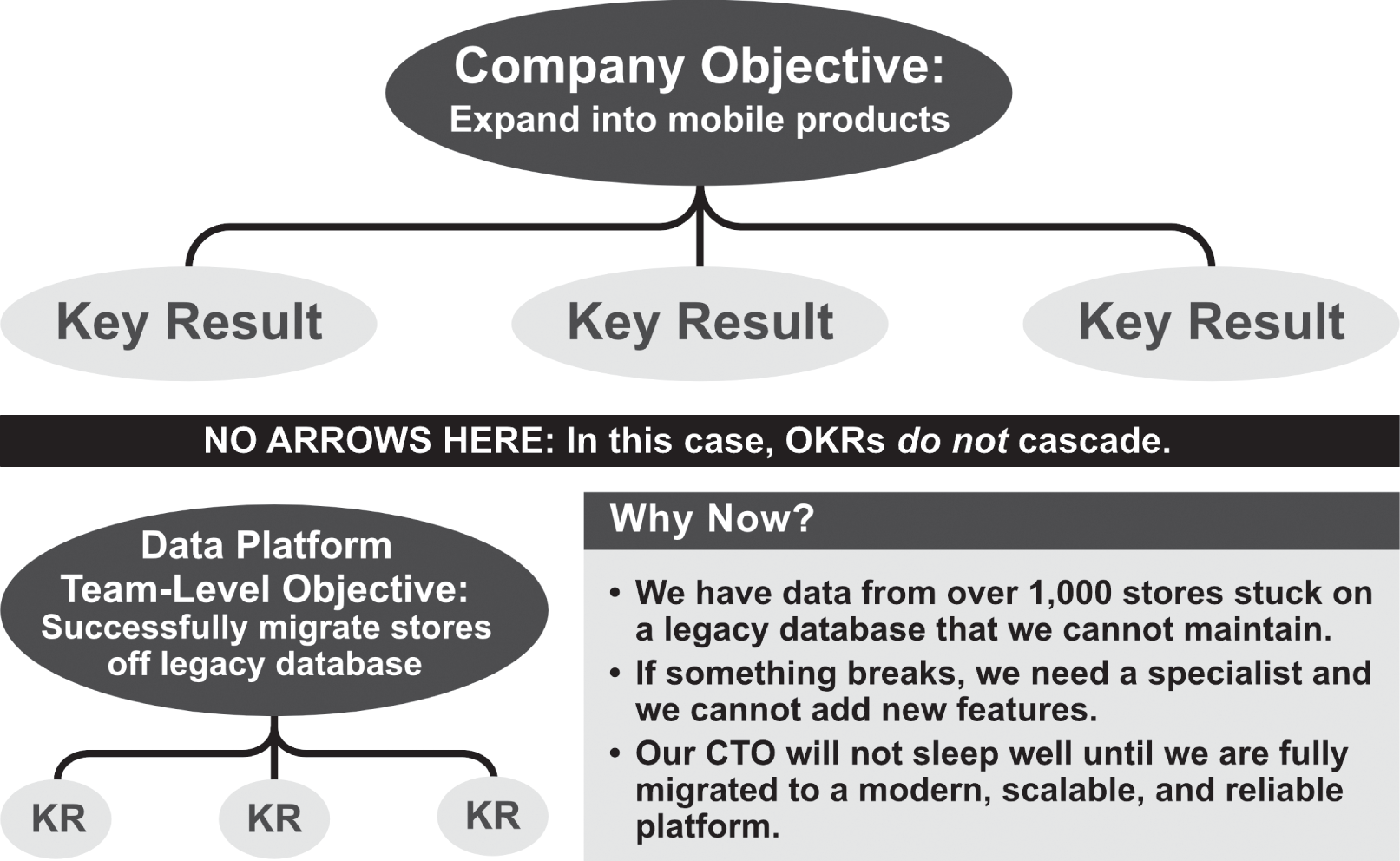

FIGURE 3.6 The direct cascade approach in which parent key results have objectives as their children

We recommend that each team document how their objectives are aligned to top‐level strategy (and/or horizontally aligned with other teams) as part of their analysis of why their team's objectives are important now. Team leads and executives have conversations to confirm objectives are aligned. Figure 3.7 illustrates how a data platform team confirmed that their team's OKR was aligned even though it did not connect to a higher‐level OKR. In this case, it was the conversation, not a set of arrows, that confirmed alignment. We chose this example because a direct cascade could not have produced this alignment: the team's objective had no higher‐level key result to descend from.

The data platform team did not create their objective by copying and pasting a higher‐level key result. Instead, they leveraged the OKRs critical thinking framework and agreed as a team that migrating stores off a legacy database was the most important area in which to make measurable progress in the near term. Next, the data platform team lead discussed the proposed objective with the CEO and explained why it was such an important objective right now. Alignment happened the moment the CEO agreed with the data platform lead. Alignment did not require a set of arrows showing how the OKR cascaded from a higher‐level OKR. The data platform team's objective was still valid even though it did not relate to the company's objective to expand into mobile products.

FIGURE 3.7 Using “Why now?” to align OKRs instead of a direct cascade

This section concludes with the three‐step process used at Huawei to ensure OKRs are aligned. Huawei emphasizes OKRs discussions to align on OKRs rather than a direct cascade. We find Huawei's approach insightful, as OKRs are set for teams and individuals.

While we generally do not advise setting OKRs at the individual level, Huawei has found a way to make this work. Huawei embraces the fact that higher‐level OKRs do not attempt to capture all work at lower levels. They expand the definition of OKRs at the individual level to capture work that does not connect to higher‐level OKRs. Individual contributors prioritize their work and are validated even if they do not contribute to higher‐level OKRs.

10. How Do We Ensure Team Members Define Most Key Results “Bottom‐Up”?

Everyone we know in the OKRs community agrees that most key results should include input from team members rather than be mandated by the boss. When OKRs are working well, team members often feel like their ideas help shape goals. Taking a more bottom‐up approach is essential to creating engagement in the OKRs development process as well as driving alignment. Some organizations take an extreme top‐down or bottom‐up approach.

We met one CEO who wrote down OKRs for each team across the entire company, presented these OKRs, and then asked, “Any questions?” In this extreme top‐down approach to OKRs, the CEO simply developed OKRs in isolation and issued a command. Team members felt excluded from the OKRs drafting process.

On the other extreme, we encountered a CEO who took a completely bottom‐up approach. We facilitated a workshop with all 150 employees at this company and took several hours drafting company‐level OKRs. While this approach engaged everyone, we do not generally recommend this approach, as it is not efficient to develop top‐level OKRs with such a massive group. So, how can your client find their ideal balance between these two extremes? Here is what we find works best in practice.

Team leads often create objectives and present them to their team as context at the start of an OKRs drafting workshop. The team lead often solicits input on the objective from higher‐level executives prior to the drafting workshop to confirm alignment. Then, with the essence of the objective in place, team members draft most of the key results. Some leaders choose to remain in the room but let the team members drive the process. Other leaders choose to introduce objectives, align on why each objective is important, then leave the room to allow team members to think freely about key results. In this approach, the team leader returns to the room a couple hours later, or the next day, to review and refine OKRs with team members.

Some organizations choose a purely top‐down approach to defining OKRs just for their first cycle. They intentionally emphasize the top‐down approach, so that leadership gets comfortable with how OKRs work before scaling the program. They then engage team members in the second cycle. This approach works because the first cycle introduces team members to OKRs in the context of their actual OKRs. Not surprisingly, these team members provide feedback that their first cycle failed because it was too top‐down. However, these team members are then quite engaged and happy to help shape their team's OKRs for the next cycle. By the end of the second or third cycle, these teams use OKRs as a framework for aligning on where to focus to make measurable improvement.

Other Deployment Parameters

We just analyzed 10 universal deployment parameters in detail. We advise you to take time to address all 10 in every engagement as part of Phase 1. While these universal parameters may be sufficient for some organizations, take a step back before moving on to Phase 2. Go over the deployment plan with your OKRs project lead and executive sponsor. Ask your client if they are ready to announce their deployment plan.33 Remind your client that organizations often define additional parameters to reflect their unique culture and approach to OKRs. It might help to provide a few examples to your client to get them thinking. Some other parameters are:

- How can we ensure our key results balance quantity with quality?

- How do we balance leading and lagging indicators?

- At what level in the organization will OKRs be made visible?

- How will we deal with dependencies on India?

- Shall we specify where we are choosing to not allocate resources?34

NOTES

- 1. The football example is detailed later in this chapter. It is misleading because OKRs do not directly cascade.

- 2. One of our clients was concerned with resource allocation between India and the US, so they decided to add a region parameter. All their key results were tagged as “India” or “USA.” Clearly this is not a universal parameter.

- 3. Ideally, each individual employee should be able to articulate why leadership wants to implement OKRs and how they can benefit from OKRs as an individual. To this end, we are seeing more and more organizations add questions about how (and to what extent) the use of OKRs is beneficial as part of their employee engagement surveys.

- 4. Marty Cagan, Inspired (John Wiley and Sons, 2017), p. 144.

- 5. We have seen several sets of individual‐level OKRs used at Google. One memorable key result reflected a personal goal to “buy a 3‐bedroom house in Mountain View.” Should OKRs be a place to capture personal goals for all staff? We think not.

- 6. For more on the pros and cons (mostly cons) of setting individual OKRs, see Paul Niven and Ben Lamorte, Objectives and Key Results: Driving Focus, Alignment, and Engagement with OKRs (John Wiley and Sons, 2016), pp. 101–102.

- 7. Refer to the ninth deployment parameter in this chapter to see how individuals at Huawei align their OKRs to higher‐level OKRs and health metrics.

- 8. One of our clients required every team to define exactly three objectives with exactly three key results per objective. The OKRs project leads quickly implemented our advice to make this less restrictive. They modified their guidance to “at most three objectives with a total of at most nine key results per team.”

- 9. Some of our clients ask each team to estimate the percentage of their effort that will be allocated to OKRs versus other work.

- 10. Christina Wodtke is the most prominent OKRs expert recommending a single OKR.

- 11. To see “Why now?” for these three internal objectives, refer to step 3 of the seven steps for creating OKRs in Chapter 5. To see “Why now?” for the three external objectives, refer to the sample email in Chapter 4.

- 12. We say this is possible! Alignment is based on a conversation rather than a mathematical or logical relationship. Refer to Figure 3.7 for an example.

- 13. We often hear from organizations that scoring objectives creates more problems than it solves. At best, the process is a waste of time. Some organizations build charts based on objective scores to stack rank team performance. These ranking charts defeat the purpose of OKRs, penalizing teams for aiming high and forcing teams to defend low scores rather than emphasizing learning. If you or anyone you know can report a positive impact from scoring objectives, please email me.

- 14. My scoring system was originally called “aspirational with prescoring.” We changed the name to “stretch target commit” in 2021 based on client feedback. For more on scoring, see this YouTube interview: https://youtu.be/xMRlI6cJwQg.

- 15. Source: Private discussions with Jeff Walker, who referred to “set the bar high and overachieve” as the cultural approach to OKRs while he served as CFO of Oracle in the late 1980s.

- 16. Note Google's use of the word grade. This word is often used interchangeably with score. We use “score” because “grade” emphasizes evaluation rather than learning. We believe OKRs should focus on learning more than evaluation.

- 17. This story is taken from John Doerr's presentation at the 2015 Goal Summit in San Francisco. Whether the story is true or not does not matter; it's the spirit of the story that is relevant.

- 18. Here's what Christina Wodtke has to say on this topic: “If you set a confidence of five out of ten, has that moved up or down? Have a discussion about why.” Christina Wodtke, Radical Focus: Achieving Your Most Important Goals with Objectives and Key Results (Boxes and Arrows, 2017).

- 19. The Measure What Matters approach often equates a key result's numerical score to a color of red, yellow, or green. The color does not capture data about how the key result champion is feeling about the key result beyond the numbers. I was lucky enough to work with Joel Trammell and the team at Khorus, who were early promoters of a two‐dimensional scoring system that he referred to as “likelihood and quality.”

- 20. Instead of writing each key result as a stretch outcome, some of our clients prefer to write each key result as a target level. They then specify the commit and stretch levels of progress in parentheses. Take whichever approach works best for your client; just be sure to be consistent.

- 21. Our clients find it best to stick to a single cycle time for the first cycle to optimize learning. You may even want to require everyone to stay on the same cycle time for a second cycle. However, if a given team wants to deviate from the default cycle time, we recommend having that conversation instead of simply requiring all teams to stick to a single cycle time.

- 22. Continuing with this logic, individual‐level OKRs, if they are part of an organization's OKRs program, tend to be defined for extremely short time frames. In fact, Mulyadi Oey, an OKRs Coach Network founding member based in Indonesia, finds that individuals often benefit by defining super‐short OKRs cycles of a week or two.

- 23. For more on baseline key results, refer to the first coaching excerpt in Chapter 1 and the NPS story in the Epilogue.

- 24. In reviewing John Doerr's book, Felipe Castro, an OKRs expert and good friend of mine, notes: “Out of the 60 Key Results listed, 32 (53%) lack numbers. They include things such as ‘Create a retirement plan for all legacy technology,’ and ‘Focus on hiring player managers/leaders.’ Even John Doerr's own OKRs from his days at Intel lack numbers (e.g., ‘Develop a Demo’).”

- 25. Good news: We confirmed in 2020 that at least one OKRs software tool now offers draft mode.

- 26. Refer to the Epilogue for a sample OKRs drafting handout.

- 27. Refer to Chapter 5 for more on the OKRs tracker and how to use it with your client throughout the OKRs cycle.

- 28. For additional questions that managers can ask their direct reports, we advise you to review Resource 3, “All Talk: Performance Conversations,” on page 269 of Measure What Matters by John Doerr.

- 29. One of our clients had the single KPI, “room nights booked.” Everyone in the company knew the goal was to get more room nights booked. They monitored thousands of metrics, but they chose to define only one metric as a KPI. We call this example out because it is not the norm. Most of our clients have dozens, even hundreds, of KPIs.

- 30. In a 2015 interview in San Francisco, John Doerr shared an example in which OKRs were posted above the urinals in the men's bathroom! That may be a bit extreme, but it's one way to make OKRs visible.

- 31. While we've not conducted a formal study, anecdotal data indicates that limiting the ability to view OKRs to certain management levels is a more common practice in Europe than in the United States.

- 32. The football example that John Doerr used many years ago to explain to Google how OKRs cascade also appears on page 81 of his 2018 book, Measure What Matters. In 2015, Doerr clarified that “OKRs need not be hierarchically coupled.” However, the football example reinforces the myth that OKRs are “tightly coupled.”

- 33. Offer to help your OKRs project lead develop an OKRs FAQs document. A sample OKRs FAQs template is available to OKRs Coach Network members.

- 34. John Doerr told a story of how the CEO of Lotus – recall Lotus 1‐2‐3 – adopted OKRs and created “NOKRs.” The “N” stands for “Not.” Lotus used NOKRs to specify what they would not do: “Lotus should not do hardware.” Some of our clients adopt the “NOKR” concept to help communicate what they are choosing to not focus on.