5

Incubate: How Corporate Explorers Learn Through Experimentation

In 2013, Balaji Bondili was a consultant for Deloitte Consulting in New York.1 He had built a successful career helping firms in the medical and life sciences industries. However, he was tired of the consulting grind. Each project looked much like the last. He wanted to build something new, even if it meant having to leave the firm. When a new Office for Innovation opened, Balaji saw it as an opportunity to build something from within, to become a Corporate Explorer. He made it his mission to figure out how Deloitte could access a wider pool of expertise in digital technologies, such as machine learning and artificial intelligence. The reality was that neither Deloitte nor its large, established client firms were first choice for digital natives and data scientists. The market for this talent was as hot then as it is now. Those with the best skills wanted to work for young, bold, and cool firms or shop their talent to the highest bidder on a project-to-project basis. Signing up to a corporate career with a multiyear progression to partner was not attracting the right candidates to Deloitte. Even with aggressive large-scale hiring, they had a difficult time keeping up with demand.

Bondili proposed giving Deloitte access to high-value, hard-to-hire expertise via a crowd-sourced platform. Crowdsourcing had emerged as a means for engaging experts remotely, without employing them directly. Experts are recruited to the crowd and then posed carefully framed challenges to solve in return for financial incentives. Bondili believed that setting up a Deloitte crowd could open an entirely new channel to the skills that the firm needed. He called his new venture Deloitte Pixel. The name reflected Bondili's belief that it could break big-picture problems into individual components, or pixels, that the experts in the crowd could solve. He anticipated Deloitte being able to pixelate almost any complex client project. It would be a special service that Deloitte's existing consulting teams could call on to supplement their work. Teams would have access to experts who could bring a wide breadth of expertise to solve problems. The network increased the capabilities available to Deloitte Consulting by giving them access to hundreds if not thousands of experts in the crowd. There was no long-term commitment, and it could adapt supply to match demand, thereby helping to manage the firm's profitability.

Bondili was now a Corporate Explorer pursuing the second of the innovation disciplines: incubation. This is a great moment – the starting gun has been fired and there is a clear license to act and make the concept a tangible reality. There is also a tremendous pressure to perform and deliver a business outcome that the organization is familiar with and rewards executives for achieving. However, the Corporate Explorer is not yet ready to deliver a tangible outcome. There is still too much to learn. Which of Deloitte's customers will be most likely to buy Pixel? Can Pixel deliver tangible results? What are the benefits of Pixel versus a regular Deloitte consulting project? Answering these sorts of questions is the work of incubation – many small experiments to learn what it will take to succeed.

These experiments help Corporate Explorers manage the uncertainty inherent in any new venture. Startups achieve this through sheer volume. As noted in Chapter 2, for every successful entrepreneur there are nine that fail. This is an evolutionary process that weeds out the bad ideas from the good, the ones that could not build a viable business from those that could. However, in the past decade, methodologies such as lean startup, proposed by Steve Blank and further developed by Eric Ries, have transformed entrepreneurship from a mysterious art form into a disciplined method.2 This is part of what has helped corporations become more successful at launching new ventures.

It is fashionable to talk about this process of experimentation as being based on a “fail fast” culture. However, the goal is not failure; it is rapid learning. When you learn, you can make faster, more informed decisions. This learning approach can be hard to execute in companies obsessed with operational performance. Eager executives with resources to commit get restless. They worry that waiting for more evidence just risks letting the competition in and missing the market window. Managers are expected to know the solutions to any problems, not to say, “I have two hypotheses that I am testing!” Unfortunately, without a way to manage uncertainty, corporations get forced to one of two extremes. Either they conclude that they have something great coming out of ideation and invest ahead of learning, without really understanding what it will take to make a new venture succeed, or they are frozen by the uncertainty surrounding a new venture and fail to commit for fear of losing. Corporate Explorers manage uncertainty through experimentation, helping to reduce or retire risk before the need for large expenditure. It can be painstaking work.

Business Experiments

Bondili, like all Corporate Explorers, believed in his new venture. He had ideas about how to make Pixel work technically, using a range of third-party crowdsourcing vendors. He had a plan to get it to market through Deloitte client teams and the partners that owned customer relationships. He was also confident that customers would see advantages in Pixel and be willing to spend money on it. Even so, this was an unproven assumption.

Business experimentation has its roots in the scientific method. In the seventeenth century, science had yet to emerge as a discipline that could explain how the world works. Scientific pioneers were called “natural philosophers” and tended to either be wealthy, landed men and women with time to spare, or instrument makers who turned out to have more ability than the customers they served. A key moment in the history of science was when chemist Robert Boyle wrote down his experiments to allow others to replicate his results. This gave scientists a way to posit a hypothesis, demonstrate how they had tested it, and describe the outcome. It was one of the sparks that converted “natural philosophers” into scientists, so inspiring the centuries of scientific progress that followed. Just as Boyle wrote down the steps in an experiment, a Corporate Explorer needs to record the hypotheses they plan to test. Boyle's innovation helped to eliminate variability from science; in the same way, recording assumptions helps to improve the rigor of a business experiment.

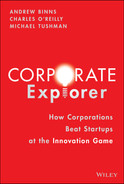

Figure 5.1 Experiment cycles.

Incubation takes the outputs from ideation – the customer's most important problems and how to solve them – and uses multiple cycles of experimentation to test each element of the business. These experimentation cycles follow a simple repeating pattern like that shown in Figure 5.1. It goes like this:

- Hypothesis – stating our assumptions about what will make this business succeed

- Test – exposing those assumptions to live customers in as close to real conditions as possible

- Learn – extracting the important insights from the test

- Iterate – designing a new test to find out if you are getting closer to the answer

- Decide – concluding, after multiple cycles, whether to invest, kill, or pivot (e.g., going back to ideate a different solution to the problem or revising the business concept)

Balaji Bondili did not slavishly follow these learning cycles. Few Corporate Explorers are so deliberate and methodical. However, he did follow the same logic and principles, as did Kevin Carlin and Krisztian Kurtisz. Bondili asked himself and his team: “What needs to be true for Pixel to be successful?” The answer to this question is an assumption or hypothesis about what will make the business successful. (We take the terms assumption and hypothesis to mean essentially the same thing and will use them interchangeably.)

What Needs to Be True? (Hypothesis)

Corporate Explorers should strive to have explicit, comprehensive, and testable hypotheses about what it will take to make a business idea succeed.

Writing down your hypotheses makes them explicit. You put a stake in the ground about what you believe. You are aware of your biases and willing to subject them to empirical verification. If a Corporate Explorer is new to this practice, then they often struggle with creating clear, testable, and refutable hypotheses. Some object: “How can we make such definitive declarations, when we do not know what the answer is?” Although logical, what they are really saying is this: “It is not safe for me to be that explicit because I could be wrong.” Corporate cultures tend to favor safety over risk. A vague high-level hypothesis is much less likely to be disproved. You cannot be wrong if you never say what you believe. It is understandable to want to lower the risk of being wrong in cultures where confidence and certainty are rewarded. However, this is self-defeating as it limits your opportunity to learn. In an experiment, you should expect to be wrong often, not because you want to fail but because you want to rule out other possibilities. That way you can learn what works and what does not.

A Corporate Explorer needs to have hypotheses about the key issues that will contribute to the venture's commercial viability. There are many popular business-model frameworks that describe the elements of a business model for new ventures. These do a good job of creating a checklist for the Corporate Explorer. Some are focused on fit between customer expectations and the solution; others organize the key questions around desirability, feasibility, and viability.3 Our work on IBM's Emerging Business Opportunities taught us that there are six “business design” questions that a Corporate Explorer should address. If you have at least one hypothesis for all six, then you have been comprehensive in articulating your assumptions for the business. The answers to the first two business design questions come from work already done in ideation:

- Customer selection: Who will buy and use Pixel?

- High-value customer problem: Does Pixel address a problem customers care about?

The next four questions are the primary focus for Incubation:

- Value proposition: Does Pixel solve the customer's problem in a way that they find compelling?

- Value capture: Will Pixel generate enough value for Deloitte to make it worth doing?

- Ecosystem adoption: Will it be easy for customers to adopt and use Pixel?

- Strategic control: What level of sustainable competitive advantage or differentiation can the business achieve?

Deloitte Pixel's Business Design circa 2015 would have looked something like what is shown in Table 5.1.

Table 5.1 Business design.

| Business design question | Deloitte Pixel hypothesis |
|---|---|
| Customer | Deloitte clients of a [defined size] work on digital transformation strategies in [targeted industries]. |
| High-value customer problem | Clients (and Deloitte) cannot implement strategies for digital transformation because they are constrained in their access to key sources of technical talent. |
| Value proposition | Pixel will be valuable to Deloitte clients in helping them solve complex problems. |
| Value capture | Pixel will be additive to Deloitte's services and not dilute existing project fees. |
| Ecosystem adoption | Deloitte teams will adopt Pixel as a part of existing projects and enable us to reach x% of the firm's clients in three years. |
| Strategic control | The depth of Deloitte's client relationships will prevent commoditization of the offering by low-cost providers. |

A good hypothesis has a clear, testable point of view. That means you can disagree with the statement and generate data to prove or disprove it. Look at the value proposition hypothesis shown in Table 5.1. It is not testable to say, “Pixel will be valuable to Deloitte clients in helping them solve complex problems.” It is just a generalized statement of goodness, not a testable proposition. A better hypothesis could be:

- Hypothesis 2: Pixel will deliver results to clients on digital transformation projects faster and more cheaply than existing approaches offered by Deloitte.

This has a clearer point of view about the value to be delivered. It tells us that Pixel plans to be cheaper and faster, so that gives us something to measure. However, it does not say what outcome Pixel is targeting, so it is not fully measurable. Adding a metric to describe the anticipated outcome – faster and cheaper – could mean:

- Hypothesis 3: Deloitte clients will pay for Pixel to help solve complex problems if it generates results faster (cuts time to answer by 50%) and more cheaply (reduces fees by 30%) than a traditional Deloitte project.

This is a measurable and specific hypothesis; something that can be tested. At the end of the experiment, Bondili could find out how close he came to his expectations and so decide if the hypothesis is proven or refuted. You are most likely looking to create an outcome that affects the quality of what customers experience (e.g., higher reliability), the quantity or availability of a product (e.g., frequency or accessibility of a service), the timing (e.g., increasing speed of getting to an outcome), or cost (e.g., it's cheaper!).
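One way to keep a hypothesis like Hypothesis 3 honest is to record each metric with its baseline and target before the experiment runs, then compare the observed result against it. The following is a minimal sketch of that idea, not a method described in the Pixel case; the `Metric` class, the `evaluate` helper, and every baseline and observed number are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Metric:
    """One measurable outcome in a hypothesis (all values here are illustrative)."""
    name: str
    baseline: float          # value for the traditional approach
    target_reduction: float  # hypothesized reduction, e.g. 0.50 for a 50% cut

    def reduction(self, observed: float) -> float:
        # Fraction by which the observed value improves on the baseline.
        return (self.baseline - observed) / self.baseline

    def is_met(self, observed: float) -> bool:
        return self.reduction(observed) >= self.target_reduction


def evaluate(metrics, observations):
    """Return, per metric, whether the observed result met the stated target."""
    return {m.name: m.is_met(observations[m.name]) for m in metrics}


# Hypothesis 3 written down as two explicit targets.
# The baselines (20 weeks, $100,000) are made-up numbers for this sketch.
hypothesis_3 = [
    Metric("weeks_to_answer", baseline=20.0, target_reduction=0.50),
    Metric("fees_usd", baseline=100_000.0, target_reduction=0.30),
]

# Made-up results from one experiment.
observed = {"weeks_to_answer": 9.0, "fees_usd": 80_000.0}

result = evaluate(hypothesis_3, observed)
print(result)
```

In this invented run the time target is met and the fee target is not, which is the kind of partial refutation that a vague hypothesis would never surface.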

Run Experiments (Test)

Important though it is to create a comprehensive list of testable hypotheses, testing them all at once is expensive and time consuming. It is better to narrow in on the most critical hypotheses for the initial tests. Bondili started by proving that Pixel could generate results using crowd-sourced talent that were consistently as good as or superior to those of in-house teams. He and his team asked supportive colleagues if they could take recent client projects and rerun the problem solving using open talent. These tests generated useful outcomes quickly; however, they simply validated something that was already happening outside of Deloitte. Crowdsourcing solutions were already available and large corporate firms were willing to pay to use them. He relied on many other assumptions for which he had no data. For example, could Pixel deliver results faster and cheaper than a traditional team; could Pixel earn fees without undermining Deloitte's revenue from consulting; and could Deloitte's partners be sales channels to reach the firm's existing client base? Which of these assumptions should Bondili test first?

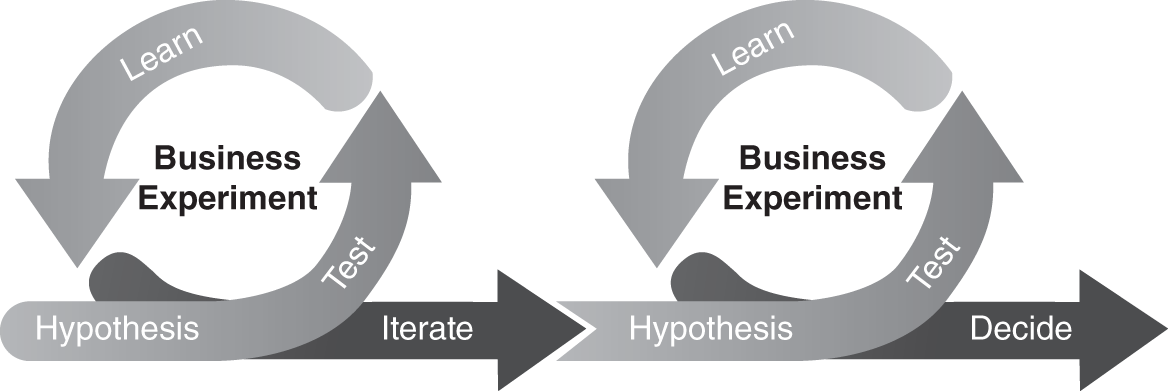

Deciding which assumption is the most critical is key to learning. One approach is to build a tree that describes the logical connection between each assumption. Let's take Uber's initial business model as an example. When Uber started in 2008, it had to decide which element of its business design to test first – cars, drivers, an app, payments, pickup points, and so forth. Does payment matter as much as whether you can recruit drivers? Does having a user-friendly app matter as much as finding riders at this stage? One way to decide which is most critical is to plot them on a two-by-two matrix (see Figure 5.2). One axis reflects your judgment of how important the assumption is to the innovation (i.e., how vital it is to solving the customer problem or how much novelty it creates for the customer) and the other axis is the level of uncertainty (i.e., how much do we know about whether we are right).

Figure 5.2 Critical assumptions matrix.

It is vital that Uber has enough drivers and that they get riders to their destination. But there are already limousine and taxi services doing this, so there is a low level of uncertainty regarding that assumption. Similarly, it matters that a customer pays for the ride, but there are other ways to pay for services. What matters is that riders book a ride and that drivers find them. These are the two most critical assumptions that Uber needed to test first. Uber tested these critical assumptions with the simplest approach that it could design. Riders ordered a car via an “Ubercab” SMS text message code. The riders were picked up by a professional driver – no test here of the ability to recruit “gig economy” drivers – and payment was accepted over the phone via credit card. Uber's fundamental transaction and the main value they bring clients is based on whether they can get a driver to a rider in an amount of time that is acceptable for the rider. That is what they tested first, with payments handled later. This is the role of a minimum viable offering or product (MVO or MVP). That is the most basic version of your idea that you can test with customers. It enables you to learn about customers’ needs and preferences as fast as possible and at the lowest cost, minimizing your risk of investing ahead of learning.4

The MVP approach can be tough for a Corporate Explorer to adopt. Some find engaging customers with nothing more than a drawing or a barely functioning prototype to be uncomfortable. It is more familiar and comfortable to build a fully functioning prototype. However, the more elaborate a prototype, the more you are locked into a strong point of view and, in turn, not testing a hypothesis. You need to stay true to the principle of committing minimum resources to prove or disprove the hypothesis. This can take some creativity. Minimalism was Philip Morris International's (PMI) approach when they designed an experiment to find out if consumers would buy its revolutionary IQOS (I Quit Original Smoking) product from a vending machine. IQOS is part of PMI's strategy to end smoking by helping smokers transition to non-lethal alternatives to cigarettes.

IQOS heats tobacco to release nicotine with fewer of the toxic chemicals associated with burning a regular cigarette. It is still subject to age verification for purchase in most markets because it sustains people's addiction to nicotine. A PMI team in South America faced the puzzle of how to find out if consumers would provide proof of age by having a machine scan a driver's license. The high cost of the scanning technology would be wasted if consumers were unwilling to put their licenses into the slot on the machine. PMI decided to build the vending machine with a slot but with a person secretly hidden inside to read the driver's license. The consumers thought it was a machine and PMI learned how consumers behaved without having to invest in the scanning technology.

PMI's crude experiment allowed the team to generate data to find out if the action they took – putting the slot in the machine – had the result they anticipated. Every experiment has an independent and a dependent variable. The independent variable is the channel – the vending machine – and the dependent variable is the rate at which customers provided a driver's license. The data from the experiment showed that customers would put their driver's license into the machine. They confirmed a hypothesis without committing significant resources.

Make Sense of Your Results (Learn)

Bondili's most critical assumption was that Pixel could answer problems faster, cheaper, and at the same quality as a regular consulting team. Bondili needed to show Deloitte colleagues that the advantages of sourcing open talent were not just a theory, but a reality in practice. Testing this assumption required a real client project. Bondili got help from a key ally to have Pixel join a client project for a company seeking to develop a new regulatory compliance dashboard. The client needed to integrate information across multiple geographies and legal jurisdictions. This was exactly the type of complex challenge that Bondili anticipated crowdsourcing could support. There was an opportunity to use crowd-sourced software development skills to shorten the lead time for producing an answer for the customer. Pixel performed ahead of expectations, delivering a result in five weeks versus six months and at 10% of the cost of traditional consultants.
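To see how far ahead of expectations this was, the reported figures can be converted into percentage reductions and set against the illustrative thresholds from Hypothesis 3 (a 50% cut in time and a 30% cut in fees). The text does not say these thresholds were Pixel's actual targets, so the comparison below is an assumption made for the sake of the arithmetic, as is the conversion of "six months" into weeks.

```python
# Assumption: an average month is 52/12 weeks, so "six months" is about 26 weeks.
WEEKS_PER_MONTH = 52 / 12

traditional_weeks = 6 * WEEKS_PER_MONTH  # traditional team: six months
pixel_weeks = 5                          # Pixel: five weeks

# Fractional reductions relative to the traditional project.
time_reduction = 1 - pixel_weeks / traditional_weeks
cost_reduction = 1 - 0.10                # Pixel cost 10% of a traditional team

# Thresholds borrowed from Hypothesis 3 (illustrative, not confirmed targets).
TIME_TARGET = 0.50
COST_TARGET = 0.30

print(f"Time reduced by {time_reduction:.0%} (target {TIME_TARGET:.0%})")
print(f"Cost reduced by {cost_reduction:.0%} (target {COST_TARGET:.0%})")
print("Both targets met:", time_reduction >= TIME_TARGET and cost_reduction >= COST_TARGET)
```

On these assumptions, time to answer fell by roughly four-fifths and cost by nine-tenths, well beyond both thresholds.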

However, even after this and other similar results in trial projects, Bondili continued to struggle to fill his sales pipeline. The premise of Pixel was that it was additive to the existing Deloitte Consulting offering. Commercial success depended on Deloitte's partners wanting to sell Pixel to clients as a part of a project. He had assumed that they would embrace Pixel when he demonstrated that the service was faster and cheaper, at the same quality. However, as Bondili engaged with Deloitte partners seeking new, larger engagements, he learned that there was reluctance to adopt the new service. Some partners felt crowdsourcing was inferior to using consultants. It was one thing for it to generate fast, cheap ideas. It was another to reach the quality threshold that they, as professional advisors, had reached over decades of experience.

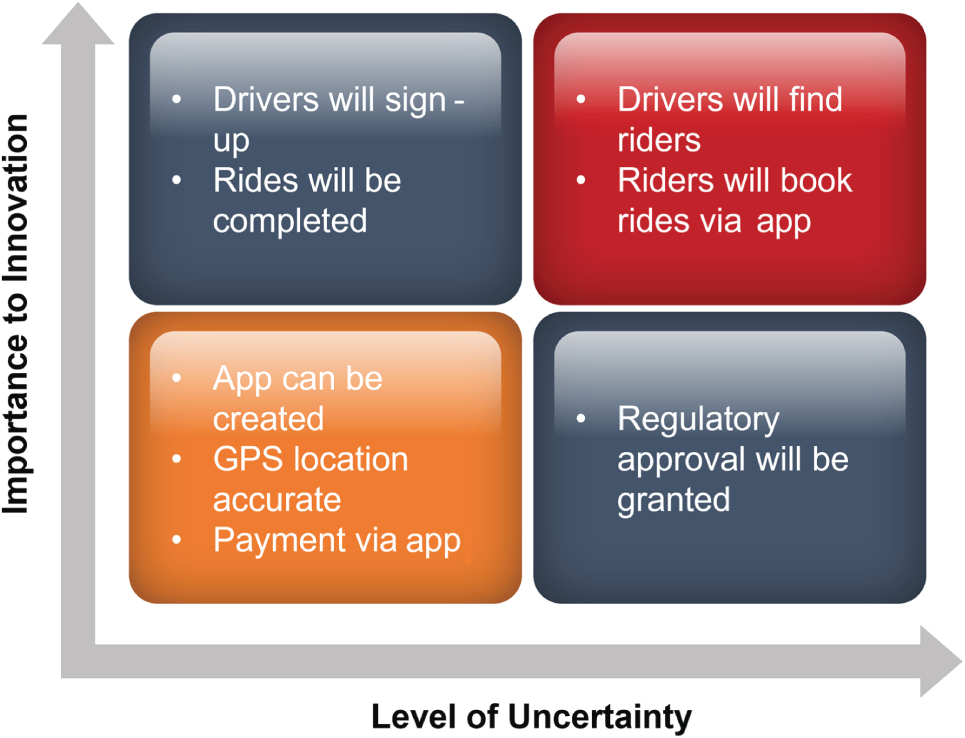

Bondili discovered that he had an untested assumption in his business design: that Deloitte partners were motivated to sell Pixel as a part of their engagements. Pixel had to demonstrate that it would add value not just by being faster and cheaper, but also by generating outcomes a client would recognize as better. This is an ecosystem or “adoption chain” problem. The adoption chain is the sequence of steps needed for a product to be used. There are usually many players in this chain – suppliers, distributors, agents, retailers, and so forth. However, they do not always share equally in the incentives for bringing a new product to market.5

Ecosystem problems often do not receive the attention they deserve in business experiments, sometimes with dire consequences. For example, pharmaceutical giant Pfizer spent almost $3 billion developing a breathable insulin product with tremendous potential benefits to people with diabetes – a $1 billion a year revenue opportunity for the company. They had tested extensively with patients and endocrinologists, with tremendous success. The US Food and Drug Administration approved the therapy on condition that doctors administered lung capacity tests before prescribing it to patients. The equipment for this is common to endocrinologists but not to primary care or general practitioners, who write most prescriptions for insulin. As a result, the new therapy could not reach its intended target. This adoption-chain barrier caused actual annual sales to peak at $12 million and the drug was withdrawn.6

Bondili's adoption chain is relatively simple and internal to his firm (see Figure 5.3).

Corporate Explorers map ecosystems by identifying each of the players involved in delivering an innovation to its intended user. These could be further up the supply chain – producers and manufacturers – or closer to the customer, such as integrators or distributors. Then, for each one, they ask: What do you need them to do? What are their motivations or incentives for doing what you need them to do? Finally, how can you make this happen? Each step in the chain adds risk and complexity. You need to find out whether there are individuals or entities that may become a bottleneck for the innovation, as they did for Pfizer and Pixel. Bondili learned about this problem in time and now needed a pilot project that would teach him what it would take to persuade skeptical Deloitte partners to sell the Pixel solution as a part of their client offerings.
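The mapping questions above lend themselves to a simple record per player: what you need them to do and what incentive they have to do it. A player with no identified incentive is a candidate bottleneck. The sketch below assumes a three-link chain loosely based on the Pixel story as told in the text; the `Player` class, the `bottlenecks` helper, and the wording of each entry are hypothetical and are not taken from Figure 5.3.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Player:
    name: str
    needed_action: str         # what you need this player to do
    incentive: Optional[str]   # None when no motivation has been identified yet


def bottlenecks(chain):
    """Players who must act but have no known incentive to do so."""
    return [p.name for p in chain if p.incentive is None]


# Hypothetical chain for illustration; entries paraphrase the narrative.
pixel_chain = [
    Player("Pixel team",
           "run crowd challenges for client projects",
           "it is the venture's core purpose"),
    Player("Deloitte partners",
           "sell Pixel as part of their engagements",
           None),  # faster and cheaper alone did not motivate them
    Player("Clients",
           "pay for Pixel-supported work",
           "faster, cheaper results"),
]

flagged = bottlenecks(pixel_chain)
print(flagged)
```

Each flagged player points to an experiment worth designing: what evidence or incentive would move that player to act?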

Figure 5.3 Ecosystem adoption chain.

Run a New Experiment (Iterate)

Bondili's situation at this point was normal. Business designs evolve, becoming more robust as experiments teach us about what it will take to make a commercial success of the business. Corporate Explorers should expect to iterate the business design. One of the values of writing it down when you start is that you know what has changed.

For the challenge, Bondili chose a project to design a new industrial printer for a client. The existing Deloitte team had completed an initial round of options, all of which were an incremental improvement on existing designs, thus falling short of the client's expectations for a radical new product. This created an opening for Pixel to see if it could generate a different outcome. The Pixel team opened a printer design challenge to a crowd of thousands. The challenge ran in two phases. There were 15 printer designs submitted in the first phase, which they narrowed to three. These three then came back with detailed computer-aided designs showing how to change ink, how to use a voice assistant interface, and how the product would look. The crowd-sourced input took two to three weeks to gather. The winning submission won $4,000 and the vendor cost was $15,000. Additionally, a historically multiyear product development process, from concept to prototype, was reduced to six months. Most important, however, was that the design was radically new. By taking the problem outside the realm of industrial product designers, he found solutions that were unlike anything the industry had seen before. Bondili could now legitimately claim a premium for Pixel's services in exchange for generating distinctive value for clients. Bondili generated the key proof point that his stakeholders needed – that Pixel could do better than traditional consulting teams. This was a triumph for the Deloitte customer and, understandably, the consulting team that was working with them.

Follow the Evidence (Decide)

Bondili started with a radical proposal for reinventing how professional services were delivered to clients. At first, it was a proposition with high uncertainty. It was a solution that others were using, but it was unknown whether it would be adopted for consulting-type projects and whether Deloitte clients and partners would embrace it. The mathematician Claude Shannon said that “information reduces uncertainty.” Bondili's evidence that Pixel can be faster, cheaper, and better than a consulting team helped to reduce Deloitte's uncertainty. It helped make uncertainties into known variables, thereby helping to make decisions about long-term investment less dependent on gut instinct or executive impatience.

Such evidence is never entirely objective. There are too many possible sources of bias and error involved in how humans process information to accept the data from a business experiment as truth. The scientific method helps people manage the psychological biases in how decisions are made. Human brains do not like looking for alternative solutions to problems. It is easier to accept an answer that confirms an existing bias than it is to work hard and find evidence (e.g., deciding that a population without refrigerators must be a market for a new product). The Nobel Prize-winning psychologist Daniel Kahneman calls this the fast and slow brain. The fast brain moves quickly to find the answer that fits with what we already know and is essential to completing most tasks in our lives. The slow brain, which Kahneman calls “lazy,” is more rarely engaged but is open to finding new evidence. The scientific method has evolved over many centuries to fight the impact of the fast brain, making sure that a scientist's discoveries are subjected to a rigorous process of verification and falsification (i.e., saying why you might be wrong, not just proving that you are right).7

Business experimentation practices encourage Corporate Explorers to be evidence-driven, not simply self-confident. That can be countercultural. It is difficult for a manager in a quarterly performance review to say, “I don't know how we are going to win in this market, though I have two hypotheses that I would like to test.” Managers would risk being viewed as weak or indecisive. It is more likely that they select facts to support their strategies that justify preexisting beliefs, past practices, or proposals that the manager is advocating. Experiments operate with a different logic, with an openness to finding out why you might be wrong, as well as why you might be right. It is vital to remain skeptical; otherwise, you will be less likely to stop an experiment when there is data that disputes your point of view.

Incubation generates information to reduce uncertainty about the decision to scale a new venture. It makes the decision on when to scale evidence based. You are ready to scale when you have disproved, iterated, and validated enough of your assumptions to invest with confidence. Reducing uncertainty does not mean you can eliminate it altogether. It simply narrows the range of what is unknown and retires some of the risk. Critically, Bondili had the data and insights to engage Deloitte's senior leadership in a discussion about what it would take to scale the venture. He had evidence of client enthusiasm for the results, and this was starting to build his group of internal champions. Scaling is also an evidence-based discipline. You are still working with hypotheses: What are the customers, capabilities, and capacities you will need to scale? However, the method is different, and aims to accelerate the pace toward converting an experiment into a sustainable business.

Chapter Summary

In this chapter, we described how incubation is about learning through experimentation. Corporate Explorers ask themselves, “What needs to be true for my venture to be commercially viable?” They design business experiments to test the answers to this question in a series of learning cycles. This is the scientific method applied to business – testing and iterating hypotheses.

Corporate Explorers should strive to have explicit, comprehensive, and testable hypotheses. Explicit means writing the hypotheses down so they are known and understood. A business design framework can guide Corporate Explorers to develop a comprehensive set of hypotheses to test. A testable hypothesis has a clear point of view and defines a measurable outcome.

A test of the new venture starts with a focus on the most critical hypotheses underpinning the new business. Corporate Explorers use a two-by-two matrix to decide which hypothesis is most critical – one axis measures how important the assumption is to the innovation, the other how uncertain it is. This allows a new venture team to create its first test using a low-fidelity, stripped-down version of the offering. This minimum viable offering or product (MVO or MVP) enables the team to confirm or refute a hypothesis without committing too much resource.

Corporate Explorers learn from these experiments what they know and what they still need to better understand. One area that is a frequent challenge is the ecosystem's readiness to adopt an innovation. Mapping the ecosystem adoption chain can help to design specific experiments to learn about this.

The team can then iterate the experiment using new or revised hypotheses. Over a series of cycles the information generated will reduce uncertainty about the new venture, enabling the Corporate Explorer and their sponsors to decide what to do next: invest, pivot, or kill the venture.

Notes

- 1. Michael Tushman, John Winsor, and Kerry Herman, “Deloitte's Pixel (A): Consulting with Open Talent,” Harvard Business School Case 9-420-003.

- 2. Steve Blank and Bob Dorf, Startup Owner's Manual: The Step-by-Step Guide for Building a Great Company (Wiley, 2020); Eric Ries, The Lean Startup (Currency, 2011).

- 3. David Kelley and Tom Kelley, Creative Confidence (Currency, 2013).

- 4. Source missing – with apologies to whomever first shared this example with Andy.

- 5. Ron Adner, The Wide Lens (Penguin Portfolio, 2012).

- 6. Ibid.

- 7. Daniel Kahneman, Thinking, Fast and Slow (Farrar, Straus and Giroux, 2011).