CHAPTER

9

Moving Data

There are probably very few (likely no) Oracle administrators charged with the care of a solitary database who don’t need to share data among other databases. Equally implausible is an administrator who doesn’t need to upgrade or move a database to a different platform at some point. In most situations, at one point or another, the administrator is required to move data at the physical level—that is, the admin will be required to move the datafiles or entire databases from one place to another.

Multitenant architecture brings new scenarios and challenges into play that must be addressed by the DBA, because it is the DBA’s job to manage the database at this level. This includes new features such as the ability to move complete pluggable databases (PDBs), along with extensions to existing features, and it presents new opportunities regarding how to approach some core DBA tasks.

The multitenancy architecture encompasses multiple PDBs. The first feature we will explore is disassociating a PDB from the container database (CDB)—that is, moving the physical files, and then making the PDB part of another CDB. This unplugging/plugging in ability with a PDB basically involves a database move.

We also want to be able to copy a database—and even better, we want to let Oracle perform the file copy for us. Database cloning extends past a simple local machine copy, because in Oracle Database 12c we can clone from a remote database or harness storage features to make “cheap” copies. One facet of this feature is that the source can be an Oracle Database 12c non-CDB, thus enabling a simple way of converting a non-CDB into a PDB.

Oracle Database 12c also introduces many new data movement features that are not PDB-specific, and one of the most interesting is the ability to move databases between previously incompatible platforms.

And last, but not least, we can move PDBs to and from the cloud.

Grappling with PDB File Locations

Moving all the PDB files involves, of course, altering the file locations. The easiest approach is to work with Oracle Managed Files (OMF) and let Oracle generate the file paths and names, using the CDB name and PDB GUID—for example, /u01/app/oracle/data/SRC/29E63B5BE26B3ABEE053050011ACA3F3/datafile/o1_mf_system_cb3gffcj_.dbf. In other words, you can specify the DB_CREATE_FILE_DEST initialization parameter and let Oracle take care of this for you.

However, if you want to manage the locations yourself, you can use the FILE_NAME_CONVERT parameter to map the files to new locations in the create pluggable database statements.

If the datafiles for the target database are already in place, you can specify NOCOPY, so that Oracle uses the files already present. And if the new location is different than the source location, you can use the SOURCE_FILE_NAME_CONVERT clause to map the files to the new location.

The examples in this chapter show how these various options are used.

Plugging In and Unplugging

As you know, a PDB is an independent subset of a CDB. As such, it enjoys a kind of independence, which you have already learned about in the chapters on management and security. Therefore, it makes sense to be able to move data from a single PDB, or indeed the whole PDB, from one database to another.

Moving an entire non-CDB (or the CDB as whole) has always been possible with Oracle. You simply move the datafiles to a different place; set up the configuration files for networking; set up the password file, init.ora, and so on; and then start the database from the new location. The Recovery Manager (RMAN) duplicate command further simplifies this process, but we won’t go into detail on this here. However, you should review Chapter 11, where we explain how to create a standby database, because the two processes are similar.

With multitenancy, moving databases has become easier, and the expectation is that these moves will now occur more frequently as a result. First of all, you don’t need to move the entire CDB, which means that much of the tedious configuration work disappears. There is no need to handle relocating the password file, init.ora, /etc/oratab, for example, and this makes the move simpler, and thus a potentially viable option in many more scenarios.

Second, PDBs contain significantly less Oracle internal data dictionary information. So, as discussed in Chapter 4, you can create a new CDB with a new patch version, and then move the PDBs to this new CDB. From there, you can patch the PDB, which is actually much faster, because there is less dictionary data to update.

Furthermore, multitenancy is often thought of as being synonymous with consolidation, which means having many databases in a CDB. This can inevitably lead to situations in which the number of PDBs outgrows the server capacity. The easy solution now is to move some of the PDBs someplace else.

NOTE

Unlike other cloning options, the plug-in operation does not need a source database up and running; only its datafiles and an XML parameter file must be available. This opens up new scenarios for provisioning, such as making the files available on various media or for download.

Unplug and Plug In a PDB

Let’s start with the simplest scenario—unplugging a PDB and then plugging it into a different CDB, as depicted in the following diagram. Note that the PDB must be closed during this operation.

![]()

After this command has completed, the unplugged database (PDB) consists of its datafiles, which are still in place as they were, along with a new XML file that describes the database (version, DBID), tablespaces, datafiles, installed options, and database parameters set at the PDB level.

Now we copy the files to the target database location. A simple scp to the target server will do:

![]()

The third step is to plug in the database. Note that the files will probably be in a different location than they were on the source, as recorded in the XML parameter file, so remapping may be required.

![]()

An Unplugged Database Stays in the Source

Even after we unplug a PDB and produce the related XML file, the PDB continues to be part of the source database, as shown in v$pdbs and dba_pdbs:

![]()

This also means that the PDB is still part of any RMAN backups, as you can see in the following:

![]()

This ensures that the PDB’s data is still protected, even before we plug it into the target database and begin backing it up there. It also means that all the original datafiles are still in place, and we have ample time to copy them to the desired location for the plug-in. However, there is little else that can be done with this unplugged PDB for now:

![]()

Once the PDB is plugged in and backed up in its new location, we can drop the PDB from the original source CDB to clean up:

![]()

NOTE

The default setting is KEEP DATAFILES. This prevents us from accidentally dropping the data files before we copy or move them to the target location.

What Exactly Is in the XML File?

It’s worth reviewing the XML file that describes the unplugged PDB. In doing so, we can see exactly what information is retained when we move the PDBs from one CDB to another, and it also shows what Oracle can actually check when considering whether the PDB is compatible to be plugged in (as we will discuss in the next section).

The following are some parameters for a PDB named PDBXML:

![]()

In this section we see various version and compatibility-level listings. The vsn refers to the version (203424000 is 0xC200100 in hex, which is an internal representation for 12.2.0.1.0—convert the number digit-by-digit to decimal, 0xC is 12). This ensures that the versions match or, if required, whether an upgrade is needed upon plug-in. Note also the byte order; 1 is little endian.

We also see the different PDB IDs: cid (CON_ID), DBID, cdbid (CON_UID), and guid. This is also not a non-CDB (hence ncdb2pdb is 0), but later in this chapter we will discuss the details for converting such a database.

For each tablespace, we see the following section:

![]()

This covers name, type (temporary tablespace has 1), encryption, list of files—file name, absolute file number, relative file number, create SCN, size in blocks, block size, version, DBID, checkpoint SCN, and autoextend settings.

![]()

The csid is the database character set (873 is AL32UTF8), and ncsid is the national character set (2000 is AL16UTF16; we can verify this with SQL function NLS_CHARSET_ID). The remainder lists all the installed options and their various versions:

![]()

This penultimate section specifies the following:

![]() Whether Data Vault is enabled

Whether Data Vault is enabled

![]() The APEX version

The APEX version

![]() Parameters set at the PDB level

Parameters set at the PDB level

![]() Installed SQL patches

Installed SQL patches

![]() Versions of time zone file

Versions of time zone file

![]() Wallet

Wallet

![]() Defined services

Defined services

![]() Patches installed by OPatch

Patches installed by OPatch

Finally, the XML file includes AWR load profile information, and whether local undo has been enabled.

Check Compatibility for Plug-In

In the examples so far we have taken a few shortcuts, ignoring the fact that Oracle imposes some limitations on PDBs with respect to installed options, character sets, and versions. You can either read in detail the limitations or, perhaps more effectively, ask Oracle to perform these checks, using the supplied DBMS_PDB package.

Get the XML Describing the Database

First, we need to get the XML file that describes the unplugged database. If we have unplugged the PDB, then we already have it on hand and can skip to the next step.

However, we can ask Oracle to generate the XML file even if we haven’t yet actually unplugged the PDB. Although this requires an extra step, it is definitely better to have this information ahead of time, knowing the results of the compatibility check well before we perform the unplug. This will give us ample time to rectify the issues or come up with an alternate solution—such as creating a new target CDB—and all this can occur well before we incur any downtime on the database.

![]()

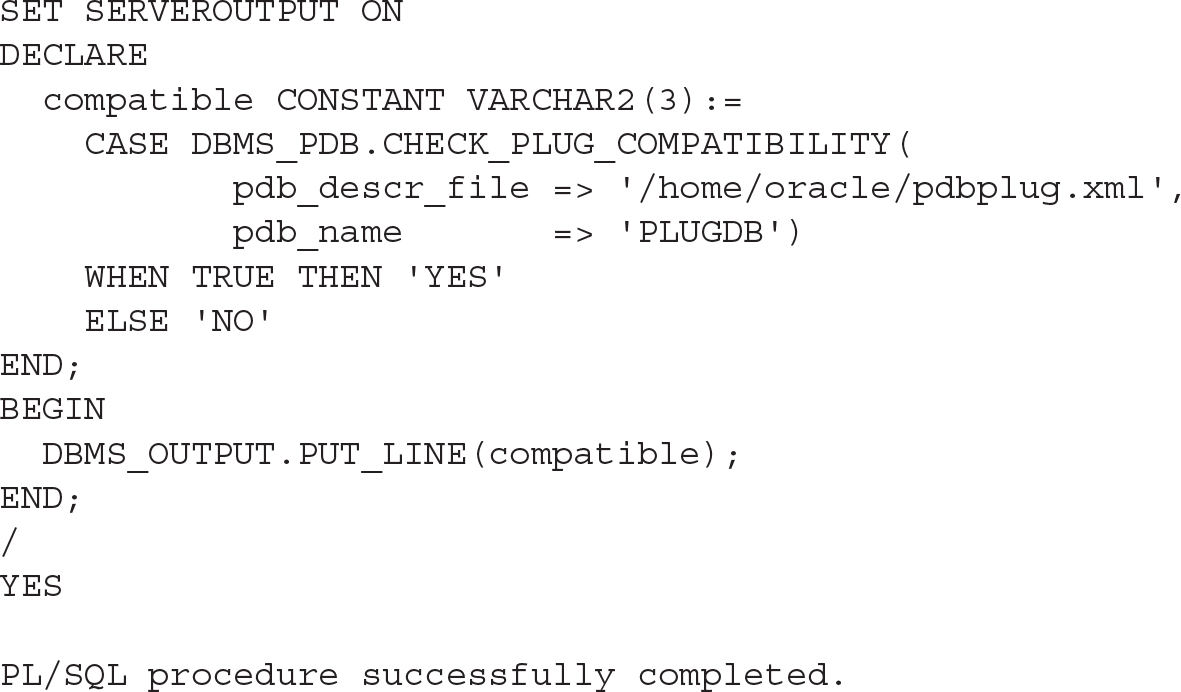

Run the Compatibility Check

Running the compatibility check is simple and is achieved by executing one PL/SQL function in the target database root or application root:

![]()

Of course, if the answer is NO, we want more detail, and this is readily available in the PDB_PLUG_IN_VIOLATIONS view. The view can contain data even if the answer is YES, so it is a good idea to review this regardless of the answer.

NOTE

If you are plugging in databases as part of an upgrade, the compatibility check will return NO, because the versions are different. This is to be expected, and you can still plug in that database, although you won’t be able to open it until you have first finished the upgrade. This is covered in more detail in Chapter 4

Read the Requirements

The list of compatibility requirements is changing with each version, so it is advisable always to review the list for the particular version in use. In short, however, there are four main requirements:

![]() Platform The databases need to be of the same endianness.

Platform The databases need to be of the same endianness.

![]() Options The databases must have the same options installed (partitioning, data mining, and so on).

Options The databases must have the same options installed (partitioning, data mining, and so on).

![]() Versions The source PDB must be the same version as the target CDB before the PDB can be opened. However, it is possible to plug in a PDB of an older version and upgrade it, and then it can be opened.

Versions The source PDB must be the same version as the target CDB before the PDB can be opened. However, it is possible to plug in a PDB of an older version and upgrade it, and then it can be opened.

![]() Character sets The character sets must match. Version 12.2 eases this condition: if the CDB uses AL32UTF8, the PDB can use any character set.

Character sets The character sets must match. Version 12.2 eases this condition: if the CDB uses AL32UTF8, the PDB can use any character set.

Plug In a PDB as Clone

To this point we have been thinking of the plug-in/unplug process as a move operation, with a single PDB at the start and end, even if the PDB at the end runs somewhere else. Sometimes, however, we need to create a copy of the PDB. Although the other options described in the upcoming “Cloning” section are usually used for this, it is possible to use pluggable functionality to create a copy, too. The only principal difference is the number of copies that will reside in the environment, and, as with non-CDB databases, every copy needs to be uniquely identifiable; otherwise, we end up with collisions when DBID, GUID, or CON_UID is used. Note that the RMAN catalog mandates that DBID be unique, and a single database won’t allow multiple identical PDBs, either. Fortunately, the solution is easier than the description of the problem itself: just add the AS CLONE clause when you’re performing the plug-in operation.

Let’s start with a simple PDB, created at the source database:

![]()

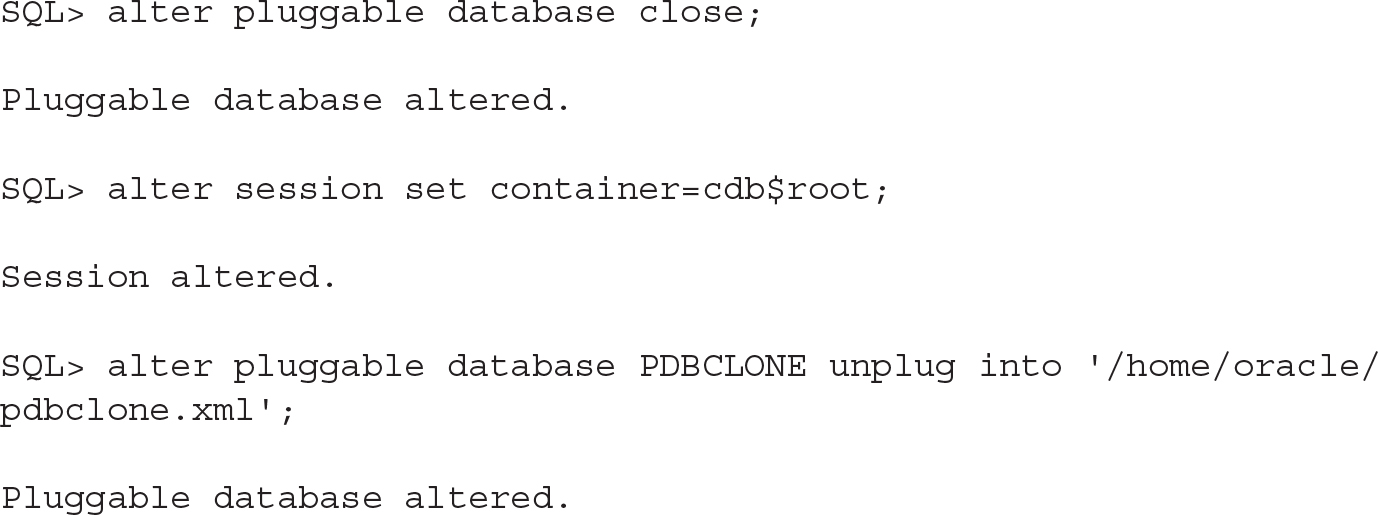

Now let’s unplug it:

![]()

Now plug it into the target database. This will be a move operation, so it will keep its existing IDs.

![]()

The PDB has been created:

![]()

You can see that Oracle changed the CON_UID, but the DBID and GUID values are still the same.

As mentioned, Oracle won’t allow the same database to be plugged in again:

![]()

The AS CLONE prompts Oracle to generate new identifiers, thus allowing us to have multiple copies of the same source PDB in the CDB:

![]()

PDB Archive File

Starting with Oracle Database version 12.2, the plug-in/unplug operations can also work with PDB archive files. A PDB archive is a compressed file containing the XML parameter file and its data files, as well as any other necessary auxiliary files (wallet). This is really just a convenience option, because it’s easier to copy one compressed file than multiple files.

The basic syntax is identical to that used to unplug to XML file, with the only difference being the specified extension of the target file:

![]()

And if we look at the output file more closely, we can see that it’s just a plain ZIP file:

![]()

Note that the XML file inside the archive still refers to the same paths for the PDB on the source, so it’s the same result that we would get from unplugging into XML; however, these paths are ignored. Instead, Oracle unpacks all the datafiles to the directory where the PDB archive is located. This is only a temporary location, Oracle then moves the files to the proper place. NOCOPY is not a valid option here.

![]()

Cloning

In contrast to unplugging operations, cloning does not go through the intermediate step of working with a staged set of files (datafiles and XML file or a PDB archive). Instead it, more directly, reads the files of a running CDB and copies them to a new PDB. This copy can either be local—inside a single CDB—or remote—to a different database, copying the datafiles over a database link.

A clone operation always assigns a new GUID and UID, and there is no concept of moving, as in plug-in/unplug. The relocate feature is no exception to this, assigning new IDs in the process.

Cloning a Local PDB

The easiest use case is a clone inside a single CDB—a local clone, in which no other CDB is involved. The following diagram illustrates this operation:

With Active Data Guard, the standby database will also do the same copy. Therefore, no manual intervention on the standby side is needed; this is unlike in virtually all other cases described in this chapter.

Although a local clone is the simplest use case, there are still multiple options to consider. Let’s start with the most basic one:

![]()

In Oracle Database 12.1, the source PDB must be open read-only (there are even bugs when Oracle doesn’t check the open mode; clone in read/write mode then fails with various internal errors). From Oracle Database 12.2 on, the source PDB can be open read/write, provided that the CDB is in ARCHIVELOG mode and has local UNDO enabled. Either way, we must be logged in as a common user in the CDB root or in the application root.

After the clone finishes, we must open the database in read/write mode, because Oracle won’t allow any other operation on it until the database has been opened at least once:

![]()

Snapshot Copy

Given that a clone is a one-to-one copy of the source PDB, you might wonder whether the underlying file system can help in performing such copies more efficiently. After all, snapshot and copy-on-write functionality are, nowadays, widely used and proven storage features.

The answer is a “limited yes.” If the database is on a supported file system, or Oracle Direct NFS is in use, a clone can be created as follows:

![]()

This is one of the features that we expect will evolve rapidly, changing with every patch set, so it’s advisable that you regularly review the latest status in the Oracle documentation and in My Oracle Support note 1597027.1.

Generally, Oracle favors and promotes ASM Clustered File System (ACFS) and Direct NFS. In the former, the file system provides read/write snapshots, which means that a source PDB can be open and written to. By contrast, the Direct NFS approach mandates a read-only source PDB, but on the other hand it supports many more file systems, essentially needing only sparse file support for the target files. In both cases, the source PDB cannot be unplugged or dropped.

The specification as to which snapshot technology to use is regulated by the CLONEDB initialization parameter; TRUE implies use of Direct NFS clones. Also note that Direct NFS might require setting up credentials in the keystore; refer to the documentation for more information and specific syntax.

Cloning a Remote PDB

The remote clone process extends the local clone concept, with the differentiator being that the source and target database are distinct, and the communication between the two happens over a database link, as shown in the following diagram:

You must be logged in as a common user, either in the CDB root or in an application root. In addition, a database link must be available, because this link will be used to transfer all the metadata and datafiles. This link should be able to connect either to the target CDB or to the PDB.

With plug-in/unplug operations, the database must be compatible, as described previously in this chapter. In a local clone, these conditions are trivial to fulfill, but in a remote clone we must check them. We can use the same steps you used with the DBMS_PDB package, as outlined in the section “Run the Compatibility Check,” earlier in the chapter.

As with a local clone, if we want to keep the source PDB open read/write, we need to use version 12.2+, with ARCHIVELOG mode and local UNDO enabled. In case of a remote clone, it’s sufficient if just one of the sides involved in the clone has local UNDO and ARCHIVELOG mode.

![]()

Splitting the PDB

In all the clone operations, we can specify the USER_TABLESPACES clause. This is a list of all the tablespaces that we want actually cloned; Oracle will add the necessary system tablespaces, but all other tablespaces will be omitted.

In respect to this, two use cases come to mind: First, we may want to provide our developers a subset of the production database, omitting some archival data and keeping the overall size of the clones smaller. Or, second, we may want to split a large, monolith PDB into more manageable, smaller PDBs. A good example of this would be if the source PDB was created by importing a non-CDB database that consolidated multiple applications, each in its own schemas and tablespaces.

![]()

Nodata Clone

A nodata clone is a special type of clone. It does not contain any user data, and all the tables listed in user tablespaces are imported empty, thus effectively creating a metadata-only copy. This being the case, its value is for specific use cases only.

This function is possible only from 12.1.0.2. Furthermore, it cannot be used in conjunction with index-organized tables, table clusters, or Advanced Queuing tables, additionally constraining its usefulness.

![]()

With this function, the new database has the same tablespaces as the source; however, the datafiles are not copied, but instead are created empty. The size of the datafiles matches those on the source, and in the case of datafiles set to autoextend, the new files are created with the initial size.

Refreshable Copy

A hot clone, or a clone from a read/write source PDB, works by recovering all the changes that occur during the copy itself, by use of the ARCHIVELOGs and UNDO data.

Extending on this idea, Oracle can also use this data to recover the clone repeatedly, regularly bringing it up-to-date with the source PDB. This type of clone in Oracle Database 12.2 is a refreshable copy, and it can be refreshed automatically (EVERY nn MINUTES) or manually on demand.

Note, however, that the PDB must be closed for the refresh to occur, both in on-demand and automatic modes. The PDB cannot ever be open read/write, as any local change would prevent Oracle from applying undo from the source. Thus this is the only case where a newly cloned PDB is not first opened read/write in order to be usable.

![]()

As an example, let’s request a manual refresh. Again, note that this must be done from within the PDB and, as mentioned, the PDB must be closed:

![]()

A manual refresh is possible for PDBs configured for both manual and automatic refresh.

A PDB configured for refresh has the status REFRESHING in the CDB_PDBS view.

Relocate

A common use case in database administration is the move of a database, not just a plain copy. To this end, version 12.2 introduces the relocate feature, which makes this explicit. Working in conjunction with the hot-cloning method introduced in the same version, this allows much shorter downtime, instead of doing a clone and then dropping the source.

When we issue the RELOCATE statement, the source database is still open, so it needs to be open read/write and requires local UNDO and ARCHIVELOGs, as expected. The first stage of this process is to clone the PDB while the users are still connected to the source:

![]()

After this, the old PDB remains open and read/write, and it can still be accessed and used. At this point, the new PDB is mounted and has the status of RELOCATING:

![]()

It’s the opening of the new, relocated PDB that finishes the relocation and drops the old database.

![]()

Note that this feature requires more privileges on the source side than all the other options—namely, that the user that the database link connects to needs the sysoper and create pluggable database privileges.

![]()

We can also keep the existing PDB around (in RELOCATED state), to redirect the connections, if the listeners can’t do it for us. This is enabled by specifying AVAILABILITY MAX in the CREATE PLUGGABLE DATABASE … RELOCATE command, and in this case, it’s up to us to drop the source PDB, when it’s no longer needed.

Proxy PDB

Sometimes, with PDBs moving around, it can be difficult for you to keep track of all the various PDBs and updating clients to connect to the correct location for each. Perhaps even more critically, features such as container map and queries using the CONTAINERS() clause (both described in more detail in Chapter 12) require all the participating PDBs to be in the same container database.

To address this, Oracle Database 12.2 brings to the table the concept of a proxy PDB. These PDBs act as a façade, redirecting all requests to the referenced underlying PDB location via an internal database link. So any user commands executed while connected to the proxy PDB affect the remote PDB, not the proxy itself, with these exceptions: ALTER PLUGGABLE DATABASE and ALTER DATABASE statements.

A proxy database has the IS_VIEW_PDB column set to YES in the CDB_PDBS view, so this is a way of identifying one:

![]()

Note that a proxy PDB actually clones the SYSTEM, SYSAUX, and temporary tablespaces of its referent, so it’s not a completely empty shell.

A proxy requires a database link to be defined, and this is one that connects to the target CDB root or the PDB. Although a proxy can point back to the same source database, a database link is always required. This database link is used only during the setup, as the proxy PDB actually creates a new internal database link for passing the user requests. However, this internal database link is not a simple copy of the link that was manually created; instead, Oracle generates this using the host name of the target CDB and port 1521. Note that it is possible to specify the PORT and HOST if these defaults do not match your environment.

![]()

Application Container Considerations

An application container, introduced in Oracle Database 12.2, does not significantly extend cloning functionality. At this stage, only individual PDBs—not the whole application—can be unplugged/plugged in and cloned, and an application root can be unplugged only when empty.

NOTE

The location of a new PDB, created with clone or plug-in, is determined by the current container used when executing the create pluggable database command, and this can be run in the CDB root or in an application root.

Converting Non-CDB Database

As a special case, Oracle allows a database that does not use the multitenant architecture to be plugged in. Such an operation is more of a migration path from the legacy architecture to the new container-based one, not merely a move of data.

For this feature to work, both the source non-CDB and the target CDB versions must be 12.1.0.2 or later. The endian must also match, as the datafiles cannot be converted this way and, as usual, the installed options must also cohere.

There are multiple ways to approach this procedure using PDB operations. The non-CDB can be plugged in, or it can be cloned. Both options are shown in the following illustration.

![]()

Plug In a Non-CDB

For a non-CDB plug-in, we need to run the compatibility check, and the source database must be open or open read-only. Also, as usual, we should back it up before we attempt the plug-in, in case anything goes wrong.

We create the XML file describing an unplugged database:

![]()

Next, we can check for any issues or violations, running a simple script in the target CDB:

![]()

In this output, the first message is obvious and expected, because we are going to plug in a non-CDB. However, the second message is an example of a parameter mismatch. Some of the parameters can be set at the CDB level only, so upon plugging in the PDB, the value of the CDB will override it. It is therefore up to us to determine whether we set the CDB parameter to match the original non-CDB one or not. This is the same issue we face when putting multiple different PDBs into the same CDB, as many parameters are global across all PDBs.

When we are satisfied with these settings, we are ready to perform the actual plug-in operation.

The XML parameter file must be created from a read-only non-CDB in order for the SCNs to be consistent. Thus, if it was generated from a read/write database, we’d set that non-CDB to read-only and create the XML file again. Following on from there, we can shut down the source database, because it won’t be needed anymore. Note, however, that after the plug-in completes, we could continue to use this non-CDB source database, because unlike an unplug of a PDB, it is not marked for drop.

Then we can plug in the database:

![]()

Alternatively, we can plug it in, keeping the files in place. In that case, we should be aware of the TEMP file trap that may be encountered in many scenarios: unless we specify TEMPFILE REUSE, Oracle will try to create new tempfile(s) and fail when it finds the file already exists:

![]()

As indicated in our earlier violation check, we need to run the conversion script, noncdb_to_pdb.sql, at this point. This step is mandatory. Although we might be able to open the database without it, we would face various issues later, such as having a corrupted dictionary, and similar. In version 12.1, it’s not even possible to rerun the script if it fails, because that also corrupts the dictionary. For more on this, refer to My Oracle Support note 2039530.1.

In our tests, the longest running section of this script was in fact utl_recomp, which compiles all invalid objects.

![]()

Now we can open the new PDB and begin to use it:

![]()

Cloning a Non-CDB

Another approach for non-CDB conversion is to use cloning. The difference between this and the previous approach is the same as those with unplug/plug-in versus cloning methods for PDBs. This means that a clone requires fewer steps, can be done remotely, and does not require the physical copying and transmission of files.

The clone of a non-CDB is always remote; the source database is different from the target.

In the following command, we specify the special NON$CDB name for the PDB:

![]()

Once these commands are completed, we need to run the noncdb_to_pdb.sql script, and then we can open the database:

![]()

Moving PDBs to the Cloud

Another use case of PDB operations is to move the database to the cloud—or back from cloud to on-premise. In essence, this does not differ from moving the PDB around our on-premise servers. The cloud (Oracle or other) database is just another Oracle database and all the operations described in this chapter are valid there as well, whether using the command line or Enterprise Manager.

Thus, for the move, we can use ordinary unplug and plug-in, remote clone, or the full range of options, such as relocate or proxy PDB. For some of the basic scenarios, note that Enterprise Manager Cloud Control has a wizard we can use, shown in Figure 9-1.

FIGURE 9-1. Clone to Oracle Cloud in Oracle Enterprise Manager

Triggers on PDB Operations

Like CDBs, PDBs support database event triggers, including opening and closing the database, server error, logon/logoff, and so on. The only trigger that is CDB-only is AFTER DB_ROLE_CHANGE, as switching primary/standby can occur on CDB only (we discuss Data Guard in detail in Chapter 11).

A new trigger introduced in 12c is the BEFORE SET CONTAINER or AFTER SET CONTAINER, fired when a session changes the current container using ALTER SESSION SET CONTAINER. The use of this trigger is very similar to a logon/logoff trigger, especially for connection pools and the like, where one session switches between containers; the single logon/logoff trigger is not enough to cover different requirements for the various PDBs.

Finally, and perhaps most importantly in the context of this chapter, as they apply to the types of operations described herein, two new trigger types were added that work at the PDB level only: AFTER CLONE and BEFORE UNPLUG.

As the name suggests, the AFTER CLONE trigger fires at the new database, after it is cloned. If the trigger fails, the new database is left in an UNUSABLE state and the only operation permitted is to drop it.

The BEFORE UNPLUG trigger fires when the unplug operation starts. If the trigger fails, the operation does not happen, so no XML file is created and the PDB remains part of the CDB.

In both cases, when the trigger succeeds, it is deleted.

There are several use cases for triggers—for example, if we want to delete any stored passwords and keys to external systems and drop database links before we unplug the database and distribute it. Another use case would be to employ it to mask data after we have cloned a PDB to a test environment.

Full Transportable Export/Import

The transportable database feature is a logical step-up from the trusted and proven transportable tablespace feature, first introduced way back in 8i.

Transportable tablespace functionality was originally introduced to move just one or a few tablespaces, such as from an OLTP system to a data warehouse. But over the years, its usage developed to moving large amounts of data, such as all of the user datafiles during a migration or upgrade.

Additionally, transportable tablespace was also enhanced to handle cross-endian data movement, although one gap still existed, in that it could not move non-table objects such as views or PL/SQL.

The new transportable database feature now addresses this. It handles user tablespaces like transportable tablespace always did and, in addition, it can also export the other objects as a Data Pump export would do, in a single step. And being based on the proven foundation, it includes cross-endian conversion possibilities.

Like transportable tablespaces, the transported tablespaces must be read-only during the import process. RMAN allows a workaround for this for transportable tablespaces, in that it can create an auxiliary instance and run the export there, but this is not available for full transportable export.

This full transportable export feature has been available since version 11.2.0.3.

The multitenant twist on this feature is that the result can be imported into an existing CDB. In other words, it can be used to plug in a non-CDB into a CDB. The advantage of this, as opposed to the simpler way described earlier, is the cross-endian support. It also does not automatically copy the files, so if the conversion happens on the same machine, we do not need to copy the datafiles at all, speeding up the conversion and saving disk space.

Another scenario is plugging in a PDB that would otherwise not meet the requirements: so we can move a database cross-endian, or get around an unmatched list of installed features. (Of course, the target database needs to have installed any features the new PDB and its users and applications will use.)

Unlike the simple and standard plug-in, full transportable import requires that an already existing (empty) database be created first. The import process then imports only user data.

Note also that only one PDB is exported at a time; and when we specify a connection to the CDB, only the CDB user data is exported, not the PDB’s.

As an example, let’s export a non-CDB database and import it into a fresh new PDB.

First, all of the user tablespaces must be made read-only. However, the database itself must be read/write, as Data Pump creates a job table in the SYS schema:

![]()

Then we run the Data Pump export, creating a directory beforehand, if necessary. Because this exports all the object definitions, it does take some time, although this is minimal in comparison to a data export:

![]()

As you can see, the export conveniently prints the list of necessary files—the dump file and all the datafiles—at the footer of the screen output and the log.

Now we create a new PDB:

![]()

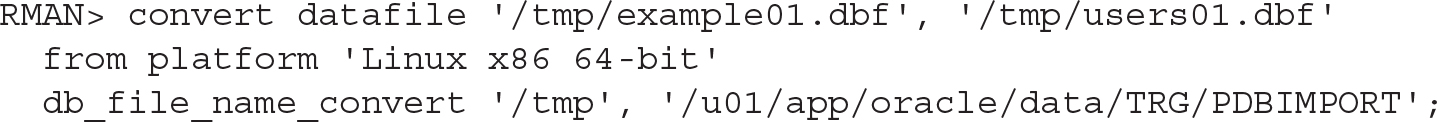

The next step is to copy the files to the destination directories and change the endian using RMAN if necessary:

![]()

Now we import the dump:

![]()

Transportable Tablespaces

Not much has been altered with this functionality since 11g. The transportable tablespace feature is still present in multitenant and can be used to move data from one database to another, but it’s more complicated than unplug/plug-in and limited when compared to a full transportable export, although it still has its use cases.

One notable unique feature is the ability to use RMAN to obtain the datafiles without setting them to read-only, thanks to using an auxiliary instance. In 12c, RMAN has been further enhanced with the addition of syntax to specify a tablespace in a particular PDB:

![]()

![]()

See Chapter 12 for more examples of how transportable databases can be used.

Summary

In this chapter we covered one of the most interesting topics which the multitenant feature has given rise to: separation of the PDBs and their movement from a CDB to another one. As we have seen, there are a multitude of options, with many ways leading to Rome, and each has its own pros and cons.

As you gain more real-world experience with multitenant, you’ll find this functionality more and more useful for solving problems in ways not possible before. This creative leverage comes with real-world experience, lots of experimentation and testing, and a fresh mind when approaching problems.