CHAPTER 2

Server Hardware

In this chapter, you will

• Learn about server form factors: tower servers, rack-mounted servers, and blade servers

• Learn how BIOS works

• Learn how UEFI works

• Review the basics of CPUs and how they handle data

• Review the different types of memory

• Learn about bus types

• Learn about NICs

• Review storage types

• Learn about power and environmental controls

Server hardware isn’t just about the components inside the server—it’s also about the size of the server case and its components. This chapter will cover what you need to know when ordering and replacing server components.

Server Form Factors

Physical servers and their parts come in a variety of shapes and sizes, or form factors. Not all components will fit into every server. For example, adapter cards that fit well within a server tower will not fit into a blade server, and a tower server can’t be rack-mounted in a server room rack.

Form factors also apply to computer cases, power supplies, motherboards, expansion cards, and so on. The dimensions of these pieces determine which ones fit together properly. Data centers need to fit as many servers as possible within a finite amount of space, so they use smaller server form factors to increase server density.

Other components such as PCI Express (PCIe) expansion cards adhere to industry-standard form factors. Perhaps you want to add a 10 Gbps (gigabits per second) Ethernet network card to your rack-mounted server. This could be a problem, because a standard PCIe card won’t fit within most rack-mounted servers, which are much thinner and smaller than tower servers.

Server technicians need to know all the details related to the types of servers they are responsible for. Let’s say it’s the first day on the job for a CompTIA Server+ certified tech. How can this person know what servers are in place? Clearly, one way is to inventory servers physically in server rooms or data centers, but larger enterprises will have automated solutions that inventory physical and virtual servers and store the results in a database that can be queried and reported on. Virtual servers, of course, don’t have form factors.
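Here’s a minimal sketch of querying such an inventory with Python’s built-in sqlite3 module. The servers table, its columns, and the sample rows are hypothetical; real inventory tools each define their own schema and usually provide their own reporting interfaces.

```python
import sqlite3

# Hypothetical inventory schema; real asset-management tools define their own.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE servers (hostname TEXT, form_factor TEXT, is_virtual INTEGER)"
)
conn.executemany(
    "INSERT INTO servers VALUES (?, ?, ?)",
    [
        ("branch-fs01", "tower", 0),
        ("dc-web01", "rack", 0),
        ("dc-db01", "blade", 0),
        ("app-vm01", None, 1),  # virtual servers have no physical form factor
    ],
)


def count_by_form_factor(connection):
    """Return {form_factor: count} for physical servers only."""
    rows = connection.execute(
        "SELECT form_factor, COUNT(*) FROM servers "
        "WHERE is_virtual = 0 GROUP BY form_factor"
    )
    return dict(rows.fetchall())


print(count_by_form_factor(conn))
```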

Tower Servers

Tower servers have been around for a long time, and the tower is what most people think of when they hear the word “server.” Powerful desktop computers (such as those used by gamers) are often towers. This server form factor isn’t screwed into any type of mounting device; it is a standalone computer that can be easily moved without removing screws or sliding it out of a rack.

In this server form factor, all server components are housed within a single case that can sit directly on the floor or on a desk. Server components are easy to find, because this is a tried-and-true hardware technology.

Adding dedicated components such as disk storage to the server is easy, because there is plenty of physical space inside a tower server. This isn’t the case with other server form factors such as blade servers; blades can use rack-mounted storage devices, but that storage isn’t physically installed inside the blade. Imagine trying to accommodate hundreds or thousands of tower servers in a server room or data center: the cost of real estate alone would be tremendous! Suffice it to say that tower servers don’t scale well. They take up a lot of space and they can’t be rack-mounted, as you can see in Figure 2-1.

Figure 2-1 Dell PowerEdge T620 tower server, courtesy of Dell, Inc.

Tower servers are often used in smaller offices. If IT budgets are stretched, towers might be an attractive option, because even a standard desktop PC could be configured with a server operating system—but, of course, this isn’t designed for large-scale use.

Another possibility is a central IT office that preconfigures servers to be used in branch offices. Perhaps only a single server is needed at a branch office to localize user access to server services, so it might make sense to ship a tower server to that location instead of purchasing expensive server racks and a single rack-mounted device.

When it comes to component redundancy, most tower servers fall short. They can accommodate standard hardware components, but they don’t often come with redundant power supplies. There’s also the issue of power and data cables. If your server room contains only towers, you may find it tricky to organize all of the cables. Server racks have conduits into which cables are easily and neatly arranged, which makes labeling and troubleshooting easier. Main distribution frames (MDFs) are cable racks that organize data cabling from intermediate distribution frames (IDFs), which in turn organize cables plugged into equipment. (Just make sure you label both ends of each cable!)

Rack-mounted Equipment

Compaq, which was acquired by Hewlett-Packard in 2002, brought the rack-mounted server form factor to prominence in the 1990s. Computing and the Internet were taking off, and many companies realized they needed servers on premises. Today, many organizations instead run servers in the public cloud on somebody else’s equipment.

Rack-mounting increases the potential server density in a server room or a data center, and using this equipment can increase security, because most racks have front and back doors that can be locked. In a large data center that accommodates multiple customers or tenants, controlling physical access to rack-mounted servers and equipment is important. The size of your enterprise, its data requirements, and the size of the data center determine how many racks you can use. Server technicians sometimes need to identify a specific physical server in a server rack. Unit identification (UID) LED lights on the front and back of a rack-mounted server blink when the server is being managed remotely or when the UID button on the server is pressed. This is useful when technicians must access the back of a rack-mounted server and want to ensure they are working on the correct server.

Special cases and rails are used so that rack-mounted equipment can be easily inserted into and removed from racks. Rack-mounted servers ship with their cases, and sometimes they also include rails and the screws needed to secure the rails on the rack.

Rack-mounted Servers

Rack-mounted servers will appeal to those who prefer tidiness and organization; they are essentially thin computers (roughly 2 to 12 inches tall) that are designed to be stacked vertically in a metal framework, or rack. This keeps things tidy and uses a minimum of space, so this form factor is definitely scalable. Rack-mounted equipment can be blocky, with sharp edges, however.

To assist in removing single points of failure, rack-mounted servers normally have dual power supplies. There are normally at least two network cards and in some cases management ports, and all of these connections are on the back of the device. Figure 2-2 shows ports on the front of the server; these allow a keyboard, mouse, video display, or a KVM (keyboard, video, mouse) switch to be connected.

Figure 2-2 Dell PowerEdge R515 rack server, courtesy of Dell, Inc.

Racks

Full metal racks can be heavy, and server room and data center construction must account for that by including floors that can safely handle the weight. And because you don’t want racks tipping over, balancing and bolting them to the floor is recommended.

Most racks are 19 inches wide (they do come wider), and they often use metal sliding rails that the servers and other types of appliances (such as network firewalls, storage enclosures, and so on) neatly fit into. Racks may not come with rails, so you may have to acquire rail kits, although rack-mounted equipment such as servers usually comes with one. (Be careful not to get pinched when inserting equipment into or removing it from these sliding rails.)

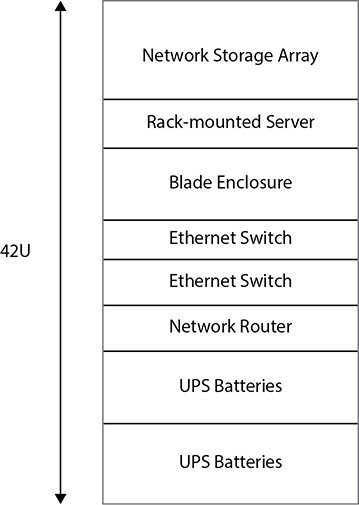

Not all racks are equal, and they are available in different widths and heights. You may use one rack to accommodate servers and another to accommodate storage arrays. Rack-mountable devices use their own unit of measurement for height, called the rack unit (U): 1U is 1 3/4 inches, 2U is 3 1/2 inches, 4U is 7 inches, and so on. This standard measurement corresponds to the vertical spacing of the mounting holes in the rack to which rails and rack-mounted equipment are secured (each U spans three holes). A single rack-mounted device is typically 1U to 7U in height, and most racks have a maximum of 42U (see Figure 2-3). When you’re ordering rack-mountable servers, the specs will detail how many Us the enclosure requires so you can plan placement within the rack.
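The rack-unit arithmetic is simple enough to script when you plan placement. This sketch uses the 1U = 1 3/4 inch figure and the 42U rack size from the text; the function names and the device list are hypothetical examples, not from any vendor tool.

```python
INCHES_PER_U = 1.75  # 1U = 1 3/4 inches


def u_to_inches(units):
    """Convert a height in rack units (U) to inches."""
    return units * INCHES_PER_U


def fits_in_rack(device_heights_u, rack_size_u=42):
    """Return (fits, units_used) for a list of device heights in U."""
    used = sum(device_heights_u)
    return used <= rack_size_u, used


# Hypothetical rack plan: eight 1U servers, four 2U servers,
# a 4U storage shelf, a 7U enclosure, and a 2U UPS.
plan = [1] * 8 + [2] * 4 + [4, 7, 2]
fits, used = fits_in_rack(plan)
print(fits, used, 42 - used)   # True 29 13
print(u_to_inches(4))          # 7.0
```

Real planning also has to leave room for PDUs, patch panels, and blanking panels, so treat the remaining U count as an upper bound.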

Figure 2-3 A 42U server rack can contain various pieces of equipment.

Now, because you can place many servers together in a rack, it just makes sense to place server storage appliances, power sources (including UPSs), and network cables in the rack, too. Too many times I’ve seen messy racks with cables hanging everywhere, both in front of and behind the rack; not only is this a safety hazard, but tracing cables when troubleshooting will be next to impossible!

Power distribution units (PDUs) provide power outlets to racks in server rooms and data centers. To eliminate a single point of failure, redundant PDUs should be plugged into separate circuits. To extend this point, redundant server power supplies should each plug into separate PDUs. Data centers normally have alternate sources or providers of power, such as diesel generators, in the event of a power outage.

Because many different types of items can draw power from PDUs, you should check your PDU’s rating to ensure that your equipment doesn’t draw more power than the PDU’s load capacity can accommodate.
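A quick load check can be scripted as a sketch. The device wattages and the 5,000 W PDU rating below are hypothetical, and the 80 percent threshold is an assumed safety margin; use the figure from your PDU vendor and your local electrical code instead.

```python
def pdu_load_ok(device_watts, pdu_capacity_watts, max_fraction=0.8):
    """Check a rack's planned draw against a PDU's rated capacity.

    max_fraction is an assumed safety margin (80% here); substitute the
    figure from your PDU vendor and local electrical code.
    """
    total = sum(device_watts)
    limit = pdu_capacity_watts * max_fraction
    return total <= limit, total, limit


# Hypothetical draws in watts and a hypothetical 5,000 W PDU.
ok, total, limit = pdu_load_ok([450, 450, 450, 750, 1200], 5000)
print(ok, total, limit)  # True 3300 4000.0
```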

Cable Management Arm

A cable management arm is a metal or plastic folding component that is attached to the back of a rack-mounted device. All cables from the device (power, network, and so on) are fitted into the arm, which serves as a conduit or trench in which the cables are placed. You would also normally use cable zip ties to bundle together cables from a device. (A standard rack-mounted server has dual power supplies and at least two network cards, so that’s four cables right away for a single device.) When you pull out a rack-mounted device (on the sliding rails), the cable arm expands so that you don’t pull the cables out.

After your rack-mounted devices and cabling are in place, use rack fillers (blanking panels) to cover empty spaces in the rack. Blanking panels keep hot exhaust air from recirculating to the front of the rack, and cable management arms keep cables from blocking fan vents; together they improve airflow, which can also save money by reducing energy use in the long run. Rack fillers are also measured using the U system and are available with venting holes.

Blade Servers

Blade servers make me think of Star Trek: a technician inserts a highly sophisticated card into a slot to prevent the destruction of the USS Enterprise. And that’s essentially what the blade server form factor is—a circuit board containing its own processors, memory, and network capabilities, and, in some cases, a small amount of storage, but no power supply or cooling mechanisms. Blade servers cannot run on their own. Most blades have a USB connector on the front in case you want to connect external components such as a DVD drive.

This small server form factor (Figure 2-4) will most likely replace rack-mounted servers at some point. Large data centers can increase their server density using blades, so scalability is not a problem. Like everything in IT, it’s a tradeoff—sure, you can fit more blades than towers in a fixed amount of space, but towers are cheaper and easier to expand if you need expansion cards or additional storage.

Figure 2-4 Dell PowerEdge M520 blade server, courtesy of Dell, Inc.

Blade Enclosure

A blade enclosure is a proprietary chassis that can house several blade servers from the same vendor, and it can measure from 6U to 12U. Blade servers slide into the blade enclosure. The enclosure provides

• Temperature control mechanisms, including fans

• Power

• Network connectivity

• Storage connectivity

• Server remote management connections

Within the enclosure, the backplane connects server and I/O blades. I/O blades (or cards) can provide faster network connectivity, storage for blade servers, management capabilities, and other things. The midplane is a printed circuit board (PCB) with server blades that connect on one side (the front) and other components accessible on the other side (the back). If, for example, 10 Gbps network switching is required, you would have to use a specific midplane with this support.

Note that some manufacturers provide backplane and midplane redundancy to reduce single points of failure. Blanking panels are used where there are empty slots in the enclosure for better cooling and airflow.

Server Components

Whether you are working with tower, rack-mounted, or blade servers, they all have components that give them specific functionality:

• Multiple processors (each with multiple cores)

• Memory (RAM)

• Storage (local and/or network accessible)

• Network connectivity (servers often have multiple cards)

• Management capabilities (for blade systems or hardware-level remote control)

Firmware is essentially software stored in a chip, and it’s used all over the place: a server’s motherboard BIOS, smartphones, and expansion cards, to name a few. Like operating system or application software, firmware comes in different versions with different capabilities and needs to be updated periodically. Updating server motherboard firmware can sometimes change the 128-bit universally unique identifier (UUID) that firmware uses as a global server identifier for asset-tracking purposes.
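To see what “128-bit” means in practice, the sketch below parses a UUID string with Python’s standard uuid module. The sample value is made up for illustration. On many Linux systems the firmware’s system UUID can be read, with root privileges, from /sys/class/dmi/id/product_uuid, which is handy when comparing a server against inventory records before and after a firmware update.

```python
import uuid


def uuid_bits(text):
    """Return the size in bits of a UUID given as a string."""
    value = uuid.UUID(text)          # raises ValueError if malformed
    return len(value.bytes) * 8      # 16 bytes -> 128 bits


# Made-up sample value, written in uppercase as firmware tools often display it.
sample = "3F2504E0-4F89-11D3-9A0C-0305E82C3301"
print(uuid_bits(sample))   # 128
print(uuid.UUID(sample))   # 3f2504e0-4f89-11d3-9a0c-0305e82c3301
```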

Hardware problems can sometimes masquerade as software issues. For instance, flawed firmware code could cause server operating system instability. The solution is sometimes as simple as downloading and applying a firmware update from the manufacturer’s web site.

Even though MD5 is considered obsolete, some manufacturers still supply an MD5 hash value on the download web page; you can recompute the hash after downloading to verify that the file, such as a firmware update, hasn’t been changed. MD5 has been superseded by the Secure Hash Algorithm (SHA) family, so you’re more likely to see SHA hashes (such as SHA-256) than MD5. Also, when updating firmware, you need to be sure you’re applying the correct version of the update. The big guys (Dell, HP, IBM) often offer rollback options if you don’t like the applied firmware update.
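Command-line tools such as sha256sum on Linux or Get-FileHash in PowerShell do this check for you. As a sketch of what they compute, the Python below hashes a file in chunks with the standard hashlib module and compares the result with a published value; the function names are illustrative, and the demo file stands in for a real firmware download.

```python
import hashlib
import os
import tempfile


def file_sha256(path, chunk_size=1024 * 1024):
    """Compute a file's SHA-256 digest, reading it in chunks to limit memory use."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_download(path, published_hex):
    """True if the computed hash matches the vendor's published value."""
    return file_sha256(path) == published_hex.strip().lower()


# Demo with a stand-in "firmware" file; a real check would compare against
# the hash published on the vendor's download page.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"example firmware image")
published = hashlib.sha256(b"example firmware image").hexdigest().upper()
print(verify_download(tmp.name, published))   # True
print(verify_download(tmp.name, "0" * 64))    # False
os.remove(tmp.name)
```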

BIOS

The basic input-output system (BIOS) is firmware built into a circuit board such as a motherboard or a RAID (Redundant Array of Inexpensive Disks) disk controller. BIOS has been around for decades. This is the magic that kicks in the moment you turn on the power for your server or when a card initializes.

When starting up, the server BIOS checks critical hardware components such as power, CPU, RAM, and video to make sure they are in place and functional. If the components are not functional, you’ll get various beep codes or error numbers and messages, provided video is working. This is called the power on self test (POST). Assuming things are working, the BIOS then checks the master boot record (MBR) to hand control over to an installed operating system.

Disks are initialized on a computer as either MBR or GUID Partition Table (GPT), depending on operating system support. There is only one copy of the MBR on an MBR disk, and it sits on the first sector of the disk before the first disk partition. GPT disks store a primary copy of the partition table at the beginning of the disk and a backup copy at the end for additional resiliency.

MBR disks are limited to four primary partitions, whereas GPT disks can have up to 128 partitions on a Windows system. Booting from a GPT disk is possible on a unified extensible firmware interface (UEFI) system; Windows, for example, requires UEFI firmware to boot from a GPT disk.
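The on-disk difference is easy to see in the first two sectors. An MBR ends with the signature bytes 0x55 0xAA at offsets 510 and 511, and its four 16-byte partition entries start at offset 446, with the partition type at byte 4 of each entry. A GPT disk keeps a “protective” MBR containing a type 0xEE entry, and its GPT header on the next sector begins with the signature “EFI PART”. The sketch below assumes 512-byte sectors (disks with 4K native sectors place the header at byte 4096), and make_sectors is a hypothetical test helper; reading a real disk’s sectors requires administrative access.

```python
MBR_SIZE = 512
PARTITION_TABLE_OFFSET = 446   # four 16-byte partition entries start here
ENTRY_SIZE = 16
PROTECTIVE_GPT_TYPE = 0xEE     # partition type used by a GPT disk's protective MBR


def classify_disk(first_two_sectors):
    """Classify raw bytes from LBA 0 and LBA 1 (512-byte sectors) as MBR or GPT."""
    mbr = first_two_sectors[:MBR_SIZE]
    if len(mbr) < MBR_SIZE or mbr[510:512] != b"\x55\xaa":
        return "unknown"
    # Byte 4 of each partition entry holds the partition type.
    types = [mbr[PARTITION_TABLE_OFFSET + i * ENTRY_SIZE + 4] for i in range(4)]
    gpt_header = first_two_sectors[MBR_SIZE:MBR_SIZE + 8]
    if PROTECTIVE_GPT_TYPE in types and gpt_header == b"EFI PART":
        return "GPT"
    return "MBR"


def make_sectors(partition_type, gpt=False):
    """Hypothetical helper: build synthetic LBA 0 and LBA 1 for testing."""
    sector0 = bytearray(512)
    sector0[PARTITION_TABLE_OFFSET + 4] = partition_type
    sector0[510:512] = b"\x55\xaa"
    sector1 = bytearray(512)
    if gpt:
        sector1[0:8] = b"EFI PART"
    return bytes(sector0 + sector1)


print(classify_disk(make_sectors(0x07)))            # MBR
print(classify_disk(make_sectors(0xEE, gpt=True)))  # GPT
```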

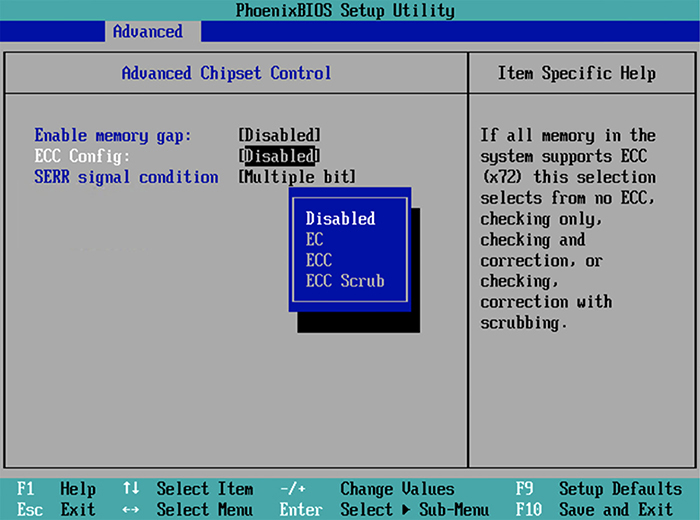

The complementary metal oxide semiconductor (CMOS) chip stores the specific configuration of hardware settings supported by the BIOS. For example, you might change the boot order on your server to boot from USB first (requiring a password, of course!) and then from a local hard disk. Or you might enable or disable the CPU execute disable (XD) bit. With this option enabled, the processor flags specific areas of memory where data can reside and code cannot execute. The BIOS has the capabilities, and the CMOS retains your configuration of those capabilities. Figure 2-5 shows a basic BIOS configuration screen.

Figure 2-5 BIOS screen

But how can you configure CMOS settings? In other words, how can you configure the BIOS? It depends on the BIOS manufacturer. The following list shows a few common BIOS manufacturers:

• ASUS

• Phoenix

• IBM

• Dell

• HP

If you’re using a Dell system, for example, when the system is first powering up, you can press the F2 key on the keyboard to enter the BIOS settings. Some BIOS firmware will briefly display a message telling you what to press. Properly configured servers will require a password before you can view or change these settings.

UEFI

Unified extensible firmware interface (UEFI) is the modern replacement for BIOS. On PCs, UEFI has become common over the last few years, but Apple computers have used UEFI for a long time.

Here’s a list of a few UEFI features that most BIOS firmware does not support:

• Graphical interface with mouse support

• GPT hard-disk support, including booting from GPT

• IPv6 support during boot

• Support for Secure Boot

• Support for new modules for additional functionality

Let’s zoom in to the UEFI Secure Boot feature for a moment. If malware can inject itself into the pre-boot operating system (OS) environment, then even the most secure OSs can be compromised. Think about it: Code is in place before the OS boots and then has direct access to hardware. It sounds nasty, and it is. Secure Boot prevents untrusted executable code from running before the OS initializes. Digital signatures are used to validate trusted code.
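Real Secure Boot verifies digital signatures against certificates stored in the firmware’s allowed-signature database (db) and rejects anything listed in the forbidden database (dbx); both databases can also hold SHA-256 hashes of individual images. The sketch below models only that hash-based path, in simplified form, to show the decision logic. The image_allowed function and the sample “boot loader” bytes are hypothetical, and this is not how firmware is actually implemented.

```python
import hashlib


def image_allowed(image_bytes, allowed_hashes, revoked_hashes):
    """Simplified Secure Boot decision using image hashes only.

    Real firmware also checks signatures against certificates in db/dbx.
    """
    digest = hashlib.sha256(image_bytes).hexdigest()
    if digest in revoked_hashes:   # a dbx entry always wins
        return False
    return digest in allowed_hashes


bootloader = b"trusted boot loader"
tampered = b"trusted boot loader + injected code"
db = {hashlib.sha256(bootloader).hexdigest()}
dbx = set()

print(image_allowed(bootloader, db, dbx))  # True
print(image_allowed(tampered, db, dbx))    # False
```

Because any change to the code changes its hash, injected malware fails the check before it ever gets a chance to run.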

CPUs

The central processing unit (CPU) is the brain of the server and comes on a chip. Of course, to handle more intense workloads, having more brains helps. The first version of a CPU is referred to as step 0. As the manufacturer releases the same CPU with improvements, the step value increases—for example, from 0 to 1. Adding more horsepower to a server is called scaling up, while adding additional servers to handle a workload is called scaling out.

CPU Architecture

Although most modern CPUs have a 64-bit data path, you may also come across 32-bit CPUs. Newer server operating systems, however, such as Microsoft Windows Server 2012 R2, support only 64-bit. The 32-bit machines have a theoretical maximum addressable memory limit of 4GB, while 64-bit machines have a theoretical limit of 16EB (exabytes).
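Both limits come from 2 raised to the number of address bits. Here GB and EB are binary units (2^30 and 2^60 bytes). In practice, CPUs implement fewer physical address lines and operating systems impose their own limits, so real maximums are lower.

```python
def addressable_bytes(address_bits):
    """Theoretical number of distinct byte addresses for a given address width."""
    return 2 ** address_bits


GIB = 2 ** 30  # binary gigabyte
EIB = 2 ** 60  # binary exabyte

print(addressable_bytes(32) // GIB)  # 4  (4GB for 32-bit)
print(addressable_bytes(64) // EIB)  # 16 (16EB for 64-bit)
```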

Modern mobile devices use Advanced RISC Machine (ARM) processors. A few examples include Apple iPod, iPad, and iPhone products; Raspberry Pi devices; and some Microsoft Surface tablets. ARM processors come in both 32-bit and 64-bit flavors (the x86 and x64 labels refer to the Intel- and AMD-compatible processor family, not to ARM).

If you plan on running a hypervisor (such as Microsoft Hyper-V or VMware vSphere Hypervisor), your CPUs must support hardware virtualization. AMD processors must support AMD Virtualization (AMD-V), and Intel processors must support Intel Virtualization Technology (Intel VT).
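On Linux, you can check for these extensions in /proc/cpuinfo, where the flags line lists vmx for Intel VT-x and svm for AMD-V. The sketch below parses that text; the function name is illustrative. Even when the CPU advertises the feature, it may also need to be enabled in the BIOS or UEFI settings before a hypervisor can use it.

```python
def virtualization_support(cpuinfo_text):
    """Return 'Intel VT-x', 'AMD-V', or None based on /proc/cpuinfo flags."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            flags = set(line.split(":", 1)[1].split())
            if "vmx" in flags:
                return "Intel VT-x"
            if "svm" in flags:
                return "AMD-V"
            return None
    return None


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:
            print(virtualization_support(f.read()))
    except FileNotFoundError:
        print("/proc/cpuinfo not found (not a Linux system)")
```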

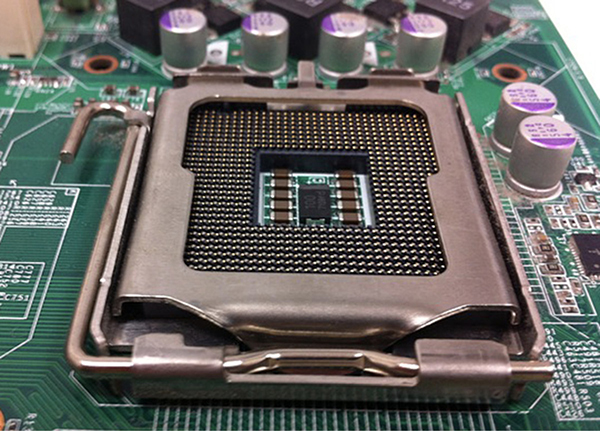

When planning server hardware, you should also consider the CPU speed. All else being equal, a 4 GHz processor gets things done more quickly than a 2 GHz processor. But this speed refers to how fast instructions are processed internally; you have to ask yourself, “How quickly can data get to and from the CPU on the motherboard?” The following illustration shows a CPU in its motherboard socket.

Waiting for the Bus

Buses move data among the various components within a computing device, so every server motherboard includes several buses, such as a PCIe expansion card bus and the main system bus. The bus speed (usually expressed in megahertz, or MHz) is different from the internal CPU speed, and it determines how quickly data gets to and from certain areas of the system.

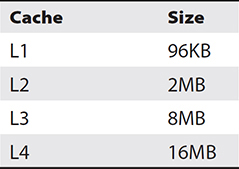

CPU Cache

CPUs have small amounts of high-speed memory, or caches, that hold the instructions and data the CPU is likely to need next; the goal is to increase the hit rate. The hit rate is the percentage of requests for instructions or data that are satisfied from cache (a hit) instead of from a slower location (for example, finding data in L1 cache instead of having to go to L2). L1, L2, and L3 caches are common in today’s server hardware: L1 is the fastest (and smallest), and L4, which is less common, is the slowest (and largest).

The L1, L2, and L3 caches are typically built into the processor, while L4, where present, is usually implemented as DRAM outside the CPU cores (for example, embedded DRAM on the processor package). The reason L1 cache, for example, is faster than normal system memory is that it uses static RAM (SRAM) as opposed to dynamic RAM (DRAM) or synchronous DRAM (SDRAM). SRAM stores each bit in a transistor-based circuit that doesn’t need to be refreshed, whereas DRAM stores bits in capacitors that must be refreshed constantly, so SRAM can be accessed much more quickly. Table 2-1 shows common CPU cache sizes, which will vary between CPUs and motherboards. Bear in mind that L3 and L4 cache memory is typically shared by all the cores on a CPU chip.

Table 2-1 CPU Cache Sizes

The least recently used (LRU) caching algorithm keeps track of the order in which cached items were last used, so that when space is needed, the least recently used items are removed first to make room for new items. Most recently used (MRU) algorithms do the exact opposite: the most recently used items in cache are removed first when space is needed. The specific type of processing requirement determines whether LRU or MRU is appropriate.
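Here’s a minimal Python sketch of both eviction policies, built on collections.OrderedDict. The Cache class is hypothetical (CPU caches implement replacement in hardware, often with approximations of LRU), and it also counts hits and misses so you can compute the hit rate described earlier. The access pattern is a loop slightly larger than the cache, a classic case where MRU beats LRU.

```python
from collections import OrderedDict


class Cache:
    """Fixed-size cache that evicts by LRU or MRU policy and tracks its hit rate."""

    def __init__(self, capacity, policy="LRU"):
        if policy not in ("LRU", "MRU"):
            raise ValueError("policy must be 'LRU' or 'MRU'")
        self.capacity = capacity
        self.policy = policy
        self.items = OrderedDict()  # ordered from least to most recently used
        self.hits = 0
        self.misses = 0

    def access(self, key):
        """Look up key; return True on a hit, False on a miss (then cache it)."""
        if key in self.items:
            self.hits += 1
            self.items.move_to_end(key)  # now the most recently used
            return True
        self.misses += 1
        if len(self.items) >= self.capacity:
            # LRU evicts the oldest entry (front); MRU evicts the newest (back).
            self.items.popitem(last=(self.policy == "MRU"))
        self.items[key] = True
        return False

    def hit_rate(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


def run(policy, sequence, capacity=3):
    cache = Cache(capacity, policy)
    for key in sequence:
        cache.access(key)
    return cache.hit_rate()


scan = list("ABCD") * 3  # a loop slightly larger than the 3-item cache
print(run("LRU", scan))  # 0.0
print(run("MRU", scan))  # 0.5
```

With LRU, every access evicts exactly the item the loop will need next, so nothing ever hits; workloads that reuse recently touched data favor LRU instead.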

A server can have multiple identical physical CPUs working together via symmetric multiprocessing (SMP), but of course the server motherboard must have sockets to accommodate the CPUs. So by distributing work among a group of CPUs, you reduce the amount of time it takes to complete the work. Reduced Instruction Set Computing (RISC) refers to processors that are very efficient in that they are designed to work with simpler and smaller instruction sets, resulting in a smaller chip size than traditional Complex Instruction Set Computing (CISC)–based processors. Today, RISC processors are often found in mobile devices.

On the server side of things, Table 2-2 outlines common CPU sockets and processors. You can’t just plug any CPU into any socket; they have to match!

Table 2-2 CPU Sockets and Processors

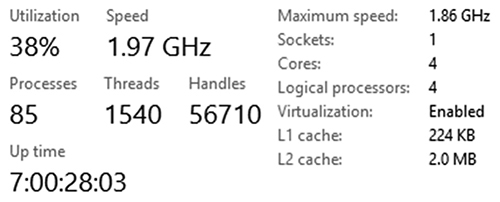

Taking the discussion a step further, each physical CPU chip can have multiple cores. Each core is essentially a separate CPU, and all the cores on a chip plug into a single motherboard socket, which means more computing power while using less space than an SMP system. Figure 2-6 shows a Windows host with a single CPU socket, four cores, and L1 and L2 caches.

Figure 2-6 Multicore CPU and cache listing

GPUs

Modern graphic computing demands can sometimes require hardware dedicated to processing complex visuals related to gaming, 3-D modeling, video rendering, high-resolution image editing, and so on. Graphics processing units (GPUs) are specialized processors designed to handle computationally intensive graphic operations, thereby freeing up the CPU(s) in the server to process other instructions.

GPUs can be embedded within the server motherboard as part of a chipset or installed as expansion video cards, and they can have dedicated video memory to isolate graphic operations from nongraphic operations handled at the CPU level. Specialized high-end desktops, such as those used for video animation, are more likely to contain GPUs than servers are; however, remote desktop access to a server could require the server to be configured with GPUs, depending on the type of work being done. Typical smartphones also use GPUs for modern graphic rendering, but to a lesser degree than specialized GPU cards.

Cloud-computing customers can also benefit from running cloud-based virtual machines or remote desktops (Desktop as a Service, or DaaS) with enhanced GPU capabilities. This normally incurs a greater usage cost than non-GPU–enhanced virtual machines running in the cloud.

Memory

Random access memory (RAM) is the electronic volatile memory used by running programs; it needs electricity to retain data, unlike disk storage devices. Servers can accommodate different amounts and types of RAM, and motherboards can have specific memory module placement rules.

Server motherboards normally require memory modules to be installed in pairs so that each CPU socket’s memory channels are used efficiently. Pairing, along with other memory requirements, can differ from one motherboard to the next, so be sure to read the motherboard documentation.

Memory Timing

Schemes such as double pumping transmit data on both the rising and falling edges of a clock, or timing, signal, which effectively doubles the data transfer rate. You can think of electronic memory as being organized into rows and columns.
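As a worked example, take a DDR4-3200 module on a standard 64-bit memory channel: its I/O bus clock runs at 1600 MHz, and double pumping yields 3200 million transfers per second (MT/s). The sketch below (function name illustrative) computes the theoretical peak in MBps; sustained real-world throughput is lower.

```python
def peak_bandwidth_mbps(io_clock_mhz, bus_width_bits=64, transfers_per_clock=2):
    """Theoretical peak memory bandwidth in MBps (1 MB = 10**6 bytes)."""
    mega_transfers = io_clock_mhz * transfers_per_clock  # double pumping -> x2
    return mega_transfers * bus_width_bits // 8          # bits -> bytes


print(peak_bandwidth_mbps(1600))                         # 25600 (DDR4-3200, PC4-25600)
print(peak_bandwidth_mbps(1600, transfers_per_clock=1))  # 12800 without double pumping
```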

The trick is getting data into or out of memory addresses as quickly as possible. Factors influencing memory speed include

• How long it takes to select a memory column

• How long it takes to select a memory row

• How long it takes to read data when a memory row is selected

ECC

Most server motherboards can accommodate error correcting code (ECC) memory. This type of memory module has the added capability of detecting and fixing memory errors at the bit (0 or 1) level, typically correcting any single-bit error and detecting double-bit errors. It does this by storing extra check bits alongside each group of data bits (commonly 8 check bits for every 64 data bits), computed with an error-correcting code.
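Server ECC modules commonly use a single-error-correcting, double-error-detecting (SECDED) code built on Hamming codes. As a scaled-down illustration (not what a memory controller literally implements), the classic Hamming(7,4) code below protects 4 data bits with 3 check bits. The recomputed check bits form a “syndrome” whose value is the position of a single flipped bit, so the error can be corrected.

```python
def hamming74_encode(d):
    """Encode 4 data bits [d1, d2, d3, d4] into a 7-bit Hamming codeword."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4  # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]  # codeword positions 1..7


def hamming74_decode(codeword):
    """Correct up to one flipped bit; return (data_bits, error_position)."""
    c = list(codeword)  # copy so the caller's list isn't modified
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]  # recheck positions 1, 3, 5, 7
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]  # recheck positions 2, 3, 6, 7
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]  # recheck positions 4, 5, 6, 7
    error_pos = s1 + 2 * s2 + 4 * s4  # 0 means no error detected
    if error_pos:
        c[error_pos - 1] ^= 1  # flip the bad bit back
    return [c[2], c[4], c[5], c[6]], error_pos


data = [1, 0, 1, 1]
word = hamming74_encode(data)
word[5] ^= 1                   # simulate a single-bit memory error at position 6
print(hamming74_decode(word))  # ([1, 0, 1, 1], 6)
```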

Non-ECC modules can’t correct memory errors, and most can’t even detect them. You’ll find non-ECC memory more often in consumer-grade computing equipment than in servers. Non-ECC memory is cheaper than ECC memory. Your motherboard documentation will specify what type of memory module can be used and how to enable ECC. The following illustration shows how ECC can be enabled in the BIOS.

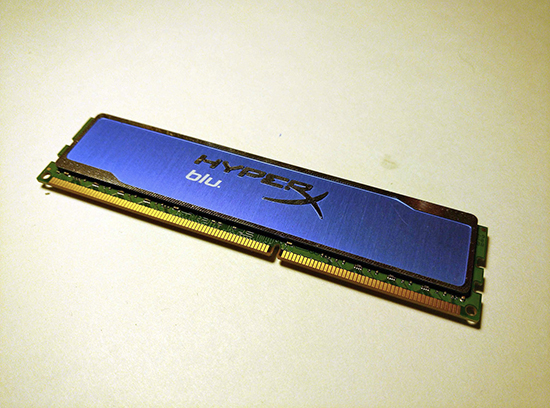

DDR

Double data rate (DDR) memory increases data transfer rates to and from memory, compared to single data rate (SDR) memory; it’s all based on timing. As any musician will tell you, timing is crucial, and the same is true in the world of computing. There are different DDR standards, or versions, and they are not compatible with one another. This means DDR3 memory slots on a motherboard will accept only DDR3 memory modules.

DDR2 supersedes DDR1, DDR3 supersedes DDR2, and so on. So if you can choose between server motherboards that support different DDR generations, such as DDR3 or DDR4, choose DDR4 for the best performance. DDR3 memory is shown in Figure 2-7. The column address strobe (CAS) latency, measured in clock cycles, defines how long it takes for requested memory contents to leave the actual memory chip on their way to the bus. Table 2-3 shows DDR memory details.
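Because CAS latency is counted in clock cycles, a bigger number isn’t automatically slower. Converting to nanoseconds shows why: the DDR clock runs at half the data rate, and each cycle lasts 1000 divided by the clock in MHz nanoseconds. The two modules below use standard JEDEC speed bins; the function name is illustrative.

```python
def cas_latency_ns(cl_cycles, data_rate_mts):
    """Convert CAS latency from clock cycles to nanoseconds."""
    clock_mhz = data_rate_mts / 2          # DDR moves two transfers per clock
    return cl_cycles * 1000 / clock_mhz    # cycles x nanoseconds per cycle


print(cas_latency_ns(11, 1600))  # 13.75 (DDR3-1600 CL11)
print(cas_latency_ns(22, 3200))  # 13.75 (DDR4-3200 CL22)
```

Both modules wait about the same time for the first data to arrive, but the DDR4 module then moves data twice as fast.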

Figure 2-7 DDR3 240-pin memory module

Table 2-3 DDR Memory Details

Buses

Motherboards and expansion cards need a way to move data around, and this is where the bus comes in. Think of computer buses like you think of real buses, which move people around using roads; a computer moves data bits around the system using buses.

There are many different types of buses, and some motherboards accommodate multiple buses. The buses we discuss here are for expansion cards plugged into expansion slots and include the PCI, PCI-X, and PCIe standards (discussed in the following sections). Using additional buses not supported by the motherboard is possible by using host bus adapter (HBA) cards, such as those used for storage area network (SAN) connectivity. Slimmer server form factors (such as rack-mounted servers) benefit from riser circuit boards that plug into the motherboard and enable expansion cards that otherwise wouldn’t fit to be plugged in.

PCI

Peripheral Component Interconnect (PCI) is a 32- and 64-bit bus and expansion slot card standard, but you won’t find it on many of today’s server motherboards. Back in the 1990s, PCI was a big deal, since it really was plug-and-play (PnP). This meant you didn’t have to fiddle around with jumpers and DIP switches to configure the card. As long as the BIOS and operating system supported PnP, all was good.

Interestingly, 64-bit PCI cards could be plugged into 32-bit PCI expansion slots and would still function, although at a slower rate. Another consideration was the card height, and this is an issue affecting more than just PCI cards. Full-height PCI cards take more space than the related low-profile cards, and depending on the server case and motherboard form factors, a full-height card may not fit properly. PCI is rated between 133 and 533 MBps, depending on the bus width (32- or 64-bit) and the clock frequency used (33 or 66 MHz).

PCI-X

PCI Extended (PCI-X) supersedes PCI by improving transfer rates to and from slots, with later versions reaching roughly 2 to 4 GBps. This 64-bit parallel transmission standard runs at various frequencies (often the case with expansion slots and cards), which influences the data transmission speed.
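For parallel buses such as PCI and PCI-X, peak bandwidth is simply the bus width in bytes times the number of transfers per second. The sketch below applies that to common PCI and PCI-X variants. The clock rates are the nominal 33.33/66.66/133.33 MHz values (PCI-X 2.0 transfers two or four times per 133 MHz clock), figures are truncated to whole MBps, and the table labels are descriptive names rather than official ones.

```python
def parallel_bus_mbps(width_bits, mega_transfers_per_s):
    """Peak MBps of a parallel bus: bytes per transfer x transfers per second."""
    return int(width_bits / 8 * mega_transfers_per_s)


buses = {
    "PCI 32-bit/33 MHz": (32, 100 / 3),
    "PCI 64-bit/66 MHz": (64, 200 / 3),
    "PCI-X 100": (64, 100),
    "PCI-X 133": (64, 400 / 3),
    "PCI-X 266 (2.0)": (64, 800 / 3),
    "PCI-X 533 (2.0)": (64, 1600 / 3),
}

for name, (width, rate) in buses.items():
    print(f"{name}: {parallel_bus_mbps(width, rate)} MBps")
```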

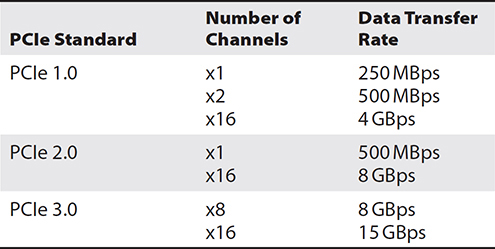

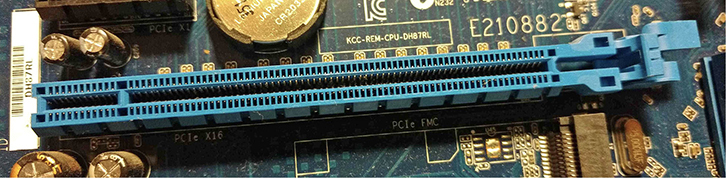

PCIe

The serial transmission-based PCI Express standard supersedes both PCI and PCI-X, with more bandwidth, which is especially useful with video, storage, and network cards. The bandwidth will vary depending upon the specific version of PCIe and how many lanes (channels of communication) are used. Table 2-4 shows examples of PCIe speeds in megabytes per second (MBps) as well as gigabytes per second (GBps). (The number of PCIe lanes is denoted using an x symbol, so a single-channel configuration is listed as x1, a two-channel configuration is x2, and so on.)

Table 2-4 PCI Express Speed Examples

If your PCIe slots can accommodate x16, like the example shown in Figure 2-8, then of course you should opt for that type of card if you have the option, since it has the highest transmission rate. Direct memory access (DMA) is supported by some devices and enables access to system memory without involving the CPU.

Figure 2-8 A PCIe x16 motherboard slot

Like PCI cards, PCIe cards come in full-height and low-profile versions, and most modern servers support PCIe cards. In some cases, the PCIe card may be hot-pluggable. The great thing about PCIe is that you can, for example, plug an x8 card into an x16 slot; the link then runs with eight lanes.
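PCIe bandwidth scales with the lane count. The sketch below computes approximate per-direction figures from each generation’s transfer rate and line encoding (8b/10b for PCIe 1.x and 2.x, 128b/130b for 3.0 and later), ignoring packet overhead, so the numbers may differ slightly from rounded values in Table 2-4. The link trains to the narrower of the card and slot widths; a link also falls back to the older generation of the two devices, which this sketch leaves to the caller by taking a single generation.

```python
# generation: (transfer rate in MT/s, payload bits, encoded bits per block)
PCIE_GENERATIONS = {
    1: (2500, 8, 10),      # 8b/10b encoding
    2: (5000, 8, 10),
    3: (8000, 128, 130),   # 128b/130b encoding
    4: (16000, 128, 130),
    5: (32000, 128, 130),
}


def link_mbps(generation, card_lanes, slot_lanes):
    """Negotiated lanes and approximate MBps per direction for a PCIe link.

    The link uses the narrower of the card and slot widths, so an
    x8 card in an x16 slot runs as x8.
    """
    rate, payload, encoded = PCIE_GENERATIONS[generation]
    lanes = min(card_lanes, slot_lanes)
    # MT/s per lane x lanes x encoding efficiency, then bits -> bytes.
    return lanes, round(rate * lanes * payload / encoded / 8, 1)


print(link_mbps(1, 1, 1))    # (1, 250.0)
print(link_mbps(3, 16, 16))  # (16, 15753.8)
print(link_mbps(3, 8, 16))   # (8, 7876.9)
```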

NICs

Most physical servers have at least two integrated network interface cards (NICs), but it’s pretty easy to find servers with four or more interfaces in some cases. Virtual servers can have as many virtual NICs (vNICs) as required for the server role. For instance, a virtual private network (VPN) server should have at least two interfaces.

If you’re adding NICs to a physical server, you need to know which types of unused expansion slots your motherboard has (PCI, PCI-X, or PCIe). The network transmission speed is a big deal; your network infrastructure might support 10 Gbps, so you want to get the fastest NIC possible that will work in your environment.

Each NIC needs to be configured with the appropriate IPv4 or IPv6 settings (which will be discussed in detail in Chapter 5). Even though the NIC is specific to the server, you should consider what it is plugging into. For example, a multihomed server (which has more than one NIC) may have each NIC plugged into different network switches or a specific switch virtual LAN (VLAN) port. Then there’s NIC teaming—grouping NICs together for load balancing or better throughput; this applies both to physical and virtual machine NICs. Virtual machine NICs can be tied to specific underlying physical NICs.
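One common way a team spreads traffic is to hash each network flow onto a team member, keeping a flow’s packets on one NIC (to avoid reordering) while spreading different flows across the team. The sketch below is a simplified, hypothetical illustration of that idea using zlib.crc32. Real teaming implementations, such as Windows NIC Teaming or Linux bonding, offer several modes and hash policies of their own, and this is not any vendor’s algorithm.

```python
import zlib


def pick_team_member(flow, members):
    """Map a flow (src IP, dst IP, src port, dst port, protocol) to one NIC.

    The same flow always hashes to the same NIC; different flows spread out.
    """
    key = "|".join(str(part) for part in flow).encode()
    return members[zlib.crc32(key) % len(members)]


def failover(members, failed):
    """Remove a failed NIC so flows hash across the surviving members."""
    return [m for m in members if m != failed]


team = ["NIC1", "NIC2"]
flow = ("10.0.0.5", "10.0.0.20", 49152, 443, "tcp")
print(pick_team_member(flow, team) in team)            # True
print(pick_team_member(flow, failover(team, "NIC1")))  # NIC2
```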

Storage

Storage is sometimes embedded on a server motherboard, such as with some blade servers. Other times, servers have local disks available, and yet another possibility is a server reaching out over a network to use disk space. Storage attached to a local server is known as direct-attached storage (DAS), whereas network storage can come in the form of network-attached storage (NAS) or storage area networks (SANs). Chapter 4 covers storage in greater detail.

Virtual machines running server operating systems have a variety of options, including these:

• Using a physical disk partition available from the underlying hypervisor

• Using storage accessible over a SAN through the underlying hypervisor

• Using a virtual hard disk (VHD) file

VHD files may be stored on a local disk on the underlying physical server or on a network storage location. For physical servers, you may also have to add an expansion card that connects the server to additional storage.

RAID Controllers

RAID groups physical disks together as a logical unit to increase disk performance and/or to provide disk-level fault tolerance. You can set this up at the software level (within the server OS) or at the hardware level using a RAID controller. RAID levels will be discussed in Chapter 4.

Most server motherboards include an integrated RAID controller that connects to multiple physical disks. RAID controllers have their own firmware configuration utility for setting up the specifics. Disk arrays normally have hot spares: disk drives that are plugged in and ready to take over if a disk currently active in the array fails. When this happens, you still need to remove the failed disk and replace it with a functional one; doing so while the server keeps running is called hot-swapping.

Disk array controllers use battery-backed write caching for a number of reasons. It can prove useful when you reconfigure or migrate disk stripe settings and data, but a common use is protecting data when a disk array stops and restarts. Data that has not yet been written to disk is held in the battery-powered cache, so it is not lost during a power cycle, and upon restart it gets written to disk.

Consider these items when working with hardware RAID:

• Don’t worry about whether your server OS supports hardware RAID; this is a nonissue. The hardware will present what appears to be a single disk to the server OS.

• Ensure that you have access to two or more disks, either locally or over a storage network. This could involve asking your storage administrator to “carve out” a logical unit number (LUN), a number identifying a chunk of disk space, for you.

• Decide which level of RAID makes sense for your situation. Are you concerned with fault tolerance, improved performance through disk striping, or both? Check out Chapter 4 for details on RAID levels.

• Keep performing server backups! Some RAID levels duplicate data, but this doesn’t mean you shouldn’t continue server backup procedures.

USB

The fastest transmission technologies are serial, not parallel, and that includes universal serial bus, or USB. USB creators weren’t kidding when they selected the word “universal”—you can plug just about anything into a USB port:

• Keyboard

• Storage device (see Figure 2-9)

Figure 2-9 An external USB solid-state drive

• Smartphone

• Tablet

• USB hub

• Coffee mug warmer

• Lamp

• Cute mechanical puppy

Servers commonly use USB for keyboards and mice, which are referred to as human interface devices (HIDs); server storage, by contrast, is usually connected to a RAID controller. Several USB versions have emerged over the years, from USB 1.x all the way up to 4.x. You’ll find these details in Table 2-5.

Table 2-5 USB Standards and Transfer Rates

USB 3.0 is backward-compatible, so you can plug a USB 3.0 device into an older, non-USB 3.0 port; the device simply runs at the slower port’s speed. USB hubs enable you to plug in more devices than your machine could otherwise accommodate, but watch out for the power draw of USB devices that don’t have their own external power source. Also make sure you use the appropriate USB cable: one cable may only be able to charge a smartphone, while a similar-looking cable may support both charging and data transfer.
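To put the nominal USB rates listed in this chapter’s review into perspective, the following Python sketch computes best-case transfer times. It assumes the full signaling rate is available, which real devices never achieve because of protocol and encoding overhead, so treat the results as lower bounds on transfer time. The 5 GB file size is a hypothetical example.

```python
# Nominal signaling rates (bits per second) from this chapter's review.
NOMINAL_RATE_BPS = {
    "USB 1": 12e6,
    "USB 2": 480e6,
    "USB 3": 5e9,
    "USB 4": 40e9,
}


def best_case_seconds(size_bytes: float, standard: str) -> float:
    """Time to move size_bytes at the nominal rate (8 bits per byte)."""
    return size_bytes * 8 / NOMINAL_RATE_BPS[standard]


if __name__ == "__main__":
    image_bytes = 5e9  # hypothetical 5 GB installer image
    for std in NOMINAL_RATE_BPS:
        print(f"{std}: {best_case_seconds(image_bytes, std):,.1f} s")
```

Because rates are quoted in bits and file sizes in bytes, the multiplication by 8 is the step most often forgotten in quick estimates.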

Power

From the smallest server in the enthusiast’s basement to thousands of servers in a data center, power is essential. Server virtualization reduces power consumption by consolidating multiple physical servers into virtual machines, but virtual machines must run on physical server hardware, and that hardware needs power.

Power connections (plugs and sockets) come in a variety of standards. The standard two-prong (or three-prong with ground) plug used in North America, the National Electrical Manufacturers Association (NEMA) connector, is shown in Figure 2-10. Edison plugs are more rounded than NEMA plugs, but like NEMA plugs, Edison plugs have three prongs. Industrial-grade equipment uses a twist-lock mechanism to prevent accidental disconnection from the socket.

Figure 2-10 NEMA three-prong socket and plug

Voltage

When two points in a circuit have differing potential electrical charges, they are said to differ in voltage. This difference encourages the flow of electrons, so, with a higher voltage (difference in charge), we have a stronger flow of electrons being forced through a circuit. This is a good thing when we want to power electrical components in a controlled manner, but it’s bad when we (humans) come into contact with components.

Different parts of the world provide electricity at different voltages using alternating current (AC), as opposed to the direct current (DC) provided by batteries. In North America, the standard is 110 to 120 volts (V), but in most of Europe, 220 to 240V is the norm. Some equipment (notably telecommunications equipment) uses a reduced voltage (–48V) for the proper functioning of integrated circuits (ICs).

CPUs need a lower voltage than the +5V or +12V that power supplies commonly feed to components, so the voltage regulator module (VRM) ensures that the CPU gets only the voltage it needs. The VRM is normally built into the server motherboard.

Industrial environments using specialized equipment such as robotic machinery, motors, hydraulics, and the like will have different power requirements, such as 208, 440, 460, or even 480V. Transformers are used to supply the correct voltage. Most power companies around the world deliver 1-phase and 3-phase power. One-phase (1-phase) power is designed for moderate power requirements, and it’s what you’ll find being used in most households. Industrial environments use 3-phase power.

Electrostatic Discharge

In a perfect world, our bodies (and clothing, jewelry, and so on) would have exactly the same charge as the electrical components we touch, which would prevent any flow of electrons. In reality, charges differ, and the resulting discharge is a concern both for personal safety and for preventing damage to equipment. The good news is that there are preventative measures we can take! Electrostatic discharge (ESD) can be controlled in a number of ways:

• ESD strap (bracelet): Connect the alligator clip to the computer chassis.

• ESD mat: Stand on this while servicing hardware.

• ESD footwear: Seriously, this exists; the footwear contains resistors.

• Touch the computer chassis: Do this as often as possible to dissipate any built-up static charge.

The ideal way to minimize ESD would be to ensure that everything is at the same voltage as earth ground. What’s more important is that you, the chassis, and the components are at the same electrical potential.

Our bodies and clothing can build up a static charge, especially in a low-humidity environment. (We’ll talk about environmental controls later in this chapter.) Sensitive electronic components that are not in use should be stored entirely inside ESD bags (those shiny, metal-looking bags). So take care when working with electronic components, including customer replaceable units (CRUs) provided under warranty by a vendor. Certified technicians, such as those from a reputable vendor, follow proper procedures when working with field replaceable units (FRUs), such as wearing an ESD wrist strap connected to an unpainted part of a computer chassis or using some other method of grounding.

Wattage

Server components draw power from the power supply unit (PSU), and most server-class hardware uses redundant PSUs in case one fails. It’s important to calculate the total power required by all internal components and to make sure the PSU form factor matches the server chassis form factor.

The more CPUs and RAM in a system, the greater the power draw. Likewise, the more disks you have connected to a RAID controller, the more power is drawn. Bear in mind that solid-state drives (SSDs) draw less power than traditional spinning hard disks. Many servers these days use network storage, in which case the disks’ power consumption applies to the storage enclosure itself and not to the server.

PSUs have total wattage ratings, such as 500W or 1400W. Some equipment specifies power draw in volt-amperes (VA) instead of watts. When you’re planning server capacity, be sure to consider all components and their power requirements.
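A rough power budget can be sketched in Python. The component wattages are hypothetical placeholders (take real figures from vendor specifications), and the 20 percent headroom is an assumed safety margin, not a standard. Because redundant PSUs exist so that one can carry the server if the other fails, the check compares the estimated draw against a single PSU’s rating.

```python
def total_draw_watts(components: dict[str, tuple[float, int]]) -> float:
    """Sum the draw for each component type: (watts per unit, quantity)."""
    return sum(watts * qty for watts, qty in components.values())


def psu_is_sufficient(draw_w: float, psu_rating_w: float,
                      headroom: float = 0.2) -> bool:
    """With redundant PSUs, one PSU should carry the whole load if its partner
    fails, so compare the draw plus headroom against one PSU's rating."""
    return draw_w * (1 + headroom) <= psu_rating_w


if __name__ == "__main__":
    # Hypothetical component figures for illustration only.
    server = {
        "CPU": (150, 2),
        "DIMM": (5, 16),
        "SSD": (7, 4),
        "HDD": (10, 2),
        "NIC": (15, 2),
        "fans and board": (100, 1),
    }
    draw = total_draw_watts(server)
    print(f"Estimated draw: {draw:.0f} W")
    print("500W PSU OK?", psu_is_sufficient(draw, 500))
    print("1400W PSU OK?", psu_is_sufficient(draw, 1400))
```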

Uninterruptible Power Supply

Uninterruptible power supply (UPS) batteries are constantly charged from the standard electricity power grid so that, in the event of a power outage, servers plugged into the UPS can at least shut down gracefully. Devices should be plugged into UPSs for protection against power outages. Standby UPS systems quickly switch to battery power when the main power source (the power grid) fails. Online UPS systems always run devices from their batteries, which are constantly recharged while the main power source is available, and they ensure a consistent, regulated flow of electricity to devices. Online UPS systems cost more than standby systems, but they are appropriate for minimizing disruptions to mission-critical IT services.

UPS devices are normally rack-mounted in server rooms or data centers, and you should make sure the floor can handle their weight. But how can you make sure the UPS batteries can supply enough power to devices? UPS power ratings are usually given in kilowatts (kW), or thousands of watts, although sometimes the rating is in volt-amperes (VA). Total the power draw of all devices connected to a UPS to ensure that there’s enough power to go around.

The UPS runtime capacity specifies how long UPS batteries can support a specific wattage load when the power goes out. For example, a UPS with a certain number of batteries might supply 900W of power for 9 minutes, whereas with a draw of only 300W, it might supply power for 50 minutes. You can also acquire UPS systems designed for entire data center or industrial use.
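The two runtime figures above also show that runtime does not scale linearly with load: 900W for 9 minutes is 135 watt-hours, whereas 300W for 50 minutes is 250 watt-hours, because batteries deliver less usable energy when they are drained quickly. Vendors therefore publish runtime charts. The Python sketch below estimates runtime for an intermediate load by straight-line interpolation between chart points; the two-point chart is hypothetical, built from the example figures, and a real vendor chart has many more points.

```python
from bisect import bisect_left

# Hypothetical runtime chart from the example figures: (load in watts, minutes).
# Points must be sorted by load.
RUNTIME_CHART = [(300.0, 50.0), (900.0, 9.0)]


def energy_wh(load_w: float, minutes: float) -> float:
    """Energy delivered at a given load for a given runtime, in watt-hours."""
    return load_w * minutes / 60


def estimate_runtime_minutes(load_w: float,
                             chart: list[tuple[float, float]] = RUNTIME_CHART) -> float:
    """Linearly interpolate runtime between the two nearest chart points."""
    loads = [w for w, _ in chart]
    if not loads[0] <= load_w <= loads[-1]:
        raise ValueError("load is outside the chart; consult the vendor's data")
    i = bisect_left(loads, load_w)
    if loads[i] == load_w:
        return chart[i][1]  # exact chart point
    (w0, t0), (w1, t1) = chart[i - 1], chart[i]
    return t0 + (t1 - t0) * (load_w - w0) / (w1 - w0)


if __name__ == "__main__":
    print(energy_wh(900, 9), energy_wh(300, 50))  # 135.0 250.0
    print(estimate_runtime_minutes(600))  # 29.5
```

Straight-line interpolation is only an approximation. Runtime curves usually bend rather than fall in a straight line, so an estimate between widely spaced points may be optimistic; prefer the vendor’s full chart.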

Calculating UPS Power Draw

Calculating the power drawn by IT equipment in server rooms and data centers is important in ensuring adequate power, including backup power provided by UPS systems. Consider the amount of power needed for an entire rack of equipment or multiple racks such as in a data center. To do this you will need to consider the following:

• Number of servers in the rack

• Power draw per server

• Power draw per storage array (storage controllers in the enterprise often take the form of a rack-mounted server)

• How busy each server is (a server running on average at only 30 percent capacity draws less power than one running at 90 percent)

Next, perform the calculation:

1. Determine the standard power draw for each device by referring to the product documentation or the tags on PSUs, which may express it in amps and volts, or in watts. In the case of amps and volts, multiply the amps by the volts for each device. In the case of watts, divide the value by the server’s power factor ratio, which is commonly 0.9; for example, 2700W / 0.9 = 3000VA. Volt-amps (VA) are a common unit of measurement when discussing UPS systems. One kilovolt-amp (kVA) is 1000 volt-amps.

2. Add up the VA (or kVA) values for all equipment to be serviced by the UPS and multiply the total by a factor larger than 1 (such as 1.3) to account for future growth and for power draw fluctuations based on running workloads.

3. Ensure that the UPS system’s watt or VA rating is larger than your calculated power draw, such as the draw for a single rack of equipment.
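The three steps above translate into a short Python sketch. The 0.9 power factor and 1.3 growth multiplier are the example values from the steps; substitute the figures for your own equipment. The device list (two 2700W servers and a switch rated at 120V and 2A) is hypothetical.

```python
from typing import Optional

POWER_FACTOR = 0.9   # example power factor from step 1
GROWTH_FACTOR = 1.3  # example growth multiplier from step 2


def device_va(watts: Optional[float] = None, volts: Optional[float] = None,
              amps: Optional[float] = None,
              power_factor: float = POWER_FACTOR) -> float:
    """Step 1: convert a device's rating to volt-amps (VA)."""
    if volts is not None and amps is not None:
        return volts * amps          # amps x volts = VA
    if watts is not None:
        return watts / power_factor  # watts / power factor = VA
    raise ValueError("give either watts, or both volts and amps")


def required_kva(devices: list[dict], growth: float = GROWTH_FACTOR) -> float:
    """Step 2: total the VA for all devices, apply growth, convert to kVA."""
    total_va = sum(device_va(**d) for d in devices)
    return total_va * growth / 1000


def ups_is_adequate(ups_kva: float, devices: list[dict]) -> bool:
    """Step 3: the UPS rating must exceed the calculated draw."""
    return ups_kva > required_kva(devices)


if __name__ == "__main__":
    rack = [{"watts": 2700}, {"watts": 2700}, {"volts": 120, "amps": 2}]
    print(f"Required: {required_kva(rack):.3f} kVA")  # Required: 8.112 kVA
    print(ups_is_adequate(10, rack), ups_is_adequate(8, rack))  # True False
```

Here the two servers contribute 3000VA each and the switch 240VA, so 6240VA multiplied by 1.3 gives roughly 8.1 kVA; an 8 kVA UPS falls just short, while a 10 kVA unit leaves room.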

UPS Monitoring and Management

Conduct UPS battery testing periodically to ensure that the batteries will provide power when needed. Many UPS devices have built-in options where you can schedule the battery test interval on a recurrent basis. There are also battery testers you can use that work even when the batteries are in use, but some of these can be expensive. When maintenance of the UPS or its batteries is required, you can use bypass devices to ensure that the UPS power load is not interrupted.

Once servers are up and running, you, as a Server+ technician, may not be physically near them for day-to-day management, and the same goes for UPS devices. Here are a few UPS remote management considerations:

• The UPS can be connected to the server via a USB cable.

• The UPS can be plugged into the network.

• Remote monitoring and management is possible using protocols such as Simple Network Management Protocol (SNMP).

• Most vendors offer a mobile device app for UPS monitoring.

Environmental Controls

Too much heat is bad for electrical equipment and shortens its life, and not enough humidity increases the likelihood of ESD. HVAC (heating, ventilation, air conditioning) is a big deal for server rooms and data centers.

Temperature

Some CPUs will throttle their speed if their temperature gets too high, running much slower than they normally do (faster CPU speeds mean more heat). Heat sinks with fins or pins are attached to the chip; their added surface area helps to dissipate heat quickly. And it’s not just CPUs you should be concerned with; heat affects all electronic equipment. Generally speaking, server rooms and data centers should fall somewhere in the range of 65° to 80° Fahrenheit (18° to 27° C).

Sensors can detect temperature and humidity levels to control room conditions, but internal server temperature is more directly controlled with fans or liquid cooling systems, in which pipes circulate liquid coolant through the system to carry heat away from components. Computer case fans are designed to draw cooler room air over internal components and then expel the warmed air back out to the room.

Airflow

Larger server rooms and data centers have racks of heat-generating equipment. Cool air needs to be fed into the rooms for cooling, and the expelled warmer air needs to be kept separate—but how?

Hot and Cold Air Separation

Separating hot and cold air is achieved using hot and cold aisles. Racks of equipment are arranged in rows, or aisles, for optimal airflow. Baffles are used to control the flow of cooler air into rack-mounted equipment intake fans and to keep the resulting warmer air separate. So, stated simply, cool air should be channeled using baffles and fed to the front of equipment (assuming intake fans are on the front), and baffles or shrouds should channel the resulting warm air from the back away from the cooler air.

Server room racks may not be fully populated with devices, and remember that vented rack blanking panels can be used to control airflow. The added benefit of properly designed airflow systems is that they are less wasteful of energy and thus save money.

Humidity

Controlling the amount of moisture in the air is crucial for the proper functioning of equipment and to prevent ESD. Too little humidity increases the likelihood of ESD; too much humidity results in water condensation on equipment.

Temperature and humidity sensors are a must in server rooms and data centers. More sophisticated HVAC systems will provide a way to configure threshold and alert notifications when values are unacceptable, or they can automatically make adjustments. As a rule of thumb, relative humidity levels should fall somewhere within 40 to 60 percent. The longer temperature and humidity levels are out of range, the more likely equipment will fail.
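Threshold alerting of the kind described above can be sketched in Python. The ranges are this chapter’s rules of thumb (65° to 80° Fahrenheit, 40 to 60 percent relative humidity); the sensor names and readings are hypothetical, and a real HVAC or monitoring system would supply readings and deliver notifications through its own interfaces.

```python
from dataclasses import dataclass

TEMP_RANGE_F = (65.0, 80.0)        # rule-of-thumb room temperature range
HUMIDITY_RANGE_PCT = (40.0, 60.0)  # rule-of-thumb relative humidity range


def fahrenheit_to_celsius(temp_f: float) -> float:
    return (temp_f - 32) * 5 / 9


@dataclass
class Reading:
    sensor: str
    temp_f: float
    humidity_pct: float


def check(reading: Reading) -> list[str]:
    """Return alert messages for any value outside its range."""
    alerts = []
    low, high = TEMP_RANGE_F
    if not low <= reading.temp_f <= high:
        alerts.append(f"{reading.sensor}: {reading.temp_f:.0f} F "
                      f"({fahrenheit_to_celsius(reading.temp_f):.1f} C) is out of range")
    low, high = HUMIDITY_RANGE_PCT
    if reading.humidity_pct < low:
        alerts.append(f"{reading.sensor}: humidity {reading.humidity_pct:.0f}% "
                      "is too low (ESD risk)")
    elif reading.humidity_pct > high:
        alerts.append(f"{reading.sensor}: humidity {reading.humidity_pct:.0f}% "
                      "is too high (condensation risk)")
    return alerts


if __name__ == "__main__":
    for r in [Reading("rack-a", 72, 45), Reading("rack-b", 85, 30)]:
        print(r.sensor, check(r) or "OK")
```

Tying the low-humidity message to ESD and the high-humidity message to condensation mirrors the two failure modes described in this section.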

Fire Suppression

The problem with using baffles and shrouds to funnel airflow is that they are bulky, and in the event of a server room or data center fire, they can impede fire suppression. Of course, the safe evacuation of personnel always takes priority over replaceable equipment. Periodic fire drills are a must!

Smoke detectors can alert technicians to impending fire issues, but the detectors used in data centers are much more sensitive than those you would use in your home. Clean-agent fire suppression systems remove heat to extinguish fires, whereas inert-gas solutions remove oxygen from the fire. Halon systems were used in the past but are no longer the norm because of their negative environmental effects.

Water sprinkler systems should be avoided, unless they are a secondary system when primary fire suppression systems fail. Water will certainly damage electronic equipment, and it can increase the possibility of electrocution (water conducts electricity). As if that isn’t scary enough, pooling and running water can spread fires throughout a facility. There are variations of water “misting” solutions that can help with these problems.

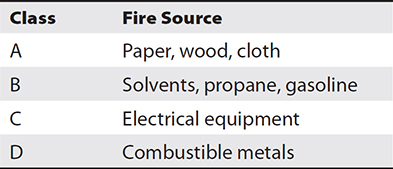

Fire suppression systems, including fire extinguishers (Figure 2-11), can help minimize equipment damage, including smoke damage, but no system is perfect.

Figure 2-11 A Class C fire extinguisher is capable of putting out electrical fires.

Table 2-6 lists common fire extinguisher classes and when they should be used. As with any crucial system, periodic testing, monitoring, and drills can make all the difference when a fire incident actually occurs.

Table 2-6 Classes of Fire Extinguishers

Hands-on Exercises

Exercise 2-1: Calculate Rack Space

A server room rack measures 42U. Currently the rack contains:

• Three rows of 7U UPS batteries

• A 10U blade enclosure

How many additional 7U UPS batteries can be mounted in the rack?

The Answer

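The three rows of 7U UPS batteries use 21U (3 × 7U), and the blade enclosure uses 10U, so 31U are currently in use. Since the server room rack totals 42U, that leaves us with 11U (42–31). Therefore, only one more row of 7U UPS batteries can be mounted in the rack; a second row would require 14U.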
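The same arithmetic can be expressed as a small Python function, which is handy when checking several racks. It assumes the free space can hold the new devices wherever it falls (in practice you also need contiguous open positions) and ignores other constraints such as weight and airflow; the function name is hypothetical.

```python
def additional_devices(rack_u: int, installed: list[tuple[int, int]],
                       new_device_u: int) -> int:
    """Return how many new devices of new_device_u height fit in the rack.

    installed lists (height in U, quantity) for equipment already mounted.
    """
    used_u = sum(height * qty for height, qty in installed)
    free_u = rack_u - used_u
    if free_u < 0:
        raise ValueError("installed equipment exceeds the rack's capacity")
    return free_u // new_device_u  # whole devices only


if __name__ == "__main__":
    # Exercise 2-1: 42U rack, three 7U UPS battery rows, one 10U blade enclosure.
    print(additional_devices(42, [(7, 3), (10, 1)], 7))  # 1
```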

Exercise 2-2: Create a Microsoft Windows Server 2019 Virtual Machine Configuration

Make sure the lab setup instructions in Appendix A have been completed before attempting this exercise. This exercise does not actually install the Windows Server operating system; it just gets the virtual hardware configured for use in a later exercise.

1. From the Windows Start menu, choose VMware Workstation Pro. In VMware Workstation, choose File | New Virtual Machine.

2. Choose Typical (Recommended) and click Next.

3. Choose I Will Install The Operating System Later, and then click Next.

4. From the Guest Operating System list, ensure that Microsoft Windows is selected, and from the Version drop-down list, ensure that Windows Server 2019 is selected. Click Next.

5. Name the virtual machine Srv2019-1 and specify a location that has sufficient free hard disk space. Click Next.

Note that this name applies only to VMware. The name configured within the operating system can be something completely different, although in further exercises it will be set to the same value.

6. On the Specify Disk Capacity wizard page, accept the defaults and click Next. Then, on the final wizard page, click Finish.

7. We want our server to have four hard disks in total so that we can configure software RAID later. In VMware Workstation, click Edit Virtual Machine Settings on the left side of the screen. Notice the existing single virtual hard disk of 60GB.

8. Click the Add button at the bottom left of the window, select Hard Disk, and click Next.

9. Select NVMe (Recommended) and click Next. Choose Create A New Virtual Disk and click Next.

10. Notice that the default size is 60GB. Leave this unchanged and click Next.

11. On the Specify Disk File page, accept the default filename and click Finish. Notice the New Hard Disk (NVMe) 60GB listing.

12. Repeat steps 8 through 11 two more times to add more disk space. Your virtual machine settings will then list four virtual hard disks, as shown in the following illustration. Click OK.

Exercise 2-3: Create an Ubuntu Linux Server 20.04.1 Virtual Machine Configuration

Make sure the lab setup instructions in Appendix A have been completed before attempting this exercise. This exercise does not actually install the Linux operating system; it just gets the virtual hardware configured for use in a later exercise.

1. If it’s not already running, start VMware Workstation Pro from the Windows Start menu. Choose File | New Virtual Machine.

2. Choose Typical (Recommended) and click Next.

3. Choose I Will Install The Operating System Later, and then click Next.

4. From the Guest Operating System list, ensure that Linux is selected, and from the Version drop-down list, ensure that Ubuntu 64-bit is selected. Click Next.

5. Name the virtual machine Ubuntu-1 and specify a location that has sufficient free hard disk space. Click Next.

Note that this name applies only to VMware. The name configured within the operating system can be something completely different, although in further exercises it will be set to the same value.

6. On the Specify Disk Capacity wizard page, accept the defaults and click Next. On the final wizard page, click Finish.

7. We want our server to have three hard disks in total so that we can configure software RAID later. In VMware Workstation, click Edit Virtual Machine Settings on the left side of the screen. Notice the existing single Hard Disk (SCSI) 20GB listing.

8. Click the Add button in the bottom left of the window, select Hard Disk, and click Next.

9. Select SCSI (Recommended) and click Next. Choose Create A New Virtual Disk, and click Next.

10. Change the size to 10GB and click Next.

11. On the Specify Disk File page, accept the default filename and click Finish. Notice the new 10GB hard disk is now listed.

12. Repeat steps 8 through 11 one more time to add more disk space. Your virtual machine settings will then list three virtual hard disks, as shown in the following illustration. Click OK.

Chapter Review

In this chapter, you learned that servers consist of various hardware components that must be carefully selected to meet functionality needs. This is true of both physical and virtual server computing environments.

Server Form Factors

The most common form factors are tower, rack-mounted, and blade servers. Blade servers take the least amount of space because they are essentially circuit boards. Racks and rack-mounted equipment are measured in Us, where 1U is 1 3/4 inches in height. Server components such as disks and PSUs, as well as rack components such as PDUs, are often duplicated to eliminate single points of hardware failure.

Server Components

Server motherboards use either the older BIOS or the newer UEFI firmware standard. UEFI supports enhanced features such as IPv6 boot support and Secure Boot. Modern motherboards have many components built-in or integrated, such as NICs, RAID controllers, and video. CPUs are mostly 64-bit. SMP server motherboards have multiple CPU sockets, and each CPU chip can contain multiple cores, which speeds up processing.

Buses move data around in a computer system. The FSB quickly moves data between system RAM and the CPU. Other motherboard chipsets control data flow to slower components such as expansion card slots and USB devices. CPU processing can be sped up using L1 to L3 caches, where L1 is the fastest (and most expensive).

Servers often use ECC memory modules, which store extra check bits to detect and correct memory corruption problems. ECC modules should be installed in matching pairs in the correct motherboard slots. DDR4 modules transfer data more quickly than DDR2. Motherboard documentation details the supported memory types and module sizes.

The most common types of expansion card slots on motherboards are:

• PCI (older standard)

• PCI-X

• PCIe

Peripheral Component Interconnect (PCI) is an older standard, and most newer motherboards do not have standard PCI slots. PCI Extended (PCI-X) and PCI Express (PCIe) are newer card types; modern motherboards tend to have PCIe slots rather than PCI. PCIe uses lanes to transmit and receive data. An x16 PCIe card transfers data much more quickly than an x4 or x8 PCIe card. Servers, either directly (tower) or indirectly (blade via backplane or midplane), often use PCIe NICs. Blade systems could require a backplane/midplane upgrade before supporting faster network speeds through expansion cards.

Server storage can come in the form of DAS, NAS, or SAN. RAID is used to group physical disks together to improve disk I/O performance (striping) or for disk fault tolerance. Server hardware normally supports RAID integrated on the motherboard; otherwise, software RAID within the operating system can be used.

Servers can use USB for input devices such as keyboards. USB standards and speeds are as follows:

• USB 1: 12 Mbps

• USB 2: 480 Mbps

• USB 3: 5 Gbps

• USB 4: 40 Gbps

Power

The main types of power connectors are NEMA, Edison, and twist-lock. The watt is a unit of power. Voltage refers to the potential electrical charge difference between two points in a circuit. Kilowatts (kW) and volt-amperes (VA) are units of measurement used with UPS power ratings. To prevent ESD, which can ruin electronics, use ESD straps or mats.

Environmental Controls

Properly designed HVAC systems provide the correct temperature and humidity for the optimal operations of electronic equipment. Airflow in server rooms and data centers is crucial to keep incoming cool air separated from outgoing warmer air. Hot and cold aisles, baffles, and shrouds are used to control airflow.

Water should not be used as the primary fire suppressant when electronics are involved. Modern solutions aim to remove heat or oxygen from the fire.

Questions

1. Data center equipment racks are normally how wide?

A. 16 inches

B. 19 inches

C. 24 inches

D. 32 inches

2. Which unit of measurement is used for racks and rack-mounted devices?

A. W

B. Inches

C. Centimeters

D. U measure

3. Which term describes the chassis housing multiple blade servers?

A. Blade house

B. Blade enclosure

C. Blade box

D. Blade baffle

4. Which firmware standard supports the Secure Boot feature?

A. UEFI

B. CMOS

C. BIOS

D. PCIe

5. Larry, a server technician, is attempting to start the Linux operating system on a blade server but keeps getting “Access Denied” messages. What should Larry do?

A. Reinstall Linux.

B. Update the motherboard firmware.

C. Disable UEFI Secure Boot.

D. Enable UEFI Secure Boot.

6. You are ordering server hardware that will run mathematically intensive engineering applications. Which server component can help the most in speeding up processing time?

A. RAM

B. Greater disk storage capacity

C. L1 cache

D. L4 cache

7. A newly installed tower server will function as a packet-filtering firewall. Currently, the server has three NICs, each connected to separate VLANs. A fourth NIC is required for connectivity to a perimeter network. When choosing a new NIC, what factor should be considered?

A. PCIe versus PCI

B. RAID 0 versus RAID 1

C. IPv4 versus IPv6

D. BIOS versus UEFI

8. Which USB standard supports transmission rates up to 480 Mbps?

A. USB 1

B. USB 2

C. USB 3

D. USB 4

9. Which term identifies the flow of electrons between two differently charged endpoints in a circuit?

A. Volts

B. Watts

C. PSD

D. ESD

10. You are planning to upgrade your existing UPS system. How can you ensure device power needs will be met?

A. Determine UPS kW or VA rating, calculate power draw sum of devices.

B. Determine UPS V or W rating, calculate power draw sum of devices.

C. Determine UPS kW or VA rating, calculate power draw average of devices.

D. Determine UPS V or W rating, calculate power draw average of devices.

11. Which two items are critical when planning server room racks? Choose two.

A. Length of network cables

B. Total amount of CPU computing power

C. Rack weight

D. Airflow

12. A line-of-business database application is regularly accessed by many users concurrently. After monitoring server performance metrics, you conclude that disk I/O is the bottleneck. What can you do to optimize application performance?

A. Configure RAID disk striping.

B. Configure BIOS disk striping.

C. Configure RAID disk fault tolerance.

D. Configure BIOS disk fault tolerance.

13. What can be used to prevent server interruptions when disks fail?

A. Hot-swappable disks

B. RAID fault tolerance

C. RAID disk striping

D. UEFI

14. In which type of environment is 1-phase power most often used?

A. Industrial

B. Data center

C. Manufacturing

D. Residential

15. What purpose does a UPS serve?

A. Enables servers to perform graceful shutdowns

B. Keeps servers running when the power goes out

C. Increases wattage for components requiring it

D. Protects server from malicious attacks

16. Which items are affected by server form factors? Choose two.

A. Type of expansion card

B. Amount of supported RAM

C. Rack mount ability

D. Disk space capacity

17. Which server rack item prevents cables from pulling out when you slide out a server for servicing?

A. Rack cable arm

B. Cable management arm

C. Rack cable extender

D. Cable management extender

18. Which statement regarding blade servers is true?

A. The server is a standalone piece of equipment.

B. The server is mounted into rails on a server rack.

C. The server has its own power supply.

D. The server slides into an enclosure.

19. How is data transmitted throughout a computer system?

A. PCI

B. Bus

C. PCIe

D. CMOS

20. You want to ensure that your RAID controller caches disk writes. Which option must the controller support?

A. Write-back caching

B. Read-write caching

C. Restart-enabled write caching

D. Battery-backed write caching

21. What is a KVM switch used for?

A. Network device interconnect

B. Server management

C. Redundancy

D. RAID controllers

22. Why are server racks arranged in rows?

A. To optimize airflow

B. To allow access to servicing panels

C. To reduce the impact of fire

D. To increase security

23. Which class of fire extinguisher is used to extinguish server equipment fires?

A. A

B. C

C. D

D. B

24. You are the server technician for a Caribbean telecommunications provider. Power on the island is unreliable, and you want to prevent servers from abruptly shutting down. What should you acquire?

A. ABS

B. UPS

C. RDP

D. ESD

25. One of your clients needs a new physical server to store files and run a small customer database application. The IT budget is very small, and the company wants to reuse any existing hardware components, such as network cards, that they have on hand. Which server form factor should you recommend to your client?

A. Tower

B. Rack-mounted

C. Blade

D. Virtual

Questions and Answers

1. Data center equipment racks are normally how wide?

A. 16 inches

B. 19 inches

C. 24 inches

D. 32 inches

B. Most rack systems are 19 inches wide, although they are available in wider formats such as 23 inches. A, C, and D are incorrect. These values are not used for rack widths.

2. Which unit of measurement is used for racks and rack-mounted devices?

A. W

B. Inches

C. Centimeters

D. U measure

D. A U equals 1 3/4 inches. This is used to measure racks and the devices that fit into them. A, B, and C are incorrect. These units of measurement are not used in rack-mounted systems.

3. Which term describes the chassis housing multiple blade servers?

A. Blade house

B. Blade enclosure

C. Blade box

D. Blade baffle

B. A blade enclosure contains multiple blade servers. A, C, and D are incorrect. These blade terms are fictitious.

4. Which firmware standard supports the Secure Boot feature?

A. UEFI

B. CMOS

C. BIOS

D. PCIe

A. UEFI supersedes the older BIOS standard and supports the Secure Boot feature, which ensures that only trusted (digitally signed) operating systems are allowed to start. B, C, and D are incorrect. CMOS refers to your specific configuration of hardware settings supported by the BIOS. The PCIe expansion card standard supersedes both PCI and PCI-X, with more bandwidth.

5. Larry, a server technician, is attempting to start the Linux operating system on a blade server but keeps getting “Access Denied” messages. What should Larry do?

A. Reinstall Linux.

B. Update the motherboard firmware.

C. Disable UEFI Secure Boot.

D. Enable UEFI Secure Boot.

C. Some operating systems cannot be used with the UEFI Secure Boot feature because they are not digitally signed with a key the firmware trusts. When Secure Boot is enabled, this lack of a trusted signature can produce error messages as the system boots, so one solution is to disable UEFI Secure Boot. A, B, and D are incorrect. Reinstalling Linux, updating the motherboard firmware, and enabling UEFI Secure Boot will not solve the problem. Linux is already installed (the installation must have completed successfully at some point); the problem is the boot-time signature check, so the solution is to disable UEFI Secure Boot.
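Before changing firmware settings, Larry could confirm whether Secure Boot is actually enabled. On a running Linux host, the kernel exposes UEFI variables through efivarfs, normally mounted at /sys/firmware/efi/efivars, and `mokutil --sb-state` reports the same information where that tool is installed. The Python sketch below reads the standard SecureBoot variable directly; it assumes efivarfs is mounted in its usual location, and reading the file may require root on some systems.

```python
from pathlib import Path

# SecureBoot is a standard UEFI global variable. efivarfs names each file
# <VariableName>-<VendorGUID>; this GUID is the EFI global variable GUID.
SECURE_BOOT_FILE = "SecureBoot-8be4df61-93ca-11d2-aa0d-00e098032b8c"


def parse_secure_boot(raw: bytes) -> bool:
    """Decode an efivarfs SecureBoot file.

    The first 4 bytes hold the variable's attributes; the 1-byte value
    that follows is 1 when Secure Boot is enabled and 0 when disabled.
    """
    if len(raw) < 5:
        raise ValueError("unexpected SecureBoot variable length")
    return raw[4] == 1


def secure_boot_state(efi_dir: Path = Path("/sys/firmware/efi")) -> str:
    """Report Secure Boot status on a Linux host."""
    if not efi_dir.is_dir():
        return "not booted via UEFI"  # legacy BIOS boot: no Secure Boot
    var_file = efi_dir / "efivars" / SECURE_BOOT_FILE
    try:
        raw = var_file.read_bytes()
    except OSError:
        return "unknown"  # variable missing, or not readable by this user
    return "enabled" if parse_secure_boot(raw) else "disabled"


if __name__ == "__main__":
    print("Secure Boot:", secure_boot_state())
```

If the result is "enabled" and the installed boot loader is not signed with a trusted key, that matches the "Access Denied" symptom in the question.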

6. You are ordering server hardware that will run mathematically intensive engineering applications. Which server component can help the most in speeding up processing time?

A. RAM

B. Greater disk storage capacity

C. L1 cache

D. L4 cache

C. L1 cache is high-speed memory built into the CPU that lets it quickly retrieve instructions and data it has recently accessed. A, B, and D are incorrect. Adding RAM enables more programs and services to run simultaneously, but it does not address the requirements in this scenario. More disk space will not speed up mathematically intensive programs, and the CPU cannot access L4 cache as quickly as L1 cache.
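The benefit of a fast cache can be estimated with the standard average memory access time (AMAT) formula: hit time plus miss rate times miss penalty. The latency figures in this Python sketch (1 ns for an L1 hit, 100 ns to fetch from main memory) are illustrative assumptions, not measurements of any particular CPU.

```python
def average_access_time(hit_time_ns: float, miss_rate: float,
                        miss_penalty_ns: float) -> float:
    """AMAT = hit time + miss rate x miss penalty (all times in ns)."""
    if not 0.0 <= miss_rate <= 1.0:
        raise ValueError("miss_rate must be between 0 and 1")
    return hit_time_ns + miss_rate * miss_penalty_ns


if __name__ == "__main__":
    # Assumed figures: 1 ns L1 hit, 100 ns penalty to go to main memory.
    for miss_rate in (0.02, 0.05, 0.10):
        amat = average_access_time(1.0, miss_rate, 100.0)
        print(f"L1 miss rate {miss_rate:.0%}: {amat:.1f} ns per access")
```

Compared with the assumed 100 ns if every access went to RAM, even a modest hit rate cuts the average latency sharply, which is why cache speed matters more than RAM or disk capacity for compute-heavy work.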

7. A newly installed tower server will function as a packet-filtering firewall. Currently, the server has three NICs, each connected to separate VLANs. A fourth NIC is required for connectivity to a perimeter network. When choosing a new NIC, what factor should be considered?

A. PCIe versus PCI

B. RAID 0 versus RAID 1

C. IPv4 versus IPv6

D. BIOS versus UEFI

A. Tower server motherboards will most likely accommodate PCIe cards, but older ones might support only PCI. B, C, and D are incorrect. RAID relates to multidisk configuration, not networking. IPv4 and IPv6 are software protocols that are not directly affected by the choice of server NIC. Whether the older BIOS or newer UEFI firmware standard is used has no impact on NIC selection, as long as the motherboard has a slot that accommodates the selected NIC.

8. Which USB standard supports transmission rates up to 480 Mbps?

A. USB 1

B. USB 2

C. USB 3

D. USB 4

B. In theory, the USB 2 standard can transfer data at up to 480 Mbps. A, C, and D are incorrect. The maximum speed of USB 1 is 12 Mbps, USB 3 is 5 Gbps, and USB 4 is 40 Gbps.
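USB rates are quoted in bits per second, while file sizes are usually given in bytes, so a quick conversion helps when estimating how long a copy will take. This Python sketch uses the theoretical maximum rates listed above; real-world throughput is lower because of protocol overhead and device limits.

```python
# Theoretical maximum rates from the answer above, in megabits per second.
USB_MAX_MBPS = {"USB 1": 12, "USB 2": 480, "USB 3": 5_000, "USB 4": 40_000}


def transfer_seconds(size_bytes: int, rate_mbps: float) -> float:
    """Best-case time to move size_bytes at rate_mbps (1 Mbps = 10**6 bit/s)."""
    return size_bytes * 8 / (rate_mbps * 1_000_000)


if __name__ == "__main__":
    one_gb = 10**9  # 1 GB in decimal bytes
    for name, mbps in USB_MAX_MBPS.items():
        print(f"{name}: {transfer_seconds(one_gb, mbps):.1f} s for 1 GB")
```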

9. Which term identifies the flow of electrons between two differently charged endpoints in a circuit?

A. Volts

B. Watts

C. PSD

D. ESD

D. ESD, or electrostatic discharge, is the transfer of electrons between two differently charged points in a circuit. ESD can damage sensitive electronic components, so precautions such as ESD wrist straps or ESD mats should be used. A, B, and C are incorrect. Volts are a unit of measurement related to expressing the difference in electrical potential. Watts are used to measure units of power. PSD is not a valid acronym in this context.

10. You are planning to upgrade your existing UPS system. How can you ensure device power needs will be met?

A. Determine UPS kW or VA rating, calculate power draw sum of devices.

B. Determine UPS V or W rating, calculate power draw sum of devices.

C. Determine UPS kW or VA rating, calculate power draw average of devices.

D. Determine UPS V or W rating, calculate power draw average of devices.

A. Most UPSs express their power ratings in either kilowatts (kW) or volt-amperes (VA). The power draw of all devices that will be plugged into the UPS should be totaled to ensure that enough power will be supplied in the event of a power failure. B, C, and D are incorrect. A voltage rating does not describe how much load a UPS can carry, and averaging the devices' power draw understates the load. When determining whether the UPS can support devices, the total power draw of those devices must be compared to the UPS rating.
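The sizing check can be sketched in a few lines of Python. The device figures below are hypothetical, and the 80 percent maximum utilization is an assumed planning margin rather than a standard; use the vendor's published watt and VA ratings for real equipment.

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    watts: float  # real power draw
    va: float     # apparent power draw (volt-amperes)


def total_load(devices: list[Device]) -> tuple[float, float]:
    """Sum (not average) the draw of every device plugged into the UPS."""
    return sum(d.watts for d in devices), sum(d.va for d in devices)


def ups_can_carry(devices: list[Device], ups_watts: float, ups_va: float,
                  max_utilization: float = 0.8) -> bool:
    """Both the watt and VA totals must stay within the UPS ratings.

    max_utilization=0.8 is an assumed planning margin, not a standard.
    """
    load_w, load_va = total_load(devices)
    return (load_w <= ups_watts * max_utilization
            and load_va <= ups_va * max_utilization)


if __name__ == "__main__":
    rack = [Device("server-1", 400, 450),   # hypothetical figures
            Device("server-2", 400, 450),
            Device("switch", 100, 120)]
    print(total_load(rack))                  # (900, 1020)
    print(ups_can_carry(rack, 1350, 1500))   # 1350 W / 1500 VA UPS: True
```

Averaging the same three devices would suggest a load of only 300 W, a third of the real total, which is why answers C and D fail.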

11. Which two items are critical when planning server room racks? Choose two.

A. Length of network cables

B. Total amount of CPU computing power

C. Rack weight

D. Airflow

C, D. A full rack can weigh hundreds of pounds; floors must be able to support these loads properly. Airflow can be controlled around racks using baffles, shrouds, and vented filler panels. A and B are incorrect. Although recommended network cable lengths should not be exceeded, this is not as important as rack weight and airflow. CPU computing power is a server planning consideration, not a rack planning consideration.
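A first check on rack weight is the average load the rack places on its footprint, which can be compared with the floor's rated capacity from the building engineer or raised-floor vendor. The Python sketch below uses hypothetical weights and dimensions, and it ignores concentrated loads at casters or leveling feet, so treat the result as a rough estimate only.

```python
def floor_load_psf(rack_empty_lb: float, equipment_lb: list[float],
                   width_in: float, depth_in: float) -> float:
    """Average load a rack places on its footprint, in pounds per square foot."""
    total_lb = rack_empty_lb + sum(equipment_lb)
    footprint_sqft = (width_in * depth_in) / 144  # 144 square inches per square foot
    return total_lb / footprint_sqft


if __name__ == "__main__":
    # Hypothetical: 300 lb cabinet, twenty 50 lb servers, 24 x 42 inch footprint.
    print(f"{floor_load_psf(300, [50] * 20, 24, 42):.0f} lb/sq ft")
```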

12. A line-of-business database application is regularly accessed by many users concurrently. After monitoring server performance metrics, you conclude that disk I/O is the bottleneck. What can you do to optimize application performance?

A. Configure RAID disk striping.

B. Configure BIOS disk striping.

C. Configure RAID disk fault tolerance.

D. Configure BIOS disk fault tolerance.

A. RAID disk striping arranges multiple disks to work as a single unit to optimize disk reads and writes. B, C, and D are incorrect. There is no such thing as BIOS disk striping. Disk fault tolerance will not improve application performance.
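Striping speeds up I/O because consecutive blocks are spread across different disks, which can then service requests in parallel. The Python sketch below shows the usual RAID 0 address mapping; the stripe unit size and disk count are illustrative parameters, and real controllers add their own metadata and layout details.

```python
def raid0_locate(logical_block: int, disk_count: int,
                 stripe_unit_blocks: int = 1) -> tuple[int, int]:
    """Map a logical block to (disk index, block offset on that disk) in RAID 0."""
    unit = logical_block // stripe_unit_blocks   # which stripe unit overall
    offset = logical_block % stripe_unit_blocks  # position inside that unit
    disk = unit % disk_count                     # units rotate across the disks
    row = unit // disk_count                     # completed passes over all disks
    return disk, row * stripe_unit_blocks + offset


if __name__ == "__main__":
    # Eight sequential blocks on a hypothetical 4-disk array land on every disk,
    # so the disks can service the reads in parallel.
    for block in range(8):
        print(block, raid0_locate(block, disk_count=4))
```

Note that this layout stores each block on exactly one disk, so striping alone provides no fault tolerance.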

13. What can be used to prevent server interruptions when disks fail?

A. Hot-swappable disks

B. RAID fault tolerance

C. RAID disk striping

D. UEFI

A. Hot-swappable disks can be removed and replaced while the server is running. B, C, and D are incorrect. Although some RAID configurations keep a server running when a disk fails, this depends on the implementation; hot-swapping is the better answer. UEFI is a firmware standard that replaces the BIOS standard; it is not related to disk failures.

14. In which type of environment is 1-phase power most often used?

A. Industrial

B. Data center

C. Manufacturing

D. Residential

D. Single-phase (1-phase) power is used for smaller power draw needs, such as residential heating systems and lighting. A, B, and C are incorrect. Industrial and manufacturing environments, along with data centers, often use 3-phase power because of their larger power draw.
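The reason 3-phase power suits larger loads shows up in the standard power formulas: single-phase power is V × I × PF, while balanced 3-phase power is √3 × V (line-to-line) × I × PF. The circuit values in this Python sketch are hypothetical, and real circuit planning must also account for breaker derating and local electrical codes.

```python
import math


def single_phase_watts(volts: float, amps: float, power_factor: float = 1.0) -> float:
    """P = V x I x PF."""
    return volts * amps * power_factor


def three_phase_watts(line_to_line_volts: float, amps: float,
                      power_factor: float = 1.0) -> float:
    """P = sqrt(3) x V(line-to-line) x I x PF, for a balanced load."""
    return math.sqrt(3) * line_to_line_volts * amps * power_factor


if __name__ == "__main__":
    # Hypothetical 208 V, 30 A circuit at unity power factor.
    print(f"single-phase: {single_phase_watts(208, 30):,.0f} W")
    print(f"three-phase:  {three_phase_watts(208, 30):,.0f} W")
```

At the same voltage and current per conductor, the 3-phase circuit delivers about 1.73 times as much power.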

15. What purpose does a UPS serve?

A. Enables servers to perform graceful shutdowns

B. Keeps servers running when the power goes out

C. Increases wattage for components requiring it

D. Protects server from malicious attacks

A. UPS systems enable servers to shut down properly instead of abruptly, which can corrupt open files. B, C, and D are incorrect. UPS systems are designed to keep servers running only for short periods, and they do not increase wattage or protect against attacks.
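In practice, UPS monitoring software such as Network UPS Tools or apcupsd tells servers when to begin a graceful shutdown. The Python function below is only a hypothetical sketch of that decision, not either tool's logic. It assumes the servers shut down in parallel, so the slowest one sets the deadline, and the safety margin is an assumed value.

```python
def should_begin_shutdown(on_battery: bool, runtime_remaining_s: float,
                          shutdown_times_s: list[float],
                          margin_s: float = 120.0) -> bool:
    """Decide whether to start a graceful shutdown during a power outage.

    Assumes servers shut down in parallel, so the slowest one sets the
    deadline; margin_s is an assumed safety buffer.
    """
    if not on_battery:
        return False
    needed = max(shutdown_times_s, default=0.0) + margin_s
    return runtime_remaining_s <= needed


if __name__ == "__main__":
    # Hypothetical: three servers needing 60, 90, and 180 seconds to shut down.
    for runtime in (900, 400, 300):
        print(runtime, should_begin_shutdown(True, runtime, [60, 90, 180]))
```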

16. Which items are affected by server form factors? Choose two.

A. Type of expansion card

B. Amount of supported RAM

C. Rack mount ability

D. Disk space capacity

A, C. Expansion cards, such as standard-size PCI cards, may not fit into slimmer server profiles such as rack-mounted servers. Tower servers cannot be rack-mounted, so rack mount ability is another item affected by server form factors. B and D are incorrect. The amount of RAM or disk space is not affected by server form factors.

17. Which server rack item prevents cables from pulling out when you slide out a server for servicing?

A. Rack cable arm

B. Cable management arm

C. Rack cable extender

D. Cable management extender

B. Cable management arms contain server cables and fold out when the server is slid out on rails. A, C, and D are incorrect. Rack cable arm, rack cable extender, and cable management extender are not proper terms.

18. Which statement regarding blade servers is true?

A. The server is a standalone piece of equipment.

B. The server is mounted into rails on a server rack.

C. The server has its own power supply.

D. The server slides into an enclosure.

D. A blade enclosure can contain many blade servers that slide in to make a connection to the midplane. A, B, and C are incorrect. Blade servers cannot run on their own; they must be placed in a blade enclosure. Blades themselves are not rack-mounted, although blade enclosures are. Blades do not have on-board power sources; this comes from the enclosure.

19. How is data transmitted throughout a computer system?

A. PCI

B. Bus

C. PCIe

D. CMOS

B. Computers contain multiple buses that transmit data throughout the system. A, C, and D are incorrect. PCI and PCIe are expansion card standards that have their own buses for moving data into and out of card slots. CMOS refers to your specific configuration of hardware settings supported by the BIOS.

20. You want to ensure that your RAID controller caches disk writes. Which option must the controller support?

A. Write-back caching

B. Read-write caching

C. Restart-enabled write caching

D. Battery-backed write caching

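D. Battery-backed write caching uses a battery to preserve cached writes that have not yet reached the disks, so they survive a power failure and can be written out when power returns. A, B, and C are incorrect. Read-write caching and restart-enabled write caching are not standard terms in this context, and write-back caching without a battery does not protect cached writes from power loss.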
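The battery matters because a caching controller typically acknowledges a write as soon as the data is in cache (often called write-back mode), before it reaches the disks. The toy Python model below is a hypothetical simplification, not any vendor's controller firmware; it shows why unflushed writes are lost on power failure without a battery.

```python
class WriteBackCache:
    """Toy model of a RAID controller write cache (hypothetical, simplified)."""

    def __init__(self, battery_backed: bool):
        self.battery_backed = battery_backed
        self.dirty: dict[int, bytes] = {}  # acknowledged writes not yet on disk
        self.disk: dict[int, bytes] = {}   # data committed to the disks

    def write(self, block: int, data: bytes) -> str:
        self.dirty[block] = data  # host gets an immediate acknowledgment
        return "ack"

    def flush(self) -> None:
        self.disk.update(self.dirty)  # write cached data out to disk
        self.dirty.clear()

    def power_loss(self) -> None:
        if not self.battery_backed:
            self.dirty.clear()  # volatile cache contents are lost
        # With a battery, the cache keeps its contents until power returns.

    def power_restored(self) -> None:
        self.flush()


if __name__ == "__main__":
    for bbu in (False, True):
        cache = WriteBackCache(battery_backed=bbu)
        cache.write(7, b"invoice")
        cache.power_loss()
        cache.power_restored()
        print(f"battery-backed={bbu}: block 7 on disk? {7 in cache.disk}")
```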

21. What is a KVM switch used for?

A. Network device interconnect

B. Server management

C. Redundancy

D. RAID controllers

B. KVM switches have a keyboard, video display, and mouse attached along with cables plugged into numerous servers; the servers can be managed locally without each needing a keyboard, video, and mouse. A, C, and D are incorrect. KVM switches do not interconnect network devices, and they do not provide redundancy or RAID controller features.

22. Why are server racks arranged in rows?

A. To optimize airflow

B. To allow access to servicing panels

C. To reduce the impact of fire

D. To increase security

A. Rows of racks let devices draw cool air in at the front and exhaust warm air out the back; the aisles between the rows are called cold aisles and hot aisles. B, C, and D are incorrect. Rack rows are not arranged to facilitate servicing, and they do not reduce fire impact or increase security.
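Hot and cold aisles matter because every watt a server draws becomes heat that the cooling air must carry away. A common HVAC sensible-heat estimate relates IT load, airflow, and the temperature rise across the equipment. The constants in this Python sketch are standard approximations for air near sea level, and the rack figures are hypothetical.

```python
BTU_PER_HR_PER_WATT = 3.412  # 1 W of IT load is about 3.412 BTU/hr of heat
SENSIBLE_HEAT_FACTOR = 1.08  # BTU/hr per (CFM x deg F) for air near sea level


def required_cfm(it_load_watts: float, delta_t_f: float) -> float:
    """Airflow needed to remove it_load_watts with a front-to-back rise of delta_t_f."""
    if delta_t_f <= 0:
        raise ValueError("temperature rise must be positive")
    return it_load_watts * BTU_PER_HR_PER_WATT / (SENSIBLE_HEAT_FACTOR * delta_t_f)


if __name__ == "__main__":
    # Hypothetical 5 kW rack with a 20 deg F rise from cold aisle to hot aisle.
    print(f"{required_cfm(5_000, 20):.0f} CFM")
```

Letting hot exhaust mix back into the cold aisle raises intake temperatures, which is the problem the row arrangement is meant to prevent.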

23. Which class of fire extinguisher is used to extinguish server equipment fires?

A. A

B. C

C. D

D. B

B. Class C fire extinguishers are designed to put out fires involving energized electrical equipment. A, C, and D are incorrect. Class A extinguishers are for paper, wood, or cloth fires, class D extinguishers are for combustible metal fires, and class B extinguishers are for flammable liquid fires.

24. You are the server technician for a Caribbean telecommunications provider. Power on the island is unreliable, and you want to prevent servers from abruptly shutting down. What should you acquire?

A. ABS

B. UPS

C. RDP

D. ESD

B. UPS systems enable servers to shut down properly instead of abruptly, which can corrupt open files. A, C, and D are incorrect. ABS is not a valid term in this context. RDP is the Remote Desktop Protocol used to manage a computer remotely over the network. ESD is electrostatic discharge, which occurs when two devices in close proximity have varying electrical charges.

25. One of your clients needs a new physical server to store files and run a small customer database application. The IT budget is very small, and the company wants to reuse any existing hardware components, such as network cards, that they have on hand. Which server form factor should you recommend to your client?

A. Tower

B. Rack-mounted

C. Blade

D. Virtual

A. Tower servers are generally cheaper than blade or rack-mounted servers, and because they contain standard hardware, reusing components is not a problem. B, C, and D are incorrect. Rack-mounted and blade servers can be expensive and are not the best choices for a small shop. A virtual server is not an option because the question states a physical server is required.